1. Introduction

With the development of the Internet, user-generated online reviews have grown explosively, and these massive text data contain valuable information that can support business decision-making, policy formulation, and so on. Extracting this information quickly and accurately remains a challenge, and the research field of sentiment analysis has arisen to meet it. Sentiment analysis automatically analyses large-scale text data to identify the emotions and opinions that users express. For a business or organization, for example, it provides detailed sentiment information about products, services, features and other aspects. This helps the organization target improvement and optimization, enhance user experience and increase sales, so that the enterprise and its customers end up in a win–win situation.

Traditional sentiment analysis research mainly focuses on coarse-grained prediction at the sentence or document level [1], identifying the overall sentiment of the whole sentence or document. However, coarse-grained sentiment analysis struggles to meet users' need for personalized, detailed information. Fine-grained aspect-level sentiment analysis (ALSA) was therefore proposed to identify the sentiment tendency toward specific aspects of a sentence. For example, in the sentence “The taste of food is delicious and the price is reasonable, but the service is terrible”, ALSA identifies the sentiment polarity as positive when the given aspect is “taste of food”, whereas the polarity is positive for the aspect “price” and negative for the aspect “service”.

In the early days of sentiment analysis research, traditional machine learning approaches were mainly used: input features were designed manually, which required expertise and experience [2], and classifiers were trained and optimized with algorithms such as support vector machines [3] and decision trees. Although these methods perform well on sentiment analysis problems, they usually require extensive manual feature design and parameter tuning, and their classification effectiveness depends heavily on the quality of the features [4]. Deep learning models, by contrast, learn features automatically without deliberate feature engineering, and they have shown superior performance in many natural language processing tasks, such as machine translation, semantic recognition, question answering and text summarization. For sentiment analysis, recurrent neural networks, long short-term memory (LSTM) networks and gated recurrent units have become the mainstream methods [5] and have achieved good results [6]. Attention mechanisms have also been widely used in this task [7]. Applying pre-trained language models to downstream tasks has been a research hotspot in recent years. Earlier work usually obtained pre-trained word embeddings with GloVe [8] and Word2vec [9], but these static embeddings assign a single vector to each word and therefore cannot represent polysemous words. The BERT pre-trained language model effectively solved this problem by producing context-dependent representations, and related models such as GPT and RoBERTa [10] further enhanced word representation capability. Thanks to the powerful representation capability of these pre-trained language models, the contextual information and semantic associations in text can be captured more accurately, which has led to significant performance improvements in various downstream tasks.

Recently, aspect-level sentiment analysis using syntactic information and graph neural networks has been studied extensively and has achieved good performance. Zhang et al. [11] argued that early models lacked a mechanism to incorporate syntactic constraints and long-distance word dependencies, which led them to mistake syntactically irrelevant context words for cues of aspect sentiment; they therefore used the sentence's dependency tree to define each word's neighbors and to guide a GCN in aggregating and propagating information, obtaining good results. Huang et al. [12] proposed a graph attention network approach that effectively integrates syntactic information to improve aspect-level sentiment classification. Zhu et al. [13] integrated the global and local structural information of sentences: they constructed word-document graphs to capture global dependencies between words and simultaneously used syntactic structure analysis to mine latent local structural information, achieving excellent performance on multiple datasets. Sun et al. [14] used long short-term memory networks to learn sentence features and further enhanced the embeddings over the dependency tree with graph convolutional networks, achieving excellent performance on four benchmark datasets. Although these methods greatly improve accuracy, they make little use of sentiment knowledge related to specific aspects and do not fuse aspect-specific information. Integrating external sentiment knowledge as auxiliary information into aspect-level sentiment analysis is therefore expected to further improve model performance. Liang et al. [15] built an enhanced sentence dependency graph by incorporating SenticNet commonsense sentiment knowledge into the dependency graph of each sentence. Liu et al. [16] combined the GCN with a gating mechanism so that node information could be aggregated more fully, and incorporated contextual sentiment knowledge into the GCN to strengthen the model's perception of sentiment features, further demonstrating the effectiveness of commonsense sentiment knowledge in aspect-level sentiment analysis.

Given the limitations of the above models, this paper proposes a novel aspect-level sentiment analysis model. First, aspect features are added to both the syntax-aware module and the semantic assistance module, and external sentiment knowledge is integrated into a graph convolutional network to enhance the model's ability to perceive sentiment information. Second, the semantic information of the sentence is obtained using a multi-head self-attention mechanism and a Point-wise Convolutional Transformer. Finally, the syntactic and semantic representations are pooled and concatenated to obtain the final aspect-specific feature representation of the sentence.

The main contributions of this paper are as follows:

(1) For the aspect-level sentiment analysis task, we propose a novel model that integrates syntactic and semantic information to determine the sentiment tendency toward specific aspects of a sentence.

(2) External commonsense sentiment knowledge is introduced to enhance the model's ability to perceive sentiment information, and additional aspect-specific information is added to the model to increase its sensitivity to the different aspects of a sentence.

(3) Extensive experiments on three benchmark aspect-level sentiment analysis datasets show that the proposed SAGCN model outperforms the compared baseline models, demonstrating its effectiveness for aspect-level sentiment analysis.

2. Related Work

Aspect-level sentiment analysis is a fine-grained sentiment analysis task. Compared with traditional sentence-level and document-level sentiment analysis, its main challenge lies in accurately identifying the sentiment tendency associated with a specific aspect within a sentence. The task requires the model to understand the text in greater detail and to mine the sentiment information associated with specific aspects, which places higher demands on the accuracy and complexity of the algorithm. With the development of deep learning, researchers have introduced a variety of neural network structures and attention mechanisms that meet this requirement and improve model performance on aspect-level sentiment analysis. For example, Tang et al. [17] used LSTM networks to model the semantic correlations between the target word and its preceding context and between the target word and its following context, which greatly improved the accuracy of target-dependent sentiment classification. With the introduction of the attention mechanism, many models combining attention with neural networks have emerged. Wang et al. [18] proposed an attention-based unidirectional LSTM model for aspect-level sentiment classification that captures, for each input aspect, the most important corresponding sentiment features in the sentence. Tang et al. [19] used the attention mechanism to design a deep memory network in which each layer learns an abstract representation of the text; by stacking multiple attention layers, the model learns a highly complex function of the sentence with respect to a specific aspect and therefore has a strong capacity to represent the important sentiment information in the text. Ma et al. [20] argued that the target and the context should be treated equally and designed an interactive attention model to establish deep semantic associations between them. The model learns both sentence-to-aspect and aspect-to-sentence attention and combines them for sentiment classification. Ren et al. [21] designed a lightweight and efficient model based on gated CNNs that integrates stacked gated convolutions with attention mechanisms. Liu et al. [22] used a multilayer attention mechanism, comprising intra-layer and inter-layer attention, to generate hidden state representations of sentences. The intra-layer attention combines multi-head self-attention with a point-wise feed-forward structure, while the inter-layer attention introduces global attention to capture the interaction between context and aspect words and, on this basis, a feature-focused attention mechanism that enhances the model's sentiment recognition ability.

In recent years, a number of aspect-level sentiment analysis models that combine syntactic information with graph convolutional networks have emerged. By exploiting the syntactic structure of the text through graph convolution, these models capture the sentiment information of the target aspect more accurately: syntactic information provides the grammatical relationships between words, while graph convolutional networks capture complex associations in the text through the graph structure, enabling the model to understand the textual context more comprehensively. Zhang et al. [23] proposed a two-layer interactive graph convolutional network model for sentiment analysis. Tian et al. [24] devised a type-aware graph convolutional network that uses not only syntactic dependencies but also explicit inter-word dependency types, and used attention to distinguish between different edges in the graph. Zhang et al. [25] pruned the syntactic dependency tree to remove noisy information and built both a semantics-based GCN and a syntax-based GCN.

3. Methodology

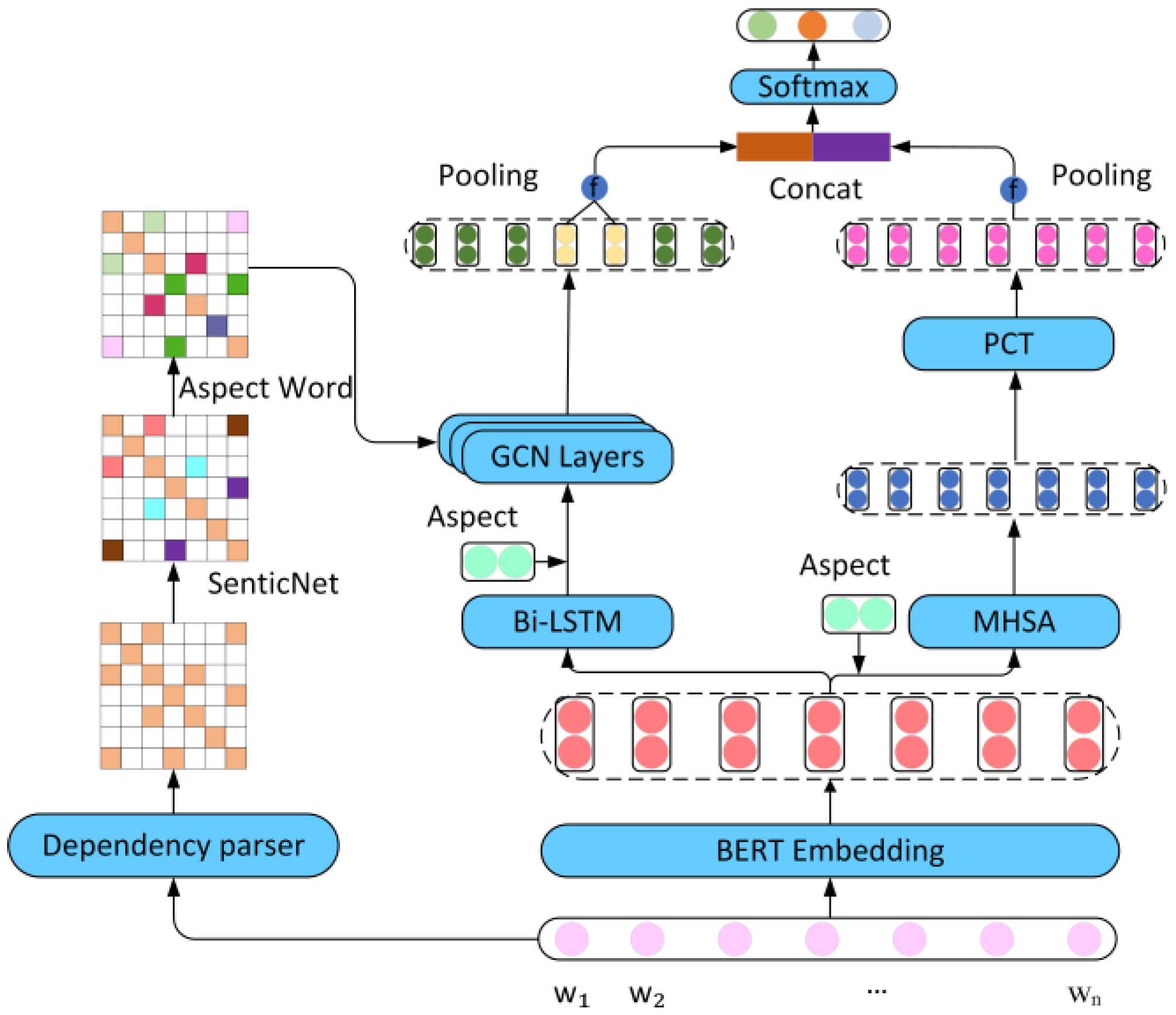

In this section, the SAGCN model proposed in this paper is introduced in detail. The model architecture, shown in Figure 1, consists of BERT, external commonsense sentiment knowledge, and graph convolutional networks. First, the pre-trained BERT language model is used to obtain context-aware word embeddings. Then, the syntax-aware module on the left side produces a syntactically constrained, aspect-specific sentiment representation, and the semantic assistance module on the right side supplements the sentiment semantics. The representations produced by the two modules are average-pooled and concatenated, and finally the Softmax function is applied to classify the sentiment polarity of the specific aspect in the sentence.

3.1. Definition of Tasks

Given a sentence consisting of n words, denoted by $P = \{w_1, w_2, \ldots, w_n\}$, we select one of the aspects corresponding to this sentence, denoted by $T = \{w_{a+1}, w_{a+2}, \ldots, w_{a+m}\}$. T is a subset of P that consists of m words, where a + 1 and a + m are the start and end indexes of the aspect, respectively. The aim of the ALSA task is to identify the sentiment polarity of this aspect in the given sentence.
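To make the indexing concrete, the following Python sketch stores a sentence P and one aspect span T using the 1-based convention above (aspect positions a + 1 to a + m). The class and field names are hypothetical, and whitespace tokenization is a simplifying assumption.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AspectInstance:
    """One ALSA sample: a tokenized sentence P and one aspect span T inside it."""
    words: List[str]  # P = [w_1, ..., w_n]
    a: int            # the aspect occupies 1-based positions a+1 .. a+m
    m: int            # number of aspect words
    polarity: str     # gold label, e.g. "positive", "negative" or "neutral"

    def aspect_words(self) -> List[str]:
        # 1-based positions a+1..a+m correspond to the 0-based slice [a, a+m)
        return self.words[self.a:self.a + self.m]

    def aspect_mask(self) -> List[int]:
        # 1 at positions inside the aspect span, 0 elsewhere
        return [1 if self.a <= k < self.a + self.m else 0 for k in range(len(self.words))]


sentence = ("the taste of food is delicious and the price is reasonable "
            "but the service is terrible").split()
sample = AspectInstance(words=sentence, a=1, m=3, polarity="positive")
print(sample.aspect_words())  # ['taste', 'of', 'food']
```

The aspect mask built here is the kind of indicator later used to pick out aspect positions during pooling.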

3.2. BERT Embedding

In this study, a pre-trained BERT language model is used to obtain the embedding vector of each word in the input sentence, i.e., the discrete symbols of the sentence are mapped to real-valued vectors that capture semantic and associative information for the downstream modules. Rather than inputting the sentence alone, and inspired by [26,27], this paper feeds the text into BERT as a sentence-aspect pair, i.e., [CLS] Sentence [SEP] Aspect [SEP], to obtain embedding representations of both the sentence and the aspect. This is expressed as follows:
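As a rough illustration of the sentence-aspect pair format, the sketch below builds the token sequence and BERT-style segment ids in plain Python. It uses whitespace tokens instead of BERT's WordPiece subwords (an assumption for readability); with the Hugging Face `transformers` library, passing the sentence and aspect as a text pair to a BERT tokenizer should yield the same layout through `input_ids` and `token_type_ids`. The function name is hypothetical.

```python
from typing import List, Tuple


def build_pair_input(sentence: List[str], aspect: List[str]) -> Tuple[List[str], List[int]]:
    """Build [CLS] sentence [SEP] aspect [SEP] and the matching segment ids.

    Segment id 0 covers [CLS], the sentence and the first [SEP];
    segment id 1 covers the aspect and the final [SEP].
    """
    tokens = ["[CLS]"] + sentence + ["[SEP]"] + aspect + ["[SEP]"]
    segment_ids = [0] * (len(sentence) + 2) + [1] * (len(aspect) + 1)
    return tokens, segment_ids


tokens, seg = build_pair_input("the price is reasonable".split(), ["price"])
print(tokens)
print(seg)
```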

3.3. Syntax-Aware Module

3.3.1. BiLSTM

In order to obtain richer contextual semantic information, this paper inputs the text vectorized representation

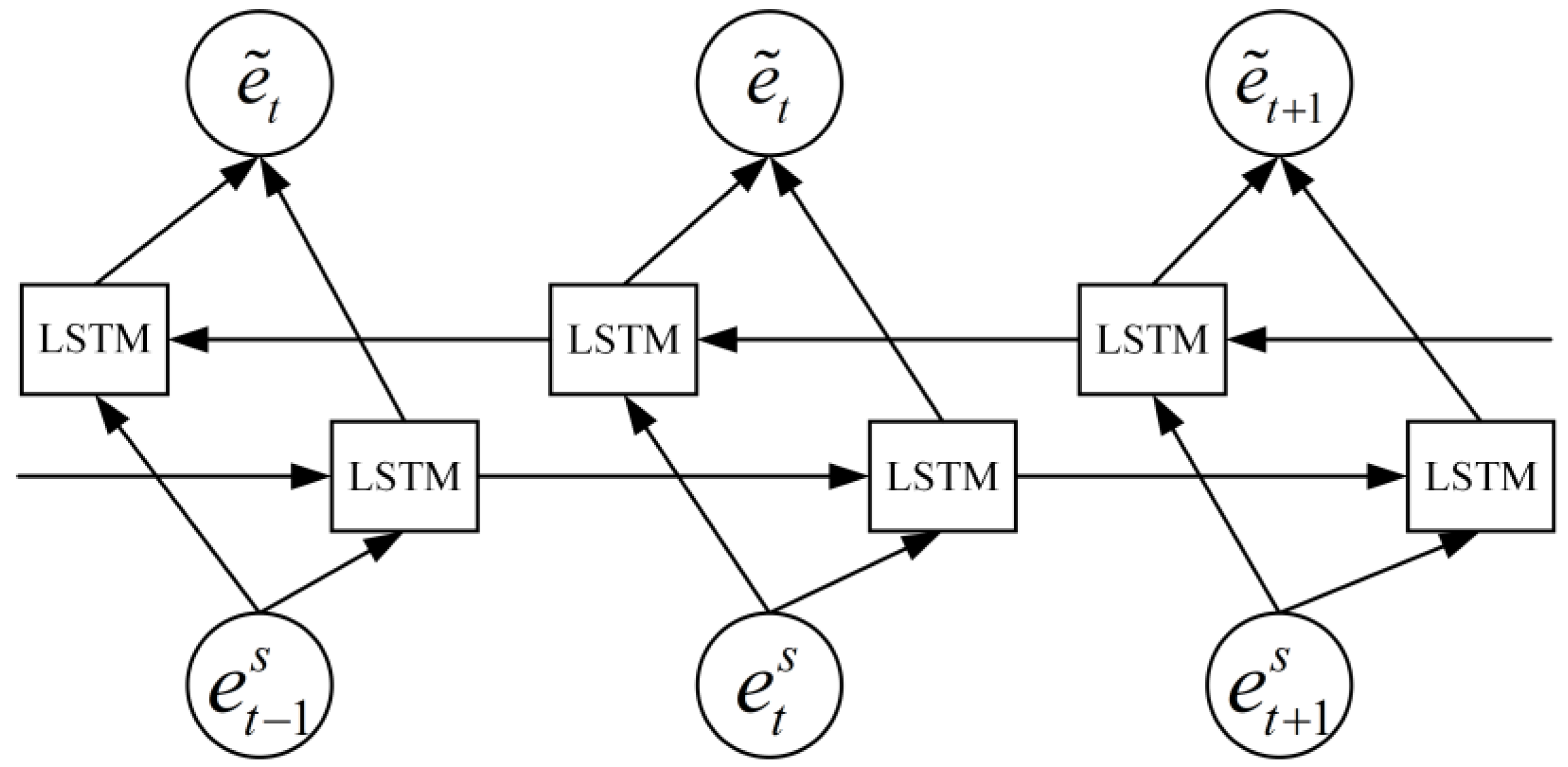

obtained by BERT into the BiLSTM network. The specific structure of BiLSTM is shown in

Figure 2. It can be seen that BiLSTM has two independent BiLSTM units in each time step. This structure allows for the model to capture both past and future dependencies simultaneously, and thus better capture the long-term dependencies and contextual information in the sequence. Linking the corresponding parallel hidden representations of the forward and backward BiLSTM modelling into a higher-dimensional representation generates richer semantic information, which works well in many sequence tasks such as language modelling, machine translation, sentiment analysis, etc. This bi-directional structure also helps to process various relations and patterns in the input sequence, improving the model’s ability to understand sequence data. The specific calculations are shown in Equations (3)–(6).

where the backward (right-to-left) terms denote vectors processed by the LSTM in back-to-front order along the sequence.
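The forward/backward concatenation can be sketched with a deliberately tiny LSTM (input size 1, hidden size 1) in plain Python. The gate equations are the standard LSTM ones; the parameter values are hypothetical, and a real model would use vector-valued states and a library implementation such as a framework's bidirectional LSTM layer.

```python
import math
from typing import Dict, List


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def lstm_pass(xs: List[float], p: Dict[str, float]) -> List[float]:
    """Run a scalar LSTM over xs and return the hidden state at every step."""
    h, c = 0.0, 0.0
    hs = []
    for x in xs:
        i = sigmoid(p["wi"] * x + p["ui"] * h + p["bi"])    # input gate
        f = sigmoid(p["wf"] * x + p["uf"] * h + p["bf"])    # forget gate
        o = sigmoid(p["wo"] * x + p["uo"] * h + p["bo"])    # output gate
        g = math.tanh(p["wg"] * x + p["ug"] * h + p["bg"])  # candidate cell state
        c = f * c + i * g
        h = o * math.tanh(c)
        hs.append(h)
    return hs


def bilstm(xs: List[float], p_fwd: Dict[str, float], p_bwd: Dict[str, float]) -> List[List[float]]:
    """Concatenate forward and backward hidden states position by position."""
    fwd = lstm_pass(xs, p_fwd)
    # The backward LSTM reads back-to-front; reverse its outputs to realign with positions
    bwd = lstm_pass(xs[::-1], p_bwd)[::-1]
    return [[hf, hb] for hf, hb in zip(fwd, bwd)]


# Hypothetical fixed parameters, for illustration only
P_FWD = {k: 0.5 for k in ("wi", "ui", "bi", "wf", "uf", "bf", "wo", "uo", "bo", "wg", "ug", "bg")}
P_BWD = {k: -0.3 for k in P_FWD}

states = bilstm([0.1, 0.7, -0.4], P_FWD, P_BWD)
print(len(states), len(states[0]))  # 3 2
```

Each position ends up with one forward and one backward component, which is the doubling of dimensionality described above.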

3.3.2. Sentiment Commonsense Knowledge and Aspect-Enhanced Syntax Graph

To let graph convolutional networks exploit the dependencies between words in a sentence, and inspired by [11,14], we use the spaCy toolkit (https://spacy.io/, accessed on 6 February 2023) to obtain the syntactic dependency tree of each input sentence and then use the tree to construct a corresponding adjacency matrix $A$. If there is a dependency relationship between word $w_i$ and word $w_j$, then $A_{ij}$ is set to 1; otherwise, $A_{ij} = 0$. In addition, each word is assumed to be adjacent to itself, i.e., all diagonal elements of the adjacency matrix $A$ are set to 1. The specific formula is shown in Equation (7):

$$A_{ij} = \begin{cases} 1, & \text{if } i = j \text{ or there is a dependency relation between } w_i \text{ and } w_j \\ 0, & \text{otherwise} \end{cases} \tag{7}$$
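A minimal sketch of Equation (7), assuming the parse is given as one head index per word and that edges are treated as undirected (the text does not state the direction convention). With spaCy, the head indices would come from `token.head.i` for each token of a parsed `Doc` (the root token's head is itself); here they are written by hand for a hypothetical parse so the sketch runs without spaCy.

```python
from typing import List


def dependency_adjacency(heads: List[int]) -> List[List[int]]:
    """Build a symmetric 0/1 adjacency matrix with self-loops from head indices.

    heads[i] is the 0-based index of word i's syntactic head; the root
    points to itself.
    """
    n = len(heads)
    A = [[0] * n for _ in range(n)]
    for i, h in enumerate(heads):
        A[i][i] = 1  # every word is adjacent to itself
        A[i][h] = 1  # dependency edge, treated as undirected
        A[h][i] = 1
    return A


# Hypothetical parse of "the price is reasonable": "is" (index 2) is the root
heads = [1, 2, 2, 2]
for row in dependency_adjacency(heads):
    print(row)
```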

Then, to enhance the ordinary syntactic dependency graph and highlight the sentiment dependencies between individual words in a sentence, SenticNet7 is introduced into the model as an external source of commonsense sentiment knowledge. SenticNet7 is a concept-level knowledge base that assigns semantics to 300,000 concepts; it contains a large amount of information about sentiment-related concepts and words and the associations between them, which facilitates inferring the emotional information in a text and helps identify and understand the emotional states embedded in it. To integrate sentiment knowledge into the graph convolutional network, this paper uses the sentiment scores of the words in SenticNet7, computed over four sentiment dimensions; each word's score lies between −1 and 1. The sentiment scores of some of the words are shown in Table 1. The sentiment score matrix $S$ is defined as the sum of the sentiment scores of two words, as shown in Equation (8):

$$S_{ij} = \mathrm{SenticNet}(w_i) + \mathrm{SenticNet}(w_j) \tag{8}$$

In the above equation, $\mathrm{SenticNet}(w_i) = 0$ means that word $w_i$ is neutral or does not exist in the SenticNet7 sentiment knowledge base.
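Equation (8) can be sketched directly: look up each word's score (defaulting to 0 for neutral or unknown words) and add the scores pairwise. The lexicon below is a hypothetical toy dictionary whose values are made up for illustration; they are not actual SenticNet7 scores.

```python
from typing import Dict, List


def sentiment_matrix(words: List[str], lexicon: Dict[str, float]) -> List[List[float]]:
    """S[i][j] = score(w_i) + score(w_j); words missing from the lexicon score 0."""
    scores = [lexicon.get(w.lower(), 0.0) for w in words]
    return [[si + sj for sj in scores] for si in scores]


# Hypothetical scores in [-1, 1]; NOT real SenticNet7 values
TOY_LEXICON = {"delicious": 0.9, "terrible": -0.8}
S = sentiment_matrix(["food", "is", "delicious"], TOY_LEXICON)
for row in S:
    print(row)
```

Because each entry adds two scores in [−1, 1], the entries of S lie in [−2, 2], and S is symmetric by construction.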

In addition, existing GCN-based aspect-level sentiment analysis models usually do not explicitly focus on the target aspect when constructing graphs. Therefore, to further strengthen the SenticNet7-based sentiment dependencies between context words and aspect words, this paper proposes an aspect enhancement matrix whose element for the word pair $(w_i, w_j)$ is 1 if $w_i$ or $w_j$ belongs to the aspect and 0 otherwise. Finally, the adjacency matrix enhanced by external sentiment knowledge and aspect words is obtained, as calculated in Equation (9):

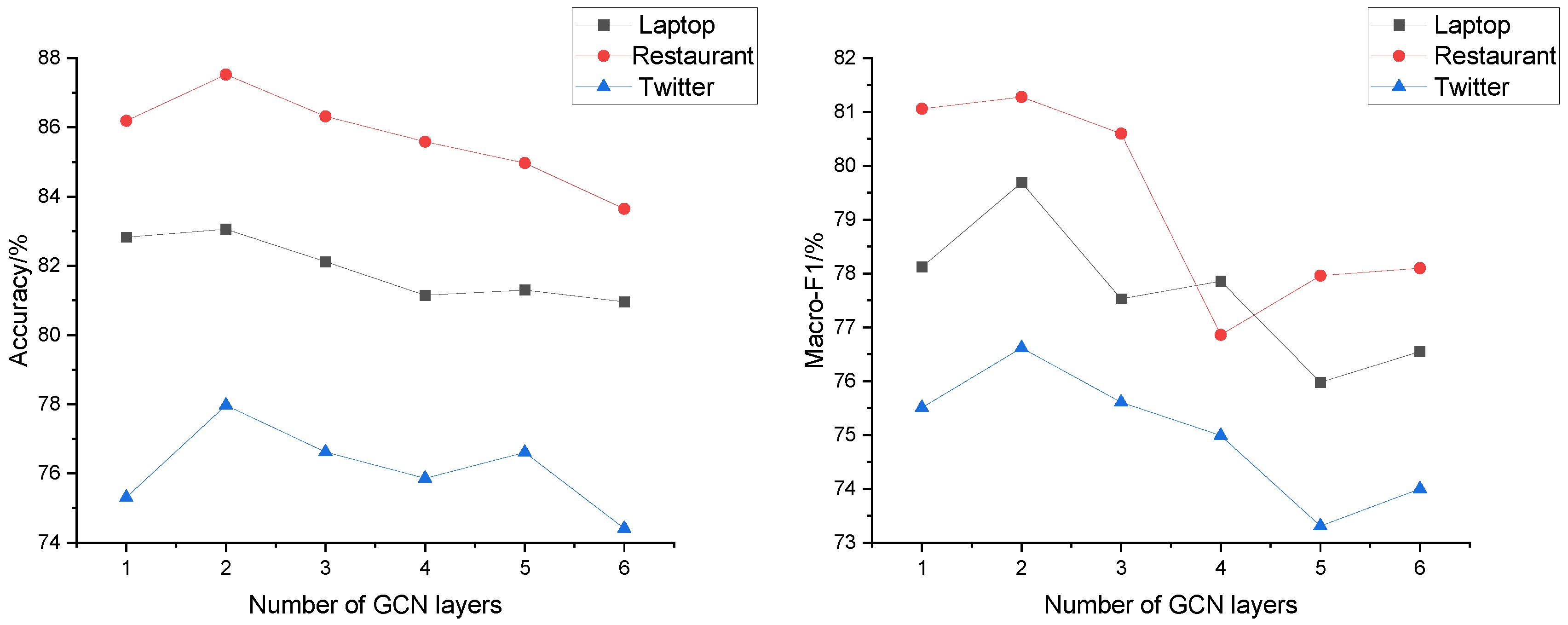
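The aspect enhancement matrix and its combination with the adjacency and sentiment matrices can be sketched as follows. The combination rule used here, element-wise $A_{ij}(S_{ij} + T_{ij} + 1)$ with T the aspect enhancement matrix, is an assumption in the style of Liang et al. [15]; Equation (9) of this model may differ. The matrices in the usage example are hand-built, and the 0.9 score is a made-up toy value.

```python
from typing import List, Set

Matrix = List[List[float]]


def aspect_matrix(n: int, aspect_positions: Set[int]) -> Matrix:
    """Element (i, j) is 1 if word i or word j belongs to the aspect, else 0."""
    return [[1.0 if (i in aspect_positions or j in aspect_positions) else 0.0
             for j in range(n)] for i in range(n)]


def enhanced_adjacency(A: Matrix, S: Matrix, T: Matrix) -> Matrix:
    """ASSUMED combination rule: A_ij * (S_ij + T_ij + 1).

    Entries with no dependency edge (A_ij = 0) stay 0, so sentiment and
    aspect knowledge only re-weight edges that already exist in the parse.
    """
    n = len(A)
    return [[A[i][j] * (S[i][j] + T[i][j] + 1.0) for j in range(n)] for i in range(n)]


# "food is delicious": hand-built adjacency and a toy sentiment matrix
A = [[1, 1, 0], [1, 1, 1], [0, 1, 1]]
S = [[0.0, 0.0, 0.9], [0.0, 0.0, 0.9], [0.9, 0.9, 1.8]]
T = aspect_matrix(3, {0})  # aspect = "food" at position 0
D = enhanced_adjacency(A, S, T)
for row in D:
    print(row)
```

Multiplying by A keeps the graph sparse, which is one reason a multiplicative rule is a natural choice here.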

3.3.3. Syntax-Enhanced Graph Convolution

Kipf et al. [28] proposed the widely used graph convolutional network (GCN) formulation in 2016. Its core idea is to update the feature representation of each node by aggregating the node's own features with those of its neighbors. The aggregation uses the graph topology to determine which nodes exchange information, and learnable weight matrices transform the aggregated features. This is analogous to how a conventional convolutional neural network aggregates information within a local receptive field, except that the neighborhood is defined by the graph rather than by a regular grid. By stacking multiple graph convolution layers, the network progressively learns richer, higher-level node representations suited to more complex graph data. Following [14], the final enhanced syntax graph obtained in the previous section is fed into the GCN layers to learn syntactically constrained sentiment representations of the specific aspect. The hidden representation of each node at each GCN layer is computed as shown in Equations (10) and (11):

where $A_{ij}$ denotes the element in row i and column j of the enhanced adjacency matrix; $h_j^{l-1}$ is the representation of node j in the previous GCN layer, and the input to the first GCN layer is the concatenation of the BiLSTM hidden representation and the original aspect-specific embedding; $h_i^{l}$ is the feature representation of node i in the current layer; $d_i$ is the degree of node i; and the weight $W^{l}$ and bias $b^{l}$ are trainable parameters.
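A plain-Python sketch of one graph-convolution layer, assuming the degree-normalized form used by Zhang et al. [11], $h_i^l = \mathrm{ReLU}\big(\sum_j A_{ij} W^l h_j^{l-1} / (d_i + 1) + b^l\big)$. Taking $d_i$ as the row sum of the (possibly weighted) adjacency matrix is an assumption of this sketch, as are the toy inputs.

```python
from typing import List

Matrix = List[List[float]]


def relu(x: float) -> float:
    return x if x > 0.0 else 0.0


def gcn_layer(A: Matrix, H: Matrix, W: Matrix, b: List[float]) -> Matrix:
    """One layer: h_i = ReLU((sum_j A_ij * W h_j) / (d_i + 1) + b).

    A: n x n adjacency, H: n x d_in node features,
    W: d_out x d_in weight matrix, b: length-d_out bias.
    """
    n, d_out = len(A), len(W)
    # Project every node once: WH[j] = W h_j
    WH = [[sum(W[r][k] * H[j][k] for k in range(len(H[j]))) for r in range(d_out)]
          for j in range(n)]
    out = []
    for i in range(n):
        d_i = sum(A[i])  # degree of node i, taken as the row sum of A
        agg = [sum(A[i][j] * WH[j][r] for j in range(n)) for r in range(d_out)]
        out.append([relu(agg[r] / (d_i + 1.0) + b[r]) for r in range(d_out)])
    return out


A = [[1.0, 1.0], [1.0, 1.0]]
H = [[1.0, 0.0], [0.0, 1.0]]
W = [[1.0, 0.0], [0.0, 1.0]]
out = gcn_layer(A, H, W, [0.0, 0.0])
print(out)
```

Stacking layers amounts to feeding `out` back in as `H` with a new weight matrix and bias.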

3.4. Semantic Assistance Module

3.4.1. Multi-Head Self-Attention

The syntax-aware module extracts syntactic information and part of the semantic information, but the model still needs additional semantic information. To capture it, a semantic assistance module is introduced in which, instead of a traditional BiLSTM, multi-head self-attention is used to compute the hidden states of the embeddings. Self-attention can be computed in parallel and directly relates every pair of words regardless of their distance, so it makes full use of the semantic relations between words and avoids the information loss that recurrent models suffer over long-range dependencies [29]; in addition, different heads can capture different semantic information in different representation subspaces, yielding a richer semantic representation. The input of this module is the concatenation of the sentence vectors and the mean of the aspect vectors. The calculation process is shown in Equations (12) and (13):
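The following sketch shows standard scaled dot-product multi-head self-attention in plain Python, together with one reading of the input construction (each token vector concatenated with the mean aspect vector; this reading is an assumption). The random weights, sizes and helper names are hypothetical; a real implementation would use a framework layer such as PyTorch's `nn.MultiheadAttention`.

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def matmul(X: Matrix, W: Matrix) -> Matrix:
    """X: n x d_in, W: d_in x d_out -> n x d_out."""
    return [[sum(x[k] * W[k][c] for k in range(len(W))) for c in range(len(W[0]))] for x in X]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def build_input(sentence_vecs: Matrix, aspect_vecs: Matrix) -> Matrix:
    """Concatenate every token vector with the mean aspect vector (assumed reading)."""
    dim = len(aspect_vecs[0])
    mean_aspect = [sum(v[c] for v in aspect_vecs) / len(aspect_vecs) for c in range(dim)]
    return [vec + mean_aspect for vec in sentence_vecs]


def multi_head_self_attention(X: Matrix, Wq: Matrix, Wk: Matrix, Wv: Matrix,
                              Wo: Matrix, num_heads: int) -> Matrix:
    """Scaled dot-product self-attention split across num_heads heads.

    All weight matrices are d_model x d_model; d_model must be divisible by num_heads.
    """
    Q, K, V = matmul(X, Wq), matmul(X, Wk), matmul(X, Wv)
    n, d_model = len(X), len(Wq[0])
    d_head = d_model // num_heads
    concat = [[] for _ in range(n)]  # per-position concatenation of the head outputs
    for h in range(num_heads):
        lo, hi = h * d_head, (h + 1) * d_head  # this head's slice of the feature dimensions
        for i in range(n):
            scores = [sum(Q[i][c] * K[j][c] for c in range(lo, hi)) / math.sqrt(d_head)
                      for j in range(n)]
            weights = softmax(scores)
            concat[i].extend(sum(weights[j] * V[j][c] for j in range(n)) for c in range(lo, hi))
    return matmul(concat, Wo)  # final output projection


def random_matrix(rows: int, cols: int) -> Matrix:
    return [[random.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


random.seed(0)
sentence = random_matrix(5, 2)            # 5 toy token vectors of size 2
X = build_input(sentence, sentence[1:3])  # tokens 1-2 play the aspect; X is 5 x 4
Wq, Wk, Wv, Wo = (random_matrix(4, 4) for _ in range(4))
out = multi_head_self_attention(X, Wq, Wk, Wv, Wo, num_heads=2)
print(len(out), len(out[0]))  # 5 4
```

Each head attends over its own slice of the projected features, which is how different heads can specialize in different subspaces.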

3.4.2. Point-Wise Convolutional Transformer

The Point-wise Convolutional Transformer transforms the hidden representation generated by the multi-head self-attention mechanism. It highlights features related to the aspect words, which makes the model more sensitive to, and better able to capture, sentiment information. The kernel size of the point-wise convolution is 1. For an input sequence $h$, the specific formula is as follows:

$$\mathrm{PCT}(h) = \sigma(h * W^{1} + b^{1}) * W^{2} + b^{2}$$

where $\sigma$ is the ELU nonlinear activation function, $W^{1}$ and $W^{2}$ are two trainable weight matrices, “$*$” denotes the convolution operator, and $b^{1}$ and $b^{2}$ are the biases.
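Because the kernel size is 1, the point-wise convolution sees one position at a time, so it reduces to the same two-layer transform (ELU between two affine maps) applied independently to every token vector. The sketch below makes that explicit; the helper names and toy weights are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def elu(x: float, alpha: float = 1.0) -> float:
    return x if x > 0.0 else alpha * (math.exp(x) - 1.0)


def linear(v: List[float], W: Matrix, b: List[float]) -> List[float]:
    """Affine map for one position: v (d_in) times W (d_in x d_out) plus b (d_out)."""
    return [sum(v[k] * W[k][c] for k in range(len(v))) + b[c] for c in range(len(b))]


def pointwise_conv(H: Matrix, W1: Matrix, b1: List[float], W2: Matrix, b2: List[float]) -> Matrix:
    """PCT(h) = ELU(h * W1 + b1) * W2 + b2 with kernel size 1, applied per position."""
    return [linear([elu(z) for z in linear(h, W1, b1)], W2, b2) for h in H]


I2 = [[1.0, 0.0], [0.0, 1.0]]
out = pointwise_conv([[0.5, 2.0], [-1.0, 3.0]], I2, [0.0, 0.0], I2, [0.0, 0.0])
print(out)
```

With identity weights and zero biases, positive inputs pass through unchanged and negative ones are bent by the ELU, which makes the per-position behaviour easy to check.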

3.5. Feature Fusion

Inspired by [14], only the aspect vectors in the syntax-aware module are selected for feature aggregation, because these vectors, encoded by the bidirectional LSTM and the graph convolutional network, incorporate both contextual semantic information and sentiment dependency information. These aspect vectors are average-pooled to retain most of their information, yielding the syntactic representation.

In the semantic assistance module, all outputs of the Point-wise Convolutional Transformer are average-pooled to obtain the final semantic representation. The syntactic and semantic representations are then concatenated to obtain the final integrated representation of aspect-specific sentiment information, which a linear transformation projects into the target space. Finally, the probability distribution y over sentiment polarities is obtained with the Softmax function; the specific formula is shown below.
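The fusion and classification steps can be sketched in plain Python: average-pool the syntax-module states over aspect positions only, average-pool all semantic-module states, concatenate, apply a linear layer and take the softmax. The toy states, mask and weights are hypothetical, and the weight layout (one row per class) is a choice of this sketch.

```python
import math
from typing import List

Matrix = List[List[float]]


def mean_pool(vectors: Matrix) -> List[float]:
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def softmax(z: List[float]) -> List[float]:
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def fuse_and_classify(syn_states: Matrix, aspect_mask: List[int], sem_states: Matrix,
                      W: Matrix, b: List[float]) -> List[float]:
    """Pool syntax features over aspect positions and semantic features over all
    positions, concatenate them, apply a linear layer (one row of W per class), then softmax."""
    h_syn = mean_pool([h for h, keep in zip(syn_states, aspect_mask) if keep])
    h_sem = mean_pool(sem_states)
    feat = h_syn + h_sem  # list concatenation
    logits = [sum(w * f for w, f in zip(row, feat)) + bias for row, bias in zip(W, b)]
    return softmax(logits)


syn = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]
mask = [0, 1, 1]  # positions 1 and 2 are the aspect
sem = [[1.0, 1.0], [3.0, 3.0]]
W = [[0.1, 0.2, 0.3, 0.4], [0.0, 0.0, 0.0, 0.0], [-0.1, -0.2, -0.3, -0.4]]
probs = fuse_and_classify(syn, mask, sem, W, [0.0, 0.0, 0.0])
print(probs)
```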

3.6. Training of the Model

The model uses the cross-entropy loss as its objective function to measure the difference between the predicted values and the actual labels, guiding the model to be optimized gradually during training so that it makes more accurate predictions. The specific calculation formula is shown below.

where c denotes the number of categories, s is the number of training samples, $y_i^j$ is the actual label of sample i for category j, $\hat{y}_i^j$ is the predicted probability that sample i belongs to category j, λ is the regularization coefficient, and θ is the set of all model parameters. During training, the model parameters are updated through repeated forward and backward propagation, using gradient descent to minimize the loss function.
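A plain-Python sketch of the training objective, assuming the common form of summed cross-entropy plus an L2 penalty, $-\sum_{i=1}^{s}\sum_{j=1}^{c} y_i^j \log \hat{y}_i^j + \lambda \lVert\theta\rVert^2$. The text names λ and θ but does not show the penalty, so the L2 form, the summing (rather than averaging) over samples, and the small `eps` guard against log(0) are all assumptions.

```python
import math
from typing import List


def cross_entropy_l2(y_true: List[List[float]], y_pred: List[List[float]],
                     params: List[float], lam: float, eps: float = 1e-12) -> float:
    """-sum_i sum_j y_i^j * log(yhat_i^j) + lam * ||theta||^2 (L2 form assumed).

    y_true: s x c one-hot labels; y_pred: s x c predicted probabilities;
    params: all model parameters flattened into one list.
    """
    ce = -sum(t * math.log(p + eps)
              for yt, yp in zip(y_true, y_pred) for t, p in zip(yt, yp))
    l2 = sum(w * w for w in params)
    return ce + lam * l2


loss = cross_entropy_l2([[1, 0, 0]], [[0.5, 0.25, 0.25]], params=[1.0, 2.0], lam=0.1)
print(round(loss, 4))
```

Here the cross-entropy part equals log 2 (the true class received probability 0.5) and the penalty adds 0.1 × (1² + 2²) = 0.5.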

5. Conclusions

In this paper, we propose SAGCN, a sentiment analysis model based on syntax awareness and graph convolutional networks, for the aspect-level sentiment analysis task. The model first uses the pre-trained BERT language model to obtain embedded representations of the sentence and the aspect words, and then obtains syntactic knowledge and semantic information through the syntax-aware and semantic assistance modules, respectively. In the syntax-aware module, sentence dependency graphs are obtained through dependency parsing, and external commonsense sentiment knowledge is then introduced to augment these graphs and provide more accurate sentiment representations of the different aspects of the sentence. In the semantic assistance module, multi-head self-attention and point-wise convolution supplement the semantic information and enrich the sentiment features of specific aspects. To highlight the importance of aspect information, aspect-specific information is additionally supplied to both the semantic and syntactic modules. Experimental results on three benchmark datasets demonstrate the effectiveness of the proposed SAGCN model. Compared with CDT, a basic model built on graph convolutional networks, SAGCN improves accuracy by 5.58% on the Laptop dataset and by 5.23% and 3.31% on the Restaurant and Twitter datasets, respectively.

Although the SAGCN model achieves good results on the ALSA task, it still has some limitations, which we will address in future research. First, the model relies heavily on dependency relations between words and ignores other complex relationships in the sentence, which may reduce its accuracy. To address this, we plan to integrate the constituency tree into the aspect-level sentiment analysis model to enrich the syntactic information and improve classification accuracy. Second, the dependence on pre-trained models such as BERT may affect the model's performance and the reproducibility of the experiments. To address this, we plan to freeze the weights of the pre-trained models and fix the model versions, reducing the model's sensitivity to changes in external resources and improving the reliability and reproducibility of the experiments. Third, the model architecture is relatively complex, which hinders practical application. We therefore plan to prune or compress the model to reduce its size and number of parameters, improving its scalability and efficiency in practice.