The Temperature Field Prediction and Estimation of Ti-Al Alloy Twin-Wire Plasma Arc Additive Manufacturing Using a One-Dimensional Convolution Neural Network

Abstract

1. Introduction

2. Datasets Building

2.1. Experiment Description

2.2. Finite Element Method Description

3. Methodology

3.1. Basic Workflow

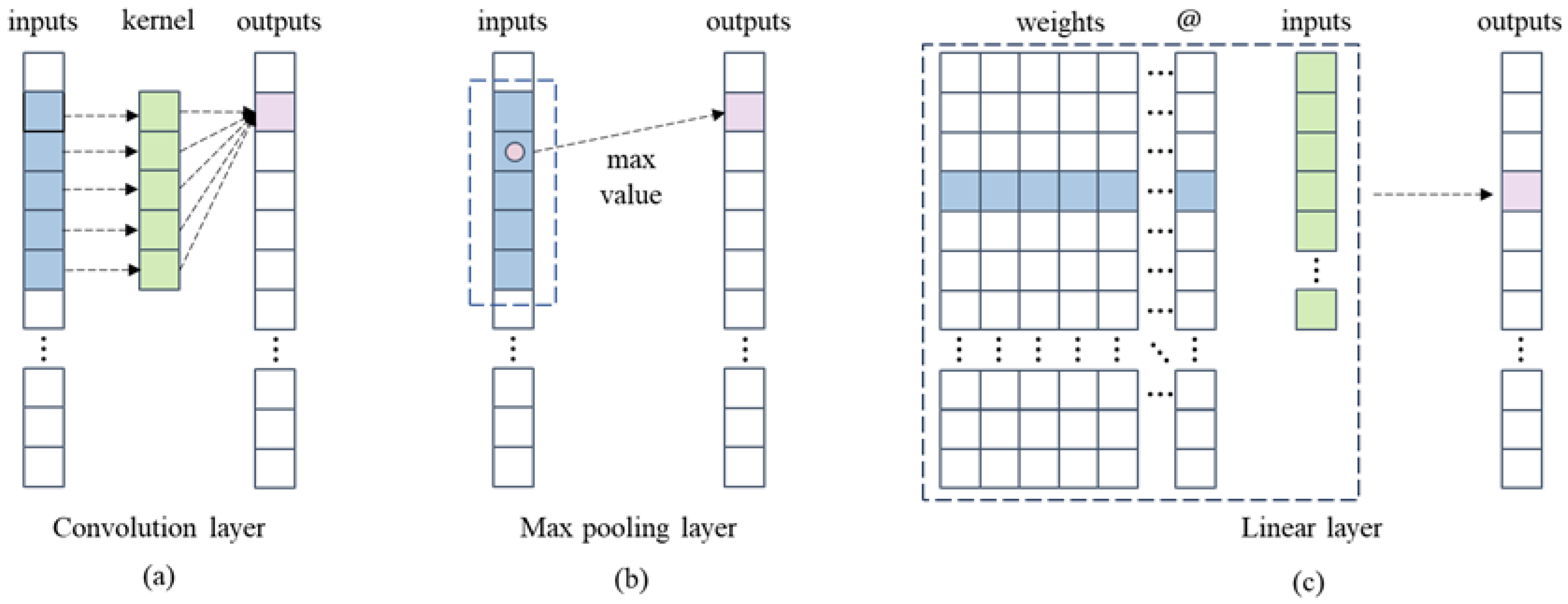

3.2. Architecture of the Conv1D Network

4. Results and Discussion

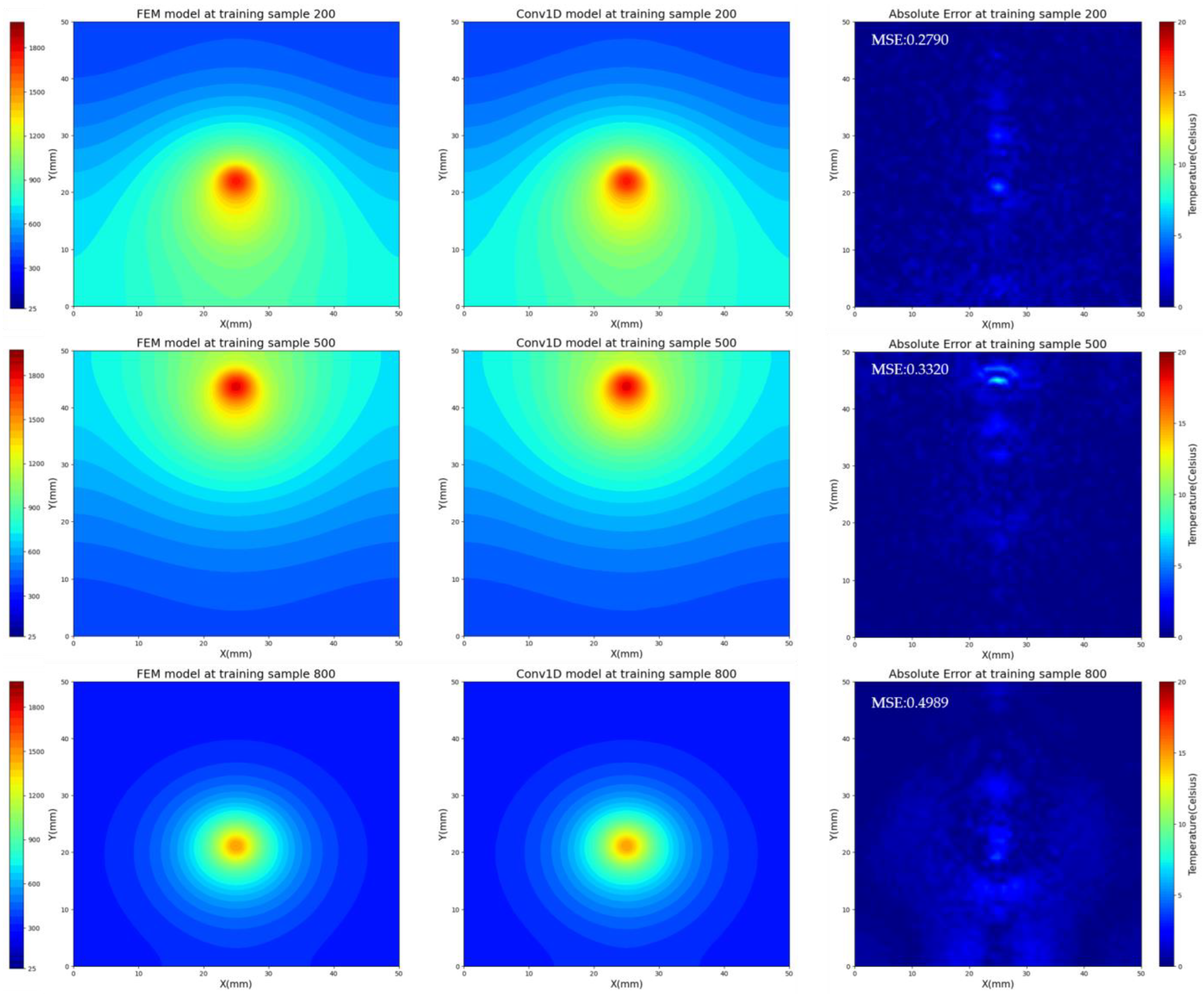

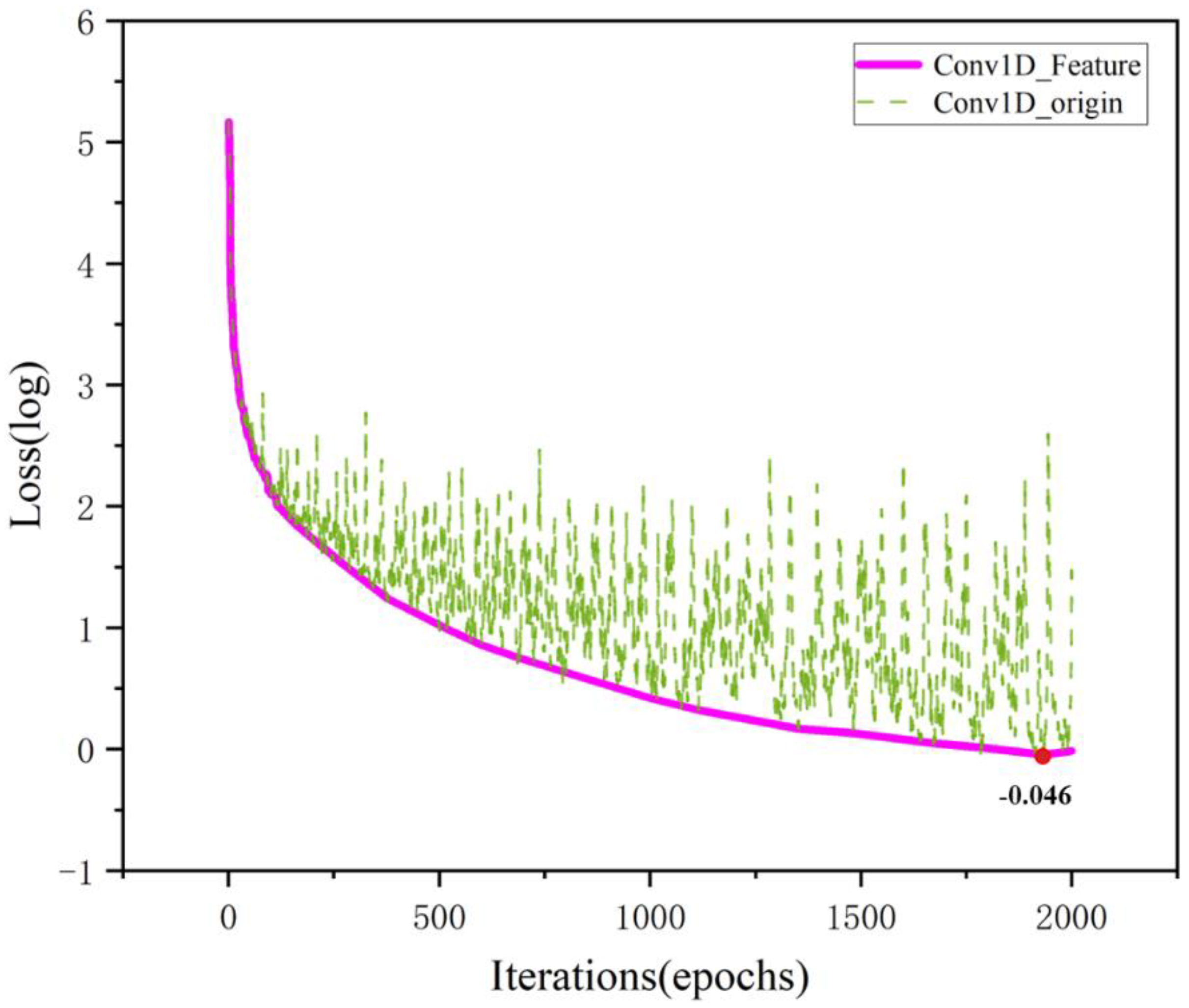

4.1. The Performance of Conv1D Model at Training Set

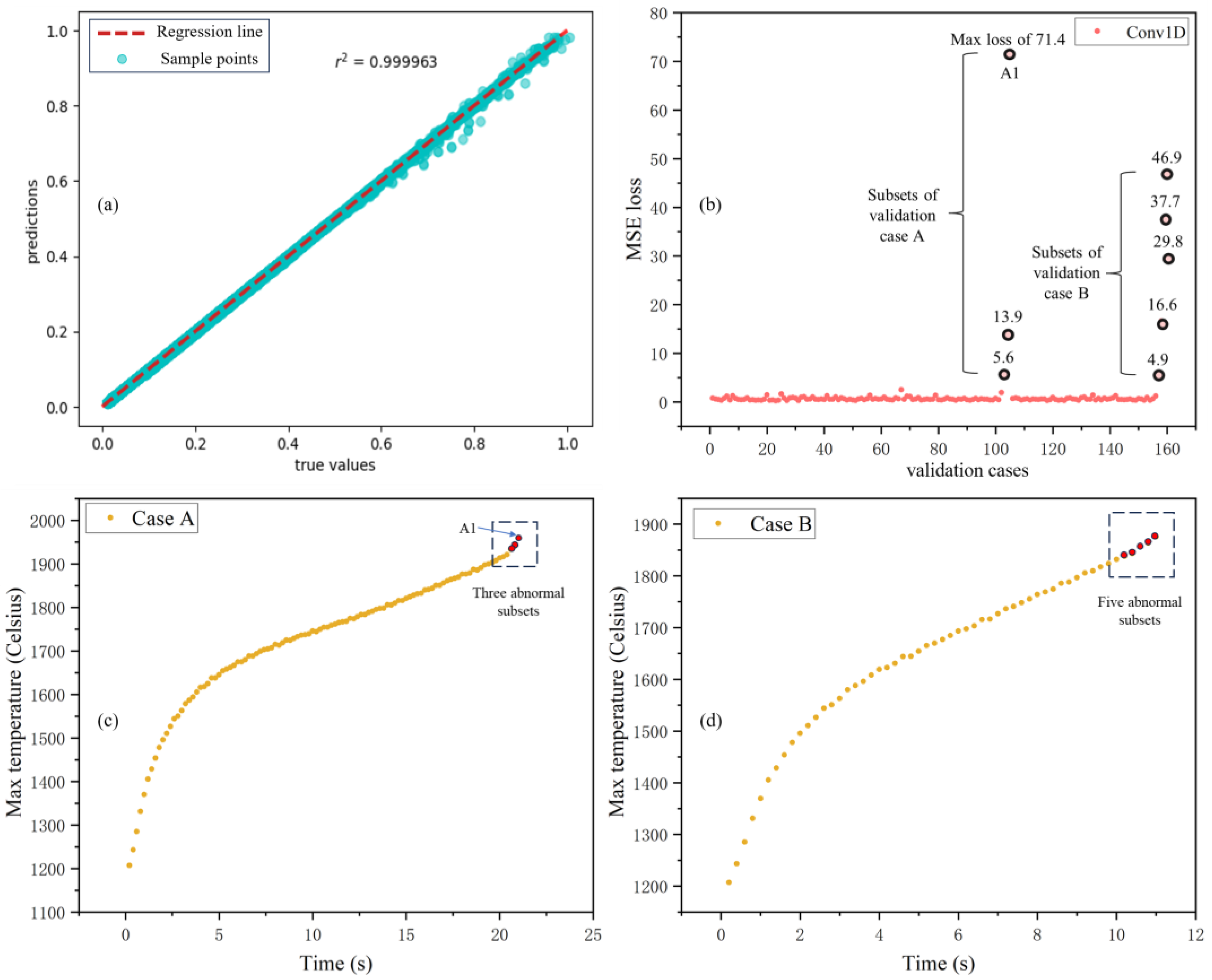

4.2. The Performance of Conv1D Model at Validation Set

4.3. The Performance of Conv1D Model for Computational Cost

5. Conclusions

- This work combined the FEM with machine learning and transfer training. A workflow of basic training followed by transfer training was proposed, which supplies a large amount of training data. It also turns the model’s training into a dynamic process, which strengthens the robustness of the model’s predictions.

- The one-dimensional convolutional neural network designed in this paper takes the processed one-dimensional features as input and predicts the temperature field during the manufacturing process. The MSE of the temperature field predicted by the network was within 0.5, and the prediction accuracy exceeded 99%.

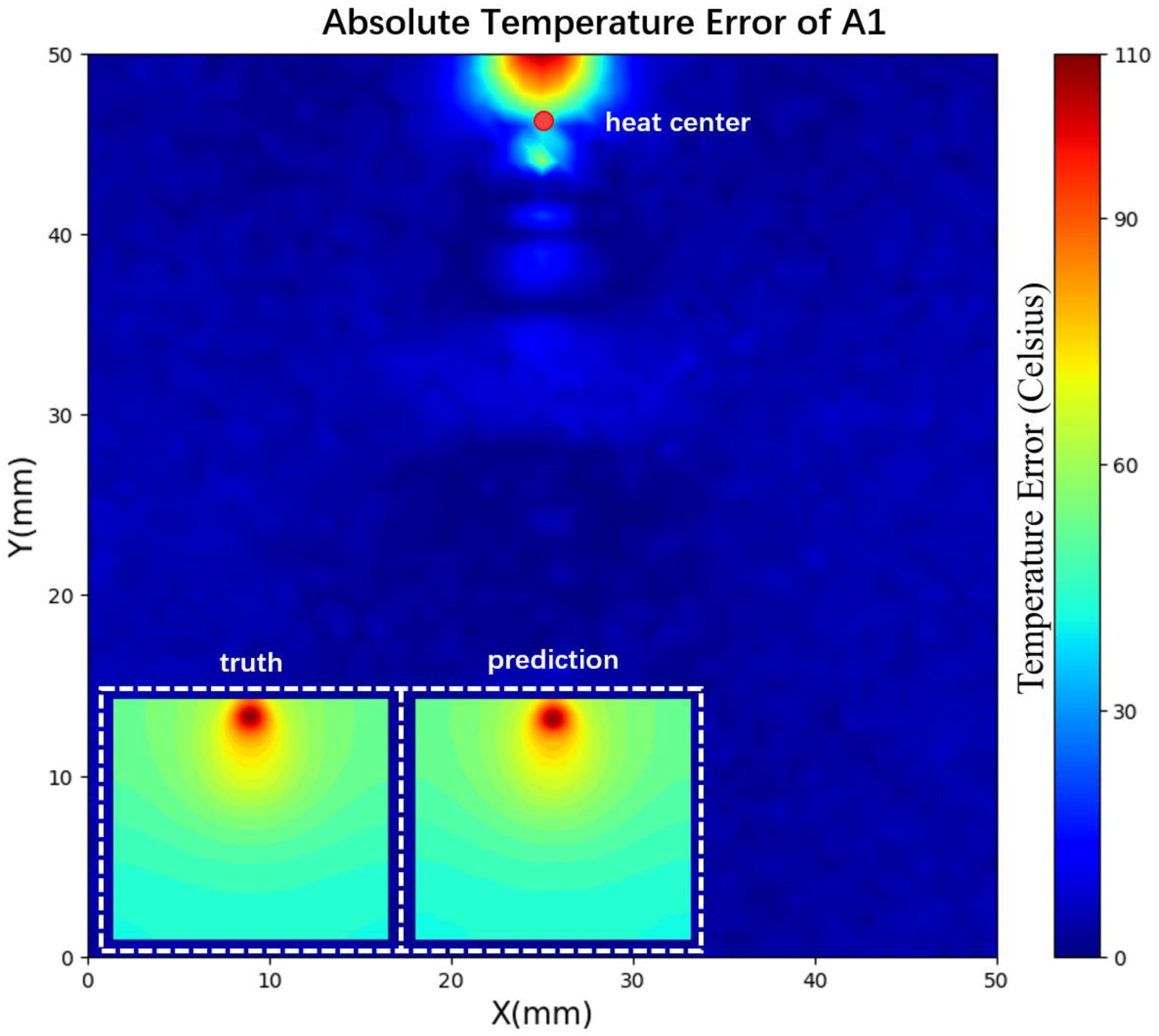

- The model performed well on the validation set and showed good robustness. The R² of the predictions on the validation set reached 0.999963, and the main error was concentrated in the high-temperature region of the workpiece. With transfer training, the prediction error could be reduced to the desired value after 30 iterations.

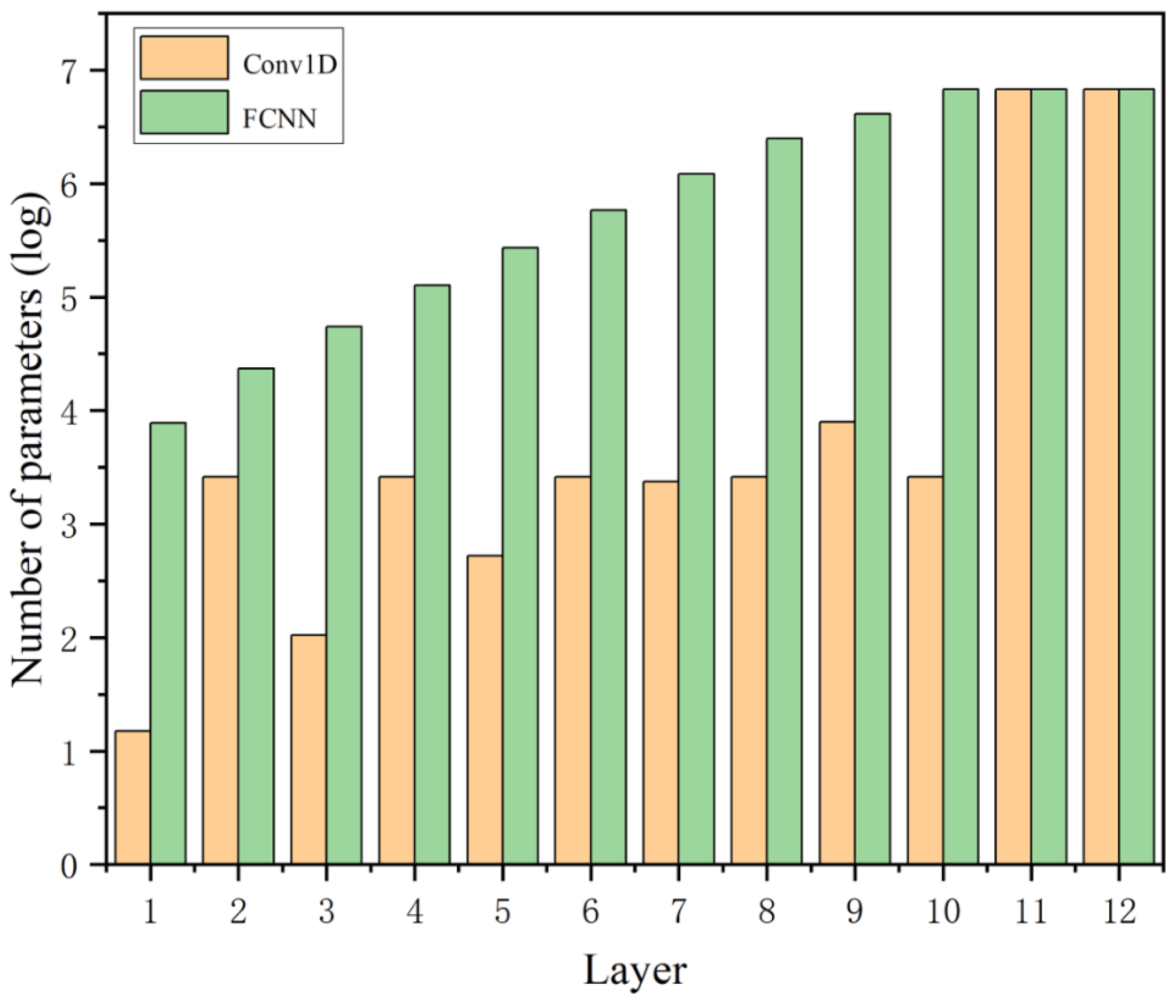

- The proposed Conv1D model was more efficient than the fully connected neural network (FCNN) model, requiring about 50% of the running time, 80% of the training time, and only 50% of the ROM occupation. Compared with conventional FEM temperature prediction, the neural network model has clear advantages in running time and ROM usage.
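The two accuracy measures quoted in the conclusions, MSE and R², can be illustrated with a minimal sketch. It assumes the FEM temperatures serve as the reference values and uses the standard definitions of both metrics; the function names and the four sample temperatures are hypothetical and are not data from the paper.

```python
from typing import Sequence


def mean_squared_error(reference: Sequence[float], predicted: Sequence[float]) -> float:
    """Average of the squared differences between reference and prediction."""
    if not reference or len(reference) != len(predicted):
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((r - p) ** 2 for r, p in zip(reference, predicted)) / len(reference)


def r2_score(reference: Sequence[float], predicted: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if not reference or len(reference) != len(predicted):
        raise ValueError("inputs must be non-empty and of equal length")
    mean_ref = sum(reference) / len(reference)
    ss_res = sum((r - p) ** 2 for r, p in zip(reference, predicted))
    ss_tot = sum((r - mean_ref) ** 2 for r in reference)
    if ss_tot == 0:
        raise ValueError("R2 is undefined when the reference values are constant")
    return 1.0 - ss_res / ss_tot


# Hypothetical nodal temperatures (illustrative only, not taken from the paper).
fem_temperatures = [300.0, 650.0, 1200.0, 1900.0]
predicted_temperatures = [300.5, 649.0, 1201.0, 1898.0]

mse = mean_squared_error(fem_temperatures, predicted_temperatures)
r2 = r2_score(fem_temperatures, predicted_temperatures)
print(f"MSE = {mse:.4f}, R2 = {r2:.8f}")
```

Because the temperature range of the field is large, the total sum of squares dominates the residual, so R² stays very close to 1 even when individual nodes differ by a degree or two. This is one reason to read R² together with the MSE and the spatial distribution of the error.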

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A


| Material | Si | Cu | Zn | Mn | Fe | Al |
|---|---|---|---|---|---|---|
| ER1100 | 0.03 | 0.02 | 0.013 | 0.003 | 0.18 | Bal |

| Material | O | Fe | N | C | H | Ti |
|---|---|---|---|---|---|---|
| ERTI-2 | 0.08–0.16 | 0.12 | 0.015 | 0.03 | 0.008 | Bal |
| TA2 | 0.25 | 0.3 | 0.05 | 0.1 | 0.015 | Bal |

| Category | Name | Units | Value |
|---|---|---|---|
| Manufacturing parameters | DC Current | A | 90 |
| | Voltage | V | 80 |
| | Welding speed | | 90 |
| Material properties | Density | | 3.525 |
| | Thermal conductivity | | |
| | Enthalpy | | |

| Model | Running Time | Read-Only Memory Occupation | R² |
|---|---|---|---|
| Conv1D | 0.7 s | 52 MB | 0.99999251 |
| FCNN | 1.2 s | 111.5 MB | 0.99999597 |
| FEM | 3 min 55 s | 721 MB | 1 |
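The cost figures in the comparison table above can be converted into ratios with a few lines of arithmetic. The sketch below copies the running times and memory values from the table; the dictionary layout and the `ratio` helper are illustrative assumptions, not part of the paper’s code.

```python
# Running time (seconds) and memory occupation (MB) copied from the table.
# The FEM time of 3 min 55 s is converted to seconds.
costs = {
    "Conv1D": {"time_s": 0.7, "memory_mb": 52.0},
    "FCNN": {"time_s": 1.2, "memory_mb": 111.5},
    "FEM": {"time_s": 3 * 60 + 55, "memory_mb": 721.0},
}


def ratio(model: str, baseline: str, key: str) -> float:
    """Return the cost of `model` divided by the cost of `baseline`."""
    return costs[model][key] / costs[baseline][key]


# Conv1D relative to the fully connected network.
time_vs_fcnn = ratio("Conv1D", "FCNN", "time_s")
memory_vs_fcnn = ratio("Conv1D", "FCNN", "memory_mb")

# FEM relative to Conv1D (how many times more expensive FEM is).
fem_time_factor = ratio("FEM", "Conv1D", "time_s")
fem_memory_factor = ratio("FEM", "Conv1D", "memory_mb")

print(f"Conv1D / FCNN running time: {time_vs_fcnn:.1%}")
print(f"Conv1D / FCNN memory:       {memory_vs_fcnn:.1%}")
print(f"FEM / Conv1D running time:  {fem_time_factor:.1f}x")
print(f"FEM / Conv1D memory:        {fem_memory_factor:.1f}x")
```

On the tabulated values, the Conv1D model takes roughly 58% of the FCNN running time and 47% of its memory, while the FEM run takes several hundred times longer than a Conv1D prediction.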


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Pan, N.; Ye, X.; Xia, P.; Zhang, G. The Temperature Field Prediction and Estimation of Ti-Al Alloy Twin-Wire Plasma Arc Additive Manufacturing Using a One-Dimensional Convolution Neural Network. Appl. Sci. 2024, 14, 661. https://doi.org/10.3390/app14020661
