Abstract

As the metaverse evolves, its immersive and interactive landscapes present novel opportunities for empathy research. This study systematically reviews how empathy manifests in metaverse environments, focusing on two distinct forms: specific empathy (context-based) and universal empathy (generalized). Our analysis reveals a predominant focus on specific empathy, driven by the immersive nature of virtual settings such as virtual reality (VR) and augmented reality (AR). However, we argue that such immersive scenarios alone are insufficient for a comprehensive exploration of empathy. To deepen empathetic engagement, we propose the integration of advanced sensory feedback mechanisms, such as haptic feedback and biometric sensing. This paper examines the current state of empathy in virtual environments, contrasts it with the potential for enriched empathetic connections through technological enhancements, and proposes future research directions. By fostering both specific and universal empathy, we envision a metaverse that not only bridges gaps but also cultivates meaningful, empathetic connections across its diverse user base.

1. Introduction

Empathy, the ability to understand and share others’ emotions, is widely acknowledged as a fundamental human trait [1,2]. It serves a crucial role in social interactions, fostering connections and cooperation among individuals [3]. With the advent of new technologies, researchers are exploring innovative ways to cultivate empathy, such as through immersive virtual reality scenarios and empathic chatbots that interpret emotional cues.

The metaverse, a computer-generated and interactive world, has evolved from chatbots, digital avatars, virtual reality (VR), and augmented reality (AR) into a fully immersive, embodied, and shared space where humans and the digital world blend seamlessly [4]. As individuals immerse themselves in virtual environments and interact with digital avatars, empathy becomes more crucial for meaningful interactions. However, empathy is often shaped by the specifics of the situation, emotional state, and past experiences [5].

Traditionally, empathy has been categorized into two broad types: cognitive empathy and emotional (or affective) empathy [6]. Cognitive empathy refers to the ability to understand another individual’s emotional state [6,7], while emotional empathy, also known as affective empathy, involves experiencing another person’s feelings oneself [6,7]. Blair describes cognitive empathy as related to the theory of mind, which is the capacity to represent or understand the mental state of others [8]. Emotional empathy, on the other hand, can be divided into two forms: mimicking another’s emotional response and reacting to emotional stimuli [6,8]. While these traditional frameworks provide a foundation, this study particularly focuses on specific and universal empathy, as they manifest within the metaverse.

Specific empathy, also known as context-based empathy, refers to the capacity to empathize with others within specific contexts or environments, in which external factors trigger empathetic responses [4]. Unlike cognitive empathy, which assumes an objective understanding of another’s mind, specific empathy acknowledges the inevitable influence of one’s own experiences and contexts on this process. This calls into question the idea that empathy can be completely objective. Universal empathy, also known as social empathy, refers instead to the ability to empathize with others without boundaries or filters [5]. It involves feeling concern for others and encompasses a broad sense of interconnectedness, rejecting notions of individuality and separation as false beliefs. Universal empathy extends beyond loved ones to include strangers and all humankind, fostering pro-social values and behaviors that prioritize the well-being of others without egoistic motives [5]. It thus represents a more generalized form of empathy that transcends specific contexts, akin to the stance of an impartial spectator [9].

Recent research has leveraged the metaverse to examine how empathy manifests and how immersive environments enhance it through embodied avatars, contextual awareness, and shared virtual experiences. However, some studies challenge the emphasis on immersive experiences alone, suggesting that empathy fatigue or desensitization can occur with repeated exposure to virtual empathy scenarios [10,11]. Others highlight the importance of social context and interpersonal communication over technology in fostering meaningful empathy. These perspectives indicate that empathy in the metaverse may not be solely reliant on immersion but requires a more nuanced approach that integrates social and psychological elements [12].

The main contribution of this article is to systematically review how empathy manifests in the metaverse and to classify it into specific and universal empathy. Additionally, we propose the integration of advanced sensory feedback mechanisms, such as haptic feedback and biometric sensing, to deepen empathetic engagement in virtual settings. By investigating the current state of research on AR, VR, and avatars, we aim to provide a comprehensive understanding of how these technologies can foster empathy and to propose future research directions. Our primary research questions are as follows:

- Can empathy in the metaverse be classified into two categories (specific vs. universal) and which category is more prevalent?

- What are the unique features that define specific empathy in metaverse environments, particularly in relation to context and storytelling?

- What are the key gaps in our current understanding of specific empathy within the metaverse context?

The remainder of this paper is structured as follows: Section 2 details the methods used for conducting the systematic review and data extraction. Section 3 explores empathy within the metaverse. Section 4 analyses previous research on empathy in the metaverse, with a focus on specific empathy. Section 5 discusses the scope of specific empathy and universal empathy, along with their limitations and future directions. Finally, Section 6 presents the paper’s conclusions.

2. Methods

This study aims to systematically review empathy applications in both situated and universal contexts within metaverse environments, employing the PRISMA methodology for this purpose [13]. Previous studies relevant to our research scope were gathered using keywords associated with empathy and immersive technologies. For empathy-related searches, terms including “empathy”, “general empathy”, “context-based empathy”, and “situated empathy” were utilized. “Empathy” serves as the core term, reflecting the overall subject of investigation. “General empathy” was selected to encompass the universal aspect of empathetic experiences that transcend specific contexts, aligning with the psychological perspective of empathy as an inherent human capacity to connect with others’ emotional states. “Context-based empathy” and “situated empathy” were included to specifically target the nuanced ways in which empathy is experienced within environments or situations, highlighting the importance of context in eliciting empathetic responses.

To encompass immersive technologies, we employed keywords including “virtual reality”, “augmented reality”, “avatar”, and “immersive empathy”. These terms were designed to capture relevant studies that explore how immersive technologies facilitate the experience of empathy. These keywords and the corresponding search strings are detailed in Table 1.

Table 1.

Keywords and search strings.

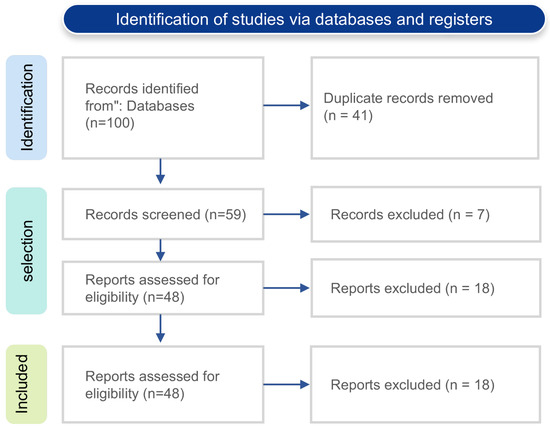
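As a rough illustration of how the two keyword groups above could be combined into a single boolean search string, consider the following minimal sketch. The helper names `or_group` and `build_query` are hypothetical, and the OR-within-group, AND-between-groups pattern is an assumption; the exact strings used in the review are those given in Table 1 and may differ, as may the syntax each database expects.

```python
# Keyword groups taken from the Methods text; the combination logic is an assumption.
EMPATHY_TERMS = ["empathy", "general empathy", "context-based empathy", "situated empathy"]
TECHNOLOGY_TERMS = ["virtual reality", "augmented reality", "avatar", "immersive empathy"]


def or_group(terms):
    """Quote each term and join the group with OR, wrapped in parentheses."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(empathy_terms, technology_terms):
    """Require at least one term from each group by joining the two OR-groups with AND."""
    return or_group(empathy_terms) + " AND " + or_group(technology_terms)


query = build_query(EMPATHY_TERMS, TECHNOLOGY_TERMS)
print(query)
```

Keeping the two groups separate makes it straightforward to adapt the same terms to each database's field tags or wildcard rules.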

A comprehensive search was conducted across the following databases: Scopus, Web of Science, IEEE Xplore, and PubMed. These databases were selected due to their extensive coverage of studies in psychology, virtual reality, and immersive technology. The search was restricted to articles published between January 2016 and July 2023, capturing the most recent developments in empathy and metaverse-related research. Figure 1 outlines our systematic review process using PRISMA 2020. Following the initial search, 100 articles were subject to screening, resulting in the removal of 41 duplicates. We conducted a title and abstract screening process for the remaining 59 articles, leading to the exclusion of 7. Following a full-text assessment, 18 articles were further excluded. We then proceeded with data extraction from the remaining 30 papers.

Figure 1.

Review process using PRISMA.

We applied the following criteria to determine which studies would be included: studies focusing on empathy in virtual or immersive environments, particularly specific or universal empathy; research on VR, AR, or avatar-based experiences that elicited empathetic responses; and articles written in English and published after 2020. The exclusion criteria were studies not directly addressing empathy, such as those focusing solely on the technical aspects of VR or AR without considering user emotional engagement, and articles not peer-reviewed, such as editorials, opinion pieces, or unpublished theses. Data extraction was performed using a pre-defined template to capture relevant information from each study. Key factors considered during the data extraction process included type of empathy (situated or universal), technologies used (VR, AR, avatars), study participants (age, demographics, sample size), methodology (qualitative or quantitative approach), and empathy measurement tools (self-report surveys, behavioral analysis, biometric tools). The extracted data are compiled and summarized in Table 2, detailing the key factors evaluated during data collection.

Table 2.

Factors considered for paper extraction.
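The pre-defined extraction template described above can be pictured as a simple record type. The sketch below is illustrative only: the class name `ExtractionRecord`, its field names, the allowed-value sets, and the `validate` helper are assumptions derived from the factors listed in the text, and the example record is an invented placeholder rather than one of the reviewed studies.

```python
from dataclasses import dataclass
from typing import List, Optional

# Allowed values inferred from the factors named in the text (assumed, not the authors' template).
EMPATHY_TYPES = {"situated", "universal"}
TECHNOLOGIES = {"VR", "AR", "avatar"}
METHODOLOGIES = {"qualitative", "quantitative"}


@dataclass
class ExtractionRecord:
    reference: str                # citation label for the study
    empathy_type: str             # "situated" or "universal"
    technologies: List[str]       # any of "VR", "AR", "avatar"
    sample_size: Optional[int]    # None when the study does not report it
    participant_notes: str        # age and demographics, free text
    methodology: str              # "qualitative" or "quantitative"
    measurement_tools: List[str]  # e.g. self-report survey, behavioral analysis, biometric tool


def validate(record: ExtractionRecord) -> List[str]:
    """Return a list of problems found in a record; an empty list means it is consistent."""
    problems = []
    if record.empathy_type not in EMPATHY_TYPES:
        problems.append(f"unknown empathy type: {record.empathy_type}")
    if not record.technologies:
        problems.append("no technology recorded")
    unknown = [tech for tech in record.technologies if tech not in TECHNOLOGIES]
    if unknown:
        problems.append(f"unknown technologies: {unknown}")
    if record.methodology not in METHODOLOGIES:
        problems.append(f"unknown methodology: {record.methodology}")
    if record.sample_size is not None and record.sample_size <= 0:
        problems.append("sample size must be positive")
    return problems


# Invented placeholder record, used only to show the shape of the data.
example = ExtractionRecord(
    reference="placeholder study",
    empathy_type="situated",
    technologies=["VR"],
    sample_size=24,
    participant_notes="adults; demographics not reported",
    methodology="quantitative",
    measurement_tools=["self-report survey"],
)
print(validate(example))
```

Validating each record against a fixed vocabulary keeps the extracted factors comparable across studies when they are later summarized.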

3. Understanding Empathy in the Metaverse

With rapid technological advancements, the metaverse has gained attention for its potential to bridge virtuality and reality [14]. A notable aspect emerging in metaverse discussions is the role of ‘empathy’ in shaping digital interpersonal connections [15]. As we explore the possibilities of the metaverse further, it becomes increasingly crucial to consider how empathy can be nurtured and manifested within this digital landscape.

Within the metaverse, empathy plays a pivotal role, bridging differences and nurturing meaningful connections through understanding and respect. Whether by navigating conflict, creating inclusive experiences, or fostering learning through diverse perspectives, empathy serves as the cornerstone for enriching the virtual world. By prioritizing empathy, we could develop a metaverse that transcends mere technology, becoming a symbol of collaboration, understanding, and, ultimately, a positive force for all. Therefore, exploring how design, interaction, and communication within the metaverse can promote empathy is a key research interest.

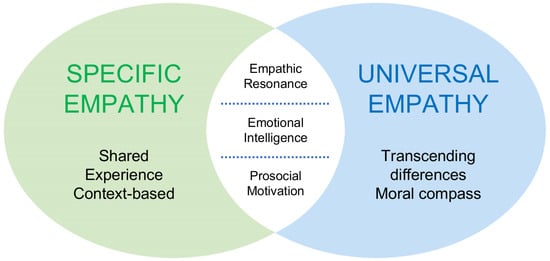

Although earlier studies have primarily focused on the metaverse’s potential for fostering empathy, a significant distinction emerges when considering the types of empathy involved. Empathy, a nuanced construct, can be divided into “specific” empathy, which relates to understanding and sharing emotions in particular contexts, and universal empathy, which involves the ability to understand and share others’ emotions regardless of context. The unique features of the metaverse seem to be more conducive to eliciting empathy within specific contexts [16]. The relationship between specific and universal empathy is illustrated in Figure 2.

Figure 2.

Types of empathy.

4. Specific Empathy in the Metaverse

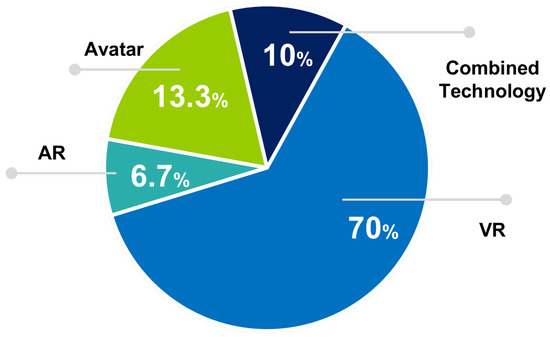

As previously discussed, the characteristics of the metaverse make the implementation of specific empathy more feasible, as it is easier to empathize within a specific environment [17]. A scenario designed by a developer to evoke empathy in participants also offers a means to control and regulate the system. Figure 3 depicts studies on specific empathy in the metaverse.

Figure 3.

Research on specific empathy in the metaverse.

4.1. VR Technology and Empathic Response

Approximately 21 studies have utilized VR technology to prompt empathetic responses, predominantly through the creation of scenarios or storytelling environments. For instance, Bae et al. employed VR to stimulate empathy through interactive storytelling, utilizing devices such as POV (point of view) shifts and flashbacks to establish deeper emotional connections [18]. However, their focus was primarily on design considerations, VR implementation, and plans for measuring empathy through a think-aloud protocol. A more in-depth evaluation of the impact of changing POVs and flashbacks on player emotions and engagement could provide valuable insights into the project’s success. Similarly, Calvert et al.’s study compared the effectiveness of VR with interactive scenes, like POV swaps and flashbacks, to passive 360° videos in promoting student engagement and empathy for soldiers’ sacrifices in history learning [19]. Although the study demonstrated that highly engaged students exhibited higher levels of empathy, it did not investigate whether increased engagement directly leads to increased empathy or if other influencing factors are at play. Additionally, another study explored VR as a platform for researching cultural imagination, memory studies, and public engagement, highlighting its potential for cultivating intimacy and empathy through VR interviews and as a form of art therapy for traumatic memories [20].

Fernandez et al. found that the use of the Virtual Journalist tool enhances thinking-based empathy and can bridge cultural empathy gaps, providing new insights into how culture influences empathy. However, the study acknowledges its small sample size, which could limit the generalizability of the findings [21]. Corriette et al., on the other hand, conducted a study using a VR training tool to aid psychiatrists in developing empathy for patients of color by simulating real-world scenarios using lifelike avatars; this study also had a very small sample [22]. Similarly, Kiarostami et al. aimed to explore and measure specific empathy towards the hardships faced by the international community through a virtual reality experiment, with the purpose of raising awareness and understanding of their unique challenges [23]. The key finding of Gerry’s study was that virtual reality technology has the potential to stimulate empathy through embodied interaction and enhance empathic accuracy, thereby fostering a deeper understanding of others’ perspectives and emotions [24]. In contrast, Posluszny et al. found that participants expressed a desire to help but felt overwhelmed and lacked an understanding of climate change [25]. Hekiert et al. demonstrated the successful use of VRPT to induce empathy towards out-groups, highlighting the potential of VR technology as a tool for promoting intercultural sensitivity and understanding [26].

Research has also delved into eliciting empathy in children. For instance, Camilleri et al. utilized a VR classroom experience to alter attitudes and perceptions towards individuals with autism, suggesting its potential to enhance empathy and understanding [27]. Similarly, Muravevskaia and Gardner-McCune demonstrated that virtual reality technology can create immersive environments that foster empathy and understanding of others’ experiences, regardless of individual backgrounds or contexts [28]. Furthermore, another study by Muravevskaia and Gardner-McCune focused on designing and evaluating empathic experiences of young children in VR environments using a VR empathy game, providing children with opportunities to interact with VR characters representing various emotions, such as fear and anger [29].

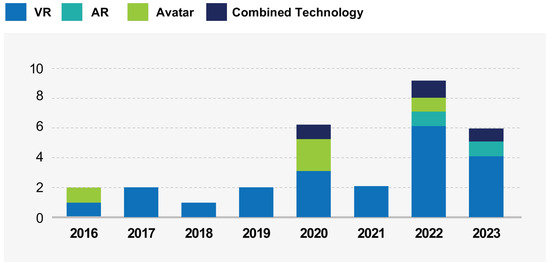

Empathy elicitation in psychological contexts has been extensively studied. Salminen et al. found that biofeedback based on EEG frontal asymmetry resulted in higher self-reported empathy compared to respiration rate feedback, with both combined leading to the highest perceived empathy [30]. Similarly, Hecquard et al. discovered that physiology-based affective haptic feedback enhanced empathy and the connection to a virtual presenter in a social VR scenario [31]. Furthermore, another study effectively increased the understanding of panic disorder among participants through a specific empathy experience within a virtual environment [32]. Li et al. proposed a VR gaming application to enhance empathy for patients with Parkinson’s disease among medical students, utilizing immersive experience and assessment [33]. Additionally, Li and Luo’s study examined VR as a tool for empathy training to prevent depression and its recurrence, focusing on fostering social support and mutual empathy for at-risk individuals [34]. Figure 4 shows the empathy-eliciting technologies organized by year.

Figure 4.

Empathy-eliciting technologies organized by year.

4.2. Social Issues and Empathy Training

Research on eliciting empathy through social issues was also considered. McEvoy et al. showed that virtual reality simulations can effectively elicit empathy-related responses in bystanders to prevent bullying. However, the simulations should have included photorealistic graphics, interactive features, and prominent customization tailored to individual needs [35]. Additionally, Lamb’s study discussed how VR simulations can assist police officers in understanding others’ perspectives and reducing biases [36]. An abstract overview of the research and the type of content or people focused on is presented in Table 3.

In addition to the effectiveness of virtual reality simulations in eliciting empathy-related responses, it is crucial to consider the potential barriers identified by Igras-Cybulska et al. Their research highlights how individuals may avoid engaging with these VR applications due to the emotional labor required in specific empathy experiences [37]. This underscores the importance of not only incorporating features like photorealistic graphics and interactivity but also addressing the emotional challenges users may face, ensuring that VR experiences are designed in a way that fosters genuine empathy and engagement.

Guarese et al. conducted research on specific empathy using AR, emphasizing the importance of avoiding blind spots and highlighting the potential of AR methods for indoor guidance [38]. Meanwhile, Valente et al. aimed to provide visualization of emotional state feedback based on raw physiological data for in situ communication [39].

Table 3.

The effect of virtual reality technologies on empathy elicitation.

| Ref. | Context | Type |
|---|---|---|
| [18] | Point of view and flashbacks | Flashback/storytelling |
| [19] | Historical events | Flashback/storytelling |
| [20] | Lost homes | Flashback/storytelling |
| [27] | Children with difficulties | For children |
| [28] | Social skill learning | For children |
| [29] | Social skill learning | For children |
| [30] | Empathy with bio-signalling | Psychology-based |
| [31] | Empathy with haptic feedback | Psychology-based |
| [32] | Panic disorder | Psychology-based |
| [33] | Parkinson’s disease | Psychology-based |
| [34] | Depression prevention | Psychology-based |
| [40] | Depression prevention | Psychology-based |
| [21] | Journalism | Culture and environment |
| [22] | People of color | Culture and environment |
| [23] | Campus | Culture and environment |
| [24] | Art creation | Culture and environment |
| [25] | Climate | Culture and environment |
| [26] | Empathy towards out-group | Culture and environment |
| [35] | Bullying prevention | Social issues |
| [37] | Empathy building | Social issues |
| [36] | Police force | Social issues |
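The grouping in Table 3 can be tallied to see how the VR studies are distributed and what share of the 30 reviewed papers they represent. The following is a minimal sketch: the `TABLE_3` list is transcribed by hand from the table, each Type label is assumed to apply to every row in its group, and the denominator of 30 is the number of included papers reported in Section 2.

```python
from collections import Counter

# (reference number, type) pairs transcribed from Table 3.
# Assumption: each Type label applies to all rows in its group.
TABLE_3 = [
    (18, "Flashback/storytelling"), (19, "Flashback/storytelling"), (20, "Flashback/storytelling"),
    (27, "For children"), (28, "For children"), (29, "For children"),
    (30, "Psychology-based"), (31, "Psychology-based"), (32, "Psychology-based"),
    (33, "Psychology-based"), (34, "Psychology-based"), (40, "Psychology-based"),
    (21, "Culture and environment"), (22, "Culture and environment"), (23, "Culture and environment"),
    (24, "Culture and environment"), (25, "Culture and environment"), (26, "Culture and environment"),
    (35, "Social issues"), (37, "Social issues"), (36, "Social issues"),
]

INCLUDED_PAPERS = 30  # number of papers retained for data extraction, per Section 2

counts = Counter(study_type for _, study_type in TABLE_3)
total = len(TABLE_3)
share = total / INCLUDED_PAPERS

for study_type, n in counts.items():
    print(f"{study_type}: {n}")
print(f"VR studies in Table 3: {total} of {INCLUDED_PAPERS} ({share:.0%})")
```

Under these assumptions the table lists 21 studies, which corresponds to 70% of the 30 included papers.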

4.3. Other Technologies for Enhanced Empathy

Several studies have explored the use of avatars to elicit specific empathy. Cinieri et al. introduced a promising prototype aimed at enhancing human–avatar interactions within virtual reality environments [41]. Hervás et al. proposed an effective avatar-based system designed to address empathy and socialization issues, particularly among individuals with Down syndrome and intellectual disabilities [42]. Loveys et al. emphasized the importance of developing and evaluating an autonomous empathy system for digital humans, with potential applications in healthcare and customer service [43]. Jin et al. explored self-associated emotional empathy perceptions through avatar social systems, examining scenarios like self-mirror and self-adorable interactions. Their study revealed that the self-associated avatar empathy system, particularly in scenarios involving self-image and authority figures, fosters higher empathic responses compared to scenarios featuring cartoon characters. Additionally, they found that the mirror effect, characterized by self-mirror empathy characters, outperformed authoritative effects in peer-to-peer interactions [44].

Combined technologies have also been used to elicit empathy. Borhani and Ortega utilized a VR system employing avatar gender swapping to explore empathy in stereotypical threat conditions, aiming to facilitate understanding and emotional sharing [45]. Kuchelmeister et al. used an interactive virtual reality experience immersing viewers into the emotional and perceptual world of Viv, a character living with dementia. This combination of VR and an avatar creates a sense of presence and engagement in a virtual environment [46]. Zhang’s study introduced an expanded interactive storytelling structure for virtual reality, integrating emotional modelling and tracking to augment user engagement and empathy with virtual characters [47].

The thirty studies reviewed here point to a consistent finding: virtual reality (VR) and augmented reality (AR) technologies markedly enhance the elicitation of specific empathy across a spectrum of applications. Approximately 70% of the reviewed studies indicate that VR technologies, through immersive storytelling and interactive environments, promote empathetic responses among participants. Building on these findings, we suggest integrating physiological bio-signals, such as heart rate variability and electroencephalogram (EEG) patterns, into VR and AR frameworks. Such integration would allow empathy elicitation experiences to be tailored to individual physiological responses, deepening engagement and improving the efficacy of empathy training interventions. It could also help close existing gaps in personalized empathy training and offer a more granular understanding of the mechanisms underpinning empathy within virtual environments.

5. Discussion

Our exploration into the metaverse reveals its profound capacity to foster empathy among users through immersive and interactive experiences. The metaverse, characterized by a shared sense of space, presence, and time, facilitates not just a new platform for interaction but a novel environment for the development and expression of empathy [5]. This digital realm offers unique opportunities for users to engage in experiences that might not be available to them in the physical world, thus opening up new avenues for empathy research and applications. In this review, we also examine how these immersive experiences foster empathy, addressing one of the key research questions of this study.

5.1. Expanding Empathy: From Situated to Universal Empathy

Empathy’s complexity, especially within the metaverse, necessitates an exploration of both specific empathy (situated or context-based) and universal empathy. Situated or context-based empathy emerges as a focal point in the metaverse, given its unique ability to provide immersive contexts, thereby enhancing the control and assessment of empathic engagement. By immersing users in these highly controlled environments, the metaverse enables us to explore empathy with precision, which is one of the key factors influencing empathy development in virtual environments. Melloni’s study further enriches this perspective by illustrating how empathy’s manifestation is significantly shaped by various contextual factors—ranging from the victim’s age, instances of aggression, and perceived risks to the dynamics of interpersonal relationships [17]. These findings emphasize the importance of context in shaping empathic responses within the metaverse, which distinguishes it as a unique platform for empathy research. This addresses the second research question regarding the factors influencing empathy development in virtual spaces.

However, while situated empathy allows us to connect with others in context-driven, immersive scenarios, it has limitations. These contexts may prioritize visual and auditory inputs while neglecting other sensory dimensions, such as touch, smell, and bio-signals [30,48,49]. This limited sensory engagement may result in a narrower scope of empathy that is heavily reliant on immediate context, potentially overlooking important emotional cues.

To address these limitations, it is necessary to consider a broader form of empathy, often referred to as universal empathy. Universal empathy, as described by Drigas, extends beyond specific contexts and involves a broader concern for others, driven by pro-social values and behaviors [5]. Its ideal is the stance of an impartial spectator [9]. However, the journey towards achieving universal empathy faces various technological, psychological, and social constraints. It is a prolonged process that necessitates the development of systems capable of simulating human-like characteristics. Despite the current limitations in technology, there have been promising advancements in this area. For example, research on integrating touch and smell into VR systems has the potential to transform how we interact with virtual environments and each other, facilitating deeper emotional connections and fostering greater empathy.

5.2. Future Directions and Technological Advancements

Research has explored the integration of touch and smell into VR and AR systems. This technological advancement is needed to overcome the limitations of situated empathy and broaden emotional engagement, as explored in this review. For example, Cowan et al. suggest that incorporating scent into VR environments could enhance brand experiences, potentially impacting consumer behavior and marketing strategies within virtual reality [49]. Although this study did not specifically investigate empathy, it underscores the significance of incorporating additional human senses into these technologies. In particular, touch has a strong connection to empathy. Gallance et al. review several studies demonstrating how touch can convey emotions and influence social behaviors, such as compliance and relationship dynamics [50]. Additionally, Hoppe et al. found that social touch cues can bridge the gap in perceived agency between agents and avatars, making agents appear more “human” [48]. Expanding upon these findings to explore the elicitation of empathy through touch or smell could yield valuable insights into fostering empathy even in the absence of contextual cues.

Analysis of EEG and ECG signals has also been conducted during empathy elicitation. Integrating EEG and ECG into VR holds significant potential for revolutionizing empathy in virtual interactions. Salminen et al. found that EEG frontal asymmetry synchronization correlates with heightened perceived empathy, suggesting a link between EEG activity and empathetic responses [30]. Extending this study may offer real-time insights into emotional states, which could create more immersive, personalized, and emotionally resonant VR experiences that foster deeper connections among users.
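To make the notion of EEG frontal asymmetry more concrete, the sketch below computes a commonly used frontal alpha asymmetry index, the natural log of right-hemisphere alpha power minus the natural log of left-hemisphere alpha power. This is an illustration under stated assumptions, not the procedure of [30]: the 8–13 Hz alpha band, the plain periodogram, the function names `band_power` and `frontal_alpha_asymmetry`, and the synthetic sine-wave signals are all chosen for this example, and the between-user synchronization analyzed in [30] is not modelled.

```python
import cmath
import math


def band_power(samples, fs, low=8.0, high=13.0):
    """Sum periodogram power of `samples` over DFT bins between `low` and `high` Hz."""
    n = len(samples)
    mean = sum(samples) / n
    centered = [x - mean for x in samples]  # remove the DC offset
    power = 0.0
    for k in range(n // 2 + 1):
        freq = k * fs / n
        if low <= freq <= high:
            coeff = sum(
                x * cmath.exp(-2j * math.pi * k * i / n)
                for i, x in enumerate(centered)
            )
            power += abs(coeff) ** 2
    return power


def frontal_alpha_asymmetry(left, right, fs):
    """Return ln(right alpha power) - ln(left alpha power) for two frontal channels."""
    return math.log(band_power(right, fs)) - math.log(band_power(left, fs))


# Synthetic two-second signals at an assumed 256 Hz sampling rate (not real EEG):
# a 10 Hz oscillation with twice the amplitude on the left channel.
fs = 256
t = [i / fs for i in range(2 * fs)]
left = [2.0 * math.sin(2 * math.pi * 10 * ti) for ti in t]
right = [1.0 * math.sin(2 * math.pi * 10 * ti) for ti in t]

faa = frontal_alpha_asymmetry(left, right, fs)
print(f"frontal alpha asymmetry: {faa:.3f}")
```

Because power scales with the square of amplitude, the synthetic example yields ln(0.25), a negative index. With real recordings, an index of this kind could be computed per user over sliding windows and fed back into the VR scene, though how such feedback should be designed is an open question.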

5.3. Limitations of Empathy in the Metaverse

While the metaverse holds significant promise for fostering empathy, several limitations must be considered. First, there are technological limitations—such as the current challenges in fully integrating multi-sensory feedback systems, including touch and smell—which may limit the immersive potential needed to evoke deep empathetic responses. Furthermore, the absence of robust longitudinal studies hinders our understanding of how sustained exposure to empathy-eliciting virtual scenarios affects users over time.

From a psychological perspective, the risk of empathy fatigue or desensitization is an important limitation. Repeated exposure to emotionally charged virtual scenarios might diminish users’ ability to maintain empathetic responses, leading to emotional detachment. Additionally, the inherent limitation of virtual environments to fully replicate real-world complexities restricts the depth of empathy that can be fostered. Addressing these challenges will be crucial for the metaverse to fully realize its potential as an empathy-enhancing tool.

5.4. Limitations of the Study

This study is subject to certain limitations. First, the technological constraints discussed above—such as the limited integration of touch, smell, and other sensory feedback mechanisms in VR environments—reduce the immersive potential necessary to evoke deeper empathy. Additionally, the reliance on short-term studies limits our understanding of the long-term effects of empathy elicitation in the metaverse. Furthermore, while this study addresses both situated and universal empathy, the lack of comprehensive multi-sensory studies leaves room for future research on how senses beyond sight and sound could influence empathic responses. Lastly, this research is exploratory, and future studies should focus on the practical application and development of tools that can reliably simulate human-like characteristics in virtual environments to foster deeper emotional connections.

Despite initial challenges, the metaverse demonstrates promising potential for cultivating empathy. Technological advancements, such as full-body tracking, richer avatars, and advanced haptic feedback, are narrowing the sensory gap. Research on AI’s emotional intelligence aims to enhance communication and understanding. Through collective efforts, the metaverse has the potential to become a powerful tool for strengthening human connections and empathy.

6. Conclusions

This study highlights the potential of the metaverse to foster empathy, particularly by examining the distinction between specific and universal empathy. The majority of the literature focuses on specific empathy, where immersive, context-driven experiences in virtual environments help elicit emotional engagement. However, universal empathy, which extends beyond immediate contexts to foster broad, pro-social connections, remains underexplored in current research.

Technological advancements, such as multi-sensory feedback (touch, smell) and bio-signal monitoring (EEG, ECG), are identified as key innovations that could deepen empathetic engagement in virtual settings. These technologies, while promising, are still in their infancy when it comes to integration within the metaverse, and further research is needed to assess their full potential for enhancing empathy.

This study also identifies several gaps in the literature. First, while the short-term effects of empathy in the metaverse have been studied, there is a lack of longitudinal research that explores the sustainability of empathetic responses over time. Second, while the role of specific empathy is well documented, there is a significant gap in our understanding of how universal empathy can be effectively fostered within virtual environments. Third, the ethical and psychological challenges of empathy fatigue and desensitization in response to repeated virtual scenarios are areas that warrant further investigation.

Practically, integrating empathy into the metaverse offers significant potential for applications in education, healthcare, and social interaction, among other fields. For instance, empathy-driven simulations could be used to develop emotional intelligence, train healthcare professionals, and promote cross-cultural understanding. However, developing these applications requires both overcoming technological barriers and addressing the ethical implications of using virtual platforms for emotional engagement.

In conclusion, the metaverse provides a rich avenue for exploring and fostering empathy, but realizing its full potential will require addressing key gaps in the literature, advancing multi-sensory technologies, and carefully considering the long-term effects of empathy-driven virtual experiences. Future research should focus on closing these gaps by developing frameworks that balance the benefits of empathy in virtual environments with the potential risks of over-exposure and desensitization.

Author Contributions

Conceptualization, A.D., H.-J.Y., S.-w.K., J.-e.S. and S.-H.K.; methodology, A.D., H.-J.Y., S.-w.K., J.-e.S. and S.-H.K.; writing—original draft preparation, A.D.; supervision and project administration were performed by H.-J.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (RS-2023-00219107). This work was also supported by the Institute of Information and Communications Technology Planning and Evaluation (IITP) under the Artificial Intelligence Convergence Innovation Human Resources Development (IITP-2023-RS-2023-00256629) grant funded by the Korea government (MSIT).

Conflicts of Interest

The authors declare no conflicts of interest.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).