Extracting Sentence Embeddings from Pretrained Transformer Models

Abstract

1. Introduction

- We provide an extensive and organized review of related work on producing sentence-level embeddings from transformer models.

- We experimentally evaluate how combinations of the most promising token aggregation and sentence representation post-processing techniques affect performance on three classes of tasks, as well as the properties of the resulting representations, across several models.

- We propose two competitive and simple static token models as baselines: random embeddings and averaged representations (“Avg”).

- We propose an improvement for BERT: the combined BERT + Avg. model. We experimentally test many weights and layers to determine how the representations of BERT and Avg. can be mixed most effectively.

2. Related Work

2.1. Composing Word Vectors

2.1.1. Formal Semantics

2.1.2. Tensor Products

2.1.3. Averaging

2.1.4. Weighted Average

2.1.5. Clustering

2.1.6. Spectral Methods

2.1.7. Using Special Tokens

2.1.8. Aggregating through Layers

2.1.9. Other Means of Composition

2.2. Reshaping Representation Spaces

2.2.1. Isotropy

Word Frequencies

Outliers

Criticism

2.2.2. Post-Processing Methods

Z-Score Normalization

All-But-The-Top (ABTT)

Whitening

2.2.3. Retrofitting

2.2.4. Other Methods

2.2.5. Similarity Measures

2.3. Learning Sentence Embeddings Directly

2.3.1. Paragraph Vectors

2.3.2. To RNN- and Transformer-Based Models

2.3.3. Contrastive Learning Approaches

Feature/Vector/Embedding-Level Augmentations

Token-Level Augmentations

Positives by Relative Placement

Positives by Two Networks

Positives and Negatives from Supervised Data

Direction of Contrastive Learning for NLP

3. Methods

3.1. Problem Formulation

Additional Context Data

3.2. Models

3.2.1. BERT

Input

Transformer Block

Multi-Head Self-Attention

3.2.2. Prompting Method (T0, T4)

3.2.3. Averaged BERT (Avg.)

Combining Averaged BERT and Regular BERT (BERT + Avg.)

3.2.4. BERT2Static (B2S, B2S-100)

3.2.5. Random Embeddings (RE)

3.3. Aggregating Tokens
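As an illustration of the simplest aggregation (the "avg." rows in Appendix A), a minimal sketch of masked mean pooling is given below. The function name `mean_pool`, the array shapes, and the toy inputs are assumptions made for this example and are not taken from our implementation.

```python
import numpy as np

def mean_pool(token_embeddings, attention_mask):
    """Average token vectors per sentence, ignoring padded positions.

    token_embeddings: array of shape (batch, seq_len, dim)
    attention_mask:   array of shape (batch, seq_len) with 1 for real tokens, 0 for padding
    """
    mask = attention_mask[:, :, None].astype(token_embeddings.dtype)
    summed = (token_embeddings * mask).sum(axis=1)
    # Guard against division by zero for sequences with no real tokens.
    counts = np.clip(mask.sum(axis=1), 1.0, None)
    return summed / counts

# Toy input: one sentence with two real tokens and one padded position.
tokens = np.array([[[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]])
mask = np.array([[1, 1, 0]])
sentence = mean_pool(tokens, mask)
```

The padded position is excluded by the mask, so only the first two token vectors contribute to the sentence vector.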

3.4. Post-Processing Embeddings

3.4.1. Z-Score Normalization
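A minimal sketch of per-dimension z-score normalization follows, assuming that the mean and standard deviation are estimated on the same set of sentence embeddings that is being transformed. The helper name `zscore`, the `eps` constant, and the synthetic data are illustrative assumptions.

```python
import numpy as np

def zscore(embeddings, eps=1e-8):
    """Standardize each embedding dimension to zero mean and unit variance.

    embeddings: array of shape (n_sentences, dim)
    eps: small constant (an assumption of this sketch) that avoids division by zero
    """
    mean = embeddings.mean(axis=0, keepdims=True)
    std = embeddings.std(axis=0, keepdims=True)
    return (embeddings - mean) / (std + eps)

# Synthetic embeddings whose dimensions have very different scales.
rng = np.random.default_rng(0)
X = rng.normal(loc=5.0, scale=[1.0, 10.0, 0.1], size=(200, 3))
Z = zscore(X)
```

After the transformation, every dimension contributes on a comparable scale to distance and similarity computations.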

3.4.2. Quantile Normalization to Uniform Distribution (quantile-u)

3.4.3. Whitening
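The following is a sketch of standard covariance whitening via singular value decomposition, under the assumption that the transformation is fitted on a set of sentence embeddings and then applied to them. The function names and the `eps` constant are hypothetical, and the sketch shows only the generic technique, not any variant-specific details.

```python
import numpy as np

def fit_whitening(embeddings, eps=1e-12):
    """Estimate the mean and a whitening matrix from embeddings of shape (n, dim)."""
    mean = embeddings.mean(axis=0, keepdims=True)
    centered = embeddings - mean
    cov = centered.T @ centered / embeddings.shape[0]
    # cov is symmetric positive semi-definite, so SVD yields its eigenvectors U
    # and eigenvalues S.
    U, S, _ = np.linalg.svd(cov)
    # Dividing column j of U by sqrt(S[j]) equals U @ diag(1 / sqrt(S)).
    W = U / np.sqrt(S + eps)
    return mean, W

def apply_whitening(embeddings, mean, W):
    """Center the embeddings and map them to a space with identity covariance."""
    return (embeddings - mean) @ W

# Synthetic correlated embeddings.
rng = np.random.default_rng(0)
X = rng.normal(size=(500, 4)) @ rng.normal(size=(4, 4))
mean, W = fit_whitening(X)
X_white = apply_whitening(X, mean, W)
cov_white = X_white.T @ X_white / X_white.shape[0]
```

In this sketch, `cov_white` is numerically close to the identity matrix, which is the defining property of a whitened representation.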

3.4.4. All-But-The-Top (ABTT)
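A minimal sketch of the general ABTT idea is shown below: the embeddings are centered and their projections onto the top principal directions are removed. The function name and the default `n_components=2` are assumptions made for illustration, not the setting used in our experiments.

```python
import numpy as np

def all_but_the_top(embeddings, n_components=2):
    """Center embeddings of shape (n, dim) and remove the dominant principal directions."""
    centered = embeddings - embeddings.mean(axis=0, keepdims=True)
    # Rows of Vt are principal directions, sorted by decreasing singular value.
    _, _, Vt = np.linalg.svd(centered, full_matrices=False)
    top = Vt[:n_components]  # shape (n_components, dim)
    # Subtract the projection of every embedding onto the top directions.
    return centered - centered @ top.T @ top

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 5))
X_abtt = all_but_the_top(X, n_components=2)
```

The output keeps the original dimensionality but has no remaining variance along the removed directions.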

3.4.5. Normalization

3.4.6. Learning Post-Processing

3.5. Evaluation

3.5.1. Clustering Tasks

Agnews

Biomedical

GoogleTS

Searchsnippets

Stackoverflow

Tweet

3.5.2. Semantic Textual Similarity (STS) Tasks

3.5.3. Downstream Classification Tasks

Binary Classification

Ternary Classification

Fine-Grained Classification

3.5.4. Isotropy

3.5.5. Alignment and Uniformity

4. Results

4.1. Token Aggregation and Post-Processing Techniques

4.1.1. Avg. versus B2S and B2S-100

4.1.2. BERT versus Random Embeddings

4.1.3. Isotropy

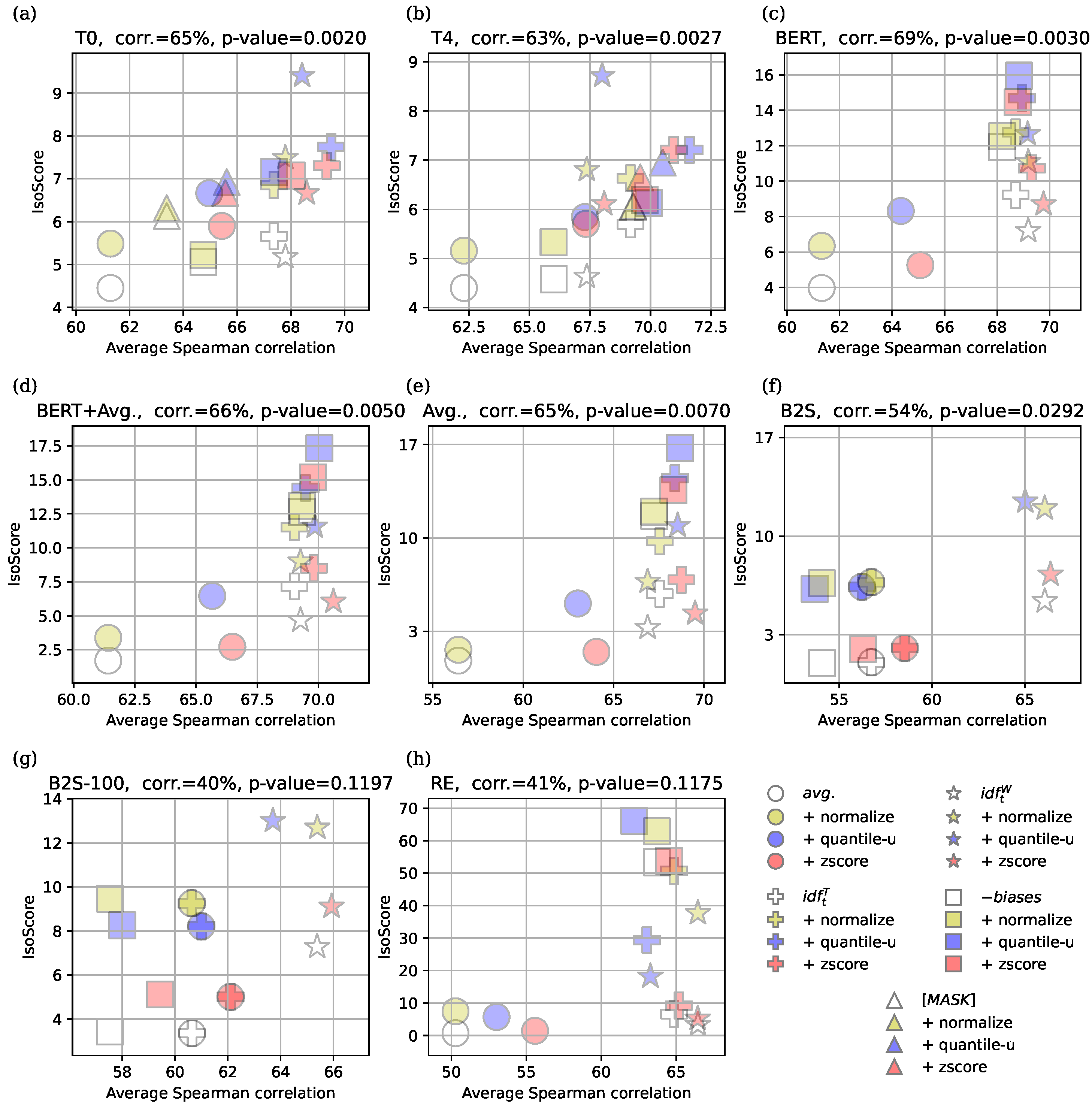

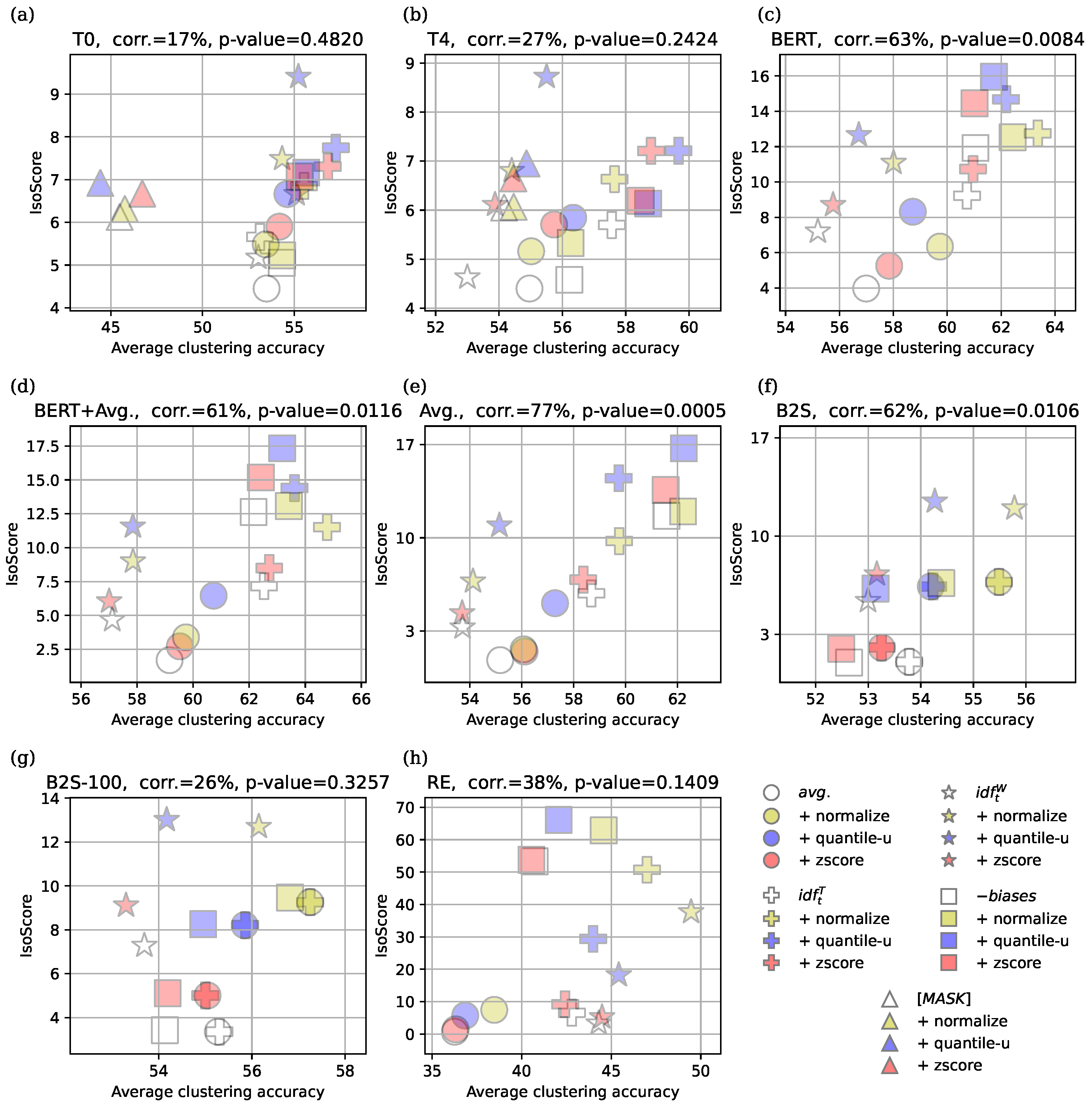

IsoScore Correlation with Task Performance

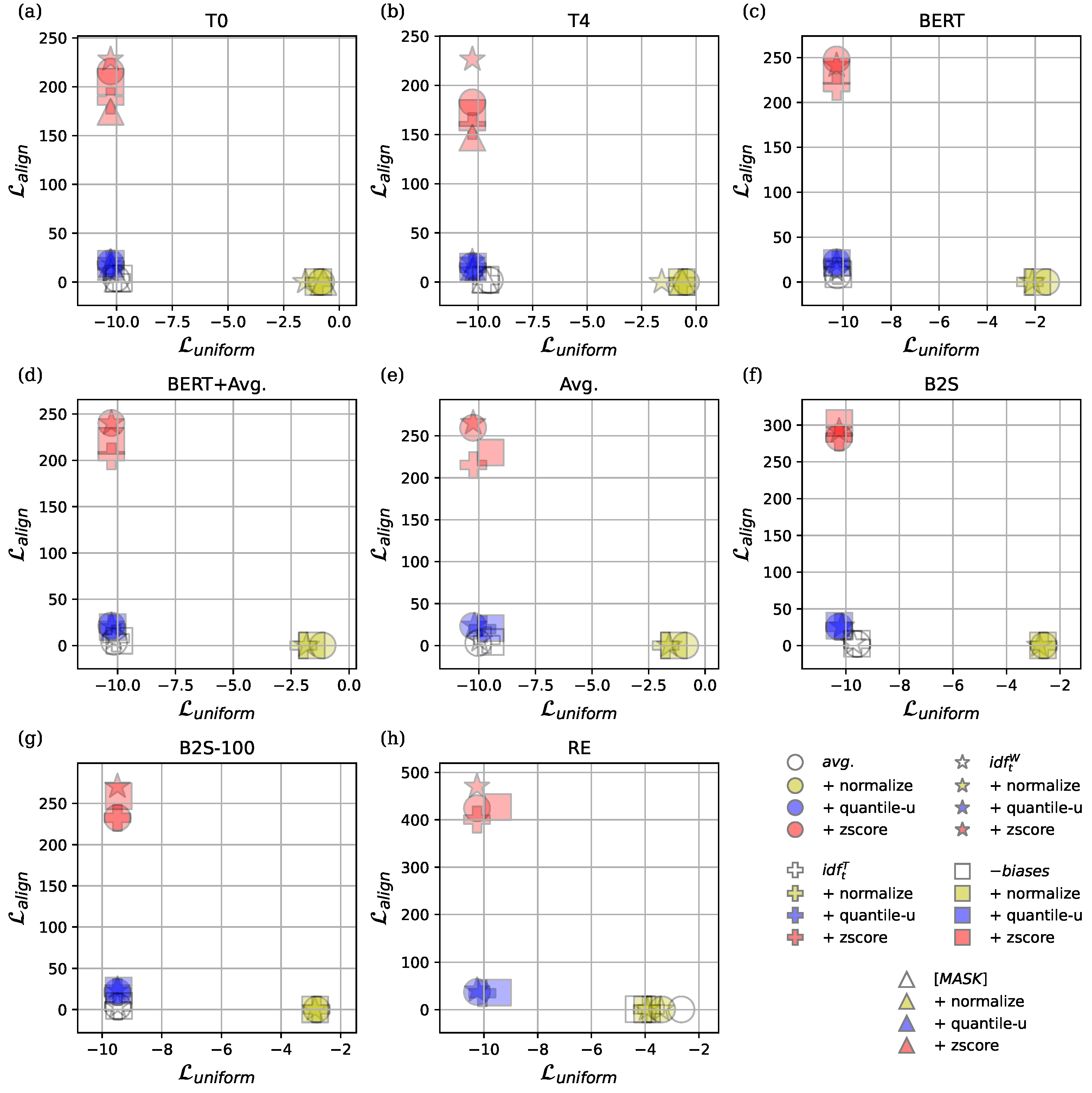

4.1.4. Alignment and Uniformity

4.2. Using Prompts

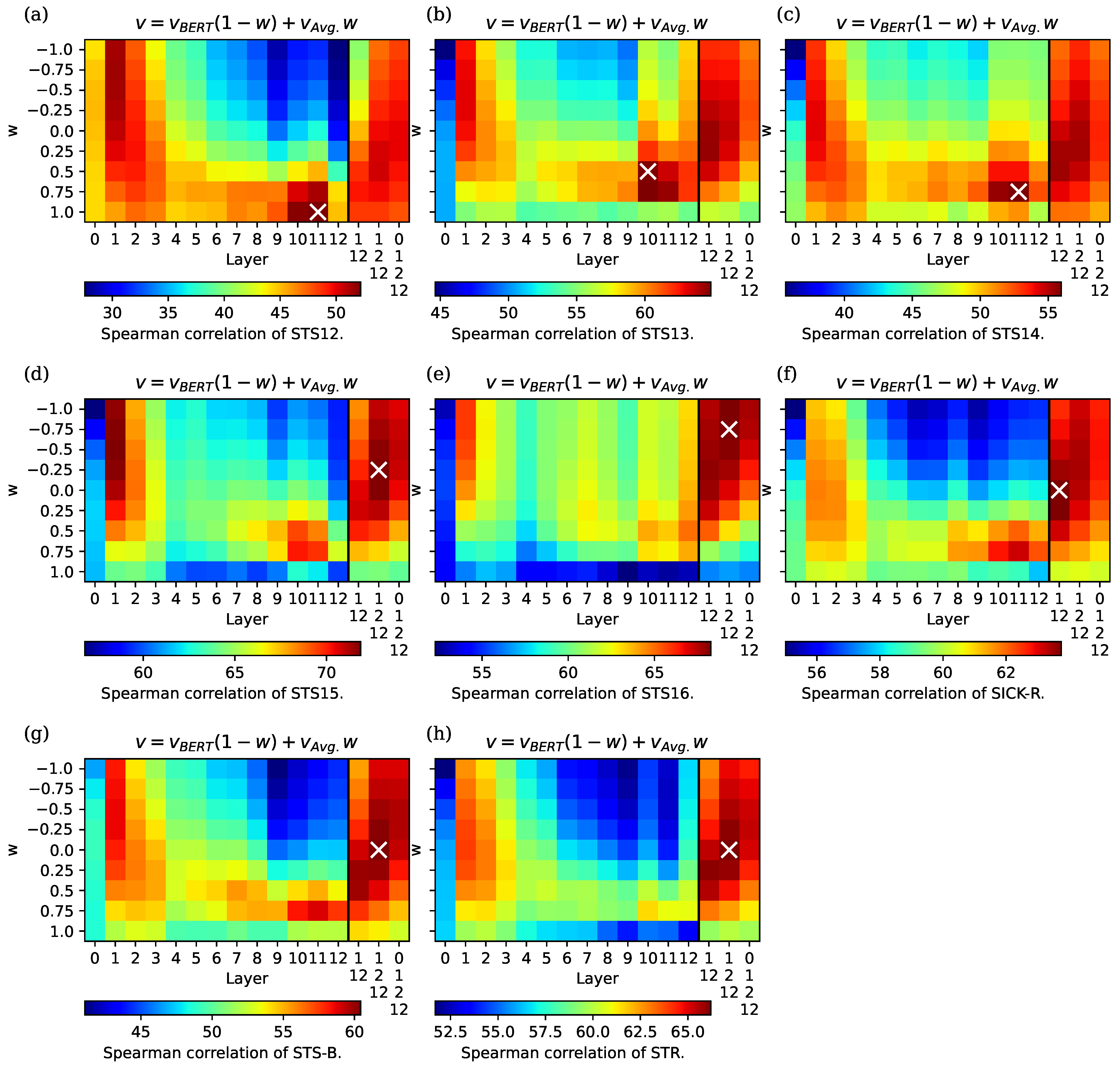

4.3. BERT + Avg. Model

Layers

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Detailed Performance on Individual Tasks

Appendix A.1. Token Aggregation and Post-Processing

| Model | T0 | T4 | BERT | BERT + Avg. | Avg. | B2S | B2S-100 | RE |

|---|---|---|---|---|---|---|---|---|

| Layer | Last | First + Last | – | |||||

| avg. | 48.7 | 53.9 | 45.1 | 48.7 | 48.5 | 47.2 | 54.2 | 34.7 |

| + normalize | 48.7 | 53.9 | 45.1 | 48.7 | 48.5 | 47.2 | 54.2 | 34.7 |

| + quantile-u | 52.1 | 56.2 | 48.7 | 51.9 | 51.5 | 45.8 | 52.4 | 40.1 |

| + quantile-uW | 50.2 | 55.1 | 46.6 | 50.7 | 50.3 | 44.6 | 52.5 | 36.9 |

| + whiten | 41.9 | 42.9 | 43.2 | 44.5 | 46.0 | 45.0 | 44.6 | 44.1 |

| + whitenW | 46.2 | 50.4 | 47.3 | 52.8 | 57.7 | 54.7 | 55.0 | 54.2 |

| + zscore | 51.5 | 54.1 | 50.2 | 53.4 | 53.1 | 49.1 | 54.7 | 43.6 |

| | 56.2 | 56.8 | 57.5 | 58.7 | 58.3 | 57.6 | 56.9 | 55.4 |

| + normalize | 56.2 | 56.8 | 57.5 | 58.7 | 58.3 | 57.7 | 56.9 | 55.4 |

| + quantile-u | 56.2 | 56.5 | 56.4 | 57.9 | 57.5 | 54.5 | 53.6 | 52.0 |

| + quantile-uW | 57.0 | 57.5 | 57.1 | 58.7 | 58.4 | 55.4 | 54.4 | 52.6 |

| + whiten | 43.7 | 43.8 | 46.1 | 46.7 | 47.2 | 45.3 | 44.7 | 44.6 |

| + whitenW | 52.6 | 53.3 | 57.5 | 58.8 | 58.7 | 57.5 | 56.8 | 55.5 |

| + zscore | 56.1 | 55.9 | 57.4 | 58.7 | 58.2 | 56.9 | 56.4 | 55.6 |

| | 59.2 | 64.4 | 57.3 | 59.0 | 59.0 | 47.2 | 54.2 | 55.1 |

| + normalize | 59.2 | 64.4 | 57.3 | 59.0 | 59.0 | 47.2 | 54.2 | 55.1 |

| + quantile-u | 58.5 | 62.6 | 56.5 | 58.2 | 58.1 | 45.8 | 52.4 | 52.3 |

| + quantile-uW | 59.1 | 63.9 | 57.0 | 58.8 | 58.9 | 44.6 | 52.5 | 52.9 |

| + whiten | 43.8 | 45.3 | 46.0 | 46.7 | 47.4 | 45.0 | 44.6 | 44.6 |

| + whitenW | 52.6 | 56.3 | 56.9 | 58.2 | 58.4 | 54.7 | 55.0 | 55.1 |

| + zscore | 56.9 | 59.7 | 57.7 | 59.3 | 59.3 | 49.1 | 54.7 | 55.6 |

| -biases | 55.1 | 58.2 | 58.6 | 62.2 | 62.9 | 45.1 | 51.9 | 57.5 |

| + normalize | 55.1 | 58.2 | 58.6 | 62.2 | 62.8 | 45.1 | 51.9 | 57.5 |

| + quantile-u | 56.0 | 59.6 | 57.9 | 60.9 | 61.2 | 44.0 | 50.3 | 54.6 |

| + quantile-uW | 54.7 | 59.1 | 58.2 | 61.5 | 61.6 | 42.7 | 50.3 | 55.1 |

| + whitenW | 51.8 | 54.0 | 57.2 | 60.2 | 61.6 | 53.2 | 53.3 | 58.1 |

| + whiten | 42.5 | 43.2 | 45.1 | 46.2 | 47.6 | 44.2 | 44.2 | 46.2 |

| + zscore | 55.1 | 57.5 | 57.8 | 60.4 | 60.6 | 47.4 | 52.7 | 58.2 |

| [MASK] | 55.2 | 60.6 | ||||||

| + normalize | 55.2 | 60.6 | ||||||

| + quantile-u | 56.0 | 60.8 | ||||||

| + quantile-uW | 56.6 | 61.7 | ||||||

| + whiten | 47.7 | 48.0 | ||||||

| + whitenW | 52.5 | 57.7 | ||||||

| + zscore | 55.4 | 59.8 | ||||||

| Model | T0 | T4 | BERT | BERT + Avg. | Avg. | B2S | B2S-100 | RE |

|---|---|---|---|---|---|---|---|---|

| Layer | Last | First + Last | – | |||||

| avg. | 62.6 | 64.7 | 64.3 | 63.4 | 56.6 | 61.4 | 66.2 | 48.8 |

| + normalize | 62.6 | 64.7 | 64.3 | 63.4 | 56.6 | 61.4 | 66.2 | 48.8 |

| + quantile-u | 68.4 | 70.9 | 68.3 | 69.0 | 65.5 | 60.4 | 68.9 | 54.8 |

| + quantile-uW | 64.0 | 66.5 | 65.7 | 66.8 | 64.0 | 59.2 | 67.2 | 53.3 |

| + whiten | 76.0 | 76.6 | 77.6 | 78.3 | 78.0 | 76.4 | 76.1 | 75.1 |

| + whitenW | 62.2 | 66.5 | 64.8 | 65.8 | 66.2 | 60.6 | 60.8 | 63.7 |

| + zscore | 70.7 | 72.5 | 70.4 | 70.7 | 67.1 | 62.8 | 68.9 | 55.9 |

| | 75.4 | 74.7 | 77.2 | 79.1 | 77.8 | 77.5 | 77.3 | 72.5 |

| + normalize | 75.4 | 74.7 | 77.2 | 79.1 | 77.8 | 77.5 | 77.3 | 72.5 |

| + quantile-u | 76.7 | 76.0 | 77.7 | 79.3 | 78.7 | 77.1 | 76.3 | 73.4 |

| + quantile-uW | 75.6 | 74.7 | 77.1 | 79.3 | 79.1 | 77.2 | 76.7 | 74.2 |

| + whiten | 76.8 | 76.0 | 78.3 | 78.6 | 77.6 | 77.1 | 76.7 | 74.6 |

| + whitenW | 68.1 | 68.1 | 74.1 | 75.2 | 75.4 | 73.1 | 73.9 | 72.7 |

| + zscore | 76.7 | 76.4 | 78.2 | 79.7 | 79.3 | 77.5 | 78.0 | 73.0 |

| | 70.8 | 73.7 | 74.8 | 76.5 | 76.0 | 61.4 | 66.2 | 68.3 |

| + normalize | 70.8 | 73.7 | 74.8 | 76.5 | 76.0 | 61.4 | 66.2 | 68.3 |

| + quantile-u | 74.7 | 77.0 | 76.0 | 77.2 | 76.7 | 60.4 | 68.9 | 71.9 |

| + quantile-uW | 72.0 | 74.6 | 74.8 | 76.4 | 76.0 | 59.2 | 67.2 | 71.5 |

| + whiten | 77.1 | 78.0 | 78.1 | 78.3 | 77.3 | 76.4 | 76.1 | 74.0 |

| + whitenW | 67.2 | 71.0 | 70.3 | 70.4 | 70.1 | 60.6 | 60.8 | 68.1 |

| + zscore | 75.9 | 77.7 | 76.2 | 76.9 | 75.8 | 62.8 | 68.9 | 69.8 |

| -biases | 66.5 | 68.7 | 68.4 | 69.9 | 68.1 | 56.3 | 60.5 | 61.3 |

| + normalize | 66.5 | 68.7 | 68.4 | 69.9 | 68.1 | 56.3 | 60.5 | 61.3 |

| + quantile-u | 70.9 | 73.5 | 70.8 | 71.9 | 70.3 | 55.0 | 63.2 | 66.2 |

| + quantile-uW | 67.4 | 70.3 | 68.6 | 69.9 | 68.2 | 53.9 | 61.3 | 64.4 |

| + whitenW | 63.1 | 67.1 | 65.2 | 65.7 | 65.9 | 56.6 | 56.7 | 63.0 |

| + whiten | 76.2 | 76.8 | 77.9 | 78.5 | 78.1 | 73.3 | 73.1 | 74.7 |

| + zscore | 73.0 | 74.9 | 72.5 | 72.9 | 70.6 | 58.5 | 64.0 | 64.6 |

| [MASK] | 63.4 | 76.2 | ||||||

| + normalize | 63.4 | 76.2 | ||||||

| + quantile-u | 68.1 | 77.3 | ||||||

| + quantile-uW | 65.2 | 76.0 | ||||||

| + whiten | 73.1 | 77.6 | ||||||

| + whitenW | 61.7 | 70.9 | ||||||

| + zscore | 69.4 | 76.9 | ||||||

| Model | T0 | T4 | BERT | BERT + Avg. | Avg. | B2S | B2S-100 | RE |

|---|---|---|---|---|---|---|---|---|

| Layer | Last | First + Last | – | |||||

| avg. | 53.9 | 54.5 | 54.6 | 55.4 | 51.7 | 55.9 | 61.2 | 48.2 |

| + normalize | 53.9 | 54.5 | 54.6 | 55.4 | 51.7 | 55.9 | 61.2 | 48.2 |

| + quantile-u | 57.8 | 60.1 | 58.1 | 60.0 | 58.2 | 53.5 | 62.9 | 52.3 |

| + quantile-uW | 55.6 | 57.1 | 57.0 | 59.4 | 58.1 | 53.5 | 62.2 | 52.1 |

| + whiten | 64.0 | 65.0 | 66.2 | 68.1 | 69.4 | 67.7 | 68.5 | 68.3 |

| + whitenW | 53.5 | 57.0 | 57.6 | 61.1 | 64.5 | 60.7 | 61.3 | 64.6 |

| + zscore | 58.6 | 61.3 | 58.7 | 61.2 | 59.6 | 55.7 | 61.3 | 53.5 |

| | 65.5 | 65.9 | 65.2 | 67.7 | 67.8 | 67.2 | 67.8 | 67.6 |

| + normalize | 65.5 | 65.9 | 65.2 | 67.7 | 67.8 | 67.2 | 67.8 | 67.6 |

| + quantile-u | 66.7 | 66.7 | 66.5 | 69.0 | 69.3 | 67.2 | 68.1 | 66.4 |

| + quantile-uW | 66.5 | 66.6 | 66.2 | 68.9 | 69.3 | 67.1 | 68.0 | 67.0 |

| + whiten | 65.4 | 65.4 | 67.6 | 68.5 | 68.8 | 68.3 | 68.7 | 67.9 |

| + whitenW | 59.2 | 59.4 | 64.7 | 67.0 | 68.2 | 66.6 | 67.4 | 68.0 |

| + zscore | 65.7 | 65.3 | 66.2 | 68.8 | 69.2 | 66.8 | 68.0 | 67.8 |

| | 61.6 | 63.9 | 64.3 | 66.7 | 67.1 | 55.9 | 61.2 | 65.5 |

| + normalize | 61.6 | 63.9 | 64.3 | 66.7 | 67.1 | 55.9 | 61.2 | 65.5 |

| + quantile-u | 64.2 | 66.5 | 65.8 | 67.8 | 68.0 | 53.5 | 62.9 | 65.3 |

| + quantile-uW | 62.9 | 65.4 | 65.3 | 67.5 | 67.8 | 53.5 | 62.2 | 65.7 |

| + whiten | 65.2 | 66.8 | 67.5 | 68.3 | 68.5 | 67.7 | 68.5 | 67.4 |

| + whitenW | 58.0 | 61.6 | 63.0 | 64.6 | 65.3 | 60.7 | 61.3 | 65.3 |

| + zscore | 64.5 | 66.8 | 65.1 | 67.1 | 67.2 | 55.7 | 61.3 | 65.7 |

| -biases | 60.7 | 62.0 | 63.8 | 66.9 | 66.9 | 53.1 | 58.1 | 64.7 |

| + normalize | 60.7 | 62.0 | 63.8 | 66.9 | 66.9 | 53.1 | 58.1 | 64.7 |

| + quantile-u | 63.4 | 65.9 | 65.2 | 67.9 | 67.8 | 50.8 | 59.8 | 65.2 |

| + quantile-uW | 61.9 | 64.3 | 64.8 | 67.7 | 67.6 | 50.9 | 59.2 | 65.3 |

| + whitenW | 57.3 | 60.4 | 62.8 | 65.5 | 67.3 | 58.5 | 58.9 | 66.2 |

| + whiten | 64.9 | 66.2 | 68.3 | 69.6 | 70.4 | 65.8 | 66.4 | 68.8 |

| + zscore | 64.1 | 66.3 | 64.8 | 67.4 | 67.2 | 53.3 | 58.6 | 65.3 |

| [MASK] | 53.9 | 63.9 | ||||||

| + normalize | 53.9 | 63.9 | ||||||

| + quantile-u | 56.1 | 65.1 | ||||||

| + quantile-uW | 54.8 | 64.7 | ||||||

| + whiten | 60.8 | 66.3 | ||||||

| + whitenW | 51.5 | 61.3 | ||||||

| + zscore | 56.7 | 65.1 | ||||||

| Model | T0 | T4 | BERT | BERT + Avg. | Avg. | B2S | B2S-100 | RE |

|---|---|---|---|---|---|---|---|---|

| Layer | Last | First + Last | – | |||||

| avg. | 70.2 | 70.6 | 70.5 | 70.0 | 64.2 | 69.8 | 74.2 | 62.1 |

| + normalize | 70.2 | 70.6 | 70.5 | 70.0 | 64.2 | 69.8 | 74.2 | 62.1 |

| + quantile-u | 71.8 | 73.7 | 72.7 | 74.3 | 72.4 | 68.9 | 73.6 | 61.4 |

| + quantile-uW | 71.5 | 72.5 | 72.3 | 74.1 | 72.6 | 69.4 | 74.4 | 62.7 |

| + whiten | 62.6 | 62.9 | 64.0 | 65.9 | 69.3 | 69.3 | 68.8 | 67.9 |

| + whitenW | 70.2 | 71.8 | 71.9 | 74.9 | 77.0 | 74.7 | 75.2 | 74.7 |

| + zscore | 71.9 | 73.0 | 72.6 | 74.3 | 72.4 | 69.3 | 73.9 | 64.3 |

| | 75.4 | 74.4 | 75.8 | 75.4 | 71.5 | 73.7 | 74.4 | 74.4 |

| + normalize | 75.4 | 74.4 | 75.8 | 75.4 | 71.5 | 73.7 | 74.4 | 74.4 |

| + quantile-u | 75.1 | 74.2 | 75.3 | 75.7 | 73.7 | 73.4 | 71.9 | 68.5 |

| + quantile-uW | 76.5 | 75.6 | 76.3 | 76.9 | 74.8 | 74.6 | 73.7 | 71.1 |

| + whiten | 62.7 | 61.4 | 64.3 | 65.2 | 66.3 | 66.8 | 66.7 | 65.0 |

| + whitenW | 72.2 | 71.6 | 75.0 | 76.5 | 76.6 | 75.4 | 75.5 | 74.4 |

| + zscore | 74.2 | 73.1 | 75.2 | 76.2 | 75.0 | 73.8 | 74.4 | 73.2 |

| | 74.1 | 75.2 | 76.2 | 76.8 | 75.1 | 69.8 | 74.2 | 73.8 |

| + normalize | 74.1 | 75.2 | 76.2 | 76.8 | 75.1 | 69.8 | 74.2 | 73.8 |

| + quantile-u | 75.2 | 77.1 | 76.0 | 76.8 | 75.7 | 68.9 | 73.6 | 69.5 |

| + quantile-uW | 75.4 | 77.0 | 76.9 | 77.8 | 76.7 | 69.4 | 74.4 | 71.6 |

| + whiten | 63.1 | 64.2 | 64.5 | 66.0 | 68.5 | 69.3 | 68.8 | 67.2 |

| + whitenW | 72.9 | 74.6 | 75.4 | 76.2 | 75.8 | 74.7 | 75.2 | 73.1 |

| + zscore | 75.0 | 76.0 | 75.9 | 76.8 | 75.8 | 69.3 | 73.9 | 72.7 |

| -biases | 72.9 | 73.3 | 76.2 | 77.3 | 75.2 | 67.3 | 70.8 | 74.3 |

| + normalize | 72.9 | 73.3 | 76.2 | 77.3 | 75.2 | 67.3 | 70.8 | 74.3 |

| + quantile-u | 74.1 | 76.1 | 76.3 | 77.5 | 76.2 | 66.3 | 70.1 | 69.6 |

| + quantile-uW | 73.7 | 75.3 | 76.8 | 78.4 | 77.3 | 66.9 | 70.9 | 71.7 |

| + whitenW | 72.6 | 73.7 | 75.4 | 77.0 | 77.3 | 72.6 | 72.6 | 74.8 |

| + whiten | 64.2 | 64.8 | 65.2 | 66.5 | 69.1 | 67.4 | 66.4 | 66.8 |

| + zscore | 74.4 | 75.1 | 75.7 | 76.9 | 75.7 | 67.0 | 70.7 | 73.6 |

| [MASK] | 67.0 | 74.1 | ||||||

| + normalize | 67.0 | 74.1 | ||||||

| + quantile-u | 68.0 | 74.7 | ||||||

| + quantile-uW | 68.3 | 75.7 | ||||||

| + whiten | 61.0 | 65.1 | ||||||

| + whitenW | 67.7 | 74.5 | ||||||

| + zscore | 68.0 | 72.9 | ||||||

| Model | T0 | T4 | BERT | BERT + Avg. | Avg. | B2S | B2S-100 | RE |

|---|---|---|---|---|---|---|---|---|

| Layer | Last | First + Last | – | |||||

| avg. | 69.1 | 69.7 | 67.9 | 65.2 | 56.4 | 55.2 | 58.5 | 55.5 |

| + normalize | 69.1 | 69.7 | 67.9 | 65.2 | 56.4 | 55.1 | 58.5 | 55.5 |

| + quantile-u | 70.1 | 72.4 | 70.3 | 71.0 | 67.0 | 55.9 | 59.5 | 54.8 |

| + quantile-uW | 69.7 | 71.5 | 69.8 | 70.0 | 65.3 | 54.6 | 57.9 | 55.1 |

| + whiten | 65.4 | 65.9 | 67.1 | 68.9 | 69.9 | 65.0 | 63.0 | 67.1 |

| + whitenW | 64.7 | 67.2 | 69.9 | 71.5 | 71.1 | 63.1 | 62.8 | 68.3 |

| + zscore | 69.3 | 71.0 | 72.0 | 73.2 | 69.8 | 60.2 | 62.3 | 60.4 |

| | 70.1 | 69.1 | 73.9 | 73.0 | 69.5 | 68.8 | 68.0 | 71.9 |

| + normalize | 70.1 | 69.1 | 73.9 | 73.0 | 69.5 | 68.8 | 68.0 | 71.9 |

| + quantile-u | 70.5 | 69.9 | 73.7 | 74.2 | 72.9 | 68.1 | 65.8 | 68.9 |

| + quantile-uW | 70.5 | 69.8 | 74.0 | 74.4 | 72.7 | 67.9 | 66.2 | 69.6 |

| + whiten | 64.6 | 64.0 | 68.0 | 68.9 | 69.1 | 65.6 | 63.4 | 67.3 |

| + whitenW | 70.0 | 69.1 | 75.6 | 76.0 | 74.8 | 69.6 | 68.7 | 72.4 |

| + zscore | 72.2 | 71.7 | 75.6 | 76.4 | 74.8 | 70.3 | 69.5 | 72.2 |

| | 72.9 | 73.1 | 72.8 | 72.0 | 69.7 | 55.2 | 58.5 | 69.1 |

| + normalize | 72.9 | 73.1 | 72.8 | 72.0 | 69.7 | 55.2 | 58.5 | 69.1 |

| + quantile-u | 73.9 | 75.0 | 72.8 | 73.0 | 71.8 | 55.9 | 59.5 | 67.3 |

| + quantile-uW | 73.7 | 74.6 | 72.7 | 72.7 | 71.2 | 54.6 | 57.9 | 67.5 |

| + whiten | 67.0 | 68.6 | 68.0 | 68.7 | 69.5 | 65.0 | 63.0 | 67.8 |

| + whitenW | 69.0 | 71.0 | 73.2 | 72.8 | 71.3 | 63.1 | 62.8 | 69.3 |

| + zscore | 73.1 | 73.1 | 74.4 | 74.4 | 73.1 | 60.2 | 62.3 | 70.1 |

| -biases | 70.1 | 71.9 | 72.2 | 72.1 | 68.4 | 52.8 | 56.3 | 65.4 |

| + normalize | 70.1 | 71.9 | 72.2 | 72.1 | 68.4 | 52.8 | 56.3 | 65.4 |

| + quantile-u | 71.1 | 73.7 | 72.3 | 73.3 | 71.6 | 53.5 | 57.5 | 64.7 |

| + quantile-uW | 70.6 | 73.2 | 72.1 | 72.7 | 70.4 | 52.3 | 55.8 | 64.4 |

| + whitenW | 65.2 | 68.0 | 71.0 | 72.1 | 71.5 | 61.1 | 60.9 | 68.5 |

| + whiten | 64.9 | 66.2 | 67.3 | 68.5 | 69.3 | 63.4 | 61.4 | 67.4 |

| + zscore | 71.1 | 72.5 | 73.3 | 74.1 | 72.0 | 58.2 | 60.4 | 66.7 |

| [MASK] | 67.2 | 71.0 | ||||||

| + normalize | 67.2 | 71.0 | ||||||

| + quantile-u | 68.2 | 71.8 | ||||||

| + quantile-uW | 67.7 | 71.7 | ||||||

| + whiten | 65.5 | 70.0 | ||||||

| + whitenW | 64.1 | 69.4 | ||||||

| + zscore | 66.6 | 68.9 | ||||||

| Model | T0 | T4 | BERT | BERT + Avg. | Avg. | B2S | B2S-100 | RE |

|---|---|---|---|---|---|---|---|---|

| Layer | Last | First + Last | – | |||||

| avg. | 60.4 | 61.0 | 59.0 | 59.9 | 54.2 | 56.1 | 60.7 | 46.5 |

| + normalize | 60.4 | 61.0 | 59.0 | 59.9 | 54.2 | 56.1 | 60.7 | 46.5 |

| + quantile-u | 67.7 | 70.9 | 63.6 | 65.2 | 61.7 | 56.9 | 61.1 | 52.4 |

| + quantile-uW | 62.8 | 65.0 | 61.3 | 63.5 | 59.7 | 54.7 | 60.4 | 50.5 |

| + whiten | 68.2 | 70.0 | 68.7 | 70.0 | 69.6 | 66.7 | 64.6 | 68.1 |

| + whitenW | 62.1 | 65.8 | 62.6 | 67.3 | 69.4 | 65.1 | 64.1 | 67.5 |

| + zscore | 68.7 | 71.1 | 65.0 | 66.7 | 63.0 | 58.8 | 62.5 | 54.6 |

| | 69.0 | 68.2 | 70.3 | 69.6 | 66.4 | 68.0 | 66.9 | 69.8 |

| + normalize | 69.0 | 68.2 | 70.3 | 69.6 | 66.4 | 68.0 | 66.9 | 69.8 |

| + quantile-u | 69.4 | 68.6 | 69.3 | 69.6 | 67.8 | 66.7 | 64.7 | 64.4 |

| + quantile-uW | 69.4 | 68.8 | 70.2 | 70.3 | 68.1 | 67.0 | 65.3 | 65.7 |

| + whiten | 65.6 | 65.0 | 67.9 | 68.0 | 67.2 | 65.8 | 64.1 | 66.5 |

| + whitenW | 67.0 | 66.6 | 71.2 | 72.1 | 71.5 | 69.8 | 68.2 | 70.4 |

| + zscore | 70.7 | 69.9 | 71.0 | 71.7 | 70.1 | 69.0 | 67.8 | 70.0 |

| | 68.8 | 70.7 | 69.6 | 69.3 | 67.3 | 56.1 | 60.7 | 67.0 |

| + normalize | 68.8 | 70.7 | 69.6 | 69.3 | 67.3 | 56.1 | 60.7 | 67.0 |

| + quantile-u | 72.4 | 75.1 | 69.2 | 69.3 | 67.7 | 56.9 | 61.1 | 64.2 |

| + quantile-uW | 70.4 | 73.2 | 69.7 | 69.6 | 67.7 | 54.7 | 60.4 | 65.0 |

| + whiten | 69.6 | 72.2 | 68.4 | 68.4 | 67.7 | 66.7 | 64.6 | 67.0 |

| + whitenW | 67.8 | 71.0 | 69.6 | 69.9 | 68.7 | 65.1 | 64.1 | 67.1 |

| + zscore | 72.4 | 74.7 | 70.1 | 70.3 | 68.6 | 58.8 | 62.5 | 67.4 |

| -biases | 64.7 | 65.4 | 70.2 | 71.0 | 67.8 | 52.9 | 57.3 | 66.6 |

| + normalize | 64.7 | 65.4 | 70.2 | 71.0 | 67.8 | 52.9 | 57.3 | 66.6 |

| + quantile-u | 70.1 | 73.4 | 69.9 | 71.0 | 68.9 | 54.2 | 58.1 | 62.8 |

| + quantile-uW | 66.1 | 69.2 | 70.3 | 71.5 | 69.0 | 51.7 | 57.1 | 63.9 |

| + whitenW | 66.1 | 68.5 | 69.6 | 71.3 | 70.9 | 61.8 | 60.8 | 68.8 |

| + whiten | 68.1 | 69.9 | 69.5 | 69.9 | 69.3 | 64.6 | 62.4 | 66.5 |

| + zscore | 71.1 | 73.2 | 70.2 | 71.1 | 68.9 | 56.1 | 59.4 | 67.4 |

| [MASK] | 68.5 | 73.3 | ||||||

| + normalize | 68.5 | 73.3 | ||||||

| + quantile-u | 70.8 | 74.8 | ||||||

| + quantile-uW | 69.3 | 74.4 | ||||||

| + whiten | 70.5 | 74.3 | ||||||

| + whitenW | 65.3 | 70.9 | ||||||

| + zscore | 70.5 | 73.7 | ||||||

| Model | T0 | T4 | BERT | BERT + Avg. | Avg. | B2S | B2S-100 | RE |

|---|---|---|---|---|---|---|---|---|

| Layer | Last | First + Last | – | |||||

| avg. | 64.4 | 64.2 | 63.8 | 63.1 | 60.3 | 60.2 | 61.2 | 53.1 |

| + normalize | 64.4 | 64.2 | 63.8 | 63.1 | 60.3 | 60.2 | 61.2 | 53.1 |

| + quantile-u | 66.1 | 66.3 | 64.9 | 64.7 | 62.5 | 61.0 | 61.8 | 54.8 |

| + quantile-uW | 64.9 | 64.7 | 64.3 | 64.1 | 61.6 | 59.7 | 61.0 | 53.1 |

| + whiten | 61.0 | 60.8 | 60.4 | 59.1 | 55.1 | 55.3 | 55.4 | 53.3 |

| + whitenW | 63.1 | 63.2 | 63.7 | 63.9 | 61.5 | 61.0 | 59.9 | 58.4 |

| + zscore | 66.3 | 66.7 | 65.0 | 64.9 | 62.8 | 62.1 | 63.1 | 56.3 |

| | 62.5 | 62.0 | 61.7 | 59.7 | 57.5 | 61.9 | 60.1 | 57.4 |

| + normalize | 62.5 | 62.0 | 61.7 | 59.7 | 57.5 | 61.9 | 60.2 | 57.4 |

| + quantile-u | 63.0 | 62.6 | 61.8 | 60.4 | 58.5 | 60.8 | 59.4 | 52.9 |

| + quantile-uW | 62.9 | 62.5 | 61.8 | 60.5 | 59.0 | 61.1 | 59.5 | 54.7 |

| + whiten | 57.7 | 57.5 | 57.1 | 55.9 | 53.8 | 54.5 | 54.7 | 52.0 |

| + whitenW | 60.9 | 61.1 | 61.9 | 60.8 | 59.0 | 61.2 | 60.1 | 56.7 |

| + zscore | 63.7 | 63.4 | 63.2 | 62.3 | 60.9 | 62.9 | 61.3 | 57.3 |

| | 64.4 | 65.0 | 62.8 | 61.1 | 59.2 | 60.2 | 61.3 | 56.8 |

| + normalize | 64.4 | 65.0 | 62.8 | 61.1 | 59.2 | 60.2 | 61.2 | 56.8 |

| + quantile-u | 66.0 | 66.3 | 63.2 | 61.6 | 59.7 | 61.0 | 61.8 | 54.3 |

| + quantile-uW | 65.0 | 65.6 | 63.0 | 61.3 | 59.5 | 59.7 | 61.0 | 55.2 |

| + whiten | 59.9 | 60.3 | 58.1 | 56.8 | 54.4 | 55.3 | 55.4 | 52.5 |

| + whitenW | 63.5 | 64.2 | 62.4 | 61.0 | 58.9 | 61.0 | 59.9 | 56.3 |

| + zscore | 66.2 | 66.5 | 63.7 | 62.4 | 60.8 | 62.1 | 63.1 | 57.0 |

| -biases | 65.1 | 65.9 | 65.6 | 65.1 | 63.8 | 59.0 | 59.7 | 59.7 |

| + normalize | 65.1 | 65.9 | 65.6 | 65.1 | 63.8 | 59.0 | 59.7 | 59.7 |

| + quantile-u | 66.9 | 67.7 | 66.0 | 65.7 | 64.7 | 59.9 | 60.2 | 56.5 |

| + quantile-uW | 65.6 | 66.5 | 65.8 | 65.5 | 64.4 | 58.6 | 59.3 | 57.3 |

| + whitenW | 64.8 | 65.4 | 64.7 | 63.9 | 61.3 | 59.8 | 58.6 | 58.0 |

| + whiten | 61.3 | 61.1 | 60.3 | 58.9 | 55.4 | 54.2 | 54.1 | 53.1 |

| + zscore | 67.1 | 67.8 | 66.3 | 66.3 | 65.6 | 61.1 | 62.0 | 60.4 |

| [MASK] | 64.8 | 65.0 | ||||||

| + normalize | 64.8 | 65.0 | ||||||

| + quantile-u | 67.1 | 66.0 | ||||||

| + quantile-uW | 65.6 | 65.6 | ||||||

| + whiten | 61.7 | 61.8 | ||||||

| + whitenW | 63.3 | 64.2 | ||||||

| + zscore | 67.1 | 66.2 | ||||||

| Model | T0 | T4 | BERT | BERT + Avg. | Avg. | B2S | B2S-100 | RE |

|---|---|---|---|---|---|---|---|---|

| Layer | Last | First + Last | – | |||||

| avg. | 60.9 | 59.8 | 65.4 | 65.6 | 59.4 | 48.2 | 48.9 | 53.3 |

| + normalize | 60.9 | 59.8 | 65.4 | 65.6 | 59.4 | 48.2 | 48.9 | 53.3 |

| + quantile-u | 65.7 | 67.8 | 68.1 | 69.2 | 65.4 | 47.3 | 47.9 | 53.6 |

| + quantile-uW | 63.6 | 64.1 | 67.3 | 68.8 | 65.3 | 48.0 | 48.6 | 53.7 |

| + whiten | 68.9 | 69.6 | 70.1 | 69.7 | 67.0 | 51.4 | 48.8 | 62.1 |

| + whitenW | 65.2 | 67.7 | 66.7 | 68.7 | 68.6 | 51.3 | 49.2 | 63.2 |

| + zscore | 66.6 | 68.9 | 66.7 | 67.5 | 64.5 | 50.1 | 50.1 | 56.0 |

| | 68.2 | 67.8 | 71.9 | 70.9 | 66.3 | 53.8 | 51.5 | 62.6 |

| + normalize | 68.2 | 67.8 | 71.9 | 70.9 | 66.3 | 53.8 | 51.5 | 62.6 |

| + quantile-u | 69.7 | 69.5 | 72.5 | 72.7 | 70.0 | 52.4 | 49.7 | 59.7 |

| + quantile-uW | 69.2 | 69.0 | 72.5 | 72.8 | 70.4 | 53.3 | 50.8 | 61.2 |

| + whiten | 69.5 | 68.8 | 70.1 | 68.9 | 66.2 | 51.9 | 49.5 | 61.1 |

| + whitenW | 68.2 | 67.8 | 70.8 | 70.6 | 67.7 | 52.4 | 49.9 | 61.7 |

| + zscore | 69.5 | 68.9 | 71.4 | 71.2 | 68.7 | 54.0 | 52.2 | 62.4 |

| | 67.1 | 67.5 | 71.8 | 70.8 | 66.9 | 48.2 | 48.9 | 63.3 |

| + normalize | 67.1 | 67.5 | 71.8 | 70.8 | 66.9 | 48.2 | 48.9 | 63.3 |

| + quantile-u | 71.0 | 73.2 | 72.1 | 71.9 | 69.3 | 47.3 | 47.9 | 59.3 |

| + quantile-uW | 69.8 | 71.6 | 72.0 | 72.0 | 69.7 | 48.0 | 48.6 | 60.6 |

| + whiten | 70.4 | 71.1 | 70.4 | 69.0 | 66.3 | 51.4 | 48.8 | 61.4 |

| + whitenW | 69.0 | 71.3 | 70.4 | 70.0 | 67.6 | 51.3 | 49.2 | 62.1 |

| + zscore | 70.8 | 73.2 | 71.4 | 71.3 | 69.3 | 50.1 | 50.1 | 63.1 |

| -biases | 62.8 | 62.5 | 70.7 | 70.1 | 64.9 | 46.0 | 45.8 | 60.3 |

| + normalize | 62.8 | 62.5 | 70.7 | 70.1 | 64.9 | 46.0 | 45.8 | 60.3 |

| + quantile-u | 66.7 | 69.6 | 72.2 | 72.1 | 68.6 | 45.7 | 45.0 | 58.0 |

| + quantile-uW | 65.2 | 67.1 | 71.9 | 71.9 | 68.4 | 46.2 | 45.5 | 58.9 |

| + whitenW | 67.8 | 69.3 | 69.2 | 69.2 | 66.4 | 49.4 | 47.0 | 60.1 |

| + whiten | 69.1 | 69.5 | 69.6 | 68.5 | 65.0 | 49.6 | 46.9 | 59.6 |

| + zscore | 68.3 | 70.8 | 69.7 | 69.1 | 65.9 | 48.5 | 47.6 | 60.0 |

| [MASK] | 67.0 | 70.1 | ||||||

| + normalize | 67.0 | 70.1 | ||||||

| + quantile-u | 70.7 | 73.5 | ||||||

| + quantile-uW | 69.1 | 73.2 | ||||||

| + whiten | 70.5 | 72.3 | ||||||

| + whitenW | 68.1 | 72.6 | ||||||

| + zscore | 70.7 | 73.1 | ||||||

| Model | T0 | T4 | BERT | BERT + Avg. | Avg. | B2S | B2S-100 | RE |

|---|---|---|---|---|---|---|---|---|

| Layer | Last | First + Last | – | |||||

| avg. | 85.4 | 80.7 | 81.2 | 84.4 | 79.0 | 85.0 | 85.0 | 28.0 |

| + normalize | 85.5 | 80.6 | 85.6 | 86.1 | 79.6 | 85.7 | 85.9 | 27.2 |

| + quantile-u | 85.2 | 86.9 | 84.0 | 85.1 | 79.7 | 85.2 | 85.2 | 27.5 |

| + quantile-uW | 85.4 | 86.7 | 85.3 | 85.7 | 80.6 | 85.4 | 85.4 | 27.2 |

| + whiten | 34.3 | 31.3 | 31.3 | 31.5 | 30.5 | 29.9 | 29.9 | 28.8 |

| + whitenW | 75.2 | 75.0 | 72.7 | 72.8 | 58.6 | 70.6 | 69.0 | 28.4 |

| + zscore | 86.0 | 81.2 | 81.3 | 83.8 | 79.8 | 84.8 | 85.2 | 27.7 |

| | 79.5 | 81.4 | 80.6 | 84.0 | 78.0 | 83.6 | 83.7 | 39.9 |

| + normalize | 84.9 | 85.6 | 84.7 | 85.1 | 78.3 | 85.0 | 84.9 | 41.4 |

| + quantile-u | 85.8 | 85.7 | 81.7 | 84.4 | 78.6 | 84.2 | 84.2 | 41.2 |

| + quantile-uW | 85.6 | 85.8 | 81.8 | 84.7 | 79.1 | 84.6 | 84.7 | 43.4 |

| + whiten | 30.9 | 32.0 | 31.3 | 31.8 | 30.7 | 30.7 | 30.1 | 27.6 |

| + whitenW | 74.5 | 78.1 | 73.8 | 74.4 | 70.9 | 72.5 | 72.1 | 38.4 |

| + zscore | 85.9 | 81.8 | 80.4 | 84.0 | 78.9 | 83.7 | 83.7 | 41.0 |

| | 80.1 | 82.1 | 80.9 | 82.5 | 79.3 | 85.0 | 85.0 | 28.7 |

| + normalize | 85.2 | 82.2 | 85.1 | 84.8 | 81.7 | 85.7 | 85.9 | 28.5 |

| + quantile-u | 85.9 | 87.0 | 82.4 | 81.9 | 80.9 | 85.2 | 85.2 | 28.6 |

| + quantile-uW | 85.9 | 87.0 | 82.0 | 84.4 | 82.1 | 85.3 | 85.5 | 28.4 |

| + whiten | 30.2 | 32.6 | 30.9 | 31.3 | 29.9 | 29.9 | 29.8 | 28.0 |

| + whitenW | 72.1 | 68.4 | 73.0 | 71.9 | 45.5 | 70.6 | 69.0 | 28.9 |

| + zscore | 85.9 | 82.6 | 81.1 | 82.1 | 79.7 | 84.8 | 85.2 | 28.8 |

| -biases | 85.7 | 86.4 | 81.4 | 85.6 | 83.4 | 84.0 | 83.8 | 28.5 |

| + normalize | 85.7 | 86.6 | 86.8 | 86.8 | 83.8 | 85.3 | 85.5 | 28.4 |

| + quantile-u | 85.4 | 86.7 | 85.7 | 85.4 | 83.4 | 84.7 | 84.7 | 28.5 |

| + quantile-uW | 85.6 | 87.1 | 86.1 | 86.0 | 83.6 | 84.6 | 84.9 | 29.4 |

| + whitenW | 73.5 | 73.4 | 72.4 | 72.1 | 55.5 | 68.8 | 67.6 | 28.2 |

| + whiten | 31.1 | 31.5 | 33.0 | 30.4 | 30.3 | 29.5 | 29.8 | 28.3 |

| + zscore | 86.0 | 87.1 | 81.7 | 84.8 | 83.5 | 84.4 | 84.5 | 28.1 |

| [MASK] | 74.8 | 81.9 | ||||||

| + normalize | 75.1 | 82.2 | ||||||

| + quantile-u | 63.1 | 82.1 | ||||||

| + quantile-uW | 76.1 | 84.2 | ||||||

| + whiten | 30.9 | 31.8 | ||||||

| + whitenW | 52.9 | 48.3 | ||||||

| + zscore | 76.1 | 81.7 | ||||||

| Model | T0 | T4 | BERT | BERT + Avg. | Avg. | B2S | B2S-100 | RE |

|---|---|---|---|---|---|---|---|---|

| Layer | Last | First + Last | – | |||||

| avg. | 32.7 | 32.4 | 34.8 | 36.1 | 30.7 | 33.1 | 37.0 | 19.4 |

| + normalize | 32.8 | 32.0 | 35.1 | 36.3 | 30.3 | 33.8 | 37.6 | 20.7 |

| + quantile-u | 33.6 | 32.5 | 37.0 | 39.1 | 33.1 | 32.9 | 36.7 | 21.0 |

| + quantile-uW | 33.4 | 32.5 | 35.4 | 37.9 | 34.5 | 33.0 | 37.0 | 21.1 |

| + whiten | 29.4 | 30.2 | 27.2 | 23.2 | 18.2 | 12.7 | 13.2 | 15.9 |

| + whitenW | 34.8 | 34.7 | 40.2 | 42.7 | 36.5 | 35.0 | 34.4 | 29.4 |

| + zscore | 33.5 | 33.0 | 36.1 | 37.4 | 32.1 | 32.4 | 36.1 | 20.0 |

| | 31.0 | 30.0 | 32.3 | 32.3 | 28.1 | 34.5 | 34.5 | 32.1 |

| + normalize | 31.2 | 30.2 | 32.5 | 32.6 | 28.7 | 34.7 | 34.8 | 35.2 |

| + quantile-u | 32.5 | 31.3 | 33.6 | 33.6 | 29.8 | 33.9 | 35.0 | 32.4 |

| + quantile-uW | 31.7 | 30.7 | 31.9 | 33.6 | 30.7 | 33.7 | 34.4 | 33.4 |

| + whiten | 27.3 | 27.6 | 26.4 | 20.6 | 17.1 | 11.8 | 12.4 | 16.7 |

| + whitenW | 32.8 | 32.1 | 37.0 | 38.7 | 35.4 | 37.2 | 37.2 | 30.8 |

| + zscore | 32.0 | 30.8 | 32.6 | 32.5 | 28.2 | 34.3 | 34.3 | 31.8 |

| | 33.4 | 33.6 | 36.0 | 36.2 | 32.6 | 33.1 | 37.0 | 30.0 |

| + normalize | 33.6 | 34.1 | 36.0 | 37.1 | 33.9 | 33.8 | 37.6 | 32.2 |

| + quantile-u | 35.4 | 35.3 | 36.9 | 37.2 | 34.1 | 32.9 | 36.7 | 31.3 |

| + quantile-uW | 35.0 | 35.7 | 36.6 | 37.5 | 34.7 | 33.0 | 37.0 | 32.2 |

| + whiten | 28.3 | 30.8 | 20.9 | 19.4 | 16.3 | 12.7 | 13.2 | 15.2 |

| + whitenW | 36.8 | 37.4 | 40.4 | 39.9 | 33.1 | 35.0 | 34.4 | 29.2 |

| + zscore | 34.7 | 35.4 | 36.9 | 36.8 | 32.4 | 32.4 | 36.1 | 29.5 |

| -biases | 35.1 | 32.2 | 39.0 | 39.2 | 37.2 | 31.6 | 35.9 | 29.2 |

| + normalize | 35.2 | 32.5 | 39.4 | 39.4 | 37.7 | 32.9 | 36.9 | 30.8 |

| + quantile-u | 36.0 | 33.0 | 39.8 | 39.6 | 37.2 | 32.3 | 36.2 | 29.9 |

| + quantile-uW | 36.6 | 34.6 | 39.6 | 39.9 | 37.3 | 32.4 | 36.3 | 30.9 |

| + whitenW | 38.6 | 38.7 | 41.7 | 40.3 | 34.4 | 34.4 | 33.4 | 28.7 |

| + whiten | 29.8 | 31.6 | 22.0 | 17.1 | 13.5 | 12.5 | 12.0 | 13.2 |

| + zscore | 35.6 | 32.7 | 39.4 | 39.5 | 37.0 | 30.9 | 35.6 | 28.7 |

| [MASK] | 24.0 | 34.8 | ||||||

| + normalize | 25.1 | 35.3 | ||||||

| + quantile-u | 27.4 | 35.9 | ||||||

| + quantile-uW | 25.1 | 34.7 | ||||||

| + whiten | 21.9 | 28.4 | ||||||

| + whitenW | 23.7 | 32.8 | ||||||

| + zscore | 26.4 | 35.0 | ||||||

| Model | T0 | T4 | BERT | BERT + Avg. | Avg. | B2S | B2S-100 | RE |

|---|---|---|---|---|---|---|---|---|

| Layer | Last | First + Last | – | |||||

| avg. | 63.5 | 64.3 | 66.1 | 66.1 | 65.1 | 66.2 | 65.8 | 61.2 |

| + normalize | 62.7 | 64.6 | 66.2 | 67.1 | 66.2 | 68.3 | 67.7 | 67.1 |

| + quantile-u | 64.8 | 65.1 | 67.1 | 67.4 | 67.3 | 66.3 | 66.9 | 63.5 |

| + quantile-uW | 63.9 | 64.7 | 66.4 | 68.0 | 66.7 | 66.9 | 67.2 | 63.6 |

| + whiten | 59.2 | 60.3 | 57.5 | 57.3 | 54.5 | 58.1 | 58.4 | 53.1 |

| + whitenW | 62.4 | 62.0 | 65.6 | 66.1 | 65.2 | 65.8 | 65.8 | 61.7 |

| + zscore | 63.6 | 65.0 | 66.7 | 67.1 | 65.9 | 66.0 | 66.6 | 61.8 |

| | 63.5 | 62.6 | 65.2 | 66.2 | 65.6 | 64.5 | 65.8 | 63.8 |

| + normalize | 64.3 | 63.1 | 66.0 | 66.5 | 66.0 | 66.5 | 66.7 | 69.5 |

| + quantile-u | 64.0 | 65.4 | 66.6 | 66.5 | 67.0 | 66.4 | 66.1 | 64.1 |

| + quantile-uW | 64.4 | 64.9 | 67.5 | 67.8 | 66.6 | 67.4 | 65.9 | 63.4 |

| + whiten | 57.9 | 59.9 | 58.3 | 56.5 | 55.2 | 58.9 | 58.6 | 51.4 |

| + whitenW | 61.9 | 61.4 | 66.2 | 67.7 | 66.7 | 65.5 | 66.7 | 63.3 |

| + zscore | 63.5 | 63.3 | 66.1 | 66.4 | 65.7 | 65.9 | 65.6 | 63.9 |

| | 63.2 | 64.9 | 66.4 | 67.4 | 65.9 | 66.2 | 66.0 | 61.3 |

| + normalize | 64.7 | 64.7 | 67.7 | 68.6 | 65.9 | 68.3 | 67.7 | 67.4 |

| + quantile-u | 65.2 | 65.5 | 68.5 | 67.9 | 67.5 | 66.6 | 67.0 | 63.7 |

| + quantile-uW | 64.8 | 66.2 | 67.7 | 67.3 | 67.3 | 67.1 | 67.2 | 62.9 |

| + whiten | 58.8 | 59.2 | 58.7 | 59.0 | 55.6 | 57.7 | 58.5 | 55.1 |

| + whitenW | 60.1 | 60.4 | 65.1 | 66.7 | 64.8 | 65.8 | 65.8 | 61.2 |

| + zscore | 63.8 | 65.0 | 66.4 | 67.6 | 66.3 | 66.0 | 66.3 | 62.1 |

| -biases | 63.2 | 64.1 | 66.0 | 66.3 | 65.7 | 65.7 | 65.3 | 62.0 |

| + normalize | 62.6 | 63.4 | 66.3 | 66.8 | 65.8 | 67.0 | 66.8 | 67.1 |

| + quantile-u | 64.8 | 65.1 | 66.7 | 68.0 | 67.7 | 65.7 | 65.4 | 64.0 |

| + quantile-uW | 64.4 | 65.5 | 67.1 | 67.4 | 66.7 | 66.5 | 66.7 | 64.1 |

| + whitenW | 60.9 | 61.6 | 66.0 | 66.4 | 65.2 | 63.9 | 65.5 | 63.2 |

| + whiten | 61.3 | 60.3 | 59.2 | 59.2 | 57.6 | 57.3 | 57.1 | 56.9 |

| + zscore | 63.8 | 64.9 | 65.7 | 66.7 | 66.1 | 65.3 | 65.0 | 62.7 |

| [MASK] | 45.5 | 56.0 | ||||||

| + normalize | 45.9 | 56.5 | ||||||

| + quantile-u | 47.8 | 56.2 | ||||||

| + quantile-uW | 46.9 | 56.4 | ||||||

| + whiten | 53.4 | 53.1 | ||||||

| + whitenW | 43.0 | 53.3 | ||||||

| + zscore | 47.0 | 56.0 | ||||||

| Model | T0 | T4 | BERT | BERT + Avg. | Avg. | B2S | B2S-100 | RE |

|---|---|---|---|---|---|---|---|---|

| Layer | Last | First + Last | – | |||||

| avg. | 67.3 | 73.3 | 72.2 | 70.8 | 63.6 | 72.0 | 72.0 | 23.3 |

| + normalize | 68.2 | 73.8 | 81.7 | 73.1 | 65.7 | 76.4 | 76.3 | 20.4 |

| + quantile-u | 69.8 | 72.4 | 74.1 | 73.0 | 66.8 | 75.2 | 72.9 | 22.3 |

| + quantile-uW | 69.7 | 73.5 | 81.6 | 74.7 | 68.6 | 75.7 | 75.1 | 21.7 |

| + whiten | 33.8 | 36.1 | 32.3 | 31.7 | 26.3 | 22.9 | 24.8 | 21.2 |

| + whitenW | 47.7 | 49.2 | 58.3 | 61.8 | 56.3 | 58.8 | 58.3 | 24.0 |

| + zscore | 67.9 | 73.2 | 72.7 | 71.3 | 63.7 | 72.3 | 72.1 | 22.8 |

| | 67.1 | 67.0 | 71.9 | 70.4 | 63.0 | 69.9 | 69.9 | 29.2 |

| + normalize | 68.4 | 69.4 | 81.8 | 73.0 | 65.2 | 81.4 | 81.4 | 36.6 |

| + quantile-u | 70.3 | 71.6 | 73.8 | 72.5 | 65.9 | 74.4 | 72.2 | 30.7 |

| + quantile-uW | 70.8 | 72.5 | 80.6 | 74.0 | 67.1 | 74.8 | 80.7 | 32.1 |

| + whiten | 32.7 | 34.8 | 33.0 | 31.7 | 23.7 | 27.3 | 24.1 | 20.5 |

| + whitenW | 47.8 | 51.9 | 64.5 | 66.1 | 61.9 | 67.6 | 68.5 | 30.0 |

| + zscore | 69.8 | 70.0 | 72.2 | 70.4 | 62.7 | 70.1 | 70.2 | 29.7 |

| | 55.1 | 75.3 | 72.0 | 70.5 | 59.3 | 72.0 | 72.0 | 26.1 |

| + normalize | 63.2 | 75.6 | 81.2 | 76.9 | 60.9 | 76.4 | 76.3 | 24.8 |

| + quantile-u | 68.8 | 78.5 | 74.6 | 76.1 | 59.1 | 75.2 | 72.9 | 26.1 |

| + quantile-uW | 71.2 | 78.7 | 81.3 | 77.0 | 61.0 | 75.7 | 75.1 | 25.8 |

| + whiten | 30.6 | 35.7 | 30.8 | 29.8 | 25.0 | 22.9 | 24.8 | 21.3 |

| + whitenW | 46.8 | 46.1 | 62.7 | 63.0 | 52.9 | 58.8 | 58.3 | 26.7 |

| + zscore | 68.6 | 79.1 | 73.3 | 72.4 | 59.0 | 72.3 | 72.1 | 25.8 |

| -biases | 66.5 | 70.4 | 82.6 | 73.0 | 71.7 | 70.5 | 71.9 | 22.8 |

| + normalize | 67.0 | 70.5 | 82.9 | 74.8 | 73.8 | 75.2 | 80.9 | 22.6 |

| + quantile-u | 68.8 | 80.3 | 80.7 | 73.8 | 72.3 | 73.5 | 73.8 | 23.8 |

| + quantile-uW | 69.8 | 80.6 | 82.6 | 76.5 | 74.2 | 75.6 | 72.7 | 22.9 |

| + whitenW | 48.4 | 46.0 | 64.5 | 64.4 | 54.9 | 57.1 | 53.6 | 23.9 |

| + whiten | 31.6 | 36.3 | 33.2 | 29.3 | 22.7 | 23.3 | 23.9 | 22.0 |

| + zscore | 67.6 | 79.8 | 82.1 | 72.6 | 71.4 | 72.6 | 72.6 | 23.3 |

| [MASK] | 61.1 | 69.9 | ||||||

| + normalize | 61.0 | 69.6 | ||||||

| + quantile-u | 60.3 | 69.6 | ||||||

| + quantile-uW | 61.4 | 69.8 | ||||||

| + whiten | 31.1 | 34.0 | ||||||

| + whitenW | 39.4 | 45.3 | ||||||

| + zscore | 61.8 | 69.8 | ||||||

| Model | T0 | T4 | BERT | BERT + Avg. | Avg. | B2S | B2S-100 | RE |
|---|---|---|---|---|---|---|---|---|
| Layer | Last | First + Last | – | |||||
| avg. | 25.0 | 30.9 | 35.9 | 43.5 | 41.9 | 14.8 | 20.2 | 39.2 |
| + normalize | 25.4 | 30.7 | 36.9 | 42.8 | 42.3 | 15.0 | 20.7 | 39.0 |
| + quantile-u | 27.8 | 32.7 | 38.3 | 45.9 | 45.1 | 14.7 | 20.5 | 38.6 |
| + quantile-uW | 25.7 | 32.4 | 38.4 | 46.9 | 47.9 | 15.0 | 20.4 | 40.0 |
| + whiten | 39.8 | 39.7 | 34.2 | 17.8 | 13.0 | 9.8 | 12.6 | 12.2 |
| + whitenW | 28.9 | 33.9 | 49.4 | 57.3 | 62.0 | 21.7 | 23.5 | 56.6 |
| + zscore | 26.7 | 33.1 | 37.3 | 44.2 | 43.6 | 14.0 | 19.8 | 39.2 |
| | 28.6 | 29.8 | 30.6 | 37.7 | 39.1 | 16.1 | 17.5 | 55.4 |
| + normalize | 29.0 | 30.3 | 31.1 | 37.2 | 38.0 | 16.8 | 18.6 | 62.7 |
| + quantile-u | 29.8 | 31.1 | 32.0 | 38.1 | 40.7 | 16.8 | 17.5 | 57.7 |
| + quantile-uW | 30.0 | 30.9 | 32.1 | 39.9 | 40.8 | 16.3 | 18.0 | 58.8 |
| + whiten | 30.6 | 30.1 | 26.1 | 15.4 | 12.2 | 9.7 | 9.7 | 12.5 |
| + whitenW | 29.8 | 31.4 | 40.0 | 50.3 | 55.8 | 16.2 | 17.1 | 56.8 |
| + zscore | 30.2 | 30.6 | 31.1 | 37.4 | 38.7 | 16.1 | 16.8 | 54.1 |
| | 36.2 | 39.0 | 54.2 | 63.4 | 60.5 | 14.8 | 20.2 | 61.6 |
| + normalize | 36.9 | 38.6 | 55.1 | 66.6 | 62.4 | 15.0 | 20.7 | 70.6 |
| + quantile-u | 38.7 | 40.7 | 57.1 | 64.3 | 63.1 | 14.7 | 20.5 | 63.7 |
| + quantile-uW | 38.6 | 40.0 | 57.7 | 65.6 | 63.0 | 15.0 | 20.4 | 65.2 |
| + whiten | 43.5 | 44.6 | 32.1 | 19.7 | 16.6 | 9.8 | 12.6 | 16.7 |
| + whitenW | 35.7 | 36.7 | 59.9 | 63.1 | 58.7 | 21.7 | 23.5 | 61.6 |
| + zscore | 38.3 | 40.6 | 54.5 | 62.0 | 58.9 | 14.0 | 19.8 | 60.7 |
| -biases | 28.5 | 34.5 | 44.8 | 56.7 | 59.7 | 14.3 | 18.2 | 53.9 |
| + normalize | 28.4 | 35.3 | 45.2 | 57.0 | 58.7 | 14.6 | 19.4 | 63.6 |
| + quantile-u | 31.0 | 36.8 | 44.5 | 56.7 | 59.3 | 14.0 | 19.0 | 58.0 |
| + quantile-uW | 30.8 | 35.5 | 46.4 | 59.9 | 60.6 | 14.8 | 19.3 | 57.4 |
| + whitenW | 35.1 | 36.1 | 55.4 | 62.8 | 61.0 | 21.0 | 20.9 | 52.2 |
| + whiten | 42.6 | 38.7 | 21.4 | 15.7 | 11.3 | 8.0 | 11.6 | 11.3 |
| + zscore | 31.5 | 36.9 | 45.2 | 57.5 | 57.9 | 13.4 | 18.5 | 53.0 |
| [MASK] | 26.4 | 35.5 | ||||||
| + normalize | 26.5 | 36.1 | ||||||
| + quantile-u | 26.0 | 37.7 | ||||||
| + quantile-uW | 24.9 | 37.0 | ||||||
| + whiten | 22.7 | 41.0 | ||||||
| + whitenW | 18.0 | 32.2 | ||||||
| + zscore | 27.3 | 37.4 | ||||||

| Model | T0 | T4 | BERT | BERT + Avg. | Avg. | B2S | B2S-100 | RE |
|---|---|---|---|---|---|---|---|---|
| Layer | Last | First + Last | – | |||||
| avg. | 47.0 | 48.2 | 51.8 | 54.1 | 50.7 | 51.7 | 51.7 | 46.5 |
| + normalize | 46.2 | 48.3 | 53.0 | 53.2 | 52.4 | 53.7 | 55.2 | 56.4 |
| + quantile-u | 46.6 | 48.5 | 51.9 | 53.9 | 51.7 | 50.8 | 52.9 | 48.2 |
| + quantile-uW | 46.9 | 48.0 | 53.6 | 53.4 | 53.0 | 51.4 | 51.1 | 47.8 |
| + whiten | 15.9 | 16.3 | 15.9 | 17.0 | 18.5 | 18.1 | 17.2 | 17.6 |
| + whitenW | 44.9 | 44.1 | 51.1 | 51.2 | 51.7 | 50.5 | 49.9 | 49.0 |
| + zscore | 47.5 | 49.0 | 53.0 | 53.3 | 51.4 | 50.0 | 50.5 | 46.4 |
| | 48.6 | 47.2 | 50.5 | 52.1 | 48.5 | 49.4 | 50.8 | 45.4 |
| + normalize | 48.3 | 47.8 | 51.9 | 52.7 | 48.5 | 50.3 | 50.4 | 51.5 |
| + quantile-u | 49.0 | 47.9 | 52.7 | 51.9 | 48.8 | 49.8 | 49.9 | 46.4 |
| + quantile-uW | 48.3 | 47.9 | 52.7 | 52.8 | 48.3 | 49.4 | 49.6 | 46.0 |
| + whiten | 15.3 | 15.1 | 15.7 | 16.2 | 18.3 | 17.4 | 17.0 | 15.9 |
| + whitenW | 43.0 | 42.3 | 50.0 | 50.8 | 50.1 | 49.8 | 49.3 | 45.5 |
| + zscore | 49.3 | 46.7 | 52.2 | 51.4 | 48.0 | 48.9 | 49.1 | 46.3 |
| | 50.8 | 50.5 | 55.0 | 55.2 | 54.5 | 51.7 | 51.7 | 49.0 |
| + normalize | 49.6 | 50.6 | 55.1 | 54.7 | 53.6 | 53.7 | 55.2 | 58.5 |
| + quantile-u | 49.7 | 51.0 | 53.5 | 54.3 | 53.7 | 50.8 | 52.9 | 50.5 |
| + quantile-uW | 50.0 | 52.3 | 53.4 | 54.8 | 53.3 | 51.4 | 51.1 | 51.7 |
| + whiten | 17.6 | 18.0 | 15.5 | 16.5 | 18.2 | 18.1 | 17.2 | 17.6 |
| + whitenW | 44.4 | 46.6 | 51.9 | 53.2 | 54.0 | 50.5 | 49.9 | 48.8 |
| + zscore | 49.7 | 50.0 | 53.5 | 55.4 | 53.8 | 50.0 | 50.5 | 47.8 |
| -biases | 47.1 | 49.6 | 52.4 | 52.3 | 51.7 | 49.6 | 49.6 | 48.0 |
| + normalize | 47.3 | 49.3 | 54.0 | 55.7 | 53.4 | 51.3 | 51.3 | 55.1 |
| + quantile-u | 47.9 | 50.4 | 53.0 | 55.6 | 53.4 | 48.6 | 50.7 | 48.2 |
| + quantile-uW | 48.8 | 50.0 | 53.4 | 54.4 | 53.5 | 50.0 | 49.0 | 47.4 |
| + whitenW | 45.9 | 46.0 | 52.2 | 53.0 | 53.3 | 47.3 | 47.5 | 47.4 |
| + whiten | 16.3 | 16.9 | 16.4 | 17.4 | 18.7 | 18.7 | 17.7 | 18.5 |
| + zscore | 47.3 | 49.4 | 52.0 | 53.5 | 53.2 | 48.3 | 49.0 | 47.4 |
| [MASK] | 41.1 | 47.0 | ||||||
| + normalize | 41.0 | 47.1 | ||||||
| + quantile-u | 42.0 | 47.7 | ||||||
| + quantile-uW | 41.9 | 47.4 | ||||||
| + whiten | 14.9 | 16.6 | ||||||
| + whitenW | 38.4 | 41.7 | ||||||
| + zscore | 41.6 | 46.7 | ||||||
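
The row labels "+ normalize", "+ zscore", and "+ whiten" in the tables above name post-processing steps applied to the sentence representations. As a rough illustration only, and not the implementation behind the reported numbers, the sketch below shows common textbook forms of three such transforms in NumPy. Several things here are assumptions: that "normalize" means scaling each vector to unit L2 norm, that whitening is done by PCA on the covariance matrix, that the statistics are fitted on the same matrix being transformed, and all function names and the toy data. The quantile and W-suffixed variants are not covered.

```python
import numpy as np


def l2_normalize(X, eps=1e-12):
    """Scale each row (one sentence embedding) to unit Euclidean length."""
    norms = np.linalg.norm(X, axis=1, keepdims=True)
    return X / np.maximum(norms, eps)


def zscore(X, eps=1e-12):
    """Standardize every dimension to zero mean and unit variance."""
    mean = X.mean(axis=0, keepdims=True)
    std = X.std(axis=0, keepdims=True)
    return (X - mean) / np.maximum(std, eps)


def whiten(X, eps=1e-12):
    """PCA whitening: center, rotate onto principal axes, rescale each axis."""
    Xc = X - X.mean(axis=0, keepdims=True)
    cov = Xc.T @ Xc / X.shape[0]
    eigvals, eigvecs = np.linalg.eigh(cov)  # cov is symmetric, so eigh applies
    # Column j of eigvecs is divided by sqrt(eigvals[j]).
    W = eigvecs / np.sqrt(np.maximum(eigvals, eps))
    return Xc @ W


# Hypothetical toy data: 200 correlated, off-center 8-dimensional "embeddings".
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 8)) @ rng.normal(size=(8, 8)) + 5.0

X_norm = l2_normalize(X)
X_z = zscore(X)
X_white = whiten(X)
```

After whitening, the covariance of `X_white` is approximately the identity matrix, which is the property usually used to motivate whitening as a way of making a representation space more isotropic. Z-score normalization only rescales each dimension independently and leaves correlations between dimensions in place.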

Appendix A.2. BERT + Avg. Model in Different Layers


- Socher, R.; Perelygin, A.; Wu, J.; Chuang, J.; Manning, C.D.; Ng, A.; Potts, C. Recursive Deep Models for Semantic Compositionality Over a Sentiment Treebank. In Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing, Seattle, WA, USA, 18–21 October 2013; pp. 1631–1642. [Google Scholar]

- Salton, G.; Fox, E.A.; Wu, H. Extended Boolean Information Retrieval. Commun. ACM 1983, 26, 1022–1036. [Google Scholar] [CrossRef]

- Rücklé, A.; Eger, S.; Peyrard, M.; Gurevych, I. Concatenated Power Mean Word Embeddings as Universal Cross-Lingual Sentence Representations. arXiv 2018, arXiv:1803.01400. [Google Scholar]

- Lin, Z.; Feng, M.; dos Santos, C.N.; Yu, M.; Xiang, B.; Zhou, B.; Bengio, Y. A Structured Self-Attentive Sentence Embedding. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017. [Google Scholar]

- Eger, S.; Rücklé, A.; Gurevych, I. Pitfalls in the Evaluation of Sentence Embeddings. In Proceedings of the 4th Workshop on Representation Learning for NLP (RepL4NLP-2019), Florence, Italy, 2 August 2019; pp. 55–60. [Google Scholar] [CrossRef]

- Rudman, W.; Gillman, N.; Rayne, T.; Eickhoff, C. IsoScore: Measuring the Uniformity of Embedding Space Utilization. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2022, Dublin, Ireland, 22–27 May 2022; pp. 3325–3339. [Google Scholar] [CrossRef]

- Mu, J.; Viswanath, P. All-but-the-Top: Simple and Effective Postprocessing for Word Representations. In Proceedings of the International Conference on Learning Representations, 30 April–3 May 2018. [Google Scholar]

- Zhou, T.; Sedoc, J.; Rodu, J. Getting in Shape: Word Embedding SubSpaces. In Proceedings of the Twenty-Eighth International Joint Conference on Artificial Intelligence, IJCAI-19, Macao, China, 10–16 August 2019; pp. 5478–5484. [Google Scholar] [CrossRef]

- Gao, J.; He, D.; Tan, X.; Qin, T.; Wang, L.; Liu, T. Representation Degeneration Problem in Training Natural Language Generation Models. In Proceedings of the International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019. [Google Scholar]

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language Models are Unsupervised Multitask Learners. OpenAI Blog 2019, 1, 9. [Google Scholar]

- Schnabel, T.; Labutov, I.; Mimno, D.; Joachims, T. Evaluation methods for unsupervised word embeddings. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–25 September 2015; pp. 298–307. [Google Scholar] [CrossRef]

- Li, B.; Zhou, H.; He, J.; Wang, M.; Yang, Y.; Li, L. On the Sentence Embeddings from Pre-trained Language Models. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 9119–9130. [Google Scholar] [CrossRef]

- Biś, D.; Podkorytov, M.; Liu, X. Too Much in Common: Shifting of Embeddings in Transformer Language Models and its Implications. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Online, 6–11 June 2021; pp. 5117–5130. [Google Scholar] [CrossRef]

- Rajaee, S.; Pilehvar, M.T. An Isotropy Analysis in the Multilingual BERT Embedding Space. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2022, Dublin, Ireland, 22–27 May 2022; pp. 1309–1316. [Google Scholar] [CrossRef]

- Schick, T.; Schütze, H. Rare Words: A Major Problem for Contextualized Embeddings and How to Fix it by Attentive Mimicking. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 8766–8774. [Google Scholar] [CrossRef]

- Miller, G.A. WordNet: A Lexical Database for English. Commun. ACM 1995, 38, 39–41. [Google Scholar] [CrossRef]

- Lee, Y.Y.; Ke, H.; Huang, H.H.; Chen, H.H. Less is More: Filtering Abnormal Dimensions in GloVe. In Proceedings of the 25th International Conference Companion on World Wide Web, WWW ’16 Companion, Republic and Canton of Geneva, Switzerland, 11–15 April 2016; pp. 71–72. [Google Scholar] [CrossRef]

- Kovaleva, O.; Kulshreshtha, S.; Rogers, A.; Rumshisky, A. BERT Busters: Outlier Dimensions that Disrupt Transformers. In Proceedings of the Findings of the Association for Computational Linguistics: ACL-IJCNLP 2021, Online, 1–6 August 2021; pp. 3392–3405. [Google Scholar] [CrossRef]

- Luo, Z.; Kulmizev, A.; Mao, X. Positional Artefacts Propagate Through Masked Language Model Embeddings. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Online, 1–6 August 2021; pp. 5312–5327. [Google Scholar] [CrossRef]

- Ding, Y.; Martinkus, K.; Pascual, D.; Clematide, S.; Wattenhofer, R. On Isotropy Calibration of Transformer Models. In Proceedings of the Third Workshop on Insights from Negative Results in NLP, Dublin, Ireland, 26 May 2022; pp. 1–9. [Google Scholar] [CrossRef]

- Cai, X.; Huang, J.; Bian, Y.; Church, K. Isotropy in the Contextual Embedding Space: Clusters and Manifolds. In Proceedings of the International Conference on Learning Representations, Vienna, Austria, 4 May 2021. [Google Scholar]

- Rajaee, S.; Pilehvar, M.T. A Cluster-based Approach for Improving Isotropy in Contextual Embedding Space. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 2: Short Papers), Online, 1–6 August 2021; pp. 575–584. [Google Scholar] [CrossRef]

- Rajaee, S.; Pilehvar, M.T. How Does Fine-tuning Affect the Geometry of Embedding Space: A Case Study on Isotropy. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2021, Punta Cana, Dominican Republic, 16–20 November 2021; pp. 3042–3049. [Google Scholar] [CrossRef]

- Artetxe, M.; Labaka, G.; Lopez-Gazpio, I.; Agirre, E. Uncovering Divergent Linguistic Information in Word Embeddings with Lessons for Intrinsic and Extrinsic Evaluation. In Proceedings of the 22nd Conference on Computational Natural Language Learning, Brussels, Belgium, 31 October–1 November 2018; pp. 282–291. [Google Scholar] [CrossRef]

- Wang, B.; Chen, F.; Wang, A.; Kuo, C.C.J. Post-Processing of Word Representations via Variance Normalization and Dynamic Embedding. In Proceedings of the 2019 IEEE International Conference on Multimedia and Expo (ICME), Shanghai, China, 8–12 July 2019; pp. 718–723. [Google Scholar] [CrossRef]

- Liu, T.; Ungar, L.; Sedoc, J.A. Unsupervised Post-Processing of Word Vectors via Conceptor Negation. In Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence and Thirty-First Innovative Applications of Artificial Intelligence Conference and Ninth AAAI Symposium on Educational Advances in Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019. AAAI’19/IAAI’19/EAAI’19. [Google Scholar] [CrossRef][Green Version]

- Jaeger, H. Controlling Recurrent Neural Networks by Conceptors. Technical Report No. 31. Jacobs University Bremen. arXiv, 2014; arXiv:1403.3369. [Google Scholar] [CrossRef]

- Karve, S.; Ungar, L.; Sedoc, J. Conceptor Debiasing of Word Representations Evaluated on WEAT. In Proceedings of the First Workshop on Gender Bias in Natural Language Processing, Florence, Italy, 2 August 2019; pp. 40–48. [Google Scholar] [CrossRef]

- Liang, Y.; Cao, R.; Zheng, J.; Ren, J.; Gao, L. Learning to Remove: Towards Isotropic Pre-Trained BERT Embedding. In Proceedings of the Artificial Neural Networks and Machine Learning—ICANN 2021: 30th International Conference on Artificial Neural Networks, Proceedings, Part V. Bratislava, Slovakia, 14–17 September 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 448–459. [Google Scholar] [CrossRef]

- Raunak, V.; Gupta, V.; Metze, F. Effective Dimensionality Reduction for Word Embeddings. In Proceedings of the 4th Workshop on Representation Learning for NLP (RepL4NLP-2019), Florence, Italy, 2 August 2019; pp. 235–243. [Google Scholar] [CrossRef]

- Artetxe, M.; Labaka, G.; Agirre, E. Generalizing and Improving Bilingual Word Embedding Mappings with a Multi-Step Framework of Linear Transformations. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32. [Google Scholar] [CrossRef]

- Gao, T.; Yao, X.; Chen, D. SimCSE: Simple Contrastive Learning of Sentence Embeddings. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Online and Punta Cana, Dominican Republic, 7–11 November 2021; pp. 6894–6910. [Google Scholar] [CrossRef]

- Wang, T.; Isola, P. Understanding Contrastive Representation Learning through Alignment and Uniformity on the Hypersphere. In Proceedings of the 37th International Conference on Machine Learning, Virtual, 13–18 July 2020; Volume 119, pp. 9929–9939. [Google Scholar]

- Wang, B.; Kuo, C.C.J.; Li, H. Just Rank: Rethinking Evaluation with Word and Sentence Similarities. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Dublin, Ireland, 22–27 May 2022; pp. 6060–6077. [Google Scholar] [CrossRef]

- Faruqui, M.; Dodge, J.; Jauhar, S.K.; Dyer, C.; Hovy, E.; Smith, N.A. Retrofitting Word Vectors to Semantic Lexicons. In Proceedings of the 2015 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Denver, CO, USA, 31 May– 5 June 2015; pp. 1606–1615. [Google Scholar] [CrossRef]

- Yu, Z.; Cohen, T.; Wallace, B.; Bernstam, E.; Johnson, T. Retrofitting Word Vectors of MeSH Terms to Improve Semantic Similarity Measures. In Proceedings of the Seventh International Workshop on Health Text Mining and Information Analysis, Auxtin, TX, USA, 5 November 2016; pp. 43–51. [Google Scholar] [CrossRef]