1. Introduction

DNA ploidy analysis is a laboratory technique that assesses the DNA content in a cell’s nucleus, helping classify cells based on their ploidy status. This indicates the number of complete chromosome sets in a cell. Ploidy analysis is particularly important in cancer research for identifying abnormal DNA content, supporting diagnosis and treatment decisions.

To measure DNA content, the sample must be stained with a dye that stays in the sample in amounts proportional to the DNA content of the sample at the given location. There are multiple staining options; the samples of this study were propidium iodide-stained. This type of sample can be digitized using fluorescent imaging. The dye, after being excited with a light of specific wavelength, emits light of a different wavelength that can be measured.

The sample can be cell nuclei, extracted from any body tissue that contains DNA. These analyses are usually done on a flow cytometer (FCM), an appliance that processes the sample in a liquid form, examining the objects passing in a single row in a capillary tube between usually a laser source and a detector [

1]. This technology is prevalent today, and developments are added to the original concept, both on the appliance and the reagent side [

2]. Our project explores an alternative approach to achieve the same goal, via digital imaging. The light emitted by the fluorochrome is captured by a digital camera, creating input data for image processing technologies. This technique is called image cytometry (ICM). It takes merit from the digitalization of pathology and cytology labs being equipped with machines to digitize glass slide specimens, especially those capable of fluorescent operation. In the beginning, research-oriented users adopted the benefits of digital samples. Then brightfield, immunohistochemistry (IHC) samples were evaluated more and more frequently [

3,

4,

5]. Today, diagnostic laboratories are progressively adopting digital pathology [

6,

7], with a continually expanding range of applications [

8,

9,

10]. Recently, deep learning-based methods are explored not only for object segmentation but for quality control, denoising or as an upscaling technology [

6,

7,

8].

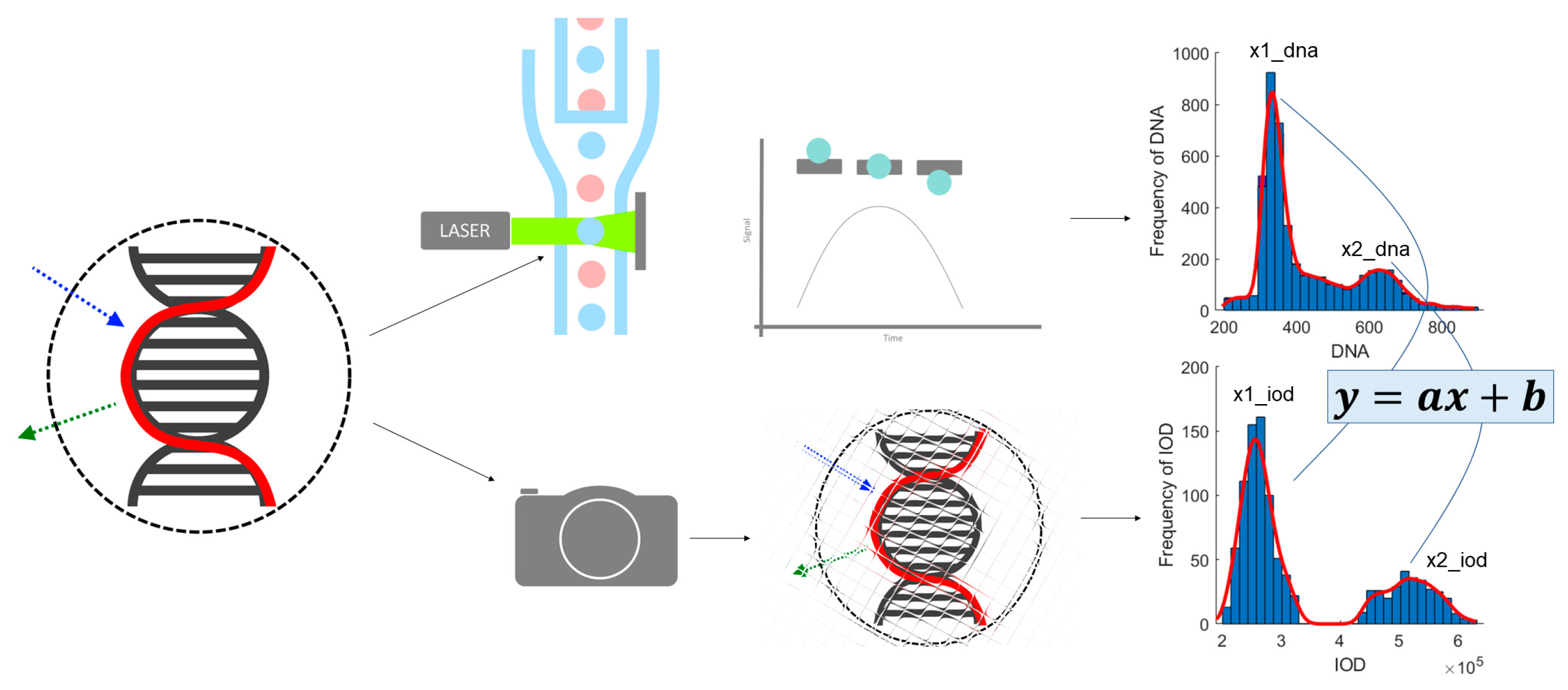

This project is to create an approach based on image processing that produces the DNA content measurements needed for ploidy analysis; from nuclei segmentation through feature extraction, to comparison of results to FCM ones derived from the same samples, calibration (

Figure 1) and finally ploidy analysis.

The evaluation of these samples can be done via computer-based image processing means. This choice holds possible benefits: lab desk space, a fixed cost, can be reduced by consolidating tools, thereby reducing maintenance expenses. Small diagnostic labs considering digital pathology or handling a higher imaging workload, may opt for the ICM approach as a replacement for FCM. For those adopting ICM, the introduction of ploidy analysis is streamlined, requiring only the extension of the sample preparation process to include placement on a glass slide and coverslipping. For labs with a heavier imaging workload, a glass-slide-only workflow becomes feasible. Additionally, the benefit of extended specimen storage, whether as a glass slide or in this case as a digital image, is crucial for future research projects, case consultation or teaching.

Measuring the overall accuracy of an image analysis algorithm can be defined on multiple levels, and through multiple metrics. In this study we applied two approaches: one is to measure the segmentation algorithms’ raw accuracy compared to the ground truth; the other is to measure the population-level DNA content’s similarity to the FCM method.

In our previous work, we proposed a calibration method to align image cytometry (ICM) results with those of flow cytometry (FCM) for DNA ploidy analysis, using healthy samples with known properties as references. The calibration approach involves analyzing the DNA content histograms from both methods, with the goal of developing a transfer function that ensures ICM can replicate the accuracy of FCM. Specifically, healthy samples containing at least two object populations—one with theoretically double the DNA content of the other—serve as a reference point, with these populations represented by their mean values on the DNA histogram’s x-axis. Ploidy analysis examines these peaks, and their relation allows for an evaluation of the accuracy of our technique. In the current study, we extend this work by focusing on optimizing nucleus segmentation, a key step in enhancing the accuracy of ICM histograms, thereby further improving the calibration process and ensuring better alignment with FCM.

The image analysis pipeline is usually comprised of a segmentation step to localize the nuclei on the sample. A separation step of some kind is necessary. The sample being a solution, the nuclei tend to form groups or clumps. Those are frequently segmented as one single object, thus greatly influencing the detection quality. After that, features are measured, like integrated optical density which represents the amount of DNA content of an object. These features then can be used to classify the nuclei, and consequently conclude the ploidy analysis: classify the cell population to be normal, or whether some deviation can be detected. Comparison of FCM and our proposed ICM method was published in [

9]. This article focuses on the object detection/segmentation part of the process, highlighted in

Figure 2.

The quality of such an algorithm is usually measured via comparison to a reference or ground truth. This type of information is hard and costly to obtain, though public datasets for similar purposes can be found [

10]. The ground truth dataset was created to establish a means of measurement for the counting problem, to be able to count the matching pairs of annotation and detected object along with all the other remaining cases.

The resulting ground truth is a set of coordinate pairs that mark the location of each object (nucleus) visible on the digital sample. In this article we aim to evaluate the algorithms examined regarding their object detection capability. Our priority goal was to evaluate object localization, but not extent.

We propose that by increasing object segmentation performance, the similarity between the FCM and ICM results will also measurably increase.

Digital pathology tools, able to create and store single-point annotations, have been on the field for a long time. It is safe to assume that there are many projects where such annotations were used to designate objects of interest. Generating a reference dataset is one of the main expenses in an image analysis (object detection, segmentation) project. It seems logical to explore the possibility of re-using them, in some cases, when creating the annotation from scratch is a costly solution, or data are scarce, similarly to this project.

Based on the pair of reference and measurement results, each object can be classified into four classes: true positive (TP), true negative (TN), false positive (FP) and false negative (FN). This information is collected into a confusion matrix, and algorithm quality measures are calculated from that. This gives the framework of comparison for the algorithms examined.

Image processing on fluorescent samples is quite different from analyzing brightfield samples. Fluorescent samples are generated in smaller quantities, caused by the cost of the technique itself. Digitization time is heavily dependent on the appliance and is mostly governed by the excitation time. Glass slide samples can take more than an hour, or even multiple hours to digitize. The data to measure are usually separated into single image channels, which simplifies the image processing task. In the case of this project, low exposure times and single channel demand resulted in a 5–10-min long digitization process. Sample thickness and light scatter in the sample makes the object boundaries harder to discern. Generally, the task of detecting/segmenting objects on fluorescent samples can be a less complex problem, that enables the usage of algorithms of simpler construction. This is fortunate, because more complex supervised deep learning AI models need considerably more annotated training data, and usually unsupervised methods need even more data. Achieving improvements in that direction seems to be an endeavor gradually more and more complicated. Transfer learning might be an option to consider, using available models and using and extending their training set with task-specific samples.

In diagnostics, AI image analysis is less widespread/accepted now, but this is changing rapidly. Explainability and tractability are among the causes (through regulatory aspects). There are projects investigating better explainable segmentation algorithms [

11], that try to deal with these aspects of modern AI. The algorithms we selected have the advantage of still being simple in concept and solving only the segmentation part of the problem, thus the risks involved remain controllable, while maintaining the equilibrium of cost and performance.

2. Materials and Methods

2.1. Samples

For this evaluation we used 15 samples of leftover healthy human blood samples containing only propidium iodide-stained cell nuclei. We placed samples on a glass slide after serving their original purpose in a flow cytometer. We coverslipped and digitized them using a glass slide scanner produced by 3DHISTECH Ltd., Budapest, Hungary (Pannoramic Scan, fluorescent setup, 5 MP sCMOS camera, 40× (Carl Zeiss AG, Jena, Germany) objective lens and an LED-based light source). The resulting resolution of the images was 0.1625 µm/pixel (compressed with jpeg, to quality 80). The ICM measurements were taken on sub-samples of these samples, sampled as lab protocols demand, in the amount to fit on a glass-covered glass slide. We identified the samples by their sequential indices throughout the assay (1M01–1M20). The samples could contain a droplet of sample of the size of approximately 15 mm in diameter. This meant an approximately 180 mm2 area populated with nuclei, roughly 7 gigapixels to process. The algorithms’ input was an 8-bit, single channel image, that was ingested by the algorithms in a tiled manner.

The FCM measurements were conducted using a Becton Dickinson FACSCAN flow cytometer with the CellQuest software (version 3.1, both hardware and software by Becton Dickinson, Franklin Lakes, NJ, USA). The samples were stained with PI for visualization of DNA content and contained more than 10,000 measured nuclei each.

2.2. Annotation

Annotation of medical images is a complex topic [

12]; the task at hand is complex because of the simplicity of the available annotation data.

For the development of Algorithm 1 more than 20,000 objects were annotated with single-point markers. This is usually called landmark annotation. We used these data to evaluate and compare the selected algorithms.

To reduce the annotation workload, we selected 1 mm2 rectangular regions on the sample for validation, containing from ~830 to ~2200 annotated nuclei. We worked with an expert with laboratory experience in placing the annotations. Cells visible on the digital samples were annotated using the Pannoramic Viewer (1.15.4) software’s Marker Counter tool. For this purpose, we selected regions without visible artefacts (bubbles, clumping, unwashed/overexposed staining). The annotation process took 20–40 min per slide, based on nucleus content.

Multiple approaches can be taken when using these types of annotation data for the evaluation, but the template is simple, as summarized in

Figure 3.

2.3. Environment

Both the segmentation algorithms and validation were run on a mobile PC with Intel(R) Core(TM) i5-9400H CPU (manufactured by Intel Corporation, Santa Clara, CA, USA), 32 GB RAM, with Python 3.11 (Python Software Foundation, Beaverton, OR, USA) on Microsoft Windows 10 Pro 22H2 (Microsoft Corporation, Redmond, WA, USA).

2.4. Segmentation Algorithms

The algorithms evaluated were implemented as a plugin for QuantCenter 2.1.0 RTM, the image processing software suite of 3DHISTECH. This system provides segmentation previews of regions on the fixed magnification that the algorithm is run on. To enable parameter fine-tuning and close to instant visual feedback, the algorithms must be computationally simple enough for the user to wait for a 2-megapixel segmentation result, to be useful.

We concluded the examination of the algorithms of three levels of complexity. We reference them as Algorithms 1, 2 and 3 respectively.

2.4.1. Algorithm 1

The first is the algorithm (our earlier proposal for the problem) described in [

13,

14,

15,

16]; it is a simple, threshold-based approach enhanced by a clump-splitting algorithm of our own development, similar in approach to [

17], developed in the same period. The image pipeline consists of the initial nucleus segmentation, a step for clump separation and a step to enhance the ability of detecting low-intensity objects on the image.

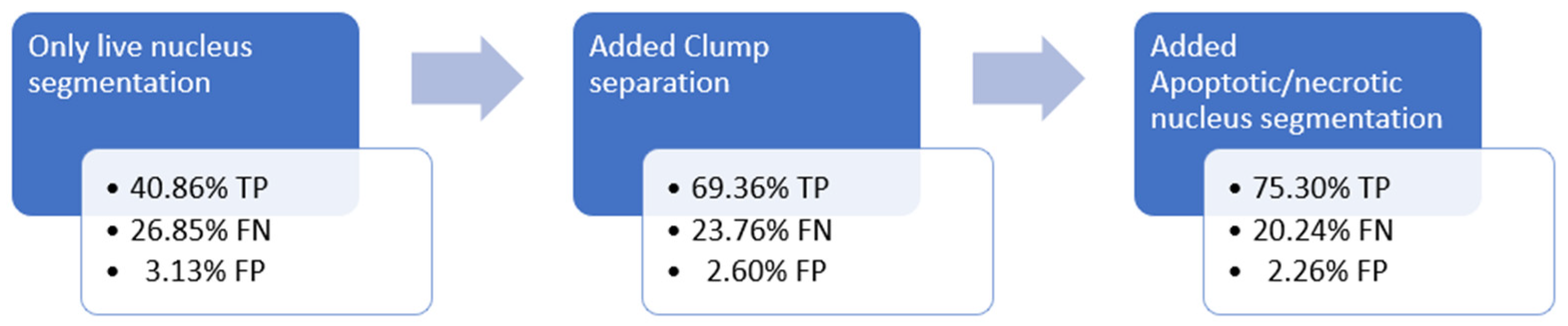

Figure 4 shows the improvement in Algorithm 1 accuracy during the development process:

During the development of Algorithm 1, the polygon-to-polygon comparison type was used, with a homogeneous representation of the predictions, using half of the object radius as diameter, from the average object size calculated from all the samples [

15]. If a single pixel overlap was present, it was considered a match [

18]. In that part of the examination, the measurement and elimination of 1: n, m: 1 prediction-measurement matches. The goal during development was to follow a “test-driven” approach, so that changes made can be evaluated in a framework that produces comparable results.

2.4.2. Algorithm 2

The second method is a relatively advanced classical algorithm that utilizes adaptive thresholding. This local technique assesses each pixel and its surrounding neighborhood, computing the Gaussian mean of pixel intensities to classify the pixel. The image pipeline used in this method is illustrated in

Figure 5 of the article [

19].

2.4.3. Algorithm 3

The third algorithm chosen is a more modern, CNN-based algorithm. We chose to use StarDist [

20] as the algorithm, and the pre-trained “2D_versatile_fluo” model included in the Python library. It has great advantages in separating staining anomalies from cell nuclei, by being constructed to segment round shapes; compared to Algorithm 1, which has no such integrated knowledge. Another aspect of this model is the diversity of the data it was trained on; multiple modalities and imaging techniques produced the input, which enhances segmentation robustness. For training our own model, we would need annotation data, of a polygonal type (in mask/label image representation); we plan to utilize these algorithms to consensually generate a ground truth and present the results in a different article.

The algorithms evaluated were selected based on their tractable behavior, which is important in clinical applications, their ability to handle the specific challenges of fluorescent sample analysis, and their computational efficiency, which is crucial for real-time diagnostic processes.

Initial evaluations of advanced approaches were carried out. Cellpose [

21,

22] performed well on fluorescent samples but was too computationally intensive for our use. Other options, such as HoVer-Net [

23], U-Net [

24], Mask R-CNN [

25] and DeepLab v3+ [

26], showed promise but require further comparison based on their computational demands and performance relative to the methods in this study. Our focus remained on how segmentation accuracy impacts population-based comparison to FCM results and the proposed calibration method.

2.5. Segmentation Validation: Matching, Counting

For the comparison to the manual, earlier, we used correlation-type metrics or a simple ratio of error classes to the whole population count [

18]. For the current article, we did not need the depth and detail of that approach, so we opted to use a confusion matrix-based approach [

27] to compare our segmentation algorithm candidates through sensitivity, precision and F1 score, as has been already successfully performed in [

28].

The comparison is based on object-level matching between annotations and measurements, considering both representational and algorithmic options. Matching can involve reducing polygonal results to a single point for simple inclusion checks or assigning dimensions to landmark annotations for various matching methods.

Figure 6 provides a visual summary of these techniques.

All the above are considered one-to-one assignments. It is possible to choose a more complex solution: registering multiple entities on either the annotations or on the object side (1:n, m:1). This can facilitate investigations of object clump splitting causing over- or undersegmentation, as was done during development of Algorithm 1 [

15].

It is also possible to use more sophisticated, optimization-type methods (linear sum assignment problem [

29,

30]) for the matching, for example, minimizing the global cost function comprising of the distance sum of every pair.

2.6. Algorithm Comparison

For comparison of the algorithms in this article we chose the landmark-to-polygon method of simple inclusion. We followed the greedy method: the first match was registered, and the involved elements excluded from further matching.

We used a confusion matrix-based evaluation method, with the following model:

TP: predicted location is included in the segmented object.

FP: the segmented object does not include any predictions.

FN: the prediction does not have any matches.

TN: none.

Predictions only represent positive findings; where no predictions and no result are present, there is no useful information. There are many possible locations where there are no detected objects, and that is correct.

Because no TN values are available, specificity and negative predictive value is zero. Sensitivity, precision and F1 score can still be calculated.

2.6.1. Precision

This metric designates the algorithm’s strength in detecting only the relevant objects. It is also called positive predictive value (PPV):

The values produced by the algorithms for this metric can be found in second table of

Section 3.1, with arithmetic mean and standard deviation values added.

2.6.2. Sensitivity

This value designates the algorithm’s strength in detecting all the relevant objects. It is also called recall, or true positive rate (TPR):

The values produced by the algorithms for this metric can be found in third table of

Section 3.1, with arithmetic mean and standard deviation values added.

2.6.3. F1 Score

This score combines sensitivity and precision symmetrically (both are valued the same) in one metric:

Comparison of algorithms is done based on the calculated F1 scores. Using macro averaged F1 values for comparison is not advised according to [

31]. A voting system can be set up, where a sample is considered a voter and votes for the best algorithm of the three, based on the F1 score achieved.

2.7. Population Level Comparison

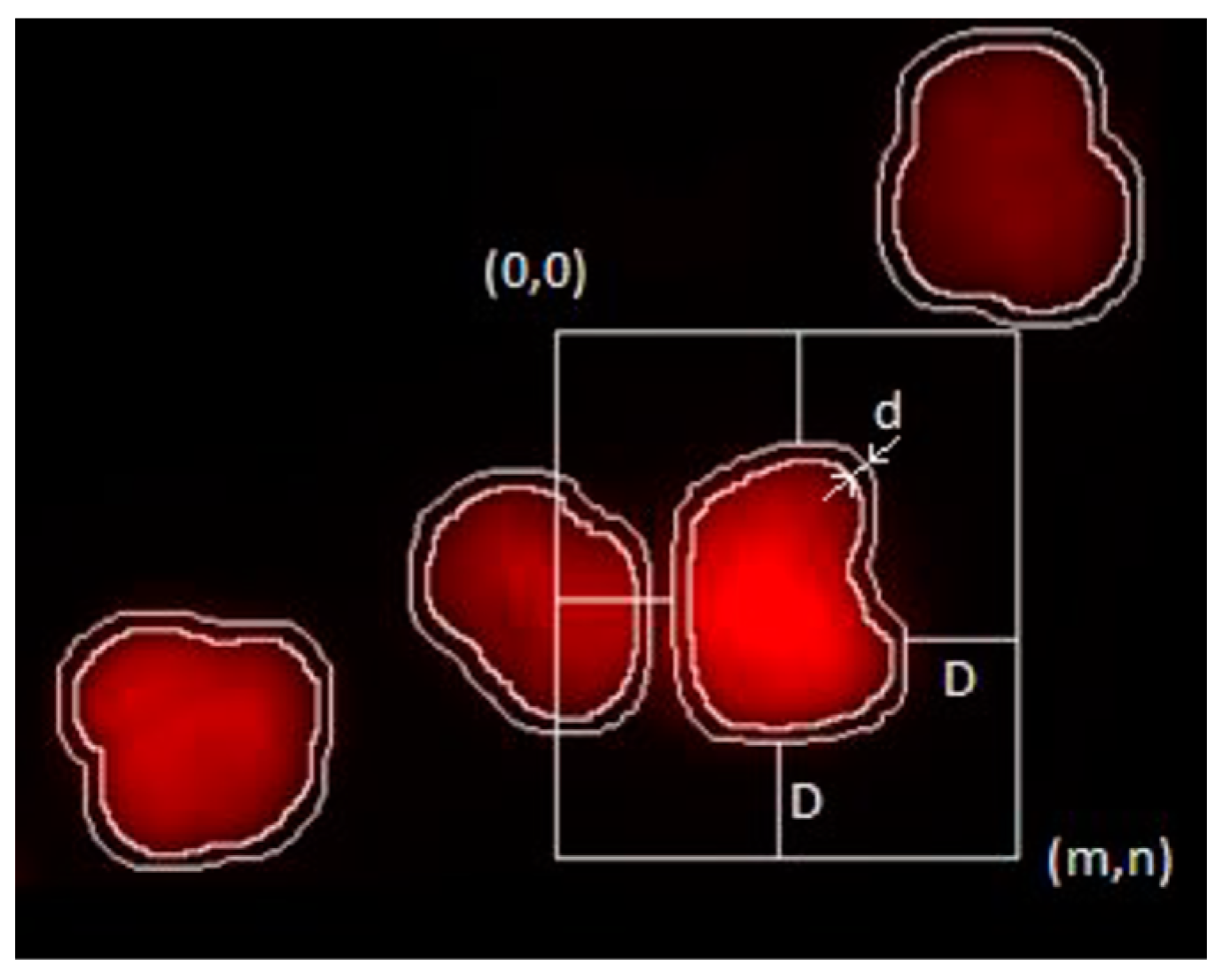

The segmented objects are used in the project for defining the region contributing to a nucleus. Features are extracted within this region to describe each data point contributing to a population-based evaluation. The most important is the integrated density (ID) for modeling DNA content. A schematic representation of how it is measured is visible in

Figure 7. The FCM DNA histogram results are also available as a reference for each examined sample. This is the basis of the measurement of accuracy on the population level.

This measurement was conducted on a grayscale digital image, and in discrete format, as follows:

where

ID is the integrated density, and

I denotes the image intensities in the

m,

n neighborhood.

If

Oi is the currently analyzed object:

then

J is an indicator function that has the value of 1 when it is a pixel corresponding to the measured object

Oi, and 0 otherwise.

The neighborhood m, n was defined to ensure at least D distance from Oi. D was selected to be 35 pixels (the average object size in pixels at this magnification) so as to exclude other entire objects from the neighborhood; d was selected to be 5 pixels based on visual inspection (this is the distance where the bulk of the effect of object proximity is eliminated from intensity values).

We have shown that ID models the FCM method’s DNA content measurements well [

9]. The nucleus populations identified in both datasets with the same process enables us to evaluate the difference that the segmentation algorithm change causes in the system. The mean values of the two populations of the DNA histogram of a healthy patient represent the 2n and 4n peaks. The peak ratio is the measured ratio of the DNA content at the 4n peak to the DNA content at the 2n peak. This theoretically equals 2.0 because, during mitosis, a normal body cell first replicates/doubles its DNA content before dividing into two new daughter cells. The location of these peaks showed great variance (even within the FCM result set), but the peak ratios show that the model parameters and the population classification are well defined.

Greater resemblance of these ratios to the ones measured on the FCM data means better overall performance.

For data manipulation and statistical evaluation, MATLAB (version R2019b) was used.

4. Discussion

There is significant improvement in object detection accuracy from Algorithm 1 to Algorithms 2 and 3, though the improvement is not consistent over all the samples (

Figure 8). Macro-averaged F1 score, micro-averaged F1 score and the voting model are in consensus regarding the order of algorithms.

Looking at precision, Algorithm 1 performs better than Algorithms 2 and 3. In sensitivity, Algorithms 2 and 3 perform considerably better than Algorithm 1, comprising the differences visible in the F1 scores.

Visual inspection confirmed that Algorithms 2 and 3 perform better in two regards: clump separation and low-intensity object segmentation. Algorithm 2 is both more sensitive to darker objects and is a bit stronger in clump-handling than Algorithm 3; this results in the best F1 score of the three. During the creation of Algorithm 1, the clump separation module was a key step in creating an algorithm that would perform consistently on samples of different object densities. Algorithms 2 and 3 were intrinsically better in this regard: no performance decrease of notable measure was detected in relation to the density of objects on the samples, though Algorithm 3 could still be improved in this regard (there is possibility to fine-tune this behavior). Comparing the algorithms in this dimension might be part of a future investigation.

Interestingly, the complex but still classical method of Algorithm 2 performs best of the three on this sample set, though only very slightly—keeping in mind that Algorithm 3 can still be fine-tuned to this dataset (annotation with more information is needed).

It is important to note that clumps of objects are often comprised of objects of inhomogeneous intensities. It is also important to mention that low-intensity objects (on which Algorithms 2 and 3 perform significantly better) are usually apoptotic/necrotic cell nuclei that do not take part in the ploidy analysis result itself, and are filtered from the population during the later stages of the data analysis process.

Considering all the above: replacing Algorithm 1 with any of the other two is an improvement.

Based on the close results, the option for more fine-tuning and its extendibility, we chose Algorithm 3 to proceed to the population-level evaluation.

5. Conclusions

The results show that using more complex algorithms for this problem gives us better performance, but interestingly the increment is in the sensitivity measure. Precision was already high with even the simplest algorithm proposed by us a good few years ago. We were also able to show that the sample density, being an interesting factor in developing Algorithm 1, is less important for these more complex algorithms. They perform similarly across the samples in that regard (though we have seen cases where there is still room for improvement).

The results also show (

Figure 12 and

Table 7) that using a more accurate segmentation algorithm increases the accuracy (similarity to the reference FCM measurements) of results at the DNA histogram level.

Examining the samples #11 and #15, and comparing the algorithms regarding their accuracy in segmenting objects of different intensities is also something that could be worth pursuing, especially because during visual inspection multiple cases were encountered where the annotations were self-contradictory in the case of low-intensity objects.

Revision of the ground truth both in location and intensity might be considered based on the algorithms’ findings. Investigating the possibility to upgrade the ground truth data automatically, utilizing the segmentation results generated by multiple algorithms seems a challenging, though viable route for improvement.

While more complex algorithms such as U-Net, Mask R-CNN and DeepLab v3+ could potentially enhance segmentation accuracy, the available data may limit the achievable improvements (the non-significant difference between Algorithms 2 and 3 might be a precursor to this). There may be diminishing returns where increasing algorithm complexity yields only marginal accuracy gains, suggesting that the dataset itself—such as annotation quality or sample variability—may hinder further significant performance improvements, despite using more advanced models.

A focused study is needed to directly compare the (earlier) proposed calibration technique to current calibration practices, particularly using a greater number of samples. Such a study would provide more robust statistical validation of the system’s accuracy and reliability, helping to confirm its suitability for broader clinical adoption. By expanding the sample size and including various biological conditions, such research could better evaluate the technique’s effectiveness in real-world settings.

It is interesting to note that though annotation time took 20–40 min per sample depending on object count, all three algorithms took only seconds to run on the same region, having a clear time benefit. Adding that to the scanning time of a mean six and a half minutes (though the object segmentation step is only a part of the task), seems to be comparable to the reference technique.