1. Introduction

Collaborating on sensitive data is crucial for driving digital transformation, yet it introduces a complex interplay of conflicting interests. On the one hand, improved data availability clearly benefits digital transformation. On the other hand, sharing data openly clashes with various interests in the protection of sensitive information, such as the protection of business secrets in companies or the fundamental right to privacy of individuals. A prime example can be found in biomedical research, where rigorous regulation (e.g., the General Data Protection Regulation, GDPR, or the Health Insurance Portability and Accountability Act, HIPAA) surrounding patient data frequently impedes collaboration among stakeholders. Strict privacy enforcement may hamper progress and endanger access to the sufficiently large datasets that are essential for accurately addressing clinical questions. Fortunately, Privacy-Preserving Computation (PPC) techniques have emerged as promising approaches to data federation that protect both participant interests and stakeholder privacy while still enabling collaboration on federated datasets across institutional borders. These techniques allow insights to be derived from distributed datasets, known as federated analytics, or enable the training of machine learning models without sharing data, as in federated learning, which is increasingly used, for example, in medical research or industrial settings [1,2]. In particular, Secure Multiparty Computation (MPC or SMPC) is a fundamental building block and is considered a gold standard technique in PPC, enabling distributed parties to jointly compute a function without revealing any private data [3]. The use of MPC techniques in real-world applications is growing as complex technology stacks become more manageable and more computing resources become available. Expertise and cryptography knowledge also play a significant role in the dissemination of the technology [4]. Estimating the costs and benefits of theoretically proven algorithms is often the first step toward applying MPC solutions in fields such as biomedical research.

Our use case is predominantly focused on university medicine applications. Multicentric clinical trials are the gold standard for the development of new diagnostic tools and therapies. The exact understanding of physiological and pathological features on all scales, from epidemiology to personalized medicine, requires the pooling of large patient numbers. Classically, this approach requires data sharing and centralized evaluation. However, under the GDPR, health data records are particularly sensitive data, and patient advocates demand better protection of patient privacy. New applications in e-health also require active and dynamic consent by the user as dominion over data shifts from centers to individuals. For these reasons, decentralized approaches to digital health have recently received much interest. COVID proximity warning apps were a recent success on a massive scale. Similarly, the German Medical Informatics Initiative, for example, considers semi-decentralized tools like DataSHIELD as an alternative to open data sharing [5]. We are particularly interested in Federated Secure Computing (FSC), a propaedeutic framework that offers an easy-to-use API to develop distributed applications [6]. In particular, the SIMON (SImple Multiparty ComputatiON) microservice offers pre-made statistical functions to be evaluated by MPC. Among them, the median is one of the most used metrics in medical descriptive statistics. Patient cohorts are commonly characterized by median age, median tumor size, median therapeutic dose, and so forth. Of course, the median, as one of the most basic statistical functions, is also widely used outside of medicine in areas as diverse as finance, economics, psychology, media, and IoT applications in automotive and smart home.

In this paper, we describe the implementation of the secure median for FSC/SIMON. We also provide benchmarks for real-world computation of rank-based statistics across a wide range of parameters and situations.

1.1. Related Work

There are several protocols for computing rank-based statistics, which differ in their proposed architecture (clients, server), privacy guarantees (input privacy, output privacy), and core technologies (Homomorphic Encryption, pure MPC, differential privacy; for an introduction to these fundamental building blocks, see [3]). For example, Tueno et al. [7] and Chandran et al. [8] describe solutions for computing rank-based statistics in a star network, i.e., a large number of clients communicating with one or only a few central untrusted servers on which the evaluation is carried out, while preserving the privacy of each client using garbled circuits or homomorphic encryption. In addition, Böhler and Kerschbaum [9] propose a differential privacy approach that uses both garbled circuits and secret-sharing schemes to protect individual privacy and additionally applies an exponential mechanism to mask the true output value, thus introducing a trade-off between security and accuracy. In contrast, Aggarwal et al. [10] aim to determine the exact value of a rank-based statistic over a distributed dataset. For this purpose, the authors designed an efficient algorithm for jointly computing rank-based statistics while keeping individual data inputs private (see Section 2 for details). Although their algorithm is described theoretically, practical implementations and real-world benchmarking are lacking.

The real-world implementation and benchmarking of MPC have become increasingly prominent due to advancements in efficient solutions, better hardware availability, and software improvements that reduce the computational overhead of this technology [11]. This trend is particularly noticeable in biomedical research, including genome-wide association studies, diagnostics [12,13], and drug screening [14]. In 2019, von Maltitz et al. [15] conducted the first MPC computation with real oncology patient data in Germany, using a Kaplan-Meier estimator. However, such implementations typically require extensive cryptographic knowledge, as most software tools and frameworks like MP-SPDZ [16], FRESCO [17], or ABY [18] require complex technical setups and the manual implementation of MPC functions using specific cryptographic primitives or protocols (see [11] for a detailed overview). More user-friendly solutions are limited, with notable examples being industry-level platforms such as Sharemind MPC (Cybernetica AS, Tallinn, Estonia) [19] and Carbyne (Robert Bosch GmbH, Stuttgart, Germany) [20], as well as no-code tools like EasySMPC [21]. Recently, Federated Secure Computing [6] introduced an accessible architecture that shifts the burden of complex cryptographic processes to the server and provides a simple API.

1.2. Our Contribution

In this study, we present the implementation and real-world benchmarking of an algorithm for computing rank-based statistics, specifically the median, as outlined by Aggarwal et al. [10]. Our primary motivation was to bridge the gap between theoretical advances in MPC and their practical application and evaluation, focusing on a real-world medical setting. We focused on a concrete statistical function in high demand in biomedicine, i.e., the median, computed with a protocol for which benchmarking and real-world evaluation were lacking. Implementing and utilizing MPC protocols can be challenging and requires a high level of cryptographic expertise. Our work addresses this by providing a comprehensive guide and evaluation in the context of FSC. In doing so, we aimed to demonstrate the practical application of MPC in medical research, offering insights into cost-benefit trade-offs and suggesting avenues for future development based on the architecture used.

1.3. Overview

The paper is structured as follows:

Section 2 introduces the preliminaries. We provide a brief description of the MPC protocol for rank-based statistics that our implementation is based on, along with the computational framework and topology on which we rely. Section 3 describes the implementation details and the experimental setup we used to benchmark our implementation. The performance evaluation is presented in Section 4. In Section 5, we contextualize our findings within the broader landscape of MPC implementations of rank-based statistics, discussing their implications.

2. Preliminaries

In the following, we provide preliminaries for MPC (for a detailed introduction, see [3]). We consider a scenario where $m$ parties, denoted as $P_1, \dots, P_m$, each possess a private dataset $D_i$, with a domain size $M$. Collectively, these individual datasets $D_1, \dots, D_m$ merge into a comprehensive dataset $D = D_1 \cup \dots \cup D_m$ of size $n$.

2.1. Secure Multiparty Computation

In MPC, a set of two or more parties participate in an encrypted peer-to-peer network to jointly compute a function $y = f(x_1, \dots, x_m)$ over their private inputs $x_1, \dots, x_m$ without revealing each party’s private input. That means that each party only receives the exact result $y$ of that function and nothing more (see Figure 1). The function considered in this work is the identification of the $k$th-ranked element in a dataset. In other words, for the ordered elements $d_1 \le d_2 \le \dots \le d_n$ of the dataset, we seek the value $d_k$, which has rank $k$. Specifically, we are interested in the median value, i.e., finding the value that is ranked $k = \lceil n/2 \rceil$. To achieve this goal, MPC relies on protocols defining a set of rules and procedures for the parties involved to follow. These protocols are designed to ensure that the parties can interact with each other securely and reliably, even in the presence of adversaries. By following the protocol, the parties can compute the desired function while preserving the privacy of their inputs and maintaining the security and integrity of the computation.

2.2. Threat Model

The threat model considered here is the semi-honest adversary, also called “honest but curious”. This model is meant for generally trustworthy parties who will not maliciously deviate from the protocol but rather cooperate to protect the privacy of their input data. The purpose is to provide some level of data privacy for the parties involved rather than data security against outside attackers or corrupted inside parties.

2.3. Protocol for the kth-Ranked Element

Aggarwal et al. [10] propose a multiparty protocol for computing the exact median that requires only a number of secure computations logarithmic in the size M of the domain from which the dataset D is drawn. Each party initializes the search range to the range of D and the candidate value to the midpoint of the range. The parties then iteratively refine the search range based on securely aggregated counts of the elements in their datasets that are smaller and greater than the candidate value. This process continues until the range collapses to the true kth-ranked value. The protocol ensures data confidentiality and scalability in MPC scenarios and is presented in Algorithm 1.

This design limits the cryptographic overhead to just two comparisons and two secure summations per round. The secure summations can be realized with widely applicable protocols, such as additive secret sharing. Essentially, additive secret sharing splits a secret into shares that are distributed among the participants, enabling them to calculate sums without disclosing the actual values [22]. Consequently, they can obtain the desired result while safeguarding individual contributions. Algorithm 2 provides a concrete exemplary protocol demonstrating how parties collaboratively compute the sum of their private values while preserving privacy using random numbers.

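| Algorithm 1 Secure kth-Ranked Element (Aggarwal et al. [10], Protocol 3) |

Input: Data $D_i$ held by parties $P_i$ ($1 \le i \le m$), rank $k$, sizes $|D_i|$ of each $D_i$, data range $[\alpha, \beta]$

Output: $k$th-ranked element in $D = D_1 \cup \dots \cup D_m$.

1: Initialize $a \leftarrow \alpha$, $b \leftarrow \beta$, $n \leftarrow \sum_{i} |D_i|$

2: repeat

Each $P_i$:

3: $c \leftarrow \lceil (a + b)/2 \rceil$

4: $l_i \leftarrow |\{x \in D_i : x < c\}|$, $g_i \leftarrow |\{x \in D_i : x > c\}|$

Secure computation between parties:

5: if $\sum_i l_i \le k - 1$ and $\sum_i g_i \le n - k$ then

6: done

7: end if

8: if $\sum_i l_i \ge k$ then

9: $b \leftarrow c - 1$

10: end if

11: if $\sum_i g_i \ge n - k + 1$ then

12: $a \leftarrow c + 1$

13: end if

14: until done

|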
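To make the search procedure of Algorithm 1 concrete, the following is a minimal plaintext sketch in Python. It is not the FSC/SIMON implementation: the function names are hypothetical, the data are assumed to be integers within a public range, and `secure_sum` merely adds the counts in the clear as a stand-in for a secure summation such as Algorithm 2.

```python
def secure_sum(counts):
    """Stand-in for a secure summation; this sketch simply adds in the clear."""
    return sum(counts)


def kth_ranked_element(datasets, k, lo, hi):
    """Return (value, rounds) for the k-th smallest element (1-indexed)
    in the union of `datasets`, assuming integer data within [lo, hi]."""
    n = sum(len(d) for d in datasets)
    if not 1 <= k <= n:
        raise ValueError("k must satisfy 1 <= k <= n")
    a, b = lo, hi
    rounds = 0
    while a <= b:
        rounds += 1
        c = (a + b + 1) // 2  # candidate: ceil((a + b) / 2)
        # Each party counts locally; only the aggregated counts are used.
        less = secure_sum(sum(1 for x in d if x < c) for d in datasets)
        greater = secure_sum(sum(1 for x in d if x > c) for d in datasets)
        if less <= k - 1 and greater <= n - k:
            return c, rounds  # c is the k-th ranked element
        if less >= k:
            b = c - 1  # the k-th element lies below the candidate
        else:
            a = c + 1  # the k-th element lies above the candidate
    raise ValueError("k-th element is not inside [lo, hi]")


# Three hypothetical parties; the median rank is k = ceil(n / 2).
parties = [[1, 5, 9], [2, 7], [3, 8, 10]]
n = sum(len(d) for d in parties)
median, rounds = kth_ranked_element(parties, (n + 1) // 2, 0, 15)
print(median, rounds)
```

Because the range is halved in every round, the number of rounds grows with the logarithm of the range size rather than with the number of data points.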

| Algorithm 2 Secure summation protocol utilizing additive secret sharing |

Input: Values $v_i$ held by parties $P_i$ ($1 \le i \le m$)

Output: Sum of the values $\sum_{i=1}^{m} v_i$.

Distribute random numbers between parties:

1: for $i = 1$ to $m$ do

2: Party $P_i$ generates a random number $r_i$.

3: if $i < m$ then

4: Party $P_i$ sends $r_i$ to $P_{i+1}$.

5: else

6: Party $P_m$ sends $r_m$ to $P_1$.

7: end if

8: end for

Add randomness:

9: for $i = 1$ to $m$ do

10: if $i = 1$ then

11: Party $P_1$ computes adjusted value $v'_1 = v_1 + r_1 - r_m$.

12: else

13: Party $P_i$ computes adjusted value $v'_i = v_i + r_i - r_{i-1}$.

14: end if

15: end for

16: Parties share adjusted values and calculate:

17: return $\sum_{i=1}^{m} v'_i$

|
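The masking idea behind Algorithm 2 can be sketched in a few lines of Python. This is an illustrative single-process simulation under stated assumptions, not the SIMON code: the parties are arranged in a ring, each party passes its random number to its successor, all arithmetic is performed modulo a public constant (`MODULUS`, chosen here for illustration), and the inputs are assumed to be non-negative integers whose sum stays below that modulus.

```python
import secrets

MODULUS = 2**64  # assumed public modulus; the true sum must stay below it


def masked_values(values, modulus=MODULUS):
    """Return the adjusted values each party would publish.

    Party i adds its own random number and subtracts the one received
    from its predecessor in the ring (party 0 receives from the last party).
    """
    m = len(values)
    if m < 2:
        raise ValueError("at least two parties are required")
    randoms = [secrets.randbelow(modulus) for _ in range(m)]
    return [(values[i] + randoms[i] - randoms[i - 1]) % modulus
            for i in range(m)]


def secure_sum(values, modulus=MODULUS):
    """Sum the published adjusted values; the random numbers cancel out."""
    return sum(masked_values(values, modulus)) % modulus


print(secure_sum([3, 10, 7]))  # every random number is added once and subtracted once
```

Each published value looks uniformly random on its own, yet the total equals the true sum. The sketch offers no protection against colluding neighbours or deviating parties, in line with the semi-honest threat model.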

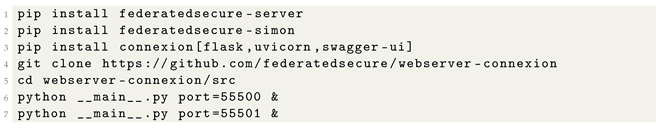

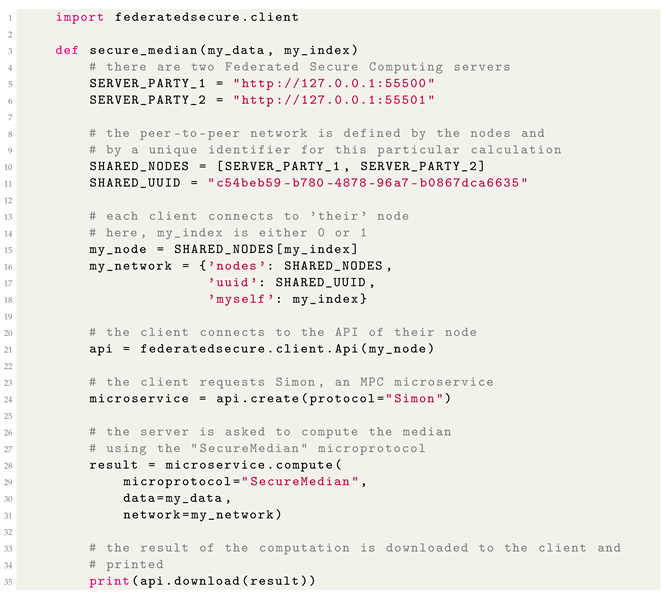

2.4. Secure Computing Framework

A framework, in this context, refers to the structural foundation within which a protocol operates. It provides a systematic way to design, analyze, and implement secure multiparty computation protocols. FSC is a recently developed free and open-source framework that offloads complex cryptographic tasks to a server while providing an easy-to-use API to develop distributed applications (see Ballhausen and Hinske [6] for a detailed description of the framework). In this architecture, every party runs its own server in a secure peer-to-peer network. Because the cryptography is offloaded to the server side, the client side is free of dependencies and simple to program. The framework provides microservices exposed through a RESTful API (the entire codebase is freely available at https://github.com/federatedsecure, last accessed: 20 August 2024).

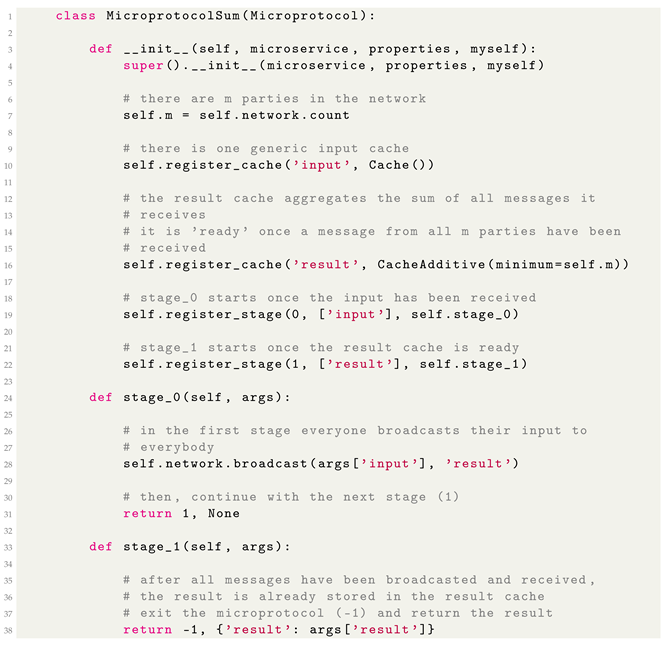

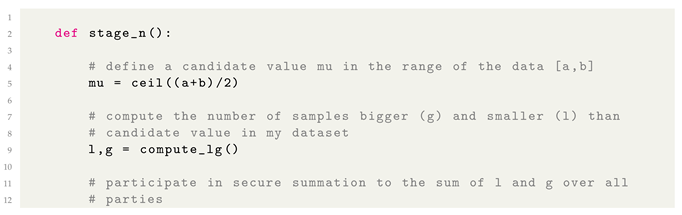

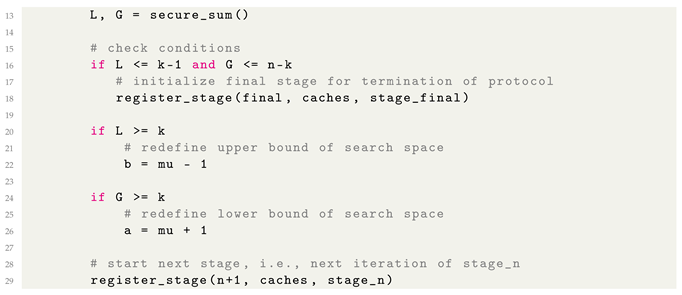

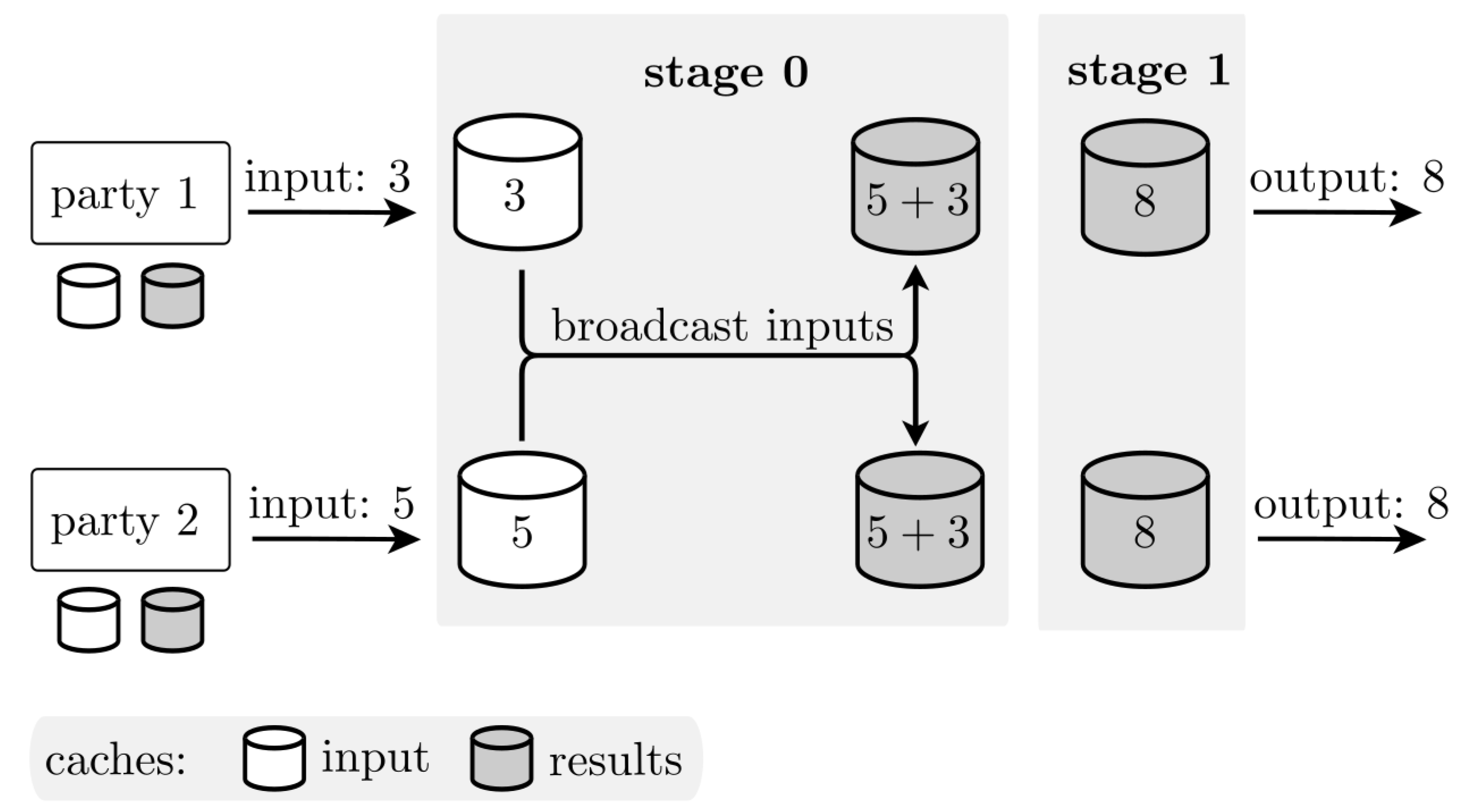

One of these microservices, SIMON, provides MPC functionality. Unlike monolithic MPC frameworks, SIMON does not provide a universal runtime for arbitrary bytecode. Instead, distinct functions must be individually implemented. Typically, these are provided server-side and run stage by stage, with message passing between parties happening between stages. The messages are stored and aggregated in ‘caches’. The next stage proceeds once all necessary inputs have been received in their respective caches. For example, a (not secure) computation of the sum of the inputs of m parties is displayed in Listing 1.

| Listing 1. Exemplary (not secure) computation of the sum in SImple Multiparty ComputatiON (SIMON). |

![Applsci 14 07891 i001 Applsci 14 07891 i001]() |

Figure 2 illustrates this example for two parties. Each party submits its input to the input cache. In stage 0, these inputs are broadcast and stored in the result cache, where they are automatically aggregated. Once all inputs have been received, the protocol moves to stage 1, where the final aggregated result is retrieved and returned.

Please note that this example demonstrates a non-secure protocol for illustrative purposes. However, this staged approach ensures orderly execution, with each stage depending on the completion of the previous one, allowing the implementation of sequential protocols such as the secure summation protocol (see Algorithm 2), which is the basis for the secure kth-ranked element protocol (see Algorithm 1).
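The staged, cache-based execution described above can be mimicked in plain Python. The sketch below is purely illustrative and does not use the SIMON API: the class `Party`, its attributes, and the driver `run` are hypothetical names, all parties live in one process, and the computed sum is, as in the example above, not secure.

```python
class Party:
    """Toy party that runs a two-stage, non-secure summation."""

    def __init__(self, name, value, n_parties):
        self.name = name
        self.value = value
        self.n_parties = n_parties
        self.stage = 0
        self.result_cache = {}  # sender name -> received input
        self.peers = []

    def step(self):
        """Advance by at most one stage; return the result once available."""
        if self.stage == 0:
            # Stage 0: broadcast the own input to all parties (including self).
            for peer in self.peers:
                peer.result_cache[self.name] = self.value
            self.stage = 1
            return None
        if self.stage == 1:
            # Stage 1 only proceeds once every input has arrived in the cache.
            if len(self.result_cache) < self.n_parties:
                return None
            self.stage = 2
            return sum(self.result_cache.values())
        return None


def run(values):
    """Drive all parties round-robin until each one has produced a result."""
    parties = [Party(f"party{i}", v, len(values)) for i, v in enumerate(values)]
    for party in parties:
        party.peers = parties
    results = {}
    while len(results) < len(parties):
        for party in parties:
            out = party.step()
            if out is not None:
                results[party.name] = out
    return results


print(run([3, 4]))
```

The point of the sketch is the control flow: a stage is only entered once its cache is complete, which is what allows multi-round protocols to be expressed as a sequence of stages.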

5. Discussion

In our research, we have successfully implemented and performed a real-world benchmarking of a specialized protocol for the computation of rank-based statistics, with a focus on the median, as developed by Aggarwal et al. [10]. We have provided much-needed benchmarking and real-world evaluation of this protocol, demonstrating its viability and effectiveness in practical applications, a prerequisite for real-world settings, including medical research.

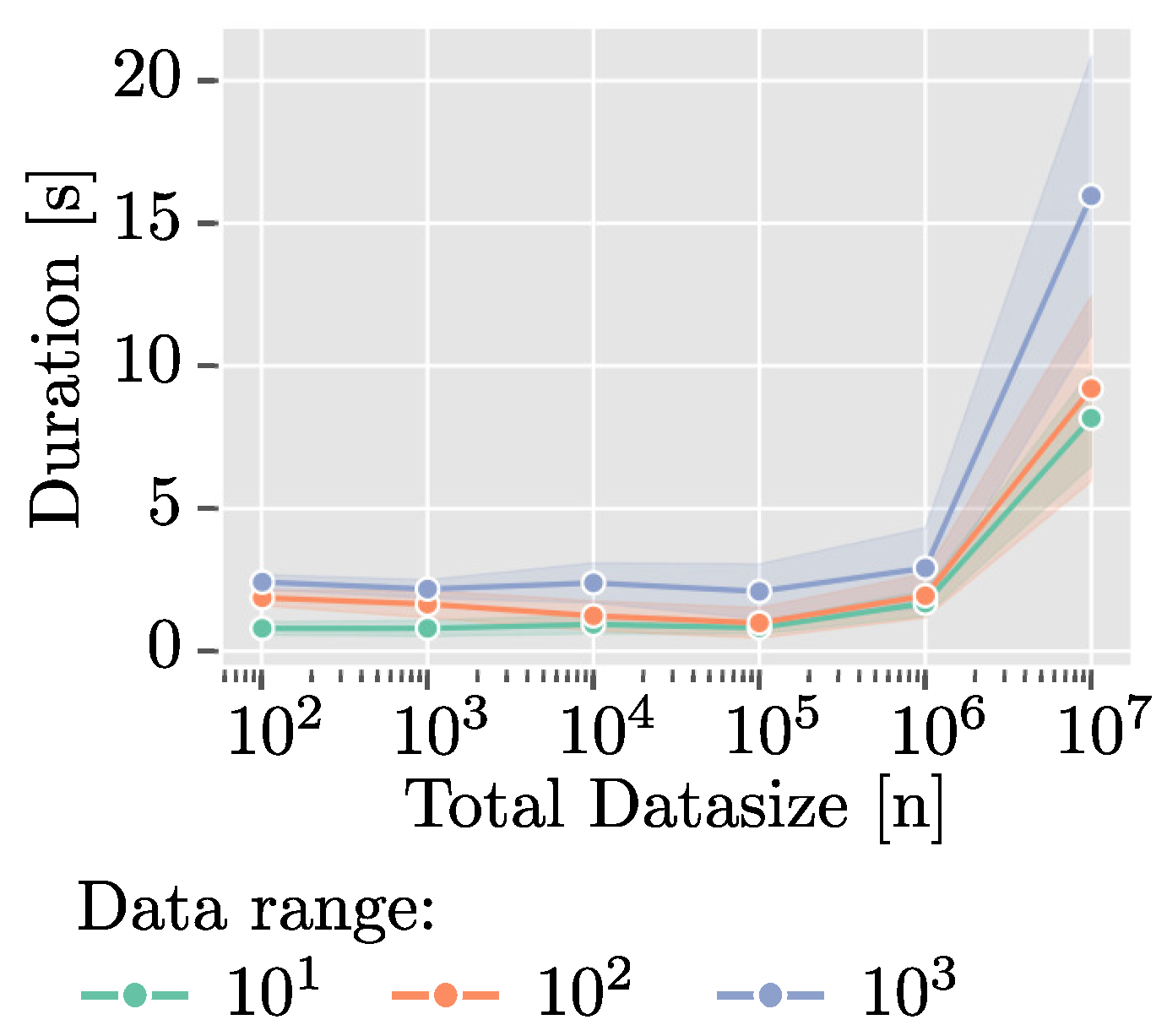

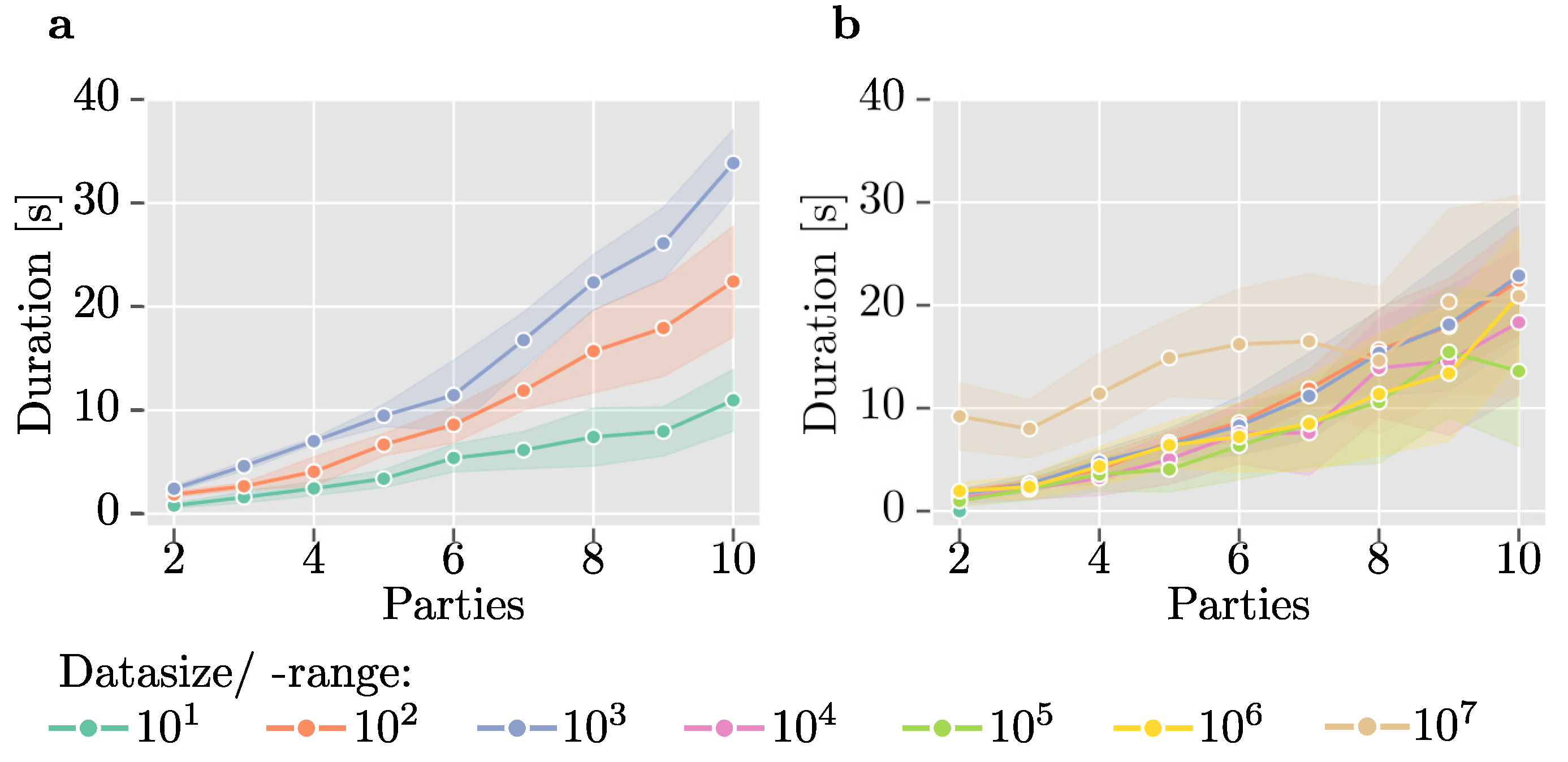

In particular, we show that the protocol scales well with increasing data size up to

entries, maintaining runtimes between 1 and 4 s, ignoring network overhead. However, for larger datasets (>

entries), the exponential growth in runtime (>15 s) highlights the need for optimization when dealing with very large datasets. Possible solutions include parallelization of processes running locally within each stage on each server or increasing computing resources. Moreover, our analysis indicates a linear growth in runtime as the number of parties increases, evident in both fixed-size and fixed-range experiments. This suggests that the protocol’s efficiency remains manageable even with additional parties, as the runtime consistently remains below one minute for scenarios involving up to ten parties. Such settings are common in fields like biomedical research, for example, where a few hospitals contribute to a multicentric study and jointly want to compute statistics over their databases without revealing individual inputs. However, for scenarios involving a larger number of parties with only a few data points each, a central server model, such as the star network described in [7,8], may be more suitable. This model involves all parties communicating with a central party, reducing communication costs and offloading complex computations to the central untrusted party. Notably, Chandran et al. [8] demonstrated that for 100 parties, each holding one data point, the runtime can be reduced to under a minute, provided the central party has sufficient computing power (32 GB RAM in their example).

Nevertheless, the runtime in our experiments using AWS EC2 instances was dominated by networking overhead. The instances were deliberately kept minimal and unoptimized and were limited to the AWS free tier (EC2 t2.micro), whose network performance is classified as “low to moderate” by AWS [24]. This is evident from the measured RTT of up to 222 ms between AWS instances, highlighting the importance of minimizing latency in distributed MPC setups. Böhler and Kerschbaum [9] demonstrated significantly lower runtimes of 1 to 2 s for their differentially private median algorithm with RTTs of 12–25 ms, though comparisons are challenging due to their purpose-optimized implementation.
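A rough back-of-the-envelope model illustrates why latency dominates. The sketch below multiplies the worst-case number of search rounds for a domain of size M by an assumed number of sequential message exchanges per round and by the RTT. The parameter `exchanges_per_round` and the domain size of 128 are hypothetical values chosen for illustration, not figures measured in our experiments; only the two RTT values are taken from the text above.

```python
def worst_case_rounds(domain_size: int) -> int:
    """Worst-case rounds of a binary search over `domain_size` candidates,
    i.e., floor(log2(domain_size)) + 1."""
    if domain_size < 1:
        raise ValueError("domain_size must be positive")
    return domain_size.bit_length()


def network_bound_runtime(domain_size, rtt_seconds, exchanges_per_round):
    """Estimate the runtime share caused by network round trips alone."""
    return worst_case_rounds(domain_size) * exchanges_per_round * rtt_seconds


# Hypothetical setting: 128 candidate values, 4 sequential exchanges per round.
for rtt in (0.222, 0.012):
    print(f"RTT {rtt * 1000:.0f} ms -> "
          f"{network_bound_runtime(128, rtt, 4):.2f} s network time")
```

Under these assumptions, the estimate scales linearly with the RTT, so moving from a 222 ms link to a 12 ms link shrinks the network-bound share by the same factor, independent of local computation.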

Additionally, we benchmarked our implementation using real-world datasets to demonstrate its practical applicability in medical research. Networking overhead significantly increases runtime, especially for the heart disease dataset (from 16–17 s locally to over 800 s across AWS regions), highlighting the need for strategic resource selection. Despite this, the protocol’s performance on real-world data shows its deployment potential, particularly when network latency is minimized.

5.1. Security Analysis

The security of the underlying secure median algorithm has been demonstrated by Aggarwal et al. [10]. They address both semi-honest parties, who adhere to the protocol but seek to gain additional information, and malicious adversaries, who may deviate from the protocol and provide fabricated inputs. While they propose additional steps to extend security to the malicious case, our implementation currently supports only the semi-honest case. A primary weakness in our setting occurs when one of two parties, or all but one of several parties, provide empty input to the computation, because the result then depends on the data of a single party. Generally, this issue cannot be entirely prevented, as empty or extreme values might be valid inputs. For use cases where such inputs are not expected, parties should impose additional constraints on input data, such as a minimum number of input elements or a specified input range. They should also verify the validity of inputs in the initial stage of the protocol and check their plausibility between stages. Apart from these measures, our implementation does not introduce any additional communication between the parties compared to the original protocol. Consequently, parties do not gain any extra information from each other, and the security analysis of the original algorithm remains valid.
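The input constraints suggested above could, for instance, be checked as follows. This is a hedged sketch with hypothetical function names and thresholds, not part of our implementation. It assumes that the dataset sizes are public inputs of the protocol (as in Algorithm 1), so every party can check the sizes declared by its peers, while the range check runs locally on a party's own data.

```python
def check_declared_sizes(sizes, min_count):
    """Reject the computation if any party declares too few elements."""
    too_small = [i for i, size in enumerate(sizes) if size < min_count]
    if too_small:
        raise ValueError(f"parties {too_small} declare fewer than "
                         f"{min_count} elements")


def check_local_data(data, lo, hi):
    """Reject local data that falls outside the agreed public range."""
    outside = [x for x in data if not lo <= x <= hi]
    if outside:
        raise ValueError(f"{len(outside)} value(s) outside [{lo}, {hi}]")


# Hypothetical agreement: at least 5 elements per party, ages in [0, 120].
check_declared_sizes([12, 30, 8], min_count=5)
check_local_data([34, 51, 67, 72, 80], lo=0, hi=120)
```

Such checks cannot detect a party that misreports its size or data, which would require the malicious-security extensions of the original protocol.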

While the datasets remain hidden, in general, revealing the exact kth-ranked value, e.g., the median, reveals one data point and thus the exact value of one individual, which could be used to compromise data of targeted individuals [25]. One solution to additionally protect the output of the computation is to use differential privacy techniques, either adding noise to the output or randomly selecting a value from a probabilistic distribution of possible values [9,26,27]. Despite concerns about increased computational overhead, Pettai and Laud [26] argue that this overhead is minimal, while Böhler and Kerschbaum [9] demonstrate the remarkable efficiency of a specific protocol.
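To illustrate the second option, selecting the output from a probability distribution over possible values, the following Python sketch applies a textbook exponential mechanism to the kth-ranked element. It is a plaintext illustration under stated assumptions and not the protocol of any of the cited works: the utility function (how far a candidate's rank counts are from satisfying the stopping condition of Algorithm 1) is one possible choice with an assumed sensitivity of 1, the domain is a small integer range, and all function names are hypothetical.

```python
import math
import random
from bisect import bisect_left, bisect_right


def rank_utility(sorted_data, x, k):
    """0 if x is a valid k-th ranked value, increasingly negative otherwise."""
    n = len(sorted_data)
    less = bisect_left(sorted_data, x)          # elements strictly below x
    greater = n - bisect_right(sorted_data, x)  # elements strictly above x
    return -max(0, less - (k - 1), greater - (n - k))


def dp_kth_ranked(data, k, lo, hi, epsilon, rng=random):
    """Sample a candidate from [lo, hi] with probability proportional to
    exp(epsilon * utility / 2), assuming a utility sensitivity of 1."""
    sorted_data = sorted(data)
    candidates = list(range(lo, hi + 1))
    utilities = [rank_utility(sorted_data, x, k) for x in candidates]
    best = max(utilities)  # shift utilities for numerical stability
    weights = [math.exp(epsilon * (u - best) / 2.0) for u in utilities]
    return rng.choices(candidates, weights=weights, k=1)[0]


data = [10, 20, 30, 40, 50]
print(dp_kth_ranked(data, k=3, lo=0, hi=60, epsilon=1.0))
```

Smaller values of epsilon spread the probability mass over more candidates and thus hide the exact median better, at the cost of accuracy, which is the trade-off between security and accuracy discussed for differentially private approaches.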

5.2. Limitations

This study has several limitations that should be noted. Our benchmarking was conducted in a semi-honest setting, meaning our implementation does not address scenarios involving malicious actors. While Aggarwal et al. [10] describe additional measures for handling malicious settings, and commercial solutions like Sharemind MPC [4] used as a backend to FSC offer protections against such threats, these models were outside the scope of our baseline implementation.

Additionally, our performance evaluation used synthetic datasets to explore various sizes and participant counts. Although this approach provides insights into computational scaling, it may not fully capture the complexities and variability of real-world data. To address this, we incorporated two medical datasets, which, while limited to the healthcare domain, offer insights into the computational scaling of real-world data irrespective of the domain. The medical context, though, is a particularly interesting application domain due to the sensitivity of patient information, highlighting the need for privacy-preserving techniques in practical applications.

Furthermore, we chose not to minimize network latencies in our experiments to demonstrate a range of possible computation times with real datasets. We also conducted experiments using hyperscalers in various regions to cover the spectrum of network latency. In practice, parties could replicate their data privately within the same region, allowing secure peer-to-peer computations to be performed in a low-latency environment, thus achieving computation times closer to the lower end observed in our local deployment experiments.