Machine Reading Comprehension Model Based on Fusion of Mixed Attention

Abstract

1. Introduction

1.1. Research Background and Problem Statement

1.2. Main Contributions of the Research

1.3. Outline of the Paper

2. Problem Formulation and Research Motivation

2.1. Analysis and Discussion of Results

2.2. Background and Investigation of Machine Reading Comprehension

2.2.1. Early Research and Development

2.2.2. Development of Datasets

2.2.3. Model Development

2.2.4. Experimental Methods and Evaluation Criteria

2.2.5. Future Research Directions

3. Prior Research

4. Method and Models

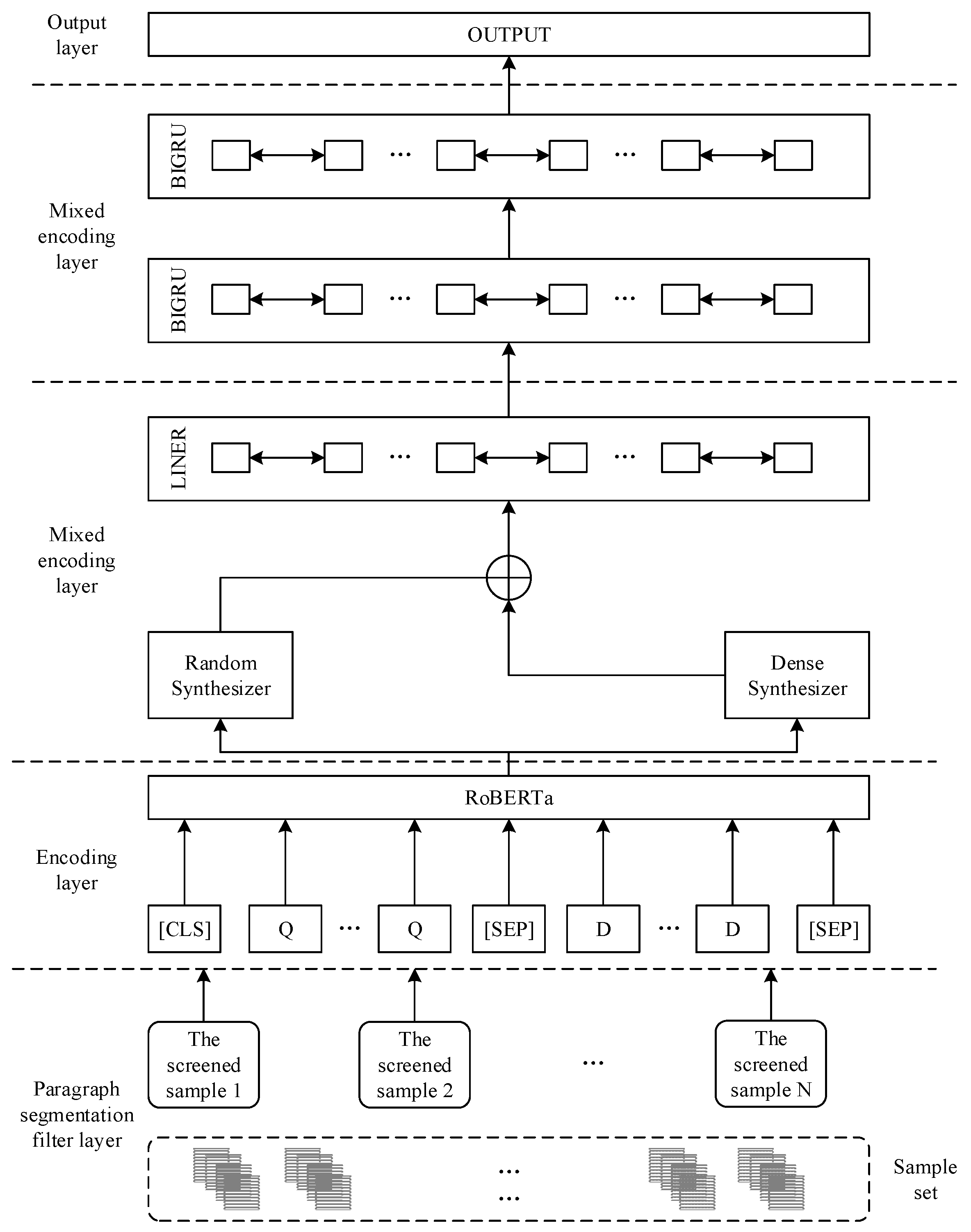

4.1. Model Construction

4.2. Encoding Layer

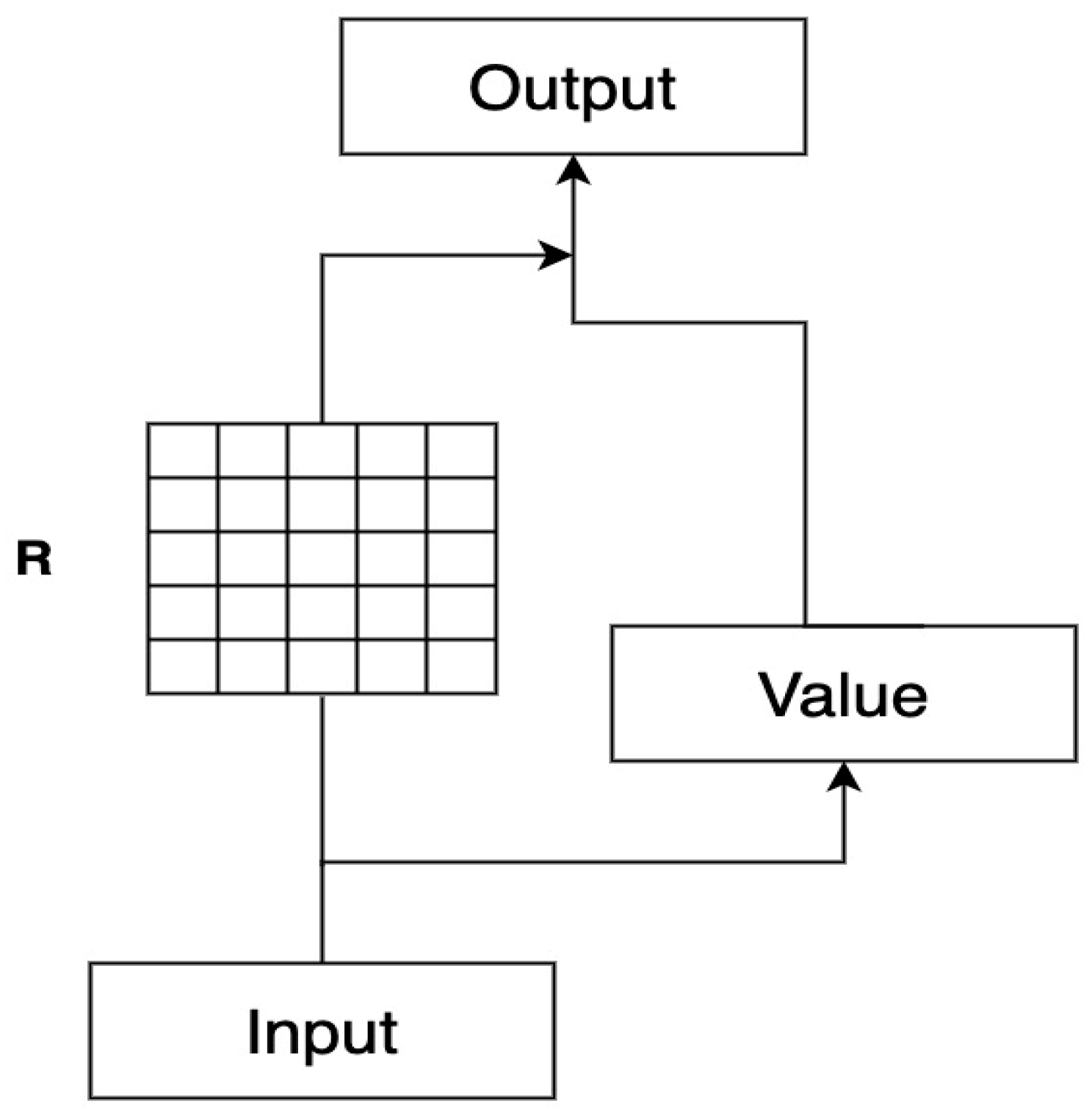

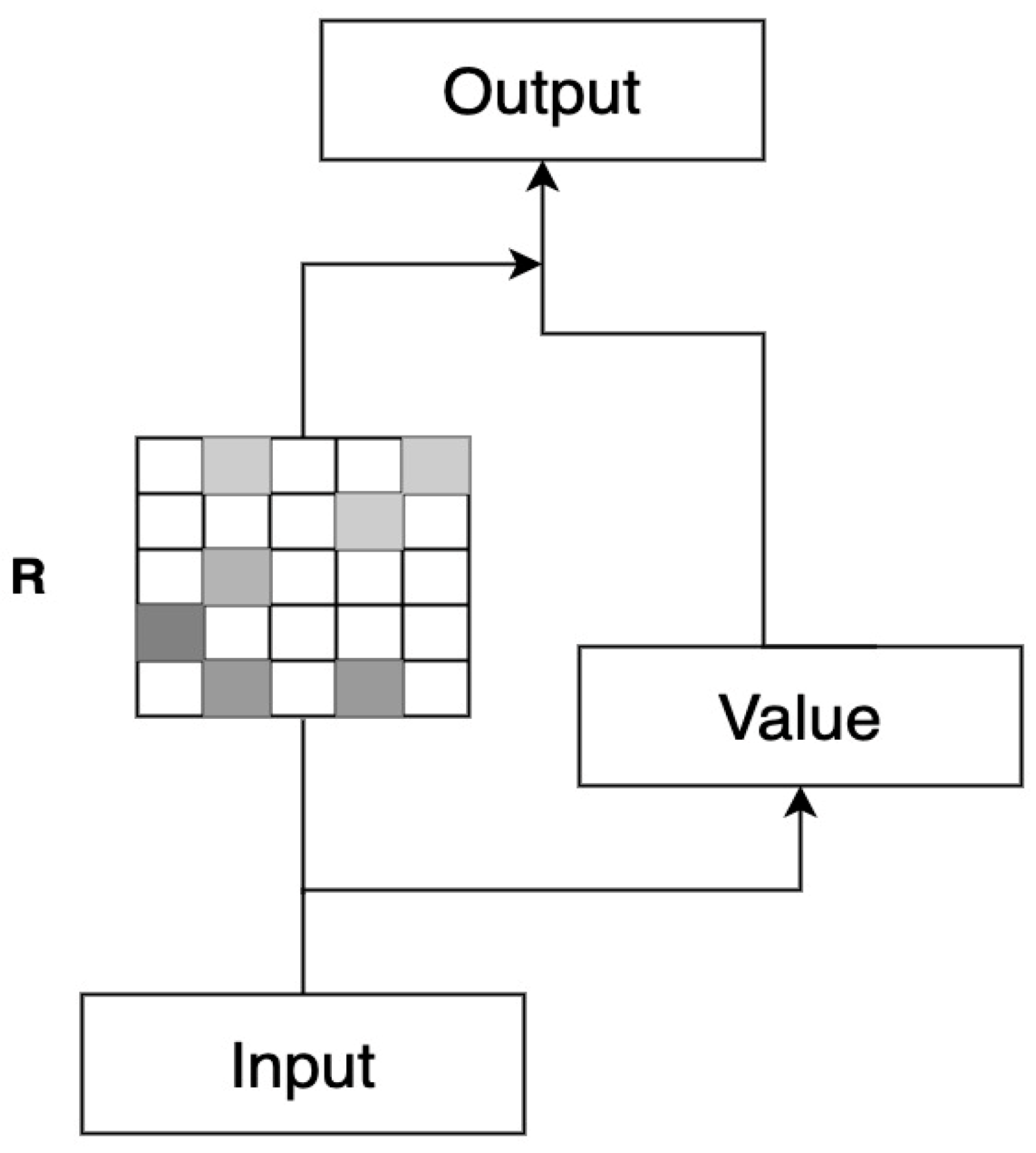

4.3. Mixed Attention Layer

4.4. Fusion Modeling Layer

4.5. Output Layer

5. Experimentation

5.1. Dataset

5.2. Evaluation Criteria

5.3. Experiment Parameter Configuration

5.4. Ablation Experiment

6. In-Depth Discussion and Comparative Analysis of Experimental Results

6.1. Comprehensive Evaluation of Model Performance

6.2. Specific Challenges in Processing Long Text

6.3. Analysis of Different Question Types

6.4. Comparison of Reasoning Types

6.5. Further Exploration of Machine Reading Comprehension Models on the BiPaR Dataset

6.6. Model Adaptation and Challenges

6.7. Prospects for Future Research

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Statistic | Article | Question | Answer |
|---|---|---|---|
| Count | 1,431,429 | 301,574 | 665,723 |
| Average length (characters) | 1,793 | 26 | 299 |
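
These statistics imply that a typical article is much longer than a single model input: the configuration table lists seq_length = 512, while the average article runs to 1,793 characters. This section does not describe how the model segments long articles, so the following is only a sketch of one common approach, overlapping sliding windows. It assumes one token per Chinese character (roughly what character-level BERT-style Chinese tokenizers produce), reserves room for a 26-character question (the table's average) plus three special tokens ([CLS] and two [SEP]), and uses a hypothetical `overlap` of 128 tokens between consecutive windows. The function name `sliding_windows` is illustrative, not taken from the paper.

```python
def sliding_windows(doc_len: int, max_seq_len: int = 512, question_len: int = 26,
                    overlap: int = 128) -> list[tuple[int, int]]:
    """Split a document of doc_len tokens into overlapping (start, end) spans.

    Assumes one token per character; question_len and overlap are illustrative.
    """
    # Passage budget left after [CLS] question [SEP] passage [SEP].
    budget = max_seq_len - question_len - 3
    step = budget - overlap
    if budget <= 0 or step <= 0:
        raise ValueError("max_seq_len too small for the question and overlap")
    spans = []
    start = 0
    while True:
        end = min(start + budget, doc_len)
        spans.append((start, end))
        if end >= doc_len:
            break
        start += step  # consecutive windows share `overlap` tokens
    return spans


if __name__ == "__main__":
    windows = sliding_windows(1793)
    print(len(windows), windows)
```

Under these assumptions an average-length article needs five overlapping windows (four with no overlap), which is one way to see why long text is treated as a distinct challenge in Section 6.2.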

| Parameter | Value |
|---|---|
| seq_length | 512 |
| learning_rate | 0.00005 |
| batch_size | 8 |
| optimizer | Adam |
| hidden_size | 768 |
| num_hidden_heads | 12 |
| warmup_proportion | 0.1 |
| epochs | 4 |
| hidden_activation | GELU |
| directionality | Bidirectional |
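
The configuration combines a peak learning rate of 0.00005 with warmup_proportion = 0.1, batch_size = 8 and 4 epochs. The exact schedule is not spelled out here, so the sketch below assumes the linear-warmup, linear-decay shape used by Hugging Face's `get_linear_schedule_with_warmup`: the rate rises linearly over the first 10% of optimizer steps and then falls linearly to zero. The training-set size of 10,000 examples in the usage lines is hypothetical, chosen only to make the step counts easy to follow.

```python
import math


def total_training_steps(num_examples: int, batch_size: int = 8, epochs: int = 4) -> int:
    """Optimizer steps over all epochs (a final partial batch counts as a step)."""
    return math.ceil(num_examples / batch_size) * epochs


def learning_rate(step: int, total_steps: int, peak_lr: float = 5e-5,
                  warmup_proportion: float = 0.1) -> float:
    """Linear warmup to peak_lr, then linear decay to zero (assumed schedule)."""
    warmup_steps = int(total_steps * warmup_proportion)
    if step < warmup_steps:
        # Warmup phase: scale up from 0 towards peak_lr.
        return peak_lr * step / warmup_steps
    # Decay phase: peak_lr at the end of warmup, 0 at total_steps.
    remaining = max(1, total_steps - warmup_steps)
    return peak_lr * max(0.0, (total_steps - step) / remaining)


if __name__ == "__main__":
    total = total_training_steps(10_000)  # hypothetical dataset size: 5000 steps
    for step in (0, 250, 500, 2750, 5000):
        print(step, learning_rate(step, total))
```

With 5,000 total steps, warmup covers the first 500; the rate reaches 5e-5 at step 500 and is back to half that by step 2,750.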

| Model | ROUGE-L | BLEU-4 |
|---|---|---|
| match-LSTM | 34.8 | 44.5 |
| BiDAF | 38.9 | 41.5 |
| Baseline_Bert | 44.1 | 45.4 |
| Baseline_MacBert | 50.1 | 52.1 |
| Baseline_RoBERTa | 48.2 | 54.2 |
| Baseline_RoBERTa_wwm | 51.2 | 52.3 |
| CS-Reader | 56.6 | 57.9 |
| Hybrid Model | 60.1 | 59.9 |
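
Both results tables report ROUGE-L and BLEU-4. The evaluation script is not reproduced in this section, so the following is a minimal sketch of sentence-level ROUGE-L as the F-measure over the longest common subsequence (LCS), computed over characters (an assumption that suits Chinese text without word segmentation). The weight β = 1.2 is also an assumption: it is the value used, for example, by the ROUGE-L scorer in the COCO caption evaluation toolkit, and it weights recall slightly more than precision. Function names are illustrative.

```python
def lcs_length(a: str, b: str) -> int:
    """Length of the longest common subsequence of a and b (rolling-row DP)."""
    prev = [0] * (len(b) + 1)
    for ch_a in a:
        curr = [0]
        for j, ch_b in enumerate(b, start=1):
            if ch_a == ch_b:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(candidate: str, reference: str, beta: float = 1.2) -> float:
    """Character-level ROUGE-L F-measure of one candidate against one reference."""
    lcs = lcs_length(candidate, reference)
    if lcs == 0:
        return 0.0
    precision = lcs / len(candidate)
    recall = lcs / len(reference)
    return (1 + beta ** 2) * precision * recall / (recall + beta ** 2 * precision)


if __name__ == "__main__":
    # Candidate contains the whole reference: precision 0.5, recall 1.0.
    print(rouge_l("北京大学", "北京"))
```

The tables appear to report scores scaled to 0 to 100. When a question has several reference answers, a common convention is to keep the best score over the references.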

| Model | ROUGE-L | BLEU-4 |
|---|---|---|
| match-LSTM | 33.6 | 34.5 |
| BiDAF | 36.3 | 39.5 |
| Baseline_Bert | 41.1 | 42.2 |
| Baseline_MacBert | 49.8 | 51.9 |
| Baseline_RoBERTa | 49.2 | 54.1 |
| Baseline_RoBERTa_wwm | 51.9 | 51.6 |
| CS-Reader | 57.6 | 58.9 |
| Hybrid Model | 61.1 | 60.9 |
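
BLEU-4 is the second metric in both results tables. The exact scorer is not given here either; the following minimal sketch computes sentence-level BLEU-4 over characters against a single reference: clipped n-gram precisions for n = 1 to 4, their geometric mean, and the brevity penalty. It applies no smoothing, so a candidate with no matching 4-gram (including any candidate shorter than four characters) scores 0. Corpus-level BLEU, which pools n-gram counts over all questions before combining them, would behave differently. Function names are illustrative.

```python
import math
from collections import Counter


def ngrams(tokens: list[str], n: int) -> Counter:
    """Multiset of the n-grams in tokens."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu4(candidate: str, reference: str) -> float:
    """Unsmoothed character-level BLEU-4 of one candidate against one reference."""
    cand, ref = list(candidate), list(reference)
    if not cand:
        return 0.0
    log_precisions = []
    for n in range(1, 5):
        cand_counts = ngrams(cand, n)
        total = sum(cand_counts.values())
        if total == 0:
            return 0.0
        ref_counts = ngrams(ref, n)
        # Clip each candidate n-gram count by its count in the reference.
        clipped = sum(min(count, ref_counts[g]) for g, count in cand_counts.items())
        if clipped == 0:
            return 0.0
        log_precisions.append(math.log(clipped / total))
    # Brevity penalty: 1 if the candidate is longer than the reference.
    bp = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    return bp * math.exp(sum(log_precisions) / 4)


if __name__ == "__main__":
    # Every n-gram matches, but the candidate is one character short.
    print(bleu4("abcd", "abcdx"))
```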

| Model | ROUGE-L | BLEU-4 | AVG |
|---|---|---|---|
| Hybrid Model | 61.1 | 60.9 | 61.0 |
| Hybrid Attention | 56.9 | 55.1 | 56.0 |
| Multiple Fusion | 54.9 | 54.5 | 54.7 |
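
The AVG column is the arithmetic mean of ROUGE-L and BLEU-4 for each configuration; for the full model, (61.1 + 60.9) / 2 = 61.0. The short sketch below reproduces that column from the table's own numbers and adds each configuration's gap to the full Hybrid Model. It does not interpret what each variant changes, which this section does not describe; the function name `ablation_summary` is illustrative.

```python
# Scores copied from the ablation table: (ROUGE-L, BLEU-4).
ABLATION = {
    "Hybrid Model": (61.1, 60.9),
    "Hybrid Attention": (56.9, 55.1),
    "Multiple Fusion": (54.9, 54.5),
}


def ablation_summary(results: dict[str, tuple[float, float]],
                     full_model: str = "Hybrid Model") -> dict[str, tuple[float, float]]:
    """Return {name: (AVG, drop in AVG relative to the full model)}, rounded to 0.1."""
    full_avg = sum(results[full_model]) / 2
    summary = {}
    for name, (rouge_l, bleu_4) in results.items():
        avg = (rouge_l + bleu_4) / 2
        summary[name] = (round(avg, 1), round(full_avg - avg, 1))
    return summary


if __name__ == "__main__":
    for name, (avg, drop) in ablation_summary(ABLATION).items():
        print(f"{name}: AVG = {avg}, drop = {drop}")
```

The recomputed averages match the table, and the two variants trail the full model by 5.0 (Hybrid Attention) and 6.3 (Multiple Fusion) average points.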

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, Y.; Ma, N.; Guo, Z. Machine Reading Comprehension Model Based on Fusion of Mixed Attention. Appl. Sci. 2024, 14, 7794. https://doi.org/10.3390/app14177794

Wang Y, Ma N, Guo Z. Machine Reading Comprehension Model Based on Fusion of Mixed Attention. Applied Sciences. 2024; 14(17):7794. https://doi.org/10.3390/app14177794

Chicago/Turabian Style: Wang, Yanfeng, Ning Ma, and Zechen Guo. 2024. "Machine Reading Comprehension Model Based on Fusion of Mixed Attention" Applied Sciences 14, no. 17: 7794. https://doi.org/10.3390/app14177794

APA Style: Wang, Y., Ma, N., & Guo, Z. (2024). Machine Reading Comprehension Model Based on Fusion of Mixed Attention. Applied Sciences, 14(17), 7794. https://doi.org/10.3390/app14177794