Embedding a Real-Time Strawberry Detection Model into a Pesticide-Spraying Mobile Robot for Greenhouse Operation

Abstract

1. Introduction

2. Materials and Methods

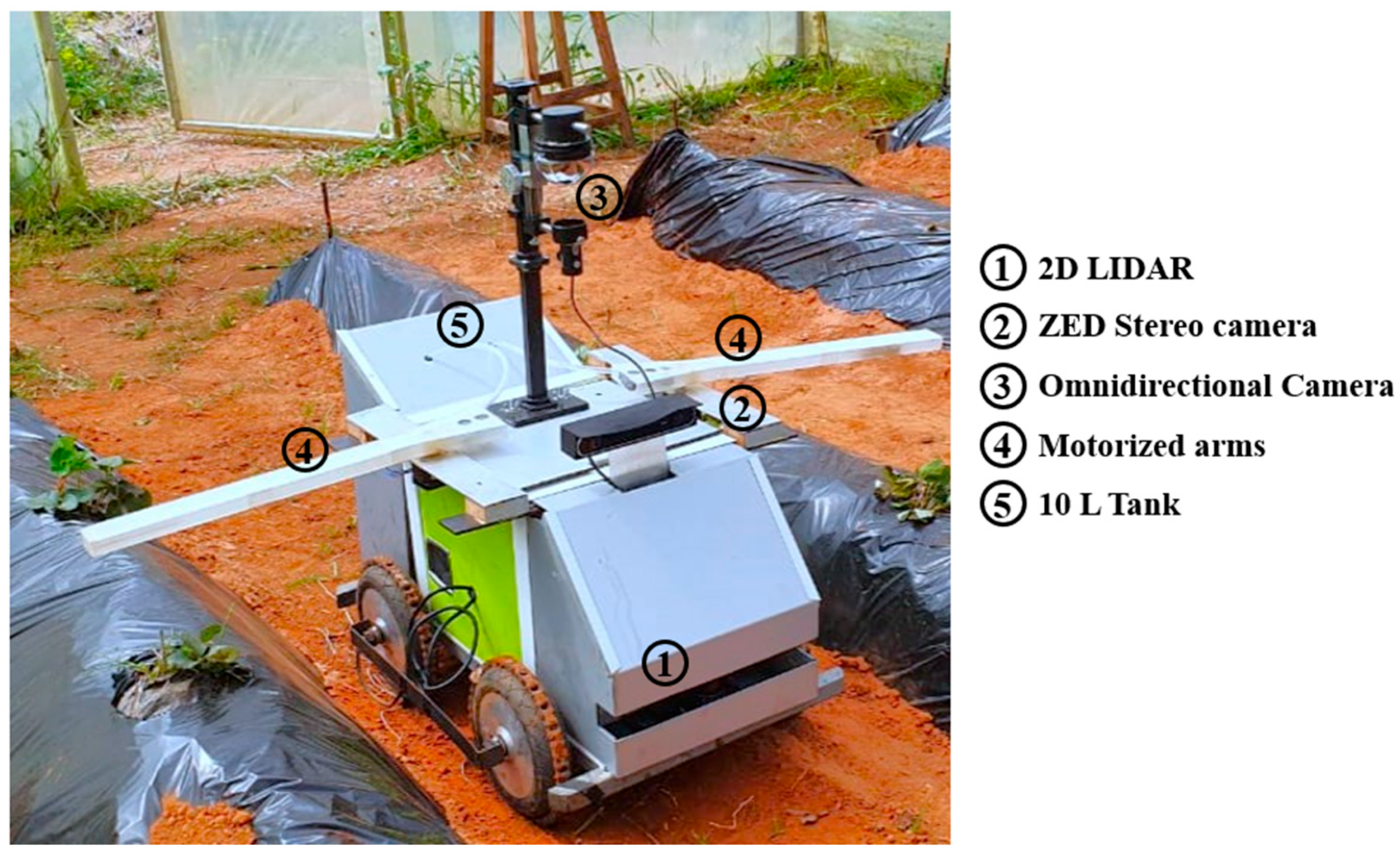

2.1. AgriEco Robot

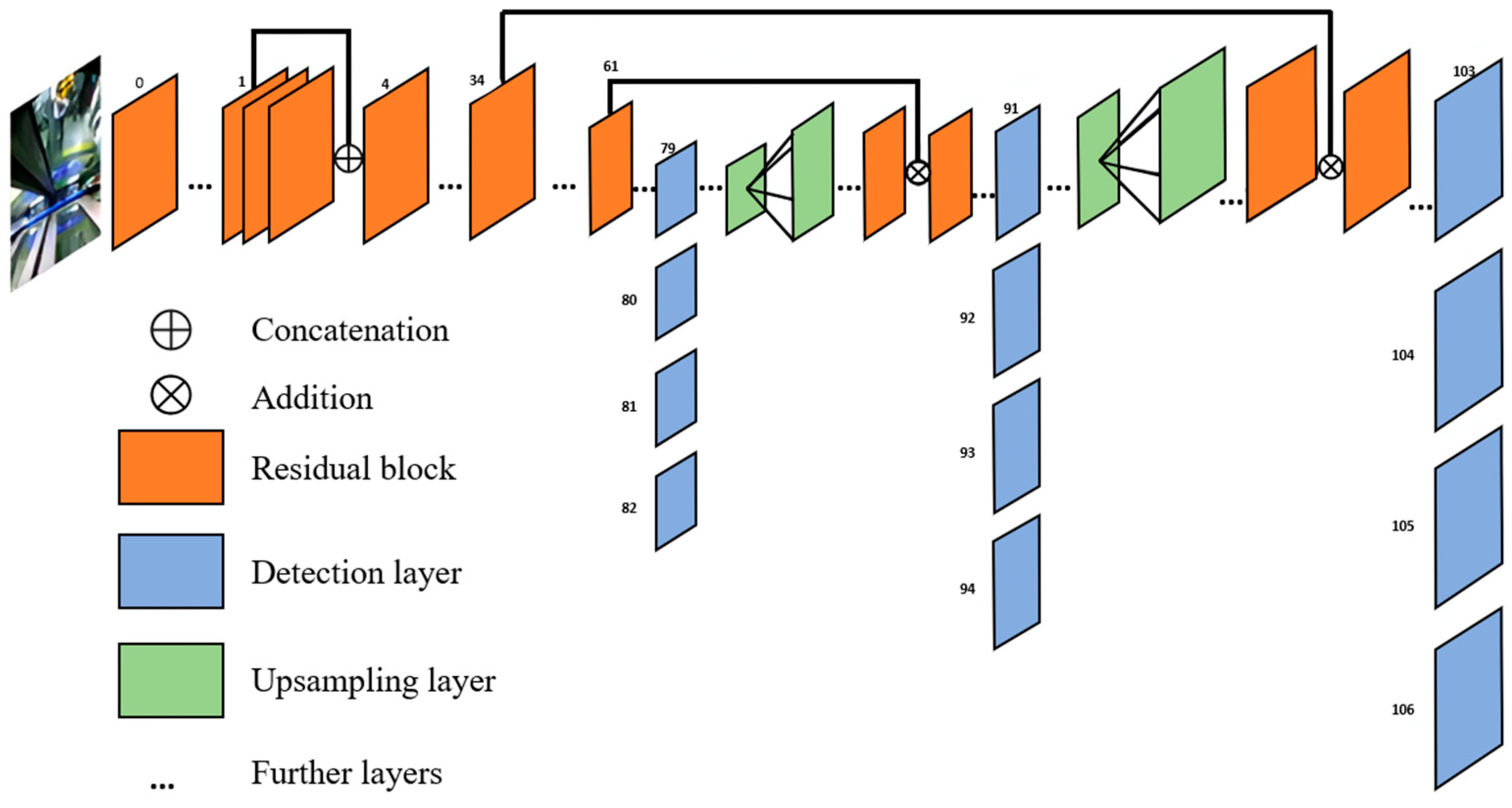

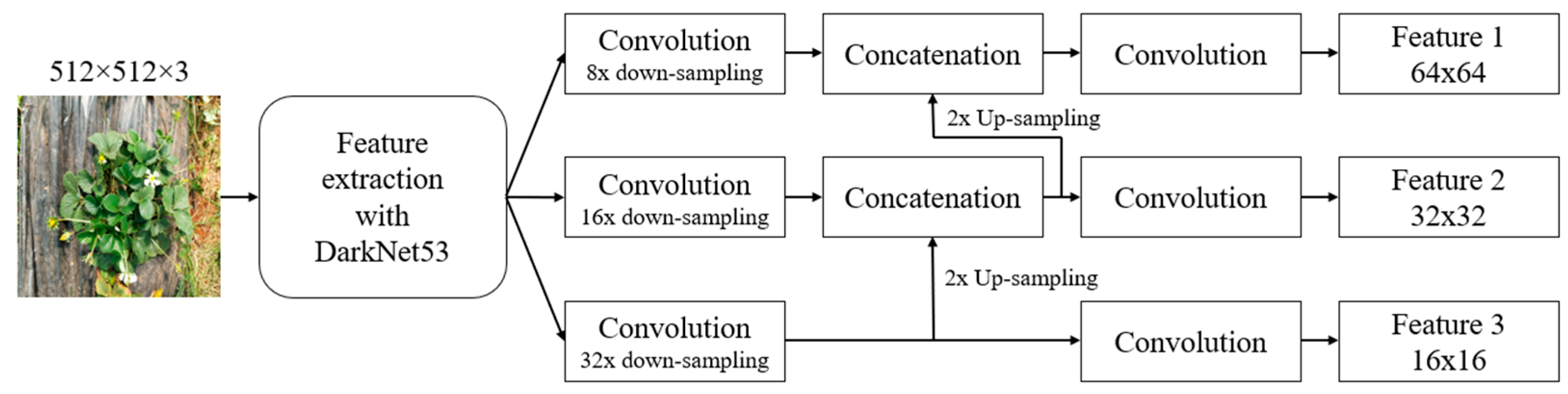

2.2. Deep Learning Model

3. Proposed Method

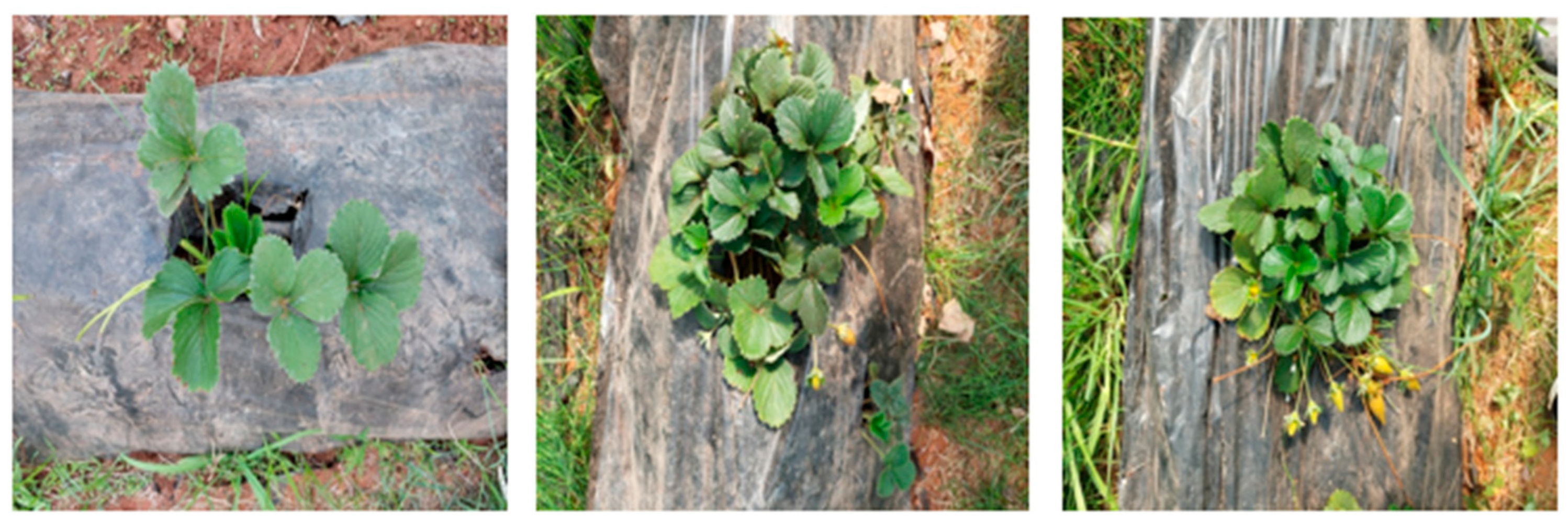

3.1. Data Acquisition

3.2. Data Augmentation

3.3. Data Pre-Processing

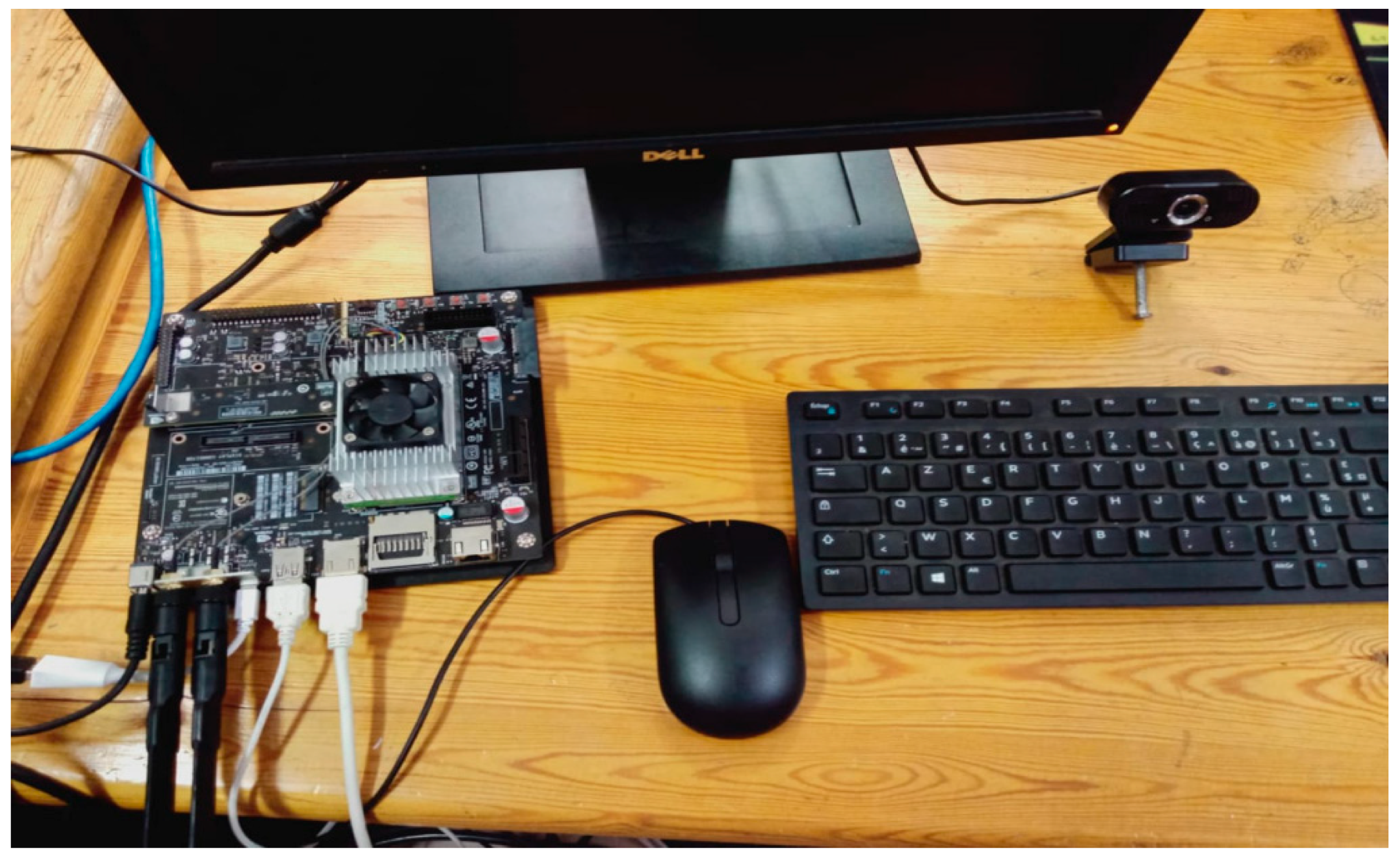

3.4. Running Environment

3.5. Model Training

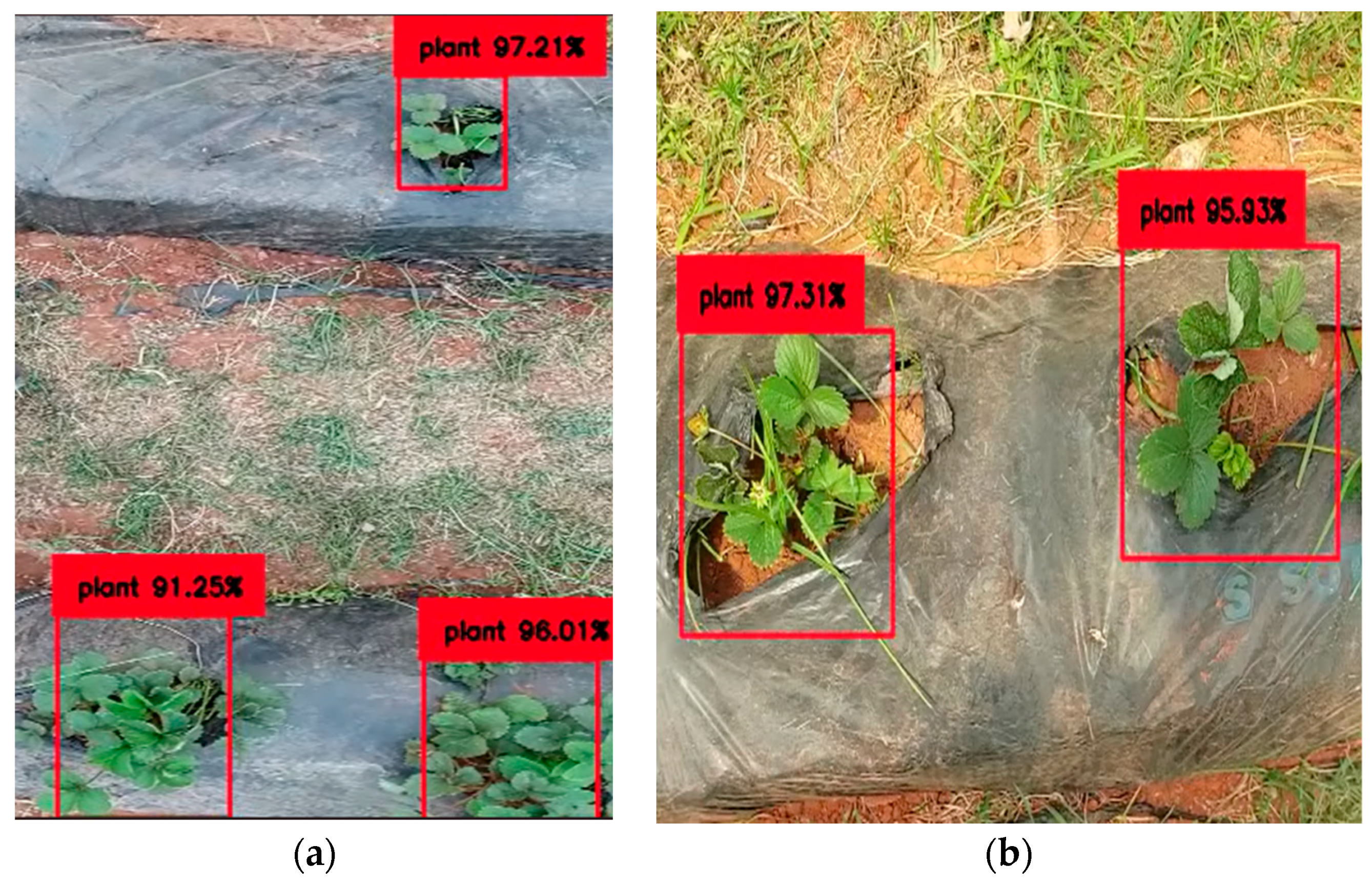

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Food and Agriculture Organization of the United Nations (Ed.) The Future of Food and Agriculture: Trends and Challenges; Food and Agriculture Organization of the United Nations: Rome, Italy, 2017; ISBN 978-92-5-109551-5.

- Morocco—GDP Distribution Across Economic Sectors 2012–2022. Statista. Available online: https://www.statista.com/statistics/502771/morocco-gdp-distribution-across-economic-sectors/ (accessed on 6 February 2024).

- Morocco: Food Share in Merchandise Exports. Statista. Available online: https://www.statista.com/statistics/1218971/food-share-in-merchandise-exports-in-morocco/ (accessed on 6 February 2024).

- Oluwole, V. Morocco’s Fresh Strawberry Exports Generate up to $70 Million in Annual Revenue. Available online: https://africa.businessinsider.com/local/markets/moroccos-fresh-strawberry-exports-generate-up-to-dollar70-million-in-annual-revenue/yrkgkzv (accessed on 6 February 2024).

- Li, Y.; Wang, H.; Dang, L.M.; Sadeghi-Niaraki, A.; Moon, H. Crop pest recognition in natural scenes using convolutional neural networks. Comput. Electron. Agric. 2020, 169, 105174.

- Pierce, F.J.; Nowak, P. Aspects of Precision Agriculture. In Advances in Agronomy; Sparks, D.L., Ed.; Academic Press: Cambridge, MA, USA, 1999; Volume 67, pp. 1–85.

- Hamuda, E.; Glavin, M.; Jones, E. A survey of image processing techniques for plant extraction and segmentation in the field. Comput. Electron. Agric. 2016, 125, 184–199.

- Liu, X.; Chen, S.W.; Liu, C.; Shivakumar, S.S.; Das, J.; Taylor, C.J.; Underwood, J.; Kumar, V. Monocular Camera Based Fruit Counting and Mapping with Semantic Data Association. IEEE Robot. Autom. Lett. 2019, 4, 2296–2303.

- Singh, V.; Misra, A.K. Detection of plant leaf diseases using image segmentation and soft computing techniques. Inf. Process. Agric. 2017, 4, 41–49.

- Malik, M.H.; Zhang, T.; Li, H.; Zhang, M.; Shabbir, S.; Saeed, A. Mature Tomato Fruit Detection Algorithm Based on improved HSV and Watershed Algorithm. IFAC-PapersOnLine 2018, 51, 431–436.

- Wang, X.; Vladislav, Z.; Viktor, O.; Wu, Z.; Zhao, M. Online recognition and yield estimation of tomato in plant factory based on YOLOv3. Sci. Rep. 2022, 12, 8686.

- Treboux, J.; Genoud, D. Improved Machine Learning Methodology for High Precision Agriculture. In Proceedings of the 2018 Global Internet of Things Summit (GIoTS), Bilbao, Spain, 4–7 June 2018; pp. 1–6.

- Sa, I.; Ge, Z.; Dayoub, F.; Upcroft, B.; Perez, T.; McCool, C. DeepFruits: A Fruit Detection System Using Deep Neural Networks. Sensors 2016, 16, 1222.

- Lamb, N.; Chuah, M.C. A Strawberry Detection System Using Convolutional Neural Networks. In Proceedings of the 2018 IEEE International Conference on Big Data (Big Data), Seattle, WA, USA, 10–13 December 2018; pp. 2515–2520.

- Yu, Y.; Zhang, K.; Yang, L.; Zhang, D. Fruit detection for strawberry harvesting robot in non-structural environment based on Mask-RCNN. Comput. Electron. Agric. 2019, 163, 104846.

- He, K.; Gkioxari, G.; Dollar, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; IEEE: New York, NY, USA, 2016; pp. 770–778.

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; IEEE: New York, NY, USA, 2016; pp. 779–788.

- Fu, L.; Feng, Y.; Wu, J.; Liu, Z.; Gao, F.; Majeed, Y.; Al-Mallahi, A.; Zhang, Q.; Li, R.; Cui, Y. Fast and accurate detection of kiwifruit in orchard using improved YOLOv3-tiny model. Precis. Agric. 2021, 22, 754–776.

- Parico, A.I.B.; Ahamed, T. An Aerial Weed Detection System for Green Onion Crops Using the You Only Look Once (YOLOv3) Deep Learning Algorithm. Eng. Agric. Environ. Food 2020, 13, 42–48.

- Liu, G.; Nouaze, J.C.; Touko Mbouembe, P.L.; Kim, J.H. YOLO-Tomato: A Robust Algorithm for Tomato Detection Based on YOLOv3. Sensors 2020, 20, 2145.

- He, Z.; Karkee, M.; Zhang, Q. Detecting and Localizing Strawberry Centers for Robotic Harvesting in Field Environment. IFAC-PapersOnLine 2022, 55, 30–35.

- Xie, Z.; Chen, R.; Lin, C.; Zeng, J. A Lightweight Real-Time Method for Strawberry Ripeness Detection Based on Improved Yolo. Available online: https://ssrn.com/abstract=4570965 (accessed on 6 February 2024).

- Zhang, Y.; Yu, J.; Chen, Y.; Yang, W.; Zhang, W.; He, Y. Real-time strawberry detection using deep neural networks on embedded system (rtsd-net): An edge AI application. Comput. Electron. Agric. 2022, 192, 106586.

- Yang, S.; Zhang, J.; Bo, C.; Wang, M.; Chen, L. Fast vehicle logo detection in complex scenes. Opt. Laser Technol. 2019, 110, 196–201.

- Ghafar, A.S.A.; Hajjaj, S.S.H.; Gsangaya, K.R.; Sultan, M.T.H.; Mail, M.F.; Hua, L.S. Design and development of a robot for spraying fertilizers and pesticides for agriculture. Mater. Today Proc. 2023, 81, 242–248.

- Ma, B.; Hua, Z.; Wen, Y.; Deng, H.; Zhao, Y.; Pu, L.; Song, H. Using an improved lightweight YOLOv8 model for real-time detection of multi-stage apple fruit in complex orchard environments. Artif. Intell. Agric. 2024, 11, 70–82.

- Solimani, F.; Cardellicchio, A.; Dimauro, G.; Petrozza, A.; Summerer, S.; Cellini, F.; Renò, V. Optimizing tomato plant phenotyping detection: Boosting YOLOv8 architecture to tackle data complexity. Comput. Electron. Agric. 2024, 218, 108728.

- Madasamy, K.; Shanmuganathan, V.; Kandasamy, V.; Lee, M.Y.; Thangadurai, M. OSDDY: Embedded system-based object surveillance detection system with small drone using deep YOLO. J. Image Video Proc. 2021, 2021, 19.

- Abanay, A.; Masmoudi, L.; Ansari, M.E.; Gonzalez-Jimenez, J.; Moreno, F.-A. LIDAR-based autonomous navigation method for an agricultural mobile robot in strawberry greenhouse: AgriEco Robot. AIMS Electron. Electr. Eng. 2022, 6, 317–328.

- Abanay, A.; Masmoudi, L.; El Ansari, M. A calibration method of 2D LIDAR-Visual sensors embedded on an agricultural robot. Optik 2022, 249, 168254.

- Min, S.K.; Delgado, R.; Byoung, W.C. Comparative Study of ROS on Embedded System for a Mobile Robot. J. Autom. Mob. Robot. Intell. Syst. 2018, 12, 61–67.

- Amert, T.; Otterness, N.; Yang, M.; Anderson, J.H.; Smith, F.D. GPU Scheduling on the NVIDIA TX2: Hidden Details Revealed. In Proceedings of the 2017 IEEE Real-Time Systems Symposium (RTSS), Paris, France, 5–8 December 2017; pp. 104–115.

- Jiang, P.; Qi, A.; Zhong, J.; Luo, Y.; Hu, W.; Shi, Y.; Liu, T. Field cabbage detection and positioning system based on improved YOLOv8n. Plant Methods 2024, 20, 96.

- Zhou, J.; Hu, W.; Zou, A.; Zhai, S.; Liu, T.; Yang, W.; Jiang, P. Lightweight Detection Algorithm of Kiwifruit Based on Improved YOLOX-S. Agriculture 2022, 12, 7.

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the Computer Vision—ECCV 2014, Zurich, Switzerland, 6–12 September 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 740–755.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. Comput. Vis. Pattern Recognit. 2018, 1804, 1–6.

- Beyaz, A.; Gül, V. YOLOv4 and Tiny YOLOv4 Based Forage Crop Detection with an Artificial Intelligence Board. Braz. Arch. Biol. Technol. 2023, 66, e23220803.

- Li, C.; Zhang, B.; Li, L.; Li, L.; Geng, Y.; Cheng, M.; Xiaoming, X.; Chu, X.; Wei, X. Yolov6: A single-stage object detection framework for industrial applications. In Proceedings of the Twelfth International Conference on Learning Representations (ICLR2024), Vienna, Austria, 7–11 May 2024; Available online: https://openreview.net/forum?id=7c3ZOKGQ6s (accessed on 10 July 2024).

- Ponnusamy, V.; Coumaran, A.; Shunmugam, A.S.; Rajaram, K.; Senthilvelavan, S. Smart Glass: Real-Time Leaf Disease Detection using YOLO Transfer Learning. In Proceedings of the 2020 International Conference on Communication and Signal Processing (ICCSP), Melmaruvathur, India, 28–30 July 2020; pp. 1150–1154.

- Qin, Z.; Wang, W.; Dammer, K.-H.; Guo, L.; Cao, Z. Ag-YOLO: A Real-Time Low-Cost Detector for Precise Spraying with Case Study of Palms. Front. Plant Sci. 2021, 12, 753603.

- Buzzy, M.; Thesma, V.; Davoodi, M.; Mohammadpour Velni, J. Real-Time Plant Leaf Counting Using Deep Object Detection Networks. Sensors 2020, 20, 6896.

- Dai, Y.; Liu, W.; Li, H.; Liu, L. Efficient Foreign Object Detection Between PSDs and Metro Doors via Deep Neural Networks. IEEE Access 2020, 8, 46723–46734.

- Wu, D.; Wang, Y.; Xia, S.-T.; Bailey, J.; Ma, X. Skip Connections Matter: On the Transferability of Adversarial Examples Generated with ResNets. In Proceedings of the 2020 International Conference on Learning Representations, Addis Ababa, Ethiopia, 30 April 2020; Available online: https://openreview.net/forum?id=BJlRs34Fvr (accessed on 17 July 2023).

- Mubashar, M.; Ali, H.; Grönlund, C.; Azmat, S. R2U++: A multiscale recurrent residual U-Net with dense skip connections for medical image segmentation. Neural Comput. Appl. 2022, 34, 17723–17739.

- Peng, Y.; Zhang, L.; Liu, S.; Wu, X.; Zhang, Y.; Wang, X. Dilated Residual Networks with Symmetric Skip Connection for image denoising. Neurocomputing 2019, 345, 67–76.

- Wang, H.; Hu, Z.; Guo, Y.; Yang, Z.; Zhou, F.; Xu, P. A Real-Time Safety Helmet Wearing Detection Approach Based on CSYOLOv3. Appl. Sci. 2020, 10, 6732.

- Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized Intersection over Union: A Metric and A Loss for Bounding Box Regression. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 658–666.

- Bargoti, S.; Underwood, J.P. Image Segmentation for Fruit Detection and Yield Estimation in Apple Orchards. J. Field Robot. 2017, 34, 1039–1060.

- Sun, C.; Shrivastava, A.; Singh, S.; Gupta, A. Revisiting Unreasonable Effectiveness of Data in Deep Learning Era. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; IEEE: New York, NY, USA, 2017; pp. 843–852.

- Tian, Y.; Yang, G.; Wang, Z.; Wang, H.; Li, E.; Liang, Z. Apple detection during different growth stages in orchards using the improved YOLO-V3 model. Comput. Electron. Agric. 2019, 157, 417–426.

- Shorten, C.; Khoshgoftaar, T.M. A survey on Image Data Augmentation for Deep Learning. J. Big Data 2019, 6, 60.

- Skalski, P. Makesense.Ai. Available online: https://github.com/SkalskiP/make-sense (accessed on 18 July 2023).

- Sutanto, A.R.; Kang, D.-K. A Novel Diminish Smooth L1 Loss Model with Generative Adversarial Network. In Proceedings of the Intelligent Human Computer Interaction, Daegu, Republic of Korea, 24–26 November 2020; Singh, M., Kang, D.-K., Lee, J.-H., Tiwary, U.S., Singh, D., Chung, W.-Y., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 361–368.

| YOLO Version | Parameters | Processing Speed (FPS) | mAP (COCO) |
|---|---|---|---|
| YOLOv3 [38] | 61.5 million | ~10–15 FPS | 57.9% |
| YOLOv4-tiny [39] | 6 million | ~25–30 FPS | 36.9% |
| YOLOv5-Small [40] | 7.2 million | ~20–25 FPS | 39.4% |
| YOLOv5-medium [40] | 21.2 million | ~15–20 FPS | 45.4% |

| Component | Specification |
|---|---|
| CPU | Intel® i7 8th Gen |
| GPU | NVIDIA GTX 1070 |
| Frequency | 3.2 GHz |
| RAM | 16 GB |
| Storage | 500 GB SSD |
| Operating system | Windows 10 |
| Programming platform | Python 3.8 |

| Training process | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Best validation loss | 8.75 | 8.43 | 8.69 | 8.40 | 8.33 | 8.00 | 7.75 | 8.25 | 8.10 | 7.94 |
| Epoch | 13 | 17 | 10 | 12 | 8 | 13 | 7 | 15 | 11 | 9 |
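The table of training processes lends itself to a small selection step. The sketch below copies the ten loss and epoch values from the table and picks the run with the lowest best validation loss. Choosing by lowest validation loss is an assumption made for illustration, since the table alone does not say how the final model was chosen, and `select_best_run` is a hypothetical helper name.

```python
# Values copied from the training-process table above (runs 1 to 10).
best_val_loss = [8.75, 8.43, 8.69, 8.40, 8.33, 8.00, 7.75, 8.25, 8.10, 7.94]
best_epoch = [13, 17, 10, 12, 8, 13, 7, 15, 11, 9]


def select_best_run(losses, epochs):
    """Return (run_number, loss, epoch) for the run with the lowest loss.

    Run numbers start at 1 to match the table header.
    Assumption: lower validation loss means a better run.
    """
    idx = min(range(len(losses)), key=losses.__getitem__)
    return idx + 1, losses[idx], epochs[idx]


run, loss, epoch = select_best_run(best_val_loss, best_epoch)
print(f"run {run}: best validation loss {loss} at epoch {epoch}")
```

Under that assumption, the seventh training process would be selected, with a validation loss of 7.75 reached at epoch 7.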

| Hyperparameter | Value |
|---|---|
| Iterations | 8000 |
| Batch size | 4 |
| Learning rate | 0.0001 |
| Epochs | 10 |
| Confidence threshold | 0.5 |
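To illustrate how a confidence threshold of 0.5 is typically applied, the following minimal sketch discards detections whose score falls below it. The `Detection` class, the `filter_detections` helper, and the sample values are all hypothetical; only the threshold comes from the table, and whether the comparison is inclusive (`>=`) is an assumption.

```python
from dataclasses import dataclass
from typing import List, Tuple

CONFIDENCE_THRESHOLD = 0.5  # value taken from the table above


@dataclass
class Detection:
    """Hypothetical container for one detector output."""
    label: str
    confidence: float
    box: Tuple[int, int, int, int]  # (x, y, width, height) in pixels


def filter_detections(detections: List[Detection],
                      threshold: float = CONFIDENCE_THRESHOLD) -> List[Detection]:
    """Keep detections whose confidence is at least the threshold (assumed inclusive)."""
    return [d for d in detections if d.confidence >= threshold]


# Made-up sample detections, for illustration only.
sample = [
    Detection("strawberry", 0.91, (120, 80, 40, 42)),
    Detection("strawberry", 0.48, (300, 210, 35, 38)),
    Detection("strawberry", 0.50, (50, 400, 44, 40)),
]
kept = filter_detections(sample)
print([d.confidence for d in kept])
```

Raising the threshold generally trades recall for precision, so a different value could suit a different spraying policy.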

| Model | Precision | Recall | F1 Score |
|---|---|---|---|
| YOLOv3 | 0.73 | 0.95 | 0.83 |
| YOLOv3-tiny | 0.60 | 0.71 | 0.65 |
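The F1 column can be checked against the standard definition, the harmonic mean of precision and recall, F1 = 2PR / (P + R). The sketch below recomputes it from the precision and recall values in the table; `f1_score` here is a local helper written for this check, not a library call.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall) pairs copied from the table above.
reported = {
    "YOLOv3": (0.73, 0.95),
    "YOLOv3-tiny": (0.60, 0.71),
}

for model, (p, r) in reported.items():
    print(f"{model}: F1 = {f1_score(p, r):.2f}")
```

Rounded to two decimals, this gives 0.83 for YOLOv3 and 0.65 for YOLOv3-tiny, matching the reported F1 scores.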

| Data | Resolution | YOLOv3 mAP | YOLOv3 FPS | YOLOv3 with ONNX mAP | YOLOv3 with ONNX FPS | YOLOv3-Tiny mAP | YOLOv3-Tiny FPS | YOLOv3-Tiny with ONNX mAP | YOLOv3-Tiny with ONNX FPS |
|---|---|---|---|---|---|---|---|---|---|
| Video | 1920 × 1080 | 93.5% | 3 | 96.3% | 6 | 89.5% | 5 | 90.7% | 8 |
| Live video | 640 × 480 | 90.4% | 4 | 94% | 8 | 86.1% | 7 | 88.3% | 10 |
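The frame-rate gain from the ONNX versions can be read off the table as a simple ratio of FPS values. The sketch below computes that ratio for each model and input. The dictionary layout and the `onnx_speedup` helper are illustrative choices, and only the FPS figures come from the table.

```python
# (baseline FPS, ONNX FPS) pairs copied from the results table above.
fps = {
    ("YOLOv3", "Video 1920x1080"): (3, 6),
    ("YOLOv3", "Live video 640x480"): (4, 8),
    ("YOLOv3-Tiny", "Video 1920x1080"): (5, 8),
    ("YOLOv3-Tiny", "Live video 640x480"): (7, 10),
}


def onnx_speedup(baseline_fps: float, onnx_fps: float) -> float:
    """Ratio of ONNX frame rate to baseline frame rate."""
    return onnx_fps / baseline_fps


speedups = {key: round(onnx_speedup(*pair), 2) for key, pair in fps.items()}

for (model, data), ratio in speedups.items():
    print(f"{model} on {data}: {ratio}x")
```

On these figures YOLOv3 roughly doubles its frame rate with ONNX on both inputs, while YOLOv3-Tiny gains about 1.6x on recorded video and about 1.43x on live video.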


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

El Amraoui, K.; El Ansari, M.; Lghoul, M.; El Alaoui, M.; Abanay, A.; Jabri, B.; Masmoudi, L.; Valente de Oliveira, J. Embedding a Real-Time Strawberry Detection Model into a Pesticide-Spraying Mobile Robot for Greenhouse Operation. Appl. Sci. 2024, 14, 7195. https://doi.org/10.3390/app14167195
