AnimalEnvNet: A Deep Reinforcement Learning Method for Constructing Animal Agents Using Multimodal Data Fusion

Abstract

1. Introduction

1. We introduce AnimalEnvNet, a network that combines LSTM, CNN, and attention mechanisms to extract and fuse features from trajectory data and remote sensing images, and that successfully simulates animal behavioural tendencies.

2. Using a deep reinforcement learning framework, we improve the model's capacity to generalise and adapt by letting the agents perceive environmental input and learn animal behaviour strategies.

3. The work addresses shortcomings of conventional animal behaviour modelling methods and offers new insights and approaches for investigating the behavioural patterns of animals.

2. Materials

2.1. Trajectory Data

2.2. Remote Sensing Image Data

3. Methods

1. In the data preprocessing stage, the historical trajectory data and the remote sensing image data are preprocessed as described in Section 3.1.

2. AnimalEnvNet, a method for constructing animal agents through multimodal data fusion, is proposed, and its feasibility for simulating animal behaviour is evaluated.

3. A multi-layer perceptron-based Actor-Critic model is used for policy generation and value evaluation in the reinforcement learning tasks.
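The Actor-Critic step above can be illustrated with a minimal sketch. It is not the authors' implementation: the layer sizes, the observation and action dimensions, the `tanh` activations, and the class names `MLP` and `ActorCritic` are all assumptions made for illustration, and the weights are random rather than trained. It only shows the division of labour: the actor maps an observation to an action, and the critic maps the same observation to a scalar value estimate.

```python
import math
import random


class MLP:
    """Tiny fully connected network with tanh hidden activations."""

    def __init__(self, sizes, rng):
        # One (weights, biases) pair per layer; each weight row feeds one output unit.
        self.layers = []
        for n_in, n_out in zip(sizes[:-1], sizes[1:]):
            scale = 1.0 / math.sqrt(n_in)
            weights = [[rng.uniform(-scale, scale) for _ in range(n_in)]
                       for _ in range(n_out)]
            self.layers.append((weights, [0.0] * n_out))

    def forward(self, x):
        for index, (weights, biases) in enumerate(self.layers):
            x = [sum(w * v for w, v in zip(row, x)) + b
                 for row, b in zip(weights, biases)]
            if index < len(self.layers) - 1:  # no activation on the output layer
                x = [math.tanh(v) for v in x]
        return x


class ActorCritic:
    """Two separate MLPs: a policy head (actor) and a value head (critic)."""

    def __init__(self, obs_dim, action_dim, hidden=16, seed=0):
        rng = random.Random(seed)
        self.actor = MLP([obs_dim, hidden, hidden, action_dim], rng)
        self.critic = MLP([obs_dim, hidden, hidden, 1], rng)

    def act(self, obs):
        # tanh squashes each action component into [-1, 1]
        return [math.tanh(v) for v in self.actor.forward(obs)]

    def value(self, obs):
        return self.critic.forward(obs)[0]


# Hypothetical dimensions: an 8-dimensional observation and a 2-dimensional action.
model = ActorCritic(obs_dim=8, action_dim=2)
obs = [0.1] * 8
action = model.act(obs)
state_value = model.value(obs)
print(action, state_value)
```

In a full training loop the critic's value estimates would be used to compute advantages that weight the actor's policy update.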

3.1. Data Preprocessing

3.1.1. Trajectory Data

3.1.2. Remote Sensing Image Data

| Serial Number | Field Name | Range of Values | Remarks |

|---|---|---|---|

| 1 | Month | 1–12 | One-Hot Encoding |

| 2 | Day | 1–31 | One-Hot Encoding |

| 3 | Lunar day | 1–31 | One-Hot Encoding |

| 4 | Hour | 0–23 | One-Hot Encoding |

| 5 | Minute | 0–59 | One-Hot Encoding |

| 6 | Seconds | / | Normalise |

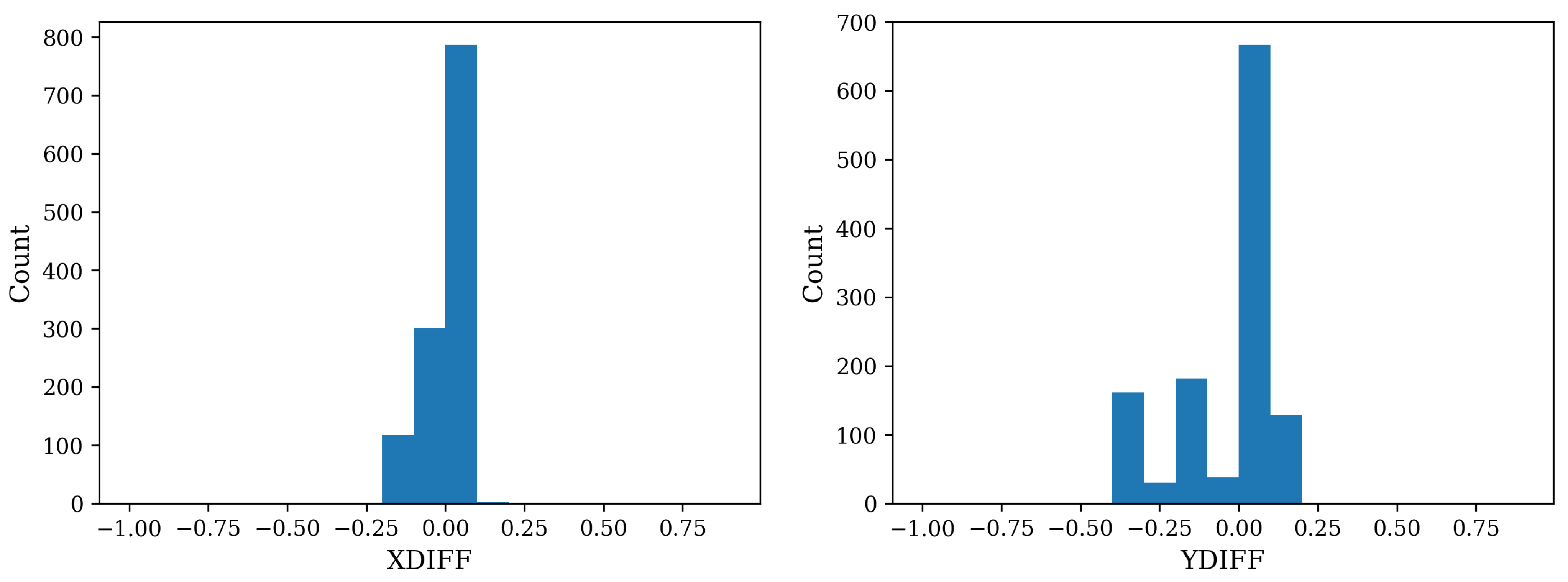

| 7 | XDIFF | / | Normalise |

| 8 | YDIFF | / | Normalise |

| 9 | Height | / | Normalise |
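The field encoding listed in the table can be sketched as follows. The one-hot ranges come from the table; everything else is an assumption for illustration: the table says only "Normalise", so min-max scaling is assumed here, and the sample record, the bounds, and the helper names `one_hot`, `min_max` and `encode_record` are hypothetical.

```python
def one_hot(value, low, high):
    """Return a one-hot list for an integer in the closed range [low, high]."""
    if not low <= value <= high:
        raise ValueError(f"{value} outside [{low}, {high}]")
    vec = [0.0] * (high - low + 1)
    vec[value - low] = 1.0
    return vec


def min_max(value, low, high):
    """Scale value to [0, 1]; low/high are assumed dataset-wide bounds."""
    if high == low:
        return 0.0
    return (value - low) / (high - low)


# Ranges for the one-hot fields, taken from the table.
ONE_HOT_RANGES = {
    "month": (1, 12),
    "day": (1, 31),
    "lunar_day": (1, 31),
    "hour": (0, 23),
    "minute": (0, 59),
}

# Continuous fields that the table marks as "Normalise".
NORMALISED_FIELDS = ("seconds", "xdiff", "ydiff", "height")


def encode_record(record, bounds):
    """Concatenate the one-hot blocks and the normalised scalars into one vector."""
    features = []
    for name, (low, high) in ONE_HOT_RANGES.items():
        features.extend(one_hot(record[name], low, high))
    for name in NORMALISED_FIELDS:
        low, high = bounds[name]
        features.append(min_max(record[name], low, high))
    return features


# Hypothetical record and bounds, for illustration only.
record = {"month": 7, "day": 15, "lunar_day": 10, "hour": 6, "minute": 30,
          "seconds": 30.0, "xdiff": 5.0, "ydiff": -5.0, "height": 100.0}
bounds = {"seconds": (0.0, 60.0), "xdiff": (-10.0, 10.0),
          "ydiff": (-10.0, 10.0), "height": (0.0, 400.0)}
vector = encode_record(record, bounds)
print(len(vector))
```

Under these assumptions each record becomes a 162-dimensional vector: 12 + 31 + 31 + 24 + 60 one-hot entries plus four normalised scalars.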

3.2. AnimalEnvNet Network

3.2.1. Trajectory Feature Extraction

3.2.2. Environmental Feature Extraction

3.2.3. Feature Fusion and Dimensionality Reduction

3.3. Agent Training by Reinforcement Learning

3.3.1. Action Space

3.3.2. Observation Space

3.3.3. Agent Reward Calculation

4. Results

4.1. Experimental Design

4.2. Comparison and Evaluation Results of Different Models

4.3. Results of Reinforcement Training for Agent

4.4. Ablation Study

1. AnimalEnvNet: The complete AnimalEnvNet model with all components included.

2. A: Directly combines the outputs of the LSTM and CNN without using the attention mechanism.

3. B: Replaces the CNN module with simple fully connected layers.

4. C: Replaces the LSTM module with simple fully connected layers.

5. D: Removes the feature fusion and dimensionality reduction module and directly concatenates the outputs of the LSTM and CNN.
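The difference between direct combination and attention-based fusion in these variants can be sketched as below. This is a generic illustration, not the AnimalEnvNet fusion module: the scaled dot-product scoring, the fixed `query` vector (learned in a real model), the equal feature dimensions, and the function names are assumptions.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def concat_fusion(traj_feat, env_feat):
    """Direct combination: join both feature vectors unchanged."""
    return traj_feat + env_feat


def attention_fusion(traj_feat, env_feat, query):
    """Weight each modality by softmax(query . feature / sqrt(d)), then sum."""
    dim = len(query)
    feats = [traj_feat, env_feat]
    scores = [sum(q * f for q, f in zip(query, feat)) / math.sqrt(dim)
              for feat in feats]
    weights = softmax(scores)
    fused = [sum(w * feat[i] for w, feat in zip(weights, feats))
             for i in range(dim)]
    return fused, weights


# Hypothetical 4-dimensional features from the trajectory and image branches.
traj_feat = [1.0, 0.0, 0.0, 0.0]
env_feat = [0.0, 1.0, 0.0, 0.0]
query = [2.0, 0.0, 0.0, 0.0]

joined = concat_fusion(traj_feat, env_feat)
fused, weights = attention_fusion(traj_feat, env_feat, query)
print(len(joined), fused, weights)
```

Concatenation doubles the feature dimension and treats both modalities equally, whereas the attention weights let the model emphasise whichever modality scores higher against the query.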

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Configuration | Model/Parameter |
|---|---|
| Processor | Intel Core i5-9400F |
| Graphics Card | Nvidia Tesla P100 16 GB |
| Memory | 64 GB DDR4 |
| Hard Disk | 1 TB SSD |
| Operating System | Ubuntu 18.04 |
| Deep Learning Framework | PyTorch 2.1.0 |

| Model Name | Architecture | Learning Parameters |
|---|---|---|
| LSTM-basic | 2 LSTM units, Attention, Fully Connected | Loss: MSELoss, LR: 0.001 |
| GANS | Generator and Discriminator | Loss: MSELoss, LR: 0.0002 |
| AnimalEnvNet | Deep reinforcement learning, LSTM, Attention, Fully Connected | Loss: MSELoss, LR: 0.001 |

| Model Name | Training Batch | Model Loss | MAE | Mean | Model Size |
|---|---|---|---|---|---|
| LSTM-basic | 100 | 0.0021 | 102.9 | 109.2 | 2 M |
| GANS | 100 | 0.0007 | 55.5 | 79.5 | 17 MB |
| AnimalEnvNet | 100 | 0.0002 | 28.4 | 64.2 | 18 MB |
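The MAE column can be read as a relative error reduction. The short calculation below uses only the MAE values reported in the table; the helper name `relative_reduction` is introduced here for illustration.

```python
# MAE values copied from the comparison table.
mae = {"LSTM-basic": 102.9, "GANS": 55.5, "AnimalEnvNet": 28.4}


def relative_reduction(baseline, candidate):
    """Fraction by which the candidate's MAE is lower than the baseline's."""
    return (baseline - candidate) / baseline


reductions = {
    name: round(100 * relative_reduction(value, mae["AnimalEnvNet"]), 1)
    for name, value in mae.items()
    if name != "AnimalEnvNet"
}
for name, percent in reductions.items():
    print(f"AnimalEnvNet MAE is {percent}% lower than {name}")
```

On these figures AnimalEnvNet's MAE is roughly 72% lower than LSTM-basic's and roughly 49% lower than the GAN baseline's.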

| Parameter Name | Value | Remarks |
|---|---|---|
| batch-size | 32 | Batch Size |
| learn-rate | 1 × 10⁻² | Learning Rate |
| gamma | 0.99 | Reward Discount Factor |
| buffer_size | 512 | Experience Buffer Size |
| repeat_count | 16 | Number of Network Updates |
| eval_step | 64 | Estimated Number of Steps |
| reward_scale | 1 × 10⁴ | Reward Scaling |
| lambda_gae_adv | 0.95 | Advantage Estimation Parameters |
| lambda_entropy | 0.05 | Exploration Scaling |
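The names `gamma` and `lambda_gae_adv` suggest generalised advantage estimation (GAE), although this section does not state which estimator was used, so the sketch below is an assumption. It computes GAE advantages for a single rollout using the tabulated values 0.99 and 0.95 as defaults. The function name, the toy rewards and values, and the omission of episode-termination handling are illustrative simplifications.

```python
def gae_advantages(rewards, values, last_value, gamma=0.99, lam=0.95):
    """Generalised advantage estimation over one rollout (no termination masks).

    delta_t = r_t + gamma * V(s_{t+1}) - V(s_t)
    A_t     = delta_t + gamma * lam * A_{t+1}
    """
    advantages = [0.0] * len(rewards)
    next_value = last_value
    running = 0.0
    # Walk the rollout backwards so each step can reuse the following advantage.
    for t in reversed(range(len(rewards))):
        delta = rewards[t] + gamma * next_value - values[t]
        running = delta + gamma * lam * running
        advantages[t] = running
        next_value = values[t]
    return advantages


# Toy rollout: three steps with unit reward and a critic that predicts zero.
advantages = gae_advantages(rewards=[1.0, 1.0, 1.0],
                            values=[0.0, 0.0, 0.0],
                            last_value=0.0)
print(advantages)
```

With `lam` close to 1 the estimate leans on observed returns (lower bias, higher variance); with `lam` close to 0 it leans on the critic's one-step prediction.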

| Model Name | MAE | Convergence Rate | Training Time (Seconds/Batch) |
|---|---|---|---|
| AnimalEnvNet | 28.4 | 55 batches | 49 |
| A | 40.2 | 80 batches | 45 |
| B | 55.3 | 100 batches | 35 |
| C | 60.7 | 120 batches | 30 |
| D | 45.1 | 90 batches | 40 |
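The last two columns can be combined into an approximate wall-clock time to convergence. The calculation below assumes that "Convergence Rate" means the number of batches until convergence and that the per-batch training time is constant. Neither is stated explicitly in the table, so the totals are indicative only.

```python
# (batches to convergence, seconds per batch), copied from the ablation table.
ablation = {
    "AnimalEnvNet": (55, 49),
    "A": (80, 45),
    "B": (100, 35),
    "C": (120, 30),
    "D": (90, 40),
}

# Approximate total training time until convergence, in seconds.
time_to_converge = {name: batches * seconds
                    for name, (batches, seconds) in ablation.items()}
fastest = min(time_to_converge, key=time_to_converge.get)

for name, total in time_to_converge.items():
    print(f"{name}: {total} s")
print("fastest:", fastest)
```

Under these assumptions the full model has the highest per-batch cost but still reaches convergence in the least total time (about 2695 s, against 3500 to 3600 s for the ablated variants).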

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, Z.; Wang, D.; Zhao, F.; Dai, L.; Zhao, X.; Jiang, X.; Zhang, H. AnimalEnvNet: A Deep Reinforcement Learning Method for Constructing Animal Agents Using Multimodal Data Fusion. Appl. Sci. 2024, 14, 6382. https://doi.org/10.3390/app14146382
