1. Introduction

Electric vehicles (EVs) have seen a significant increase in adoption over the past decade [1]. Sales of EVs, such as city cars, buses, scooters and e-bikes, are supported by a quest for more energy-efficient urban transport. Furthermore, light-duty electric vehicles fit perfectly with new urban mobility macro trends, such as car and bike sharing [2]. Technological advances in electronic devices and batteries have played a major role in this success in recent years; in particular, lithium-based technology [3,4] has established itself as the most widely adopted choice for energy storage in EVs [5]. The price of lithium-ion batteries has fallen by approximately 97% since their introduction on the market [6], facilitating the market penetration of electric vehicles. Projections of future adoption indicate that EVs could become dominant in urban areas before 2050 [1].

EV performance is strongly influenced by the battery and its battery management system (BMS). The main responsibility of the BMS is to ensure the safety and reliability of the battery by estimating the most relevant states of the battery system, such as its state of charge (SoC) and state of health (SoH) [7]. The BMS collects real-time data on the temperature, voltage and current of the cells embedded in the battery pack, then processes these data with embedded algorithms to estimate SoC and SoH.

SoC measures the amount of charge left inside a battery relative to its capacity. Its correct estimation is essential to ensure the safety of the vehicle and, therefore, the safety of anyone using the vehicle or in its proximity. It can be calculated using Equation (1):

$$\mathrm{SoC}(t) = \frac{Q(t)}{Q_n} \times 100\%, \qquad Q(t) = \int_{0}^{t} I(\tau)\,\mathrm{d}\tau \qquad (1)$$

where $I$ is the charging current, $Q(t)$ is the charge delivered to the battery and $Q_n$ is the total charge the battery can hold [8].
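A BMS samples current at discrete time steps, so Equation (1) is evaluated numerically in practice. The sketch below is a minimal discrete Coulomb counter, assuming current samples in amperes (positive while charging) taken every `dt_s` seconds, trapezoidal integration, a capacity in ampere-hours, and an optional known initial SoC; the function name `coulomb_count_soc` is hypothetical.

```python
import numpy as np

def coulomb_count_soc(current_a, dt_s, capacity_ah, soc0_pct=0.0):
    """Discrete form of Equation (1): integrate the charging current
    (A, positive = charge) sampled every dt_s seconds and express the
    delivered charge as a percentage of the capacity Q_n."""
    current_a = np.asarray(current_a, dtype=float)
    # Trapezoidal rule: charge (coulombs = A*s) delivered between consecutive samples
    increments_c = 0.5 * (current_a[1:] + current_a[:-1]) * dt_s
    charge_c = np.concatenate(([0.0], np.cumsum(increments_c)))
    capacity_c = capacity_ah * 3600.0  # 1 Ah = 3600 C
    return soc0_pct + 100.0 * charge_c / capacity_c
```

For example, `coulomb_count_soc([60.0] * 3601, dt_s=1.0, capacity_ah=60.0)` ends at 100%: one hour at 60 A delivers the full 60 Ah. Any bias in `current_a` is integrated along with the signal, which is the error-accumulation problem discussed below.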

SoC plays a key role in the optimized management of the vehicle’s electrical energy, enabling efficient use of battery power. However, the SoC cannot be observed directly at low cost, at least from the consumer perspective, and its relationship with the observable physical quantities of the battery is non-linear. Owing to the importance and complexity of the estimation process, SoC prediction has been the focus of numerous recent studies.

Traditional estimation methods not based on machine learning (ML) techniques have shown numerous limitations. One of the simplest approaches to estimate SoC is the Coulomb Counting (CC) method, which has been adopted for small electrical devices because of its low computational cost. However, the conventional CC algorithm has been deemed unsuitable for online SoC estimation because of two main problems: the initial value of the SoC must be known, and errors tend to accumulate heavily [9]. Furthermore, aging and temperature can affect the accuracy of the SoC calculation by varying the Coulomb efficiency. SoC estimation via the Ampere-hour (Ah) counting method has also been widely used because of its low computational complexity. Since this algorithm relies on current measurement, current-sensor drift and current measurement error are among its main problems: the resulting error grows over time, and the estimate is not reliable without re-calibration [10]. In general, it has been suggested that acceptable accuracy through Ah counting requires re-calibration at intervals not exceeding 10 days, which is not usually feasible in automotive applications.
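The need for periodic re-calibration follows from simple arithmetic: a constant current-sensor offset is integrated like any other current, so the SoC error grows linearly with time. A minimal sketch, assuming a hypothetical 0.05 A offset on a 60 Ah battery (illustrative values, not measured ones) and ignoring Coulomb-efficiency effects:

```python
def soc_drift_pct(offset_a, hours, capacity_ah):
    """SoC error (percentage points) accumulated by Ah counting when the
    current sensor reads a constant offset_a amperes too high or too low."""
    return 100.0 * offset_a * hours / capacity_ah

# Hypothetical example: 0.05 A sensor bias on a 60 Ah battery
for days in (1, 5, 10):
    error = soc_drift_pct(0.05, 24 * days, 60.0)
    print(f"{days:>2} day(s): {error:.1f} % SoC error")
```

Under these assumptions the error grows by about 2 percentage points per day of continuous operation, which illustrates why long intervals without re-calibration are problematic.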

SoC estimation methods based on closed-loop models, such as filter- or observer-based approaches, overcome the shortcomings of traditional approaches [11]. However, these algorithms rely on a physical model of the battery, and obtaining an accurate model of the electrochemical dynamics of lithium-ion batteries is extremely challenging [12]. In particular, these approaches consist of two steps: physical modeling of the battery and implementation of an algorithm such as an Extended Kalman Filter (EKF), Unscented Kalman Filter (UKF) or Luenberger Observer (LO). LO estimation performance may deteriorate considerably for strongly non-linear systems such as batteries, because the observer is linear [13]. The same shortcoming applies to a simple linear Kalman Filter (KF). The EKF was therefore proposed: it linearizes the battery model through a Taylor-series expansion, capturing its non-linearities but introducing potentially large truncation errors [14]. The UKF reduces the error caused by linearization, but it can suffer from a mismatch between the noise distribution assumed a priori and the true distribution of the real non-linear system, adversely affecting SoC predictions [15]. Although model-based techniques for SoC estimation are powerful, they involve theoretical and practical complications. From a theoretical point of view, these techniques rely on physical and chemical theories, such as electrochemical reaction or polarization, that consist of numerous complex equations and functions [16]. On the practical side, a domain expert must spend a long time developing an accurate and robust physical model, because accuracy depends on how well the model replicates the real system, which is extremely complex in the case of batteries. The more accurate the model, the more effort must be put into the design of the control system. Furthermore, model-based techniques have long online computation times because complex calculations, such as solving partial differential equations, are slow [17]. Model parameter identification is also usually a lengthy and expensive process. Consequently, model-based approaches are often impractical to implement.
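To make the two-step structure concrete (a battery model plus a filter), the sketch below is a minimal scalar EKF. It assumes a deliberately simplified, hypothetical cell model: SoC is the only state, Coulomb counting is the process model, and the terminal voltage is V = OCV(SoC) - R0*I with a made-up polynomial OCV curve. The cell capacity, R0 and the noise variances `q` and `r` are illustrative placeholders; a real BMS would use an identified ECM with RC branches. The linearization mentioned above happens in `docv_dsoc`, the measurement Jacobian.

```python
import numpy as np

# Hypothetical cell parameters (illustrative only, not identified from data)
CAPACITY_AS = 2.5 * 3600.0  # 2.5 Ah cell, in ampere-seconds
R0 = 0.05                   # ohmic resistance [ohm]

def ocv(soc):
    """Hypothetical monotonic open-circuit-voltage curve, soc in [0, 1]."""
    return 3.2 + 0.9 * soc - 0.4 * soc**2 + 0.3 * soc**3

def docv_dsoc(soc):
    """Derivative of ocv(): the measurement Jacobian H used by the EKF."""
    return 0.9 - 0.8 * soc + 0.9 * soc**2

def ekf_step(x, p, current_a, v_meas, dt_s, q=1e-7, r=1e-4):
    """One predict/update cycle; current_a > 0 means discharge."""
    # Predict: Coulomb counting is linear in the state, so F = 1
    x_pred = x - current_a * dt_s / CAPACITY_AS
    p_pred = p + q
    # Update: linearize the non-linear voltage model around x_pred
    h = docv_dsoc(x_pred)
    v_pred = ocv(x_pred) - R0 * current_a
    k = p_pred * h / (h * p_pred * h + r)
    x_new = x_pred + k * (v_meas - v_pred)
    p_new = (1.0 - k * h) * p_pred
    return x_new, p_new

def simulate(n_steps=2000, dt_s=1.0, true_soc0=0.9, est_soc0=0.5, seed=0):
    """Constant 2 A discharge with 10 mV voltage noise and a wrong initial guess."""
    rng = np.random.default_rng(seed)
    x_true, x_est, p = true_soc0, est_soc0, 0.1
    for _ in range(n_steps):
        current = 2.0
        x_true -= current * dt_s / CAPACITY_AS
        v = ocv(x_true) - R0 * current + rng.normal(0.0, 0.01)
        x_est, p = ekf_step(x_est, p, current, v, dt_s)
    return x_true, x_est

x_true, x_est = simulate()
print(f"true SoC {x_true:.3f}, EKF estimate {x_est:.3f}")
```

Starting from a wrong initial SoC (0.5 instead of 0.9), the voltage correction pulls the estimate onto the true trajectory, something plain Coulomb counting cannot do. The quality of that correction, however, depends entirely on how faithful `ocv` and `R0` are, which is the modeling burden described above.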

In contrast, data-driven SoC estimation can be used successfully without detailed knowledge of the processes captured by a physical battery model [18]. This implies a shorter development time, as it does not require building a complex mathematical model or understanding complex chemical reactions. In addition, in data-driven approaches parametrization is performed automatically through self-learning mechanisms [19], which are also effective against uncertainties in measurement offsets and noise, whereas the performance of model-based methods deteriorates because of uncertainties caused by measurement noise. Thanks to continuous improvements in computational power and data availability, further ML techniques were developed, such as Deep Learning [20], which has proven to outperform traditional ML models in numerous tasks [21]. Among the data-driven models used to estimate SoC, artificial neural network (ANN) approaches have been found to be more accurate than traditional ML models such as support vector machines [22]. The increasing use of ANNs in research is also due to their strong ability to fit the dynamics of non-linear systems and to the fact that they require less understanding of the mechanisms behind the prediction. Feed-forward networks (FNNs) have been outperformed by more sophisticated recurrent ANNs [23], owing to the latter's greater ability to retain the sequential information typical of the data used to predict the SoC; simple FNNs have shown poor performance on time-series data [24]. The major limitation of Deep Learning approaches is the large amount of data needed to train the model. Data for monitoring the batteries of electric vehicles have been collected and organised into datasets by means of new-generation sensors strategically placed in the battery pack. Such sensor systems allow large amounts of data to be stored during the use of electric vehicles and then made available for training data-driven techniques [25].

However, it is important to remember that the computational capacity of embedded systems deployed in EVs is limited, while accurate, low-latency SoC estimation is of paramount practical importance. Numerous optimisation techniques have recently been developed to enable the successful deployment of ANNs on embedded devices, such as microcontrollers. For instance, 8-bit quantisation reduces the memory footprint of the model weights to one quarter of that required with 32-bit floating point (fp32), making it possible to combine next-generation edge devices with Artificial Intelligence (AI) capabilities [26].
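The factor of four comes directly from storing each weight in 1 byte instead of 4. A minimal NumPy sketch of per-tensor affine (asymmetric) int8 quantisation, assuming a hypothetical random 100 × 64 weight matrix; production toolchains such as TensorFlow Lite use related per-tensor or per-channel schemes, so this illustrates the principle rather than any specific converter.

```python
import numpy as np

def quantize_int8(w):
    """Per-tensor affine quantisation: w ~= scale * (q - zero_point)."""
    # The representable range must include 0 so that zero is exact
    w_min, w_max = min(float(w.min()), 0.0), max(float(w.max()), 0.0)
    scale = (w_max - w_min) / 255.0
    if scale == 0.0:
        scale = 1.0  # all-zero tensor: any scale works
    zero_point = int(round(-128 - w_min / scale))
    q = np.clip(np.round(w / scale) + zero_point, -128, 127).astype(np.int8)
    return q, scale, zero_point

def dequantize(q, scale, zero_point):
    return scale * (q.astype(np.float32) - zero_point)

rng = np.random.default_rng(0)
weights = rng.normal(0.0, 0.1, size=(100, 64)).astype(np.float32)  # hypothetical layer
q, scale, zp = quantize_int8(weights)
print("fp32 bytes:", weights.nbytes, "int8 bytes:", q.nbytes)  # 25600 vs 6400
print("max abs error:", float(np.abs(dequantize(q, scale, zp) - weights).max()))
```

The reconstruction error is bounded by roughly one quantisation step (`scale`), which is why post-training quantisation usually costs little accuracy for well-conditioned weight distributions.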

Technological progress in low-cost BMS hardware components, such as sensors to measure physical properties of interest and microcontrollers (MCUs) to process signals [27], has made the application of edge AI techniques viable [28]. AI algorithms deployed on MCUs make it possible to perform accurate SoC inference on board. Edge AI avoids the use of cloud resources to run AI models: because the data never leave the edge device where they are collected to feed ML inference workloads, it improves privacy and scalability and reduces power consumption, memory and latency. A central feature of edge computing is that computation is moved to the edge of the Internet of Things (IoT) network, onto resource-constrained embedded devices that are not equipped to run computationally intensive tasks as the cloud does [29].

In recent years, there has been growing attention towards the field of tiny machine learning (TinyML), which aims to combine the powerful inferential capabilities of ANNs with the computational efficiency of ultra-low-power embedded devices [30]. The cornerstone of TinyML is to reduce the number of ANN parameters to the minimum without drastically compromising performance, so as to obtain accurate results with much lower latency than cloud computing. The TinyML Foundation [31] was established in 2019 to federate a community that eases the adoption of ML approaches at the edge on resource-limited, low-power (under 1 mW) embedded devices, such as MCUs. Therefore, this research focused on the study of tiny ANNs in resource-constrained devices.

This paper is structured as follows. In

Section 3, the specific challenge guiding this research is defined. Subsequently, in

Section 2, a review of the existing literature is performed. In

Section 4, the contribution to the field is presented;

Section 5 describes the dataset which shapes the problem under consideration;

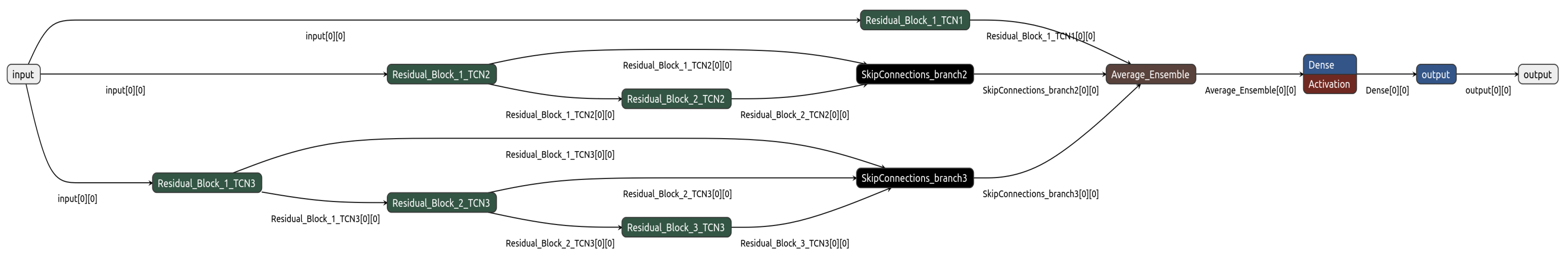

Section 6 describes the AI topologies subject of accuracy investigations by this paper;

Section 7 and

Section 9 are dedicated to the experimental and deployability analysis, respectively;

Section 12 is dedicated to study computational complexity and inference time required to compute SoC for each cell of the battery pack;

Section 13 lists main findings gathered by this work and proposes some future developments.

5. Dataset Description

The data on which the models were trained and tested were derived from the public IEEE DataPort dataset, composed of data from 72 real driving cycles of a BMW i3 powered by a battery with a capacity of 60 Ah [68]. This data source contains data not only on driving behaviour but also on the car’s secondary services, such as air conditioning, and on the environmental conditions to which the vehicle was exposed. The existing literature suggests that these three factors influence the energy consumption of EVs [69,70,71]; therefore, this work relied on this dataset. Each trip had a varying number of time steps, and each step consisted of 47 features:

environment data, such as temperature and elevation;

vehicle data, such as velocity and throttle;

battery data, such as voltage, current, temperature and SoC;

heating circuit data, such as internal temperature and heating power.

Of the IEEE DataPort dataset, only the cycles belonging to category B were consistent; therefore, trips in category A were not considered in this study. In addition, driving cycles in which the battery was recharged during the trip were discarded: as shown in Figure 1, some trips recorded an increase in SoC over time. Eventually, the category B trips chosen to train the models were numbered as follows: 01, 06, 14, 15, 17, 18, 19, 22, 30, 35. Two test sets, independent of the training data, were created to evaluate the performance of the models on unseen data: the first contained cycles 10 and 16, the second cycles 10 and 37. The final dataset used to train the tiny ANNs consisted of 191,203 instances. The first test dataset included 35,517 instances, while the second had 34,403 instances.

To predict the SoC, feature selection was performed on the initial 47 features characterising each observation. In particular, each feature was plotted to verify the presence or absence of anomalies. Subsequently, the correlation between the different variables was studied (Figure 2) to identify and remove highly correlated variables, thus improving the computational efficiency of the training phase without losing relevant information.
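A minimal pandas sketch of correlation-based pruning, assuming a hypothetical absolute Pearson-correlation threshold of 0.9 and a greedy rule that keeps the first column of each highly correlated group. The text does not state the threshold used, and the final choice of features also relied on inspection, so this illustrates the idea rather than reproducing that selection. The data below are synthetic; only the column names echo the dataset.

```python
import numpy as np
import pandas as pd

def drop_correlated(df: pd.DataFrame, threshold: float = 0.9) -> list:
    """Return the columns to keep after greedily dropping any column whose
    absolute Pearson correlation with an already-kept column exceeds threshold."""
    corr = df.corr().abs()
    keep = []
    for col in corr.columns:
        if all(corr.loc[col, k] <= threshold for k in keep):
            keep.append(col)
    return keep

# Synthetic example: three heating-power signals that track each other closely
rng = np.random.default_rng(0)
base = rng.uniform(0, 5000, size=500)
df = pd.DataFrame({
    "Heating Power CAN": base,
    "Heating Power Lin": base + rng.normal(0, 10, size=500),
    "Requested Heating Power": 0.95 * base,
    "Ambient Temperature": rng.normal(10, 5, size=500),
})
print(drop_correlated(df))  # the two redundant heating signals are dropped
```

The greedy order matters: whichever of two correlated columns appears first is kept, so in practice the column order (or a domain-driven preference) decides which representative survives.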

As explained in [72], the BMW i3 featured a 96-cell battery pack. To model the battery behaviour accurately, an Equivalent Circuit Model (ECM) including ohmic resistance was defined at the pack level. The reference model was parameterised using measurement data and further tests. Separate measurements were conducted to determine the static effect of the Open Circuit Voltage (OCV), which is crucial for accurately estimating the SoC. Charge and discharge measurements were performed at low currents, below 3 A, to minimise dynamic effects. Charging was carried out at an average current of 2.8 A and discharging at an average of 2.5 A, with additional load from vehicle systems such as headlights and ventilation. The collected data were used to build a look-up table dependent only on the SoC, which was initially determined using an Ah counting method. Therefore, the reference SoC of the BMW i3 battery pack was obtained through a combination of physical measurements, such as current and voltage during charge/discharge cycles, and subsequent modelling with an ECM that integrated dynamic and static effects to represent the battery behaviour accurately.
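As a hedged illustration of how an SoC-only OCV look-up table combines with an ohmic resistance in a pack-level ECM, the sketch below interpolates a table and applies V = OCV(SoC) - R0*I. The table values and `R0_OHM` are hypothetical placeholders chosen to be plausible for a 96-cell series pack, not the BMW i3 parameters from [72]; dynamic (RC) effects are omitted.

```python
import numpy as np

# Hypothetical pack-level OCV look-up table (SoC in %, OCV in V); placeholder values
SOC_TABLE = np.array([0.0, 20.0, 40.0, 60.0, 80.0, 100.0])
OCV_TABLE = np.array([320.0, 345.0, 355.0, 365.0, 380.0, 395.0])
R0_OHM = 0.1  # hypothetical pack ohmic resistance

def ocv_from_soc(soc_pct):
    """Static OCV effect: linear interpolation in the SoC-only look-up table."""
    return np.interp(soc_pct, SOC_TABLE, OCV_TABLE)

def terminal_voltage(soc_pct, current_a):
    """Ohmic-only ECM: a discharge current (positive) lowers the terminal voltage."""
    return ocv_from_soc(soc_pct) - R0_OHM * current_a

def soc_from_ocv(ocv_v):
    """Inverse look-up, valid because the table is monotonic (e.g. after a rest)."""
    return np.interp(ocv_v, OCV_TABLE, SOC_TABLE)

print(terminal_voltage(50.0, 100.0))  # 360 V OCV minus a 10 V ohmic drop
print(soc_from_ocv(360.0))
```

The inverse look-up is why low-current OCV measurements matter: the flatter the table, the more a small voltage error shifts the inferred SoC.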

The correlation matrix of the 47 features (Figure 2) showed a high correlation among all features belonging to the vehicle temperature measurements, excluding the external ambient and battery temperatures. In addition, other combinations of variables were strongly correlated, such as Heating Power CAN, Heating Power Lin and Requested Heating Power. To avoid the multicollinearity problem [73], 10 features were chosen to tackle the regression task. Consequently, every sample in both the training and test datasets consisted of 10 features and the SoC ground-truth target. Each instance represented a distinct point in time during the driving journey and thus provided a snapshot of the relevant data collected from the vehicle at that instant. The 10 features characterising each observation are described as follows; their correlation matrix is shown in Figure 3:

Battery Current [A]: this column indicated the amount of electric current flowing through the vehicle’s battery. Electric current is measured in amperes (A) and represents the rate of flow of electric charge within the battery.

Battery Voltage [V]: it referred to the electrical potential difference across the terminals of the vehicle’s battery. It is measured in volts (V) and is related, non-linearly, to the level of charge stored in the battery.

Battery Temperature [°C]: this feature provided information about the temperature of the vehicle’s battery in degrees Celsius (°C).

Velocity [km/h]: it represented the speed of the vehicle and is measured in kilometers per hour (km/h).

Throttle [%]: throttle percentage indicated the position of the accelerator pedal as a percentage of its full range. It reflects the driver’s input in terms of acceleration or deceleration of the vehicle.

Requested Heating Power [W]: this column represented the amount of power requested for heating purposes in the vehicle, measured in watts (W).

Heater Voltage [V]: it referred to the electrical potential difference across the heater component in the vehicle, measured in volts (V).

Elevation [m]: it indicated the height of the vehicle’s location above sea level and is measured in meters (m).

Ambient Temperature [°C]: it represented the temperature of the surrounding environment outside the vehicle, measured in degrees Celsius (°C).

Cabin Temperature Sensor [°C]: this feature indicated the temperature measured by a sensor located inside the vehicle cabin, measured in degrees Celsius (°C).

Both the training and test data were normalised with the MinMaxScaler approach: since the features had different ranges of values, scaling had a positive effect on the predictive capabilities of the supervised learning models developed. In particular, the scaling statistics were computed on the training dataset and the same statistics were then used to scale the test data, so that the parameters of the transformation were identical in the training and testing phases. Furthermore, the datasets were processed to generate windows of exactly 100 consecutive samples. In this way, the pre-processed training and test datasets contained windows of 100 consecutive observations, each characterised by 10 features. As a consequence, the model input was a tensor of shape 100 × 10.
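The preprocessing can be sketched in NumPy as min-max scaling fitted on the training set only (the same transformation as scikit-learn's `MinMaxScaler` with its default (0, 1) range), followed by sliding windows of 100 consecutive samples. Assumptions not stated in the text: a stride of 1, windows that do not cross trip boundaries, and a target equal to the SoC at the last step of each window. The trips below are synthetic stand-ins.

```python
import numpy as np

WINDOW = 100  # consecutive samples per input window, as in the text

def fit_minmax(train):
    """Per-feature minimum and range computed from the TRAINING data only."""
    data_min = train.min(axis=0)
    data_range = train.max(axis=0) - data_min
    data_range[data_range == 0] = 1.0  # guard against constant features
    return data_min, data_range

def apply_minmax(x, data_min, data_range):
    return (x - data_min) / data_range

def make_windows(features, soc, window=WINDOW):
    """Stride-1 windows of shape (window, n_features); target = SoC at the last step."""
    n = len(features) - window + 1
    x = np.stack([features[i:i + window] for i in range(n)])
    y = soc[window - 1:]
    return x, y

rng = np.random.default_rng(0)
train_trip = rng.normal(size=(1000, 10))   # synthetic trip: 1000 steps x 10 features
test_trip = rng.normal(size=(300, 10))
train_soc = np.linspace(90.0, 60.0, 1000)  # synthetic SoC traces
test_soc = np.linspace(80.0, 70.0, 300)

data_min, data_range = fit_minmax(train_trip)  # statistics from training data only
x_train, y_train = make_windows(apply_minmax(train_trip, data_min, data_range), train_soc)
x_test, y_test = make_windows(apply_minmax(test_trip, data_min, data_range), test_soc)
print(x_train.shape, x_test.shape)  # (901, 100, 10) (201, 100, 10)
```

Because the test set reuses the training statistics, its scaled values may fall slightly outside [0, 1]; that is expected and avoids leaking test information. For the full 191,203-instance training set, a view-based approach such as `numpy.lib.stride_tricks.sliding_window_view` avoids materialising every window copy.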

7. Experiments and Accuracy Results

All experiments were conducted on Google Colab (CPU runtime) in a Python 3.10.12 environment with the following library versions:

keras == 2.15.0,

numpy == 1.25.2,

pandas == 2.0.3,

scikit-learn == 1.2.2,

scipy == 1.11.4,

TensorFlow == 2.15.0.

In addition, the following environment variables were set:

TF_DETERMINISTIC_OPS, introduced to ensure determinism in operations, i.e., to achieve the same result each time the program was executed with the same inputs.

TF_NUM_INTRAOP_THREADS, introduced to limit the number of threads to 1 in parallel execution within individual operations.

TF_NUM_INTEROP_THREADS, introduced to limit the number of threads to 1 in parallel execution between multiple operations.

OMP_NUM_THREADS, introduced to limit the number of threads used by OpenMP to 1.

The seeds of the pseudorandom number generators and the environment variables described above were set to ensure reproducibility and consistency of each model’s performance.
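A minimal sketch of this setup, assuming the value "1" for every variable and a hypothetical seed of 42 (the text does not report the seed). The variables must be set before TensorFlow is imported. With TensorFlow 2.7 or later, `tf.keras.utils.set_random_seed(seed)` additionally seeds Python, NumPy and TensorFlow in one call; it is only mentioned in a comment here so the sketch runs without TensorFlow installed.

```python
import os
import random

import numpy as np

SEED = 42  # hypothetical value; the seed actually used is not reported

def configure_reproducibility(seed=SEED):
    """Set the determinism/threading variables listed above and seed the RNGs.
    Must run BEFORE `import tensorflow` for the environment variables to apply."""
    os.environ["TF_DETERMINISTIC_OPS"] = "1"
    os.environ["TF_NUM_INTRAOP_THREADS"] = "1"
    os.environ["TF_NUM_INTEROP_THREADS"] = "1"
    os.environ["OMP_NUM_THREADS"] = "1"
    random.seed(seed)
    np.random.seed(seed)

configure_reproducibility()
# Afterwards: `import tensorflow as tf` and tf.keras.utils.set_random_seed(SEED)
```

Restricting all thread pools to one thread trades training speed for bit-for-bit repeatability, since parallel reductions can otherwise sum floating-point values in a different order on each run.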

Table 1 shows the number of parameters of each model in the first column and the ratio between the amount of training data and the number of parameters in the second column.

To add robustness to the accuracy values achieved during the training phase and to reduce the risk of overfitting, K-fold cross-validation (CV) was adopted. The training dataset was divided into five folds, and each model was therefore trained five times: each run used four folds for training and the remaining fold for validation, with a different validation fold for each run. The Adam optimiser was chosen as the stochastic gradient-based optimisation algorithm, and the Mean Squared Error (MSE) was used as the loss function, as appropriate for a regression problem. To further reduce the risk of overfitting, early stopping on the loss computed on the validation fold was applied, with a patience of 50 epochs.
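The training protocol can be sketched without a deep learning framework: a 5-fold index split plus an early-stopping rule with a patience of 50 on the validation loss. In Keras, the latter corresponds to `keras.callbacks.EarlyStopping(monitor="val_loss", patience=50)`. The contiguous, unshuffled fold layout is an assumption (the text does not say whether folds were shuffled), and `kfold_indices` and `EarlyStopper` are hypothetical names.

```python
import numpy as np

def kfold_indices(n_samples, k=5):
    """Yield (train_idx, val_idx) for k contiguous, unshuffled folds."""
    folds = np.array_split(np.arange(n_samples), k)
    for i in range(k):
        val_idx = folds[i]
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
        yield train_idx, val_idx

class EarlyStopper:
    """Stop when the validation loss has not improved for `patience` epochs."""
    def __init__(self, patience=50):
        self.patience = patience
        self.best = np.inf
        self.best_epoch = -1
        self.wait = 0

    def should_stop(self, epoch, val_loss):
        if val_loss < self.best:
            self.best, self.best_epoch, self.wait = val_loss, epoch, 0
        else:
            self.wait += 1
        return self.wait >= self.patience

# Synthetic loss curve: improves until epoch 30, then plateaus
stopper = EarlyStopper(patience=50)
for epoch in range(200):
    if stopper.should_stop(epoch, 1.0 / (1 + min(epoch, 30))):
        print("stopped at epoch", epoch, "best epoch", stopper.best_epoch)
        break
```

The average of the five per-fold validation MSEs (as reported in Table 2) would then simply be `np.mean` over the fold results.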

Table 2 shows the average of the 5 MSE values achieved during the K-fold CV for each model.

Before the models were deployed on an embedded tiny MCU, their accuracy was evaluated on the two test sets described in Section 5. Table 3 shows the MSE values of the ANN architectures on the test data. The computed MSE was dimensionless because the target variable, SoC, represents the percentage ratio between two quantities with the same unit of measurement: in this case, the measured quantity was charge, expressed in ampere-hours (Ah), and dividing two values with the same unit yields a dimensionless result. Table 4 shows the Mean Absolute Percentage Error (MAPE) values of the ANN architectures on the test data, which confirm that the TCN achieved the lowest error on both test sets.
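For reference, the two metrics can be written in a few lines of NumPy. The SoC values below are hypothetical. Two properties are worth keeping in mind when reading the tables: MSE scales with the square of the SoC units (expressing SoC in percent rather than as a fraction multiplies it by 10^4), and MAPE divides by the true SoC, so it grows as the battery approaches empty and is undefined at exactly 0%.

```python
import numpy as np

def mse(y_true, y_pred):
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.mean((y_true - y_pred) ** 2))

def mape(y_true, y_pred):
    """Mean absolute percentage error in %; undefined if any y_true is 0."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(100.0 * np.mean(np.abs((y_true - y_pred) / y_true)))

soc_true = [80.0, 60.0, 40.0]  # hypothetical SoC values in %
soc_pred = [81.0, 59.0, 42.0]
print(mse(soc_true, soc_pred), mape(soc_true, soc_pred))
```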

As shown in Table 1, the stateful LSTM model had the lowest number of parameters, yet its test and validation MSEs were higher than those of the other networks developed. The TCN-based approach was the third largest in terms of number of parameters, the LMU layer network being the largest. Based on Table 3 and Table 15, the parallel TCN architecture achieved the best results on both test sets, with the best average MSE and MSE standard deviation during the K-fold CV. The stateless LSTM architecture exhibited a good trade-off between MSE and number of parameters.

9. Deployability on Micro-Controllers

Deployability on tiny MCUs and performance were characterised in terms of the memory occupied by the tiny neural networks and the number of multiply-accumulate operations (MACC) required for their execution. The evaluation was run on two MCUs typically employed in automotive and Internet of Things (IoT) solutions. The SR5E1-EVBE3000D [78] MCU is dedicated to automotive applications and deployed in electric vehicles. It is equipped with a dual split-lock ARM Cortex-M7 processor, 488 KiB of internal RAM and 2 MB of internal FLASH memory, with a clock frequency of 300 MHz. The STM32H5731-DK [79] MCU, instead, targets IoT applications; it is equipped with an ARM Cortex-M7 processor at 280 MHz, 1184 KiB of internal RAM, 16 MB of external RAM, 2 MB of internal FLASH and 64 MB of external FLASH, and relies on a 128-Mbit SDRAM. The STM32Cube.AI Developer Cloud tool eased the deployment of the pre-trained networks on these two MCUs by automatically converting pre-trained ANN models into optimized ANSI C code for these low-power edge devices. The results achieved by deploying the fp32 neural architectures on the two MCUs are shown below.

Table 7 and Table 8 report the RAM and FLASH memory occupancy. Table 9 and Table 10 report the memory, in KiB, occupied by the activation tensors and by the weights of the connections between neurons in the layers, respectively. Table 11 and Table 12 report the number of operations executed by the MCUs and the inference time in milliseconds. The LMU layer was not supported by the STM32Cube.AI platform at the time this study was performed; therefore, it was not included in the deployability analysis and is left to future work.
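To show where MACC counts come from, the sketch below applies the standard per-layer formulas, ignoring biases, activation functions and normalisation. The layer sizes are hypothetical and do not correspond to the architectures in Table 1; counts reported by STM32Cube.AI may differ because the tool also accounts for additional operations.

```python
def dense_macc(n_in, n_out):
    """Fully connected layer: one MAC per weight."""
    return n_in * n_out

def conv1d_macc(steps_out, kernel, c_in, c_out):
    """1D convolution: each output position and filter sums kernel * c_in products."""
    return steps_out * kernel * c_in * c_out

def lstm_macc(steps, n_in, units):
    """LSTM: 4 gates per step, each with input (n_in) and recurrent (units) products."""
    return steps * 4 * units * (n_in + units)

# Hypothetical layers applied to the 100 x 10 input window
print("Conv1D(k=3, 16 filters, valid padding):", conv1d_macc(98, 3, 10, 16))
print("LSTM(32 units):", lstm_macc(100, 10, 32))
print("Dense(32 -> 1):", dense_macc(32, 1))
```

The recurrent term explains why LSTM cost scales with the square of the number of units and linearly with the 100-step window, independently of the parameter count alone.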

As per Table 3 and Table 4, the parallel TCNs architecture achieved the best accuracy on both test sets; however, it required the highest MACC count and inference time on both MCUs. The dense architecture, on the other hand, required the most RAM (in KiB) for storing activations on both MCUs. These deployability results for the dense and parallel TCNs architectures were due to their higher number of parameters compared with the other networks. The stateful LSTM, being the model with the lowest number of parameters, was expected to achieve a low MACC count and inference time, and indeed it achieved the lowest FLASH memory footprint on both the SR5E1-EVBE3000D and the STM32H5731-DK.

A few ANNs were subsequently quantized to 8 bits through the TensorFlow Lite post-training quantization (PTQ) procedure and then accelerated using the STM32MP257F-EV1 [80] microprocessor (MPU). This is equipped with dual Arm Cortex-A35 cores running at 1.5 GHz, one Arm Cortex-M33 and a neural co-processing unit (NPU). The inference time was considerably reduced using both one core (Table 13) and two cores (Table 14). At the time of this study, the TensorFlow Lite procedure did not support the optimisation of recurrent ANNs; therefore, architectures with LSTM and GRU layers could not be quantized to 8 bits and were kept as fp32.

12. Battery Pack Architecture Analysis

The battery pack, which provides electrical energy to the vehicle’s inverter to drive the electric motor, usually consists of a number of modules with battery cells connected in series, in parallel, or a combination of both. A crucial function of the BMS is to ensure that the different cells in the battery pack keep the same charge level during charge and discharge cycles. By keeping the cells balanced in terms of stored energy, the BMS extends the life of the battery pack (and its elements), since its longevity is affected by overcharge and by the depth of discharge. Imbalances between the charge levels of different cells can exacerbate these issues. Consequently, this paper investigated the total time required to compute the SoC at a finer granularity, considering all cells composing the battery pack. As suggested by Zhong et al. [81], to estimate the SoC at pack level, the SoC of each individual cell should be computed first, and the cell values then combined to compute the overall value:

A single MCU installed on the battery and connected to the individual cells via a common data transport bus was assumed to be available. With a single MCU, the total time was obtained by linearly accumulating the individual SoC inference times of all cells.

Table 16 shows the number of cells, their configuration within the battery pack and the overall inference time for different car models. The pack configurations follow an “xSyP” structure, where x denotes the number of cells connected in series to form a module and y denotes the number of modules connected in parallel to form the battery pack [82]. For example, the Nissan Leaf had 96 cells connected in series to form a module and 2 modules connected in parallel to form the pack, for a total of 192 cells. All data concerning the different electric car models were collected from the EV Database [83]. All of these vehicles use lithium-ion batteries.
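The “xSyP” notation can be parsed mechanically. A minimal sketch, following the convention defined above (x series cells per module, y modules in parallel); the function name `cells_in_pack` is hypothetical.

```python
import re

def cells_in_pack(config):
    """Parse an 'xSyP' string: x cells in series per module, y modules in parallel."""
    match = re.fullmatch(r"(\d+)S(\d+)P", config.strip().upper())
    if match is None:
        raise ValueError(f"not an xSyP configuration: {config!r}")
    series, parallel = int(match.group(1)), int(match.group(2))
    return series, parallel, series * parallel

print(cells_in_pack("96S2P"))  # Nissan Leaf example from the text: (96, 2, 192)
```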

In the first scenario, the STM32MP257F-EV1 MPU (2 cores) was adopted, on which the Parallel TCNs architecture provided the fastest results (Table 14). The RAM occupied was 4.92 MB (Table 14). The total inference time was computed by multiplying the number of cells in the pack by the 0.88 ms single-cell inference time (Table 17, column STM32MP257F-EV1).

In the second scenario, the SR5E1-EVBE3000D automotive MCU was adopted. The LSTM Stateless network was chosen, since it exhibited the best trade-off among inference time (Table 11), MSE (Table 3), and RAM and Flash usage (Table 7). It occupied 4.91 KiB of RAM and 22 KiB of Flash on the MCU, respectively (Table 7). The total inference time was computed by multiplying the number of cells in the pack by the 20.64 ms single-cell inference time (Table 17, column SR5E1-EVBE3000D).
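Under the single-MCU assumption, the total time is a linear accumulation, T = N_cells × t_cell. A minimal sketch using the two single-cell inference times quoted above and, as an example, the 192-cell Nissan Leaf pack; the totals for the other vehicles would follow from the cell counts in Table 16.

```python
T_CELL_MS = {
    "STM32MP257F-EV1 (8-bit Parallel TCNs, 2 cores)": 0.88,
    "SR5E1-EVBE3000D (fp32 LSTM Stateless)": 20.64,
}

def sequential_pack_time_ms(n_cells, t_cell_ms):
    """Single MCU: the SoC inferences of all cells run one after another."""
    return n_cells * t_cell_ms

for device, t_cell in T_CELL_MS.items():
    print(f"{device}: {sequential_pack_time_ms(192, t_cell):.2f} ms")
```

For the Nissan Leaf this gives about 169 ms on the MPU versus almost 4 s on the automotive MCU, which is why packs with many cells become the limiting case.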

The results (Table 17) showed a significant difference in inference time between the 8-bit Parallel TCNs deployed on the STM32MP2 and the fp32 LSTM Stateless topology deployed on the SR5E1, mainly due to the higher computing capability of the STM32MP257F (2 cores at 1.5 GHz) and to the more efficient 8-bit TCN workload. The inference time was higher for cars with high module parallelism, and hence more cells, such as the Tesla Model S Plaid, Lucid Air Grand Touring and Rimac Nevera (column Number of cells of Table 16).

Therefore, a second approach was considered, which assumed that one MCU was connected to each “xS” structure, so that the y parallel modules (“yP”) could be processed in parallel. Since each MCU performs the SoC computations of the x cells in its own “xS” module, the total inference time was computed by multiplying x by the single-cell inference time.

In Table 18, the column STM32MP257F-EV1 shows the final values computed using the inference time of the Parallel TCNs architecture deployed on 2 cores, 0.88 ms, while the column SR5E1-EVBE3000D reports the final values computed with the inference time of the LSTM Stateless topology, 20.64 ms.

Table 18 shows that the Parallel TCNs network achieved considerably lower inference times than the LSTM Stateless topology.
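With one MCU per “xS” module, the y modules are processed concurrently, so the wall-clock time becomes T = x × t_cell instead of x × y × t_cell. A minimal sketch for the Nissan Leaf (96S2P), assuming ideal parallelism with no bus or synchronisation overhead (an assumption, since the text does not model communication costs):

```python
def parallel_pack_time_ms(series_cells, t_cell_ms):
    """One MCU per 'xS' module: modules run concurrently, cells within a module sequentially."""
    return series_cells * t_cell_ms

x, y = 96, 2  # Nissan Leaf, 96S2P
for t_cell in (0.88, 20.64):
    single = x * y * t_cell
    per_module = parallel_pack_time_ms(x, t_cell)
    print(f"{t_cell} ms/cell: single MCU {single:.2f} ms, one MCU per module {per_module:.2f} ms")
```

Under this ideal assumption, the speed-up over a single MCU equals y, so the benefit is largest for the highly parallel packs highlighted above.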