Mathematical Tools for Simulation of 3D Bioprinting Processes on High-Performance Computing Resources: The State of the Art

Abstract

1. Introduction

- Pre-printing: In the print cartridge, a cell-laden bioink can be considered a composite material. Therefore, even in the absence of other compounds such as rheological enhancers or additional biomaterials, high-cell-density suspensions behave as colloidal systems that exhibit printability. The impact of the embedded cells on the viscoelastic properties of the bioink is further complicated by the possibility that the cells are surrounded by a pericellular matrix, which could alter their mechanical characteristics, the hydrodynamic radius, and boundary conditions at the fluid interface [15]. It is worth noting that cells can interact with each other during the pre-printing phase and throughout the whole process, adding a further level of complexity to the overall system. Furthermore, different cell sources may have to be considered when dealing with interface tissues such as the osteochondral one, which involves bone and cartilage tissues. Nonetheless, a bioink must prevent cell sedimentation to preserve a uniform cell suspension [16]. When cells settle, they are no longer evenly distributed in the ink. This may result in clogged nozzles as well as an uneven cell distribution in the finished printed structure (i.e., more cells in the early printed layers than in the later ones). Creating bigger and more complex scaffolds may exacerbate the concerns related to cell sedimentation because these structures usually demand longer printing times (i.e., printing full-scale tissues or organs can take hours or even days). In conclusion, since cells can flow when a force is applied, a bioink should avoid cell sedimentation while still remaining printable [14]. Additionally, the volume occupied by the cells within a bioink varies according to their size and density. The hydrogel is excluded from the volume occupied by cells, which could affect the physicochemical and viscoelastic properties [15].
For example, cells may actually hinder the cross-linking process by limiting contact between reacting groups or acting as a physical barrier between various ink layers.

- During Printing: Bioinks typically behave as non-Newtonian fluids, meaning that their viscosity changes as a force is applied. Non-Newtonian fluids are primarily categorized into shear thickening (viscosity increases when shear rate increases) and shear thinning (viscosity decreases when shear rate increases) according to this viscous behavior. Generally speaking, printability increases with material viscosity, at least up to the point at which the internal pressures created can harm cells. Furthermore, some studies examine the impact of temperature on the viscosity of bioinks. Nonetheless, bioinks must be printed under physiological conditions, at 37 °C, to preserve the cells [11]. Additionally, as a result of cell migration and proliferation, the distribution of cells during printing may change, which may have an impact on the bioink’s rheological characteristics. In fact, as cells interact with the matrix around them and with one another, traction forces are created that act on the hydrogel macromolecules in a printed structure.

- Post-Printing: A bioink needs to produce a 3D “milieu” that promotes cellular survival and function after printing. Because they offer an aqueous, cell-compatible environment that can mimic many of the mechanical and biochemical characteristics of the original tissue, hydrogels are frequently utilized in 3D cell culture. Their high water content and permeability allow nutrients and waste products to pass easily to and from the encapsulated cells. They can also provide a variety of signals to control cell phenotype, differentiation, growth, and migration.

“What are the relevant mathematical tools for the simulation of the different phases of 3D bioprinting, with a particular focus on the first principles-based (FP) models at the macro- and meso-scales, and how should such tools be modified/integrated to be compliant with the emerging computational resources in the Exascale Era?”

| Sub-search | Query |
|---|---|
| Sub-Search-1 | (bioprinting OR (cell-laden hydrogels) OR bioinks) |
| Sub-Search-2 | (simulation OR modeling OR (computational science)) |
| Sub-Search-3 | (macro or meso scale FP models) |
| Sub-Search-4 | (numerical tools for computational solution of FP-model problems) |
| Sub-Search-5 | (Exascale models and algorithms) |

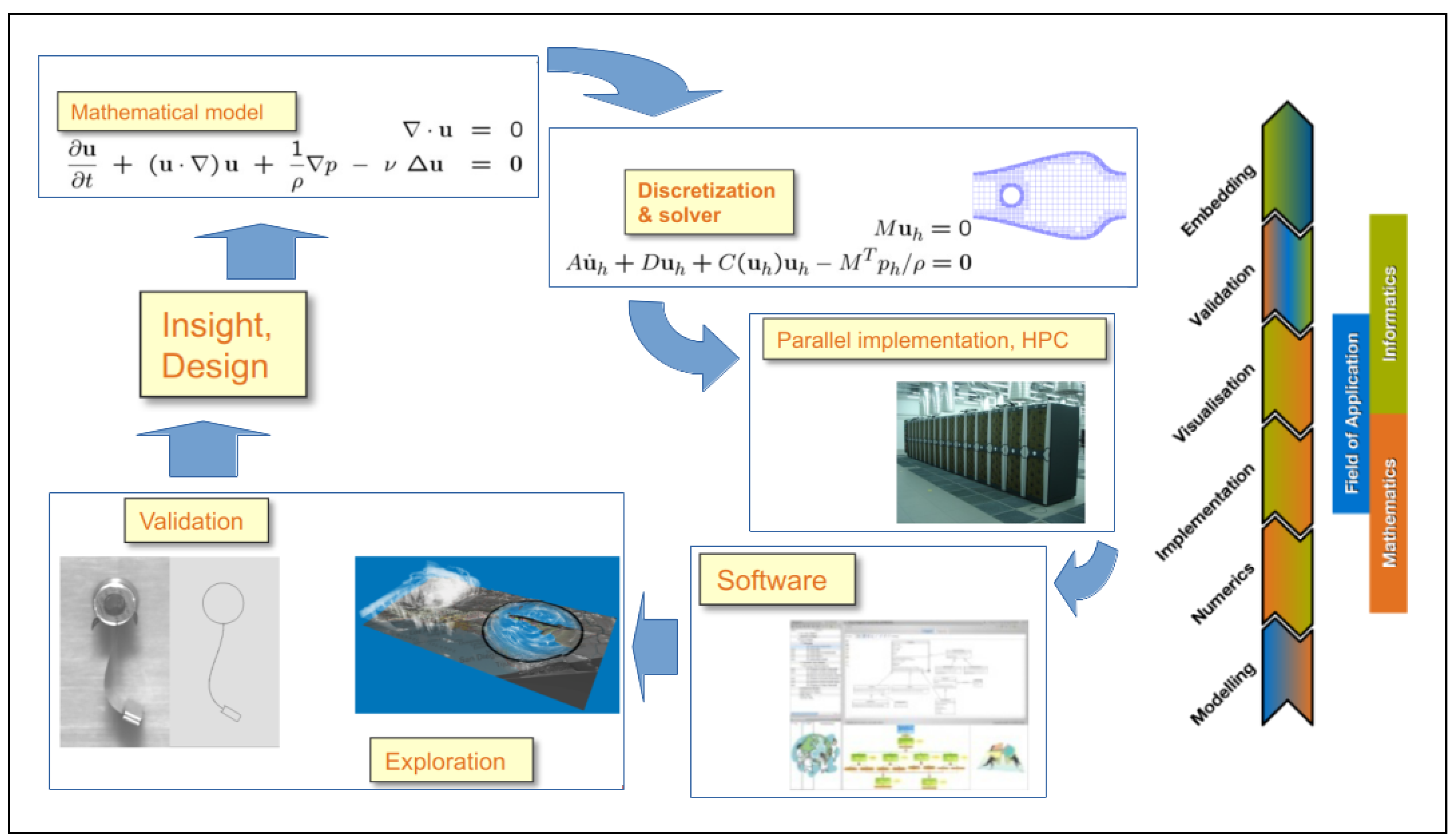

2. The Role of Computational Science (CS)

- Field experts and mathematicians jointly define the descriptive mathematical model of the problem.

- Mathematicians and computer scientists jointly define and implement the descriptive numerical model of the problem. The numerical model is described by an algorithm that can have a high computational cost due to the great number of operations. Therefore, it may be desirable to exploit the parallelism made available by resources for HPC [19,20,21,22,23,24,25].

- Field experts, mathematicians, and computer scientists jointly validate the correctness and accuracy of the numerical model and algorithm by executing its implementing software. Parallel algorithms and software are evaluated on the basis of their performance in terms of the number of performed operations per time unit, strong and weak scalability, and other useful metrics [26].

- If necessary, on the basis of the observations collected in the previous steps, a new formulation of the mathematical model is constructed.

3. An Inventory of the “In Silico” Experiments in 3D-Bioprinting of Cell-Laden Hydrogels

3.1. Pre-Printing

3.2. During Printing

3.3. Post-Printing

4. A “State of the Art” of HPC Systems in the Exascale Era

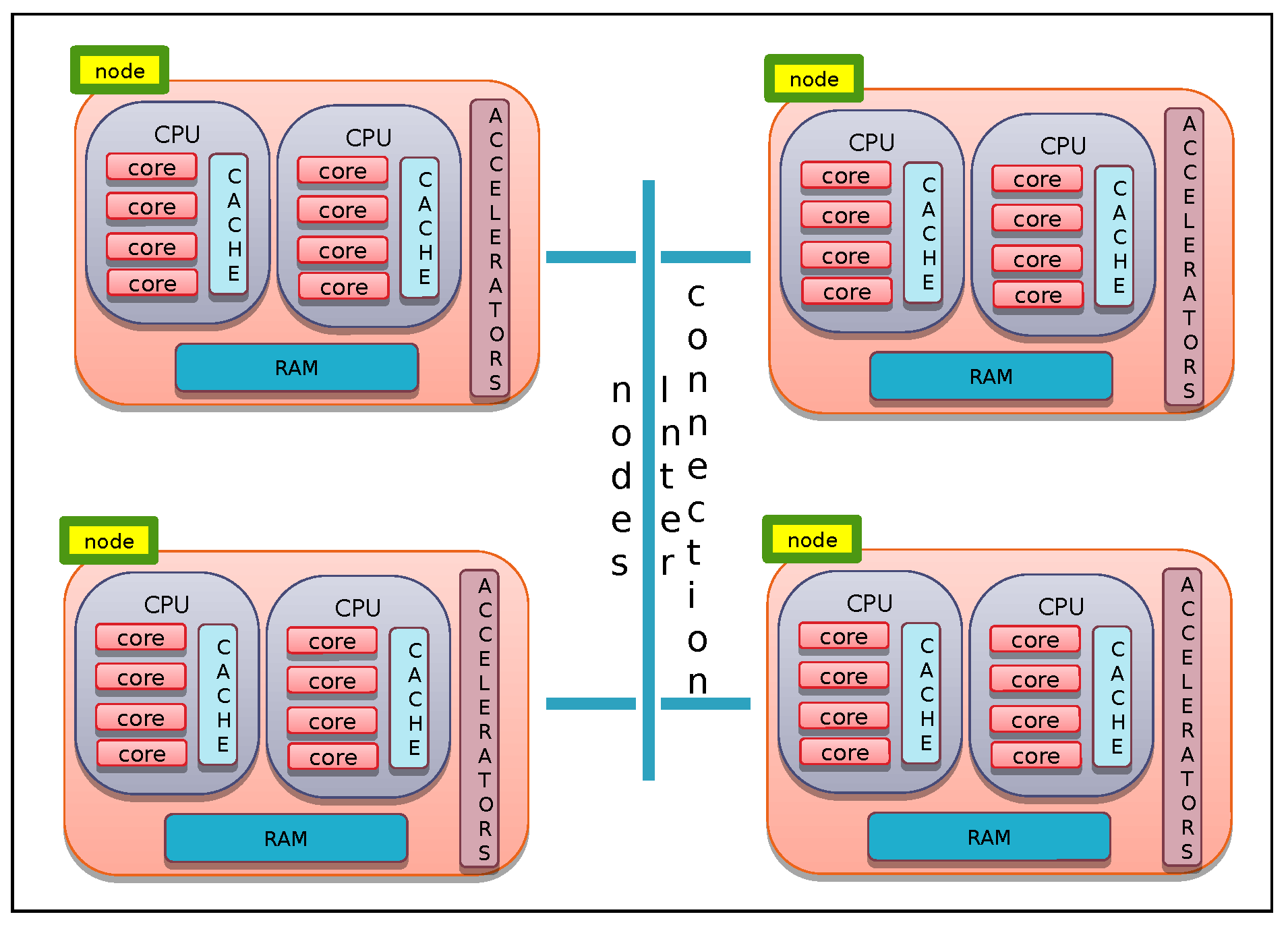

- Application programming support, which, in response to the complexity and scale of advanced computing hardware, makes available programming languages, numerical libraries, and programming models, possibly combined in hybrid and hierarchical approaches, to exploit the multi-node, multi-core, and accelerator-based architecture of up-to-date HPC resources.

- New mathematical models and algorithms that can address the need for increasing data locality and for much higher levels of concurrency, and that can guarantee a high level of “scalability” [81] and “granularity” [82]. It is therefore time to develop new algorithms that are more energy-aware, more resilient, and characterized by reduced synchronization and communication needs.

5. Mathematical Models

5.1. Models at the Macro-Scale

5.1.1. The Navier–Stokes Equations for Viscoelastic Fluids

- Newtonian viscosity model

- Power-Law model

- Cross model

- Modified Cross model

- Carreau model

- Carreau–Yasuda model
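As an illustration of how the viscosity models listed above differ, the following minimal sketch evaluates the apparent viscosity as a function of the shear rate using the textbook forms of the Newtonian, Power-Law, Cross, Carreau, and Carreau–Yasuda laws. The function and parameter names (`eta0`, `eta_inf`, `lam`, `K`, `n`, `m`, `a`) are assumptions introduced here for illustration; the Modified Cross model is omitted because its exact form varies between references.

```python
import math


def newtonian(shear_rate, mu):
    """Newtonian fluid: viscosity does not depend on the shear rate."""
    return mu


def power_law(shear_rate, K, n):
    """Power-Law model: eta = K * shear_rate**(n - 1).

    n < 1 gives shear thinning, n > 1 shear thickening.
    shear_rate must be positive when n < 1.
    """
    return K * shear_rate ** (n - 1.0)


def cross(shear_rate, eta0, eta_inf, lam, m):
    """Cross model: plateau eta0 at low shear, eta_inf at high shear."""
    return eta_inf + (eta0 - eta_inf) / (1.0 + (lam * shear_rate) ** m)


def carreau_yasuda(shear_rate, eta0, eta_inf, lam, n, a):
    """Carreau-Yasuda model; 'a' controls the width of the transition."""
    factor = (1.0 + (lam * shear_rate) ** a) ** ((n - 1.0) / a)
    return eta_inf + (eta0 - eta_inf) * factor


def carreau(shear_rate, eta0, eta_inf, lam, n):
    """Carreau model: the Carreau-Yasuda model with a = 2."""
    return carreau_yasuda(shear_rate, eta0, eta_inf, lam, n, a=2.0)


if __name__ == "__main__":
    # Hypothetical parameters for a shear-thinning ink (illustrative only).
    for rate in (0.1, 1.0, 10.0, 100.0):
        print(rate, carreau(rate, eta0=10.0, eta_inf=0.1, lam=1.0, n=0.5))
```

With `n < 1` all the non-Newtonian laws above are shear thinning, which is the behavior usually sought for extrusion: the viscosity drops inside the nozzle, where the shear rate is high, and recovers after deposition.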

5.1.2. The Two-Phase Flow Problem

- The representation and evolution in time of the interface.

- The way the imposed surface boundary conditions are treated.

- Representation and time evolution of the interface. To represent two-phase flows with moving inter-phase boundaries, various methodologies have been developed in response to the problem’s complexity, both numerically and physically. In principle, the methods can be divided into two classes: the so-called front-tracking (FT) methods and front-capturing (FC) methods. In these methods, two-phase flow is treated as a single flow with the density and viscosity smoothly varying across the moving interface, which is captured in a Eulerian framework (FC) or in a Lagrangian framework (FT), with the terms Eulerian and Lagrangian framework meaning the following [100]:

- Lagrangian framework: In classical field theories, the Lagrangian specification of the flow field is a way of looking at fluid motion where the observer follows an individual fluid parcel as it moves through space and time.

- Eulerian framework: The Eulerian specification of the flow field is a way of looking at fluid motion that focuses on specific locations in the space through which the fluid flows as time passes.

The front-tracking method is based on a formulation in which a separate unstructured grid (surface grid), with nodes advected at the local velocity, is used to represent the interface. One benefit of the technique is that interfacial conditions can be easily included because the interface is precisely established by the surface grid’s position. Moreover, a good approximation of the curvature allows for the consideration of surface tension force. The method’s drawback is that it requires the use of extra algorithms to compute flows with substantial interface deformations, such as bubble break-up and coalescence, since the surface grid will be severely distorted.

In contrast, the front-capturing approach uses a scalar function on a stationary grid to capture the interface as part of its implementation of the interface evolution. The ability to compute phenomena like bubble break-up and coalescence is made possible by the implicit interface capture provided by front-capturing techniques. The two main difficulties in using this approach are keeping a clear boundary between the different fluids and accurately calculating the surface tension forces [98].

The Level Set approach was developed as a result of the front-capturing approach [59,60]. A different method, known as the Immersed Boundary (IB) method [58,101,102,103,104], was developed for flows across challenging geometries. It can be thought of as a combination of front-capturing and front-tracking procedures.

- Level Set Method: The idea on which the level set (LS) method is based is quite simple. Given an interface $\Gamma$ in $\mathbb{R}^n$ of codimension one, bounding an open region $\Omega$, the LS method intends to analyze and compute its subsequent motion under a velocity field $\mathbf{u}$. This velocity depends on the position, time, the geometry of the interface (e.g., its normal or its mean curvature) and the external physics. The idea is to define a smooth function $\phi(\mathbf{x}, t)$ representing the interface as the set where $\phi(\mathbf{x}, t) = 0$. The level set function has the following properties:

$$\phi(\mathbf{x}, t) > 0 \ \text{for } \mathbf{x} \in \Omega, \qquad \phi(\mathbf{x}, t) < 0 \ \text{for } \mathbf{x} \notin \bar{\Omega}, \qquad \phi(\mathbf{x}, t) = 0 \ \text{for } \mathbf{x} \in \partial\Omega = \Gamma(t).$$

Thus, the interface is to be captured for all times t by defining the set of points for which the values (levels) obey the following equation:

$$\frac{\partial \phi}{\partial t} + \mathbf{u} \cdot \nabla \phi = 0.$$

Coupling the level set method with problems related to two-phase Navier–Stokes incompressible flow needs the reformulation of Problem 1 as follows.

Problem 2. Equations of two-phase Navier–Stokes incompressible flow defined in $\Omega$ using the level set method:

$$\rho(\phi)\left(\frac{\partial \mathbf{u}}{\partial t} + (\mathbf{u} \cdot \nabla)\mathbf{u}\right) = -\nabla p + \nabla \cdot \boldsymbol{\tau}(\phi) + \rho(\phi)\,\mathbf{g} + \sigma \kappa\, \delta(\phi)\, \mathbf{n}, \qquad \nabla \cdot \mathbf{u} = 0, \qquad \frac{\partial \phi}{\partial t} + \mathbf{u} \cdot \nabla \phi = 0,$$

where $\mathbf{u}$ is the velocity field, p is the pressure field, $\mathbf{g}$ is the gravitational acceleration, $\rho(\phi)$ and $\boldsymbol{\tau}(\phi)$ are the piecewise constant fluid densities and stress tensors described by the Heaviside function, σ is the surface tension coefficient, κ is the curvature of the interface, $\mathbf{n}$ is the unit normal vector outward to $\Gamma$, and $\delta$ is a delta function.

The level set function $\phi$ can take different forms [105,106,107], which helps to avoid some disadvantages of the LS method related to the discrete solution of the transport equation, which may be affected by numerical errors leading to loss or gain of mass. In standard level set methods, the level set function is defined to be a signed distance function

$$\phi(\mathbf{x}) = \pm\, d(\mathbf{x}),$$

where $d(\mathbf{x}) = \min_{\mathbf{x}_\Gamma \in \Gamma} \lVert \mathbf{x} - \mathbf{x}_\Gamma \rVert$. To improve numerical robustness, “smoothed out” Heaviside functions (needed to represent density and viscosity discontinuities over the interface) are often used (see, e.g., [106]).
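To make the role of the smoothed Heaviside function concrete, the sketch below implements one widely used sine-based regularization together with the matching smoothed delta function and a blended material property. This particular form, the half-width `eps`, the helper names, and the sign convention (level set function positive inside the region) are assumptions made here for illustration and are not necessarily the choices made in [106].

```python
import math


def smoothed_heaviside(phi, eps):
    """Sine-based smoothed Heaviside; transition band is |phi| <= eps."""
    if phi < -eps:
        return 0.0
    if phi > eps:
        return 1.0
    return 0.5 * (1.0 + phi / eps + math.sin(math.pi * phi / eps) / math.pi)


def smoothed_delta(phi, eps):
    """Derivative of smoothed_heaviside with respect to phi."""
    if abs(phi) > eps:
        return 0.0
    return 0.5 / eps * (1.0 + math.cos(math.pi * phi / eps))


def signed_distance_circle(x, y, radius):
    """Signed distance to a circle centered at the origin.

    Assumed convention: positive inside the circle, negative outside.
    """
    return radius - math.hypot(x, y)


def mixed_property(phi, value_neg, value_pos, eps):
    """Blend a material property (density, viscosity) across the interface."""
    h = smoothed_heaviside(phi, eps)
    return value_neg + (value_pos - value_neg) * h


if __name__ == "__main__":
    # Hypothetical densities: 1000 inside a droplet of radius 0.5, 1.2 outside.
    for r in (0.40, 0.49, 0.50, 0.51, 0.60):
        phi = signed_distance_circle(r, 0.0, 0.5)
        print(r, mixed_property(phi, 1.2, 1000.0, eps=0.02))
```

The half-width `eps` is usually tied to the grid spacing (a few cells), so that the density and viscosity jumps are resolved by the mesh without smearing the interface excessively.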

- Immersed Boundary Method: The Immersed Boundary Method (IBM) can be useful to describe fluid–structure interaction when the structure is intended to be an Immersed Body. In the IBM, the fluid is represented in a Eulerian coordinate system, and the configuration of the Immersed Body is described by a Lagrangian approach [101].

Consider the simulation of incompressible flow around the body in Figure 4a, which is described by Problem 1 where $\Omega = \Omega_f$. Here, $\Omega_f$ denotes the fluid domain surrounding the solid body, which occupies the domain $\Omega_b$ with the boundary denoted by $\Gamma_b$.

In an IB method, the boundary condition would be imposed indirectly through a modification of Problem 1. In general, the modification takes the form of a source term (or forcing function) in the governing equations, which reproduces the effect of the boundary [102].

The original IBM, created by Peskin [58], is typically appropriate for flows with immersed elastic boundaries. It was designed for the combined modeling of blood flow and muscle contraction in a beating heart. A collection of massless points moves with the local fluid velocity $\mathbf{u}$, and these points serve as a Lagrangian tracker of the location of the Immersed Body, represented by a set of elastic fibers.

Thus, the coordinate X of the Lagrangian point is governed by the equation

$$\frac{\partial \mathbf{X}}{\partial t} = \mathbf{u}(\mathbf{X}, t). \tag{34}$$

The effect of the Immersed Body on the surrounding fluid is essentially captured by transmitting the fiber’s stress to the fluid through a localized forcing term in the momentum equations, which is given by

$$\mathbf{f}(\mathbf{x}, t) = \int \mathbf{F}(s, t)\, \delta(\mathbf{x} - \mathbf{X}(s, t))\, ds, \tag{35}$$

where $\delta$ is the Dirac delta function. The Dirac delta function in $\mathbb{R}^n$ is formally defined by the following:

$$\delta(\mathbf{x}) = 0 \ \text{for } \mathbf{x} \neq \mathbf{0},$$

and is also constrained to satisfy the identity

$$\int_{\mathbb{R}^n} \delta(\mathbf{x})\, d\mathbf{x} = 1.$$

The function $\mathbf{F}$ can be written as

$$\mathbf{F} = -\frac{\wp E}{\wp \mathbf{X}},$$

where $E[\mathbf{X}]$ is a given functional called “the elastic potential energy of the material” in configuration X and where the notation $\wp E / \wp \mathbf{X}$ is shorthand for the “Fréchet derivative” of E with respect to X.

Thanks to the use of the Dirac delta function, Equation (34) can be rewritten as

$$\frac{\partial \mathbf{X}}{\partial t} = \int \mathbf{u}(\mathbf{x}, t)\, \delta(\mathbf{x} - \mathbf{X})\, d\mathbf{x}. \tag{37}$$

The forcing term is thought to be distributed over a band of cells surrounding each Lagrangian point (see Figure 4b), as the fiber locations typically do not coincide with the nodal points of the Eulerian grid. This distributed force is then imposed on the momentum equations of the surrounding nodes. As a result, a smoother distribution function, represented by d in this case, effectively replaces the delta function and may be applied to a discrete mesh. The d function allows for the rewriting of Equations (35) and (37) as follows:

$$\mathbf{f}(\mathbf{x}, t) = \int \mathbf{F}(s, t)\, d(\mathbf{x} - \mathbf{X}(s, t))\, ds, \qquad \frac{\partial \mathbf{X}}{\partial t} = \int \mathbf{u}(\mathbf{x}, t)\, d(\mathbf{x} - \mathbf{X})\, d\mathbf{x}.$$

The choice of the distribution function d is a key ingredient in this method. Several different distribution functions have been employed in the past (e.g., see [102] for a list of employed d).
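The spreading and interpolation steps described above can be sketched in one dimension as follows. The cosine kernel used here is one commonly used choice for the distribution function d; it is assumed for illustration, as are the uniform grid `x_j = j * h` and the function names.

```python
import math


def delta_h(r, h):
    """Cosine-type discrete delta with support |r| < 2h (an assumed choice of d)."""
    if abs(r) >= 2.0 * h:
        return 0.0
    return (1.0 + math.cos(math.pi * r / (2.0 * h))) / (4.0 * h)


def spread_force(X, F, n_nodes, h):
    """Spread a Lagrangian point force F located at X onto Eulerian nodes.

    Returns the force density at the nodes x_j = j * h.
    """
    return [F * delta_h(j * h - X, h) for j in range(n_nodes)]


def interpolate_velocity(X, u, h):
    """Interpolate the Eulerian velocity samples u[j] to the Lagrangian point X."""
    return sum(u[j] * delta_h(j * h - X, h) * h for j in range(len(u)))


if __name__ == "__main__":
    h = 0.1
    density = spread_force(X=0.537, F=2.0, n_nodes=20, h=h)
    # The total force is preserved by the spreading (sum of density * h).
    print(sum(density) * h)
    # A uniform velocity field is interpolated exactly.
    print(interpolate_velocity(0.537, [3.0] * 20, h))
```

Using the same kernel for spreading and interpolation keeps the two operations adjoint to each other, which is a standard design choice in immersed boundary codes; the kernel support (four nodes here) sets how far the point force is smeared.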

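Coupling the IB equations with problems related to the Navier–Stokes incompressible flow requires the reformulation of Problem 1 as follows:

Problem 3. Equations of the Navier–Stokes incompressible flow defined in $\Omega_f$ surrounding an Immersed Body $\Omega_b$:

$$\rho\left(\frac{\partial \mathbf{u}}{\partial t} + (\mathbf{u} \cdot \nabla)\mathbf{u}\right) = -\nabla p + \nabla \cdot \boldsymbol{\tau} + \rho\,\mathbf{g} + \mathbf{f}, \qquad \nabla \cdot \mathbf{u} = 0,$$

$$\mathbf{f}(\mathbf{x}, t) = \int \mathbf{F}(s, t)\, \delta(\mathbf{x} - \mathbf{X}(s, t))\, ds, \qquad \frac{\partial \mathbf{X}}{\partial t} = \int \mathbf{u}(\mathbf{x}, t)\, \delta(\mathbf{x} - \mathbf{X})\, d\mathbf{x}, \qquad \mathbf{F} = -\frac{\wp E}{\wp \mathbf{X}},$$

where ρ is the density of the fluid, $\mathbf{u}$ is the velocity field, p is the pressure field, $\mathbf{g}$ is the gravitational acceleration, $\boldsymbol{\tau}$ is the stress response to the deformation of the fluid, X is the Lagrangian coordinate of the generic point of the body, and E is the elastic potential energy of the body material.

- Surface boundary conditions treatment: Since the second derivative of a discontinuous function determines the curvature $\kappa$, using front-capturing methods to compute the surface tension force requires particular considerations. Various methods were devised to precisely calculate the surface tension force. The most well-known method is presumably the continuum surface force (CSF) model [61]. The model considers surface tension force as a continuous, 3D effect across an interface, rather than as a boundary value condition on the interface. The continuum method eliminates the need for interface reconstruction. A model of a diffuse interface is examined, in which the surface tension force is converted into a volume force that is distributed across several cell layers.

We note (see Equation (20)) that the surface tension per interfacial area at a point $\mathbf{x}_s$ on the interface $\Gamma$ is given by

$$\mathbf{F}_{sa}(\mathbf{x}_s) = \sigma\, \kappa(\mathbf{x}_s)\, \mathbf{n}(\mathbf{x}_s),$$

where $\sigma$ is the surface tension coefficient, $\kappa$ is the curvature of the interface, and $\mathbf{n}$ is the unit normal vector outward to $\Gamma$.

Suppose that the interface where the fluid changes from fluid 1 to fluid 2 discontinuously is replaced by a continuous transition. Applying a pressure jump at an interface brought on by surface tension is no longer appropriate. Instead, as shown in Figure 5, surface tension should be considered active everywhere in the transition area.

As in [61], we choose a volume force at any point x of the transition region as

$$\mathbf{F}_{sv}(\mathbf{x}) = \sigma\, \kappa(\mathbf{x})\, \nabla \tilde{c}(\mathbf{x}),$$

where $\tilde{c}$ is a smooth “color function” that approaches the “characteristic function”, $c$, as the width h of the region approaches zero,

$$\lim_{h \to 0} \tilde{c}(\mathbf{x}) = c(\mathbf{x}),$$

and where the “characteristic function” can be defined as

$$c(\mathbf{x}) = \begin{cases} 1 & \mathbf{x} \in \Omega_1, \\ 0 & \mathbf{x} \in \Omega_2, \end{cases}$$

where $\Omega_1$ and $\Omega_2$ represent the domains occupied by fluids 1 and 2, respectively. We note that for the curvature $\kappa$ of the interface and for the unit normal vector $\mathbf{n}$ outward to $\Gamma$, the following equations are valid:

$$\mathbf{n} = \frac{\nabla \tilde{c}}{\lvert \nabla \tilde{c} \rvert}, \qquad \kappa = -\nabla \cdot \mathbf{n}.$$

The function $\tilde{c}$ can be defined as a convolution of the function $c$ with an interpolation function $\mathcal{S}$ according to Brackbill et al. [61]:

$$\tilde{c}(\mathbf{x}) = \frac{1}{h^3} \int c(\mathbf{x}')\, \mathcal{S}(\mathbf{x}' - \mathbf{x})\, d^3x',$$

where $\mathcal{S}$ has to satisfy the following conditions:

- (a) $\int \mathcal{S}(\mathbf{x})\, d^3x = h^3$,

- (b) $\mathcal{S}(\mathbf{x}) = 0$ for $\lvert \mathbf{x} \rvert \geq h/2$,

- (c) $\mathcal{S}$ is differentiable and decreases monotonically with increasing $\lvert \mathbf{x} \rvert$.

Volume force $\mathbf{F}_{sv}$ will result in the same total force as $\mathbf{F}_{sa}$, but spread over the finite interface width, i.e.,

$$\lim_{h \to 0} \int_{\Delta V} \mathbf{F}_{sv}(\mathbf{x})\, d^3x = \int_{\Delta A} \mathbf{F}_{sa}(\mathbf{x}_s)\, dA.$$

Coupling the continuum surface force (CSF) model with problems related to two-phase Navier–Stokes incompressible flow requires the reformulation of Problem 1 as follows:

Problem 4. Equations of two-phase Navier–Stokes incompressible flow defined in $\Omega$ using the CSF model:

$$\rho\left(\frac{\partial \mathbf{u}}{\partial t} + (\mathbf{u} \cdot \nabla)\mathbf{u}\right) = -\nabla p + \nabla \cdot \boldsymbol{\tau} + \rho\,\mathbf{g} + \mathbf{F}_{sv}, \qquad \nabla \cdot \mathbf{u} = 0,$$

where $\mathbf{u}$ is the velocity field, p is the pressure field, $\mathbf{g}$ is the gravitational acceleration, and $\rho$ and $\boldsymbol{\tau}$ are the piecewise constant fluid densities and stress tensors described by the Heaviside function.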
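The statement that the volume force carries the same total force as the surface force, only spread over a finite width, can be checked numerically along a line normal to the interface. The sketch below assumes a tanh-shaped smoothed color function with unit jump and a locally constant curvature; the profile, the names, and the numerical values are illustrative assumptions, not the convolution kernel of [61].

```python
import math


def color_gradient(x, width):
    """Gradient of the assumed smoothed color function 0.5 * (1 + tanh(x / width))."""
    t = math.tanh(x / width)
    return 0.5 / width * (1.0 - t * t)


def csf_volume_force(x, sigma, kappa, width):
    """Normal component of the CSF volume force, sigma * kappa * d(c~)/dx."""
    return sigma * kappa * color_gradient(x, width)


def integrated_force(sigma, kappa, width, n=20000):
    """Midpoint-rule integral of the volume force across the transition region."""
    half_span = 10.0 * width
    step = 2.0 * half_span / n
    total = 0.0
    for i in range(n):
        x = -half_span + (i + 0.5) * step
        total += csf_volume_force(x, sigma, kappa, width)
    return total * step


if __name__ == "__main__":
    sigma, kappa = 0.07, 100.0  # hypothetical values
    for width in (0.1, 0.01, 0.001):
        # Each result is close to sigma * kappa, independently of the width.
        print(width, integrated_force(sigma, kappa, width))
```

The integral stays at `sigma * kappa` while the peak of the volume force grows as the width shrinks, which is the one-dimensional picture of the limit stated above.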

5.1.3. Transport and Response of Biological and Chemical Species

5.2. Models at the Meso Scale

5.2.1. Monte Carlo-Based Methods

...a general method, suitable for fast electronic computing machines, of calculating the properties of any substance which may be considered as composed of interacting individual molecules. [111]

- .

- The $(i,j)$-entry of the K-th power of $P$ gives the probability of transitioning from state i to state j in K steps.

- Irreducible if, for all states i and j, there exists K such that $(P^K)_{ij} > 0$.

- A-periodic if, for all states i and j, the Greatest Common Divisor of the set $\{K : (P^K)_{ij} > 0\}$ is equal to 1.

- If $\pi$ is the stable distribution for an irreducible, a-periodic Markov Chain, then the Markov Chain can be used to sample from $\pi$.

- Samples from $\pi$ can be used to approximate the properties of $\pi$. For example, suppose f is any real-valued function on the state space S, and suppose that $x_1, \dots, x_N$ is a sample from $\pi$; then, the ergodic theorem [114] ensures that

  $$\frac{1}{N} \sum_{n=1}^{N} f(x_n) \longrightarrow E_\pi(f) \quad \text{as } N \to \infty,$$

  where $E_\pi(f)$ is the expected value $\sum_{s \in S} f(s)\, \pi(s)$.
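The properties listed above can be illustrated on a small finite state space. The sketch below builds a Metropolis-type transition matrix for a prescribed target distribution, checks that the rows of its K-th power approach that distribution, and estimates an expected value with an ergodic average. The ring-shaped proposal, the target weights, and all function names are assumptions chosen for illustration.

```python
import random


def metropolis_matrix(weights):
    """Transition matrix of a Metropolis chain on a ring of states.

    Proposal: move to the left or right neighbor with probability 1/2 each.
    Acceptance: min(1, weights[j] / weights[i]).
    """
    n = len(weights)
    P = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in ((i - 1) % n, (i + 1) % n):
            P[i][j] += 0.5 * min(1.0, weights[j] / weights[i])
        P[i][i] = 1.0 - sum(P[i][k] for k in range(n) if k != i)
    return P


def mat_mul(A, B):
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def mat_power(P, K):
    """K-th power of P; entry (i, j) is the K-step transition probability."""
    n = len(P)
    result = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for _ in range(K):
        result = mat_mul(result, P)
    return result


def next_state(P, i, rng):
    """Draw the next state from row i of P."""
    r = rng.random()
    acc = 0.0
    for j, p in enumerate(P[i]):
        acc += p
        if r < acc:
            return j
    return len(P[i]) - 1


def ergodic_average(f, P, start, steps, rng):
    """Average of f along one trajectory of the chain."""
    state, total = start, 0.0
    for _ in range(steps):
        state = next_state(P, state, rng)
        total += f(state)
    return total / steps


if __name__ == "__main__":
    weights = [1.0, 2.0, 3.0, 4.0]       # target proportional to these weights
    P = metropolis_matrix(weights)
    print(mat_power(P, 200)[0])           # close to [0.1, 0.2, 0.3, 0.4]
    rng = random.Random(0)
    print(ergodic_average(lambda s: float(s), P, 0, 100000, rng))  # close to 2.0
```

The rejection step introduces self-loops, which is what makes this chain a-periodic even though the ring itself has an even number of states.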

5.2.2. Cellular Particle Dynamics

5.2.3. Cellular Automata Model

CA are simple mathematical idealizations of natural systems. They consist of a lattice of discrete identical sites, each site taking on a finite set of ... values. The values of the sites evolve in discrete time steps according to ... rules that specify the value of each site in terms of the values of neighboring sites. CA may thus be considered as discrete idealizations of the partial differential equations often used to describe natural systems [123].

- A grid of individual cells.

- A group of ingredients.

- A collection of local rules dictating how the constituents behave.

- Identified starting conditions.

- The grid, the cells, and the ingredients: A cellular automaton consists of a regular grid of cells. The grid can be in any finite number of dimensions N. Every grid cell can typically exist in a limited number of “states”, which specify the cell’s occupancy. The cell may be empty or hold a specified ingredient, which, if it is present, may be a type of particle, a specific molecule, or some other relevant thing for the topic under consideration. An illustration of a bi-dimensional CA grid, whereby some cells are occupied by ingredient A (red cells) and other cells by ingredient B (blue cells), can be found in Figure 7a.

  Movements and other actions on the grid are controlled by rules that are only dependent on the characteristics of the cells that are closest to the ingredient. The neighborhood of a cell is its immediate surroundings. The “Von Neumann neighborhood” is the most often employed neighborhood in two-dimensional CA investigations (see Figure 7d, where the blue cell neighborhood is pictured by the four red cells). Another common neighborhood is the “Moore neighborhood”, pictured by the red cells surrounding the blue cell in Figure 7c. Another useful neighborhood is the “extended Von Neumann neighborhood”, shown in Figure 7d by the red and green cells surrounding the blue cell.

  Every grid cell’s value will be impacted by how its neighborhood is managed, including cells that are on the edge of the CA grid. Keeping the values in those cells constant is one approach that might be taken. Using distinct neighborhood definitions for these cells is an additional strategy. They might have fewer neighbors, but it is also possible to define more elaborate strategies for the cells that are closest to the edges.

  These cells are typically handled in a toroidal manner; for instance, in a bi-dimensional grid, one cell “goes off the top” and “enters” at the corresponding position on the bottom, and one cell “enters on” the right when it “goes off” the left (this is sometimes referred to as periodic boundary conditions in the field of partial differential equations). To illustrate this, consider taping the rectangle’s left and right sides to create a tube, and then the top and bottom edges to create a torus (see Figure 7b).

- The rules: The behaviors of the ingredients on the grid, and consequently the evolutions of the CA systems, are governed by a variety of rules. According to what is described in [69], a list of potential rule types is provided below.

- Movement rules: Movement rules define the condition that, during an iteration, the ingredients move to neighborhood cells. These rules take several forms [120]:

- The “breaking” probability defines the condition that two adjacent ingredients A and B may remain linked to each other.

- The “joining” parameter defines the condition that, if two ingredients A and B are separated by an empty cell, ingredient A moves toward or away from ingredient B.

- The “free-moving” probability of an ingredient A defines the ingredient’s propensity to move on the grid more rapidly or slowly.

- Transition rules: Transition rules define the condition that, during an iteration, an ingredient will transform to some other species. These rules take several forms [120]:

- The “simple first-order transition” probability defines the condition that an ingredient of species A will change to species B.

- The “reaction” probability defines the condition that ingredients A and B will transform into ingredients C and D, respectively, in case they “encounter” each other during their movements in the grid.

The critical characteristic of all these rules is their local nature: they focus solely on the ingredient in question and potentially on any nearby ingredients. All the probabilities listed above are enforced using a random-number generator in the CA algorithm. For example, suppose we use a random-number generator which generates numbers in the interval $[0, 1]$. Suppose that one of the above rule probabilities is set to $p$ and that the random-number generator generates a number r; the ingredient A can make the “move” prescribed by the rule if $r \le p$ and “cannot move” otherwise.

- The initial conditions: The remaining conditions of the simulation must be determined after the grid type, size, and governing rules have been established, the latter by giving particular values to the previously mentioned parameters. These consist of (1) the types and quantities of the beginning ingredients; (2) the setup of the system’s initial state; (3) the number of simulation runs that are to be performed; and (4) the duration of the runs or the number of iterations they should contain.

  Regarding points (3) and (4) above, it needs to be emphasized that when the CA rules are stochastic, i.e., probabilistic, each simulation run is, in effect, an independent “experiment”. This implies that the outcomes of different runs could theoretically be different. A single ingredient’s activity is typically totally unpredictable. In most cases, however, the collective outcome, whether from one run with many ingredients or from many runs with few ingredients, tends to show a similar pattern. As a result, two further simulation-related details must be determined: the number of independent runs that must be completed and their duration (in iterations). These numbers will be heavily influenced by the type of simulation that will be run. In certain situations, it will be preferable to let the runs continue for a sufficient amount of time in order to reach an equilibrium or steady-state condition [120].
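The ingredients of a CA described above (grid, neighborhood, toroidal edges, and a probabilistic movement rule enforced with a random-number generator) can be put together in a minimal sketch. It models a single ingredient type with only a “free-moving” probability; the sequential update order, the names, and the grid setup are assumptions made for illustration.

```python
import random

EMPTY, A = 0, 1  # cell states: empty, or occupied by ingredient A


def von_neumann_neighbors(row, col, n_rows, n_cols):
    """Four nearest neighbors with toroidal (periodic) wrap-around."""
    return [((row - 1) % n_rows, col), ((row + 1) % n_rows, col),
            (row, (col - 1) % n_cols), (row, (col + 1) % n_cols)]


def step(grid, p_move, rng):
    """One CA iteration: each ingredient may move to a random empty neighbor.

    An ingredient attempts a move only if the random number r satisfies
    r < p_move (the "free-moving" probability). Ingredients are visited
    in random order and updated one at a time (an assumed update scheme).
    """
    n_rows, n_cols = len(grid), len(grid[0])
    occupied = [(r, c) for r in range(n_rows) for c in range(n_cols)
                if grid[r][c] == A]
    rng.shuffle(occupied)
    for r, c in occupied:
        if rng.random() >= p_move:
            continue  # the rule does not fire for this ingredient
        empties = [(rr, cc)
                   for rr, cc in von_neumann_neighbors(r, c, n_rows, n_cols)
                   if grid[rr][cc] == EMPTY]
        if empties:
            rr, cc = rng.choice(empties)
            grid[rr][cc] = A
            grid[r][c] = EMPTY


if __name__ == "__main__":
    rng = random.Random(42)
    grid = [[A if (r + c) % 3 == 0 else EMPTY for c in range(6)]
            for r in range(6)]
    for _ in range(10):
        step(grid, p_move=0.5, rng=rng)
    for row in grid:
        print("".join("A" if cell == A else "." for cell in row))
```

Because each run depends on the random-number stream, repeating the simulation with different seeds gives the independent “experiments” mentioned above; only the collective statistics are expected to be reproducible.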

6. Numerical Methods and Algorithms

6.1. Models at the Macro-Scale

6.1.1. Discretization in the Space-Time Domain

- Finite Elements: A strong computational method for solving differential and integral equations that come up in many applied scientific and engineering domains is the Finite Element Method (FEM) [125].

  The fundamental idea behind the FEM is to consider a given domain as a collection of basic geometric shapes called finite elements, for which the approximation functions required to solve a differential equation can be generated systematically. Indeed, the solution u of a differential equation can be approximated, on each element e, by a linear combination of unknown parameters $c_j^e$ and appropriately selected functions $\psi_j^e$:

  $$u \approx u_h^e = \sum_{j=1}^{l} c_j^e\, \psi_j^e. \tag{85}$$

  For a given differential equation, it is possible to develop different finite element models, depending on the choice of a particular type of approximation method (e.g., Galerkin, weak-form Galerkin, least-squares, subdomain, collocation, and so on). The finite element model is a set of algebraic relations among the unknown parameters of the approximation: so, solving the problem to find an approximation of u requires the solution of a system of algebraic equations with the unknown parameters $c_j^e$.

  The major steps in the finite element formulation and analysis of a typical problem are as follows.

- Discretization of the domain into a set of selected finite elements. This is accomplished by subdividing the given domain $\Omega$ into a set of subdomains $\Omega_e$, where $e = 1, \dots, N_e$ and $\Omega_e \cap \Omega_{e'} = \emptyset$ for $e \neq e'$, called finite elements (see Figure 8a). The phrase “finite element” often refers to both the geometry of the element and the degree (or order) of approximation: the shape of the element can be either triangular or quadrilateral, and the degree of interpolation over it can take on various forms, including linear and quadratic. The finite element mesh of the domain is the non-overlapping sum (or assembly) of all elements used to represent the actual domain, and it is denoted by $\Omega_h$. In general, $\Omega_h$ may not equal the actual domain $\Omega$ because of its potentially complex geometry. Nodal points, also known as nodes, are typically taken at appropriate positions in the structure, usually chosen to simplify the element. They are used to characterize the individual elements and subsequently the complete mesh structure (see Figure 8b).

- Construction of a statement, often a weighted-integral in a weak-form statement according to Weighted Residual Methods (WRMs) [130], which is equivalent (in some sense) to the differential equation to be analyzed over a typical element. A general class of techniques, called the Weighted Residual Method, was created to obtain an approximate solution to the problem of the form

  $$\mathcal{L}(u) = f \quad \text{in } \Omega, \tag{86}$$

  where $\mathcal{L}$ is a general linear differential operator. If $u_h$ is just an approximation of the true solution function u of (86), then an error or residual $R$ will exist such that

  $$R = \mathcal{L}(u_h) - f \neq 0. \tag{87}$$

  The idea at the basis of WRM is to force the residual to zero in some average sense over the domain $\Omega$, namely,

  $$\int_{\Omega} w_i\, R\, d\Omega = 0, \qquad i = 1, \dots, l, \tag{88}$$

  where the $w_i$ values are the so-called weight functions. Depending upon the nature of the weight function, different types of Weighted Residual Methods can be used. The Point Collocation Method, Subdomain Collocation Method, Least Square Method, and Galerkin Method are a few of the common ones. The trial functions themselves are selected as the weight functions in the Galerkin variant of the Weighted Residual Method. So, in the Galerkin method, we set $w_i = \psi_i^e$, and the weak-form Galerkin model, for each element e, is the following set of algebraic relations obtained from (88) and (85):

  $$\sum_{j=1}^{l} c_j^e \int_{\Omega_e} \psi_i^e\, \mathcal{L}(\psi_j^e)\, d\Omega = \int_{\Omega_e} \psi_i^e\, f\, d\Omega, \qquad i = 1, \dots, l, \tag{89}$$

  or, in matrix form,

  $$K^e\, \mathbf{c}^e = \mathbf{f}^e, \tag{90}$$

  where

  $$K^e_{ij} = \int_{\Omega_e} \psi_i^e\, \mathcal{L}(\psi_j^e)\, d\Omega, \qquad \mathbf{c}^e = (c_1^e, \dots, c_l^e)^T, \qquad f^e_i = \int_{\Omega_e} \psi_i^e\, f\, d\Omega$$

  are, respectively, an $l \times l$ matrix and two vectors of length l. We assume that the vectors also include both the “essential” and the “natural” boundary conditions (see [125] for details). The drawback of this model for second- and higher-order differential equations is that the approximation functions should be differentiable as many times as the actual solution $u$.

  The weak form in Equation (86) requires that the approximation chosen for $u$ should be at least linear in x. In addition, it requires that $u_h$ be made continuous across the elements. The approximation functions $\psi_j^e$, in each element e, are chosen to have the so-called interpolation property, that is,

  $$\psi_j^e(x_i^e) = \delta_{ij}, \tag{91}$$

  where $x_i^e$ are the nodal points of the element e. The scalar $\delta_{ij}$ is defined as follows:

  $$\delta_{ij} = \begin{cases} 1 & i = j, \\ 0 & i \neq j. \end{cases}$$

  Then, the values of $c_j^e$ in Equation (85) coincide with the values that $u_h^e$ takes on at the same nodal points:

  $$c_j^e = u_h^e(x_j^e).$$

  Therefore, solving Equation (90) allows one to identify the values that the approximation $u_h^e$ takes on in the nodal points of each element e. Functions that satisfy the interpolation property (91) are known as the Lagrange interpolation functions. The shape and number of nodes of the element are determined by the number l of linearly independent terms in the representation of $u_h^e$. Not all geometric shapes qualify as finite element domains. It turns out that triangle- and quadrilateral-like shapes, with an appropriate number of nodes, qualify as elements.

- Finite element assembly to produce the global system of algebraic equations of the form

  $$K\, \mathbf{c} = \mathbf{f}, \tag{92}$$

  where $K$, $\mathbf{c}$, and $\mathbf{f}$ are, respectively, an $N \times N$ matrix and two vectors of length $N$ and where $N$ denotes the number of the nodes (the global grid nodes) of all the elements composing the finite element mesh. Therefore, the assembly procedure represents the realization of the global displacement boundary conditions as well as the inter-element compatibility of the approximation functions and the displacement field. Indeed, each global grid node is shared by different finite elements which all contribute to the solution of the unknown values on the involved nodes. Let us denote with

  $$\{x_I\}_{I = 1, \dots, N}$$

  the set of such global grid nodes; then, a transformation $I = \mathcal{G}(e, i)$ exists that “maps” the “local” index i of the i-th node of the element e into the “global” index I of the same node (see Figure 8c). Thanks to the validity of the “superposition” property in FEM context due to the linear nature of the problem, for the global matrix $K$ and the global vector $\mathbf{f}$, the following equalities are valid:

  $$K_{IJ} = \sum_{\substack{e,\, i,\, j \\ \mathcal{G}(e,i) = I,\ \mathcal{G}(e,j) = J}} K^e_{ij}, \qquad f_I = \sum_{\substack{e,\, i \\ \mathcal{G}(e,i) = I}} f^e_i.$$
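The three steps above (discretization, element equations, assembly through a local-to-global index map) can be followed end to end on a one-dimensional model problem. The sketch below solves $-u'' = f$ on $(0,1)$ with $u(0)=u(1)=0$ using linear Lagrange elements; the model problem, the midpoint quadrature for the load, and all names are assumptions chosen to keep the example short.

```python
def solve_dense(A, b):
    """Gaussian elimination with partial pivoting (small dense systems)."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for k in range(n):
        p = max(range(k, n), key=lambda r: abs(M[r][k]))
        M[k], M[p] = M[p], M[k]
        for r in range(k + 1, n):
            factor = M[r][k] / M[k][k]
            for c in range(k, n + 1):
                M[r][c] -= factor * M[k][c]
    x = [0.0] * n
    for k in range(n - 1, -1, -1):
        s = sum(M[k][c] * x[c] for c in range(k + 1, n))
        x[k] = (M[k][n] - s) / M[k][k]
    return x


def fem_poisson_1d(n_elements, f):
    """Linear-element Galerkin solution of -u'' = f, u(0) = u(1) = 0."""
    n_nodes = n_elements + 1
    h = 1.0 / n_elements
    K = [[0.0] * n_nodes for _ in range(n_nodes)]
    F = [0.0] * n_nodes
    # Element stiffness matrix for linear shape functions on an element of size h.
    Ke = [[1.0 / h, -1.0 / h], [-1.0 / h, 1.0 / h]]
    for e in range(n_elements):
        nodes = (e, e + 1)                 # local index i -> global index I
        x_mid = (e + 0.5) * h
        fe = [f(x_mid) * h / 2.0] * 2      # midpoint quadrature of the load
        for i in range(2):
            F[nodes[i]] += fe[i]
            for j in range(2):
                K[nodes[i]][nodes[j]] += Ke[i][j]
    # Essential boundary conditions: replace the boundary rows by u = 0.
    for node in (0, n_nodes - 1):
        K[node] = [0.0] * n_nodes
        K[node][node] = 1.0
        F[node] = 0.0
    return solve_dense(K, F)


if __name__ == "__main__":
    u = fem_poisson_1d(8, lambda x: 1.0)
    for i, value in enumerate(u):
        x = i / 8
        print(x, value, x * (1.0 - x) / 2.0)  # numerical vs. exact nodal value
```

The inner double loop is the assembly step: each element adds its $2 \times 2$ contribution to the rows and columns of the global matrix selected by the local-to-global map, so interior nodes receive contributions from the two elements that share them.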

If u is a function defined both in space and time and the linear differential operator $\mathcal{L}$ has the following form:

$$\mathcal{L}(u) = \frac{\partial u}{\partial t} + \mathcal{L}_s(u),$$

where $\mathcal{L}_s$ does not contain terms with differentiation with respect to the time variable t, then Equation (92) can be rewritten as a system of ordinary differential equations in time, Equation (96), whose unknown is the vector of the values assumed by the approximation $u_h$ in all the nodal points of the finite element mesh and in which the symbol $\dot{u}$ denotes the partial derivative of the function u with respect to time t: $\dot{u} = \partial u / \partial t$. Equation (96) represents an approximation to the original system of partial differential equations, which is discrete in space and continuous in time.

- Finite Differences [127]: The basic idea of the Finite Difference Method (FDM) is to compute an approximation of the solution u of (86) by means of an approximation of all the differential operators defining $\mathcal{L}$. As for FEM, the major steps in the finite difference formulation and analysis of a typical problem are:

- Discretization of the space domain into a set of nodes . This is accomplished by subdividing the given domain by mean of a grid of nodes which represent the finite difference mesh (see Figure 9a).

- Construction of approximation statements, based on a finite difference, for all the differential operators present in . Thanks to the use of Taylor expansion, the validity of the following relations can be demonstrated [137]:where , and are, respectively, named the n-th order forward, backward, and central differences with respect to and are given by, respectively.

- Forward difference

- Backward difference

- Central differencewhere .

- By substituting one of the above approximations to each space derivative operator in , we obtain the so-called space approximated operator , which can be written generally as a nonlinear algebraic operator as follows:where and m depend on the type of approximation chosen.

- The set of the following algebraic equations which are obtained by evaluating the equation at each node of the finite difference mesh, can be written, after the inclusion of boundary conditions, in matrix form as where , , and are, respectively, a matrix and two vectors whose generic elements are where . Therefore, solving Equation (103) yields the values that the approximation takes at the nodal points of the finite difference mesh. The accuracy of depends on the type of approximation chosen for the derivative operators that are present in .
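The finite difference steps listed above can be illustrated on a model problem. The following is a minimal sketch, not the formulation of the cited references: it assumes the one-dimensional problem $-u''(x) = f(x)$ on $(0, 1)$ with homogeneous Dirichlet conditions, approximates the second derivative with the central difference, and solves the resulting tridiagonal system with the Thomas algorithm. The function names `solve_poisson_fd` and `max_error` are hypothetical.

```python
import math

def solve_poisson_fd(n, f):
    """Solve -u''(x) = f(x) on (0, 1), u(0) = u(1) = 0, on n interior nodes."""
    h = 1.0 / (n + 1)
    x = [(i + 1) * h for i in range(n)]
    # Central difference at node i: -u[i-1] + 2 u[i] - u[i+1] = h^2 f(x[i])
    lower = [-1.0] * n   # sub-diagonal (lower[0] is unused)
    diag = [2.0] * n     # main diagonal
    upper = [-1.0] * n   # super-diagonal (upper[n-1] is unused)
    rhs = [h * h * f(xi) for xi in x]
    # Thomas algorithm, forward elimination
    for i in range(1, n):
        w = lower[i] / diag[i - 1]
        diag[i] -= w * upper[i - 1]
        rhs[i] -= w * rhs[i - 1]
    # Thomas algorithm, back substitution
    u = [0.0] * n
    u[-1] = rhs[-1] / diag[-1]
    for i in range(n - 2, -1, -1):
        u[i] = (rhs[i] - upper[i] * u[i + 1]) / diag[i]
    return x, u

def max_error(n):
    """Maximum nodal error for f = pi^2 sin(pi x), whose exact solution is sin(pi x)."""
    x, u = solve_poisson_fd(n, lambda s: math.pi ** 2 * math.sin(math.pi * s))
    return max(abs(ui - math.sin(math.pi * xi)) for xi, ui in zip(x, u))
```

Halving the grid spacing (for instance, going from 19 to 39 interior nodes) should reduce `max_error` by roughly a factor of four, which is the behavior expected from a second-order central difference.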

- Finite Volumes [127,138] The finite volume approach is a space discretization technique that works well for the numerical modeling of several kinds of conservation laws represented by PDEs of the form (86), where the linear differential operator can be expressed as

As for FEM and FDM, the major steps in the finite volume formulation and analysis of a typical problem are:

- Discretization of the domain into a set of selected subdomains. This is accomplished by subdividing the given domain into a set of subdomains where and , called control volumes or cells (see Figure 9b), forming the finite volume mesh. Planar surfaces in 3D or straight edges in 2D define the boundaries of the control volumes. Consequently, flat faces or straight edges are used to approximate the bounding surface, if it is curved. Cell faces, or just faces, are the names given to these bounding discrete surfaces.

- Construction of approximation statements needed to identify the approximation function for the actual solution u of (104). Following the approach described for the FEM framework and based on Weighted Residual Methods, from (88), where the weight functions are chosen to be constant and equal to one, it follows that for each cell of the finite volume mesh. By using the Divergence Theorem, Equation (105) can be rewritten as Thanks to the mean value theorem, in each subdomain , Equation (106) can be rewritten as where are the mean values respectively of functions f in subdomain (which can be considered as the values of f at the cell center ) and where the symbol is used to represent the volume/area/length of, respectively, a 3D/2D/1D space domain. One can substitute the integral on the left side of Equation (107) with a summation over the faces enclosing the c-th cell, resulting in where is the number of faces of the c-th cell and represents the f-th face of the c-th cell. Details about the forms for the restriction of on each are available in the literature (e.g., see [139,140]), but, for the sake of simplicity, assume, as in [139], that, on each cell, is a constant function whose value is ; then, Equation (108) can be rewritten as where is an approximation of F that can be defined using a variety of approaches. For this purpose, Taylor series expansions have historically been employed [141]. The end result of approximating F by in terms of cell center values (that is, the constant values ), followed by substitution into Equation (109) and by the incorporation of the boundary conditions, is a set of discrete linear algebraic equations of the form where , , and are, respectively, an matrix and two vectors. The solution u of (110) allows us to calculate the values of for all the representing the finite volume mesh.
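The flux balance over control volumes described above can be sketched on a one-dimensional model problem. The example below is an illustration under stated assumptions, not the scheme of [139]: it assumes the steady diffusion equation $-(k\,u')' = s$ on $(0, 1)$ with constant $k$, uniform cells, Dirichlet values at both ends, and a two-point approximation of the face fluxes from cell-center values. The names `thomas` and `solve_diffusion_fv` are hypothetical.

```python
def thomas(lower, diag, upper, rhs):
    """Solve a tridiagonal system (lower[0] and upper[-1] are unused)."""
    n = len(rhs)
    d, r = diag[:], rhs[:]
    for i in range(1, n):
        w = lower[i] / d[i - 1]
        d[i] -= w * upper[i - 1]
        r[i] -= w * r[i - 1]
    u = [0.0] * n
    u[-1] = r[-1] / d[-1]
    for i in range(n - 2, -1, -1):
        u[i] = (r[i] - upper[i] * u[i + 1]) / d[i]
    return u

def solve_diffusion_fv(n_cells, k, source, u_left, u_right):
    """Cell-centered finite volumes for -(k u')' = source on (0, 1); needs n_cells >= 2."""
    h = 1.0 / n_cells
    centers = [(c + 0.5) * h for c in range(n_cells)]
    # Interior cell c: sum of outward face fluxes = source * cell length, i.e.
    # (k/h) * (2 u[c] - u[c-1] - u[c+1]) = source(x_c) * h
    lower = [-k / h] * n_cells
    upper = [-k / h] * n_cells
    diag = [2.0 * k / h] * n_cells
    rhs = [source(xc) * h for xc in centers]
    # Boundary cells: the boundary face is half a cell away from the cell center,
    # so its flux is k * (u_cell - u_boundary) / (h / 2).
    diag[0] = 3.0 * k / h
    rhs[0] += 2.0 * k * u_left / h
    diag[-1] = 3.0 * k / h
    rhs[-1] += 2.0 * k * u_right / h
    return centers, thomas(lower, diag, upper, rhs)
```

Because every interior face flux appears with opposite signs in the two cells that share the face, summing the discrete equations leaves only the boundary fluxes, so the scheme conserves the integrated source exactly at the discrete level.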

6.1.2. Solution of the “Discrete in Space” Model

- The Time Integration algorithm The proposed space discretization methods allow the construction of similar discrete problems since they all lead to a generally non-linear system of algebraic equations in the following form: which represents an ordinary differential equation (ODE) with respect to the time variable. Depending on the type of the approximation of the time derivative present in the left term of Equation (111) and the discretization of the temporal domain , different methods are available to solve the problem described by Equation (111), which is discrete in space and continuous in time. Assuming that is the time interval and that it is discretized by a set of equally spaced points , where and , there exist different methods, called “Time Integration Schemes (TIS)”, to compute the approximation of u at the time as a function of just the approximation of u and at the time (explicit methods) or as a function of u and at the times and (implicit methods). See [142] for a classification of such schemes. Among the rich set of available TIS, a one-parameter family of methods, called the $\theta$-family, is commonly used [143]. In this family of methods, a weighted average of the time derivative of a dependent variable u at two consecutive time steps is approximated by a linear interpolation of the values of the same variable u at the same two steps: By substituting Equation (112) into Equation (111), the following relation is obtained where and where Since at time (i.e., ), the right-hand side is computed using the initial values defined in the time boundary conditions, and since the vector is always known, for both times and , then the approximation of u at time (when ) can be computed by Algorithm 1. For different values of the parameter $\theta$, several well-known time approximation schemes are obtained:

- $\theta = 0$, the forward difference Euler explicit scheme. The problem (113) is linear, the scheme is conditionally stable, and its order of accuracy (see Definition 2 for a definition of the “order of accuracy” terms) is $O(\Delta t)$;

- $\theta = 1/2$, the Crank–Nicolson implicit scheme. The problem (113) is nonlinear, the scheme is unconditionally stable, and its order of accuracy is $O(\Delta t^2)$;

- $\theta = 2/3$, the Galerkin implicit scheme. The problem (113) is nonlinear, the scheme is unconditionally stable, and its order of accuracy is ;

- $\theta = 1$, the backward difference Euler implicit scheme. The problem (113) is nonlinear, the scheme is unconditionally stable, and its order of accuracy is $O(\Delta t)$.

Algorithm 1 The algorithm implementing the $\theta$-family methods for the approximated solution of the discrete in space and continuous in time problem described by Equation (111).

- 1: procedure TimeIntegrator(, , , , , , , , T, , )
- 2: Input: , , , , , , , T,
- 3: Output:
- 4: Compute , and
- 5: for to do
- 6:   Compute
- 7:   Compute
- 8:   Compute
- 9:   Solve
- 10:   Compute
- 11:   Compute
- 12: end for
- 13: end procedure
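The role of the parameter $\theta$ can be illustrated on a scalar model problem. The sketch below is an assumption-laden simplification, not Algorithm 1 itself: it replaces the semi-discrete system (111) with the scalar equation $u' + \lambda u = 0$, for which one step of the $\theta$-scheme reads $(1 + \theta\,\Delta t\,\lambda)\,u_{s+1} = (1 - (1-\theta)\,\Delta t\,\lambda)\,u_s$. The names `theta_step` and `integrate` are hypothetical.

```python
import math

def theta_step(u, lam, dt, theta):
    """One step of the theta-scheme for the scalar model problem u' + lam * u = 0."""
    return (1.0 - (1.0 - theta) * dt * lam) * u / (1.0 + theta * dt * lam)

def integrate(u0, lam, dt, steps, theta):
    """Advance u0 over `steps` time steps of size dt."""
    u = u0
    for _ in range(steps):
        u = theta_step(u, lam, dt, theta)
    return u

def error_at_one(dt, theta):
    """Error at t = 1 for lam = 1, u0 = 1 (exact solution exp(-1))."""
    steps = round(1.0 / dt)
    return abs(integrate(1.0, 1.0, dt, steps, theta) - math.exp(-1.0))
```

With $\lambda = 100$ and $\Delta t = 0.05$, the explicit choice $\theta = 0$ amplifies the solution at every step, while $\theta = 1/2$ and $\theta = 1$ damp it. Halving $\Delta t$ in `error_at_one` reduces the error by about four for $\theta = 1/2$ and by about two for $\theta = 1$.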

For $\theta \geq 1/2$, the scheme is stable, and for $\theta < 1/2$, the scheme is stable only if the time step meets the following restriction (i.e., conditionally stable schemes) (see Reddy [136]): where the greatest eigenvalue of the eigenvalue problem related to the matrix Equation (113) is denoted by : See [144] for further definitions and descriptions of the “stability” and “accuracy” terms. Generally, Algorithm 1 requires the solution of a nonlinear problem (see line 9) that can be solved by the methods described in the next point 2. If the problem degenerates into a linear one (i.e., when $\theta = 0$), its solution can be obtained by the methods described in the next point 3.

- The Non-linear problem solver Let us consider the following nonlinear algebraic system: where and are vectors in . Assuming the existence of a solution for the system (122), an approximation of that solution can be obtained by different methods. The “Newton–Raphson methods”, and their variants, are the most commonly used [145]. Such methods iteratively compute an approximation of , and they are based on a Taylor series expansion of the left term of (122) at an already known state where is the Jacobi matrix of at , i.e., Let ; then Therefore, an approximation of can be computed using the iterative algorithm represented in Algorithm 2. The rate of convergence of the Newton–Raphson scheme is characterized by the following inequality:

Algorithm 2 The algorithm implementing the “Newton–Raphson methods” for the approximated solution of Equation (122).

- 1: procedure NewtonSolver(, , N, )
- 2: Input: , , N
- 3: Output:
- 4: for to do
- 5:   Compute
- 6:   Compute
- 7:   Solve
- 8:   Compute
- 9: end for
- 10: end procedure
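The structure of the Newton–Raphson iteration (evaluate the residual, evaluate the Jacobi matrix, solve a linear system for the correction, update the state) can be sketched as follows. This is a minimal illustration under assumptions: the nonlinear system is written as $F(u) = 0$, the Jacobi matrix is supplied analytically, and the linear system is solved by a small dense Gaussian elimination. The names `solve_linear` and `newton` and the two-equation test system are hypothetical.

```python
def solve_linear(A, b):
    """Dense Gaussian elimination with partial pivoting (A and b are left unchanged)."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]   # augmented matrix
    for k in range(n):
        p = max(range(k, n), key=lambda r: abs(M[r][k]))
        M[k], M[p] = M[p], M[k]
        for r in range(k + 1, n):
            m = M[r][k] / M[k][k]
            for c in range(k, n + 1):
                M[r][c] -= m * M[k][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

def newton(F, J, u0, tol=1e-12, max_iter=50):
    """Newton-Raphson iteration for F(u) = 0 with analytic Jacobi matrix J(u)."""
    u = list(u0)
    for _ in range(max_iter):
        r = F(u)                                   # residual at the current state
        if max(abs(ri) for ri in r) < tol:
            break
        du = solve_linear(J(u), [-ri for ri in r]) # linear solve for the correction
        u = [ui + di for ui, di in zip(u, du)]     # state update
    return u

# Example system: u0^2 + u1^2 = 4 and u0 * u1 = 1
F = lambda u: [u[0] ** 2 + u[1] ** 2 - 4.0, u[0] * u[1] - 1.0]
J = lambda u: [[2.0 * u[0], 2.0 * u[1]], [u[1], u[0]]]
root = newton(F, J, [2.0, 0.5])
```

Starting from `[2.0, 0.5]`, the iteration converges to the root with components $(\sqrt{6} + \sqrt{2})/2$ and $(\sqrt{6} - \sqrt{2})/2$. The linear solve inside the loop is the step that the linear solvers discussed in this section are meant to accelerate for large sparse systems.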

Depending on the approach used to approximate/compute the Jacobi matrix (or tangent matrix) of , different variants of the scheme can be obtained: Table 8 lists some of them. Algorithm 2 can also be used when the BFGS update is applied: only the equation system at line 7 of Algorithm 2 has to be reformulated by introducing the BFGS update of the inverse of the secant matrix. See Algorithm 3 for details on the BFGS reformulation of Algorithm 2. Algorithms 2 and 3 require the solution of a linear problem (see, respectively, lines 7 and 6) that can be solved by the methods described in the next point 3. Details about convergence and other numerical issues are available in the literature (see [145]).

Algorithm 3 The algorithm implementing the “BFGS” reformulation of the Newton–Raphson method.

- 1: procedure BFGSNewtonSolver(, , N, )
- 2: Input: , , N
- 3: Output:
- 4: Compute
- 5: Compute
- 6: Solve
- 7: Compute
- 8: for to do
- 9:   Compute
- 10:   Compute
- 11:   Compute
- 12:   Compute
- 13:   Compute
- 14:   Compute
- 15: end for
- 16: Compute
- 17: end procedure

- The linear problem solver: Let us consider the linear problem of the form where , and are, respectively, a matrix and two vectors in and . The matrices coming from the discretization of the problems of interest for this review are sparse, if not structured (for example, see the matrices generated for the solution of the Navier–Stokes equations for viscoelastic fluids in Section 6.1.3, which have many zero elements or zero blocks). Therefore, particular attention is paid in this work to methods for the solution of (125) when is sparse or structured (see [146] for a more precise definition of the sparsity concept). There are three fundamental classes of methods that are used to solve Equation (125):

- Direct methods The direct solution of (125) is generally based on a technique called “$LU$ decomposition” [147]. This technique consists of factoring the matrix as in Equation (126) (by algorithms whose computational complexity is generally on the order of $O(N^3)$), and it is based on the concept that triangular systems of equations are “easy” to solve: where and are, respectively, lower triangular and upper triangular matrices and where and are permutation matrices. A permutation matrix is used to represent row or column interchanges in matrix , and it is obtained from the identity matrix by applying to it the same sequence of row or column interchanges. The row or column permutations on are often required for both numerical and sparsity reasons [146].

  The $LU$ decomposition makes the solution of (125) efficient, particularly when solving several systems that share the same matrix . In fact, if a matrix already has an $LU$ decomposition available, the linear system in (125) can be solved by (1) the solution of (forward substitution), followed by (2) the solution of (backward substitution) where . Then, the values of all the unknowns can be obtained by permuting the rows of based on the permutation matrix . The execution of both a forward and a backward substitution has a general computational complexity of $O(N^2)$.

  If the matrix is symmetric and positive definite (that is, if $x^{T} A x > 0$ for all nonzero vectors $x$, with $A$ denoting the matrix of (125)), then the decomposition becomes $A = L L^{T}$ and is called “Cholesky” factorization.

  Numerical issues related to the stability and accuracy of the presented direct solver can be found in [146].

  As methods suited to computing the $LU$ decomposition of sparse matrices, we present the “Frontal methods”. Such methods have their origin in the solution of finite element problems, but they are not restricted to this application. They are of great interest in their own right since the extension of these ideas to multiple fronts, regardless of the origin of the problem, offers a great opportunity for parallelism [146,148,149].

  In the context of “Frontal methods”, the matrix can be considered as the sum of submatrices of varying sizes, as happens for the matrices that result from the assembly process underlying the discretization using finite elements. That is, each entry is computed as the sum of one or more values: Entry in (127) is said to be fully summed (or fully assembled) if all the operations in (127) have been performed, and the index of the unknown k is said to be fully summed if all the entries in its row (i.e., ) and its column (i.e., ) are fully summed.

  The fundamental notion of frontal techniques is to limit decomposition operations to a frontal matrix , on which Level 3 BLAS [150] is used to execute dense matrix operations. (The BLAS (Basic Linear Algebra Subprograms) are routines that provide optimized standard building blocks for performing primary vector and matrix operations. BLAS routines can be classified depending on the type of operands: Level 1: operations involving just vector operands; Level 2: operations between vectors and matrices; and Level 3: operations involving just matrix operands.) In the frontal scheme, the factorization proceeds as a sequence of partial factorizations on frontal matrices , which can be represented, up to some row or column permutations, by a block structure: where is related to the unknown indices that are fully summed.

  The decomposition by Frontal methods can be described by Algorithm 4.

  A variation of frontal solvers is the Multifrontal method. It can be considered an improvement of the frontal solver, which, based on an appropriate order or coupling of the addends in the summation in (127), can lead to the use of several independent fronts, paving the way, naturally, to the development of parallel algorithms. Efficient parallel and sequential decomposition algorithms based on Frontal and Multifrontal methods should be able to analyze the structure of matrix to define all the needed reorganizations of its rows and columns (i.e., by the definition of appropriate permutations) to better exploit sparsity, preserve “good” numerical properties, and define the order of front assembly. See [146] for details about techniques to be used in such analyses.

Algorithm 4 The algorithm implementing the “Frontal method”.

- 1: procedure FrontalDecomposition(, L)
- 2: Input: , L
- 3: Output: ,
- 4:
- 5: for to L do
- 6:   Compute
- 7:   Factorize
- 8:   Solve
- 9:   Solve
- 10:   Compute
- 11: end for
- 12:
- 13:
- 14: end procedure
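The factor-once, solve-many pattern of the direct methods described above can be shown with a small dense example. The sketch below is not a frontal or sparse solver: it assumes a dense matrix, uses row permutations only (partial pivoting, so $PA = LU$ rather than the two-sided permutation of Equation (126)), and stores $L$ and $U$ in a single array. The names `lu_factor` and `lu_solve` are hypothetical.

```python
def lu_factor(A):
    """Return (LU, perm) with P A = L U; L has a unit diagonal and is stored below U."""
    n = len(A)
    LU = [row[:] for row in A]
    perm = list(range(n))            # perm[i] = original index of the row now at position i
    for k in range(n):
        p = max(range(k, n), key=lambda r: abs(LU[r][k]))
        if LU[p][k] == 0.0:
            raise ValueError("matrix is singular")
        LU[k], LU[p] = LU[p], LU[k]
        perm[k], perm[p] = perm[p], perm[k]
        for r in range(k + 1, n):
            LU[r][k] /= LU[k][k]     # multiplier, stored as an entry of L
            for c in range(k + 1, n):
                LU[r][c] -= LU[r][k] * LU[k][c]
    return LU, perm

def lu_solve(LU, perm, b):
    """Solve A x = b by forward substitution (L y = P b) and backward substitution (U x = y)."""
    n = len(b)
    y = [0.0] * n
    for i in range(n):
        y[i] = b[perm[i]] - sum(LU[i][j] * y[j] for j in range(i))
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (y[i] - sum(LU[i][j] * x[j] for j in range(i + 1, n))) / LU[i][i]
    return x

# The zero in position (0, 0) forces a row interchange.
A = [[0.0, 2.0, 1.0], [1.0, 1.0, 0.0], [2.0, 1.0, 1.0]]
LU, perm = lu_factor(A)
x1 = lu_solve(LU, perm, [7.0, 3.0, 7.0])    # right-hand side built from x = [1, 2, 3]
x2 = lu_solve(LU, perm, [-1.0, 1.0, 1.0])   # right-hand side built from x = [1, 0, -1]
```

The factorization is computed once and reused for both right-hand sides, which is the property that makes direct methods attractive when several systems share the same matrix.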

- Iterative methods: Direct methods can exploit the sparse linear system structure as much as possible to avoid computations with, and storage of, zero elements. However, these methods are often too expensive for large systems, except where the matrix has a special structure. For many problems, direct solution methods will not lead to solutions in a reasonable amount of time. So, researchers have long tried to iteratively approximate the solution starting from a “good” initial guess [151].

  The Krylov subspace iteration methods (KSMs) are among the most important iterative techniques for solving linear systems because they have significantly altered how users approach huge, sparse, non-symmetric matrix problems. These techniques were recognized as one of the top ten algorithms with the biggest impact on the advancement and application of science and engineering during the 20th century [110], and they seem to have confirmed their importance in the current century thanks to the effort spent to improve their effectiveness and efficiency on up-to-date computing architectures.

  Like the majority of practical iterative methods now in use for solving large linear systems, KSMs make use of a projection process. A projection process is the standard method for extracting an approximation to the solution of a linear system from a subspace [152].

  Let and be two N-dimensional subspaces of . A projection technique onto the subspace and orthogonal to finds an approximate solution to (125) by imposing the conditions that belongs to and that the new residual vector is orthogonal to , where is the initial guess to the solution; that is, Note that if is written in the form , and the initial residual vector is defined as , then the above condition becomes In other words, the approximate solution can be defined as where the symbol denotes the Euclidean inner product. In the most general form, this is a basic projection step. A series of these projections is used in the majority of common techniques. A new pair of subspaces, and , is used in a new projection step to compute . The initial guess, , is equal to the most recent solution estimate obtained from the previous projection step. Projection methods provide a unifying framework for many well-known techniques in scientific computing, from the simpler Gauss–Seidel step to the more complex KSM procedures.

  A Krylov subspace method is a method for which the subspace is the Krylov subspace [152] Variations in the choice of subspace give rise to different Krylov subspace methods. The most popular methods arise from two general options for . The first is simply and the minimum residual variation . The most representative among the KSMs is related to the choice , and it will be described in this work: the “Generalized Minimum Residual Method (GMRES)”. The second class of methods is based on defining to be a Krylov subspace associated with , namely, (see [152] for details).

  The GMRES method can be used to solve sparse linear systems whose matrix is general (i.e., not necessarily symmetric or positive definite). Let be the matrices whose columns are an orthonormal basis for the space ; then can be expressed as , where . Furthermore, let and define where is the spectral norm, that is, the matrix norm induced by the Euclidean vector’s inner product. Then, condition (129) (or equivalently the condition (131)) is satisfied by if, and only if, is the solution to the following minimum problem (see Proposition in Saad [152]): A scheme for the GMRES iterative algorithm, useful to compute the approximation of the solution for problem (125), is represented in Algorithm 5.

Algorithm 5 The GMRES iterative algorithm.

- 1: procedure GMRES(, , , N, )
- 2: Input: , , N
- 3: Output:
- 4: Compute
- 5: Assign
- 6: for to N do
- 7:   Compute an orthonormal basis for
- 8:   Compute
- 9:   Compute
- 10: end for
- 11: end procedure
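A compact, unpreconditioned and unrestarted GMRES can be sketched as follows. This is an illustration under assumptions rather than a transcription of Algorithm 5: the orthonormal Krylov basis is built incrementally by the Arnoldi process with modified Gram–Schmidt, the small least-squares problem is solved by updating Givens rotations, the matrix is dense, and the iterate is formed only once at the end instead of at every step. All function names are hypothetical.

```python
import math

def matvec(A, v):
    return [sum(a * x for a, x in zip(row, v)) for row in A]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def gmres(A, b, x0, max_iter, tol=1e-10):
    """Unrestarted GMRES; returns the approximate solution of A x = b."""
    n = len(b)
    r0 = [bi - ai for bi, ai in zip(b, matvec(A, x0))]
    beta = math.sqrt(dot(r0, r0))
    if beta < tol:
        return list(x0)
    V = [[ri / beta for ri in r0]]   # orthonormal basis of the Krylov subspace
    R = []                           # R[j]: column j of the rotated (triangular) Hessenberg matrix
    cs, sn = [], []                  # Givens rotation coefficients
    g = [beta]                       # rotated right-hand side beta * e1
    for k in range(max_iter):
        w = matvec(A, V[k])
        h = []
        for j in range(k + 1):       # modified Gram-Schmidt orthogonalization
            hj = dot(w, V[j])
            h.append(hj)
            w = [wi - hj * vj for wi, vj in zip(w, V[j])]
        h_next = math.sqrt(dot(w, w))
        for j in range(k):           # apply the previous rotations to the new column
            t = cs[j] * h[j] + sn[j] * h[j + 1]
            h[j + 1] = -sn[j] * h[j] + cs[j] * h[j + 1]
            h[j] = t
        d = math.hypot(h[k], h_next) # new rotation that eliminates h_next
        c, s = h[k] / d, h_next / d
        cs.append(c)
        sn.append(s)
        h[k] = d
        g.append(-s * g[k])          # |g[k + 1]| is the current residual norm
        g[k] = c * g[k]
        R.append(h)
        if abs(g[k + 1]) < tol:
            break
        V.append([wi / h_next for wi in w])
    m = len(R)
    y = [0.0] * m
    for i in range(m - 1, -1, -1):   # back substitution on the triangular system
        y[i] = (g[i] - sum(R[j][i] * y[j] for j in range(i + 1, m))) / R[i][i]
    return [x0[i] + sum(y[j] * V[j][i] for j in range(m)) for i in range(n)]

# Small nonsymmetric test system with known solution [1, -1, 2, 0.5]
A = [[4.0, 1.0, 0.0, 0.0], [2.0, 5.0, 1.0, 0.0], [0.0, 1.0, 6.0, 2.0], [0.0, 0.0, 1.0, 7.0]]
b = [3.0, -1.0, 12.0, 5.5]
x = gmres(A, b, [0.0] * 4, max_iter=4)
```

For an $N \times N$ nonsingular matrix, GMRES reaches the exact solution in at most $N$ steps in exact arithmetic, which is what the four-iteration call above relies on. In practice the method is restarted and preconditioned, as discussed in the text that follows.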

Different methods can be used to perform the step at line 7 of Algorithm 5. The most common ones are related to the Gram–Schmidt or Householder procedures, which both compute, at step n, the matrix and the upper Hessenberg matrix (see [153] for the definition of upper Hessenberg matrix) , for which the following relation is valid [152]: Thanks to Equation (135) (see Proposition in Saad [152]), the function can be rewritten as where is the constant vector defined as . The solution to the minimum problem at line 8 of Algorithm 5 is easy and inexpensive to compute since it requires the solution of an least-squares problem where n is typically small and where the coefficient matrix is an upper triangular matrix.

Although KSMs are well founded theoretically, they are all likely to experience slow convergence when dealing with problems arising from applications where the numerical features of the coefficient matrix are not “good”. The matrix’s “condition number” is the primary parameter that describes its numerical “goodness”. The condition number of a square matrix is defined as In numerical analysis, the condition number of a matrix describes how well or badly a linear system with as a coefficient matrix can be solved numerically: if is small, the problem is well-conditioned; otherwise, the problem is ill-conditioned and can hardly be solved numerically in an effective way [147].

In these situations, preconditioning is essential to the effectiveness of Krylov subspace algorithms. Preconditioners, in general, are modifications to the initial linear systems that make them “easier” to solve. Identifying a preconditioning matrix is the first stage in the preconditioning process. The matrix can be defined in a variety of ways, but it must meet a few minimal requirements. The most crucial one is that the linear system must be cheap to solve because the preconditioned algorithms will need to solve linear systems with coefficient matrix at each step. Furthermore, should be nonsingular and “close” to matrix in some sense (i.e., ) [152]. For example, if the preconditioner is applied from the left, the modification leads to the preconditioned linear system whose coefficient matrix should have better numerical features than because it is “near” the identity matrix . An incomplete factorization of the original matrix is one of the easiest ways to define a preconditioner. This involves taking into account the formula , in which represents the residual or factorization error and and represent the lower and upper portions of , respectively, with the same nonzero structure. Calculating this incomplete factorization, often known as ILU(0), is neither difficult nor expensive.

More sophisticated preconditioners are available, such as the preconditioners based on Domain Decomposition Methods and those based on Multigrid Methods (e.g., see [152] for an overview of such tools). It is worth mentioning that if the matrix is symmetric and positive definite, then specialized algorithms exist in the context of KSM: the Conjugate Gradient (CG) method is the most famous among those [152].

- Hierarchical/Multilevel methods: When solving linear systems resulting from discretized partial differential equations (PDEs), preconditioned Krylov subspace algorithms tend to converge more slowly as the systems get bigger. This decline in the convergence rate causes a significant loss of efficiency. The convergence rates that the Multigrid method class can achieve, on the other hand, are theoretically independent of the mesh size. Discretized elliptic PDEs are the primary focus of Multigrid methods, which distinguishes them significantly from the preconditioned Krylov subspace approach. Later, the technique was extended in many ways to address nonlinear PDE problems as well as problems not described by PDEs [152]. The most important contribution in this sense is the Algebraic Multigrid (AMG) [154]. The main ingredients of multilevel methods to solve (125) are as follows.

- (a) A hierarchy of H problems along with restriction and prolongation operators to move between levels, where and where with for each ;

- (b) A relaxation operator, , for “smoothing”. is in general a tool to compute iteratively an approximation for the solution of the linear system from the initial guess .

Algorithm 6 describes the recursive implementation scheme of AMG methods based on the ingredients listed above, where is the initial guess for solution .

Algorithm 6 Implementation scheme of AMG methods.

- 1: function AMG(H, , , , , , )
- 2: Input: H, , , , , ,
- 3: Output:
- 4: Pre-smooth times
- 5: Get residual
- 6: Restrict residual
- 7: Restrict matrix
- 8: if then
- 9:   Solve Coarse System
- 10: else
- 11:
- 12:   for to do
- 13:     Recursive Solve
- 14:   end for
- 15: end if
- 16: Correct by prolongation
- 17: Post-smooth times
- 18: return
- 19: end function

The Multigrid scheme is defined by the parameter $\gamma$, which controls the number of times AMG is iterated in line 13 of Algorithm 6. The V-cycle Multigrid is obtained for $\gamma = 1$. The W-cycle Multigrid is the case where $\gamma = 2$. The illustrations in Figure 10 show how complicated the ensuing inter-grid up and down moves can be. The case $\gamma = 3$ is seldom used [152].
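The recursive cycle structure (pre-smoothing, residual restriction, recursive coarse correction repeated $\gamma$ times, prolongation, post-smoothing) can be sketched on a model problem. The example below deliberately swaps in a geometric multigrid for the one-dimensional Poisson problem instead of an algebraic one: the coarse operators are obtained by rediscretization rather than by restricting the matrix, the smoother is weighted Jacobi, and restriction and prolongation are full weighting and linear interpolation. All names are hypothetical, and the number of interior nodes is assumed to be of the form $2^k - 1$.

```python
import math

def apply_A(u, h):
    """Matrix-free 1D Laplacian (-u'') with homogeneous Dirichlet boundaries."""
    n = len(u)
    return [(2.0 * u[i] - (u[i - 1] if i > 0 else 0.0)
             - (u[i + 1] if i < n - 1 else 0.0)) / (h * h) for i in range(n)]

def smooth(u, f, h, sweeps, omega=2.0 / 3.0):
    """Weighted Jacobi relaxation (the diagonal of A is 2 / h^2)."""
    for _ in range(sweeps):
        Au = apply_A(u, h)
        u = [ui + omega * (h * h / 2.0) * (fi - ai) for ui, fi, ai in zip(u, f, Au)]
    return u

def restrict(r):
    """Full-weighting restriction; coarse node i sits at fine node 2 i + 1."""
    nc = (len(r) - 1) // 2
    return [0.25 * (r[2 * i] + 2.0 * r[2 * i + 1] + r[2 * i + 2]) for i in range(nc)]

def prolong(ec, n):
    """Linear-interpolation prolongation to a fine grid with n nodes."""
    e = [0.0] * n
    for i, v in enumerate(ec):
        e[2 * i + 1] += v
        e[2 * i] += 0.5 * v
        e[2 * i + 2] += 0.5 * v
    return e

def mg_cycle(u, f, h, gamma=1, nu1=2, nu2=2):
    """One multigrid cycle: gamma = 1 gives a V-cycle, gamma = 2 a W-cycle."""
    n = len(u)
    if n == 1:
        return [f[0] * h * h / 2.0]              # exact solve on the coarsest grid
    u = smooth(u, f, h, nu1)                     # pre-smoothing
    r = [fi - ai for fi, ai in zip(f, apply_A(u, h))]
    rc = restrict(r)
    ec = [0.0] * len(rc)
    for _ in range(gamma):                       # recursive coarse-grid correction
        ec = mg_cycle(ec, rc, 2.0 * h, gamma, nu1, nu2)
    u = [ui + ei for ui, ei in zip(u, prolong(ec, n))]
    return smooth(u, f, h, nu2)                  # post-smoothing

def solve_poisson_mg(n, cycles, gamma=1):
    """Run `cycles` multigrid cycles for -u'' = pi^2 sin(pi x) on n = 2^k - 1 nodes."""
    h = 1.0 / (n + 1)
    x = [(i + 1) * h for i in range(n)]
    f = [math.pi ** 2 * math.sin(math.pi * xi) for xi in x]
    u = [0.0] * n
    for _ in range(cycles):
        u = mg_cycle(u, f, h, gamma)
    res = max(abs(fi - ai) for fi, ai in zip(f, apply_A(u, h)))
    return x, u, res
```

A call such as `solve_poisson_mg(63, 10, gamma=1)` runs ten V-cycles, and `gamma=2` switches to W-cycles. The residual reduction per cycle should stay roughly constant when the number of nodes is increased, which is the mesh-independent convergence mentioned above.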

Details about restriction, prolongation, and relaxation operators can be found in Stüben [154].

- The Saddle Point Problem solvers Let us consider the block linear systems of the form where , and . If one or more of the following requirements are met by the constituent blocks A, , , and C, then the linear system (143) defines a (generalized) “Saddle Point problem” [155]:

- C1 A is symmetric ($A = A^{T}$);

- C2 the symmetric part of A, $H = \frac{1}{2}(A + A^{T})$, is positive semidefinite;

- C3 $B_1 = B_2 = B$;

- C4 C is symmetric ($C = C^{T}$) and positive semidefinite;

- C5 $C = O$ (the zero matrix).

In addition to the standard distinction between direct and iterative techniques, generalized Saddle Point problem-solving algorithms can be broadly classified into two groups: segregated and coupled methods [155].

- Segregated methods The two unknown vectors, and , are computed separately via segregated procedures. This approach entails solving two linear systems (referred to as reduced systems), one for each unknown vector, that are smaller in size than . Each reduced system in segregated techniques can be addressed using an iterative or direct approach. The Schur complement reduction method, which is based on a block LU factorization of the block matrix in Equation (143) (also known as the global matrix ), is one of the primary examples of the segregated approach. Indeed, if A is nonsingular, the Saddle Point matrix admits the following block triangular factorization: where is the Schur complement of A in . It follows that if is nonsingular, S is also nonsingular. Using Equation (144), the linear system (143) can be transformed into or equivalently,

Algorithm 7 The algorithm of the segregated approach to the solution of a generalized Saddle Point system.

- 1: procedure SaddlePointSegregatedMethod(A, , , C, , , , )
- 2: Input: A, , , C, ,
- 3: Output: ,
- 4: Solve
- 5: Compute
- 6: Solve
- 7: Compute
- 8: Solve
- 9: end procedure
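The Schur complement reduction behind the segregated approach can be sketched on a small dense system. The block signs below are an assumption: they follow the convention of Benzi et al. [155], in which the system reads $A x + B_1^{T} y = f$, $B_2 x - C y = g$ and the Schur complement is $S = -(C + B_2 A^{-1} B_1^{T})$. The explicit formation of $S$ is only reasonable for small problems, and the names `solve_linear` and `schur_solve` are hypothetical.

```python
def solve_linear(A, b):
    """Dense Gaussian elimination with partial pivoting (A and b are left unchanged)."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for k in range(n):
        p = max(range(k, n), key=lambda r: abs(M[r][k]))
        M[k], M[p] = M[p], M[k]
        for r in range(k + 1, n):
            m = M[r][k] / M[k][k]
            for c in range(k, n + 1):
                M[r][c] -= m * M[k][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

def schur_solve(A, B1, B2, C, f, g):
    """Solve [A, B1^T; B2, -C] [x; y] = [f; g] by Schur complement reduction."""
    n, m = len(A), len(C)
    w = solve_linear(A, f)                                # first solve with A: A w = f
    # Column j of Z = A^{-1} B1^T (row j of B1 is column j of B1^T)
    Z = [solve_linear(A, B1[j]) for j in range(m)]
    # Schur complement S = -(C + B2 A^{-1} B1^T), an m x m matrix
    S = [[-(C[i][j] + sum(B2[i][k] * Z[j][k] for k in range(n)))
          for j in range(m)] for i in range(m)]
    rhs = [g[i] - sum(B2[i][k] * w[k] for k in range(n)) for i in range(m)]
    y = solve_linear(S, rhs)                              # reduced system: S y = g - B2 A^{-1} f
    fx = [f[k] - sum(B1[j][k] * y[j] for j in range(m)) for k in range(n)]
    x = solve_linear(A, fx)                               # second solve with A: A x = f - B1^T y
    return x, y

# Symmetric example with B1 = B2 = B and C = 0; the data are built from x = [1, 2], y = [3].
A = [[4.0, 1.0], [1.0, 3.0]]
B = [[1.0, 2.0]]
C = [[0.0]]
x, y = schur_solve(A, B, B, C, [9.0, 13.0], [5.0])
```

The sketch calls a generic solver for every system with matrix $A$. In line with the remark on reusing a single LU factorization of $A$, a practical implementation would factor $A$ once and reuse the factors for all of these solves.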

Then, the solution of the linear system (143) by the segregated method can be performed using Algorithm 7. The linear systems at lines 4, 6, and 8 of Algorithm 7 can be solved either directly or iteratively. This approach is attractive if the order m of the reduced system at line 6 of Algorithm 7 is small and if linear systems with coefficient matrix A can be solved efficiently. Since the solution of two linear systems with coefficient matrix A is required, it is convenient to perform just one LU factorization of A and use it twice. The two main drawbacks are that A must be nonsingular and that the Schur complement S can be full, making it prohibitively expensive to compute or factor. Numerical instability can also be an issue when forming S, particularly if A is not well conditioned [155]. If S is too costly to form or factor, a Schur complement reduction can still be used by solving the related linear systems with iterative techniques such as KSM, which only require S in the form of matrix-vector products that involve the matrices , , and C, as well as the solution of a linear system with matrix A. These techniques do not require access to individual entries of S. The Schur complement system may not be well conditioned, in which case preconditioning will be necessary.

- Coupled methods Coupled methods deal with the system (143) as a whole, computing and (or approximations to them) simultaneously. Among these approaches are direct solvers based on triangular factorizations of the global matrix , as well as iterative algorithms such as KSM applied to the whole system (143), usually preconditioned in some way.

  For direct methods based on triangular factorizations of , we give a brief overview limited to the symmetric case (that is, where , and ), since no specialized direct solver exists for a nonsymmetric Saddle Point problem. Furthermore, we assume that A is positive definite and B has full rank; then the Saddle Point matrix admits the following factorization, where is a diagonal matrix and is a unit lower triangular matrix. To be more precise, A is positive definite, so its decomposition is , where is unit lower triangular and is diagonal (and also positive definite); additionally, the Schur complement is negative definite, so its decomposition is . Thus, we are able to write where . Note that . However, in practice, with the original ordering, the factors will be fairly dense, and sparsity preservation requires the use of symmetric permutations . Not every sparsity-preserving permutation is suitable, though. It has been shown that there are permutation matrices such that the factorization of , where is a diagonal matrix, is not available for . Moreover, some permutations might result in numerical instability [155].

  Iterative methods are preferred for solving the linear system (143) using coupled methods, as sparse factorization methods are not completely foolproof, especially for Saddle Point systems arising from PDE problems.

  Regarding iterative algorithms, we briefly discuss the use of preconditioned KSM for the iterative solution of the entire linear system (143). Various preconditioning strategies have been designed for (generalized) Saddle Point systems. To develop high-quality preconditioners for Saddle Point problems, one must take advantage of the problem’s block structure and possess a comprehensive understanding of the origin and structure of each block [155].

  We consider block diagonal and block triangular preconditioners for KSM applied to the coupled system as in (143). The basic block diagonal preconditioner is given by where and are approximations of A and S, respectively. Several different approximations have been considered in the literature. A fairly general framework considers a splitting of A into where D is invertible and easy to invert. Then and are chosen.

  The basic block (upper) triangular preconditioner has the form and, on the other hand, the form of a (lower) triangular preconditioner is where, as before, and are approximations of A and S, respectively.

  For the coupled solution of a linear system (143) using KSM, the availability of reasonable approximations for the block A and the Schur complement S is necessary for the development of effective block diagonal and block triangular preconditioners. Such approximations are difficult to build and rely heavily on the specific problem at hand.

For other details about the treatment of Saddle Point problems, we suggest reading the monograph by Benzi et al. [155].

6.1.3. The Discrete Model of the Navier–Stokes Equations for Viscoelastic Fluids

- , , and are vectors of basis functions (the symbol denotes the transposition operation);

- M, N, and K indicate the number of nodal points , and at which the various unknowns are defined in each element e;

- , and are vectors of unknowns into the nodal points in each element e, i.e.,

- Continuity equation

- Momentum equation

- Constitutive equation

6.2. Models at the Meso Scale

6.2.1. The Markov Chain Monte Carlo Algorithms

Algorithm 8 The MCMC Metropolis–Hastings algorithm.

6.2.2. The Cellular Particle Dynamics Algorithms

6.2.3. The Cellular Automata Model Algorithms

- A N-dimensional grid of cells where, for each of them, the set of neighborhood is defined;

- A set of ingredients, i.e., ;

- A set of rules, i.e., .

Algorithm 9 The asynchronous cellular automata algorithm.

7. Algorithms for HPC Systems in the Exascale Era

7.1. Models for Exascale Computing

Descriptions of multiphysics modeling, of associated implementation issues such as coupling or decoupling approaches, and of example applications are available in Keyes et al. [162].

“Because we strongly advocate examining coupling strength before pursuing a decoupled or split strategy for solving a multiphysics problem, we propose ‘coupled until proven decoupled’ as a perspective worthy of 21st-century simulation purposes and resources.” [162]

7.2. Parallel Solvers for Exascale Computing

- Extreme-scale computers might consist of millions of nodes with thousands of light cores or hundreds of thousands of nodes with more aggressive cores, depending on technological advancements. In any case, solvers will need to enable billion-way concurrency with small thread states [157].

- Because extreme-scale systems will be built from a vast number of components, they will probably experience frequent hardware malfunctions, which makes resilience and non-deterministic behavior central concerns. Applications should therefore anticipate a high frequency of soft errors (such as data value changes due to logic latch faults) and hard faults (device failures). Current fault-tolerance methods (those based on traditional, full checkpoint/restart) may cause significant overhead and be impractical for these systems. Solvers must therefore be equipped with methods for detecting and recovering from soft errors and interruptions, so as to limit their detrimental consequences on an application [157].

- The cost of devices designed for floating-point operations is decreasing more quickly than the cost of memory technologies. Recent system trends indicate that memory per node is already increasing modestly while memory per core is dropping. Therefore, to take advantage of parallelism, solvers will need to reduce synchronization, increase computation on local data, and shift their focus from weak scaling (using more resources to solve problems of larger sizes) to strong scaling (using more resources to solve a problem of fixed size in order to reduce the time to solution); see [163] for a definition of weak vs. strong scaling [157].

- Power consumption will be a major issue for future HPC systems. Although low-energy accelerators and other hardware design choices can lower the power required, further steps are needed to minimize energy consumption. Algorithmic research is one possible avenue [157].

- Communication- and synchronization-avoiding algorithms: At the Exascale, the expected cost of computation (also in terms of energy consumption) will be dominated by data movement from/to memory or between different memories (i.e., “communication”) rather than by the execution of floating-point operations. So, when solving huge problems on parallel architectures with extreme levels of concurrency, the most significant concern becomes the cost of communication and synchronization overheads. This is especially the case for preconditioned Krylov methods (see Section 6.1.2). New “communication-minimizing” versions of such algorithms should be investigated to address these costs (see Section 7.2.3). Likewise, such popular algorithms execute tasks that require synchronization points, for example, BLAS operations and the application of preconditioners. Additive algorithmic variants (with no synchronization among components) may therefore be more attractive than their multiplicative counterparts [164]. Such considerations are also taken into account by “time integrators” in the Exascale context (see Section 7.2.1). Furthermore, the linear solution process, which underlies the solution of almost all the problems described in the previous sections, requires constantly moving the sparse matrix entries from main memory into the CPU, without register or cache reuse. Therefore, methods that demand less memory bandwidth need to be researched. Promising linear techniques include coefficient-matrix-free representations [165], which avoid sparse matrices altogether, and Fast Multipole Methods (see Section 7.2.4) [157].

- Mixed-Precision Arithmetic Algorithms: Mixed-precision arithmetic is the use of a combination of single- and double-precision arithmetic in a calculation. Single-precision arithmetic is typically faster than double-precision arithmetic, but some computations are so susceptible to round-off errors that they cannot be completed in single precision alone; in such cases, mixed-precision arithmetic can be used. Further research is required to characterize the situations in which mixed-precision arithmetic is advantageous for a given class of algorithms, with a particular focus on the numerical behavior of this technique. In addition to speeding up problem-solving, mixed-precision arithmetic might also need less memory. When working with mixed-precision arithmetic, it is crucial to understand how round-off errors propagate and affect the accuracy of the computed values [157]. Section 7.2.3 provides examples of mixed-precision techniques for solving linear problems.

- Fault-tolerant and resilient algorithms: Allowing for nondeterminism in both data and operations is a beneficial, and perhaps essential, feature that makes fault-tolerant and robust algorithms possible. Algorithms based on pseudorandom number generators offer a multitude of possibilities at the extreme scale. Monte Carlo-like methods or, more generally, stochastic algorithms such as Cellular Automata can use stochastic replications both to find robust implementations and to improve concurrency by several orders of magnitude [157] (see Section 7.2.5). Regarding fault-tolerant algorithms, a possible solution to the problem of global synchronous checkpoint/restart on extreme-scale systems is the use of localized checkpoints and asynchronous recovery. Recent work is focused on algorithm-based fault tolerance (ABFT): for example, much of the ABFT work on linear solvers is based on the use of checksums [157,166].
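The checksum idea behind ABFT mentioned in the last item can be illustrated on a single matrix-vector product. The sketch below assumes the classic column-sum encoding: the sum of the entries of y = A x must equal the checksum row (the column sums of A) applied to x. It is only an illustration of the principle, not the scheme of [157,166]; the function names and the `fault` parameter used to inject a soft error are hypothetical.

```python
def matvec(A, x):
    """Dense matrix-vector product y = A x."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def column_checksum(A):
    """Checksum row: the sum of each column of A, computed once up front."""
    return [sum(col) for col in zip(*A)]


def checked_matvec(A, x, checksum, fault=None, tol=1e-9):
    """Return (y, ok). `fault=(index, delta)` optionally corrupts one entry of y."""
    y = matvec(A, x)
    if fault is not None:
        idx, delta = fault
        y[idx] += delta  # simulated soft error in the result
    expected = sum(c * xi for c, xi in zip(checksum, x))
    ok = abs(sum(y) - expected) <= tol * max(1.0, abs(expected))
    return y, ok


A = [[2.0, 1.0, 0.0],
     [1.0, 3.0, 1.0],
     [0.0, 1.0, 4.0]]
x = [1.0, 2.0, 3.0]
checksum = column_checksum(A)

y_clean, ok_clean = checked_matvec(A, x, checksum)
y_bad, ok_bad = checked_matvec(A, x, checksum, fault=(1, 0.5))
```

The check costs one extra dot product per multiplication, which is far cheaper than recomputing the product, but it only detects the error; locating and correcting it requires additional encoding.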

7.2.1. “Implicit-Explicit” and “Parallel in Time” Solvers

- The initial approximation vectors (see lines 5–7 of Algorithm 10) can be computed concurrently and independently of each other in an “embarrassingly parallel” approach (no communications are needed among tasks);

- The initial vectors (see lines 8–10 of Algorithm 10) can be computed by all the P tasks concurrently and independently of each other;

- In each iteration of the iterative method that is needed to refine the approximation of the vectors (see lines 11–17 of Algorithm 10), the computation of the vectors can be performed concurrently and locally to the assigned task p. To complete its computation, task p must eventually send a vector to its right neighbor task and receive a vector from its left neighbor task.

| Algorithm 10 The algorithm of the Parallel-In-Time iterative solution of ODE. |
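To make the idea of iterating over time slices concrete, the sketch below implements Parareal, a widely used parallel-in-time scheme. It is swapped in here purely as an illustration and is not claimed to be Algorithm 10; in particular, its initial coarse sweep is sequential. The test equation y' = λy, the explicit Euler propagators, and all names are assumptions of the example. The fine propagations inside each iteration are independent and would be distributed over the P tasks in a real implementation; here they run in a plain loop.

```python
def make_euler(lam, dt, n_steps):
    """Explicit Euler propagator for y' = lam * y over one time slice of length dt."""
    h = dt / n_steps

    def propagate(y):
        for _ in range(n_steps):
            y = y + h * lam * y
        return y

    return propagate


def parareal(fine, coarse, y0, n_slices, n_iter):
    """Parareal iteration; returns the approximations at the slice boundaries."""
    u = [y0]
    for n in range(n_slices):  # initial guess from a sequential coarse sweep
        u.append(coarse(u[n]))
    for _ in range(n_iter):
        # Independent per slice: this is the part that runs in parallel.
        fine_vals = [fine(u[n]) for n in range(n_slices)]
        coarse_old = [coarse(u[n]) for n in range(n_slices)]
        new = [y0]
        for n in range(n_slices):  # cheap sequential correction sweep
            new.append(coarse(new[n]) + fine_vals[n] - coarse_old[n])
        u = new
    return u


lam, T, n_slices = -1.0, 2.0, 10
dt = T / n_slices
fine = make_euler(lam, dt, 20)
coarse = make_euler(lam, dt, 1)

serial = [1.0]
for n in range(n_slices):  # reference: fine propagator applied sequentially
    serial.append(fine(serial[n]))


def max_error(n_iter):
    u = parareal(fine, coarse, 1.0, n_slices, n_iter)
    return max(abs(a - b) for a, b in zip(u, serial))
```

After k iterations the first k slice values coincide with the sequential fine solution, so the method is only worthwhile when an acceptable accuracy is reached in far fewer iterations than there are slices.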

7.2.2. Composite Nonlinear Solver

| Algorithm 11 The algorithm implementing the “Newton–Krylov methods” for the approximate solution of Equation (194). |
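The structure of a Newton–Krylov method (an outer Newton iteration whose linear systems are solved approximately by a Krylov method) can be sketched as follows. This is a minimal Jacobian-free variant under stated assumptions, not a transcription of Algorithm 11: the nonlinear system is a hypothetical test problem chosen so that its Jacobian is symmetric positive definite, which lets conjugate gradient serve as the inner Krylov solver; a general problem would typically use GMRES instead. All names and tolerances are illustrative.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def residual(x):
    """Hypothetical test problem: F(x) = A x + x^3 - 1 with A = tridiag(-1, 4, -1)."""
    n = len(x)
    out = []
    for i in range(n):
        left = x[i - 1] if i > 0 else 0.0
        right = x[i + 1] if i < n - 1 else 0.0
        out.append(4.0 * x[i] - left - right + x[i] ** 3 - 1.0)
    return out


def jac_vec(F, x, Fx, v, eps=1e-7):
    """Matrix-free Jacobian-vector product by a forward finite difference."""
    norm_v = math.sqrt(dot(v, v))
    if norm_v == 0.0:
        return [0.0] * len(v)
    h = eps / norm_v  # scale the perturbation to the size of v
    xp = [xi + h * vi for xi, vi in zip(x, v)]
    return [(a - b) / h for a, b in zip(F(xp), Fx)]


def cg(apply_op, b, tol, max_iter):
    """Conjugate gradient, usable here because the Jacobian is SPD."""
    x = [0.0] * len(b)
    r = b[:]
    p = r[:]
    rr = dot(r, r)
    b_norm = math.sqrt(rr)
    for _ in range(max_iter):
        if math.sqrt(rr) <= tol * b_norm:
            break
        Ap = apply_op(p)
        alpha = rr / dot(p, Ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * ai for ri, ai in zip(r, Ap)]
        rr_new = dot(r, r)
        p = [ri + (rr_new / rr) * pi for ri, pi in zip(r, p)]
        rr = rr_new
    return x


def newton_krylov(F, x0, tol=1e-8, max_newton=20):
    x = x0[:]
    for _ in range(max_newton):
        Fx = F(x)
        if math.sqrt(dot(Fx, Fx)) < tol:
            break
        # Inexact Newton step: J(x) s = -F(x) is solved only to a loose tolerance.
        s = cg(lambda v: jac_vec(F, x, Fx, v), [-f for f in Fx], 1e-4, 50)
        x = [xi + si for xi, si in zip(x, s)]
    return x


x = newton_krylov(residual, [0.0] * 10)
res_norm = math.sqrt(dot(residual(x), residual(x)))
```

The Jacobian is never assembled: the Krylov solver only needs its action on a vector, which is what makes the approach attractive when memory bandwidth is the bottleneck.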

7.2.3. Parallel Iterative and Direct Linear Solver

- Mixed-precision dense and sparse LU factorization based on Iterative Refinement (IR);

- Mixed-Precision GMRES based on IR;

- Compressed-Basis (CB) GMRES.

- Orthonormalize against the orthonormal basis and compute ,

- Orthonormalize columns of by a QR factorization [147].

| Algorithm 12 The m-th step of block-based GMRES Method. |
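Among the mixed-precision techniques listed in this section, LU factorization with iterative refinement is the simplest to illustrate. The sketch below factorizes the matrix once in (emulated) single precision and then recovers double-precision accuracy by computing residuals in double precision and reusing the cheap low-precision factors for the corrections. Several simplifications are assumed: single precision is emulated by rounding through `struct`, the factorization has no pivoting (so the example matrix is diagonally dominant), and all names are hypothetical.

```python
import struct


def f32(x):
    """Round a Python float (double) to the nearest IEEE single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def lu_single(A):
    """LU factorization without pivoting, every operation rounded to single."""
    n = len(A)
    LU = [[f32(v) for v in row] for row in A]
    for k in range(n):
        for i in range(k + 1, n):
            LU[i][k] = f32(LU[i][k] / LU[k][k])
            for j in range(k + 1, n):
                LU[i][j] = f32(LU[i][j] - f32(LU[i][k] * LU[k][j]))
    return LU


def solve_single(LU, b):
    """Forward and backward substitution with the single-precision factors."""
    n = len(b)
    y = [f32(v) for v in b]
    for i in range(n):  # unit lower triangular solve
        for j in range(i):
            y[i] = f32(y[i] - f32(LU[i][j] * y[j]))
    for i in reversed(range(n)):  # upper triangular solve
        for j in range(i + 1, n):
            y[i] = f32(y[i] - f32(LU[i][j] * y[j]))
        y[i] = f32(y[i] / LU[i][i])
    return y


def residual(A, x, b):
    """Residual b - A x accumulated in double precision."""
    return [bi - sum(a * xi for a, xi in zip(row, x)) for row, bi in zip(A, b)]


def iterative_refinement(A, b, n_iter=5):
    LU = lu_single(A)  # expensive step, done once in low precision
    x = solve_single(LU, b)
    history = [max(abs(r) for r in residual(A, x, b))]
    for _ in range(n_iter):
        r = residual(A, x, b)  # cheap step, done in high precision
        d = solve_single(LU, r)
        x = [xi + di for xi, di in zip(x, d)]
        history.append(max(abs(v) for v in residual(A, x, b)))
    return x, history


A = [[4.0, 1.0, 0.0],
     [1.0, 4.0, 1.0],
     [0.0, 1.0, 4.0]]
b = [1.0, 2.0, 3.0]
x, history = iterative_refinement(A, b)
```

The list `history` records the largest residual entry after each refinement step, which makes it easy to see how quickly the low-precision solution is corrected for a well-conditioned matrix.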

7.2.4. Fast Multipole Methods and “Hierarchical” Matrices

- Each node of is a subset of the index set I;

- I is the root of (i.e., the node at the 0-th level of );

- If is a leaf (i.e, a node at the L-th level of ), then ;

- If is not a leaf whose set of sons is represented by , then and
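A tree with the properties listed above can be built by recursively bisecting the index set. The sketch below is one simple construction, assuming the usual definition in which every non-leaf node is the disjoint union of its sons and leaves are non-empty; the dictionary layout, the `leaf_size` stopping criterion, and the function names are hypothetical choices for the example.

```python
def build_cluster_tree(indices, leaf_size, level=0):
    """Recursively bisect an index set; each node stores its indices and sons."""
    node = {"indices": indices, "level": level, "sons": []}
    if len(indices) > leaf_size:
        mid = len(indices) // 2
        node["sons"] = [
            build_cluster_tree(indices[:mid], leaf_size, level + 1),
            build_cluster_tree(indices[mid:], leaf_size, level + 1),
        ]
    return node


def check_cluster_tree(node):
    """Verify that every non-leaf node is the disjoint union of its sons."""
    if not node["sons"]:
        return len(node["indices"]) > 0  # leaves must be non-empty
    merged = [i for son in node["sons"] for i in son["indices"]]
    disjoint = len(merged) == len(set(merged))
    covers = sorted(merged) == sorted(node["indices"])
    return disjoint and covers and all(check_cluster_tree(s) for s in node["sons"])


def leaves(node):
    """Collect the index sets stored at the leaves, left to right."""
    if not node["sons"]:
        return [node["indices"]]
    return [leaf for son in node["sons"] for leaf in leaves(son)]


I = list(range(16))
tree = build_cluster_tree(I, leaf_size=2)
leaf_sets = leaves(tree)
```

In practice the bisection is usually geometric (splitting the points associated with the indices along a coordinate direction) rather than by position in a list, so that clusters correspond to spatially compact groups.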

| Algorithm 13 Procedure for building the block quad-tree corresponding to a cluster tree and an admissibility condition . The index set and the value l to be used in the first call to the recursive BlockClusterQuadTree procedure are such that . |

| Algorithm 14 Matrix-vector multiplication of the -matrix (defined on block cluster quad-tree ) with vector . The index sets to be used in the first call to the recursive HMatrix-MVM procedure are such that . |

7.2.5. Parallel Monte Carlo Based Methods

- Communication- and synchronization-avoiding: To implement parallelism in an MC-like algorithm, the so-called dynamic bag-of-work model can be used [191]. With this strategy, a large task, related to the computation of M states, is split into P smaller independent subtasks, each of which computes M/P states; all P subtasks are executed independently of each other in an “embarrassingly parallel” approach.

- Fault-Tolerant and Resilient: To make an MC-like algorithm resilient to hard failures, a P-out-of-Q strategy can be used [191]. Since the accuracy of MC-based applications depends only on the number M of computed states and not on which random sample set is estimated (provided that all the random samples are statistically independent), the actual size of the computation is obtained by increasing the number of subtasks from P to Q, where Q > P. The whole task is considered complete when P partial results, each from a subtask of M/P states, are ready. In the P-out-of-Q approach, more subtasks are scheduled than are actually needed. Therefore, none of these subtasks becomes a “key” subtask, and at most Q − P faulted subtasks can be tolerated. The critical aspect is the choice of Q: a “good” value prevents a few subtasks from delaying or halting the whole computation, whereas an excessive value results in a much higher workload with little gain in the outcome. A proper Q can be determined from the average job-completion rate of the computing system. Suppose c is the probability that a subtask completes by time t. Then c·Q should be approximately P, i.e., the expected number of the Q subtasks finished by time t should equal P. Thus, a good choice is Q = ⌈P/c⌉ [191].

  To make an MC-like algorithm resilient to a soft fault causing an error in the computation, for example, due to a change in a data value in memory, strategies such as “duplicate checking” or “majority vote” [191] could be considered. In these strategies, subtasks are replicated and executed on separate nodes. Comparing the outcomes of the same subtask carried out on different nodes can help identify inaccurate computational results (e.g., by ensuring that the results fall within a suitable confidence interval). Duplicate checking detects an incorrect result at the cost of doubling the computation, whereas a majority vote requires at least three times as much computation.
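The choice of Q and the majority-vote check described above can be sketched in a few lines. The sketch assumes that c is known in advance and that replicas of a subtask use the same random stream, so that correct results agree exactly; with independent streams the comparison would instead be made against a confidence interval, as noted in the text. The function names are hypothetical.

```python
import math
from collections import Counter


def choose_q(p_needed, completion_prob):
    """Smallest Q with completion_prob * Q >= P, i.e. Q = ceil(P / c)."""
    if not 0.0 < completion_prob <= 1.0:
        raise ValueError("completion probability must lie in (0, 1]")
    return math.ceil(p_needed / completion_prob)


def majority_vote(results):
    """Return the value reported by a strict majority of replicas, else None."""
    value, count = Counter(results).most_common(1)[0]
    return value if count > len(results) / 2 else None


# P = 90 partial results are needed and a subtask completes with probability 0.75.
q = choose_q(p_needed=90, completion_prob=0.75)
tolerated_failures = q - 90

# Three replicas of the same subtask; one of them suffered a soft error.
voted = majority_vote([3.14, 3.14, 2.71])
undecided = majority_vote([1.0, 2.0, 3.0])
```

With three replicas a single corrupted result is outvoted, which is why the majority vote needs at least three times the computation, while two replicas can only reveal that a disagreement exists.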

8. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- D’Amora, U.; Ronca, A.; Scialla, S.; Soriente, A.; Manini, P.; Phua, J.W.; Ottenheim, C.; Pezzella, A.; Calabrese, G.; Raucci, M.G.; et al. Bioactive Composite Methacrylated Gellan Gum for 3D-Printed Bone Tissue-Engineered Scaffolds. Nanomaterials 2023, 13, 772.

- D’Amora, U.; Soriente, A.; Ronca, A.; Scialla, S.; Perrella, M.; Manini, P.; Phua, J.W.; Ottenheim, C.; Di Girolamo, R.; Pezzella, A.; et al. Eumelanin from the Black Soldier Fly as Sustainable Biomaterial: Characterisation and Functional Benefits in Tissue-Engineered Composite Scaffolds. Biomedicines 2022, 10, 2945.

- Ferroni, L.; Gardin, C.; D’Amora, U.; Calzà, L.; Ronca, A.; Tremoli, E.; Ambrosio, L.; Zavan, B. Exosomes of mesenchymal stem cells delivered from methacrylated hyaluronic acid patch improve the regenerative properties of endothelial and dermal cells. Biomater. Adv. 2022, 139, 213000.

- Zhang, L.; D’Amora, U.; Ronca, A.; Li, Y.; Mo, X.; Zhou, F.; Yuan, M.; Ambrosio, L.; Wu, J.; Raucci, M.G. In vitro and in vivo biocompatibility and inflammation response of methacrylated and maleated hyaluronic acid for wound healing. RSC Adv. 2020, 10, 32183–32192.

- Arjoca, S.; Robu, A.; Neagu, M.; Neagu, A. Mathematical and computational models in spheroid-based biofabrication. Acta Biomater. 2023, 165, 125–139.

- Szychlinska, M.A.; Bucchieri, F.; Fucarino, A.; Ronca, A.; D’Amora, U. Three-dimensional bioprinting for cartilage tissue engineering: Insights into naturally-derived bioinks from land and marine sources. J. Funct. Biomater. 2022, 13, 118.

- Lepowsky, E.; Muradoglu, M.; Tasoglu, S. Towards preserving post-printing cell viability and improving the resolution: Past, present, and future of 3D bioprinting theory. Bioprinting 2018, 11, e00034.

- Bradley, W.; Kim, J.; Kilwein, Z.; Blakely, L.; Eydenberg, M.; Jalvin, J.; Laird, C.; Boukouvala, F. Perspectives on the integration between first-principles and data-driven modeling. Comput. Chem. Eng. 2022, 166, 107898.

- Kovalchuk, S.V.; de Mulatier, C.; Krzhizhanovskaya, V.V.; Mikyška, J.; Paszyński, M.; Dongarra, J.; Sloot, P.M. Computation at the Cutting Edge of Science. J. Comput. Sci. 2024, 102379.

- Naghieh, S.; Chen, X. Printability—A key issue in extrusion-based bioprinting. J. Pharm. Anal. 2021, 11, 564–579.

- Gómez-Blanco, J.C.; Mancha-Sànchez, E.; Marcos, A.C.; Matamoros, M.; Dìaz-Parralejo, A.; Pagador, J.B. Bioink Temperature Influence on Shear Stress, Pressure and Velocity Using Computational Simulation. Processes 2020, 8, 865.

- Karvinen, J.; Kellomaki, M. Design aspects and characterization of hydrogel-based bioinks for extrusion-based bioprinting. Bioprinting 2023, 32, e00274.

- Carlier, A.; Skvortsov, G.A.; Hafezi, F.; Ferraris, E.; Patterson, J.; Koç, B.; Oosterwyck, H.V. Computational model-informed design and bioprinting of cell-patterned constructs for bone tissue engineering. Biofabrication 2016, 8, 025009.

- Hull, S.M.; Brunel, L.G.; Heilshorn, S.C. 3D Bioprinting of Cell-Laden Hydrogels for Improved Biological Functionality. Adv. Mater. 2021, 34, 2103691.