Symbol Detection in Mechanical Engineering Sketches: Experimental Study on Principle Sketches with Synthetic Data Generation and Deep Learning

Abstract

1. Introduction

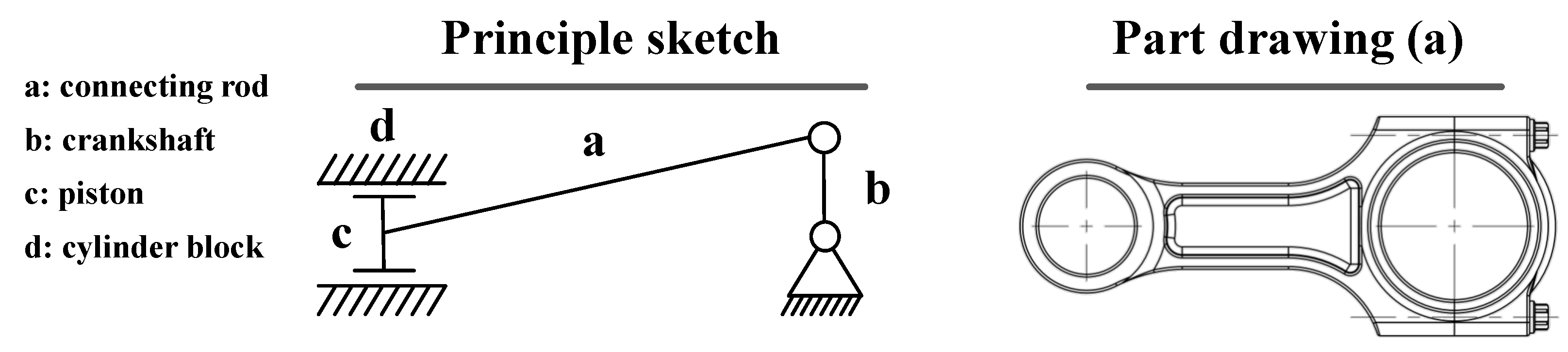

Principle Sketches

2. Related Work

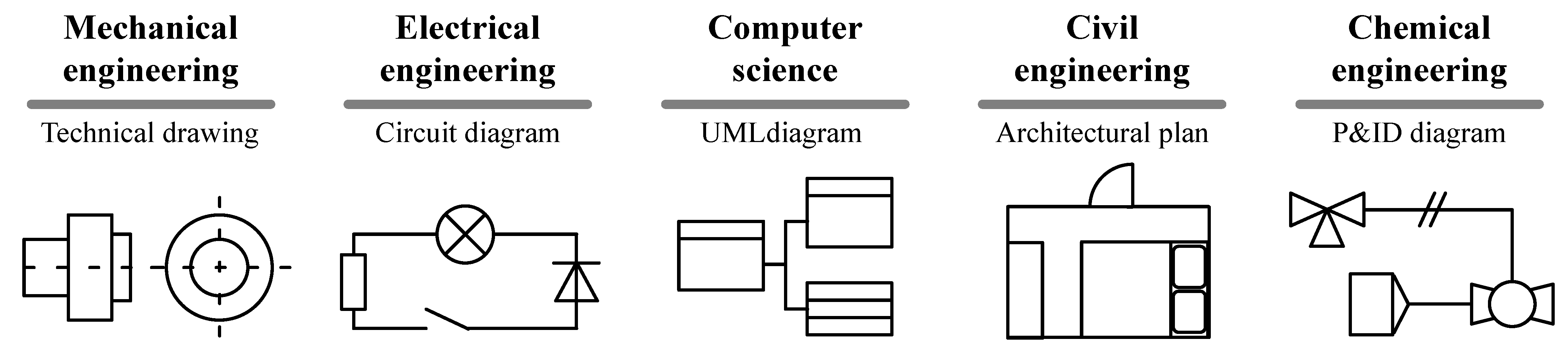

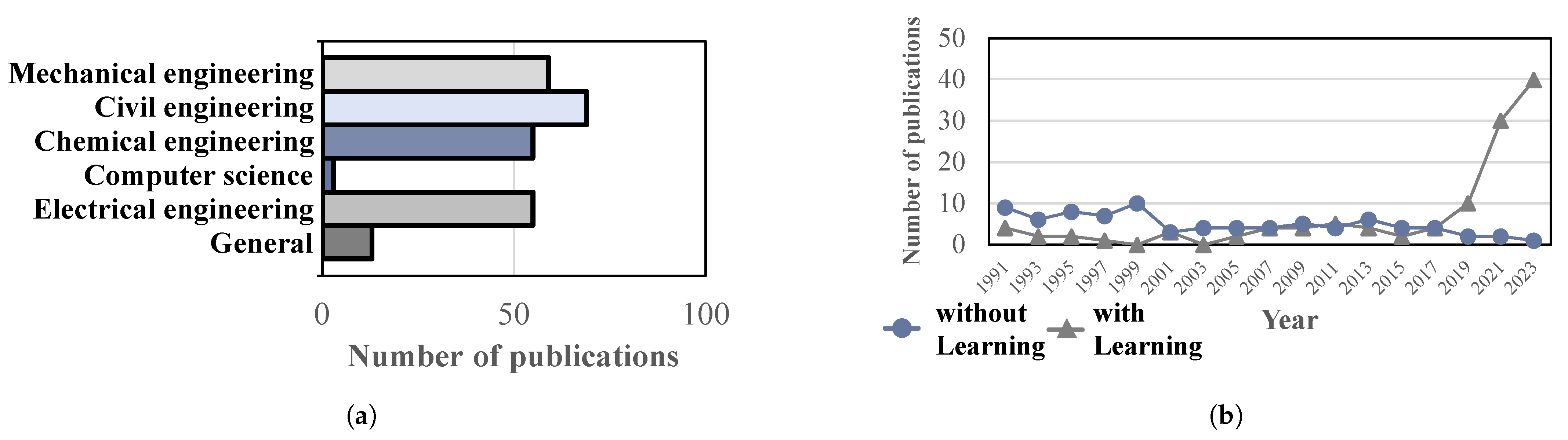

2.1. Sketch Detection

2.2. Object Detection Models

2.3. Summary and Conclusion

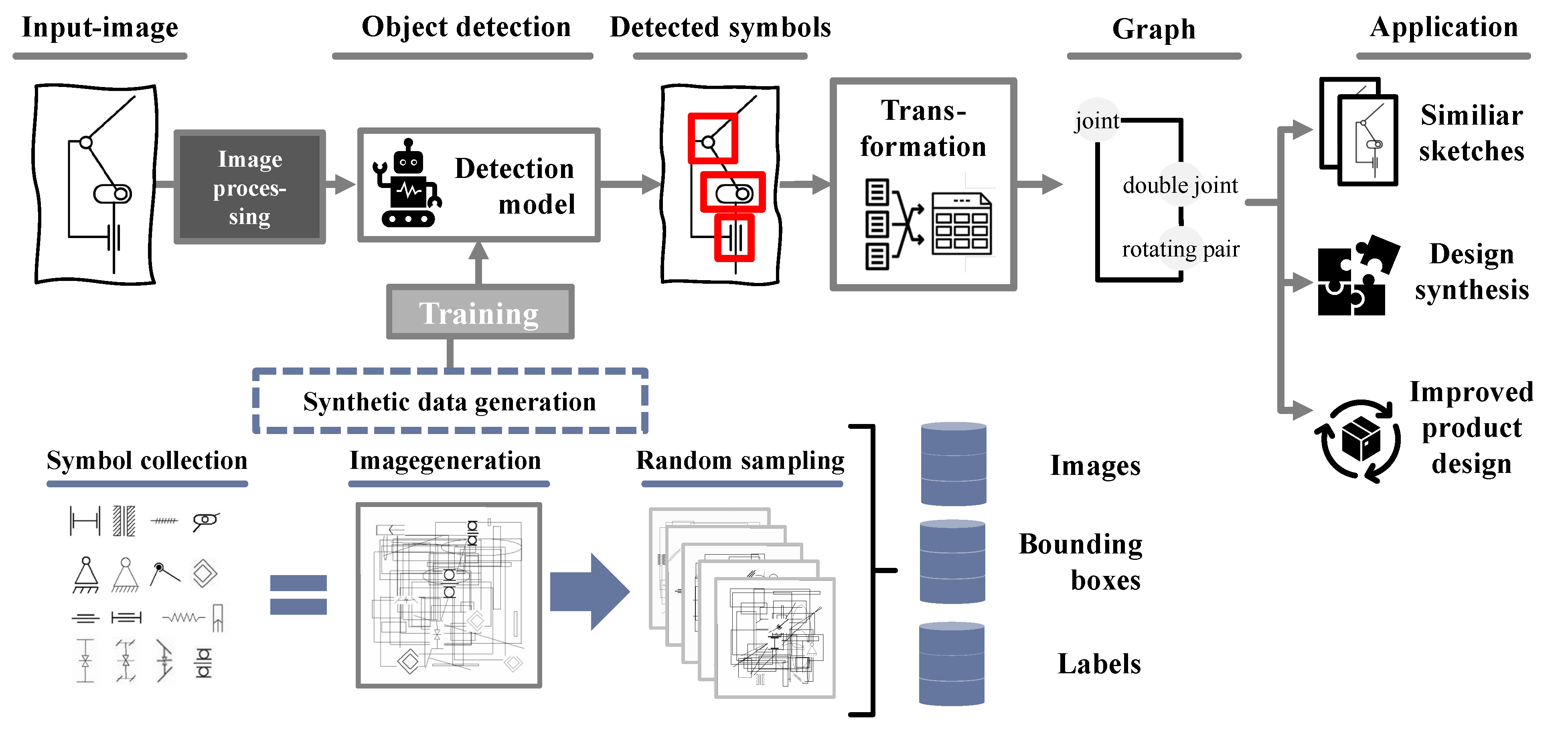

3. Method

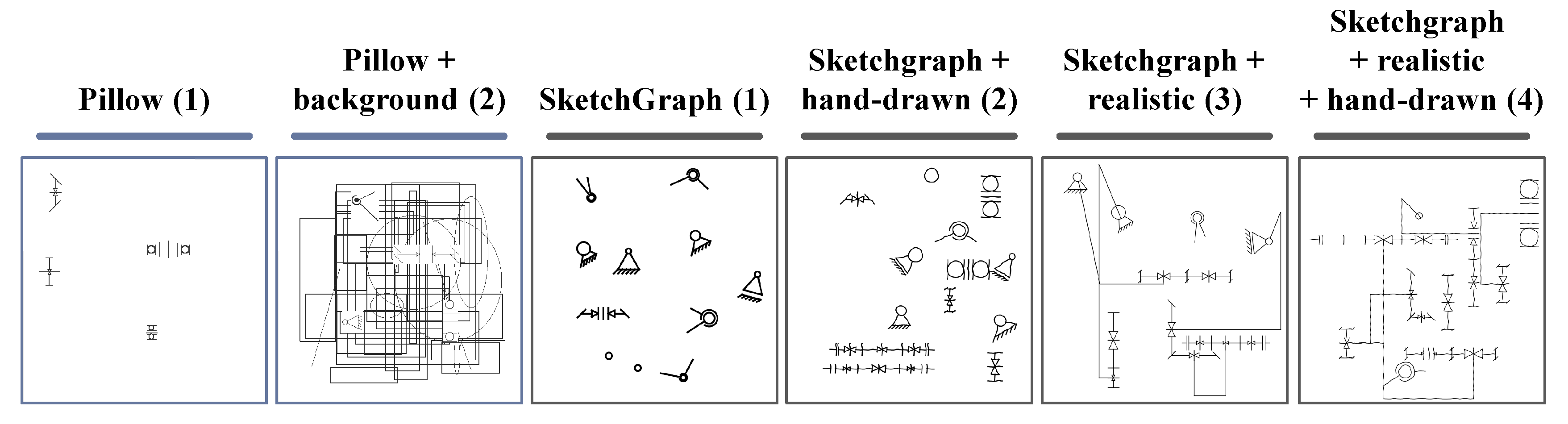

3.1. Data Generation

3.1.1. Pillow

Algorithm 1: Overview of the data generation algorithm with the Pillow package
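The body of Algorithm 1 is not reproduced in this section, so the following is only a minimal sketch of how synthetic training images with bounding box labels could be generated with Pillow. The two category names are taken from Appendix B; the glyph shapes, the image size, the overlap rule, and the function names (`draw_bearing`, `draw_gear`, `generate_sample`) are illustrative assumptions, not the authors' procedure.

```python
import random
from PIL import Image, ImageDraw

# Placeholder glyphs (assumption): real principle-sketch symbols look different.
def draw_bearing(draw, box):
    x0, y0, x1, y1 = box
    draw.rectangle(box, outline=0, width=2)
    draw.line([(x0, y0), (x1, y1)], fill=0, width=2)
    draw.line([(x0, y1), (x1, y0)], fill=0, width=2)

def draw_gear(draw, box):
    x0, y0, x1, y1 = box
    cx = (x0 + x1) // 2
    draw.rectangle(box, outline=0, width=2)
    draw.line([(cx, y0), (cx, y1)], fill=0, width=2)

SYMBOLS = {"general bearing": draw_bearing, "straight gear": draw_gear}

def overlaps(a, b):
    """True if two (x0, y0, x1, y1) boxes share any area."""
    return not (a[2] <= b[0] or b[2] <= a[0] or a[3] <= b[1] or b[3] <= a[1])

def generate_sample(size=(512, 512), n_symbols=4, seed=None, max_tries=100):
    """Draw up to n_symbols non-overlapping glyphs; return image and labels."""
    rng = random.Random(seed)
    image = Image.new("L", size, color=255)  # white grayscale canvas
    draw = ImageDraw.Draw(image)
    annotations = []
    for _ in range(n_symbols):
        for _ in range(max_tries):
            w, h = rng.randint(30, 80), rng.randint(30, 80)
            x0 = rng.randint(0, size[0] - w - 1)
            y0 = rng.randint(0, size[1] - h - 1)
            box = (x0, y0, x0 + w, y0 + h)
            if all(not overlaps(box, a["bbox_xyxy"]) for a in annotations):
                break
        else:
            continue  # no free position found; skip this symbol
        label = rng.choice(sorted(SYMBOLS))
        SYMBOLS[label](draw, box)
        annotations.append({"category": label, "bbox_xyxy": box})
    return image, annotations
```

Calling `generate_sample(seed=0)` returns a Pillow image and a list of label dictionaries; fixing the seed makes a sample reproducible, which helps when regenerating a dataset.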

3.1.2. SketchGraph

Algorithm 2: Overview of the data generation algorithm with the SketchGraph package and the Onshape API

4. Experiments

4.1. Training Datasets and Evaluation Metrics
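Detection metrics such as mAP (see Abbreviations) are conventionally built on the intersection over union (IoU) between a predicted and a ground-truth bounding box. The sketch below assumes boxes in `(x_min, y_min, x_max, y_max)` format; the box format and any IoU threshold used in this study are not stated in this section, so both are assumptions here.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ax0, ay0, ax1, ay1 = box_a
    bx0, by0, bx1, by1 = box_b
    # Width and height of the overlap region, clamped at zero when disjoint.
    inter_w = max(0.0, min(ax1, bx1) - max(ax0, bx0))
    inter_h = max(0.0, min(ay1, by1) - max(ay0, by0))
    inter = inter_w * inter_h
    union = (ax1 - ax0) * (ay1 - ay0) + (bx1 - bx0) * (by1 - by0) - inter
    return inter / union if union > 0 else 0.0
```

A prediction is typically counted as a true positive when its IoU with a ground-truth box of the same category exceeds a chosen threshold.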

4.2. Implementation

4.3. Results—Gear Stages Dataset

4.4. Results—Unknown Assemblies

4.5. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| AI | Artificial Intelligence |

| BB | Bounding Box |

| CAD | Computer-Aided Design |

| CNN | Convolutional Neural Network |

| COCO | Common Objects in Context |

| mAP | Mean Average Precision |

| OCR | Optical Character Recognition |

| RCNN | Region-based Convolutional Neural Network |

| RPN | Region Proposal Network |

| SG | SketchGraph |

| STEP | STandard for the Exchange of Product model data |

| YOLO | You Only Look Once |

Appendix A. Citation Numbers—Object Detection

| Name | Reference | Citation Number |

|---|---|---|

| Faster RCNN | [15] | 73,707 |

| YOLO: You Only Look Once | [11] | 46,768 |

| SSD: Single Shot MultiBox Detector | [62] | 37,166 |

| RCNN | [12] | 36,358 |

| Mask RCNN | [16] | 34,912 |

| Fast RCNN | [14] | 33,608 |

| RetinaNet | [63] | 28,943 |

| FPN: Feature Pyramid Network | [64] | 25,464 |

| DETR | [65] | 11,003 |

| CornerNet | [66] | 4,241 |

Appendix B. Dataset Instance—Overview

| Category | Instances | Category | Instances |

|---|---|---|---|

| bevel gear | 36 | general bearing | 216 |

| bevel gear hollow shaft | 0 | general bearing hollow shaft | 0 |

| ball joint | 0 | swivel joint | 0 |

| planetary gear | 0 | straight gear | 82 |

| planetary gear hollow shaft | 0 | straight gear hollow shaft | 0 |

| fixed bearing | 0 | helical gear | 54 |

| floating bearing | 0 | helical gear hollow shaft | 0 |

| Category | Instances | Category | Instances |

|---|---|---|---|

| bevel gear | 3 | general bearing | 117 |

| bevel gear hollow shaft | 1 | general bearing hollow shaft | 17 |

| ball joint | 0 | swivel joint | 21 |

| planetary gear | 28 | straight gear | 45 |

| planetary gear hollow shaft | 0 | straight gear hollow shaft | 0 |

| fixed bearing | 5 | helical gear | 5 |

| floating bearing | 4 | helical gear hollow shaft | 0 |

Appendix C. Literature Study—Overview

| Source | Year | Title | Engineering Domain | Process Step | Learning Technique | Dataset |

|---|---|---|---|---|---|---|

| [67] | 1979 | A Threshold Selection Method from Gray-Level Histograms | general | preprocessing | without Learning | own |

| [68] | 1990 | A system for interpretation of line drawings | general | detection | without Learning | own |

| [69] | 1994 | Precise line detection from an engineering drawing using a figure fitting method based on contours and skeletons | general | preprocessing | without Learning | own |

| [70] | 1994 | Skeleton generation of engineering drawings via contour matching | general | preprocessing | without Learning | - |

| [71] | 1994 | Finding arrows in utility maps using a neural network | general | detection | with Learning | own |

| [72] | 1996 | Automatic learning and recognition of graphical symbols in engineering drawings | general | detection | without Learning | own |

| [73] | 1998 | A new algorithm for line image vectorization | general | preprocessing | without Learning | - |

| [74] | 2000 | Adaptive document image binarization | general | preprocessing | without Learning | own |

| [75] | 2006 | Robust and accurate vectorization of line drawings | general | preprocessing | without Learning | own |

| [76] | 2012 | Multi-Level Block Information Extraction in Engineering Drawings Based on Depth-First Algorithm | general | detection + contextualization | without Learning | own |

| [77] | 2017 | Adaptive document image skew estimation | general | preprocessing | with Learning | own |

| [78] | 2019 | Anchor Point based Hough Transformation for Automatic Line Detection of Engineering Drawings | general | preprocessing | without Learning | own |

| [79] | 2020 | Deep Vectorization of Technical Drawings | general | preprocessing | with Learning | open source |

| [80] | 1993 | Management of graphical symbols in a CAD environment: A neural network approach | civil | detection | with Learning | own |

| [81] | 1994 | Symbol recognition in a CAD environment using a neural network | civil | detection | with Learning | own |

| [82] | 1997 | A system to understand hand-drawn floor plans using subgraph isomorphism and Hough transform | civil | detection | without Learning | own |

| [83] | 1998 | A constraint network for symbol detection in architectural drawings | civil | detection | without Learning | own |

| [84] | 2000 | A complete system for the analysis of architectural drawings | civil | detection + contextualization | without Learning | own |

| [85] | 2001 | Architectural symbol recognition using a network of constraints | civil | detection | without Learning | own |

| [86] | 2001 | Symbol recognition by error-tolerant subgraph matching between region adjacency graphs | civil | detection | without Learning | own |

| [87] | 2002 | An object-oriented progressive-simplification-based vectorization system for engineering drawings: model, algorithm, and performance | civil | preprocessing + detection | without Learning | own |

| [88] | 2003 | A model for image generation and symbol recognition through the deformation of lineal shapes | civil | detection | without Learning | synthetic |

| [89] | 2005 | Using engineering drawing interpretation for automatic detection of version information in CADD engineering drawing | civil | detection | without Learning | own |

| [90] | 2007 | Automatic analysis and integration of architectural drawings | civil | detection + contextualization | without Learning | own |

| [91] | 2008 | Knowledge Extraction from Structured Engineering Drawings | civil | detection | without Learning | own |

| [92] | 2009 | Symbol Detection Using Region Adjacency Graphs and Integer Linear Programming | civil | detection | without Learning | open source |

| [10] | 2010 | Generation of synthetic documents for performance evaluation of symbol recognition & spotting systems | civil | detection | with Learning | synthetic |

| [93] | 2010 | Symbol spotting in vectorized technical drawings through a lookup table of region strings | civil | detection | without Learning | own |

| [94] | 2011 | Statistical Grouping for Segmenting Symbols Parts from Line Drawings, with Application to Symbol Spotting | civil | detection | without Learning | open source |

| [95] | 2012 | Object recognition in floor plans by graphs of white connected components | civil | detection | without Learning | own + open source |

| [96] | 2013 | Geometric-based symbol spotting and retrieval in technical line drawings | civil | detection | with Learning | own + open source |

| [97] | 2013 | Building a Symbol Library from Technical Drawings by Identifying Repeating Patterns | civil | detection | with Learning | open source |

| [98] | 2013 | Combining geometric matching with SVM to improve symbol spotting | civil | detection | with Learning | open source |

| [99] | 2013 | Efficient symbol retrieval by building a symbol index from a collection of line drawings | civil | detection | with Learning | open source |

| [100] | 2013 | A symbol spotting approach in graphical documents by hashing serialized graphs | civil | detection | without Learning | open source |

| [101] | 2014 | Data Extraction from DXF File and Visual Display | civil | detection + contextualization | without Learning | own |

| [102] | 2016 | Automatic Hyperlinking of Engineering Drawing Documents | civil | detection | without Learning | own |

| [103] | 2017 | Graph-Based Deep Learning for Graphics Classification | civil | detection | with Learning | open source |

| [104] | 2018 | Extraction of Ancient Map Contents Using Trees of Connected Components | civil | detection | without Learning | own |

| [105] | 2018 | Object Detection in Floor Plan Images | civil | detection | with Learning | own |

| [106] | 2019 | Graph Neural Network for Symbol Detection on Document Images | civil | detection | with Learning | open source |

| [18] | 2019 | Symbol spotting for architectural drawings: state-of-the-art and new industry-driven developments | civil | detection | with Learning | own |

| [9] | 2019 | BRIDGE: Building Plan Repository for Image Description Generation, and Evaluation | civil | detection | with Learning | open source |

| [17] | 2020 | Symbol Spotting on Digital Architectural Floor Plans Using a Deep Learning-based Framework | civil | detection | with Learning | own + open source |

| [21] | 2020 | Floor Plan Recognition and Vectorization Using Combination UNet, Faster-RCNN, Statistical Component Analysis and Ramer-Douglas-Peucker | civil | detection | with Learning | own |

| [107] | 2021 | Knowledge-driven description synthesis for floor plan interpretation | civil | detection + contextualization | with Learning | open source |

| [25] | 2021 | Fine Grained Feature Representation Using Computer Vision Techniques for Understanding Indoor Space | civil | detection | with Learning | open source |

| [108] | 2021 | FloorPlanCAD: A Large-Scale CAD Drawing Dataset for Panoptic Symbol Spotting | civil | detection | with Learning | open source |

| [23] | 2021 | Towards Robust Object Detection in Floor Plan Images: A Data Augmentation Approach | civil | detection | with Learning | open source |

| [109] | 2021 | PU learning-based recognition of structural elements in architectural floor plans | civil | detection | with Learning | own + open source |

| [110] | 2021 | 3DPlanNet: Generating 3D Models from 2D Floor Plan Images Using Ensemble Methods | civil | detection + contextualization | with Learning | open source |

| [22] | 2022 | Automatic Detection and Classification of Symbols in Engineering Drawings | civil | detection | with Learning | own |

| [111] | 2022 | CADTransformer: Panoptic Symbol Spotting Transformer for CAD Drawings | civil | detection | with Learning | open source |

| [112] | 2022 | GAT-CADNet: Graph Attention Network for Panoptic Symbol Spotting in CAD Drawings | civil | detection | with Learning | open source |

| [24] | 2022 | Mask-Aware Semi-Supervised Object Detection in Floor Plans | civil | detection | with Learning | open source |

| [113] | 2022 | Designing a Human-in-the-Loop System for Object Detection in Floor Plans | civil | detection | with Learning | synthetic |

| [114] | 2023 | Digitalization of 2D Bridge Drawings Using Deep Learning Models | civil | detection + contextualization | with Learning | own + synthetic |

| [115] | 2023 | Improving Symbol Detection on Engineering Drawings Using a Keypoint-Based Deep Learning Approach | civil | detection | with Learning | synthetic |

| [116] | 2023 | You Only Look for a Symbol Once: An Object Detector for Symbols and Regions in Documents | civil | detection | with Learning | open source |

| [20] | 2023 | Leveraging Deep Convolutional Neural Network for Point Symbol Recognition in Scanned Topographic Maps | civil | detection | with Learning | own |

| [19] | 2023 | Towards Automatic Digitalization of Railway Engineering Schematics | civil | detection | with Learning | own |

| [117] | 2024 | Deep learning-based text detection and recognition on architectural floor plans | civil | detection | with Learning | open source + synthetic |

| [118] | 1997 | Adaptive Vectorization of Line Drawing Images | civil + electrical | preprocessing | without Learning | own |

| [119] | 2005 | Symbol recognition via statistical integration of pixel-level constraint histograms: a new descriptor | civil + electrical | detection | with Learning | open source |

| [120] | 2006 | Symbol recognition with kernel density matching | civil + electrical | detection | with Learning | open source |

| [121] | 2006 | Symbol Spotting in Technical Drawings Using Vectorial Signatures | civil + electrical | detection | without Learning | open source |

| [122] | 2007 | A Bayesian classifier for symbol recognition | civil + electrical | detection | with Learning | open source |

| [123] | 2007 | A New Syntactic Approach to Graphic Symbol Recognition | civil + electrical | detection | without Learning | open source |

| [124] | 2008 | On the Combination of Ridgelets Descriptors for Symbol Recognition | civil + electrical | detection | with Learning | open source |

| [125] | 2009 | Graphic Symbol Recognition Using Graph Based Signature and Bayesian Network Classifier | civil + electrical | detection | with Learning | own |

| [126] | 2010 | A Bayesian network for combining descriptors: application to symbol recognition | civil + electrical | detection | with Learning | open source |

| [127] | 2011 | A New Adaptive Structural Signature for Symbol Recognition by Using a Galois Lattice as a Classifier | civil + electrical | detection | with Learning | open source |

| [128] | 2019 | GSD-Net: Compact Network for Pixel-Level Graphical Symbol Detection | civil + electrical | detection | with Learning | open source |

| [129] | 2002 | TIF2VEC, An Algorithm for Arc Segmentation in Engineering Drawings | civil + electrical + mechanical | preprocessing | without Learning | open source |

| [130] | 2014 | BoR: Bag-of-Relations for Symbol Retrieval | civil + electrical + P&ID | detection | without Learning | open source |

| [131] | 2013 | Img2UML: A System for Extracting UML Models from Images | computer science | detection + contextualization | without Learning | own |

| [132] | 2014 | Automatic Classification of UML Class Diagrams from Images | computer science | detection | with Learning | own |

| [133] | 2021 | Multiclass Classification of UML Diagrams from Images Using Deep Learning | computer science | detection | with Learning | own |

| [134] | 1982 | Automatic Interpretation of Lines and Text in Circuit Diagrams | electrical | detection + contextualization | without Learning | - |

| [135] | 1985 | Symbol recognition in electrical diagrams using probabilistic graph matching | electrical | detection | with Learning | own |

| [136] | 1988 | An automatic circuit diagram reader with loop-structure-based symbol recognition | electrical | detection | with Learning | open source |

| [137] | 1988 | A topology-based component extractor for understanding electronic circuit diagrams | electrical | detection | with Learning | own |

| [138] | 1990 | Translation-, rotation- and scale-invariant recognition of hand-drawn symbols in schematic diagrams | electrical | detection | with Learning | own |

| [139] | 1992 | Recognizing Hand-Drawn Electrical Circuit Symbols with Attributed Graph Matching | electrical | | | |

| [140] | 1993 | Recognition of logic diagrams by identifying loops and rectilinear polylines | electrical | detection | without Learning | own |

| [141] | 1993 | A symbol recognition system | electrical | detection | with Learning | own |

| [142] | 1993 | A new system for the analysis of schematic diagrams | electrical | detection | without Learning | own |

| [143] | 1995 | Automatic understanding of symbol-connected diagrams | electrical | contextualization | without Learning | own |

| [144] | 2003 | Engineering drawings recognition using a case-based approach | electrical | detection | without Learning | own |

| [145] | 2003 | Symbol recognition in electronic diagrams using decision tree | electrical | detection | without Learning | own |

| [146] | 2004 | Extracting System-Level Understanding from Wiring Diagram Manuals | electrical | detection | without Learning | own |

| [147] | 2009 | A visual approach to sketched symbol recognition | electrical | detection | without Learning | own |

| [148] | 2009 | On-line hand-drawn electric circuit diagram recognition using 2D dynamic programming | electrical | detection | with Learning | own |

| [149] | 2011 | Recognition of electrical symbols in document images using morphology and geometric analysis | electrical | detection | without Learning | own |

| [150] | 2012 | Symbol recognition using spatial relations | electrical | detection | without Learning | own |

| [151] | 1995 | Electronic Schematic Recognition | electrical | detection + contextualization | without Learning | own |

| [152] | 2015 | Detection and identification of logic gates from document images using mathematical morphology | electrical | detection | with Learning | own |

| [153] | 2016 | Hand Drawn Optical Circuit Recognition | electrical | detection | with Learning | own |

| [154] | 2017 | Recognizing Electronic Circuits to Enrich Web Documents for Electronic Simulation | electrical | detection + contextualization | with Learning | own |

| [155] | 2018 | Analysis of methods for automated symbol recognition in technical drawings | electrical | detection | with Learning | own |

| [156] | 2019 | Automatic Abstraction of Combinational Logic Circuit from Scanned Document Page Images | electrical | contextualization | with Learning | own |

| [157] | 2020 | CIM/G graphics automatic generation in substation primary wiring diagram based on image recognition | electrical | detection | with Learning | own |

| [35] | 2021 | Graph-Based Object Detection Enhancement for Symbolic Engineering Drawings | electrical | contextualization | with Learning | own |

| [158] | 2021 | A public ground-truth dataset for handwritten circuit diagram images | electrical | detection | with Learning | open source |

| [159] | 2022 | Substation One-Line Diagram Automatic Generation Based On Image Recognition | electrical | detection + contextualization | with Learning | own |

| [160] | 2022 | Symbol Spotting in Electronic Images Using Morphological Filters and Hough Transform | electrical | detection | without Learning | open source |

| [161] | 2023 | Instance segmentation based graph extraction for handwritten circuit diagram images | electrical | detection | with Learning | open source |

| [162] | 2023 | ElectroNet: An Enhanced Model for Small-Scale Object Detection in Electrical Schematic Diagrams | electrical | detection + contextualization | with Learning | own |

| [163] | 2023 | Single Line Electrical Drawings (SLED): A Multiclass Dataset Benchmarked by Deep Neural Networks | electrical | detection | with Learning | open source |

| [164] | 2023 | Intelligent Digitization of Substation One-Line Diagrams Based on Computer Vision | electrical | detection + contextualization | with Learning | synthetic |

| [36] | 2023 | Song, Aibo and Kun, Huang and Peng, Bowen and Chen, Rui and Zhao, Kun and Qiu, Jingyi and Wang, Kaixuan | electrical | detection + contextualization | with Learning | own |

| [165] | 2007 | An interactive example-driven approach to graphics recognition in engineering drawings | electrical + civil | detection | without Learning | own |

| [166] | 2008 | Spotting Symbols in Line Drawing Images Using Graph Representations | electrical + civil | detection | without Learning | own |

| [167] | 1994 | Isolating symbols from connection lines in a class of engineering drawings | electrical + P&ID | detection | without Learning | own |

| [168] | 1997 | A System for Recognizing a Large Class of Engineering Drawings | electrical + P&ID | detection | without Learning | own |

| [169] | 2014 | Accurate junction detection and characterization in line-drawing images | electrical + civil | detection | without Learning | open source |

| [170] | 1996 | Vector-based segmentation of text connected to graphics in engineering drawings | electrical + mechanical + civil | detection | without Learning | own |

| [171] | 1989 | Processing of engineering line drawings for automatic input to CAD | mechanical | preprocessing | without Learning | - |

| [172] | 1989 | Automatic Scanning and Interpretation of Engineering Drawings for CAD-Processes | mechanical | detection | without Learning | own |

| [173] | 1990 | Engineering drawing processing and vectorization system | mechanical | preprocessing | without Learning | - |

| [174] | 1990 | Interpretation of line drawings with multiple views | mechanical | detection + contextualization | without Learning | own |

| [175] | 1990 | Randomized Hough Transform (RHT) in Engineering Drawing Vectorization System | mechanical | detection | without Learning | own |

| [176] | 1991 | Detection of dashed lines in engineering drawings and maps | mechanical | detection | without Learning | own |

| [177] | 1992 | Celesstin: CAD conversion of mechanical drawings | mechanical | detection + contextualization | without Learning | own |

| [178] | 1992 | Dimensioning analysis | mechanical | detection | without Learning | own |

| [179] | 1992 | Knowledge-directed interpretation of mechanical engineering drawings | mechanical | detection | without Learning | own |

| [180] | 1993 | Recognition of dimensions in engineering drawings based on arrowhead | mechanical | detection | without Learning | own |

| [181] | 1994 | Detection of dimension sets in engineering drawings | mechanical | detection | without Learning | own |

| [182] | 1994 | Knowledge organization and interpretation process in engineering drawing interpretation | mechanical | detection | without Learning | own |

| [183] | 1994 | Syntactic analysis of technical drawing dimensions | mechanical | detection | without Learning | own |

| [184] | 1995 | Recognition of dimension sets and integration with vectorized engineering drawings | mechanical | detection + contextualization | without Learning | own |

| [185] | 1995 | Vector-based arc segmentation in the machine drawing understanding system environment | mechanical | detection | without Learning | own |

| [186] | 1996 | Functional parts detection in engineering drawings: Looking for the screws | mechanical | detection | without Learning | own |

| [187] | 1996 | A clustering-based approach to the separation of text strings from mixed text/graphics documents | mechanical | detection | with Learning | own |

| [188] | 1996 | Perfecting Vectorized Mechanical Drawings | mechanical | preprocessing | without Learning | own |

| [189] | 1996 | Arrowhead recognition during automated data capture | mechanical | detection | without Learning | own |

| [190] | 1997 | Orthogonal Zig-Zag: An algorithm for vectorizing engineering drawings compared with Hough Transform | mechanical | detection | without Learning | own |

| [191] | 1998 | Detection of text regions from digital engineering drawings | mechanical | detection | without Learning | own |

| [192] | 1998 | Generating multiple new designs from a sketch | mechanical | detection + contextualization | without Learning | own |

| [193] | 1998 | Segmentation and Recognition of Dimensioning Text from Engineering Drawings | mechanical | detection | without Learning | own |

| [194] | 1998 | A system for automatic recognition of engineering drawing entities | mechanical | preprocessing + detection | without Learning | own |

| [195] | 1999 | Automated CAD conversion with the Machine Drawing Understanding System: concepts, algorithms, and performance | mechanical | detection | without Learning | own |

| [196] | 1999 | Automatic extraction of manufacturable features from CADD models using syntactic pattern recognition techniques | mechanical | detection + contextualization | without Learning | own |

| [197] | 1999 | Dimension sets detection in technical drawings | mechanical | detection | without Learning | own |

| [198] | 1999 | A complete system for the intelligent interpretation of engineering drawings | mechanical | detection + contextualization | without Learning | own |

| [199] | 2000 | Symbol and character recognition: application to engineering drawings | mechanical | detection | with Learning | own |

| [200] | 2000 | Engineering Drawing Database Retrieval Using Statistical Pattern Spotting Techniques | mechanical | detection | with Learning | own |

| [201] | 2001 | Intelligent system for extraction of product data from CADD models | mechanical | detection + contextualization | with Learning | own |

| [202] | 2004 | Strategy for Line Drawing Understanding | mechanical | detection | without Learning | own |

| [203] | 2004 | A new way to detect arrows in line drawings | mechanical | detection | without Learning | own |

| [204] | 2009 | Information extraction from scanned engineering drawings | mechanical | detection + contextualization | without Learning | own |

| [205] | 2010 | An information extraction of title panel in engineering drawings and automatic generation system of three statistical tables | mechanical | detection + contextualization | without Learning | own |

| [206] | 2011 | From engineering diagrams to engineering models: Visual recognition and applications | mechanical | detection + contextualization | with Learning | synthetic |

| [207] | 2016 | Dimensional Arrow Detection from CAD Drawings | mechanical | detection | without Learning | own |

| [40] | 2017 | ConvNet-Based Optical Recognition for Engineering Drawings | mechanical | detection | with Learning | own |

| [208] | 2019 | Detection of Primitives in Engineering Drawing using Genetic Algorithm | mechanical | preprocessing | without Learning | open source |

| [209] | 2021 | Extraction of dimension requirements from engineering drawings for supporting quality control in production processes | mechanical | detection | with Learning | own |

| [210] | 2021 | An Automated Engineering Assistant: Learning Parsers for Technical Drawings | mechanical | detection + contextualization | with Learning | own |

| [211] | 2022 | Data Augmentation of Engineering Drawings for Data-Driven Component Segmentation | mechanical | detection | with Learning | synthetic |

| [37] | 2022 | AI-Based Engineering and Production Drawing Information Extraction | mechanical | detection | with Learning | synthetic |

| [212] | 2022 | Unsupervised and hybrid vectorization techniques for 3D reconstruction of engineering drawings | mechanical | detection + contextualization | with Learning | own |

| [213] | 2023 | Graph neural network-enabled manufacturing method classification from engineering drawings | mechanical | detection + contextualization | with Learning | own |

| [214] | 2023 | An Approach to Engineering Drawing Organization: Title Block Detection and Processing | mechanical | detection + contextualization | with Learning | own |

| [39] | 2023 | AI-Based Method for Frame Detection in Engineering Drawings | mechanical | detection | with Learning | own |

| [215] | 2023 | Component segmentation of engineering drawings using Graph Convolutional Networks | mechanical | detection + contextualization | with Learning | own |

| [38] | 2023 | Integration of Deep Learning for Automatic Recognition of 2D Engineering Drawings | mechanical | detection + contextualization | with Learning | own |

| [216] | 2024 | Tolerance Information Extraction for Mechanical Engineering Drawings–A Digital Image Processing and Deep Learning-based Model | mechanical | detection | with Learning | own |

| [217] | 2012 | An improved example-driven symbol recognition approach in engineering drawings | mechanical + civil | detection | without Learning | own + open source |

| [218] | 2018 | Hand-written and machine-printed text classification in architecture, engineering & construction documents | mechanical + civil | detection | with Learning | own |

| [219] | 2005 | An image-based, trainable symbol recognizer for hand-drawn sketches | mechanical + electrical | detection | with Learning | own |

| [220] | 2006 | An Efficient Graph-Based Symbol Recognizer | mechanical + electrical | detection | with Learning | own |

| [221] | 2007 | An efficient graph-based recognizer for hand-drawn symbols | mechanical + electrical | detection | with Learning | own |

| [206] | 2011 | Neural network-based symbol recognition using a few labeled samples | mechanical + electrical | detection | with Learning | synthetic + open source |

| [222] | 1994 | Graphic symbol recognition using a signature technique | P&ID | detection | without Learning | own |

| [223] | 1998 | Computer interpretation of process and instrumentation drawings | P&ID | detection | without Learning | own |

| [224] | 2006 | Using process topology in plant-wide control loop performance assessment | P&ID | contextualization | without Learning | - |

| [225] | 2009 | Graphic Symbol Recognition Using Auto Associative Neural Network Model | P&ID | detection | with Learning | own |

| [226] | 2015 | A 2D Engineering Drawing and 3D Model Matching Algorithm for Process Plant | P&ID | detection + contextualization | without Learning | own |

| [227] | 2016 | Automatische Analyse und Erkennung graphischer Inhalte von SVG-basierten Engineering-Dokumenten | P&ID | detection | without Learning | own |

| [228] | 2017 | Heuristics-Based Detection to Improve Text/Graphics Segmentation in Complex Engineering Drawings | P&ID | detection | without Learning | own (industry) |

| [229] | 2018 | Symbols Classification in Engineering Drawings | P&ID | detection | with Learning | own |

| [230] | 2019 | A Digitization and Conversion Tool for Imaged Drawings to Intelligent Piping and Instrumentation Diagrams (P&ID) | P&ID | detection + contextualization | without Learning | own |

| [231] | 2019 | Automatic Information Extraction from Piping and Instrumentation Diagrams | P&ID | detection | with Learning | own |

| [232] | 2019 | Applying graph matching techniques to enhance reuse of plant design information | P&ID | contextualization | without Learning | own |

| [233] | 2019 | Features Recognition from Piping and Instrumentation Diagrams in Image Format Using a Deep Learning Network | P&ID | detection | with Learning | own |

| [26] | 2020 | Deep learning for symbols detection and classification in engineering drawings | P&ID | detection | with Learning | own (industry) |

| [234] | 2020 | Symbols in Engineering Drawings (SiED): An Imbalanced Dataset Benchmarked by Convolutional Neural Networks | P&ID | detection | with Learning | open source |

| [235] | 2020 | Object Detection in Design Diagrams with Machine Learning | P&ID | detection | with Learning | synthetic |

| [32] | 2020 | Deep Neural Network for Automatic Image Recognition of Engineering Diagrams | P&ID | detection | with Learning | own |

| [33] | 2020 | CNN-Based Symbol Recognition in Piping Drawings | P&ID | detection | with Learning | synthetic |

| [236] | 2020 | Graph-Based Manipulation Rules for Piping and Instrumentation Diagrams | P&ID | contextualization | without Learning | own |

| [237] | 2020 | Deep Learning for Text Detection and Recognition in Complex Engineering Diagrams | P&ID | detection | with Learning | own |

| [238] | 2020 | Automatic Digitization of Engineering Diagrams using Deep Learning and Graph Search | P&ID | detection + contextualization | with Learning | own |

| [239] | 2020 | Reducing human effort in engineering drawing validation | P&ID | detection + contextualization | with Learning | own |

| [240] | 2020 | Integrating 2D and 3D Digital Plant Information Towards Automatic Generation of Digital Twins | P&ID | detection + contextualization | without Learning | own |

| [241] | 2020 | Component detection in piping and instrumentation diagrams of nuclear power plants based on neural networks | P&ID | detection + contextualization | with Learning | open source |

| [242] | 2021 | OSSR-PID: One-Shot Symbol Recognition in P&ID Sheets using Path Sampling and GCN | P&ID | detection | with Learning | synthetic |

| [243] | 2021 | Deep Learning-Based Method to Recognize Line Objects and Flow Arrows from Image-Format Piping and Instrumentation Diagrams for Digitization | P&ID | detection | with Learning | own (industry) |

| [244] | 2021 | Group of components detection in engineering drawings based on graph matching | P&ID | contextualization | without Learning | own |

| [245] | 2021 | Automatic Digitization of Engineering Diagrams using Intelligent Algorithms | P&ID | detection | without Learning | own |

| [246] | 2021 | Engineering Drawing Validation Based on Graph Convolutional Networks | P&ID | detection | with Learning | own |

| [247] | 2021 | Digitize-PID: Automatic Digitization of Piping and Instrumentation Diagrams | P&ID | detection + contextualization | with Learning | own |

| [248] | 2021 | Automatic digital twin data model generation of building energy systems from piping and instrumentation diagrams | P&ID | detection | with Learning | own |

| [30] | 2021 | Identification of Objects in Oilfield Infrastructure using Engineering Diagram and Machine Learning Methods | P&ID | detection | with Learning | own |

| [249] | 2021 | Object detection for P&ID images using various deep learning techniques | P&ID | detection | with Learning | own |

| [27] | 2022 | Pattern Recognition Method for Detecting Engineering Errors on Technical Drawings | P&ID | detection | with Learning | own |

| [250] | 2022 | Enhanced Symbol Recognition based on Advanced Data Augmentation for Engineering Diagrams | P&ID | detection | with Learning | own + synthetic |

| [251] | 2022 | Modern Deep Learning Approaches for Symbol Detection in Complex Engineering Drawings | P&ID | detection | with Learning | own |

| [252] | 2022 | End-to-end digitization of image format piping and instrumentation diagrams at an industrially applicable level | P&ID | detection + contextualization | with Learning | own |

| [253] | 2022 | Automated Valve Detection in Piping and Instrumentation (P&ID) Diagrams | P&ID | detection | with Learning | own |

| [254] | 2023 | A Symbol Recognition System for Single-Line Diagrams Developed Using a Deep-Learning Approach | P&ID | detection | with Learning | own + synthetic |

| [239] | 2023 | Reducing human effort in engineering drawing validation | P&ID | contextualization | with Learning | own |

| [29] | 2023 | Advancing P&ID Digitization with YOLOv5 | P&ID | detection | with Learning | own |

| [28] | 2023 | Improved P&ID Symbol Detection Algorithm Based on YOLOv5 Network | P&ID | detection | with Learning | own |

| [31] | 2023 | A Complete Piping Identification Solution for Piping and Instrumentation Diagrams | P&ID | detection | with Learning | own |

| [255] | 2023 | Automatic anomaly detection in engineering diagrams using machine learning | P&ID | detection + contextualization | with Learning | own |

| [256] | 2023 | Digitization of chemical process flow diagrams using deep convolutional neural networks | P&ID | detection | with Learning | own |

| [257] | 2023 | Extraction of line objects from piping and instrumentation diagrams using an improved continuous line detection algorithm | P&ID | detection + contextualization | with Learning | own |

| [258] | 2023 | Classification of Functional Types of Lines in P&IDs Using a Graph Neural Network | P&ID | detection | with Learning | own |

| [259] | 2023 | Demonstrating Automated Generation of Simulation Models from Engineering Diagrams | P&ID | detection + contextualization | with Learning | synthetic |

| [31] | 2023 | A Complete Piping Identification Solution for Piping and Instrumentation Diagrams | P&ID | detection + contextualization | with Learning | own |

| [260] | 2024 | Rule-based continuous line classification using shape and positional relationships between objects in piping and instrumentation diagram | P&ID | detection | without Learning | own |

| [261] | 2024 | Image format pipeline and instrument diagram recognition method based on deep learning | P&ID | detection + contextualization | with Learning | open source |

| [262] | 2024 | Semi-supervised symbol detection for piping and instrumentation drawings | P&ID | detection + contextualization | with Learning | own |

| [34] | 2024 | Auto-Routing Systems (ARSs) with 3D Piping for Sustainable Plant Projects Based on Artificial Intelligence (AI) and Digitalization of 2D Drawings and Specifications | P&ID | detection + contextualization | with Learning | own |

References

- Vlah, D.; Kastrin, A.; Povh, J.; Vukašinović, N. Data-driven engineering design: A systematic review using scientometric approach. Adv. Eng. Inform. 2022, 54, 101774. [Google Scholar] [CrossRef]

- Pakkanen, J.; Huhtala, P.; Juuti, T.; Lehtonen, T. Achieving Benefits with Design Reuse in Manufacturing Industry. Procedia CIRP 2016, 50, 8–13. [Google Scholar] [CrossRef]

- Isaksson, O.; Hallstedt, S.I.; Rönnbäck, A.Ö. Digitalisation, sustainability and servitisation: Consequences on product development capabilities in manufacturing firms. In Proceedings of the DS 91: NordDesign 2018, Linköping, Sweden, 14–17 August 2018. [Google Scholar]

- Hahne, M. Systematisches Konstruieren: Praxisnah und Prägnant; Springer: Berlin/Heidelberg, Germany, 2019. [Google Scholar]

- Produktentwicklung und Projektmanagement. Konstruktionsmethodik—Methodisches Entwickeln von Lösungsprinzipien; VDI: Dusseldorf, Germany, 1997. [Google Scholar]

- Roth, K. Konstruieren mit Konstruktionskatalogen: Band 1: Konstruktionslehre, 3rd ed.; Erweitert und Neu Gestaltet; Springer: Berlin/Heidelberg, Germany, 2000. [Google Scholar] [CrossRef]

- Moreno-García, C.F.; Elyan, E.; Jayne, C. New trends on digitisation of complex engineering drawings. Neural Comput. Appl. 2019, 31, 1695–1712. [Google Scholar] [CrossRef]

- Valveny, E.; Dosch, P. Symbol recognition contest: A synthesis. In Proceedings of the International Workshop on Graphics Recognition, Barcelona, Spain, 30–31 July 2003; Springer: Berlin/Heidelberg, Germany, 2003; pp. 368–385. [Google Scholar]

- Goyal, S.; Mistry, V.; Chattopadhyay, C.; Bhatnagar, G. BRIDGE: Building Plan Repository for Image Description Generation, and Evaluation. In Proceedings of the 2019 International Conference on Document Analysis and Recognition (ICDAR), Sydney, Australia, 20–25 September 2019; IEEE: Piscataway, NJ, USA, 2019. [Google Scholar] [CrossRef]

- Delalandre, M.; Valveny, E.; Pridmore, T.; Karatzas, D. Generation of synthetic documents for performance evaluation of symbol recognition & spotting systems. Int. J. Doc. Anal. Recognit. (IJDAR) 2010, 13, 187–207. [Google Scholar] [CrossRef]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788. [Google Scholar]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587. [Google Scholar]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Advances in Neural Information Processing Systems; Pereira, F., Burges, C., Bottou, L., Weinberger, K., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2012; Volume 25. [Google Scholar]

- Girshick, R. Fast r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. In Proceedings of the Advances in Neural Information Processing Systems 28 (NIPS 2015), Montreal, QC, Canada, 7–12 December 2015; Volume 28. [Google Scholar]

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969. [Google Scholar]

- Rezvanifar, A.; Cote, M.; Albu, A.B. Symbol Spotting on Digital Architectural Floor Plans Using a Deep Learning-based Framework. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020; IEEE: Piscataway, NJ, USA, 2020. [Google Scholar] [CrossRef]

- Rezvanifar, A.; Cote, M.; Branzan Albu, A. Symbol spotting for architectural drawings: State-of-the-art and new industry-driven developments. IPSJ Trans. Comput. Vis. Appl. 2019, 11, 2. [Google Scholar] [CrossRef]

- Stefenon, S.F.; Cristoforetti, M.; Cimatti, A. Towards Automatic Digitalization of Railway Engineering Schematics. In Proceedings of the International Conference of the Italian Association for Artificial Intelligence, Rome, Italy, 6–9 November 2023; Springer: Berlin/Heidelberg, Germany, 2023; pp. 453–466. [Google Scholar]

- Huang, W.; Sun, Q.; Yu, A.; Guo, W.; Xu, Q.; Wen, B.; Xu, L. Leveraging Deep Convolutional Neural Network for Point Symbol Recognition in Scanned Topographic Maps. ISPRS Int. J. Geo-Inf. 2023, 12, 128. [Google Scholar] [CrossRef]

- Surikov, I.Y.; Nakhatovich, M.A.; Belyaev, S.Y.; Savchuk, D.A. Floor Plan Recognition and Vectorization Using Combination UNet, Faster-RCNN, Statistical Component Analysis and Ramer-Douglas-Peucker. In Proceedings of the International Conference on Computing Science, Communication and Security, Gujarat, India, 26–27 March 2020; Springer: Singapore, 2020; pp. 16–28. [Google Scholar] [CrossRef]

- Sarkar, S.; Pandey, P.; Kar, S. Automatic Detection and Classification of Symbols in Engineering Drawings. arXiv 2022, arXiv:2204.13277. [Google Scholar]

- Mishra, S.; Hashmi, K.A.; Pagani, A.; Liwicki, M.; Stricker, D.; Afzal, M.Z. Towards Robust Object Detection in Floor Plan Images: A Data Augmentation Approach. Appl. Sci. 2021, 11, 11174. [Google Scholar] [CrossRef]

- Shehzadi, T.; Hashmi, K.A.; Pagani, A.; Liwicki, M.; Stricker, D.; Afzal, M.Z. Mask-Aware Semi-Supervised Object Detection in Floor Plans. Appl. Sci. 2022, 12, 9398. [Google Scholar] [CrossRef]

- Goyal, S. Fine Grained Feature Representation Using Computer Vision Techniques for Understanding Indoor Space. Ph.D. Thesis, Indian Institute of Technology Jodhpur, Computer Science and Engineering, Karwar, India, 2021. [Google Scholar]

- Elyan, E.; Jamieson, L.; Ali-Gombe, A. Deep Learning for Symbols Detection and Classification in Engineering Drawings; Elsevier: Amsterdam, The Netherlands, 2020; Volume 129, pp. 91–102. [Google Scholar] [CrossRef]

- Dzhusupova, R.; Banotra, R.; Bosch, J.; Olsson, H.H. Pattern Recognition Method for Detecting Engineering Errors on Technical Drawings. In Proceedings of the 2022 IEEE World AI IoT Congress (AIIoT), Seattle, WA, USA, 6–9 June 2022; IEEE: Piscataway, NJ, USA, 2022. [Google Scholar] [CrossRef]

- Xiao, X.; Li, Z.; Zhao, S.; Yang, L.; Zhao, F.; Ge, C. Improved P&ID Symbol Detection Algorithm Based on YOLOv5 Network. In Proceedings of the 2023 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Honolulu, HI, USA, 1–4 October 2023; pp. 120–126. [Google Scholar] [CrossRef]

- Gajbhiye, S.M.; Bhamre, S.; Tadepalli, L.T.; Pillai, M.; Uplaonkar, D. Advancing P&ID Digitization with YOLOv5. In Proceedings of the 2023 International Conference on Integrated Intelligence and Communication Systems (ICIICS), Kalaburagi, India, 24–25 November 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–6. [Google Scholar]

- Ismail, M.H.A.B. Identification of Objects in Oilfield Infrastructure using Engineering Diagram and Machine Learning Methods. In Proceedings of the 2021 IEEE Symposium on Computers & Informatics (ISCI), Kuala Lumpur, Malaysia, 16 October 2021; pp. 19–24. [Google Scholar] [CrossRef]

- Liu, S.; Li, Z.; Zhao, S.; Yang, L.; Zhao, F.; Ge, C. A Complete Piping Identification Solution for Piping and Instrumentation Diagrams. In Proceedings of the 2023 IEEE International Conference on High Performance Computing & Communications, Data Science & Systems, Smart City & Dependability in Sensor, Cloud & Big Data Systems & Application (HPCC/DSS/SmartCity/DependSys), Melbourne, Australia, 17–21 December 2023; pp. 9–15. [Google Scholar] [CrossRef]

- Yun, D.Y.; Seo, S.K.; Zahid, U.; Lee, C.J. Deep Neural Network for Automatic Image Recognition of Engineering Diagrams. Appl. Sci. 2020, 10, 4005. [Google Scholar] [CrossRef]

- Zhang, Y.; Cai, J.; Cai, H. CNN-Based Symbol Recognition in Piping Drawings; American Society of Civil Engineers: Reston, VA, USA, 2020. [Google Scholar] [CrossRef]

- Kang, D.H.; Choi, S.W.; Lee, E.B.; Kang, S.O. Auto-Routing Systems (ARSs) with 3D Piping for Sustainable Plant Projects Based on Artificial Intelligence (AI) and Digitalization of 2D Drawings and Specifications. Sustainability 2024, 16, 2770. [Google Scholar] [CrossRef]

- Mizanur Rahman, S.; Bayer, J.; Dengel, A. Graph-Based Object Detection Enhancement for Symbolic Engineering Drawings. In Proceedings of the Document Analysis and Recognition—ICDAR 2021 Workshops, Lausanne, Switzerland, 5–10 September 2021; Lecture Notes in Computer Science. Barney Smith, E.H., Pal, U., Eds.; Springer International Publishing: Cham, Switzerland, 2021; Volume 12916, pp. 74–90. [Google Scholar] [CrossRef]

- Song, A.; Kun, H.; Peng, B.; Chen, R.; Zhao, K.; Qiu, J.; Wang, K. EDRS: An Automatic System to Recognize Electrical Drawings. In Proceedings of the 2021 China Automation Congress (CAC), Beijing, China, 22–24 October 2021; pp. 5438–5443. [Google Scholar] [CrossRef]

- Haar, C.; Kim, H.; Koberg, L. AI-Based Engineering and Production Drawing Information Extraction. In Proceedings of the International Conference on Flexible Automation and Intelligent Manufacturing, Detroit, MI, USA, 19–23 June 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 374–382. [Google Scholar]

- Lin, Y.H.; Ting, Y.H.; Huang, Y.C.; Cheng, K.L.; Jong, W.R. Integration of Deep Learning for Automatic Recognition of 2D Engineering Drawings. Machines 2023, 11, 802. [Google Scholar] [CrossRef]

- Kashevnik, A.; Ali, A.; Mayatin, A. AI-Based Method for Frame Detection in Engineering Drawings. In Proceedings of the 2023 International Russian Smart Industry Conference (SmartIndustryCon), Sochi, Russia, 27–31 March 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 225–229. [Google Scholar]

- Brock, A.; Lim, T.; Ritchie, J.M.; Weston, N. ConvNet-Based Optical Recognition for Engineering Drawings. In Proceedings of the ASME 2017 International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, Cleveland, OH, USA, 6–9 August 2017. [Google Scholar] [CrossRef]

- Bickel, S.; Goetz, S.; Wartzack, S. From Sketches to Graphs: A Deep Learning Based Method for Detection and Contextualisation of Principle Sketches in the Early Phase of Product Development. Proc. Des. Soc. 2023, 3, 1975–1984. [Google Scholar] [CrossRef]

- Bickel, S.; Schleich, B.; Wartzack, S. Detection and classification of symbols in principle sketches using deep learning. Proc. Des. Soc. 2021, 1, 1183–1192. [Google Scholar] [CrossRef]

- Seff, A.; Ovadia, Y.; Zhou, W.; Adams, R.P. Sketchgraphs: A large-scale dataset for modeling relational geometry in computer-aided design. arXiv 2020, arXiv:2007.08506. [Google Scholar]

- Onshape, P. API-integration with Onshape. Available online: https://www.onshape.com/de/features/integrations (accessed on 8 July 2024).

- Clark, A. Pillow (PIL Fork) Documentation. 2015. Available online: https://buildmedia.readthedocs.org/media/pdf/pillow/latest/pillow.pdf (accessed on 23 April 2024).

- GitHub. object-detection. 2024. Available online: https://github.com/topics/object-detection (accessed on 23 April 2024).

- Roth, K. Konstruieren mit Konstruktionskatalogen: Band 2: Kataloge; Springer: Berlin/Heidelberg, Germany, 2013. [Google Scholar]

- Roth, K. Konstruieren mit Konstruktionskatalogen: Band 3: Verbindungen und Verschlüsse, Lösungsfindung, 2nd ed.; Wesentlich Erweitert und neu Gestaltet; Springer eBook Collection Computer Science and Engineering; Springer: Berlin/Heidelberg, Germany, 1996. [Google Scholar] [CrossRef]

- Labisch, S.; Weber, C. Technisches Zeichnen: Intensiv und Effektiv Lernen und üben, 2nd ed.; überarb. aufl; Studium, Vieweg: Wiesbaden, Germany, 2005. [Google Scholar] [CrossRef]

- DIN ISO 3952-2:1995 DE; Vereinfachte Darstellungen in der Kinematik. Standard, International Organization for Standardization: Geneva, Switzerland, 1995.

- List, R. CATIA V5—Grundkurs für Maschinenbauer: Bauteil- und Baugruppenkonstruktion, Zeichnungsableitung, 4th ed.; aktualisierte und erw. aufl.; Studium, Vieweg + Teubner: Wiesbaden, Germany, 2009. [Google Scholar] [CrossRef]

- Madsen, D.A.; Madsen, D.P.; Standiford, K.; Krulikowski, A. Engineering Drawing & Design, 6th ed.; Cengage Learning: South Melbourne, VIC, Australia, 2017. [Google Scholar]

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollar, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Springer: Berlin/Heidelberg, Germany, 2014; pp. 740–755. [Google Scholar]

- Abdulla, W. Mask R-CNN for Object Detection and Instance Segmentation on Keras and TensorFlow. 2017. Available online: https://github.com/matterport/Mask_RCNN (accessed on 23 April 2024).

- Jocher, G.; Stoken, A.; Chaurasia, A.; Borovec, J.; Code, N.; Xie, T.; Kwon, Y.; Michael, K.; Changyu, L.; Fang, J.; et al. ultralytics/yolov5: v6.0—YOLOv5n ’Nano’ models, Roboflow integration, TensorFlow export, OpenCV DNN support. Zenodo 2021. [Google Scholar] [CrossRef]

- Wu, Y.; Kirillov, A.; Massa, F.; Lo, W.Y.; Girshick, R. Detectron2. 2019. Available online: https://github.com/facebookresearch/detectron2 (accessed on 23 April 2024).

- Lupinetti, K.; Pernot, J.P.; Monti, M.; Giannini, F. Content-based CAD assembly model retrieval: Survey and future challenges. Comput.-Aided Des. 2019, 113, 62–81. [Google Scholar] [CrossRef]

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; Volume 27. [Google Scholar]

- Vahdat, A.; Kautz, J. NVAE: A deep hierarchical variational autoencoder. In Proceedings of the Advances in Neural Information Processing Systems 2020, virtual, 6–12 December 2020; Volume 33, pp. 19667–19679. [Google Scholar]

- Kingma, D.P.; Welling, M. Auto-encoding variational bayes. arXiv 2014, arXiv:1312.6114. [Google Scholar]

- Zou, Z.; Chen, K.; Shi, Z.; Guo, Y.; Ye, J. Object detection in 20 years: A survey. Proc. IEEE 2023, 111, 257–276. [Google Scholar] [CrossRef]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. Ssd: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37. [Google Scholar]

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988. [Google Scholar]

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125. [Google Scholar]

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 213–229. [Google Scholar]

- Law, H.; Deng, J. Cornernet: Detecting objects as paired keypoints. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 734–750. [Google Scholar]

- Otsu, N. A Threshold Selection Method from Gray-Level Histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66. [Google Scholar] [CrossRef]

- Kasturi, R.; Bow, S.T.; El-Masri, W.; Shah, J.; Gattiker, J.R.; Mokate, U.B. A system for interpretation of line drawings. IEEE Trans. Pattern Anal. Mach. Intell. 1990, 12, 978–992. [Google Scholar] [CrossRef]

- Tanigawa, S.; Hori, O.; Shimotsuji, S. Precise line detection from an engineering drawing using a figure fitting method based on contours and skeletons. In Proceedings of the 12th IAPR International Conference on Pattern Recognition (Cat. No.94CH3440-5), Jerusalem, Israel, 9–13 October 1994. [Google Scholar] [CrossRef]

- Han, C.C.; Fan, K.C. Skeleton generation of engineering drawings via contour matching. Pattern Recognit. 1994, 27, 261–275. [Google Scholar] [CrossRef]

- den Hartog, J.E.; ten Kate, T.K. Finding arrows in utility maps using a neural network. In Proceedings of the 12th IAPR International Conference on Pattern Recognition (Cat. No.94CH3440-5), Jerusalem, Israel, 9–13 October 1994; Volume 2, pp. 190–194. [Google Scholar] [CrossRef]

- Messmer, B.T.; Bunke, H. Automatic learning and recognition of graphical symbols in engineering drawings. In Graphics Recognition Methods and Applications; Springer: Berlin/Heidelberg, Germany, 1996; pp. 123–134. [Google Scholar] [CrossRef]

- Chiang, J.Y.; Tue, S.; Leu, Y.C. A new algorithm for line image vectorization. Pattern Recognit. 1998, 31, 1541–1549. [Google Scholar] [CrossRef]

- Sauvola, J.; Pietikäinen, M. Adaptive document image binarization. Pattern Recognit. 2000, 33, 225–236. [Google Scholar] [CrossRef]

- Hilaire, X.; Tombre, K. Robust and accurate vectorization of line drawings. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 890–904. [Google Scholar] [CrossRef] [PubMed]

- Zhang, J.Y.; Zhao, L.F.; Hao, Y.P. Multi-Level Block Information Extraction in Engineering Drawings Based on Depth-First Algorithm. Autom. Equip. Syst. 2012, 468–471, 2100–2103. [Google Scholar] [CrossRef]

- Rezaei, S.B.; Shanbehzadeh, J.; Sarrafzadeh, A. Adaptive document image skew estimation. In Proceedings of the International MultiConference of Engineers and Computer Scientists 2017—IMECS 2017, Hong Kong, China, 15–17 March 2017; p. 97898814. [Google Scholar]

- Liu, T.; Hua, Q.; Yuan, S.; Yin, L.; Cheng, G. Anchor Point based Hough Transformation for Automatic Line Detection of Engineering Drawings. In Proceedings of the 2019 WRC Symposium on Advanced Robotics and Automation (WRC SARA), Beijing, China, 21–22 August 2019; IEEE: Piscataway, NJ, USA, 2019. [Google Scholar] [CrossRef]

- Vedaldi, A.; Bischof, H.; Brox, T.; Frahm, J.M. Deep Vectorization of Technical Drawings; Springer International Publishing: Cham, Switzerland, 2020; Volume 12358. [Google Scholar] [CrossRef]

- Yang, D.S.; Webster, J.L.; Rendell, L.A.; Garrett, J.H.; Shaw, D.S. Management of graphical symbols in a CAD environment: A neural network approach. In Proceedings of the 1993 IEEE Conference on Tools with AI (TAI-93), Boston, MA, USA, 8–11 November 1993; IEEE: Piscataway, NJ, USA, 1993; pp. 272–279. [Google Scholar] [CrossRef]

- Yang, D.; Rendell, L.A.; Webster, J.L.; Shaw, D.S.; Garrett, J.H., Jr. Symbol recognition in a CAD environment using a neural network. Int. J. Artif. Intell. Tools 1994, 3, 157–185. [Google Scholar] [CrossRef]

- Lladós, J.; López-Krahe, J.; Martí, E. A system to understand hand-drawn floor plans using subgraph isomorphism and Hough transform. Mach. Vis. Appl. 1997, 10, 150–158. [Google Scholar] [CrossRef]

- Ah-Soon, C. A constraint network for symbol detection in architectural drawings. In Graphics Recognition Algorithms and Systems; Springer: Berlin/Heidelberg, Germany, 1998; pp. 80–90. [Google Scholar] [CrossRef]

- Dosch, P.; Tombre, K.; Ah-Soon, C.; Masini, G. A complete system for the analysis of architectural drawings. Int. J. Doc. Anal. Recognit. 2000, 3, 102–116. [Google Scholar] [CrossRef]

- Ah-Soon, C.; Tombre, K. Architectural symbol recognition using a network of constraints. Pattern Recognit. Lett. 2001, 22, 231–248. [Google Scholar] [CrossRef]

- Lladós, J.; Martí, E.; Villanueva, J.J. Symbol recognition by error-tolerant subgraph matching between region adjacency graphs. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 1137–1143. [Google Scholar] [CrossRef]

- Song, J.; Su, F.; Tai, C.L.; Cai, S. An object-oriented progressive-simplification-based vectorization system for engineering drawings: Model, algorithm, and performance. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 1048–1060. [Google Scholar] [CrossRef]

- Valveny, E.; Martí, E. A model for image generation and symbol recognition through the deformation of lineal shapes. Pattern Recognit. Lett. 2003, 24, 2857–2867. [Google Scholar] [CrossRef]

- Cao, Y.; Li, H.; Liang, Y. Using engineering drawing interpretation for automatic detection of version information in CADD engineering drawing. Autom. Constr. 2005, 14, 361–367. [Google Scholar] [CrossRef]

- Lu, T.; Yang, H.; Yang, R.; Cai, S. Automatic analysis and integration of architectural drawings. Int. J. Doc. Anal. Recognit. (IJDAR) 2007, 9, 31–47. [Google Scholar] [CrossRef]

- Lu, T.; Yang, Y.; Yang, R.; Cai, S. Knowledge Extraction from Structured Engineering Drawings. In Proceedings of the 2008 Fifth International Conference on Fuzzy Systems and Knowledge Discovery, Jinan, China, 18–20 October 2008; IEEE: Piscataway, NJ, USA, 2008. [Google Scholar] [CrossRef]

- Le Bodic, P.; Locteau, H.; Adam, S.; Héroux, P.; Lecourtier, Y.; Knippel, A. Symbol Detection Using Region Adjacency Graphs and Integer Linear Programming. In Proceedings of the 2009 10th International Conference on Document Analysis and Recognition, Barcelona, Spain, 26–29 July 2009; IEEE: Piscataway, NJ, USA, 2009. [Google Scholar] [CrossRef]

- Rusiñol, M.; Lladós, J.; Sánchez, G. Symbol spotting in vectorized technical drawings through a lookup table of region strings. Pattern Anal. Appl. 2010, 13, 321–331. [Google Scholar] [CrossRef]

- Nayef, N.; Breuel, T.M. Statistical Grouping for Segmenting Symbols Parts from Line Drawings, with Application to Symbol Spotting. In Proceedings of the 2011 International Conference on Document Analysis and Recognition, Beijing, China, 18–21 September 2011; IEEE: Piscataway, NJ, USA, 2011. [Google Scholar] [CrossRef]

- Barducci, A.; Marinai, S. Object recognition in floor plans by graphs of white connected components. In Proceedings of the 21st International Conference on Pattern Recognition (ICPR2012), Tsukuba, Japan, 11–15 November 2012; pp. 298–301. [Google Scholar]

- Nayef, N. Geometric-based Symbol Spotting and Retrieval in Technical Line Drawings. Ph.D. Thesis, Technische Universität Kaiserslautern, Kaiserslautern, Germany, 2013. [Google Scholar]

- Nayef, N.; Breuel, T.M. Building a Symbol Library from Technical Drawings by Identifying Repeating Patterns. In Graphics Recognition. New Trends and Challenges; Springer: Berlin/Heidelberg, Germany, 2013; pp. 69–78. [Google Scholar] [CrossRef]

- Nayef, N.; Breuel, T.M. Combining geometric matching with SVM to improve symbol spotting. In Proceedings of the IS&T/SPIE Electronic Imaging, Burlingame, CA, USA, 3–7 February 2013; Volume 8658, pp. 141–149. [Google Scholar] [CrossRef]

- Nayef, N.; Breuel, T.M. Efficient symbol retrieval by building a symbol index from a collection of line drawings. In Proceedings of the IS&T-SPIE Electronic Imaging Symposium, Burlingame, CA, USA, 5–7 February 2013; pp. 320–331. [Google Scholar] [CrossRef]

- Dutta, A.; Lladós, J.; Pal, U. A symbol spotting approach in graphical documents by hashing serialized graphs. Pattern Recognit. 2013, 46, 752–768. [Google Scholar] [CrossRef]

- Zhang, H.; Li, X. Data Extraction from DXF File and Visual Display. In Proceedings of the HCI International 2014—Posters’ Extended Abstracts: International Conference, HCI International 2014, Heraklion, Greece, 22–27 June 2014; Proceedings, Part I 16. Springer: Cham, Switzerland, 2014; pp. 286–291. [Google Scholar] [CrossRef]

- Banerjee, P.; Choudhary, S.; Das, S.; Majumdar, H.; Roy, R.; Chaudhuri, B.B. Automatic Hyperlinking of Engineering Drawing Documents. In Proceedings of the 2016 12th IAPR Workshop on Document Analysis Systems (DAS), Santorini, Greece, 11–14 April 2016; IEEE: Piscataway, NJ, USA, 2016. [Google Scholar] [CrossRef]

- Riba, P.; Dutta, A.; Llados, J.; Fornes, A. Graph-Based Deep Learning for Graphics Classification. In Proceedings of the 2017 14th IAPR International Conference on Document Analysis and Recognition (ICDAR), Kyoto, Japan, 9–15 November 2017; IEEE: Piscataway, NJ, USA, 2017. [Google Scholar] [CrossRef]

- Drapeau, J.; Géraud, T.; Coustaty, M.; Chazalon, J.; Burie, J.C.; Eglin, V.; Bres, S. Extraction of Ancient Map Contents Using Trees of Connected Components. In Graphics Recognition. Current Trends and Evolutions; Springer: Cham, Switzerland, 2018; pp. 115–130. [Google Scholar] [CrossRef]

- Ziran, Z.; Marinai, S. Object Detection in Floor Plan Images. In Proceedings of the Artificial Neural Networks in Pattern Recognition: 8th IAPR TC3 Workshop, ANNPR 2018, Siena, Italy, 19–21 September 2018; Proceedings 8. Springer: Cham, Switzerland, 2018; pp. 383–394. [Google Scholar] [CrossRef]

- Renton, G.; Heroux, P.; Gauzere, B.; Adam, S. Graph Neural Network for Symbol Detection on Document Images. In Proceedings of the 2019 International Conference on Document Analysis and Recognition Workshops (ICDARW), Sydney, Australia, 22–25 September 2019; IEEE: Piscataway, NJ, USA, 2019. [Google Scholar] [CrossRef]

- Goyal, S.; Chattopadhyay, C.; Bhatnagar, G. Knowledge-driven description synthesis for floor plan interpretation. Int. J. Doc. Anal. Recognit. (IJDAR) 2021, 24, 19–32. [Google Scholar] [CrossRef]

- Fan, Z.; Zhu, L.; Li, H.; Chen, X.; Zhu, S.; Tan, P. FloorPlanCAD: A Large-Scale CAD Drawing Dataset for Panoptic Symbol Spotting. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; IEEE: Piscataway, NJ, USA, 2021. [Google Scholar] [CrossRef]

- Evangelou, I.; Savelonas, M.; Papaioannou, G. PU learning-based recognition of structural elements in architectural floor plans. Multimed. Tools Appl. 2021, 80, 13235–13252. [Google Scholar] [CrossRef]

- Park, S.; Kim, H. 3DPlanNet: Generating 3D Models from 2D Floor Plan Images Using Ensemble Methods. Electronics 2021, 10, 2729. [Google Scholar] [CrossRef]

- Fan, Z.; Chen, T.; Wang, P.; Wang, Z. CADTransformer: Panoptic Symbol Spotting Transformer for CAD Drawings. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; IEEE: Piscataway, NJ, USA, 2022. [Google Scholar] [CrossRef]

- Zheng, Z.; Li, J.; Zhu, L.; Li, H.; Petzold, F.; Tan, P. GAT-CADNet: Graph Attention Network for Panoptic Symbol Spotting in CAD Drawings. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; IEEE: Piscataway, NJ, USA, 2022. [Google Scholar] [CrossRef]

- Jakubik, J.; Hemmer, P.; Vössing, M.; Blumenstiel, B.; Bartos, A.; Mohr, K. Designing a Human-in-the-Loop System for Object Detection in Floor Plans. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 27 February–22 March 2022; Volume 36, pp. 12524–12530. [Google Scholar]

- Mafipour, M.S.; Ahmed, D.; Vilgertshofer, S.; Borrmann, A. Digitalization of 2D Bridge Drawings Using Deep Learning Models. In Proceedings of the 30th International Conference on Intelligent Computing in Engineering (EG-ICE), London, UK, 4–7 July 2023. [Google Scholar]

- Faltin, B.; Schönfelder, P.; König, M. Improving Symbol Detection on Engineering Drawings Using a Keypoint-Based Deep Learning Approach. In Proceedings of the 30th EG-ICE: International Conference on Intelligent Computing in Engineering, London, UK, 4–7 July 2023. [Google Scholar]

- Smith, W.A.; Pillatt, T. You only look for a symbol once: An object detector for symbols and regions in documents. In Proceedings of the International Conference on Document Analysis and Recognition, San José, CA, USA, 21–26 August 2023; Springer: Cham, Switzerland, 2023; pp. 227–243. [Google Scholar]

- Schönfelder, P.; Stebel, F.; Andreou, N.; König, M. Deep learning-based text detection and recognition on architectural floor plans. Autom. Constr. 2024, 157, 105156. [Google Scholar] [CrossRef]

- Janssen, R.D.; Vossepoel, A.M. Adaptive Vectorization of Line Drawing Images. Comput. Vis. Image Underst. 1997, 65, 38–56. [Google Scholar] [CrossRef]

- Yang, S. Symbol recognition via statistical integration of pixel-level constraint histograms: A new descriptor. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 278–281. [Google Scholar] [CrossRef]

- Zhang, W.; Wenyin, L.; Zhang, K. Symbol recognition with kernel density matching. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 2020–2024. [Google Scholar] [CrossRef]

- Rusiñol, M.; Lladós, J. Symbol Spotting in Technical Drawings Using Vectorial Signatures. In Proceedings of the International Workshop on Graphics Recognition, Hong Kong, China, 25–26 August 2005; Springer: Berlin/Heidelberg, Germany, 2005; pp. 35–46. [Google Scholar] [CrossRef]

- Barrat, S.; Tabbone, S.; Nourrissier, P. A Bayesian classifier for symbol recognition. In Proceedings of the Seventh International Workshop on Graphics Recognition-GREC’2007, Curitiba, Brazil, 20–21 September 2007. 9p. [Google Scholar]

- Yu, Y.; Zhang, W.; Liu, W. A New Syntactic Approach to Graphic Symbol Recognition. In Proceedings of the Ninth International Conference on Document Analysis and Recognition (ICDAR 2007), Curitiba, Brazil, 23–26 September 2007; IEEE: Piscataway, NJ, USA, 2007. [Google Scholar] [CrossRef]

- Terrades, O.R.; Valveny, E.; Tabbone, S. On the Combination of Ridgelets Descriptors for Symbol Recognition. In Proceedings of the Graphics Recognition. Recent Advances and New Opportunities: 7th International Workshop, GREC 2007, Curitiba, Brazil, 20–21 September 2007; Selected Papers 7. Springer: Berlin/Heidelberg, Germany, 2008; pp. 40–50. [Google Scholar] [CrossRef]

- Luqman, M.M.; Brouard, T.; Ramel, J.Y. Graphic Symbol Recognition Using Graph Based Signature and Bayesian Network Classifier. In Proceedings of the 2009 10th International Conference on Document Analysis and Recognition, Barcelona, Spain, 26–29 July 2009; IEEE: Piscataway, NJ, USA, 2009. [Google Scholar] [CrossRef]

- Barrat, S.; Tabbone, S. A Bayesian network for combining descriptors: Application to symbol recognition. Int. J. Doc. Anal. Recognit. (IJDAR) 2010, 13, 65–75. [Google Scholar] [CrossRef]

- Coustaty, M.; Bertet, K.; Visani, M.; Ogier, J. A New Adaptive Structural Signature for Symbol Recognition by Using a Galois Lattice as a Classifier. IEEE Trans. Syst. Man Cybern. Part B (Cybernetics) 2011, 41, 1136–1148. [Google Scholar] [CrossRef] [PubMed]

- Ghosh, S.; Shaw, P.; Das, N.; Santosh, K.C. GSD-Net: Compact Network for Pixel-Level Graphical Symbol Detection. In Proceedings of the 2019 International Conference on Document Analysis and Recognition Workshops (ICDARW), Sydney, Australia, 22–25 August 2019; IEEE: Piscataway, NJ, USA, 2019. [Google Scholar] [CrossRef]

- Elliman, D. TIF2VEC, An Algorithm for Arc Segmentation in Engineering Drawings. In Graphics Recognition Algorithms and Applications; Springer: Berlin/Heidelberg, Germany, 2002; pp. 350–358. [Google Scholar] [CrossRef]

- Santosh, K.C.; Wendling, L.; Lamiroy, B. BoR: Bag-of-Relations for Symbol Retrieval. Int. J. Pattern Recognit. Artif. Intell. 2014, 28, 1450017. [Google Scholar] [CrossRef]

- Karasneh, B.; Chaudron, M.R. Img2UML: A System for Extracting UML Models from Images. In Proceedings of the 2013 39th Euromicro Conference on Software Engineering and Advanced Applications, Santander, Spain, 4–6 September 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 134–137. [Google Scholar] [CrossRef]

- Ho-Quang, T.; Chaudron, M.R.; Samuelsson, I.; Hjaltason, J.; Karasneh, B.; Osman, H. Automatic Classification of UML Class Diagrams from Images. In Proceedings of the 2014 21st Asia-Pacific Software Engineering Conference, Washington, DC, USA, 1–4 December 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 399–406. [Google Scholar] [CrossRef]

- Shcherban, S.; Liang, P.; Li, Z.; Yang, C. Multiclass Classification of UML Diagrams from Images Using Deep Learning. Int. J. Softw. Eng. Knowl. Eng. 2021, 31, 1683–1698. [Google Scholar] [CrossRef]

- Bunke, H. Automatic Interpretation of Lines and Text in Circuit Diagrams. In Pattern Recognition Theory and Applications; Springer: Dordrecht, The Netherlands, 1982; pp. 297–310. [Google Scholar] [CrossRef]

- Groen, F.C.; Sanderson, A.C.; Schlag, J.F. Symbol recognition in electrical diagrams using probabilistic graph matching. Pattern Recognit. Lett. 1985, 3, 343–350. [Google Scholar] [CrossRef]

- Okazaki, A.; Kondo, T.; Mori, K.; Tsunekawa, S.; Kawamoto, E. An automatic circuit diagram reader with loop-structure-based symbol recognition. IEEE Trans. Pattern Anal. Mach. Intell. 1988, 10, 331–341. [Google Scholar] [CrossRef]

- Fahn, C.S.; Wang, J.F.; Lee, J.Y. A topology-based component extractor for understanding electronic circuit diagrams. Comput. Vis. Graph. Image Process. 1988, 43, 279. [Google Scholar] [CrossRef]

- Lee, S.W.; Kim, J.H.; Groen, F.C. Translation-, Rotation- and Scale-Invariant Recognition of Hand-Drawn Symbols in Schematic Diagrams. Int. J. Pattern Recognit. Artif. Intell. 1990, 4, 1–25. [Google Scholar] [CrossRef]

- Lee, S.W. Recognizing Hand-Drawn Electrical Circuit Symbols with Attributed Graph Matching. In Structured Document Image Analysis; Springer: Berlin/Heidelberg, Germany, 1992; pp. 340–358. [Google Scholar] [CrossRef]

- Kim, S.H.; Suh, J.W.; Kim, J.H. Recognition of logic diagrams by identifying loops and rectilinear polylines. In Proceedings of the 2nd International Conference on Document Analysis and Recognition (ICDAR ’93), Tsukuba Science City, Japan, 20–22 October 1993. [Google Scholar] [CrossRef]

- Cheng, T.; Khan, J.; Liu, H.; Yun, D. A symbol recognition system. In Proceedings of the 2nd International Conference on Document Analysis and Recognition (ICDAR ’93), Tsukuba Science City, Japan, 20–22 October 1993. [Google Scholar] [CrossRef]

- Hamada, A.H. A new system for the analysis of schematic diagrams. In Proceedings of the 2nd International Conference on Document Analysis and Recognition (ICDAR ’93), Tsukuba Science City, Japan, 20–22 October 1993. [Google Scholar] [CrossRef]

- Yu, B. Automatic understanding of symbol-connected diagrams. In Proceedings of the 3rd International Conference on Document Analysis and Recognition, Montreal, QC, Canada, 14–16 August 1995. [Google Scholar] [CrossRef]

- Yan, L.; Wenyin, L. Engineering drawings recognition using a case-based approach. In Proceedings of the Seventh International Conference on Document Analysis and Recognition, Edinburgh, UK, 6 August 2003. [Google Scholar] [CrossRef]

- Zesheng, S.; Jing, Y.; Chunhong, J.; Yonggui, W. Symbol recognition in electronic diagrams using decision tree. In Proceedings of the 1994 IEEE International Conference on Industrial Technology—ICIT ’94, Guangzhou, China, 5–9 December 1994; IEEE: Piscataway, NJ, USA, 1994. [Google Scholar] [CrossRef]

- Baum, L.; Boose, J.; Boose, M.; Chaplin, C.; Provine, R. Extracting System-Level Understanding from Wiring Diagram Manuals. In Graphics Recognition. Recent Advances and Perspectives; Springer: Berlin/Heidelberg, Germany, 2004; pp. 100–108. [Google Scholar] [CrossRef]

- Ouyang, T.Y.; Davis, R. A visual approach to sketched symbol recognition. In Proceedings of the 21st International Joint Conference on Artificial Intelligence, IJCAI ’09, Pasadena, CA, USA, 11–17 July 2009; Morgan Kaufmann Publishers Inc.: Burlington, MA, USA, 2009. [Google Scholar]

- Feng, G.; Viard-Gaudin, C.; Sun, Z. On-line hand-drawn electric circuit diagram recognition using 2D dynamic programming. Pattern Recognit. 2009, 42, 3215–3223. [Google Scholar] [CrossRef]

- De, P.; Mandal, S.; Bhowmick, P. Recognition of electrical symbols in document images using morphology and geometric analysis. In Proceedings of the 2011 International Conference on Image Information Processing, Shimla, India, 3–5 November 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 1–6. [Google Scholar] [CrossRef]

- Santosh, K.C.; Lamiroy, B.; Wendling, L. Symbol recognition using spatial relations. Pattern Recognit. Lett. 2012, 33, 331–341. [Google Scholar] [CrossRef]

- Bailey, D.; Norman, A.; Moretti, G. Electronic Schematic Recognition; Massey University: Wellington, New Zealand, 1995. [Google Scholar]

- Datta, R.; Mandal, P.D.S.; Chanda, B. Detection and identification of logic gates from document images using mathematical morphology. In Proceedings of the 2015 Fifth National Conference on Computer Vision, Pattern Recognition, Image Processing and Graphics (NCVPRIPG), Patna, India, 16–19 December 2015; IEEE: Piscataway, NJ, USA, 2015. [Google Scholar] [CrossRef]

- Rabbani, M.; Khoshkangini, R.; Nagendraswamy, H.S.; Conti, M. Hand Drawn Optical Circuit Recognition. Procedia Comput. Sci. 2016, 84, 41–48. [Google Scholar] [CrossRef]

- Agarwal, S.; Agrawal, M.; Chaudhury, S. Recognizing Electronic Circuits to Enrich Web Documents for Electronic Simulation. In Graphic Recognition. Current Trends and Challenges; Springer International Publishing: Cham, Switzerland, 2017; pp. 60–74. [Google Scholar] [CrossRef]

- Stoitchkov, D. Analysis of Methods for Automated Symbol Recognition in Technical Drawings. Bachelor’s Thesis, Technical University of Munich, Munich, Germany, 2018. [Google Scholar]

- Datta, R.; Mandal, S.; Biswas, S. Automatic Abstraction of Combinational Logic Circuit from Scanned Document Page Images. Pattern Recognit. Image Anal. 2019, 29, 212–223. [Google Scholar] [CrossRef]

- Peng, Z.; Yan, G.; Zhongshan, Q.; Huiyong, L.; Mouying, L.; Shengnan, L. CIM/G graphics automatic generation in substation primary wiring diagram based on image recognition. J. Phys. Conf. Ser. 2020, 1617, 012007. [Google Scholar] [CrossRef]

- Thoma, F.; Bayer, J.; Li, Y.; Dengel, A. A public ground-truth dataset for handwritten circuit diagram images. In Proceedings of the International Conference on Document Analysis and Recognition, Lausanne, Switzerland, 5–10 September 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 20–27. [Google Scholar]

- Shen, C.; Lv, P.; Mao, M.; Li, W.; Zhao, K.; Yan, Z. Substation One-Line Diagram Automatic Generation Based On Image Recognition. In Proceedings of the 2022 Global Conference on Robotics, Artificial Intelligence and Information Technology (GCRAIT), Chicago, IL, USA, 30–31 July 2022; pp. 247–251. [Google Scholar] [CrossRef]

- Ramadhan, D.S.; Al-Khaffaf, H.S.M. Symbol Spotting in Electronic Images Using Morphological Filters and Hough Transform. Sci. J. Univ. Zakho 2022, 10, 119–129. [Google Scholar]

- Bayer, J.; Roy, A.K.; Dengel, A. Instance segmentation based graph extraction for handwritten circuit diagram images. arXiv 2023, arXiv:2301.03155. [Google Scholar]

- Uzair, W.; Chai, D.; Rassau, A. Electronet: An Enhanced Model for Small-Scale Object Detection in Electrical Schematic Diagrams. 2023. Available online: https://www.researchgate.net/publication/372298462_ElectroNet_An_Enhanced_Model_for_Small-Scale_Object_Detection_in_Electrical_Schematic_Diagrams (accessed on 9 July 2024).

- Bhanbhro, H.; Hooi, Y.K.; Zakaria, M.N.B.; Hassan, Z.; Pitafi, S. Single Line Electrical Drawings (SLED): A Multiclass Dataset Benchmarked by Deep Neural Networks. In Proceedings of the 2023 IEEE 13th International Conference on System Engineering and Technology (ICSET), Shah Alam, Malaysia, 2 October 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 66–71. [Google Scholar]

- Yang, C.; Wang, J.; Yang, L.; Shi, D.; Duan, X. Intelligent Digitization of Substation One-Line Diagrams Based on Computer Vision. IEEE Trans. Power Deliv. 2023, 38, 3912–3923. [Google Scholar] [CrossRef]

- Wenyin, L.; Zhang, W.; Yan, L. An interactive example-driven approach to graphics recognition in engineering drawings. Int. J. Doc. Anal. Recognit. (IJDAR) 2007, 9, 13–29. [Google Scholar] [CrossRef]

- Qureshi, R.J.; Ramel, J.Y.; Barret, D.; Cardot, H. Spotting Symbols in Line Drawing Images Using Graph Representations. In Proceedings of the Graphics Recognition. Recent Advances and New Opportunities: 7th International Workshop, GREC 2007, Curitiba, Brazil, 20–21 September 2007; Selected Papers 7. Springer: Berlin/Heidelberg, Germany, 2008; pp. 91–103. [Google Scholar] [CrossRef]

- Yu, Y.; Samal, A.; Seth, S. Isolating symbols from connection lines in a class of engineering drawings. Pattern Recognit. 1994, 27, 391–404. [Google Scholar] [CrossRef]

- Yu, Y.; Samal, A.; Seth, S.C. A system for recognizing a large class of engineering drawings. IEEE Trans. Pattern Anal. Mach. Intell. 1997, 19, 868–890. [Google Scholar] [CrossRef]

- Pham, T.A.; Delalandre, M.; Barrat, S.; Ramel, J.Y. Accurate junction detection and characterization in line-drawing images. Pattern Recognit. 2014, 47, 282–295. [Google Scholar] [CrossRef]