Feature-Model-Based In-Process Measurement of Machining Precision Using Computer Vision

Abstract

1. Introduction

2. Measurement Algorithm

2.1. Image Preprocessing

2.1.1. Image Interpolation

2.1.2. Weighted Median Filter

2.2. Establishing a Machining Feature Model for Design

2.2.1. Parsing the Set of Points Generated by Straight Lines

2.2.2. Parsing the Point Set Generated by Arcs

2.3. Matching of Machining Feature Model with Part Drawing

| Algorithm 1: Feature-Model-Based ROI Construction |
|---|
| Input: CAD 2D drawing, part image I |
| Output: ROI image |
| 1. Begin |
| 2. convert the CAD drawing to DXF format |
| 3. read the element information from the DXF file |
| 4. for i = 1 to the number of elements |
| 5. if the current element is a line |
| 6. obtain the coordinates of the straight line L(x, y) according to Formulas (7) and (8) |
| 7. else if the current element is an arc |
| 8. obtain the coordinates of the arc R(x, y) according to Formulas (10) and (11) |
| 9. end |
| 10. end |
| 11. add the coordinates L and R to the machining feature model Im |
| 12. match Im with I → ROI image |
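The element loop of Algorithm 1 can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: DXF parsing is skipped and the elements are given as plain dictionaries, Formulas (7), (8), (10), and (11) are not reproduced in this section so standard parametric sampling of segments and arcs is used instead, and the model is assumed to be already aligned with the part image (the matching step is not covered). All function names, dictionary keys, and the `margin` parameter are hypothetical.

```python
import numpy as np

def line_points(x0, y0, x1, y1):
    """Sample integer pixel coordinates along a straight segment."""
    n = int(max(abs(x1 - x0), abs(y1 - y0))) + 1
    t = np.linspace(0.0, 1.0, n)
    xs = np.rint(x0 + t * (x1 - x0)).astype(int)
    ys = np.rint(y0 + t * (y1 - y0)).astype(int)
    return xs, ys

def arc_points(cx, cy, r, start_deg, end_deg):
    """Sample integer pixel coordinates along an arc from start_deg to end_deg."""
    if end_deg <= start_deg:
        end_deg += 360.0
    span = np.deg2rad(end_deg - start_deg)
    n = 2 * int(np.ceil(r * span)) + 2  # roughly two samples per pixel of arc length
    theta = np.deg2rad(start_deg) + np.linspace(0.0, span, n)
    xs = np.rint(cx + r * np.cos(theta)).astype(int)
    ys = np.rint(cy + r * np.sin(theta)).astype(int)
    return xs, ys

def build_feature_model(entities, shape):
    """Rasterise line and arc elements into a binary feature model (Im)."""
    model = np.zeros(shape, dtype=bool)
    for e in entities:
        if e["type"] == "line":
            xs, ys = line_points(*e["start"], *e["end"])
        elif e["type"] == "arc":
            xs, ys = arc_points(*e["center"], e["radius"],
                                e["start_angle"], e["end_angle"])
        else:
            continue  # other element types are ignored in this sketch
        keep = (xs >= 0) & (xs < shape[1]) & (ys >= 0) & (ys < shape[0])
        model[ys[keep], xs[keep]] = True
    return model

def roi_from_model(model, margin=2):
    """Grow the model by `margin` pixels (4-neighbourhood) to form the ROI."""
    roi = model.copy()
    for _ in range(margin):
        grown = roi.copy()
        grown[1:, :] |= roi[:-1, :]
        grown[:-1, :] |= roi[1:, :]
        grown[:, 1:] |= roi[:, :-1]
        grown[:, :-1] |= roi[:, 1:]
        roi = grown
    return roi

# Hypothetical drawing: one horizontal line and one full circle, in pixel units.
entities = [
    {"type": "line", "start": (5, 5), "end": (25, 5)},
    {"type": "arc", "center": (20, 20), "radius": 8,
     "start_angle": 0, "end_angle": 360},
]
model = build_feature_model(entities, (40, 40))
roi = roi_from_model(model, margin=2)
```

Growing the model by a small margin is one simple way to let the ROI tolerate slight misalignment between the drawing and the image; the margin value here is arbitrary.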

2.4. ROI-Based Canny Edge Detection

2.4.1. Gaussian Filtering for Smoothing Images

2.4.2. Gradient Calculation of ROI Pixels

2.4.3. Non-Maximum Suppression and Dual Threshold Processing

| Algorithm 2: Improved Canny Edge Detection |
|---|
| Input: part image I, ROI image IROI |
| Output: edge image Iout |
| 1. Begin |
| 2. I ← apply Formulas (2) and (4) to I |
| 3. for i = IROI(x) |
| 4. for j = IROI(y) |
| 5. IG(x, y) ← apply Formula (13) to I |
| 6. Gp ← apply Formula (16) to IG(x, y) |
| 7. if Gp ≥ Gp1 and Gp ≥ Gp2 |
| 8. Gp may be an edge |
| 9. else |
| 10. Gp should be suppressed |
| 11. end |
| 12. if Gp ≥ HighThreshold |
| 13. Gp is a strong edge |
| 14. else if Gp > LowThreshold |
| 15. Gp is a weak edge |
| 16. else |
| 17. Gp should be suppressed |
| 18. end |
| 19. if Gp is a weak edge and is connected to a strong edge pixel |
| 20. Gp is a strong edge |
| 21. else if Gp is a weak edge |
| 22. Gp should be suppressed |
| 23. end |
| 24. Iout(x, y) = Gp |
| 25. end |
| 26. end |
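The ROI-restricted part of Algorithm 2 can be sketched in NumPy as follows. This is an illustrative sketch under stated assumptions rather than the authors' code: the preprocessing of step 2 is omitted, a fixed 3 × 3 Gaussian kernel and Sobel operators stand in for Formulas (13) and (16), which are not reproduced in this section, and the names `conv3` and `roi_canny` are hypothetical. Non-maximum suppression and thresholding are evaluated only at pixels inside the ROI mask.

```python
import numpy as np

GAUSS = np.array([[1, 2, 1], [2, 4, 2], [1, 2, 1]], dtype=float) / 16.0
SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)
SOBEL_Y = SOBEL_X.T

def conv3(img, k):
    """3x3 correlation with edge padding."""
    h, w = img.shape
    p = np.pad(img.astype(float), 1, mode="edge")
    out = np.zeros((h, w), dtype=float)
    for dy in range(3):
        for dx in range(3):
            out += k[dy, dx] * p[dy:dy + h, dx:dx + w]
    return out

def roi_canny(img, roi, low, high):
    """Canny-style edge detection evaluated only where `roi` is True."""
    h, w = img.shape
    smooth = conv3(img, GAUSS)
    gx, gy = conv3(smooth, SOBEL_X), conv3(smooth, SOBEL_Y)
    mag = np.hypot(gx, gy)
    # Quantise the gradient direction to 0, 45, 90, or 135 degrees.
    ang = (np.rad2deg(np.arctan2(gy, gx)) + 180.0) % 180.0
    sector = np.rint(ang / 45.0).astype(int) % 4
    offsets = {0: (0, 1), 1: (1, 1), 2: (1, 0), 3: (1, -1)}  # (dy, dx)
    strong = np.zeros((h, w), dtype=bool)
    weak = np.zeros((h, w), dtype=bool)
    for y, x in zip(*np.nonzero(roi)):
        if y in (0, h - 1) or x in (0, w - 1):
            continue  # image border pixels are skipped
        dy, dx = offsets[int(sector[y, x])]
        g = mag[y, x]
        if g < mag[y + dy, x + dx] or g < mag[y - dy, x - dx]:
            continue  # not a local maximum along the gradient direction
        if g >= high:
            strong[y, x] = True
        elif g > low:
            weak[y, x] = True
    # Hysteresis: promote weak pixels that touch an edge pixel, until stable.
    edges = strong.copy()
    while True:
        p = np.pad(edges, 1)
        near = np.zeros_like(edges)
        for dy in range(3):
            for dx in range(3):
                near |= p[dy:dy + h, dx:dx + w]
        grown = edges | (weak & near)
        if (grown == edges).all():
            return edges
        edges = grown

# Synthetic example: a vertical step edge, with the ROI limited to one row.
img = np.zeros((20, 20))
img[:, 10:] = 100.0
roi = np.zeros((20, 20), dtype=bool)
roi[10, :] = True
edges = roi_canny(img, roi, low=50.0, high=200.0)
```

Because the comparison in step 7 uses ≥, an ideal step edge can yield two adjacent pixels of equal gradient magnitude, and this sketch keeps both. In this version the smoothing and gradient are still computed over the whole image; only the per-pixel decisions are limited to the ROI.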

2.5. Actual Machining Feature Extraction

2.5.1. Hough Transform Line Feature Detection

2.5.2. Hough Transform Circle Feature Detection

3. Measurement Implementation and Verification

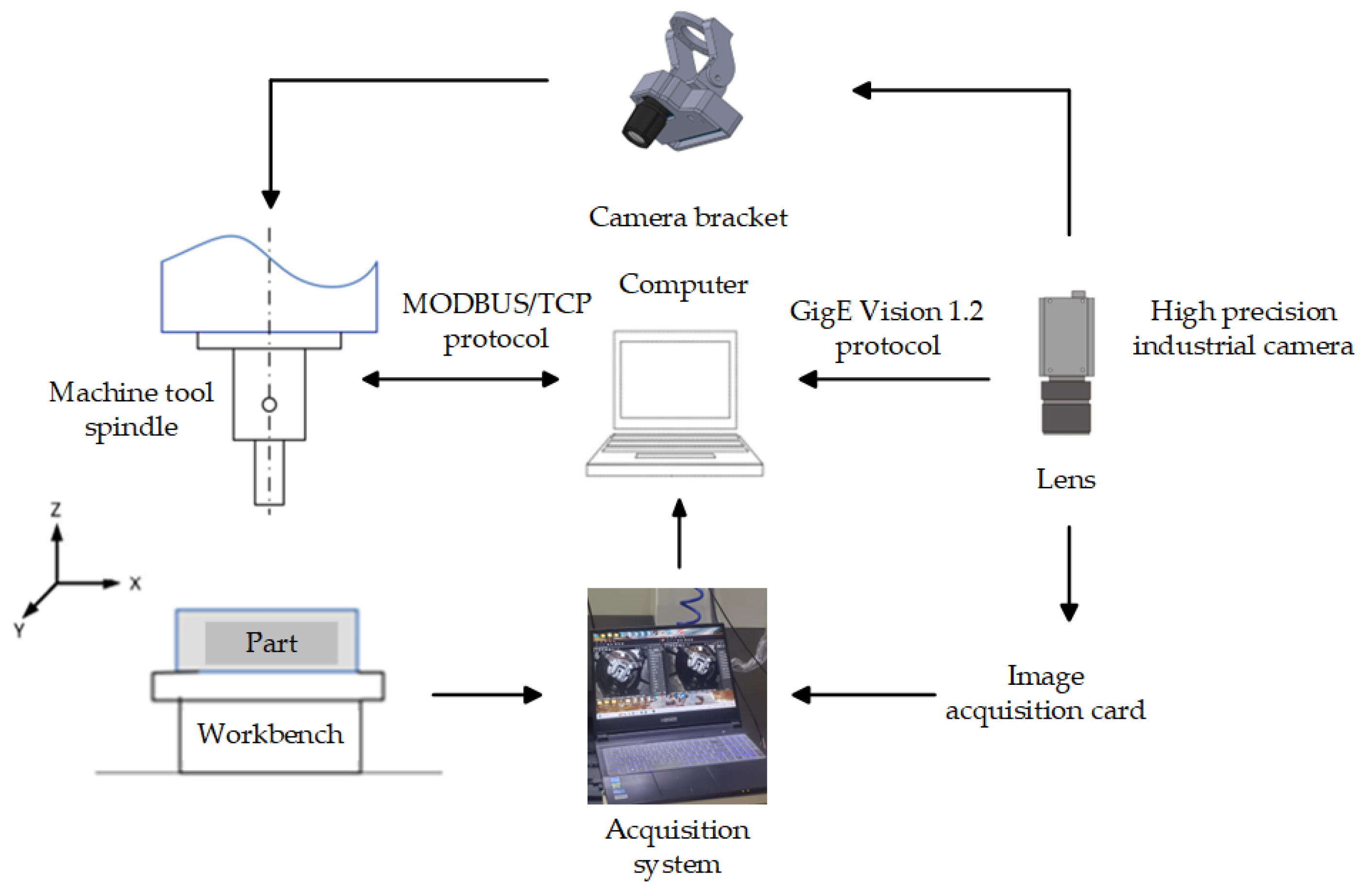

3.1. Experimental Environment Configuration

- The industrial camera is connected to the computer through a standard GigE interface, which carries both image data and camera control signals.

- The industrial camera is mounted on a bracket above the machining area of the CNC milling machine. From this position it captures real-time images of the machined parts for subsequent image processing and measurement.

- The parts to be machined are placed on the work table of the CNC milling machine, within the camera's field of view. Keeping the parts on the work table also keeps their position and orientation relatively stable during each machining process.

- On this experimental platform, the method described in this paper performs real-time image acquisition, edge extraction, and dimension measurement of the parts being machined. The measurement results are compared with the actual dimensions to verify the performance and applicability of the algorithm.

3.2. Measurement of Box Components

3.2.1. Measurement Experiment of Box Components

3.2.2. Results Analysis

3.3. Measurement of Rectangular Sealing Gaskets

3.3.1. Measurement of Straightness of Rectangular Sealing Gaskets

3.3.2. Analysis of Processing Results

3.4. Measurement of Flange Workpieces

3.4.1. Measurement of Roundness of Flange Workpiece

3.4.2. Analysis of Measurement Results

3.5. ROI Edge Extraction Analysis

4. Conclusions

- (1) The developed image acquisition system captures images in real time during part manufacturing. Using ROI extraction, image deblurring, denoising, and edge detection, the system completes detection within 0.16 s and produces clear workpiece contours.

- (2) Compared with the measurements of a coordinate measuring machine, the proposed measurement method achieved a relative accuracy of 97% for straightness and 96% for roundness.

- (3) The experimental results show that the inner-groove measurements reached a relative accuracy of 99% with respect to the coordinate measuring machine results. In addition, the detection results were analyzed and compared, and the factors that affect the detection accuracy of this method were summarized.
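As a worked check of the inner-groove figure, the sketch below computes a relative accuracy from the inner-groove length values tabulated in this paper. The definition used, 1 − |measured − reference| / |reference|, is an assumption, since this section does not state how relative accuracy was calculated; the function name is likewise hypothetical.

```python
def relative_accuracy(measured, reference):
    """Assumed definition: 1 - |measured - reference| / |reference|."""
    return 1.0 - abs(measured - reference) / abs(reference)

# Inner-groove length in mm for parts 1 to 5:
# (coordinate measuring machine, proposed algorithm)
groove_length = [
    (43.993, 43.979),
    (44.005, 43.989),
    (44.042, 44.048),
    (44.027, 44.044),
    (44.005, 44.018),
]

accuracies = [relative_accuracy(m, ref) for ref, m in groove_length]
worst = min(accuracies)
print(f"worst-case relative accuracy: {worst:.4%}")
```

Under this assumed definition, every one of the five parts stays above the 99% level quoted in conclusion (3).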

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Camera Model | Sensor Size | Pixel Size | Maximum Resolution | Maximum Frame Rate | Overall Dimensions |
|---|---|---|---|---|---|
| MV-CA060-11GM | 1/1.8 in. | 3.75 × 3.75 µm | 3072 × 2048 pixels | 17 fps | 29 × 29 × 29 mm |

| Measurement Position | Part | Coordinate Measuring Machine (mm) | Proposed Algorithm (mm) | Sub-Pixel Edge Detection Algorithm (mm) | Otsu–Canny Edge Detection Algorithm (mm) | Canny Edge Detection Algorithm (mm) |
|---|---|---|---|---|---|---|
| Length of the inner groove | part 1 | 43.993 | 43.979 | 45.374 | 43.938 | 43.997 |
| | part 2 | 44.005 | 43.989 | 44.823 | 44.324 | 44.118 |
| | part 3 | 44.042 | 44.048 | 44.616 | 42.153 | 44.152 |
| | part 4 | 44.027 | 44.044 | 44.099 | 42.687 | 44.100 |
| | part 5 | 44.005 | 44.018 | 43.961 | 44.031 | 44.200 |
| Width of the inner groove | part 1 | 30.002 | 29.991 | 29.664 | 29.096 | 30.060 |
| | part 2 | 29.980 | 29.991 | 28.596 | 29.974 | 29.991 |
| | part 3 | 30.026 | 30.022 | 30.319 | 30.043 | 30.181 |
| | part 4 | 30.017 | 29.991 | 29.761 | 29.233 | 29.991 |
| | part 5 | 30.008 | 29.991 | 28.010 | 30.009 | 30.043 |
| Distance from the circle to the edge | part 1 | 30.588 | 30.554 | 30.564 | 30.549 | 37.349 |
| | part 2 | 30.581 | 30.711 | 30.498 | 30.576 | 30.047 |
| | part 3 | 30.496 | 30.411 | 30.318 | 30.623 | 35.380 |
| | part 4 | 30.496 | 30.501 | 30.569 | 30.551 | 28.858 |
| | part 5 | 30.591 | 30.581 | 30.646 | 30.676 | 17.724 |

| Measurement Position | Part | Coordinate Measuring Machine (mm) | Proposed Algorithm (mm) | Sub-Pixel Edge Detection Algorithm (mm) | Otsu–Canny Edge Detection Algorithm (mm) | Canny Edge Detection Algorithm (mm) |
|---|---|---|---|---|---|---|
| Straightness of the outside line 1 | part 1 | 0.015 | 0.014 | 0.013 | 0.018 | 0.021 |
| | part 2 | 0.044 | 0.050 | 0.057 | 0.041 | 0.059 |
| | part 3 | 0.031 | 0.037 | 0.082 | 0.045 | 0.042 |
| | part 4 | 0.029 | 0.026 | 0.036 | 0.040 | 0.052 |
| | part 5 | 0.052 | 0.049 | 0.060 | 0.077 | 0.072 |
| Straightness of the outside line 2 | part 1 | 0.047 | 0.043 | 0.039 | 0.041 | 0.041 |
| | part 2 | 0.027 | 0.031 | 0.021 | 0.037 | 0.035 |
| | part 3 | 0.040 | 0.043 | 0.066 | 0.048 | 0.051 |
| | part 4 | 0.059 | 0.052 | 0.050 | 0.049 | 0.050 |
| | part 5 | 0.047 | 0.038 | 0.046 | 0.040 | 0.042 |
| Straightness of the inside line 1 | part 1 | 0.035 | 0.036 | 0.038 | 0.037 | 0.040 |
| | part 2 | 0.017 | 0.023 | 0.029 | 0.038 | 0.025 |
| | part 3 | 0.015 | 0.025 | 0.023 | 0.026 | 0.030 |
| | part 4 | 0.026 | 0.025 | 0.020 | 0.031 | 0.033 |
| | part 5 | 0.027 | 0.033 | 0.033 | 0.040 | 0.043 |
| Straightness of the inside line 2 | part 1 | 0.030 | 0.026 | 0.038 | 0.030 | 0.036 |
| | part 2 | 0.046 | 0.040 | 0.063 | 0.061 | 0.066 |
| | part 3 | 0.016 | 0.026 | 0.038 | 0.028 | 0.035 |
| | part 4 | 0.018 | 0.014 | 0.028 | 0.032 | 0.029 |
| | part 5 | 0.023 | 0.033 | 0.033 | 0.037 | 0.040 |

| Measurement Position | Part | Coordinate Measuring Machine (mm) | Proposed Algorithm (mm) | Sub-Pixel Edge Detection Algorithm (mm) | Otsu–Canny Edge Detection Algorithm (mm) | Canny Edge Detection Algorithm (mm) |
|---|---|---|---|---|---|---|
| Inner roundness | part 1 | 0.067 | 0.062 | 0.061 | 0.050 | 0.052 |
| | part 2 | 0.062 | 0.068 | 0.063 | 0.130 | 0.048 |
| | part 3 | 0.069 | 0.062 | 0.079 | 0.092 | 0.095 |
| | part 4 | 0.043 | 0.043 | 0.708 | 0.069 | 0.057 |
| | part 5 | 0.070 | 0.072 | 0.068 | 0.082 | 0.078 |
| Outer roundness | part 1 | 0.073 | 0.780 | 0.075 | 0.126 | 0.095 |
| | part 2 | 0.082 | 0.070 | 0.026 | 0.022 | 0.042 |
| | part 3 | 0.076 | 0.073 | 0.097 | 0.058 | 0.062 |
| | part 4 | 0.077 | 0.070 | 0.039 | 0.031 | 0.035 |
| | part 5 | 0.070 | 0.062 | 0.076 | 0.067 | 0.073 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Z.; Liao, W.; Zhang, L.; Ren, Y.; Sun, G.; Sang, Y. Feature-Model-Based In-Process Measurement of Machining Precision Using Computer Vision. Appl. Sci. 2024, 14, 6094. https://doi.org/10.3390/app14146094
