Privacy-Preserving Byzantine-Resilient Swarm Learning for E-Healthcare

Abstract

1. Introduction

- PBSL can not only protect the privacy of local sensitive data, but also ensure the confidentiality of the global collaborative model. Using the fully homomorphic encryption (FHE) scheme CKKS, PBSL encrypts the local gradient submitted by the medical center and aggregates to generate a global collaborative model without decryption.

- PBSL can achieve swarm learning (SL) that resists Byzantine attacks. In order to resist Byzantine attacks, we propose a privacy-protecting Byzantine-robust aggregation method, which uses secure cosine similarity to punish malicious .

- PBSL prototype is implemented by integrating deep learning and blockchain-based smart contract. Moreover, PBSL is tested using real medical datasets to evaluate its effectiveness. Experimental results show that PBSL is effective.

2. Related Work

2.1. Privacy-Preserving Federated Learning

2.2. Byzantine-Robust Federated Learning

2.2.1. Byzantine Attack

2.2.2. Byzantine-Robust Federated Learning

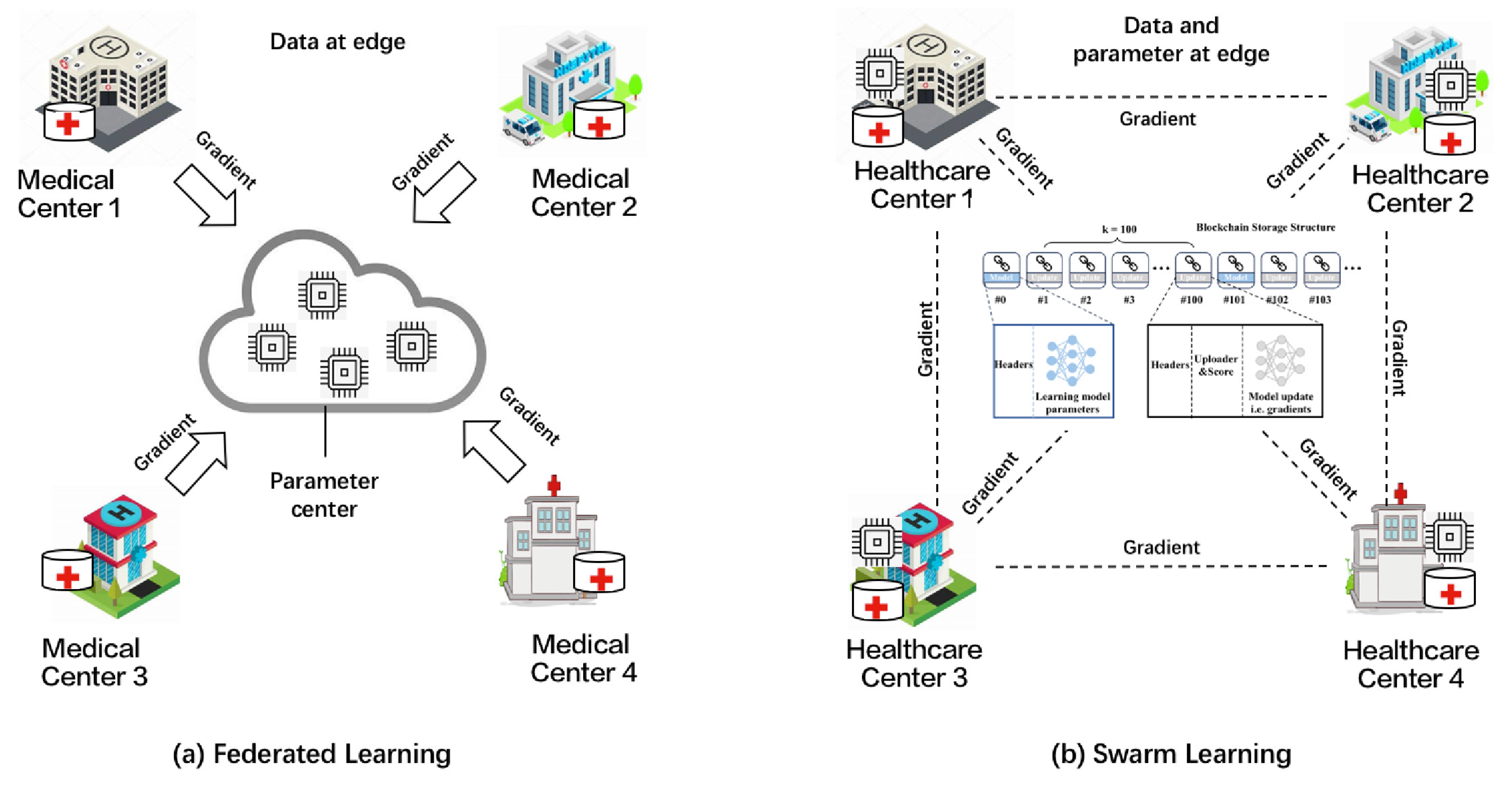

2.3. Blockchain-Based Federated Learning and Swarm Learning

3. Preliminaries

3.1. Swarm Learning

3.2. The CKKS Scheme

- (1)

- Key Generation: . This procedure generates a public key for encryption, a corresponding secret key for decryption, and a key for evaluation.

- (2)

- Encoding: . Taking an -dimensional vector and a scaling factor s as inputs, the procedure transforms to a polynomial m by encoding, i.e., , where and are the inverses of and , respectively.

- (3)

- Encryption: . Taking the given polynomial and public key as inputs, the procedure outputs the cipher-text .

- (4)

- Addition: . Taking the cipher-texts and as inputs, the procedure outputs .

- (5)

- Multiplication: . Taking the cipher-texts and and the evaluation key as inputs, the procedure outputs .

- (6)

- Rescaling: . Taking a cipher-text at level l as inputs, the procedure outputs the cipher-text in , where represents the integer closest to the real number x.

- (7)

- Decryption: . Taking the cipher-text c and the secret key as inputs, the procedure outputs the polynomial m.

- (8)

- Decoding: . Taking the plaintext polynomial as inputs, the procedure transforms into a vector using .

3.3. Threshold Fully Homomorphic Encryption

- : The algorithm inputs a security parameter , a circuit depth d, and an access structure , and outputs a public key , and secret key shares .

- : The algorithm inputs a public key and a single-bit plaintext , and outputs a cipher-text .

- : The algorithm inputs a public key , a boolean circuit of and cipher-texts encrypted under the same public key, and outputs an evaluation cipher-text .

- : The algorithm inputs a public key , a cipher-text and a secret key share . and outputs a partial decryption related to the party .

- : The algorithm inputs a public key , a set for some where we recall that we identify a party with its index i, and deterministically outputs a plaintext .

- : The algorithm inputs , d, and , and generates . Then, is divided into shares using .

- : The algorithm inputs and , computes , and output .

- : The algorithm inputs , C and , computes , and outputs .

- : The algorithm inputs , , and , computes , and outputs .

- : The algorithm inputs and , and checks if . If this is not the case, then it outputs ⊥. Otherwise, it arbitrarily chooses a satisfying set of size t and computes the Lagrange coefficients for all . Then, it computes , and outputs .

4. Problem Statement

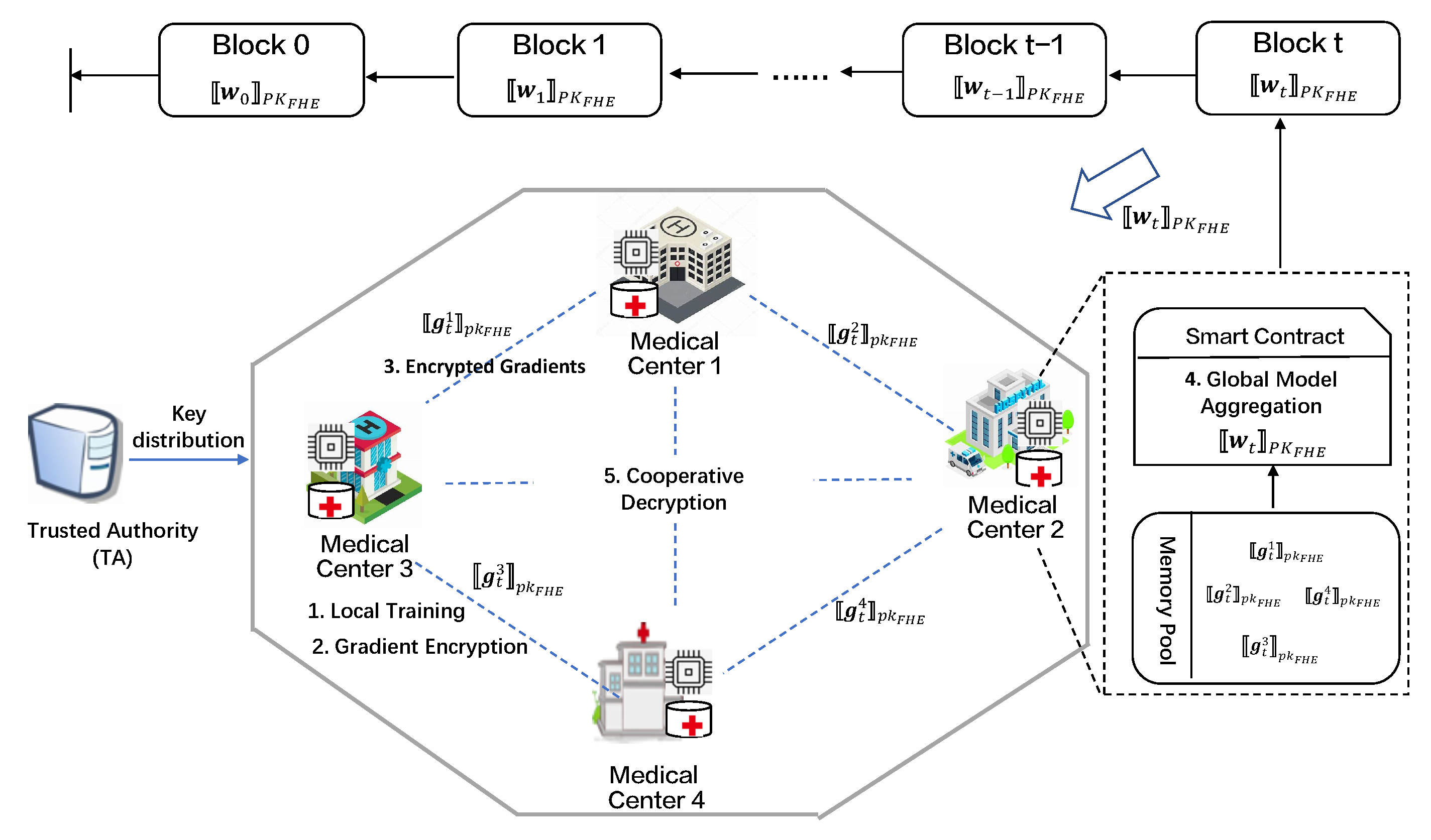

4.1. System Model

- stands for Trusted Authority and is responsible for generating initial parameters and public/secret key pairs and distributing them to .

- represents K medical centers participating in swarm learning. In our system, each trains the model using its local medical dataset, encrypts the gradient, shares the encrypted gradient to the blockchain, and cooperatively trains with other to achieve a more accurate global model.

- represents a blockchain platform made up of self-organizing networks of interconnected medical centers (). Every node (i.e., ) on blockchain has the opportunity to aggregate the global model, so can replace a central aggregator. Smart contract (SC) deployed on the blockchain homomorphically aggregates the encrypted gradients to obtain the global collaboration model.

4.2. Threat Model

- Privacy: Since local gradients contain ’ private information, if the directly upload the local gradients in plaintext, the adversaries can infer the private information of honest , which leads to data leakage of .

- Byzantine Attacks: Byzantine attack refers to the arbitrary behavior of the system participant who does not follow the designed protocol. For example, a Byzantine randomly sends a vector in place of the local gradient, or mistakenly aggregates the local gradient of all .

- Poisoning Attacks: Poison attack refers to various malicious behaviors launched by the . For example, an flips the label of the sample and uploads the toxic gradient.

4.3. Design Goals

- Confidentiality: Guaranteeing the confidentiality of the global model stored in the blockchain. Specifically, after swarm learning, only can access the global model stored in the blockchain.

- Privacy: Guaranteeing the privacy of each ’ local sensitive information. Specifically, during swarm learning, every ’ local training data cannot be leaked to other .

- Robustness: Detecting malicious and discarding their local gradients during swarm learning. Specifically, a mechanism is constructed to identify malicious medical centers and discard their submitted local model gradients.

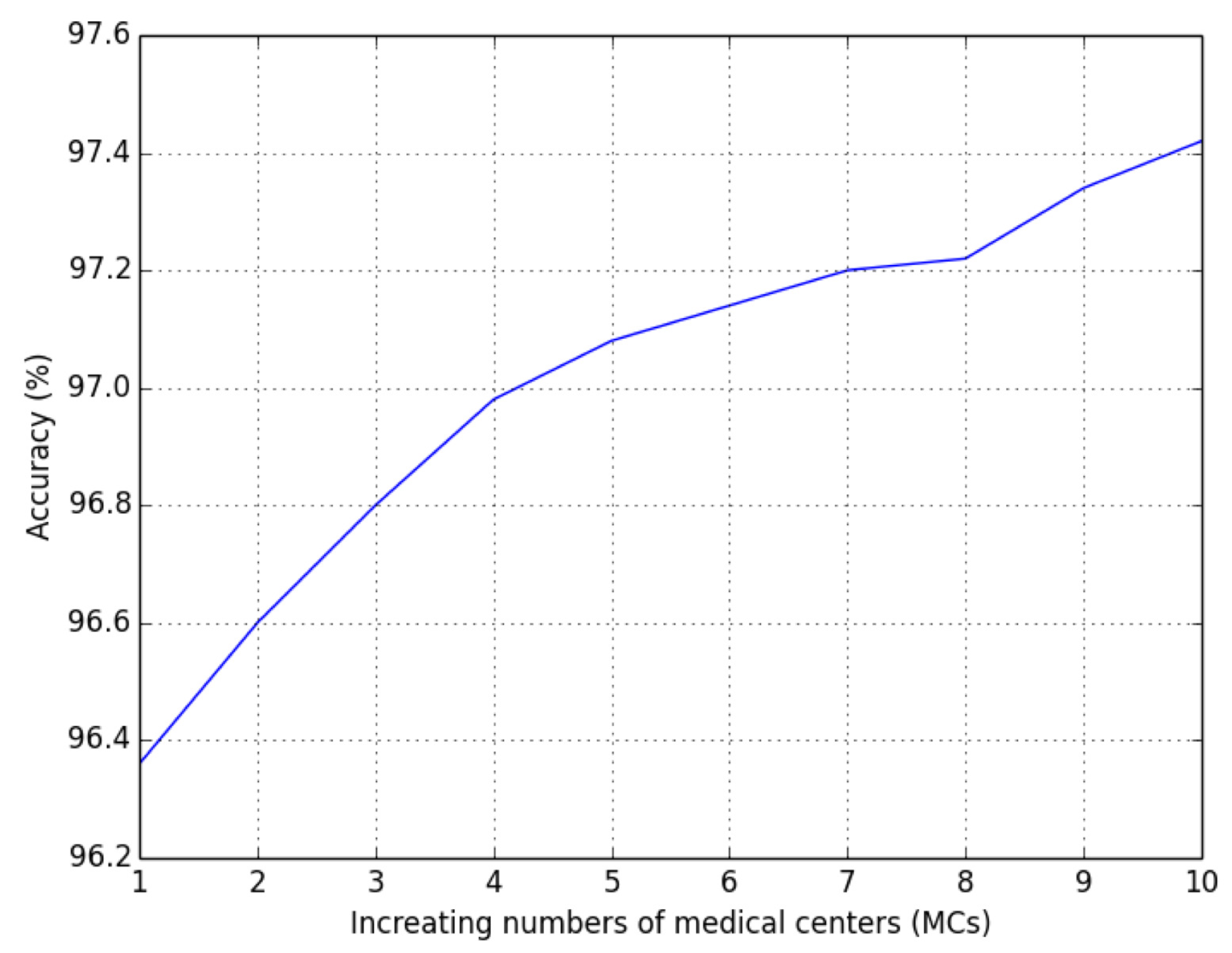

- Accuracy: While protecting data privacy and resisting malicious attacks, the model accuracy of PBSL training should be the same as that of the original SL training.

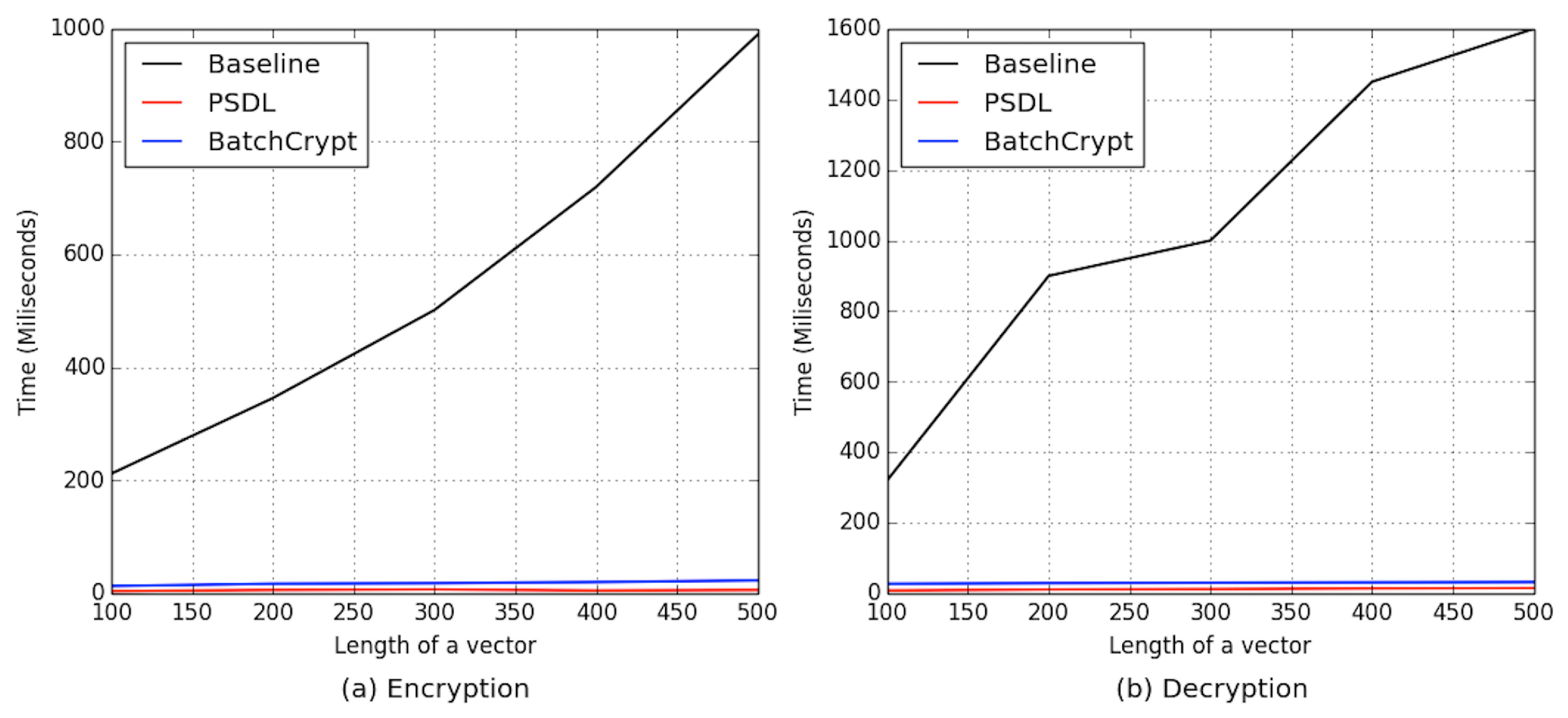

- Efficiency: Reducing the high computational and communication cost of encryption operations in a privacy-preserving SL scheme.

- Tolerance to drop-out: Guaranteeing the privacy and convergence even if up to D are delayed or dropped.

5. Our Privacy-Preserving Swarm Learning

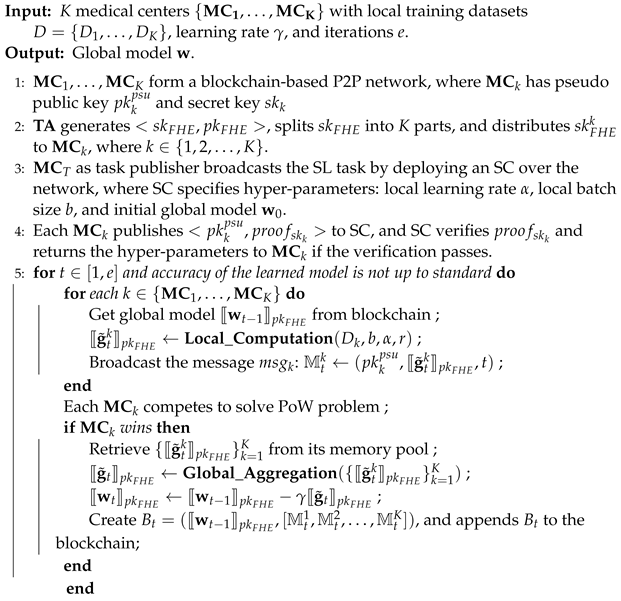

| Algorithm 1: PBSL |

|

5.1. System Initialization

5.1.1. Blockchain P2P Network Construction

5.1.2. Cryptosystem Initialization

5.1.3. Registration

5.1.4. Parameter Initialization

5.2. Local Computation

5.2.1. Local Model Training

| Algorithm 2: Local_Computation |

|

5.2.2. Normalization

5.2.3. Encryption

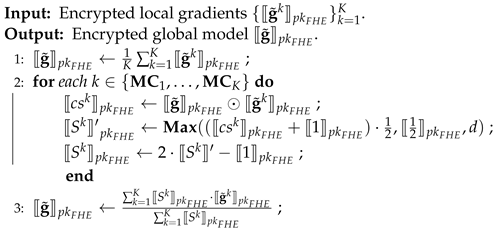

| Algorithm 3: Global_Aggregation |

|

5.2.4. Message Encapsulation and Sharing

5.3. Global Aggregation

5.3.1. PoW Competition

5.3.2. Homomorphic Aggregation

5.3.3. Secure Cosine Similarity Computation

5.3.4. Model Aggregation and Updating

5.3.5. Construction of New Block

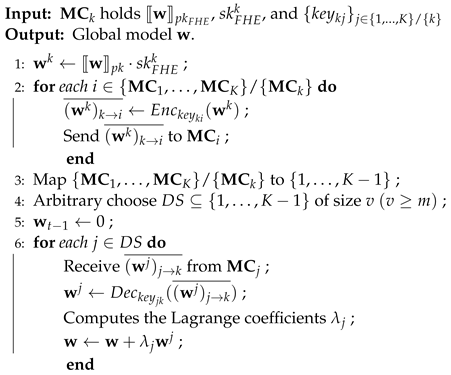

5.4. Collaborative Global Model Decryption

| Algorithm 4: Collab_Decryption |

|

6. Security Analysis of PBSL

6.1. Privacy and Confidentiality

- In the stage of local computation, is encrypted with , i.e.,where and , and splits into K distributed secret keys for . Because any with only a single cannot decrypt , PBSL can protect the privacy of at this stage.

- At the stage of global model aggregation, the winning homomorphically aggregates to generate , then homomorphically calculates by , and updatesThe whole process is performed on cipher-text. And with only a single cannot decrypt . Therefore, PBSL can protect the confidentiality of in the stage.

- At the stage of collaborative global model decryption, all decrypt the distributedly, and each shares its partially decryption result . Because any with only a single cannot decrypt , PBSL can protect the privacy of in the stage.

6.2. Byzantine-Robust

7. Evaluation

7.1. Experimental Setup

7.1.1. Experimental Environment

- A blockchain system uses Ethereum, a blockchain-based smart contracts platform [56,57,58]. Smart contracts developed in the Solidity programming language are deployed to private blockchains using Truffle v5.10And Ethereum is deployed on a desktop computer with 3.3 GHz Intel(R) Xeon(R) CPU, 16 GB memory, and Windows 10.

- CKKS Scheme is deployed using the TenSEAL library, which is a library for carrying out homomorphic encryption operations on tensors, and its code is available on Github (https://github.com/OpenMined/TenSEAL, accessed on 9 June 2024).

- MNIST dataset consists of handwritten digital pictures of 250 different people. Every sample is a black and white image with pixels. The dataset is divided into a training set with 60,000 samples and a test set with 10,000 samples. We distributed the entire dataset evenly across 10 .

- FashionMNIST dataset constitutes images in 10 categories. The whole dataset contains 60,000 samples (50,000 for training and 10,000 for testing). The dataset was also evenly distributed to 10 .

7.1.2. Byzantine Attacks

- Label Flipping Attack: By changing the label of the training sample to another category, the label flipping attacker misleads the classifier. To simulate this attack, the labels of the samples on each malicious are modified from l to , where N is the total number of labels and .

- Backdoor Attack: Backdoor attackers force classifiers to make bad decisions about samples with specific characteristics. To simulate the attack, we choose pixels as the trigger, and randomly select a number of training images on each malicious , and overwrite the pixels at the top left of each selected images. Then, we reset the labels of all the selected images to the target class.

- Arbitrary Model Attack: The model attacker arbitrarily tampers with some of the local model parameters. To reproduce the arbitrary model attack, malicious select a random number as its jth local model parameter.

- Untargeted Model Poisoning: The model attacker constructs the toxic local model that is similar to benign local models but causes the correct global gradient to reverse, or is much bigger (or smaller) than the maximum (or minimum) benign local models.

7.1.3. Evaluation Metrics

7.1.4. SL Settings

7.2. Experimental Results

7.2.1. Experimental Results on Defense Performance

7.2.2. Efficiency Comparison with Related FL Approaches

8. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Meier, C.; Fitzgerald, M.C.; Smith, J.M. Ehealth: Extending, enhancing, and evolving health care. Annu. Rev. Biomed. Eng. 2013, 15, 359–382. [Google Scholar] [CrossRef] [PubMed]

- Liu, X.; Lu, R.; Ma, J.; Chen, L.; Qin, B. Privacy-preserving patient-centric clinical decision support system on naive bayesian classification. IEEE J. Biomed. Health Inform. 2016, 20, 655–668. [Google Scholar] [CrossRef] [PubMed]

- Rahulamathavan, Y.; Veluru, S.; Phan, C.W.; Chambers, J.A.; Rajarajan, M. Privacy-preserving clinical decision support system using gaussian kernel-based classification. IEEE J. Biomed. Health Inform. 2014, 18, 56–66. [Google Scholar] [CrossRef] [PubMed]

- Wiens, J.; Saria, S.; Sendak, M.; Ghassemi, M.; Liu, V.X.; Doshi-Velez, F.; Jung, K.; Heller, K.; Kale, D.; Saeed, M.; et al. Do no harm: A roadmap for responsible machine learning for healthcare. Nat Med. 2019, 2019, 1337–1340. [Google Scholar] [CrossRef] [PubMed]

- Courtiol, P.; Maussion, C.; Moarii, M.; Pronier, E.; Pilcer, S.; Sefta, M.; Manceron, P.; Toldo, S.; Zaslavskiy, M.; Le Stang, N.; et al. Deep learning-based classification of mesothelioma improves prediction of patient outcome. Nat. Med. 2019, 25, 1519–1525. [Google Scholar] [CrossRef] [PubMed]

- Warnat-Herresthal, S.; Perrakis, K.; Taschler, B.; Becker, M.; Baßler, K.; Beyer, M.; Günther, P.; Schulte-Schrepping, J.; Seep, L.; Klee, K.; et al. Scalable Prediction of Acute Myeloid Leukemia Using High-Dimensional Machine Learning and Blood Transcriptomics. iScience 2020, 23, 100780. [Google Scholar] [CrossRef] [PubMed]

- Rajkomar, A.; Dean, J.; Kohane, I. Machine learning in medicine. N. Engl. J. Med. 2019, 380, 1347–1358. [Google Scholar] [CrossRef] [PubMed]

- Savage, N. Calculating disease. Nature 2017, 550, 115–117. [Google Scholar] [CrossRef] [PubMed]

- Ping, P.; Hermjakob, H.; Polson, J.S.; Benos, P.V.; Wang, W. Biomedical informatics on the cloud: A treasure hunt for advancing cardiovascular medicine. Circ. Res. 2018, 122, 1290–1301. [Google Scholar] [CrossRef]

- Kaissis, G.A.; Makowski, M.R.; Ruckert, D.; Braren, R.F. Secure privacy-preserving and federated machine learning in medical imaging. Nat. Mach. Intell. 2020, 2, 305–311. [Google Scholar] [CrossRef]

- Konecny, J.; McMahan, H.B.; Yu, F.X.; Richtárik, P.; Suresh, A.T.; Bacon, D. Federated learning: Strategies for improving communication efficiency. arXiv 2016, arXiv:1610.05492. [Google Scholar]

- Mcmahan, H.B.; Moore, E.; Ramage, D.; Arcas, B. Federated learning of deep networks using model averaging. arXiv 2016, arXiv:1602.05629v1. [Google Scholar]

- Warnat-Herresthal, S.; Schultze, H.; Shastry, K.L.; Manamohan, S.; Mukherjee, S.; Garg, V.; Sarveswara, R.; Händler, K.; Pickkers, P.; Aziz, N.A.; et al. Swarm Learning for decentralized and confidential clinical machine learning. Nature 2021, 594, 265–270. [Google Scholar] [CrossRef] [PubMed]

- Song, C.; Ristenpart, T.; Shmatikov, V. Machine learning models that remember too much. In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security, Dallas, TX, USA, 30 October–3 November 2017; ACM: New York, NY, USA, 2017; pp. 587–601. [Google Scholar]

- Melis, L.; Song, C.; De Cristofaro, E.; Shmatikov, V. Inference attacks against collaborative learning. arXiv 2018, arXiv:1805.04049. [Google Scholar]

- Blanchard, P.; El Mhamdi, E.M.; Guerraoui, R.; Stainer, J. Machine learning with adversaries: Byzantine tolerant gradient descent. Adv. Neural Inf. Process. Syst. 2017, 30, 119–129. [Google Scholar]

- Bagdasaryan, E.; Veit, A.; Hua, Y.; Estrin, D.; Shmatikov, V. How to backdoor federated learning. arXiv 2018, arXiv:1807.00459. [Google Scholar]

- Shokri, R.; Shmatikov, V. Privacy-preserving deep learning. In Proceedings of the ACM Conference on Computer and Communications Security, Denver, CO, USA, 12–16 October 2015; pp. 310–1321. [Google Scholar]

- Heikkila, M.A.; Koskela, A.; Shimizu, K.; Kaski, S.; Honkela, A. Differentially private cross-silo federated learning. arXiv 2020, arXiv:2007.05553. [Google Scholar]

- Zhao, L.; Wang, Q.; Zou, Q.; Zhang, Y.; Chen, Y. Privacy-preserving collaborative deep learning with unreliable participants. IEEE Trans. Inf. Forensics Secur. 2020, 15, 1486–1500. [Google Scholar] [CrossRef]

- Xu, R.; Baracaldo, N.; Zhou, Y.; Anwar, A.; Ludwig, H. HybridALpha: An efficient approach for privacy-preserving federated learning. In Proceedings of the 12th ACM Workshop on Artificial Intelligence and Security, London, UK, 15 November 2019; pp. 13–23. [Google Scholar]

- Hinton, G.; Deng, L.; Yu, D.; Dahl, G.E.; Mohamed, A.R.; Jaitly, N.; Senior, A.; Vanhoucke, V.; Nguyen, P.; Sainath, T.N.; et al. Deep neural networks for acoustic modeling in speech recognition: The shared views of four research groups. IEEE Signal Process. Mag. 2012, 29, 82–97. [Google Scholar] [CrossRef]

- Chan, T.-H.; Jia, K.; Gao, S.; Lu, J.; Zeng, Z.; Ma, Y. Pcanet: A simple deep learning baseline for image classification? IEEE Trans. Image Process. 2015, 24, 5017–5032. [Google Scholar] [CrossRef]

- Aliper, A.; Plis, S.; Artemov, A.; Ulloa, A.; Mamoshina, P.; Zhavoronkov, A. Deep learning applications for predicting pharmacological properties of drugs and drug repurposing using transcriptomic data. Mol. Pharm. 2016, 13, 2524–2530. [Google Scholar] [CrossRef]

- Budaher, J.; Almasri, M.; Goeuriot, L. Comparison of several word embedding sources for medical information retrieval. In Proceedings of the Working Notes of CLEF 2016—Conference and Labs of the Evaluation Forum, Évora, Portugal, 5–8 September 2016; pp. 43–46. [Google Scholar]

- Rav, D.; Wong, C.; Deligianni, F.; Berthelot, M.; Andreu-Perez, J.; Lo, B.; Yang, G.Z. Deep learning for health informatics. IEEE J. Biomed. Health Inf. 2017, 21, 4–21. [Google Scholar] [CrossRef]

- Bonawitz, K.; Ivanov, V.; Kreuter, B.; Marcedone, A.; McMahan, H.B.; Patel, S.; Ramage, D.; Segal, A.; Seth, K. Practical secure aggregation for privacy-preserving machine learning. In Proceedings of the ACM SIGSAC Conference on Computer and Communications Security, Dallas, TX, USA, 30 October–3 November 2017; pp. 1175–1191. [Google Scholar]

- Mohassel, P.; Zhang, Y. Secureml: A system for scalable privacy-preserving machine learning. In Proceedings of the 2017 IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 25 May 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 19–38. [Google Scholar]

- Wagh, S.; Gupta, D.; Chandran, N. Securenn: 3-party secure computation for neural network training. Proc. Priv. Enhanc. Technol. 2019, 3, 26–49. [Google Scholar] [CrossRef]

- Chaudhari, H.; Rachuri, R.; Suresh, A. Trident: Efficient 4pc framework for privacy preserving machine learning. In Proceedings of the 27th Annual Network and Distributed System Security Symposium, NDSS, San Diego, CA, USA, 23–26 February 2020. [Google Scholar]

- Phong, L.T.; Aono, Y.; Hayashi, T.; Wang, L.; Moriai, S. Privacy-preserving deep learning via additively homomorphic encryption. IEEE Trans. Inf. Forensics Secur. 2018, 13, 1333–1345. [Google Scholar] [CrossRef]

- Dong, Y.; Chen, X.; Shen, L.; Wang, D. EaSTFLy: Efficient and secure ternary federated learning. Comput. Secur. 2020, 94, 101824. [Google Scholar] [CrossRef]

- Zhang, C.; Li, S.; Xia, J.; Wang, W. BatchCrypt: Efficient homomorphic encryption for Cross-Silo federated learning. In Proceedings of the USENIX Annual Technical Conference (USENIX ATC 20), Online, 15–17 July 2020; pp. 493–506. [Google Scholar]

- Cheon, J.; Kim, A.; Kim, M.; Song, Y. Homomorphic encryption for arithmetic of approximate numbers. In Advances in Cryptology, Proceedings of the ASIACRYPT 2017: 23rd International Conference on the Theory and Applications of Cryptology and Information Security, Hong Kong, China, 3–7 December 2017; Springer: Cham, Switzerland, 2017; pp. 409–437. [Google Scholar]

- Fung, C.; Yoon, C.J.M.; Beschastnikh, I. Mitigating sybils in federated learning poisoning. arXiv 2018, arXiv:1808.04866. [Google Scholar]

- Fang, M.; Cao, X.; Jia, J.; Gong, N. Local model poisoning attacks to byzantine-robust federated learning. In Proceedings of the 29th USENIX Security Symposium (USENIX Security), Boston, MA, USA, 12–14 August 2020; pp. 1605–1622. [Google Scholar]

- Tolpegin, V.; Truex, S.; Gursoy, M.E.; Liu, L. Data poisoning attacks against federated learning systems. In Proceedings of the 25th European Symposium on Research in Computer Security, ESORICS 2020, Guildford, UK, 14–18 September 2020; Springer: Cham, Switzerland, 2020; pp. 480–501. [Google Scholar]

- McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; Arcas, B.A.Y. Communication-efficient learning of deep networks from decentralized data. In Proceedings of the International Conference on Artificial Intelligence and Statistics, Fort Lauderdale, FL, USA, 20–22 April 2017; pp. 1273–1282. [Google Scholar]

- Yin, D.; Chen, Y.; Ramchandran, K.; Bartlett, P.L. Byzantine-robust distributed learning: Towards optimal statistical rates. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 5636–5645. [Google Scholar]

- Truex, S.; Baracaldo, N.; Anwar, A.; Steinke, T.; Ludwig, H.; Zhang, R.; Zhou, Y. A hybrid approach to privacy-preserving federated learning. In Proceedings of the 12th ACM Workshop on Artificial Intelligence and Security, London, UK, 15 November 2019; pp. 1–11. [Google Scholar]

- Liu, X.; Li, H.; Xu, G.; Chen, Z.; Huang, X.; Lu, R. Privacy-enhanced federated learning against poisoning adversaries. IEEE Trans. Inf. Forensics Secur. 2021, 16, 4574–4588. [Google Scholar] [CrossRef]

- Chen, Y.; Su, L.; Xu, J. Distributed statistical machine learning in adversarial settings: Byzantine gradient descent. Proc. ACM Meas. Anal. Comput. Syst. 2017, 1, 1–25. [Google Scholar] [CrossRef]

- Guerraoui, R.; Rouault, S. The hidden vulnerability of distributed learning in byzantium. In Proceedings of the International Conference on Machine Learning (ICML 2018), Stockholm, Sweden, 10–15 July 2018; pp. 3521–3530. [Google Scholar]

- Ramanan, P.; Nakayama, K. BAFFLE: Blockchain based aggregator free federated learning. In Proceedings of the International Conference on Blockchain (Blockchain), Virtual Event, 2–6 November 2020; pp. 72–81. [Google Scholar]

- Li, Y.; Chen, C.; Liu, N.; Huang, H.; Zheng, Z.; Yan, Q. A blockchain-based decentralized federated learning framework with committee consensus. IEEE Netw. 2021, 35, 234–241. [Google Scholar] [CrossRef]

- Kim, H.; Park, J.; Bennis, M.; Kim, S.L. Blockchained on-device federated learning. IEEE Commun. Lett. 2020, 24, 1279–1283. [Google Scholar] [CrossRef]

- Weng, J.; Zhang, J.; Li, M.; Zhang, Y.; Luo, W. DeepChain: Auditable and Privacy-Preserving Deep Learning with Blockchain-Based Incentive. IEEE Trans. Dependable Secur. Comput. 2021, 18, 2438–2455. [Google Scholar] [CrossRef]

- Warnat-Herresthal, S.; Schultze, H.; Shastry, K.; Manamohan, S.; Mukherjee, S.; Garg, V.; Sarveswara, R.; Händler, K.; Pickkers, P.; Aziz, N.A.; et al. Swarm Learning as a privacy-preserving machine learning approach for disease classification. bioRxiv 2020. [Google Scholar] [CrossRef]

- Fan, D.; Wu, Y.; Li, X. On the Fairness of Swarm Learning in Skin Lesion Classification. In Clinical Image-Based Procedures, Distributed and Collaborative Learning, Artificial Intelligence for Combating COVID-19 and Secure and Privacy-Preserving Machine Learning, Proceedings of the 10th Workshop, CLIP 2021, Second Workshop, DCL 2021, First Workshop, LL-COVID19 2021, and First Workshop and Tutorial, PPML 2021, Strasbourg, France, 27 September–1 October 2021; CoRR Abs/2109.12176; Springer: Berlin/Heidelberg, Germany, 2021. [Google Scholar]

- Oestreich, M.; Chen, D.; Schultze, J.; Fritz, M.; Becker, M. Privacy considerations for sharing genomics data. Excli J. 2021, 2021, 1243. [Google Scholar]

- Westerlund, A.; Hawe, J.; Heinig, M.; Schunkert, H. Risk Prediction of Cardiovascular Events by Exploration of Molecular Data with Explainable Artificial Intelligence. Int. J. Mol. Sci. 2021, 22, 10291. [Google Scholar] [CrossRef] [PubMed]

- Jain, A.; Rasmussen, P.M.R.; Sahai, A. Threshold Fully Homomorphic Encryption. Cryptology ePrint Archive, Report 2017/257. 2017. Available online: http://eprint.iacr.org/2017/257 (accessed on 9 June 2024).

- Paillier, P. Public-key crypto-systems based on composite degree residuosity classes. In Proceedings of the International Conference on the Theory and Application of Cryptographic Techniques, Prague, Czech Republic, 1–2 May 1999; pp. 223–238. [Google Scholar]

- Cheon, J.; Kim, D.; Lee, H.; Lee, K. Numerical method for comparison on homomorphically encrypted numbers. In Proceedings of the ASIACRYPT 2019 25th International Conference on the Theory and Application of Cryptology and Information Security, Kobe, Japan, 8–12 December 2019; pp. 415–445. [Google Scholar]

- Cao, X.; Fang, M.; Liu, J.; Gong, N.Z. FLTrust: Byzantine-robust federated learning via trust bootstrapping. In Proceedings of the Network and Distributed System Security Symposium, Virtual, 21–25 February 2021; pp. 1–18. [Google Scholar] [CrossRef]

- Li, Z.; Kang, J.; Yu, R.; Ye, D.; Deng, Q.; Zhang, Y. Consortium blockchain for secure energy trading in industrial internet of things. IEEE Trans. Ind. Inform. 2018, 14, 3690–3700. [Google Scholar] [CrossRef]

- Hu, S.; Cai, C.; Wang, Q.; Wang, C.; Luo, X.; Ren, K. Searching an encrypted cloud meets blockchain: A decentralized, reliable and fair realization. In Proceedings of the IEEE INFOCOM 2018-IEEE Conference on Computer Communications, Honolulu, HI, USA, 16–19 April 2018. [Google Scholar]

- Wang, S.; Zhang, Y.; Zhang, Y. A blockchain-based framework for data sharing with fine-grained access control in decentralized storage systems. IEEE Access 2018, 6, 437–450. [Google Scholar] [CrossRef]

- Chen, X.; Luo, J.J.; Liao, C.W.; Li, P. When machine learning meets blockchain: A decentralized, privacy-preserving and secure design. In Proceedings of the 2018 IEEE International Conference on Big Data (Big Data), Seattle, WA, USA, 10–13 December 2018; pp. 1178–1187. [Google Scholar]

| Schemes | Privacy Protection | Poisoning Defense | Central Server or Blockchain | Robust to Off-Line | Lightweight Computation |

|---|---|---|---|---|---|

| SecProbe [20] | DP-based | × | Central server | √ | √ |

| PPDL [31] | HE-based | × | Central server | × | × |

| PPML [27] | MPC-based | × | Central server | √ | × |

| Krum [16] | × | √ | Central server | × | √ |

| Trim-mean [39] | × | √ | Central server | × | √ |

| FoolsGold [35] | × | √ | Central server | × | √ |

| PEFL [41] | HE-based Paillier | √ | Central server | √ | × |

| BAFFLE [44] | × | × | Blockchain | × | × |

| BlockFL [46] | × | × | Blockchain | × | × |

| Swarm Learning [46] | × | × | Blockchain | × | × |

| BFLC [45] | × | √ | Blockchain | × | √ |

| PBSL | HE-based CKKS | √ | Blockchain | √ | √ |

| Notation | Implications |

|---|---|

| K | the number of medical centers |

| m | the threshold of Sharmir secret sharing |

| a set of K medical centers | |

| pseudo public key and secret key of medical center | |

| the security parameter | |

| the keys of CKKS cryptosystem | |

| the distributed secret keys splited from | |

| the symmetric encryption key between medical center and | |

| the symmetric key encryption or decryption algorithm using | |

| the weight of the global model after the -th iteration of training | |

| the cipher-text generated by CKKS algorithm | |

| v | the size of decryption set |

| Dataset | Scheme (Attacks) | % of Malicious MCs | ||||

|---|---|---|---|---|---|---|

| 10% | 20% | 30% | 40% | 50% | ||

| MNIST | PBSL (Untargeted) | |||||

| Bulyan (Untargeted) | ||||||

| PBSL (Targeted) | ||||||

| Bulyan (Targeted) | ||||||

| FashionMNIST | PBSL (Untargeted) | |||||

| Bulyan (Untargeted) | ||||||

| PBSL (Targeted) | ||||||

| Bulyan (Targeted) | ||||||

| LearningChain | BCFL | PBSL | ||||

|---|---|---|---|---|---|---|

| Accuracy | Overhead | Accuracy | Overhead | Accuracy | Overhead | |

| 100 | 70 | 90 | ||||

| 94 | 70 | 84 | ||||

| 98 | 74 | 88 | ||||

| 90 | 52 | 80 | ||||

| 70 | 46 | 60 | ||||

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhu, X.; Lai, T.; Li, H. Privacy-Preserving Byzantine-Resilient Swarm Learning for E-Healthcare. Appl. Sci. 2024, 14, 5247. https://doi.org/10.3390/app14125247

Zhu X, Lai T, Li H. Privacy-Preserving Byzantine-Resilient Swarm Learning for E-Healthcare. Applied Sciences. 2024; 14(12):5247. https://doi.org/10.3390/app14125247

Chicago/Turabian StyleZhu, Xudong, Teng Lai, and Hui Li. 2024. "Privacy-Preserving Byzantine-Resilient Swarm Learning for E-Healthcare" Applied Sciences 14, no. 12: 5247. https://doi.org/10.3390/app14125247

APA StyleZhu, X., Lai, T., & Li, H. (2024). Privacy-Preserving Byzantine-Resilient Swarm Learning for E-Healthcare. Applied Sciences, 14(12), 5247. https://doi.org/10.3390/app14125247