Featured Application

This study can be applied to camera pose estimation and appearance quality inspection of large, complex assembled products that simultaneously require high reliability, adaptability, and accuracy.

Abstract

Balancing adaptability, reliability, and accuracy in vision technology has always been a major bottleneck limiting its application in appearance assurance for complex objects in high-end equipment production. Data-driven deep learning shows robustness to feature diversity but is limited by interpretability and accuracy. The traditional vision scheme is reliable and can achieve high accuracy, but its adaptability is insufficient. The deeper reason is the lack of appropriate architecture and integration strategies between the learning paradigm and empirical design. To this end, a learnable viewpoint evolution algorithm for high-accuracy pose estimation of complex assembled products under free view is proposed. To alleviate the balance problem of exploration and optimization in estimation, shape-constrained virtual–real matching, evolvable feasible region, and specialized population migration and reproduction strategies are designed. Furthermore, a learnable evolution control mechanism is proposed, which integrates a guided model based on experience and is cyclic-trained with automatically generated effective trajectories to improve the evolution process. Compared to the mm of the state-of-the-art data-driven method and the mm of the classic strategy combination, the pose estimation error of complex assembled product in this study is mm, which proves the effectiveness of the proposed method. Meanwhile, through in-depth exploration, the robustness, parameter sensitivity, and adaptability to the virtual–real appearance variations are sequentially verified.

1. Introduction

In the high-end equipment manufacturing industry, accurate multi-view pose estimation of complex products is an essential step for subsequent appearance quality inspection. Taking an aerospace product as an example, a piece of equipment may consist of multiple sub-modules, including dozens or even hundreds of intricate parts (complex assembled products). To ensure integrity and reliability, the product needs to be repeatedly compared with 3D models and process documents from different viewpoints so that all visible elements are covered and defects are avoided during the assembly stage. Because of the structural complexity and the strictness of these requirements, this work is still completed manually in most cases. In this context, a method that obtains the pose of the current viewpoint would greatly improve the efficiency of the process. Based on the estimated pose, the 3D model can be adjusted to the same perspective as the real object so as to extract the corresponding region of interest (ROI) and filter irrelevant context [1,2]. In this way, the reference provided by a virtual image can greatly simplify inspection and evaluation (whether for the operator or for an algorithm) while avoiding the difficulty of building an end-to-end multi-target detector. The inherent logic of pose estimation is to construct a virtual–real feature connection in a scenario with diverse noise and to continuously adjust incorrect correspondences through correct correspondences (e.g., feature points). For vision technology that relies on sensor perception and algorithmic judgment, this task, which seems simple for humans, is quite difficult, and the challenges can be summarized as follows:

(1) Industry characteristics: In the realm of high-end equipment manufacturing, the interpretability and dependability of methods are important considerations. Although it is possible to train a specific neural network for pose estimation through a large amount of data, blindly adhering to the big data-driven learning paradigm in the pursuit of adaptability may render it challenging to address failures, abnormalities, and re-improvement, ultimately impeding promotion [3].

(2) Task features: These assembled products with high structural complexity may involve dozens or even hundreds of components, typically assembled through human or human–machine collaboration. Under such operation scenarios, it is difficult to collect enough high-quality samples at once or to accurately pre-plan the imaging pose. The task features of small samples, multiple components, and a coarse initial pose greatly increase the difficulty of pose estimation/adjustment in common vision-based applications, e.g., AR/VR-based projection [4] and mobile robots based on viewpoint pre-planning [5,6].

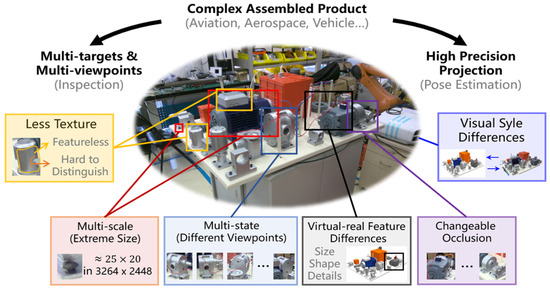

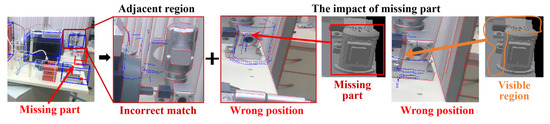

(3) Product appearance: As shown in Figure 1, the appearance features of the target and background captured from different viewpoints are quite different, and the multi-layer stacking between objects will further enhance the difference. Due to perspective effect and distortion, whether the extremely small-sized target located at the far end can be successfully recognized is sensitive to the accuracy of pose estimation. It is common that there are differences in features and visual styles between the process model and physical object. Additionally, the surface treatment of machined products can result in sparse texture and high color consistency that are not conducive to feature extraction.

Figure 1.

Difficulties of high-precision camera pose estimation for complex assembled products.

Technically, the camera pose estimation addressed in this study is absolute pose estimation (APE). Research in this direction mainly includes five categories [7,8,9]:

(1) APE based on cooperative target can be further subdivided into manual markers [10,11], auxiliary projection [12], laser tracker [13], and structured light measurement [14]. In these methods, easily identifiable features are supplemented to the target manually or by automated devices to alleviate the difficulty of feature extraction and analysis during APE. This strategy is commonly used in high-precision tasks, such as calibration, and is also suitable for large structural parts with smooth surfaces and simple structures. Furthermore, with the support of a movable robot vision platform [15], APE can be transformed into matching and stitching of a 3D point cloud.

(2) APE based on geometric features includes two steps: feature extraction with construction of the relationship between 2D pixels and 3D coordinates (2D–3D), and optimization-based pose estimation. Handcrafted features [16,17,18] and learning-based approaches [19] are usually adopted in the first step, in which two key issues need to be considered, namely, the adaptability of 2D features and the robustness of 2D–3D relationship evaluation. Representative optimization methods for the second step are EPnP [20] and related studies [21,22,23,24]. In the estimation stage, it is essential to reduce the interference of outliers on the 2D–3D relationship and to avoid getting trapped in a wrong local optimum. Compared with other technical routes, strategy (2) can obtain the best APE accuracy, but the complexity of the scene makes it difficult to obtain enough 2D–3D pairs with correct relations at one time. Moreover, the iterative mode is easily affected by the latest observations, making the final converged result significantly biased.

(3) APE based on mapping and retrieval is one of the commonly used strategies in the field of simultaneous localization and mapping (SLAM), emphasizing the robustness of scene feature encoding and the ability to describe differences between different viewpoints [25,26]. When the accuracy requirement is not high, the pose can be obtained by retrieving the viewpoint closest to the current feature in the map library; otherwise, the result of strategy (3) will be adopted as the initial value for strategy (2) [27].

(4) APE based on deep learning (DL) relies on a deep convolutional neural network (CNN) to perform pose estimation in an end-to-end manner. CNN-based architectures, represented by the classic PoseNet series [28,29], do not require feature engineering and adapt well to feature variation. Nevertheless, there is still a gap in reliability and accuracy between strategy (4) and strategy (2) [30]. Some state-of-the-art studies report comparable performance on public datasets such as LineMod and LineMod-O [31,32,33], and MegaPose [34] can adapt to unseen objects through rendering and comparison. However, they all require ensured model accuracy, few targets, and balanced target sizes, which differs significantly from the complex assembled product.

(5) Hybrid and hierarchical strategies include methods based on functional hybrid training of multiple CNN models [35,36] and hierarchical approximation from coarse to fine [37]. Research in strategy (5) is devoted to addressing the adaptability of pose estimation to changeable feature context in open multi-scene. The accuracy achieved depends partly on the architecture and trick design of CNN and partly on the geometric feature-based APE algorithm adopted in the backend.

Through the literature review above, it can be found that the compatibility of accuracy and adaptability in pose estimation of complex assembled products under free view hinges on building an interpretable hierarchical architecture while maintaining the advantages of empirical design and learning paradigm. However, the interpretability and consistency of DL-based methods limit their application in manufacturing, while traditional APE lacks local–global collaborative control in matching and optimization mechanisms and relies on fixed empirical parameters in adaptability, resulting in quite strict requirements for initial pose in complex assembled products. Therefore, it is necessary to deeply integrate traditional APE- and DL-based paradigms in terms of execution mechanism, operation rule, and interactive strategy, laying the foundation for consistency of results, local–global feature adaptability, and traceability of exceptions, rather than simply concatenating the two and directly applying them.

Driven by this motivation, this paper proposes a novel, learnable viewpoint evolution algorithm for high-accuracy pose estimation of complex products in high-end equipment manufacturing. Compared with existing APE methods, the contribution can be summarized as follows:

(1) Reconstruct the camera pose estimation into the evolution of a parameter population to generate an interpretable fine-grained iterative framework;

(2) To balance exploration and optimization, feasible region constraint, parent migration, and reproduction strategies are designed for local–global collaborative control;

(3) Propose a guided-model-based hierarchical cyclic effective trajectory learning mechanism to reduce the empirical dependence of control and enhance adaptability.

2. Proposed Method

As shown in Figure 2, the method includes two stages, which can be divided into five modules. The initial stage generates the initial pose and includes the extraction of significant features, viewpoint sampling and projection, feature matching and viewpoint searching, virtual image rendering, and initialization of the evolution stage. Significant features are geometric features (e.g., points, lines, and curves) that are clearly visible and distinguishable from different viewpoints. Virtual viewpoints are generated following the spherical or ellipsoidal rule [38]. The rough pose is provided by the sampled virtual viewpoint whose significant features are most similar to those of the real image. Feature matching and similarity evaluation are performed by a weighted point pair matching algorithm [39]. CNN-based coarse pose estimators trained with a small number of samples are also suitable choices for obtaining the initial pose.

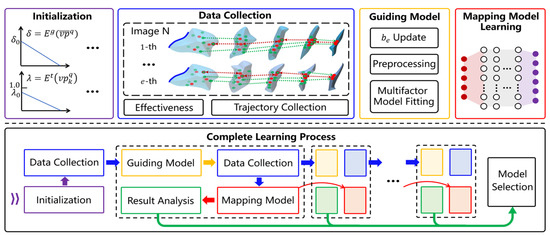

Figure 2.

Method framework.

The evolution stage is redesigned as population initialization and migration, offspring generation, viewpoint quality assessment and selection, pose feasible region construction and update, and a learnable evolution function optimization inspired by importance sampling in reinforcement learning [40].

2.1. Viewpoint Performance Assessment

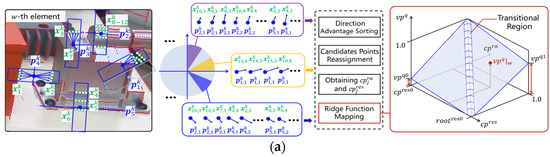

For each viewpoint , the performance reflects the average similarity of virtual–real ROI of all visible components. The geometric feature is adopted to overcome texture sparsity. Inspired by [41], as shown in Figure 3, a self-adaptive evaluation line-based feature matching and evaluation algorithm that balances structural stability and multiple considerations is designed to enhance robustness against virtual–real differences, as well as uneven correspondence. For each visible component , where belongs to the set of all visible components under , the virtual–real feature is denoted as and is projected from 3D coordinate . represents the set of points on the -th projected line segment of , and the same applies to .

Figure 3.

An improved virtual–real feature matching and similarity evaluation criterion. (a) Principles of viewpoint performance assessment. The blue and green points represent virtual features and candidate real features, respectively; (b) the effect after ridge function mapping.

For any in , a rectangular search region with changeable size along the normal direction is expanded, where the size depends on is the performance of the parent view of , and denotes the evolution function of the search factor . In the rectangular region, any point belonging to can be selected as a candidate matching point for as long as the angle between and satisfies the preset range. After searching, a redundant candidate point set of is established. Theoretically, there is always an ideal transformation that can minimize the error of with the constraints provided by root . During the evolution stage, the translational part in is the main factor that narrows the gap between . Based on the above assumptions, the quadrant is divided uniformly to roughly determine the potential direction of translation, and a pair of matching points is defined as . According to

the candidate set of in each slope range can be obtained. For each slope range, the number ratio and the distance variance between the candidate set and can be counted. The direction advantage is defined as , where represents normalization operation (all slope ranges), and candidate matching points are reassigned to in order of advantage. During the reassignment process, if there is a one-to-many situation, the candidate point belonging to the slope range that reduces the variance of the current matching distance is preferentially selected. Afterward, we can obtain , , and , where represents an incomplete set, that is, part of finds no corresponding , is the number ratio, and denotes the distance average of all matched pairs.

Evaluation criteria that rely solely on are easily affected by background noise, incomplete geometric features, and virtual–real differences. Therefore, a ridge function mapping strategy is further embedded with as an additional evaluation factor. Inspired by inverse square root unit (ISRU) [42], the mapping is defined as follows:

where is the transformation factor of . Here, (2) shows a parameter combination with suitable performance, which allows to be less sensitive to extreme ranges. Afterward, define the measurement point and the fixed point , where describes the ideal average distance error. For the discrimination of the result after mapping, let , where is the average distance error of the root . The ridge function can be planned as

where are the boundary of the transition region and . The transition region can similarly be set to be plane, making the mapped smoother. are a set of indicators adopted to adaptively modulate the propensity of and . Intuitively, it may be more suitable to set at the beginning and at the end.

In this way, the four aspects of structural stability, error direction, number of matching points, and distance error can be integrated. For the -th component, let denote the result mapped by (3). The white numbers in the second row of Figure 3 represent (first row) and (second row). To maintain consistency with the gradient direction of distance error, let in Figure 3. For some matching states that cannot effectively distinguish, provides reliable discrimination. The viewpoint performance can be calculated according to . If the and obtained by root are not updated in time during the evolution stage, the evaluation will encounter greater viewpoint ambiguity and differences in geometric features. Therefore, by configuring different rendering frequencies, the adaptability of the proposed method with respect to the degree of virtual–real difference is deeply evaluated in Section 3.
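For reference, the standard inverse square root unit (ISRU) that inspires the mapping above can be sketched as follows. This is only the textbook form, ISRU(x) = x / sqrt(1 + αx²); the parameter combination used in this study is not reproduced here, and the function name `isru` and the default `alpha` are assumptions made for illustration.

```python
import numpy as np

def isru(x, alpha=1.0):
    """Standard inverse square root unit: x / sqrt(1 + alpha * x^2).

    The output is bounded by +/- 1/sqrt(alpha), so extreme inputs are
    compressed while values near zero pass through almost unchanged.
    `alpha` is a hypothetical default, not a value taken from the paper.
    """
    x = np.asarray(x, dtype=float)
    return x / np.sqrt(1.0 + alpha * x * x)
```

The saturation at large magnitudes is what makes an ISRU-style mapping less sensitive to extreme ranges of the input.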

2.2. Feasible Region Construction and Update

2.2.1. Problem Planning

Projection matrix (or pose) contains seven variables if the representation approach of quaternion and translation is employed. The crossover and mutation in the traditional genetic algorithm are too exploratory to ensure the optimization of . The commonly used numerical optimization is limited by the observed and prone to fall into local optimum. Thus, it is expected to provide a range constraint in the solution space for parent to children based on observed , which can be continuously updated and contracted to the ideal solution with the adjustment of during the evolution. The range in this study is defined as pose feasible region , and for , where

represents the pixel coordinate corresponding to the best achieved by the -th visible component in the previous round. , is the initial parent selected from in the -th round, and refers to after migration to . is the evolution function of the tolerance factor for constructing . To provide a feasible constraint to the pose search, the expression of should be solved. This expression problem can be formulated as

where is the logarithmic barrier function and denotes the approximation factor to the indicator function. This is an optimization technique that uses the interior point method to remove inequality constraints. In practice, however, the optimization process of (5) is too complicated. Given different initial values, repeated iterations and optimizations of are computationally expensive. To this end, the assumption of structural stability is again applied as a basis for simplification. Specifically, only the matching relation corresponding to the best advantage in is retained to sparsify and thereby reduce the scale of problem (5). To alleviate the tedious optimization work, a combined strategy based on boundary projection and sparse trajectory approximation is provided below.
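As a reminder of the general technique (not the specific formulation of this study), the logarithmic barrier replaces the indicator function of each inequality constraint with a smooth approximation. The symbols $f_0$, $g_i$, $m$, and $t$ below are generic placeholders assumed for illustration and are not the notation of problem (5):

```latex
% Generic inequality-constrained problem:
%   minimize f_0(x)  subject to  g_i(x) \le 0,  i = 1, \dots, m
% Indicator form: I_-(u) = 0 if u \le 0, and +\infty otherwise.
\min_{x} \; f_0(x) + \sum_{i=1}^{m} I_-\bigl(g_i(x)\bigr)
\;\approx\;
\min_{x} \; f_0(x) - \frac{1}{t} \sum_{i=1}^{m} \log\bigl(-g_i(x)\bigr),
\qquad t > 0 .
```

As the approximation factor $t$ increases, the barrier term approaches the indicator function, which is why each change of initial value or constraint set requires a new sequence of inner optimizations.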

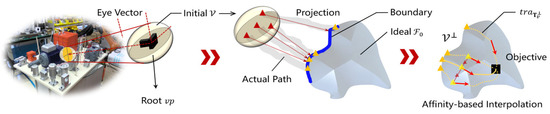

2.2.2. Initialization

As shown in Figure 4, an ellipsoidal rule [38] is used to sample the initial viewpoint pose, denoted as . Due to the small rotation of the in-plane during machine or manual imaging, the rotation of the out-of-the-plane can be regarded as a strong prior for constructing the initial viewpoint network.

Figure 4.

Pose feasible region construction.
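The viewpoint sampling used for initialization can be illustrated with a minimal sketch. It assumes a Fibonacci lattice scaled onto an ellipsoid and a camera that looks at the object center; the actual rule of [38] may differ, and the names `sample_ellipsoid_viewpoints`, `look_at_rotation`, and `semi_axes` are hypothetical.

```python
import numpy as np

def sample_ellipsoid_viewpoints(n, semi_axes=(1.0, 1.0, 1.0)):
    """Return (n, 3) camera centers spread over an ellipsoid (Fibonacci lattice)."""
    i = np.arange(n) + 0.5
    polar = np.arccos(1.0 - 2.0 * i / n)        # polar angle in (0, pi)
    azimuth = np.pi * (1.0 + 5.0 ** 0.5) * i    # golden-ratio increments
    unit = np.stack([np.sin(polar) * np.cos(azimuth),
                     np.sin(polar) * np.sin(azimuth),
                     np.cos(polar)], axis=1)
    return unit * np.asarray(semi_axes, dtype=float)

def look_at_rotation(camera_center, target=(0.0, 0.0, 0.0), up=(0.0, 0.0, 1.0)):
    """World-to-camera rotation whose z axis points from the camera to the target."""
    c = np.asarray(camera_center, dtype=float)
    z = np.asarray(target, dtype=float) - c
    z = z / np.linalg.norm(z)
    x = np.cross(np.asarray(up, dtype=float), z)
    if np.linalg.norm(x) < 1e-8:                # camera lies on the up axis
        x = np.cross(np.array([1.0, 0.0, 0.0]), z)
    x = x / np.linalg.norm(x)
    y = np.cross(z, x)
    return np.stack([x, y, z], axis=0)          # rows are the camera axes
```

Fixing the in-plane rotation through the `up` vector mirrors the prior stated above: only the out-of-plane rotation is sampled, which keeps the initial viewpoint network small.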

2.2.3. Boundary Projection

For each of , the projection to the boundary of can be planned as

where is the projection result. The function is designed to measure the distance between poses, where is the quaternion representation of and defines the multiplication of quaternions. Problem (6) is a small-scale, equality-constrained optimization problem, and the solution of each iteration is approximately equivalent to

according to SQP [43], where is the objective function of (8) and is the transpose operation. Then, (7) can be rewritten as

at , is the variable to be solved, and is the Lagrange multiplier. It should be noted that the above calculation involves the derivation of and the update of . However, the of is only closed for multiplication, not for addition and subtraction. Therefore, a logarithmic mapping needs to be performed on to obtain based on the representation of a Lie algebra. is the subtraction defined on . Let where are their respective unit vectors. Then, the of (8) can be expanded as

The related research is mature, and hence, the calculation process of , , and (9) is omitted. After completing the optimization, the results can be recovered from to by exponential mapping. The rSQP [44] is recommended if the scale of and in other studies is still large.
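Although the derivation is omitted, the logarithmic and exponential mappings it relies on are standard and can be sketched as below. The sketch assumes unit quaternions stored as (w, x, y, z) and the rotation-vector (axis times angle) convention for the tangent space; the function names are hypothetical and the convention used in this study may differ by a constant factor.

```python
import numpy as np

def quat_log(q):
    """Map a unit quaternion (w, x, y, z) to a rotation vector in R^3."""
    q = np.asarray(q, dtype=float)
    q = q / np.linalg.norm(q)
    if q[0] < 0.0:                 # q and -q encode the same rotation
        q = -q
    v_norm = np.linalg.norm(q[1:])
    if v_norm < 1e-12:
        return 2.0 * q[1:]         # first-order approximation near identity
    angle = 2.0 * np.arctan2(v_norm, q[0])
    return angle * q[1:] / v_norm

def quat_exp(phi):
    """Map a rotation vector in R^3 back to a unit quaternion (w, x, y, z)."""
    phi = np.asarray(phi, dtype=float)
    angle = np.linalg.norm(phi)
    if angle < 1e-12:
        q = np.concatenate([[1.0], 0.5 * phi])
        return q / np.linalg.norm(q)
    axis = phi / angle
    return np.concatenate([[np.cos(0.5 * angle)], np.sin(0.5 * angle) * axis])
```

Working in the rotation-vector space is what allows ordinary addition and subtraction inside the SQP iterations, with `quat_exp` recovering a valid unit quaternion afterward.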

2.2.4. Sparse Trajectory Approximation

Let the projected be . For each , an unconstrained objective function can be constructed as

where is the kernel function to enhance the robustness to outliers, and Huber is selected in this study. Taking as the initial state, (10) is solved in parallel based on the LM strategy, where the pose change can be recorded as , and is the number of iteration steps of the -th trajectory.
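The Huber kernel mentioned above has a standard form, sketched here together with the weight it induces in an iteratively reweighted least-squares step. The threshold `delta` and the function names are assumptions for illustration; the value used in this study is not stated.

```python
import numpy as np

def huber_loss(r, delta=1.0):
    """Huber kernel: quadratic for |r| <= delta, linear beyond it."""
    r = np.asarray(r, dtype=float)
    a = np.abs(r)
    return np.where(a <= delta, 0.5 * r * r, delta * (a - 0.5 * delta))

def huber_weight(r, delta=1.0):
    """Per-residual weight: 1 for inliers, delta / |r| for outliers."""
    a = np.abs(np.asarray(r, dtype=float))
    return delta / np.maximum(a, delta)
```

Because the weight decays as 1/|r| for large residuals, a few wrong virtual–real matches pull each trajectory far less than they would under a purely quadratic cost.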

Let the optimization direction of all trajectories in the -th step be , and define the trajectory affinity as

Afterward, the horizontal interpolation is evaluated for . For all ,

Let , then can be expressed as a spherical linear interpolation operation on and respectively, in the following way.

Through the above work, the discrete approximation of can be obtained.
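The spherical linear interpolation used in this step is a standard operation on unit quaternions. A minimal sketch, assuming quaternions stored as (w, x, y, z) and a hypothetical function name `slerp`, is:

```python
import numpy as np

def slerp(q0, q1, t):
    """Spherical linear interpolation between two unit quaternions, t in [0, 1]."""
    q0 = np.asarray(q0, dtype=float)
    q1 = np.asarray(q1, dtype=float)
    q0 = q0 / np.linalg.norm(q0)
    q1 = q1 / np.linalg.norm(q1)
    dot = float(np.dot(q0, q1))
    if dot < 0.0:                  # take the shorter arc
        q1, dot = -q1, -dot
    if dot > 0.9995:               # nearly parallel: fall back to normalized lerp
        q = q0 + t * (q1 - q0)
        return q / np.linalg.norm(q)
    theta = np.arccos(min(dot, 1.0))
    return (np.sin((1.0 - t) * theta) * q0 + np.sin(t * theta) * q1) / np.sin(theta)
```

The translation part of the pose can be interpolated linearly with the same parameter `t`, so that rotation and translation stay synchronized along the interpolated path.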

During evolution, once the historical best of any component is reset due to the generation of a child , the pose feasible region update can be selectively started according to the change in the . is updated from , and the update work can be summarized into four steps, including the following: (a) simplifying based on structural stability to obtain ; (b) determining and and selecting the support according to from ; (c) calculating the projection of the support to the boundary by Formulas (6)–(9); and (d) solving the optimization problem (10) and performing affinity-based interpolation with (11)–(13).

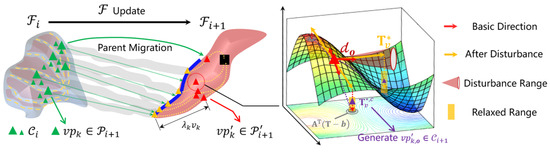

2.3. Parent Migration and Offspring Generation

As shown in Figure 5, a parent migration mechanism is proposed to ensure that the population performance can be gradually improved with the update of . The migration operation is defined as and of , which denotes the pose of the migrated parent, can be expressed as

where means to randomly pick an integer in the range and is the evolution function of the migration factor . To further align the exploration with the actual situation, we performed rotation correction on the randomly generated values.

Figure 5.

Parent migration and offspring generation strategy.

Pure sampling is highly exploratory, whereas moving strictly along the optimization direction lacks exploration. To address both problems, an offspring generation strategy based on the pose feasible region constraint is designed.

The Jacobian matrix of (10) is . For any and its , the next feasible point within the trust region can be calculated from . The basic direction is defined as

where indicates that the corresponding column of is assigned to 0 according to the gradient clipping configuration . For example, means that only the gradient of rotation is considered. In this study, includes maintaining the gradient of and clipping in four dimensions and maintaining the gradient of and clipping in three dimensions. The estimated amount of gradient descent can usually be expressed as . The probability of generating offspring in each basic direction is described as

where , , otherwise, . is the pre-allocated probability of exploration, and is the complementary set of . To prevent the imbalance of due to too few exploration directions, it is recommended to apply secondary adjustments according to the ratio of the in the two sets.
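The gradient clipping described above, in which selected columns of the Jacobian are set to zero, can be sketched as follows. The sketch assumes a 7-dimensional pose ordered as quaternion (columns 0 to 3) followed by translation (columns 4 to 6), and it uses the normalized steepest-descent direction as a stand-in for the basic direction; both the ordering and the names `clip_jacobian` and `basic_direction` are assumptions.

```python
import numpy as np

ROTATION_COLS = slice(0, 4)      # assumed layout: quaternion first
TRANSLATION_COLS = slice(4, 7)   # assumed layout: translation last

def clip_jacobian(jacobian, keep):
    """Zero every column of the Jacobian except those selected by `keep`."""
    jacobian = np.asarray(jacobian, dtype=float)
    masked = np.zeros_like(jacobian)
    masked[:, keep] = jacobian[:, keep]
    return masked

def basic_direction(jacobian, residual, keep):
    """Unit steepest-descent direction that only moves the kept pose parameters."""
    g = clip_jacobian(jacobian, keep).T @ np.asarray(residual, dtype=float)
    n = np.linalg.norm(g)
    return -g / n if n > 0 else np.zeros_like(g)
```

Restricting a direction to the rotation or translation block gives the population distinct candidate moves, from which offspring can then be drawn with the probabilities defined above.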

As shown in Figure 5, once the basic direction is selected, a cone-based disturbance is further performed on the rotation and translation, respectively. For simplicity, and are adopted to represent the disturbance range of angle range and scale, respectively, where are the corresponding evolution functions. Therefore, the perturbed direction , where is described as

is the unit vector, is adopted to randomly sample from the distribution . After is determined, the pose can be updated through according to and the cumulative change in pose.
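A cone-based disturbance of a 3D direction can be illustrated with the sketch below. It assumes uniform sampling of the deviation angle and of the relative scale, which may differ from the distribution used in this study; `perturb_in_cone`, `max_angle`, and `max_scale` are hypothetical names for the angle and scale disturbance ranges.

```python
import numpy as np

def perturb_in_cone(direction, max_angle, max_scale, rng):
    """Rotate a 3D vector by at most max_angle (rad) and rescale it by 1 +/- max_scale."""
    d = np.asarray(direction, dtype=float)
    length = np.linalg.norm(d)
    u = d / length
    # Build an orthonormal basis (e1, e2) of the plane perpendicular to u.
    helper = np.array([1.0, 0.0, 0.0]) if abs(u[0]) < 0.9 else np.array([0.0, 1.0, 0.0])
    e1 = np.cross(u, helper)
    e1 = e1 / np.linalg.norm(e1)
    e2 = np.cross(u, e1)
    angle = rng.uniform(0.0, max_angle)        # deviation from the cone axis
    roll = rng.uniform(0.0, 2.0 * np.pi)       # position around the axis
    new_u = np.cos(angle) * u + np.sin(angle) * (np.cos(roll) * e1 + np.sin(roll) * e2)
    scale = 1.0 + rng.uniform(-max_scale, max_scale)
    return scale * length * new_u
```

Shrinking `max_angle` and `max_scale` as the population performance improves moves the search from exploration toward pure descent, which is the role the evolution functions play here.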

To alleviate the unpredictability of actual gradient descent caused by the introduction of , a relaxed projection approach based on is established. The projection position of is determined by the hyperplane supported by its three neighbors in . The hyperplane is defined as and the relaxed factor is denoted as . , which is the offspring of , can be calculated after relaxed projection through

according to the projection theorem, where is adopted to control the constraint strength and is the evolution function of .
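The idea of a relaxed projection can be illustrated in three dimensions, where three neighbors define a plane. The sketch below is a simplification under that assumption (the pose space of this study is higher-dimensional), and `plane_through`, `relaxed_projection`, and `relax` are hypothetical names; `relax = 1` gives the full orthogonal projection and `relax = 0` leaves the point unchanged.

```python
import numpy as np

def plane_through(p0, p1, p2):
    """Unit normal n and offset b of the plane n . x = b through three 3D points."""
    p0, p1, p2 = (np.asarray(p, dtype=float) for p in (p0, p1, p2))
    n = np.cross(p1 - p0, p2 - p0)
    norm = np.linalg.norm(n)
    if norm < 1e-12:
        raise ValueError("the three points are collinear")
    n = n / norm
    return n, float(n @ p0)

def relaxed_projection(x, normal, offset, relax):
    """Move x toward the plane by a fraction `relax` of its signed distance."""
    x = np.asarray(x, dtype=float)
    signed_distance = float(normal @ x) - offset
    return x - relax * signed_distance * normal
```

A relaxation factor below one keeps part of the perturbed step, so the offspring stays near the feasible region boundary without being forced exactly onto it.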

2.4. A Learnable Evolutionary Function Optimization

The evolution functions mentioned above include , , , , , and , which are employed to manage evolution parameters and processes based on the performance of the population. The evolution function is initialized as a linear function, and the abscissas of the head and tail are taken as and , where is the termination threshold. can be referenced by ,. The ordinates of other parameters need to be empirically provided; see Section 3 for details.
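A linearly initialized evolution function can be sketched as a clamped interpolation between a head point and a tail point. The argument names and the numerical values in the usage line are placeholders chosen for illustration, not the configuration of this study.

```python
def make_linear_evolution(p_head, p_tail, v_head, v_tail):
    """Return f(performance): linear between (p_head, v_head) and (p_tail, v_tail).

    Outside [p_head, p_tail] the value is clamped to the nearest endpoint.
    """
    def evolve(performance):
        t = (performance - p_head) / (p_tail - p_head)
        t = min(max(t, 0.0), 1.0)
        return v_head + t * (v_tail - v_head)
    return evolve

# Hypothetical example: a factor that decays from 10.0 to 1.0 as the
# population performance rises from 0.2 to the termination threshold 0.9.
migration = make_linear_evolution(0.2, 0.9, 10.0, 1.0)
```

Each of the six control factors would receive its own function of this kind, which is the fixed-slope baseline that the learning mechanism later replaces.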

As shown in Figure 6, a learning mechanism for optimizing the evolution function is proposed. The learning stage consists of three main modules and is designed as a hierarchical cyclic process. In the data collection module, each image of the dataset is repeatedly tested according to the current evolution function, and the evolution trajectory of the viewpoint with the best effectiveness in each test is collected. The effectiveness of the -th round -th viewpoint is defined as

which can be regarded as a reverse tracking of of the trajectory generated by the final output viewpoint. For each node in , are saved to complete the trajectory collection, where represents the actual sampled value. Afterward, are normalized to prevent overwhelming effects due to the magnitude differences. It can be found that the core purpose of evolution function optimization is to build the mapping relation between the observed population performance and process adjustment through high-quality results. Intuitively, there should be a better way of exploratory decay than six separate descent strategies with fixed slopes.

Figure 6.

A learnable mechanism based on effective trajectory tracking and guided model.

The uneven performance of (there is a large variance of ) generated by linear function will make further learning difficult to converge. To this end, a guided model based on multi-model fusion is designed to provide a reasonable transition for data distribution learning. The linear model can be extended as , where controls the downward trend of the evolution curve, and is the normalized result of each parameter in . Given the head and tail points, can be modeled as functions of . Considering the correlation between parameters, factor fusion is performed as

where is the correlation matrix. In such a way, the relationship between and can be comprehensively modeled by (20). The design of this guided model is derived from an empirical understanding of the evolution process from coarse-to-fine and a priori knowledge of the interaction of parameters. For example, the correlation between and should be prioritized over the correlation between and other parameters.

Furthermore, let the of each parameter be initialized as a constant . The fitting of (20) is executed with as the dataset to obtain the guided model. Next, the guided model is applied to testing and data collection as a temporary evolution function to prepare , which implies that the distribution state of the data will be naturally constrained. A neural network with ResNet18 as the backbone and four fully connected layers as the output is employed for completing the learning of complex nonlinear mapping relationships through . The mapping model , where the input is the set of five parents, and the output is . Let be the new dataset obtained according to the mapping model. Obviously, the construction of is affected directly by the value of , which symbolizes the strictness of the intermediate guidance strategy. For the automation of the whole learning process, the value of is set with reference to following the principle of decreasing integral. For ,

Then for ,

In this study, , and the standard deviation of the output offspring performance after repeated evolution of each image is denoted as . The hierarchical cyclic learning process is shown in Figure 6, which can be specifically expressed as . Each update of is based on the previous one, and the corresponding to the one with the best is taken as the final learned evolution function.

3. Experiment and Results

Section 3.1 shows the preparation work. The cutting-edge DL-based method and classical VPS method are compared in Section 3.2. The robustness of the proposed method to pose deviation and the advantage of enabling the learning mechanism are verified in Section 3.3. Section 3.4 conducts parameter sensitivity analysis to provide a basis for parameter selection; Section 3.5 compares different strategies for offspring generation; the adaptability of the virtual–real difference is presented in Section 3.6.

3.1. Preliminary Works

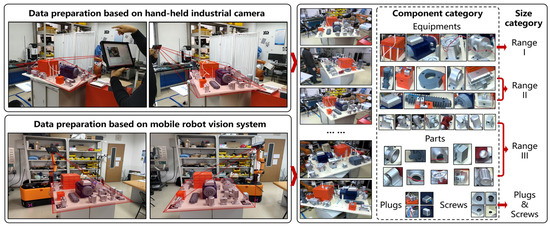

(1) Experimental platform. For this study, a complex assembled product with 113 components was constructed, including 27 different types of equipment, 10 parts (e.g., craft cap), 14 plugs, and 62 screws. The appearance of this product is inconsistent from different viewpoints. Some equipment and parts were surface-treated to exhibit low texture and high color consistency.

(2) Parameter configuration. Migration factor , scale disturbance factor , angle disturbance factor , relaxed factor , search factor , and termination threshold , . The number of parent nodes is 10, and the number of child nodes is 5.

(3) Dataset. As shown in Figure 7, the dataset consists of about 240 viewpoints, which were captured by a hand-held industrial camera and a mobile robot vision system, respectively. The working distance is about 1.8 m, the view width is about 1.5 m, and the resolution is 3264 × 2448. The ground truth was calculated through artificial markers and manual refinement (the beige base is only used to calculate ground truth).

Figure 7.

Practical application, data preparation, and configuration.

(4) Evaluation Indicator. The pose of complex products in the manufacturing industry mainly serves subsequent inspection, AR-based projection, or robot operation. Therefore, the positioning accuracy of each component can better reflect the practical significance of pose accuracy. For this reason, in addition to the widely used pose deviation (mean translation error and mean angular error ), we have also modified IoU as follows:

to better distinguish performance, where is the 3D 8-point box coordinate of component , represents a set of elements ( is the number) belonging to Range * (Range I, II, III, plugs, and screws).
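The indicators above build on standard quantities, which can be sketched as follows: the translation error, the angular error between two unit quaternions, and the plain (unmodified) IoU of two axis-aligned 2D boxes. The modified IoU of this study is not reproduced; the function names and the (xmin, ymin, xmax, ymax) box layout are assumptions.

```python
import numpy as np

def translation_error(t_est, t_gt):
    """Euclidean distance between estimated and ground-truth translations."""
    return float(np.linalg.norm(np.asarray(t_est, float) - np.asarray(t_gt, float)))

def angular_error_deg(q_est, q_gt):
    """Rotation angle (degrees) between two unit quaternions (w, x, y, z)."""
    q_est = np.asarray(q_est, dtype=float)
    q_gt = np.asarray(q_gt, dtype=float)
    q_est = q_est / np.linalg.norm(q_est)
    q_gt = q_gt / np.linalg.norm(q_gt)
    dot = min(abs(float(np.dot(q_est, q_gt))), 1.0)   # |dot| handles q vs. -q
    return float(np.degrees(2.0 * np.arccos(dot)))

def box_iou(a, b):
    """Standard IoU of two axis-aligned boxes given as (xmin, ymin, xmax, ymax)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0
```

For small components such as plugs and screws, a pose error of a few millimeters can already drive the per-component IoU toward zero, which is why a component-level indicator separates methods more clearly than the mean pose deviation alone.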

3.2. Performance of Pose Estimation

Three commonly used solutions in APE were employed for comparison: direct estimation, iterative estimation, and the hierarchical approach. Each solution was supported by a different combination of the most common and classic algorithms. Specifically, the methods for virtual–real feature matching consist of the nearest neighbor principle (S0), normal-based search [14] (S1), fusion of Tukey estimation and color [45] (S2), the region-based method [16] (S3), iterative point matching [39] (S4), and the global strategy FRICP [46]. The optimization part can be arbitrarily selected from EPnP [20], EPPnP, CEPnP [22], ASPnP [31], PPnP [23], and MLPnP [24]. DL-based methods employed in the hierarchical strategy include PoseNet2 [29], single-shot pose [47], and the state-of-the-art estimators PVNet [30] and MegaPose [34]. Other recent studies [31,32] were also examined. However, when the sample size/form and dense auxiliary supervision signals are insufficient, these structurally complex networks are difficult to train sufficiently and are prone to overfitting. Therefore, considering the different assumptions of that research, they were not compared for the sake of fairness.

The control group settings are as follows: (a) Control group I. The virtual viewpoint sampling and matching method described in Section 2 was employed to perform the rough positioning. Only the direct estimation scheme with the best performance is listed. (b) Control group II. Iterative version of Group I. For each optimization algorithm, the combination with the best performance and the combination with the method in Section 2.1 (VPS) were compared. (c) Control group III. Different feature-matching algorithms were embedded into the proposed viewpoint evolution.

The group applying the deep learning method was denoted as Group DL, which includes the following: (a) DL-A. The rough pose was adjusted by PoseNet2 with a different number of bounding box labels. The bounding boxes of two devices from Range I were set as labels in DL-A1, and the same two boxes from Range I, together with another four boxes of devices from Range II, were set as labels in DL-A2. (b) DL-B. The single-shot pose network was trained with the labels of DL-A1, and its output was used for PnP estimation to obtain the rough pose. The label was modified from a bounding box to an 8-point bounding box. (c) DL-C. The estimated result of DL-B was adopted as the rough pose for Group II; DL-C1 represents the combination with the best performance, and DL-C2 represents the combination with VPS. (d) DL-D. PVNet was employed, with semantic labels generated from the minimum bounding rectangle of significant geometric features. (e) DL-E. MegaPose was selected, with the base removed from the 3D model and the bounding box prompt set manually.

For all DL Groups A–D, about 120 real samples and 1200 virtual samples were prepared for model training. The configurations of the proposed method were as follows: (a) Proposed-A. Evolution function learning was disabled in this group, while only one rendering according to the rough pose was allowed. (b) Proposed-B. Evolution function learning and multiple rendering were enabled in this group, and the estimated result of DL-B was accepted as a rough pose.

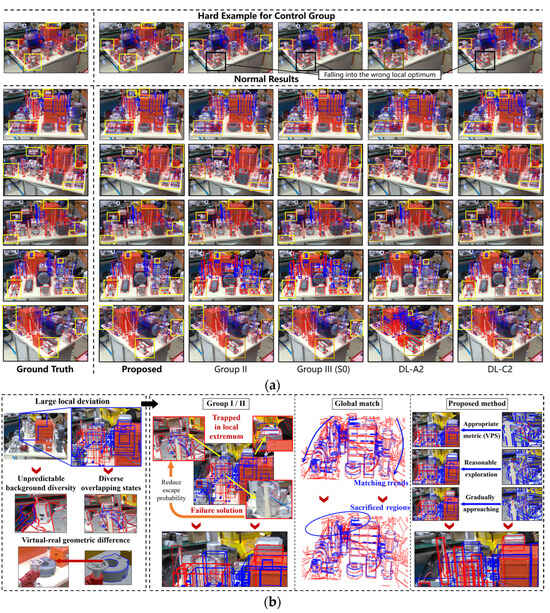

The results are listed in Table 1, and the performance is shown in Figure 8. The areas that can reflect the difference in accuracy are marked, and the higher the brightness, the more accurate the result. Overall, the findings can be summarized as follows: (a) the effectiveness of the proposed method is demonstrated; (b) non-DL methods are still the first choice for high-precision applications; (c) a DL module with weak annotation can be used to provide a suitable initial pose when the dataset is insufficient; (d) the possibility of escaping from the local optimum in feature matching is the main factor affecting the performance.

Table 1.

Camera pose estimation performance comparison.

Figure 8.

Visualization of estimation performance. (a) The error is reflected by the projected deviation of a component with an 8-point box. (b) Performance in dealing with dense noise and geometric differences.

The difficulty of the problem is evident in Figure 8a, and the results of Group I and the reasons are shown in Figure 8b. Unpredictable background diversity and various overlapping states pose challenges to the construction of correspondence in the matching process under dense noise, while virtual–real geometric difference further increases the difficulty of noise identification. Moreover, under some special initial viewpoints, there is an uneven deviation in the virtual–real correspondence, which makes it more difficult to explore the correct direction in local large-deviation areas with dense noise. Take the upper plug in the sixth row of the sub-image as an example. There is a large spatial difference between the plug and all visible components that provide the 2D–3D pairs for pose estimation. Therefore, even if these visible components are well positioned, the of the plug may still be less than 0.5. Through the results of Screws , it can be proved that a satisfactory camera pose cannot be achieved only by direct estimation. Since the local iteration mode of S4 brings more exploration to the virtual–real feature matching of each component, it shows the best performance and is listed in Table 1.

In terms of matching strategy, S3, which simultaneously fuses edge, texture, and color, is the best choice among S0–S4. The matching and weight adjustment of S4 is based on S0, so it is easily disturbed by noise and has no advantage in Group II. Compared with S0–S4, the strategies of structural stability and advantage direction in VPS effectively improve feature matching, especially in reducing the angular error. From the perspective of the matching mechanism, the conventional iterative mode lacks exploration, and this shortcoming cannot be overcome by simply increasing the search range. Once components with sparse texture overlap heavily and the initial deviation is large, the matching process is easily affected, which further leads to a locally optimal result (e.g., Figure 8b). Therefore, as shown in the hard example in Figure 8a, no matter how many iterations are performed, there is no obvious difference between the obtained pose and the rough pose. Similarly, iterative global matching tends to preserve the majority of good correspondences (minor deviation) while sacrificing bad ones (large deviation), resulting in cumulative deviation.

On the other hand, the applied optimization methods usually assume that the noise follows a Gaussian distribution. Therefore, a method can ignore outliers and escape from the locally optimal state only when the distribution of noise in the 2D–3D pairs is close to the Gaussian assumption. In practice, a more common situation is that the final matching results are biased because the support of geometric features in low-quality matching regions is ignored. For example, as shown in the results of Group II in rows 2 and 5, the projection result is skewed by the estimated pose toward the side with suitable 2D–3D pairs. According to the results of the combinations with VPS, the performance difference between the optimization algorithms is not obvious.

The results of Group III demonstrate the robustness of the proposed method to different feature-matching algorithms and its ability to improve them. Owing to its iterative local exploration ability, S4 in Group III shows excellent performance, even surpassing Proposed-A. However, this approach is less efficient and has insufficient practical significance. For the DL group, the dataset size is the primary issue. From the results of DL-A1 and DL-A2, DL models tend to provide suitable results near fully trained viewpoints, while their estimation performance degrades greatly when the target participating in training is occluded. Therefore, the improvement in DL-A2 with more labels is not significant compared with DL-A1. By transforming the pose estimation into a two-stage method of significant geometric point regression and PnP, DL-B effectively alleviates the unreliability of end-to-end estimation and is a recommended strategy for acquiring the rough pose. Supported by DL-B, DL-C further brings better performance to Group II, which fully illustrates the importance of the initial pose. Despite being provided with precise target boxes, DL-E still cannot directly adapt to unseen complex products.

3.3. In-Depth Analysis of the Proposed Method

3.3.1. Verifying Robustness to Initial Pose Deviation

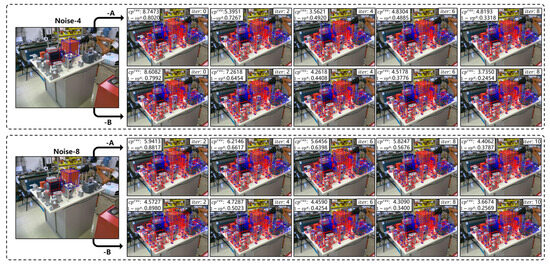

In this section, the repeatability and robustness to initial pose deviation of Proposed-A and -B were tested. The DL-B method was employed in the rough positioning process, and the test was repeated 20 times for each image. Furthermore, different max Gaussian image noises were added to the coordinates of the two 8-point bounding boxes recognized by DL-B.
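The perturbation protocol described above can be sketched in Python as follows. This is only an illustration: the helper names are hypothetical, and treating the "max" noise level as a clipping bound with a standard deviation of one third of that bound is an assumption, since the exact noise model is not specified here.

```python
import random


def perturb_box(corners, max_noise, rng):
    """Add zero-mean Gaussian noise, clipped to +/- max_noise pixels, to 2D corners."""
    if max_noise <= 0:
        return [tuple(c) for c in corners]
    sigma = max_noise / 3.0  # assumption: max noise treated as a 3-sigma bound
    noisy = []
    for x, y in corners:
        dx = max(-max_noise, min(max_noise, rng.gauss(0.0, sigma)))
        dy = max(-max_noise, min(max_noise, rng.gauss(0.0, sigma)))
        noisy.append((x + dx, y + dy))
    return noisy


def repeated_trials(corners, max_noise, repeats=20, seed=0):
    """Generate one perturbed copy of the box per repetition (20 by default)."""
    rng = random.Random(seed)
    return [perturb_box(corners, max_noise, rng) for _ in range(repeats)]
```

Each perturbed box would then be passed to the rough positioning step (PnP in the DL-B setting) before running the evolution, so that the same image is evaluated under 20 different initial poses.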

In Table 2, represents the average value of in all datasets, is the standard deviation of all , and denote the extreme value of , and the same is true for , and . It can be clearly seen that the proposed method exhibits the ability to adapt to rough poses within the error range of mm. Moreover, as shown by the results of and in Noise-0, it is reasonable to believe that the proposed method has suitable repeatability, and this repeatability can be maintained until the noise exceeds the tolerance (e.g., Noise-10). As shown in Figure 9, the pose estimation process was visualized according to the number of iteration rounds. It can be found that a rough pose with a large error requires more exploration, which means that the effective update of the feasible region decreases and the value of tends to increase. The update lag of reduces the probability of occurrence of offspring with suitable before termination, thereby increasing and . Due to the existence of evolution function optimization and multiple rendering mechanisms, the performance gap between -A and -B is obvious, which can also be found by the and projection errors of -A and -B with the same . Once the error of the initial pose exceeds the acceptable range, such as Noise-12, the performance of both groups collapses. In that case, it is necessary to modify the method parameters to improve the degree of exploration, such as increasing the initial ellipsoid size, , , and .
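For readers who want to reproduce statistics of the kind reported in Table 2, a minimal Python sketch is given below. It summarizes a flat list of error values by mean, standard deviation, and extreme values. The function name and the choice of the population standard deviation are assumptions; the study may aggregate per image or use the sample standard deviation instead.

```python
import statistics


def summarize(errors):
    """Mean, standard deviation, and extreme values of a list of error values."""
    if not errors:
        raise ValueError("errors must not be empty")
    return {
        "mean": statistics.fmean(errors),
        # Assumption: population standard deviation over all values.
        "std": statistics.pstdev(errors),
        "min": min(errors),
        "max": max(errors),
    }
```

Calling `summarize` once per noise level and per indicator (translation error, angular error, IoU) yields one row of such a table.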

Table 2.

The robustness to initial pose deviation.

Figure 9.

Visualized comparison of robustness of Proposed-A and -B to pose deviation.

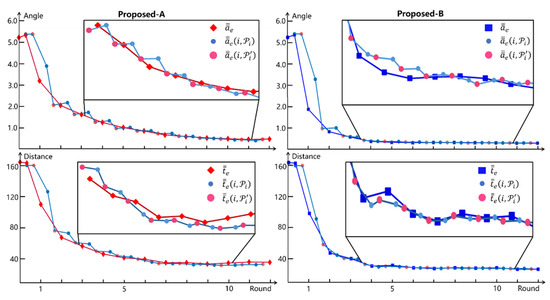

3.3.2. The Advantage of Learning Mechanism

To further explore the performance difference between Proposed-A and -B during the evolution, an additional metric is designed as

where and represent the -th round of parents and the migrated parents. Then, let be the mean of for all repetitions ( is the same), and the results are shown in Figure 10.

Figure 10.

Performance comparison of whether to enable evolution function optimization.

Overall, the final performance and improvement speed of in -B are significantly higher than those of -A, which indicates that a more reasonable parameter reduction approach is conducive to the high-quality update of , indirectly increasing the probability of local difficult components escaping the dilemma. Comparing with their respective , it can be observed that an appropriate reproduction strategy can provide suitable local exploration for the composition of the optimal viewpoint and make . However, as the iterations progress, the absence of fine control for -A leads to the inability to strictly constrain the exploration. Meanwhile, the lack of timely rendering results in viewpoints with high not necessarily exhibiting smaller errors. Therefore, in the later stage, the optimal viewpoint of -A is perturbed and invalidly updated, causing fluctuations in , even greater than . Proposed-B shows better result stability compared with -A, suggesting that many ineffective explorations could be avoided, and a smaller can be obtained. Therefore, Proposed-B was employed in all subsequent experiments.

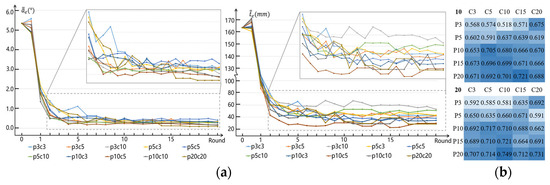

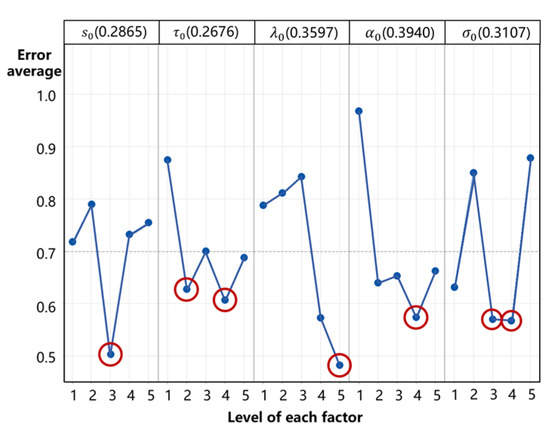

3.4. Parameter Sensitivity Analysis

Important parameters include the number of parents and children and a series of initial values {} related to the initialization of the evolution function. Sensitivity analysis was first carried out on the number of parents and children, and then an orthogonal experiment was performed for {}. Then, based on the results of the orthogonal experiment, the main factors are extracted using the mean effect plot, and further analysis is performed.

As shown in Figure 11, indicates that the numbers of parents and children are both 5. It should be noted that the application of the guided model (20) requires additional sub-viewpoints when , while the of the parent still follows the configuration during offspring generation. In theory, more parents mean greater global exploration, and more children mean stronger local exploration. When is fixed, the performance first increases and then decreases with the increase in , according to the results in Figure 11b. This is because local exploration requires a reasonable combination with global exploration, so blindly expanding will inject too many low-quality attempts, harming the updating of and the evolution process. In terms of process, excessive makes it difficult to maintain the early advantages of evolution and leads to premature convergence to the low-performance range. When is fixed, the performance gradually stabilizes with the increase in the . However, from a process perspective, although the results have improved, more fluctuations have emerged during this period, resulting in delayed convergence. To balance accuracy and efficiency, was adopted for subsequent experiments.

Figure 11.

The influence of the number of parents and children on the performance. (a) Pose performance during evolution. (b) Screws () under different configurations when = 10 and = 20.

Letting , , the orthogonal experiments with 5 factors and 5 levels were performed, and the results are shown in Table 3.

Table 3.

Orthogonal experiment of initial parameters.

Moreover, to further clarify the impact of each factor, we drew a corresponding 5-factor 5-level main effect plot based on Table 3. For ease of calculation, the pose error was merged as , and the results are provided in Figure 12. In terms of value range (the first row), it can be found that have a strong influence on the performance (0.3597, 0.3940) and tend to provide the best results at levels 4 and 5. This is because is a variable that controls the degree of global exploration and determines the search scope of the viewpoint quality evaluation. With the assistance of the method mechanism, an appropriate increase in can indeed contribute to early exploration. As the projection operation based on in offspring generation will provide additional limitations for exploration, has the least impact on the performance due to the close effect of at levels 3 and 4 and the fact that increasing will increase the range of viewpoint evaluation (reduce efficiency). Moreover, the balance of efficiency and performance depends on the hardware devices and application scenarios. In this study, the configuration with and {3, 4, 5, 4, 3} was ultimately selected and provided.
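The main effect analysis of an orthogonal experiment can be sketched in Python as below. For each factor, the responses are averaged per level, and the range of those level means indicates how strongly the factor influences the result. The function names and the toy design are hypothetical and are not the values in Table 3; the same code applies unchanged to a 5-factor, 5-level design.

```python
from collections import defaultdict


def main_effects(design, responses):
    """Per factor, map each level to the mean response of the runs at that level.

    design: list of tuples, one tuple of level indices per run.
    responses: list of floats, one merged error value per run.
    """
    n_factors = len(design[0])
    effects = []
    for f in range(n_factors):
        buckets = defaultdict(list)
        for levels, y in zip(design, responses):
            buckets[levels[f]].append(y)
        effects.append({lvl: sum(v) / len(v) for lvl, v in sorted(buckets.items())})
    return effects


def effect_ranges(effects):
    """Range (max level mean minus min level mean) for each factor."""
    return [max(e.values()) - min(e.values()) for e in effects]


# Toy 3-factor, 2-level orthogonal design (hypothetical data).
toy_design = [(1, 1, 1), (1, 2, 2), (2, 1, 2), (2, 2, 1)]
toy_responses = [10.0, 12.0, 20.0, 22.0]
toy_effects = main_effects(toy_design, toy_responses)
toy_ranges = effect_ranges(toy_effects)
```

In the toy data the first factor has the largest range and would be treated as the dominant factor, while the third factor has no measurable effect.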

Figure 12.

Main effect of performance. The red circle indicates the potential level of factors.

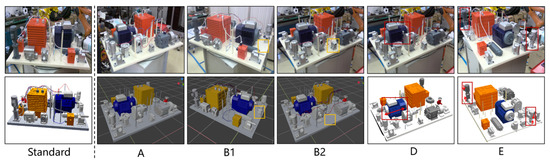

3.5. Appearance Difference Adaptability

This section verified the adaptability of the proposed method to the appearance difference (e.g., product at different stages or lightweight model) and viewpoint difference between the virtual model and real product. The setting of appearance difference is shown in Figure 13, where A, D, and E were not equipped with cables, one device belonging to Range II was removed in B1, one virtual component belonging to Range II was removed in B2, and C = B1 + B2. Additionally, the visual style of Groups A–C was different from the standard, and the part dimensions of Groups D and E were modified, as shown in Figure 13, respectively. The dataset was adjusted according to the above changes, and the experimental results are listed in Table 4.

Figure 13.

Configuration of state difference between 3D model and real product.

Table 4.

Appearance difference adaptability.

The results proved the adaptability of the proposed method to state differences, especially to visual feature changes caused by the differences in model rendering style. Moreover, pose errors of Groups A, D, and E reflect that sparse context changes such as component size modification have less impact on the performance than rendering style changes. Although the error of Group C is close to , 69.59 mm, the proposed method still has an advantage over the existing methods listed in Table 1. The impact of a missing part is significant, and the reasons are shown in Figure 14. The current object is highly similar to the component behind it in this viewpoint, so the mismatch is treated as a high-quality correspondence. Moreover, when occluded components participate in matching, their features differ greatly from the region containing the missing part and instead closely resemble the noise. Therefore, the coupling of the above situations prevents anomalies from being intercepted by VPS, causing viewpoints that should have been discarded to always participate in feasible region construction and evolution, resulting in continuous accumulation of errors.

Figure 14.

Analysis of the impact caused by missing parts.

The viewpoint difference was adjusted by setting the total rendering times of the evolution process. For example, Group 2 means that only the first two rounds enabled rendering. The virtual image rendering was performed based on the viewpoint with the best in each round. The upper limit of iteration rounds was set to 11, and the results are shown in Table 5. Comparing the results of Group 1 in Table 5 with those of Proposed-A in Section 3.2 further confirms the value of evolution function optimization. The results of Groups 1–3 prove the adaptability of the proposed method to the differences between virtual and real viewpoints and the geometric feature inconsistency caused by them. The pose estimation accuracy is positively correlated with the rendering times. However, the change in from Groups 4 to 11 shows that more rendering is not always better. Since suitable results usually appear in the 6th to 9th rounds and then tend to be stable, the difference between virtual and real features brought by additional rendering will stimulate more exploration, which will lead to fluctuation in results and an increase in . Therefore, the balance between accuracy and performance should be determined based on practical applications.
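The group definition used in this experiment can be expressed as a simple per-round schedule. The sketch below is illustrative only; the function name is hypothetical, and it encodes nothing more than the rule that Group k enables rendering in the first k of at most 11 rounds.

```python
def rendering_schedule(group, max_rounds=11):
    """Return one flag per round; True where the virtual image is re-rendered."""
    if not 1 <= group <= max_rounds:
        raise ValueError("group must lie between 1 and max_rounds")
    return [round_idx <= group for round_idx in range(1, max_rounds + 1)]
```

For example, `rendering_schedule(2)` enables rendering in rounds 1 and 2 only, which corresponds to Group 2 in the text.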

Table 5.

Adaptability of the difference between virtual and real viewpoints.

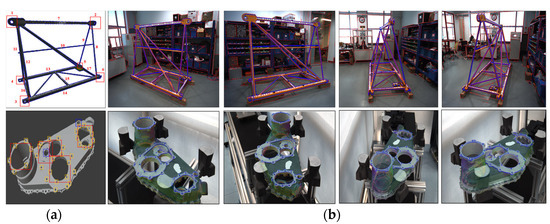

3.6. Practical Application Cases

The application cases in two real manufacturing scenarios are shown in Figure 15.

Figure 15.

Settings and application cases in real scenarios. (a) The components are divided according to size and participate in pose estimation based on this order. (b) Pose estimation performance. The blue points represent the reprojection effect of virtual features.

The first row shows a large-sized truss-type product painting task, and the second row introduces the assembly and gluing of porous structural components assisted by AR. The core task of both cases is pose estimation, and their respective problems correspond to a part of the experimental platform. The test results show that the proposed method can improve the initial error of the former from , 80 mm to , 17 mm, and increase the hole projection deviation of the latter from <0.1 to >0.5. Some valuable setting strategies and potential limitations analysis are as follows.

(1) Setting strategies. (a) Divide object set based on assembly relationships and part sizes, and define a rule for sub-object to gradually participate in evolution; (b) given an empirical viewpoint, obtain the initial pose through the strategies provided in this article or studies [48,49,50]; (c) considering both uniform distribution and matching residuals, determine the constraint features for feasible region initialization; (d) choose to adjust VPS or replace it with other matching strategies based on the object structure; (e) select the number of termination evolution rounds based on observations; and (f) choose whether calibration optimization is needed based on the characteristics of the scene.

(2) Potential limitations. (a) No significant structural features (such as flexible bodies). Without an initial pose, the feasible region cannot be initialized. One possible solution is to adopt a method like MegaPose [34], which can adapt to unseen objects, to provide an initial pose. (b) Excessive initial pose deviation is always a challenge. To guard against possible failures, we recommend constraining the initial pose from an engineering perspective, for example through viewpoint planning, empirical adjustment, or fixtures. (c) Equipment failure. The coverage of the field of view and the rationality of the depth of field need to be given priority during the planning phase.

4. Discussion and Conclusions

In this study, we present a novel attempt at the integration of knowledge-based agent models and data-driven deep learning for accurate pose estimation of complex products. The pose estimation is reconstructed as an evolution process. To solve the problem of large-scale solution space optimization, virtual–real matching considering structural stability, evolvable feasible region constraint, and adaptive population migration and reproduction are proposed. To reduce the empirical dependency in parameter tuning rules, enhance robustness and promote rapid convergence, a guided-model-based hierarchical cyclic effective trajectory learning mechanism is proposed and embedded. Compared with the best result mm and mm in classic and latest solutions under different existing method configurations, the proposed method enjoys better performance, the pose error can reach mm, and the cause of abnormal results can be retraced.

Moreover, through experiments and analysis, we have found the following:

(1) A hierarchical architecture from coarse to fine is a practical approach to image-based pose estimation while maintaining high accuracy and reliability;

(2) Consistent with previous studies [28,29,30,35], cascaded DL module and geometric optimization can effectively balance adaptability and accuracy;

(3) Some practical problems, such as limited samples, complex structure, and multiple elements, make it difficult to directly perform complex advanced DL-based networks. On the contrary, indirect models that only require weak annotation are easier to deploy in practical applications;

(4) For global or divide-and-conquer architectures based on matching, metric, and optimization, the coordination of exploration and optimization requires more detailed regulation in the pose estimation of complex assembled products.

From the perspective of application value, the case studies and validation show that the method will be beneficial for non-serious measurement applications that require camera pose in the assembly process of some complex products, such as AR projection, robot-assisted control, and appearance inspection.

In terms of potential limitations, it is undeniable that many specialized designs and appropriate initial poses need to be ensured. In practical applications, we recommend planning the imaging pose network or employing robot imaging for known scenarios in advance to reduce the search space. Moreover, we plan to carry out further validation in more manufacturing scenarios to identify more possible practical challenges (e.g., actual missing parts) and adopt CNN-based strategies to represent feasible regions.

Author Contributions

Conceptualization, D.Z.; methodology, D.Z.; software, D.Z. and F.K.; validation, D.Z. and F.K.; formal analysis, D.Z. and F.K.; investigation, D.Z. and F.D.; resources, F.D.; data curation, F.K.; writing—original draft preparation, D.Z.; writing—review and editing, D.Z.; visualization, D.Z. and F.K.; supervision, F.D.; project administration, F.D.; funding acquisition, F.D. All authors have read and agreed to the published version of the manuscript.

Funding

This work is financially supported by the National Natural Science Foundation of China (grant number 52375478).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Marchand, E.; Uchiyama, H.; Spindler, F. Pose Estimation for Augmented Reality: A Hands-On Survey. IEEE Trans. Visual Comput. 2016, 22, 2633–2651. [Google Scholar] [CrossRef] [PubMed]

- Wang, Z.; Shang, Y.; Zhang, H. A Survey on Approaches of Monocular CAD Model-Based 3D Objects Pose Estimation and Tracking. In Proceedings of the 2018 IEEE CSAA Guidance, Navigation and Control Conference (CGNCC), Xiamen, China, 10–12 August 2018. [Google Scholar] [CrossRef]

- Jia, Z.; Wang, M.; Zhao, S. A review of deep learning-based approaches for defect detection in smart manufacturing. J. Opt. 2024, 53, 1345–1351. [Google Scholar] [CrossRef]

- Eswaran, M.; Gulivindala, A.K.; Inkulu, A.K.; Bahubalendruni, M.R. Augmented reality-based guidance in product assembly and maintenance/repair perspective: A state of the art review on challenges and opportunities. Expert Syst. Appl. 2023, 213, 118983. [Google Scholar] [CrossRef]

- Hao, J.C.; He, D.; Li, Z.Y.; Hu, P.; Chen, Y.; Tang, K. Efficient cutting path planning for a non-spherical tool based on an iso-scallop height distance field. Chin. J. Aeronaut. 2023, in press. [Google Scholar] [CrossRef]

- Glorieux, E.; Franciosa, P.; Ceglarek, D. Coverage path planning with targeted viewpoint sampling for robotic free-form surface inspection. Robot. Comput. Integr. Manuf. 2020, 61, 101843. [Google Scholar] [CrossRef]

- Du, G.; Wang, K.; Lian, S.; Zhao, K. Vision-based robotic grasping from object localization, object pose estimation to grasp estimation for parallel grippers: A review. Artif. Intell. Rev. 2021, 54, 1677–1734. [Google Scholar] [CrossRef]

- Wang, H.Y.; Shen, Q.; Deng, Z.L.; Cao, X.; Wang, X. Absolute pose estimation of UAV based on large-scale satellite image. Chin. J. Aeronaut. 2023, in press. [CrossRef]

- Zhang, M.; Zhang, C.C.; Wang, W.; Du, R.; Meng, S. Research on Automatic Assembling Method of Large Parts of Spacecraft Based on Vision Guidance. In Proceedings of the 2021 2nd International Conference on Artificial Intelligence and Information Systems, ACM, Chongqing, China, 28–30 May 2021. [Google Scholar] [CrossRef]

- Qin, L.; Wang, T. Design and research of automobile anti-collision warning system based on monocular vision sensor with license plate cooperative target. Multimed. Tools Appl. 2017, 76, 14815–14828. [Google Scholar] [CrossRef]

- Jiang, C.; Li, W.; Li, W.; Wang, D.F.; Zhu, L.J.; Xu, W.; Zhao, H.; Ding, H. A Novel Dual-Robot Accurate Calibration Method Using Convex Optimization and Lie Derivative. IEEE Trans. Robot. 2024, 40, 960–977. [Google Scholar] [CrossRef]

- Huang, B.; Tang, Y.; Ozdemir, S.; Ling, H. A Fast and Flexible Projector-Camera Calibration System. IEEE Trans. Autom. Sci. Eng. 2021, 18, 1049–1063. [Google Scholar] [CrossRef]

- Nubiola, A.; Bonev, I.A. Absolute calibration of an ABB IRB 1600 robot using a laser tracker. Robot. Comput. Integr. Manuf. 2012, 29, 236–245. [Google Scholar] [CrossRef]

- Yu, H.; Huang, Y.; Zheng, D.; Bai, L.; Han, J. Three-dimensional shape measurement technique for large-scale objects based on line structured light combined with industrial robot. Optik 2020, 202, 163656. [Google Scholar] [CrossRef]

- Li, D.; Wang, H.; Liu, N.; Wang, X.; Xu, J. 3D Object Recognition and Pose Estimation from Point Cloud Using Stably Observed Point Pair Feature. IEEE Access 2020, 8, 44335–44345. [Google Scholar] [CrossRef]

- Wuest, H.; Vial, F.; Stricker, D. Adaptive line tracking with multiple hypotheses for augmented reality. In Proceedings of the Fourth IEEE and ACM International Symposium on Mixed and Augmented Reality (ISMAR’05), Vienna, Austria, 5–8 October 2005; pp. 62–69. [Google Scholar] [CrossRef]

- Han, P.; Zhao, G. A review of edge-based 3D tracking of rigid objects. Virtual Real. Intell. Hardw. 2019, 1, 580–596. [Google Scholar] [CrossRef]

- Huang, H.; Zhong, F.; Sun, Y.; Qin, X. An Occlusion-aware Edge-Based Method for Monocular 3D Object Tracking using Edge Confidence. Comput. Graph. Forum 2020, 39, 399–409. [Google Scholar] [CrossRef]

- Jau, Y.-Y.; Zhu, R.; Su, H.; Chandraker, M. Deep keypoint-based camera pose estimation with geometric constraints. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 4950–4957. [Google Scholar] [CrossRef]

- Lepetit, V.; Moreno-Noguer, F.; Fua, P. EPnP: An accurate O(n) solution to the PnP problem. Int. J. Comput. Vis. 2009, 81, 155–166. [Google Scholar] [CrossRef]

- Ferraz, L.; Binefa, X.; Moreno-Noguer, F. Leveraging Feature Uncertainty in the PnP Problem. In Proceedings of the British Machine Vision Conference 2014, British Machine Vision Association, Nottingham, UK, 1 September 2014. [Google Scholar] [CrossRef]

- Zheng, Y.; Sugimoto, S.; Okutomi, M. ASPnP: An Accurate and Scalable Solution to the Perspective-n-Point Problem. Trans. Inf. Syst. 2013, 96, 1525–1535. [Google Scholar] [CrossRef]

- Garro, V.; Crosilla, F.; Fusiello, A. Solving the PnP Problem with Anisotropic Orthogonal Procrustes Analysis. In Proceedings of the 2012 Second International Conference on 3D Imaging, Modeling, Processing, Visualization & Transmission, Zurich, Switzerland, 13–15 October 2012. [Google Scholar] [CrossRef]

- Urban, S.; Leitloff, J.; Hinz, S. MLPnP-A Real-Time Maximum Likelihood Solution to the Perspective-n-Point Problem. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, III-3, 131–138. [Google Scholar] [CrossRef]

- Arandjelovic, R.; Gronat, P.; Torii, A.; Pajdla, T.; Sivic, J. NetVLAD: CNN Architecture for Weakly Supervised Place Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 1437–1451. [Google Scholar] [CrossRef]

- Gordo, A.; Almazán, J.; Revaud, J.; Larlus, D. Deep Image Retrieval: Learning Global Representations for Image Search. In Computer Vision—ECCV 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer International Publishing: Cham, Switzerland, 2016. [Google Scholar] [CrossRef]

- Humenberger, M.; Cabon, Y.; Guerin, N.; Morat, J.; Leroy, V.; Revaud, J.; Rerole, P.; Pion, N.; de Souza, C.; Csurka, G. Robust Image Retrieval-based Visual Localization using Kapture. arXiv 2022, arXiv:2007.13867. [Google Scholar]

- Kendall, A.; Grimes, M.; Cipolla, R. PoseNet: A Convolutional Network for Real-Time 6-DOF Camera Relocalization. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015. [Google Scholar] [CrossRef]

- Kendall, A.; Cipolla, R. Geometric Loss Functions for Camera Pose Regression with Deep Learning. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017. [Google Scholar] [CrossRef]

- Peng, S.; Liu, Y.; Huang, Q.; Zhou, X.; Bao, H. PVNet: Pixel-wise voting network for 6dof pose estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 3212–3223. [Google Scholar] [CrossRef] [PubMed]

- Balntas, V.; Li, S.; Prisacariu, V. RelocNet: Continuous Metric Learning Relocalisation Using Neural Nets. In Computer Vision-ECCV 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2018. [Google Scholar] [CrossRef]

- Xu, Y.; Lin, K.-Y.; Zhang, G.; Wang, X.; Li, H. RNNPose: Recurrent 6-DoF Object Pose Refinement with Robust Correspondence Field Estimation and Pose Optimization. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022. [Google Scholar]

- Bukschat, Y.; Vetter, M. EfficientPose-An efficient, accurate and scalable end-to-end 6D multi object pose estimation approach. arXiv 2020, arXiv:2011.04307. [Google Scholar]

- Labbe, Y.; Manuelli, L.; Mousavian, A.; Tyree, S.; Birchfield, S.; Tremblay, J.; Carpentier, J.; Aubry, M.; Fox, D.; Sivic, J. Megapose: 6d pose estimation of novel objects via render & compare. arXiv 2022, arXiv:2212.06870. [Google Scholar]

- Brachmann, E.; Krull, A.; Michel, F.; Gumhold, S.; Shotton, J.; Rother, C. Learning 6D Object Pose Estimation Using 3D Object Coordinates. In Computer Vision—ECCV 2014; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2014; Volume 8690. [Google Scholar] [CrossRef]

- Brachmann, E.; Krull, A.; Nowozin, S.; Shotton, J.; Michel, F.; Gumhold, S.; Rother, C. DSAC-Differentiable RANSAC for Camera Localization. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Sarlin, P.-E.; Cadena, C.; Siegwart, R.; Dymczyk, M. From Coarse to Fine: Robust Hierarchical Localization at Large Scale. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

- Ben Abdallah, H.; Jovančević, I.; Orteu, J.-J.; Brèthes, L. Automatic Inspection of Aeronautical Mechanical Assemblies by Matching the 3D CAD Model and Real 2D Images. J. Imaging 2019, 5, 81.

- Liu, Y.; De, D.L.; Wei, B.; Chen, L.; Martin, R.R. Regularization Based Iterative Point Match Weighting for Accurate Rigid Transformation Estimation. IEEE Trans. Vis. Comput. Graph. 2015, 21, 1058–1071.

- Hanna, J.P.; Niekum, S.; Stone, P. Importance sampling in reinforcement learning with an estimated behavior policy. Mach. Learn. 2021, 110, 1267–1317.

- Tjaden, H.; Schwanecke, U.; Schömer, E. Real-time monocular pose estimation of 3d objects using temporally consistent local color histograms. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

- Carlile, B.; Delamarter, G.; Kinney, P.; Marti, A.; Whitney, B. Improving Deep Learning by Inverse Square Root Linear Units (ISRLUs). arXiv 2017, arXiv:1710.09967.

- Gill, P.E.; Wong, E. Sequential Quadratic Programming Methods. In Mixed Integer Nonlinear Programming; Lee, J., Leyffer, S., Eds.; Springer: New York, NY, USA, 2012; pp. 147–224.

- Schmid, A.; Biegler, L.T. Reduced Hessian Successive Quadratic Programming for Realtime Optimization. IFAC Proc. Vol. 1994, 27, 173–178.

- Huang, H.; Zhong, F.; Qin, X. Pixel-Wise Weighted Region-Based 3D Object Tracking Using Contour Constraints. IEEE Trans. Vis. Comput. Graph. 2022, 28, 4319–4331.

- Zhang, J.; Yao, Y.; Deng, B. Fast and Robust Iterative Closest Point. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 3450–3466.

- Tekin, B.; Sinha, S.N.; Fua, P. Real-Time Seamless Single Shot 6D Object Pose Prediction. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

- Wang, Q.; Zhou, J.; Li, Z.; Sun, X.; Yu, Q. Robust and Accurate Monocular Pose Tracking for Large Pose Shift. IEEE Trans. Ind. Electron. 2023, 70, 8163–8173.

- Tian, X.; Lin, X.; Zhong, F.; Qin, X. Large-Displacement 3D Object Tracking with Hybrid Non-local Optimization. In Proceedings of Computer Vision – ECCV 2022: 17th European Conference, Tel Aviv, Israel, 23–27 October 2022.

- Stoiber, M.; Pfanne, M.; Strobl, K.H.; Triebel, R.; Albu-Schäffer, A. A sparse gaussian approach to region-based 6DoF object tracking. In Proceedings of Computer Vision – ACCV 2020: 15th Asian Conference on Computer Vision, Kyoto, Japan, 30 November 2020.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).