GNSS-Assisted Visual Dynamic Localization Method in Unknown Environments

Abstract

1. Introduction

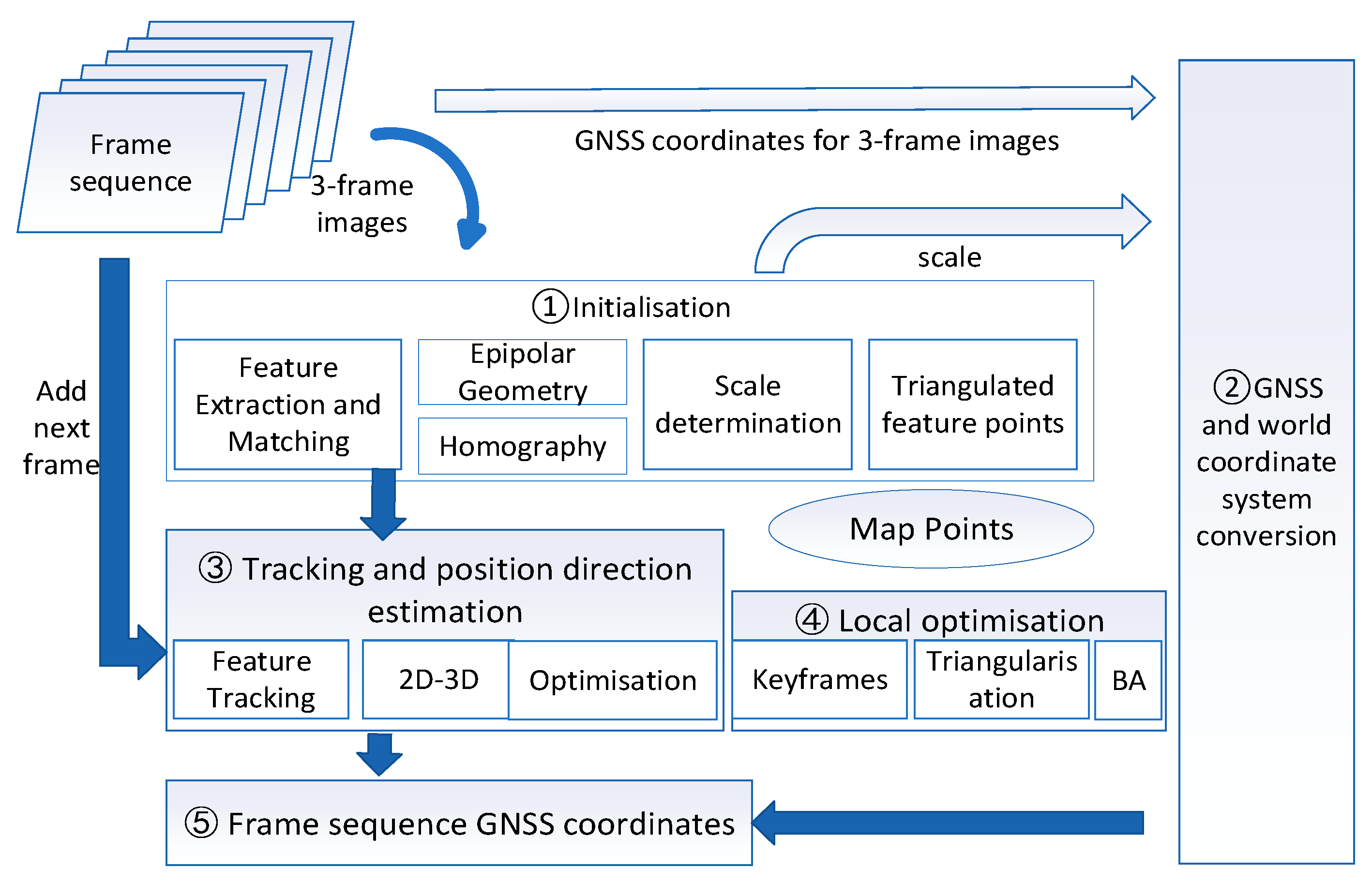

2. GNSS-Assisted Visual Dynamic Localization Method Framework

2.1. Initialization

2.1.1. Positional Solution Using Epipolar Geometry Constraints

2.1.2. Positional Solving Using a Homography Matrix

2.1.3. Model Scores

2.1.4. Scale Determination

2.1.5. Triangulation of the Feature Points

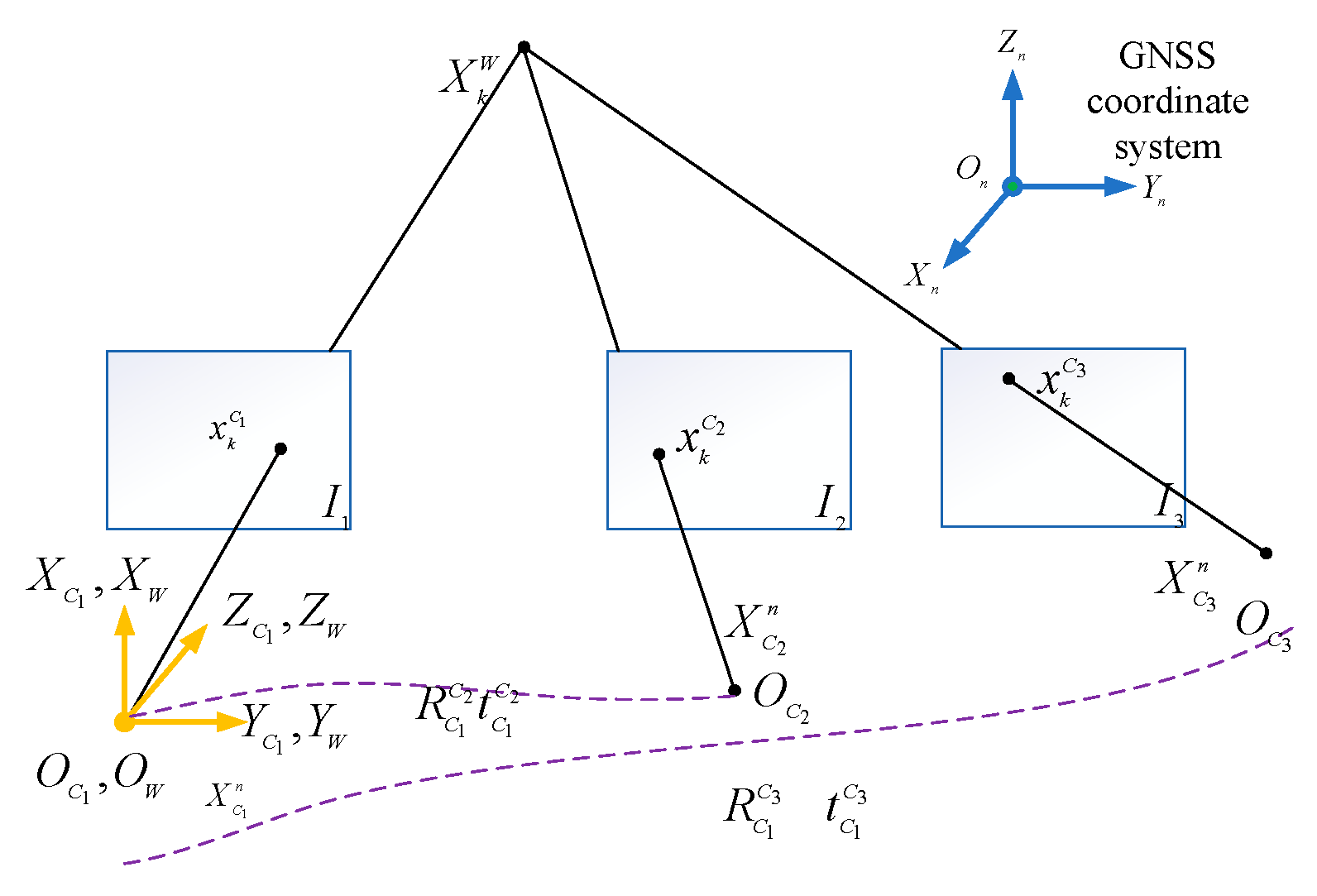

2.2. GNSS and World Coordinate System Conversion

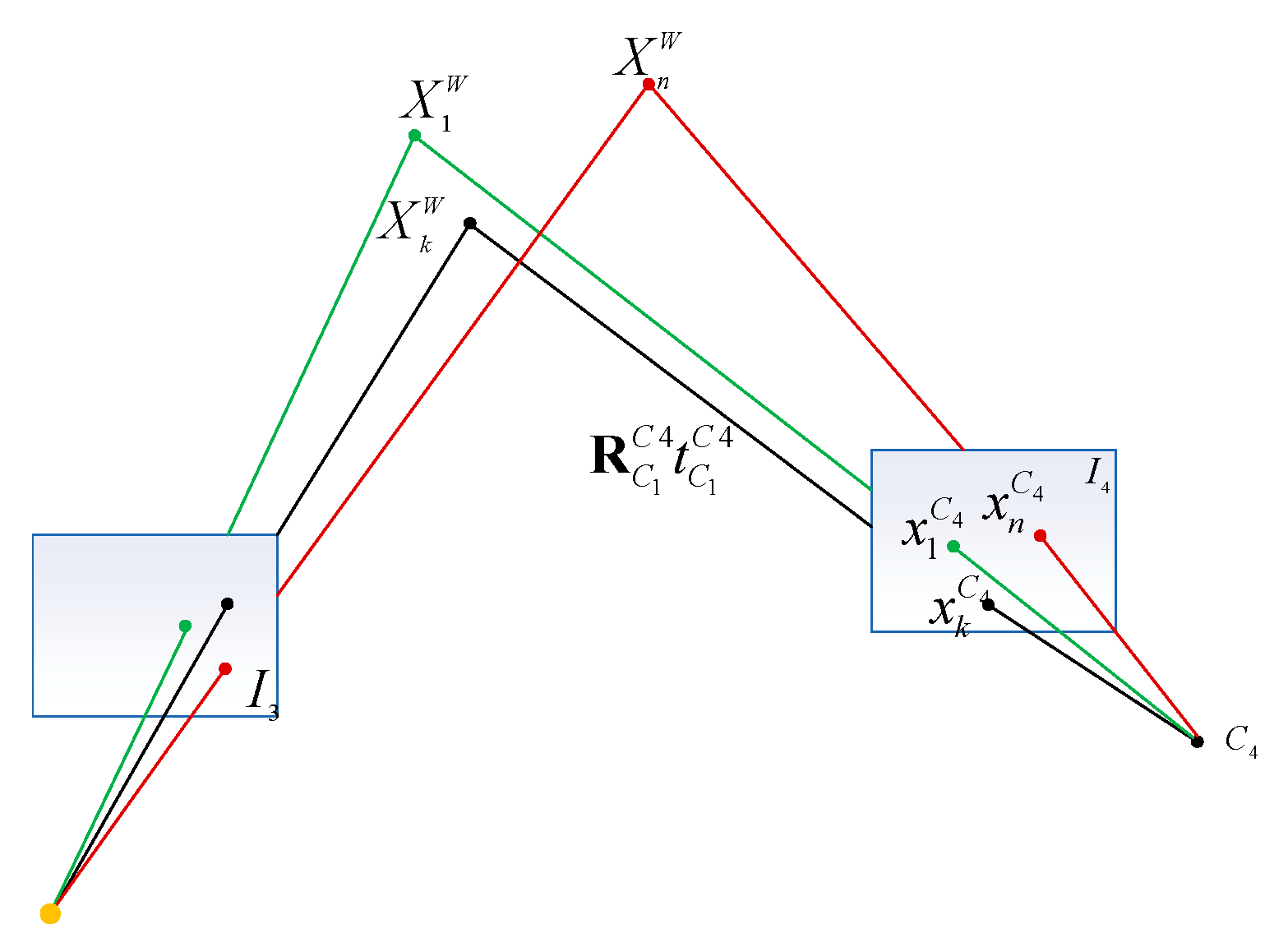

2.3. Subsequent Frame Sequence Tracking and Position-Solving

2.4. Local Optimization

2.5. Subsequent Frame Sequence GNSS Coordinate-Solving

3. The Overall Flow of the Algorithm

4. Dataset Validation

4.1. Data Processing and Analysis

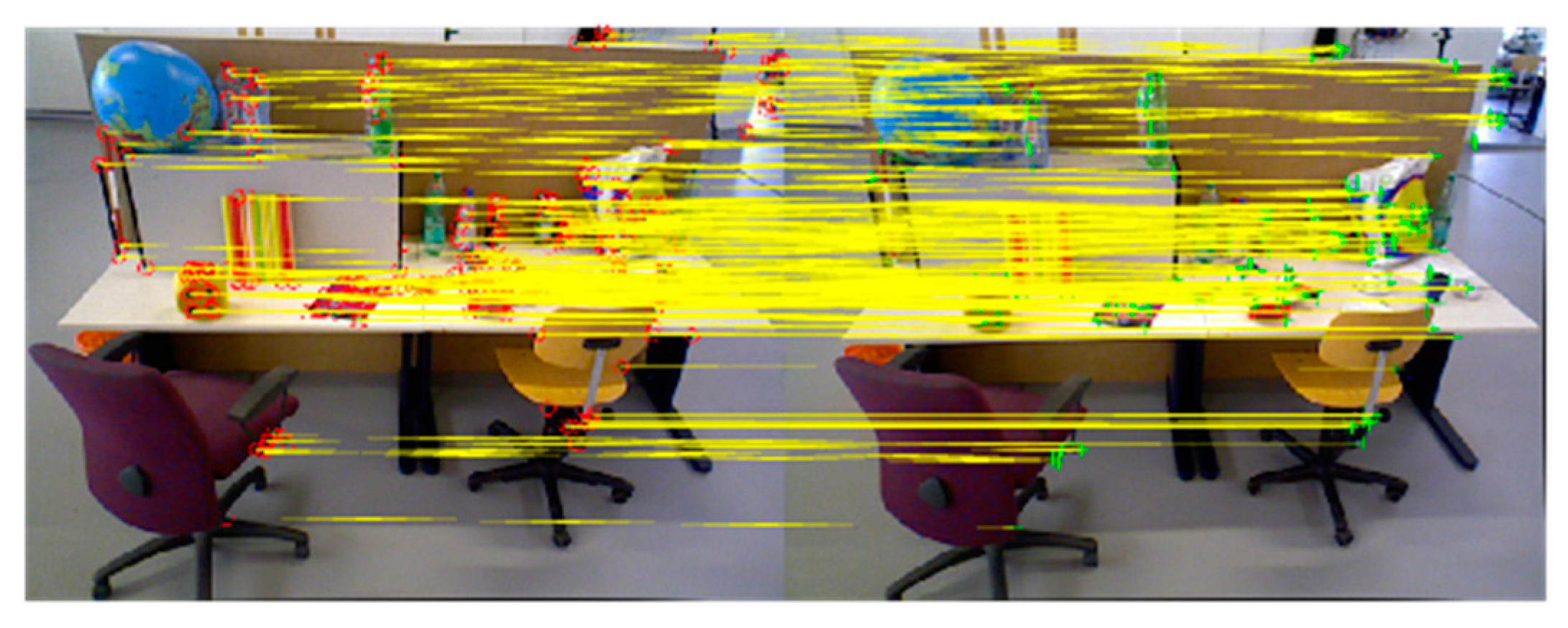

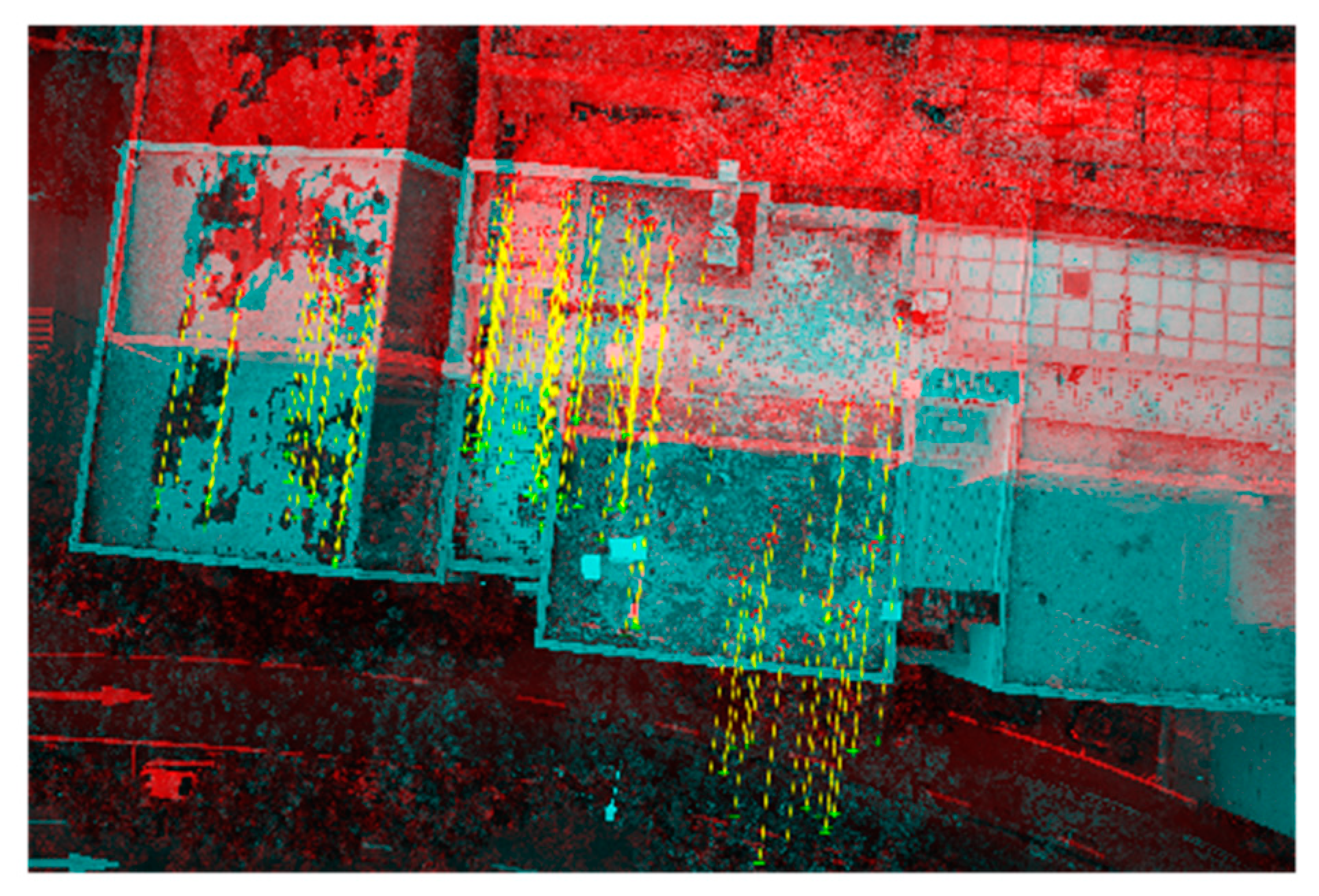

4.1.1. Feature Extraction and Matching

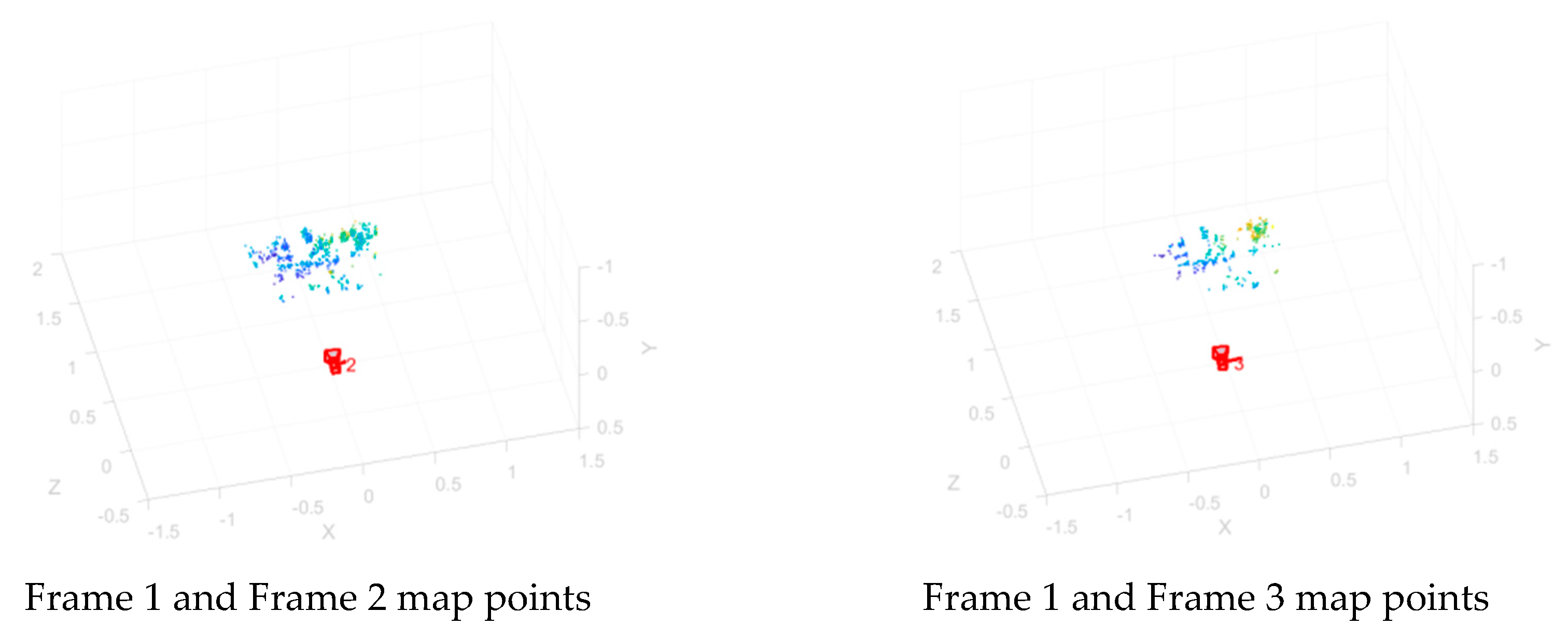

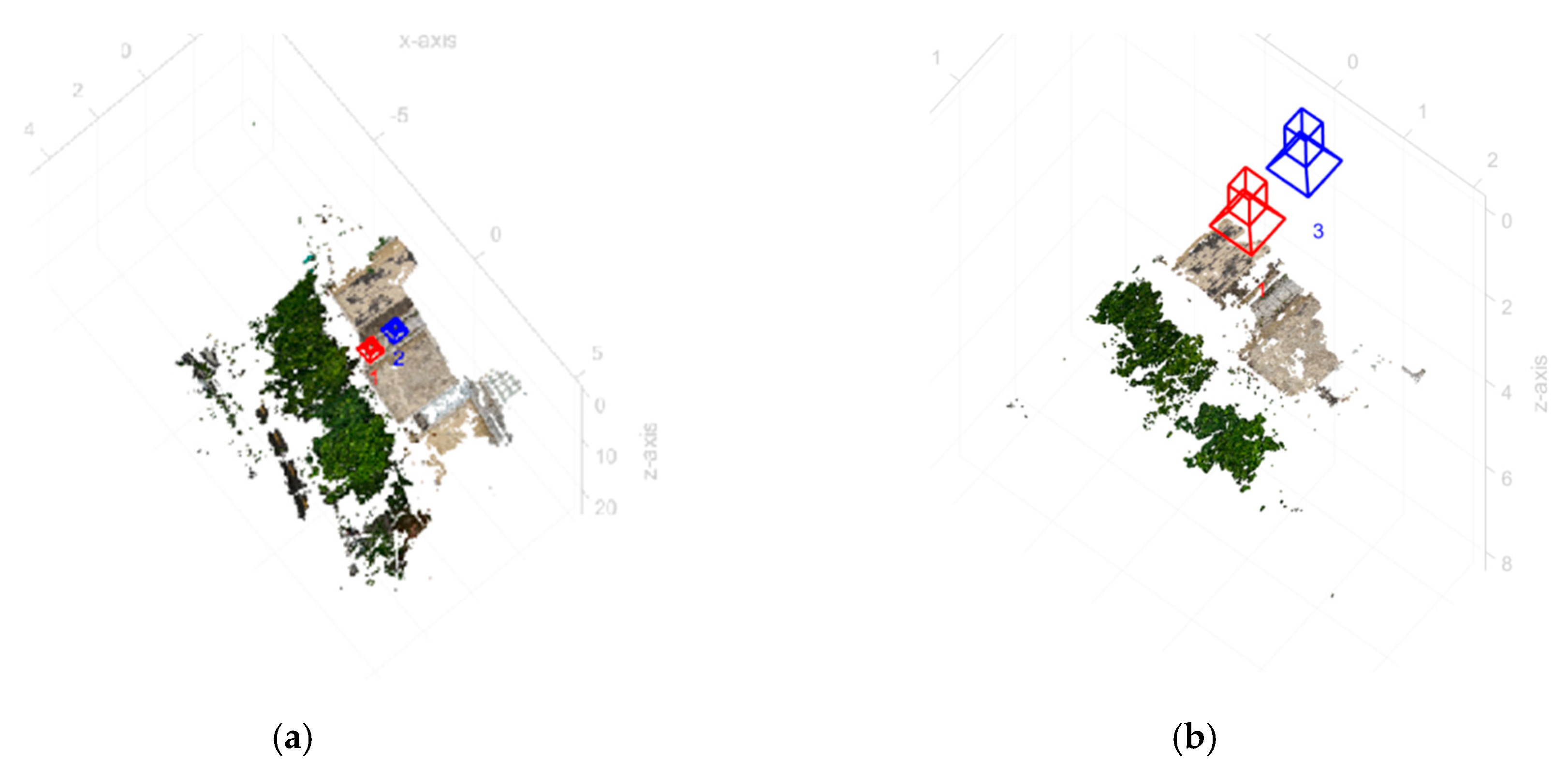

4.1.2. Triangulation

4.1.3. Motion Capture Coordinate System and World Coordinate System Transformation Matrix

4.1.4. Subsequent Camera Poses

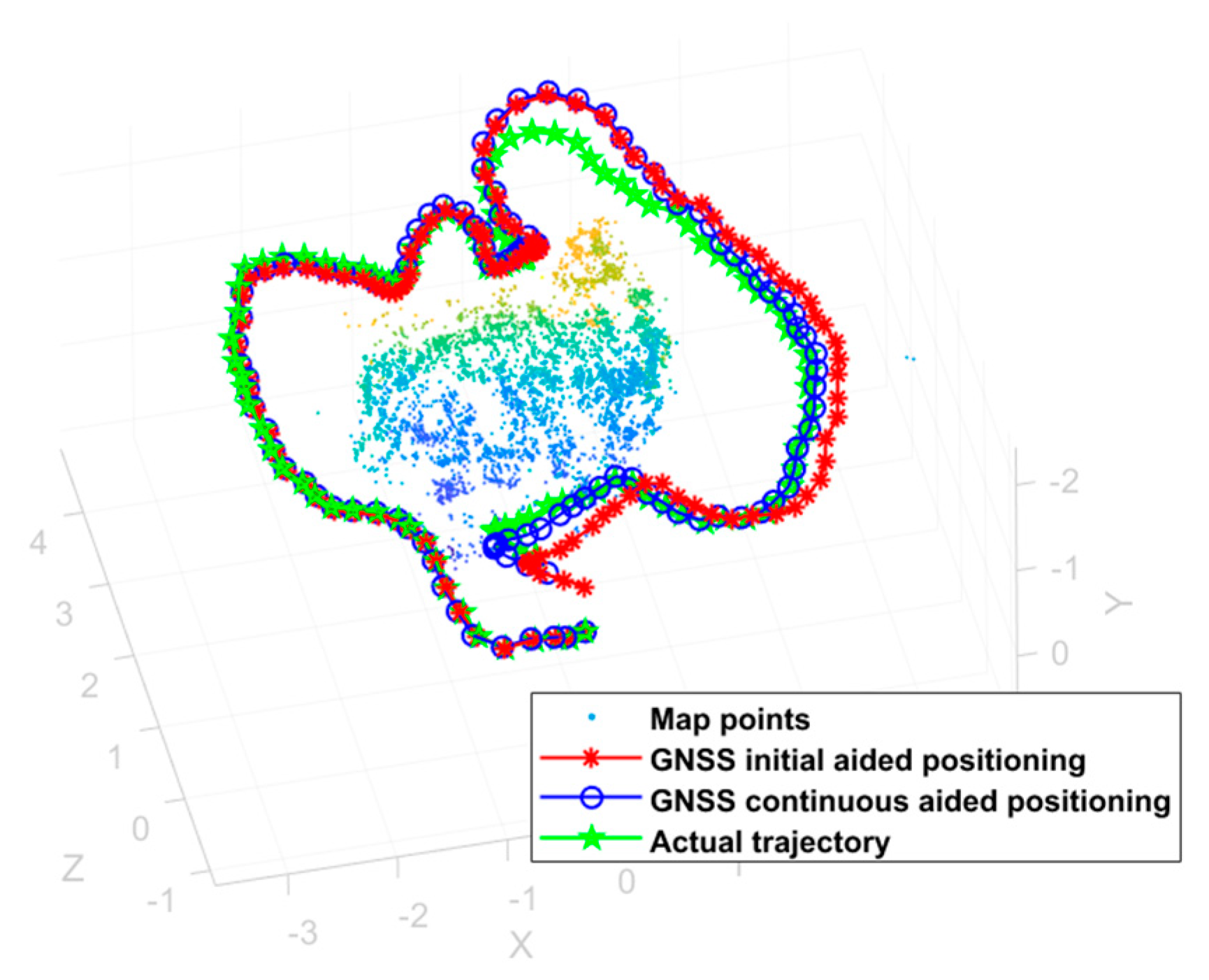

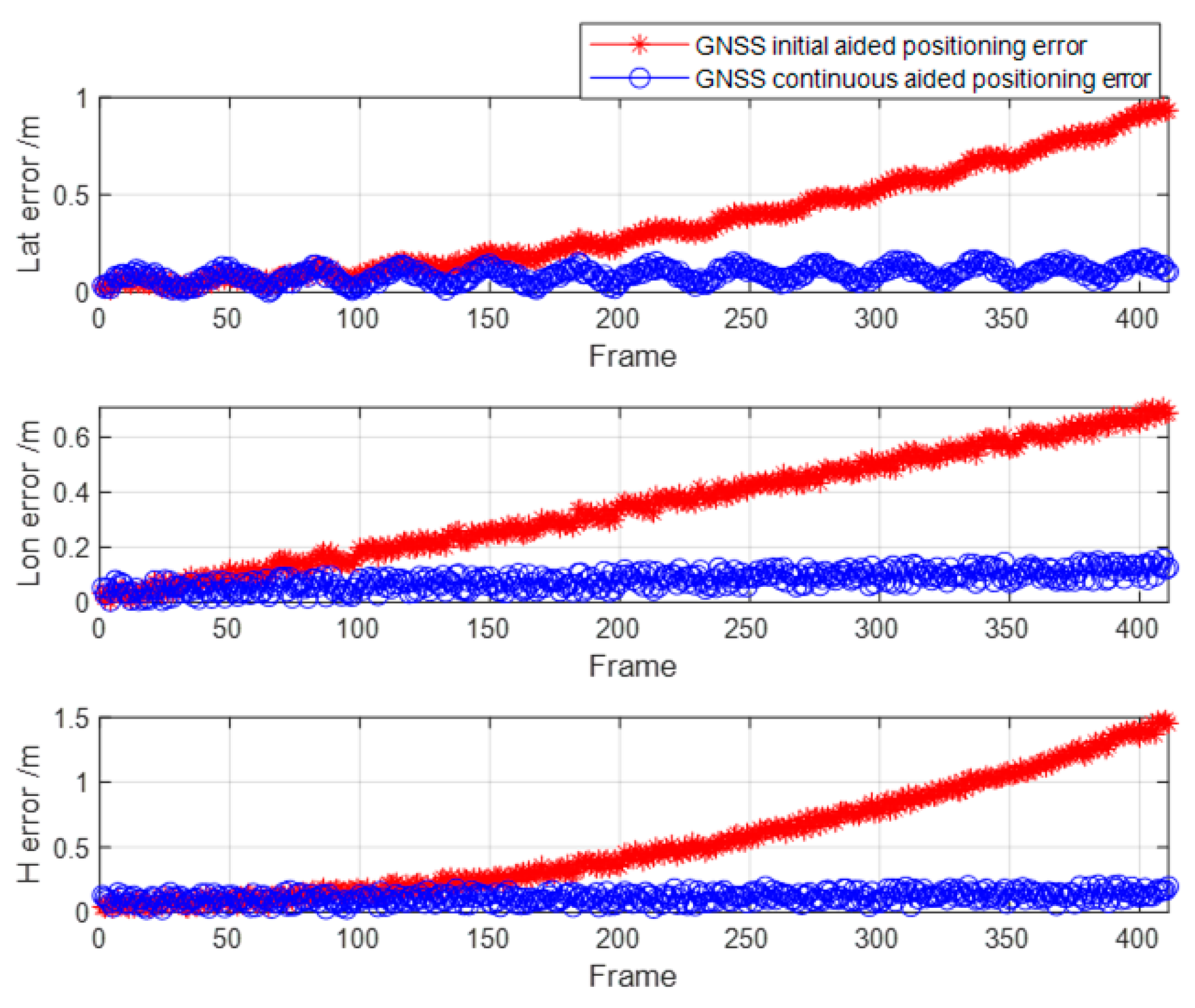

4.2. Error Statistics and Analysis

5. Experiments with Real Data

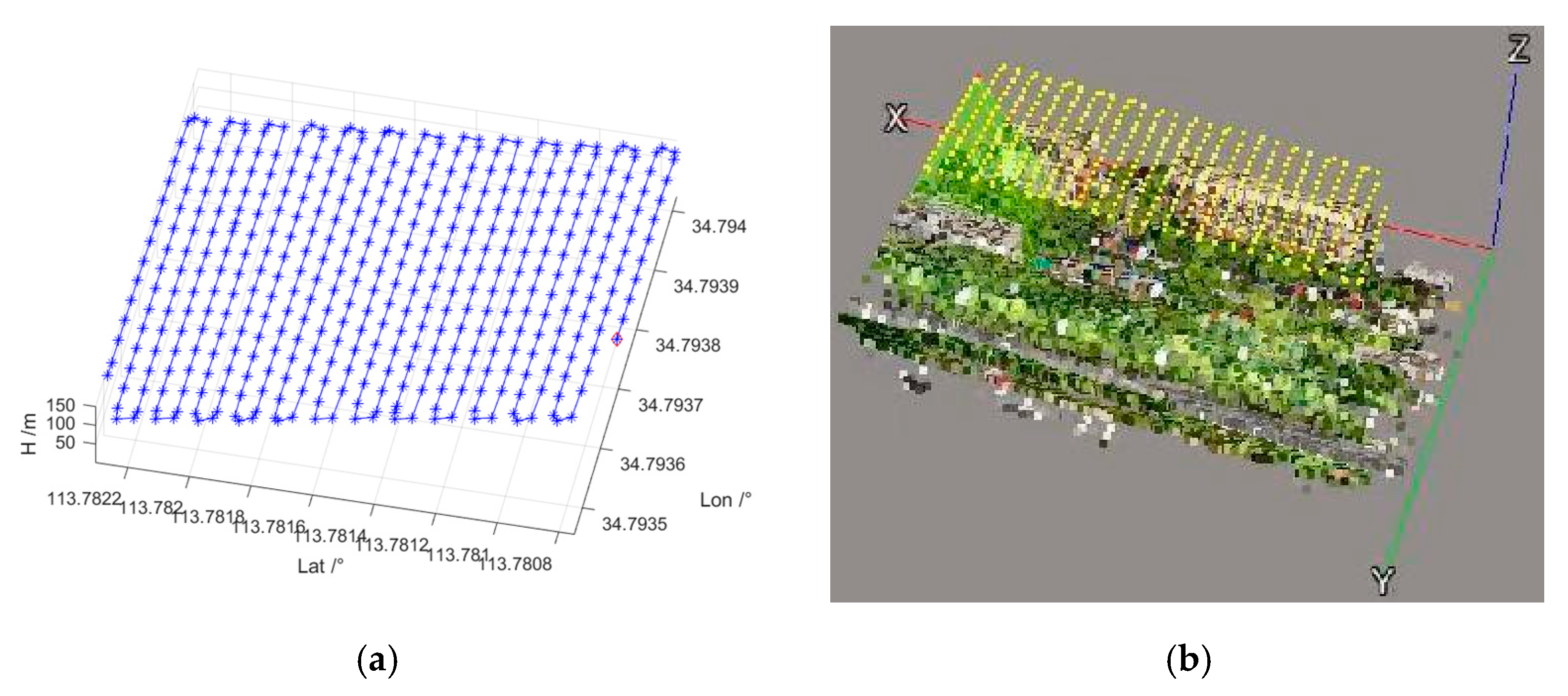

5.1. Data Processing

5.1.1. Camera Calibration

5.1.2. Image Distortion Processing

5.1.3. Feature Point Extraction and Matching

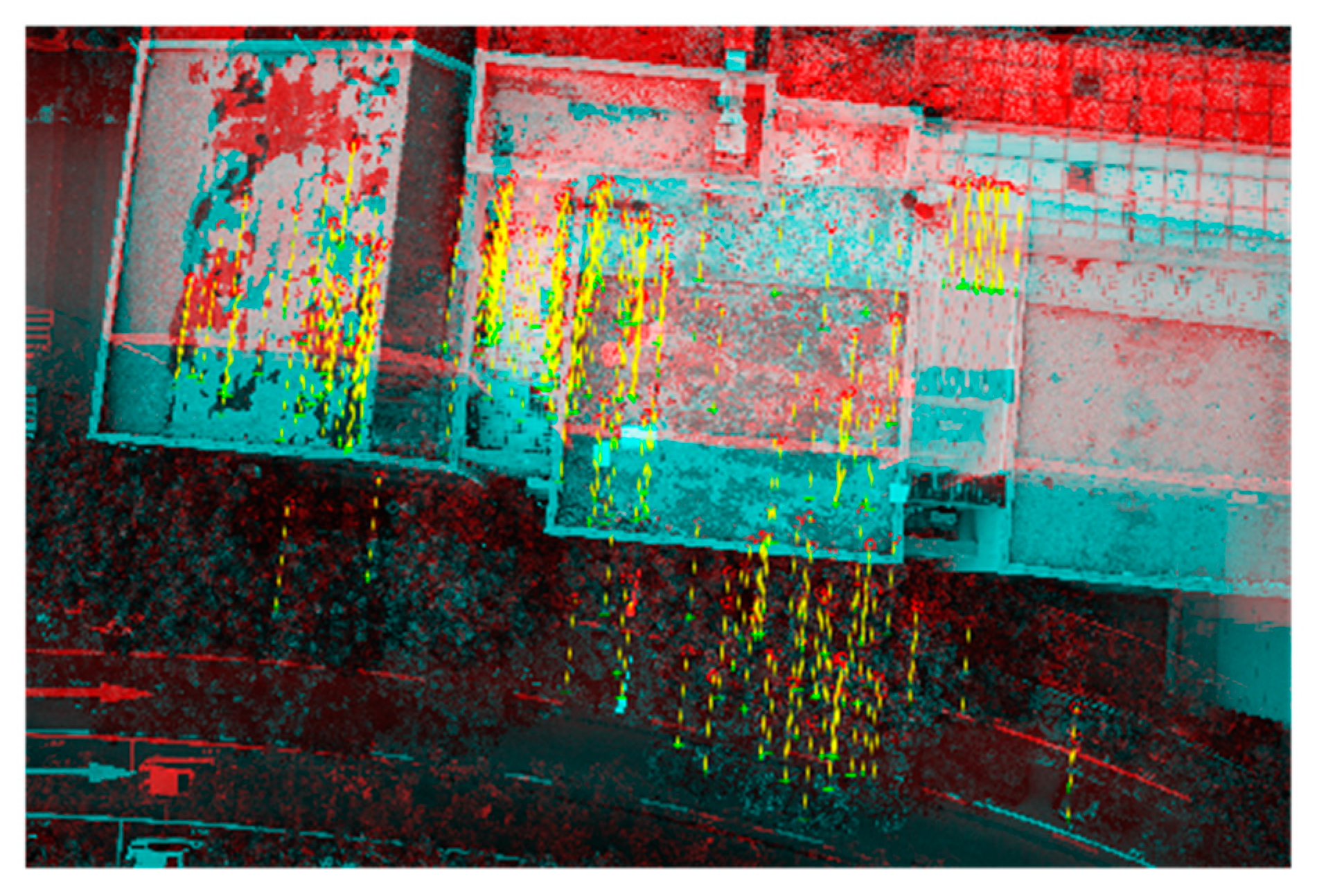

5.2. Error Statistics and Analysis

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Implementation Steps of the Visual Dynamic Positioning Algorithm with GNSS Assistance |
|---|
| Step 1. Using three frames with known GNSS positions as constraints, the camera coordinate system of Frame 1 was taken as the world coordinate system. |
| Step 2. Features were extracted and matched between Frame 1 and Frame 2, and between Frame 1 and Frame 3. |
| Step 3. The epipolar geometry constraint and homography matrix models were evaluated, and the appropriate model was selected for each scenario to estimate the relative poses of Frames 2 and 3 with respect to Frame 1. |
| Step 4. The GNSS position coordinates of Frames 1, 2, and 3 were used as constraint data and combined with the relative poses from Step 3 to calculate the scale. |
| Step 5. Feature points were triangulated using the scaled relative poses; the world coordinates of the matched feature points in the first three frames were obtained and added to the map point library. |
| Step 6. The transformation matrix between the GNSS coordinate system and the world coordinate system was calculated from the known GNSS position coordinates of the three frames. |
| Step 7. We added the next frame and checked whether GNSS data were available. If they were, we repeated Step 7 with the following frame; once GNSS data had been available for three consecutive frames, we returned to Step 1. If no GNSS data were available, we moved on to Step 8. |
| Step 8. Feature points were extracted from Frame 4 and matched with those of the previous keyframe. The pose was solved with RPnP, and BA optimization was performed. Taking Frame 4 as an example, this yields its pose in the world coordinate system. |
| Step 9. We determined whether Frame 4 was a keyframe; if it was, its feature points were triangulated with the previous keyframe, reconstructed, and added to the map library. BA optimization was then used to refine the keyframe poses and the map point coordinates. |
| Step 10. Using the GNSS coordinates of Frame 1 and the transformation matrix obtained in Step 6, the coordinates of Frame 4 in the GNSS coordinate system were calculated. |
| Step 11. We returned to Step 7 to obtain the GNSS coordinates of subsequent frames. |
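Steps 6 and 10 can be illustrated with a minimal Python sketch. It assumes that GNSS positions have already been converted to a local Cartesian frame (for example, east-north-up in metres), ignores the offset between the GNSS antenna and the camera, and fits the GNSS-world transform from exactly three non-collinear frames by building an orthonormal triad in each frame. This triad construction is an exact-fit stand-in chosen for brevity and is not necessarily the solver used by the authors; with noisy data a least-squares absolute-orientation method (such as Horn's quaternion solution) would be preferable. The function names `triad` and `world_to_gnss` are hypothetical.

```python
import math
from typing import Callable, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def mul(v: Vec3, s: float) -> Vec3:
    return (v[0] * s, v[1] * s, v[2] * s)


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def unit(v: Vec3) -> Vec3:
    return mul(v, 1.0 / math.sqrt(dot(v, v)))


def triad(p1: Vec3, p2: Vec3, p3: Vec3) -> Tuple[Vec3, Vec3, Vec3]:
    """Right-handed orthonormal basis built from three non-collinear points."""
    e1 = unit(sub(p2, p1))
    e3 = unit(cross(e1, sub(p3, p1)))
    e2 = cross(e3, e1)
    return e1, e2, e3


def world_to_gnss(world: Sequence[Vec3], gnss: Sequence[Vec3]) -> Callable[[Vec3], Vec3]:
    """Fit q = s * R * p + t from Frames 1-3 and return a world -> GNSS mapping."""
    w1, w2, w3 = world
    g1, g2, g3 = gnss
    # Scale from baseline lengths (close to 1 if the world frame is already metric).
    s = (math.dist(g2, g1) + math.dist(g3, g1)) / (math.dist(w2, w1) + math.dist(w3, w1))
    bw, bg = triad(w1, w2, w3), triad(g1, g2, g3)

    def to_gnss(p: Vec3) -> Vec3:
        d = sub(p, w1)
        # R maps each world triad axis onto the matching GNSS triad axis.
        rotated = add(add(mul(bg[0], dot(bw[0], d)), mul(bg[1], dot(bw[1], d))),
                      mul(bg[2], dot(bw[2], d)))
        # Anchored at Frame 1: q = g1 + s * R * (p - w1).
        return add(g1, mul(rotated, s))

    return to_gnss
```

With Frame 1 at the world origin, `to_gnss = world_to_gnss([c1, c2, c3], [g1, g2, g3])` followed by `to_gnss(c4)` mirrors Step 10: the result is Frame 1's GNSS coordinates plus the scaled and rotated world position of Frame 4.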

| Parameter | Value |
|---|---|
| Resolution | 640 × 480 pixels |
| Intrinsics | |
| Distortion coefficients | |
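The intrinsics and distortion coefficients above are what Sections 5.1.1 and 5.1.2 rely on to project points and remove lens distortion. The Python sketch below assumes the common pinhole model with Brown-Conrady radial (k1, k2, k3) and tangential (p1, p2) distortion, the convention used by OpenCV and the MATLAB calibration toolbox; it is an illustration, not necessarily the exact model the authors calibrated. The class name `PinholeCamera` is hypothetical, and any numbers passed to it in practice should come from the calibration results. Undistortion inverts the model by fixed-point iteration, which typically converges quickly for moderate distortion.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class PinholeCamera:
    """Pinhole camera with Brown-Conrady distortion (OpenCV-style coefficient names)."""
    fx: float
    fy: float
    cx: float
    cy: float
    k1: float = 0.0
    k2: float = 0.0
    k3: float = 0.0
    p1: float = 0.0
    p2: float = 0.0

    def _radial(self, r2: float) -> float:
        return 1.0 + self.k1 * r2 + self.k2 * r2 ** 2 + self.k3 * r2 ** 3

    def _tangential(self, x: float, y: float) -> Tuple[float, float]:
        r2 = x * x + y * y
        dx = 2.0 * self.p1 * x * y + self.p2 * (r2 + 2.0 * x * x)
        dy = self.p1 * (r2 + 2.0 * y * y) + 2.0 * self.p2 * x * y
        return dx, dy

    def project(self, X: float, Y: float, Z: float) -> Tuple[float, float]:
        """Camera-frame 3D point -> distorted pixel coordinates (u, v)."""
        x, y = X / Z, Y / Z
        radial = self._radial(x * x + y * y)
        dx, dy = self._tangential(x, y)
        return (self.fx * (x * radial + dx) + self.cx,
                self.fy * (y * radial + dy) + self.cy)

    def undistort_pixel(self, u: float, v: float, iters: int = 20) -> Tuple[float, float]:
        """Distorted pixel -> ideal (distortion-free) pixel via fixed-point iteration."""
        xd, yd = (u - self.cx) / self.fx, (v - self.cy) / self.fy
        x, y = xd, yd  # initial guess: ignore distortion
        for _ in range(iters):
            radial = self._radial(x * x + y * y)
            dx, dy = self._tangential(x, y)
            x, y = (xd - dx) / radial, (yd - dy) / radial
        return self.fx * x + self.cx, self.fy * y + self.cy
```

Feature extraction and matching would then operate on the corrected coordinates returned by `undistort_pixel`, so that the epipolar and homography models see an ideal pinhole camera.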

| | Frame 1 and Frame 2 | Frame 1 and Frame 3 |
|---|---|---|
| F | | |
| H | | |
| Ratio | 0.7409 | 0.7496 |
| Model | Epipolar Geometry | Epipolar Geometry |
| R, t | | |
| Scale | | |
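The R, t and Scale rows are linked: decomposing the essential matrix recovers each pair's translation only up to scale (typically as a unit vector), so each pair needs its own metric scale. A minimal sketch of Steps 4 and 5 might fix that scale with the GNSS baseline between the two frames and then triangulate matched points with the scaled pose. The sketch assumes GNSS positions in a local metric frame, neglects the antenna-to-camera lever arm, uses the convention X2 = R X1 + t with Frame 1 as the world frame, and triangulates with the midpoint method (halfway between the closest points of the two viewing rays) rather than whichever triangulation method the authors used. The names `pair_scale` and `triangulate_midpoint` are hypothetical.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Sequence[Sequence[float]]  # row-major 3x3 rotation matrix


def dot(a: Vec3, b: Vec3) -> float:
    return sum(x * y for x, y in zip(a, b))


def add(a: Vec3, b: Vec3) -> Vec3:
    return tuple(x + y for x, y in zip(a, b))


def mul(v: Vec3, s: float) -> Vec3:
    return tuple(x * s for x in v)


def rot_t(R: Mat3, v: Vec3) -> Vec3:
    """Apply the transpose (inverse) of rotation R to v."""
    return tuple(sum(R[j][i] * v[j] for j in range(3)) for i in range(3))


def pair_scale(gnss_a: Vec3, gnss_b: Vec3, t_up_to_scale: Vec3) -> float:
    """Metric scale = GNSS baseline length / length of the monocular translation."""
    return math.dist(gnss_a, gnss_b) / math.sqrt(dot(t_up_to_scale, t_up_to_scale))


def triangulate_midpoint(R: Mat3, t: Vec3, x1: Tuple[float, float],
                         x2: Tuple[float, float]) -> Vec3:
    """Triangulate one match from normalized image coords in Frame 1 (world) and Frame 2.

    R, t map world coordinates to Frame-2 coordinates (X2 = R X1 + t), t in metres.
    """
    c1: Vec3 = (0.0, 0.0, 0.0)
    c2 = mul(rot_t(R, t), -1.0)            # Frame-2 camera centre: -R^T t
    d1: Vec3 = (x1[0], x1[1], 1.0)          # viewing ray of Frame 1
    d2 = rot_t(R, (x2[0], x2[1], 1.0))      # Frame-2 ray rotated into the world frame
    w0 = add(c1, mul(c2, -1.0))
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("viewing rays are (nearly) parallel")
    s1 = (b * e - c * d) / denom            # closest-point parameter on ray 1
    s2 = (a * e - b * d) / denom            # closest-point parameter on ray 2
    p1, p2 = add(c1, mul(d1, s1)), add(c2, mul(d2, s2))
    return mul(add(p1, p2), 0.5)
```

Because Frames 1-2 and Frames 1-3 are solved as separate two-view problems, `pair_scale` would be applied once per pair before triangulating that pair's matches.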

| Method | Metric | Frame 20 | Frame 70 | Frame 100 | Frame 123 |
|---|---|---|---|---|---|
| GNSS initial-aided positioning error | MAE_x (m) | 0.0223 | 0.0369 | 0.0844 | 0.1111 |
| | MAE_y (m) | 0.0199 | 0.0681 | 0.0924 | 0.1230 |
| | MAE_z (m) | 0.0114 | 0.0579 | 0.0833 | 0.1412 |
| GNSS continuous-aided positioning error | MAE_x (m) | 0.0245 | 0.0338 | 0.0586 | 0.0560 |
| | MAE_y (m) | 0.0189 | 0.0651 | 0.0687 | 0.0820 |
| | MAE_z (m) | 0.0156 | 0.0191 | 0.0501 | 0.0626 |

| Error | Axis | MAE (m) | RMSE (m) | MAXE (m) |
|---|---|---|---|---|
| GNSS initial-aided positioning errors | x | 0.3521 | 0.2686 | 0.9418 |
| | y | 0.3492 | 0.1960 | 0.7052 |
| | z | 0.5338 | 0.4157 | 1.4509 |
| GNSS continuous-aided positioning errors | x | 0.1016 | 0.0943 | 0.1823 |
| | y | 0.0959 | 0.0704 | 0.1531 |
| | z | 0.1650 | 0.1807 | 0.3005 |
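Per-axis statistics like those in these tables can be computed with the Python sketch below, which uses the textbook definitions of mean absolute error (MAE), root-mean-square error (RMSE), and maximum absolute error (MAXE). It assumes the estimated and reference positions (for example, motion-capture ground truth) are already time-synchronized and expressed in the same coordinate frame; the authors' exact evaluation procedure may differ. The function name `per_axis_errors` is hypothetical.

```python
import math
from typing import Dict, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def per_axis_errors(estimated: Sequence[Vec3],
                    reference: Sequence[Vec3]) -> Dict[str, Tuple[float, float, float]]:
    """Return {axis: (MAE, RMSE, MAXE)} in the units of the inputs (e.g. metres)."""
    if not estimated or len(estimated) != len(reference):
        raise ValueError("need two non-empty sequences of equal length")
    n = len(estimated)
    stats = {}
    for k, axis in enumerate("xyz"):
        # Absolute error of each frame along this axis.
        abs_err = [abs(e[k] - r[k]) for e, r in zip(estimated, reference)]
        mae = sum(abs_err) / n
        rmse = math.sqrt(sum(v * v for v in abs_err) / n)
        stats[axis] = (mae, rmse, max(abs_err))
    return stats
```

Passing the estimated trajectory of one method together with the ground truth yields one row group of the table (x, y, z).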


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dai, J.; Zhang, C.; Liu, S.; Hao, X.; Ren, Z.; Lv, Y. GNSS-Assisted Visual Dynamic Localization Method in Unknown Environments. Appl. Sci. 2024, 14, 455. https://doi.org/10.3390/app14010455

Dai J, Zhang C, Liu S, Hao X, Ren Z, Lv Y. GNSS-Assisted Visual Dynamic Localization Method in Unknown Environments. Applied Sciences. 2024; 14(1):455. https://doi.org/10.3390/app14010455

Chicago/Turabian Style: Dai, Jun, Chunfeng Zhang, Songlin Liu, Xiangyang Hao, Zongbin Ren, and Yunzhu Lv. 2024. "GNSS-Assisted Visual Dynamic Localization Method in Unknown Environments" Applied Sciences 14, no. 1: 455. https://doi.org/10.3390/app14010455

APA Style: Dai, J., Zhang, C., Liu, S., Hao, X., Ren, Z., & Lv, Y. (2024). GNSS-Assisted Visual Dynamic Localization Method in Unknown Environments. Applied Sciences, 14(1), 455. https://doi.org/10.3390/app14010455