1. Introduction

Recent advances in the fields of robotics, the Internet of Things, and above all artificial intelligence (AI) are now providing a new innovation ground for the next era of farming, often called Farming 4.0 (or Farming 5.0 in some cases). This overarching term combines research fields like precision farming, precision livestock farming, or smart farming, which are all driven by the technological advances in the information and communication technology (ICT) sector. It is thought that the broad application of novel technologies supports the achievement of various political declarations and societal goals (e.g., the Green Deal, Paris Agreement, SDGs, and Farm to Fork Strategy) [

1,

2,

3], providing critical mitigation and adaptation capacities to tackle global issues such as greenhouse gas emissions, acidification, or eutrophication. This should be achieved by assisting stakeholders in tackling the aforementioned challenges whilst ensuring productivity goals by enabling real-time monitoring, simulation, and automation capabilities through high-fidelity models and bidirectional information flows [

3,

4]. Despite the manifold benefits of novel developments in Farming 4.0 [

5,

6], technology introduction without consideration for the needs of users may actually hinder sustainability efforts [

5,

7], highlighting the importance of responsible technology development within complex social-technological-ecological systems (STES). This is especially the case for AI: owing to the maturity of the technology and its diverse and scalable application fields, it will be a critical part of many future farming technologies, with a global compound annual growth rate of 23.1 percent until 2028 [

8]. As farmers’ new roles, skills, and requirements in such an environment are changing rapidly, frameworks and design requirements are needed to understand the manifold implications of AI and guide responsible implementation to maintain autonomy and limit potentially adverse effects. Specific technological design recommendations only address a fixed set of problems. Therefore, this article focuses on expanding the understanding of multivariate dependencies in technological integration and emphasizes the importance of prioritizing human-coupled environmental actions. It shows how an STES perspective can be used to define technological requirements for responsible AI development, exemplified by AI’s effects on farmers’ autonomy and its potential to mitigate them. By following the structure of a “systematization of knowledge” approach, this article systematizes the threat landscape, exemplifies technological developments to mitigate the risks, provides a high-level framework for technology development, and elaborates on future work in this field.

In this context, this work aims to explore four specific subtopics in four sections:

The concept of autonomy and the introduction of the STES approach;

The systemic effects of AI and autonomous technologies and systems on the autonomy of farmers and current challenges in AI research for the preservation of farmers’ autonomy;

The principles, techniques, and methodologies for the trustworthy deployment of an AI framework;

The increase in alignment of AI systems to user needs by design and implementation from an STES perspective.

To accomplish this, the initial section introduces autonomy and the design principles of STES. The second section is organized from the STES perspective and offers a comprehensive overview of the threat landscape concerning responsible AI development. The third section illustrates the potential of technological design choices to tackle the aforementioned challenges. The fourth section discusses the high-level framework for establishing requirements within a responsible technology design process.

2. Farmers’ Autonomy within a Complex Socio-Technological-Ecological Environment

Artificial intelligence plays a crucial role in the transition to Farming 4.0, as it substantially transforms various aspects of crop and livestock farming. Technologies such as machine learning, computer vision, and robotic automation are revolutionizing practices ranging from crop monitoring to animal husbandry [

9]. However, while these technologies promise substantial benefits, they threaten farmers’ autonomy as they are causing novel social, technological, and ecological dependencies.

2.1. Concept of Autonomy

Depending on the domain and the subject under study, different notions of autonomy are present. The concept of “autonomy in farming” is multifaceted as well and can thereby also be viewed from different perspectives. It can refer to farmers’ ability to make independent decisions about their agricultural processes or maintain their financial independence [

10]. It can also refer to the autonomous systems on the field and their ability to achieve certain goals [

11]. In practice, there is a range of complex social, economic, legal, ethical, and environmental interactions (e.g., weather variability, pests, and water availability) that may positively or negatively affect the perceived freedom of choice and the ability to achieve one’s goals. However, autonomy is not limited to the “perceived ability” to make a decision but also applies in the absolute sense, as regulations, environmental and economic constraints, cultural practices, biased predispositions, or social embedding can limit one’s ability to make independent and beneficial choices. Autonomy is thus influenced by a variety of aspects, such as trust in the technology and one’s abilities, financial stability, access to information and markets, digital literacy, security and privacy of systems, as well as economic, social, technological, and psychological dependencies in all forms [

12,

13,

14,

15,

16]. Therefore, the rapid integration of AI technologies into the daily lives of farmers could threaten this autonomy in various ways. For instance, AI-driven decision-making tools could limit independent control over agricultural practices by increasing technological blindness or negatively influencing the costs associated with adopting and maintaining new technologies that can only be managed by external service providers [

7].

Table 1 gives a high-level overview of the exemplified cause–effect relationships that influence farmers’ autonomy.

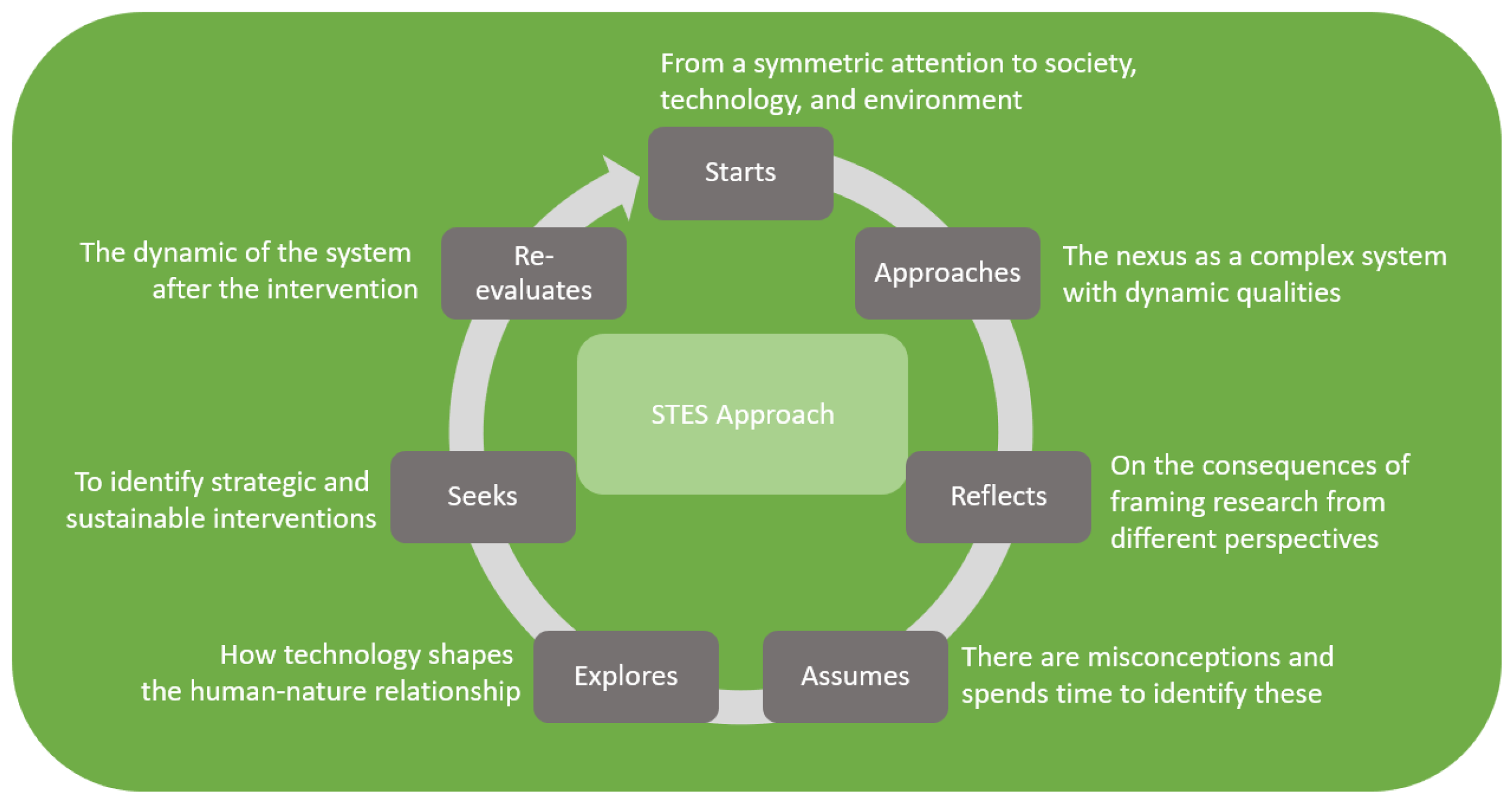

2.2. STES Approach

Many researchers [

29,

30,

31] pointed out the dangers of technology implementation in ecological environments without having a systematic understanding of the associated human–environment interactions and a conceptual framework that governs technology introduction [

32]. The farming ecosystem is a complex web of relationships between people, technologies, and the environment. Implementing AI can alter these relationships, leading to unwanted consequences that can directly and indirectly affect farmers’ decision-making processes and, ultimately, autonomy. The bridging of a socio-ecological system (SES) approach with a socio-technological (STS) approach creates a novel playground that investigates the intermediary role of technology within a socio-technological-environment system (STES) nexus. By inspecting the complex interactions in this multi-dimensional environment, an integrated technology (assessment) approach can account for technology-related dynamics to avoid simplistic, reductionist, or deterministic explanations [

29]. As an STES approach recognizes the complexity of these dynamics (uncertainty, feedback cycles, emergent phenomena, etc.), scientific progress in this area can lead to novel frameworks and approaches to designing, integrating, and maintaining technological developments and fostering sustainable and resilient management of environmental and technological systems [

31]. As many unjust algorithmic developments (bias, discrimination, harm, etc.) revolve around purely technical solutions [

32], the STES approach tries to tackle this challenge by putting the human and his or her actions in the center to pave the way for human-centered technology requirements as shown in Figure 1. By understanding that technology and its goals are implemented in a dynamic setting of societal, personal, and environmental needs, it assesses the dynamic of the system components from the start. It is important to understand the different political consequences of framing the goals of AI implementation, to acknowledge a certain lack of understanding of all perspectives, and to be aware of one’s personal viewpoint. In doing so, the development and implementation of responsible AI systems must explore how intentional and unintentional technical mediation may result in ambiguous outcomes and feedback that displace or relocate but do not remove negative consequences. Based on this evaluation, it identifies strategic interventions and ways of changing relationships such that these create well-being for humans and non-humans alike [

29]. Finally, as the implemented STES environment is continuously changing, measures for re-evaluating the intended impact are necessary.

3. Current Challenges of AI in the Autonomy of Farmers from an STES Perspective

To assess how AI could lead to unwanted effects on the daily lives of farmers, we follow the proposed STES aspects and provide detailed risk scenarios from the social, technological, and environmental perspectives. As these perspectives are often inherently connected in a dynamic setting, a clear cut between the individual aspects is not always possible. Nevertheless, the following sections highlight the intertwined mechanisms and provide an understanding of the multifaceted challenges AI-based technology design currently faces to provide responsible and sustainable implementation.

3.1. Social Aspects

The deployment of AI technologies is set within a web of social entities that are driven by economic incentives to a considerable degree. Technology providers, farmers, third-party entities, and the farming community are interested in maximizing their individual objectives, thereby providing a variety of requirements for the technologies that sometimes compete against each other (e.g., economic scalability vs. crop diversity). As the providers drive the design of the technology, the risk for the users and broader farming communities is more prominent. Vendors tend to centralize data storage to continuously develop their algorithms (vendor lock-in) over data management platforms [

28]. The economic gains of those data are on the side of the technology provider, while the farmers are promised better products, which in turn are sold at higher prices [

28]. This threatens the long-term economic stability of farmers. The implications of rights management on trust in the context of data storage were also highlighted as a prominent factor in the survey conducted by Fleming et al. [

33].

Another factor is the distribution and access to knowledge of the systems [

34]. Centralizing specialized knowledge at the vendor site enables a continuous business model based on maintaining and upgrading the deployed systems. Conversely, this increases farmers’ dependency on AI businesses as the sole knowledge providers. Limited access to knowledge for farmers results in low maintainability and adaptation of novel technologies and ultimately in reduced capabilities to judge the AI decision process. This in turn either lowers trust or results in trusting the decision output too much, with both limiting economic exploitation of the technology. Furthermore, working with AI-based technologies creates the need for new skills in the farm workforce (for programming digital systems and software, data analytics, or machine and hardware maintenance) [

28]. At the same time, switching to automated technologies based on complex decision processes allows a shift to a steadier workforce. As repetitive and simple tasks may be eliminated, fewer migrant and part-time workers are needed, as they are potentially replaced by fewer high-skilled, full-time personnel [

35,

36]. This creates novel requirements for technology design in terms of the user experience, as the deployed models must be designed for the specific user group or should at least be adaptable to different groups (e.g., language and visualization). Depending on the geographical area, different work requirements will lead to a non-homogeneous target audience. If the design of the AI is not adaptable to different skill levels of users, then it systematically widens the gap between demographic groups. Furthermore, various geographical and demographic differences must be accounted for to avoid social disparities, such as long-standing racial inequities [

28], gender differences [

37,

38], and also disparities in farm size [

33]. The respective models and tools are often designed for large-scale producers [

26,

39] as those are the farms that adopt digital technologies at higher rates [

40] and may further widen an already existing digital gap between farming populations [

41].

3.2. Technical Aspects

The data-driven modeling aspect of AI provides a variety of technological factors that create uncertainties in farmers’ decision-making processes. Along the AI development pipeline, this already starts with creating biases during data generation or its processing. During collection, a bias in data can be introduced by sampling solely in specific regions or types of farms, therefore expressing the ecological environment and social practices of a limited area (sampling bias). Due to the limitation to certain regions, the data might exclude different demographic properties (exclusion bias) or focus too much on crops with a high economic value (selection bias) [

42]. Such biases limit the ability of farmers to infer actions based on generalized suggestions by the AI [

43] and may produce a disadvantage for areas or crops of less economic interest (e.g., central African countries) [

26]. The diversity of measurement tools and practices may also lead to bias during data generation (measurement bias) or limited accuracy of the models in a different data environment (interoperability issue). Depending on the instruments, data may be in a different format, aggregated at different levels, or produced in a different environment (e.g., soil depth, water content, and time of day).
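Sampling and exclusion bias can be made visible before training by comparing how often each region or farm type appears in a data set with a reference distribution. The following is a minimal sketch under stated assumptions rather than a method taken from the cited works: the function name `representation_gap`, the region labels, and the reference shares are all hypothetical.

```python
from collections import Counter


def representation_gap(sample_groups, reference_shares):
    """Return (observed share - reference share) for each reference group.

    Positive values mean a group is over-represented in the sample,
    negative values mean it is under-represented or missing entirely.
    """
    total = len(sample_groups)
    if total == 0:
        raise ValueError("sample_groups must not be empty")
    counts = Counter(sample_groups)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }


# Hypothetical data: ten records, none of them from region_c.
sample = ["region_a"] * 8 + ["region_b"] * 2
reference = {"region_a": 0.5, "region_b": 0.3, "region_c": 0.2}
gaps = representation_gap(sample, reference)

for group, gap in sorted(gaps.items()):
    print(f"{group}: {gap:+.2f}")
```

A group with a strongly negative gap, such as the hypothetical `region_c` here, is a candidate for additional data collection or for an explicit warning that the model was not trained on comparable conditions.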

Further bias can be introduced in the data processing phase of the model [

44] through the selection of the model or its parameters (e.g., number of cross-validations, size of the train-test split, regularization functions, or data selection) as well as by making wrong assumptions about the modeling subject [

42]. Other algorithmic biases may be created by the optimization function used by the model, the user interface, and the feedback loop between the system and its users [

45]. Such algorithmic biases can significantly impact the model’s efficiency, trustworthiness, and deployability. This situation is aggravated if several models are incorporated into the system that exchange data between them or build on the decisions of each other [

7]. This complex data flow creates an additional challenge for the already limited transparency and explainability of deployed models [

32]. Based on this data complexity, the ability of algorithms to continuously learn by interacting with their technical, social, and ecological environment (e.g., reinforcement learning) further aggravates direct and hidden model dependencies. Such dependencies lower the transparency of the system, leading to higher costs and decreased maintainability of products based on AI [

46]. These hidden dependencies potentially initiate a cascade of unwanted feedback loops, unknown emergent effects of the models, or large-scale fragility of the system. If those issues are not addressed in the design phase, then those design weaknesses may further increase the economic dependency of farmers on the vendors, the uncertainty in the decision-making process, and trust issues toward the technology.

Another important aspect is the amount of data available. In most cases, data will be sparse and scarce, so deep learning techniques cannot be used [47,48]. A similar problem arises in health applications, and techniques from that field can therefore be borrowed to handle this issue [49].

3.3. Environmental Aspects

As mentioned in the previous section, AI algorithms are often trained on a particular set of environmental conditions (e.g., soil type or climatic region) and redeployed in novel agricultural settings. Also, designers of such algorithms may lack the knowledge of traditional farm operators and make changes to the algorithm that are not aligned with personal or regional farm management practices (e.g., irrigation techniques or tillage practices). This increases the risk of unexpected behavior of the models, as the input data do not match the data the model was pretrained on or are processed in a way that does not meet the individual user’s needs. This can lead to unknown and hidden biophysical feedback loops [

46,

50]. This decrease in accuracy may severely affect the trust in the deployed models, the system’s maintainability and explainability, farmers’ ability to make the best decisions for their farms, and the interoperability of AI technologies. Therefore, the ecological compatibility of the AI decision-making processes must be ensured both at the system and algorithm design levels [

51], with a focus on developing clear operational limits that follow transparently defined metrics [

52]. Furthermore, the model might learn indirect environmental or social key aspects (e.g., soil health, water cycle, and sowing patterns), which may lead to emergent effects from the model or leakage of sensitive information of the farms to the model providers or even other farmers.

4. Mitigation through Technological Developments

Despite the manifold challenges that surround AI technologies in the complex web of social, ecological, and technological interactions, responsible design could also leverage its ability to mediate the needs and requirements of each realm and provide a platform for the geographically diverse engagement of farmers [

53]. Most AI systems are designed to optimize the biophysical realm set by the user’s goals (e.g., the feeding of animals or watering schedule for crops) [

4,

7]. The ability to achieve this is bound to the ecological and social feedback it obtains as inputs, as well as presenting the monitored and optimized subject meaningfully to the user. Therefore, this represents a crucial point that links and navigates the perception of the user and his or her farm, includes diverse stakeholders, and represents the data in a manner that is responsible [

54,

55]. The following sections provide three examples of leveraging these capabilities to increase responsible and sustainable use at the data, algorithmic, and visualization levels (see Figure 2).

4.1. FAIR Data Principles

The findable, accessible, interoperable, and reusable (FAIR) data principles are a data management framework and open system design methodology with a particular emphasis on machine actionability in addition to supporting its reuse by individuals [

25]. These principles apply not only to data in the narrower sense but also to algorithms, tools, and workflows that have been part of the data generation and processing pipeline. In this context, Section 4.1 also integrates general data management design strategies to foster responsible use in AI environments.

Machine actionability is central to the FAIR principles and is particularly important in automated data processing. As AI systems can combine and automatically process diverse data sources, such an autonomous agent must have the capacity to carry out the following processes:

Correctly identify the type of data;

Determine if it is worth using for the current task by analyzing the metadata or data elements;

Understand any licensing, legal, or accessibility constraints associated with the data;

Implement them correctly for the specified task [25].
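The four processes listed above can be sketched as a sequence of checks on a metadata record. This is a minimal illustration and not part of the FAIR specification: the `DatasetMetadata` fields, the function `assess_dataset`, the example identifier, and the license list are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DatasetMetadata:
    """Hypothetical, simplified metadata record for one data set."""
    identifier: str
    data_type: str
    variables: frozenset
    license: str


def assess_dataset(meta, required_type, required_variables, allowed_licenses):
    """Run the checks in order and return (usable, reason)."""
    # 1. Identify the type of data.
    if meta.data_type != required_type:
        return False, f"unexpected data type: {meta.data_type}"
    # 2. Decide whether the data are useful for the current task.
    missing = set(required_variables) - set(meta.variables)
    if missing:
        return False, f"missing variables: {sorted(missing)}"
    # 3. Check licensing and access constraints.
    if meta.license not in allowed_licenses:
        return False, f"license not permitted: {meta.license}"
    # 4. Only now may the data be passed on to the task.
    return True, "dataset can be used for the task"


# Hypothetical record; the identifier is a placeholder, not a real DOI.
record = DatasetMetadata(
    identifier="example:soil-2023-001",
    data_type="soil_moisture_timeseries",
    variables=frozenset({"depth_cm", "volumetric_water_content"}),
    license="CC-BY-4.0",
)
usable, reason = assess_dataset(
    record,
    required_type="soil_moisture_timeseries",
    required_variables={"depth_cm", "volumetric_water_content"},
    allowed_licenses={"CC-BY-4.0", "CC0-1.0"},
)
print(usable, reason)
```

Returning a reason alongside the decision keeps the check transparent: the same message can be shown to the farmer when a data source is rejected.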

By making the data findable through unique identifiers, diverse metadata, and common standards, the data provider not only ensures technical interoperability [56] for all geographically distributed AI service providers and their users but also enables clear visualization for transparent AI processes, as the context of the data and its limitations can be displayed (e.g., data generation method, experiment set-up, and data provider). This is also vital for analyzing bias in the data, as the enhanced capabilities for the machine to understand the context within which the data are generated may provide further information for identifying critical and sensitive areas in the data. Also, subsets of the data or changes in the data (dynamic data) should be uniquely identifiable and linked to the original data [57]. Changes to the data compared with its original source should be described in the metadata to enhance traceability, knowledge extraction, and the avoidance of potential biases.
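One possible way to keep subsets identifiable and linked to their source is to derive the subset identifier from a hash of its content and to store the parent identifier and a change description next to it. The sketch below assumes JSON-serializable rows; the identifier scheme and the function name `derive_subset_record` are hypothetical and do not follow any particular persistent-identifier standard.

```python
import hashlib
import json


def derive_subset_record(parent_id, rows, change_description):
    """Build a metadata record for a subset derived from a parent data set."""
    # Canonical serialization so that identical content yields the same hash.
    canonical = json.dumps(rows, sort_keys=True, separators=(",", ":"))
    digest = hashlib.sha256(canonical.encode("utf-8")).hexdigest()
    return {
        "identifier": f"{parent_id}#sha256-{digest[:16]}",
        "derived_from": parent_id,
        "change_description": change_description,
        "row_count": len(rows),
    }


# Hypothetical parent identifier and rows.
subset_rows = [
    {"field": "north", "soil_moisture_pct": 18.5},
    {"field": "south", "soil_moisture_pct": 24.1},
]
subset_record = derive_subset_record(
    "example:soil-2023-001",
    subset_rows,
    "kept only measurements taken at 10 cm depth",
)
print(subset_record["identifier"])
```

Because the identifier depends on the content, any later change to the subset produces a new identifier, while `derived_from` preserves the link to the original data.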

Providing accessibility to data via open and free protocols enables fair chances to find the necessary information for one’s needs [

58]. Accessibility does not necessarily imply open access to the data, as this could lead to other ethical concerns. However, it should clearly state under which modalities the data can be accessed. Also, the metadata should be openly visible even after the data are no longer available to enhance transparency and reproducibility [

56]. In general, access to data should be as open as possible without introducing privacy concerns [

59]. Data practices that enable privacy-aware access should be implemented in the AI processing pipeline to leverage the learning capabilities of the algorithms and better scalability and interoperability for the system. This includes practices such as anonymization of the data, synthetization, and federated machine learning algorithms. Anonymization describes the process of transforming the data in a way that sensitive information is hidden [

60]. Synthetization of data is a range of methods to create an artificial data set that inherits the characteristics and structure of the original data [

61]. Federated learning, on the other hand, is a machine learning approach that builds its model on decentralized and isolated data, thereby providing a security- and privacy-aware model training process [

26,

62,

63]. All three methodological examples can be used to enhance data availability for a diverse set of stakeholders with limited open data access or without enough data to train a model for their needs. Furthermore, providing AI services incorporating federated learning may positively affect technology adoption for a broader set of stakeholders, as existing privacy concerns are mitigated [

15].
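The idea behind federated learning can be illustrated with a small federated-averaging sketch: each farm fits a model on its own data, and only the fitted parameters, never the raw records, are sent to a server that averages them. This is a simplified illustration under stated assumptions (a one-variable linear model, plain gradient descent, and invented data) and not the specific procedure used in the cited works; the names `local_update` and `federated_round` are hypothetical.

```python
def local_update(w, b, data, lr=0.05, epochs=20):
    """Run gradient descent on one client's private (x, y) pairs."""
    n = len(data)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            err = (w * x + b) - y
            grad_w += 2 * err * x
            grad_b += 2 * err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


def federated_round(w, b, clients, lr=0.05, epochs=20):
    """Average the locally updated parameters, weighted by client data size."""
    total = sum(len(data) for data in clients)
    new_w = new_b = 0.0
    for data in clients:
        client_w, client_b = local_update(w, b, data, lr, epochs)
        new_w += client_w * len(data) / total
        new_b += client_b * len(data) / total
    return new_w, new_b


# Invented data for two farms, both generated from y = 2x + 1.
farm_a = [(-1.0, -1.0), (0.0, 1.0), (1.0, 3.0)]
farm_b = [(-2.0, -3.0), (0.0, 1.0), (2.0, 5.0)]

w, b = 0.0, 0.0
for _ in range(10):
    w, b = federated_round(w, b, [farm_a, farm_b])

print(round(w, 3), round(b, 3))
```

Only `w` and `b` cross the boundary between farm and server in this sketch. Real deployments typically add further safeguards, since model parameters themselves can leak information.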

Interoperable standards enhance the usability of the data [

25] and leverage competencies to develop accurate models and foster farm system understanding. This will be achieved with qualified references to other (meta)data that increase the knowledge creation capabilities of AI services to provide enhanced and trustworthy decisions for the farmer. Interoperability of the data is also crucial for M2M communication [

64]. Compatible data sharing between robots can increase the autonomy, flexibility, and interaction between robots, thereby supporting the overall autonomy of the farmer. Using a shared vocabulary for knowledge representation further strengthens the interpretability of the data [

56]. This enhances the explainability of AI systems, enabling farmers to make more informed decisions independently.

Lastly, fostering reusability of data increases interoperability, as domain standards increase long-term use and ease of integration in different technological systems. This is a critical factor, as scholars [

18] and farmers [

65] alike highlighted the negative impacts on usability and technology adoption if interoperability is limited in smart farming technologies. The plurality of data descriptions ensures effective replication and (re)use in different farming environments, as the context of the data and its limitations can be better judged. This enables faster identification of bias and errors in the data and mitigates the risk of models’ unexpected behavior. Furthermore, such standards strengthen long-term maintenance capabilities and the economic autonomy of farmers, as they do not rely on outsourced and unknown data sources. As not all data must be entirely available for farmers, clear data usage licenses and detailed provenance enable farmers to understand the origin of the data, its authenticity, as well as the legal terms under which it can be used. This may positively impact confidence in the data and models and enable trust.

4.2. Transparent AI

Transparent AI is defined in this context as a system that provides information about its decision-making process and constitutes a high-level concept that incorporates the approaches of interpretable AI or explainable AI [

24,

66]. The first uses models that are inherently interpretable to humans [

67], whereas the second approach provides explanations for its predictions to the user [

68], primarily for models that are too complex to understand directly. In combination, the transparent AI approach not only focuses on explaining the model outputs but also provides information about the model pipeline. This creates novel potentials for human-in-the-loop design after model and product deployment [

69].

Trusting AI decision processes too much is a serious concern in most professions. Understanding the underlying criteria and attributes of an AI through interpretable or explained models can facilitate trust in one’s own and the models’ decisions and enhance collaboration by combining the farmers’ knowledge with the data provided by the AI. A farmer may confirm his or her management hypothesis or the AI-based solution by analyzing the contributing factors directly in the model (interpretable AI) or through visualized explanations (explainable AI). Visualizations such as bar charts are often used for identifying the importance of attributes [

70], heatmaps can show what parts of pictures have been used by the model [

71], partial dependence plots enable an overview of how different values of attributes influence decision outcomes [

72], or scatter plot diagrams can help users to see relationships or similarities between model outputs [

73]. Interpretable models, on the other hand, can give direct access to the probability distribution of features (naive Bayes) and the importance of individual criteria (linear or logistic regression models and decision trees), provide a logic-based structure that allows following the decision process of the model (decision trees and covering algorithms) [

43,

67,

74], or enable one to visualize the distribution of possible decision lists (Bayesian rule list) [

75]. It is important to mention that interpretable models often come at the price of limited scalability to large data sets (i.e., many attributes) and complex decision processes (i.e., nonlinear relationships between attributes) and should therefore be handled with care [

67,

74].
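The logic-based structure of an interpretable model can be sketched as an ordered rule list in which the first matching rule determines the outcome and is reported back to the user. The rules, thresholds, and feature names below are invented for illustration and are not agronomic recommendations; a real rule list would be learned from data or defined with domain experts.

```python
# Each rule: (human-readable description, condition, recommended action).
# The order matters: the first rule whose condition holds is applied.
RULES = [
    ("soil moisture below 20 %", lambda f: f["soil_moisture_pct"] < 20, "irrigate"),
    ("rain forecast of at least 5 mm", lambda f: f["rain_forecast_mm"] >= 5, "wait"),
    ("air temperature above 30 °C", lambda f: f["air_temp_c"] > 30, "irrigate"),
]
DEFAULT_ACTION = "wait"


def decide(features):
    """Return (action, explanation) so the user can follow the decision."""
    for description, condition, action in RULES:
        if condition(features):
            return action, description
    return DEFAULT_ACTION, "no rule matched (default)"


dry_field = {"soil_moisture_pct": 15, "rain_forecast_mm": 10, "air_temp_c": 25}
action, explanation = decide(dry_field)
print(f"{action}: {explanation}")
```

Because the explanation is the rule itself, a farmer can directly confirm or reject the reasoning. The limitation noted above also shows here: such lists become hard to follow as the number of attributes and interactions grows.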

Both explainable and interpretable models can help detect biases (see Figure 2), errors, or hidden dependencies in the decision process [

24,

43,

68,

71], such as by identifying sensitive attributes and their importance to the decision outcome (e.g., gender, race, height, and postal code). By understanding the underlying decision process and the importance of individual attributes, farmers can increase their decision-making competence, farm understanding, and privacy assessment [

24]. Developing a system that is also able to learn from farmers’ feedback (e.g., reinforcement learning) could further help to strengthen collaboration by aligning the outputs and decision processes with unique farm management practices [

76] or local biophysical differences (e.g., calibrating the model for a priori unknown biophysical feedbacks). This provides customizable management of AI systems for individual farm use cases [

21]. As users are often inconsistent in their feedback or may inflict errors and biases [

45,

77], particular guidance and merge criteria must be developed to ensure correct model development (e.g., an error message if an input conflicts with the expected values or has a negative effect on the accuracy).
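A merge criterion of the kind described above could be sketched as a check that runs before user feedback is incorporated into the model. The function `review_feedback`, its parameters, and the example values are hypothetical; how the accuracy with and without the feedback is estimated is left open here.

```python
def review_feedback(value, expected_range, accuracy_before, accuracy_after,
                    tolerance=0.0):
    """Return (accepted, message) for one piece of user feedback.

    accuracy_before / accuracy_after are validation accuracies estimated
    without and with the feedback applied (estimation method not shown).
    """
    low, high = expected_range
    if not (low <= value <= high):
        return False, f"value {value} is outside the expected range [{low}, {high}]"
    if accuracy_after < accuracy_before - tolerance:
        return False, "accepting this input would lower the validation accuracy"
    return True, "feedback accepted"


# Hypothetical example: a soil moisture correction entered as 140 %.
accepted, message = review_feedback(
    value=140.0,
    expected_range=(0.0, 100.0),
    accuracy_before=0.91,
    accuracy_after=0.91,
)
print(accepted, message)
```

Returning a message rather than silently discarding the input gives the user the chance to correct a typing error or to contest the expected range.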

4.3. Intuitive User Experience

The user experience, with a particular focus on user interfaces, provides the critical link between the AI-based farm management tool and the user [

77]. Design strategies should therefore be chosen to enhance the system’s mediating capabilities, optimizing various requirements such as understandability, usability, customizability, transparency, performance, or effectiveness. This is a challenge as the requirements change depending on the practitioner attributes (e.g., social background, education, use case, or goals), highlighting the need for inclusive design strategies to present information in a valuable and relevant way [

78].

Depending on the area of employment, the designers of such systems must have a profound understanding of the local workforce and potential future changes due to technology implementation. Migrant farm workers or minorities dominate the labor market in many areas. Understanding the knowledge gap that is present and providing information representations that are understandable, enable learning, ease configuration, and allow simple reconfiguration are crucial to avoiding unwanted social effects. This can be achieved in the program by visual or auditory aids, context awareness of the program, automated translation services, a comprehensive help section and clear documentation, clear and consistent design, interactive designs and visualizations that enable customizability, the inclusion of real-time support like a large language model designed to answer questions and provide an explanation about functionality, as well as the availability of human support [

76,

77]. The ability of context awareness and adaptive user interfaces could provide further support by measuring human capabilities during program interaction (e.g., eye tracking, blink reflex, and time on a page), providing suggestions for the sensory adaptation of the interface, and displaying additional material or presentations of information to enable teaching and training [

79]. In general, customization of user interfaces for respective AI models enables the ease of use for a wide variety of users, enhances data analytic capacities, and facilitates interaction and system understanding as well as use case adaptation [

18,

76].

All of this requires a priori knowledge of the user base. User research and extensive software testing in combination with the local workforce is crucial for successful and inclusive technology design. Fleming et al. [

33] highlighted the need to understand farmers’ key language, rules, norms, values, and assumptions to support technology adoption. It is stressed that gaps in equity, access, and distribution of benefits should be addressed by understanding the implicit and explicit implications of language use to navigate knowledge processing for farmers. As a shift from low-skilled manual labor to high-skilled technology workers is expected through the increasing use of AI, this creates the opportunity to provide designs that are equally engaging for different genders. It was shown that differences in aesthetics (background image, font, and colors) or language features (distribution of masculine and feminine terms) could influence the sense of belonging or interest in the subject area [

80].

An inclusive user experience should also support expert and non-expert users in their decision-making process, highlighting the need for advanced methods to display and manipulate data and algorithmic dependencies and biases (as seen in Figure 2) without an unsustainable cognitive workload [

79]. The user should be able to select subsets of the data, adjust the model parameters, and relabel or add new data. The program the machine learning framework is integrated into must support clear, concise, and relevant information on the data, metadata, and data translation in parameter settings within the AI decision process to ensure trust and long-term software maintainability by the user or for communication to the service provider. Further research has established a link between efficiency [

81], quality, model interpretability [

82], and use case adaptation [

21] if users are involved in the feature selection process of the model or can influence the model goals (e.g., precision vs. generalizability). Visualizations of the impact of features on the accuracy and model behavior (e.g., explanations given to the user) with the ability to undo actions and return to previous model states allow expert users to experiment with different strategies [

83] with the potential to increase the accuracy, farm system understanding, trust in the model results, and redeployment in different farm settings [

21].
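The ability to undo actions and return to previous model states can be illustrated with a minimal sketch. It assumes that the user-adjustable configuration can be captured as an immutable snapshot; the class names `ModelState` and `ModelSession`, the feature names, and the threshold field are hypothetical and are not taken from any of the cited systems.

```python
from dataclasses import dataclass, replace
from typing import List, Tuple


@dataclass(frozen=True)
class ModelState:
    """Immutable snapshot of the user-adjustable configuration (hypothetical fields)."""
    features: Tuple[str, ...]
    decision_threshold: float


class ModelSession:
    """Keeps a history of model states so that every user action can be undone."""

    def __init__(self, initial: ModelState) -> None:
        self._history: List[ModelState] = [initial]

    @property
    def current(self) -> ModelState:
        return self._history[-1]

    def apply(self, **changes) -> ModelState:
        """Record a new state derived from the current one."""
        new_state = replace(self.current, **changes)
        self._history.append(new_state)
        return new_state

    def undo(self) -> ModelState:
        """Return to the previous state; the initial state is never removed."""
        if len(self._history) > 1:
            self._history.pop()
        return self.current


# Illustrative session with invented feature names.
session = ModelSession(
    ModelState(features=("milk_yield", "activity"), decision_threshold=0.5)
)
session.apply(features=("milk_yield",))   # user drops a feature
session.apply(decision_threshold=0.7)     # user raises the decision threshold
session.undo()                            # back to the state before the threshold change
```

Storing full snapshots rather than differences keeps the undo logic trivial; a real system would additionally need to persist or retrain the underlying model for each state.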

5. AI Development Framework

Formulating technological requirements that enable the widespread integration of various factors is always difficult. Technologies can only adhere to some standards, as many requirements compete against each other (e.g., data accuracy vs. data privacy) or are limited by other constraints such as financial resources, implicit and hidden community standards in AI development, or individual preferences and world views. Therefore, this article does not provide deterministic recommendations for technology designs but strives to create an expanded view of the multivariate dependencies that come with unjust technological integration and highlights the need to put human-coupled environmental actions at the center. These inherent complexities have been exemplified through farmers’ autonomy when using AI technologies, alongside several design suggestions on how to combat detrimental effects. By providing a high-level framework on the social, technological, and ecological implications of the system design requirements, this article strives to support technology designers in creating responsible AI technologies and farmers in their awareness of potential risks.

The proposed framework in Figure 3 provides a first step toward targeting responsible design choices not only for AI but for algorithmic development in general. It includes several key characteristics as defined by Schiff et al. [84]. The framework first targets an AI system’s overall scope with respect to human well-being and then determines the lower-level sub-tools and methodologies. It directs attention to the knowledge and expertise needed to fulfill the required outputs, thereby advising the operationalization of the targets. By analyzing the social-technological-ecological dependencies within the embedded environment, as well as by asking questions about operationalization, it guides attention to the system knowledge needed to achieve the desired AI attributes. In doing so, this framework is linked to the STES approach introduced in Section 2 (“AI in Complex Environments”). By putting symmetric attention on the technological functionality, its users, and the implemented environment (Steps 1–3), it acknowledges the complex dependencies of AI systems and robotics in farming environments. By defining the desired attributes of the AI implementation, its users, or the farm (Step 4), it automatically reflects the impact of different perspectives on farming practices and explores how technology shapes the human–nature relationship. Thereby, it narrates the dynamics that are inherent in daily farm operations, which have to adhere to a multitude of requirements whilst aiming for economic feasibility. Steps 5–7 seek to identify strategic and sustainable interventions to strengthen the system functionality and induce positive change.

Viewing this framework as an iterative and recursive process is imperative, as many of the proposed solutions could lead to novel issues for responsible technology design. Prior understanding of the social, technological, and ecological dependencies must therefore be a primary principle for making responsible decisions about architectural and conceptual designs. By encouraging the re-evaluation of the chosen design decisions at the respective STES level, the framework opens up the question of further mechanisms to strengthen the desired attributes (Step 5). For example, the proposed mechanism of user screening for adjusted support could lead to data privacy issues or a lack of trust if sensitive information about farm attributes is processed. Therefore, such mechanisms should be combined with other strategies that enhance privacy and trust (such as localized data processing and synthesizing data for centralized model learning). Further examples of how the technologies in Step 5 support responsible AI practices can be found in Section 4 (“Mitigation through Technological Developments”).

The framework provides easy-to-understand questions for moderately skilled users to obtain a high-level understanding of the complexities of designing responsible AI systems. It is flexible in its design, as it is not limited to a certain AI system (e.g., supervised, unsupervised, or reinforcement) or environment such as farming. However, the current framework targets the development of new AI architectures within the context of a multitude of requirements to support sustainable design decisions. Therefore, it is limited in its capacity to advise AI architects concerned with downstream AI life cycle phases (e.g., deployment and monitoring).

The differentiation between attributes and instruments (to achieve the attributes) is blurry to a certain degree. Some instruments can also be defined as attributes and vice versa, as they often have a bilateral influence. In general, attributes are considered to be of a higher hierarchy, as there is no direct and simple way to achieve them, and they are therefore often a mix of different instruments. Analyzing the synergies or trade-offs between attributes and instruments is critical in this context. As mentioned before, some instruments conflict with each other, whereas the combination of certain instruments could have a widespread positive effect greater than the sum of individual actions.

6. Future Work

As the development of AI and robotics is progressing at a fast pace [8], future work considering the effects of such technologies is critical to leverage the positive effects for society and the environment. The effects of such technologies on attributes like autonomy or resilience are hard to measure directly and can often only be assessed qualitatively or via indirect metrics (e.g., average age of the workforce or gross margin per hectare) [85]. Finding approaches that support technology assessment and validate the usefulness of such frameworks is therefore the subject of ongoing work. One promising direction is the incorporation of robotics and AI in digital twins [86,87]. A digital twin is designed to approximate the behavior of an entity in virtual space based on continuous updates through the collection of data, models, and what-if simulations [51]. In this way, it makes it possible to explore the effects of different design choices in a safe space. By creating a digital twin at the farm level, different attributes and qualities can be defined by metrics and simulated to support designers in assessing the effects of different design decisions. As described in [7,51], this would support the validation of AI models by providing an understanding of the model behavior and its boundaries.
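The idea of defining attributes by metrics and exploring design choices through what-if simulations can be sketched in a deliberately simplified form. The following is a toy illustration only: the state fields, the update function, and all numbers are invented for this example, and gross margin per hectare is used as the indirect metric mentioned above. A real farm-level digital twin would rely on far richer models and data streams.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class FarmTwinState:
    """Toy virtual state of a farm; all fields and units are illustrative."""
    yield_t_per_ha: float
    price_per_t: float
    variable_cost_per_ha: float


def update_from_observation(state: FarmTwinState, **observed) -> FarmTwinState:
    """Synchronize the twin with newly collected data."""
    return replace(state, **observed)


def gross_margin_per_ha(state: FarmTwinState) -> float:
    """Indirect metric: revenue minus variable costs per hectare."""
    return state.yield_t_per_ha * state.price_per_t - state.variable_cost_per_ha


def what_if(state: FarmTwinState, **changes) -> float:
    """Change in the metric for a scenario evaluated on a copy of the state."""
    scenario = replace(state, **changes)
    return gross_margin_per_ha(scenario) - gross_margin_per_ha(state)


# Invented numbers: the twin is updated with an observed price, then a
# hypothetical design choice (higher yield at higher cost) is evaluated.
twin = FarmTwinState(yield_t_per_ha=5.0, price_per_t=200.0, variable_cost_per_ha=600.0)
twin = update_from_observation(twin, price_per_t=210.0)
delta = what_if(twin, yield_t_per_ha=5.5, variable_cost_per_ha=650.0)
```

Because the scenario is evaluated on a copy, the twin itself stays synchronized with the observed farm state, which mirrors the "safe space" property described in the text.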

Further work is also needed to integrate fairness indicators into the model’s development as well as to display the characteristics of such in an informative way through user interfaces or transparent AI processes. Partial dependence plots or counterfactual explanations are among the many solutions for investigating the impact of changed values on the decision outcome but are not adequate substitutes for in-depth fairness analysis in the model pipeline. Human audits and tests are among the most common approaches to checking for data and model fairness [24]. Such a step is insufficient if users have control over their data generation processes and collaboratively improve the AI system for their own use case. Analysis tools that foster an understandable and explorative way for farmers to check for biases represent one of the future research challenges in this domain. This could support the integration of responsible principles in AI systems but should not be seen as a concept to shift the responsibility for trustworthy AI to the consumer.
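To make concrete what partial dependence and counterfactual explanations compute, the following minimal sketch implements both for an arbitrary prediction function. The rule-based `toy_predict` model, the feature names, and the data rows are invented for illustration; as noted above, such tools only probe the effect of changed values and do not replace a fairness analysis of the model pipeline.

```python
from statistics import mean
from typing import Callable, Dict, List, Optional, Sequence, Tuple

Row = Dict[str, float]


def partial_dependence(
    predict: Callable[[Row], float], rows: List[Row], feature: str, grid: Sequence[float]
) -> List[Tuple[float, float]]:
    """Average prediction over all rows when `feature` is forced to each grid value."""
    curve = []
    for value in grid:
        predictions = [predict({**row, feature: value}) for row in rows]
        curve.append((value, mean(predictions)))
    return curve


def counterfactual(
    predict: Callable[[Row], float], row: Row, feature: str,
    candidates: Sequence[float], target: float
) -> Optional[float]:
    """First candidate value of `feature` that changes the prediction to `target`."""
    for value in candidates:
        if predict({**row, feature: value}) == target:
            return value
    return None


def toy_predict(row: Row) -> float:
    """Hypothetical health alert: 1.0 if activity is low or temperature is high."""
    return 1.0 if row["activity"] < 40 or row["temp"] > 39.5 else 0.0


rows = [
    {"activity": 30, "temp": 38.5},
    {"activity": 60, "temp": 38.5},
    {"activity": 60, "temp": 40.0},
]
pd_curve = partial_dependence(toy_predict, rows, "activity", [20, 50])
flip_at = counterfactual(toy_predict, rows[0], "activity", [35, 40, 45], target=0.0)
```

In this toy setting, the partial dependence curve shows how the average alert rate changes with activity, while the counterfactual search returns the smallest listed activity value at which the first animal would no longer trigger an alert.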

Developing and implementing FAIR principles in different domains is still subject to ongoing research. Indicators and priorities have been designed for the data requirements to help with swift implementation for individual use cases. Not every FAIR data management principle is weighted the same in terms of importance or the number of indicators needed to achieve responsible data use [88]. This in itself creates bias in following the standards and also makes it possible to have a theoretically FAIR data standard that is not interoperable, as the corresponding indicators are marked as less critical. Further research should address this gap by developing different design strategies depending on the attributes one wants to adhere to.
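The following sketch illustrates how priority-based indicators can lead to this outcome. It assumes a three-level priority scheme (essential, important, useful) and, purely for illustration, a set of invented indicators in which no interoperability indicator is ranked as essential; it does not reproduce the actual indicator list of any maturity model.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Indicator:
    """One assessment indicator (hypothetical entries, not an official list)."""
    principle: str   # "F", "A", "I", or "R"
    priority: str    # "essential", "important", or "useful"
    satisfied: bool


def essential_compliant(indicators: List[Indicator]) -> bool:
    """True if every indicator ranked as essential is satisfied."""
    return all(i.satisfied for i in indicators if i.priority == "essential")


def coverage_by_principle(indicators: List[Indicator]) -> Dict[str, float]:
    """Share of satisfied indicators per FAIR principle, ignoring priorities."""
    met: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for i in indicators:
        total[i.principle] += 1
        met[i.principle] += int(i.satisfied)
    return {p: met[p] / total[p] for p in total}


# Invented assessment of a single data set.
assessment = [
    Indicator("F", "essential", True),
    Indicator("F", "important", True),
    Indicator("A", "essential", True),
    Indicator("I", "important", False),
    Indicator("I", "useful", False),
    Indicator("R", "essential", True),
    Indicator("R", "important", False),
]
passes = essential_compliant(assessment)
coverage = coverage_by_principle(assessment)
```

Here the data set passes the priority-based check although none of its interoperability indicators are satisfied, which is the gap that attribute-specific design strategies would need to close.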

7. Conclusions

It was shown that the development of AI systems is facing a variety of social, ecological, and technological challenges and requirements. These factors must be addressed and mediated to create AI and robotic technologies that can be called responsible and trustworthy. Exemplified through the effects on the autonomy of farmers, several key aspects have been introduced to mitigate the risks along the entire AI pipeline. These include facilitating FAIR data principles, particularly for the data collection and cleaning stages of the AI development pipeline. Approaches in the area of transparent AI can enhance the explainability and interpretability of deployed algorithms to create a baseline for trust, understandability, maintainability, and interoperability. Designs that foster intuitive user experiences have been shown to support bias avoidance when interacting with algorithms. These designs also enhance AI systems by improving cognition, learning, and usability. Based on this review, an AI development framework has been developed that provides symmetric attention to social, ecological, and technological requirements and guides the AI architect in finding sustainable solutions in this complex and dynamic environment. Further work is needed to validate the proposed framework and to investigate the effects of different AI design choices on attributes like autonomy or resilience. Here, digital twins may play a crucial role by approximating the behavior of a technological entity in virtual space.

Author Contributions

Conceptualization, methodology, software, validation, formal analysis, investigation, resources, data curation, writing—original draft preparation, visualization, project administration, funding acquisition, K.M.; writing—review and editing and supervision, R.B.-Y. All authors have read and agreed to the published version of the manuscript.

Funding

The authors acknowledge the funding of the project “Digitale Landwirtschaft” by the Austrian Federal Ministry of Education, Science and Research within the funding scheme “Digitale und soziale Transformation in der Hochschulbildung”. Furthermore, the authors acknowledge financial support from the TU Wien Bibliothek through its Open Access Funding Program.

Data Availability Statement

No primary data were created in this research.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Abbreviations

The following abbreviations are used in this article:

| Abbreviation | Definition |
|---|---|
| MDPI | Multidisciplinary Digital Publishing Institute |
| STES | Social-technological-ecological system |
| AI | Artificial intelligence |
| ICT | Information and communication technology |
| FAIR | Findable, accessible, interoperable, and reusable |

References

- Scown, M.W.; Winkler, K.J.; Nicholas, K.A. Aligning research with policy and practice for sustainable agricultural land systems in Europe. Proc. Natl. Acad. Sci. USA 2019, 116, 4911–4916. [Google Scholar] [CrossRef]

- Scown, M.W.; Brady, M.V.; Nicholas, K.A. Billions in Misspent EU Agricultural Subsidies Could Support the Sustainable Development Goals. One Earth 2020, 3, 237–250. [Google Scholar] [CrossRef]

- Araújo, S.O.; Peres, R.S.; Barata, J.; Lidon, F.; Ramalho, J.C. Characterising the Agriculture 4.0 Landscape—Emerging Trends, Challenges and Opportunities. Agronomy 2021, 11, 667. [Google Scholar] [CrossRef]

- Purcell, W.; Neubauer, T. Digital Twins in Agriculture: A State-of-the-art review. Smart Agric. Technol. 2022, 3, 100094. [Google Scholar] [CrossRef]

- Lindblom, J.; Lundström, C.; Ljung, M.; Jonsson, A. Promoting sustainable intensification in precision agriculture: Review of decision support systems development and strategies. Precis. Agric. 2017, 18, 309–331. [Google Scholar] [CrossRef]

- Banhazi, T.; Halas, V.; Maroto-Molina, F. Introduction to practical precision livestock farming. In Practical Precision Livestock Farming: Hands-on Experiences with PLF Technologies in Commercial and R&D Settings; Wageningen Academic Publishers: Wageningen, The Netherlands, 2022; pp. 213–230. [Google Scholar]

- Mallinger, K.; Purcell, W.; Neubauer, T. Systemic design requirements for sustainable Digital Twins in precision livestock farming. In Proceedings of the 10th European Conference on Precision Livestock Farming, Vienna, Austria, 29 August–2 September 2022. [Google Scholar]

- Markets and Markets. AI in Agriculture Market by Technology (Machine Learning, Computer Vision, Predictive Analytics), Offering, Application (Precision Farming, Livestock Monitoring, Drone Analytics, Agriculture Robots), Deployment, and Geography-Global Forecast to 2026. 2023. Available online: https://www.marketsandmarkets.com/Market-Reports/ai-in-agriculture-market-159957009.html (accessed on 4 December 2023).

- Cockburn, M. Application and prospective discussion of machine learning for the management of dairy farms. Animals 2020, 10, 1690. [Google Scholar] [CrossRef]

- Stock, P.V.; Forney, J. Farmer autonomy and the farming self. J. Rural Stud. 2014, 36, 160–171. [Google Scholar] [CrossRef]

- Antsaklis, P. Autonomy and metrics of autonomy. Annu. Rev. Control. 2020, 49, 15–26. [Google Scholar] [CrossRef]

- Makinde, A. Investigating Perceptions, Motivations, and Challenges in the Adoption of Precision Livestock Farming in the Beef Industry. Ph.D. Thesis, University of Guelph, Guelph, ON, Canada, 2020. [Google Scholar]

- Ugochukwu, A.I.; Phillips, P.W. Technology adoption by agricultural producers: A review of the literature. In From Agriscience to Agribusiness: Theories, Policies and Practices in Technology Transfer and Commercialization; Springer: Berlin/Heidelberg, Germany, 2018; pp. 361–377. [Google Scholar]

- Pathak, H.S.; Brown, P.; Best, T. A systematic literature review of the factors affecting the precision agriculture adoption process. Precis. Agric. 2019, 20, 1292–1316. [Google Scholar] [CrossRef]

- Drewry, J.L.; Shutske, J.M.; Trechter, D.; Luck, B.D.; Pitman, L. Assessment of digital technology adoption and access barriers among crop, dairy and livestock producers in Wisconsin. Comput. Electron. Agric. 2019, 165, 104960. [Google Scholar] [CrossRef]

- Mallinger, K.; Corpaci, L.; Neubauer, T.; Tikasz, I.E.; Banhazi, T. Unsupervised and supervised machine learning approach to assess user readiness levels for precision livestock farming technology adoption in the pig and poultry industries. Comput. Electron. Agric. 2023, 213, 108239. [Google Scholar] [CrossRef]

- Isabelle, A. The Legitimacy of Precision Livestock Farming; Swedish University of Agricultural Sciences: Uppsala, Sweden, 2021. [Google Scholar]

- Pivoto, D.; Waquil, P.D.; Talamini, E.; Finocchio, C.P.S.; Dalla Corte, V.F.; de Vargas Mores, G. Scientific development of smart farming technologies and their application in Brazil. Inf. Process. Agric. 2018, 5, 21–32. [Google Scholar] [CrossRef]

- Regan, Á. ‘Smart farming’ in Ireland: A risk perception study with key governance actors. NJAS Wagening. J. Life Sci. 2019, 90, 100292. [Google Scholar] [CrossRef]

- Barreto, L.; Amaral, A. Smart farming: Cyber security challenges. In Proceedings of the 2018 International Conference on Intelligent Systems (IS), IEEE, Funchal, Portugal, 25–27 September 2018; pp. 870–876. [Google Scholar]

- Jerhamre, E.; Carlberg, C.J.C.; van Zoest, V. Exploring the susceptibility of smart farming: Identified opportunities and challenges. Smart Agric. Technol. 2022, 2, 100026. [Google Scholar] [CrossRef]

- Mark, R. Ethics of using AI and big data in agriculture: The case of a large agriculture multinational. ORBIT J. 2019, 2, 1–27. [Google Scholar] [CrossRef]

- Legun, K.; Burch, K.A.; Klerkx, L. Can a robot be an expert? The social meaning of skill and its expression through the prospect of autonomous AgTech. Agric. Hum. Values 2022, 40, 501–517. [Google Scholar] [CrossRef]

- Mohseni, S.; Zarei, N.; Ragan, E.D. A multidisciplinary survey and framework for design and evaluation of explainable AI systems. ACM Trans. Interact. Intell. Syst. (TiiS) 2021, 11, 1–45. [Google Scholar] [CrossRef]

- Wilkinson, M.D.; Dumontier, M.; Aalbersberg, I.J.; Appleton, G.; Axton, M.; Baak, A.; Blomberg, N.; Boiten, J.W.; da Silva Santos, L.B.; Bourne, P.E.; et al. The FAIR Guiding Principles for scientific data management and stewardship. Sci. Data 2016, 3, 160018. [Google Scholar] [CrossRef]

- Liu, Y.; Ma, X.; Shu, L.; Hancke, G.P.; Abu-Mahfouz, A.M. From Industry 4.0 to Agriculture 4.0: Current status, enabling technologies, and research challenges. IEEE Trans. Ind. Inform. 2020, 17, 4322–4334. [Google Scholar] [CrossRef]

- Klerkx, L.; Jakku, E.; Labarthe, P. A review of social science on digital agriculture, smart farming and agriculture 4.0: New contributions and a future research agenda. NJAS Wagening. J. Life Sci. 2019, 90, 100315. [Google Scholar] [CrossRef]

- Rotz, S.; Gravely, E.; Mosby, I.; Duncan, E.; Finnis, E.; Horgan, M.; LeBlanc, J.; Martin, R.; Neufeld, H.T.; Nixon, A.; et al. Automated pastures and the digital divide: How agricultural technologies are shaping labour and rural communities. J. Rural Stud. 2019, 68, 112–122. [Google Scholar] [CrossRef]

- Ahlborg, H.; Ruiz-Mercado, I.; Molander, S.; Masera, O. Bringing technology into social-ecological systems research—Motivations for a socio-technical-ecological systems approach. Sustainability 2019, 11, 2009. [Google Scholar] [CrossRef]

- Redman, C.L.; Miller, T.R. The technosphere and earth stewardship. In Earth Stewardship; Springer: Berlin/Heidelberg, Germany, 2015; pp. 269–279. [Google Scholar]

- Anderies, J.M. Embedding built environments in social–ecological systems: Resilience-based design principles. Build. Res. Inf. 2014, 42, 130–142. [Google Scholar] [CrossRef]

- Birhane, A. Algorithmic injustice: A relational ethics approach. Patterns 2021, 2, 100205. [Google Scholar] [CrossRef]

- Fleming, A.; Jakku, E.; Lim-Camacho, L.; Taylor, B.; Thorburn, P. Is big data for big farming or for everyone? Perceptions in the Australian grains industry. Agron. Sustain. Dev. 2018, 38, 1–10. [Google Scholar] [CrossRef]

- Abbasi, R.; Martinez, P.; Ahmad, R. The digitization of agricultural industry—A systematic literature review on agriculture 4.0. Smart Agric. Technol. 2022, 2, 100042. [Google Scholar] [CrossRef]

- Fuller, A.; Fan, Z.; Day, C.; Barlow, C. Digital Twin: Enabling Technologies, Challenges and Open Research. IEEE Access 2020, 8, 108952–108971. [Google Scholar] [CrossRef]

- Smith, H.E.; Sallu, S.M.; Whitfield, S.; Gaworek-Michalczenia, M.F.; Recha, J.W.; Sayula, G.J.; Mziray, S. Innovation systems and affordances in climate smart agriculture. J. Rural Stud. 2021, 87, 199–212. [Google Scholar] [CrossRef]

- Bear, C.; Holloway, L. Country life: Agricultural technologies and the emergence of new rural subjectivities. Geogr. Compass 2015, 9, 303–315. [Google Scholar] [CrossRef]

- Government of Canada. Learning Nation: Equipping Canada’s Workforce with Skills for the Future; Government of Canada: Ottawa, ON, Canada, 2017.

- Forney, J.; Epiney, L. Governing Farmers through data? Digitization and the Question of Autonomy in Agri-environmental governance. J. Rural Stud. 2022, 95, 173–182. [Google Scholar] [CrossRef]

- Duncan, E. An Exploration of How the Relationship Between Farmers and Retailers Influences Precision Agriculture Adoption. Ph.D. Thesis, University of Guelph, Guelph, ON, Canada, 2018. [Google Scholar]

- Marshall, A.; Dezuanni, M.; Burgess, J.; Thomas, J.; Wilson, C.K. Australian farmers left behind in the digital economy—Insights from the Australian Digital Inclusion Index. J. Rural Stud. 2020, 80, 195–210. [Google Scholar] [CrossRef]

- Mehrabi, N.; Morstatter, F.; Saxena, N.; Lerman, K.; Galstyan, A. A survey on bias and fairness in machine learning. ACM Comput. Surv. (CSUR) 2021, 54, 1–35. [Google Scholar] [CrossRef]

- Murdoch, W.J.; Singh, C.; Kumbier, K.; Abbasi-Asl, R.; Yu, B. Interpretable machine learning: Definitions, methods, and applications. arXiv 2019, arXiv:1901.04592. [Google Scholar] [CrossRef]

- Baeza-Yates, R.; Murgai, L. Bias and the Web. In Introduction to Digital Humanism: A Textbook; Werthner, H., Ghezzi, C., Kramer, J., Nida-Rümelin, J., Nuseibeh, B., Prem, E., Stanger, A., Eds.; Springer Nature: Cham, Switzerland, 2024; pp. 435–462. [Google Scholar]

- Baeza-Yates, R. Bias on the Web. Commun. ACM 2018, 61, 54–61. [Google Scholar] [CrossRef]

- Sculley, D.; Holt, G.; Golovin, D.; Davydov, E.; Phillips, T.; Ebner, D.; Chaudhary, V.; Young, M.; Crespo, J.F.; Dennison, D. Hidden technical debt in machine learning systems. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; Volume 28. [Google Scholar]

- Baeza-Yates, R. Big Data or Right Data? In Proceedings of the Alberto Mendelzon International Workshop on Foundations of Data Management (AMW 2013), Puebla/Cholula, Mexico, 21–23 May 2013; CEUR Workshop Proceedings. Volume 1087. [Google Scholar]

- Ng, A. Unbiggen AI. IEEE Spectrum. 2022. Available online: https://spectrum.ieee.org/andrew-ng-data-centric-ai (accessed on 4 December 2023).

- Rauschenberger, M.; Baeza-Yates, R. How to handle health-related small imbalanced data in machine learning? i-com 2021, 19, 215–226. [Google Scholar] [CrossRef]

- Ibrion, M.; Paltrinieri, N.; Nejad, A.R. On Risk of Digital Twin Implementation in Marine Industry: Learning from Aviation Industry. J. Phys. 2019, 1357, 012009. [Google Scholar] [CrossRef]

- Purcell, W.; Neubauer, T.; Mallinger, K. Digital Twins in agriculture: Challenges and opportunities for environmental sustainability. Curr. Opin. Environ. Sustain. 2023, 61, 101252. [Google Scholar] [CrossRef]

- Khan, N.; Jhariya, M.K.; Raj, A.; Banerjee, A.; Meena, R.S. Eco-Designing for Sustainability. In Ecological Intensification of Natural Resources for Sustainable Agriculture; Jhariya, M.K., Meena, R.S., Banerjee, A., Eds.; Springer: Singapore, 2021; pp. 565–595. [Google Scholar]

- Fielke, S.; Taylor, B.; Jakku, E. Digitalisation of agricultural knowledge and advice networks: A state-of-the-art review. Agric. Syst. 2020, 180, 102763. [Google Scholar] [CrossRef]

- Eastwood, C.; Klerkx, L.; Ayre, M.; Dela Rue, B. Managing socio-ethical challenges in the development of smart farming: From a fragmented to a comprehensive approach for responsible research and innovation. J. Agric. Environ. Ethics 2019, 32, 741–768. [Google Scholar] [CrossRef]

- Rattenbury, T.; Nafus, D. Data Science and Ethnography: What’s Our Common Ground, and Why Does It Matter? Retrieved Novemb. 2018, 26, 2018. [Google Scholar]

- Boeckhout, M.; Zielhuis, G.A.; Bredenoord, A.L. The FAIR guiding principles for data stewardship: Fair enough? Eur. J. Hum. Genet. 2018, 26, 931–936. [Google Scholar] [CrossRef]

- Pröll, S.; Rauber, A. Scalable data citation in dynamic, large databases: Model and reference implementation. In Proceedings of the 2013 IEEE International Conference on Big Data, IEEE, Silicon Valley, CA, USA, 6–9 October 2013; pp. 307–312. [Google Scholar]

- Roman, A.C.; Vaughan, J.W.; See, V.; Ballard, S.; Schifano, N.; Torres, J.; Robinson, C.; Ferres, J.M.L. Open Datasheets: Machine-readable Documentation for Open Datasets and Responsible AI Assessments. arXiv 2023, arXiv:2312.06153. [Google Scholar]

- European Commission. Open Innovation, Open Science, Open to the World: A Vision for Europe; European Commission: Brussels, Belgium, 2016. [Google Scholar]

- Murthy, S.; Bakar, A.A.; Rahim, F.A.; Ramli, R. A comparative study of data anonymization techniques. In Proceedings of the 2019 IEEE 5th International Conference on Big Data Security on Cloud (BigDataSecurity), IEEE International Conference on High Performance and Smart Computing, (HPSC) and IEEE International Conference on Intelligent Data and Security (IDS), IEEE, Washington, DC, USA, 27–29 May 2019; pp. 306–309. [Google Scholar]

- Nikolenko, S.I. Synthetic Data for Deep Learning; Springer: Berlin/Heidelberg, Germany, 2021; Volume 174. [Google Scholar]

- Liu, J.; Huang, J.; Zhou, Y.; Li, X.; Ji, S.; Xiong, H.; Dou, D. From distributed machine learning to federated learning: A survey. Knowl. Inf. Syst. 2022, 64, 885–917. [Google Scholar] [CrossRef]

- Xu, G.; Li, H.; Liu, S.; Yang, K.; Lin, X. Verifynet: Secure and verifiable federated learning. IEEE Trans. Inf. Forensics Secur. 2019, 15, 911–926. [Google Scholar] [CrossRef]

- Poteko, J.; Harms, J. M2M Networking of Devices in the Dairy Barn. In Proceedings of the AgroVet-Strickhof Conference, Lindau, Germany, 7 November 2023; Current and Future Research Projects. Conference Proceedings. Terranova, M., Weiss, A., Eds.; AgroVet-Strickhof: Lindau, Germany, 2023. [Google Scholar]

- Boothby, A.L.; White, D.R. Understanding the Barriers to Uptake of Precision Livestock Farming (PLF) in the UK Sheep Industry. In Proceedings of the Agricultural Engineering AgEng2021, Évora, Portugal, 4–8 July 2021; Volume 572. [Google Scholar]

- Clinciu, M.; Hastie, H. A survey of explainable AI terminology. In Proceedings of the 1st Workshop on Interactive Natural Language Technology for Explainable Artificial Intelligence (NL4XAI 2019), Tokyo, Japan, 29 October–1 November 2019; pp. 8–13. [Google Scholar]

- Molnar, C. Interpretable Machine Learning; Lulu Press: Morrisville, NC, USA, 2020. [Google Scholar]

- Wang, D.; Yang, Q.; Abdul, A.; Lim, B.Y. Designing theory-driven user-centric explainable AI. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 1–15. [Google Scholar]

- Drozdal, J.; Weisz, J.; Wang, D.; Dass, G.; Yao, B.; Zhao, C.; Muller, M.; Ju, L.; Su, H. Trust in AutoML: Exploring information needs for establishing trust in automated machine learning systems. In Proceedings of the 25th International Conference on Intelligent User Interfaces, Cagliari, Italy, 17–20 March 2020; pp. 297–307. [Google Scholar]

- Ribeiro, M.T.; Singh, S.; Guestrin, C. “Why should i trust you?” Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 1135–1144. [Google Scholar]

- Kim, B.; Wattenberg, M.; Gilmer, J.; Cai, C.; Wexler, J.; Viegas, F. Interpretability beyond feature attribution: Quantitative testing with concept activation vectors (tcav). In Proceedings of the International Conference on Machine Learning, PMLR, Stockholm, Sweden, 10–15 July 2018; pp. 2668–2677. [Google Scholar]

- Krause, J.; Perer, A.; Ng, K. Interacting with predictions: Visual inspection of black-box machine learning models. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, 7–12 May 2016; pp. 5686–5697. [Google Scholar]

- Kahng, M.; Andrews, P.Y.; Kalro, A.; Chau, D.H. ActiVis: Visual exploration of industry-scale deep neural network models. IEEE Trans. Vis. Comput. Graph. 2017, 24, 88–97. [Google Scholar] [CrossRef]

- Du, M.; Liu, N.; Hu, X. Techniques for interpretable machine learning. Commun. ACM 2019, 63, 68–77. [Google Scholar] [CrossRef]

- Letham, B.; Rudin, C.; McCormick, T.H.; Madigan, D. Interpretable classifiers using rules and Bayesian analysis: Building a better stroke prediction model. Ann. Appl. Stat. 2015, 9, 1350–1371. [Google Scholar] [CrossRef]

- Bernardo, F.; Zbyszynski, M.; Fiebrink, R.; Grierson, M. Interactive machine learning for end-user innovation. In Proceedings of the 2017 AAAI Spring Symposium Series, Stanford, CA, USA, 27–29 March 2017. [Google Scholar]

- Dudley, J.J.; Kristensson, P.O. A review of user interface design for interactive machine learning. ACM Trans. Interact. Intell. Syst. (TiiS) 2018, 8, 1–37. [Google Scholar] [CrossRef]

- Stitzlein, C.; Fielke, S.; Fleming, A.; Jakku, E.; Mooij, M. Participatory design of digital agriculture technologies: Bridging gaps between science and practice. Rural. Ext. Innov. Syst. J. 2020, 16, 14–23. [Google Scholar]

- Villani, V.; Sabattini, L.; Czerniaki, J.N.; Mertens, A.; Vogel-Heuser, B.; Fantuzzi, C. Towards modern inclusive factories: A methodology for the development of smart adaptive human-machine interfaces. In Proceedings of the 2017 22nd IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), IEEE, Limassol, Cyprus, 12–15 September 2017; pp. 1–7. [Google Scholar]

- Metaxa-Kakavouli, D.; Wang, K.; Landay, J.A.; Hancock, J. Gender-inclusive design: Sense of belonging and bias in web interfaces. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; pp. 1–6. [Google Scholar]

- Raghavan, H.; Madani, O.; Jones, R. Active learning with feedback on features and instances. J. Mach. Learn. Res. 2006, 7, 1655–1686. [Google Scholar]

- Brooks, M.; Amershi, S.; Lee, B.; Drucker, S.M.; Kapoor, A.; Simard, P. FeatureInsight: Visual support for error-driven feature ideation in text classification. In Proceedings of the 2015 IEEE Conference on Visual Analytics Science and Technology (VAST), IEEE, Chicago, IL, USA, 25–30 October 2015; pp. 105–112. [Google Scholar]

- Amershi, S. Designing for effective end-user interaction with machine learning. In Proceedings of the 24th Annual ACM Symposium Adjunct on User Interface Software and Technology, Santa Barbara, CA, USA, 16–19 October 2011; pp. 47–50. [Google Scholar]

- Schiff, D.; Rakova, B.; Ayesh, A.; Fanti, A.; Lennon, M. Principles to practices for responsible AI: Closing the gap. arXiv 2020, arXiv:2006.04707. [Google Scholar]

- Meuwissen, M.P.; Feindt, P.H.; Spiegel, A.; Termeer, C.J.; Mathijs, E.; De Mey, Y.; Finger, R.; Balmann, A.; Wauters, E.; Urquhart, J.; et al. A framework to assess the resilience of farming systems. Agric. Syst. 2019, 176, 102656. [Google Scholar] [CrossRef]

- Mazumder, A.; Sahed, M.; Tasneem, Z.; Das, P.; Badal, F.; Ali, M.; Ahamed, M.; Abhi, S.; Sarker, S.; Das, S.; et al. Towards next generation digital twin in robotics: Trends, scopes, challenges, and future. Heliyon 2023, 9, e13359. [Google Scholar] [CrossRef]

- Lu, Q.; Zhu, L.; Xu, X.; Whittle, J. Responsible-AI-by-design: A pattern collection for designing responsible AI systems. IEEE Softw. 2023, 40, 63–71. [Google Scholar] [CrossRef]

- RDA FAIR Data Maturity Model Working Group. FAIR Data Maturity Model: Specification and guidelines. Res. Data Alliance 2020, 10. [Google Scholar]

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).