1. Introduction

The concept of a smart house or smart living environment is more current than ever, as more and more everyday devices are equipped with smart capabilities. The importance of activity recognition research lies in the advantages of being able to monitor and assist a person who uses smart sensors [1]. Internet connectivity, which is omnipresent in most cases, has further increased the reach of these smart devices, as they can now communicate with any internet node and can even be controlled or supervised remotely.

The security aspect of this emerging connectivity is still a very important one, as personal data are sent around via the internet, so any device or platform that is exposed over the internet needs to be properly secured to make sure that the personal data are only available to the correct system and are stored only according to the user’s agreement. Human Activity Recognition (HAR) is one area that greatly benefits from this emerging trend of having smart capable devices around the living environment. The amount of available data for human activity recognition greatly increases as we are surrounded by smart capable devices, thus we can detect human activity more precisely. This increase in HAR precision can be especially helpful in environments that are inhabited by multiple persons. In most cases, smart devices can pinpoint the current user, providing additional context when trying to resolve the multiple inhabitant HAR issue, more precisely, to identify a certain person and link them to a specific complex activity in a multi-person space.

Internet of Things (IoT) technology is applied in multiple domains such as medicine, manufacturing, emergency response, logistics, and daily life activity recognition. Furthermore, emerging IoT technologies allow us to not only supervise HAR but also to actively react, based on these and other data, by controlling smart-enabled devices or contacting other persons. This kind of complex supervision and environment control, together with all the smart-enabled devices and systems, make up the building blocks of the smart home, a home that can help its occupants in day-to-day tasks and especially during emergencies or life-threatening situations.

Activity recognition can be performed using three main sensor categories: ambient, vision, and body-worn, as also shown in [2,3,4]. Ambient sensors are a great way to supervise relatively small areas and require changes across the living environment to function. The main disadvantage of using ambient sensors is the fact that they require a specially prepared living area for HAR analysis. This kind of ambient sensor implementation can help when dealing with multiple inhabitant spaces where we need to identify and pinpoint each user, as the entire living space is supervised at any moment and acts as a single unit. As the supervised living environment increases, the required number of nodes increases as well. A particular type of ambient sensor is vision-based, which can offer great user recognition in multi-inhabitant spaces. This type of ambient sensor requires multiple vision devices installed across the living space (at least one vision-based sensor for every room) and raises some potential privacy issues, especially if the data are analyzed outside the local living environment network.

Body-worn sensors, also known as wearable devices, can directly gather and even process the sensor data closest to the user’s body. Since the main data-gathering device is worn on the user’s body, this approach will work fully or partially even in non-controlled environments. For this body-worn sensor type, we have only network or minimum infrastructure requirements for the system to work, even if there are multiple types of devices that send data via many types of communication technologies [5]. A person usually performs many types of activities, based on simple and complex movements [6], and this physical information is easily collected through wearable devices like commercial mobile phones and portable devices [7].

The smartphone is one of the most used devices for HAR as it can record and process information itself. Also, since its processing power is sufficient for most tasks, this widely available device has quickly gained popularity for HAR-related tasks. The major downside of using a smartphone for detecting the user’s activity is the data downtime that occurs when the device is not worn. If the smartphone is not worn or directly used by the user, the system does not receive any relevant data regarding the current activity and, thus, the HAR precision decreases. Smartphones are also not worn consistently, as the wear position varies greatly depending on the person and situation. A watch has a more stable wear pattern, as it is primarily worn on the user’s wrist and is usually worn for long periods of time. A smartwatch is a small device that can be easily and non-intrusively worn for long periods of time, making it ideal for data acquisition [8,9].

For HAR based on accelerometer and gyroscope data, provided by sensors also found in a smartwatch, the classic approach is to use the raw sensor data and preprocess it. Features are then extracted and used to train a neural network for activity recognition. In this scenario, the neural network input is represented by a series of numeric values that try to capture the essence of that particular activity. Based on the raw sensor data or even extracted features, plot images can be generated and fed to the neural network as input data instead of numerical values. In this scenario, the human activity recognition task becomes an image classification task, trying, in essence, to identify the activity based on the plot image using specific image classification neural networks. The numerical data that are turned into a plot image can be graphically represented in multiple ways depending on the type of plot image and the input raw data structure; these variations can have a significant impact on the activity recognition rate [10,11].

Data collected from different devices are stored in various databases that are freely available. These databases are helpful to improve and test new algorithms and methods. One of the most common databases is WISDM [12], as this database contains data collected from the accelerometer and gyroscope of Android-based smartphones and smartwatches [13]. The data can be affected by differences in the residence’s layout or in human behavior. Some of the causes of these differences are changes in health condition, sensor displacement, and activities performed differently over time, as mentioned in [13].

For data segmentation, two major types are used: fixed window size and dynamic segmentation. For both of them, the main challenge is to determine the proper window length. Data segmentation is very important: a smaller segment allows faster, real-time activity recognition, minimizing the time of the entire required process [14]. On the other hand, choosing a window that is too small may lead to a low activity recognition rate, as the system cannot properly identify the activity due to the data window’s similarities with other activities. The length of the chosen data window is therefore very important, as we need to allow real-time recognition while maintaining a proper activity recognition rate. The data segmentation process can be time-based or feature-based. In activity recognition for daily tasks, the most common method is time-based segmentation. The main problem for dynamic-size windows is identifying the correct temporal separation for an activity. For the fixed-size windows, the main problem is to identify the proper size to include all the data recorded for the same activity [15,16,17].
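The fixed-size, time-based segmentation described above can be sketched in a few lines. This is only an illustrative Python sketch, not the implementation used in this work (which is written in C#); the function name `sliding_windows` and its parameters are hypothetical, and the window size of 40 readings is borrowed from the overlapping scenarios described later in the paper.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def sliding_windows(samples: Sequence[T], window_size: int, step: int) -> List[Sequence[T]]:
    """Split samples into fixed-size windows.

    step == window_size gives non-overlapping windows;
    step < window_size gives overlapping windows.
    Trailing samples that do not fill a whole window are dropped.
    """
    if window_size <= 0 or step <= 0:
        raise ValueError("window_size and step must be positive")
    windows = []
    for start in range(0, len(samples) - window_size + 1, step):
        windows.append(samples[start:start + window_size])
    return windows


# 100 dummy readings stand in for a stream of sensor samples.
readings = list(range(100))
non_overlapping = sliding_windows(readings, window_size=40, step=40)
overlapping = sliding_windows(readings, window_size=40, step=20)
```

With a smaller step, more windows are produced from the same stream, which shortens the delay between recognitions at the cost of more processing.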

HAR is very important in real-time systems that include smart gadgets and deep learning techniques [18,19]. Recognizing activities in a real environment with continuous data streaming remains a major challenge, as shown in [20]. Recognition can be implemented on wearable devices like mobile phones or on personal computers. An important aspect that can affect the performance of the system is the computational cost. Although some minimal computational steps are still required, a way of reducing the multiple feature extraction and windowing processes is detailed in [21]. The data from the body-worn sensor module can be relayed to a local data hub or to the cloud directly. A socket or HTTP request can be used to transfer data to the local or cloud data processing device.

Our objective is to create a cloud-based real-time human activity recognition system powered by a neural network. The system should be able to work with raw movement data retrieved from a smartwatch in order to identify human activities. The HAR process will be accomplished using an image recognition ML.NET neural network that handles plot images generated based on both raw accelerometer and gyroscope data. The system should be available for local network usage or cloud-based deployment and usage. Data privacy and security are very important aspects and supporting on-premises deployment and usage provides added layers of security for special requirements and use cases.

2. Related Work

Visually representing data from a dataset is quite common. Symbolic representation is used to increase the recognition rate and uses high-performance neural networks specialized in image recognition. A significant method of representing data is Human Activity Recognition on Signal Images (HARSI). As described in [22], this method is very efficient and the research describes the method as converting the raw data into an understandable image. The images were generated by plotting the raw data from an accelerometer. The WISDM data set was used for this work and six types of activities were selected: jogging, moving downstairs, walking, moving upstairs, standing, and sitting. In this study, different types of convolutional neural networks were tested. The experimental algorithms tested the following versions: HARSI-ResNet50, HARSI-ResNet101, HARSI-AlexNet, HARSI-DenseNet121, HARSI-SqueezeNetv1.0, HARSI-SqueezeNetv1.1, HARSI-VGG16, and HARSI-VGG19. The most efficient was the HARSI-VGG19 method with a 98% accuracy.

Another study using the WISDM data set was described in [23]. Two types of algorithms were tested: Convolutional Neural Networks (CNNs) and Long Short-Term Memory Networks (LSTMs). Through these algorithms, the performance of recognizing hand movements was tested using smartwatch data. The following activities were studied: kicking, stairs, standing, jogging, sitting, walking, sandwiching, chips, drinking, soup, pasta, folding, typing, teeth, catching, clapping, dribbling, and writing. The data from 44 subjects were selected from the raw data set. In the preprocessing phase, a time-based sliding window was used with a length of 10 s with 5 s of data overlapping. Three types of data sets were tested: data from the accelerometer, data from the gyroscope, and combined data from both the accelerometer and gyroscope. The best results were obtained from combined data from the accelerometer and the gyroscope with a hybrid CNN-LSTM algorithm with an overall recognition rate of 96.20%. Analyzing the confusion table, we observed that the activities with the lower recognition rate were sitting, standing, sandwiching, and writing.

The research described in [24] presents a human activity recognition method based on symbolic representation (HAR-SR). This method has two essential parts: the first is signal segmentation-based data fusion and the second is symbolic representation based on patterns from time series. The steps in symbolic representation presented in this research start with data segmentation using a time-based sliding window with a specific length, after which the dimension of the segments database is reduced by symbolic approximation and noise removal. Next, a search table was used for the discretization process to obtain the symbolic representation of the segment and, in the final step, each discretized segment was used to obtain a frequency histogram of the symbols. For classification, a K-Nearest Neighbor (kNN) algorithm was used and the recognition rate obtained was 77.82%.

An interesting view of human activity recognition was described in [25]. A new CNN architecture was proposed in this research. A new type of classification was added to the convolutional layer. This layer contains a matching filter (MF) based on adding a signal noise comparison function. The activation function from this method is based on calculating the maximum between the known signal (the template signal) and the unknown signal (the signal to be recognized). This function was included in a layer named Conv1D. The Conv1D layer is followed by a GlobalMaxPooling layer and, in the final step, a fully connected layer with softmax activation was added. For the most common activities (walking, jogging, moving downstairs, moving upstairs, sitting), this research obtained a very good total recognition rate of 97.32%. Analyzing the confusion matrix for this method applied to the WISDM data set (only accelerometer data), we observed a lower recognition rate for the moving downstairs and moving upstairs activities. The moving downstairs activity obtained an 89.00% recognition rate and moving upstairs a 91.00% recognition rate. The algorithm was unable to obtain a very good recognition rate for either of these activities, probably due to the similarities between the signals.

The labeling of the activity data set is the most important part of improving activity recognition performance according to [26]. This research proposes a system based on user feedback regarding the recognized activity. The user was informed through a notification. The recognition algorithm used is a long short-term memory (LSTM) model which was trained using an open dataset. The accelerometer data that are read from the smartphone are recognized by the trained algorithm and the result is sent to the user via a notification. The user approves the newly labeled data and, in this way, the raw data set increases in size. According to this research, this implementation improves the recognition rate by 10% over the classical method with the same model. For the kNN model, the recognition rate was improved by 13.40%, and for LSTM, by 16.20%.

The concept of the Internet of Things can help improve people’s lives nowadays, by handling and maximizing the data collected from multiple sensors. The authors of [27] propose a real-time human activity recognition system based on data collected from smartphones and smartwatches. The real-time data are collected from the accelerometer and gyroscope sensors. The watch is placed on the wrist and the smartphone is in a pocket. For the accelerometer, the signals are separated into body and gravity components. The data are segmented into overlapped windows. After the new features are extracted, the data scaling factors and normalization are applied. Four types of algorithms were tested for human activity recognition: Random Forest (RF), Multilayer Perceptron (MLP), Support Vector Machines (SVM), and Naive Bayes (NB). The training was implemented with a dataset collected by the researchers. The best recognition rate was obtained by the RF model at 98.72%. This method was also tested with the WISDM dataset, and a 98.56% recognition rate was obtained.

Using wearable sensors built into everyday objects is common practice, even reaching the level of the incorporation of stretch sensors into clothes. Combining stretch sensors and inertial sensors can yield good results with an accuracy rate of 97.51% [28]. This approach implies that the user wears custom add-on modules that can also be built into the clothes, and it requires specialized hardware modules for this, making the system a bit more difficult to wear for extensive periods of time.

Options to unobtrusively monitor a user’s activity can also be used, without the subject carrying a dedicated device; for example, the authors of reference [29] highlight an innovative system that uses indoor WiFi signals to detect users’ activity by the different patterns generated. This system can achieve a good recognition rate; the CNN-ABiLSTM model reached an accuracy of 98.54% but it has some drawbacks as it can be used only in a specific setup environment.

3. Proposed System

We propose a HAR system able to perform real-time activity recognition based on internally generated plot images. The proposed system has multiple components:

A data preprocessing app;

A machine learning core processor;

A machine learning processor Web API;

A real-time Cloud human activity recognition system.

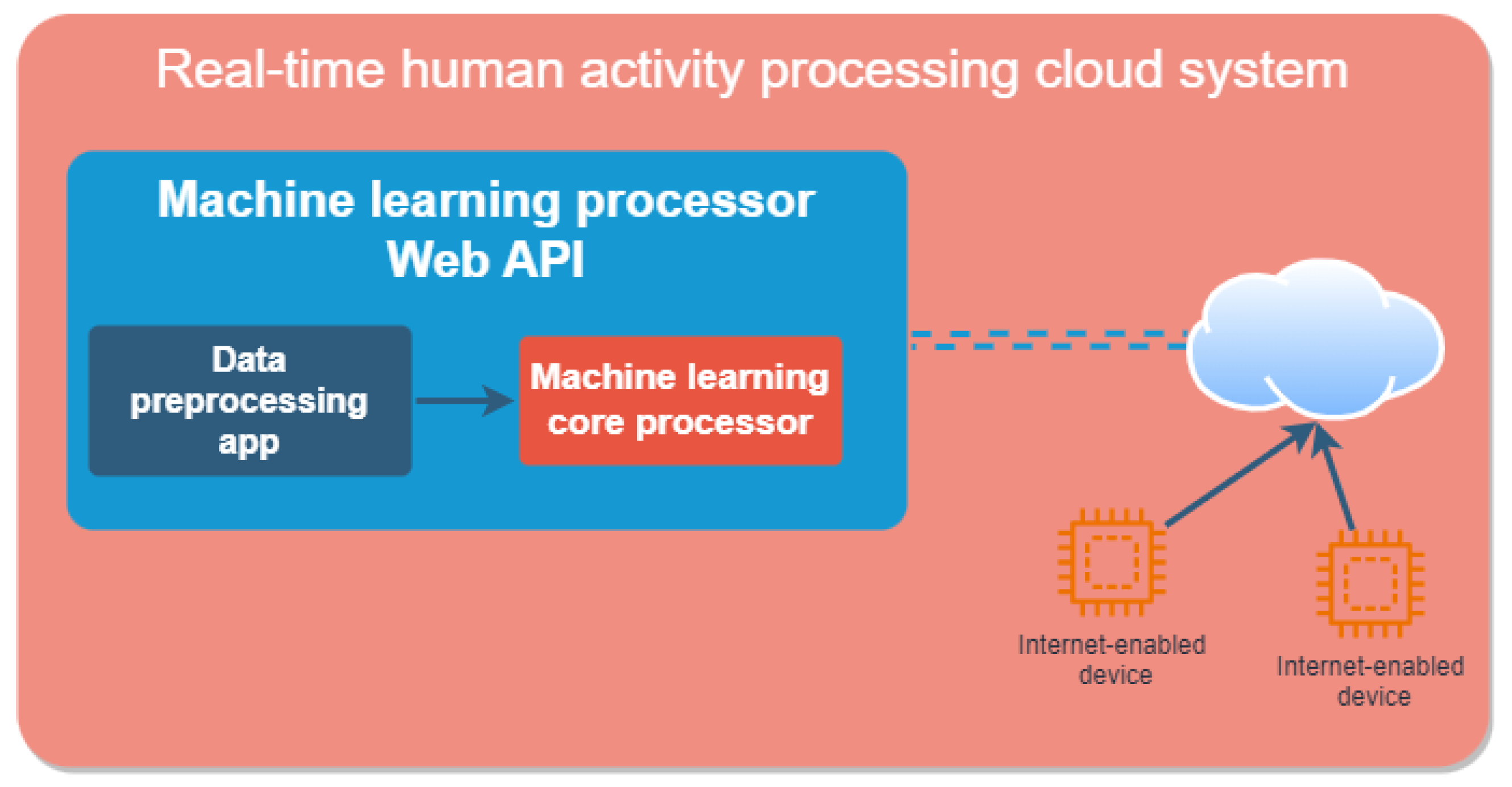

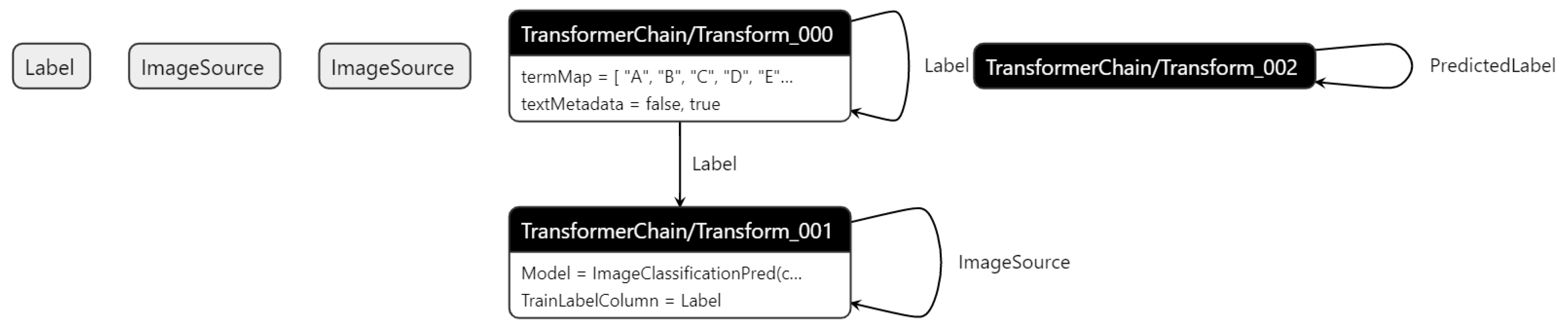

The main components of the proposed system are shown in Figure 1.

The “Data preprocessing app” handles the conversion of raw movement data from an accelerometer and gyroscope to a plot image. This conversion transforms a movement data window into a single image that will be used as input for the machine learning algorithm. The “Machine learning core processor” represents the main computing logic, a machine learning implementation trained using the previously generated movement images. After training, this component is capable of receiving an image and predicting its source activity. This behavior is supported only locally, as this component does not support network interactions or advanced conversions from raw data to images. The “Machine learning processor Web API” is the component that incorporates the “Machine learning core processor” and is able to support network connections and recognize human activity. The recognition process can be based on a generated image, but this component is also able to generate the image itself based on raw accelerometer and gyroscope data. So, in order to recognize what activity a series of movement data is part of, a simple API call is sufficient. The “Real-time Cloud human activity recognition system” represents the cloud counterpart of the “Machine learning processor Web API”, which is able to handle requests from multiple computer networks via the Internet. This component is not limited to a single local network and can be easily scaled and enhanced for the human activity recognition process. In this way, we can easily recognize real-time human activity from any source application that can make a web API request that contains movement data.

3.1. Our Contributions

Our contributions to the HAR field are as follows:

The implementation of a real-time system for human activity recognition that can operate locally and in the cloud via REST API calls based on image plot recognition;

The implementation and usage of a .NET C# console application to generate label images based on raw accelerometer and gyroscope sensor data;

The creation of a .NET C# application that contains a deep neural network that was created based on a pre-trained TensorFlow DNN model and trained for HAR using plot images;

The integration of the created and trained neural network in a .NET Web API application capable of real-time activity recognition based on REST API calls;

The further extension of the HAR Web API application capabilities to allow cloud-based activity recognition;

The analysis of multiple scenarios for plot image generation configuration and plot types and the evaluation of the obtained activity recognition precision results.

We concluded that a real-time HAR system, based on plot image recognition and REST requests, can be a good system architecture for real-time activity recognition.

3.2. WISDM Biometrics Dataset

The “WISDM Smartphone and Smartwatch Activity and Biometrics Dataset” was chosen for this implementation. This dataset was introduced and described in [30] and is extensively used for human activity recognition.

This dataset was chosen as it is one of the most important and widely used datasets for human activity recognition based on wearable devices, as also mentioned in [22]. Since it was used in multiple studies, it is a good candidate for comparing different approaches and analyzing the accuracy rates obtained across different implementations. Since the data collection process was closely supervised by the dataset creators, to ensure proper data quality and movement consistency, this dataset can be used for the in-depth analysis of any of the available activities. This dataset is even suitable for detecting symmetric activities. Since the dataset is an existing one that is freely available for use, the dataset creators handled the appropriate approvals from the required entities, as it involved human subjects research.

The dataset contains many different activities carried out by 51 subjects. We have 18 different activities, each with a length of about 3 min. The monitoring devices used to track the subject’s movements are a smartwatch and a smartphone. The smartwatch is placed on the subject’s dominant hand and is non-obstructive, due to its relatively small form factor. The smartphone is placed in the pocket, simulating the everyday carrying of this omnipresent device. Both data-gathering devices run a custom-made app designed specifically for this purpose. Both tracking devices gather accelerometer and gyroscope data using four separate sensors in total, two for each device. Each sensor gathers data with a frequency of 20 Hz, reading a measurement every 50 ms. This is the data polling rate that was set for the operation, but the actual polling rate of the sensor data can be delayed if the processor is busy.

Accelerometer and gyroscope data folders are present for both smartwatch and smartphone data. In each data sub-directory, there is one file for each subject, so a total of 51 files are present in each sub-directory. The naming of the files is standard and uses underscores to delimit the different components of the name.

Each data file contains one sensor reading per line using a comma-separated value data format, and the same data format is maintained across the different sensor types and sources. Each line has the following features:

Subject-id: a unique identifier for the subject, with a range between 1600 and 1650;

Activity Code: a unique identifier for the activity, using a single letter from A to S skipping the “N” value;

Timestamp: the sensor reading timestamp in Unix time format;

Sensor value for the x, y, and z axis represented by numeric real values.
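The line format listed above can be read with a short parser. The following Python sketch is illustrative only (the preprocessing application in this work is written in C#); the names `SensorReading` and `parse_reading` are hypothetical, the sample line uses made-up values, and stripping a trailing semicolon is an assumption about the raw files rather than something stated in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorReading:
    subject_id: int      # expected range 1600..1650
    activity_code: str   # single letter, A..S without N
    timestamp: int       # sensor reading timestamp
    x: float
    y: float
    z: float


def parse_reading(line: str) -> SensorReading:
    """Parse one comma-separated sensor reading line."""
    # Assumption: a line may end with ';', which is stripped if present.
    fields = [f.strip() for f in line.strip().rstrip(";").split(",")]
    if len(fields) != 6:
        raise ValueError(f"expected 6 fields, got {len(fields)}: {line!r}")
    subject, activity, timestamp, x, y, z = fields
    return SensorReading(int(subject), activity, int(timestamp),
                         float(x), float(y), float(z))


# Made-up sample line in the described format.
example = parse_reading("1600,A,252207666810782,-0.36,8.79,1.05;")
```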

Each file contains approximately 54 min of readings, based on the expected 3 min for each activity multiplied by the total number of 18 activities; in an ideal case, at 20 readings per second, we would have a total of 64,800 lines per file (54 min × 60 s × 20 Hz). The data collection process was not perfect, so the actual values fluctuate somewhat around these nominal values across the dataset.

The raw total number of samples in this dataset is 15,630,426 and the distribution of data across the sensor types and sources is as follows:

Raw phone accelerometer number of data readings: 4,804,403;

Raw phone gyroscope number of data readings: 3,608,635;

Raw watch accelerometer number of data readings: 3,777,046;

Raw watch gyroscope number of data readings: 3,440,342.

The smartwatch data-gathering device runs Android Wear 1.5 and the smartphone runs Android 6.0.

As shown in [31], this dataset groups all the previously mentioned activities into three main activity categories based on the type of activity and each recorded activity in this dataset has a code assigned. These three major activity categories, alongside the corresponding activities, are shown in Table 1.

For this research, we plan to use only the smartwatch dataset containing accelerometer and gyroscope raw data and we do not plan to use any of the already available extracted features.

3.3. Data Preprocessing App: Movement Data Plot Images Generation

In order to simplify the classic time-series data classification task, based on the sensor data, we can handle the human activity recognition task as an image classification one. The numeric sensor data from the smartwatch are used to transform a series of values, consisting of a data window, into a single data image. This way we can provide a visual representation of a data chunk that is also easier for a human to analyze and interpret manually. Each image can show certain characteristics for that particular activity type and different plot styles can be used. The main preprocessing application is written in C# 6 and uses the “ScottPlot” plotting library for .NET. The preprocessing application is written as a .NET console application.

In order to reduce the amount of data generated in the preprocessing phase, only certain activity types and users are selected for processing. The following activities are selected for processing from the source dataset: walking, jogging, stairs, sitting, standing, typing, and brushing teeth. To further decrease the number of generated plot images, the users that performed the activities are also filtered and only the first five users are used.
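The selection step described above amounts to a simple filter over subject identifiers and activity codes. The Python sketch below is illustrative only; the letter codes are assumed to follow the dataset's activity key (A to G for the seven selected activities), and "the first five users" is assumed to mean subject identifiers 1600 to 1604. Both assumptions should be checked against Table 1 and the dataset documentation.

```python
# Assumed activity codes for the seven selected activities.
SELECTED_ACTIVITIES = {
    "A": "walking",
    "B": "jogging",
    "C": "stairs",
    "D": "sitting",
    "E": "standing",
    "F": "typing",
    "G": "brushing teeth",
}

# Assumption: the "first five users" are subject ids 1600..1604.
SELECTED_SUBJECTS = set(range(1600, 1605))


def is_selected(subject_id: int, activity_code: str) -> bool:
    """Return True if a reading belongs to a selected subject and activity."""
    return subject_id in SELECTED_SUBJECTS and activity_code in SELECTED_ACTIVITIES


# (subject_id, activity_code) pairs standing in for parsed readings.
rows = [(1600, "A"), (1600, "H"), (1604, "G"), (1605, "A")]
kept = [row for row in rows if is_selected(*row)]
```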

The “ScottPlot” 4.1.59 plotting library was chosen as it is open-source, free, and provided under the permissive MIT license. This library makes displaying and saving images easy and fast. As mentioned in [32], this library supports multiple .NET platforms (including .NET Core), which means that it can even be used directly in the Azure cloud, in the form of an Azure function.

Based on the chosen plot image type from the ScottPlot library, we have two main scenarios:

Scatter plot images;

Population images.

The generated plot images have a different width and height based on the chosen scenario, as shown in Table 2. The window size chosen for overlapping scenarios is 40 records.

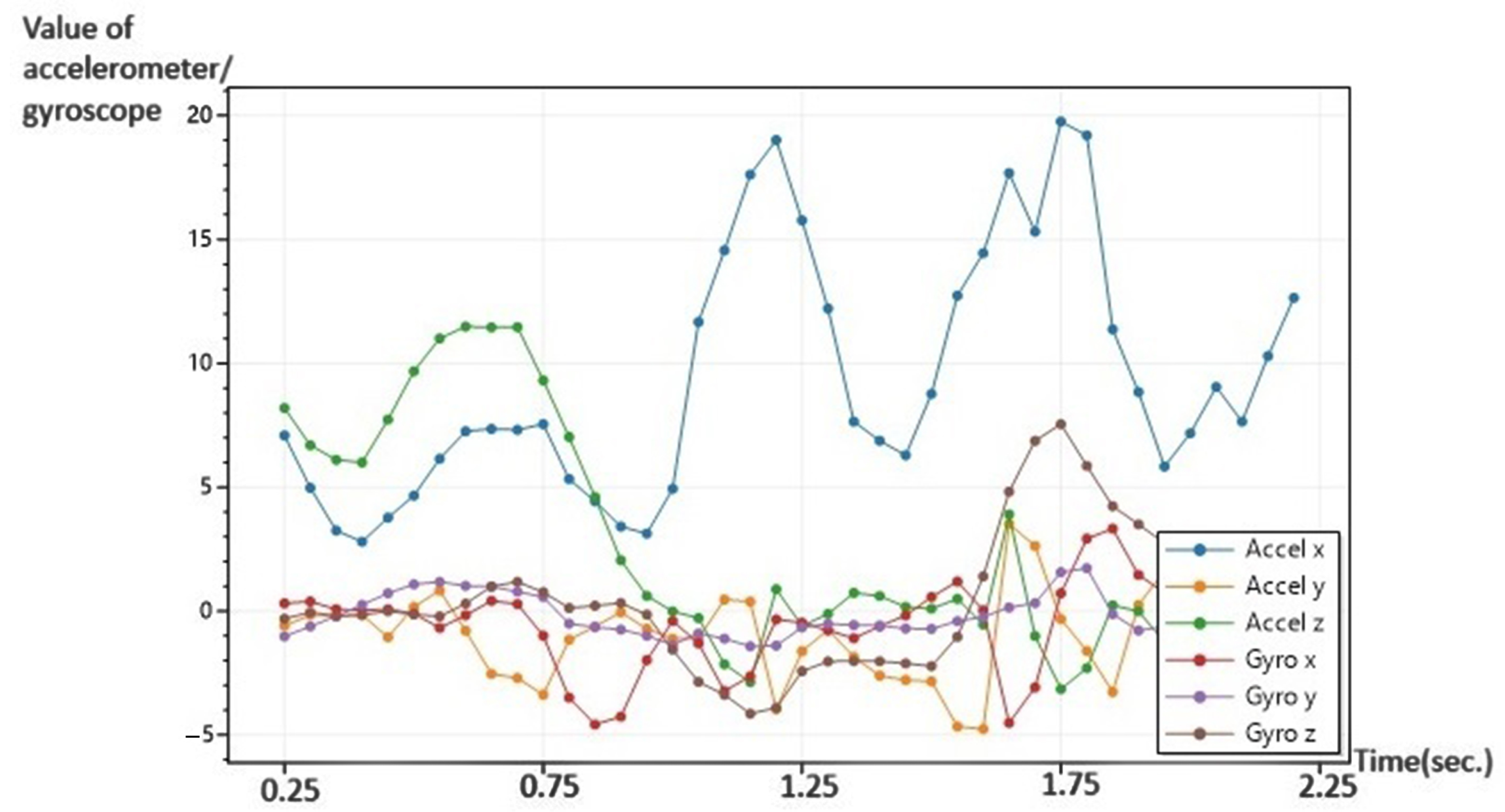

An example of generated scatter plot images with overlapping scatter plots with a data sequence for the walking activity is shown in Figure 2.

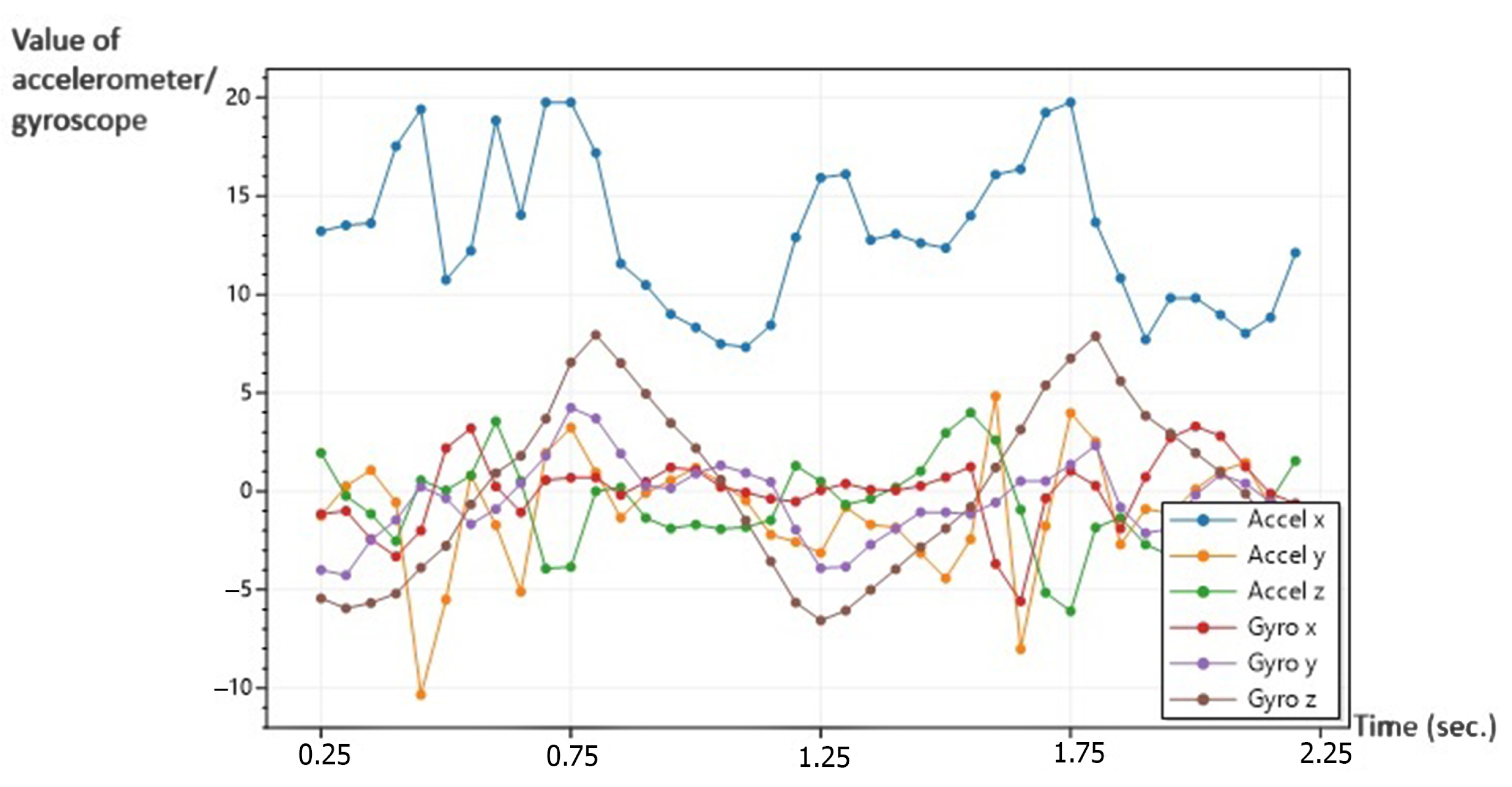

An example of generated scatter plot images with non-overlapping scatter plots with a data sequence for the walking activity is shown in Figure 3.

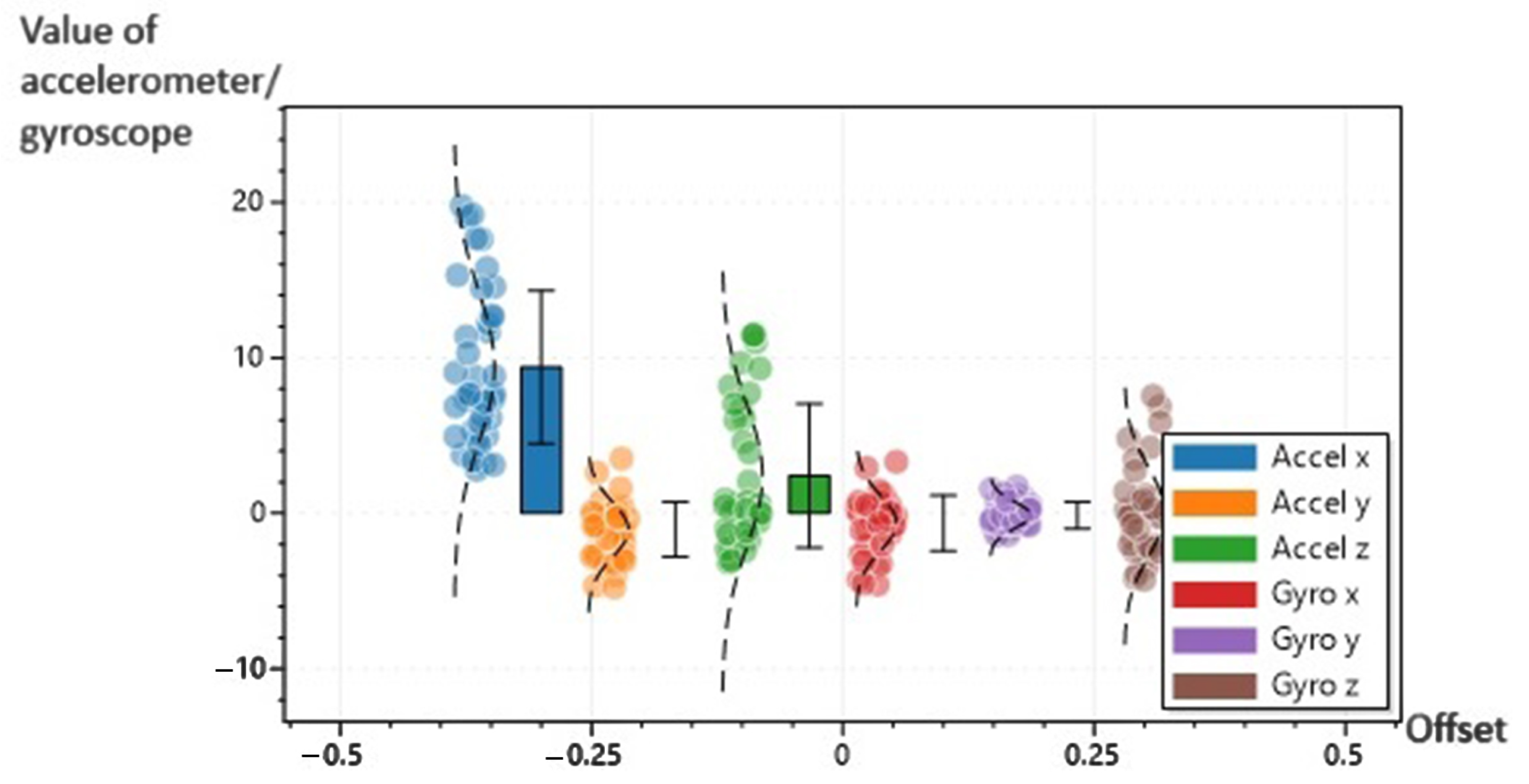

An example of generated population images with the “BarMeanStDev” option is shown in Figure 4.
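As the option name suggests, a “BarMeanStDev” population image summarizes each data series by its mean and standard deviation. The Python sketch below only illustrates the two statistics behind such a bar for one window of per-axis values; the function name `bar_mean_stdev` and the sample values are hypothetical, and the use of the sample standard deviation is an assumption, since the exact estimator used by the plotting library was not verified here.

```python
from statistics import mean, stdev
from typing import Dict, Sequence, Tuple


def bar_mean_stdev(window: Dict[str, Sequence[float]]) -> Dict[str, Tuple[float, float]]:
    """Return (mean, standard deviation) per axis for one data window.

    Assumption: the sample standard deviation (n - 1 denominator) is used.
    """
    return {axis: (mean(values), stdev(values)) for axis, values in window.items()}


# Made-up accelerometer window with five readings per axis.
window = {
    "x": [0.1, 0.3, 0.2, 0.4, 0.5],
    "y": [9.7, 9.8, 9.9, 9.8, 9.8],
    "z": [1.0, 1.0, 1.0, 1.0, 1.0],
}
summary = bar_mean_stdev(window)
```

Each axis then maps to one bar (height given by the mean) with an error bar (given by the standard deviation).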

3.4. Machine Learning Core Processor

The machine learning core processor is the main component that is able to perform human activity recognition. This component can train a neural network based on the generated plot images and allows this trained neural network to be used directly from a .NET core application. The hosting application where the training takes place is the same one as where the trained neural network is placed afterward; it is implemented in the form of a .NET core console application project. This allows the neural network to be created, trained, saved, and tested, all in one place. The neural network can be later moved into another project to allow further development. This machine learning core processor project thus also contains the required logic for the model consumption and the logic required for the model to be retrained.

3.4.1. Machine Learning Training Process

The training phase was executed locally using the local GPU as the main training processing device. The training machine was equipped with an Intel(R) Core(TM) i5-8300H CPU running at 2.30 GHz. The available system memory was 16.0 GB. The GPU used for the main training process was an NVIDIA GeForce GTX 1050.

In order for the training process to support local GPU, the graphics card needs to be CUDA compatible and the system needs to have multiple software components, like extensions and a toolkit, installed for this particular purpose. The “ML.NET Model Builder GPU Support 2022” GPU Visual Studio extension needs to be installed in the system so that we can use the local GPU for training image classification models. The ML.NET Model Builder 2022 extension was updated to the latest version at implementation time, 16.14.2255902, as this extension provides a simple and fast UI tool to build, train, and ship custom machine learning models in .NET applications. The CUDA Toolkit version 10.1 also needs to be installed as this is the recommended version at implementation time and it provides a development environment for creating and running high-performance GPU-accelerated applications. Alongside the CUDA Toolkit, the CUDA Deep Neural Network library (known as cuDNN) also needs to be installed and, in this case, version 7.6.4 was used. cuDNN is a library that contains GPU-accelerated primitives for deep neural networks. The cuDNN library contains implementations for standard routines: forward and backward convolution, pooling, normalization, and activation layers.

3.4.2. Training Time

The training time is different across different runs and ranges from 1.12 to 5.8 h depending on the size and number of the images used for training. All training processes were executed on the same machine described in the previous section. The training times obtained for the executed scenarios are presented in Table 3.

3.4.3. Local Activity Recognition

After the training phase has been completed, the machine learning core processor can be used to run the activity recognition process locally. From the project’s console application, any logic can be added to leverage the activity recognition functionality. The image data can be generated on the fly based on raw accelerometer data or the system can use a database as a buffer for the image files or movement data. The core processor functionality can be incorporated into any .NET project type, like a desktop application or even a web application.

3.4.4. The Trained Deep Neural Network (DNN)

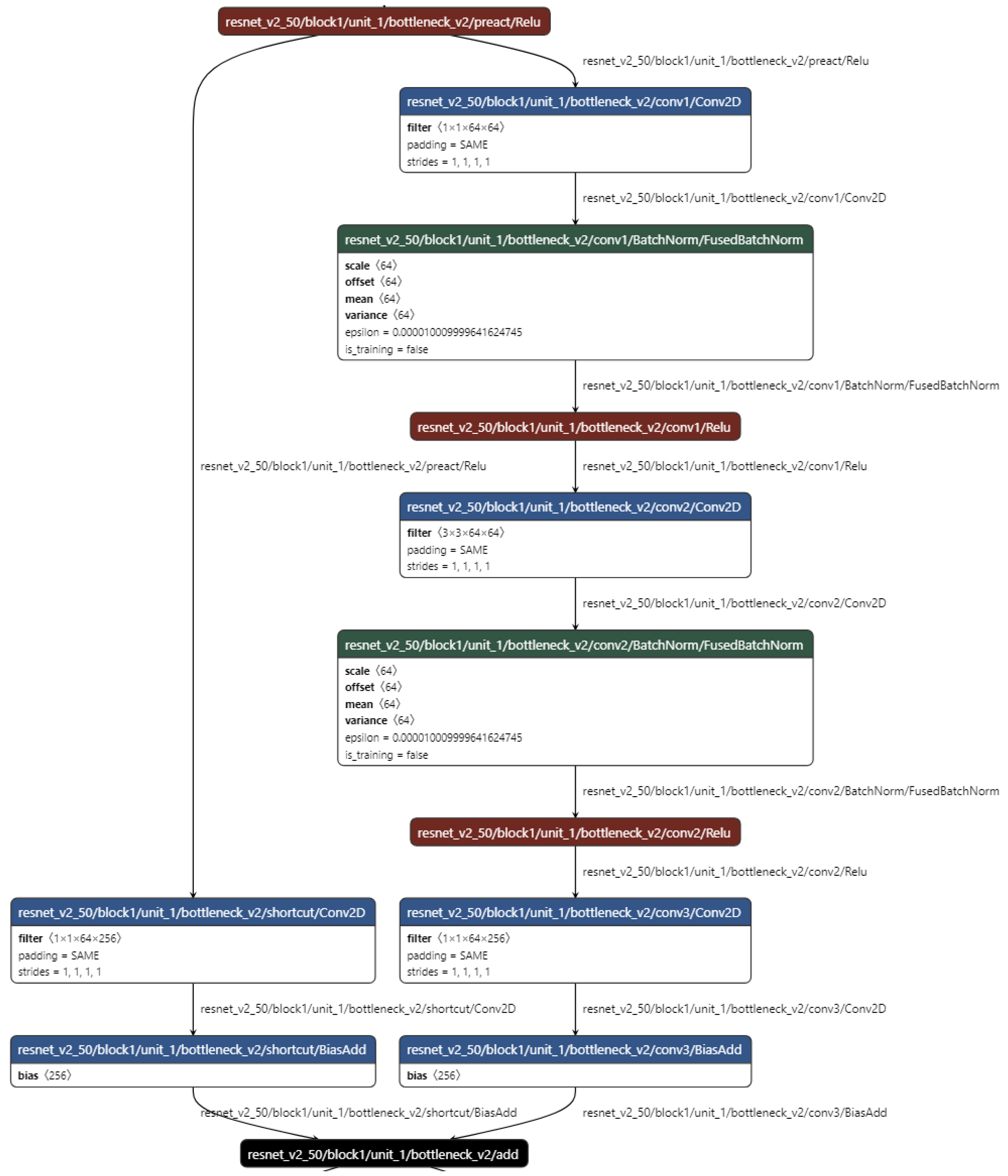

The deep neural network model chosen for the image classification task is “ImageClassificationMulti”. The available trainer for this image classification task is “ImageClassificationTrainer” and it trains a DNN by using pre-trained models for classifying images, in this case, ResNet50. For this trainer, normalization and caching are not required. A supervised ML task predicts the category or class of the image representing the activity type that we want to recognize. Each label starts as text and is converted into a numeric key via the “TermTransform”. The image classification algorithm output is a classifier that can recognize the class and the activity type for a provided image.

Figure 5 shows the generated ML model diagram, as can be seen using the Netron viewer software.

Since ML.NET also uses a pre-trained deep neural network (DNN), an approach called transfer learning, the trained model also includes pre-trained knowledge, which helps to improve accuracy and decrease training time. ML.NET internally retrains the TensorFlow layers based on the input dataset of plot images. A small portion of the entire ResNet50 model used is shown in Figure 6.

3.5. Web API Activity Recognition System

Based on the machine learning core processor module, which is able to recognize human activity relying on the movement-generated plot image, a Web API application was built to expose this functionality to other components inside the local network. In this way, any device can receive the activity type as a response by making an API request containing either an already generated plot image or the raw data required to generate the plot image. Since we are handling all the main processing in a Web API application, we can save the received data and results in a database, and even send real-time system notifications to other linked subsystems or components based on certain events. For example, email notifications can be sent if the system encounters an activity that is out of the ordinary based on certain logic. A Web API application type component is useful to simplify the system architecture as it is scalable and allows the other subsystems to communicate easily, quickly, and reliably using proven protocols and technologies. Since we are using a stateless design, the lower components that perform the data acquisition do not need to be very powerful from the computing perspective. The only requirement is to be able to generate HTTP requests, in contrast to a real-time system designed around sockets, where communication is achieved via an open bidirectional channel. The lower layer of the acquisition device can gather data, based on window size, and when the data have reached the window size, an API request can be created with the entire window payload. The frequency of the API requests is clearly dependent on the chosen window size and whether we want to overlap data windows or not.
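The acquisition-side behavior described above, gathering readings until a window is full and then emitting one payload, can be sketched as follows. This Python sketch is illustrative and not part of the described system; `WindowBuffer` and `seconds_between_requests` are hypothetical names, and the 20 Hz rate and 40-record window are taken from the dataset and preprocessing descriptions.

```python
from typing import List, Optional, Tuple

Reading = Tuple[float, float, float]  # (x, y, z)


class WindowBuffer:
    """Collects readings and emits a full window every `step` new readings."""

    def __init__(self, window_size: int, step: int) -> None:
        if not 0 < step <= window_size:
            raise ValueError("step must be in 1..window_size")
        self.window_size = window_size
        self.step = step
        self._readings: List[Reading] = []

    def add(self, reading: Reading) -> Optional[List[Reading]]:
        """Add one reading; return a window payload when one is complete."""
        self._readings.append(reading)
        if len(self._readings) < self.window_size:
            return None
        window = list(self._readings)
        # Drop the oldest `step` readings; the rest overlap into the next window.
        del self._readings[:self.step]
        return window


def seconds_between_requests(step: int, sampling_hz: float = 20.0) -> float:
    """Time between consecutive API requests once the buffer is warm."""
    return step / sampling_hz


buffer = WindowBuffer(window_size=40, step=20)
payloads = []
for i in range(100):
    window = buffer.add((float(i), 0.0, 0.0))
    if window is not None:
        payloads.append(window)  # here an API request would be created
```

Under these assumptions, non-overlapping 40-record windows lead to one request every 2 s, while a 20-record step halves that interval to 1 s.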

3.5.1. The Web API Project

The Web API project was built in-house on the .NET platform and is structured as a minimal API project targeting .NET 7. Minimal API was chosen because its low file count and clean architecture suit this kind of implementation. Because .NET is cross-platform, the project can be deployed on multiple platforms, such as Windows and Linux, and supports cloud integration as well. The minimal API file structure features a small number of configuration files and a single code entry point. The already trained network is loaded from the generated machine learning model zip archive using the “FromFile” extension when registering the prediction engine pool.

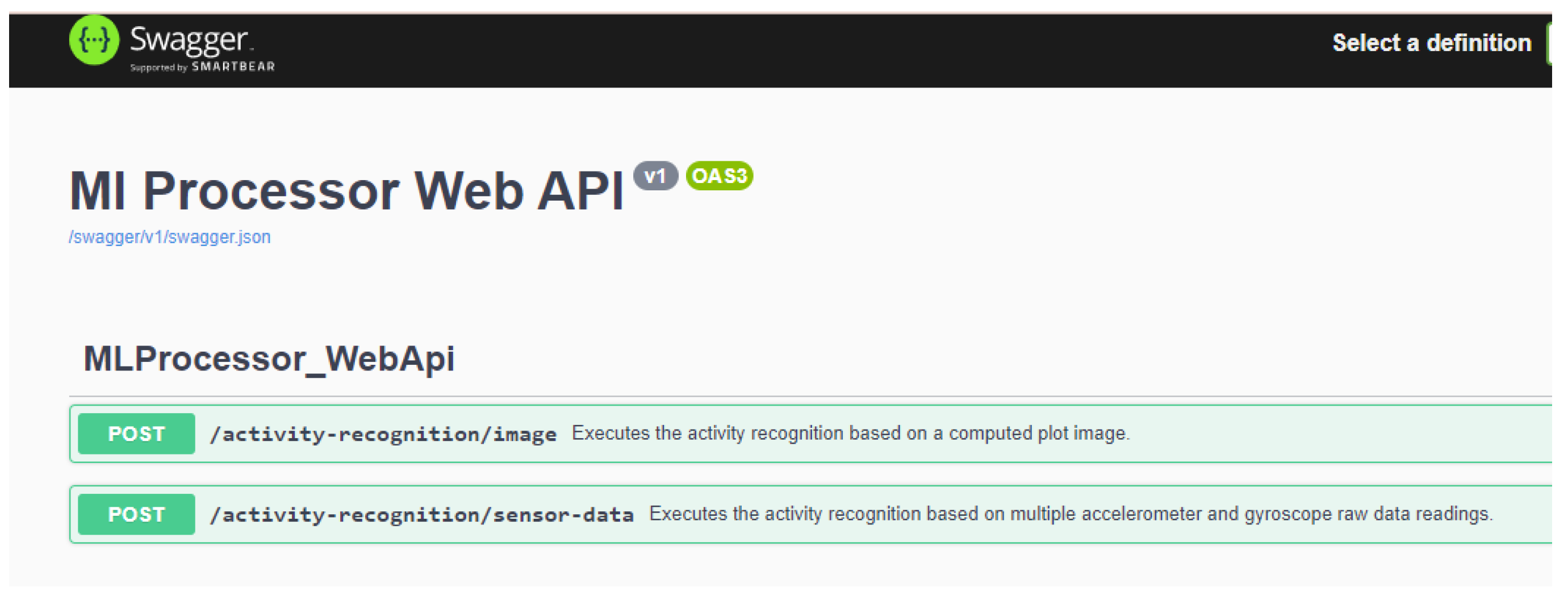

3.5.2. The Web API Endpoints and OpenAPI Specification

The Web API project features two main endpoints used for activity recognition: one detects human activity based on a movement data plot image, and the other detects human activity based on a window of movement data gathered from an accelerometer and gyroscope. The OpenAPI specification is the standard for defining RESTful interfaces, offering a technology-agnostic API description that supports both API development and consumption. Swagger is the tool used to generate and consume the OpenAPI specification in our Web API project. Swagger contains powerful tools to fully use the OpenAPI Specification, as shown in Figure 7, where the Swagger-generated documentation for the created Web API is highlighted.

3.5.3. Real-Time Activity Recognition Process

Since the activity recognition process is exposed via a REST API, any subsystem in the local network can call this Web API to benefit from it. The activity recognition image endpoint detects the activity based on an already generated movement plot image. Its REST request type is POST and the configured endpoint route is “/activity-recognition/image”. The activity recognition sensor data endpoint detects the activity based on a window of raw movement sensor data. Internally, the movement plot image containing sensor data from both the accelerometer and gyroscope is recreated and fed to the machine learning neural network. Its REST request type is POST and the configured endpoint route is “/activity-recognition/sensor-data”.
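A client-side call to the two routes can be sketched as follows. Only the routes and the POST request type are taken from the text; the base address, the JSON field names, and the content types are hypothetical, since the request schema is defined by the Swagger documentation of the deployed project and is not reproduced here.

```python
import json
import urllib.request

BASE_URL = "http://localhost:5000"  # hypothetical address of the local Web API
IMAGE_ROUTE = "/activity-recognition/image"
SENSOR_ROUTE = "/activity-recognition/sensor-data"


def build_sensor_request(accel, gyro, base_url=BASE_URL):
    """Build a POST request carrying one window of raw sensor samples."""
    # Hypothetical payload schema: two lists of [x, y, z] readings.
    payload = {"accelerometer": accel, "gyroscope": gyro}
    return urllib.request.Request(
        base_url + SENSOR_ROUTE,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def build_image_request(image_bytes, base_url=BASE_URL):
    """Build a POST request carrying an already generated plot image."""
    # Assumption: the image is sent as a raw PNG body.
    return urllib.request.Request(
        base_url + IMAGE_ROUTE,
        data=image_bytes,
        headers={"Content-Type": "image/png"},
        method="POST",
    )
```

A request built this way could be sent with `urllib.request.urlopen(request)`; the response body would then contain the recognized activity type in whatever format the deployed API defines.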

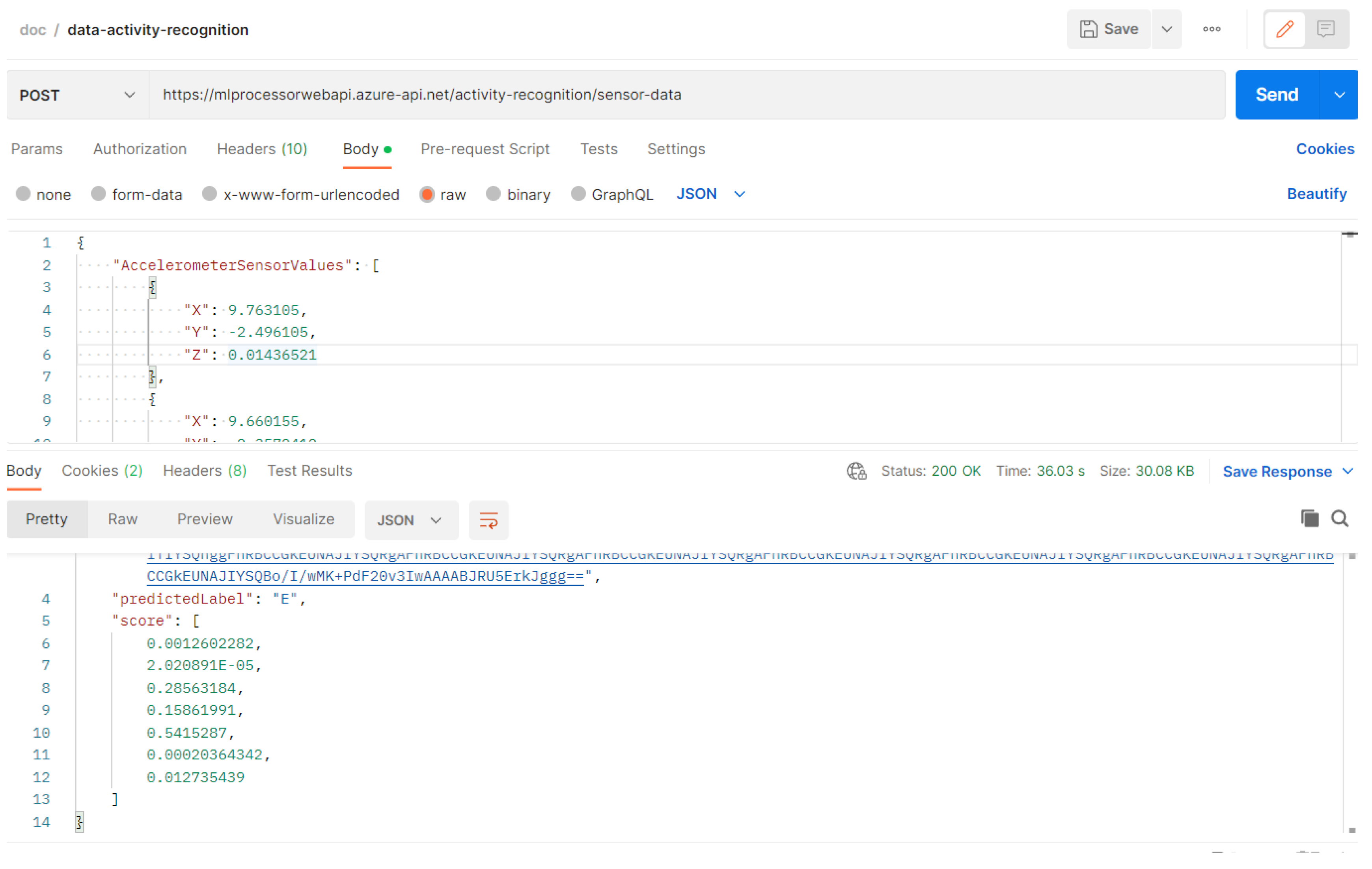

3.6. Real-Time Cloud Human Activity Recognition System

To create a real-time system, Azure cloud functionalities were leveraged to provide a scalable service that is reachable from anywhere. Since the activity recognition system is deployed in the cloud, even a simple internet-enabled device with low processing power can benefit from the activity recognition functionalities, not only devices in the local network. Deploying the Web API application in the cloud requires a valid Azure subscription. The created components are structured hierarchically: the parent is the HarResourceGroup resource group, which holds an API Management service named MLprocessorWebApi that can contain multiple APIs and, in this case, contains only one, named MlProcessorWebApi.

The main Web API application was deployed in the cloud at the address given in [33], and an example Postman request is shown in Figure 8. This request determines the activity type with the unique activity identifier “E”, which corresponds to the standing activity.

The deployed Web API is secured using subscription keys. To consume the published APIs, a subscription key must be included in the HTTP requests that call the protected APIs. If a valid subscription key is not provided in the header, the calls are rejected immediately by the API Management gateway and are not forwarded to the back-end services.
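Attaching the subscription key on the client side can be sketched as follows. The sketch assumes that the API Management instance keeps the default header name, `Ocp-Apim-Subscription-Key`; the URL, the payload, and the key value in the usage note are placeholders, not values from the deployed system.

```python
import json
import urllib.request

# Default header name used by Azure API Management for subscription keys.
# Assumption: the deployed instance has not renamed this header.
SUBSCRIPTION_HEADER = "Ocp-Apim-Subscription-Key"


def protected_headers(subscription_key: str) -> dict:
    """Return the request headers required to call a protected API."""
    if not subscription_key:
        raise ValueError("a subscription key is required for protected APIs")
    return {
        "Content-Type": "application/json",
        SUBSCRIPTION_HEADER: subscription_key,
    }


def build_protected_request(url: str, payload: dict,
                            subscription_key: str) -> urllib.request.Request:
    """Build a POST request that carries the subscription key in its header."""
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers=protected_headers(subscription_key),
        method="POST",
    )
```

For example, `build_protected_request("https://example.invalid/activity-recognition/sensor-data", {"accelerometer": []}, "demo-key")` produces a request that the gateway could authorize. Without the header, API Management answers with HTTP status 401 before the request reaches the back end.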

4. Materials and Methods

The “WISDM Smartphone and Smartwatch Activity and Biometrics Dataset” is freely available and can be downloaded from [12]. The “ScottPlot” library can be found at [34], alongside examples and demos. The corresponding GitHub page for this project is located at [32] and the NuGet package is listed at [35]. The CUDA Toolkit used for GPU neural network training is available at [36]. The NVIDIA CUDA® Deep Neural Network library (cuDNN) is available at [37]. ML.NET Model Builder GPU Support 2022 is available at [38]. ML.NET Model Builder 2022 is available at [39]. The Netron viewer used is freely available at [40].

The source code for the proposed real-time cloud-based human activity recognition system that uses image classification is available in a public Git repository [41]. The repository also contains the Web API project that was deployed and tested in the cloud. It is located in the “realTimeHarSystem” folder and includes the main Web API “MLProcessor WebApi” project and its project dependencies, such as the “MlProcessorService” class library project.

The dataset processor console application that was used to generate the plot images used for training is also available in this repository. Each scenario folder contains the “DataSetProcessor” project with the required updates for that particular scenario. The input data used by the “DataSetProcessor” project is located in the “watch” folder in the root directory and contains the raw smartwatch data from the dataset, with subfolders named “accel” and “gyro” for the accelerometer and gyroscope data. The generated output images are not part of this repository because they are very large and can easily be regenerated with the tools and files provided in this public repository.

5. Results

We used the WISDM dataset to train a real-time human activity recognition system based on a Resnet50 neural network, which achieved its best precision of 94.89% using scatter plot images with overlapping scatter plots. Raw accelerometer and gyroscope data from the WISDM dataset are both used to generate the plot images that constitute the input data for the neural network training process.

For the obtained results, the following activities were used: Walking, Jogging, Stairs, Sitting, Standing, Typing, and Brushing Teeth, for five selected users. Four major factors affect the size and number of generated plot image files:

The number of analyzed activities;

The number of analyzed users;

The window type or window size;

The plot image dimensions.

A reduced dataset was used because of the large size and number of the generated image datasets: the 18 available activities were reduced to 7 and the 30 available users to 5. Reducing the number of analyzed users and activities provided a workable dataset, and decreasing the generated plot image dimensions further decreased the size of the generated plot image dataset.
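How the four factors above interact can be estimated with a short sketch. It assumes one recording per user and activity and one image per data window. The number of samples per recording, the window size, the overlap, and the image dimensions used in the usage note are hypothetical values chosen for illustration, not figures from this work.

```python
def windows_per_recording(n_samples: int, window_size: int,
                          overlap: float = 0.0) -> int:
    """Number of full windows that fit in one recording."""
    step = max(1, round(window_size * (1.0 - overlap)))
    if n_samples < window_size:
        return 0
    return (n_samples - window_size) // step + 1


def estimate_image_dataset(n_activities: int, n_users: int, n_samples: int,
                           window_size: int, overlap: float,
                           width_px: int, height_px: int,
                           bytes_per_pixel: int = 3):
    """Return (image count, uncompressed size in bytes) of the generated set."""
    # Assumption: one recording per (user, activity) pair, one image per window.
    per_recording = windows_per_recording(n_samples, window_size, overlap)
    images = n_activities * n_users * per_recording
    # Uncompressed size is an upper bound; stored image files are smaller.
    return images, images * width_px * height_px * bytes_per_pixel
```

With a hypothetical 3600 samples per recording, a 200-sample window, and 50% overlap, the reduced selection of 7 activities and 5 users yields 1225 images, while all 18 activities and 30 users would yield 18,900 images, roughly 15 times more.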

Table 4 summarizes the performance of the obtained results. The scatter plot images with overlapping scatter plots obtained the best result, with a maximum precision of 94.89%. Another method that obtained good results is using population images with the “BarMeanStDev” option.

The algorithm obtained a surprising result time-wise, as Figure 9 shows. Although the method that uses images generated from the mean and standard deviation is not the best from an activity recognition standpoint, it trained approximately 4.7 times faster than the overlapping scatter plot method, making it one of the fastest methods to train. The activity recognition difference of 0.2% is negligible compared with the gain in training time.

The following sections will detail the obtained results for each of the four analyzed scenarios.

5.1. Scatter-Plot-Images-Based Recognition

For the “Scatter Plot” type scenario, two main variants were tested, overlapping and non-overlapping scatter plots. For overlapping scatter plots, we obtained a micro accuracy of 0.9489 using a training session with a duration of 20,975.7900 s.

For non-overlapping scatter plots, we obtained a micro accuracy of 0.9383 using a training session with a duration of 5750.1130 s. The micro accuracy of the non-overlapping scatter plots is lower by 0.0106 than that of the overlapping scatter plots. The training time is significantly lower, with a difference of 15,225.677 s compared with overlapping scatter plots.

We obtained better human activity recognition precision using overlapping scatter plots compared with non-overlapping scatter plots but the training time was significantly higher.
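The micro accuracy reported in this section is, as defined for ML.NET multiclass metrics, the fraction of all instances that are classified correctly, whereas macro accuracy averages the per-class accuracy so that every class weighs equally. The sketch below illustrates both definitions on a confusion matrix. The function names are hypothetical and the matrix in the usage note is invented for illustration; it does not contain results from this work.

```python
from typing import Sequence


def micro_accuracy(confusion: Sequence[Sequence[int]]) -> float:
    """Correct predictions over all instances (rows = actual, columns = predicted)."""
    total = sum(sum(row) for row in confusion)
    if total == 0:
        raise ValueError("confusion matrix is empty")
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    return correct / total


def macro_accuracy(confusion: Sequence[Sequence[int]]) -> float:
    """Average of per-class accuracy; classes without instances are skipped."""
    per_class = [
        row[i] / sum(row) for i, row in enumerate(confusion) if sum(row) > 0
    ]
    if not per_class:
        raise ValueError("confusion matrix is empty")
    return sum(per_class) / len(per_class)
```

For an illustrative two-class matrix `[[8, 2], [1, 89]]`, the micro accuracy is 0.97, while the macro accuracy is about 0.894, because the small class is recognized less reliably. Micro accuracy is therefore dominated by the classes with the most instances.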

5.2. Population-Based Images Recognition

For the “Population” type scenario, only the overlapping window type was used, based on the previously tested scenarios. The two main variants that were tested differ in how the population plot was generated, using either the BarMeanStDev or the MeanAndStdevResult generation option.

For the “BarMeanStDev” population plot generation option, we obtained a micro accuracy of 0.9469 using a training session with a duration of 4437.9640 s.

For the “MeanAndStdevResult” population plot generation option, we obtained a micro accuracy of 0.9249 using a training session with a duration of 4050.3480 s. The micro accuracy of the “MeanAndStdevResult” option is lower by 0.022 than that of the “BarMeanStDev” option. The training time is somewhat lower, with a difference of 387.616 s compared with the “BarMeanStDev” population plot generation option.

Scatter plot images with overlapping scatter plots obtained a slightly better micro accuracy (0.9489) than population images with the “BarMeanStDev” option (0.9469), a very small difference of 0.002.
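The trade-off between accuracy and training time across the four scenarios can be recomputed from the values reported in Sections 5.1 and 5.2. The sketch below only restates that arithmetic; the dictionary keys and the function name are hypothetical labels.

```python
# (micro accuracy, training time in seconds) as reported in Sections 5.1 and 5.2.
RESULTS = {
    "scatter_overlapping": (0.9489, 20975.7900),
    "scatter_non_overlapping": (0.9383, 5750.1130),
    "population_BarMeanStDev": (0.9469, 4437.9640),
    "population_MeanAndStdevResult": (0.9249, 4050.3480),
}


def compare(baseline: str, other: str):
    """Return (accuracy lost, training speed-up) of `other` against `baseline`."""
    base_acc, base_time = RESULTS[baseline]
    other_acc, other_time = RESULTS[other]
    return base_acc - other_acc, base_time / other_time


if __name__ == "__main__":
    for name in RESULTS:
        if name != "scatter_overlapping":
            loss, speedup = compare("scatter_overlapping", name)
            print(f"{name}: accuracy -{loss:.4f}, training {speedup:.2f}x faster")
```

Against the overlapping scatter plots, the “BarMeanStDev” option gives up 0.002 of micro accuracy for a training run that is about 4.7 times shorter, while the non-overlapping scatter plots give up 0.0106 for a run that is about 3.6 times shorter.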

5.3. Comparison of Results with Other Studies

As shown in Table 5, the researched methods obtained satisfactory results. It can be seen that studies that represented the data as images obtained results as good as those that used raw data windows.

It can be observed that we obtained a better result using the proposed model, with an approximately 5% recognition rate gain compared to the implementation using a hybrid HARSI-AlexNet model (Criteria number 2).

A better result than that of the proposed model was obtained using a model based on ResNet, in the form of a HARSI-ResNet34 hybrid model. This shows that some hybrid models obtain better results than the classic ones, which will help us in the development of a new algorithm model. Comparing the base models of the hybrid variants, it can be seen that those based on ResNet obtained better results than those based on AlexNet or LSTM. The fact that the HARSI-ResNet34 variant model [22] used a data plot obtained only from the accelerometer raw data suggests that the model proposed by us, which uses the data from the accelerometer and gyroscope, could be enhanced in a future implementation using a new hybrid model.

6. Conclusions

Our main objective was to implement a real-time cloud-based human activity recognition system that uses image classification at its core. The system should be able to expose HAR capabilities from the cloud or locally via REST API calls. To achieve this, a .NET Core C#-based Web API was implemented to expose the activity recognition functionality. The main HAR functionality was achieved using a deep neural network based on a pre-trained TensorFlow Resnet50 DNN model and trained for HAR using plot images. The created system was trained using data from the WISDM dataset, which is open access. The training data were generated using a separate .NET Core C# application that generated the plot images based on raw accelerometer and gyroscope data. Since the proposed system can also be deployed to the cloud, its REST implementation allows it to be easily expanded to support multiple sensor modules and users at the same time.

We obtained a human activity recognition rate of 94.89% using the following subset of activities: Walking, Jogging, Stairs, Sitting, Standing, Typing, and Brushing Teeth. This demonstrates that plot image recognition combined with REST requests can be a good architecture for a real-time activity recognition system. We improved the previously obtained human activity recognition accuracy of 93.10%, as shown in [31], to 94.89%, an increase of 1.79 percentage points.

Since only raw accelerometer and gyroscope data are used by the proposed HAR platform, we should be able to expand the usable sensor modules to any sensor module that has an accelerometer, gyroscope, and network capabilities. This creates a kind of hardware abstraction layer, as this can be simply implemented with a relatively low number of physical components. This is a very simple option to allow basic network-enabled sensor modules to gain ML.NET deep neural network capabilities. The custom hardware implementation of wrist-worn sensor modules is also a viable option to be integrated into the proposed system, as we are using a web API for the final system integration.

To further improve the accuracy of the implemented system, additional plot image types can be analyzed to see whether they provide a performance boost. Other types of neural networks can also be analyzed, and other custom TensorFlow models may provide a better implementation to further expand the system. The system could also be expanded to use more data from the initial dataset by increasing the number of analyzed activities and users. The training time could be lowered by using a more powerful training machine or other pre-trained deep neural networks.

Integration with other HAR systems, such as an environment-based HAR system, may also increase the system’s accuracy. This would increase the complexity of the system but would provide additional types of data regarding the user’s activity.