AFSFusion: An Adjacent Feature Shuffle Combination Network for Infrared and Visible Image Fusion

Abstract

1. Introduction

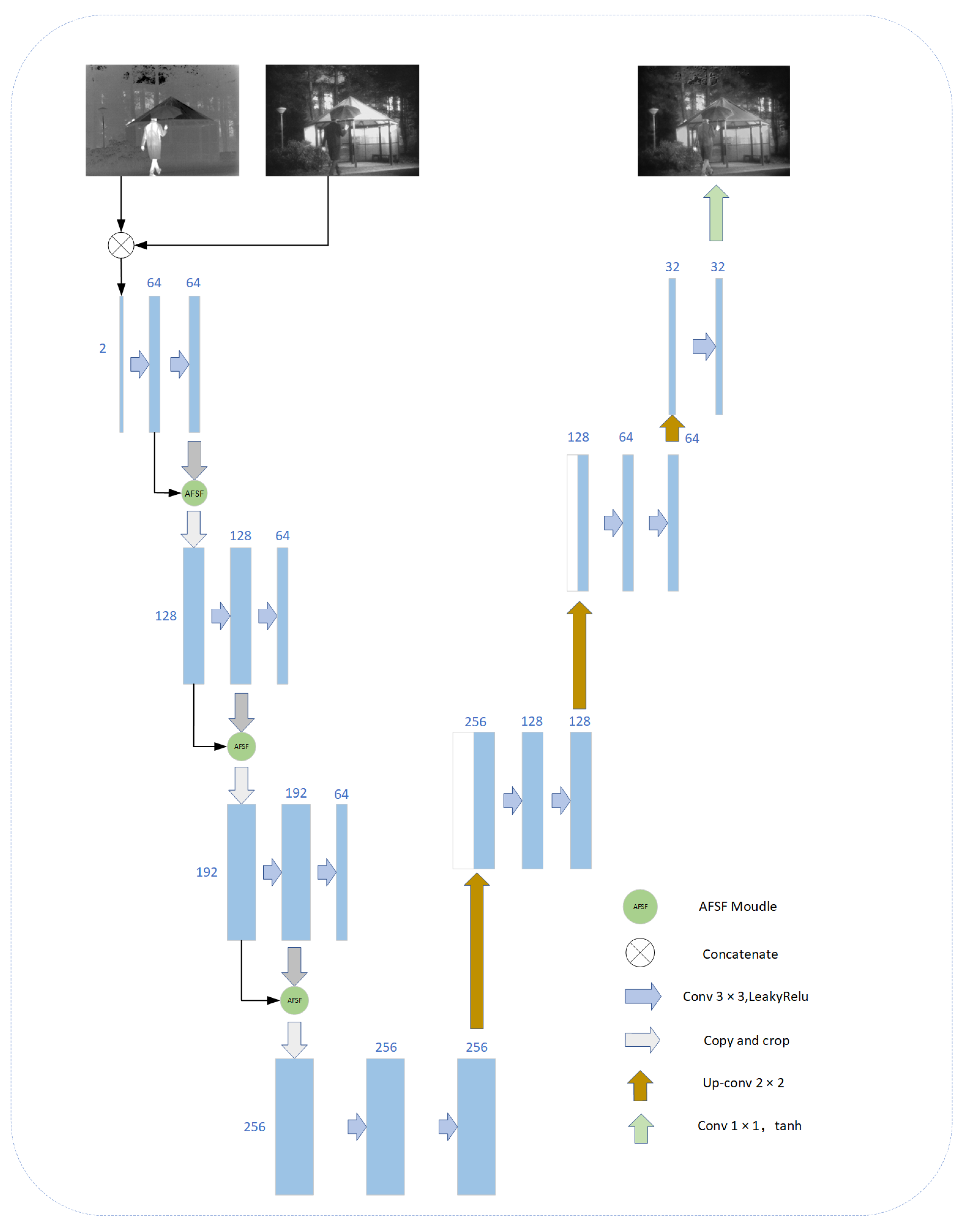

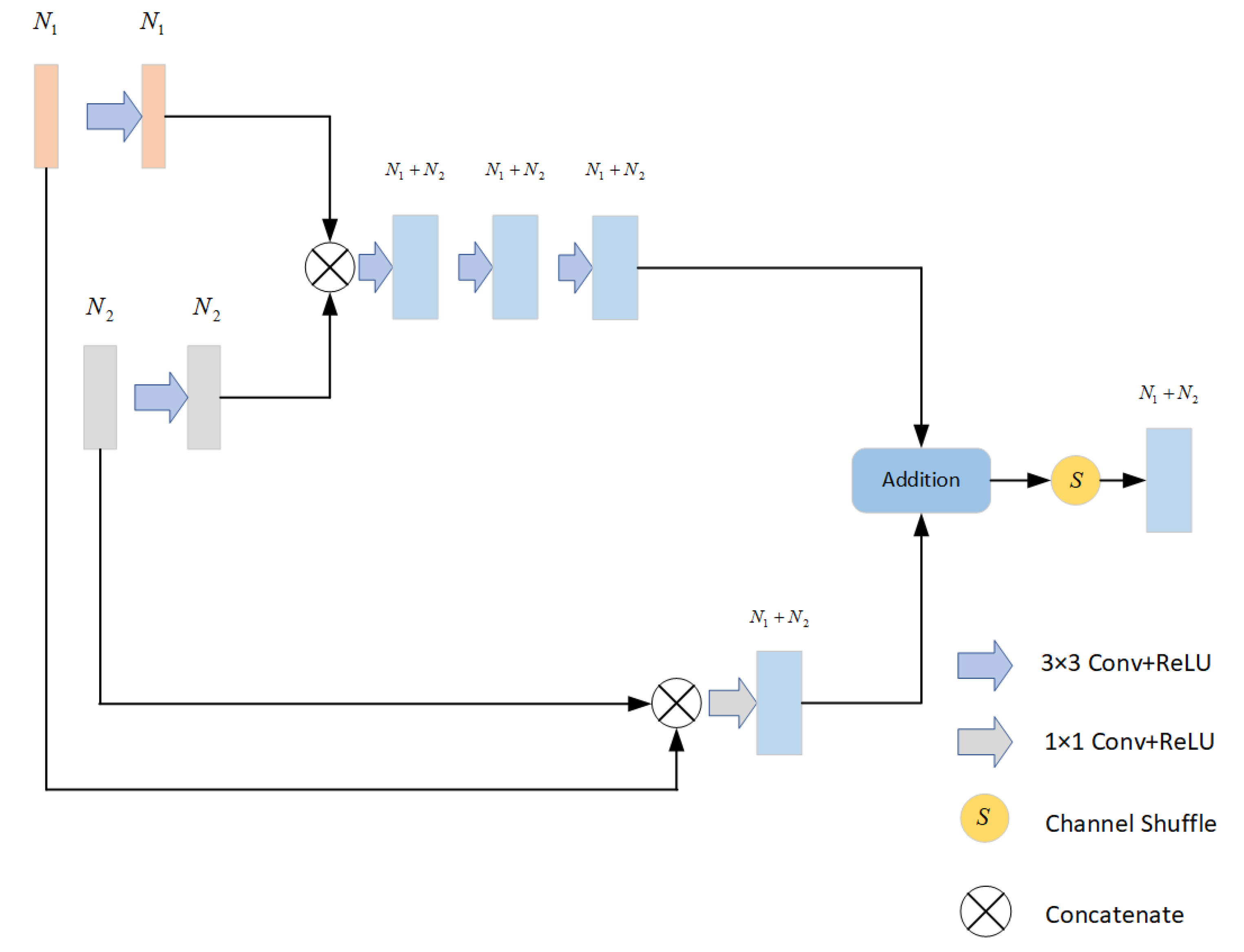

- Unlike UNet [21], which transforms feature resolution, our AFSFusion network is designed specifically to optimize feature processing for image fusion. We build a hybrid fusion network on an improved UNet-like architecture that scales the number of feature channels up and then down rather than changing the resolution. The first half of the network uses the adjacent feature shuffle-fusion (AFSF) module, which repeatedly fuses the features of adjacent convolutional layers using shuffle operations so that the input feature information interacts fully. Consequently, information loss during feature extraction and fusion is significantly reduced, and the complementarity of the feature information is enhanced. By integrating complementary features through the AFSF module, the network balances feature extraction, fusion, and modulation in a collaborative way that is not typically found in traditional methods. These changes allow the proposed network to achieve excellent performance in both subjective and objective evaluations.

- The total loss function is constructed from three loss functions: mean square error (MSE), structural similarity (SSIM), and total variation (TV). So that the total loss plays a significant role in network training, an adaptive weight adjustment (AWA) strategy automatically sets the weights of the different loss functions according to the feature responses of the IR and visible images, which depend on the image content (the magnitudes of the feature responses are extracted and calculated using VGG16 [22]). As a result, the fused images produced by the network align as closely as possible with the visual perceptual characteristics of the human eye.
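To make the loss construction above concrete, the following is a minimal NumPy sketch, not the authors' implementation. Several parts are assumptions made only for illustration: the softmax rule in `adaptive_weights`, the use of two scalar response magnitudes `resp_ir` and `resp_vis` (taken here as already computed, for example from VGG16 feature maps), the way the weights are applied to the IR and visible terms, the single-window `global_ssim`, and the unweighted sum in `total_loss`. All function names are hypothetical.

```python
import numpy as np


def mse_loss(fused, ref):
    """Mean square error between two images of equal shape."""
    return float(np.mean((fused - ref) ** 2))


def global_ssim(x, y, data_range=1.0):
    """SSIM computed over the whole image as one window.

    Simplification: practical SSIM averages this statistic over local windows.
    """
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    cov = np.mean((x - mx) * (y - my))
    num = (2 * mx * my + c1) * (2 * cov + c2)
    den = (mx ** 2 + my ** 2 + c1) * (x.var() + y.var() + c2)
    return float(num / den)


def tv_loss(img):
    """Anisotropic total variation: mean absolute difference of neighbouring pixels."""
    dh = np.abs(np.diff(img, axis=0)).mean()
    dw = np.abs(np.diff(img, axis=1)).mean()
    return float(dh + dw)


def adaptive_weights(resp_ir, resp_vis):
    """ASSUMPTION: softmax over the two feature-response magnitudes."""
    r = np.array([resp_ir, resp_vis], dtype=np.float64)
    e = np.exp(r - r.max())  # subtract the max for numerical stability
    return e / e.sum()


def total_loss(fused, ir, vis, resp_ir, resp_vis):
    """ASSUMPTION: content-dependent weights balance the IR and visible terms."""
    w_ir, w_vis = adaptive_weights(resp_ir, resp_vis)
    l_mse = w_ir * mse_loss(fused, ir) + w_vis * mse_loss(fused, vis)
    l_ssim = (w_ir * (1.0 - global_ssim(fused, ir))
              + w_vis * (1.0 - global_ssim(fused, vis)))
    l_tv = tv_loss(fused)  # ASSUMPTION: TV is applied to the fused image itself
    return float(l_mse + l_ssim + l_tv)
```

In this sketch, a source image with the stronger feature response receives the larger weight, so the fused image is pulled toward whichever modality carries more content in a given pair.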

2. Related Work

UNet

3. AFSFusion Network Model

3.1. Basic Idea

3.2. Backbone Network

1. The inputs. The input layer is modified to a dual-channel format so that the network can receive and process the IR and visible images simultaneously.

2. The elimination of the skip connection. Unlike the traditional UNet, our network expands and contracts the number of channels rather than the resolution. Skip connections transfer information between the encoder and decoder, which helps to address information loss and inaccurate semantic information during segmentation. Because our network has a relatively high input image resolution and does not use downsampling, feature information is not lost during the fusion process, so skip connections are unnecessary.

3. The addition of the AFSF module, which enhances feature interaction and information transfer. In the first half of UNet, the feature channels are passed sequentially from front to back, which limits their ability to interact. The AFSF module proposed in this study therefore fuses the feature information from the previous convolutional layer with the output of the current convolutional layer and passes the result to the next convolutional layer, with a shuffle operation added to facilitate feature interaction. The details of the module are presented in Section 3.3.

4. The elimination of the max pooling layer. Max pooling is typically employed to reduce dimensionality, compress features, lower network complexity, and decrease the number of required parameters. However, because the AFSFusion network relies primarily on the AFSF module to enhance feature channels, using the max pooling layer can result in ambiguity regarding the number of channels. In addition, the network is relatively shallow while its number of channels is relatively large, so the max pooling layer is not used to reduce computational overhead.

5. The replacement of the ReLU layer. Because ReLU outputs zero for negative inputs, it is replaced with a Leaky ReLU layer, which addresses the vanishing gradient problem for negative values.
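The design points above (no resolution change, adjacent-layer fusion with a shuffle, Leaky ReLU) can be illustrated with a small NumPy sketch. It is hedged throughout: the ShuffleNet-style reshape-transpose-reshape shuffle, the use of channel concatenation to combine adjacent features, the 1x1 convolution, and all function names are assumptions made for illustration, not the AFSF module as defined in Section 3.3.

```python
import numpy as np


def leaky_relu(x, negative_slope=0.01):
    """Leaky ReLU: keeps a small slope for negative inputs instead of zeroing them."""
    return np.where(x >= 0, x, negative_slope * x)


def channel_shuffle(x, groups):
    """Interleave channels across `groups`.

    ASSUMPTION: ShuffleNet-style shuffle (reshape, transpose, reshape).
    x has shape (C, H, W), and C must be divisible by `groups`.
    """
    c, h, w = x.shape
    if c % groups != 0:
        raise ValueError("channel count must be divisible by groups")
    x = x.reshape(groups, c // groups, h, w)
    return x.transpose(1, 0, 2, 3).reshape(c, h, w)


def pointwise_conv(x, weight):
    """1x1 convolution: mixes channels at each pixel; H and W are unchanged.

    weight has shape (C_out, C_in).
    """
    return np.einsum("oc,chw->ohw", weight, x)


def adjacent_shuffle_fuse(prev_feat, curr_feat, weight):
    """Hypothetical AFSF-style step for two adjacent layers.

    ASSUMPTION: features are combined by channel concatenation, shuffled so
    that channels from both layers interleave, mixed by a 1x1 convolution,
    and activated with Leaky ReLU.
    """
    stacked = np.concatenate([prev_feat, curr_feat], axis=0)
    shuffled = channel_shuffle(stacked, groups=2)
    return leaky_relu(pointwise_conv(shuffled, weight))


# Usage: two 2-channel feature maps become 6 output channels at the same resolution.
rng = np.random.default_rng(0)
prev_feat = rng.standard_normal((2, 8, 8))
curr_feat = rng.standard_normal((2, 8, 8))
weight = rng.standard_normal((6, 4))
fused_feat = adjacent_shuffle_fuse(prev_feat, curr_feat, weight)
```

Because only the channel dimension changes, the spatial size of `fused_feat` matches that of the inputs, which is consistent with the network's choice to drop pooling and skip connections.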

3.3. AFSF Module

3.4. Loss Function

3.4.1. Selection of the Loss Function

3.4.2. AWA Strategy

4. Experimental Results and Analysis

4.1. Experimental Setup

4.2. Training Details

4.3. Ablation Studies

4.3.1. Ablation Study Involving the AWA Strategy

4.3.2. Ablation Study Involving Loss Items

4.3.3. Ablation Study Involving AFSF Module

4.4. Analysis of Results

4.4.1. Objective Evaluation

4.4.2. Subjective Evaluation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Son, D.M.; Kwon, H.J.; Lee, S.H. Visible and near-infrared image synthesis using PCA fusion of multiscale layers. Appl. Sci. 2020, 10, 8702.

- Zhang, Z.; Cao, Y.; Ding, M.; Tao, J. Fusion of infrared and visible images using multilayer convolution sparse representation. J. Harbin Inst. Technol. 2021, 53, 51–59.

- Guan, Z.; Deng, Y.L.; Nie, R.C. Hyperspectral and panchromatic image fusion based on spectral reconstruction-constrained non-negative matrix factorization. Comput. Sci. 2021, 48, 153–159.

- Jin, X.; Jiang, Q.; Yao, S.; Zhou, D.; Nie, R.; Lee, S.J.; He, K. Infrared and visual image fusion method based on discrete cosine transform and local spatial frequency in discrete stationary wavelet transform domain. Infrared Phys. Technol. 2018, 88, 1–12.

- Ren, Y.F.; Zhang, J.M. Fusion of infrared and visible images based on NSST multi-scale entropy. J. Ordnance Equip. Eng. 2022, 43, 278–285.

- Aghamohammadi, A.; Ranjbarzadeh, R.; Naiemi, F.; Mogharrebi, M.; Dorosti, S.; Bendechache, M. TPCNN: Two-path convolutional neural network for tumor and liver segmentation in CT images using a novel encoding approach. Expert Syst. Appl. 2021, 183, 115406.

- Ma, J.; Liang, P.; Yu, W.; Chen, C.; Guo, X.; Wu, J.; Jiang, J. Infrared and visible image fusion via detail preserving adversarial learning. Inf. Fusion 2020, 54, 85–98.

- Poria, S.; Cambria, E.; Gelbukh, A. Deep convolutional neural network textual features and multiple kernel learning for utterance-level multimodal sentiment analysis. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015; pp. 2539–2544.

- Martínez, H.P.; Yannakakis, G.N. Deep multimodal fusion: Combining discrete events and continuous signals. In Proceedings of the 16th International Conference on Multimodal Interaction, Istanbul, Turkey, 12–16 November 2014; pp. 34–41.

- Li, H.; Wu, X.J.; Kittler, J. RFN-Nest: An end-to-end residual fusion network for infrared and visible images. Inf. Fusion 2021, 73, 72–86.

- Li, H.; Wu, X.J. DenseFuse: A fusion approach to infrared and visible images. IEEE Trans. Image Process. 2018, 28, 2614–2623.

- Yi, S.; Jiang, G.; Liu, X.; Li, J.; Chen, L. TCPMFNet: An infrared and visible image fusion network with composite auto encoder and transformer–convolutional parallel mixed fusion strategy. Infrared Phys. Technol. 2022, 127, 104405.

- Jian, L.; Yang, X.; Liu, Z.; Jeon, G.; Gao, M.; Chisholm, D. SEDRFuse: A symmetric encoder–decoder with residual block network for infrared and visible image fusion. IEEE Trans. Instrum. Meas. 2020, 70, 1–15.

- Tang, L.; Yuan, J.; Zhang, H.; Jiang, X.; Ma, J. PIAFusion: A progressive infrared and visible image fusion network based on illumination aware. Inf. Fusion 2022, 83–84, 79–92.

- Li, J.; Huo, H.; Li, C.; Wang, R.; Feng, Q. AttentionFGAN: Infrared and visible image fusion using attention-based generative adversarial networks. IEEE Trans. Multimed. 2020, 23, 1383–1396.

- Simonyan, K.; Zisserman, A. Two-stream convolutional networks for action recognition in videos. In Proceedings of the 27th International Conference on Neural Information Processing Systems (NIPS’14), Montreal, QC, Canada, 8–13 December 2014.

- Wu, D.; Pigou, L.; Kindermans, P.J.; Le, N.D.H.; Shao, L.; Dambre, J.; Odobez, J.M. Deep dynamic neural networks for multimodal gesture segmentation and recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 1583–1597.

- Kahou, S.E.; Pal, C.; Bouthillier, X.; Froumenty, P.; Gülçehre, Ç.; Memisevic, R.; Vincent, P.; Courville, A.; Bengio, Y.; Ferrari, R.C.; et al. Combining modality specific deep neural networks for emotion recognition in video. In Proceedings of the 15th ACM on International Conference on Multimodal Interaction, Sydney, Australia, 9–13 December 2013; pp. 543–550.

- Xu, H.; Wang, X.; Ma, J. DRF: Disentangled representation for visible and infrared image fusion. IEEE Trans. Instrum. Meas. 2021, 70, 1–13.

- Ramachandram, D.; Taylor, G.W. Deep multimodal learning: A survey on recent advances and trends. IEEE Signal Process. Mag. 2017, 34, 96–108.

- Saadi, S.B.; Ranjbarzadeh, R.; Amirabadi, A.; Ghoushchi, S.J.; Kazemi, O.; Azadikhah, S.; Bendechache, M. Osteolysis: A literature review of basic science and potential computer-based image processing detection methods. Comput. Intell. Neurosci. 2021, 2021.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Zhang, K.; Zuo, W.; Chen, Y.; Meng, D.; Zhang, L. Beyond a Gaussian denoiser: Residual learning of deep CNN for image denoising. IEEE Trans. Image Process. 2017, 26, 3142–3155.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Ma, J.; Chen, C.; Li, C.; Huang, J. Infrared and visible image fusion via gradient transfer and total variation minimization. Inf. Fusion 2016, 31, 100–109.

- Zhang, H.; Ma, J. SDNet: A versatile squeeze-and-decomposition network for real-time image fusion. Int. J. Comput. Vis. 2021, 129, 2761–2785.

- Shreyamsha Kumar, B. Multifocus and multispectral image fusion based on pixel significance using discrete cosine harmonic wavelet transform. Signal Image Video Process. 2013, 7, 1125–1143.

- Zhang, H.; Xu, H.; Xiao, Y.; Guo, X.; Ma, J. Rethinking the image fusion: A fast unified image fusion network based on proportional maintenance of gradient and intensity. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 12797–12804.

- Cheng, C.; Xu, T.; Wu, X.J. MUFusion: A general unsupervised image fusion network based on memory unit. Inf. Fusion 2023, 92, 80–92.

- Xu, H.; Ma, J.; Jiang, J.; Guo, X.; Ling, H. U2Fusion: A unified unsupervised image fusion network. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 502–518.

- Ma, J.; Tang, L.; Fan, F.; Huang, J.; Mei, X.; Ma, Y. SwinFusion: Cross-domain long-range learning for general image fusion via Swin Transformer. IEEE/CAA J. Autom. Sin. 2022, 9, 1200–1217.

- Toet, A. TNO Image Fusion Dataset. 2022. Available online: https://figshare.com/articles/dataset/TNO_Image_Fusion_Dataset/1008029 (accessed on 23 April 2023).

- Kristan, M.; Matas, J.; Leonardis, A.; Vojir, T.; Pflugfelder, R.; Fernandez, G.; Nebehay, G.; Porikli, F.; Čehovin, L. A Novel Performance Evaluation Methodology for Single-Target Trackers. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 2137–2155.

- Roberts, J.W.; Van Aardt, J.A.; Ahmed, F.B. Assessment of image fusion procedures using entropy, image quality, and multispectral classification. J. Appl. Remote Sens. 2008, 2, 023522.

- Rao, Y.J. In-fibre Bragg grating sensors. Meas. Sci. Technol. 1997, 8, 355.

- Qu, G.; Zhang, D.; Yan, P. Information measure for performance of image fusion. Electron. Lett. 2002, 38, 1.

- Ma, K.; Zeng, K.; Wang, Z. Perceptual quality assessment for multi-exposure image fusion. IEEE Trans. Image Process. 2015, 24, 3345–3356.

- Sheikh, H.R.; Bovik, A.C. Image information and visual quality. IEEE Trans. Image Process. 2006, 15, 430–444.

- Gu, K.; Lin, W.; Zhai, G.; Yang, X.; Zhang, W.; Chen, C.W. No-reference quality metric of contrast-distorted images based on information maximization. IEEE Trans. Cybern. 2016, 47, 4559–4565.

- Zamir, S.W.; Arora, A.; Khan, S.; Hayat, M.; Khan, F.S.; Yang, M.H. Restormer: Efficient transformer for high-resolution image restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5728–5739.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

| AWA Strategy | En | SD | MI | MS-SSIM | VIF | NIQMC |
|---|---|---|---|---|---|---|
| N | 6.8756 | 81.0549 | 13.7512 | 0.9286 | 0.7917 | 4.5564 |
| Y | 7.1589 | 95.9510 | 14.3179 | 0.9053 | 0.8786 | 4.8369 |

| Selection | En | SD | MI | MS-SSIM | VIF | NIQMC |
|---|---|---|---|---|---|---|
| SSIM | 6.7674 | 75.7708 | 13.5347 | 0.8763 | 0.8093 | 4.4303 |
| MSE | 6.8755 | 75.8543 | 13.7509 | 0.8893 | 0.7812 | 4.4854 |
| TV | 6.5573 | 59.3614 | 13.1146 | 0.8997 | 0.6567 | 3.9791 |
| SSIM + MSE | 6.9162 | 81.9746 | 13.8323 | 0.9121 | 0.8140 | 4.5599 |
| SSIM + TV | 6.9104 | 79.5288 | 13.8207 | 0.9052 | 0.7800 | 4.6015 |
| MSE + TV | 6.9942 | 83.7423 | 13.9884 | 0.9236 | 0.8143 | 4.6324 |
| SSIM + MSE + TV | 7.0708 | 90.3205 | 14.1415 | 0.9004 | 0.8784 | 4.7150 |

| and Values | En | SD | MI | MS-SSIM | VIF | NIQMC |
|---|---|---|---|---|---|---|
| (0.5, 0.5) | 6.8913 | 78.2546 | 13.7825 | 0.9299 | 0.8018 | 4.4835 |
| (0.5, 1) | 6.8717 | 75.9035 | 13.7433 | 0.9043 | 0.7970 | 4.4580 |
| (0.5, 1.5) | 6.8782 | 76.0264 | 13.7563 | 0.9196 | 0.7929 | 4.4458 |
| (1, 0.5) | 6.7920 | 71.9125 | 13.5841 | 0.8869 | 0.7875 | 4.3727 |
| (1, 1) | 7.0708 | 90.3205 | 14.1415 | 0.9004 | 0.8784 | 4.7150 |
| (1, 1.5) | 6.9237 | 80.4903 | 13.8475 | 0.9292 | 0.8150 | 4.5627 |
| (1.5, 0.5) | 7.0926 | 93.318 | 14.1852 | 0.9258 | 0.8492 | 4.8003 |
| (1.5, 1) | 7.1589 | 95.9510 | 14.3179 | 0.9053 | 0.8786 | 4.8369 |
| (1.5, 1.5) | 6.8789 | 76.6077 | 13.7577 | 0.9122 | 0.8157 | 4.4652 |

| Methods | En | SD | MI | MS-SSIM | VIF | NIQMC |
|---|---|---|---|---|---|---|
| w/o-AFSF | 6.8926 | 81.0629 | 13.7852 | 0.9320 | 0.7992 | 4.5464 |
| w/o-shuffle | 6.8945 | 83.8112 | 13.7890 | 0.9248 | 0.7847 | 4.5976 |
| AFSFusion | 7.1589 | 95.9510 | 14.3179 | 0.9053 | 0.8786 | 4.8369 |

| Models | SwinFusion | DenseFuse | PMGI | U2Fusion | MUFusion | SDNet | GTF | AFSFusion |
|---|---|---|---|---|---|---|---|---|
| En | 6.83 | 6.38 | 6.97 | 6.90 | 6.71 | 6.57 | 6.72 | 7.16 |
| SD | 93.03 | 59.79 | 77.70 | 76.91 | 71.13 | 62.96 | 76.74 | 95.95 |
| VIF | 0.90 | 0.69 | 0.84 | 0.79 | 0.73 | 0.73 | 0.63 | 0.88 |
| MI | 13.66 | 12.76 | 13.94 | 13.79 | 13.43 | 13.16 | 13.44 | 14.32 |
| MS-SSIM | 0.90 | 0.88 | 0.88 | 0.92 | 0.90 | 0.86 | 0.82 | 0.91 |
| NIQMC | 4.52 | 3.77 | 4.59 | 4.48 | 4.23 | 4.07 | 4.29 | 4.84 |

| Models | SwinFusion | DenseFuse | PMGI | U2Fusion | MUFusion | SDNet | GTF | AFSFusion |
|---|---|---|---|---|---|---|---|---|
| En | 6.83 | 6.38 | 6.96 | 6.96 | 6.68 | 6.71 | 6.77 | 7.11 |
| SD | 89.19 | 55.32 | 76.69 | 76.78 | 65.47 | 70.79 | 83.04 | 95.08 |
| VIF | 0.92 | 0.68 | 0.85 | 0.80 | 0.72 | 0.75 | 0.62 | 0.89 |
| MI | 13.66 | 12.75 | 13.92 | 13.91 | 13.37 | 13.42 | 13.53 | 14.22 |
| MS-SSIM | 0.90 | 0.88 | 0.89 | 0.93 | 0.88 | 0.88 | 0.82 | 0.89 |
| NIQMC | 4.51 | 3.75 | 4.58 | 4.55 | 4.18 | 4.23 | 4.37 | 4.80 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hu, Y.; Xu, S.; Cheng, X.; Zhou, C.; Xiong, M. AFSFusion: An Adjacent Feature Shuffle Combination Network for Infrared and Visible Image Fusion. Appl. Sci. 2023, 13, 5640. https://doi.org/10.3390/app13095640
