Abstract

The advancement of the fields of Computer Vision (CV) and Artificial Neural Networks (ANNs) has enabled the development of effective automatic systems for analyzing human behavior. Gestures, which people frequently use to communicate information non-verbally, can be recognized by studying hand movements. The first contribution of this research is a collected dataset, taken from open-source videos of relevant subjects, that contains actions depicting confidence levels. The dataset contains high-quality frames with minimal bias and low noise. Second, we have chosen the domain of confidence determination in social settings such as interviews, discussions, and criminal investigations. Third, the proposed model combines two high-performing models, i.e., a CNN (GoogLeNet) and an LSTM. GoogLeNet is a state-of-the-art architecture for hand detection and gesture recognition, and the LSTM prevents the loss of information by retaining temporal data. The combination of the two outperformed the compared methods during training and testing. This study presents a method to recognize different categories of self-efficacy by performing multi-class classification of hand movements using visual data processing and feature extraction. The proposed architecture pre-processes sequences of images collected from different scenarios involving humans and extracts quality frames. These frames are then processed to extract and analyze features of body joints and hand position, which are classified into four efficacy-related classes, i.e., confidence, cooperation, confusion, and uncomfortable. Features are extracted and classified using a framework that combines customized Convolutional Neural Network (CNN) layers with Long Short-Term Memory (LSTM).
The study achieves 90.48% accuracy, demonstrating effective recognition of human body gestures through deep learning approaches.

1. Introduction

Human-Computer Interaction (HCI) improves the relationship between humans and machines by enabling the recognition of dynamic human gestures to extract useful insights. Gesture recognition and human-computer interaction are becoming popular research topics, with researchers focusing on recognizing and analyzing the appearance features of gestures [1]. Hand gestures are important in HCI because they are a natural mode of communication and can also be used to control devices [2,3]. Approaches to capturing hand gestures for interaction with surrounding objects or other people are commonly categorized as (a) glove-based, using integrated sensors, and (b) vision-based, detecting and recognizing hand movements to classify gestures. Human gesture recognition has applications in many areas, including smart surveillance, vision-based classifiers, sign language, virtual reality, robotics, and entertainment [4].

Continuous movements of the human body are known as gestures. A person can recognize these gestures with minimal effort, but recognition becomes challenging when it has to be performed by a machine. Human gestures include physical movements of body parts such as the fingers, hands, arms, neck, and eyes [5]. In Human-Machine Interaction (HMI), hand motions are considered the most valuable and intuitive instrument compared to other body parts. In general, gestures are divided into two types: static and dynamic. A static gesture is an immovable configuration, such as a single image frame of a pose. In contrast, a dynamic gesture is a sequence of images, i.e., a video of postures over a particular time frame. Static gestures use a single frame that holds little information, so their computational cost is low. Dynamic gestures, on the other hand, hold more information, so their computational complexity is much higher. They are nevertheless preferable for real-time applications because they contain temporal references and video sequences.

In this research, we categorized each class based on hand gestures. For example, if a person keeps rubbing their hands and fingers, we take this to indicate that the person is uncomfortable. Similarly, if a person joins their hands at some point in a video and repeats the same movement in subsequent frames, we take this to indicate that the person is cooperative. If, during an interview, a person keeps moving their hands while explaining, we take this to indicate that the person is confused or unconfident about their stance. If a person uses hand movements at regular intervals, we take this to indicate that the person is confident in their stance. Strong and decisive movements, e.g., a raised fist or firm hand movements, are counted as confident gestures. In contrast, unconfident or confused gestures are more hesitant; for example, the movements might be slow, haphazard, and less coordinated. Therefore, it is important to consider the full range of hand gesture cues to establish a specific context [6].

Researchers have proposed various machine learning methods for the detection and recognition of human hand gestures. In gesture recognition, hand motions include movements of the thumbs, palms, and fingers used for the recognition, identification, and detection of gestures [7]. Because hands are small compared to other body parts, their detection and pose estimation are difficult, resulting in high recognition complexity and frequent self-occlusions. Effective analysis of hand gestures is therefore complex and computationally costly. Over the past few decades, approaches to gesture analysis, particularly from 2-D video frames captured with digital cameras, have encountered many problems, such as cluttered backgrounds, occlusion, illumination changes, color sensitivity, and lighting variations.

In traditional approaches, machine learning algorithms were used to perform recognition. In this process, the user had to manually design significant features, following a framework for handling problems such as hand variation in size and lighting conditions. In the hand-crafted approach, feature extraction is carried out in a problem-oriented way, and designing hand-crafted features requires the right trade-off between computational performance and accuracy. The emergence of deep learning, together with modern developments in computing and data resources and powerful hardware, has led to a paradigm shift in artificial intelligence (AI) and computer vision. Recently, deep-learning-based models have been able to extract useful information from videos implicitly, without depending on external algorithms for data processing. Remarkable findings are now being obtained in various tasks, including posture and hand gesture recognition.

Existing work on video-based activity recognition has focused primarily on adapting hand-crafted features to perform classification [8]. This involves an initial feature detection stage, whose output is then converted into fixed-length feature descriptors, followed by classification using standard classifiers such as Support Vector Machines (SVM). However, analysis indicates that the best-performing feature descriptor is dataset-dependent, and no general hand-designed feature outperforms all others [9]. Hence, it is highly useful to learn dataset-specific low-level, mid-level, and high-level features in supervised, unsupervised, or semi-supervised settings. Since the recent re-emergence of neural networks driven by Hinton and others, deep neural architectures have become an effective approach for extracting high-level features from data [10]. Over the last few years, deep artificial neural networks have delivered state-of-the-art results on various machine vision tasks, for example, object detection, classification, and recognition [11], and localization, image and video segmentation, processing, and captioning [12,13]. The first gesture-oriented datasets, proposed for the ChaLearn 2013 Multi-Modal Gesture Recognition Challenge, contained enough data to make them suitable for deep learning techniques [14].

Automation has become commonplace in today's rapidly changing technological landscape. The specific objectives of the proposed research are as follows:

- One objective of the proposed research is to design and construct a system that analyzes the profile of a person who communicates through gestures. We focus mainly on video sequences of moving hands.

- The proposed architecture is trained and tested on a dataset of video clips collected and extracted from Web resources like YouTube.

- Another critical objective is to design a model that integrates different machine learning techniques, namely Convolutional Neural Networks (CNN) and Long Short-Term Memory (LSTM), and optimizes the performance of hand gesture recognition.

The main contribution of the proposed framework is that it facilitates the analysis and recognition of gestures by detecting hand movement. The dataset is collected from open-source videos of appropriate subjects, such as criminal investigations and academic and job interviews, which contain gestures relevant to multi-labeled efficacy classes. To detect these postures and movements, the system captures the video and extracts the frames containing hands using hand detection techniques, e.g., a Haar cascade. The extracted frames are then processed to separate the hand bounding boxes, i.e., the Region of Interest (ROI), and resized so that they contain only the hands. These images are then passed for feature extraction to a convolutional neural network trained on the collected dataset of labeled frames for the relevant categories. The extracted features are classified into four behavioral categories, i.e., confident, uncomfortable, cooperative, and unconfident, to predict personality based on the gesture. Another important contribution is that the architecture combines two high-performing deep models, i.e., a CNN (GoogLeNet architecture) and an LSTM. GoogLeNet, regarded as a state-of-the-art architecture, is widely used for hand action detection and recognition, while the LSTM aids classification through its ability to learn long-term dependencies and retain the temporal information in the data. The combination of the two therefore proved an excellent fit for our research.

Below are the significant contributions of this study:

- First, we provide a locally collected dataset built from freely available open-source resources.

- Second, the chosen domain of confidence determination in context is unique and helps in understanding social interactions, academic interviews, and criminal investigations.

- Third, we combine two high-performing models: a customized CNN (GoogLeNet) and an LSTM. The customized CNN consists of four major layers, each containing a Conv2D layer followed by pooling for feature extraction.

The high-level features extracted through image processing methods are then analyzed and classified into appropriate gestures to predict human personality during interactions with other humans or machines. The proposed framework can help diagnose a lack of confidence or other abnormalities so that a person's psychological needs can be addressed in a timely manner.

The remainder of this paper is organized as follows: Section 2 describes existing work. Section 3 provides a detailed description of the newly created dataset. Section 4 discusses hand gesture classification. Section 5 presents the proposed approach. Section 6 analyzes the experimental results. Finally, Section 7 draws conclusions and discusses future directions.

2. Related Work

Human-Computer Interaction (HCI) involves several modes of communication, one of which is gestures [15]. A simple definition of a gesture is a non-verbal method of communication used in HCI interfaces. The main goal of gesture recognition is to design a system that can recognize human gestures and use them to convey information for device control. In a virtual reality system, gestures can be used to navigate, control, or interact with a computing machine [16]. Several photographers and artists attempted to apply motion capture (MOCAP) technology in the 19th and 20th centuries [17]. Before the emergence of deep learning, computer vision (CV) was dominated by hand-crafted features, and crafting useful features by hand was not an easy task. Zobl et al. [18] described this approach using image pre-processing techniques such as segmentation, thresholding, and background subtraction. Ryoo et al. [19] proposed relationship-matching techniques to extract spatio-temporal features; in this approach, structural similarity between two videos was measured using trajectory detection. Initially, the researchers extracted local features from a series of video sequences [20]; later, using the pairwise relationships between these features, they created a relationship histogram for every video sequence. In [21], the well-known SIFT descriptor was applied to video data. The author of [22] proposed an effective method using multi-model feature extraction for recognizing human activity. Garcia et al. [23] developed a vision-based system for hand gesture classification that uses a combination of RGB and depth descriptors.
In [24], a fully connected neural network (FCNN) is used to recognize hand movements captured by UWB (ultra-wideband) radar. Another approach presented in [24] uses a 2D CNN for feature extraction together with an LSTM for gesture classification. In [25], the authors proposed a CNN-based infrastructure called Hypotheses-CNN-Pooling (HCP). The model takes an arbitrary number of segment hypotheses as input and connects a shared CNN to each of them. In the final step, max pooling aggregates the outputs of the shared CNN over all hypotheses. The model reaches an accuracy of 93.25% on the VOC 2012 dataset. Tao et al. [26] proposed a novel multi-task framework for object detection. The framework uses multi-label classification, and its detection branch uses the R-FCN method to solve the related problems. Accuracy is improved by combining the box-level and image-level features of the multi-label branches. In [27], the author presents a spatially self-paced convolutional network (SSCPN) for unsupervised change detection. The method uses pseudo-labels for classification and weights that reflect sample complexity.
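The cross-hypothesis pooling step of HCP [25] can be illustrated with a minimal Python sketch. It assumes the shared CNN has already produced one class-score vector per hypothesis (the toy scores below are made up) and shows only the element-wise maximum that fuses them; the function name `cross_hypothesis_max_pool` is hypothetical.

```python
from typing import List


def cross_hypothesis_max_pool(hypothesis_scores: List[List[float]]) -> List[float]:
    """Fuse per-hypothesis class scores by taking, for each class,
    the maximum score over all hypotheses (element-wise max pooling)."""
    if not hypothesis_scores:
        raise ValueError("at least one hypothesis is required")
    n_classes = len(hypothesis_scores[0])
    if any(len(s) != n_classes for s in hypothesis_scores):
        raise ValueError("all hypotheses must score the same classes")
    # zip(*...) groups the scores of each class across all hypotheses
    return [max(class_scores) for class_scores in zip(*hypothesis_scores)]


# Three hypotheses (e.g. image regions), each scored by the same shared CNN.
scores = [[0.1, 0.7, 0.2],
          [0.6, 0.3, 0.1],
          [0.2, 0.2, 0.9]]
fused = cross_hypothesis_max_pool(scores)
print(fused)  # [0.6, 0.7, 0.9]
```

Taking the maximum per class lets a single strongly responding hypothesis determine that class's score, which is what allows the number of hypotheses to vary from image to image.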

The first stage of a hand gesture recognition (HGR) framework is image segmentation, in which the hand is isolated from its background and the visual content is prepared for further stages [28]. Experimental results in He and Zhang [29] and Yang et al. [30] show that skin color clusters closely in various color spaces. Therefore, a degree of illumination invariance can be achieved by discarding the V (value) channel of the HSV color space and using only the H (hue) and S (saturation) channels.
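The H/S-only idea can be sketched with Python's standard `colorsys` module. The hue and saturation thresholds below are illustrative assumptions, not values from [29] or [30]. The point of the sketch is that scaling all RGB channels by the same factor (a simple model of dimmer lighting) changes V but leaves H and S unchanged, so the skin decision does not depend on brightness.

```python
import colorsys

# Hypothetical thresholds, chosen only for illustration.
H_MAX = 50 / 360         # hues from red to orange-yellow
H_WRAP_MIN = 340 / 360   # reddish hues that wrap around 0
S_MIN, S_MAX = 0.10, 0.70


def is_skin(r: float, g: float, b: float) -> bool:
    """Classify one RGB pixel (channels in [0, 1]) as skin using only H and S.
    The V (brightness) channel is deliberately ignored."""
    h, s, _v = colorsys.rgb_to_hsv(r, g, b)
    hue_ok = h <= H_MAX or h >= H_WRAP_MIN
    return hue_ok and S_MIN <= s <= S_MAX


def skin_mask(pixels):
    """Boolean mask with the same layout as a 2-D list of RGB tuples."""
    return [[is_skin(*px) for px in row] for row in pixels]


skin = (224 / 255, 172 / 255, 140 / 255)
dimmed = tuple(c * 0.5 for c in skin)  # same surface under half the light
blue = (0.1, 0.2, 0.8)
print(is_skin(*skin), is_skin(*dimmed), is_skin(*blue))  # True True False
```

Real skin detectors typically tune these thresholds on labeled data; the fixed values here only demonstrate the mechanism.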

In recent years, computer vision methods have improved significantly due to the adoption of Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), and other deep-learning-based methods [31,32]. Deep learning models offer a degree of rotation and translation invariance, non-linear modeling capability, and high-level features. Their use in research has increased significantly because they learn feature representations automatically, avoiding time-consuming feature engineering. One approach to modeling temporal sequences is to place an RNN after the CNN's last fully connected layer. The RNN captures long-range dependencies and models temporal structure, sharing the same parameters across all time steps. One model presented in [33] learns spatio-temporal hierarchical features from videos by combining a CNN, a BLSTM-RNN, and PCA (Principal Component Analysis), but this model is not robust; therefore, data pre-processing is required when it is given real-world data that contains noise. A good model should be robust, i.e., its outputs and predictions should remain consistent to some extent even when the input changes suddenly. Robustness is thus significant, yet it has been neglected in past work [34,35].

Tsironi et al. [36] used a CNN-LSTM network to learn and analyze complex features that vary over time. This combination learns the temporal features of each gesture to accurately classify Kendon's stroke phase. To visualize the feature maps for these features, it employs deconvolution to project activations back onto the original image pixels.

Much work has previously been conducted on the detection and recognition of human body gestures. However, there is a need to identify hand gestures and their psychological link to personality, behavior, and actions. Table 1 summarizes existing work, from the earliest computational body analysis to current techniques. Researchers began studying human gesture recognition by identifying body skeletons and movements in real-time environments. The challenges of skeletal identification can be overcome by analyzing the depth of video frames or images using pre-trained and simple neural networks, which makes human-computer interaction more efficient.

Table 1.

Comparison of Related Studies.

The aim of this research is to classify human gestures based on hand position and movement in video clips, using a CNN and an LSTM to analyze behavior. For this purpose, we crop regions of interest containing hands from each image frame to extract hand position features. These features, produced by the customized CNN, are then classified by the LSTM into four behavioral categories. Gesture-based behavior analysis is an important component of human-machine interaction, and also one of the most complex, since it is hard to read someone's emotions. It can help in fields such as psychology, for example to interpret a person's body language during a criminal investigation.

3. Collected Dataset

Gesture recognition is crucial for many multi-modal interaction and computer vision applications. To detect body gestures and hand movements in video clips, we need to extract features of human hands captured in various scenarios. The videos used show humans communicating with others to convey their points of view. We collected these videos from various internet sources, including YouTube and other social media platforms.

After collecting these videos, we extracted high-quality frames containing the human body from the original clips. These frames were further processed to extract and annotate the hand position and gesture in each frame for feature extraction using various deep learning techniques. Because our goal was to classify human actions into four major categories, we needed to capture features based on these categories.

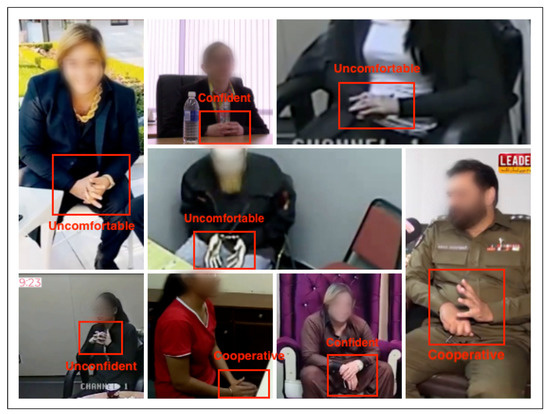

A total of 10,000 images containing various hand positions were extracted from different videos. Filtering these images by the required quality left 4000 images, which were then categorized. The targeted categories of human gestures, based on the extracted hand position features, are uncomfortable, confident, cooperative, and unconfident/confused. We categorized a person's state based on their hand gestures. Figure 1 shows human gestures in different scenarios that were captured to analyze confidence levels based on hand position and movement. Additionally, the collected dataset is fully balanced across these categories, as shown in Table 2.

Figure 1.

Developed Dataset from Captured Video Clips.

Table 2.

Developed Dataset Statistics and Distribution of Images for Training and Testing.

4. Hand Gesture Classification

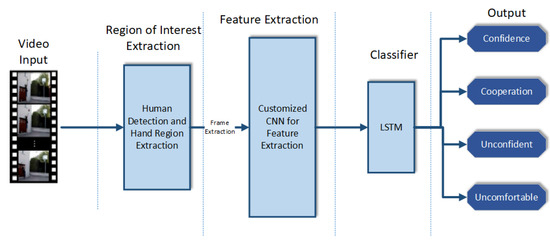

The proposed research detects and extracts hidden features of human hand poses, and their impact, using various deep learning feature extraction techniques. The system captures the video and extracts digital frames of adequate quality that contain a person. These frames are then processed to classify human gestures into multiple categories based on hand position. The feature extraction process using deep learning techniques is shown in Figure 2.

Figure 2.

Implementation Pipeline for Hand’s Gesture Classification.

The system processes videos by transforming them into a sequence of image frames for pre-processing. These frames are then processed to extract key frames that contain humans. After a human is detected, the system takes those frames and identifies the region of interest (ROI) by detecting the human hands with an object detection method. Based on the extracted coordinates of the hand region, the original frames are cropped and resized so that they contain only the hand regions, which enables precise recognition of human gestures. These resized images are then provided as inputs to the customized convolutional neural network layers for feature extraction.
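The cropping step can be sketched as follows, assuming the hand detector returns boxes as `(x, y, width, height)` (the convention used, for example, by OpenCV's Haar cascade `detectMultiScale`) and that frames are stored as rows of pixel values. The 20% default margin and the helper names are hypothetical choices: the margin keeps fingers that extend slightly beyond the detected box, and clamping keeps the ROI inside the frame.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height)


def expand_and_clamp(box: Box, frame_w: int, frame_h: int, margin: float = 0.2) -> Box:
    """Grow a detected hand box by `margin` of its size on each side,
    then clip it so it stays inside the frame."""
    x, y, w, h = box
    dx, dy = int(w * margin), int(h * margin)
    x0, y0 = max(0, x - dx), max(0, y - dy)
    x1, y1 = min(frame_w, x + w + dx), min(frame_h, y + h + dy)
    return x0, y0, x1 - x0, y1 - y0


def crop(frame: List[List[int]], box: Box) -> List[List[int]]:
    """Cut the ROI out of a frame stored as a list of pixel rows."""
    x, y, w, h = box
    return [row[x:x + w] for row in frame[y:y + h]]


# Toy 10 x 10 frame whose pixel value encodes its position (row * 10 + col).
frame = [[r * 10 + c for c in range(10)] for r in range(10)]
roi_box = expand_and_clamp((6, 6, 4, 4), frame_w=10, frame_h=10, margin=0.25)
roi = crop(frame, roi_box)  # box hits the frame edge, so it is clamped
```

The cropped ROI would then be resized to the network's input size before feature extraction.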

The features are extracted through a customized deep learning method and then processed by a long short-term memory (LSTM) network, which classifies hand posture and movement into multiple categories: confident, uncomfortable, cooperative, and confused, based on the positions of the hand joints and the directions of the fingers.

5. Proposed Architecture

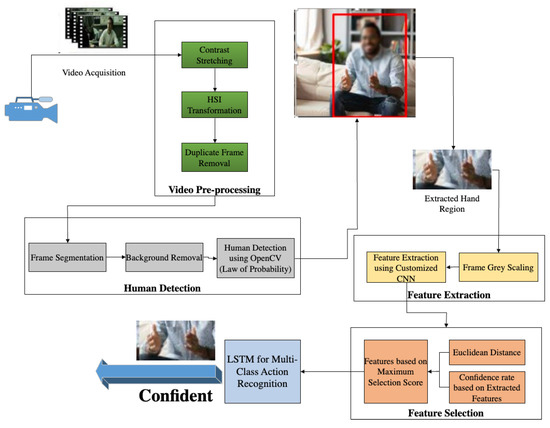

The proposed model integrates various computer vision modules for the recognition and classification of human hand gestures. The model aims to judge the human psyche and classify the personality of the individual. Figure 3 presents the detailed architecture, which takes an input video stream and processes it sequentially to produce a multi-class classification of the person's confidence state using deep learning techniques. The performance metrics applied are accuracy, precision, recall, and F-score. Deep learning approaches are end-to-end methods that automatically learn rich hidden features and therefore perform well in various computer vision tasks. The natural hierarchical structure of human activities suggests a three-level categorization.

Figure 3.

Proposed architecture for Human Gesture Classification.

The proposed research comprises the following major modules:

- Visual data pre-processing

- Extraction of frames containing a human object

- Frame resizing according to the region of the human hands

- Feature extraction and deep learning model training

- Gesture classification through a classifier

Video pre-processing consists primarily of data cleaning, smoothing, and grouping. The image frames extracted from the input video stream are resized to 400 × 400, because larger input images require more processing time and storage space; resizing also normalizes all images to the same resolution.
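The paper does not state which interpolation method is used for resizing. As an assumption, the sketch below uses nearest-neighbour interpolation on a 2-D grid of pixel values; in practice a library routine such as OpenCV's `cv2.resize` would normally be used instead.

```python
from typing import List

Grid = List[List[int]]


def resize_nearest(img: Grid, out_h: int, out_w: int) -> Grid:
    """Nearest-neighbour resize: each output pixel copies the input pixel
    that its (row, col) position maps onto."""
    in_h, in_w = len(img), len(img[0])
    return [
        [img[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


frame = [[r * 4 + c for c in range(4)] for r in range(4)]  # toy 4 x 4 "image"
small = resize_nearest(frame, 2, 2)      # downscale
normalized = resize_nearest(frame, 400, 400)  # upscale to the fixed 400 x 400 size
```

Integer index mapping keeps every source index in range, so frames of any original size can be normalized to the same resolution.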

To recognize a human gesture, the system must extract the video frames that contain human objects. It takes the video input, detects human objects, and keeps those frames while discarding frames that do not contain a human. The extracted frames are then processed to identify the region of interest containing the human hands; depending on frame quality, these images contain one or both hands. To make gesture detection invariant to image scale, each detected hand image is resized to 200 × 200. These image sequences are fed to the convolutional layers, which extract features of the human hands. For human detection, we use a CNN, which can be viewed as a regularized version of a multi-layer perceptron.

A modified GoogLeNet CNN architecture is used. The input is a series of three consecutive frames, which helps determine whether the entity is human or mannequin-like. Accordingly, each frame in the input stack has its own CNN layers. The output features of these CNN layers are concatenated and fed into the fully connected network, and the images are classified using a softmax activation layer.
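A minimal sketch of the three-frame input stack follows, assuming frames arrive in temporal order. Each frame position gets its own feature extractor (a stand-in for the per-frame CNN layers described above), and the per-frame features are concatenated before the fully connected network. The toy extractors and all function names are hypothetical placeholders, not the modified GoogLeNet.

```python
from typing import Callable, List, Sequence

Frame = List[int]
Extractor = Callable[[Frame], List[float]]


def frame_windows(frames: Sequence[Frame], size: int = 3) -> List[Sequence[Frame]]:
    """Overlapping windows of `size` consecutive frames, in temporal order."""
    return [frames[i:i + size] for i in range(len(frames) - size + 1)]


def stacked_features(window: Sequence[Frame], branches: List[Extractor]) -> List[float]:
    """Run one extractor per frame position and concatenate their outputs."""
    if len(window) != len(branches):
        raise ValueError("need exactly one branch per frame in the window")
    features: List[float] = []
    for frame, branch in zip(window, branches):
        features.extend(branch(frame))
    return features


def make_branch(weight: float) -> Extractor:
    """Hypothetical stand-in for a per-frame CNN: a weighted pixel sum."""
    return lambda frame: [weight * sum(frame)]


frames = [[1, 2], [3, 4], [5, 6], [7, 8]]          # four toy "frames"
branches = [make_branch(w) for w in (1, 2, 3)]     # one branch per position
vectors = [stacked_features(win, branches) for win in frame_windows(frames)]
```

Each concatenated vector would then go to the fully connected layers; with real CNN branches, each entry would be a full feature vector rather than a single number.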

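For a mathematical interpretation of the above, let $i$ denote the input, and let $C_1$, $C_2$, $C_3$, and $C_4$ denote the four convolution and max-pooling layers of the CNN. Given that $i$ is fed to $C_1$:

$$O_1 = C_1(i)$$

Let $O_n$ be the output of $C_n$; then:

$$O_n = C_n(O_{n-1}), \qquad n = 2, 3, 4$$

The output of $C_1$, i.e., $O_1$, is the input of $C_2$; given that $O_1 = C_1(i)$, therefore:

$$O_2 = C_2(O_1) = C_2(C_1(i))$$

Similarly,

$$O_3 = C_3(C_2(C_1(i))), \qquad O_4 = C_4(C_3(C_2(C_1(i))))$$

Given that $O_4$ is the input vector fed to the next layer, the LSTM, let $L$ and $V$ be the vector outputs of the LSTM layer and of the subsequent dense layer, respectively, and let $C_l$ be the final class label:

$$L = \mathrm{LSTM}(O_4), \qquad V = \mathrm{Dense}(L), \qquad C_l = \arg\max_k \, \mathrm{softmax}(V)_k$$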
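To make the composition $O_4 = C_4(C_3(C_2(C_1(i))))$ concrete, the sketch below traces feature-map shapes for the 150 × 150 × 3 input used by the model. The kernel size (3 × 3, no padding), pool size (2 × 2, stride 2), and filter counts (32, 64, 128, 256) are assumptions for illustration; the paper does not report them.

```python
from typing import List, Sequence, Tuple

Shape = Tuple[int, int, int]  # (height, width, channels)


def conv2d_valid(shape: Shape, filters: int, kernel: int = 3) -> Shape:
    """Shape after a stride-1 convolution with no padding ('valid')."""
    h, w, _ = shape
    return h - kernel + 1, w - kernel + 1, filters


def maxpool2d(shape: Shape, pool: int = 2) -> Shape:
    """Shape after non-overlapping max pooling (stride = pool, remainder dropped)."""
    h, w, c = shape
    return h // pool, w // pool, c


def trace_shapes(input_shape: Shape,
                 filters: Sequence[int] = (32, 64, 128, 256)) -> List[Shape]:
    """Shapes of O_1..O_n, where O_n = C_n(O_{n-1}) and C_n = conv then max-pool."""
    outputs: List[Shape] = []
    shape = input_shape
    for f in filters:
        shape = maxpool2d(conv2d_valid(shape, f))
        outputs.append(shape)
    return outputs


shapes = trace_shapes((150, 150, 3))
h4, w4, c4 = shapes[-1]
flat_len = h4 * w4 * c4  # length of O_4 after the flatten layer
```

Under these assumptions the spatial size shrinks 150 → 74 → 36 → 17 → 7, so the flattened $O_4$ handed to the LSTM has 7 × 7 × 256 = 12,544 elements.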

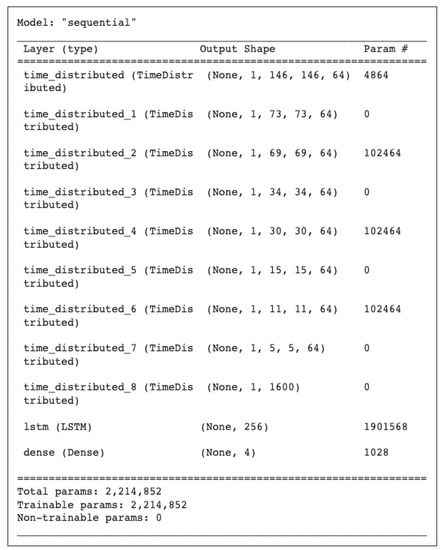

Architecture of Customized Neural Network

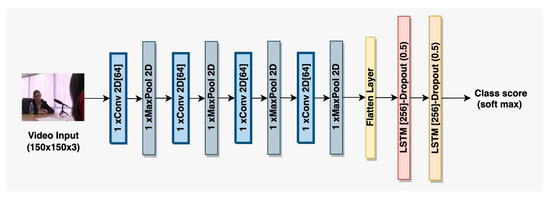

In the proposed approach, we customized the neural network layers to reduce the risk of overfitting and underfitting, resulting in better visualization outcomes for the input images. To avoid overfitting, we used two dropout layers: one after the pooling layers and one after the fully connected layer. The customized CNN-LSTM is based on four major layers, each consisting of Conv2D layers followed by pooling for feature extraction; the pooling progressively reduces the spatial size of the feature maps. After these four convolutional layers, a flatten layer is applied. The resulting features pass through a fully connected layer of 256 units with a dropout rate of 0.5. Finally, the CNN-LSTM model uses the softmax activation function, which transforms a vector of numbers into a vector of probabilities in which each probability is proportional to the exponential of the corresponding value. After the CNN stage, the system passes the visual features through the LSTM to classify them into four major categories: confidence, cooperation, uncomfortable, and unconfident. Figure 4 shows the implementation of the customized CNN.
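The softmax step can be written as $p_k = e^{z_k} / \sum_j e^{z_j}$. The sketch below implements it in plain Python, subtracting the maximum score first for numerical stability (this does not change the result). The logits are made-up values, and the class order is an assumption.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax: p_k = exp(z_k - max z) / sum_j exp(z_j - max z)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


CLASSES = ["confidence", "cooperation", "uncomfortable", "unconfident"]
logits = [2.0, 1.0, 0.1, -1.0]  # made-up scores from the final dense layer
probs = softmax(logits)
predicted = CLASSES[probs.index(max(probs))]
```

Because each probability is proportional to $e^{z_k}$, a one-unit gap in scores (2.0 versus 1.0 here) becomes a ratio of $e \approx 2.72$ between the corresponding probabilities.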

Figure 4.

Architecture diagram of proposed CNN model.

The model in Figure 5 consists of an input layer that takes frames of 150 × 150 × 3 dimensions and an output layer that produces a class label representing the person's confidence state. Between these two, the model has several time-distributed layers: four Conv2D layers, each followed by a MaxPool2D layer, then a flatten layer, an LSTM layer, and a dense (softmax) layer.
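The time-distributed CNN produces one feature vector per frame, and the LSTM consumes these vectors in order. The sketch below implements a single standard LSTM cell in plain Python to show that recurrence; the hidden size, the random weight initialization, and the toy inputs are assumptions, and the weights are untrained. A framework implementation (for example Keras's `TimeDistributed` and `LSTM` layers) would be used in practice.

```python
import math
import random
from typing import List

Vector = List[float]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def matvec(W: List[Vector], v: Vector) -> Vector:
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


class LSTMCell:
    """Standard LSTM cell with input (i), forget (f), output (o) gates and a
    candidate state (g). Weights are random, untrained placeholders."""

    GATES = ("i", "f", "o", "g")

    def __init__(self, input_size: int, hidden_size: int, seed: int = 0):
        rng = random.Random(seed)
        cols = input_size + hidden_size  # every gate sees [x_t, h_{t-1}]
        self.hidden_size = hidden_size
        self.W = {name: [[rng.uniform(-0.1, 0.1) for _ in range(cols)]
                         for _ in range(hidden_size)] for name in self.GATES}
        self.b = {name: [0.0] * hidden_size for name in self.GATES}

    def step(self, x: Vector, h: Vector, c: Vector):
        z = x + h  # list concatenation [x_t, h_{t-1}]
        pre = {name: [s + bias for s, bias in zip(matvec(self.W[name], z), self.b[name])]
               for name in self.GATES}
        i = [sigmoid(v) for v in pre["i"]]
        f = [sigmoid(v) for v in pre["f"]]
        o = [sigmoid(v) for v in pre["o"]]
        g = [math.tanh(v) for v in pre["g"]]
        # c_t = f * c_{t-1} + i * g and h_t = o * tanh(c_t), element-wise
        c_new = [ft * ct + it * gt for ft, ct, it, gt in zip(f, c, i, g)]
        h_new = [ot * math.tanh(ct) for ot, ct in zip(o, c_new)]
        return h_new, c_new

    def run(self, sequence: List[Vector]) -> Vector:
        """Feed per-frame feature vectors in order; return the final hidden state."""
        h = [0.0] * self.hidden_size
        c = [0.0] * self.hidden_size
        for x in sequence:
            h, c = self.step(x, h, c)
        return h


cell = LSTMCell(input_size=4, hidden_size=2, seed=42)
frame_features = [[0.1, 0.2, 0.3, 0.4], [0.0, -0.1, 0.5, 0.2], [0.3, 0.3, 0.3, 0.3]]
summary = cell.run(frame_features)  # would be passed to the dense (softmax) layer
```

The same weights are reused at every time step, which is what lets the cell summarize a sequence of any length into one fixed-size vector.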

Figure 5.

Block diagram of proposed model.

6. Experiments & Results

The purpose of this research is to provide an effective framework for detecting and recognizing the gestures people perform while communicating with others. Before training, we divided the dataset into training and testing sets in a ratio of 70% to 30%, giving a total of 2817 images in the training set and 1208 images in the testing set. The dataset contains four classes, and Table 3 shows the evaluation metrics on the training set for each class.
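The reported counts (2817 training and 1208 testing images, 4025 in total) are consistent with a 70/30 split in which the fractional remainder goes to the test set. The sketch below reproduces that arithmetic with integer math and adds a per-class (stratified) split; stratification and the function names are assumptions, since the paper does not say how images were assigned to each subset.

```python
import random
from typing import Dict, List, Sequence, Tuple


def split_counts(n_total: int, train_percent: int = 70) -> Tuple[int, int]:
    """Integer train/test sizes; the rounding remainder goes to the test set."""
    n_train = n_total * train_percent // 100
    return n_train, n_total - n_train


def stratified_split(labels: Sequence[str], train_percent: int = 70,
                     seed: int = 0) -> Tuple[List[int], List[int]]:
    """Return (train_indices, test_indices), splitting each class separately
    so that class proportions are preserved in both subsets."""
    rng = random.Random(seed)
    by_class: Dict[str, List[int]] = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)
    train: List[int] = []
    test: List[int] = []
    for idxs in by_class.values():
        rng.shuffle(idxs)
        n_train, _ = split_counts(len(idxs), train_percent)
        train += idxs[:n_train]
        test += idxs[n_train:]
    return sorted(train), sorted(test)


labels = ["confident"] * 10 + ["cooperative"] * 20  # toy labels
train_idx, test_idx = stratified_split(labels)
```

Integer arithmetic avoids floating-point surprises: 4025 × 0.7 is 2817.5, and rounding it the other way would shift one image between the subsets.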

Table 3.

Training Data Result for Human Gesture Classification.

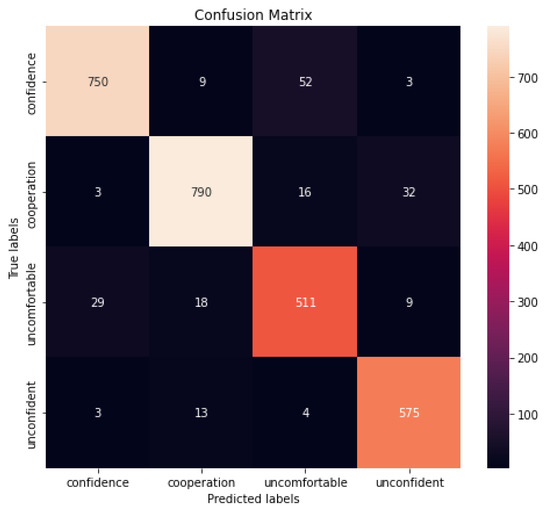

Table 3 shows the results in terms of precision, recall, F-measure, and support for each category. Because of class imbalance in the dataset, the confidence class achieves the highest results, whereas the uncomfortable class scores lower than the other categories: when a dataset is imbalanced, the model develops a slight bias toward the class with the most samples due to unequal misclassification costs. Overall, the framework achieves an accuracy of 90.48%, which is better than the existing frameworks it is compared with. The confusion matrix in Figure 6 shows the predicted and expected outcomes for the training data.
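Per-class precision, recall, F-measure, and support, as reported in Tables 3 and 4, can all be derived from a confusion matrix. The sketch below shows the computation; the 4 × 4 matrix is a made-up example, not the paper's results, and the row-true/column-predicted convention is an assumption.

```python
from typing import Dict, List


def per_class_metrics(cm: List[List[int]], classes: List[str]) -> Dict[str, Dict[str, float]]:
    """cm[i][j] = number of samples whose true class is i and predicted class is j."""
    k = len(classes)
    report: Dict[str, Dict[str, float]] = {}
    for c in range(k):
        tp = cm[c][c]
        support = sum(cm[c])                          # row sum: true samples of class c
        predicted = sum(cm[r][c] for r in range(k))   # column sum: predicted as class c
        precision = tp / predicted if predicted else 0.0
        recall = tp / support if support else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        report[classes[c]] = {"precision": precision, "recall": recall,
                              "f1": f1, "support": support}
    return report


def accuracy(cm: List[List[int]]) -> float:
    """Fraction of samples on the diagonal (correctly classified)."""
    total = sum(map(sum, cm))
    return sum(cm[i][i] for i in range(len(cm))) / total


CLASSES = ["confidence", "cooperation", "uncomfortable", "unconfident"]
cm = [[8, 1, 1, 0],
      [1, 9, 0, 0],
      [0, 2, 6, 2],
      [0, 0, 1, 9]]  # toy counts
report = per_class_metrics(cm, CLASSES)
```

Precision divides by the column total (how often the class was predicted) and recall by the row total (how many true samples exist), so an over-represented class tends to gain recall at the expense of the other classes' precision.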

Figure 6.

Training Data Confusion Matrix.

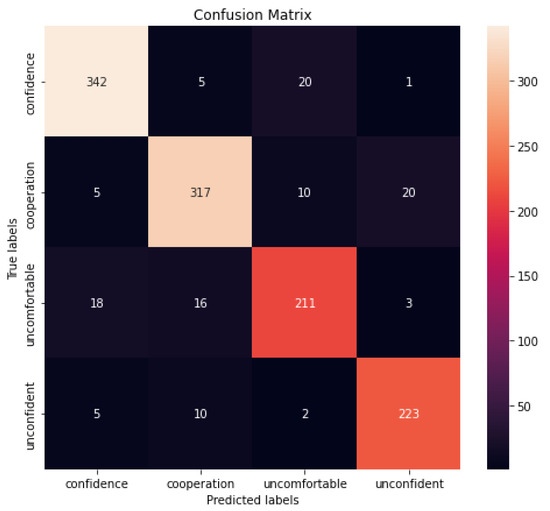

The proposed architecture is validated by the testing-data results in Table 4 (precision, recall, F-measure, and support), and Figure 7 presents the confusion matrix for the testing data.

Table 4.

Testing Dataset Result for Human Gesture Classification.

Figure 7.

Testing Data Confusion Matrix.

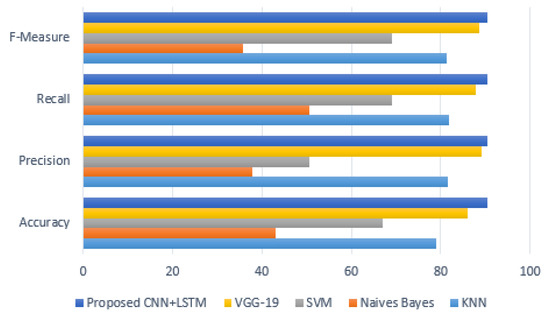

The experimental study compares the customized neural network + LSTM architecture with existing models, including K-nearest neighbors (KNN), Naive Bayes, SVM, and the pre-trained VGG-19, on the collected dataset; the proposed architecture achieves the highest accuracy. Table 5 compares the accuracy, precision, F-measure, and recall of these methods with those of the proposed architecture.

Table 5.

Comparison Results with Other Machine Learning Methods.

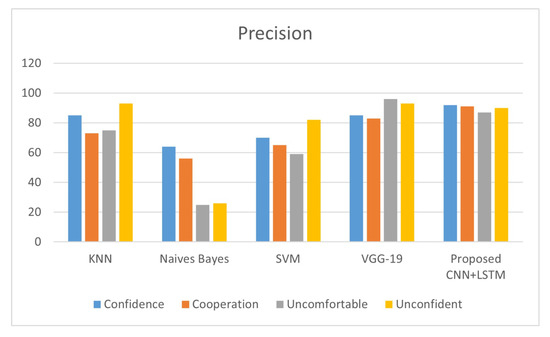

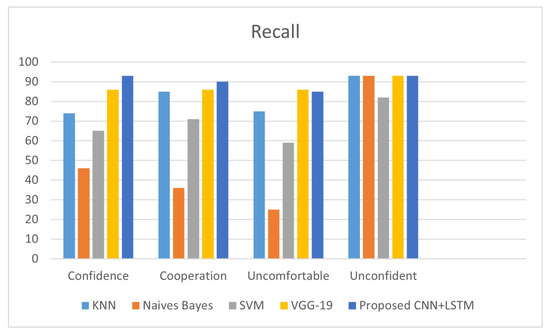

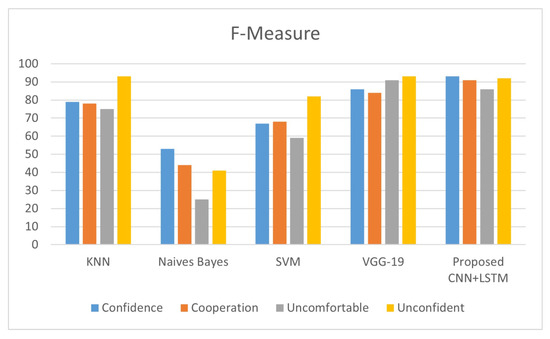

Figure 8 compares the overall accuracy, precision, recall, and F-measure of gesture-based human emotion recognition, while Figures 9, 10, and 11 compare precision, recall, and F-measure, respectively, for the four gesture classes.

Figure 8.

Evaluation Metrics Comparison with Proposed Method.

Figure 9.

Precision comparison.

Figure 10.

Recall comparison.

Figure 11.

F-Measure comparison.

Although CNNs perform well in image classification by learning spatial feature hierarchies from raw data, they perform poorly on sequence data because they do not learn temporal dependencies. Since our model takes images extracted from videos as input, the combination of a CNN and an LSTM performs better in our case. In real-life scenarios, our model, which learns both spatial and temporal dependencies during training, can be used to detect a person's confidence. VGG-19 shows slightly better precision than our model for the uncomfortable and unconfident classes. However, our model has significantly fewer parameters than VGG-19 and performs better overall. Although VGG-19 also has slightly better recall for the uncomfortable class, the difference is negligible, and our model outperforms VGG-19 in the other three classes.

7. Conclusions

In this paper, we developed a locally collected dataset through annotation and augmentation. Seventy percent of the dataset is reserved for training and 30% for testing, and training runs for 100 epochs. The training set is used to train the customized CNN combined with an LSTM, which acts as a compound framework for feature extraction and classification, whereas the testing set is used to evaluate the proposed method, which achieves better results than the compared methods.

To evaluate this study, we used several metrics for each confidence-related class: accuracy, F-measure, recall, and ROC. Validated on the testing images, the customized CNN & LSTM achieves an accuracy of 90.48% with a precision of 90.46%. The results show that the system achieves satisfactory performance under variable conditions and improves human gesture recognition from visual content.

Future research will explore the following emerging directions:

- Detecting gestures against complex backgrounds that contain multiple objects and varied colors.

- Increasing the number of recognized human gestures for effective interaction, visualization, and estimation of specific contextual activities.

- Extending the system with video and image sequence processing.

- Applying automatic recognition to diverse scenarios, such as educational activities and official work settings.

Overall, our analysis of the results shows that there is still room for improvement in human gesture recognition from visual content, which the future directions listed above aim to address.

Author Contributions

Conceptualization, A.M. and M.A.; methodology, A.M.; writing—original draft preparation, A.M. and N.U.H.; review and editing, M.A., M.U.G.K. and A.M.M.-E.; supervision, M.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Subject consent was waived because the data were collected from publicly available, open-source videos on YouTube, and only hand gestures are used in this research. The faces of all subjects in this study have been blurred; hence, there are no privacy concerns regarding the exposure of any human faces or identities.

Data Availability Statement

The data will be made publicly available after this paper is published.

Conflicts of Interest

The authors declare no conflict of interest.

References

- What Is Computer Vision? Available online: https://www.ibm.com/topics/computer-vision (accessed on 25 October 2022).

- Gadekallu, T.R.; Alazab, M.; Kaluri, R.; Maddikunta, P.K.R.; Bhattacharya, S.; Lakshmanna, K.; Parimala, M. Hand gesture classification using a novel CNN-crow search algorithm. Complex Intell. Syst. 2021, 7, 1855–1868.

- Tan, Y.S.; Lim, K.M.; Lee, C.P. Hand gesture recognition via enhanced densely connected convolutional neural network. Expert Syst. Appl. 2021, 175, 114797.

- Zhang, T. Application of AI-based real-time gesture recognition and embedded system in the design of English major teaching. In Wireless Networks; Springer: Cham, Switzerland, 2021; pp. 1–13.

- Zhu, M.; Sun, Z.; Zhang, Z.; Shi, Q.; He, T.; Liu, H.; Chen, T.; Lee, C. Haptic-feedback smart glove as a creative human-machine interface (HMI) for virtual/augmented reality applications. Sci. Adv. 2020, 6, eaaz8693.

- Kendon, A. Gesture: Visible Action as Utterance; University of Pennsylvania: Pennsylvania, PA, USA, 2004.

- Nivash, S.; Ganesh, E.; Manikandan, T.; Dhaka, A.; Nandal, A.; Hoang, V.T.; Kumar, A.; Belay, A. Implementation and Analysis of AI-Based Gesticulation Control for Impaired People. Wirel. Commun. Mob. Comput. 2022, 2022, 4656939.

- Xing, Y.; Zhu, J. Deep Learning-Based Action Recognition with 3D Skeleton: A Survey; Wiley: Hoboken, NJ, USA, 2021.

- Shanmuganathan, V.; Yesudhas, H.R.; Khan, M.S.; Khari, M.; Gandomi, A.H. R-CNN and wavelet feature extraction for hand gesture recognition with EMG signals. Neural Comput. Appl. 2020, 32, 16723–16736.

- Islam, M.R.; Mitu, U.K.; Bhuiyan, R.A.; Shin, J. Hand gesture feature extraction using deep convolutional neural network for recognizing American sign language. In Proceedings of the IEEE 2018 4th International Conference on Frontiers of Signal Processing (ICFSP), Poitiers, France, 24–27 September 2018; pp. 115–119.

- Buhrmester, V.; Münch, D.; Arens, M. Analysis of explainers of black box deep neural networks for computer vision: A survey. Mach. Learn. Knowl. Extr. 2021, 3, 966–989.

- Wang, C.; Yang, H.; Meinel, C. Image captioning with deep bidirectional LSTMs and multi-task learning. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 2018, 14, 1–20.

- Bhardwaj, S.; Srinivasan, M.; Khapra, M.M. Efficient video classification using fewer frames. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–19 June 2019; pp. 354–363.

- Li, Y.; Miao, Q.; Qi, X.; Ma, Z.; Ouyang, W. A spatiotemporal attention-based ResC3D model for large-scale gesture recognition. Mach. Vis. Appl. 2019, 30, 875–888.

- Ahuja, M.K.; Singh, A. Static vision based Hand Gesture recognition using principal component analysis. In Proceedings of the 2015 IEEE 3rd International Conference on MOOCs, Innovation and Technology in Education (MITE), Amritsar, India, 1–2 October 2015; pp. 402–406.

- Oudah, M.; Al-Naji, A.; Chahl, J. Hand gesture recognition based on computer vision: A review of techniques. J. Imaging 2020, 6, 73.

- Bernard, J.; Dobermann, E.; Vögele, A.; Krüger, B.; Kohlhammer, J.; Fellner, D. Visual-interactive semi-supervised labeling of human motion capture data. Electron. Imaging 2017, 2017, 34–45.

- Zhan, F. Hand gesture recognition with convolution neural networks. In Proceedings of the 2019 IEEE 20th International Conference on Information Reuse and Integration for Data Science (IRI), Los Angeles, CA, USA, 30 July–1 August 2019; pp. 295–298.

- Ryoo, M.S.; Aggarwal, J.K. Spatio-temporal relationship match: Video structure comparison for recognition of complex human activities. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 1593–1600.

- Aggarwal, J.K.; Ryoo, M.S. Human activity analysis: A review. ACM Comput. Surv. (CSUR) 2011, 43, 1–43.

- Scovanner, P.; Ali, S.; Shah, M. A 3-dimensional sift descriptor and its application to action recognition. In Proceedings of the 15th ACM International Conference on Multimedia, Augsburg, Germany, 24–29 September 2007; pp. 357–360.

- Ehatisham-Ul-Haq, M.; Javed, A.; Azam, M.A.; Malik, H.M.; Irtaza, A.; Lee, I.H.; Mahmood, M.T. Robust human activity recognition using multimodal feature-level fusion. IEEE Access 2019, 7, 60736–60751.

- Benitez-Garcia, G.; Prudente-Tixteco, L.; Castro-Madrid, L.C.; Toscano-Medina, R.; Olivares-Mercado, J.; Sanchez-Perez, G.; Villalba, L.J.G. Improving real-time hand gesture recognition with semantic segmentation. Sensors 2021, 21, 356.

- Hendy, N.; Fayek, H.M.; Al-Hourani, A. Deep Learning Approaches for Air-writing Using Single UWB Radar. IEEE Sens. J. 2022, 22, 11989–12001.

- Wei, Y.; Xia, W.; Lin, M.; Huang, J.; Ni, B.; Dong, J.; Zhao, Y.; Yan, S. HCP: A Flexible CNN Framework for Multi-Label Image Classification. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 1901–1907.

- Gong, T.; Liu, B.; Chu, Q.; Yu, N. Using multi-label classification to improve object detection. Neurocomputing 2019, 370, 174–185.

- Li, H.; Gong, M.; Zhang, M.; Wu, Y. Spatially Self-Paced Convolutional Networks for Change Detection in Heterogeneous Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 4966–4979.

- Parvathy, P.; Subramaniam, K.; Prasanna Venkatesan, G.; Karthikaikumar, P.; Varghese, J.; Jayasankar, T. Development of hand gesture recognition system using machine learning. J. Ambient Intell. Humaniz. Comput. 2021, 12, 6793–6800.

- He, J.; Zhang, H. A real time face detection method in human-machine interaction. In Proceedings of the 2008 2nd International Conference on Bioinformatics and Biomedical Engineering, Shanghai, China, 16–18 May 2008; pp. 1975–1978.

- Yang, J.; Lu, W.; Waibel, A. Skin-color modeling and adaptation. In Proceedings of the Asian Conference on Computer Vision; Springer: Cham, Switzerland, 1998; pp. 687–694.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the Thirty-first AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

- You, J.; Korhonen, J. Attention boosted deep networks for video classification. In Proceedings of the 2020 IEEE International Conference on Image Processing (ICIP), Online, 25–28 October 2020; pp. 1761–1765.

- Muhammad, K.; Ullah, A.; Imran, A.S.; Sajjad, M.; Kiran, M.S.; Sannino, G.; de Albuquerque, V.H.C. Human action recognition using attention based LSTM network with dilated CNN features. Future Gener. Comput. Syst. 2021, 125, 820–830.

- Khan, S.; Khan, M.A.; Alhaisoni, M.; Tariq, U.; Yong, H.S.; Armghan, A.; Alenezi, F. Human action recognition: A paradigm of best deep learning features selection and serial based extended fusion. Sensors 2021, 21, 7941.

- Tsironi, E.; Barros, P.; Weber, C.; Wermter, S. An analysis of convolutional long short-term memory recurrent neural networks for gesture recognition. Neurocomputing 2017, 268, 76–86.

- Xi, C.; Chen, J.; Zhao, C.; Pei, Q.; Liu, L. Real-time hand tracking using kinect. In Proceedings of the 2nd International Conference on Digital Signal Processing, Tokyo, Japan, 25–27 February 2018; pp. 37–42.

- Konstantinidis, D.; Dimitropoulos, K.; Daras, P. Sign language recognition based on hand and body skeletal data. In Proceedings of the IEEE 2018-3DTV-Conference: The True Vision-Capture, Transmission and Display of 3D Video (3DTV-CON), Helsinki, Finland, 3–5 June 2018; pp. 1–4.

- De Smedt, Q.; Wannous, H.; Vandeborre, J.P.; Guerry, J.; Saux, B.L.; Filliat, D. 3d hand gesture recognition using a depth and skeletal dataset: Shrec’17 track. In Proceedings of the Workshop on 3D Object Retrieval, Graz, Austria, 23–24 April 2017; pp. 33–38.

- Ren, Z.; Meng, J.; Yuan, J. Depth camera based hand gesture recognition and its applications in human-computer-interaction. In Proceedings of the IEEE 2011 8th International Conference on Information, Communications & Signal Processing, Lyon, France, 23–24 April 2011; pp. 1–5.

- Sahoo, J.P.; Ari, S.; Patra, S.K. Hand gesture recognition using PCA based deep CNN reduced features and SVM classifier. In Proceedings of the 2019 IEEE International Symposium on Smart Electronic Systems (iSES)(Formerly iNiS), Singapore, 13–16 December 2019; pp. 221–224.

- Ma, X.; Peng, J. Kinect sensor-based long-distance hand gesture recognition and fingertip detection with depth information. J. Sensors 2018, 2018, 21692932.

- Desai, S. Segmentation and recognition of fingers using Microsoft Kinect. In Proceedings of the International Conference on Communication and Networks; Springer: Cham, Switzerland, 2017; pp. 45–53.

- Bakar, M.Z.A.; Samad, R.; Pebrianti, D.; Aan, N.L.Y. Real-time rotation invariant hand tracking using 3D data. In Proceedings of the 2014 IEEE International Conference on Control System, Computing and Engineering (ICCSCE 2014), Penang, Malaysia, 28–30 November 2014; pp. 490–495.

- Bamwenda, J.; Özerdem, M. Recognition of static hand gesture with using ANN and SVM. Dicle Univ. J. Eng. 2019.

- Desai, S.; Desai, A. Human Computer Interaction through hand gestures for home automation using Microsoft Kinect. In Proceedings of the International Conference on Communication and Networks; Springer: Cham, Switzerland, 2017; pp. 19–29.

- Tekin, B.; Bogo, F.; Pollefeys, M. H+O: Unified egocentric recognition of 3D hand-object poses and interactions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–19 June 2019; pp. 4511–4520.

- Wan, C.; Probst, T.; Gool, L.V.; Yao, A. Self-supervised 3d hand pose estimation through training by fitting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–19 June 2019; pp. 10853–10862.

- Ge, L.; Ren, Z.; Li, Y.; Xue, Z.; Wang, Y.; Cai, J.; Yuan, J. 3d hand shape and pose estimation from a single rgb image. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–19 June 2019; pp. 10833–10842.

- Han, S.; Liu, B.; Cabezas, R.; Twigg, C.D.; Zhang, P.; Petkau, J.; Yu, T.H.; Tai, C.J.; Akbay, M.; Wang, Z.; et al. MEgATrack: Monochrome egocentric articulated hand-tracking for virtual reality. ACM Trans. Graph. 2020, 39, 87:1–87:13.

- Wu, X.; Finnegan, D.; O’Neill, E.; Yang, Y.L. Handmap: Robust hand pose estimation via intermediate dense guidance map supervision. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 237–253.

- Alnaim, N.; Abbod, M.; Albar, A. Hand gesture recognition using convolutional neural network for people who have experienced a stroke. In Proceedings of the 2019 3rd International Symposium on Multidisciplinary Studies and Innovative Technologies (ISMSIT), Ankara, Turkey, 11–13 October 2019; pp. 1–6.

- Chung, H.Y.; Chung, Y.L.; Tsai, W.F. An efficient hand gesture recognition system based on deep CNN. In Proceedings of the 2019 IEEE International Conference on Industrial Technology (ICIT), Melbourne, VIC, Australia, 13–15 February 2019; pp. 853–858.

- Bao, P.; Maqueda, A.I.; del Blanco, C.R.; García, N. Tiny hand gesture recognition without localization via a deep convolutional network. IEEE Trans. Consum. Electron. 2017, 63, 251–257.

- Li, G.; Tang, H.; Sun, Y.; Kong, J.; Jiang, G.; Jiang, D.; Tao, B.; Xu, S.; Liu, H. Hand gesture recognition based on convolution neural network. Clust. Comput. 2019, 22, 2719–2729.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).