Abstract

Household electricity consumption is highest during peak hours, and electricity prices are typically higher between 3:00 p.m. and 11:00 p.m. If electric vehicle (EV) charging occurs during the same hours, the impact on residential distribution networks increases. Home energy management systems (HEMS) have therefore been introduced to manage the energy demand of households and EVs in residential distribution networks such as a smart micro-grid (MG). HEMS can also efficiently manage renewable energy sources, such as solar photovoltaic (PV) panels and wind turbines, as well as vehicle energy storage. To date, no HEMS has intelligently coordinated the uncertainty of smart MG elements. This paper investigated the impact of PV solar power, MG storage, and EVs during the hours of maximum solar radiation. Several deep learning (DL) algorithms were used to account for the uncertainties, and a reinforcement learning home centralized photovoltaic (RL-HCPV) scheduling algorithm was developed to manage the energy demand among the smart MG elements. The RL-HCPV system was modelled under several constraints to meet household electricity demand in sunny and cloudy weather. Simulations demonstrated how the proposed RL-HCPV system incorporates uncertainty and efficiently handles demand response, and how vehicle-to-home (V2H) operation helps level the appliance load profile and reduce power consumption costs with sustainable power production. The results demonstrated the advantages of RL and V2H technology as a potential smart building storage technology.

1. Introduction

Conventional energy sources, including petroleum, natural gas, and coal, contribute to environmental pollution. The effect of these fossil fuels on the environment makes it essential to use renewable energy sources (RnEs), such as solar and wind. However, the fluctuating nature of RnEs introduces new challenges to the energy exchange and load balancing of smart grids (SGs). One of the most effective ways to achieve power system stability is to use electric vehicle (EV) batteries as distributed energy storage.

Previous studies have shown that 40% of energy is consumed in buildings, including homes, offices, and hospitals [1]. Smart buildings are now equipped with RnEs as a power supply controlled via an energy management system (EMS), which makes them well suited for evaluating the impact of the EMS. Furthermore, although EV batteries increase the SG load, they can also function as storage that facilitates demand response (DR) [2]. The literature reports several EMS models for EV batteries. In [3], a feasible motor energy efficiency model was developed for a motor actuator by merging an electric motor efficiency map built from collected data with motor efficiency experiments and a current closed-loop motor design, to overcome limited energy storage. The study in [4] proposed a comprehensive control method intended to address all common scenarios, employing a hierarchical design to achieve four-wheel independent control that simultaneously improves driving performance and reduces energy consumption. Unlike conventional vehicles, EVs with fully independent drive-by-wire chassis actuators allow dynamic enhancements that help the driver improve handling and cornering stability. The study in [5] introduced a multilevel integrated control with control allocation and a motion controller to enhance performance by reducing the tire effort on each wheel. Moreover, a survey of vehicle control for autonomous, connected, and automated EVs was conducted in [6]. The analysis covered the techniques, essential elements, and collaborative EV control strategies, as well as possible uses of cooperative driving control techniques that could increase the sustainability, safety, and efficiency of future transportation systems. Therefore, incorporating EV batteries into smart buildings is a technique for reducing costs [7,8] and enhancing the use of energy sources.
Thus, the novel vehicle-to-home (V2H) technology is appealing because it can reduce household electricity costs. In addition, when the SG is overloaded, V2H technology can shift the peak load and supply constant emergency lighting [9].

Pearre et al. evaluated the development of EV technology with a focus on discharging modes, namely vehicle-to-grid (V2G), vehicle-to-building (V2B), and V2H [10]. In these modes, EVs discharge their batteries and deliver the excess energy to the power grid, buildings, or residences. The authors argued that V2G is less efficient than V2B and V2H because of significant transmission power losses, and that the V2H mode can use the excess energy in the EV battery more efficiently; in V2H, for example, residential appliances consume the excess energy from the EV battery directly. In such a setup, the HEMS manages the smart appliances and EVs, and a smart meter connected to the HEMS supports bidirectional information exchange between customers and the utility service provider.

Moreover, the HEMS can rely on a prediction algorithm to determine the charging and discharging periods. To reduce costs, the HEMS charges the EV battery when the price is low. Conversely, when the price is high, it schedules the EV battery to discharge and power home appliances, avoiding energy purchases from the utility service provider. This strategy also provides an emergency energy source during a power outage. Other studies have indicated that EVs and V2H technologies are expected to be used as domestic electricity storage systems [11], which makes it important to promote PV power self-consumption. In addition, the authors identified V2H as a technology that uses an EV battery as a controlled voltage source to supply home loads during power outages. As a result, EVs provide an uninterrupted power supply, increasing supply reliability. The study compared V2H and V2G, showing that V2G requires a complex infrastructure and incurs larger losses because of the distances involved. Therefore, the V2H application of EVs is more promising in terms of its control strategy.

V2H technology has been the subject of several optimization approaches [12]. Conventional optimization methods, including model-based linear and dynamic programming, have been used in different applications for integrating V2H into HEMSs, such as scheduling EV charging and discharging [13], economic load reduction [14], and load shifting [15]. However, these methods cannot adapt to the stochastic nature of the environment, including unpredictable driving patterns, load profiles, and prices. Global search techniques, which use genetic algorithms [16] and swarm intelligence [17] to solve power management problems, are another model-based optimization strategy. However, their extensive computations make them slow, which limits their application in real-time systems. Thus, data-driven approaches, including machine learning (ML), are being introduced to offer more advanced services [18,19,20]. In particular, reinforcement learning (RL) algorithms offer strong solutions for energy decision-making, control, and management because they can make decisions and solve problems without prior knowledge of the environment. Additionally, RL models can be tested offline on a generation profile and a general load before being deployed online for arbitrary driving patterns, load profiles, or dynamic electricity prices [12,21]. Moreover, [22] used a non-parametric reward function in deep RL to control battery deterioration; the evaluation demonstrated training effectiveness, optimality, and flexibility across different common driving cycles.

According to a 2021 statistical analysis by the US Energy Information Administration (EIA), the transportation sector consumes 28% of the energy produced annually, most of which comes from fossil fuels, while residential energy consumption accounts for 27% [23]. The analysis reports a CO2 concentration of 286 ppm and predicts an annual increase of 2 ppm. According to another study, if drastic measures are not taken, CO2 concentrations in some regions could reach up to 700 ppm by 2100 [10]. It is therefore crucial to change fossil fuel usage radically to decrease atmospheric CO2. A major challenge to achieving this goal is decarbonizing transportation, for which electrification is the most promising option. Consequently, RnEs, such as PV solar panels and wind, are becoming the most important power sources for electrifying EVs and residences, and V2H technology supports the use of clean energy for EVs and smart homes.

V2H technology refers to transferring electricity from vehicles to the home, and several studies have described its origins. Recently, many countries have implemented rules to reduce emissions, including CO2, in order to address global warming and environmental issues and to raise awareness of global energy consumption. Moreover, steadily rising oil prices necessitate the development of alternative fuel sources to reduce oil dependence [24]. As a result, electrical energy could be a viable alternative to traditional fuel sources.

Furthermore, electricity is considered a promising technology for the future because of its infrastructure readiness, safety, and reliability, although electricity storage has both advantages and disadvantages. Additionally, continuously rising fuel prices and growing environmental awareness motivate manufacturers to develop transportation solutions that address these issues.

Previously, EVs were not an ideal alternative because of their weak batteries. Since then, EVs and their batteries have been developed into suitable alternatives to conventional vehicles, although EVs still have a shorter driving range and their batteries remain expensive compared with conventional vehicles. Nevertheless, EVs can benefit society in many ways, both explicitly and implicitly. Many electric transportation projects and EV technology businesses are operating worldwide and developing rapidly [25]. For this reason, further innovations and conceptual advances are essential [26].

Studies have indicated that an EV battery can store 5–40 kWh of energy [27], which can be used not only for travelling but also for V2H, V2B, and V2G applications. That study reviewed the characteristics, drawbacks, and pilot projects of these applications and found that V2H and V2B are easier to implement than V2G and do not need external entities to be fully operational. V2H and V2B also provide more apparent advantages for users. V2H applications operate at the home level, are normally suited to one EV, reduce electricity bills, provide backup power, and are easy to implement. The main drawback of V2H is that it is only suitable for single-family homes in residential blocks. Examples of V2H pilot projects include Leaf to Home [28], Toyota Smart Home [29], and Honda Smart Home [30]; of these three, only Leaf to Home is commercially available so far.

One study used a battery-swapping strategy to integrate an EV, together with a reserve battery and solar energy, into a building [31]. The strategy allowed the owner to leave with the EV flexibly, even when the EV had arrived at the building with an empty battery. The simulation indicated that the building could operate continuously in both grid-tied and off-grid states. In another study, the authors aimed to decrease the dependence of domestic loads on the grid, increase the use of power generated by rooftop PV solar panels, enhance the reliability of the power supply for residential loads during peak periods, and decrease household energy costs [32]. They developed a V2H model based on a fuzzy inference system (FIS) to manage home energy effectively and make full use of the energy stored in EVs for residential loads. Other researchers proposed an HEMS scheduling algorithm that uses V2H battery storage and solar energy to meet the load demand and execute accurate system operations [33]. The study explored the impact of V2H and PV on net power usage and home energy consumption through four energy flow models: green EV (GEV) charging without green PV (GPV) (Model 1), V2H charging and discharging without GPV (Model 2), GEV charging with GPV (Model 3), and V2H charging and discharging with GPV (Model 4). The simulation demonstrated the ability of the proposed approach to cope with unanticipated changes.

In another study, the researchers simulated eight system control modes to evaluate a proposed V2H concept [34]:

- In-house battery charging mode: the PV-generated power is used simultaneously to charge the in-house battery and to supply the load;

- In-house battery discharge mode: the power stored in the in-house battery satisfies the load demand;

- Nissan Leaf charging mode: the Nissan Leaf is charged with the excess power remaining after the in-house battery is charged and the load demand is satisfied;

- Nissan Leaf discharge mode: the Nissan Leaf supplies power when the load demand is high and both the generated PV power and the state-of-charge (SOC) of the in-house batteries are low;

- In-house battery protection mode: prevents the degenerative discharge condition of the in-house battery;

- System isolation mode: prevents system failure;

- Excess power handling mode: manages the excess generated power;

- Night-time charging mode: charges the Nissan Leaf so that it is prepared for the next day.

Researchers simulated an optimized strategy for the operation and facilities of a residential energy system [35]. The authors set up several scenarios and investigated the optimized strategy with different PV panel installation locations, vehicle usage patterns, and energy demand characteristics. For houses where the EV is absent for extended periods, the results demonstrated that combining a V2H system with a stationary battery (SB) is more cost-effective. Thus, when designing a household energy system, it is advisable to evaluate several battery alternatives to determine whether a V2H system should be installed alone or in conjunction with a stationary battery.

The above studies give little attention to uncertainties. Efficient uncertainty prediction models are therefore required to improve decision-making for cost reduction and DR optimization. For instance, when each production source and the electricity consumption profile are known, the energy produced by RnEs during the day and the household energy demand can be predicted. For energy prediction, household energy consumption and production values are used to predict how much energy appliances consume and how much is produced. Researchers argued that EVs, as energy resources, can create ancillary service markets in SGs by providing ancillary services [36]; therefore, grid-integrated-vehicle (GIV) technology makes EVs an appropriate investment for ancillary service markets.

Furthermore, it is vital to consider individual consumption behaviour when determining optimal EV operating points to increase user profit and resource effectiveness. However, no mechanism that considers individual consumption behaviour had been created. Thus, a V2H aggregator was proposed that uses the in-vehicle batteries of EVs to enable individuals to participate in a regulated market, and the results indicated that it could predict the power required by the grid. In another study, researchers presented partially implemented software to control energy exchange in V2H technology [37] and implemented various basic algorithms to satisfy the load demand. The software can receive requests, such as XML documents, from several control sources, including long- and short-term plans from the smart home or EV perspective. The model also includes an energy flow control unit (EFCU) as a system for bidirectional EV connection within the smart home. Conventionally, EFCUs are operated manually to start and stop charging and discharging. In contrast, the optimized model controls charging and discharging automatically based on the predicted long- and short-term plans, using any communication technology, e.g., Wi-Fi or Bluetooth.

A study compared two house MGs comprising PV panels, wind generation, an energy storage battery, and an EV [38]. One MG incorporated a V2H unit installed in a home linked to the main grid, because the EV was not available every day. The economic benefit of V2H was examined, and its cost advantages may persuade households to switch from thermal (combustion-engine) vehicles to EVs. Furthermore, EVs release half as much CO2 as combustion vehicles, reducing pollution dramatically; as a result, CO2 emissions would be significantly reduced over a 20-year time frame. The study also demonstrated the success of V2H in terms of electrical service continuity, energy self-sufficiency, and pricing. Finally, the study proposed a new research direction: applying artificial intelligence (AI) algorithms to predict meteorological data for optimal energy generation and to predict EV availability and use.

Furthermore, several parameters should be considered when modelling MGs, such as pricing, RnE generation, and energy consumption, to better manage demand and load shedding. ML algorithms are ubiquitous in AI applications [39] and are commonly classified as supervised or unsupervised [40]. Supervised ML algorithms are trained on a labelled dataset; in V2H applications, for instance, the charging and discharging cases can serve as labels. Unsupervised ML algorithms, in contrast, have no labelled output variables, and the training dataset comprises only input variables, such as time-series data for load forecasting.

Researchers proposed a supervised ML model to predict EV availability for V2H services in order to understand the impact of vehicle availability on capacity [19]. First, the model predicts the EV's start and end locations (a classification problem); then, it predicts the total trip distance (a regression problem). A light gradient-boosting machine (LightGBM) was used for all of these prediction tasks. The model was applied to five distinct vehicle usage profiles; EV availability was first predicted for each profile, with the profiles classified by the number of trips per week. Finally, the model was used on each user profile to achieve the lowest electricity bill for each class. The evaluation showed that the model predicts the EVs' travelled distance and location with an accuracy of 85%.

The authors of [11] proposed an unsupervised ML model for the economic analysis of the profits of dynamic pricing schemes in the different operation modes of an EV charging and discharging approach in HEMS. The V2H operation mode showed a relative financial advantage without affecting customer comfort. Furthermore, the results indicated that using V2H to minimize grid electricity consumption during peak pricing periods is more beneficial than V2G or grid-to-vehicle (G2V) operation.

DL, a subset of ML, uses artificial neural networks (ANNs) organized as a layered hierarchy of concepts. Moreover, RL, which learns from rewards and penalties, has gained significant attention in dynamically changing environments, and there is growing interest in data-driven techniques for modelling HEMSs. As a result, researchers are looking for methods that can provide insights and predictive analytics. Table 1 summarizes the algorithms used in the most recent literature (2019–2022) to predict several parameters in HEMSs that integrate V2H technology.

Table 1.

ML application in HEMS with a V2H unit.

Despite extensive research on residential energy management using RL, real-time smart energy scheduling algorithms that consider the operation of multiple smart home appliances, PV solar generation, SB, and V2H units have not been studied. Thus, this study proposes a home centralized photovoltaic (HCPV) technique that uses deep learning (DL) and a model-free RL algorithm, namely fuzzy Q-learning. The main contributions of this paper are as follows:

- The proposed RL-HCPV model optimizes the energy usage of a smart home equipped with PV solar panels, stationary batteries, EVs, smart appliances, and V2H units;

- Various DL algorithms predict PV power generation, appliance consumption, and prices one hour in advance. An RL algorithm then makes efficient power decisions based on the predicted data: the RL-HCPV uses fuzzy Q-learning to make optimal decisions during on-peak and off-peak periods, reducing costs and providing sustainable power for the appliances.
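The interplay between prediction and decision-making can be illustrated with a toy fuzzy Q-learning loop. This is a minimal sketch under stated assumptions, not the RL-HCPV implementation: the state is a single normalized price in [0, 1] described by three hypothetical triangular fuzzy sets (low, medium, high), the actions (`charge`, `idle`, `discharge`) and `toy_reward` are invented for illustration, and a uniform random draw stands in for the DL price forecast. Q-values are stored per fuzzy rule and blended by membership degree, and the temporal-difference error is shared among rules in proportion to their firing strength; this simplified variant may differ from the paper's exact formulation.

```python
import random

ACTIONS = ("charge", "idle", "discharge")  # hypothetical storage actions
CENTERS = (0.0, 0.5, 1.0)                  # hypothetical fuzzy sets: low, medium, high price
WIDTH = 0.5                                # half-width of each triangular set


def memberships(price):
    """Triangular membership degrees of a normalized price in [0, 1]; they sum to 1."""
    return [max(0.0, 1.0 - abs(price - c) / WIDTH) for c in CENTERS]


class FuzzyQLearner:
    """Q-values kept per fuzzy rule and blended by membership degree."""

    def __init__(self, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
        self.q = [[0.0] * len(ACTIONS) for _ in CENTERS]  # one Q-vector per rule
        self.alpha, self.gamma, self.epsilon = alpha, gamma, epsilon
        self.rng = random.Random(seed)

    def q_values(self, price):
        mu = memberships(price)
        return [sum(m * row[a] for m, row in zip(mu, self.q)) for a in range(len(ACTIONS))]

    def greedy_action(self, price):
        qs = self.q_values(price)
        return max(range(len(ACTIONS)), key=qs.__getitem__)

    def act(self, price):
        """Epsilon-greedy exploration over the blended Q-values."""
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(ACTIONS))
        return self.greedy_action(price)

    def update(self, price, action, reward, next_price):
        """Q-learning TD update, distributed over rules by firing strength."""
        target = reward + self.gamma * max(self.q_values(next_price))
        td_error = target - self.q_values(price)[action]
        for i, m in enumerate(memberships(price)):
            self.q[i][action] += self.alpha * m * td_error


def toy_reward(price, action):
    """Hypothetical reward: discharging pays off at high prices, charging at low ones."""
    if ACTIONS[action] == "discharge":
        return price - 0.5
    if ACTIONS[action] == "charge":
        return 0.5 - price
    return 0.0


def train(steps=20000, seed=1):
    agent = FuzzyQLearner(seed=seed)
    env_rng = random.Random(seed + 1)  # separate stream so exploration is independent of prices
    price = env_rng.random()
    for _ in range(steps):
        action = agent.act(price)
        next_price = env_rng.random()  # stand-in for the DL price forecast
        agent.update(price, action, toy_reward(price, action), next_price)
        price = next_price
    return agent


if __name__ == "__main__":
    trained = train()
    for p in (0.0, 0.5, 1.0):
        print(p, ACTIONS[trained.greedy_action(p)])
```

Under these assumptions the greedy policy should end up charging at low prices and discharging at high prices. In a fuller model, the state would also include the predicted PV generation, appliance demand, SB and EV SOC, and EV availability.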

The proposed approach differs from existing model-based optimization methods for HEMSs in two ways: (1) a model-free fuzzy Q-learning method schedules energy for smart home appliances, PV power generation, the SB, and a V2H unit under time-of-use (ToU) and real-time pricing (RTP) tariffs; and (2) appropriate DL algorithms predict appliance consumption, PV generation, prices, EV availability, and SOC to support the proposed fuzzy Q-learning algorithm. Combined with the DL models, the proposed RL-HCPV algorithm lowers customer expenditure while maintaining the desired appliance operation. The simulations depict a single home with PV solar panels, an SB, smart appliances, and a V2H device under ToU and RTP tariffs.

The simulation results demonstrate that the proposed DL and RL models can minimize the consumer’s power costs while maintaining user comfort. Furthermore, the RL-HCPV algorithm’s performance with and without the V2H unit is analysed, and it is confirmed that integrating the V2H unit results in considerable energy savings under the different penalty constraints of the reward function.

The remainder of this paper is organized as follows: Section 2 defines the system modelling of the PV generation, appliance energy consumption, V2H units, EV availability, and prices; Section 3 presents the proposed RL-HCPV algorithm formulation using various DL algorithms and fuzzy Q-learning; Section 4 provides the simulation results for the proposed RL-HCPV model; and Section 5 presents the conclusions.

2. System Modelling

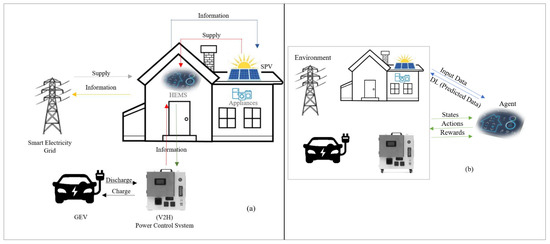

In this study, we analyse the case in which the HEMS performs automated energy management for a single house by scheduling and regulating its shiftable and non-shiftable household appliances under ToU and RTP tariffs. Figure 1 depicts the conceptual system model for the proposed RL-HCPV and the data classification for the customer, weather station, and utility company.

Figure 1.

(a) A schematic of the RL-HCPV concept. (b) DL and RL in RL-HCPV.

2.1. Objective Function

This model aims to build an ideal smart MG that reduces investment and energy expenses while integrating V2H technology, by increasing home autonomy and decreasing grid energy consumption. The investment cost comprises the purchase and installation of all equipment, including PV panels, SB, V2H units, electronics, and cables, as well as operation and maintenance costs. The energy cost mainly consists of the grid operator's energy bills. The objective function ensures the optimal operation of the smart MG with minimized operating costs. Furthermore, the battery installation capacity should be adjusted by incorporating the deterioration cost. The cost function is optimized using Equation (1) [38,54]:

where the operation cost is given by:

Here, the PV panel cost is expressed in $/W and the SB cost in $/J; they apply to the installed PV power and the battery capacity, respectively. The cost of the electricity supplied by the utility can be expressed as follows:

Here, the utility grid price fluctuates during the day, with off-peak periods cheaper than on-peak periods, and the power provided by the utility is the quantity this study seeks to reduce.
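The two cost components named above can be sketched as follows. Because Equations (1)–(3) are not reproduced here, this is a minimal sketch under assumptions: the investment term multiplies the $/W PV price by the installed PV power and the $/J SB price by the battery capacity (converted from kWh using 1 kWh = 3.6 × 10^6 J), and the operation term sums the utility price times grid power over hourly steps. How Equation (1) combines the two terms is not modelled, and all prices and sizes in the example are hypothetical.

```python
J_PER_KWH = 3.6e6  # 1 kWh = 3.6 MJ


def investment_cost(pv_cost_per_w, pv_installed_w, sb_cost_per_j, sb_capacity_kwh):
    """Assumed investment term: $/W x installed PV watts + $/J x SB capacity in joules."""
    return pv_cost_per_w * pv_installed_w + sb_cost_per_j * sb_capacity_kwh * J_PER_KWH


def grid_energy_cost(prices_per_kwh, grid_power_kw, dt_h=1.0):
    """Assumed operation term: utility price x grid power, summed over time steps."""
    if len(prices_per_kwh) != len(grid_power_kw):
        raise ValueError("price and power series must have the same length")
    return sum(c * p * dt_h for c, p in zip(prices_per_kwh, grid_power_kw))


sb_price_per_j = 300.0 / J_PER_KWH                          # hypothetical $300/kWh battery
print(investment_cost(1.0, 3000.0, sb_price_per_j, 10.0))   # hypothetical $1/W, 3 kW PV, 10 kWh SB
print(grid_energy_cost([0.10, 0.30], [2.0, 1.0]))           # one off-peak hour, one on-peak hour
```

The unit conversion matters in practice: battery prices are usually quoted per kWh, so the equivalent $/J figure is tiny; for example, $300/kWh is about 8.3 × 10^-5 $/J.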

2.2. Power Balance Modelling

In residential homes, shiftable and non-shiftable appliances include lights, kitchen appliances (ovens, stoves, kettles), washing machines, dishwashers, air conditioners, and EVs. Kettles, stoves, refrigerators, and air conditioners are examples of non-shiftable equipment that draw steady power for a predetermined amount of time. In contrast, appliances such as washing machines and EVs can be operated at other times; for instance, a washing machine can be run during off-peak hours to reduce energy costs.

Energy costs can be reduced by rescheduling shiftable equipment, although this may impede user comfort. Furthermore, heavy electrical demand occurs between 5:00 and 9:00 p.m., when grid power prices steadily rise to their maximum. A more flexible strategy is therefore necessary to satisfy the comfort and financial requirements of residential customers. SB energy storage systems are an excellent choice for peak shifting or load-shedding reduction of household power demand. They can act either as time- and power-shiftable loads or as power supply resources. In this study, SB and V2H units are used to balance demand and supply, reducing both expense and discomfort. The limits for PV power, SB and EV battery charging and discharging, and utility costs are given by Equations (4)–(7).

where the PV power at time t is bounded by the maximum power generated by the PV panels; the SB SOC is kept between maximum and minimum limits to avoid battery degradation; likewise, the EV battery SOC is kept between its maximum and minimum limits; and the utility cost is bounded by its maximum and minimum values. Equation (8) presents the power balance over the operation.

That is, the appliances' power consumption at time t equals the total power supplied by the grid, the PV panels, and the EV.
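The bounds described for Equations (4)–(7) and the balance of Equation (8) can be checked one time step at a time. In this sketch the PV bound is assumed to be 0 ≤ PV power ≤ maximum PV power, the balance uses only the grid, PV, and EV terms listed in the text, and the `Limits` fields, units, and tolerance are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Limits:
    """Hypothetical container for the bounds described for Equations (4)-(7)."""
    pv_max_kw: float
    sb_soc_min: float
    sb_soc_max: float
    ev_soc_min: float
    ev_soc_max: float
    price_min: float
    price_max: float


def violations(lim, p_pv, soc_sb, soc_ev, price, p_load, p_grid, p_ev, tol=1e-6):
    """Return the names of the bounds and of the power balance that fail at one time step."""
    failed = []
    if not 0.0 <= p_pv <= lim.pv_max_kw:
        failed.append("pv_power")
    if not lim.sb_soc_min <= soc_sb <= lim.sb_soc_max:
        failed.append("sb_soc")
    if not lim.ev_soc_min <= soc_ev <= lim.ev_soc_max:
        failed.append("ev_soc")
    if not lim.price_min <= price <= lim.price_max:
        failed.append("price")
    # Power balance: load = grid + PV + EV (the terms named in the text).
    if abs(p_load - (p_grid + p_pv + p_ev)) > tol:
        failed.append("power_balance")
    return failed


lim = Limits(pv_max_kw=5.0, sb_soc_min=0.2, sb_soc_max=0.9,
             ev_soc_min=0.2, ev_soc_max=0.9, price_min=0.05, price_max=0.5)
print(violations(lim, p_pv=2.0, soc_sb=0.5, soc_ev=0.6, price=0.3,
                 p_load=3.5, p_grid=1.0, p_ev=0.5))
```

Returning the list of failed checks, rather than a single boolean, makes it easier to see which constraint a candidate schedule breaks.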

2.3. Modelling of Appliance Consumption

The household appliances in this research are classified by their energy consumption patterns as follows (see Table 2):

Table 2.

Home appliances’ parameters.

- Non-shiftable appliances;

- Shiftable interruptible appliances;

- Fixed appliances.

Non-shiftable appliances cannot be stopped once they are turned on; however, before they start operating, they can be moved from one time slot to another. Shiftable interruptible appliances can be turned off at any moment and help save power costs when turned off during peak hours [54]. Fixed appliances are base appliances that are neither shiftable nor interruptible and require a continuous power supply. The power consumption of non-shiftable appliances can be divided into two modes, high and low consumption. Consequently, the cost of non-shiftable appliance power consumption at time t is calculated as follows (Equation (9)):

Users can customize the appliances based on their comfort by specifying preferences such as the preferred duration, temperature, and interval. For non-shiftable appliances, the operating time can be delayed to the off-peak period; in this case, the user specifies a dissatisfaction price. Equation (9) uses the updated operating time of non-shiftable appliances because these appliances cannot be interrupted once they start working. CT(t) is the electricity tariff at time t; the other terms are the power consumption of the non-shiftable appliance in its current mode at time t, its power rating, and the predicted extra consumption cost at a specific time. Various requirements must be met before scheduling can begin. The cost of shiftable interruptible appliances is calculated as follows (Equation (10)):

Equation (10) depends on the scheduled start time of the appliance, its updated operating time, and the power consumption of the shiftable appliance at time t. Additionally, the dissatisfaction price for shiftable appliances can be used to specify how quickly an appliance should complete its job, for example, minimizing the run time of an electric water heater or rescheduling the start and end times of a vacuum cleaner so that it cleans the house before or after peak hours. Finally, as a constraint, the appliance must complete its operation within twelve hours of being scheduled.
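The trade-off between tariff savings and the dissatisfaction price can be illustrated with a brute-force search over start times. This is a hypothetical sketch, not Equations (9)–(10): it assumes hourly slots, an appliance that runs uninterrupted once started, a dissatisfaction cost that grows linearly with the start delay, and the twelve-hour completion window applied to that single run. The two-level tariff is higher between 3:00 p.m. and 11:00 p.m., as noted in the Abstract, but its rates are invented.

```python
def best_start(prices, power_kw, duration_h, scheduled_h, dissatisfaction_per_h, window_h=12):
    """Choose the start hour minimizing energy cost plus a linear delay penalty.

    The run must finish within `window_h` hours of `scheduled_h` (assumed rule).
    Returns (start_hour, total_cost), or None if no feasible start exists.
    """
    latest = min(scheduled_h + window_h - duration_h, len(prices) - duration_h)
    best = None
    for start in range(scheduled_h, latest + 1):
        energy_cost = power_kw * sum(prices[start:start + duration_h])
        delay_cost = dissatisfaction_per_h * (start - scheduled_h)
        total = energy_cost + delay_cost
        if best is None or total < best[1]:
            best = (start, total)
    return best


# Hypothetical ToU rates in $/kWh: on-peak from 15:00 to 23:00.
day = [0.30 if 15 <= h < 23 else 0.10 for h in range(24)]
prices = day * 2  # two days, so the 12 h window can cross midnight
print(best_start(prices, power_kw=2.0, duration_h=2, scheduled_h=17, dissatisfaction_per_h=0.05))
```

For a 2 kW, 2 h run requested at 17:00, a low dissatisfaction price moves the start to 23:00 to avoid the on-peak rate, whereas a higher price per hour of delay (e.g. 0.2) keeps the run at 17:00.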

2.4. Modelling of PV Generation

Solar PV panels are now widely used in many types of buildings and are likely to be one of the key renewable energy supplies in smart MGs. However, the generated power depends strongly on seasonal and climatic conditions. Weather variability affects the generated power and negatively impacts the stability, dependability, and operation of the grid. An accurate PV generation forecast is therefore a critical requirement for ensuring the stability and dependability of the smart MG [55]. The solar PV power generated by a building at each time interval is determined by Equation (11) [56]:

Here, the three factors are the area of the solar panels, the PV efficiency, and the solar irradiance, respectively. There are three possible cases depending on the value of the net power (Equation (11)) [38]:

- Net power = 0: Renewables completely meet the load, and no electricity from the SB, EV, or utility is required;

- Net power ≤ 0: The SB, the utility, and EVs are necessary to compensate for the shortage of renewables (Equation (12)). The state of the SB, the utility prices, and the availability of EVs determine the use of each of these resources;

- Net power ≥ 0: Overproduction is stored in the free capacity of the SB. Excess power is transferred to the grid if the battery is fully charged or the surplus exceeds its maximum charging power. The EV battery serves as backup storage if the EV is available or the grid capacity is insufficient.
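Equation (11) and the three net-power cases can be sketched as follows, assuming that PV power is the product of panel area, efficiency, and irradiance and that net power is PV generation minus load. The array size, efficiency, irradiance values, and load are hypothetical and serve only to contrast a sunny and a cloudy hour.

```python
def pv_power_kw(area_m2, efficiency, irradiance_kw_per_m2):
    """Assumed form of Eq. (11): panel area x PV efficiency x irradiance."""
    return area_m2 * efficiency * irradiance_kw_per_m2


def net_power_case(p_pv_kw, p_load_kw, tol=1e-9):
    """Classify net power (PV generation minus load) into the three cases."""
    net = p_pv_kw - p_load_kw
    if abs(net) <= tol:
        return "balanced"  # renewables exactly meet the load
    return "surplus" if net > 0 else "shortage"


load_kw = 2.0                          # hypothetical household load
sunny = pv_power_kw(20.0, 0.18, 0.8)   # hypothetical 20 m2 array, 18% efficiency
cloudy = pv_power_kw(20.0, 0.18, 0.2)
print(round(sunny, 2), net_power_case(sunny, load_kw))
print(round(cloudy, 2), net_power_case(cloudy, load_kw))
```

The tolerance avoids classifying a floating-point residue as a tiny surplus or shortage.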

The SB is the first to intervene when there is a renewable energy shortage (net power ≤ 0). It can supply the power and maintain the balance between supply and demand when it is sufficiently charged and the demand is below its maximum discharge power. If the required discharge power exceeds the maximum SB discharge power, the SB supplies its maximum power and the grid supplies the remainder. Although the grid is a major energy supply, its power may be restricted due to reliability and security concerns. The grid therefore supplies the load only within its power constraints; otherwise, the EV serves as a backup to provide an uninterrupted power supply. Finally, load shedding is used if the EV maximum power limits are exceeded or the EV battery cannot discharge because it has reached its minimum SOC.

Conversely, when there is overproduction (net power ≥ 0), the SB stores the surplus generated power. If the SB is completely charged or the surplus exceeds the SB maximum charging power, the utility grid absorbs the remainder to maintain the balance between supply and demand. The grid power is restricted to increase smart MG stability and autonomy; thus, the EV stores a portion of the generated power when the grid's maximum power has been reached.
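The priority order described for shortages and surpluses (SB first, then the grid up to its limit, then the EV if it is at home) can be written as one greedy allocation, because the same order applies in both directions. In this sketch the limits are assumed to be the power available in the relevant direction at that step (discharge or import limits for a shortage; charge or export limits for a surplus), and treating any leftover surplus as curtailment is an assumption the text does not state.

```python
def dispatch(net_kw, sb_limit_kw, grid_limit_kw, ev_limit_kw, ev_at_home):
    """Allocate |net power| to the SB, then the grid, then the EV.

    net_kw < 0 is a shortage; net_kw >= 0 is a surplus. The leftover is load
    shedding for a shortage and (by assumption) curtailment for a surplus.
    """
    remaining = abs(net_kw)
    plan = {}
    for source, limit in (("sb", sb_limit_kw),
                          ("grid", grid_limit_kw),
                          ("ev", ev_limit_kw if ev_at_home else 0.0)):
        share = min(remaining, max(limit, 0.0))  # never exceed the source's limit
        plan[source] = share
        remaining -= share
    plan["shed" if net_kw < 0 else "curtailed"] = remaining
    return plan


# Hypothetical shortage of 5 kW with the EV parked at home.
print(dispatch(-5.0, sb_limit_kw=2.0, grid_limit_kw=2.0, ev_limit_kw=3.0, ev_at_home=True))
# Hypothetical surplus of 4 kW with the EV away.
print(dispatch(4.0, sb_limit_kw=1.5, grid_limit_kw=1.0, ev_limit_kw=3.0, ev_at_home=False))
```

In the first case the EV covers the last 1 kW after the SB and grid reach their limits; in the second, the EV is away, so 1.5 kW of surplus is left over.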

2.5. Modelling of EV SOC and Availability

The proposed management technique also considers the EV battery's SOC, a key metric because it indicates the amount of stored energy. Equation (13) gives a typical estimate of the EV battery's SOC based on the charging and discharging power.

Equation (13) involves the initial SOC and the EV battery capacity, together with the energy charged into the EV battery from the grid, the energy supplied to the home, and the energy returned to the grid. To preserve the EV battery's lifespan, several limits are placed on the power delivered by the EV through the V2H unit and on the battery SOC.

According to Equation (14), the EV power is bounded between the lowest operating power of the EV and the maximum power that can be provided to the home or grid (V2H or V2G).

Similarly, Equation (15) prevents deep discharge and full charging of the EV battery by bounding its SOC between minimum and maximum values.
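Equations (13)–(15) did not survive extraction. A typical form consistent with the descriptions above is sketched below; the symbols are chosen here for illustration, and the original expressions may differ (for example, in how efficiencies enter).

```latex
\begin{aligned}
\mathrm{SOC}(t) &= \mathrm{SOC}_{0} + \frac{1}{C_{EV}} \sum_{\tau=1}^{t} \bigl[ E_{G2V}(\tau) - E_{V2H}(\tau) - E_{V2G}(\tau) \bigr] \\
P_{EV}^{\min} &\le P_{EV}(t) \le P_{EV}^{\max} \\
\mathrm{SOC}^{\min} &\le \mathrm{SOC}(t) \le \mathrm{SOC}^{\max}
\end{aligned}
```

Here C_EV is the battery capacity, and E_G2V, E_V2H, and E_V2G are the energies charged from the grid, supplied to the home, and returned to the grid in each step.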

Equation (16) [57] accounts for the charging and discharging efficiency of the EV, where the remaining terms denote the cost of power loss, the EV range, the distance between the EV and the home, the total EV battery capacity, and the power price at time t.

Equation (17) defines whether the EV is parked at home and available for a V2H connection:

2.6. Modelling of SB

The energy balance equation for the SB is given by Equation (18):

Here, the terms denote the energy storage level at time t, the energy loss, the SB’s charging power and efficiency, and its discharging power and efficiency, respectively. The SB’s stored energy is constrained by its minimum and maximum values as follows:

To keep the SB from being depleted over the scheduling horizon, its energy storage level at the end of the day, T, is assumed to equal its initial value:

The following constraints ensure that the charging and discharging powers do not exceed the SB’s maximum charging and discharging power ratings:

Two binary variables represent the charging and discharging operation modes of the SB. Equation (21) forces the charging power to zero when the SB is not in charging mode (i.e., when the charging-mode variable equals 0) and, likewise, forces the discharging power to zero when the SB is not in discharging mode. The SB can operate in only one of these modes at a time, as enforced by the restriction in Equation (22).
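A minimal sketch of the SB model in Equations (18)–(22) follows, assuming the common form in which charging energy is multiplied by the charging efficiency and discharging energy is divided by the discharging efficiency. The function names `sb_step` and `is_cyclic` and all default values are hypothetical placeholders, not the paper’s parameters.

```python
def sb_step(e_prev, p_ch, p_dis, u_ch, u_dis, *, eta_ch=0.95, eta_dis=0.95,
            e_loss=0.0, e_min=1.0, e_max=10.0, p_ch_max=3.0, p_dis_max=3.0,
            dt=1.0):
    """Advance the SB energy level (kWh) by one step and enforce its constraints.

    u_ch / u_dis are the binary charging / discharging mode variables.
    Raises ValueError if any constraint is violated.
    """
    # At most one operating mode at a time (u_ch + u_dis <= 1).
    if u_ch + u_dis > 1:
        raise ValueError("SB cannot charge and discharge simultaneously")
    # Power limits gated by the mode: power must be zero when its mode is off.
    if not 0 <= p_ch <= u_ch * p_ch_max:
        raise ValueError("charging power violates its limit or mode")
    if not 0 <= p_dis <= u_dis * p_dis_max:
        raise ValueError("discharging power violates its limit or mode")
    # Energy balance with efficiencies and a loss term.
    e_new = e_prev + eta_ch * p_ch * dt - p_dis * dt / eta_dis - e_loss
    # Storage bounds.
    if not e_min <= e_new <= e_max:
        raise ValueError("stored energy outside [e_min, e_max]")
    return e_new


def is_cyclic(levels, tol=1e-9):
    """End-of-day level equals the initial level (within a tolerance)."""
    return abs(levels[-1] - levels[0]) <= tol
```

Raising an error rather than clipping keeps the sketch close to the constraint formulation: a scheduler would only propose actions that pass these checks.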

2.7. Modelling of ToU and RTP Pricing

Different power tariffs (e.g., ToU, CPP, and RTP) have been introduced under the DR program to reduce electricity use during on-peak hours. DR incentivises shifting load from on-peak to off-peak hours, when costs are lower. Prices increase when power demand rises during on-peak hours, and the extra demand is met at expensive utility tariffs. The electricity price per unit is determined mainly by generation, transmission, and distribution costs, and it is also strongly affected by utility and consumer demand. The utility, as the service provider, sets power rates on a seasonal (ToU), weekly, daily, or hourly (RTP) basis.

RTP and ToU are popular pricing tariffs worldwide. Unlike ToU, RTP fluctuates in response to demand and the wholesale price, which may cause the customer some discomfort. The ToU tariff is divided into three blocks (on-peak, shoulder-peak, and off-peak hours), with prices remaining constant throughout the season within each block. Utilities determine the pricing rate for each ToU block after monitoring consumers’ past energy consumption behaviour.

The adjusted ToU tariff accounts for extra generation costs and user load demand during particular hours, with changes applied per hour for on-peak and shoulder-peak hours. The adjusted ToU price signal is given by Equation (23) [58]:

Here, A indicates the off-peak hours, and the remaining term denotes the extra cost. Utilities set pricing tariffs according to demand, with electricity costs higher during on-peak hours and lower during off-peak hours, when households and companies consume the least power.
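Because Equation (23) is not reproduced here, the sketch below assumes the simplest reading of the text: the adjusted ToU price equals the off-peak price plus an extra cost that applies only in on-peak and shoulder-peak hours. The block boundaries (on-peak 2:00–5:00 p.m. and 7:00–9:00 p.m., off-peak from midnight to 6:00 a.m.) loosely follow the simulation description in Section 4.1 and, like the prices, are hypothetical.

```python
def tou_block(hour):
    """Map an hour (0-23) to a ToU block; boundaries are hypothetical."""
    if 14 <= hour < 17 or 19 <= hour < 21:
        return "on-peak"
    if 0 <= hour < 6:
        return "off-peak"
    return "shoulder-peak"


def adjusted_tou_price(hour, off_peak_price, extra_cost):
    """Off-peak price plus the extra cost of the hour's block ($/kWh)."""
    return off_peak_price + extra_cost.get(tou_block(hour), 0.0)


def daily_tou_profile(off_peak_price, extra_cost):
    """Adjusted ToU price for each of the 24 hours of a day."""
    return [adjusted_tou_price(h, off_peak_price, extra_cost) for h in range(24)]
```

For instance, `daily_tou_profile(0.21, {"on-peak": 0.07, "shoulder-peak": 0.03})` yields a three-level price curve of roughly 0.21, 0.24, and 0.28 $/kWh.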

RTP prices may be issued hour-ahead, day-ahead, or week-ahead. Day-ahead and week-ahead prices are based on prior power usage, whereas the hourly RTP price reflects the daily prediction of demand and cost. Programming electrical appliances is simple under day-ahead and week-ahead pricing, but hourly price scheduling is more challenging because the price fluctuates between on-peak and off-peak hours; load forecasting can be employed to address this issue [59]. Shiftable appliances can be moved from on-peak to off-peak prices; vacuum cleaners, humidifiers, and hairdryers are regarded as shiftable appliances. The total hourly power consumed by all shiftable appliances (SH) in a day is determined as follows:

Here, T = 24, and the remaining terms denote the power rating of each shiftable appliance and its hourly status (ON or OFF). The state of each appliance for the next period is given as follows:

The second appliance type is non-shiftable: these appliances cannot be interrupted or shifted and must follow a preset execution pattern, so they offer little help with load scheduling or energy management. This study considers dishwashers and rice cookers as non-shiftable appliances. Equation (26) calculates the total hourly energy consumption (kWh) of the non-shiftable appliances in a day.

where the terms denote the power rating of each non-shiftable appliance and its status (ON or OFF).
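Equations (24) and (26) both sum power rating × ON/OFF status over appliances and hours. The sketch below assumes one-hour steps and hypothetical appliance ratings; the helper `shift` (also hypothetical) illustrates moving a shiftable appliance’s run to off-peak hours, which changes the hourly profile but not the daily energy.

```python
def hourly_energy(ratings_kw, status, dt_h=1.0):
    """Energy (kWh) drawn in each hour by a class of appliances.

    ratings_kw: {appliance: power rating in kW}
    status:     {appliance: list of hourly ON (1) / OFF (0) flags}
    """
    horizon = len(next(iter(status.values())))
    return [sum(ratings_kw[a] * status[a][t] * dt_h for a in status)
            for t in range(horizon)]


def daily_energy(ratings_kw, status, dt_h=1.0):
    """Total daily energy (kWh): the sum over hours of the hourly energy."""
    return sum(hourly_energy(ratings_kw, status, dt_h))


def shift(flags, hours):
    """Delay an ON/OFF schedule by `hours`, wrapping around the day."""
    n = len(flags)
    shifted = [0] * n
    for t, on in enumerate(flags):
        shifted[(t + hours) % n] = on
    return shifted
```

Non-shiftable appliances would use the same `hourly_energy` and `daily_energy` functions but never pass through `shift`.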

2.8. Modelling of V2H Unit

Equation (28) expresses the total energy stored in the EV battery [12].

The equation relates the overall energy in the EV battery to the initial energy stored in it, the energy transferred from the grid to the EV battery, the energy supplied from the EV to the home, the energy discharged from the EV to the grid, and the total energy consumed by the EV during the trip.

The associated constraints involve the EV charging and discharging powers and a binary variable (0 or 1) that represents the EV connection mode.

The energy stored in the EV battery should not fall below a predetermined threshold, so that the remaining energy can meet the driving pattern requirements. The charging power of the EV battery is limited to a maximum value, and the EV battery SOC must remain within the identified limits. The rating of the V2H charge inverter is taken from [38].
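A minimal sketch of the EV energy balance in Equation (28) follows, with the binary connection mode gating every exchange with the grid and the home. The names `ev_energy` and `max_v2h`, and the reading of the driving threshold as a minimum energy reserve, are assumptions for illustration.

```python
def ev_energy(e_init, g2v, v2h, v2g, trip, connected):
    """Hourly EV battery energy (kWh) following the structure of Eq. (28).

    g2v, v2h, v2g: energy exchanged with the grid/home in each hour (kWh).
    trip:          energy consumed while driving in each hour (kWh).
    connected:     binary connection mode (1 = parked and plugged in).
    """
    levels = [e_init]
    e = e_init
    for t, mode in enumerate(connected):
        # Grid/home exchanges only count while the EV is connected.
        e += mode * (g2v[t] - v2h[t] - v2g[t]) - trip[t]
        levels.append(e)
    return levels


def max_v2h(e_now, reserve, p_v2h_max, dt_h=1.0):
    """Largest V2H energy this hour that keeps `reserve` kWh for driving."""
    return max(0.0, min(p_v2h_max * dt_h, e_now - reserve))
```

With 19 kWh stored, a 15 kWh driving reserve, and a 7 kW V2H unit, `max_v2h(19, 15, 7)` allows only 4 kWh to be sent to the home in that hour.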

3. Proposed RL-HCPV

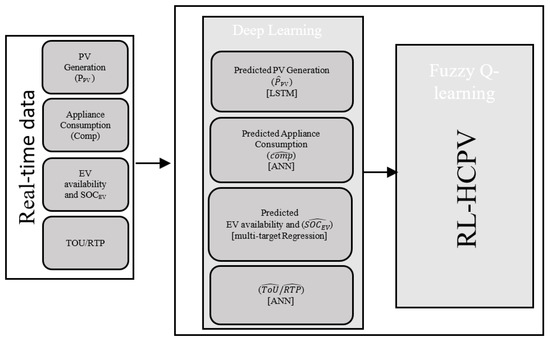

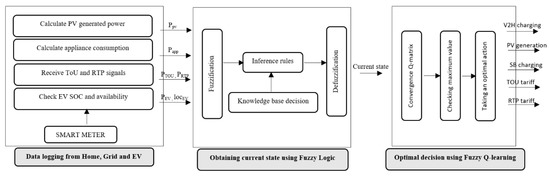

Reinforcement learning (RL) is an ML technique in which an agent attempts to maximize its cumulative reward by executing actions in, and interacting with, an unknown environment. The RL-based data-driven technique proposed in this research consists of two stages, as shown in Figure 2: (i) appropriate DL algorithms are trained to predict future PV generation, appliance consumption, EV SOC, EV availability, and electricity prices (ToU and RTP); and (ii) a fuzzy Q-learning algorithm is developed to generate efficient one-hour-ahead DR decisions. The following subsections detail this data-driven solution strategy.

Figure 2.

Schematic of the RL-HCPV system.

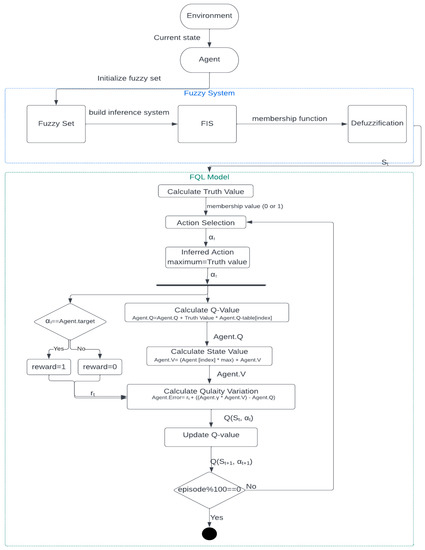

One-hour-ahead predictions of PV generation, appliance consumption, EV SOC, EV availability, and electricity prices enable effective scheduling of the charging and discharging of the energy storage units, namely the SB and the EV battery (via the V2H unit). To manage the DR of this smart MG optimally and intelligently, an RL-HCPV algorithm is proposed in Algorithms 1 and 2 and Figure 3. The predicted data are used as input parameters to the fuzzy Q-learning model, which derives the charging and discharging schedule of the energy storage units while respecting all energy and transport demand constraints. This section explains how the model’s input parameters are predicted. Several DL algorithms, including artificial neural networks (ANN), long short-term memory (LSTM), and multi-target regression, are used to predict the uncertain parameters (PV generation, appliance consumption, EV SOC, EV availability, and electricity prices) from historical time-series datasets.

| Algorithm 1 Proposed RL-HCPV Algorithm |


Figure 3.

FQL for the RL-HCPV system.

| Algorithm 2 Fuzzy Q-Learning (Decision Making) |


3.1. Prediction of Uncertainty Parameters of HEMS

A. DL algorithms for the uncertainty prediction

Applying DL to PV power generation prediction helps overcome the limitations of statistical approaches in handling the non-linear data caused by weather variations. DL is an effective prediction approach with many applications, such as predicting appliance consumption, weather conditions, and wind speed [31,46]. The ANN algorithm has become a prominent tool for predicting renewable power generation [60], electricity prices [61], and load [62]. ANNs are one of the easiest ways to build models of buildings, even with minimal physics knowledge. ANNs are developed using training, validation, and testing datasets. Their three primary components are the input, hidden, and output layers: the input layer receives the input data, the hidden part, which may consist of several layers, processes these data, and the output layer produces the final output from the hidden-layer results.
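To make the layer structure concrete, the following is a minimal, untrained feed-forward pass in plain Python, with ReLU hidden layers and a linear output. It only illustrates how data flow from the input layer through the hidden layers to the output layer; training (e.g., by backpropagation) is omitted, and the random initialisation and helper names (`init_mlp`, `forward`) are assumptions, not the paper’s setup.

```python
import random


def init_mlp(sizes, seed=0):
    """Create (weights, biases) for each layer; sizes = [inputs, hidden..., outputs]."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
        layers.append((weights, [0.0] * n_out))
    return layers


def forward(layers, x):
    """Propagate one input vector; ReLU on hidden layers, identity on the output."""
    last = len(layers) - 1
    for i, (weights, biases) in enumerate(layers):
        z = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]
        x = z if i == last else [max(0.0, v) for v in z]
    return x
```

For example, `init_mlp([4, 32, 32, 32, 1])` builds three hidden layers of 32 neurons, matching the appliance-consumption ANN reported later in this section; the input size of 4 is hypothetical.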

B. Uncertainty management strategy for RL-HCPV

Every hour, historical data on PV generation, appliance consumption, EV SOC, EV location, and electricity prices are fed to the DL algorithms, which predict the outputs for the next 24 h. The predicted information is then passed to the decision-making stage, fuzzy Q-learning, for efficient power decisions.

- Datasets:

This study employs five datasets for the uncertainty prediction.

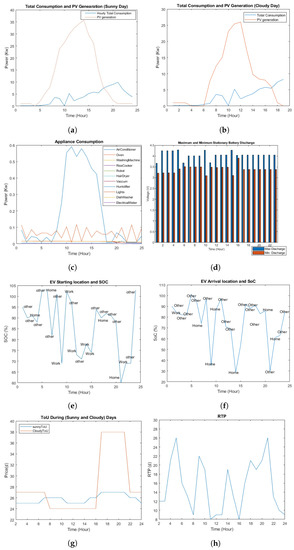

- An appliance consumption dataset [63]: A sample dataset of smart meter readings was obtained from the Saudi Electricity Company (SEC). The data were collected for each appliance at 30-min intervals, as shown in Figure 4c;

- PV generation and SB datasets [64]: A daily solar resource report from the King Abdullah City for Atomic and Renewable Energy, produced under the Renewable Resource Monitoring and Mapping (RRMM) program, was imported. The dataset includes features such as site, latitude, longitude, date, air temperature, irradiance, and SPV;

- An EV dataset [65]: The EV dataset was generated from the “Smart-Grid Smart-City Electric Vehicle Trial Data”. The data describe the EV trips during the trial, including odometer readings, speed, distance, and the battery SOC at the beginning and end of each trip;

- Pricing dataset [66]: SEC data were used for the RTP and ToU tariffs. The dataset contains the hourly prices (peak, shoulder-peak, and off-peak) for each month of the year.

Figure 4. Input experimental profiles: (a,b) total appliance consumption and PV generation; (c) appliance consumption; (d) stationary battery SOC; (e,f) EV availability and SOC; (g) ToU; and (h) RTP.

- Preprocessing:

Preprocessing transforms the imported data into a format suitable for loading into the model. The raw data gathered from the environment were not suitable for direct processing because they could contain unnecessary, duplicated, or missing entries. They were therefore preprocessed into a clean dataset through label encoding, elimination of null values, deletion of duplicate records, and removal of special characters such as “$”. Table 3 summarizes the preprocessing applied to each dataset.
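The preprocessing steps listed above can be sketched in plain Python. The column names (`hour`, `price`, `block`), the function name `preprocess`, and the sample rows are hypothetical; the paper’s actual pipeline and tooling are not specified beyond Table 3.

```python
def preprocess(rows, categorical="block"):
    """Drop null and duplicate rows, strip "$" from prices, and label-encode a column.

    rows: list of dicts, e.g. parsed from a raw CSV file.
    Returns (clean_rows, codes), where codes maps each label to its integer.
    """
    seen, clean = set(), []
    for row in rows:
        if any(v is None or v == "" for v in row.values()):
            continue  # null-value elimination
        key = tuple(sorted(row.items()))
        if key in seen:
            continue  # duplicate removal
        seen.add(key)
        clean.append(dict(row))
    for row in clean:
        for k, v in list(row.items()):
            if isinstance(v, str) and v.startswith("$"):
                # Special-character removal, then conversion to a number.
                row[k] = float(v.lstrip("$").replace(",", ""))
    labels = sorted({row[categorical] for row in clean})
    codes = {label: i for i, label in enumerate(labels)}
    for row in clean:
        row[categorical] = codes[row[categorical]]  # label encoding
    return clean, codes
```

Sorting the labels before assigning integers makes the encoding deterministic across runs.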

Table 3.

Preprocessing for each dataset.

To deal with the uncertainties of PV generation, appliance consumption, EV SOC, EV availability, and electricity prices, the proposed model predicts the future trends of these parameters. Several algorithms (ANN, LSTM, SVR, multi-target regression, and DT) were compared to identify the most accurate one for each uncertainty parameter, and the selected algorithm for each parameter is listed in Table 4. The input for each algorithm is shown in Figure 4.

Table 4.

DL algorithms for each uncertainty parameter.

Each uncertainty parameter was modelled separately to ensure the most accurate predictions, and all DL models were implemented in Python. First, an ANN was trained on the appliance consumption data; it has three hidden layers of 32 neurons each and one output layer for the predicted power consumption. This model achieved a mean absolute error (MAE) of 0.21 and a mean squared error (MSE) of 0.22. Second, an LSTM was applied to PV power generation, with the PV generation training data at the input layer, three hidden layers of 96 neurons each, and one output layer for the predicted PV generation; it achieved an MAE of 0.37 and an MSE of 0.27. Third, multi-target regression suits EV SOC and availability because it predicts several continuous target variables concurrently from the same input dataset. Two targets were considered at every one-hour interval: the EV arrival location and the SOC. This model achieved an MAE of 0.31 and an MSE of 0.15. Finally, an ANN was applied to the ToU and RTP tariffs, using the price observations in the training dataset as inputs at every one-hour interval. The input layer had 15 neurons, followed by three hidden layers of 32, 18, and 9 neurons, respectively, and the output layer for the predicted prices. This model achieved an MAE of 0.37 and an MSE of 0.32.
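For reference, the two reported metrics follow their standard definitions, sketched below. Because squaring shrinks errors smaller than 1, a model can report an MSE below its MAE, as for the EV SOC and availability model; whether the targets were normalised before computing these values is not stated.

```python
def mae(y_true, y_pred):
    """Mean absolute error."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mse(y_true, y_pred):
    """Mean squared error."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
```

With errors of 0.5, 0, and 1, for instance, the MAE is 0.5 while the MSE is about 0.42.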

3.2. RL for Decision-Making in HEMS

A. Fuzzy Q-Learning Overview

Fuzzy Q-learning is a fuzzy variant of the Q-learning method. The input states and their related fuzzy sets must be identified before building a fuzzy inference system (FIS) to combine with Q-learning [67]. As an ML method, fuzzy Q-learning [67] is frequently employed in decision-making to maximize cumulative rewards. Its primary mechanism is a Q-table in which the Q-value Q(s, a) of each state-action pair is updated at every iteration using Equation (31) until the convergence condition is met.

The discount rate defines the relationship between future and present rewards. It takes a value between 0 and 1, where 0 means that the agent depends solely on the present reward, whereas 1 means that the agent values future rewards as much as the present reward. The learning rate indicates how much the new reward influences the previous value of Q(s, a): a learning rate of 0 means that newly collected information is ignored and the received reward does not affect the Q-value, whereas a learning rate of 1 means that only the most recent information is considered.
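Equation (31) is not reproduced in this version. For reference, the standard one-step Q-learning update it presumably follows is shown below, with α the learning rate, γ the discount rate, r the reward, and s′ the next state; these are the conventional symbols and may differ from the original.

```latex
Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma \max_{a'} Q(s', a') - Q(s, a) \right]
```

With α = 0 the bracketed correction is ignored and Q(s, a) is unchanged; with α = 1 the old value is replaced entirely by the new target r + γ max Q(s′, a′).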

B. Fuzzy Q-Learning model for decision making in the RL-HCPV

Under ToU and RTP dynamic pricing and varied energy consumption patterns, RL provides real-time smart energy scheduling for a smart home. The RL process is defined by a state space, an action space, and a numerical reward that the agent receives after performing an action, which assesses the resulting state. The fuzzy Q-learning in the proposed model consists of the following state space, action space, and reward function:

- State-space implementation using fuzzy logic

The state space comprises 16 states, as listed in Table 5. To simplify the model and reduce calculation time, PV power generation and appliance consumption were classified as low or high. The SB and EV battery SOCs were classified as efficient or not efficient, and the EV availability as available or unavailable. In addition, the weather was either cloudy or sunny. Finally, the prices were categorized as cheap or expensive.
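The following is a minimal sketch of how crisp observations might be mapped to discrete states through two fuzzy sets per variable. It assumes linear (ramp) membership functions, picks the label with the larger membership, and uses four binary variables because 2⁴ = 16 matches the stated number of states; the paper’s actual variable set, membership functions, and breakpoints are not reproduced here, so every breakpoint and name below is hypothetical.

```python
def ramp_memberships(x, lo, hi):
    """Degrees of membership (low, high) with a linear transition from lo to hi."""
    if x <= lo:
        high = 0.0
    elif x >= hi:
        high = 1.0
    else:
        high = (x - lo) / (hi - lo)
    return 1.0 - high, high


# Hypothetical breakpoints (lo, hi) per input variable.
BREAKPOINTS = {
    "pv_kwh": (2.0, 8.0),           # low / high PV generation
    "load_kwh": (4.0, 12.0),        # low / high appliance consumption
    "price_usd_kwh": (0.22, 0.26),  # cheap / expensive
    "sb_soc": (0.3, 0.6),           # not efficient / efficient
}


def state_index(obs):
    """Defuzzify each variable to 0/1 and pack the bits into one of 2**4 = 16 states."""
    idx = 0
    for name, (lo, hi) in BREAKPOINTS.items():
        mu_low, mu_high = ramp_memberships(obs[name], lo, hi)
        idx = (idx << 1) | (1 if mu_high > mu_low else 0)
    return idx
```

Packing the labels into an integer gives a direct row index into a 16-row Q-table.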

- Action space

The proposed model aims to supply the smart home with power from PV, V2H, and the SB during on-peak hours. During a power outage, the power supply is scheduled during off-peak hours, when electricity rates are cheaper. Accordingly, the actions are classified as V2H charging, SB charging, ToU tariff, and RTP tariff, while PV-generated energy serves as the standard power source. Depending on the situation, the agent chooses one action from the action space A (see Table 5), given by:

Table 5.

Fuzzy Q-learning states and actions for RL-HCPV.

- Reward function implementation using fuzzy logic

Once the agent performs a random action and observes the new state, it receives a numerical reward r, whose value reflects how adequate the action was for the present state. Fuzzy logic is therefore used to evaluate the action taken in response to a specific state. The output of the state-space FIS indicates the present state and is then used as the input variable of the reward-function FIS; fuzzy Q-learning thus builds on the FIS evaluations of random actions. The fuzzy sets for each action taken (output) are very good (VG), good (G), and bad (B), and over the membership function (MF) universe of discourse, every possible action is rated 0 or 1. In summary, the state-space FIS first determines the present state; next, the agent chooses a random action from the action space given the current state; the reward-function FIS then evaluates all potential actions with a value of 0 or 1; and finally, the agent receives a numerical value for the action taken.

C. Fuzzy Q-Learning management strategy for RL-HCPV

After the energy prices, PV solar generation, appliance use, and EV SOC have been predicted, the fuzzy Q-learning method is used to determine the best policy. In this setting, RL determines the ideal DR given the fluctuating cost of electricity and the variety of energy usage patterns.

Because Q-learning is off-policy, the agent can learn by performing random actions in a given state without following the current policy, while still learning the best decision for each state. Thus, no particular behaviour policy is required during training. Initially, all state-action pairs in the Q-table are set to zero. The agent then interacts with the environment and updates the Q-values after each action using Equation (33).

In this paper, random action selection (exploration) was used. A sufficient number of iterations is therefore required to explore and update every state-action pair at least once. The training phase was set to 5000 iterations so that the agent could visit all pairs and gather new information. Once the Q-table converges, the optimal Q-values are obtained.

Figure 5 depicts how fuzzy Q-learning is employed in the RL-HCPV algorithm, and Figure 3 illustrates how fuzzy logic determines a particular state and reward. The discount rate and the learning rate are set at 0.9 and 0.1, respectively, and all entries in the Q-table are initialized to zero. All possible actions are listed for each present state, and an action is selected at random. When the selected action is completed, the agent uses fuzzy logic to detect the new state and the reward. Equation (33) then computes the maximum Q-value before the state-action Q-value pair is updated. Finally, the next state becomes the present state.
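The training procedure described above (a zero-initialised Q-table, purely random action selection, and the stated values 0.9 and 0.1, read here as the discount rate and the learning rate) can be sketched as follows. The 16-state, 4-action toy environment, its random transitions, and its crisp 0/1 reward rule are hypothetical stand-ins for the fuzzy state and reward FISs; the action labels follow the action list given earlier, and this is not the authors’ implementation.

```python
import random

ACTIONS = ["V2H", "SB", "ToU", "RTP"]  # labels from the action space in the text
N_STATES = 16


def toy_reward(state, action):
    """Hypothetical crisp 0/1 reward standing in for the reward FIS.

    Bit 1 of the state marks expensive prices; bit 0 marks sufficient storage.
    """
    expensive = (state >> 1) & 1
    storage_ok = state & 1
    if expensive and storage_ok:
        good = {0, 1}   # discharge V2H or SB
    elif expensive:
        good = {3}      # fall back to RTP
    else:
        good = {2}      # cheap hours: ToU
    return 1.0 if action in good else 0.0


def train(iterations=5000, alpha=0.1, gamma=0.9, seed=0):
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(N_STATES)]  # Q-table starts at zero
    s = rng.randrange(N_STATES)
    for _ in range(iterations):
        a = rng.randrange(len(ACTIONS))                  # random exploration
        r = toy_reward(s, a)
        s_next = rng.randrange(N_STATES)                 # toy transition model
        target = r + gamma * max(q[s_next])              # max Q of the next state
        q[s][a] += alpha * (target - q[s][a])            # Q-learning update
        s = s_next                                       # next state becomes present
    return q


def greedy_policy(q):
    """Best action index per state, learned off-policy from random behaviour."""
    return [max(range(len(row)), key=row.__getitem__) for row in q]
```

Although every action is chosen at random during training, `greedy_policy(train())` reads out the best action per state, which is the off-policy property the text relies on; more iterations make the learned values closer to convergence.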

Figure 5.

Flowchart describing the proposed optimal decision-making method.

4. Results and Discussion

4.1. Implementing the Proposed Model for the RL-HCPV

The proposed RL-HCPV method is based on the interactions between PV power generation, appliance power consumption, electricity pricing, the V2H unit, EV SOC, and EV availability. The smart house uses a smart meter to gather power data, such as PV power generation, appliance consumption, the SB storage level, and energy price signals from the utility, which are then sent to the HEMS. When the EV returns home, the HEMS receives a signal indicating EV availability and its SOC percentage. The collected data help determine whether the PV-generated power and the SB are sufficient to meet the demand. If the EV can transmit electricity to the home via V2H, it may be the best alternative source for the HEMS during a power outage.

The simulation runs for 24 h with a one-hour time step. First, the power utility provides the price tariff signal. The user is expected to depart at 7:00 a.m. and return at 2:00 p.m. There are two peak periods: 2:00–5:00 p.m., when the household member returns from work, and 7:00–9:00 p.m., when cooking, watching TV, and other activities begin. On both sunny and cloudy days, the off-peak period begins at midnight and lasts until early morning. The simulations examine how DL, RL, and V2H affect power consumption efficiency while reducing expenses, and the two smart MG design scenarios highlight the need to include V2H as an additional energy source in the RL-HCPV model.

The simulation was run on a Windows 11 PC with a 1.80 GHz AMD Ryzen CPU and 16 GB of RAM. The simulation software was MATLAB R2022a with Simulink Real-Time, and the programming language was Python.

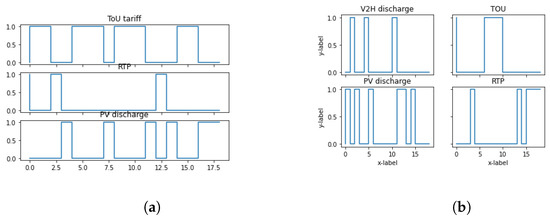

- Case 1: Smart MG without V2H unit

In this case, the standard SG comprises PV solar panels, the SB, and appliances, and actions are selected according to the overall power usage, the electricity price, and the Q-values. All executed actions are depicted in Figure 6a. For example, during 5:00–7:00 a.m., the total energy consumption is low (3–5.7 kWh), the ToU price is 0.24 $/kWh, the RTP price is 0.21 $/kWh, and PV generation is low (5 kWh). The current state is therefore (low; low; expensive; expensive), and the RTP action has the highest Q-value for this state.

Figure 6.

(a) Actions during Case 1. (b) Actions during Case 2.

After 7:00 a.m., the system switches to another state: demand exceeds 16 kWh, prices are higher (ToU 0.28 $/kWh; RTP 0.27 $/kWh), and PV generation is high (10 kWh). The current state is (high; high; expensive; expensive), for which the PV discharging action has the highest Q-value. Later, PV generation is low, but the SB holds the excess power saved on sunny days (over 10 kWh). The current state is then (low; high; expensive; expensive), and the SB discharge mode supplies energy to the appliances while lowering power costs. Finally, from 9:00 p.m. to 4:00 a.m., consumption is 0.95 kWh, PV generation is 0.3 kWh, and SB power is 3.5 kW. All state variables are low, so the ToU tariff (0.21 $/kWh) is activated because it is cheaper than RTP (0.27 $/kWh).

- Case 2: Smart MG with V2H unit

In this case, the smart MG is made up of PV solar panels, the SB, V2H units, and appliances, and actions are again selected according to the overall power usage, the electricity price, and the Q-values. All executed actions are depicted in Figure 6b. The RL-HCPV first checks EV availability and SOC. The V2H discharging mode is then initiated when appliance power demand is high and PV output is low, typically between 7:00 and 9:00 p.m., when prices are also high. The current state is thus (V2H discharge; low; high; expensive; expensive).

4.2. Performance of the Proposed RL-HCPV

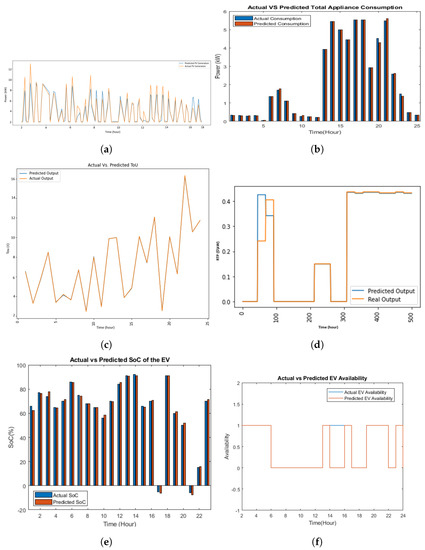

- Performance of the DL Algorithms

The performance of the LSTM model for PV generation is shown in Figure 7a, and the hourly appliance consumption predicted by the ANN is compared with the actual consumption in Figure 7b. The hourly EV SOC and availability predicted by multi-target regression are compared with the actual values in Figure 7c,d. Finally, the performance of the ANN model for the price tariffs is shown in Figure 7e,f. In these graphs, the blue line shows the hourly predicted data and the orange line the actual values. The predicted and actual data closely match, with slight deviations in certain time intervals. The accuracy of the applied DL algorithms is summarized in Table 6. Overall, the selected DL algorithms produce accurate and realistic predictions for each uncertainty parameter, which support the decision-making process for DR management.

Figure 7.

Actual and predicted (a) PV generation; (b) appliance consumption; (c) EV SOC; (d) EV availability; (e) ToU pricing; and (f) RTP.

Table 6.

Accuracy of the DL algorithms.

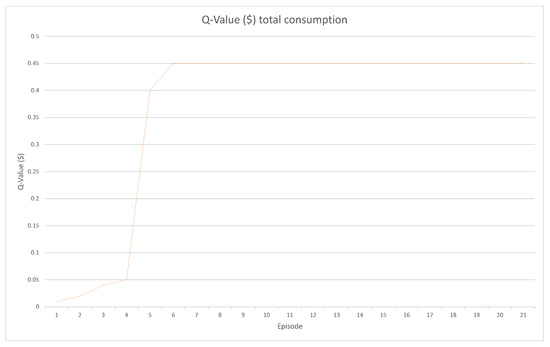

- Performance of Fuzzy Q-learning

In RL, the Q-value evaluates an action in a given state, and its convergence reflects the progress of learning. An RL algorithm is said to have converged when the learning curve flattens and no longer grows. The convergence of the Q-value for the FQL model is displayed in Figure 8, which shows that the agent converges to the highest Q-value. The Q-value is initially low because the agent makes poor action decisions, but it rises as the agent learns better behaviour through trial and error, eventually reaching the maximum Q-value.

Figure 8.

The convergence of the Q-value.

- Performance comparison with/without V2H

While implementing the model, the main focus was minimizing costs and customer discomfort by ensuring sustainable power delivery. This was evaluated by comparing appliance consumption in various situations against the ToU and RTP tariffs.

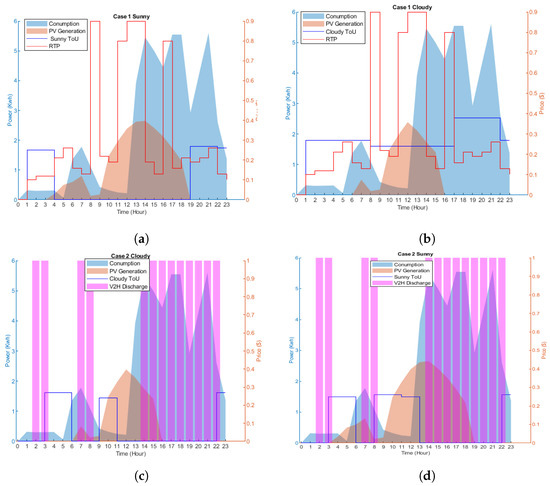

The hourly appliance power consumption shown in Figure 9 illustrates the effectiveness of the proposed RL-HCPV algorithm. Without the V2H unit, the dependence on grid prices grows from roughly 7 h on sunny days to 15 h on cloudy days. With the V2H unit, the dependence on grid prices on both sunny and cloudy days is around 3 h. The figure shows that incorporating the V2H unit reduces reliance on grid costs by 38% on sunny days and 24% on cloudy days. Integrating the V2H unit therefore reduces reliance on the utility, implying a significant reduction in electricity costs.

Figure 9.

Dependence on ToU/RTP with/without V2H: (a) Case 1 during sunny days; (b) Case 1 during cloudy days; (c) Case 2 during sunny days; (d) Case 2 during cloudy days.

4.3. Electricity Bill Analysis

According to the Saudi Electricity Company (SEC), the electricity bill is calculated using the following equation:

Table 7 compares the daily bill for 14 kWh of consumption in four scenarios. The comparison shows that including the V2H unit removes the penalties and significantly reduces the bill compared with the cases without V2H. Furthermore, with RL, consumption is scheduled when prices are lowest, which particularly benefits the RTP tariff and yields lower costs than the other situations. Therefore, using both V2H and RL is the most effective way to lower the electricity bill.
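The SEC billing equation referred to above is not reproduced in this version, so the sketch below does not implement it. It instead assumes a simple time-varying bill (the sum over hours of grid energy times the hourly price) and a greedy V2H rule that covers the most expensive hours first, ignoring the cost of recharging the EV and any penalties. All names and numbers are hypothetical; the point is only to show why shifting grid purchases away from expensive hours lowers the bill.

```python
def daily_bill(grid_kwh, price):
    """Sum of hourly grid energy (kWh) times hourly price ($/kWh)."""
    if len(grid_kwh) != len(price):
        raise ValueError("energy and price profiles must have equal length")
    return sum(e * p for e, p in zip(grid_kwh, price))


def apply_v2h(grid_kwh, price, ev_energy_kwh, p_v2h_max, dt_h=1.0):
    """Greedy sketch: use available EV energy in the most expensive hours first."""
    grid = list(grid_kwh)
    remaining = ev_energy_kwh
    for t in sorted(range(len(grid)), key=lambda i: price[i], reverse=True):
        if remaining <= 0:
            break
        use = min(grid[t], p_v2h_max * dt_h, remaining)
        grid[t] -= use
        remaining -= use
    return grid
```

For a three-hour profile of 1, 2, and 3 kWh priced at 0.2, 0.3, and 0.4 $/kWh, `apply_v2h` with 4 kWh of EV energy and a 3 kW limit removes the 3 kWh purchase in the most expensive hour and 1 kWh in the next, cutting this simplified bill from about 2.0 $ to 0.5 $.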

Table 7.

Comparison of electricity bills with and without V2H and RL.

4.4. Analysis of the Computational Capabilities

A mathematical HEMS model typically contains both discrete (binary) and continuous variables. The resulting mixed-integer linear programming (MILP) problem has been solved using a number of heuristic algorithms, including differential evolution (DE), binary particle swarm (BPS), particle swarm optimization (PSO), binary learning differential evolution (BLDE), and the genetic algorithm (GA). However, these approaches are computationally demanding and require tremendous processing power to find the best solution, and their convergence to the optimal solution is considered unstable.

This study therefore used DL and RL to overcome these issues. Table 8 compares the DL and RL results with those of GA to analyse the proposed model’s productivity and effectiveness; the cost-reduction statistics and average computational time of GA were obtained for the scheduled power optimization. The results indicate that RL produces noticeably better results in less computation time.

Table 8.

Statistics comparison of the simulated algorithms’ performance.

5. Conclusions

This study proposed an RL-HCPV system for intelligently managing the DR of a smart home. The proposed model aims to make optimal decisions during on-peak and off-peak periods to minimize costs and ensure a sustainable electricity supply for the appliances. Several DL algorithms were applied to real-time data to deal with the uncertainty of PV generation, appliance power consumption, prices, EV availability, and SOC, and each uncertainty parameter was examined to select the most accurate model. Consequently, ANN is applied to appliance energy consumption and the ToU and RTP tariffs, multi-target regression predicts EV availability and SOC, and LSTM predicts PV generation. Finally, the predicted data are passed to the RL algorithm, the fuzzy Q-learning model, for efficient DR decision-making. The simulations demonstrate that:

- When the V2H unit is included, the proposed RL-HCPV algorithm reduces reliance on grid prices by 38% on sunny days and 24% on cloudy days;

- Using both V2H and RL is the most effective way to lower the electricity bill;

- RL generates noticeably better computational results in less time.

Future analyses should consider consumer-friendly actions, such as selling surplus electricity to the grid to boost user revenue. In addition, smart MGs require an autonomous sustainable power supply plan for household appliances.

Author Contributions

O.A. planned the presented idea and wrote the manuscript with support from S.A.b.S. and B.A.Z., who contributed to the original draft preparation and supervised the findings and final revisions. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Nomenclature

| Symbol | Description |
|---|---|
| | Operation costs |
| | Power cost of the energy provided by the main grid |
| | PV panel cost ($/W) |
| | Installed PV power |
| | Battery cost ($/J) |
| | Battery capacity |
| | Utility power cost |
| | Power fed or supplied by the utility grid |
| | Dissatisfaction price for shiftable/non-shiftable appliances |
| | Power consumption at the mode |
| | Power rating of the shiftable/non-shiftable appliance |
| | Cost of shiftable/non-shiftable appliances |
| CT(t) | Electricity tariff at time t |
| | Start time of the shiftable appliance |
| | Newly scheduled time of the shiftable appliance |
| | Net power generated by PV |
| | EV location |
| (t) | Power consumption of device n at time t |
| | Predicted consumption cost at time t |
| | Solar panel area |
| | PV efficiency |
| r(t) | Solar irradiation |
| | PV fully satisfies the load |
| | Power generated from the EV |
| | Power generated from the SB |
| | Initial state of charge |
| | Power capacity of the battery |
| | Discharged power from the grid to charge the EV battery |
| | Minimum operating power |
| | Maximum operating power |
| | Power storage level at time t |
| | Power loss |
| T | Time interval in hours |
| (t) | Charging/discharging power |
| | Efficiencies of the SB |
| | Power fed to the grid |
| | Discharged power to the household appliances |
| h | Home |
| | Charging and discharging operation modes of the SB (0/1) |
| | ToU price signal |
| | Extra cost |
| SH/NSH | Shiftable/non-shiftable appliances |
| | Power rating of each appliance in the shiftable class |
| (t) | ON or OFF status of each appliance |
| | Real-time pricing for shiftable/non-shiftable appliances |
| | Net stored power in the EV battery |
| | Initial stored power in the EV battery |
| | Total consumed power by the EV during the trip |
| | Power rating |
| | Charging/discharging power |
| | Status of the EV connection mode (0/1) |
| | Discount rate |
| r | Reward |
| | Learning rate |
| | State |
| | Action |

References

- Corral-García, J.; Lemus-Prieto, F.; González-Sánchez, J.-L.; Pérez-Toledano, M.-Á. Analysis of Energy Consumption and Optimization Techniques for Writing Energy-Efficient Code. Electronics 2019, 8, 1192.

- Aliero, M.S.; Asif, M.; Ghani, I.; Pasha, M.F.; Jeong, S.R. Systematic Review Analysis on Smart Building: Challenges and Opportunities. Sustainability 2022, 14, 3009.

- Hua, M.; Chen, G.; Zhang, B.; Huang, Y. A hierarchical energy efficiency optimization control strategy for distributed drive electric vehicles. Proc. Inst. Mech. Eng. Part D J. Automob. Eng. 2019, 233, 605–621.

- Chen, G.; Hua, M.; Zong, C.; Zhang, B.; Huang, Y. Comprehensive chassis control strategy of FWIC-EV based on sliding mode control. IET Intell. Transp. Syst. 2019, 13, 703–713.

- Chen, G.; He, L.; Zhang, B.; Hua, M. Dynamics integrated control for four-wheel independent control electric vehicle. Int. J. Heavy Veh. Syst. 2019, 26, 515–534.

- Liu, W.; Hua, M.; Deng, Z.; Huang, Y.; Hu, C.; Song, S.; Xia, X. A systematic survey of control techniques and applications: From autonomous vehicles to connected and automated vehicles. arXiv 2023, arXiv:2303.05665.

- Hafeez, G.; Wadud, Z.; Khan, I.U.; Khan, I.; Shafiq, Z.; Usman, M.; Khan, M.U.A. Efficient Energy Management of IoT-Enabled Smart Homes Under Price-Based Demand Response Program in Smart Grid. Sensors 2020, 20, 3155.

- Jabash Samuel, G.K.; Jasper, J. MANFIS based SMART home energy management system to support SMART grid. Peer Peer Netw. Appl. 2020, 13, 2177–2188.

- Sharma, B.; Maherchandani, J.K. A Review on Integration of Electric Vehicle in Smart Grid: Operational modes, Issues and Challenges. In Proceedings of the 2022 International Conference on Computer Communication and Informatics (ICCCI), Coimbatore, India, 25–27 January 2022; pp. 1–5.

- Pearre, N.S.; Ribberink, H. Review of research on V2X technologies, strategies, and operations. Renew. Sustain. Energy Rev. 2019, 105, 61–70.

- Park, S.-H.; Hussain, A.; Kim, H.-M. Impact Analysis of Survivability-Oriented Demand Response on Islanded Operation of Networked Microgrids with High Penetration of Renewables. Energies 2019, 12, 452.

- Alfaverh, F.; Denai, M.; Sun, Y. Electrical vehicle grid integration for demand response in distribution networks using reinforcement learning. IET Electr. Syst. Transp. 2021, 11, 348–361.

- ur Rehman, U.; Yaqoob, K.; Khan, M.A. Optimal power management framework for smart homes using electric vehicles and energy storage. Int. J. Electr. Power Energy Syst. 2022, 134, 107358.

- Elkholy, M.H.; Metwally, H.; Farahat, M.A.; Nasser, M.; Senjyu, T.; Lotfy, M.E. Dynamic centralized control and intelligent load management system of a remote residential building with V2H technology. J. Energy Storage 2022, 52, 104839.

- Georgiou, G.S.; Christodoulides, P.; Kalogirou, S.A. Optimizing the energy storage schedule of a battery in a PV grid-connected nZEB using linear programming. Energy 2020, 208, 118177.

- Nasab, S.S.R.; Nasrabadi, A.T.; Asadi, S.; Taghia, S.A.H.S. Investigating the probability of designing net-zero energy buildings with consideration of electric vehicles and renewable energy. Eng. Constr. Archit. Manag. 2021, 29, 4061–4087.

- Rout, S.; Biswal, G.R. Bidirectional EV integration in home load energy management using swarm intelligence. Int. J. Ambient. Energy 2022, 2022, 1–8.

- Dileep, A.; Beena, B.M. Global Distribution and Price Prediction of Electric Vehicles Using Machine Learning. In Proceedings of the 2022 2nd International Conference on Intelligent Technologies (CONIT), Hubballi, India, 24–26 June 2022; pp. 1–6.

- Aguilar-Dominguez, D.; Ejeh, J.; Dunbar, A.D.; Brown, S.F. Machine learning approach for electric vehicle availability forecast to provide vehicle-to-home services. Energy Rep. 2021, 7, 71–80.

- Khezri, R.; Steen, D. Vehicle to Everything (V2X)-A Survey on Standards and Operational Strategies. In Proceedings of the 2022 IEEE International Conference on Environment and Electrical Engineering and 2022 IEEE Industrial and Commercial Power Systems Europe (EEEIC/I&CPS Europe), Prague, Czech Republic, 28 June–1 July 2022; pp. 1–6.

- Xu, X.; Jia, Y.; Xu, Y.; Xu, Z.; Chai, S.; Lai, C.S. A multi-agent reinforcement learning-based data-driven method for home energy management. IEEE Trans. Smart Grid 2020, 11, 3201–3211.

- Yan, F.; Wang, J.; Du, C.; Hua, M. Multi-Objective Energy Management Strategy for Hybrid Electric Vehicles Based on TD3 with Non-Parametric Reward Function. Energies 2023, 16, 74.

- US Energy Information Administration. Independent Statistics and Analysis. 2021. Available online: https://www.eia.gov/totalenergy/data/annual/ (accessed on 12 April 2023).

- Gschwendtner, C.; Sinsel, S.R.; Stephan, A. Vehicle-to-X (V2X) implementation: An overview of predominate trial configurations and technical, social and regulatory challenges. Renew. Sustain. Energy Rev. 2021, 145, 110977.

- Ahmadian, A.; Mohammadi-Ivatloo, B.; Elkamel, A. A review on plug-in electric vehicles: Introduction, current status, and load modeling techniques. J. Mod. Power Syst. Clean Energy 2020, 8, 412–425.

- Vadi, S.; Bayindir, R.; Colak, A.M.; Hossain, E. A Review on Communication Standards and Charging Topologies of V2G and V2H Operation Strategies. Energies 2019, 12, 3748.

- Chatzivasileiadi, A.; Ampatzi, E.; Knight, I.P. Electrical Energy Storage Sizing and Space Requirements for Sub-Daily Autonomy in Residential Buildings. Energies 2022, 15, 1145.

- Solomon, E.; Hagos, I.G.; Khan, B. Outage management in a smart distribution grid integrated with PEV and its V2B applications. In Active Electrical Distribution Network; Elsevier: Amsterdam, The Netherlands, 2022; pp. 217–226.

- Das, S.; Dai, R.; Koperski, M.; Minciullo, L.; Garattoni, L.; Bremond, F.; Francesca, G. Toyota smarthome: Real-world activities of daily living. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 833–842.

- Cui, B.; Lee, S.; Im, P.; Koenig, M.; Bhandari, M. High resolution dataset from a net-zero home that demonstrates zero-carbon living and transportation capacity. Data Brief 2022, 45, 108703.

- Mehrjerdi, H. Resilience oriented vehicle-to-home operation based on battery swapping mechanism. Energy 2021, 218, 119528.

- Shamami, M.S.; Alam, M.S.; Ahmad, F.; Shariff, S.M.; AlSaidan, I.; Rafat, Y.; Asghar, M.J. Artificial Intelligence-Based Performance Optimization of Electric Vehicle-to-Home (V2H) Energy Management System. SAE Int. J. Sustain. Transp. Energy Environ. Policy 2020, 1, 115–125.

- Ouramdane, O.; Elbouchikhi, E.; Amirat, Y.; Le Gall, F.; Sedgh Gooya, E. Home Energy Management Considering Renewable Resources, Energy Storage, and an Electric Vehicle as a Backup. Energies 2022, 15, 2830.

- Sundararajan, R.S.; Iqbal, M.T. Dynamic Modelling of a Solar Energy System with Vehicle to Home Option for Newfoundland Conditions. Eur. J. Eng. Technol. Res. 2021, 6, 16–23.

- Higashitani, T.; Ikegami, T.; Uemichi, A.; Akisawa, A. Evaluation of residential power supply by photovoltaics and electric vehicles. Renew. Energy 2021, 178, 745–756.

- Nakano, H.; Nawata, I.; Inagaki, S.; Kawashima, A.; Suzuki, T.; Ito, A.; Kempton, W. Aggregation of V2H systems to participate in regulation market. IEEE Trans. Autom. Sci. Eng. 2020, 18, 668–680.

- Kosinka, M.; Slanina, Z.; Petruzela, M.; Blazek, V. V2H control system software analysis and design. In Proceedings of the 2020 20th International Conference on Control, Automation and Systems (ICCAS), Busan, Republic of Korea, 13–16 October 2020; pp. 972–977.

- Slama, S.B. Design and implementation of home energy management system using vehicle to home (H2V) approach. J. Clean. Prod. 2021, 312, 127792.

- Vermesan, O.; John, R.; Pype, P.; Daalderop, G.; Kriegel, K.; Mitic, G.; Lorentz, V.; Bahr, R.; Sand, H.E.; Bockrath, S.; et al. Automotive intelligence embedded in electric connected autonomous and shared vehicles technology for sustainable green mobility. Front. Future Transp. 2021, 2, 688482.

- Shahriar, S.; Al-Ali, A.R.; Osman, A.H.; Dhou, S.; Nijim, M. Machine Learning Approaches for EV Charging Behavior: A Review. IEEE Access 2020, 8, 168980–168993.

- Yang, Y.; Wang, S. Resilient residential energy management with vehicle-to-home and photovoltaic uncertainty. Int. J. Electr. Power Energy Syst. 2021, 132, 107206.

- Borge-Diez, D.; Icaza, D.; Açıkkalp, E.; Amaris, H. Combined vehicle to building (V2B) and vehicle to home (V2H) strategy to increase electric vehicle market share. Energy 2021, 237, 121608.

- Mohammad, A.; Zuhaib, M.; Ashraf, I.; Alsultan, M.; Ahmad, S.; Sarwar, A.; Abdollahian, M. Integration of Electric Vehicles and Energy Storage System in Home Energy Management System with Home to Grid Capability. Energies 2021, 14, 8557.

- Ye, Y.; Qiu, D.; Wang, H.; Tang, Y.; Strbac, G. Real-Time Autonomous Residential Demand Response Management Based on Twin Delayed Deep Deterministic Policy Gradient Learning. Energies 2021, 14, 531.

- Kelm, P.; Mieński, R.; Wasiak, I. Energy Management in a Prosumer Installation Using Hybrid Systems Combining EV and Stationary Storages and Renewable Power Sources. Appl. Sci. 2021, 11, 5003.

- Rafique, S.; Hossain, M.J.; Nizami, M.S.H.; Irshad, U.B.; Mukhopadhyay, S.C. Energy management systems for residential buildings with electric vehicles and distributed energy resources. IEEE Access 2021, 9, 46997–47007.

- Eseye, A.T.; Lehtonen, M.; Tukia, T.; Uimonen, S.; Millar, R.J. Optimal energy trading for renewable energy integrated building microgrids containing electric vehicles and energy storage batteries. IEEE Access 2019, 7, 106092–106101.

- Lee, S.; Jin, H.; Vecchietti, L.F.; Hong, J.; Har, D. Short-term predictive power management of PV-powered nanogrids. IEEE Access 2020, 8, 147839–147857.

- Yousefi, M.; Hajizadeh, A.; Soltani, M.N.; Hredzak, B. Predictive home energy management system with photovoltaic array, heat pump, and plug-in electric vehicle. IEEE Trans. Ind. Inform. 2020, 17, 430–440.

- Duman, A.C.; Erden, H.S.; Gönül, Ö.; Güler, Ö. A home energy management system with an integrated smart thermostat for demand response in smart grids. Sustain. Cities Soc. 2021, 65, 102639.

- Liu, X.; Wu, Y.; Wu, H. PV-EV Integrated Home Energy Management Considering Residential Occupant Behaviors. Sustainability 2021, 13, 13826.

- Tran, A.T.; Kawashima, A.; Inagaki, S.; Suzuki, T. Design of a Home Energy Management System Integrated with an Electric Vehicle (V2H+ HPWH EMS). In Design and Analysis of Distributed Energy Management Systems; Springer: Berlin/Heidelberg, Germany, 2020; pp. 47–66.

- Lee, S.; Choi, D.-H. Reinforcement Learning-Based Energy Management of Smart Home with Rooftop Solar Photovoltaic System, Energy Storage System, and Home Appliances. Sensors 2019, 19, 3937.

- Ashenov, N.; Myrzaliyeva, M.; Mussakhanova, M.; Nunna, H.K. Dynamic Cloud and ANN based Home Energy Management System for End-Users with Smart-Plugs and PV Generation. In Proceedings of the 2021 IEEE Texas Power and Energy Conference (TPEC), College Station, TX, USA, 2–5 February 2021; pp. 1–6.

- Kim, M.; Kim, H.; Jung, J.H. A Study of Developing a Prediction Equation of Electricity Energy Output via Photovoltaic Modules. Energies 2021, 14, 1503.

- Vulkan, A.; Kloog, I.; Dorman, M.; Erell, E. Modeling the potential for PV installation in residential buildings in dense urban areas. Energy Build. 2018, 169, 97–109.

- Li, J.; Liu, C.; Wang, Y.; Chen, R.; Xu, X. Bi-level programming model approach for electric vehicle charging stations considering user charging costs. Electr. Power Syst. Res. 2023, 214, 108889.

- Khalid, A.; Javaid, N.; Mateen, A.; Ilahi, M.; Saba, T.; Rehman, A. Enhanced Time-of-Use Electricity Price Rate Using Game Theory. Electronics 2019, 8, 48.

- Aslam, S. An Optimal Home Energy Management Scheme Considering Grid Connected Microgrids with Day-Ahead Weather Forecasting Using Artificial Neural Network. Master’s Thesis, University Islamabad, Islamabad, Pakistan, 2018.

- Fu, W.; Wang, K.; Li, C.; Tan, J. Multi-step short-term wind speed forecasting approach based on multi-scale dominant ingredient chaotic analysis, improved hybrid GWO-SCA optimization and ELM. Energy Convers. Manag. 2019, 187, 356–377.