Improvement of Auxiliary Diagnosis of Diabetic Cardiovascular Disease Based on Data Oversampling and Deep Learning

Abstract

1. Introduction

1.1. Background

1.2. Related Work

1.3. Contributions

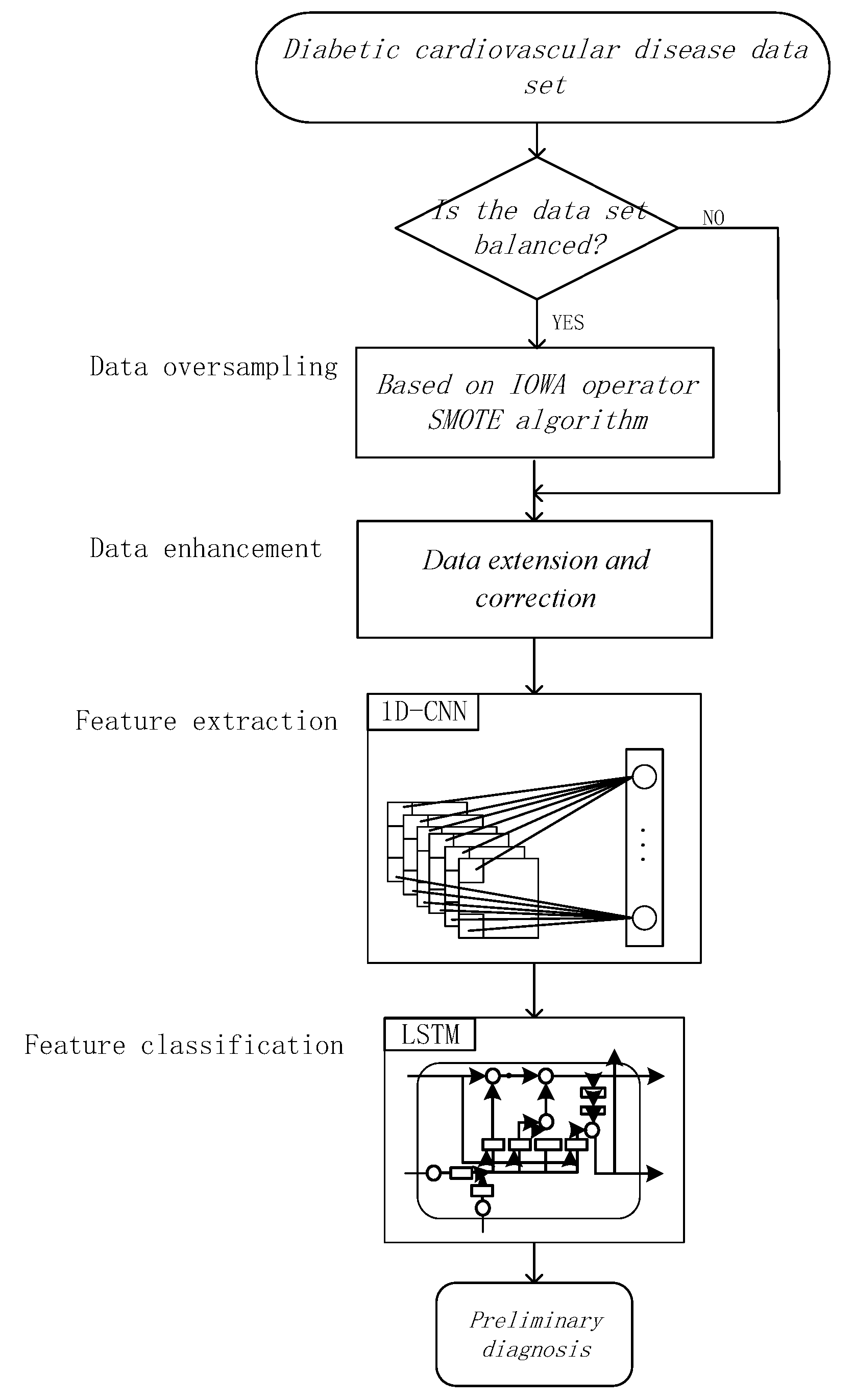

- (1) At the data level, we define an IOWA-operator SMOTE that measures the spacing between minority samples with a weighted Minkowski distance [18]. The feature weights yield the IOWA-weighted Minkowski distance, which is used to calculate the distance from each sample to its nearest neighbors. SMOTE interpolation then increases the distribution density of the minority class, and the resulting minority samples are combined to achieve sample balance.

- (2) At the algorithmic level, we propose extended learning together with 1D-CNN and LSTM networks [19,20,21,22] for data classification. Extended learning has been shown to be fast and effective, especially when the raw data are very limited [23]. We combine the 1D-CNN with the LSTM to process the encoded data and search for features hidden in the original data, and we introduce an attention mechanism in the memory neurons to learn associative features of distant data.

- (3) We use human hematology and urine data for an initial illustration of the algorithm and compare the performance of different algorithms. Compared with electronic health records, medical test information obtained under unified standards is more reliable [24]. The laboratory tests used in this paper include blood glucose tests, blood routine tests, urine routine tests, and biochemical tests. Different parameters are often obtained by different methods and can reflect different health conditions of the human body [25,26,27]. The proposed data synthesis based on weight and extension algorithms has been preliminarily applied to the diagnosis of diabetic cardiovascular disease using textual data from human hematology and urology.

2. Materials and Methods

2.1. Data Synthesis Based on Weight and Extension Algorithms

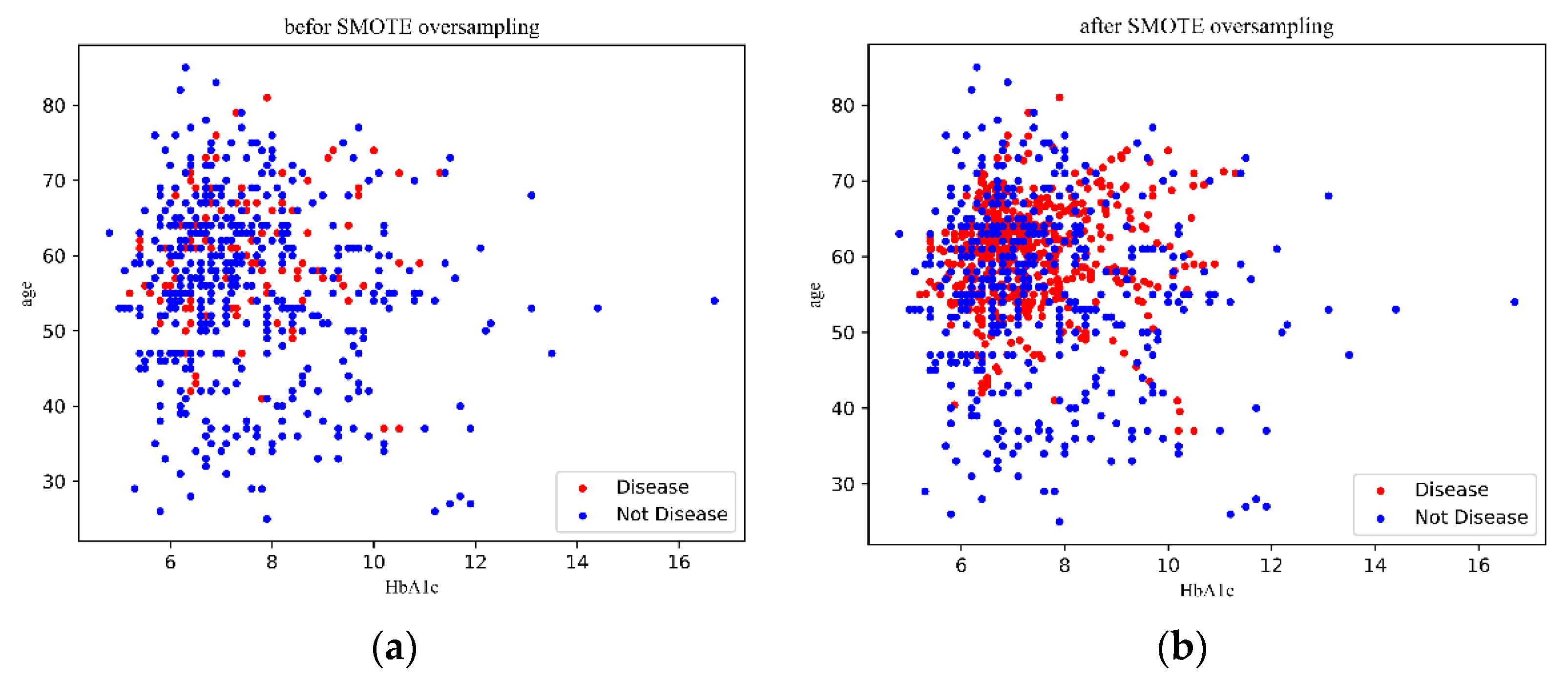

2.2. SMOTE Algorithm Based on IOWA Operator

- ① Calculate the Fisher score of each feature variable in dataset X and use Equation (4) to obtain the weight matrix w for all features.

- ② Take the minority samples out of the training set and define the neighborhood of each sample with the IOWA-weighted variant of the Minkowski distance, Equation (5).

- ③ According to the preset sampling ratio, randomly select several samples from this neighborhood and interpolate new samples between them.

- ④ Repeat steps ② and ③ until the dataset has the appropriate number of samples.
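The four steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: Equations (4) and (5) are not reproduced here, so the sketch assumes the common between-class over within-class form of the Fisher score, normalises the scores to sum to one, and uses a plain feature-weighted Minkowski distance. The induced reordering step of the IOWA operator is not modelled. The function names and the parameters `k`, `p`, and `seed` are hypothetical.

```python
import random


def fisher_scores(X, y):
    """Assumed Fisher score per feature: between-class over within-class scatter."""
    scores = []
    for j in range(len(X[0])):
        column = [row[j] for row in X]
        overall_mean = sum(column) / len(column)
        between, within = 0.0, 0.0
        for c in set(y):
            values = [row[j] for row, label in zip(X, y) if label == c]
            mean_c = sum(values) / len(values)
            var_c = sum((v - mean_c) ** 2 for v in values) / len(values)
            between += len(values) * (mean_c - overall_mean) ** 2
            within += len(values) * var_c
        scores.append(between / within if within > 0 else 0.0)
    return scores


def normalise(scores):
    """Scale the scores so they sum to one (uniform weights if all are zero)."""
    total = sum(scores)
    if total == 0:
        return [1.0 / len(scores)] * len(scores)
    return [s / total for s in scores]


def weighted_minkowski(a, b, w, p=2):
    """Feature-weighted Minkowski distance (no IOWA reordering in this sketch)."""
    return sum(wi * abs(ai - bi) ** p for wi, ai, bi in zip(w, a, b)) ** (1.0 / p)


def smote(minority, w, n_new, k=3, p=2, seed=0):
    """Create n_new synthetic minority samples by neighbour interpolation."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # k nearest minority neighbours of the base sample under the weighted distance
        neighbours = sorted(
            (s for s in minority if s is not base),
            key=lambda s: weighted_minkowski(base, s, w, p),
        )[:k]
        partner = rng.choice(neighbours)
        gap = rng.random()  # position on the segment between base and partner
        synthetic.append([b + gap * (q - b) for b, q in zip(base, partner)])
    return synthetic


# Toy data: feature 0 separates the classes, feature 1 does not.
X = [[0.0, 0.0], [0.1, 1.0], [1.0, 0.0], [1.1, 1.0]]
y = [0, 0, 1, 1]
weights = normalise(fisher_scores(X, y))
minority = [[0.0, 0.0], [1.0, 1.0], [2.0, 0.0]]
new_samples = smote(minority, [0.5, 0.5], n_new=5, k=2)
```

In the toy data the uninformative feature receives zero weight, so it would not influence which neighbours are chosen; every synthetic sample lies on a segment between two existing minority samples.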

2.3. Data Enhancement

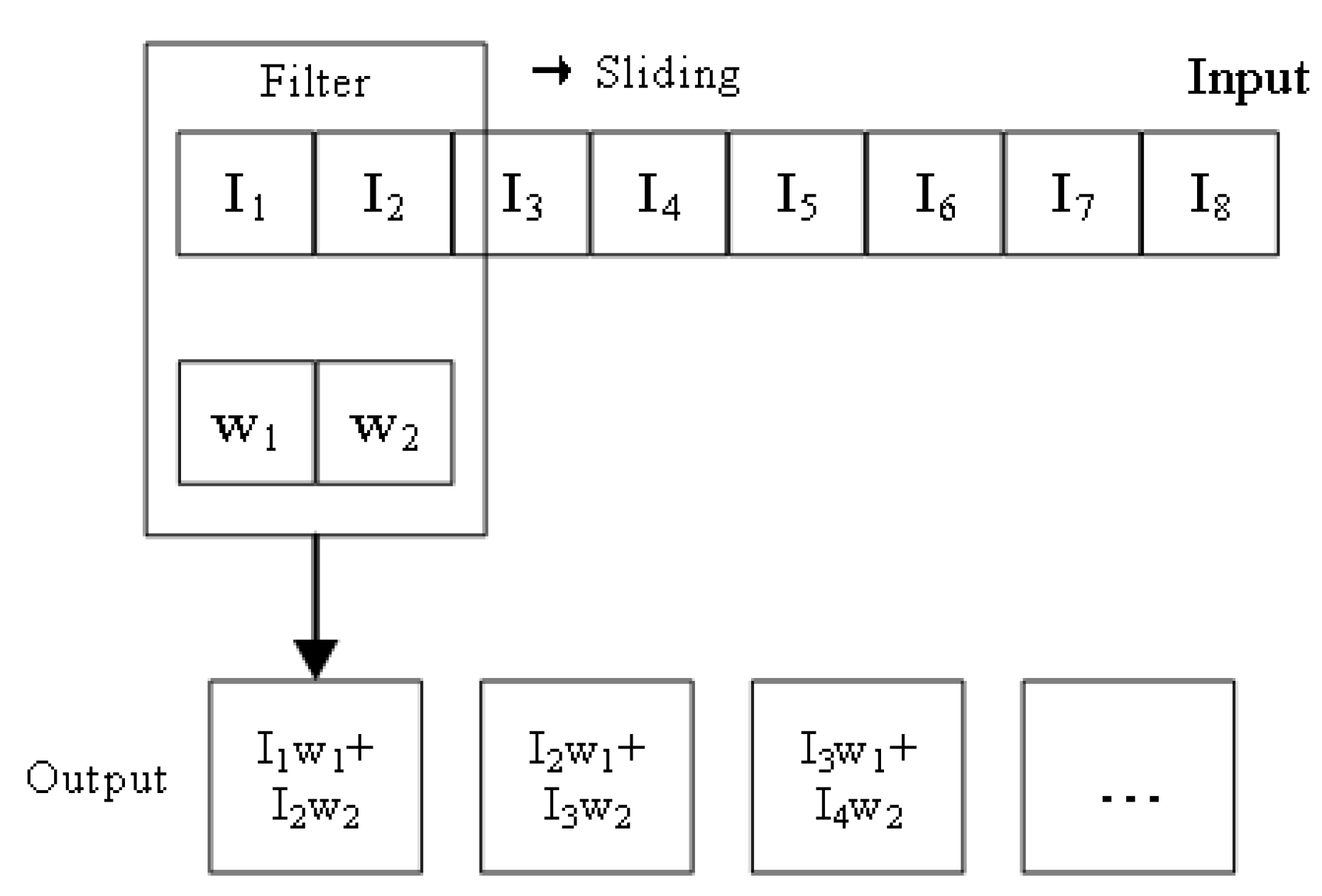

2.4. 1D-Convolutional Neural Network

2.5. Long Short-Term Memory Networks

3. Experiments

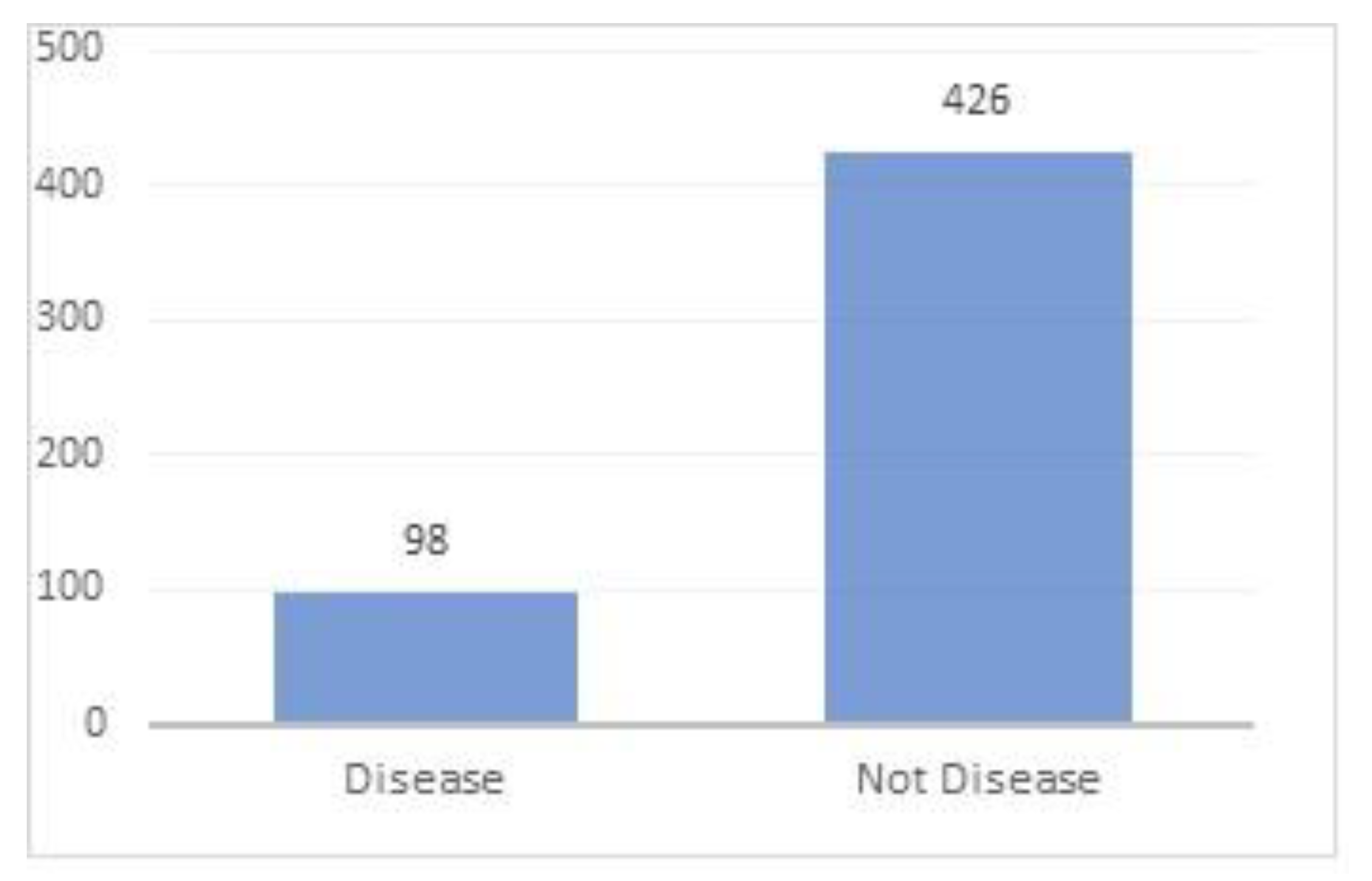

3.1. Dataset and Preprocessing

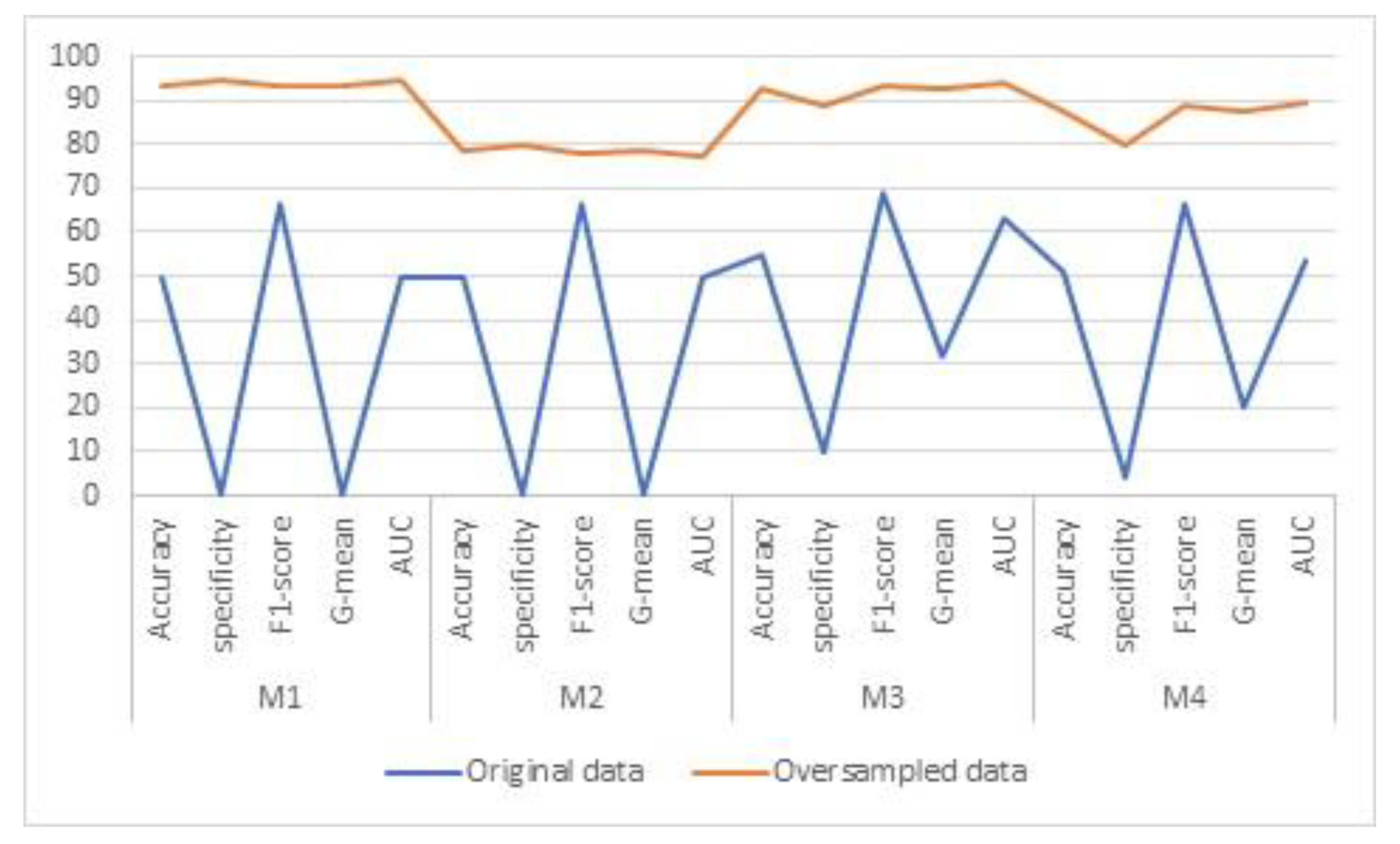

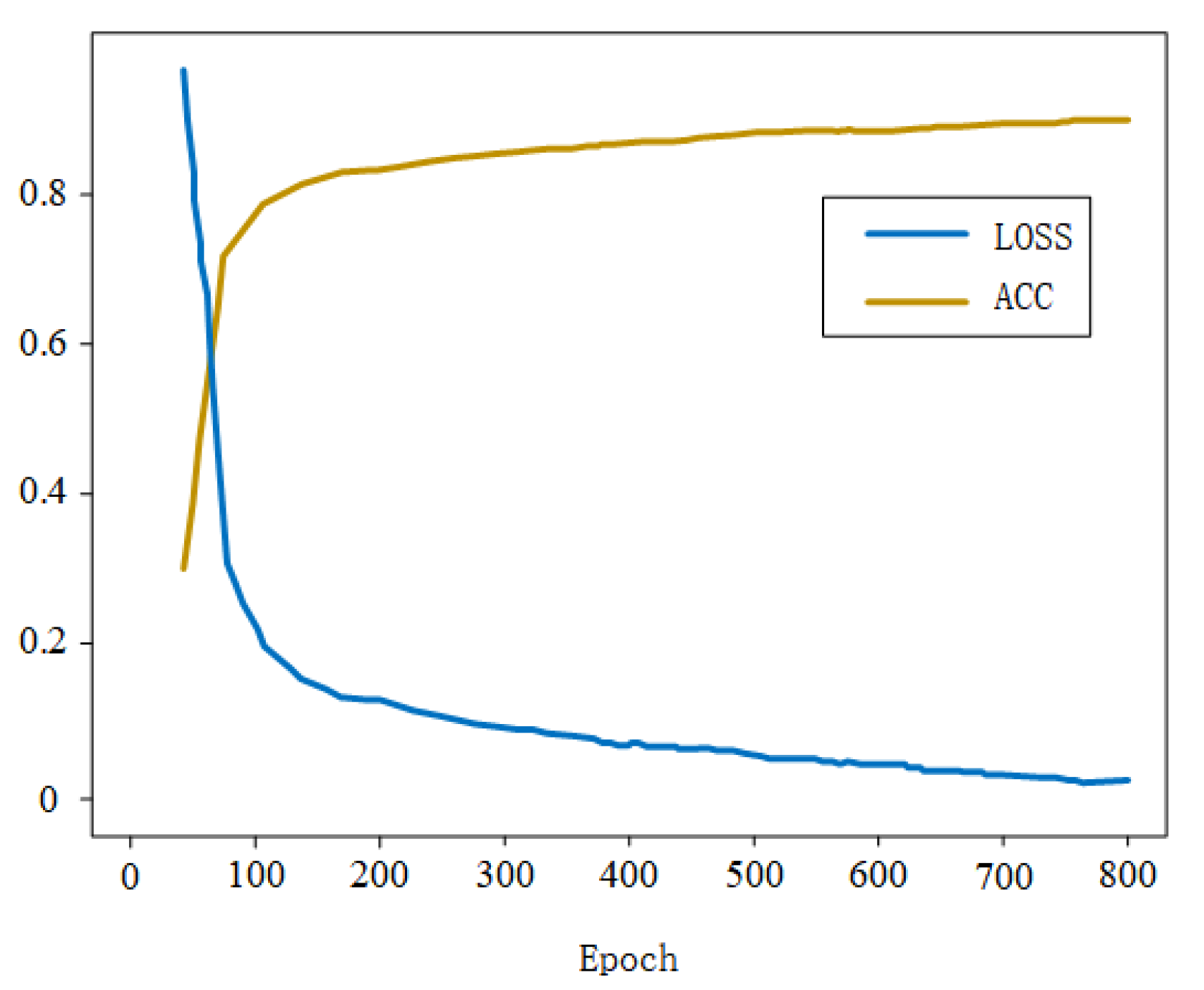

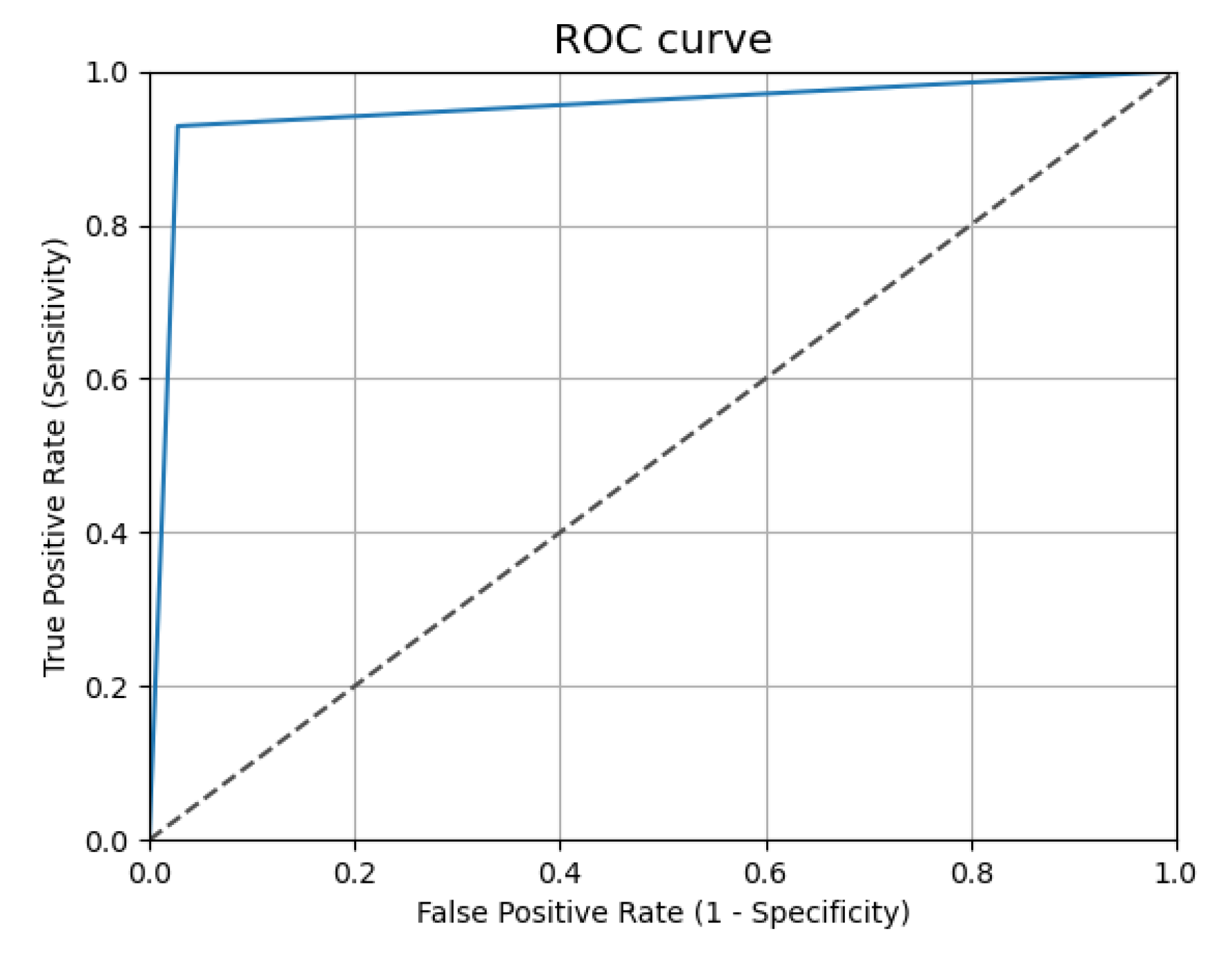

3.2. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Ogurtsova, K.; Da Rocha Fernandes, J.D.; Huang, Y.; Linnenkamp, U.; Guariguata, L.; Cho, N.H.; Cavan, D.; Shaw, J.E.; Makaroff, L.E. IDF diabetes atlas global estimates for the prevalence of diabetes for 2015 and 2040. Diabetes Res. Clin. Pract. 2017, 128, 40–50.

2. Padmalayam, I. Targeting mitochondrial oxidative stress through lipoic acid synthase: A novel strategy to manage diabetic cardiovascular disease. Cardiovasc. Hematol. Agents Med. Chem. 2012, 10, 223–233.

3. Liu, Y.; Zhang, Q.; Zhao, G.; Liu, G.; Liu, Z. Deep learning-based method of diagnosing hyperlipidemia and providing diagnostic markers automatically. Diabetes Metab. Syndr. Obes. Targets Ther. 2020, 13, 679–691.

4. National Research Council. Toward Precision Medicine: Building a Knowledge Network for Biomedical Research and a New Taxonomy of Disease; National Academies Press: New York, NY, USA, 2011.

5. Zhang, Z.; Tang, M.A. Domain-based, adaptive, multi-scale, inter-subject sleep stage classification network. Appl. Sci. 2023, 13, 3474.

6. Kolachalama, V.B.; Garg, P.S. Machine learning and medical education. NPJ Digit. Med. 2018, 1, 54.

7. Rajendra, A.U.; Faust, O.; Adib, K.N.; Suri, J.S.; Yu, W. Automated identification of normal and diabetes heart rate signals using nonlinear measures. Comput. Biol. Med. 2013, 43, 1523–1529.

8. Gu, P.; Yang, Y. Oversampling algorithm oriented to subdivision of minority class in imbalanced data set. Comput. Eng. 2017, 43, 241–247.

9. Liu, X.Y.; Wu, J.; Zhou, Z.H. Exploratory under-sampling for class-imbalance learning. IEEE Trans. Syst. Man Cybern. Part B (Cybern.) 2008, 39, 539–550.

10. Sun, J.; Knoop, S.; Shabo, A.; Carmeli, B.; Sow, D.; Syed-Mahmood, T.; Rapp, W.; Kohn, M.S. IBM’s health analytics and clinical decision support. Yearb. Med. Inform. 2014, 23, 154–162.

11. Sun, Y.; Wong, A.K.; Kamel, M.S. Classification of imbalanced data: A review. Int. J. Pattern Recognit. Artif. Intell. 2009, 23, 687–719.

12. Douzas, G.; Bacao, F.; Last, F. Improving imbalanced learning through a heuristic oversampling method based on k-means and SMOTE. Inf. Sci. 2018, 465, 1–20.

13. Jedrzejowicz, J.; Jedrzejowicz, P. GEP-based classifier for mining imbalanced data. Expert Syst. Appl. 2021, 164, 114058.

14. Liu, X.; Zhou, H.; Wang, Z.; Liu, X.; Li, X.; Nie, C.; Li, Y. Fully convolutional neural network deep learning model fully in patients with type 2 diabetes complicated with peripheral neuropathy by high-frequency ultrasound image. Comput. Math. Methods Med. 2022, 2022, 5466173.

15. Lipton, Z.C.; Kale, D.C.; Elkan, C.; Wetzel, R. Learning to diagnose with LSTM recurrent neural networks. arXiv 2015, arXiv:1511.03677.

16. Yi, Z.; Li, S.; Yu, J.; Tan, Y.; Wu, Q.; Yuan, H.; Wang, T. Drug-drug interaction extraction via recurrent neural network with multiple attention layers. In Advanced Data Mining and Applications: 13th International Conference, ADMA 2017, Singapore, 5–6 November 2017; Springer: Berlin/Heidelberg, Germany, 2017.

17. Antoniou, A.; Storkey, A.; Edwards, H. Data Augmentation Generative Adversarial Networks; The University of Edinburgh: Edinburgh, UK, 2018.

18. Merigó, J.M.; Casanovas, M. A new Minkowski distance based on induced aggregation operators. Int. J. Comput. Intell. Syst. 2011, 4, 123–133.

19. Yang, W.; Zhao, M.; Huang, Y.; Zheng, Y. Adaptive online learning based robust visual tracking. IEEE Access 2018, 6, 14790–14798.

20. Gers, F.A.; Schmidhuber, J.; Cummins, F. Learning to forget: Continual prediction with LSTM. Neural Comput. 2000, 12, 2451–2471.

21. Cireşan, D.; Schmidhuber, J. Multi-column deep neural networks for offline handwritten Chinese character classification. In Proceedings of the 2015 International Joint Conference on Neural Networks (IJCNN), Killarney, Ireland, 12–16 July 2015; IEEE: Piscataway, NJ, USA, 2015.

22. Amini, P.; Ahmadinia, H.; Poorolajal, J.; Amiri, M.M. Evaluating the high-risk groups for suicide: A comparison of logistic regression, support vector machine, decision tree and artificial neural network. Iran. J. Public Health 2016, 45, 1179–1187.

23. Simard, P.Y.; Steinkraus, D.; Platt, J.C. Best practices for convolutional neural networks applied to visual document analysis. In Proceedings of the 7th International Conference on Document Analysis and Recognition, IEEE Computer Society, Edinburgh, UK, 3–6 August 2003; p. 958.

24. Liu, Y.; Zhang, Q.; Zhao, G.; Qu, Z.; Liu, G.; Liu, Z.; An, Y. Detecting diseases by human-physiological parameter-based deep learning. IEEE Access 2018, 7, 2169–3536.

25. Fram, E.B.; Moazami, S.; Stern, J.M. The effect of disease severity on 24-hour urine parameters in kidney stone patients with type II diabetes. Urology 2016, 87, 52–59.

26. Salhen, K.A.; Mahmoud, A.Y. Hematological profile of patients with type 2 diabetic mellitus in El-Beida, Libya. Ibnosina J. Med. Biomed. Sci. 2017, 9, 76–80.

27. Acharya, U.R.; Faust, O.; Sree, S.V.; Ghista, D.N.; Dua, S.; Joseph, P.; Ahamed, V.I.T.; Janarthanan, N.; Tamura, T. An integrated diabetic index using heart rate variability signal features for diagnosis of diabetes. Comput. Methods Biomech. Biomed. Eng. 2013, 16, 222–234.

28. Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic minority over-sampling technique. J. Artif. Intell. Res. 2002, 16, 321–357.

29. Chiclana, F.; Herrera-Viedma, E.; Herrera, F.; Alonso, S. Some induced ordered weighted averaging operators and their use for solving group decision-making problems based on fuzzy preference relations. Eur. J. Oper. Res. 2007, 182, 383–399.

| Discrete Index | Coding Standard |
|---|---|
| Gender | Male = 01, Female = 10 |
| LEU, ERY, NIT, PRO, GLC, KET, URO, BR | “−” = 001, “+/−” = 010, “+” = 100 |
| Urine color | Light yellow = 0001, Amber = 0010, Brown = 0100, Red = 1000 |
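The coding standard in the table is a one-hot scheme in which the first listed level sets the rightmost bit. The sketch below reproduces the codes for Gender, LEU, and urine color; it is only an illustration, and the names `CATEGORIES`, `one_hot`, and `encode_record` are hypothetical. The ASCII strings "-" and "+/-" stand in for the typeset "−" and "+/−".

```python
# Levels are listed in the same order as in the coding table.
CATEGORIES = {
    "Gender": ["Male", "Female"],
    "LEU": ["-", "+/-", "+"],
    "Urine color": ["Light yellow", "Amber", "Brown", "Red"],
}


def one_hot(index_name, value):
    """Return the bit string for one discrete index, e.g. Male -> '01'."""
    levels = CATEGORIES[index_name]
    position = levels.index(value)  # raises ValueError for an unknown level
    bits = ["0"] * len(levels)
    # The first level occupies the rightmost bit, the last level the leftmost.
    bits[len(levels) - 1 - position] = "1"
    return "".join(bits)


def encode_record(record):
    """Concatenate the one-hot codes of a record into a flat list of 0/1 integers."""
    encoded = []
    for name, value in record.items():
        encoded.extend(int(bit) for bit in one_hot(name, value))
    return encoded


sample = {"Gender": "Female", "LEU": "+/-", "Urine color": "Brown"}
vector = encode_record(sample)
```

Encoding each level as its own bit avoids implying an order or distance between categories such as urine colors, which a single integer code would do.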

| | Accuracy | Specificity | F1-Score | G-Mean | AUC |
|---|---|---|---|---|---|
| A0 | 50 | 0 | 66.67 | 0 | 50 |
| A1 | 59.35 | 49.15 | 63.77 | 58.13 | 60.03 |
| A2 | 88 | 91.3 | 87.5 | 87.9 | 94.72 |
| A3 | 66.94 | 58.62 | 65.81 | 68.22 | 70.7 |
| A4 | 72.22 | 65.03 | 71.77 | 72.87 | 74.12 |
| A5 | 60.75 | 57.66 | 58.87 | 61.11 | 61.55 |
| A6 | 76 | 68 | 77.78 | 75.58 | 77.6 |
| A7 | 93.57 | 94.37 | 93.33 | 93.5 | 94.37 |
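For readers who want to relate the columns above to a confusion matrix, the following sketch computes accuracy, specificity, F1-score, and G-mean as percentages. It assumes the standard definitions (G-mean as the square root of sensitivity times specificity), since the paper's own formulas are not reproduced here. The function name and the counts in the example are hypothetical, and AUC is omitted because it needs ranked scores rather than counts.

```python
import math


def binary_metrics(tp, fn, fp, tn):
    """Percent metrics from confusion-matrix counts (positive = diseased)."""
    total = tp + fn + fp + tn
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    if precision + sensitivity:
        f1 = 2 * precision * sensitivity / (precision + sensitivity)
    else:
        f1 = 0.0
    return {
        "accuracy": 100 * (tp + tn) / total,
        "specificity": 100 * specificity,
        "f1": 100 * f1,
        "g_mean": 100 * math.sqrt(sensitivity * specificity),
    }


# Hypothetical balanced test set in which every sample is predicted positive.
all_positive = binary_metrics(tp=70, fn=0, fp=70, tn=0)
```

Under these definitions, a classifier that labels every sample of a balanced test set as positive scores 50 accuracy, 0 specificity, about 66.67 F1, and 0 G-mean, which is the pattern of the A0 row. This shows why accuracy and F1 alone can hide a model that never identifies the negative class.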

| | | M1 | M2 | M3 | M4 |
|---|---|---|---|---|---|
| Original data | Unextended and Uncorrected | 52.29 | 52.86 | 55.71 | 55 |
| | Extended Uncorrected | 50 | 52.14 | 52.14 | 50 |
| | Extension and Correction | 50 | 50 | 55 | 50.71 |
| Oversampled data | Unextended and Uncorrected | 80 | 78.51 | 91.43 | 87.85 |
| | Extended Uncorrected | 92.14 | 78.51 | 91.43 | 91.43 |
| | Extension and Correction | 93.57 | 78.51 | 92.85 | 75.71 |

| | | M1 | M2 | M3 | M4 |
|---|---|---|---|---|---|
| Original data | Unextended and Uncorrected | 11.43 | 5.71 | 12.85 | 10 |
| | Extended Uncorrected | 0 | 5.71 | 5.71 | 2.86 |
| | Extension and Correction | 0 | 0 | 10 | 4.29 |
| Oversampled data | Unextended and Uncorrected | 95.71 | 81.43 | 95.71 | 95.71 |
| | Extended Uncorrected | 71.43 | 80 | 95.71 | 71.43 |
| | Extension and Correction | 94.37 | 80 | 88.57 | 80 |

| | | M1 | M2 | M3 | M4 |
|---|---|---|---|---|---|
| Original data | Unextended and Uncorrected | 68 | 67.96 | 69 | 68.97 |
| | Extended Uncorrected | 0.66 | 67.31 | 67.32 | 66.02 |
| | Extension and Correction | 0.66 | 66.67 | 68.97 | 66.34 |
| Oversampled data | Unextended and Uncorrected | 91.85 | 78.52 | 91.33 | 91.33 |
| | Extended Uncorrected | 79.54 | 78.56 | 91.33 | 75.6 |
| | Extension and Correction | 93.33 | 78.56 | 92.76 | 87.5 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yang, W.; Guo, Y.; Liu, Y. Improvement of Auxiliary Diagnosis of Diabetic Cardiovascular Disease Based on Data Oversampling and Deep Learning. Appl. Sci. 2023, 13, 5449. https://doi.org/10.3390/app13095449
