Cloud–Edge Hybrid Computing Architecture for Large-Scale Scientific Facilities Augmented with an Intelligent Scheduling System

Abstract

1. Introduction

1.1. Classical Approaches at the Synchrotron Radiation Facilities

1.2. Shanghai Synchrotron Radiation Facility (SSRF)

2. Materials and Methods

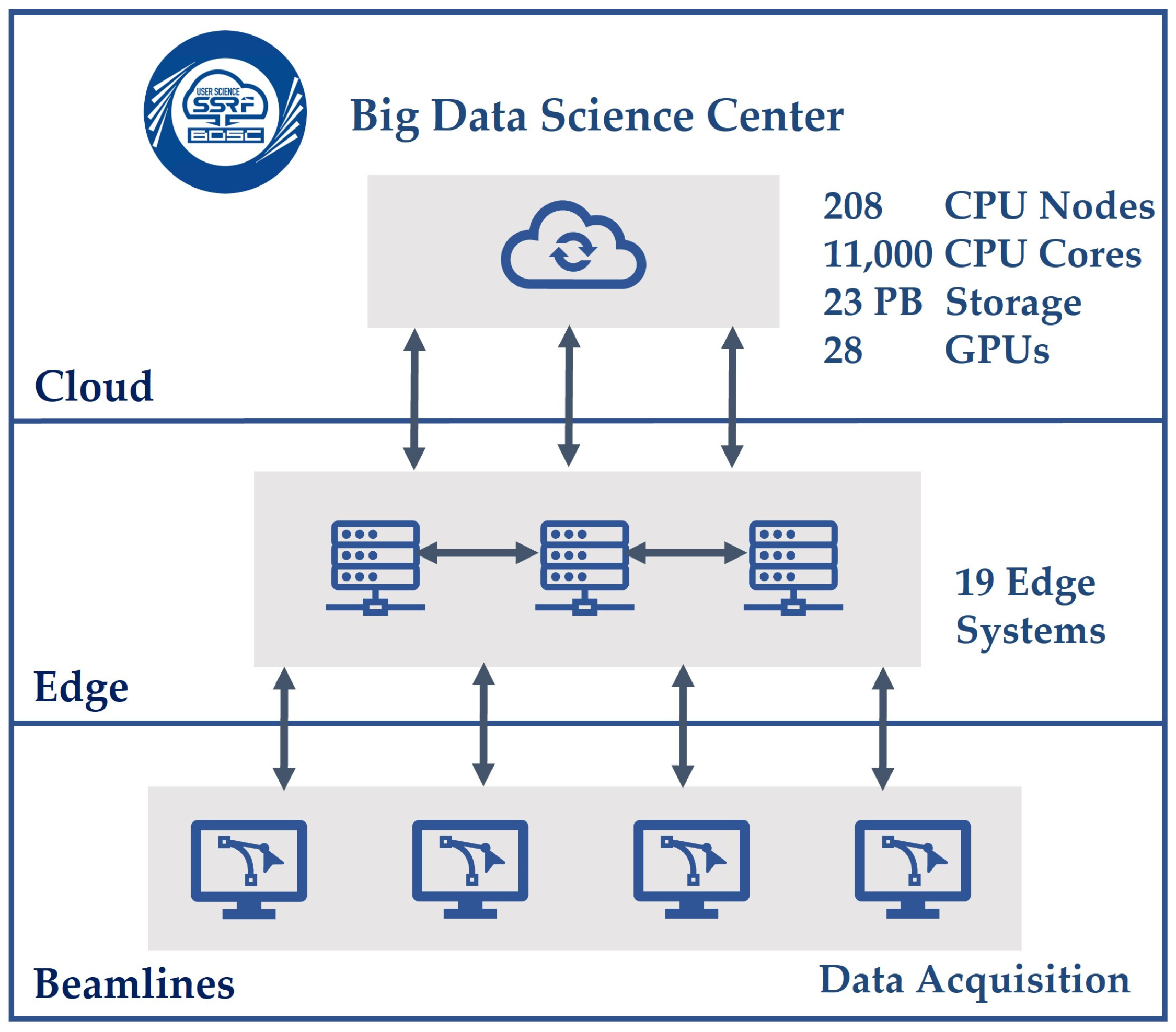

2.1. Architecture Design of the Cloud–Edge Hybrid Intelligent System (CEHIS)

2.1.1. Computing Infrastructure

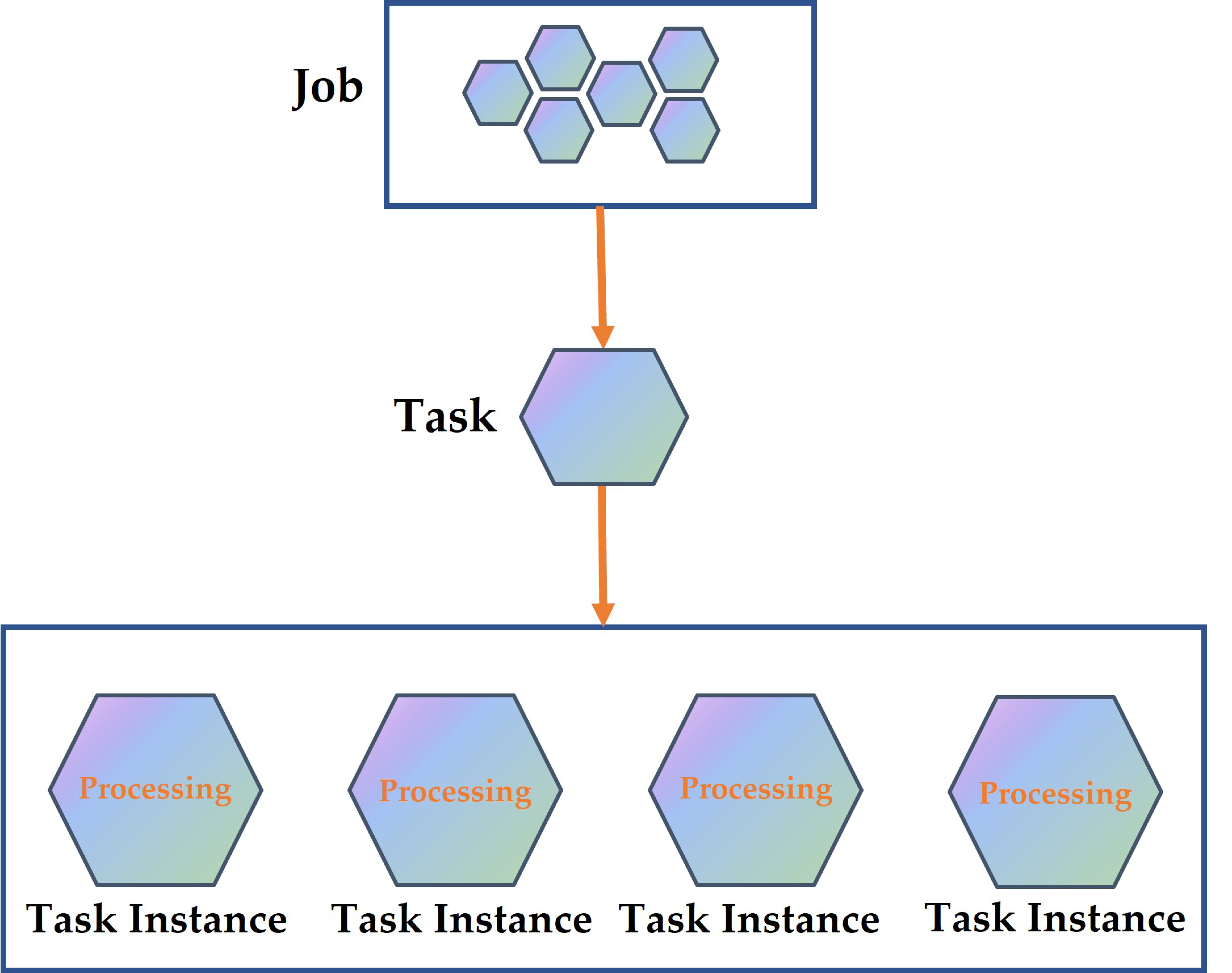

2.1.2. Modeling

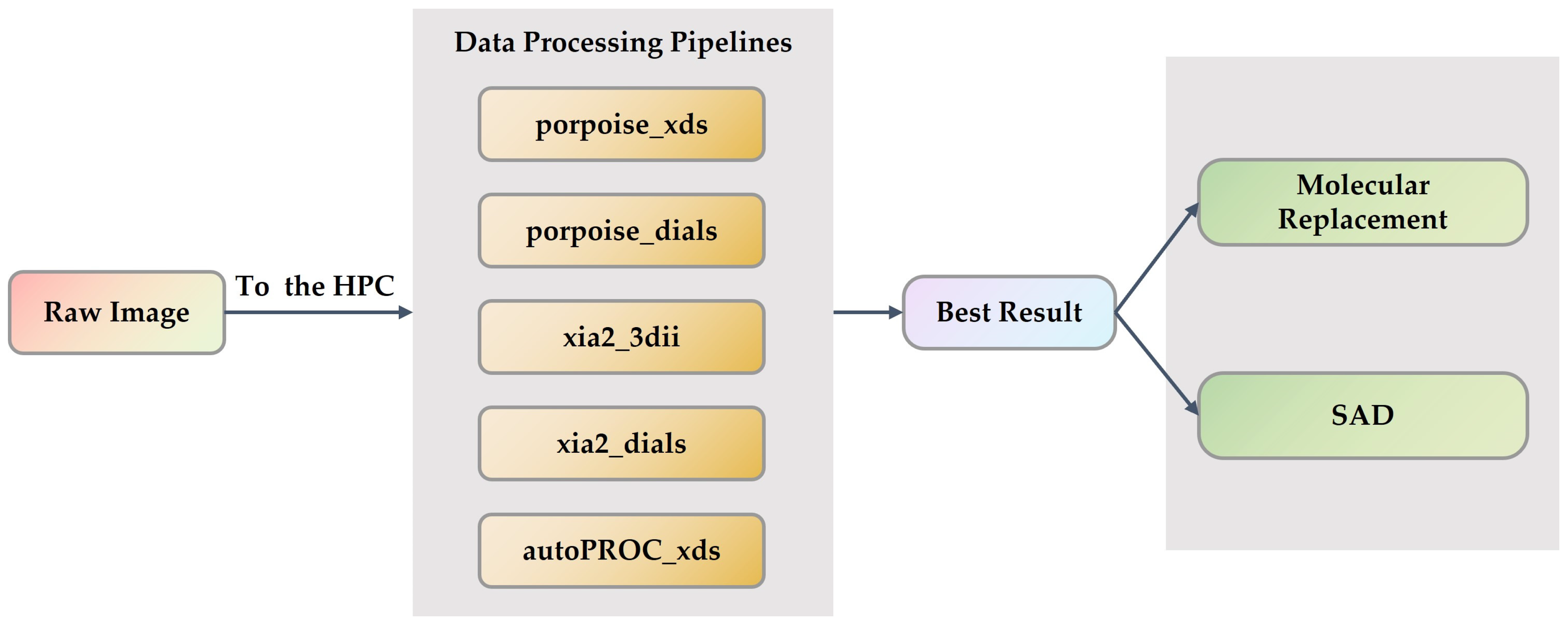

2.1.3. Pipelines

2.1.4. Service Delivery Paradigm

2.2. Task Scheduling Algorithm

2.2.1. Classical Scheduling Algorithms

- Lack of adaptability. Classical scheduling algorithms are often inflexible: once a task has been scheduled on a machine, it cannot be rescheduled. In real-world applications, however, task characteristics may change over time and with environmental conditions, so the task scheduling system must be continually adjusted and optimized.

- Lack of optimization. Traditional scheduling algorithms frequently rely on heuristic rules or greedy strategies, which makes it difficult to find the globally optimal solution. In addition, because tasks interact with one another, scheduling them one at a time is frequently insufficient to meet the optimization needs of the system.
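The "lack of optimization" point can be made concrete with a small example. The sketch below is illustrative only and is not the CEHIS scheduler: it compares a greedy list scheduler, which places each task on the currently least-loaded machine and never revisits that decision, with an exhaustive search over all assignments. The function names and task durations are hypothetical.

```python
from itertools import product


def greedy_list_schedule(durations, n_machines):
    """Assign tasks in arrival order to the least-loaded machine.

    Each placement is a purely local decision and is never revised,
    mirroring the non-reschedulable behaviour described above.
    Returns the makespan (the load of the busiest machine)."""
    loads = [0] * n_machines
    for d in durations:
        m = loads.index(min(loads))  # greedy choice based on current loads only
        loads[m] += d
    return max(loads)


def optimal_makespan(durations, n_machines):
    """Try every task-to-machine assignment (exponential; tiny inputs only)."""
    best = float("inf")
    for assignment in product(range(n_machines), repeat=len(durations)):
        loads = [0] * n_machines
        for d, m in zip(durations, assignment):
            loads[m] += d
        best = min(best, max(loads))
    return best


tasks = [3, 3, 2, 2, 2]  # hypothetical task durations
print(greedy_list_schedule(tasks, 2))  # 7
print(optimal_makespan(tasks, 2))      # 6: {3, 3} on one machine, {2, 2, 2} on the other
```

The greedy rule splits the two long tasks across both machines early and is then forced to stack a third short task on one of them, whereas the global optimum balances the load perfectly. Exhaustive search is, of course, infeasible at facility scale, which is the gap that learned schedulers aim to close.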

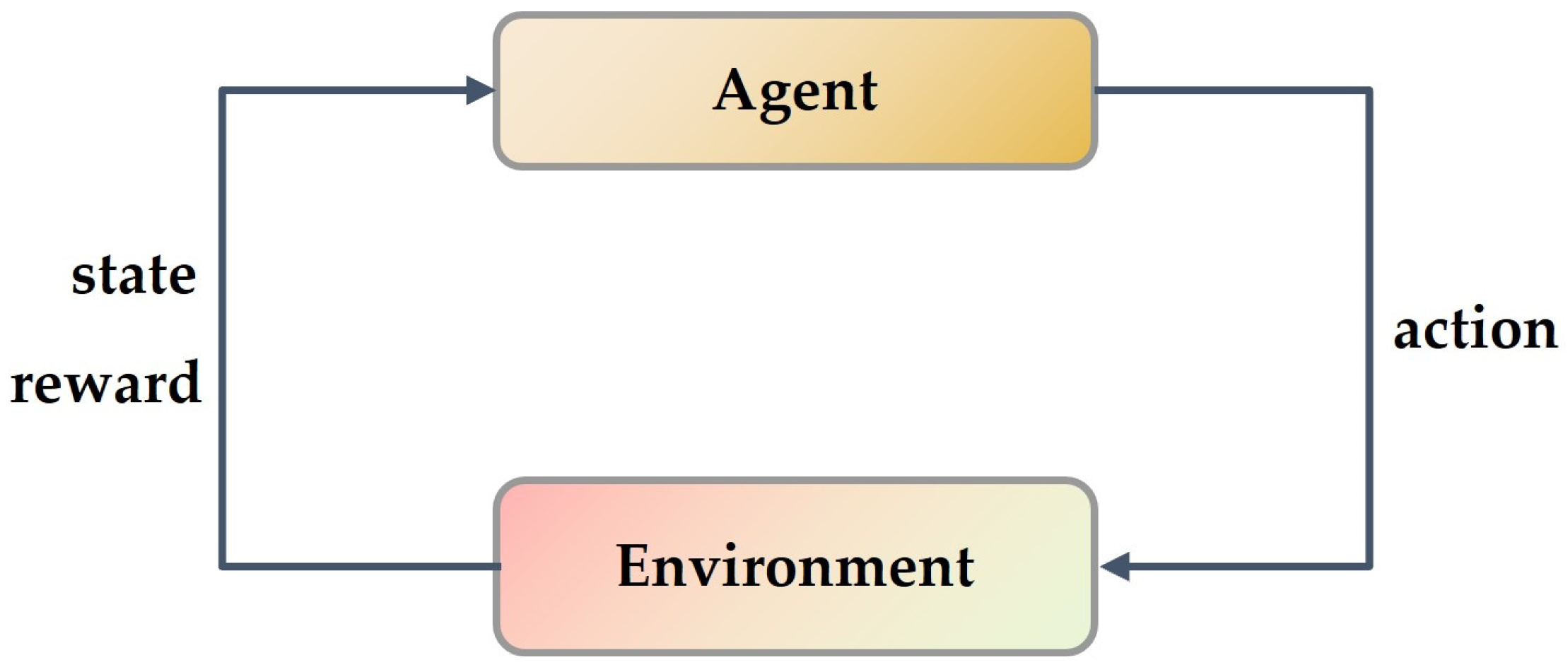

2.2.2. Deep Reinforcement Learning

2.3. Policy Gradient-Based Deep Reinforcement Learning

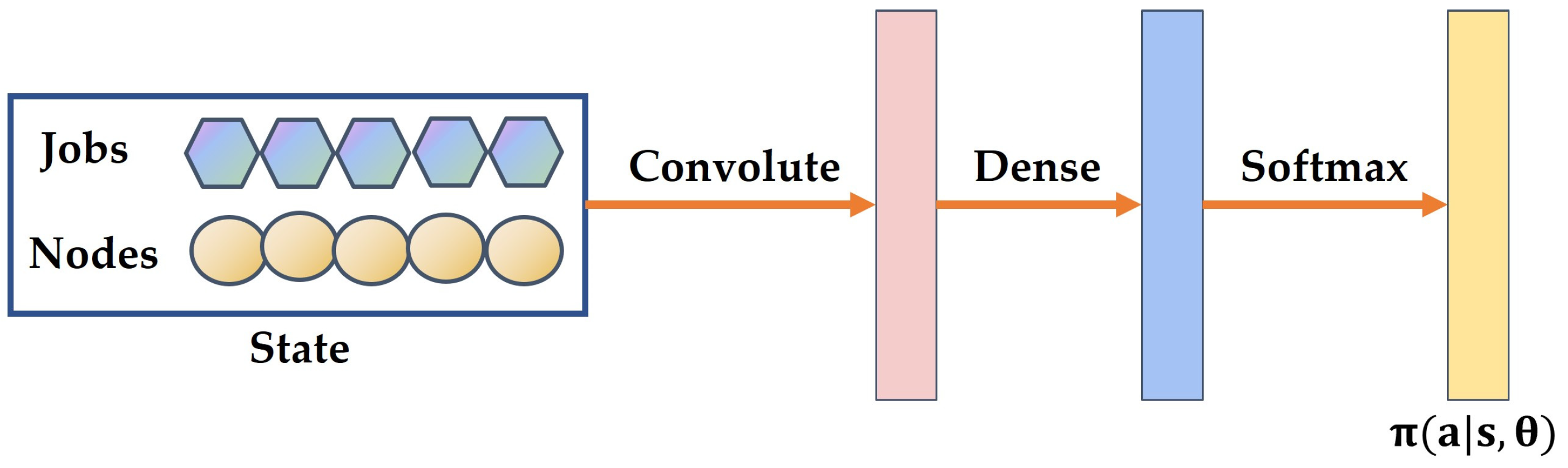

2.3.1. Policy Network

2.3.2. Policy Gradient
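The policy gradient named in this subsection can be illustrated with a minimal sketch of the standard REINFORCE (score-function) estimator. The sketch assumes a linear softmax policy in place of the paper's policy network: each action (for instance, a candidate machine for the next task) receives a logit computed from a state feature vector, the gradient of log π(a|s) is computed in closed form, and one gradient-ascent step is taken. The function names, the linear parameterization and the learning rate are illustrative assumptions, not the CEHIS implementation.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def policy(theta, state):
    """Linear softmax policy (assumed): logit_a = sum_j theta[a][j] * state[j]."""
    logits = [sum(w * x for w, x in zip(row, state)) for row in theta]
    return softmax(logits)


def grad_log_prob(theta, state, action):
    """Gradient of log pi(action | state) w.r.t. every weight theta[a][j].

    For a linear softmax this is (1[a == action] - pi(a | state)) * state[j]."""
    probs = policy(theta, state)
    return [[((1.0 if a == action else 0.0) - probs[a]) * x for x in state]
            for a in range(len(theta))]


def reinforce_step(theta, trajectory, lr=0.1):
    """One REINFORCE update: theta += lr * sum_t weight_t * grad log pi(a_t | s_t).

    `trajectory` is a list of (state, action, weight) tuples, where weight is
    the return or advantage credited to that action."""
    new_theta = [row[:] for row in theta]
    for state, action, weight in trajectory:
        g = grad_log_prob(theta, state, action)
        for a in range(len(theta)):
            for j in range(len(state)):
                new_theta[a][j] += lr * weight * g[a][j]
    return new_theta


theta = [[0.0, 0.0], [0.0, 0.0]]  # hypothetical: 2 actions x 2 state features
state = [1.0, 0.5]
theta = reinforce_step(theta, [(state, 0, 1.0)])
print(policy(theta, state))  # action 0 now has probability above 0.5
```

A positive weight makes the chosen action more likely in that state and a negative weight makes it less likely. A deep policy network replaces the linear logits with a neural network, but the update has the same form.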

2.3.3. Advantage Function and Baseline

2.3.4. Reward-to-Go Method
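Both refinements named in the two subsection headings above are standard variance-reduction devices for the policy gradient. With reward-to-go, each action is credited only with the rewards that follow it. With a baseline, a reference value is subtracted from that return to form an advantage. The minimal sketch below assumes episodes of equal length and a time-dependent baseline equal to the mean reward-to-go across episodes at each step. The paper's exact baseline is not given in this section, and the rewards in the example are hypothetical.

```python
def rewards_to_go(rewards, gamma=1.0):
    """G_t = sum_{k >= t} gamma**(k - t) * r_k, accumulated backwards in O(T)."""
    out = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        out[t] = running
    return out


def advantages_with_baseline(episodes, gamma=1.0):
    """A_t = G_t - b_t, where b_t is the mean reward-to-go at step t across episodes.

    Assumes every episode has the same length."""
    rtgs = [rewards_to_go(ep, gamma) for ep in episodes]
    horizon = len(rtgs[0])
    baseline = [sum(r[t] for r in rtgs) / len(rtgs) for t in range(horizon)]
    return [[r[t] - baseline[t] for t in range(horizon)] for r in rtgs]


# Hypothetical per-step rewards (e.g. negative penalties for waiting tasks).
print(rewards_to_go([-1, -1, -1]))                             # [-3.0, -2.0, -1.0]
print(advantages_with_baseline([[-1, -1, -1], [-2, 0, -1]]))  # [[0.0, -0.5, 0.0], [0.0, 0.5, 0.0]]
```

The resulting advantages can serve as the per-action weights in a REINFORCE-style update. Actions whose outcome beats the average for that time step are reinforced, and the rest are discouraged.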

3. Results

3.1. Computing Architecture

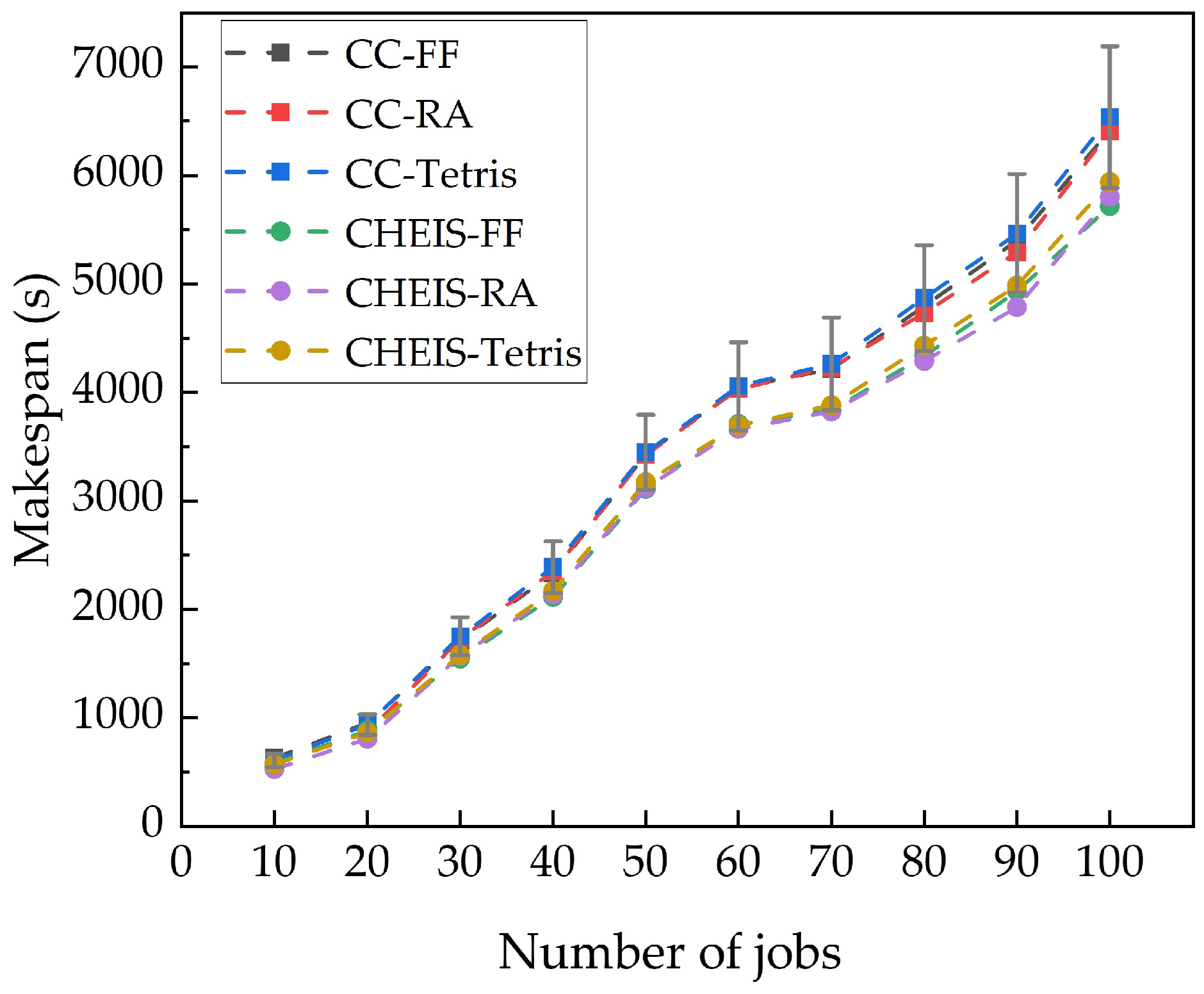

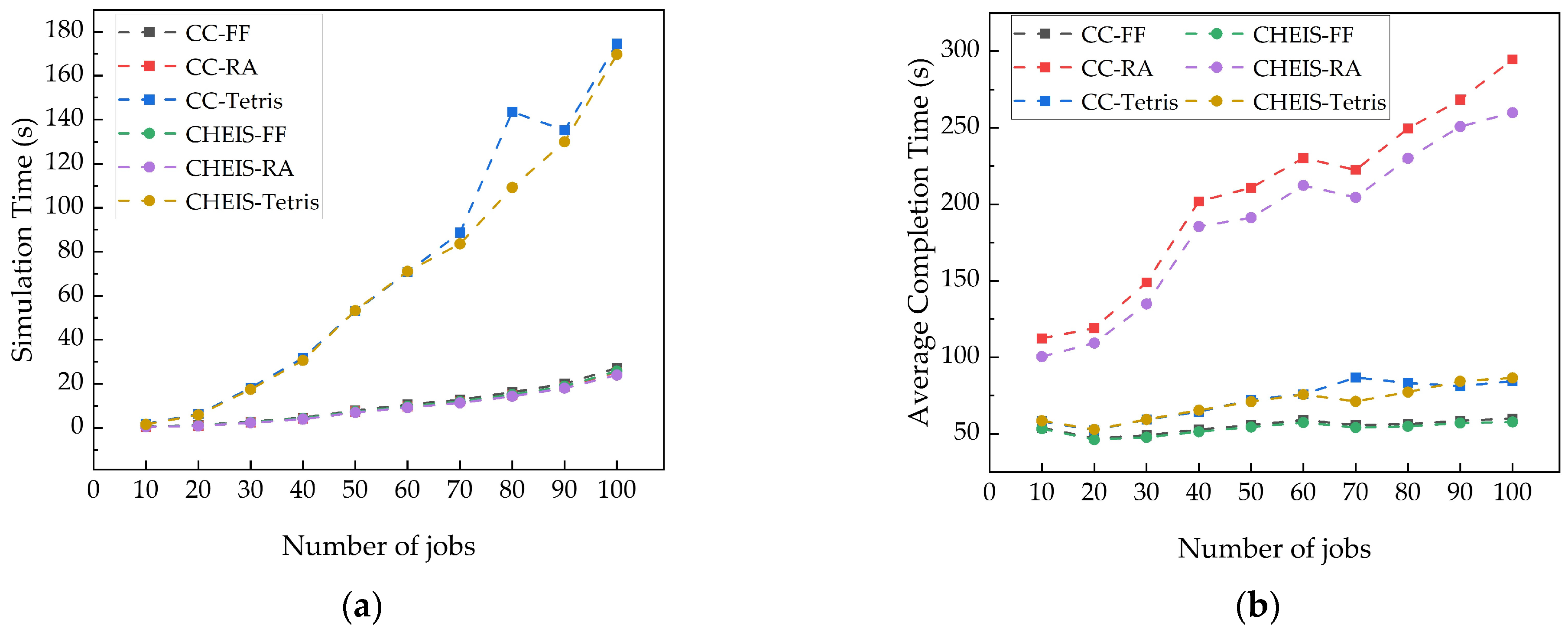

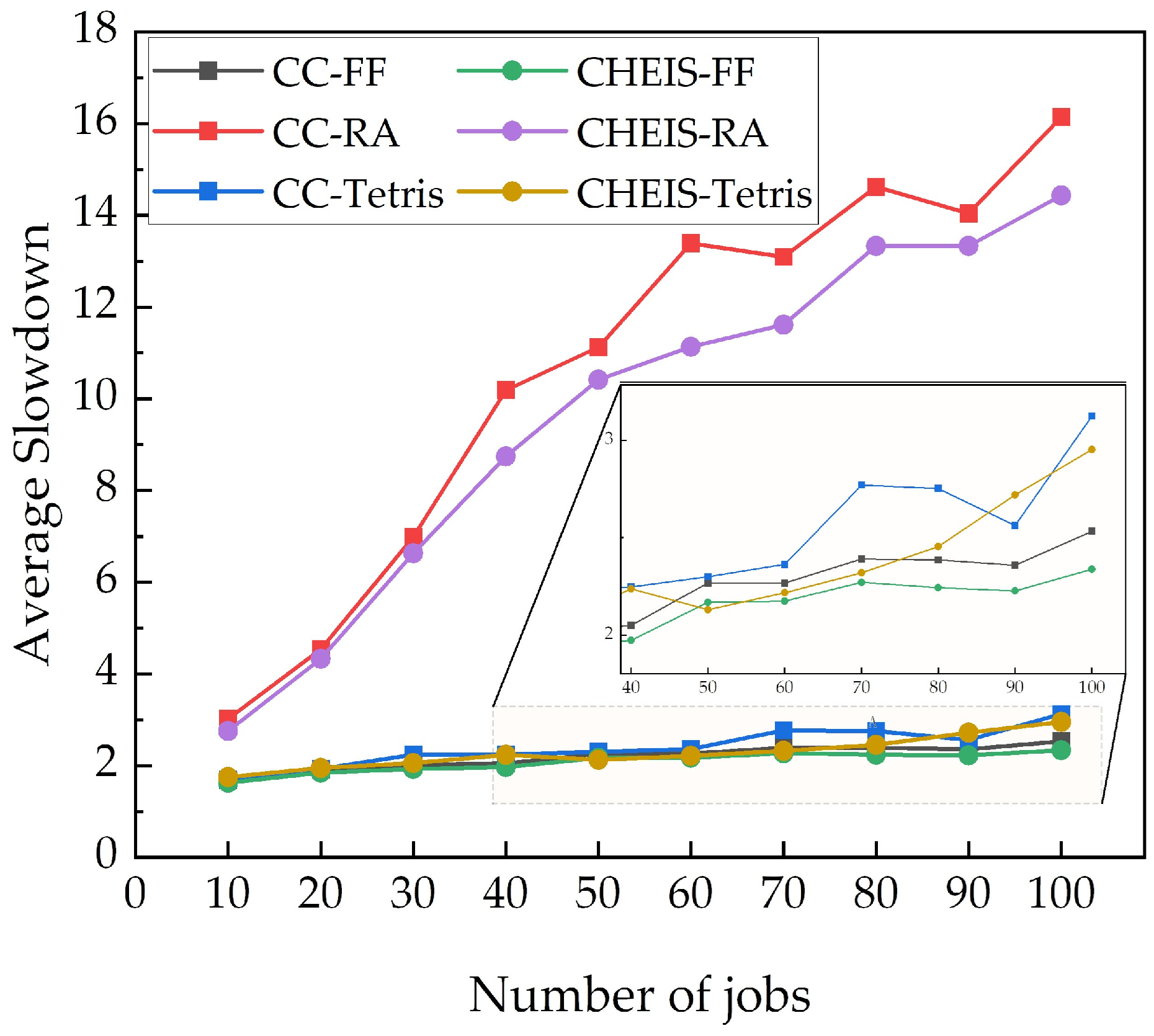

3.2. Intelligent Task Scheduler

4. Discussion and Conclusions

- Improved efficiency. The shorter makespan suggests that the CEHIS can process and analyze data more efficiently, enabling faster completion of the experiments and the analysis tasks.

- Enhanced resource utilization. The CEHIS allows computing resources to be allocated and used more effectively, thereby reducing resource wastage and overall costs.

- Better real-time responsiveness. Because edge nodes are located closer to the data sources, the CEHIS can provide faster response times and lower latency, meeting the real-time requirements of the SSRF beamlines.

- Scalability. The CEHIS offers a more scalable solution, as it can flexibly allocate resources between the cloud and edge nodes, making it better suited to handling the growing data volumes and computational demands expected in the future.

- Encouraging interdisciplinary research. The improved efficiency and responsiveness introduced by the CEHIS can facilitate collaborations across different research fields, fostering innovation and scientific discovery.
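The "improved efficiency" point can be quantified from the makespan rows of the two scheduler-comparison tables (RA, FF, Tetris, DRL) reported with this article. The short sketch below reuses only those published numbers. It computes the percentage by which the DRL scheduler's makespan undercuts the best of the three classical baselines in each table. The labels "first" and "second" simply follow the order in which the tables appear.

```python
# Makespan values copied from the two scheduler-comparison tables.
makespans = {
    "first": {"RA": 666, "FF": 673, "Tetris": 666, "DRL": 632},
    "second": {"RA": 1316, "FF": 1331, "Tetris": 1433, "DRL": 1251.41},
}


def relative_reduction(baseline, candidate):
    """Percentage by which `candidate` shortens the makespan relative to `baseline`."""
    return 100.0 * (baseline - candidate) / baseline


for table, row in makespans.items():
    best_classical = min(v for k, v in row.items() if k != "DRL")
    print(table, round(relative_reduction(best_classical, row["DRL"]), 2))
# first 5.11
# second 4.91
```

On these figures, the DRL scheduler shortens the makespan by roughly 5% relative to the strongest classical baseline in both comparisons.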

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Beamline | Area of Application | Average Output (per Day) | Max Burst (per Day) |
|---|---|---|---|
| BL20U1/U2 | Energy material (E-line) | 300 MB | 500 MB |
| BL11B | Hard X-ray spectroscopy | 300 MB | 500 MB |
| BL16U1 | Medium-energy spectroscopy | 300 MB | 500 MB |
| BL07U | Spatial-resolved and spin-resolved angle-resolved photoemission spectroscopy and magnetism (S2-line) | 300 MB | 500 MB |
| | Resonant inelastic X-ray scattering | 300 MB | 500 MB |
| BL02U1 | Membrane protein crystallography | 12 TB | 20 TB |
| BL02U2 | Surface diffraction | 12 TB | 20 TB |
| BL03SB | Laue micro-diffraction | 7.5 TB | 15 TB |
| BL13U | Hard X-ray nanoprobe | 5 TB | 10 TB |
| BL18B | 3D nano imaging | 5 TB | 10 TB |
| BL05U, BL06B | Dynamics (D-line) | 1.5 TB | 2 TB |
| BL10U1 | Time-resolved ultra-small-angle X-ray scattering | 5 TB | 10 TB |
| BL16U2 | Fast X-ray imaging | 60 TB | 506 TB |
| BL10U2 | Biosafety P2 protein crystallography | 10 TB | 15 TB |
| BL13SSW | Radioactive materials | 300 MB | 500 MB |
| BL12SW | Ultra-hard X-ray applications | 5 TB | 10 TB |
| BL03SS(ID) | Laser electron gamma source (SLEGS) | / | / |
| BL09B | X-ray test beamline | / | / |
| Total | | 124 TB | 616 TB |
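To express the per-day volumes in Appendix A in network terms, the sketch below converts a daily data volume into the equivalent sustained bit rate. It assumes decimal units (1 TB = 10^12 bytes, 1 Gb = 10^9 bits) and that the data are spread evenly over 24 hours. Short bursts within a day would therefore require more bandwidth than these averages suggest. The function name is illustrative.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def tb_per_day_to_gbit_per_s(tb_per_day):
    """Convert decimal terabytes per day into sustained gigabits per second."""
    bits_per_day = tb_per_day * 10**12 * 8
    return bits_per_day / SECONDS_PER_DAY / 10**9


print(round(tb_per_day_to_gbit_per_s(60), 2))   # 5.56  (BL16U2, average)
print(round(tb_per_day_to_gbit_per_s(506), 2))  # 46.85 (BL16U2, max burst)
print(round(tb_per_day_to_gbit_per_s(124), 2))  # 11.48 (facility total, average)
```

Under these assumptions, BL16U2 alone can approach roughly 47 Gb/s on its peak days, which illustrates why processing close to the beamline is attractive.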


| Nodes | Number | Configuration |
|---|---|---|
| CPU Node | 48 | Intel Xeon Gold 6104 (2.3 GHz, 18 Core) × 2; 16 GB DDR4 Memory × 8 |
| Fat Node | 1 | Intel Xeon E7-8860v4 (2.2 GHz, 18 Core) × 16; 16 GB DDR4 Memory × 128 |
| PCIe GPU Node | 4 | Intel Xeon 5118 (2.3 GHz, 12 Core) × 2; NVIDIA Tesla P100 GPU Card × 2; 16 GB DDR4 Memory × 8 |
| NVLink GPU Node | 1 | Intel Xeon Gold 6132 (2.6 GHz, 14 Core) × 4; NVIDIA Tesla P100 GPU Card × 4; 32 GB DDR4 Memory × 32 |

| Nodes | Number | Configuration |
|---|---|---|
| CPU Node | 160 | Intel Xeon Gold 5320 (2.2 GHz, 26 Core) × 2; 32 GB DDR4 Memory × 8 |
| Fat Node | 2 | Intel Xeon 8260 (2.4 GHz, 24 Core) × 8; 16 GB DDR4 Memory × 64 |
| GPU Node | 4 | Intel Xeon 6226R (2.3 GHz, 18 Core) × 2; NVIDIA A100 GPU Card × 4; 16 GB DDR4 Memory × 12 |

| CPU | Memory | Storage | GPU | Application | Beamline |
|---|---|---|---|---|---|
| x86 × 2, 2.0 GHz, 64 cores | 512 GB | 184.32 TB (Intel S4510 SSD × 24) | / | Big metadata; real-time pipelines | BL02U1, BL10U2 |
| x86 × 2, 2.9 GHz, 16 cores | 192 GB | 184.32 TB (Intel S4510 SSD × 24) | RTX5000 × 2 | 3D data pipelines | BL12SW, BL13U |
| x86 × 2, 2.9 GHz, 16 cores | 192 GB | 192 TB (7200 RPM × 12) | / | Big metadata | BL16U2 |
| x86 × 2, 2.0 GHz, 64 cores | 512 GB | 96 TB (7200 RPM × 6) | / | Big metadata | BL03SB, BL13SSW |
| x86 × 2, 2.9 GHz, 16 cores | 192 GB | 48 TB (7200 RPM × 3) | RTX3080TI | 3D data pipelines; big metadata; real-time pipelines | BL17M, BL18B |
| x86 × 2, 2.9 GHz, 16 cores | 192 GB | 184.32 TB (Intel S4510 SSD × 24) | RTX5000 × 2 | 3D data pipelines | BL12SW, BL13U, etc. |

| Metric | RA | FF | Tetris | DRL |
|---|---|---|---|---|
| Makespan | 666 | 673 | 666 | 632 |
| ST | 1.67 | 2.07 | 3.77 | 86.13 |
| ACT | 39.42 | 38.01 | 38.75 | 38.82 |
| AS | 1.21 | 1.19 | 1.17 | 1.17 |

| Metric | RA | FF | Tetris | DRL |
|---|---|---|---|---|
| Makespan | 1316 | 1331 | 1433 | 1251.41 |
| ST | 6.26 | 6.36 | 18.56 | 223.19 |
| ACT | 54.52 | 42.97 | 45.82 | 48.84 |
| AS | 2.03 | 1.59 | 1.32 | 1.32 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ye, J.; Wang, C.; Chen, J.; Wan, R.; Li, X.; Sepe, A.; Tai, R. Cloud–Edge Hybrid Computing Architecture for Large-Scale Scientific Facilities Augmented with an Intelligent Scheduling System. Appl. Sci. 2023, 13, 5387. https://doi.org/10.3390/app13095387
