Abstract

The most advanced technologies for the treatment of type 1 diabetes, such as sensor-pump integrated systems or the artificial pancreas, require accurate glucose predictions over a given future time horizon as a basis for decision-making support systems. Seasonal stochastic models are data-driven algebraic models that use recent history data and periodic trends to accurately estimate time series data, such as glucose concentration in diabetes. These models have been proven to be a good option to provide accurate blood glucose predictions under free-living conditions, and they can cope with patient variability under variable-length time-stamped daily events in supervision and control applications. However, the seasonal-model-based framework usually needs several months of data per patient to adequately train a personalized glucose predictor for each patient. In this work, an in silico analysis of prediction accuracy is presented, considering the effect of training a glucose predictor with data from a cohort of patients (population) instead of data from a single patient (individual). The feasibility of population data as an input to the model is assessed, and the effect of the dataset size on the minimum amount of data required for a valid training of the models is studied. Results show that glucose predictors trained with population data can provide predictions with errors of similar magnitude to those trained with individualized data. Overall median root mean squared error (RMSE) (including 25% and 75% percentiles) for the predictor trained with population data are , , , , , mg/dL, for prediction horizons (PH) of min, respectively, while the baseline of the individually trained RMSE results are , , , , , mg/dL, both trained with 16 weeks of data. Results also show that the use of the population approach reduces the required training data by half, without losing any prediction capability.

1. Introduction

In 2021, diabetes affected 537 million people worldwide [1], and this figure is estimated to rise to 643 million by 2030 and 783 million by 2045, which makes diabetes one of the major health challenges of the 21st century. Type 1 diabetes (T1D) is the most severe variant of this condition and affects about 10% of people with diabetes. In people with T1D, β-cells in the pancreas are destroyed by an autoimmune response, impairing or completely suppressing insulin secretion and causing blood glucose (BG) concentrations to escape the safe range of 70–180 mg/dL. Since the discovery of insulin, T1D treatment has usually consisted of the exogenous administration of insulin, or, more recently, insulin analogs, in order to avoid long-term complications due to hyperglycemia (high BG concentration). Unfortunately, insulin overdosing may reduce BG concentration to hypoglycemic levels, which may lead to severe consequences such as coma and death when untreated. Ambulatory BG control is demanding for patients, who must make many critical decisions and calculations per day and constantly monitor BG levels to avoid hyper- and hypoglycemia.

Nowadays, glucose concentration can be measured in real time using continuous glucose monitoring (CGM) devices [2], which are increasingly common in the treatment and supervision of T1D. However, accurate glucose predictions over long time horizons are still needed to help prevent hyperglycemic and, especially, hypoglycemic episodes [3,4]. Glucose prediction is a key component of sensor–pump integrated systems [5] and artificial pancreas (AP) systems [6,7,8,9].

The literature offers a variety of alternatives for BG prediction in T1D, such as linear empirical dynamic models, multivariate nonlinear regression techniques, extended Kalman filters, and data mining or artificial intelligence approaches, among others [10,11,12]. More recently, deep learning and meta-learning approaches [13,14,15] have yielded very accurate predictions when trained on population data, although these models need exogenous inputs that may not be reliably available or accurate. A promising approach is the use of seasonal stochastic local models [16,17], since these models provide reliable glucose predictions under high intra-patient variability for long prediction horizons. The rationale is that behaviors observed in similar past scenarios can help improve predictions at the present moment. Seasonal stochastic time series models such as SARIMA (seasonal autoregressive integrated moving average) or SARIMAX (SARIMA including eXogenous variables) have exhibited good accuracy for postprandial BG prediction over long prediction horizons [16], including challenging scenarios with a variety of meals and exercise sessions [17]. Seasonal local modeling also proved successful under real-life scenarios [18], but at least four months of data from each patient were needed to properly train the personalized system. SARIMA models have recently been shown to provide valid short-term predictions on real-life experimental datasets [19], holding their ground against neural networks when predicting glucose in two different experimental benchmark datasets.

Thus, it is desirable to reduce as much as possible the individual historical data needed to train these models, so that valid glucose predictions can be provided to the patient and clinical staff as soon as possible. The question that motivated this work is whether training with population data, composed of smaller datasets from many patients, provides accurate enough glucose predictions when applied to an individual. Additionally, we investigated to what extent the amount of individual data required for training can be reduced while maintaining prediction accuracy.

The main purpose of this work is to train seasonal data-based models with population data and to perform an in silico study of their prediction capabilities, in order to analyze whether population data can substitute individual patient data while maintaining accuracy. Additionally, we study the effect of reducing the volume of data (number of weeks per patient included in the population data) and how this reduction affects the feasible prediction horizon.

2. Material and Methods

The main contribution of this work is the use of global glucose predictors, which rely on seasonal stochastic models trained with data from a population of patients instead of data from the same individual, to estimate future glucose. Apart from data curation and partitioning, the methodology is very similar to the procedure described in [18] for seasonal stochastic local modeling. A summary of the method is given next, including the additions and modifications to the original procedure.

2.1. Data Overview

CGM usage has grown extraordinarily in recent years, and its data are easier for researchers to access than before. Indeed, diabetes management software such as Tidepool or SocialDiabetes gathers and stores these data, facilitating data curation and access by researchers [20,21]. Furthermore, full repositories of experimental CGM data are freely available to download, e.g., the rePLACE-BG diabetes dataset [22]. In this work, we opted to use in silico data consisting of two datasets (one for training, one for validation) of ten virtual adults from the educational version of the UVA/Padova simulator [23], with an exercise model add-on [24] and additional sources of intra-patient variability. Both datasets were generated following open-loop therapy (the basal insulin and insulin-to-carbohydrate ratios provided by the simulator were used). The main characteristics (events and variability) of the simulated scenario were chosen to represent the real life of people with T1D as closely as possible, and are summarized next:

- A nominal day with three meals of 40, 90 and 60 g of carbohydrates at 7:00, 14:00 and 21:00 h was simulated.

- Actual mealtimes and carbohydrate intakes varied around nominal values following a normal distribution, with a standard deviation of 20 min for mealtime and a 10% coefficient of variation for meal size.

- Meal absorption dynamics were changed at each meal by randomly selecting one of the meal model parameter sets available from the simulator [23].

- Meal absorption rate at each meal varied following a uniform distribution within ±30%, and carbohydrate bioavailability within ±10%, around the selected nominal values.

- Carbohydrate counting errors by the patient were considered to follow a uniform distribution between −30% and +10%, following results in [25] where a trend to meal underestimation is reported.

- Insulin absorption pharmacokinetics varied within ±30%, according to the intra-patient variability reported in [26].

- Circadian variability of insulin sensitivity was considered with variations within ±30% around nominal sensitivity, reproducing changes in basal insulin requirements in the adult population reported in [27].

- The 15–15 rule [28] was used to treat hypoglycemia (15 g of carbohydrates were administered when glucose went below 70 mg/dL, and repeated if after 15 min hypoglycemia was still present).
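The meal-related variability sources listed above can be sketched as a sampler that perturbs the nominal meal plan of one simulated day. This is a minimal Python illustration under stated assumptions, not the simulator configuration used in this work: the function `sample_day_meals` and its field names are hypothetical, the random selection of meal-model parameter sets, the insulin and sensitivity variability, and the 15–15 rule are left out, and the sampled values would still have to be passed to the UVA/Padova simulator.

```python
import numpy as np

# Nominal meal plan of the scenario: (label, hour of day, grams of carbohydrate)
NOMINAL_MEALS = [("Breakfast", 7.0, 40.0), ("Lunch", 14.0, 90.0), ("Dinner", 21.0, 60.0)]


def sample_day_meals(rng):
    """Draw one day of meals with the meal variability described in the scenario.

    Hypothetical helper: field names are illustrative, not a simulator API.
    """
    meals = []
    for label, hour, grams in NOMINAL_MEALS:
        counting_error = rng.uniform(-0.30, 0.10)          # patient carb-counting error
        carbs = grams * (1.0 + rng.normal(0.0, 0.10))      # 10% coefficient of variation
        meals.append({
            "label": label,
            "time_min": hour * 60.0 + rng.normal(0.0, 20.0),   # SD of 20 min around nominal time
            "carbs_g": carbs,
            "absorption_factor": rng.uniform(0.7, 1.3),        # absorption rate within +/-30%
            "bioavailability_factor": rng.uniform(0.9, 1.1),   # bioavailability within +/-10%
            "counting_error": counting_error,
            # Carbohydrates the patient would announce for the bolus calculation
            "announced_carbs_g": carbs * (1.0 + counting_error),
        })
    return meals
```

Each call returns the three perturbed meals of one day; repeating it for every simulated day yields a training or validation scenario of the desired length.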

Training and validation datasets were generated using the same scenario. Four months of data were generated for the training dataset, representing the maximum data per patient available. The actual length of training data used was varied in the study performed according to the scenarios described in Section 2.8. A fixed length of two months was considered for the validation dataset.

In both datasets, besides CGM data, time stamps and labels for the following events were generated: Breakfast, Lunch, Dinner, Night and Hypoglycemia treatment. The Night event was considered to start 6 h after dinner. All rescue carbs administered in a hypoglycemia treatment were gathered in a single Hypoglycemia treatment event, starting at the time of the first rescue carb intake. This will be represented, for each patient $p$, by the set of time-ordered pairs

$$\mathcal{E}_p = \left\{ (t_i^p, e_i^p) \;:\; i = 1, \dots, N_p \right\},$$

where $t_i^p$ is the timestamp for the i-th event, $t_i^p < t_{i+1}^p$, $e_i^p$ is the label of the i-th event, $e_i^p \in \{\text{Breakfast}, \text{Lunch}, \text{Dinner}, \text{Night}, \text{Hypoglycemia treatment}\}$, and $N_p$ is the number of events.

2.2. CGM Data Partitioning

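Seasonality must be enforced before building seasonal stochastic local models from the training dataset. Since the starting time and duration of events in the CGM historical data vary between event instances, the original CGM time series is partitioned into a set of “event-to-event” time subseries using the timestamps and labels reported in the set $\mathcal{E}_p$. Although different options exist to build groups (from a single partition, if event labels are neglected, to multiple partitions, if all event labels are considered), three partitions are considered in this work (meals, night and hypoglycemia treatment). This partitioning is a compromise that only requires information about meal and hypoglycemia time instants (which can be extracted automatically from data), and it performed well in previous studies [18]. Thus, consider for patient $p$ the event groups

$$\mathcal{E}_p = \mathcal{E}_p^{M} \cup \mathcal{E}_p^{N} \cup \mathcal{E}_p^{H},$$

where

$$\mathcal{E}_p^{M} = \{ (t_i^p, e_i^p) \in \mathcal{E}_p : e_i^p \in \{\text{Breakfast}, \text{Lunch}, \text{Dinner}\} \},$$
$$\mathcal{E}_p^{N} = \{ (t_i^p, e_i^p) \in \mathcal{E}_p : e_i^p = \text{Night} \},$$
$$\mathcal{E}_p^{H} = \{ (t_i^p, e_i^p) \in \mathcal{E}_p : e_i^p = \text{Hypoglycemia treatment} \}.$$

Given the index sets for patient $p$

$$I_p^{G} = \{ i : (t_i^p, e_i^p) \in \mathcal{E}_p^{G} \}, \qquad G \in \{M, N, H\},$$

and denoting by $y_i^p$ the time subseries given by the segment of CGM data from $t_i^p$ to $t_{i+1}^p$ for patient $p$, the following CGM data partitions are built:

$$\mathcal{P}_p^{M} = \{ y_i^p : i \in I_p^{M} \}, \tag{9}$$
$$\mathcal{P}_p^{N} = \{ y_i^p : i \in I_p^{N} \}, \tag{10}$$
$$\mathcal{P}_p^{H} = \{ y_i^p : i \in I_p^{H} \}. \tag{11}$$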

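In this work, population data are also needed. In this case, the individual partitions can be aggregated to create population partitions, such as those including the meals, the nights and the hypoglycemia treatments of the whole population:

$$\mathcal{P}^{M} = \left( \mathcal{P}_1^{M}, \mathcal{P}_2^{M}, \dots, \mathcal{P}_{N_P}^{M} \right), \tag{12}$$
$$\mathcal{P}^{N} = \left( \mathcal{P}_1^{N}, \mathcal{P}_2^{N}, \dots, \mathcal{P}_{N_P}^{N} \right), \tag{13}$$
$$\mathcal{P}^{H} = \left( \mathcal{P}_1^{H}, \mathcal{P}_2^{H}, \dots, \mathcal{P}_{N_P}^{H} \right), \tag{14}$$

where $N_P$ is the number of patients in the cohort; in our case, $N_P = 10$.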

This aggregates sequentially (the partition from one patient after the previous one) the partitions of all patients into a single partition. Therefore, when using the aggregated population partition, the identification procedure is patient-blind.
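The partitioning and the sequential population aggregation described in this section can be illustrated with a short sketch. It assumes timestamps in minutes, CGM samples stored as NumPy arrays, and hypothetical helper names (`add_night_events`, `partition_patient`, `aggregate_population`); it also skips the subseries after the last event, which has no successor timestamp. The patient index of every subseries is kept because the per-patient training/validation split of Section 2.5 needs it.

```python
import numpy as np

# Event labels grouped into the three partitions used in the text
GROUP_OF = {
    "Breakfast": "meal", "Lunch": "meal", "Dinner": "meal",
    "Night": "night", "Hypoglycemia treatment": "hypo",
}


def add_night_events(events, delay_min=6 * 60):
    """Add a Night event 6 h after every Dinner event (timestamps in minutes)."""
    nights = [(t + delay_min, "Night") for t, label in events if label == "Dinner"]
    return sorted(list(events) + nights)


def partition_patient(t_cgm, y_cgm, events):
    """Cut one patient's CGM record into event-to-event subseries, grouped by event type."""
    parts = {"meal": [], "night": [], "hypo": []}
    events = sorted(events)  # time-ordered (t_i, e_i) pairs
    # Subseries i covers [t_i, t_{i+1}); the last event has no successor and is skipped
    for (t_start, label), (t_end, _) in zip(events[:-1], events[1:]):
        mask = (t_cgm >= t_start) & (t_cgm < t_end)
        parts[GROUP_OF[label]].append(y_cgm[mask])
    return parts


def aggregate_population(patient_parts):
    """Stack the partitions patient after patient, keeping the owner of every subseries."""
    population = {"meal": [], "night": [], "hypo": []}
    owners = {"meal": [], "night": [], "hypo": []}
    for p, parts in enumerate(patient_parts):
        for group, subseries in parts.items():
            population[group].extend(subseries)
            owners[group].extend([p] * len(subseries))
    return population, owners
```

Because the population partitions are plain concatenations, the later identification step cannot tell which patient a subseries came from, which is the patient-blind behavior described above.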

2.3. Data Regularization

Each CGM time subseries in the training dataset spans from a given event timestamp, $t_i^p$, to the next event timestamp, $t_{i+1}^p$. In order to enforce seasonality, the lengths of the time subseries must be regularized. Time subseries shorter than the maximum length found in the partition where they are included are padded with blank values ("not a number", NaN) until they reach that maximum length. As a result, the time subseries length is regularized and seasonality can be enforced (see Section 2.6). Note that most time subseries will have blanks in their final positions, which must be adequately treated as missing data in the clustering and identification processes. Thus, the regularized length will be different for each partition (meal, night and hypoglycemia treatment), both for each individual patient and for the population.
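A minimal sketch of the regularization step, assuming each partition is a list of 1-D NumPy arrays; `regularize` is a hypothetical helper that pads every subseries with NaN up to the longest length in its partition and stacks the result into a matrix.

```python
import numpy as np


def regularize(partition):
    """Pad every subseries with NaN up to the longest subseries in the partition.

    Returns an (n_subseries, max_len) matrix; blanks sit in the final positions.
    """
    max_len = max(len(s) for s in partition)
    out = np.full((len(partition), max_len), np.nan)
    for row, s in enumerate(partition):
        out[row, : len(s)] = s
    return out
```

Keeping the blanks as NaN, rather than filling them with a value, lets the clustering and identification steps recognize them as missing data.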

2.4. Data Clustering

Regularized partitions in the training dataset must be clustered to gather similar glucose responses prior to local modeling, as required by seasonal techniques. Fuzzy C-Means (FCM) clustering [29] requires complete data and, therefore, it is not directly applicable to the available partitions, which contain missing data resulting from the seasonality enforcing step. The partial distance strategy FCM (PDSFCM) algorithm is used instead, combined with the partial distance as defined in [30].
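The sketch below shows one way to implement PDSFCM in NumPy. It uses the standard partial distance strategy, in which the squared distance is computed over the observed coordinates only and rescaled by the ratio between the total and the observed number of coordinates; this is assumed, not verified, to match the definition in [30]. The function names, the fuzzifier m = 2 and the stopping rule are illustrative choices.

```python
import numpy as np


def partial_sq_distances(X, V):
    """Squared partial distances between NaN-padded rows of X (n, s) and prototypes V (c, s).

    Only observed coordinates enter the sum, rescaled by s / (number observed).
    Every row of X is assumed to have at least one observed value.
    """
    obs = ~np.isnan(X)
    Xz = np.where(obs, X, 0.0)
    diff2 = (Xz[:, None, :] - V[None, :, :]) ** 2 * obs[:, None, :]  # (n, c, s)
    scale = X.shape[1] / obs.sum(axis=1, keepdims=True)              # (n, 1)
    return diff2.sum(axis=2) * scale                                 # (n, c)


def pdsfcm(X, c, m=2.0, max_iter=200, tol=1e-6, seed=0):
    """Fuzzy c-means on incomplete data with the partial distance strategy (sketch)."""
    rng = np.random.default_rng(seed)
    obs = (~np.isnan(X)).astype(float)
    Xz = np.nan_to_num(X, nan=0.0)
    U = rng.random((c, X.shape[0]))
    U /= U.sum(axis=0)  # memberships of each subseries sum to 1
    for _ in range(max_iter):
        W = U ** m
        V = (W @ Xz) / (W @ obs)  # prototypes computed from observed entries only
        D = np.fmax(partial_sq_distances(X, V), 1e-12).T  # (c, n), avoids division by zero
        U_new = D ** (-1.0 / (m - 1.0))
        U_new /= U_new.sum(axis=0)
        converged = np.abs(U_new - U).max() < tol
        U = U_new
        if converged:
            break
    return U, V
```

Hard cluster labels, when needed, can be taken as `U.argmax(axis=0)`.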

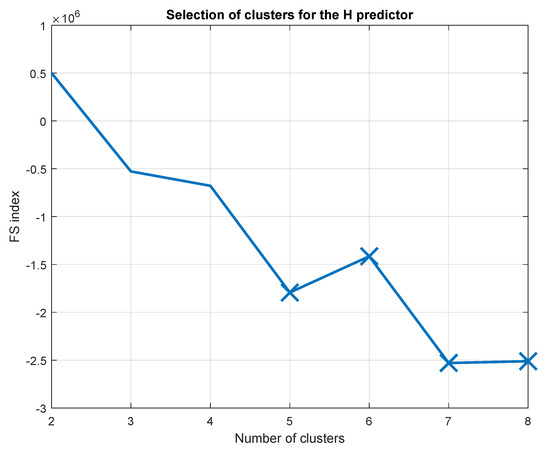

Most clustering algorithms, such as FCM and its modification PDSFCM, require the expected number of clusters to be known before running the process. Fortunately, a large variety of indices exists to help determine the optimal number of clusters. In this work, minimization of the Fukuyama–Sugeno (FS) index [31] has been used for this purpose, due to the good results obtained with it in previous works [18].
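For reference, the FS index weighs, for each cluster, the compactness term $\|x_k - v_i\|^2$ against the separation term $\|v_i - \bar{x}\|^2$, where $\bar{x}$ is the grand mean of the data; lower values are better. The sketch below assumes complete data for brevity; with NaN-padded partitions, the compactness term would use the partial distances of PDSFCM instead. The helper name is hypothetical.

```python
import numpy as np


def fukuyama_sugeno(X, U, V, m=2.0):
    """Fukuyama-Sugeno index (lower is better) for complete data.

    X: (n, s) data, U: (c, n) memberships, V: (c, s) prototypes.
    """
    W = U ** m
    compactness = ((X[None, :, :] - V[:, None, :]) ** 2).sum(axis=2)  # (c, n): ||x_k - v_i||^2
    x_bar = X.mean(axis=0)                                             # grand mean of the data
    separation = ((V - x_bar) ** 2).sum(axis=1)                        # (c,): ||v_i - x_bar||^2
    return float((W * (compactness - separation[:, None])).sum())
```

Evaluating this index for each candidate number of clusters gives the curve that is minimized in Figure 1.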

Additionally, since a local model will later be identified for each cluster, a minimum number of time series is required in each cluster for the identification process to be performed. A threshold of 10 time series has been found adequate in this work, thus ensuring a minimum data set for the identification and validation of each subsequent local model.

Figure 1 shows an example where FS decreases as the number of clusters increases, with some local minima in its evolution. If only FS is considered, five or seven clusters are the best candidates (local minima with fewer clusters). However, when five or more clusters are considered, the number of time series drops below 10 in at least one cluster (marked with a cross in the figure), which could cause problems in the subsequent identification step due to the small amount of data for training and validation. Therefore, four clusters are chosen in this case.
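The selection rule illustrated in Figure 1 can be sketched as follows, assuming that the FS index and the size of the smallest (hard-assigned) cluster have already been computed for every candidate number of clusters. Picking the lowest FS among admissible candidates is one possible reading of the rule described above; the function name is hypothetical, and the values in the test are made up for illustration and are not the data of Figure 1.

```python
def select_n_clusters(fs_by_c, min_size_by_c, min_series=10):
    """Pick the number of clusters with the lowest FS index among admissible candidates.

    fs_by_c: {c: FS index}; min_size_by_c: {c: number of series in the smallest cluster}.
    A candidate is admissible only if every cluster keeps at least `min_series` series.
    """
    admissible = [c for c in fs_by_c if min_size_by_c[c] >= min_series]
    if not admissible:
        raise ValueError("no candidate keeps enough time series per cluster")
    return min(admissible, key=lambda c: fs_by_c[c])
```

When FS keeps decreasing with the number of clusters, this rule returns the largest admissible number of clusters, as in the example of Figure 1.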

Figure 1.

Example of the selection of the number of clusters using the FS index in combination with a minimum number of time series per cluster. The X indicates the existence of one or more clusters with fewer than 10 time series.

2.5. Data Preparation for Identification

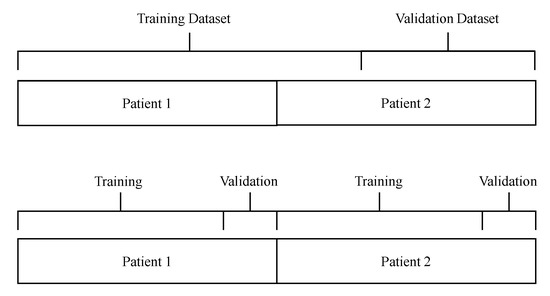

This work compares models obtained using individual patient data vs. population data. Although the data used (amount, partitions, etc.) are the same, they must be organized differently before being fed to the identification algorithm. In both cases, 80% of the data are used for training the local models and the remaining 20% for validating them, but the split is performed differently.

In the case of individual models (one for each patient), partitions (9), (10), and (11) are split into two parts: the first 80% of the data are used for training and the last 20% for validation.

However, if the same splitting procedure is applied to the population partitions in (12), (13), and (14), an undesirable effect appears. As an example, the top image in Figure 2 shows the application of this procedure to a cluster from a population partition. Since each cluster contains data from different patients and the data are stored in order in the original clustered partitions, the two parts used for identification (training and validation) will contain events from completely different patients (patient 1 is used for training, and only part of patient 2 is used for validation). In the case of this work (10 patients), this procedure would lead to a scenario where patients 1 to 8 are used to train the local models and the remaining patients are used to validate them. To avoid this problem, it is essential to split the training and validation data of each patient separately. Therefore, the patients present in each cluster are identified, and the data from each of them are split using the 80/20 criterion, as presented in the bottom image in Figure 2. In this way, the right proportion of data from each patient is ensured in the training and validation split.
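The per-patient 80/20 split can be sketched as below. It assumes that, within a cluster, each patient's time series are stored in temporal order and that the patient index of each series is available (for example, kept during population aggregation); `split_per_patient` is a hypothetical helper.

```python
import numpy as np


def split_per_patient(owners, train_frac=0.8):
    """Return train/validation row indices of one cluster, splitting each patient 80/20.

    owners: patient index of each time series in the cluster, in stored order.
    """
    owners = np.asarray(owners)
    train, val = [], []
    for p in np.unique(owners):
        rows = np.flatnonzero(owners == p)  # this patient's series, in temporal order
        n_train = int(round(train_frac * len(rows)))
        train.extend(rows[:n_train])        # first 80% of the patient's series
        val.extend(rows[n_train:])          # last 20% of the patient's series
    return np.array(train), np.array(val)
```

For a cluster holding 10 series from one patient followed by 5 from another, the sketch keeps 8 + 4 series for training and 2 + 1 for validation, whereas a plain 80/20 cut of the 15 series would take every validation series from the second patient.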

Figure 2.

Training and validation split procedures for population data.

2.6. Local SARIMA Modeling

After the data in a partition are clustered, local seasonal models are trained for each cluster. If the clustering process is successful, all the time series in a cluster will be similar. Then, a SARIMA model can be identified for each cluster i, yielding a set of local glucose predictors (one per cluster). Note that the cost index during identification neglects blank data in the computation of residuals. The Box–Jenkins methodology [32] is used in this identification process. Exogenous inputs could also be considered with SARIMAX models [17], although only glucose and mealtimes are considered available in this work.
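As a simplified illustration of identifying a local seasonal model on a NaN-padded cluster, the sketch below fits a pure seasonal autoregressive model by least squares, with the seasonal period equal to the regularized length and with regression rows containing blanks discarded. This is a swapped-in, reduced technique: it has no differencing or moving-average terms and is not the Box–Jenkins SARIMA identification used in this work. A full SARIMA could instead be fitted, for instance, with the `SARIMAX` class of statsmodels, whose state-space formulation handles missing values. The helper name is hypothetical.

```python
import numpy as np


def fit_seasonal_ar(R, p=1, P=1):
    """Least-squares fit of y_t = c + sum_{i<=p} a_i y_{t-i} + sum_{j<=P} b_j y_{t-j*s}.

    R: (n_series, s) NaN-padded cluster matrix. Rows are concatenated, so the seasonal
    period s equals the regularized length. Regression rows touching a blank are dropped,
    i.e. blanks are neglected when the residuals are computed.
    """
    s = R.shape[1]
    y = R.reshape(-1)
    lags = list(range(1, p + 1)) + [j * s for j in range(1, P + 1)]
    t = np.arange(max(lags), y.size)
    Phi = np.column_stack([np.ones(t.size)] + [y[t - lag] for lag in lags])
    target = y[t]
    ok = ~np.isnan(Phi).any(axis=1) & ~np.isnan(target)
    theta, *_ = np.linalg.lstsq(Phi[ok], target[ok], rcond=None)
    return theta, lags  # theta = [c, a_1..a_p, b_1..b_P]
```

The seasonal lag links each sample to the sample at the same time since the event in the previous subseries of the cluster, which is what the regularization step makes possible.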

2.7. Models Integration in a Glucose Predictor

Once local models are properly trained for each cluster, a glucose predictor is built by integrating these local models during real-time operation. Although a detailed description of this process can be found in [18], the working process of the glucose predictor is summarized next. When the glucose predictor receives a new real-time glucose value $y(k)$, a time series is built with this value and a window of previous glucose measurements since the last event occurred. This time series is then compared with the same time window of the cluster prototypes of each local model, and a membership value $\mu_i(k)$ is calculated, representing the similarity between the current time series and prototype i for that time window. Then, the local models are evaluated, each providing a local prediction based on the new glucose measurement and past historical data in the corresponding cluster, depending on the seasonal order of the local models. Finally, all these predictions are integrated as the sum of the local responses weighted by their corresponding memberships $\mu_i(k)$. Therefore, the final (global) prediction depends mostly on the response of the local model most similar to the current patient's situation, but all the other responses are taken into account to some degree.
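The integration step can be sketched as below, assuming FCM-style memberships computed from squared Euclidean distances between the current window and the prototypes over the same time window (fuzzifier m = 2), and assuming the local predictions have already been produced by the local models. Both helper names are hypothetical.

```python
import numpy as np


def memberships(window, prototype_windows, m=2.0):
    """FCM-style memberships of the current window to each prototype over the same time window."""
    d2 = ((prototype_windows - window) ** 2).sum(axis=1)  # squared Euclidean distances
    if np.any(d2 == 0.0):                                 # exact match with a prototype
        mu = (d2 == 0.0).astype(float)
    else:
        mu = d2 ** (-1.0 / (m - 1.0))
    return mu / mu.sum()                                  # memberships sum to 1


def global_prediction(local_predictions, mu):
    """Membership-weighted sum of the local model predictions."""
    return float(np.dot(mu, local_predictions))
```

Since the memberships sum to one, the global prediction stays within the range spanned by the local predictions and leans toward the local model whose prototype best matches the recent glucose history.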

2.8. Glucose Predictors Validation

As described in Section 2.1, the training dataset comprised sixteen weeks of in silico data for a ten-patient cohort. For each patient p, the training data were partitioned into meal, night and hypoglycemia treatment sets (following Section 2.2 for partitioning and Section 2.3 for regularization). Clustering (see Section 2.4) and local model training and integration (Sections 2.6 and 2.7) were then applied to each partition, yielding a set of individual glucose predictors, one per patient p and event group (meal, night and hypoglycemia treatment). The same procedure was applied to the population meal, night and hypoglycemia treatment partitions, resulting in a single population glucose predictor per event group, valid for all patients.

It must be recalled that in both cases, individual and population training, 80% of the available data for each cluster were used for local model identification and the remaining 20% were kept as validation time series, following the procedure described in Section 2.5. These partial results on local modeling are kept internal and not shown in this work, since they are not deemed relevant for the conclusions.

Individual (patient-personalized) glucose predictors performed very well in previous works [18], and their performance is compared with that of the population predictor in this work. Additionally, the degradation of the population predictor performance is studied as the amount of data is reduced (the number of weeks of available data per patient is reduced sequentially from 16 to 2 weeks) for prediction horizons in the set min.

The performance of both glucose predictors (individual and population) has been evaluated using an independent 2-month validation dataset (see Section 2.1). At each event, the glucose predictor matching the event type (meal, night, hypoglycemia treatment) was used. For all time series in the validation data, predictions have been computed as described in Section 2.7. In order to facilitate the comparison of results with previous glucose prediction studies in the literature, the standard RMSE metric (mg/dL) has been used to measure forecasting accuracy:

$$\mathrm{RMSE} = \sqrt{\frac{1}{n} \sum_{i=1}^{n} e_i^2}, \qquad e_i = y_i - \hat{y}_i,$$

where n is the number of observations, $e_i$ is the forecasting error (residual), $y_i$ denotes the i-th glucose observation, and $\hat{y}_i$ is the forecast of $y_i$. The residuals of the glucose trajectory are built from predictions made at time $k$ for a prediction horizon of $h$ samples, i.e., $e(k+h) = y(k+h) - \hat{y}(k+h \mid k)$. This evaluates how successfully the actual glucose trajectory can be predicted $h$ time samples ahead. In this case, for each time series in the validation data, and denoting by $L$ the length of the time series, $L - h$ observations are available for the computation of residuals. Metrics were averaged over all the time series in the validation data.
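The RMSE computation for a prediction horizon of h samples can be sketched as follows, assuming that `y_hat_ahead[k]` holds the prediction of `y[k + h]` made at time k; only the first L − h predictions have an observation to compare with. The helper name is hypothetical.

```python
import numpy as np


def rmse_at_horizon(y, y_hat_ahead, h):
    """RMSE of h-step-ahead predictions over one time series of length L.

    y_hat_ahead[k] is the prediction of y[k + h] made at time k; L - h residuals are used.
    """
    y = np.asarray(y, dtype=float)
    L = y.size
    e = y[h:] - np.asarray(y_hat_ahead, dtype=float)[: L - h]  # e(k + h) = y(k + h) - y_hat(k + h | k)
    return float(np.sqrt(np.mean(e ** 2)))
```

A persistence forecast (predicting that glucose stays at its current value, i.e. passing `y` itself as `y_hat_ahead`) gives a simple baseline for checking the function; the per-series values are then averaged over the validation data.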

3. Results and Discussion

The main contribution of this work is using CGM data from a given population to train prediction models for each individual, instead of using data from longer periods of time provided by the same individual. In this section, the prediction accuracy metrics for our approach are presented.

3.1. Individual vs. Population Predictor

For the population predictor, the number of clusters (and, therefore, the number of local models needed) for each partition predictor is reported in Table 1, where the first row displays the results using the whole 16-week dataset. The optimal number of clusters in the day predictor is independent of the volume of data used for training, always requiring eight clusters. For the individual predictor case, the number of clusters of each partition and each patient is detailed in [18].

Table 1.

Number of clusters in each predictor as a function of the training dataset size.

The glucose predictor built for the day partition consistently finds eight clusters regardless of the amount of data used to train it, suggesting a homogeneous distribution of easy-to-identify behaviors across the dataset. The number of clusters of the night predictor ranges from three to five as the volume of data increases. The three base clusters may represent common nighttime patterns, which appear consistently even in small datasets. The fourth and fifth clusters, however, are only identified when the data volume grows, suggesting that they are sparsely represented in the data and that several repetitions of those behaviors are required before they are assigned a cluster. A similar conclusion can be drawn from the number of clusters in the hypoglycemia predictor.

As expected from the population approach, the number of clusters needed when clustering the whole dataset is at least as large as that of any single individual (see [18]), which ranges from five to eight for the Day predictor, from three to five for the Night predictor, and from one to four for the hypoglycemia predictor. The case of the hypoglycemia predictor is particularly interesting, since using data from a population yields from five to eight clusters, more than for any individual. This suggests that hypoglycemia predictors are more readily built with fewer sets of data from a population than from a single individual, because hypoglycemic patterns appear more often across many individuals than repeatedly within the same individual.

The accuracy of both predictors (trained with individual/population data) is reported in Table 2 for the initial training with 16 weeks of data. The results are reported as mean (standard deviation) and median [25th–75th percentiles]. For each PH, results for each partition predictor (meal, night, hypoglycemia treatment) as well as overall metrics are presented. In both cases, results show the averaged behavior over the 10-patient cohort. The Wilcoxon rank sum test (p < 0.001) was used to test statistical significance, marked with a red dot (•).
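The summary statistics and the significance test mentioned above can be sketched without SciPy as below. The rank sum statistic uses average ranks for ties and the usual normal approximation without tie correction, which mirrors how `scipy.stats.ranksums` computes its statistic; the helper names and the exact test configuration used for Table 2 are assumptions.

```python
import math

import numpy as np


def summarize(values):
    """Mean (SD) and median [25th-75th percentiles] of a set of RMSE values."""
    v = np.asarray(values, dtype=float)
    q25, med, q75 = np.percentile(v, [25, 50, 75])
    return {"mean": v.mean(), "sd": v.std(ddof=1), "median": med, "q25": q25, "q75": q75}


def average_ranks(x):
    """1-based ranks of x, with tied values sharing the average of their ranks."""
    order = np.argsort(x, kind="mergesort")
    sorted_x = x[order]
    ranks = np.empty(len(x))
    i = 0
    while i < len(x):
        j = i
        while j + 1 < len(x) and sorted_x[j + 1] == sorted_x[i]:
            j += 1
        ranks[order[i:j + 1]] = 0.5 * (i + j) + 1.0  # average of ranks i+1 .. j+1
        i = j + 1
    return ranks


def rank_sum_test(a, b):
    """Two-sided Wilcoxon rank sum test (normal approximation); returns (z, p)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    n1, n2 = a.size, b.size
    w = average_ranks(np.concatenate([a, b]))[:n1].sum()  # rank sum of the first sample
    mean_w = n1 * (n1 + n2 + 1) / 2.0
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    z = (w - mean_w) / sd_w
    return z, math.erfc(abs(z) / math.sqrt(2.0))
```

With the threshold used in Table 2, a difference would be marked as significant when the returned p-value is below 0.001.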

Table 2.

Accuracy metrics of the individual and population glucose predictors trained with 16 weeks of data, evaluated on the validation dataset.

Results show a small increase in the mean error of the population approach compared with the individual one for small PHs. In the worst case, an increase of 4.86 mg/dL is found for the Meal and Night glucose predictors, which cannot be considered relevant in a medical context. On the other hand, this loss of prediction capability becomes clearer for the hypoglycemia predictor at longer PHs, where the RMSE difference grows to 6.89 mg/dL ( min) and 13.68 mg/dL ( min). It must be noted that this difference was not found to be statistically significant.

However, considering that the population predictors were trained with data from a population and not with data from the very same patient, the prediction errors are remarkably low, especially in a clinical context. The ability of the overall predictor to predict glucose 4 h (PH = 240 min) into the future with an RMSE of 30.40 mg/dL is very promising, especially since the individual predictor yields an RMSE of 27.28 mg/dL, a difference of 3.12 mg/dL that is not statistically significant. In general, RMSE values of the same order of magnitude are observed for both the individual and population approaches. Given that population data can readily be used as a “seed” for out-of-the-box predictions without individualization to the patient, this suggests that the population approach to clustering and training local models is a promising way forward.

3.2. Effect of the Number of Weeks of Data on the Population Predictor

So far, it has been observed that using population data to train local models does not jeopardize prediction accuracy when applied to an individual, compared with training with individual data of the same dataset size. Now, the effect of the data size (in number of weeks of data used to train the local models) on the meal, night and hypoglycemia treatment population predictors is analyzed. The initial hypothesis was that, as the size of the training dataset is reduced, so is the predictive power of the ensemble of models that make up the global predictors. To test this hypothesis, we evaluate the performance of the predictors using population sets of $w \in \{2, 4, 6, 8, 12, 16\}$ weeks of data, and prediction horizons ranging min.
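Reducing the training data to the first w weeks of each patient can be sketched as below. Keeping the first weeks (rather than, for example, the last ones or a random selection) is an assumption, as is the helper name `first_weeks`; timestamps are taken in minutes from the start of the training record.

```python
import numpy as np

WEEKS = (2, 4, 6, 8, 12, 16)   # training dataset sizes analyzed in this section
MIN_PER_WEEK = 7 * 24 * 60


def first_weeks(t_min, y, events, w):
    """Keep only the first w weeks of one patient's training data (CGM samples and events)."""
    limit = w * MIN_PER_WEEK
    t_min = np.asarray(t_min)
    keep = t_min < limit
    return t_min[keep], np.asarray(y)[keep], [(t, e) for t, e in events if t < limit]
```

Applying this helper to every patient before partitioning, for each w in `WEEKS`, produces the reduced population datasets compared in Figures 3 to 5.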

The number of clusters for each partition predictor was calculated for each set of identification data (from 16 to 2 weeks) using the FS index (see Section 2.4; results are reported in Table 1). As can be seen in Table 1, the optimal number of clusters is lower for smaller numbers of weeks, which is expected since the volume of data is lower. This behavior particularly affects the number of clusters of the Night and Hypoglycemia predictors, since the number of these events decreases drastically in smaller datasets.

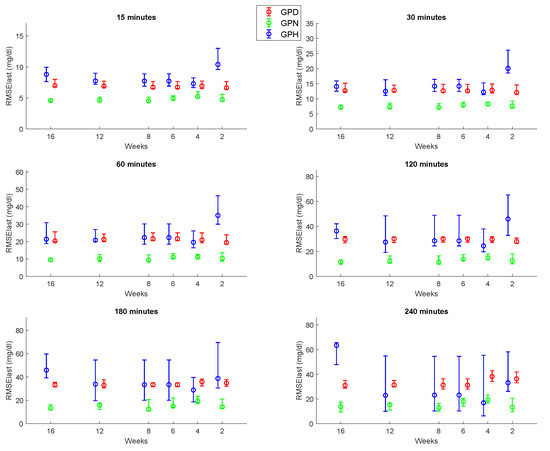

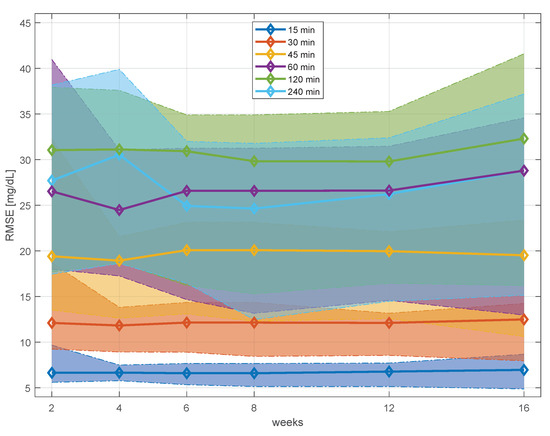

The same validation procedure followed in Section 3.1 was used here to compare the predictive power of the different glucose predictors when trained with different population data volumes. Figure 3 shows the distribution of the prediction error (RMSE median and quartiles) vs the different training dataset sizes for all the prediction horizons.

Figure 3.

RMSE median and quartiles of the different glucose predictors for all prediction horizons and all training dataset sizes. Hypoglycemia predictor in blue, Night predictor in green, and Meal predictor in red.

For all considered PHs, the hypoglycemia glucose predictor is clearly the most erratic (larger error bars) and, on average, the one with the highest median errors. It can also be observed that for low PHs () no significant difference is observable in the RMSE, except for an abnormally high error when only 2 weeks of data were used for training. For higher PHs (), the RMSE remains almost constant when training with 4, 6, 8 or 12 weeks of data, but is worse for 2 or 16 weeks, suggesting a “sweet spot” of training data volume between 4 and 12 weeks. The most plausible explanation for this phenomenon is a tendency to overfit anomalous or highly variable behaviors in the scenario with 16 weeks of data, and a lack of relevant dynamics in the scenario with 2 weeks of data.

The day and night predictors show a very consistent predictive power, with little to no difference at low PHs when different dataset volumes are used for training, and a slight trend of decreasing RMSE as the number of weeks increases for longer PHs. This is consistent with the initial hypothesis about the effect of dataset volume on predictive power.

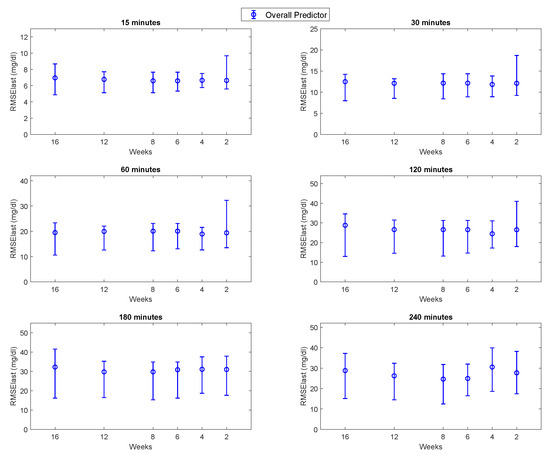

Similar trends are observed when the Overall prediction error is analyzed, as seen in Figure 4. For the shorter PHs (), the error bars of the two-week dataset are considerably larger than those of all the other (larger) datasets used for training, suggesting that more than two weeks of training data are needed to obtain consistently good predictions. For longer PHs, lower RMSE values tend to be achieved when larger datasets are used for training (6, 8 or 12 weeks of data vs. 2 or 4), but this trend is inverted when the dataset volume is increased to 16 weeks. This suggests that using 16 weeks of data from a whole cohort of patients to train models might lead to subpar predictions, since the models capture additional dynamics that may not help to accurately predict the glucose of an individual patient.

Figure 4.

RMSE median and quartiles of the Overall predictor for all prediction horizons and all training dataset sizes.

This is also observed in Figure 5, where the medians and interquartile ranges of all the Overall predictors are overlaid. Median RMSE remains mostly unchanged for low PHs and reaches its minimum values for 4, 6 and 8 weeks at longer PHs. The interquartile range increases drastically for the 2- and 16-week datasets. When comparing the RMSE between PHs, it is interesting to observe that better predictions are achieved for the 240 min prediction than for the 120 min one.

Figure 5.

Median and inter-quartile range trends in RMSE for different PHs.

4. Conclusions

In previous works, seasonal local modeling proved successful in glucose prediction under realistic scenarios for long prediction horizons. However, a minimum of 4 months of data for each patient was needed to properly train the system personalized to the user. The predictive power of these previously tested individualized glucose predictors has been compared with that of a single model for all patients trained using population data. The results show that there is no significant loss of predictive power when the population and individual approaches are compared. The population approach was easier and faster to implement and train, as only three different glucose predictors were trained for the whole cohort of patients, instead of the thirty glucose predictors that the individual approach requires. Additionally, the use of population data avoids the need for specific data from a patient and transfers knowledge from a generic population to a specific patient by learning common behaviors (local models) useful for all. While the prediction accuracy metrics reported are promising, these models will have to be assessed in a randomized experimental setting before these results can be extrapolated to a general population.

In summary, no significant differences were found between using 16, 12 and 8 weeks of data to train the population glucose predictors when they were used to forecast individual data. Therefore, using an ensemble of data from diverse patients halves the amount of data required to train the models (from 16 to 8 weeks) without losing any predictive power.
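The halving result can be framed as a search for the smallest training dataset size whose per-patient RMSE is not significantly different from the 16-week reference. This section does not state which statistical test was used. The sketch below therefore assumes an exact two-sided paired sign test at alpha = 0.05, chosen only because it needs nothing beyond the Python standard library.

```python
import math
from typing import Dict, Sequence


def sign_test_p(a: Sequence[float], b: Sequence[float]) -> float:
    """Exact two-sided sign test for paired samples (ties are dropped)."""
    diffs = [x - y for x, y in zip(a, b) if x != y]
    n = len(diffs)
    if n == 0:
        return 1.0
    k = min(sum(d > 0 for d in diffs), sum(d < 0 for d in diffs))
    tail = sum(math.comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


def smallest_sufficient(
    rmse_by_weeks: Dict[int, Sequence[float]], alpha: float = 0.05
) -> int:
    """Smallest training size whose per-patient RMSE is not significantly
    different (assumed sign test) from that of the largest training size.

    Lists must be paired: the i-th value of every list belongs to the same patient.
    """
    reference = max(rmse_by_weeks)
    for weeks in sorted(rmse_by_weeks):
        if weeks == reference:
            return weeks
        if sign_test_p(rmse_by_weeks[weeks], rmse_by_weeks[reference]) >= alpha:
            return weeks
    return reference
```

With a cohort of only a few patients, a non-significant result is weak evidence of equivalence. In practice the selected size would also be checked against the median RMSE trends.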

Author Contributions

Conceptualization, J.-L.D. and J.B.; methodology, J.-L.D., A.J.L.S. and J.B.; software, A.A., J.-L.D. and J.B.; validation, A.A., J.-L.D., A.J.L.S. and J.B.; formal analysis, J.-L.D., A.J.L.S. and J.B.; investigation, J.-L.D., A.J.L.S. and J.B.; resources, J.-L.D. and J.B.; data curation, A.A., J.-L.D., A.J.L.S. and J.B.; writing—original draft preparation, A.A. and J.-L.D.; writing—review and editing, J.-L.D., A.J.L.S. and J.B.; visualization, A.A., J.-L.D., A.J.L.S. and J.B.; supervision, J.-L.D. and J.B.; project administration, J.-L.D. and J.B.; funding acquisition, J.-L.D. and J.B. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by grant PID2019-107722RB-C21 funded by MCIN/AEI/10.13039/501100011033, by grant CIPROM/2021/012 funded by Conselleria de Innovación, Universidades, Ciencia y Sociedad Digital from Generalitat Valenciana, by CIBER (Consorcio Centro de Investigación Biomédica en Red), group number CB17/08/00004, Instituto de Salud Carlos III, Ministerio de Ciencia e Innovación and by European Union–European Regional Development Fund.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The simulated datasets used in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

References

- International Diabetes Federation. IDF Diabetes Atlas, 10th ed.; International Diabetes Federation: Brussels, Belgium, 2021.

- Hovorka, R. Continuous glucose monitoring and closed-loop systems. Diabet. Med. 2006, 23, 1–12.

- Palerm, C.C.; Willis, J.P.; Desemone, J.; Bequette, B.W. Hypoglycemia prediction and detection using optimal estimation. Diabetes Technol. Ther. 2005, 7, 3–14.

- Li, L.; Xie, X.; Yang, J. A predictive model incorporating the change detection and Winsorization methods for alerting hypoglycemia and hyperglycemia. Med. Biol. Eng. Comput. 2021, 59, 2311–2324.

- Forlenza, G.P.; Li, Z.; Buckingham, B.A.; Pinsker, J.E.; Cengiz, E.; Wadwa, R.P.; Ekhlaspour, L.; Church, M.M.; Weinzimer, S.A.; Jost, E.; et al. Predictive low-glucose suspend reduces hypoglycemia in adults, adolescents, and children with type 1 diabetes in an at-home randomized crossover study: Results of the PROLOG trial. Diabetes Care 2018, 41, 2155–2161.

- Haidar, A. The artificial pancreas: How closed-loop control is revolutionizing diabetes. IEEE Control Syst. Mag. 2016, 36, 28–47.

- Kovatchev, B.; Tamborlane, W.V.; Cefalu, W.T.; Cobelli, C. The artificial pancreas in 2016: A digital treatment ecosystem for diabetes. Diabetes Care 2016, 39, 1123–1126.

- Trevitt, S.; Simpson, S.; Wood, A. Artificial pancreas device systems for the closed-loop control of type 1 diabetes: What systems are in development? J. Diabetes Sci. Technol. 2016, 10, 714–723.

- Bergenstal, R.M.; Garg, S.; Weinzimer, S.A.; Buckingham, B.A.; Bode, B.W.; Tamborlane, W.V.; Kaufman, F.R. Safety of a hybrid closed-loop insulin delivery system in patients with type 1 diabetes. JAMA 2016, 316, 1407–1408.

- Georga, E.I.; Príncipe, J.C.K.; Fotiadis, D.I. Short-term prediction of glucose in type 1 diabetes using kernel adaptive filter. Med. Biol. Eng. Comput. 2019, 57, 27–46.

- Hovorka, R.; Canonico, V.; Chassin, L.J.; Haueter, U.; Massi-Benedetti, M.; Federici, M.O.; Pieber, T.R.; Schaller, H.C.; Schaupp, L.; Vering, T.; et al. Nonlinear model predictive control of glucose concentration in subjects with type 1 diabetes. Physiol. Meas. 2004, 25, 905.

- Oviedo, S.; Vehí, J.; Calm, R.; Armengol, J. A review of personalized blood glucose prediction strategies for T1DM patients. Int. J. Numer. Methods Biomed. Eng. 2017, 33, e2833.

- Li, K.; Daniels, J.; Liu, C.; Herrero-Vinas, P.; Georgiou, P. Convolutional Recurrent Neural Networks for Glucose Prediction. IEEE J. Biomed. Health Inform. 2020, 24, 603–613.

- Zhu, T.; Li, K.; Chen, J.; Herrero, P.; Georgiou, P. Dilated Recurrent Neural Networks for Glucose Forecasting in Type 1 Diabetes. J. Healthc. Inform. Res. 2020, 4, 308–324.

- Langarica, S.; Rodriguez-Fernandez, M.; Núñez, F.; Doyle, F. A meta-learning approach to personalized blood glucose prediction in type 1 diabetes. Control Eng. Pract. 2023, 135, 105498.

- Montaser, E.; Díez, J.L.; Bondia, J. Stochastic Seasonal Models for Glucose Prediction in the Artificial Pancreas. J. Diabetes Sci. Technol. 2017, 11, 1124–1131.

- Montaser, E.; Díez, J.L.; Rossetti, P.; Rashid, M.; Cinar, A.; Bondia, J. Seasonal Local Models for Glucose Prediction in Type 1 Diabetes. IEEE J. Biomed. Health Inform. 2020, 24, 2064–2072.

- Montaser, E.; Díez, J.-L.; Bondia, J. Glucose Prediction under Variable-Length Time-Stamped Daily Events: A Seasonal Stochastic Local Modeling Framework. Sensors 2021, 21, 3188.

- Prendin, F.; Díez, J.L.; del Favero, S.; Sparacino, G.; Facchinetti, A.; Bondia, J. Assessment of Seasonal Stochastic Local Models for Glucose Prediction without Meal Size Information under Free-Living Conditions. Sensors 2022, 22, 8682.

- León-Vargas, F.; Martin, C.; Garcia-Jaramillo, M.; Aldea, A.; Leal, Y.; Herrero, P.; Reyes, A.; Henao, D.; Gomez, A. Is a cloud-based platform useful for diabetes management in Colombia? The Tidepool experience. Comput. Methods Programs Biomed. 2021, 208, 106205.

- Vehi, J.; Regincós Isern, J.; Parcerisas, A.; Calm, R.; Contreras, I. Impact of Use Frequency of a Mobile Diabetes Management App on Blood Glucose Control: Evaluation Study. JMIR Mhealth Uhealth 2019, 7, e11933.

- Aleppo, G.; Ruedy, K.; Riddlesworth, T.; Kruger, D.; Peters, A.; Hirsch, I.; Bergenstal, R.; Toschi, E.; Ahmann, A.; Shah, V.; et al. REPLACE-BG: A Randomized Trial Comparing Continuous Glucose Monitoring With and Without Routine Blood Glucose Monitoring in Adults With Well-Controlled Type 1 Diabetes. Diabetes Care 2017, 40, 538–545.

- Dalla Man, C.; Micheletto, F.; Lv, D.; Breton, M.; Kovatchev, B.; Cobelli, C. The UVA/PADOVA type 1 diabetes simulator: New features. J. Diabetes Sci. Technol. 2014, 8, 26–34.

- Schiavon, M.; Man, C.D.; Kudva, Y.C.; Basu, A.; Cobelli, C. In silico optimization of basal insulin infusion rate during exercise: Implication for artificial pancreas. J. Diabetes Sci. Technol. 2013, 7, 1461–1469.

- Brazeau, A.S.; Mircescu, H.; Desjardins, K.; Leroux, C.; Strychar, I.; Ekoé, J.M.; Rabasa-Lhoret, R. Carbohydrate counting accuracy and blood glucose variability in adults with type 1 diabetes. Diabetes Res. Clin. Pract. 2013, 99, 19–23.

- Haidar, A.; Elleri, D.; Kumareswaran, K.; Leelarathna, L.; Allen, J.M.; Caldwell, K.; Murphy, H.R.; Wilinska, M.E.; Acerini, C.L.; Evans, M.L.; et al. Pharmacokinetics of insulin aspart in pump-treated subjects with type 1 diabetes: Reproducibility and effect of age, weight, and duration of diabetes. Diabetes Care 2013, 36, e173–e174.

- Scheiner, G.; Boyer, B. Characteristics of basal insulin requirements by age and gender in Type-1 diabetes patients using insulin pump therapy. Diabetes Res. Clin. Pract. 2005, 69, 14–21.

- American Diabetes Association. Standards of Medical Care in Diabetes. Diabetes Care 2014, 37 (Suppl. 1), S14–S80.

- Bezdek, J.C. Pattern Recognition with Fuzzy Objective Function Algorithms; Advanced Applications in Pattern Recognition Series; Springer: New York, NY, USA, 1981.

- Dixon, J.K. Pattern recognition with partly missing data. IEEE Trans. Syst. Man Cybern. 1979, 9, 617–621.

- Wang, W.; Zhang, Y. On fuzzy cluster validity indices. Fuzzy Sets Syst. 2007, 158, 2095–2117.

- Box, G.E.; Jenkins, G.M.; Reinsel, G.C.; Ljung, G.M. Time Series Analysis: Forecasting and Control; John Wiley & Sons: Hoboken, NJ, USA, 2015.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).