Learning Hierarchical Representations for Explainable Chemical Reaction Prediction

Abstract

1. Introduction

2. Related Work

2.1. Chemical Reaction Representation

2.2. Contrastive Learning

3. Method

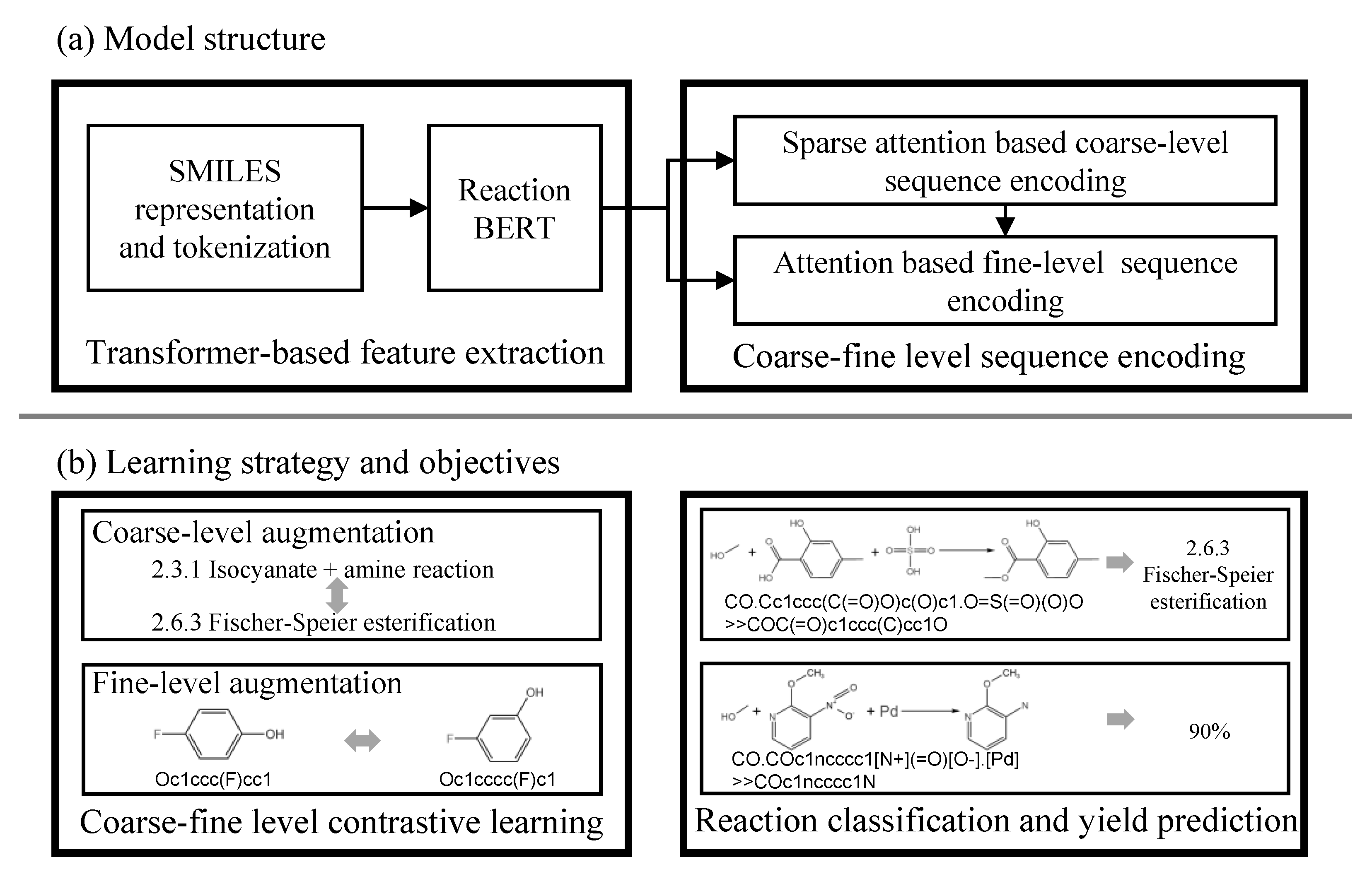

3.1. Model Structure

3.1.1. Transformer-Based Feature Extraction

3.1.2. Coarse-Fine Level Sequence Encoding

3.2. Learning Strategy and Objectives

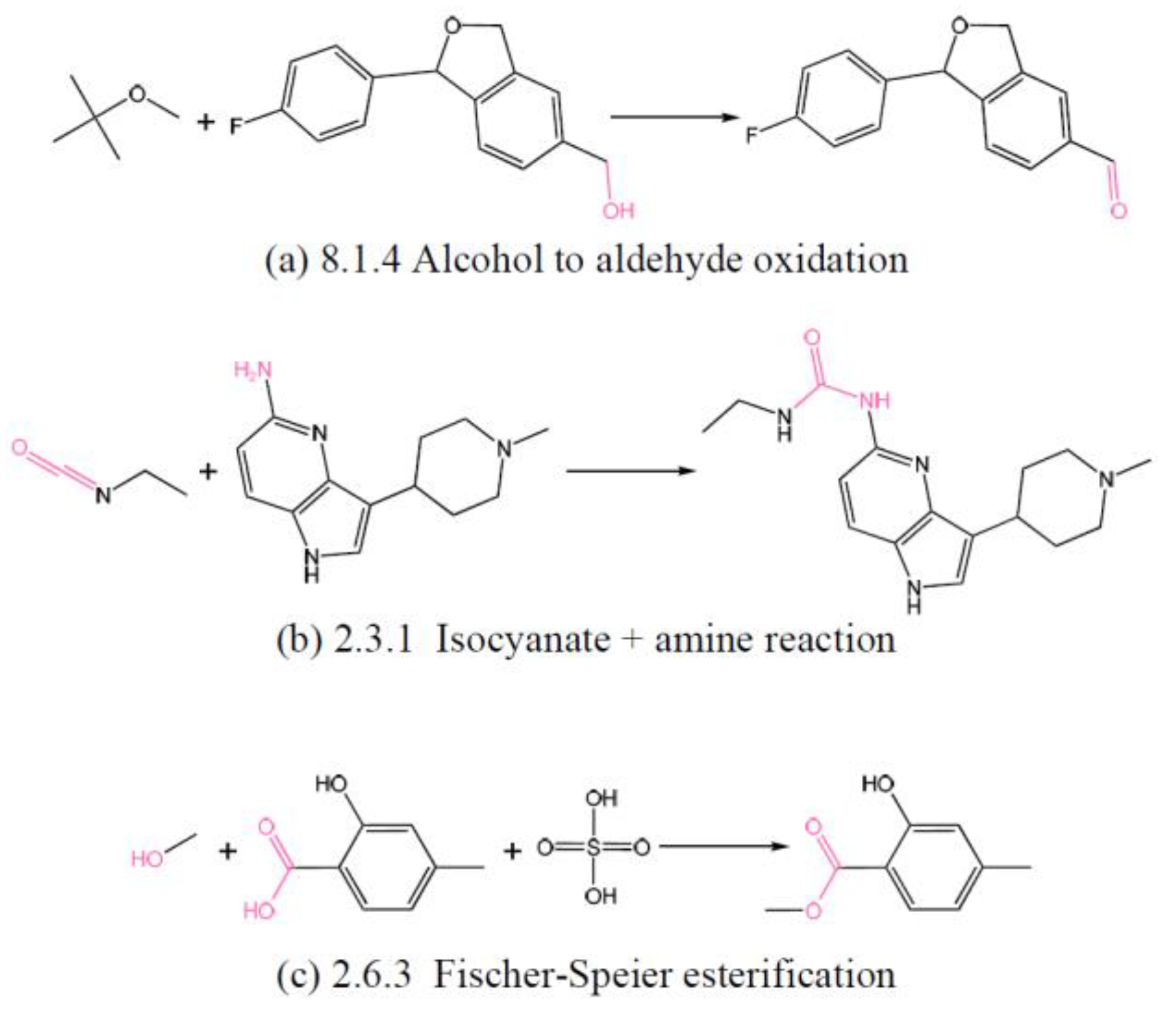

3.2.1. Coarse-Fine Level Contrastive Learning

3.2.2. Reaction Classification and Yield Prediction

4. Experiments

4.1. Datasets

4.2. Implementation Details

4.3. Results of Reaction Classification

4.4. Results of Yield Prediction

4.5. Ablation Study

- (1) Our full model in the 9th row achieves the highest macro F1-score, which indicates that every designed component helps the model learn discriminative and descriptive information for chemical reaction representation.

- (2) The 1st row of the table is our backbone model, i.e., the reaction BERT model.

- (3) The model in the 2nd row removes the CSE module, so DA #3, which performs coarse-level augmentation, is also disabled. Its improvement over the backbone (0.802 vs. 0.790) likely comes mainly from the remaining data augmentation strategies. Compared with the full model in the 9th row (0.873), this result also highlights the importance of our coarse-level representation learning.

- (4) In the 3rd row, we disabled all data augmentation methods and contrastive learning, and the result (0.787) is even worse than that of the backbone model. A possible reason is that Schneider 50k is too small for the model to generalize well, which leads to negative transfer. Therefore, contrastive learning and data augmentation are important to the effectiveness of our model.

- (5) The 4th row shows that applying data augmentation randomly yields a lower F1-score (0.835) than augmenting data conditioned on the atoms' importance in the reaction (0.873 in the 9th row). This gap demonstrates the advantage of our probabilistic DA mechanism.

- (6) The 5th, 6th, and 7th rows show the results of disabling DA #1, DA #2, and DA #3, respectively. Compared with the full model in the 9th row, disabling DA #1 causes the largest drop (from 0.873 to 0.823), whereas disabling DA #3 barely changes the result (0.872). We conclude that DA #1 is the most effective augmentation and that the contribution of DA #3 is limited.

- (7) In the 8th row, the pretraining procedure is removed; because no coarse-level annotations are then available, the coarse-level mechanisms (CSE and DA #3) are removed as well. Compared with the 2nd row (0.802), which keeps the pretraining, and the 3rd row (0.787), which drops all augmentation, this result (0.793) further emphasizes the necessity of our data augmentation and contrastive learning.
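Item (5) contrasts random augmentation with augmentation conditioned on atom importance. The following is a minimal sketch of how importance-conditioned perturbation could work, not the authors' implementation. It assumes (hypothetically) that each SMILES token already carries an importance score, for example a coarse-fine attention weight; that less important tokens should be perturbed more often so the reaction center is preserved; and that a perturbation replaces a token with a placeholder `[MASK]` token. The names `change_probabilities`, `uniform_probabilities`, `augment`, and the `budget` parameter are illustrative.

```python
import random
from typing import List, Sequence

# Hypothetical placeholder that stands in for a changed atom or bond token.
MASK = "[MASK]"


def change_probabilities(importance: Sequence[float], budget: float = 0.15,
                         eps: float = 1e-8) -> List[float]:
    """Assumed scheme: each token's change probability is inversely
    proportional to its importance score, scaled so that (before clipping
    to 1) the expected fraction of changed tokens equals `budget`."""
    if not importance:
        return []
    inverse = [1.0 / (w + eps) for w in importance]
    scale = budget * len(inverse) / sum(inverse)
    return [min(1.0, x * scale) for x in inverse]


def uniform_probabilities(n_tokens: int, budget: float = 0.15) -> List[float]:
    """Random-DA baseline: every token has the same change probability."""
    return [budget] * n_tokens


def augment(tokens: Sequence[str], probs: Sequence[float],
            rng: random.Random) -> List[str]:
    """Independently replace each token with MASK using its own probability."""
    return [MASK if rng.random() < p else tok for tok, p in zip(tokens, probs)]


if __name__ == "__main__":
    rng = random.Random(0)
    # Toy acyl chloride tokens with made-up importance scores.
    tokens = ["C", "C", "(", "=", "O", ")", "Cl"]
    importance = [0.02, 0.30, 0.02, 0.10, 0.20, 0.02, 0.34]
    print("importance-conditioned:", augment(tokens, change_probabilities(importance), rng))
    print("random:", augment(tokens, uniform_probabilities(len(tokens)), rng))
```

With the same overall budget, the conditioned scheme concentrates changes on low-importance tokens (here the brackets and the methyl carbon), while the uniform baseline is equally likely to corrupt the reacting carbonyl or chloride.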

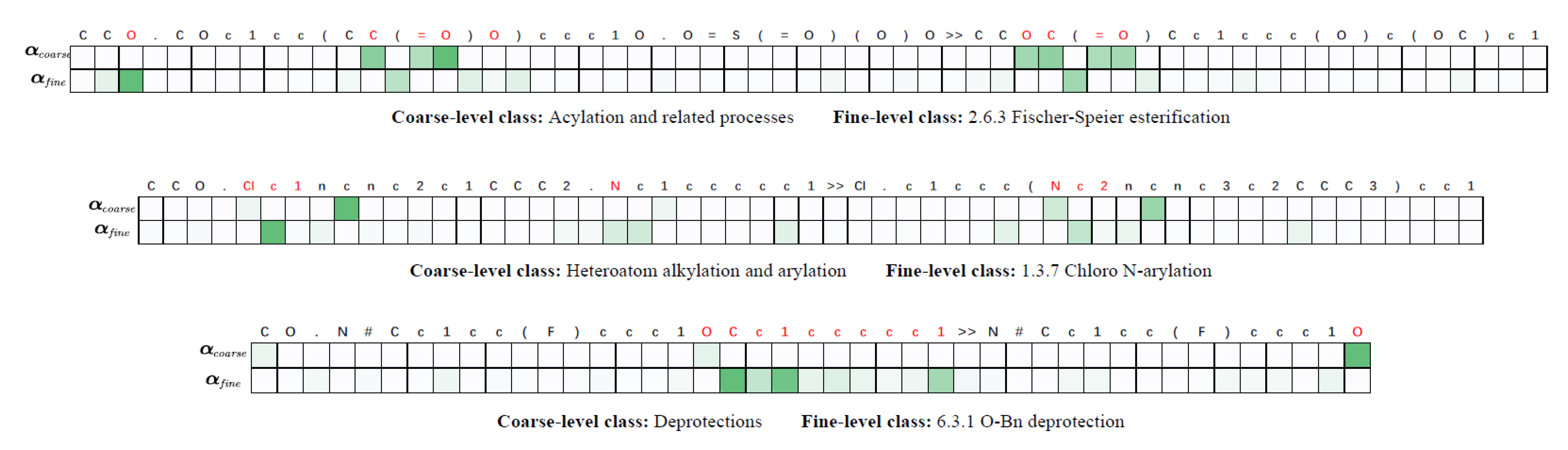

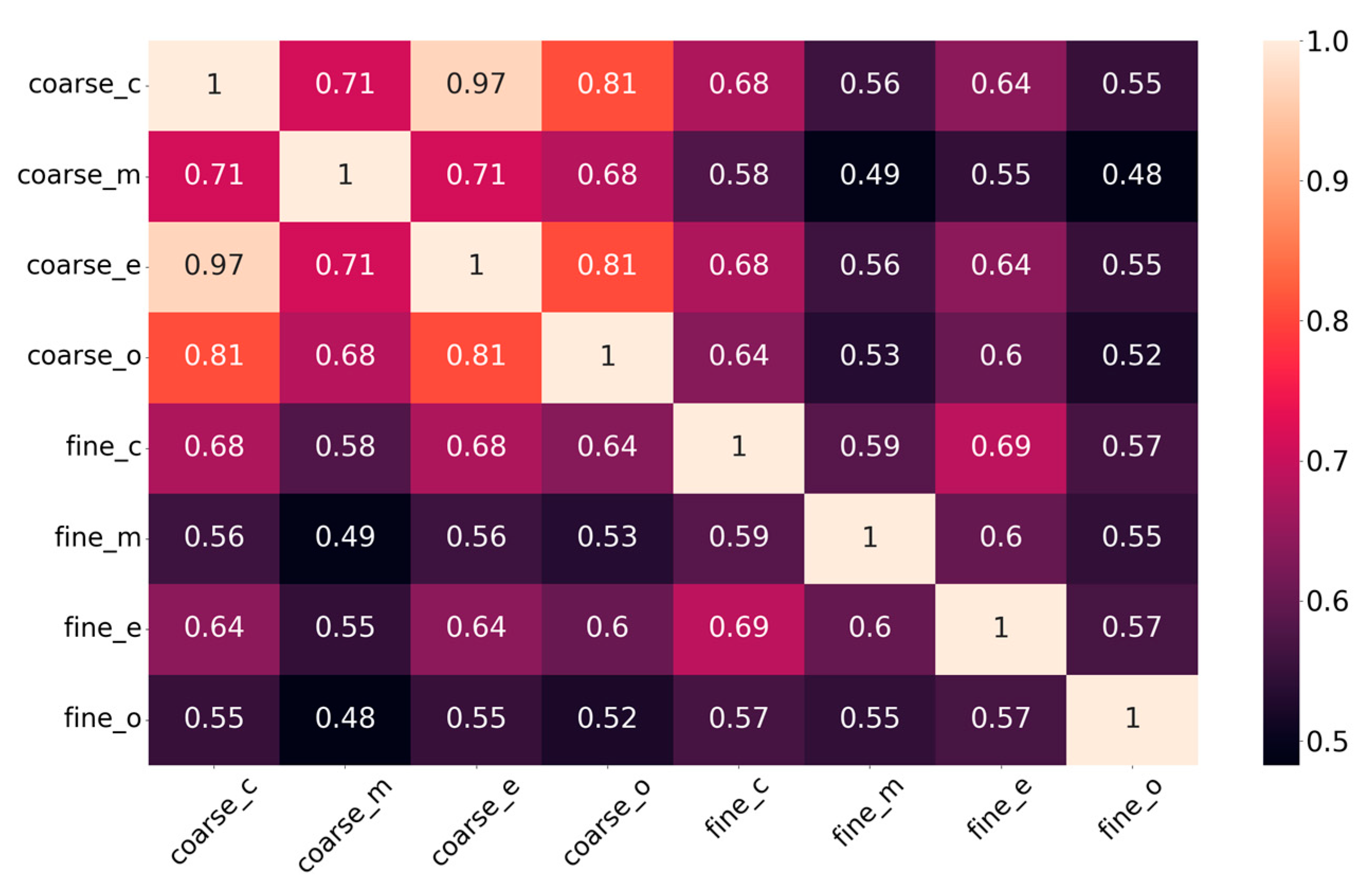

4.6. Discussions

- (1) The coarse-fine level feature representation mechanism. In our model, sparse attention is first applied to extract and encode the most salient features at the coarse level, and attention with suppression is then used to highlight fine-level representations by constraining the influence of the coarse-level features. Together, the coarse-fine level representation captures the information at both levels that is useful for the specific task.

- (2) Contrastive learning-based data augmentation. Our probabilistic data augmentation computes the probability of changing each atom and bond in the reaction from the coarse-fine level attention, which produces augmented samples for contrastive pretraining with less noise than random augmentation. Contrastive learning encourages the model to capture both inter-class discriminative and intra-class descriptive information.

- (3) Data-driven end-to-end training. Building on the coarse-level feature representation and the contrastive data augmentation, our model can be trained end to end with only a small amount of annotated data. The advantage of end-to-end training is that it learns representations suited to various tasks, such as reaction classification and yield prediction.
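Mechanism (1) can be illustrated with a small, self-contained sketch. The exact formulation is not given in this section, so the following makes two assumptions: sparsemax (Martins and Astudillo, 2016) stands in for the coarse-level sparse attention, and a hypothetical suppression rule builds the fine-level attention by damping each token's softmax weight in proportion to its coarse-level weight before renormalizing. The function names and the `strength` parameter are illustrative, not taken from the paper.

```python
import math
from typing import List, Sequence


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sparsemax(scores: Sequence[float]) -> List[float]:
    """Sparsemax: Euclidean projection of the scores onto the probability
    simplex, so low-scoring tokens receive exactly zero weight."""
    z = sorted(scores, reverse=True)
    cumsum, support_sum, k_max = 0.0, 0.0, 0
    for k, z_k in enumerate(z, start=1):
        cumsum += z_k
        if 1.0 + k * z_k > cumsum:  # the k-th largest score is still in the support
            support_sum, k_max = cumsum, k
    tau = (support_sum - 1.0) / k_max  # threshold subtracted from every score
    return [max(s - tau, 0.0) for s in scores]


def suppressed_attention(scores: Sequence[float], coarse: Sequence[float],
                         strength: float = 1.0, eps: float = 1e-12) -> List[float]:
    """Hypothetical fine-level attention: softmax weights are damped by the
    coarse-level weights (strength in [0, 1]) and renormalized, so tokens
    already captured at the coarse level contribute less."""
    base = softmax(scores)
    damped = [b * (1.0 - strength * c) for b, c in zip(base, coarse)]
    total = sum(damped) + eps
    return [d / total for d in damped]


def pool(weights: Sequence[float], features: Sequence[Sequence[float]]) -> List[float]:
    """Attention-weighted sum of token feature vectors."""
    dim = len(features[0])
    return [sum(w * f[d] for w, f in zip(weights, features)) for d in range(dim)]


if __name__ == "__main__":
    scores = [2.0, 1.5, 0.1, -0.3]  # made-up token scores
    features = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [0.2, 0.8]]
    coarse = sparsemax(scores)  # only the two highest-scoring tokens are kept
    fine = suppressed_attention(scores, coarse)
    print("coarse:", coarse, pool(coarse, features))
    print("fine:", fine, pool(fine, features))
```

The design choice illustrated here is that the coarse branch is deliberately sparse, while the fine branch redistributes attention toward the tokens the coarse branch left out.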

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Ramakrishnan, R.; Dral, P.O.; Rupp, M.; von Lilienfeld, O.A. Quantum chemistry structures and properties of 134 kilo molecules. Sci. Data 2015, 2, 140022.

2. Goh, G.B.; Hodas, N.O.; Vishnu, A. Deep learning for computational chemistry. J. Comput. Chem. 2017, 38, 1291–1307.

3. Coley, C.W.; Barzilay, R.; Green, W.H.; Jaakkola, T.S.; Jensen, K.F.; Kang, S.H. A graph-convolutional neural network model for the prediction of chemical reactivity. Chem. Sci. 2019, 10, 370–377.

4. Segler, M.H.; Kogej, T.; Tyrchan, C.; Waller, M.P. Generating focused molecule libraries for drug discovery with recurrent neural networks. ACS Cent. Sci. 2018, 4, 120–131.

5. Chen, H.; Engkvist, O.; Wang, Y.; Olivecrona, M.; Blaschke, T. The rise of deep learning in drug discovery. Drug Discov. Today 2018, 23, 1241–1250.

6. Ma, J.; Sheridan, R.P.; Liaw, A.; Dahl, G.E.; Svetnik, V.; Team, D.D. Deep learning enables rapid identification of potent DDR1 kinase inhibitors. Nat. Biotechnol. 2019, 37, 1038–1040.

7. Raccuglia, P.; Elbert, K.C.; Adler, P.D.F.; Falk, C.; Wenny, M.B.; Mollo, A.; Zeller, M.; Friedler, S.A.; Schrier, J.; Norquist, A.J.; et al. Machine-learning-assisted materials discovery using failed experiments. Nature 2016, 533, 73–76.

8. Mater, A.C.; Coote, M.L. Deep learning in chemistry. J. Chem. Inf. Model. 2019, 59, 2545–2559.

9. Schwaller, P.; Probst, D.; Vaucher, A.C.; Nair, V.H.; Kreutter, D.; Laino, T.; Reymond, J. Mapping the space of chemical reactions using attention-based neural networks. Nat. Mach. Intell. 2021, 3, 144–152.

10. Schwaller, P.; Vaucher, A.C.; Laino, T.; Reymond, J. Prediction of chemical reaction yields using deep learning. Mach. Learn. Sci. Technol. 2021, 2, 15016.

11. Probst, D.; Schwaller, P.; Reymond, J.L. Reaction classification and yield prediction using the differential reaction fingerprint DRFP. Digit. Discov. 2022, 1, 91–97.

12. Zeng, Z.; Yao, Y.; Liu, Z.; Sun, M. A deep-learning system bridging molecule structure and biomedical text with comprehension comparable to human professionals. Nat. Commun. 2022, 13, 862.

13. Schwaller, P.; Vaucher, A.C.; Laino, T.; Reymond, J.L. Data augmentation strategies to improve reaction yield predictions and estimate uncertainty. In Proceedings of the NeurIPS Workshop on Machine Learning for Molecules, Virtual, 6–12 December 2020.

14. Schwaller, P.; Gaudin, T.; Lányi, D.; Bekas, C.; Laino, T.; Nair, V.H. “Molecular Transformer”: A model for uncertainty-calibrated chemical reaction prediction. ACS Cent. Sci. 2019, 5, 1572–1583.

15. Jin, W.; Barzilay, R.; Jaakkola, T. Junction tree variational autoencoder for molecular graph generation. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 27–37.

16. Coley, C.W.; Barzilay, R.; Green, W.H.; Jaakkola, T.S.; Jensen, K.F.; Kang, B. Convolutional embedding of attributed molecular graphs for physical property prediction. J. Chem. Inf. Model. 2019, 59, 3427–3436.

17. Hou, J.; Wu, X.; Wang, R.; Luo, J.; Jia, Y. Confidence-Guided Self Refinement for Action Prediction in Untrimmed Videos. IEEE Trans. Image Process. 2020, 29, 6017–6031.

18. Schneider, N.; Lowe, D.M.; Sayle, R.A.; Landrum, G.A. Development of a novel fingerprint for chemical reactions and its application to large-scale reaction classification and similarity. J. Chem. Inf. Model. 2015, 55, 39–53.

19. Lowe, D. Chemical reactions from US patents (1976-Sep2016). Figshare 2017.

20. Gilmer, J.; Schoenholz, S.S.; Riley, P.F.; Vinyals, O.; Dahl, G.E. Neural message passing for quantum chemistry. In Proceedings of the International Conference on Machine Learning, Sydney, Australia, 11–15 August 2017; pp. 1263–1272.

21. Kwon, Y.; Lee, D.; Choi, Y.S.; Kang, S. Uncertainty-aware prediction of chemical reaction yields with graph neural networks. J. Cheminform. 2022, 14, 2.

22. Saebi, M.; Nan, B.; Herr, J.; Wahlers, J.; Wiest, O.; Chawla, N. Graph neural networks for predicting chemical reaction performance. ChemRxiv 2021.

23. Jung, C.; Kwon, G.; Ye, J.C. Exploring patch-wise semantic relation for contrastive learning in image-to-image translation tasks. In Proceedings of the International Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 18260–18269.

24. Wang, X.; Du, Y.; Yang, S.; Zhang, J.; Wang, M.; Zhang, J.; Yang, W.; Huang, J.; Han, X. RetCCL: Clustering-guided contrastive learning for whole-slide image retrieval. Med. Image Anal. 2023, 83, 102645.

25. Yang, J.; Duan, J.; Tran, S.; Xu, Y.; Chanda, S.; Chen, L.; Zeng, B.; Chilimbi, T.; Huang, J. Vision-language pre-training with triple contrastive learning. In Proceedings of the International Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 15671–15680.

26. Rethmeier, N.; Augenstein, I. A Primer on Contrastive Pretraining in Language Processing: Methods, Lessons Learned, and Perspectives. ACM Comput. Surv. 2023, 55, 1–17.

27. Wang, Y.; Wang, J.; Cao, Z.; Barati Farimani, A. Molecular contrastive learning of representations via graph neural networks. Nat. Mach. Intell. 2022, 4, 279–287.

28. Wen, M.; Blau, S.M.; Xie, X.; Dwaraknath, S.; Persson, K.A. Improving machine learning performance on small chemical reaction data with unsupervised contrastive pretraining. Chem. Sci. 2022, 13, 1446–1458.

29. Weininger, D. SMILES, a chemical language and information system. Introduction to methodology and encoding rules. J. Chem. Inf. Comput. Sci. 1988, 28, 31–36.

30. Lu, Y.; Wen, L.; Liu, J.; Liu, Y.; Tian, X. Self-Supervision Can Be a Good Few-Shot Learner. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 25–27 October 2022; pp. 740–758.

31. Chen, X.; He, K. Exploring Simple Siamese Representation Learning. In Proceedings of the International Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 15750–15758.

32. Arús-Pous, J.; Johansson, S.V.; Prykhodko, O.; Bjerrum, E.J.; Tyrchan, C.; Reymond, J.L.; Chen, H.; Engkvist, O. Randomized SMILES strings improve the quality of molecular generative models. J. Cheminform. 2019, 11, 71.

33. Lambard, G.; Gracheva, E. Smiles-x: Autonomous molecular compounds characterization for small datasets without descriptors. Mach. Learn. Sci. Technol. 2020, 1, 025004.

34. Wei, J.; Yuan, X.; Hu, Q.; Wang, S. A novel measure for evaluating classifiers. Expert Syst. Appl. 2010, 37, 3799–3809.

35. Matthews, B.W. Comparison of the predicted and observed secondary structure of T4 phage lysozyme. Biochim. Biophys. Acta (BBA)-Protein Struct. 1975, 405, 442–451.

36. Gorodkin, J. Comparing two K-category assignments by a K-category correlation coefficient. Comput. Biol. Chem. 2004, 28, 367–374.

37. Landrum, G.; Tosco, P.; Kelley, B.; Riniker, S.; Gedeck, P.; Schneider, N.; Vianello, R.; Dalke, A.; Schmidt, R.; Cole, B.; et al. rdkit/rdkit: 2019 03 4 (Q1 2019) Release; OpenAIRE: Athens, Greece, 2019.

| Datasets | Schneider 50k [18] | USPTO 1k TPL [9] | USPTO Yield [19] |
|---|---|---|---|
| Task | reaction classification | reaction classification | yield prediction |
| Number of reactions | 50k | 445k | 500k |
| Number of classes | 9 superclasses, 50 template classes | 1000 template classes | - |
| Split strategy | 10k for training, 40k for testing | 90% for training, 10% for testing; USP-few: 32 per class for training | Random split; time split: data in and before 2012 for training, after 2012 for testing |
| Metric | Accuracy, CEN [34], and MCC [35,36] | Accuracy, CEN [34], MCC [35,36], and F1-score | |
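The dataset table above lists accuracy, MCC [35,36], and F1-score among the evaluation metrics. As a reference point, the sketch below computes accuracy, macro-averaged F1, and the multi-class MCC (Gorodkin's R_K generalization [36]) from a confusion matrix in plain Python. It is an illustrative implementation, not the evaluation code behind the reported results, and CEN [34] is omitted.

```python
import math
from typing import List, Sequence


def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int],
                     n_classes: int) -> List[List[int]]:
    """cm[i][j] counts samples whose true class is i and predicted class is j."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def accuracy(cm: List[List[int]]) -> float:
    total = sum(map(sum, cm))
    return sum(cm[k][k] for k in range(len(cm))) / total


def macro_f1(cm: List[List[int]]) -> float:
    """Unweighted mean of per-class F1 = 2TP / (predicted + actual).
    In this sketch, a class with no true and no predicted samples scores 0."""
    n = len(cm)
    scores = []
    for k in range(n):
        tp = cm[k][k]
        predicted = sum(cm[i][k] for i in range(n))  # column sum
        actual = sum(cm[k])                          # row sum
        denom = predicted + actual
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / n


def multiclass_mcc(cm: List[List[int]]) -> float:
    """R_K = (c*s - sum_k p_k*t_k) / sqrt((s^2 - sum_k p_k^2) * (s^2 - sum_k t_k^2))."""
    n = len(cm)
    s = sum(map(sum, cm))                                    # number of samples
    c = sum(cm[k][k] for k in range(n))                      # correctly classified
    t = [sum(cm[k]) for k in range(n)]                       # true counts per class
    p = [sum(cm[i][k] for i in range(n)) for k in range(n)]  # predicted counts per class
    num = c * s - sum(pk * tk for pk, tk in zip(p, t))
    den = math.sqrt((s * s - sum(pk * pk for pk in p)) * (s * s - sum(tk * tk for tk in t)))
    return num / den if den else 0.0


if __name__ == "__main__":
    cm = confusion_matrix([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], n_classes=3)
    print(accuracy(cm), macro_f1(cm), multiclass_mcc(cm))
```

For two classes, `multiclass_mcc` reduces to the familiar binary Matthews correlation coefficient [35].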

| Methods | Schneider 50k Accuracy | Schneider 50k CEN | Schneider 50k MCC | USPTO 1k TPL Accuracy | USPTO 1k TPL CEN | USPTO 1k TPL MCC |
|---|---|---|---|---|---|---|
| Reaction BERT | 0.985 | - | - | 0.989 | 0.006 | 0.989 |
| DRFP | 0.956 | 0.053 | 0.955 | 0.977 | 0.011 | 0.977 |
| Ours | 0.988 | 0.011 | 0.987 | 0.991 | 0.005 | 0.990 |

| Methods | Reaction BERT | KV-PLM | Ours |
|---|---|---|---|
| Macro F1 | 0.790 | 0.856 | 0.873 |

| Subset | Yield BERT (Random) | Yield BERT (Time) | DRFP (Random) | DRFP (Time) | Ours w/o Pretraining (Random) | Ours w/o Pretraining (Time) | Ours (Random) | Ours (Time) |
|---|---|---|---|---|---|---|---|---|
| Gram scale | 0.117 | 0.095 | 0.130 | - | 0.125 | 0.097 | 0.129 | 0.099 |
| Sub-gram scale | 0.195 | 0.142 | 0.197 | - | 0.198 | 0.146 | 0.200 | 0.147 |

| # | CSE | FSE | Probabilistic DA | DA #1 | DA #2 | DA #3 | Pretraining | Macro F1 |
|---|---|---|---|---|---|---|---|---|
| 1 | - | - | - | - | - | - | - | 0.790 |
| 2 | - | ✓ | ✓ | ✓ | ✓ | - | ✓ | 0.802 |
| 3 | ✓ | ✓ | - | - | - | - | ✓ | 0.787 |
| 4 | ✓ | ✓ | - | ✓ | ✓ | ✓ | ✓ | 0.835 |
| 5 | ✓ | ✓ | ✓ | - | ✓ | ✓ | ✓ | 0.823 |
| 6 | ✓ | ✓ | ✓ | ✓ | - | ✓ | ✓ | 0.867 |
| 7 | ✓ | ✓ | ✓ | ✓ | ✓ | - | ✓ | 0.872 |
| 8 | - | ✓ | ✓ | ✓ | ✓ | - | - | 0.793 |
| 9 | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | 0.873 |
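To make the comparison in item (6) of the ablation study concrete, the short script below recomputes, from the macro F1 values in the ablation table above, the drop relative to the full model (row 9) for every ablated row. The row descriptions are paraphrased from the table's check marks; the helper name `drops_from_full` is illustrative.

```python
# Macro F1 values copied from the ablation table (row number -> score).
MACRO_F1 = {1: 0.790, 2: 0.802, 3: 0.787, 4: 0.835, 5: 0.823,
            6: 0.867, 7: 0.872, 8: 0.793, 9: 0.873}

# What each row removes, read off the table's check marks.
ABLATION = {
    1: "backbone (reaction BERT) only",
    2: "no CSE (and hence no DA #3)",
    3: "no data augmentation",
    4: "random instead of probabilistic DA",
    5: "no DA #1",
    6: "no DA #2",
    7: "no DA #3",
    8: "no CSE, no DA #3, no pretraining",
}

FULL_ROW = 9


def drops_from_full(scores=MACRO_F1, full_row=FULL_ROW):
    """Macro F1 drop of each row relative to the full model, rounded to 3 d.p."""
    full = scores[full_row]
    return {row: round(full - f1, 3) for row, f1 in scores.items() if row != full_row}


if __name__ == "__main__":
    drops = drops_from_full()
    # Print rows from the largest to the smallest drop.
    for row, drop in sorted(drops.items(), key=lambda kv: -kv[1]):
        print(f"row {row} ({ABLATION[row]}): -{drop:.3f}")
```

Among the three single-augmentation ablations, removing DA #1 costs 0.050, DA #2 costs 0.006, and DA #3 only 0.001, which is the basis for the ranking stated in item (6).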

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hou, J.; Dong, Z. Learning Hierarchical Representations for Explainable Chemical Reaction Prediction. Appl. Sci. 2023, 13, 5311. https://doi.org/10.3390/app13095311
