Multi-Source HRRP Target Fusion Recognition Based on Time-Step Correlation

Abstract

1. Introduction

2. Proposed Model

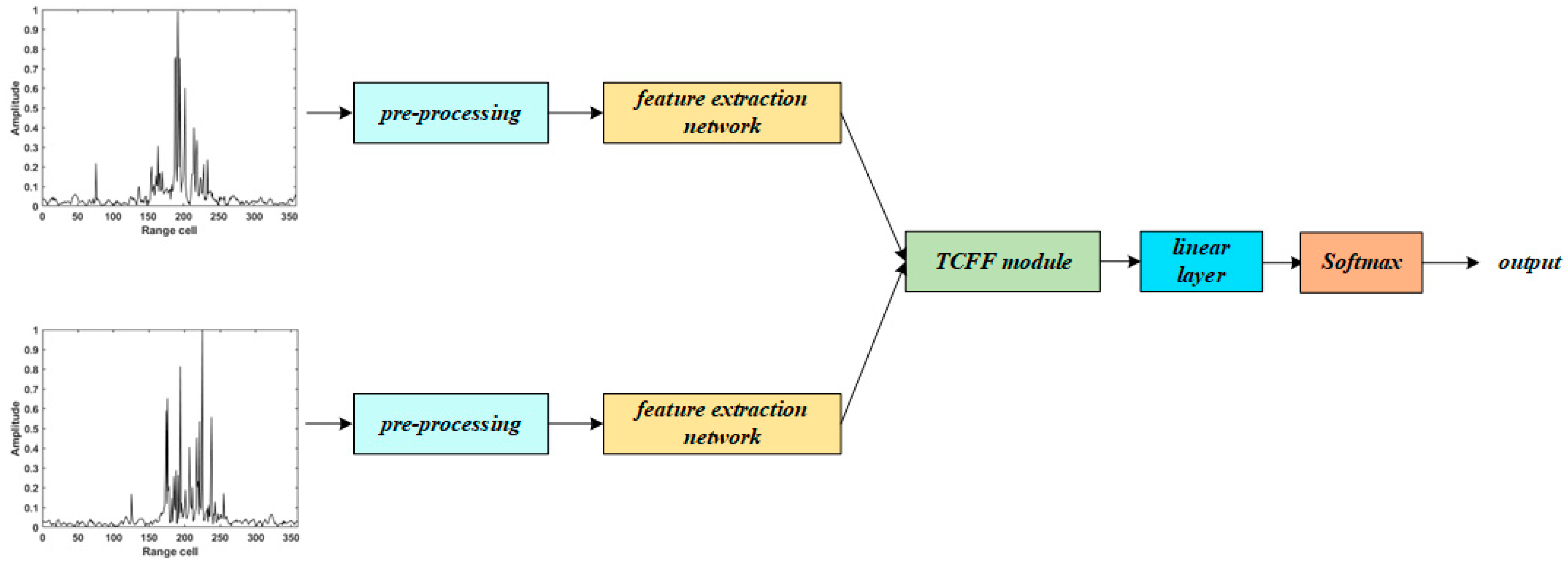

2.1. Multi-Source Feature Fusion Recognition Network Based on Time-Step Correlation

2.2. Data Pre-Processing

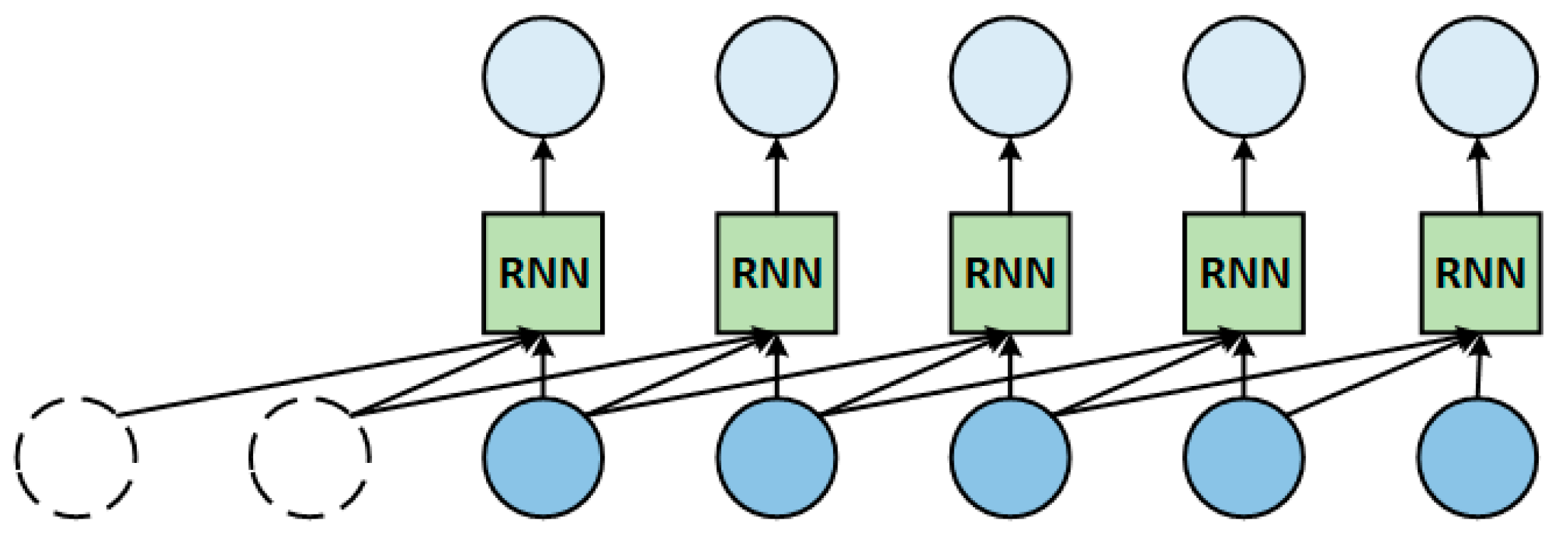

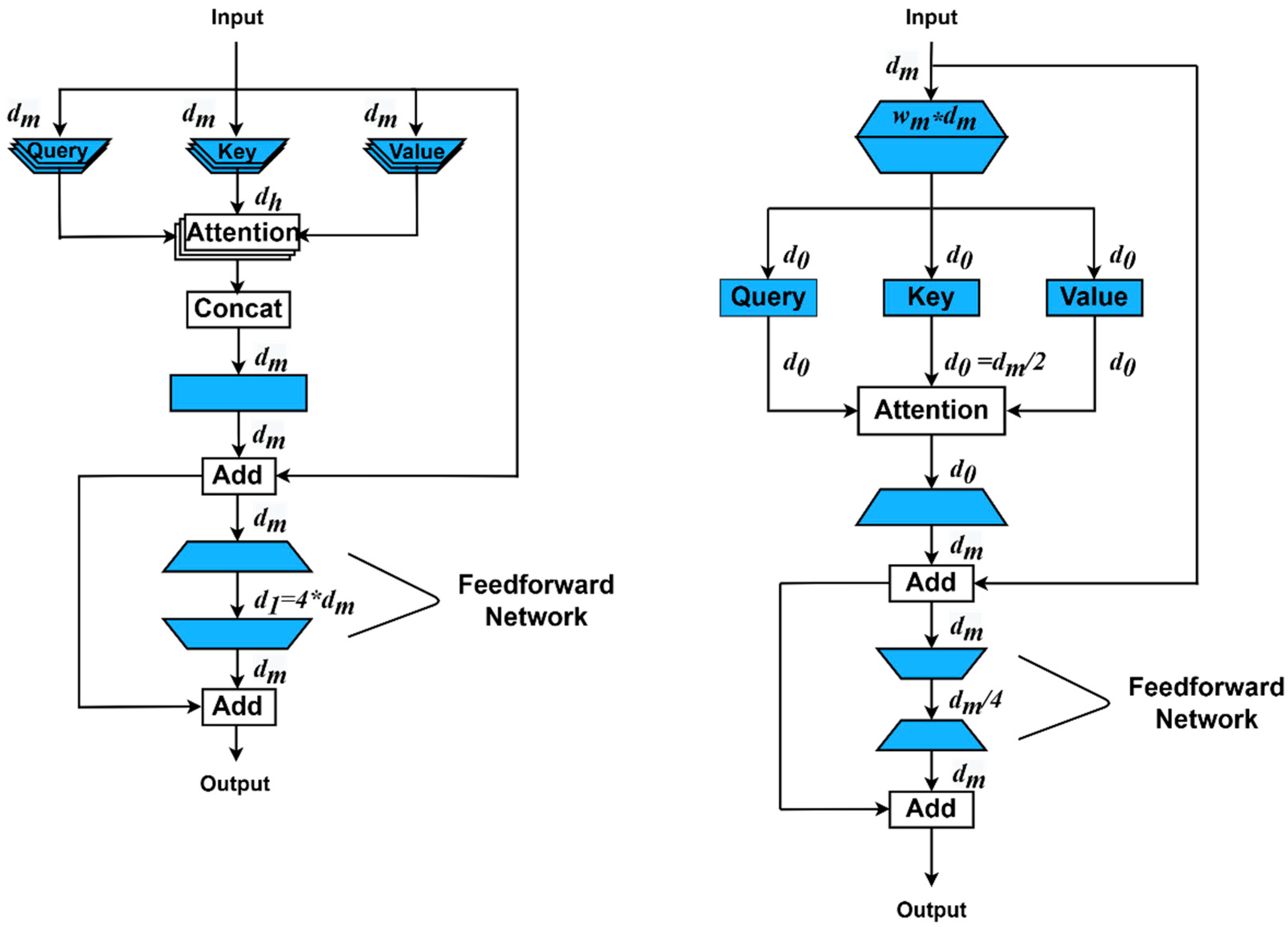

2.3. Feature Extraction Network

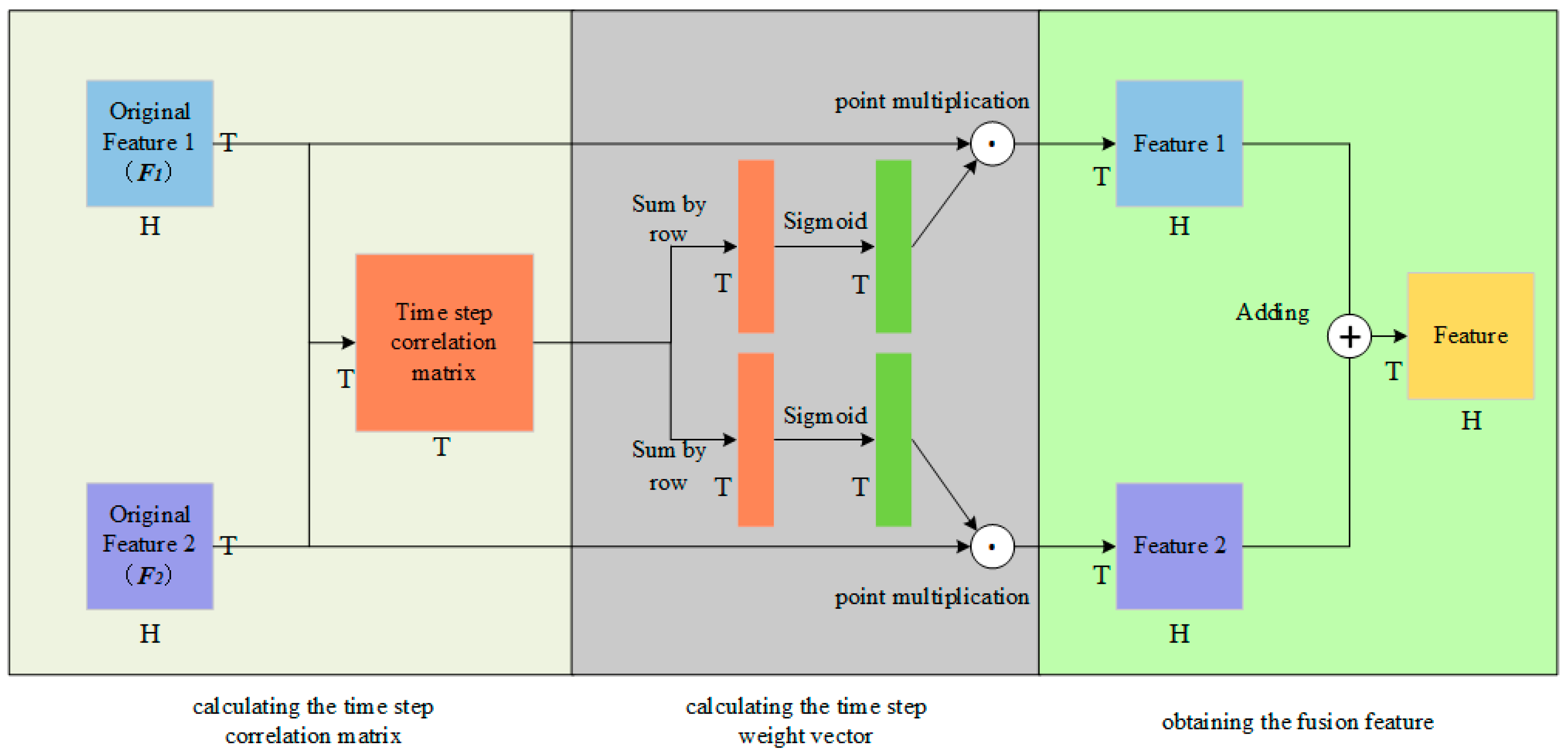

2.4. Time-Step Correlation-Based Feature Fusion (TCFF) Module

3. Experimental Results and Analysis

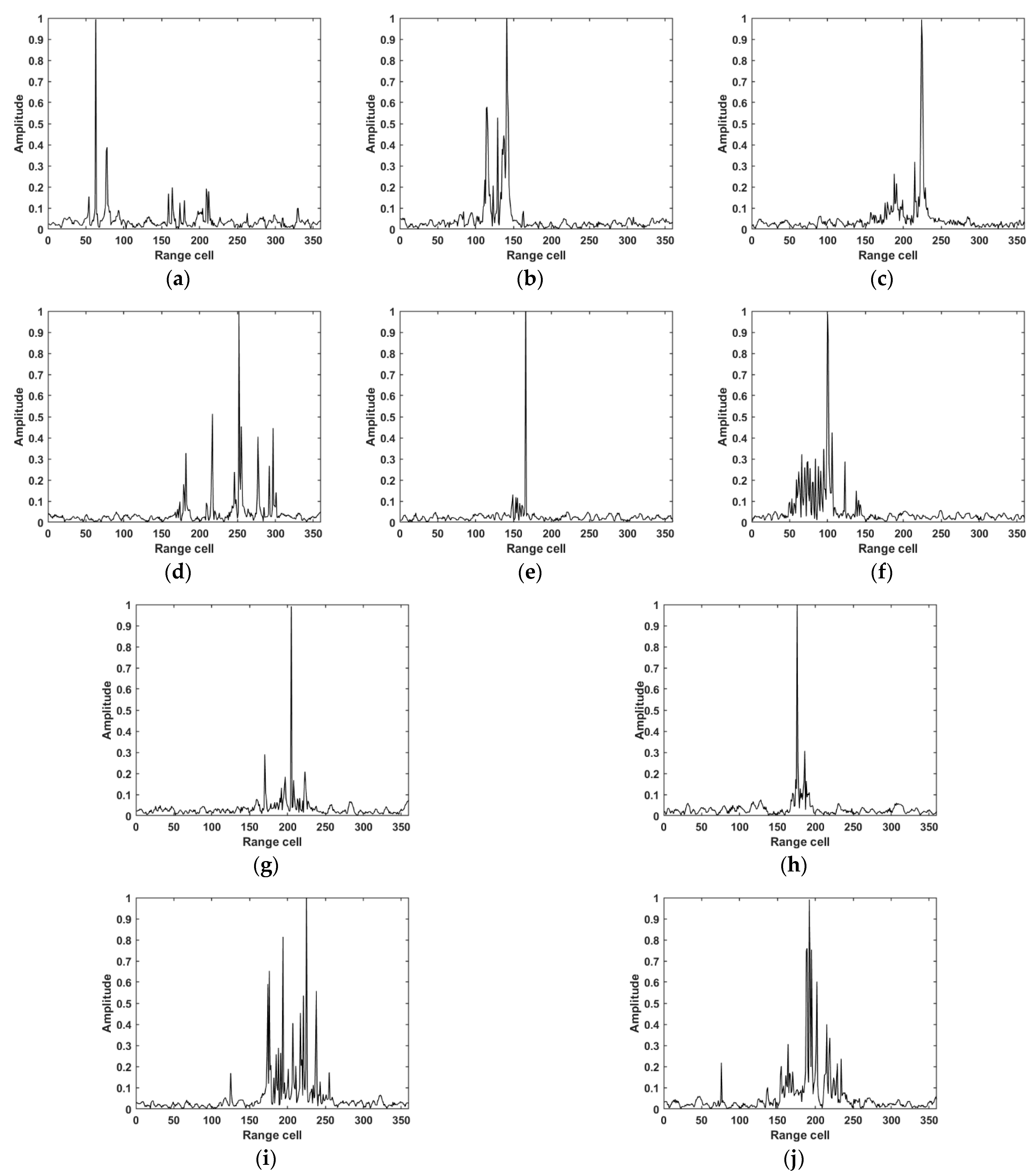

3.1. Introduction to the Dataset

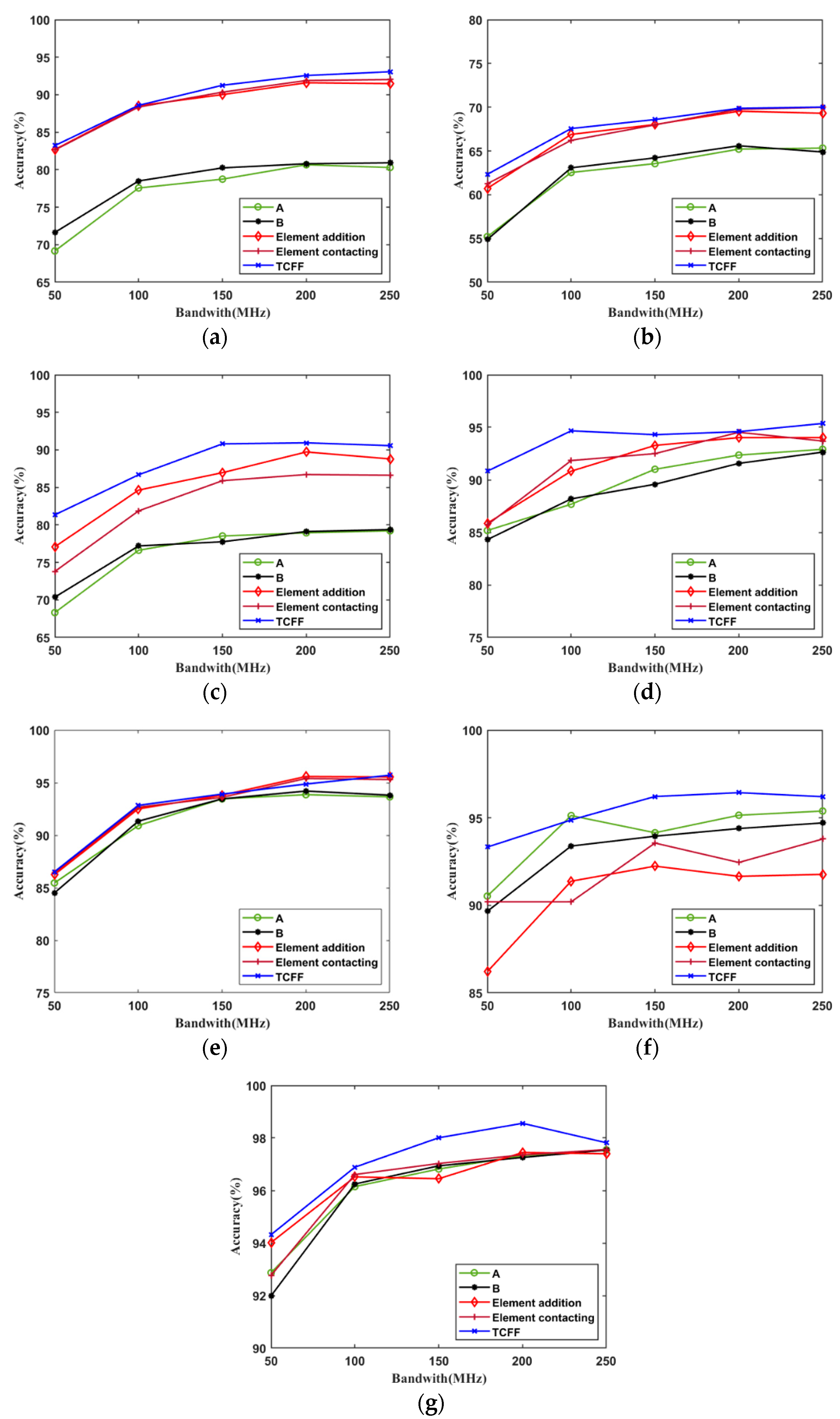

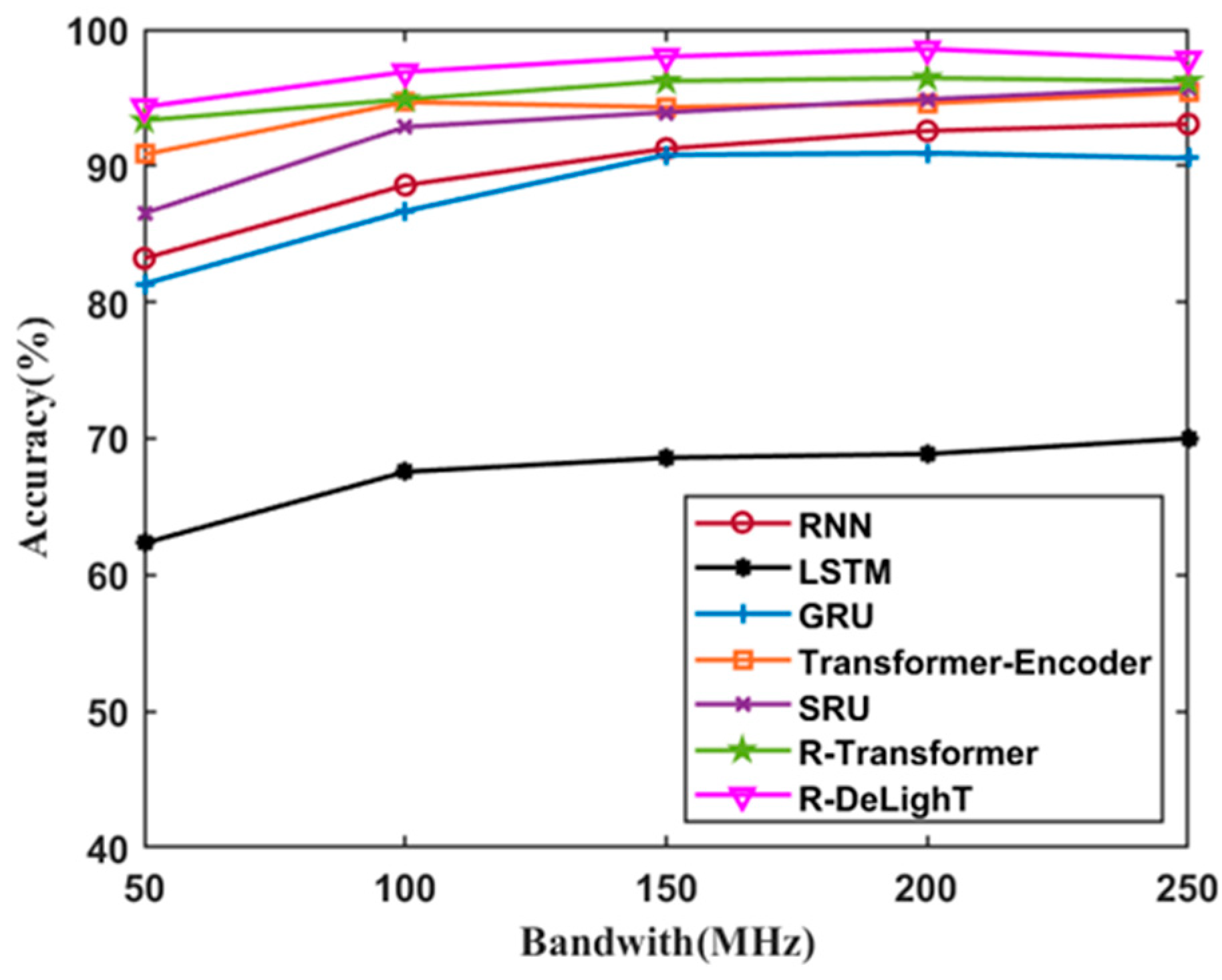

3.2. Recognition Performance

4. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Ship Number | Length (m) | Width (m) |
|---|---|---|
| 1 | 182.8 | 24.1 |
| 2 | 153.8 | 20.4 |
| 3 | 162.9 | 21.4 |
| 4 | 99.6 | 15.2 |
| 5 | 332.8 | 76.4 |
| 6 | 337.2 | 77.2 |
| 7 | 17.1 | 3.6 |
| 8 | 143.4 | 15.2 |
| 9 | 17.6 | 4.5 |
| 10 | 16.3 | 4.7 |

| Number | Operational Frequency Band (GHz) | Bandwidth (MHz) |
|---|---|---|
| A1 | 2.85–2.90 | 50 |
| A2 | 2.85–2.95 | 100 |
| A3 | 2.85–3.00 | 150 |
| A4 | 2.85–3.05 | 200 |
| A5 | 2.85–3.10 | 250 |
| B1 | 3.10–3.15 | 50 |
| B2 | 3.05–3.15 | 100 |
| B3 | 3.00–3.15 | 150 |
| B4 | 2.95–3.15 | 200 |
| B5 | 2.90–3.15 | 250 |
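
As a quick consistency check on the frequency table, the sketch below recomputes each bandwidth from its band edges and derives a nominal range resolution. The band edges are copied from the table. The relation ΔR = c / (2B) is the textbook resolution of a pulse-compression waveform and is an assumption here, since the source does not state how resolution was obtained. The function names `bandwidth_mhz` and `range_resolution_m` are illustrative only.

```python
# Band edges in GHz, copied from the frequency table (labels A1..B5 as given there).
BANDS_GHZ = {
    "A1": (2.85, 2.90), "A2": (2.85, 2.95), "A3": (2.85, 3.00),
    "A4": (2.85, 3.05), "A5": (2.85, 3.10),
    "B1": (3.10, 3.15), "B2": (3.05, 3.15), "B3": (3.00, 3.15),
    "B4": (2.95, 3.15), "B5": (2.90, 3.15),
}

C = 299_792_458.0  # speed of light in m/s


def bandwidth_mhz(lo_ghz: float, hi_ghz: float) -> float:
    """Bandwidth in MHz from band edges in GHz (rounded to absorb float error)."""
    return round((hi_ghz - lo_ghz) * 1000.0, 6)


def range_resolution_m(bw_mhz: float) -> float:
    """Nominal range resolution c / (2B); assumed textbook relation, not from the source."""
    return C / (2.0 * bw_mhz * 1e6)


if __name__ == "__main__":
    for name, (lo, hi) in BANDS_GHZ.items():
        bw = bandwidth_mhz(lo, hi)
        print(f"{name}: {bw:.0f} MHz -> {range_resolution_m(bw):.2f} m")
```

Under this assumption, the 50 MHz bands correspond to a resolution of roughly 3.0 m and the 250 MHz bands to roughly 0.6 m, so the smallest ships in the ship table (about 16 to 18 m long) would span only a handful of range cells at the narrowest bandwidth.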

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lu, J.; Yue, Z.; Wan, L. Multi-Source HRRP Target Fusion Recognition Based on Time-Step Correlation. Appl. Sci. 2023, 13, 5286. https://doi.org/10.3390/app13095286
