Information Rich Voxel Grid for Use in Heterogeneous Multi-Agent Robotics

Abstract

1. Introduction

- 1.

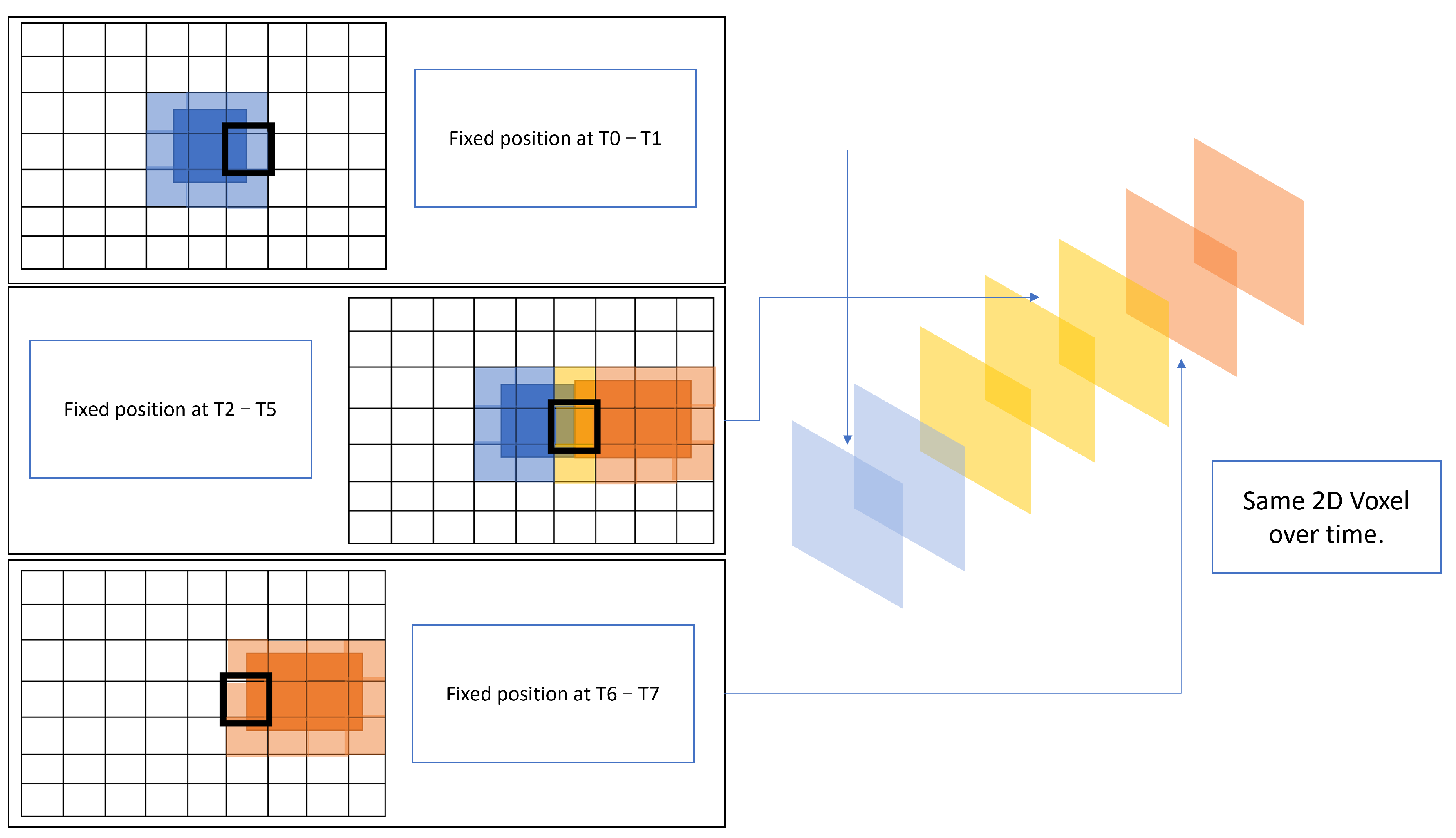

- Voxel grid that shows persistent probability over time—this is a 3D representation of an environment where each voxel corresponds to a small volume in space. The grid is used to model the occupancy probability of each voxel over time, indicating the likelihood of a particular voxel being occupied by an object or obstacle. The persistent probability over time refers to the ability of the grid to maintain the occupancy probabilities of voxels over a period, even as new observations or data are added. This means that the grid can be updated with new information, allowing it to adapt to changes in the environment.

- 2.

- Voxel grid that preserves information over time and contains detailed information—this is a grid that preserves detailed information about the environment and tracks changes in that environment over time. The voxel grid can store information such as occupancy probabilities, surface normals, color, and texture, allowing for a more accurate representation of the environment. Additionally, the voxel grid can be updated with new information over time, allowing it to adapt to changes in the environment.

- 3.

- A voxel grid that can be integrated into a multiagent swarm—this is a 3D representation of the environment that can be used by multiple agents in a coordinated manner. The ability to integrate a voxel grid into a multiagent swarm is useful in a wide range of applications, such as in robotics, where multiple agents may be required to work together to accomplish a task.

- 4.

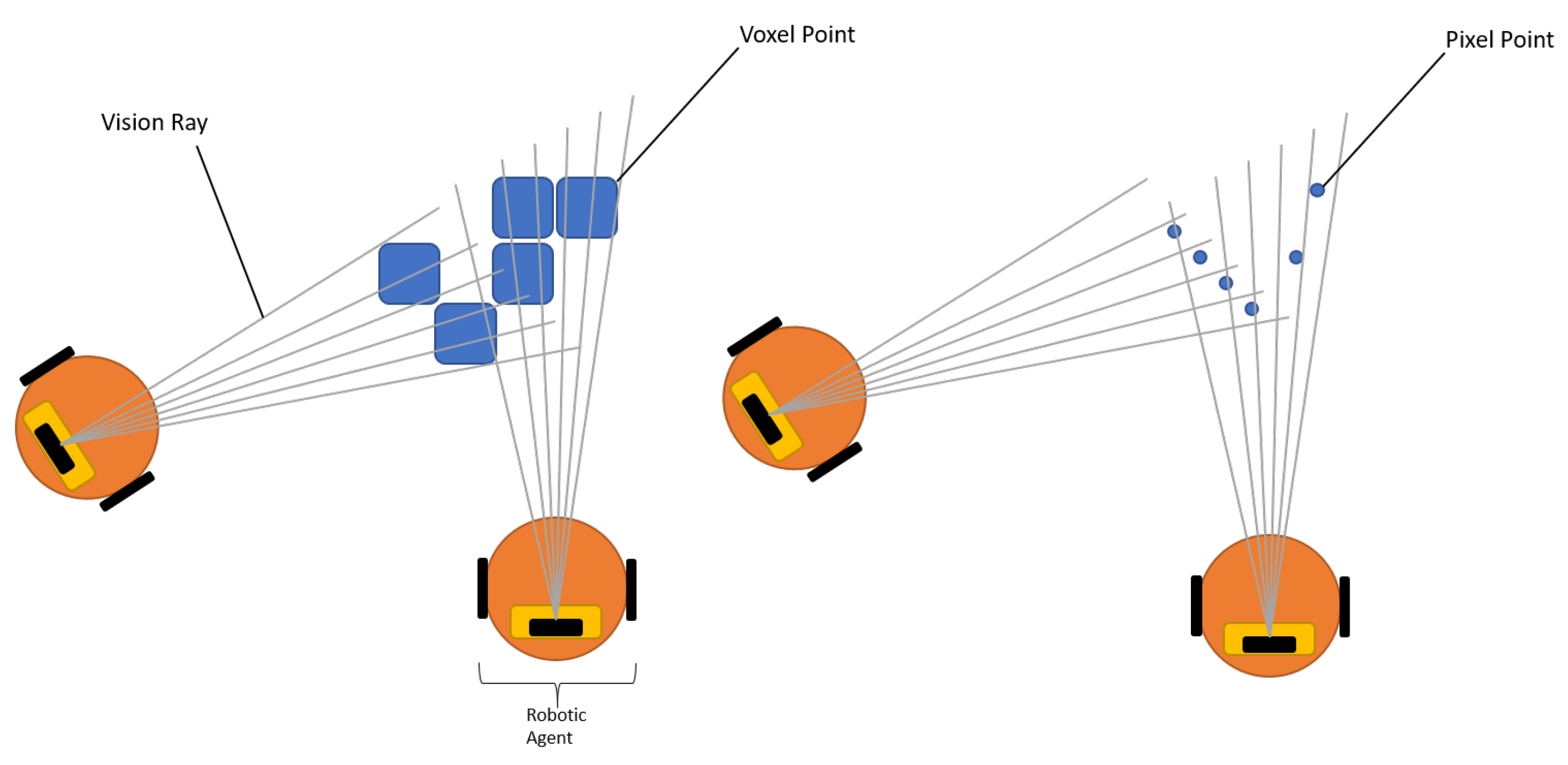

- A voxel grid that can accommodate multiple sensor types—this is a 3D representation of the environment that can be constructed by integrating data from multiple sensors. The key benefit of this approach is that by utilising a voxel grid, the information can be represented in a uniform manner regardless of the sensor type.
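To make the idea of a persistent occupancy probability concrete, the following is a minimal sketch of the standard log-odds occupancy update for a single voxel. It is a common textbook technique and is not taken from this article; the function names and the sensor-model values `p_hit` and `p_miss` are assumptions chosen for illustration, and the initial prior is assumed to be 0.5.

```python
import math


def logit(p: float) -> float:
    """Convert a probability into log-odds."""
    return math.log(p / (1.0 - p))


def update_occupancy(prior: float, hit: bool,
                     p_hit: float = 0.7, p_miss: float = 0.4) -> float:
    """Fuse one observation into a voxel's occupancy probability.

    p_hit and p_miss are assumed inverse sensor model values, not values
    from the article. The log-odds are clamped so that the voxel can still
    change state after many consistent observations.
    """
    log_odds = logit(prior) + logit(p_hit if hit else p_miss)
    log_odds = max(-10.0, min(10.0, log_odds))
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds))


# A voxel starts unknown (0.5) and is observed four times.
probability = 0.5
for observed_occupied in (True, True, False, True):
    probability = update_occupancy(probability, observed_occupied)
```

Because each observation only adds a term to the stored log-odds, the voxel keeps the evidence from earlier observations while still reacting to new ones.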

2. Materials and Methods

2.1. The Problem and Why We Need a Framework

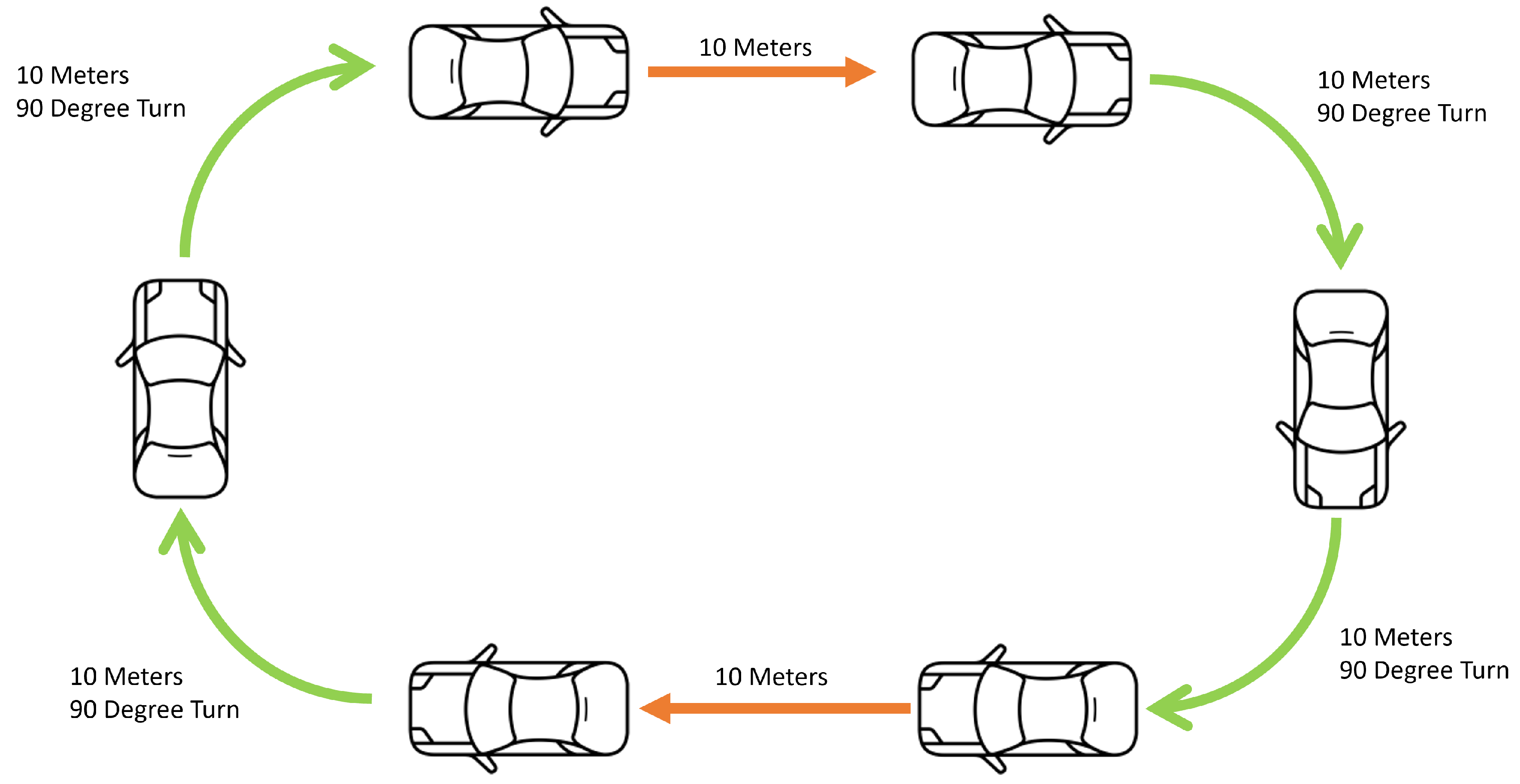

2.2. SLAM

- Gather sensor data from the agent;

- Collect sensor data into a map of the environment;

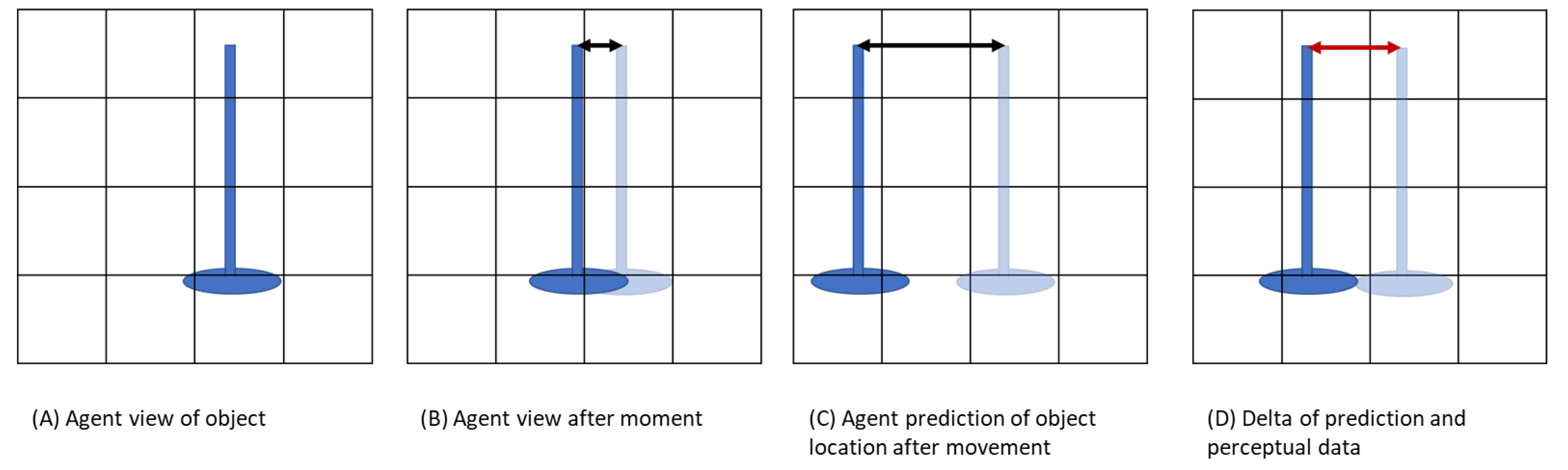

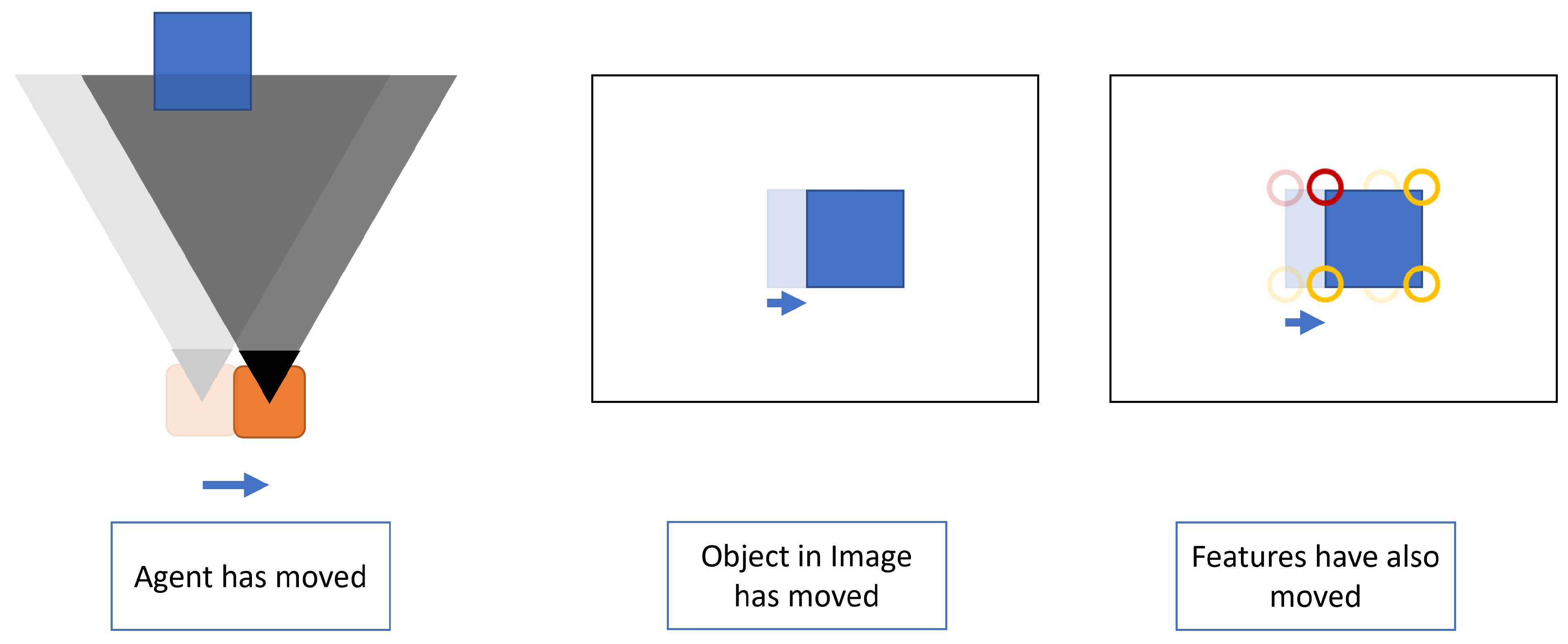

- Track agent movement at time T using onboard odometry;

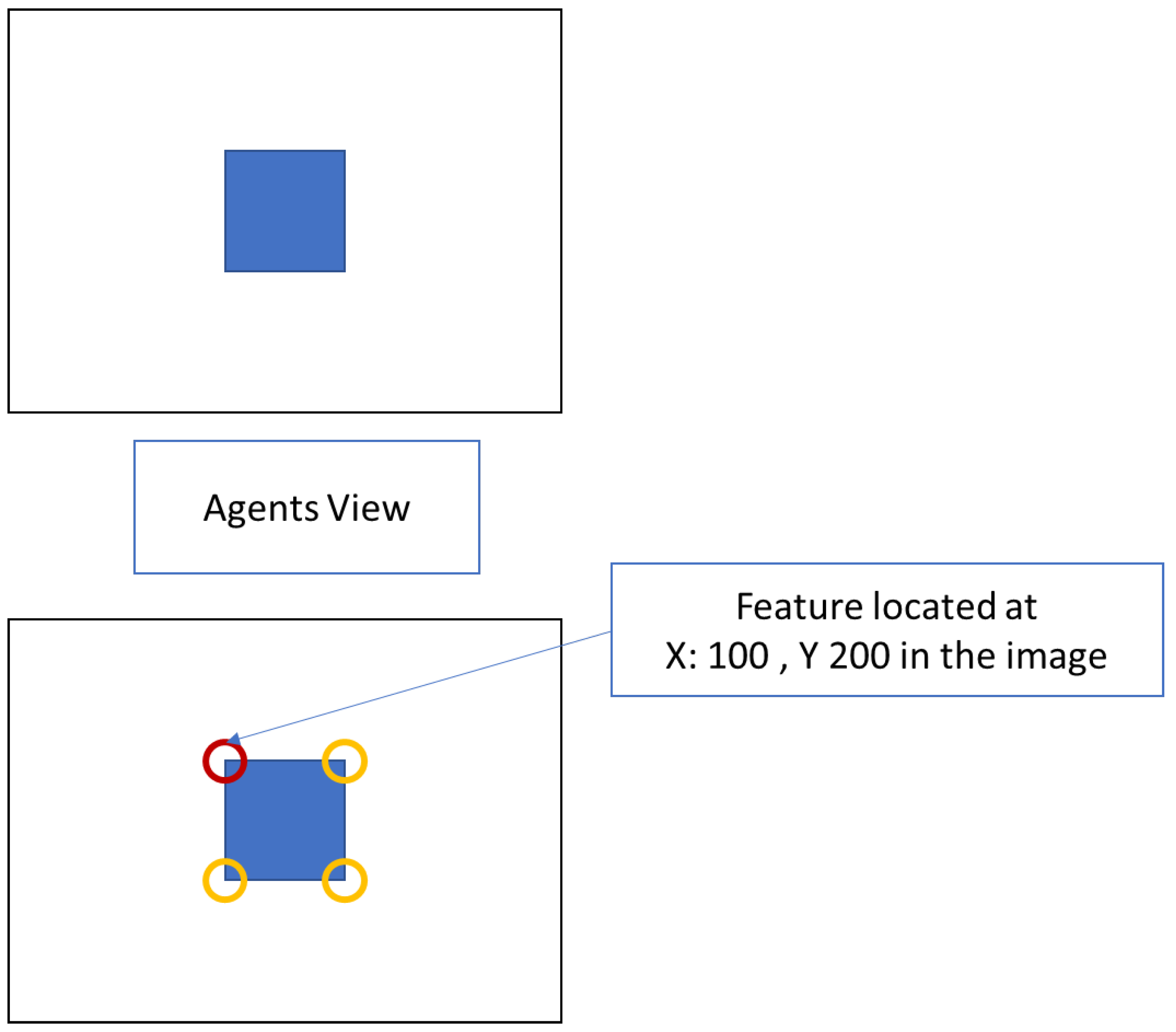

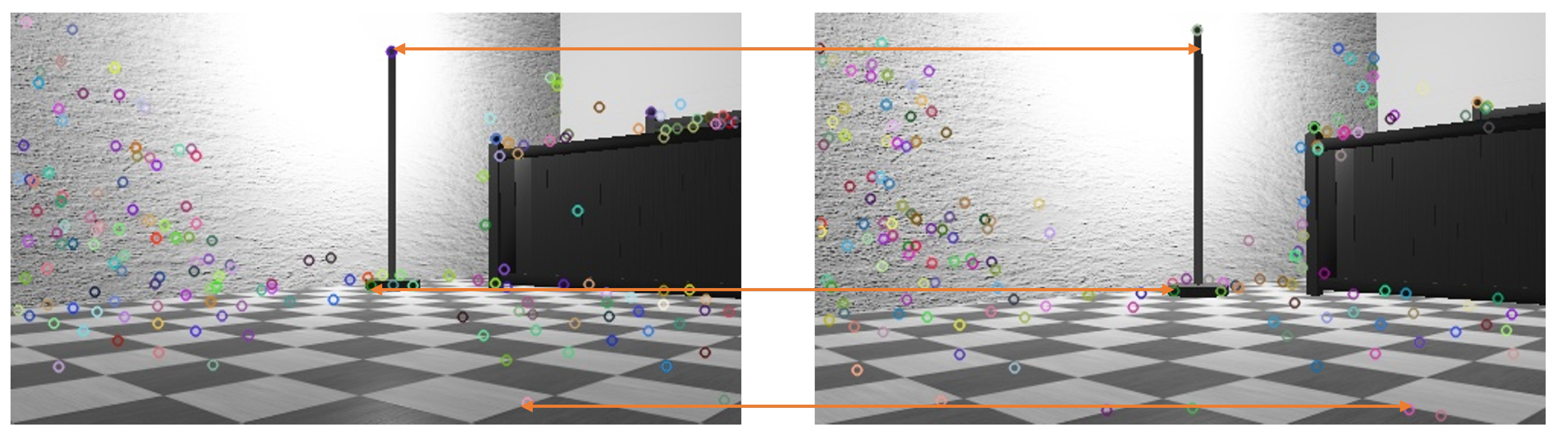

- Detect features/landmarks within the environment;

- Track features/landmarks at time T;

- Move agent;

- Update predicted position based on odometry;

- Update position based on features/landmarks accounting for uncertainty in both odometry and sensor readings.
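The last two steps in the list above (predict from odometry, then correct from a landmark while accounting for both sources of uncertainty) can be sketched with a one-dimensional Kalman filter. This is a deliberately reduced illustration, not the formulation used in the article: it assumes an agent moving along a line and a single landmark whose position has already been mapped, and the names `Belief`, `predict`, and `correct` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Belief:
    mean: float  # estimated agent position (m)
    var: float   # variance of that estimate (m^2)


def predict(belief: Belief, odom_delta: float, odom_var: float) -> Belief:
    """Move the estimate by the odometry reading; uncertainty grows."""
    return Belief(belief.mean + odom_delta, belief.var + odom_var)


def correct(belief: Belief, measured_range: float,
            landmark_pos: float, sensor_var: float) -> Belief:
    """Fuse a range measurement to a landmark at an assumed known position."""
    implied_position = landmark_pos - measured_range
    gain = belief.var / (belief.var + sensor_var)  # Kalman gain
    mean = belief.mean + gain * (implied_position - belief.mean)
    var = (1.0 - gain) * belief.var                # uncertainty shrinks
    return Belief(mean, var)


belief = Belief(mean=0.0, var=0.01)
belief = predict(belief, odom_delta=1.0, odom_var=0.04)
belief = correct(belief, measured_range=3.9, landmark_pos=5.0, sensor_var=0.05)
```

In this example the odometry and the landmark measurement are equally uncertain after the prediction step, so the corrected position lies halfway between the two estimates.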

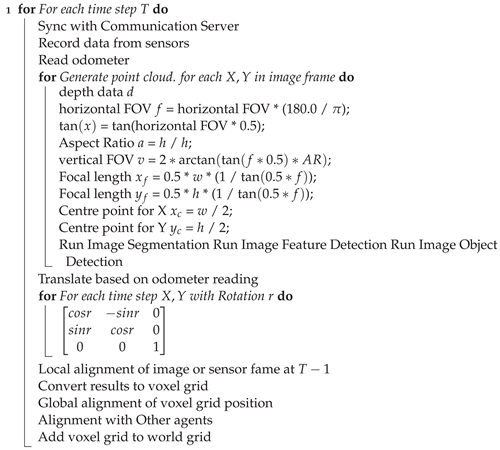

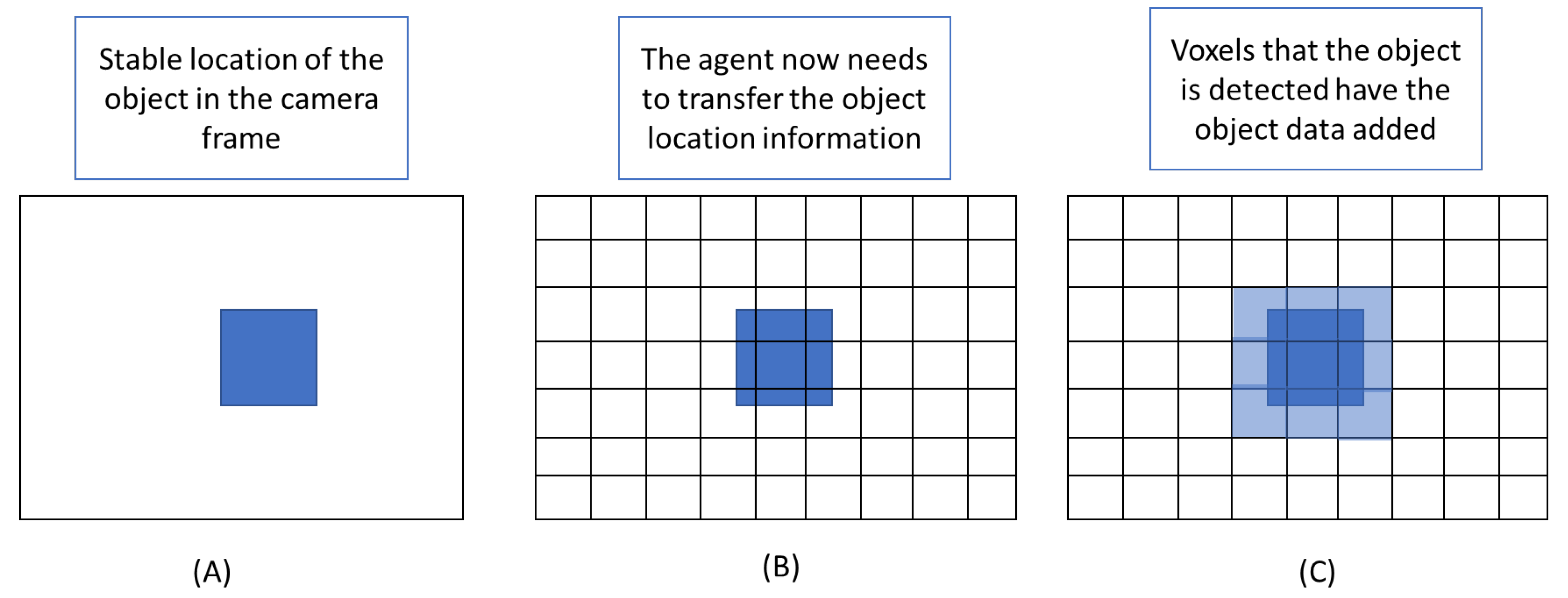

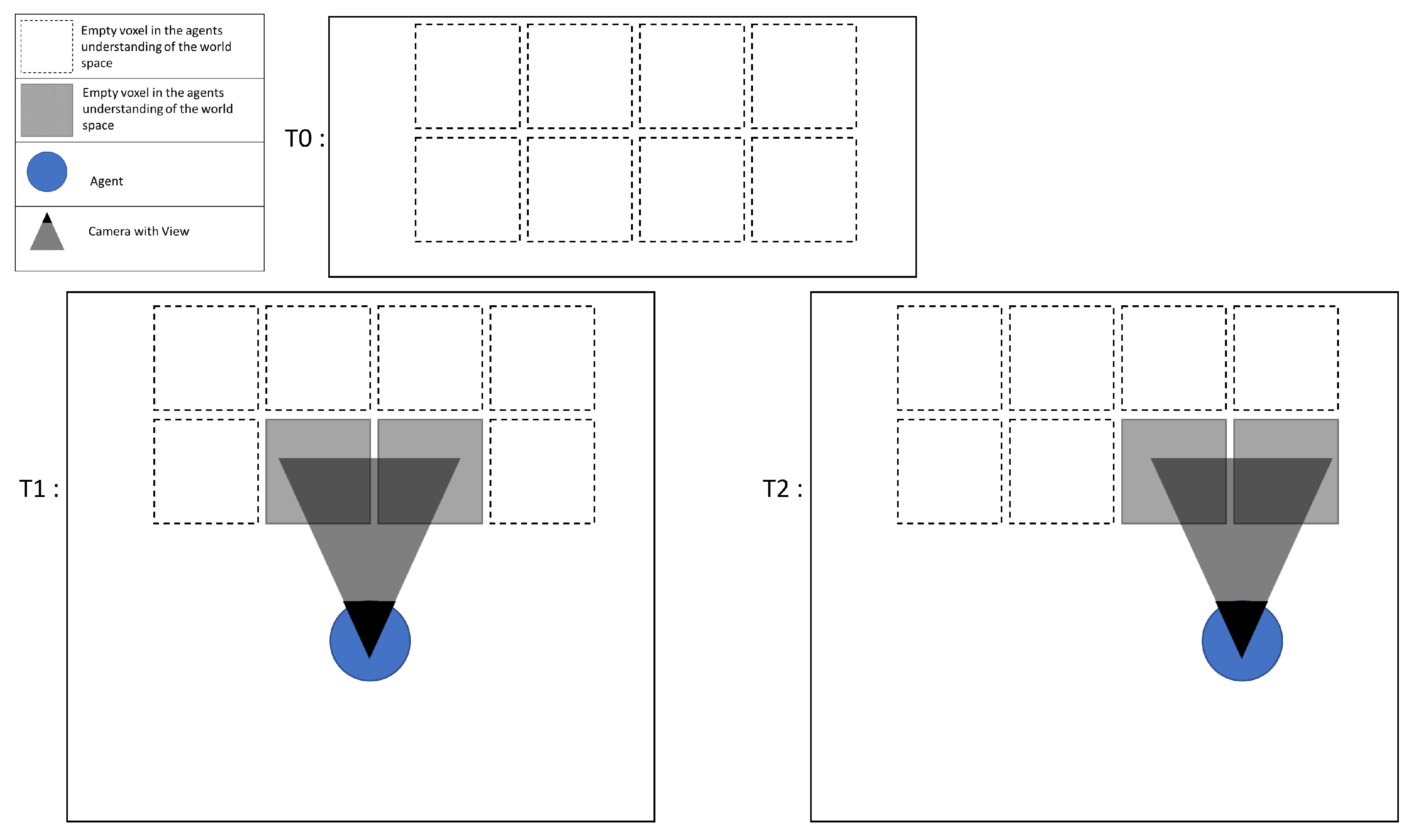

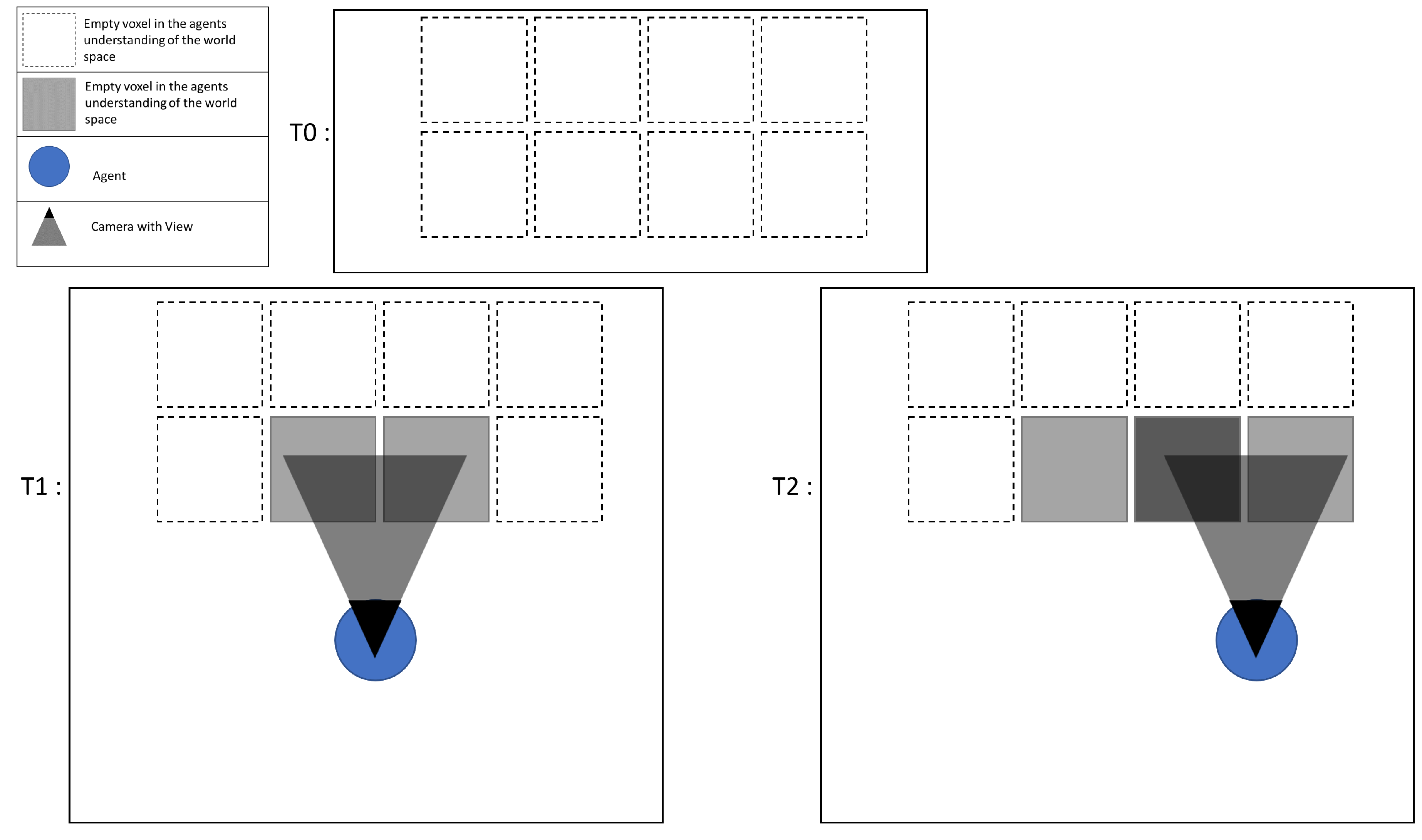

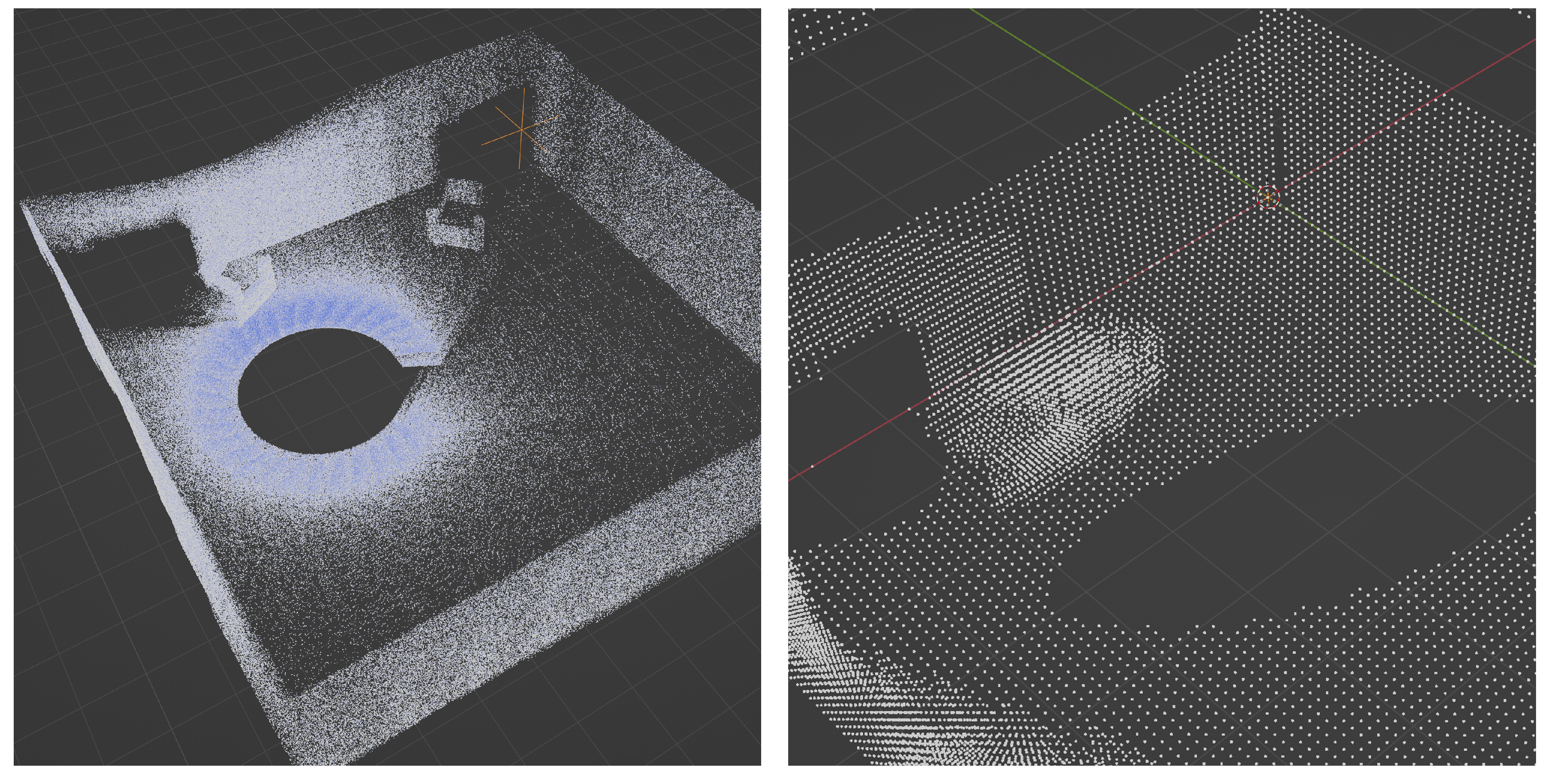

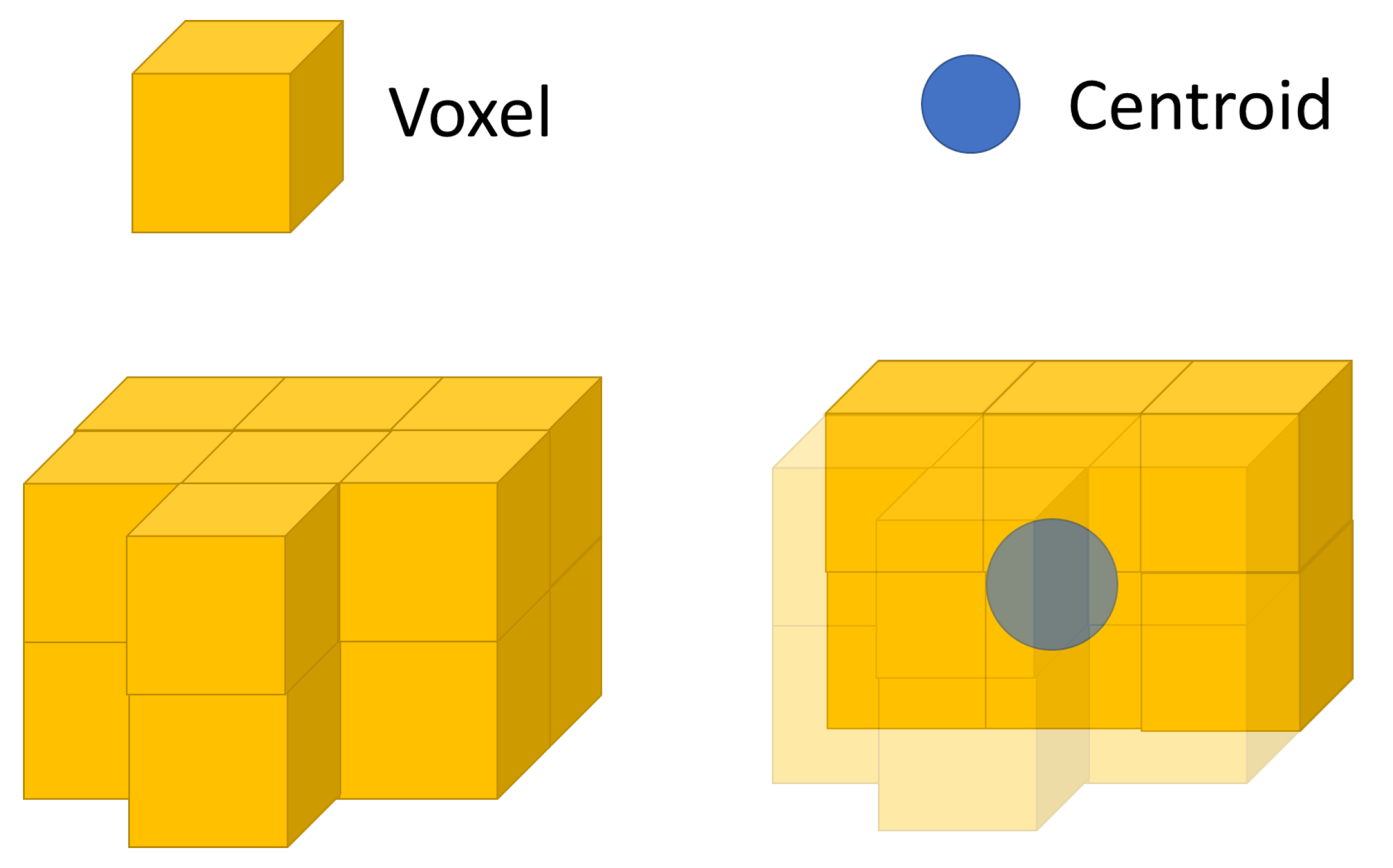

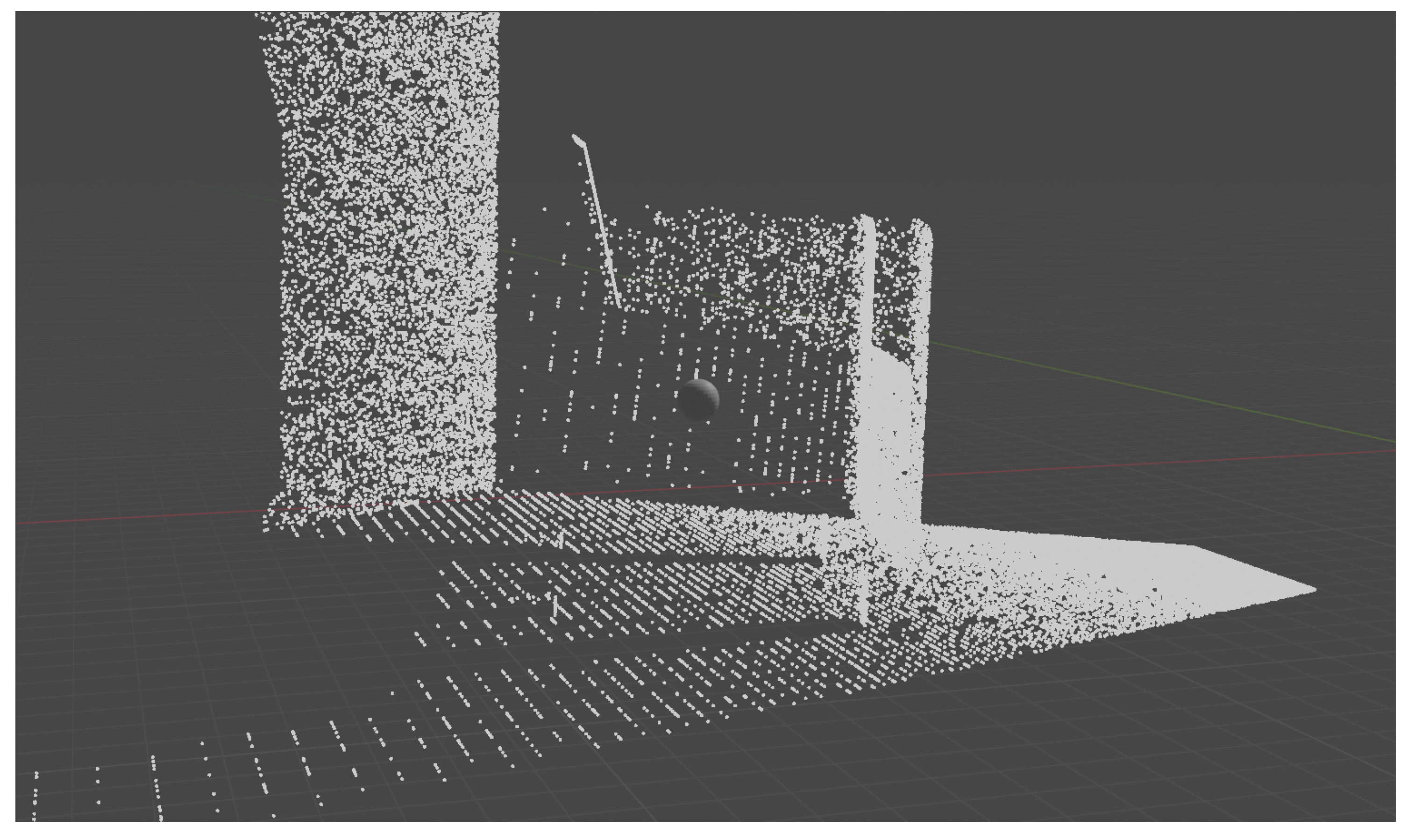

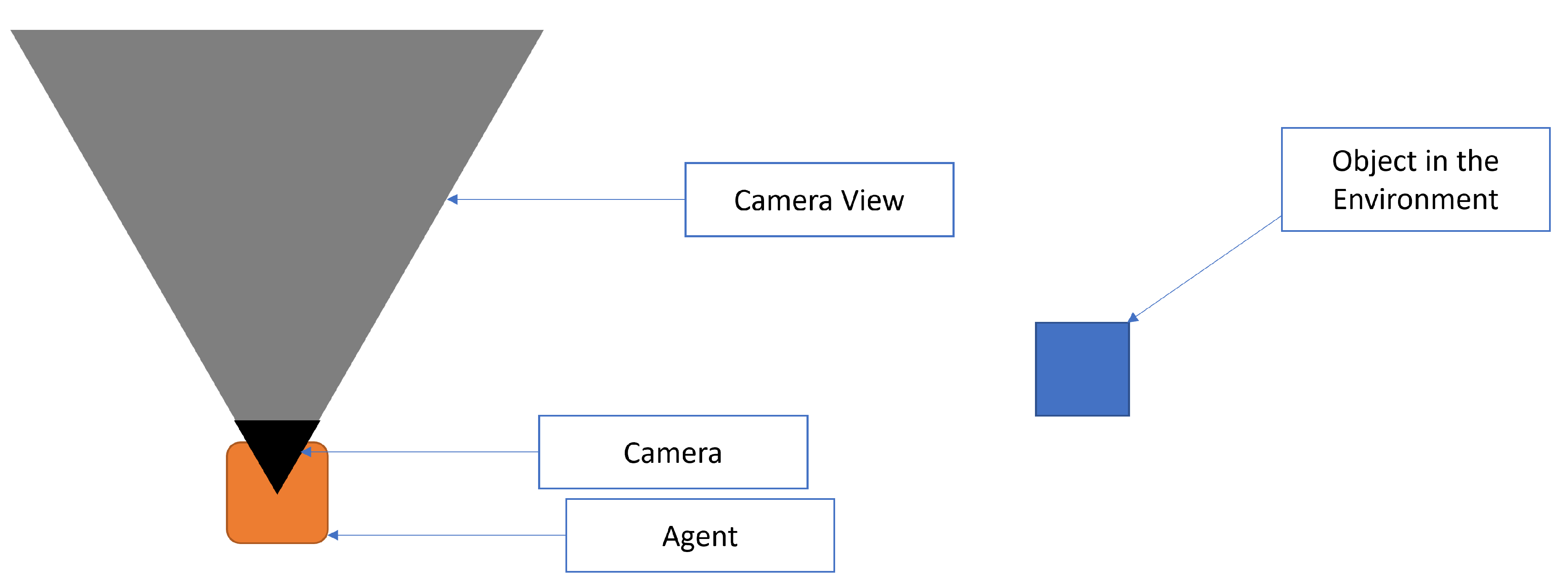

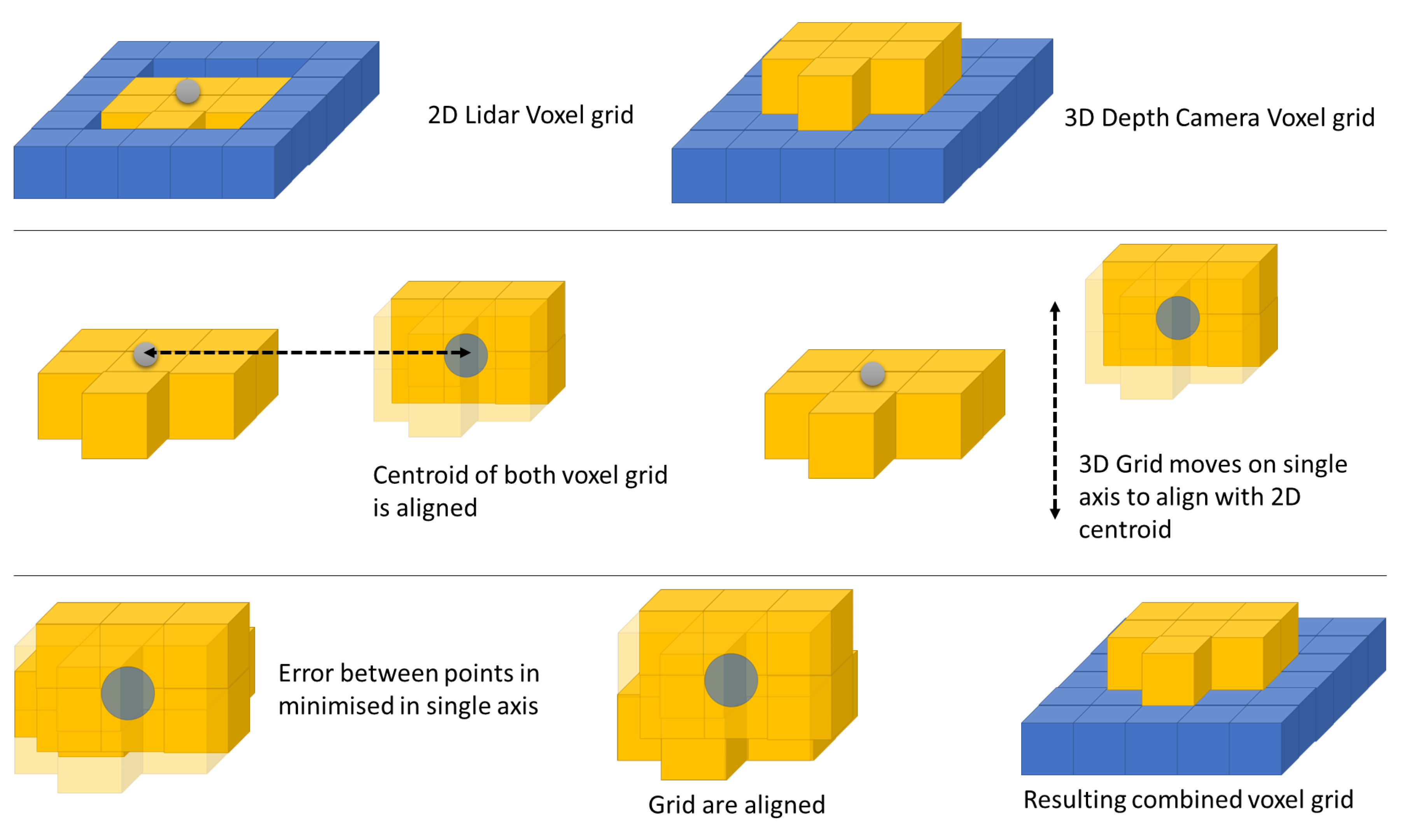

2.3. The Voxel Grid and the Approach to Capture Data

- Static definitions needed for agent loops

- w = Camera Width

- h = Camera Height

| Algorithm 1: Agent loop |


2.4. Creating the Voxel Grid

- w = The size of the world

- Time t is an arbitrary, but consistent, timestep that is defined by the agent.

- g = { }

- v = { x, y, z, d }, where x, y, and z represent the position of an arbitrary point in Cartesian space.

- d = { }

- d = { e, r, b, j }

- j = { o_1, …, o_s }, where s is the number of objects discovered in any given frame

- o = { i, p }

- p = The probability of a given object being in that voxel at time t

- e = Agent Experience

- r = Reliability of agents

- b = Agent Bias
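The definitions above map naturally onto a small set of record types. The sketch below is one possible encoding, not the authors' implementation: the class and field names are hypothetical, the interpretation of i as an object identifier is an assumption, and storing the grid g as a sparse dictionary keyed by integer voxel indices is a design choice made here for brevity.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class ObjectEstimate:
    """o = {i, p}; treating i as an object identifier is an assumption."""
    object_id: int
    probability: float  # p: probability of the object being in the voxel at time t


@dataclass
class VoxelData:
    """d = {e, r, b, j}."""
    experience: float   # e: agent experience
    reliability: float  # r: reliability of agents
    bias: float         # b: agent bias
    objects: List[ObjectEstimate] = field(default_factory=list)  # j


@dataclass
class Voxel:
    """v = {x, y, z, d}, with x, y, z stored as integer voxel indices."""
    x: int
    y: int
    z: int
    data: VoxelData


class VoxelGrid:
    """g: a sparse grid that only stores voxels that have been observed."""

    def __init__(self, voxel_size: float = 0.1) -> None:
        self.voxel_size = voxel_size  # edge length in metres (assumed unit)
        self.voxels: Dict[Tuple[int, int, int], Voxel] = {}

    def index_of(self, point: Tuple[float, float, float]) -> Tuple[int, int, int]:
        """Map a Cartesian point to the index of the voxel containing it."""
        return tuple(math.floor(c / self.voxel_size) for c in point)

    def insert(self, point: Tuple[float, float, float], data: VoxelData) -> Voxel:
        key = self.index_of(point)
        voxel = Voxel(key[0], key[1], key[2], data)
        self.voxels[key] = voxel
        return voxel


grid = VoxelGrid(voxel_size=0.5)
sample = grid.insert(
    (1.2, 0.1, -0.3),
    VoxelData(experience=10.0, reliability=0.9, bias=0.0,
              objects=[ObjectEstimate(object_id=3, probability=0.8)]),
)
```

A sparse dictionary keeps memory proportional to the observed volume rather than to the full size of the world, which matters when most of the space has not yet been visited.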

2.5. Loop Closure and Object Probability over Time

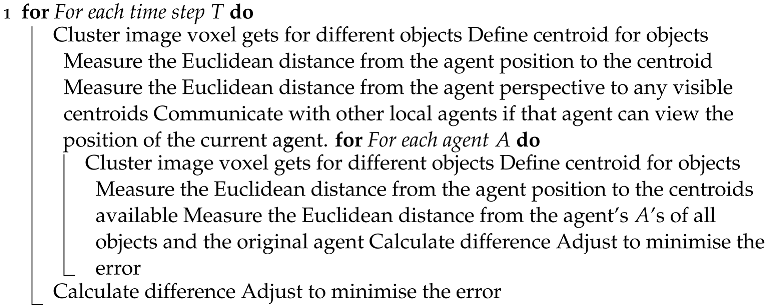

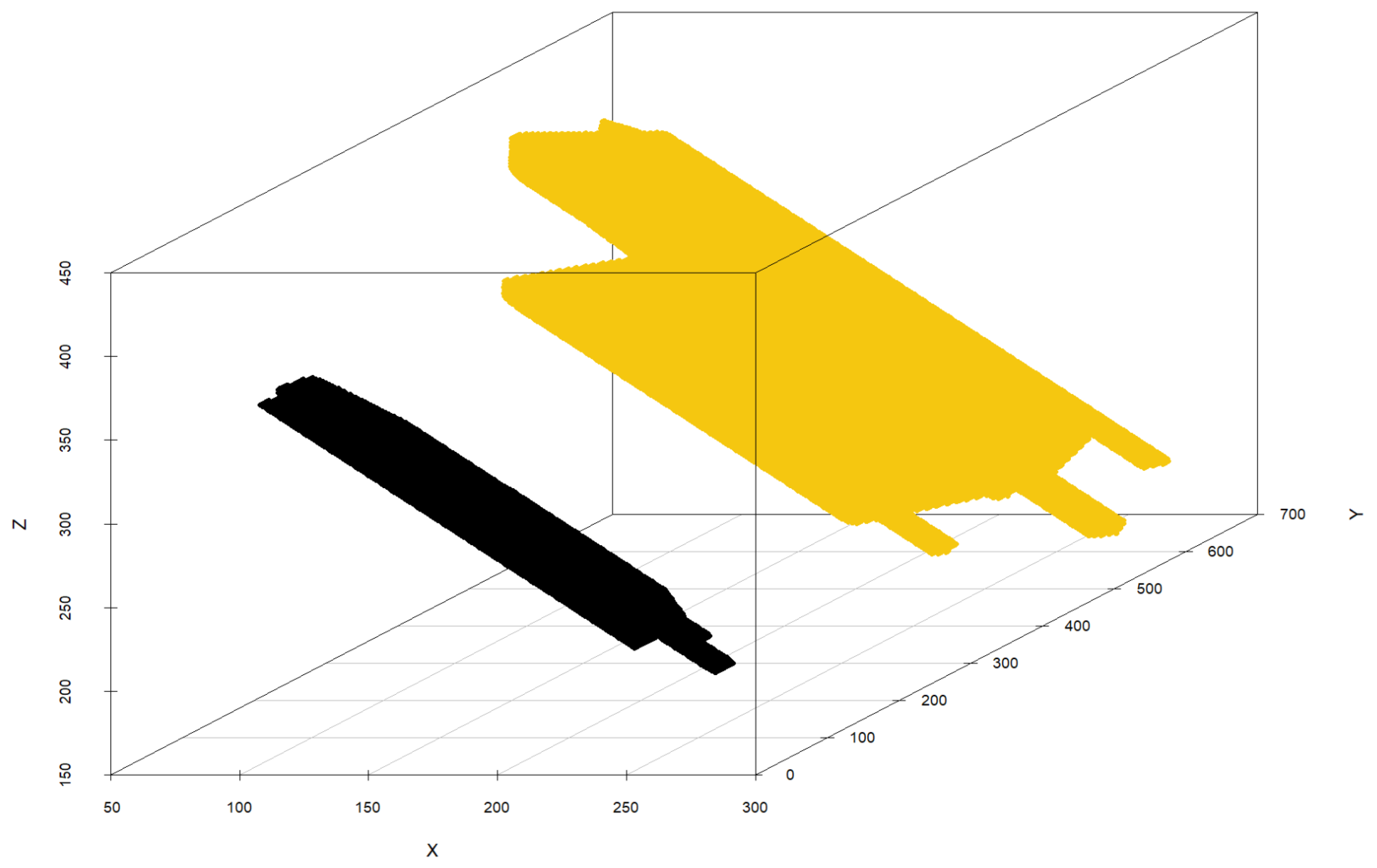

2.6. Centre Point of Object, Clustering

- S is a set of voxels within the voxel grid;

- k is the number of unique objects within the environment that the agents have mapped;

- x is an observation data point;

- μ_i is the mean of the points in S_i;

- the distance measure is some arbitrary function that measures the distance between points in any given unit.
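The quantities listed above are those of a k-means style clustering, in which each observation is assigned to the cluster with the nearest mean and the means are then recomputed. The following is a minimal sketch of Lloyd's algorithm under that reading; it assumes Euclidean distance as the distance function and a fixed iteration count, and the function name `kmeans` and its parameters are illustrative rather than taken from the article.

```python
import math
import random


def kmeans(points, k, iterations=20, seed=0):
    """Group points into k clusters and return (centroids, clusters).

    Euclidean distance is an assumed choice; any distance function could be
    substituted for math.dist.
    """
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct observations
    clusters = [[] for _ in range(k)]
    for _ in range(iterations):
        # Assignment step: attach every observation to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for x in points:
            nearest = min(range(k), key=lambda i: math.dist(x, centroids[i]))
            clusters[nearest].append(x)
        # Update step: move each centroid to the mean of its members.
        for i, members in enumerate(clusters):
            if members:
                centroids[i] = tuple(sum(c) / len(members) for c in zip(*members))
    return centroids, clusters


# Voxel centres belonging to two well separated objects.
points = [(0.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 0.0, 0.0),
          (10.0, 10.0, 10.0), (10.0, 11.0, 10.0), (11.0, 10.0, 10.0)]
centroids, clusters = kmeans(points, k=2)
```

Each returned centroid can then serve as the centre point of one object.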

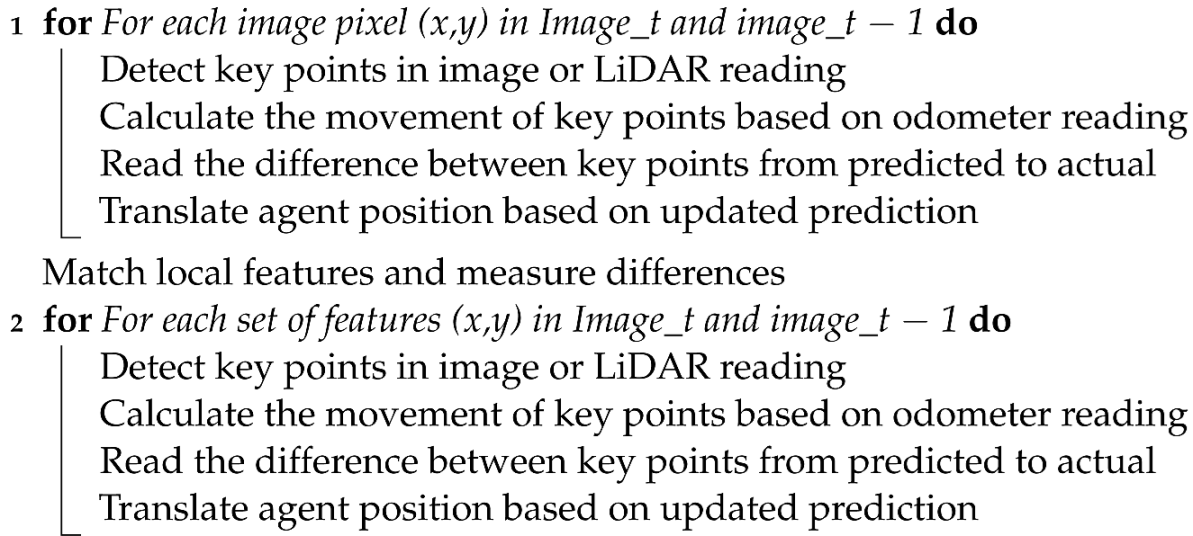

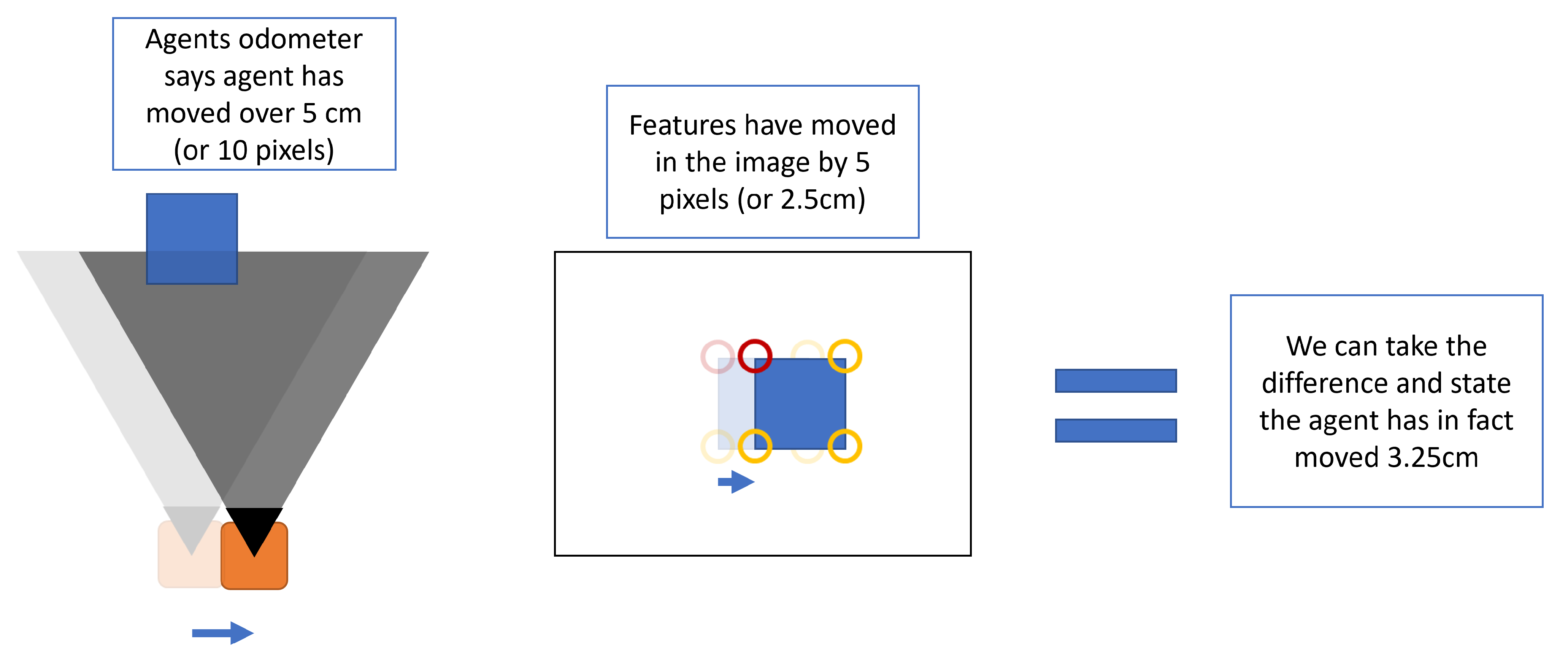

2.7. The Frame-to-Frame Alignment

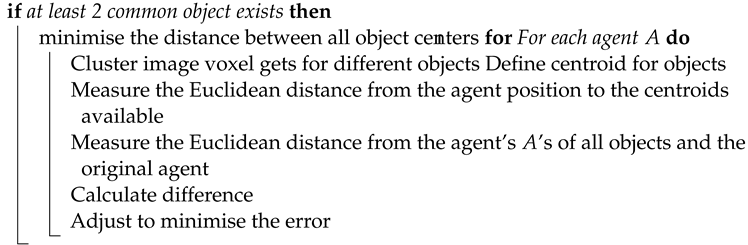

| Algorithm 2: Frame-to-frame alignment |
|---|
| 1. Locate the image at t − 1 |
| 2. Gather the current image at t |
| 3. Find features in images |
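Once features have been found in both images and matched, a frame-to-frame transform can be estimated from the matched pairs. The sketch below shows one conventional way to do that in two dimensions, a closed-form least-squares rigid fit (rotation plus translation). It is an illustration under assumptions: feature detection and matching are taken as already done, the points are treated as planar coordinates, and the function name `estimate_rigid_2d` is hypothetical.

```python
import math


def estimate_rigid_2d(prev_pts, curr_pts):
    """Least-squares rotation angle and translation mapping prev_pts to curr_pts.

    prev_pts[i] and curr_pts[i] are assumed to be the same feature seen
    at t - 1 and at t.
    """
    n = len(prev_pts)
    pcx = sum(p[0] for p in prev_pts) / n
    pcy = sum(p[1] for p in prev_pts) / n
    ccx = sum(c[0] for c in curr_pts) / n
    ccy = sum(c[1] for c in curr_pts) / n

    sum_cos = 0.0
    sum_sin = 0.0
    for (px, py), (cx, cy) in zip(prev_pts, curr_pts):
        ax, ay = px - pcx, py - pcy  # previous point relative to its centroid
        bx, by = cx - ccx, cy - ccy  # current point relative to its centroid
        sum_cos += ax * bx + ay * by
        sum_sin += ax * by - ay * bx

    theta = math.atan2(sum_sin, sum_cos)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    # Translation that carries the rotated previous centroid onto the current one.
    tx = ccx - (cos_t * pcx - sin_t * pcy)
    ty = ccy - (sin_t * pcx + cos_t * pcy)
    return theta, (tx, ty)


# Synthetic check: rotate four features by 30 degrees and shift them by (2, -1).
prev_pts = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0), (3.0, 1.0)]
angle = math.radians(30.0)
curr_pts = [(math.cos(angle) * x - math.sin(angle) * y + 2.0,
             math.sin(angle) * x + math.cos(angle) * y - 1.0)
            for x, y in prev_pts]
theta, translation = estimate_rigid_2d(prev_pts, curr_pts)
```

With real data the matches contain outliers, so a robust wrapper such as RANSAC would normally surround this fit.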

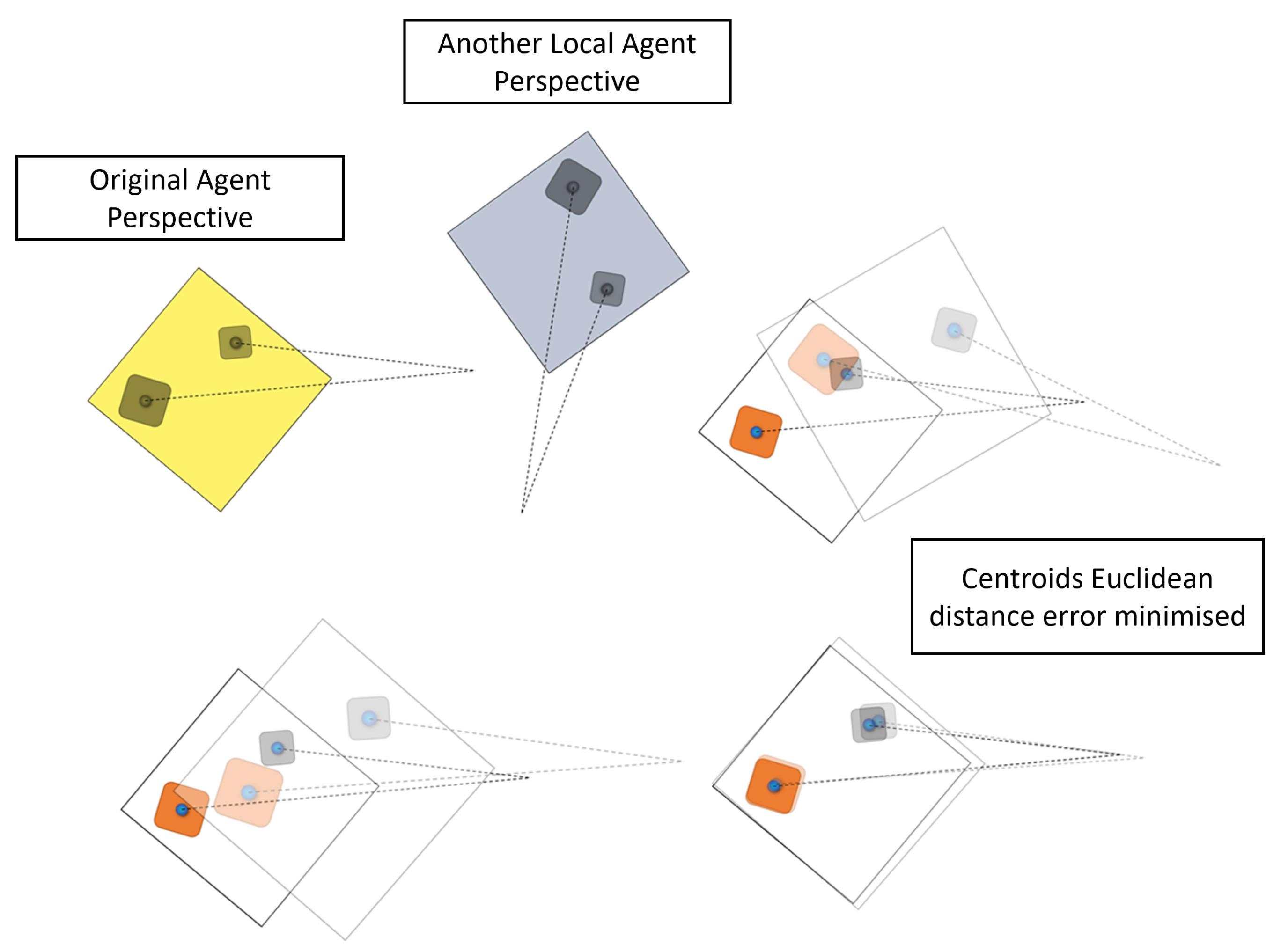

2.8. Any Local Object to Global Map Alignment

| Algorithm 3: Global alignment |
|---|
| 1. Cluster objects in voxel grid at time T |
| 2. Remove outliers from object data |
| 3. Alignment |
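The outlier-removal and alignment steps can be illustrated with a simple sketch. It assumes one cluster of points per object, rejects points whose distance from the cluster centroid exceeds the mean distance by more than k standard deviations, and then aligns by translating the cleaned centroid onto the object's centroid in the global map. Both the rejection rule and the translation-only alignment are assumptions made for illustration, and the function names are hypothetical.

```python
import math
import statistics


def centroid(points):
    """Mean position of a list of equally sized coordinate tuples."""
    return tuple(sum(c) / len(points) for c in zip(*points))


def remove_outliers(points, k=2.0):
    """Drop points lying more than k standard deviations beyond the mean
    distance from the cluster centroid (an assumed rejection rule)."""
    centre = centroid(points)
    dists = [math.dist(p, centre) for p in points]
    limit = statistics.mean(dists) + k * statistics.pstdev(dists)
    return [p for p, d in zip(points, dists) if d <= limit]


def translation_to_global(local_points, global_centroid):
    """Offset that moves the local object's centroid onto the global one."""
    local_centroid = centroid(local_points)
    return tuple(g - l for g, l in zip(global_centroid, local_centroid))


# Eight corner points of a cube around the origin plus one spurious reading.
cube = [(float(x), float(y), float(z))
        for x in (-1, 1) for y in (-1, 1) for z in (-1, 1)]
observed = cube + [(50.0, 50.0, 50.0)]
cleaned = remove_outliers(observed)
offset = translation_to_global(cleaned, global_centroid=(3.0, 4.0, 5.0))
```

Removing the spurious reading first matters because a single distant point would otherwise drag the centroid, and with it the alignment, away from the object.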

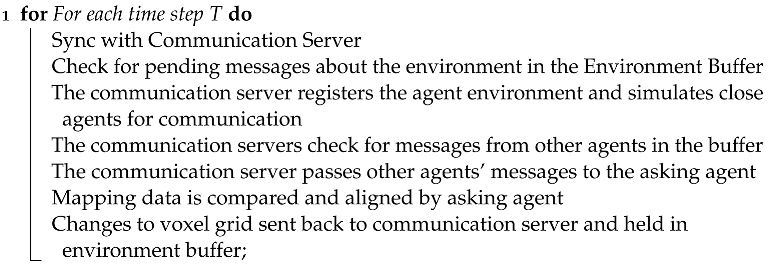

2.9. Multiagent Alignment of Maps

- Agents must be predefined and registered with the central coordinator before joining the colony or domain.

- Errors occurring within the system may propagate throughout the entire colony.

- As the number of agents increases, the centralised system must manage vast amounts of data to maintain coordination among the expanding agent population.

| Algorithm 4: Message sync |


| Algorithm 5: Combine agents’ maps |
|---|
| 1. Agent sends mapping data for the local environment to another agent |
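What the receiving agent might do with the mapping data can be sketched as follows. This is a hypothetical fusion rule, not the procedure from the article: each map is assumed to be a dictionary from voxel index to occupancy probability, and overlapping voxels are combined by an average weighted by each agent's reliability. The function name `merge_maps` and its parameters are illustrative.

```python
def merge_maps(own, received, own_reliability=1.0, sender_reliability=1.0):
    """Combine two sparse voxel maps of the form {(x, y, z): probability}.

    Voxels known to only one agent are copied unchanged; voxels known to
    both are averaged, weighted by the (assumed) reliability of each agent.
    """
    merged = dict(own)
    total = own_reliability + sender_reliability
    for key, p_received in received.items():
        if key in merged:
            merged[key] = (own_reliability * merged[key]
                           + sender_reliability * p_received) / total
        else:
            merged[key] = p_received
    return merged


own_map = {(0, 0, 0): 0.8, (1, 0, 0): 0.2}
received_map = {(0, 0, 0): 0.4, (2, 0, 0): 0.9}
merged_map = merge_maps(own_map, received_map,
                        own_reliability=3.0, sender_reliability=1.0)
```

The sketch assumes both maps already share a coordinate frame; in practice the maps would first have to be aligned.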

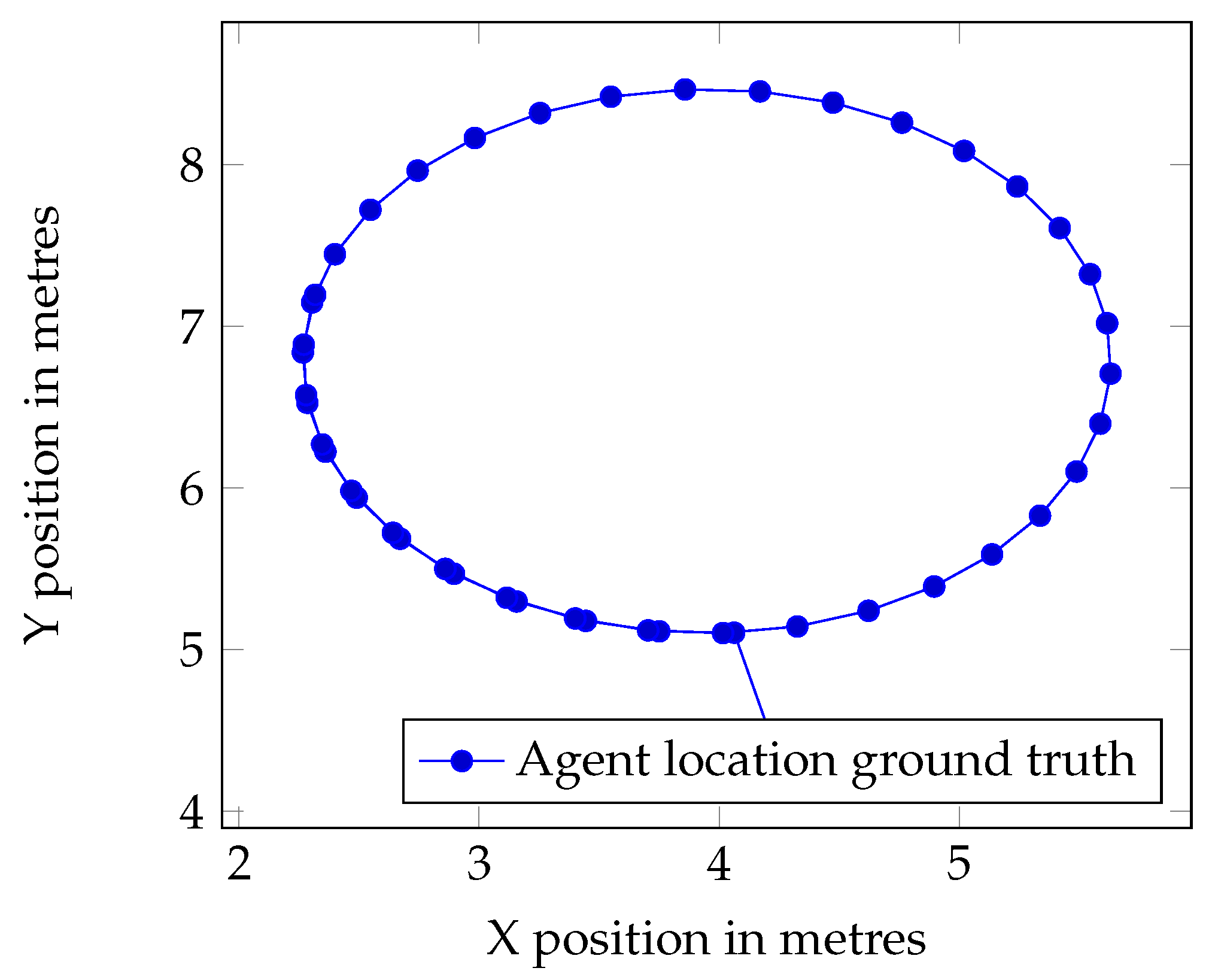

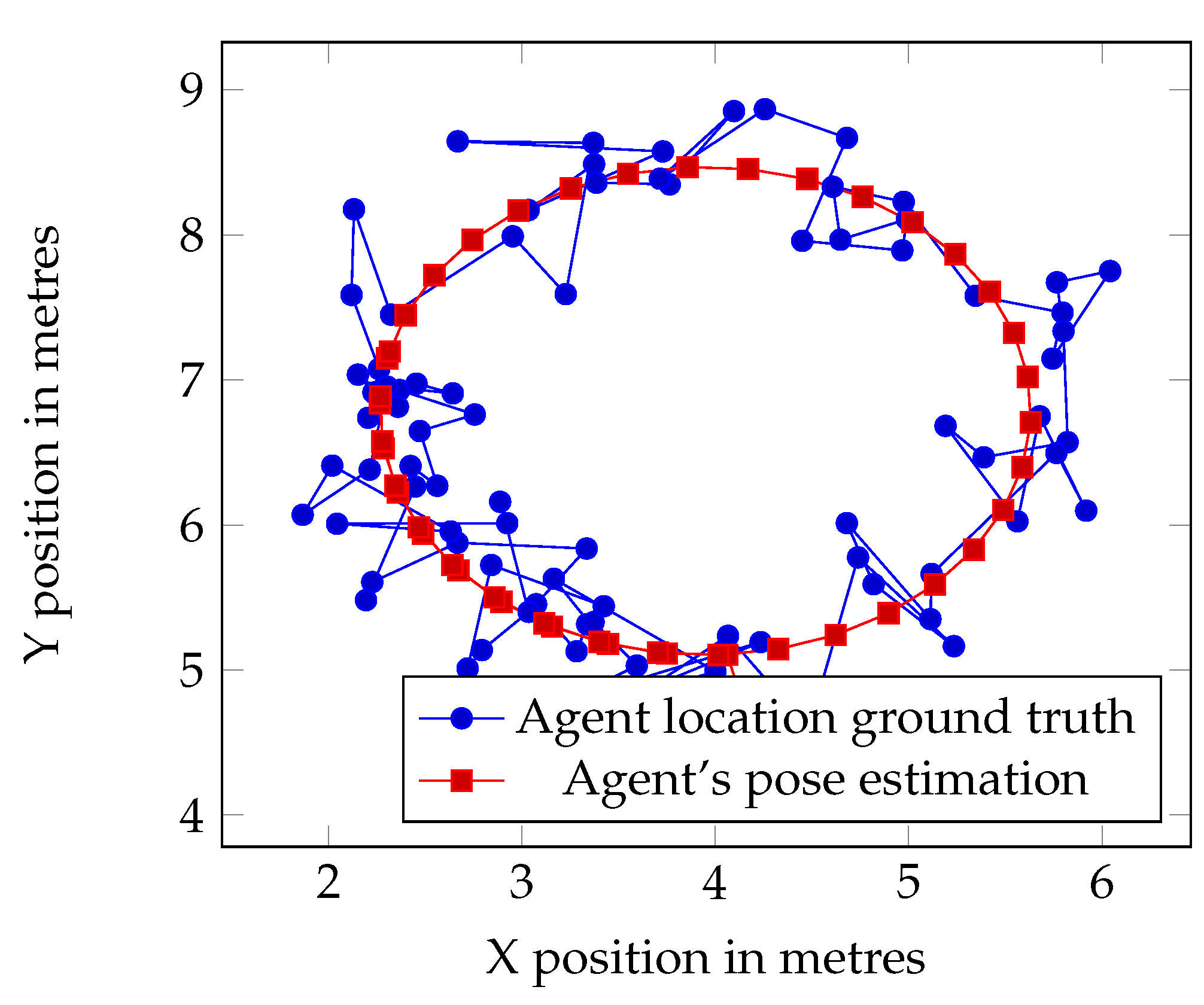

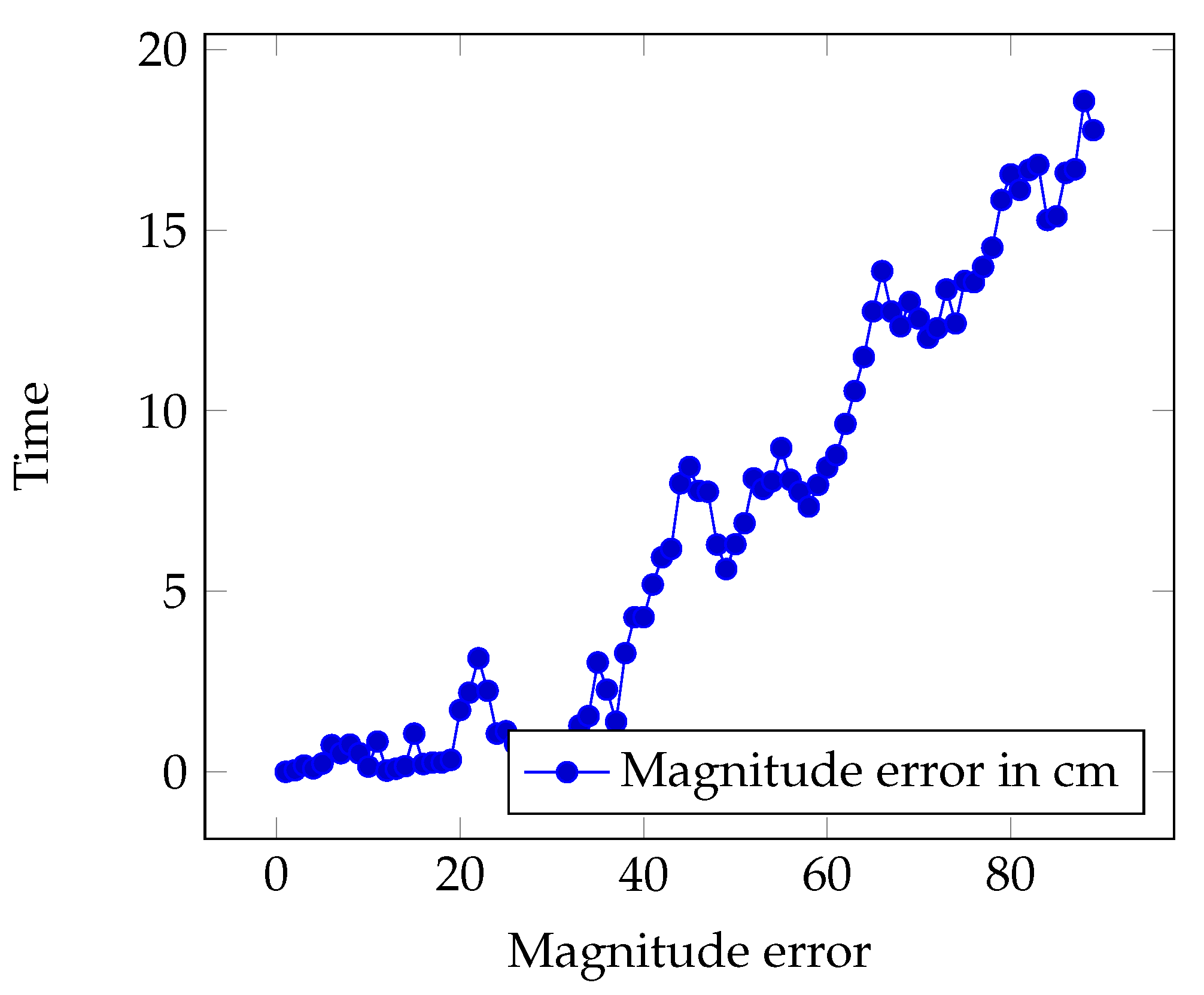

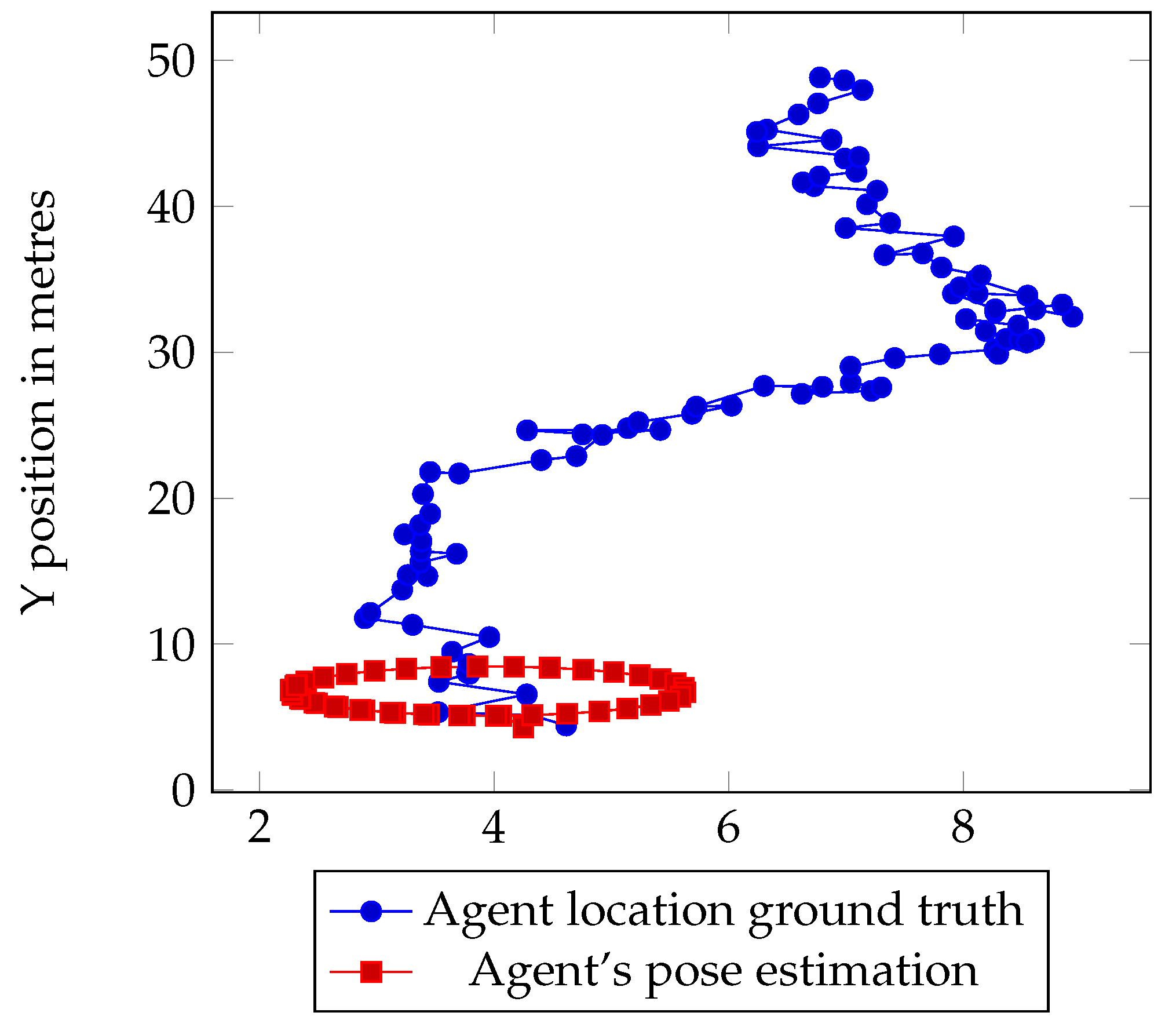

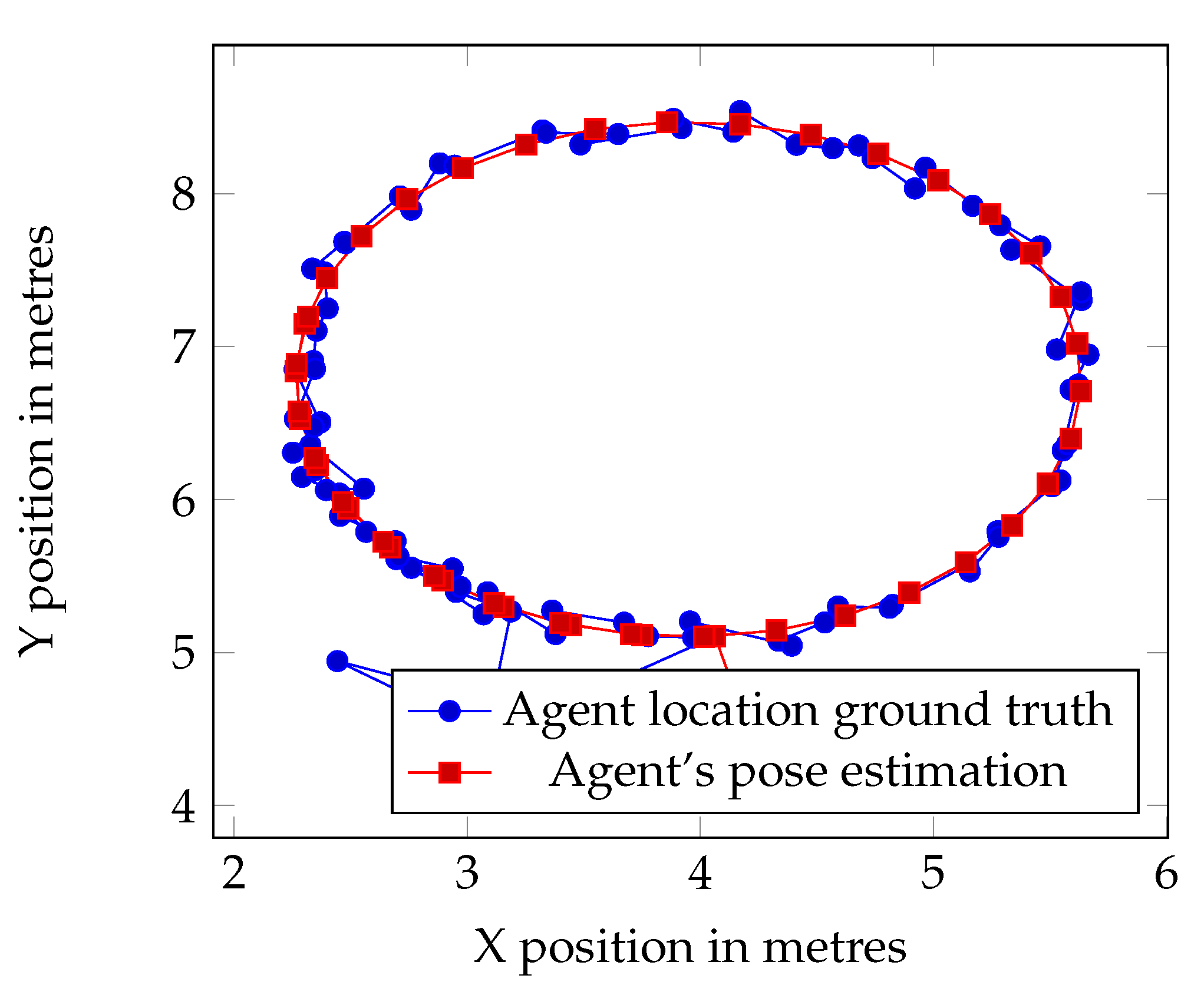

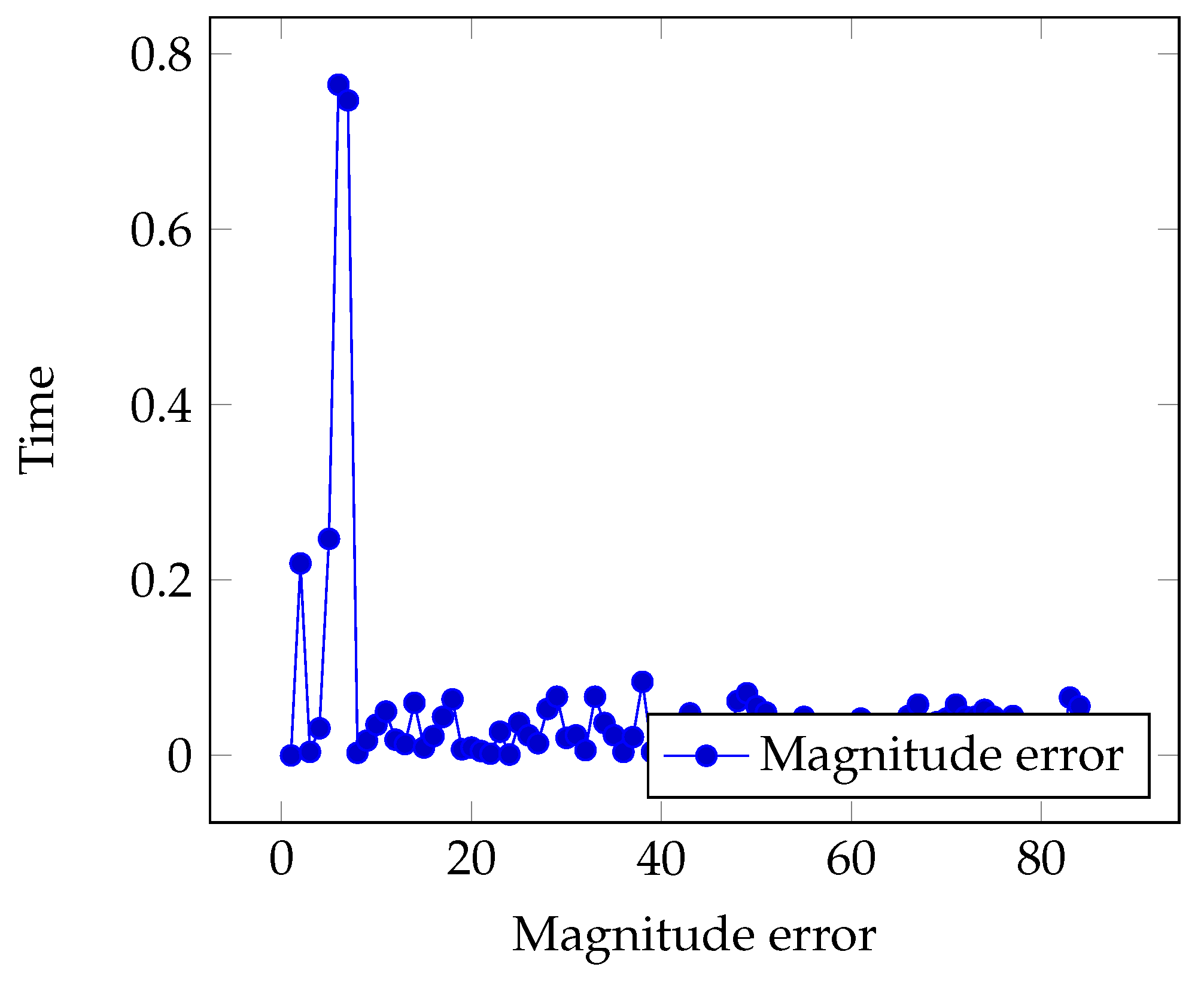

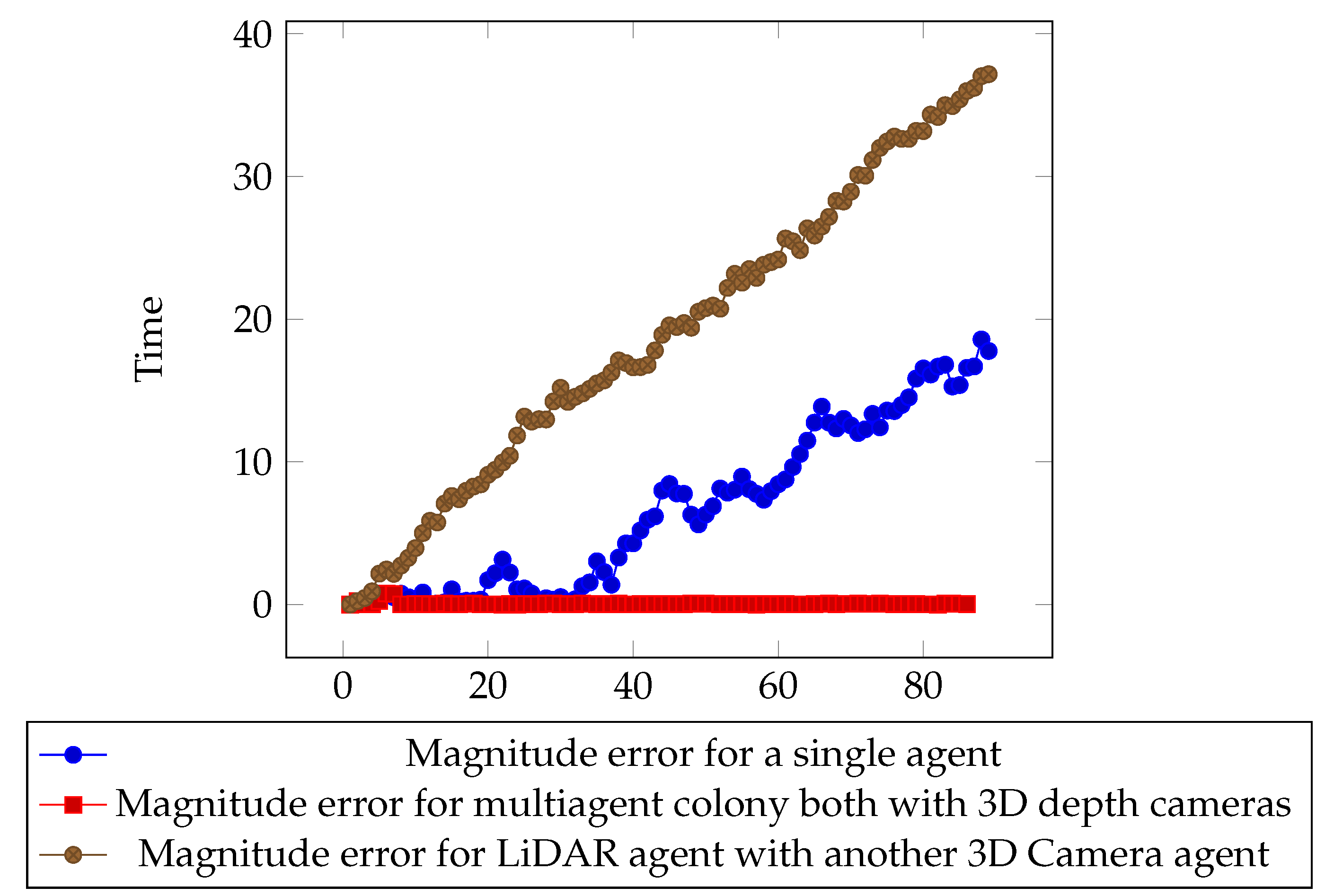

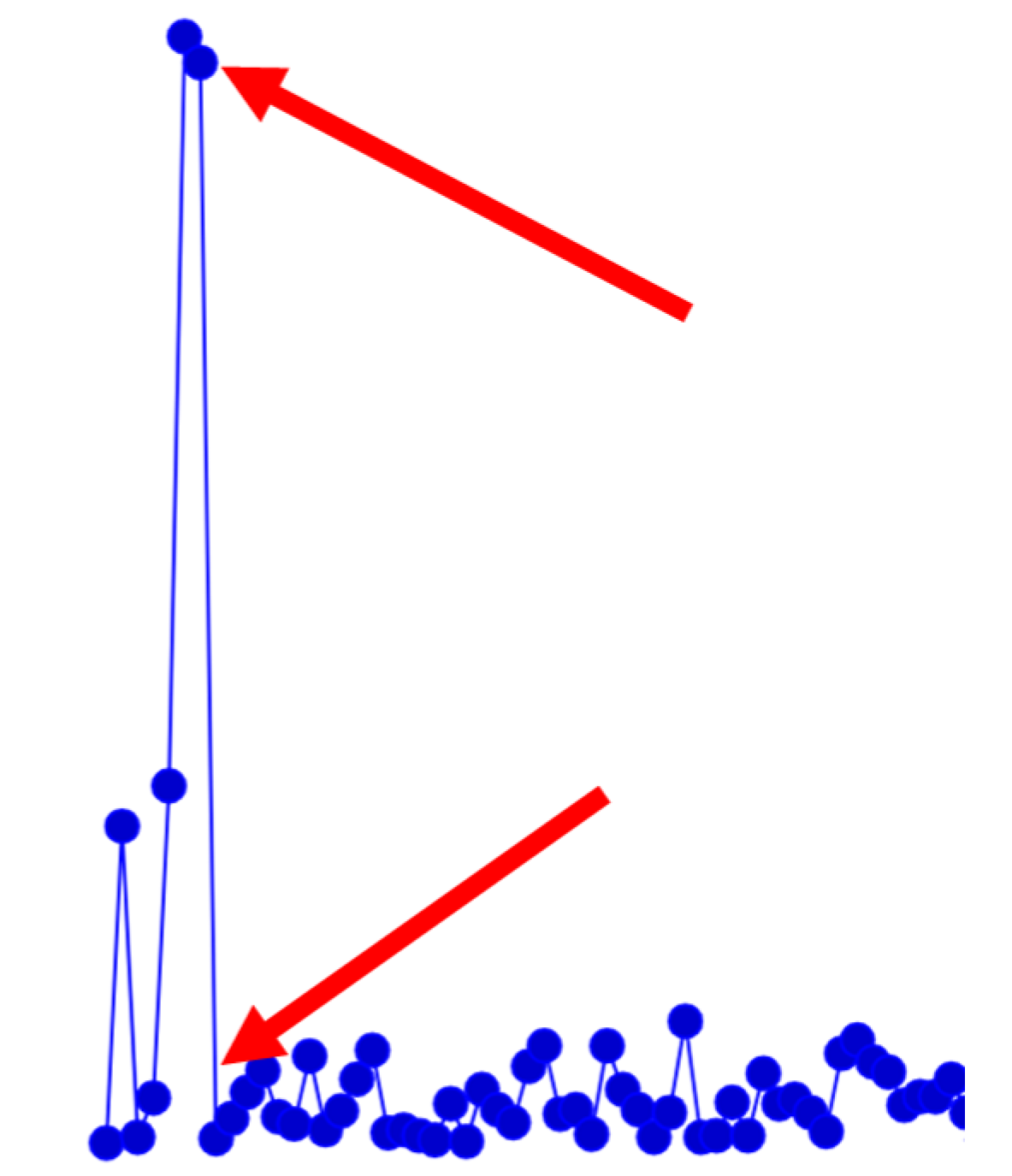

3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Fax, J.; Murray, R. Information Flow and Cooperative Control of Vehicle Formations. IEEE Trans. Autom. Control 2004, 49, 1465–1476.

2. Lončar, I.; Babić, A.; Arbanas, B.; Vasiljević, G.; Petrović, T.; Bogdan, S.; Mišković, N. A heterogeneous robotic swarm for long-term monitoring of marine environments. Appl. Sci. 2019, 9, 1388.

3. Schmuck, P.; Scherer, S.A.; Zell, A. Hybrid Metric-Topological 3D Occupancy Grid Maps for Large-scale Mapping. IFAC-PapersOnLine 2016, 49, 230–235.

4. Schmuck, P.; Chli, M. CCM-SLAM: Robust and efficient centralized collaborative monocular simultaneous localization and mapping for robotic teams. J. Field Robot. 2019, 36, 763–781.

5. Jadbabaie, A.; Jie, L.; Morse, A. Coordination of groups of mobile autonomous agents using nearest neighbor rules. IEEE Trans. Autom. Control 2003, 48, 988–1001.

6. Lin, Z.; Broucke, M.; Francis, B. Local Control Strategies for Groups of Mobile Autonomous Agents. IEEE Trans. Autom. Control 2004, 49, 622–629.

7. Andrade-Cetto, J.; Sanfeliu, A. The Effects of Partial Observability in SLAM. In Proceedings of the IEEE International Conference on Robotics and Automation, New Orleans, LA, USA, 26 April–1 May 2004.

8. Mur-Artal, R.; Montiel, J.M.; Tardos, J.D. ORB-SLAM: A Versatile and Accurate Monocular SLAM System. IEEE Trans. Robot. 2015, 31, 1147–1163.

9. Campos, C.; Elvira, R.; Rodriguez, J.J.; Montiel, J.M.; Tardos, J.D. ORB-SLAM3: An Accurate Open-Source Library for Visual, Visual-Inertial, and Multimap SLAM. IEEE Trans. Robot. 2021, 37, 1874–1890.

10. Wang, G.; Gao, X.; Zhang, T.; Xu, Q.; Zhou, W. LiDAR Odometry and Mapping Based on Neighborhood Information Constraints for Rugged Terrain. Remote Sens. 2022, 14, 5229.

11. Yuan, M.; Li, X.; Cheng, L.; Li, X.; Tan, H. A Coarse-to-Fine Registration Approach for Point Cloud Data with Bipartite Graph Structure. Electronics 2022, 11, 263.

12. Muglikar, M.; Zhang, Z.; Scaramuzza, D. Voxel Map for Visual SLAM. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May 2020–31 August 2020.

13. Siva, S.; Nahman, Z.; Zhang, H. Voxel-based representation learning for place recognition based on 3D point clouds. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 8351–8357.

14. Bailey, T.; Durrant-Whyte, H. Simultaneous Localization and Mapping (SLAM): Part II. IEEE Robot. Autom. Mag. 2006, 13, 108–117.

15. Smith, R.; Self, M.; Cheeseman, P. Estimating Uncertain Spatial Relationships in Robotics. Mach. Intell. Pattern Recognit. 1988, 5, 435–461.

16. Leonard, J.; Durrant-Whyte, H. Mobile robot localization by tracking geometric beacons. IEEE Trans. Robot. Autom. 1991, 7, 376–382.

17. Saeedi, S.; Trentini, M.; Seto, M.; Li, H. Multiple-Robot Simultaneous Localization and Mapping: A Review. J. Field Robot. 2016, 33, 3–46.

18. Saha, A.; Dhara, B.C.; Umer, S.; AlZubi, A.A.; Alanazi, J.M.; Yurii, K. CORB2I-SLAM: An Adaptive Collaborative Visual-Inertial SLAM for Multiple Robots. Electronics 2022, 11, 2814.

19. Dube, R.; Gawel, A.; Sommer, H.; Nieto, J.; Siegwart, R.; Cadena, C. An online multi-robot SLAM system for 3D LiDARs. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Vancouver, BC, Canada, 24–28 September 2017; pp. 1004–1011.

20. Zhang, S.; Zheng, L.; Tao, W. Survey and Evaluation of RGB-D SLAM. IEEE Access 2021, 9, 21367–21387.

21. Deng, W.; Huang, K.; Chen, X.; Zhou, Z.; Shi, C.; Guo, R.; Zhang, H. Semantic RGB-D SLAM for Rescue Robot Navigation. IEEE Access 2020, 8, 221320–221329.

22. Runz, M.; Buffier, M.; Agapito, L. MaskFusion: Real-Time Recognition, Tracking and Reconstruction of Multiple Moving Objects. In Proceedings of the 2018 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Munich, Germany, 16–20 October 2018; pp. 10–20.

23. Jiang, J.; Wang, J.; Wang, P.; Chen, Z. POU-SLAM: Scan-to-model matching based on 3D voxels. Appl. Sci. 2019, 9, 4147.

24. Cattaneo, D.; Vaghi, M.; Valada, A. LCDNet: Deep Loop Closure Detection and Point Cloud Registration for LiDAR SLAM. IEEE Trans. Robot. 2021, 38, 2074–2093.

25. Xiang, H.; Shi, W.; Fan, W.; Chen, P.; Bao, S.; Nie, M. FastLCD: A fast and compact loop closure detection approach using 3D point cloud for indoor mobile mapping. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102430.

26. Liu, K.; Ou, H. A Light-Weight LiDAR-Inertial SLAM System with Loop Closing. arXiv 2022, arXiv:2212.05743.

27. Lewis, J.; Lima, P.U.; Basiri, M. Collaborative 3D Scene Reconstruction in Large Outdoor Environments Using a Fleet of Mobile Ground Robots. Sensors 2022, 23, 375.

28. Luo, J.; Shu, X.; Zhai, Y.; Fu, X.; Ding, B.; Xu, J. A Fast and Robust Solution for Common Knowledge Formation in Decentralized Swarm Robots. J. Intell. Robot. Syst. 2022, 106, 68.

| Title | Methodology | Key Findings | Challenges |
|---|---|---|---|
| A Versatile and Efficient Stereo Visual Inertial SLAM System [8] | Stereo visual-inertial SLAM system. | Outperformed state-of-the-art SLAM systems on several datasets while being computationally efficient. | Difficulty in handling featureless environments and robustness to lighting changes. |
| ORB-SLAM3: An Accurate Open-Source Library for Visual, Visual–Inertial, and Multi-Map SLAM [9] | Visual, visual–inertial, and multi-map SLAM system. | Achieved state-of-the-art performance on several datasets and provided a unified SLAM framework. | Difficulty in handling large-scale environments and varying camera configurations. |
| Real-time LiDAR Odometry and Mapping on Variable Terrain using Surface Normal Voxel Representation [10] | LiDAR odometry and mapping using surface normal voxel representation. | Improved accuracy of LiDAR odometry and mapping on variable terrain compared to traditional approaches. | Difficulty in handling dynamic environments and varying LiDAR configurations. |
| A Hierarchical Point Cloud Registration Method Based on Coarse-to-Fine Voxelisation [11] | Hierarchical voxel-based point cloud registration method. | Improved accuracy and robustness of point cloud registration compared to existing methods. | Limited scalability to large-scale point clouds and difficulty in handling featureless areas. |
| Voxel Map for Visual SLAM [12] | Voxel hashing-based monocular visual-inertial SLAM system. | Achieved state-of-the-art performance on several datasets and improved robustness to camera motion and occlusions. | Difficulty in handling large-scale environments and varying lighting conditions. |
| Voxel-Based Representation Learning for Place Recognition Based on 3D Point Cloud [13] | Place recognition based on voxel grid. | The proposed voxel-based representation learning method is effective for place recognition using 3D point clouds. The method outperforms state-of-the-art methods in terms of accuracy and robustness to changes in viewpoint and lighting conditions. The method can be trained end-to-end using only raw 3D point clouds without the need for additional preprocessing steps. | Limited to place recognition tasks and may not be suitable for other applications that require a more detailed understanding of the 3D environment, such as object recognition or scene reconstruction. |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Balding, S.; Gning, A.; Cheng, Y.; Iqbal, J. Information Rich Voxel Grid for Use in Heterogeneous Multi-Agent Robotics. Appl. Sci. 2023, 13, 5065. https://doi.org/10.3390/app13085065
