Abstract

To address the low efficiency and degraded performance of intelligent parking in complex environments, this study presents an end-to-end intelligent parking control model based on a Convolutional Neural Network–Long Short-Term Memory (CNN-LSTM) architecture that fuses multi-source sensory information to improve decision-making and adaptability. The model produces real-time parking control decisions by extracting spatiotemporal features from 360-degree panoramic images and ultrasonic sensor distance measurements. To broaden the dataset’s coverage of real-world environments, a data collection platform was developed that combines the PreScan simulation platform with actual parking environments, and a parking dataset covering various environment types was constructed. A deep learning model that integrates a Convolutional Neural Network and a Long Short-Term Memory network in a parallel configuration was designed to improve horizontal and vertical control in intelligent parking systems. The sliding window parameters were optimized according to the characteristics of the parking environment, and transfer learning was employed for secondary model training to improve the prediction accuracy. Simulation tests were performed to assess the system’s robustness. Results from the actual-environment experiment showed that the end-to-end intelligent parking model substantially outperformed the existing approaches, improving parking efficiency and effectiveness in complex environments.

1. Introduction

With increased car ownership, parking spaces have become increasingly limited, and parking environments have grown more complex. According to urban traffic data [1], vehicles are prone to collisions during parking, with such accidents accounting for 68% of the total. In addition, self-inflicted accidents make up around 23% of total accidents. Parking in complex environments is therefore challenging for inexperienced drivers. With advancements in intelligent driving technology, developing intelligent parking systems that use advanced technologies and optimization algorithms to relieve drivers of parking difficulties has become a popular research area. In recent years, researchers have proposed various approaches to intelligent parking, which fall into two main types: planning-and-tracking methods and artificial intelligence methods. Planning-and-tracking methods achieve parking through the mode of “environment perception–path planning–control execution.” Most current path planning is performed by curve combination or polynomial interpolation [2,3], but the planned paths suffer from curvature discontinuities. Reference [4] used the Bezier curve to construct the parking path, which solved the curvature discontinuity problem but made path-tracking control more difficult. Zhdanov et al. [5] employed an analytical method to design online paths that combine circular arcs and straight lines, resulting in fast computation. However, the method requires highly maneuverable vehicles and can be challenging to control in confined spaces. Patrik Zips et al. [6] proposed designing collision-free trajectories specifically for parking in tight spaces; this method continuously optimizes the paths to achieve parking planning. 
However, when the parking space is small, the method is computationally intensive, which makes the planning process time-consuming. Reference [7] summarizes various parking path-tracking control methods, such as sliding mode control. Still, these methods tend to fail in more complex parking slot environments, and they cannot draw on the experience of skilled drivers. Artificial intelligence methods make the controller imitate the driver’s operating behavior during parking through intelligent algorithms [8], and researchers worldwide have studied intelligent parking path planning and tracking control using algorithms such as the ant colony algorithm, reinforcement learning, and fuzzy control [9,10,11,12]. Reference [13] uses an ant colony algorithm that makes reasonable use of the pheromone heuristic and expectation factors and improves the pheromone volatility factor. This algorithm features positive feedback, strong robustness, and good adaptability. Still, it converges slowly, and the resulting paths are tortuous and do not easily satisfy the vehicle’s kinematic constraints. Reinforcement learning (RL) is another path planning method, in which an agent is trained to explore possible paths in the environment by trial and error, without requiring a large amount of driver experience data; the reward function rewards or penalizes the agent’s state in the environment to obtain the corresponding target path [14]. 
Jiang Hui [15] built a fuzzy inference system for parallel parking by organizing experimental parking data and analyzing driver parking data with the help of adaptive neural networks. However, designing a fuzzy controller still requires a large amount of driver parking data, and manually screening the valid data is time-consuming. Fuzzy control for parking trajectory planning has further shortcomings: the initial area is unclear, the control accuracy is not high, and the rule design is tedious and complicated.

In summary, the existing intelligent parking algorithms still have many shortcomings, which limit the effectiveness of these methods in practical applications and provide directions for future research to improve and optimize.

In recent years, the integration of artificial intelligence and intelligent driving has deepened, and end-to-end deep learning methods have received much attention in intelligent driving. An end-to-end deep learning-based intelligent driving network model exhibits the following features: it operates in an “environment perception-control execution” mode for vehicle motion planning and control, uses supervised learning to learn human driving actions and behaviors, and directly maps sensor data to control variables such as the accelerator pedal, steering wheel, and brake pedal. This approach effectively reduces system complexity and improves real-time performance [16]. Dosovitskiy, Ros, Codevilla, Lopez, and Koltun proposed using Convolutional Neural Networks (CNNs) to process image data for autonomous driving [17]. Xu et al. further proposed a Fully Convolutional Network–Long Short-Term Memory (FCN-LSTM) architecture to enhance the model’s understanding of environments and predict discrete or continuous vehicle actions [18]. Hecker et al. applied end-to-end learning with surround-view cameras [19]. Pinpin Qin et al. proposed a CNN-LSTM-based car-following model that captures the latent relationship between the leading and following vehicles during car following; compared with classic car-following models (the LSTM model and the intelligent driver model), the proposed model showed better accuracy and a better ability to learn heterogeneity [20].

The end-to-end deep learning model directly maps input data to control commands, streamlining the process by avoiding division into multiple sub-tasks. This simplified structure enhances the system’s robustness. Deep learning enables robust feature extraction and representation learning, eliminating the need for manual feature engineering and reducing implementation complexity. As a data-driven approach, the model automatically learns control strategies, minimizing human influence and handling complex environments effectively. With its portability across various vehicle types and sensor configurations, the end-to-end model boasts excellent adaptability for diverse applications.

However, most current research on end-to-end intelligent driving control targets regular road environments. Applying these methods to intelligent parking faces difficulties in several respects, and related research and applications have not yet been reported. The main reasons are as follows:

- The parking environment is more complex than the regular road environment. Parking spaces are narrow and obstacles are irregular, so parking requires more accurate perception of close-range obstacles. The LiDAR and millimeter-wave radar sensors currently used in intelligent road driving are unsuitable for detecting objects close to the vehicle. Ultrasonic radar, which is widely used in intelligent parking, senses close objects well, but its directionality is poor, so it does not easily meet the input requirements of end-to-end intelligent driving models.

- Compared with driving on the road, intelligent parking is more concerned with the environmental features at the side and rear of the vehicle. Compared with the monocular camera used in intelligent driving, the fisheye camera has a wider field of view and is better suited to the 360° surround-view parking function. However, the training and inference efficiency and the prediction accuracy of the end-to-end intelligent driving model are significantly reduced because parking slot line pixels make up a small proportion of the whole image and because the processed image suffers from distortion-correction artifacts and image stretching.

This paper builds an intelligent parking control model based on multi-source perception information fusion and end-to-end deep learning. It addresses the problem of sensing complex environments by fusing information from the vehicle’s surround-view system and ultrasonic sensors. A parallel combination of CNN and LSTM networks captures the temporal dependencies of the environment features and the coding sequence data, which improves parking control accuracy in complex environments and better realizes “multiple inputs–multiple outputs.” The end-to-end intelligent parking neural network model is trained using transfer learning to bridge the difference between the simulated and actual environments. Finally, a comparison test on an actual vehicle is conducted.

2. End-to-End Intelligent Parking Model Architecture

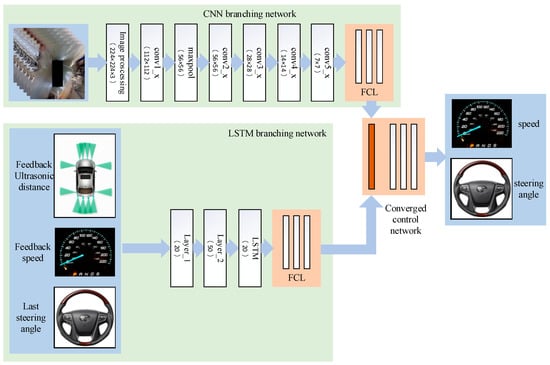

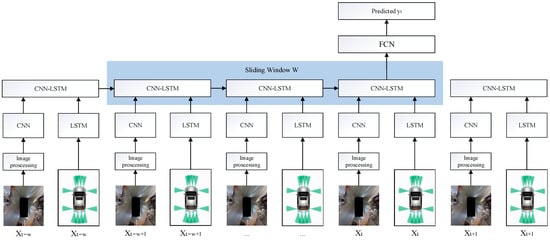

The end-to-end neural network model based on multi-source sensory information fusion consists mainly of a spatial feature extraction sub-network, a temporal feature extraction sub-network, and a multi-task prediction sub-network; the architecture is shown in Figure 1. The spatial feature extraction sub-network uses a Convolutional Neural Network (CNN) to extract spatial features and semantic information from the environment. The temporal feature extraction sub-network captures the continuity of the environmental information and control decisions through a Long Short-Term Memory (LSTM) network. The multi-task prediction sub-network fuses the scene features and the coding sequence features (steering wheel angle, speed) using the feature cascade (concatenation) method to achieve end-to-end horizontal and vertical control for intelligent parking. The input to the spatial feature extraction sub-network is a sequence of single-frame surround-view images. For the temporal feature extraction sub-network, the inputs are the ultrasonic distance, car speed, and steering wheel angle sequences, each containing data from the previous w timestamps. A timestamp is a specific point in time at which data are recorded. This enables the temporal feature extraction sub-network to process time-varying data and helps the model account for changes in driving conditions and behaviors over time. The input surround-view image of the spatial feature extraction sub-network is 224 × 224 × 3, and the Convolutional Neural Network used is ResNet50. 
The ResNet50 network extracts the image features, which are reduced in dimensionality by three fully connected layers and then fused with the steering wheel angle and vehicle speed sequence features extracted by the temporal feature extraction sub-network. After the feature fusion layer and the fully connected layer of the multi-task prediction sub-network, the predicted steering wheel angle and vehicle speed are output to complete the horizontal and vertical control of the vehicle.

Figure 1.

The architecture of CNN-LSTM intelligent parking algorithm.

2.1. CNN-LSTM Neural Network Action Layers

The CNN-LSTM neural network model constructed in this paper mainly consists of a convolutional layer, a pooling layer, an LSTM layer, a feature fusion layer, an activation layer, a fully connected layer, and a dropout layer. The convolutional layer is used to extract image features, and the pooling layer is used to effectively reduce the size of the node matrix, reduce the number of feature dimensions, and compress the number of data and parameters. The function of the LSTM layer is to identify and capture the relationships between the temporal features of the vehicle control data sequence and the ultrasonic sensor data sequence. The role of the feature fusion layer is mainly to fuse two or more feature vectors and generate new semantic features. The primary purpose of the activation layer is to enhance the representation and learning ability of the neural network through nonlinear transformations.

- Spatial feature extraction sub-network: the convolutional layers and network structure are shown in Table 1. The sub-network uses a Convolutional Neural Network to extract high-level semantic features from the input single-frame surround-view image, including the slot lines, obstacles, and the location of the parking vehicle and the surrounding environment. The input image size is 224 × 224 × 3, and the Convolutional Neural Network used is ResNet50. The initial convolutional layer employs 64 kernels of size 7 × 7 with a stride of two, giving an output size of 112 × 112, and is followed by a 3 × 3 max pooling operation with a stride of two. Four residual modules from ResNet50 then extract features and generate a 1000-dimensional feature vector. Three fully connected layers reduce this to 128 dimensions before it is fed into the multi-task prediction sub-network.

Table 1. ResNet50 network structure.

- Temporal feature extraction sub-network: vehicle control commands can be generated directly from camera information via CNNs, but this mapping does not match how a human drives a vehicle. A human driver not only judges the driving intention from the observed view but also considers the historical state of the car. Because the driving decisions of intelligent parking are continuous in the time dimension, a recurrent neural network is introduced. Recurrent neural networks have powerful information mining and deep representation capabilities [21,22]. The temporal feature extraction sub-network captures the temporal dependencies in the steering wheel angle data; the value of w is the number of discrete time steps considered, which lets the sub-network analyze the time-varying relationships among the vehicle speed, steering wheel angle, and ultrasonic distances. The sequences first pass through one LSTM layer with 128 dimensions and then through two fully connected layers for further feature extraction. The outputs are 128-dimensional time series features, matching the 128-dimensional output of the spatial feature extraction sub-network, and are passed to the multi-task prediction sub-network.

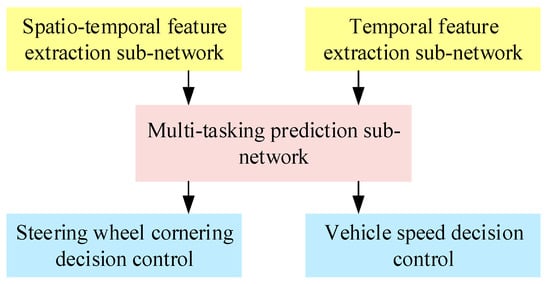

- Multi-task prediction sub-network: in end-to-end intelligent driving control, predicting the steering wheel angle and the vehicle speed is a multi-task learning problem. Multi-task learning is a form of inductive transfer that improves generalization by sharing representations among related tasks, so that the network generalizes better on the original task [23]. The vehicle speed and steering angle predictions are output by fusing the spatial and temporal features to achieve horizontal and vertical vehicle control. The proposed multi-task prediction sub-network is shown in Figure 2.

Figure 2. Multi-tasking prediction sub-network.
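The layer sizes quoted for the spatial sub-network can be checked with the standard convolution output-size formula. The sketch below is illustrative only: the paddings (3 for the 7 × 7 convolution, 1 for the 3 × 3 pooling) are the usual ResNet50 values and are assumed here, as is the reading of the feature cascade as a plain concatenation of the two 128-dimensional feature vectors. The names `conv_output_size` and `cascade` are hypothetical helpers.

```python
def conv_output_size(size: int, kernel: int, stride: int, padding: int) -> int:
    """Spatial output size of a convolution or pooling layer (floor mode)."""
    return (size + 2 * padding - kernel) // stride + 1


# Stem of ResNet50 as described in the text; the paddings are assumed, not stated.
after_conv = conv_output_size(224, kernel=7, stride=2, padding=3)
after_pool = conv_output_size(after_conv, kernel=3, stride=2, padding=1)

# Feature widths reported for the two branches.
SPATIAL_DIM = 128   # ResNet50 output reduced by three fully connected layers
TEMPORAL_DIM = 128  # LSTM output after two fully connected layers


def cascade(spatial: list, temporal: list) -> list:
    """Fuse two feature vectors by concatenation (assumed meaning of 'cascade')."""
    return list(spatial) + list(temporal)


# Placeholder feature vectors; only their lengths matter for this check.
fused = cascade([0.0] * SPATIAL_DIM, [0.0] * TEMPORAL_DIM)
print(after_conv, after_pool, len(fused))
```

Under these assumptions, the stem halves the resolution twice and the fused vector entering the multi-task prediction sub-network has twice the width of each branch output.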

2.2. Activation Function, Loss Function, and Training Algorithm

The ELU (Exponential Linear Unit) [24] is used as the activation function, as defined in Equation (1):

$$f(x) = \begin{cases} x, & x > 0 \\ \alpha\left(e^{x} - 1\right), & x \le 0 \end{cases} \tag{1}$$

where $x$ is the weighted sum of the inputs and $\alpha$ is an adjustable hyperparameter, usually set to 1, that determines the value $-\alpha$ which the function approaches when the input is a large negative number. The role of the fully connected layer is twofold: first, dimensional transformation, which reduces the high-dimensional feature vector to a lower dimension; second, integration of the extracted image features to obtain their high-level meaning. The primary purpose of the dropout layer is to address the overfitting that emerges during deep network training.
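As a quick numerical illustration of the ELU activation, the following minimal sketch evaluates it for a few inputs. The function name `elu` and the sample values are chosen here for illustration; the default of 1 for the hyperparameter follows the text.

```python
import math


def elu(x: float, alpha: float = 1.0) -> float:
    """Exponential Linear Unit: identity for x > 0, alpha * (exp(x) - 1) otherwise."""
    if x > 0:
        return x
    # expm1 computes exp(x) - 1 accurately for small |x|.
    return alpha * math.expm1(x)


# Illustrative inputs: a large negative value, a small negative value, zero, a positive value.
samples = [-50.0, -1.0, 0.0, 2.5]
outputs = [elu(x) for x in samples]
print(outputs)
```

For large negative inputs the output saturates near the negative of the hyperparameter, while positive inputs pass through unchanged.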

The decision values output by the CNN-LSTM neural network model constructed in this paper are continuous, so the task is a regression problem. The mean squared error (MSE) loss function is therefore used, as defined in Equation (2):

$$\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(\hat{y}_i - y_i\right)^2 \tag{2}$$

where $n$ is the sample size; $\hat{y}_i$ is the output prediction of the $i$th sample; and $y_i$ is the corresponding actual value.
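A minimal sketch of the mean squared error follows. Because the model predicts both the steering wheel angle and the vehicle speed, the sketch also adds the two per-task losses into a single value. The numbers are made-up illustrations rather than data from the experiments, and `mse` is a hypothetical helper name.

```python
def mse(predictions, targets):
    """Mean squared error between two equally long, non-empty sequences."""
    if len(predictions) != len(targets) or len(predictions) == 0:
        raise ValueError("predictions and targets must be non-empty and equally long")
    squared_errors = [(p - t) ** 2 for p, t in zip(predictions, targets)]
    return sum(squared_errors) / len(squared_errors)


# Made-up normalized values for three samples of each task.
steering_loss = mse([0.10, -0.20, 0.05], [0.12, -0.25, 0.00])
speed_loss = mse([0.30, 0.28, 0.25], [0.30, 0.30, 0.20])

# Combined loss taken as the plain sum of the two task losses.
total_loss = steering_loss + speed_loss
print(steering_loss, speed_loss, total_loss)
```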

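The Nadam (Nesterov-accelerated Adaptive Moment Estimation) algorithm [25] is used as the training algorithm. It computes an adaptive learning rate for each parameter, constrains the learning rate more strongly, learns faster, and achieves better optimization results. Its update rule is given in Equation (3):

$$\begin{aligned}
g_t &= \nabla_{\theta} J(\theta_{t-1}) \\
m_t &= \beta_1 m_{t-1} + (1-\beta_1)\, g_t \\
\bar{m}_t &= \beta_1 \hat{m}_t + \frac{(1-\beta_1)\, g_t}{1-\beta_1^{t}} \\
v_t &= \beta_2 v_{t-1} + (1-\beta_2)\, g_t^{2} \\
\hat{m}_t &= \frac{m_t}{1-\beta_1^{t}}, \qquad \hat{v}_t = \frac{v_t}{1-\beta_2^{t}} \\
\theta_t &= \theta_{t-1} - \frac{\eta}{\sqrt{\hat{v}_t}+\epsilon}\, \bar{m}_t
\end{aligned} \tag{3}$$

where $\theta$ is the network weight; $g$ is the gradient of the loss $J$, and the subscript $t$ indicates the $t$th iteration; $m_t$ is the first-order moment, i.e., the expected value of the gradient, and $\bar{m}_t$ indicates the Nesterov acceleration value of the first-order moment; $v_t$ is the second-order moment, i.e., the expected value of the gradient squared; $\hat{m}_t$ indicates the corrected first-order moment; $\hat{v}_t$ indicates the corrected second-order moment; $\beta_1$ and $\beta_2$ indicate the decay rates; $\eta$ indicates the learning rate; and $\epsilon$ indicates a very small constant. $\eta$, $\beta_1$, $\beta_2$, and $\epsilon$ are hyperparameters set before the start of training, and they do not need to be learned by training.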
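To make the Nadam update concrete, the sketch below applies a commonly used simplified formulation of the algorithm to a toy one-dimensional objective. This is an assumption-laden illustration, not the training code of this paper: the exact variant used by a given framework may differ, the objective $\theta^2$ is made up, and `nadam_step` is a hypothetical helper. The default hyperparameters are the values reported in Section 4.2.

```python
import math


def nadam_step(theta, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-7):
    """One Nadam update for a scalar weight (simplified textbook form)."""
    m = beta1 * m + (1.0 - beta1) * grad          # first-order moment
    v = beta2 * v + (1.0 - beta2) * grad * grad   # second-order moment
    m_hat = m / (1.0 - beta1 ** t)                # bias-corrected first moment
    v_hat = v / (1.0 - beta2 ** t)                # bias-corrected second moment
    # Nesterov look-ahead: blend the corrected moment with the current gradient.
    m_bar = beta1 * m_hat + (1.0 - beta1) * grad / (1.0 - beta1 ** t)
    theta = theta - lr * m_bar / (math.sqrt(v_hat) + eps)
    return theta, m, v


# Toy objective J(theta) = theta^2 with gradient 2 * theta (illustrative only).
theta, m, v = 1.0, 0.0, 0.0
for t in range(1, 201):
    grad = 2.0 * theta
    theta, m, v = nadam_step(theta, grad, m, v, t)
print(theta)
```

With a learning rate this small, the weight moves only gradually toward the minimum at zero, which is consistent with the need for many training epochs.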

3. Dataset Collection

3.1. Simulation Training Data Collection

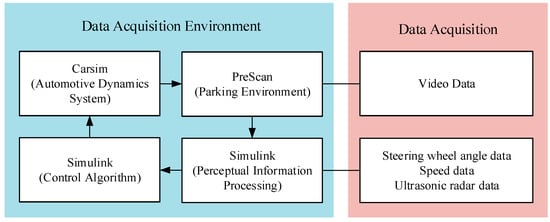

To pre-train the end-to-end intelligent parking model and obtain a large amount of parking data, this paper constructs a simulated parking environment using the Carsim/PreScan/Simulink framework. Figure 3 shows the flow chart of simulation data acquisition. This approach simulates an intelligent parking process that closely resembles actual parking environments. The primary advantages of using a simpler simulation environment include the following:

Figure 3.

Simulation data acquisition flowchart.

- Reduced computational complexity: This allows for faster model training, which is particularly beneficial during the initial model development and testing stage.

- Faster convergence: A simple environment with fewer variables can help the model converge more quickly during pre-training, enabling more effective fine-tuning of model parameters and performance evaluation.

- Ease of implementation: A simpler simulation environment is easier to build and enables more extensive data collection, saving time and resources during development.

In this study, the driver’s parking control within the simulated parking environment is performed using a Logitech G29 driving simulator. Surround views of the parking process are recorded with PreScan’s integrated video recording function, while the car dynamics model is built in Carsim and replaces the built-in car model in PreScan. The steering wheel angle, vehicle speed, and ultrasonic radar distance data of the simulated car are recorded through Simulink. Each frame of surround-view and ultrasonic sensor data collected by the simulation platform is automatically matched with the corresponding driving decision, eliminating the need for additional space–time synchronization.

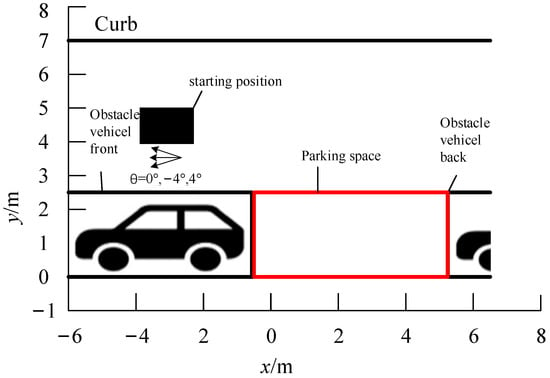

Skilled drivers collected the experimental data by manually driving the main vehicle in the simulator (Figure 4). The resolution of the captured screenshots was set to 540 × 960, with a frame rate of 20 fps. To enhance the diversity of the dataset, multiple skilled drivers parked in various environments from different starting points over multiple parking experiments. As depicted in Figure 5, the black area is the starting position of parking, and the arrows point to three different starting angles. This data collection process yielded a total of 648,350 frames of parking data.

Figure 4.

(a) Simulated parking environment for capturing intelligent parking driving models. (b) Driving simulator.

Figure 5.

Starting area in the simulated parallel parking slot.

The dataset was divided into training, validation, and test sub-sets in an 8:1:1 ratio to evaluate the model’s performance during training and assess its ability to generalize to new, unseen data.

Although the simplified simulated parking environment offers several advantages, it may only partially capture the intricacies of actual parking environments. To address this limitation, this paper employs a two-step approach: first, the intelligent parking model is pre-trained in the simulation and then retrained using transfer learning methods with a dataset from actual parking environments and more complex environments. This strategy ensures that the end-to-end intelligent parking model achieves high accuracy and better generalization when applied in real-world settings.

3.2. Actual Car Training Data Collection

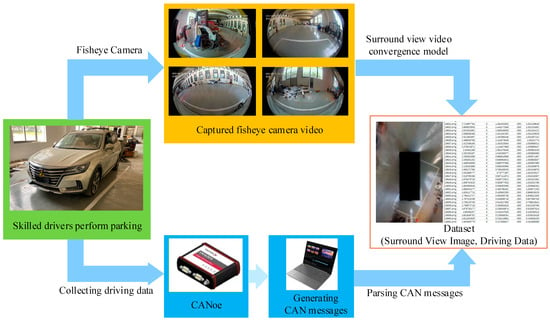

To improve the generalization of the end-to-end intelligent parking model, actual parking environment data are needed to retrain the pre-trained model by transfer learning. The acquisition process is shown in Figure 6. During data acquisition, video of the front, rear, left, and right sides of the car is collected in real time at 20 fps by four fisheye cameras installed on the front hood, the trunk, and the left and right rearview mirrors, respectively. A script then synthesizes the surround-view video and splits it into single-frame surround-view pictures. Meanwhile, the steering wheel angle, car speed, and ultrasonic radar data are recorded with CANoe through CANalyzer 7.5. So that the surround-view images correspond one-to-one with the CANoe data, the CANoe data collection frequency is also set to 20 Hz. The software then generates and parses the CAN messages, and the synthesized surround-view frames and the CANoe data are finally compiled into a dataset using Excel.

Figure 6.

Actual car dataset collection process.

However, when making the actual parking environment dataset, it is necessary to consider the temporal and spatial synchronization of the surround-view parking image sequence and the car control data sequence, and two synchronization methods are chosen in this paper:

- To ensure that the length of the sequence of the surround-view images captured by the fisheye camera and the data captured by CANoe are consistent, this paper proposes a comparison of the displacement data of both to achieve sequence length consistency. A 5 m straight line is designed in the actual environment to accomplish this, with ground markings at the start and endpoint. The surround-view system records the ground markings before each parking data acquisition, and the displacement data are obtained using CANoe.

- To ensure the proper alignment of the starting time for data interception, this paper proposes an initiation of the interception process at the beginning of the movement detected in the surround-view image and simultaneously at the commencement of the speed and displacement data captured by CANoe. This approach helps to synchronize and maintain the consistency of the data sequences from both sources, resulting in a more coherent and seamless integration.
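The start-time alignment described above can be sketched as follows. The sketch assumes that both streams are sampled at the same rate, that a per-frame motion indicator is already available for the image stream (how motion is detected in the images is not specified here), and that trimming both streams to a common length is acceptable. It does not reproduce the 5 m displacement check, and all names are hypothetical.

```python
def first_motion_index(values, threshold=0.0):
    """Index of the first sample whose magnitude exceeds the threshold, or None."""
    for index, value in enumerate(values):
        if abs(value) > threshold:
            return index
    return None


def align_streams(frames, frame_motion, can_records, can_speed):
    """Cut both streams at their motion onset and pair them sample by sample."""
    start_img = first_motion_index(frame_motion)
    start_can = first_motion_index(can_speed)
    if start_img is None or start_can is None:
        raise ValueError("no motion detected in one of the streams")
    frames = frames[start_img:]
    can_records = can_records[start_can:]
    # Keep only the overlapping part so every frame has exactly one CAN record.
    length = min(len(frames), len(can_records))
    return list(zip(frames[:length], can_records[:length]))


# Made-up example: the camera starts recording one sample earlier than the CAN log.
frames = ["f0", "f1", "f2", "f3", "f4"]
frame_motion = [0, 0, 1, 1, 1]        # hypothetical per-frame motion indicator
can_records = ["c0", "c1", "c2", "c3"]
can_speed = [0.0, 0.3, 0.4, 0.5]      # speed samples from the CAN log
pairs = align_streams(frames, frame_motion, can_records, can_speed)
print(pairs)
```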

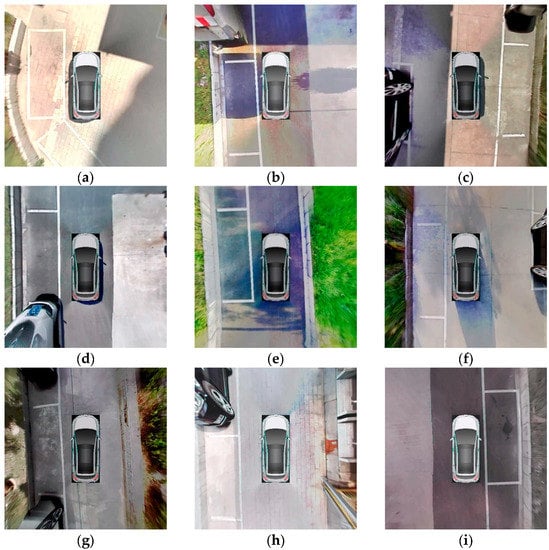

In this paper, datasets from a variety of actual parking environments are collected; an example of the surround-view images in the dataset is shown in Figure 7. Data are collected mainly in parking environments with obstacles and parking space lines, which ensures the complexity of the collection environment and thus improves the generalization of the trained model. This paper also takes into account the impact of weather on parking performance while collecting the data. Different weather conditions, such as sunny, cloudy, rainy, and snowy, affect visibility and road conditions, which can slow the model response or even prevent recognition of the parking space lines, and thus pose more challenges for the parking system. By gathering data in these varying environments, this study aims to create a more robust and adaptable end-to-end intelligent parking model that can handle a wide range of actual parking environments. The actual car parking dataset covers parallel parking on the left and right sides, strong and low light conditions, obstacles of different shapes in the front and back, inclined and level roads, and clear and fuzzy parking lines. Parking starts from three initial attitudes of 0°, −4°, and 4°; the range of the parking starting points is consistent with Figure 4. Several skilled drivers parked multiple times in multiple selected environments, yielding 560,106 frames of parking data.

Figure 7.

Parking environment for capturing intelligent parking driving models: (a) Strong light illumination and curbstone obstacles; (b) front-side vehicle obstacle and curbstone obstacles; (c) front and left-side vehicle obstacle and curbstone obstacles; (d) vehicle obstacle and curbstone obstacles and covered parking slot lines; (e) left- and right-side curbstone obstacles; (f) right-side vehicle obstacle and curbstone obstacles and fuzzy parking slot line; (g) rainy day; (h) sunny day; and (i) ideal parking environment.

3.3. Dataset Enhancement

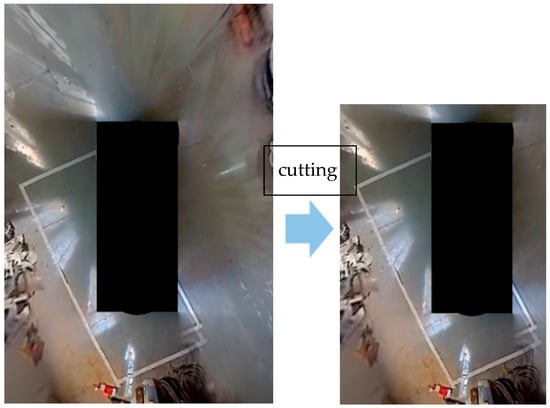

Compared with intelligent driving, the environment of intelligent parking is more complex and requires more accurate obstacle and boundary detection. There are many blind areas around the vehicle, so this paper uses the vehicle surround-view system to acquire image information around the vehicle. However, the surround-view image contains interfering pixels that degrade training, so it is pre-processed to improve the adaptability and generalization of the model in the intelligent parking environment. In left parallel parking, the parking slot line always lies in the bottom left of the surround-view image and moves slowly, so the input sequence is cropped to the bottom-left region, as shown in the right part of Figure 8. Cropping keeps the input size of the image sequence unchanged, avoids the low training efficiency caused by compressing the region of interest, reduces the interfering pixels in the input surround-view image, and improves the recognition accuracy of the parking slot lines and obstacles in the image.

Figure 8.

Cutting area.

Normalization of the input data (and the propagation data between network layers) during training can smooth and stabilize the training process of deep neural networks [26,27]. Data normalization is accomplished by the following Equation (4):

Here, $x$ is the original data, and $x'$ is the normalized data.
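As one concrete possibility, the sketch below normalizes values with min-max scaling. The choice of min-max scaling and the 0 to 255 pixel range are assumptions made for illustration; other schemes, such as standardization to zero mean and unit variance, are equally common. The name `min_max_normalize` is hypothetical.

```python
def min_max_normalize(values, lower=0.0, upper=255.0):
    """Scale values from the range [lower, upper] to [0, 1] (assumed scheme)."""
    if upper <= lower:
        raise ValueError("upper must be greater than lower")
    scale = upper - lower
    return [(value - lower) / scale for value in values]


# Made-up 8-bit pixel intensities.
pixels = [0, 64, 128, 255]
normalized = min_max_normalize(pixels)
print(normalized)
```

The same helper could be applied to steering wheel angles or ultrasonic distances by passing the corresponding value range instead of the pixel range.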

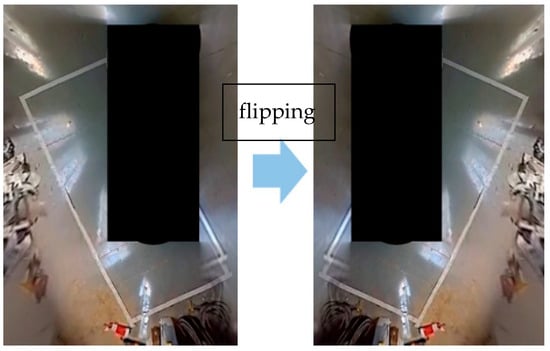

In addition, the dataset is augmented by horizontal flipping [28]. Random frames in the dataset are flipped horizontally to obtain their mirror frames; a flipped surround view is shown in the right part of Figure 9. This augmentation helps to make the end-to-end intelligent parking system more stable.

Figure 9.

Flip picture data.
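Horizontal flipping can be sketched on a small image stored as a nested list of pixel rows. How the control labels are treated for mirrored frames is not stated above; the sketch assumes that the steering wheel angle changes sign and the speed stays the same, which should be read as an illustrative assumption rather than the procedure actually used. Both function names are hypothetical.

```python
def flip_horizontal(image):
    """Mirror an image (list of pixel rows) about its vertical axis."""
    return [list(reversed(row)) for row in image]


def flip_sample(image, steering_angle, speed):
    """Mirror a training sample.

    Assumption: a mirrored scene calls for mirrored steering, so the steering
    angle is negated, while the speed is left unchanged.
    """
    return flip_horizontal(image), -steering_angle, speed


# Made-up 2 x 3 single-channel image and labels.
image = [[1, 2, 3],
         [4, 5, 6]]
flipped_image, flipped_angle, flipped_speed = flip_sample(image, 15.0, 1.2)
print(flipped_image, flipped_angle, flipped_speed)
```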

4. Experiment and Evaluation

4.1. Training Platform

The training was performed using TensorFlow 2.7 on a hardware platform with a 5.0 GHz Intel i9-9900K processor, 64 GB of DDR4 RAM at 3200 MHz, and an Nvidia GeForce RTX 2080 Ti GPU. TensorFlow is an open-source software platform that can execute computations on multiple GPUs or CPUs [29].

4.2. Model Parameters

The batch size, i.e., the number of samples propagated through the network at once, is set to 32. Compared with larger batches, small batches have been shown to help avoid local minima, improve generalization performance, and enhance optimization convergence during deep neural network training.

With w set to 3, the LSTM network processes w driving decisions in a sliding window: the driving decision at the current time is predicted using the past w-1 driving decisions, and the size of the sliding window w determines the memory length for making the current control decision (as shown in Figure 10). A larger w is more suitable for high-speed driving because it processes more information from the past, but it requires more time to train and to make driving decisions. Conversely, a smaller w gives a shorter memory, which can significantly improve the real-time performance of driving decisions and suits low-speed driving such as parking control.

Figure 10.

Schematic diagram of the model training process.
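The sliding-window construction can be illustrated with a short sketch. It follows one reading of the description, in which each window of w consecutive driving decisions is split into w-1 past decisions (the input) and the current decision (the prediction target); the record layout and the name `sliding_windows` are assumptions made for illustration.

```python
def sliding_windows(records, w=3):
    """Split a sequence into (history, target) pairs using a window of size w.

    Each window holds w consecutive records: the first w-1 form the history
    and the last one is the decision to predict.
    """
    if w < 2:
        raise ValueError("w must be at least 2")
    pairs = []
    for end in range(w - 1, len(records)):
        history = records[end - w + 1:end]
        target = records[end]
        pairs.append((history, target))
    return pairs


# Made-up (steering wheel angle, speed) decisions at consecutive timestamps.
decisions = [(0.0, 1.0), (5.0, 1.0), (10.0, 0.8), (15.0, 0.6)]
windows = sliding_windows(decisions, w=3)
for history, target in windows:
    print(history, "->", target)
```

A sequence of n records yields n - w + 1 windows, so a larger w both lengthens the history per sample and slightly reduces the number of training samples.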

The Nadam optimization algorithm is used to train the network. The initial learning rate is set to 0.001, $\beta_1$ is set to 0.9, $\beta_2$ is set to 0.999, $\epsilon$ is set to 1e-7, and the dropout probability is set to 0.5. A high learning rate may make it difficult for the network to converge, especially for networks trained with small batches. However, a learning rate of 0.001 is low, so many training epochs are needed to achieve convergence.

The maximum number of epochs is set to 300, and training is stopped early when the MSE loss is less than 0.02.
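The stopping rule can be sketched as a simple loop. The sketch assumes that the monitored quantity is the loss value and uses a made-up, geometrically decaying loss in place of real training; `train` and `run_epoch` are hypothetical names.

```python
def train(run_epoch, max_epochs=300, loss_threshold=0.02):
    """Run epochs until the loss drops below the threshold or the budget is used up."""
    history = []
    for epoch in range(1, max_epochs + 1):
        loss = run_epoch(epoch)
        history.append(loss)
        if loss < loss_threshold:
            break  # stop early once the target loss is reached
    return history


# Stand-in for one epoch of training: a loss that decays by 10% per epoch.
history = train(lambda epoch: 0.5 * 0.9 ** epoch)
print(len(history), history[-1])
```

If the threshold is never reached, the loop simply ends after the maximum number of epochs.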

4.3. Experiments and Analysis Based on Simulation Platform Data

To demonstrate the efficiency of the CNN-LSTM network model, two alternative networks are selected in this paper to compare the performance levels:

- ResNet50 and FCN: This network uses the ResNet50 architecture for feature extraction. The resulting feature vectors are then input into a fully connected network (FCN) equipped with a Softmax layer to predict driving decisions for parking.

- ResNet50, LSTM, and FCN: This network uses CNN-extracted visual features fused separately with LSTM-extracted coded sequence features in the feature fusion layer, and the FCN layer in the feature fusion layer is responsible for predicting parking driving decisions.

To measure the accuracy of the driving decisions output by the end-to-end intelligent parking model, the last 10% of the data samples in the dataset are selected as the validation set (the parking processes from which the validation data were collected are not included in training). The last 10% is used because a randomly selected 10% would be very similar to the training set, so the validation result would not reflect the generalization capability of the trained model.
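A minimal sketch of such a chronological hold-out is given below. It assumes the samples are already stored in recording order. The function name `chronological_split` is hypothetical.

```python
def chronological_split(samples, val_fraction=0.1):
    """Keep the last `val_fraction` of the samples as the validation set."""
    if not samples:
        raise ValueError("samples must not be empty")
    n_val = max(1, int(round(len(samples) * val_fraction)))
    split = len(samples) - n_val
    # No shuffling: frames from one parking run stay on one side of the split.
    return list(samples[:split]), list(samples[split:])


if __name__ == "__main__":
    train, val = chronological_split(list(range(100)))
    print(len(train), len(val), val[0])
```

A shuffled split would place neighbouring frames of the same parking maneuver in both sets, which is the similarity problem the paragraph above describes.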

A schematic of its training process is shown in Figure 10.

4.4. Comparison Analysis and Evaluation

Even when a skilled driver parks twice from the same starting point, the steering wheel and throttle operations are likely to differ. Therefore, achieving 100% accuracy is impossible even on the training data. In neural networks, the loss function is used to evaluate the difference between the predicted and actual values of the network model output. The smaller the loss value, the better the performance of the network model. The loss values in Table 2 are the sum of the two loss functions for the steering wheel angle and the car speed. From the data in the table, it can be concluded that the ResNet50, LSTM, and FCN network based on spatiotemporal feature fusion reduces the mean square error value from 0.163 to 0.033, and that the end-to-end intelligent parking model based on CNN-LSTM converges better in the simulation environment.
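The summed loss described above can be written as a short sketch. It assumes an unweighted sum of two mean square errors. The function names `mse` and `combined_loss` are hypothetical, and any normalization of the angle and speed values is left out.

```python
def mse(pred, target):
    """Mean square error between two equally long sequences."""
    if len(pred) != len(target) or not pred:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def combined_loss(steer_pred, steer_true, speed_pred, speed_true):
    """Sum of the steering-angle loss and the speed loss (equal weights assumed)."""
    return mse(steer_pred, steer_true) + mse(speed_pred, speed_true)


if __name__ == "__main__":
    print(combined_loss([1.0, 2.0], [1.0, 4.0], [0.5], [1.0]))
```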

Table 2.

Comparison of training and validation accuracy across different network structures.

The performance of the end-to-end intelligent parking model is evaluated in a simulation environment for steering wheel angle prediction. Table 3 shows the mean square error values of the steering wheel angle, which are 0.446 for the CNN model and 0.013 for the CNN-LSTM model. This indicates that the LSTM branch network improves the model's prediction of the steering wheel angle, which in turn improves the prediction accuracy of decision control.

Table 3.

Steering wheel angle mean square error loss.

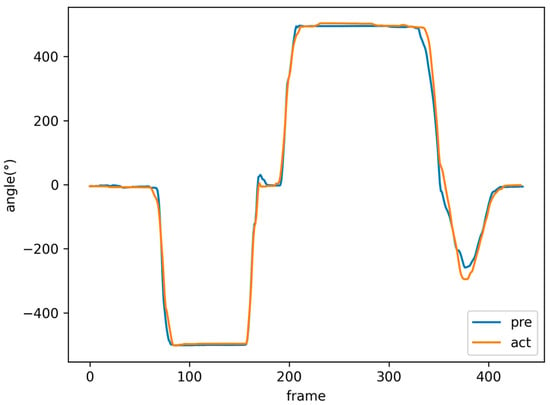

Figures 11 and 12 compare the predicted and actual steering wheel angle curves, where the orange curve is the actual curve and the blue curve is the predicted curve. Comparing the two curves shows that, overall, the prediction curve of the CNN model does not fit the actual curve well.

Figure 11.

Steering wheel turning angle curve predicted by CNN model.

Figure 12.

Steering wheel turning angle curve predicted by CNN-LSTM model.

The prediction curve of the CNN-LSTM model fits the actual curve better and is smoother with less jitter, so the model predicts the steering wheel turning angle more accurately.

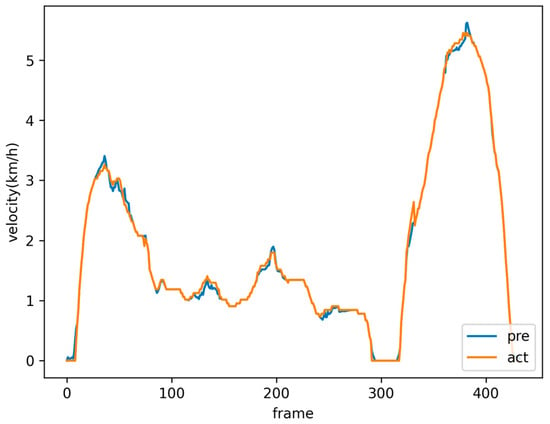

Next, the speed prediction performance was evaluated, and the mean square error value of the CNN-LSTM model for speed was calculated to be 0.00309. A total of 391 frames from the test set were selected to compare the speed prediction curve and the actual curve, as shown in Figure 13. It can be seen from the figure that the predicted curve fits the actual curve well.

Figure 13.

Vehicle speed curve predicted by CNN-LSTM model.

4.5. Experimentation and Analysis Based on Actual Car Training Data

After training the end-to-end intelligent driving model on the PreScan dataset, the well-performing model is saved and retrained using actual environment data for transfer learning [30]. The features learned in the first few layers of a neural network are usually generic, whereas the features learned in the later, deeper layers are specific to a particular task environment. The network layers that learn generic features are transferred, while those that learn specific features are finetuned. Finetuning adjusts the weight parameters of an already trained neural network model for a new learning task.

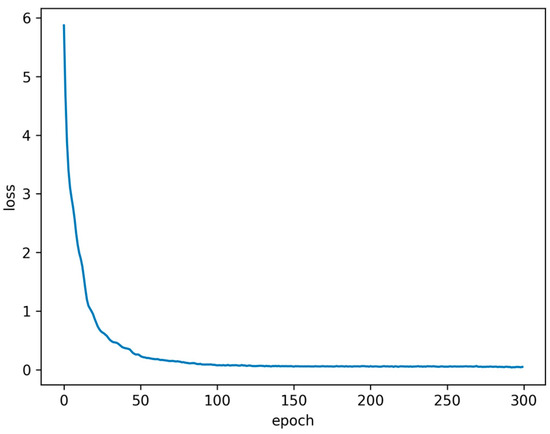

The actual dataset is used to finetune the end-to-end intelligent parking model trained on the PreScan dataset, using a transfer learning approach to close the gap between the actual and simulated environments. The finetuning strategy in this paper is to fix the weights of the ResNet50 and LSTM layers, reinitialize the fully connected layers, and retrain the network weights using backpropagation. The learning rate is set to 0.001, the training algorithm is Nadam, and the model is trained until the error no longer decreases. The loss function curve of the CNN-LSTM network trained on the actual parking environment dataset is shown in Figure 14; the neural network converges during training.
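The freeze-and-retrain idea can be illustrated with a deliberately small toy model. In the sketch below, a fixed ReLU feature map stands in for the frozen ResNet50 and LSTM layers, a linear head stands in for the fully connected layers, and plain gradient descent replaces Nadam to keep the example short. All names (`backbone_features`, `finetune_head`) and the toy data are assumptions for illustration, not the paper's implementation.

```python
import random


def backbone_features(x, frozen_weights):
    """Frozen feature extractor: a fixed ReLU feature map (stand-in for ResNet50/LSTM)."""
    return [max(0.0, w * x) for w in frozen_weights]


def finetune_head(data, frozen_weights, steps=2000, lr=0.05, seed=0):
    """Re-initialise a linear head and train only the head on (x, y) pairs."""
    rng = random.Random(seed)
    head = [rng.uniform(-0.1, 0.1) for _ in frozen_weights]  # fresh head weights
    for _ in range(steps):
        grad = [0.0] * len(head)
        for x, y in data:
            feats = backbone_features(x, frozen_weights)
            error = sum(h * f for h, f in zip(head, feats)) - y
            for i, f in enumerate(feats):
                grad[i] += 2.0 * error * f / len(data)  # d(MSE)/d(head[i])
        # Only the head is updated; frozen_weights is never written to.
        head = [h - lr * g for h, g in zip(head, grad)]
    return head


if __name__ == "__main__":
    frozen = [1.0, -1.0]
    # Toy "new environment" targets that the frozen features can represent.
    data = [(x, 2.0 * max(0.0, x) + 3.0 * max(0.0, -x))
            for x in (-1.0, -0.5, 0.5, 1.0)]
    print(finetune_head(data, frozen))
```

Training only the head keeps the generic features learned in simulation intact and limits the number of parameters that the smaller real-world dataset has to fit.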

Figure 14.

CNN-LSTM network mean square error loss function curve.

The prediction performance of the model was evaluated. In the test environment, the mean square errors of the CNN-LSTM model were 0.069 for the steering wheel angle and 0.082 for the speed. This indicates that the CNN-LSTM network model predicts the steering wheel angle and speed well after retraining by transfer learning on the actual environment dataset, and that the trained model generalizes well.

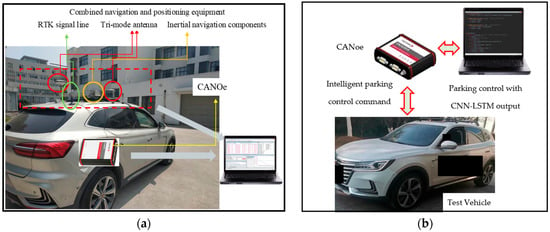

4.6. Actual Car Experiment

The experimental vehicle is a ROEWE Marvel X electric vehicle. The experimental environment is shown in Figure 15, the hardware architecture of the actual vehicle is shown in Figure 16, and the configuration of the vehicle is given in Table 4. The vehicle is equipped with an Around View Monitor (AVM) system, twelve ultrasonic radars around the body, four fisheye cameras, and an RTK-GPS system. These systems exchange data with other ECU nodes inside the vehicle via the CAN bus and send control commands to the execution unit. The data are processed using the algorithm proposed in this paper, and the parking control decision is output to the intelligent parking control unit via the CAN bus.

Figure 15.

Actual parallel parking slot: (a) Vehicle obstacle, columnar obstacle, and curbstone obstacles; (b) columnar obstacle and curbstone obstacles; (c) vehicle obstacle and curbstone obstacles; (d) vehicle obstacle and fuzzy parking slot line; (e) vehicle obstacle and columnar obstacle and fuzzy parking slot line; and (f) vehicle obstacle and covered parking slot lines.

Figure 16.

(a) The hardware architecture of the intelligent parking experimental system; (b) path data collection platform.

Table 4.

Configuration of vehicle.

Because the parking slot detection algorithm is beyond the scope of this paper, it is assumed in the experiments that the target parking slot has already been detected by the AVM or radar. The vehicle position during parking is obtained from RTK-GPS, whose positioning error is only 1~2 cm in an open environment.

The actual car experiments consist of the following two groups:

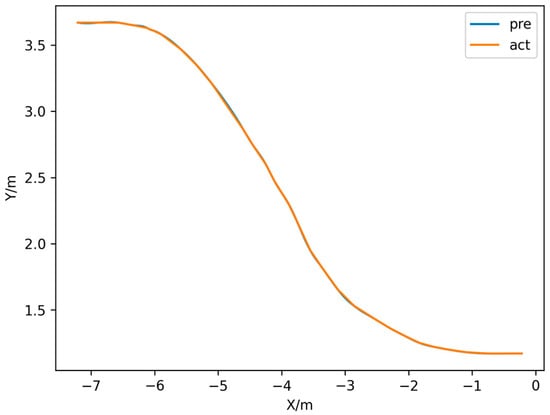

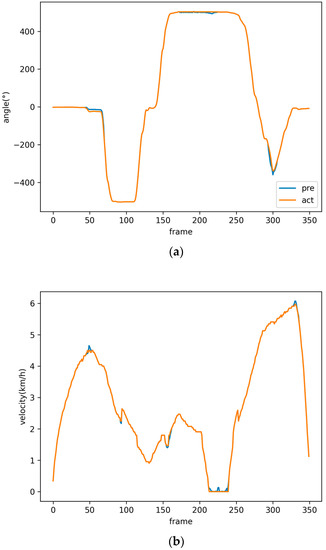

Group 1: Frame-by-frame analysis of the parking trajectory in the actual car test. Figure 17 shows the experimental environment and the end-to-end intelligent parking model controlling the experimental vehicle during parking. The deviation is the mean square error between the car’s predicted and actual path coordinates on the x and y axes. Figure 18 compares the predicted and actual trajectories over the complete parking process, with a deviation of 0.048 for the x-axis trajectory coordinates and 0.012 for the y-axis trajectory coordinates. Figure 19 compares the predicted control decisions with the skilled driver's driving data for this parking process. The results show that the model provides a smooth driving experience that is consistent with that of a skilled driver.
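The per-axis deviation defined above can be computed as in the following sketch. It assumes the two trajectories are sampled at the same frames and given as lists of (x, y) coordinates. The function name `axis_mse` and the sample points are hypothetical and are not measurements from the experiment.

```python
def axis_mse(predicted, actual):
    """Return (mse_x, mse_y) between two equally long lists of (x, y) points."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("trajectories must be non-empty and of equal length")
    n = len(predicted)
    mse_x = sum((p[0] - a[0]) ** 2 for p, a in zip(predicted, actual)) / n
    mse_y = sum((p[1] - a[1]) ** 2 for p, a in zip(predicted, actual)) / n
    return mse_x, mse_y


if __name__ == "__main__":
    # Made-up coordinates for illustration only.
    predicted = [(0.0, 0.0), (1.0, 1.0)]
    actual = [(0.2, 0.0), (1.0, 0.9)]
    print(axis_mse(predicted, actual))
```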

Figure 17.

Parallel parking trajectory comparison experiment: (a) Start parking; (b) Car turning; (c) Continuous turning; (d) Second turning; (e) Enter the parking space; (f) Complete parking.

Figure 18.

Tracking the effect of parallel parking.

Figure 19.

Predicted values compared to the skilled driver driving data: (a) Steering wheel turning angle curve. (b) Vehicle speed curve.

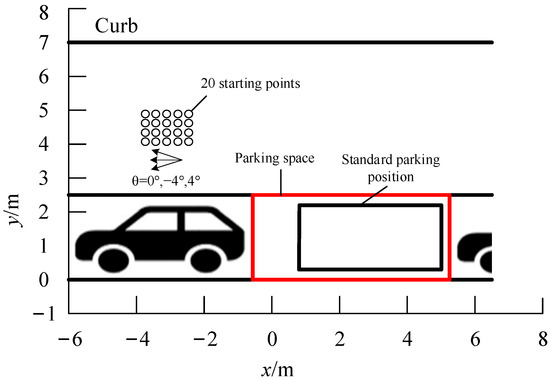

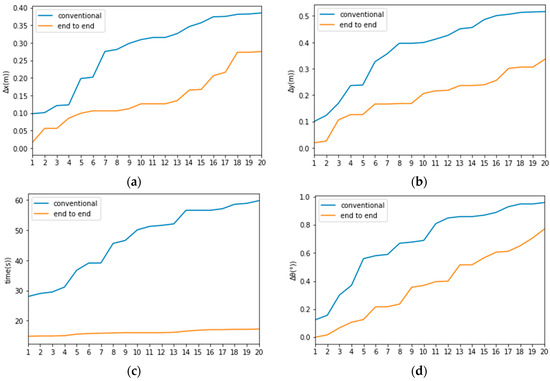

Group 2: Comparison experiments on parking accuracy and real-time performance between the end-to-end intelligent parking model and an existing path-tracking intelligent parking model. There are six types of experimental environment (bilateral car obstacle, bilateral column obstacle, unilateral column obstacle, unilateral car obstacle, fuzzy slot line, and obscured slot line), three parking starting angles indicated by the arrow (0°, −4°, and 4°), and three relative positions (1 m, 1.5 m, and 2 m), giving a total of 54 working conditions. Under each working condition, 20 sets of parking tests were conducted to compare the end-to-end intelligent parking model and the existing path-tracking intelligent parking model against the standard parking position, in which the distance to the boundary on each side is 0.3 m and the distance between the rear end and the rear boundary is 0.2 m (the standard parking position is shown in Figure 20). In total, 1080 sets of parking data were obtained.
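The counts of 54 working conditions and 1080 data sets follow from the full combination of the experimental factors, as the short sketch below shows. The environment labels are paraphrased from the text. Treating the fuzzy and obscured slot lines as two separate environments is an assumption made here so that the list has six entries.

```python
from itertools import product

# Six environment types (the last two are split by assumption, see the lead-in).
ENVIRONMENTS = [
    "bilateral car obstacle",
    "bilateral column obstacle",
    "unilateral column obstacle",
    "unilateral car obstacle",
    "fuzzy slot line",
    "obscured slot line",
]
START_ANGLES_DEG = [0, -4, 4]
RELATIVE_POSITIONS_M = [1.0, 1.5, 2.0]
RUNS_PER_CONDITION = 20


def working_conditions():
    """Enumerate every (environment, start angle, relative position) combination."""
    return list(product(ENVIRONMENTS, START_ANGLES_DEG, RELATIVE_POSITIONS_M))


if __name__ == "__main__":
    conditions = working_conditions()
    print(len(conditions), len(conditions) * RUNS_PER_CONDITION)
```

Six environments times three angles times three positions gives 54 conditions, and 20 runs per condition gives 1080 sets.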

Figure 20.

Standard parking position.

The mean and extreme values of the 1080 groups of parking data are shown in Table 5, where x is the absolute x-axis error of the car relative to the standard parking position after parking is completed, y is the absolute y-axis error relative to the standard parking position, the angle entry is the absolute angle error of the vehicle relative to the standard parking position, and time is the time taken from the end of the parking slot search until the car is parked in the slot. The table shows that, compared with the current path-tracking intelligent parking model, the end-to-end intelligent parking model slightly improves parking accuracy and has an average parking completion time of about 20 s. Even in the environment with fuzzy slot lines, the longest parking process is completed within 27 s. By contrast, the average completion time for path-tracking intelligent parking is more than 29 s, so the end-to-end intelligent parking model built in this paper has a significant advantage in parking completion time. Figure 21 shows the curves for the environment “Vehicle obstacle and Columnar obstacle and Curbstone obstacles”. The curves compare 20 sets of traditional trajectory-tracking intelligent parking with 20 sets of end-to-end intelligent parking in this environment. It can be observed that end-to-end intelligent parking is more efficient than path-tracking intelligent parking and also has advantages in parking accuracy. Based on this comparison, it can be concluded that the end-to-end intelligent parking model improves on the existing path-tracking intelligent parking model in both real-time performance and accuracy.
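The error definitions above translate into a simple computation, sketched below under stated assumptions: each final pose and the standard parking position are given as dictionaries with hypothetical keys `x`, `y`, and `angle`, and the sample numbers are made up for illustration and are not taken from Table 5.

```python
from statistics import mean

KEYS = ("x", "y", "angle")


def absolute_errors(final_pose, standard_pose):
    """Absolute x, y, and angle error of a final pose relative to the standard pose."""
    return {k: abs(final_pose[k] - standard_pose[k]) for k in KEYS}


def summarise(error_rows):
    """Mean and extreme values of each error over a list of runs."""
    return {
        k: {
            "mean": mean(row[k] for row in error_rows),
            "min": min(row[k] for row in error_rows),
            "max": max(row[k] for row in error_rows),
        }
        for k in KEYS
    }


if __name__ == "__main__":
    standard = {"x": 0.0, "y": 0.0, "angle": 0.0}
    # Illustrative final poses only (metres and degrees).
    runs = [{"x": 0.1, "y": -0.2, "angle": 1.0},
            {"x": -0.3, "y": 0.0, "angle": -3.0}]
    print(summarise([absolute_errors(r, standard) for r in runs]))
```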

Table 5.

Intelligent parking average comparison test.

Figure 21.

Comparison of 20 sets of parking data between conventional and end-to-end intelligent parking: (a) x; (b) y; (c) time; and (d) angle error.

5. Conclusions

This paper presents an intelligent parking control model based on multi-source perception information fusion and end-to-end deep learning to solve the problems of low intelligent parking efficiency and poor parking performance in complex environments. We construct a comprehensive data acquisition platform encompassing simulated and actual car parking environments. CarSim 2019.1, PreScan 8.5, and MATLAB/Simulink 2020b are used to build a parking data acquisition platform in simulation, providing enough simulation data to pre-train the end-to-end intelligent parking model, while an actual dataset acquisition platform is built using a surround-view system, ultrasonic sensors, and CANoe to collect actual parking data for transfer learning and subsequent actual vehicle testing. We built a deep learning model combining CNN and LSTM in parallel to capture the front-to-back dependencies in environment features and coded sequence data and to solve the horizontal and vertical control of car parking with multiple inputs and multiple outputs. To handle the complexity of the intelligent parking environment, the dataset is pre-processed by cropping and flipping to improve the training speed and generalization ability of the model. Considering the low-speed environment of intelligent parking, the time-step parameter w is optimized to enhance the training efficiency of the end-to-end intelligent parking model. To ensure comprehensive coverage of environmental information during parking, we employ a fusion method that combines surround-view information and ultrasonic sensor data, thereby improving perception accuracy.
Initially trained on a simulated dataset, the model is then retrained using a transfer learning approach with an actual parking environment dataset, which helps eliminate perception discrepancies between simulated and actual parking environments and enhances the adaptability and generalization capabilities of the end-to-end intelligent parking model. Finally, we test the end-to-end intelligent parking model on an experimental vehicle, including parking trajectory tests and comparison tests against existing path-tracking intelligent parking models. Our end-to-end intelligent parking model accurately predicted steering wheel angles and speeds and completed parking tasks in complex environments. Furthermore, it significantly improved real-time performance and accuracy compared with existing path-tracking intelligent parking control models.

6. Discussion

Management insights into end-to-end intelligent parking can help city planners and parking facility operators better understand the practical implications and potential benefits of adopting intelligent parking technologies. Some key insights are as follows:

- Efficiency improvements: End-to-end intelligent parking systems can significantly improve vehicle parking efficiency.

- Environmental benefits: By reducing the time spent parking and optimizing traffic flow, intelligent parking can contribute significantly to reducing emissions, cleaning the urban environment, and saving energy.

- Strong adaptability: End-to-end intelligent parking systems can adapt to complex and unfamiliar parking environments better than skilled drivers and meet urban parking spaces’ changing needs.

- Easy to reproduce: The structure of the end-to-end intelligent parking model is relatively simple and the experimental cost is low, which is convenient for researchers to reproduce the study.

- Technical progress and innovation: This study provides a new design idea for end-to-end intelligent parking.

Nevertheless, additional optimization and enhancement are required to effectively apply this method in real-world environments. In future research, we should investigate the following potential avenues to refine the approach presented in this paper:

- In this study, the car surround-view image and the ultrasonic sensor distance information are captured as features through the spatial and temporal feature sub-networks, respectively. As a result, the perceived environment, especially the obstacle boundaries, could be resolved more accurately. Future work should consider replacing the surround-view image fed to the spatial feature sub-network with an image in which the surround-view image is fused with the ultrasonic radar or short-range millimeter-wave radar information. In such an image, the distance information captured by the radar sensors is projected onto the surround-view map of the vehicle through coordinate transformation and presented as a smooth line connecting the points detected by the ultrasonic sensors around the vehicle. This can further advance information fusion and improve the recognition accuracy of the end-to-end intelligent parking model for obstacle boundaries in low-speed environments.

- The constructed end-to-end intelligent parking model only outputs the steering wheel turning angle and vehicle speed control decisions in this study. However, brake pedal control is a critical factor that must be addressed in the driver’s parking process. Therefore, in future research, brake pedal control strategies should be considered for incorporation into the model to simulate actual driving situations and improve the accuracy and safety of the parking system more comprehensively.
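The coordinate transformation proposed in the first avenue above (projecting an ultrasonic distance reading onto the surround-view map) could look roughly like the following sketch. Every detail is an assumption for illustration: the vehicle frame has x pointing forward and y pointing left, the top-down image is centered on the vehicle with forward pointing up, and the sensor mounting pose, image size, and scale are hypothetical values rather than parameters from the experimental vehicle.

```python
import math


def hit_point_in_vehicle_frame(sensor_x, sensor_y, sensor_yaw, distance):
    """Vehicle-frame coordinates (metres) of the point detected along the sensor axis."""
    return (sensor_x + distance * math.cos(sensor_yaw),
            sensor_y + distance * math.sin(sensor_yaw))


def vehicle_to_pixel(x, y, image_size=(400, 400), pixels_per_meter=40.0):
    """Map a vehicle-frame point to (col, row) in a vehicle-centered top-down image."""
    width, height = image_size
    col = width / 2.0 - y * pixels_per_meter   # left of the vehicle is left in the image
    row = height / 2.0 - x * pixels_per_meter  # forward of the vehicle is up in the image
    return int(round(col)), int(round(row))


if __name__ == "__main__":
    # Hypothetical front sensor 2 m ahead of the vehicle center, facing forward.
    px, py = hit_point_in_vehicle_frame(2.0, 0.0, 0.0, distance=1.0)
    print(vehicle_to_pixel(px, py))
```

Connecting the projected points of neighbouring sensors would give the boundary line mentioned in the text. How that line is smoothed and drawn onto the image is left open here.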

Author Contributions

Conceptualization, Z.M., H.J. and S.M.; methodology, Z.M.; software, Z.M.; validation, Z.M., S.M. and Y.L.; formal analysis, S.M.; investigation, Z.M.; resources, H.J.; data curation, Y.L.; writing—original draft preparation, Z.M.; writing—review and editing, S.M.; visualization, Z.M.; supervision, H.J.; project administration, H.J.; funding acquisition, S.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Science Foundation of the Jiangsu Higher Education Institutions of China, grant number: 16KJA580001.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Huang, X. Study on Optimal Trajectory Decision and Control Algorithm of Automatic Parking System. Master’s Thesis, Jilin University, Jilin, China, 2018.

- Hu, Q.; Wang, J.; Zhang, X. Optimized Parallel Parking Path Planning Based on Quintic Polynomial. Comput. Eng. Appl. 2022, 58, 291–298.

- Jiang, S.; Jiang, H.; Ma, S. Automatic Parking Path Tracking Control Based on Backstepping Sliding Mode Adaptive Strategy. J. Chongqing Univ. Technol. Nat. Sci. 2020, 34, 9–16.

- Qian, Y.; Wang, Z.; Li, P. Automatic Parking Control Method Based on the Linear-quadratic Regulator. Inform. Control 2021, 50, 660–668.

- Zhdanov, A.A.; Klimov, D.M.; Korolev, V.V.; Utemov, A.E. Modeling parallel parking a car. J. Comput. Syst. Sci. Int. 2008, 47, 907–917.

- Zips, P.; Böck, M.; Kugi, A. Optimisation based path planning for car parking in narrow environments. Robot. Auton. Syst. 2016, 79, 1–11.

- Das, S.; Reshma Sheerin, M.; Nair, S.R.P.; Vora, P.B.; Dey, R.; Sheta, M.A. Path Tracking and Control for Parallel Parking. In Proceedings of the 2020 International Conference on Image Processing and Robotics (ICIP), Negombo, Sri Lanka, 6–8 March 2020; pp. 1–6.

- Li, H. A Study on Path Planning and Tracking Control Method for Automatic Parking System. Ph.D. Thesis, Hunan University, Changsha, China, 2014.

- Sokri, M.N.; Kadir Mahamad, A.; Saon, S.; Yamaguchi, S.; Ahmadon, M.A. Autonomous Car Parking System using Fuzzy Logic. In Proceedings of the 2021 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 10–12 January 2021.

- Hu, Z.; Yan, J.; Wang, Y.; Yang, C.; Fu, Q.; Lu, W.; Wu, H. Study on Path Planning of Multi-storey Parking Lot Based on Combined Loss Function. In Proceedings of the International Conference on Intelligent Computing, Xi’an, China, 7–11 August 2022.

- Zhang, J.; Wang, C.; Zhao, J.; Bu, C. Path Planning and Tracking Control for Narrow Parallel Parking Space. J. Jilin Univ. Eng. Technol. 2021, 51, 1879–1886.

- Cao, X.; Shi, P.; Li, Z.; Liu, M. Neural-Network-Based Adaptive Backstepping Control With Application to Spacecraft Attitude Regulation. IEEE Trans. Neural Netw. Learn. Syst. 2017, 29, 4303–4313.

- Wang, L.; Shi, X. Dynamic path planning of mobile robot based on improved ant colony algorithm. J. Nanjing Univ. Sci. Technol. 2019, 43, 700–707.

- Liang, X.; Feng, Y.; Ma, Y.; Cheng, G.; Huang, J.; Wang, Q.; Zhou, Y.; Liu, Z. Deep Multi-Agent Reinforcement Learning: A Survey. Acta Autom. Sin. 2020, 46, 2537–2557.

- Jiang, H. Research on Strategies of Automatic Parallel Parking Steering Control. Ph.D. Thesis, Jilin University, Jilin, China, 2010.

- Li, G. End-to-End Autonomous Driving Using Deep Deterministic Policy Gradient Based on 3D Convolutional Neural Network. Electron. Des. Eng. 2018, 26, 5.

- Dosovitskiy, A.; Ros, G.; Codevilla, F.; Lopez, A.; Koltun, V. CARLA: An Open Urban Driving Simulator. In Proceedings of the Conference on Robot Learning (CoRL), Mountain View, CA, USA, 13–15 November 2017; pp. 1–16.

- Xu, H.; Gao, Y.; Yu, F.; Darrell, T. End-to-End Learning of Driving Models from Large-Scale Video Datasets. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3530–3538.

- Hecker, S.; Dai, D.; Van Gool, L. End-to-End Learning of Driving Models with Surround-View Cameras and Route Planners. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 435–453.

- Qin, P.; Li, H.; Li, Z.; Guan, W.; He, Y. A CNN-LSTM Car-Following Model Considering Generalization Ability. Sensors 2023, 23, 660.

- Jeong, Y. Interactive Lane Keeping System for Autonomous Vehicles Using LSTM-RNN Considering Driving Environments. Sensors 2022, 22, 9889.

- Liu, L.; Finch, A.; Utiyama, M.; Sumita, E. Agreement on Target-bidirectional Recurrent Neural Networks for Sequence-to-Sequence Learning. J. Artif. Intell. Res. 2020, 67, 581–606.

- Zhang, Y.; Yang, Q. An Overview of Multi-task Learning. Natl. Sci. Rev. 2018, 5, 30–43.

- Li, Y.; Fan, C.; Li, Y.; Wu, Q.; Ming, Y. Improving Deep Neural Network with Multiple Parametric Exponential Linear Units. Neurocomputing 2018, 301, 11–24.

- Gui, Y.; Li, D.; Fang, R. A fast Adaptive Algorithm for Training Deep Neural Networks. Appl. Intell. 2022, 53, 4099–4108.

- Singh, S.; Krishnan, S. Filter Response Normalization Layer: Eliminating Batch Dependence in the Training of Deep Neural Networks. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11234–11243.

- Lin, Y.-Z.; Nie, Z.-H.; Ma, H.-W. Structural Damage Detection with Automatic Feature-Extraction through Deep Learning. Comput. Civ. Infrastruct. Eng. 2017, 32, 1025–1046.

- He, K.; Girshick, R.; Dollár, P. Rethinking Imagenet Pre-training. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 4918–4927.

- Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. Tensorflow: A System for Large-scale Machine Learning. OSDI 2016, 16, 265–283.

- Zhang, X.; Guo, Y.; Zhang, X. Deep Convolutional Neural Network Structure Design for Remote Sensing Image Environment Classification Based on Transfer Learning. In Proceedings of the IOP Conference Series: Earth and Environmental Science, Chengdu, China, 18–20 July 2020.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).