Automatic Parsing and Utilization of System Log Features in Log Analysis: A Survey

Abstract

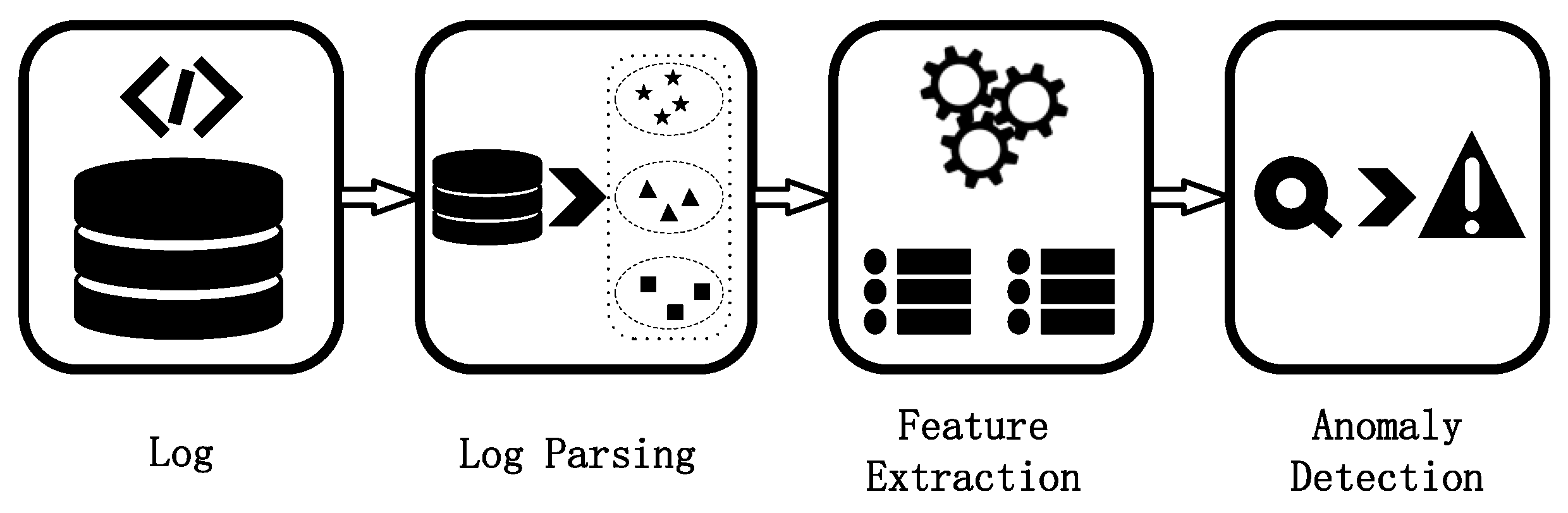

1. Introduction

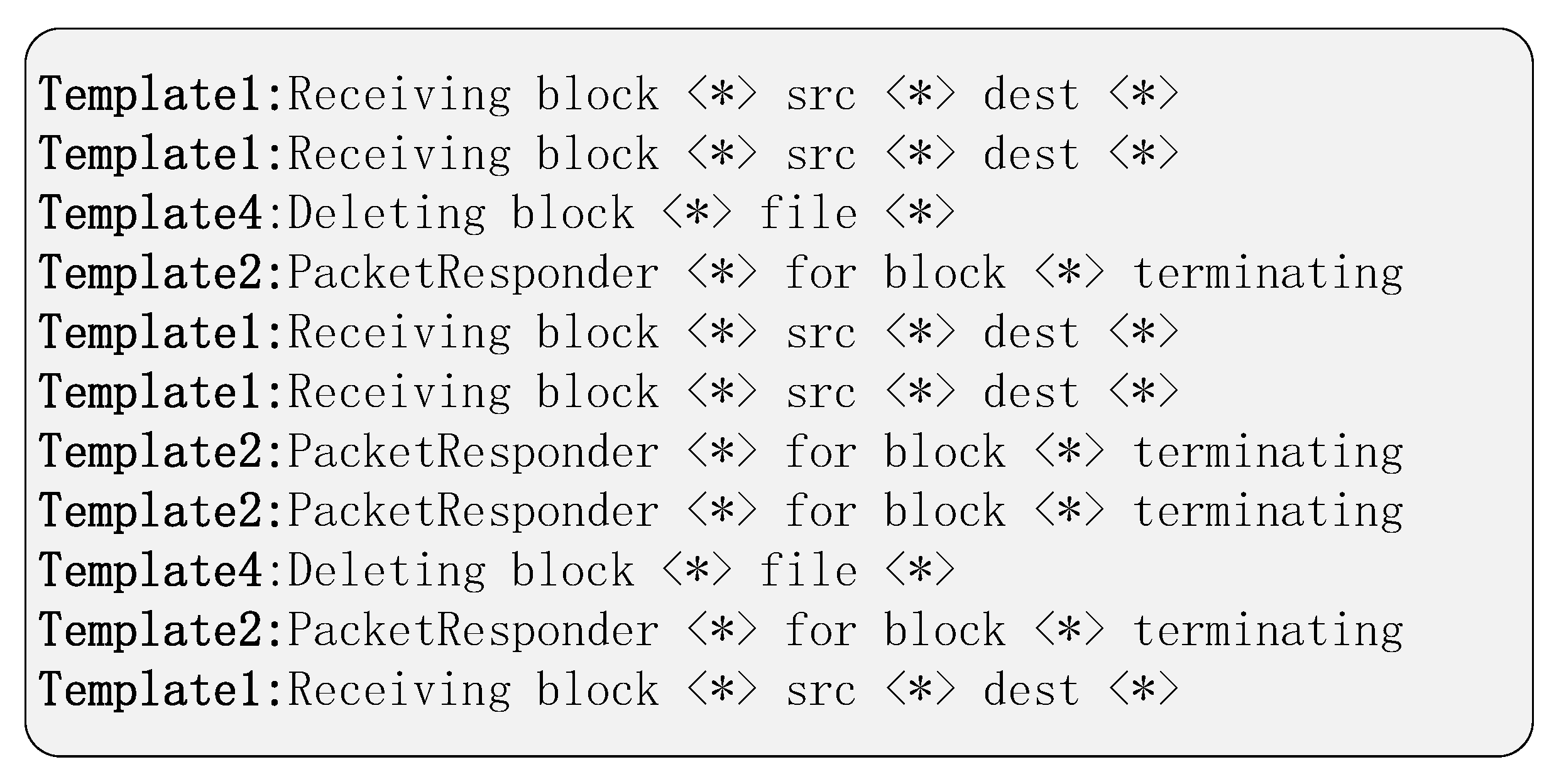

2. Log Parsing

2.1. Classification of Log Parsing

2.1.1. Clustering

2.1.2. Heuristic

2.1.3. Utility Itemset Mining

2.1.4. Others

2.2. Accuracy of Parsing

2.2.1. Evaluation Indicators

2.2.2. Accuracy

3. Feature Extraction and Utilization

3.1. Digital Features

3.1.1. Log Count Features

3.1.2. Log Index Features

3.1.3. Log Event Features

3.1.4. Log Sequence Features

3.1.5. Log Time Features

3.1.6. Log Parameter Features

3.1.7. Others

3.2. Graphical Features

3.3. Comparison of Feature Utilization

4. Prospects

5. Summary

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Full Name |
|---|---|
| DMTF | Distributed Management Task Force |
| CADF | Cloud Auditing Data Federation |
| PID | Process Identity Document |
| PA | Parsing Accuracy |
| RI | Rand Index |
| HDFS | Hadoop Distributed File System |
| BGL | Blue Gene/L |
| PCA | Principal Component Analysis |
| SVM | Support Vector Machine |
| LSTM | Long Short-Term Memory |
| CNN | Convolutional Neural Networks |
| TF-IDF | Term Frequency–Inverse Document Frequency |
| Bi-LSTM | Bi-Directional Long Short-Term Memory |
| NLP | Natural Language Processing |
| IM | Invariant Mining |
| GAN | Generative Adversarial Network |
| BERT | Bidirectional Encoder Representations from Transformers |
| RF | Random Forest |
| KNN | K-Nearest Neighbor |
| MLP | Multilayer Perceptron |
| RoBERTa | Robustly Optimized BERT Pretraining Approach |
| GRU | Gated Recurrent Unit |
| SVDD | Support Vector Data Description |
| GGNNs | Gated Graph Neural Networks |


| Metrics | Log Parser | Year | HDFS | BGL | Zookeeper | OpenStack | Hadoop |
|---|---|---|---|---|---|---|---|
| PA | LogCluster | 2015 | 0.546 | 0.835 | 0.732 | 0.696 | 0.563 |
| PA | Spell | 2016 | 0.999 | 0.787 | 0.964 | 0.764 | 0.778 |
| PA | Drain | 2017 | 0.999 | 0.963 | 0.967 | 0.733 | 0.948 |
| PA | MoLFI | 2018 | 0.998 | 0.960 | 0.839 | 0.213 * | 0.957 |
| PA | Paddy | 2020 | 0.940 | 0.963 | 0.986 | 0.839 | 0.952 |
| PA | QuickLogS | 2021 | 0.998 | - | 0.965 | 0.837 | - |
| PA | USTEP | 2021 | 0.998 | 0.964 | 0.988 | 0.764 | 0.951 |
| PA | LTmatch | 2021 | 1 | 0.933 | 0.987 | 0.835 | 0.987 |
| PA | Drain+ | 2022 | 1 | 0.941 | 0.967 | 0.807 | 0.954 |
| RI | Spell | 2016 | 0.999 | 0.880 | 0.999 | 0.917 | 0.999 |
| RI | Drain | 2017 | 1 | 0.912 | 1 | 0.904 | 0.982 |
| RI | Prefix-Graph | 2021 | 0.989 | 0.993 | 1 | 0.890 | 0.999 |
| RI | LogStamp | 2022 | 1 | 0.98 | 0.95 | - | 0.95 |
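The two metrics in the table above, PA and RI, can be illustrated with a small sketch. It assumes the definitions commonly used in log parsing benchmarks: a message counts as correctly parsed only if its predicted template groups exactly the same messages as its ground-truth template, and the Rand Index is the fraction of message pairs on which the two groupings agree. The function names and the toy labels are hypothetical and are not taken from any of the surveyed parsers.

```python
from collections import Counter, defaultdict
from math import comb


def parsing_accuracy(truth, pred):
    """Share of messages whose predicted group equals their ground-truth group."""
    truth_groups = defaultdict(set)
    pred_groups = defaultdict(set)
    for i, (t, p) in enumerate(zip(truth, pred)):
        truth_groups[t].add(i)
        pred_groups[p].add(i)
    correct = sum(
        1 for t, p in zip(truth, pred) if truth_groups[t] == pred_groups[p]
    )
    return correct / len(truth)


def rand_index(truth, pred):
    """Fraction of message pairs grouped consistently by both labelings."""
    total_pairs = comb(len(truth), 2)
    if total_pairs == 0:
        return 1.0  # fewer than two messages: nothing to disagree on
    same_both = sum(comb(c, 2) for c in Counter(zip(truth, pred)).values())
    same_truth = sum(comb(c, 2) for c in Counter(truth).values())
    same_pred = sum(comb(c, 2) for c in Counter(pred).values())
    # pairs together in both groupings + pairs apart in both groupings
    agreements = total_pairs + 2 * same_both - same_truth - same_pred
    return agreements / total_pairs


# Toy example (hypothetical labels): the parser splits template E2 in two.
truth = ["E1", "E1", "E2", "E2", "E3"]
pred = ["A", "A", "B", "C", "D"]
print(parsing_accuracy(truth, pred))  # 0.6
print(rand_index(truth, pred))        # 0.9
```

In this toy case a single wrongly split template costs two of five messages under PA but only one of ten pairs under RI, which is one reason the two metrics can rank parsers differently.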

| Datasets | # Words | # Templates | | | Size | # Messages |
|---|---|---|---|---|---|---|
| HDFS | 132 | 30 | 320 | 139 | 1.58 GB | 11,175,629 |
| BGL | 1053 | 619 | 928 | 156 | 74.32 MB | 4,747,963 |
| Zookeeper | 261 | 95 | 387 | 1387 | 10.4 MB | 74,380 |
| OpenStack | 282 | 51 | 450 | 295 | 61.4 MB | 207,820 |
| Hadoop | 880 | 298 | 592 | 121 | 48.2 MB | 394,308 |

| Methods | Year | Features (Count, Time, Event, Index, Sequence, Parameter) and Robustness | Principle | HDFS Precision | HDFS Recall | HDFS F1-Score |
|---|---|---|---|---|---|---|
| LogCluster | 2016 | ✓ | Cluster | 0.99 | 0.77 | 0.87 |
| SVM etc. | 2017 | ✓ | SVM etc. | - | - | - |
| IM etc. | 2017 | ✓ | IM etc. | - | - | - |
| DeepLog | 2017 | ✓ ✓ ✓ | LSTM | 0.93 | 0.94 | 0.93 |
| LogCNN | 2018 | ✓ | CNN | - | - | - |
| CausalConvLSTM | 2019 | ✓ | CNN/LSTM | 0.9 | 1 | 0.94 |
| LogAnomaly | 2019 | ✓ ✓ | LSTM | 0.96 | 0.94 | 0.95 |
| LogGAN | 2020 | ✓ ✓ | GAN/LSTM | 1 | 0.36 | 0.53 |
| LogRobust | 2020 | ✓ ✓ | Bi-LSTM | 0.98 | 1 | 0.99 |
| SwissLog | 2020 | ✓ ✓ ✓ | BERT/LSTM | 0.97 | 1 | 0.99 |
| HitAnomaly | 2020 | ✓ ✓ ✓ | Transformer | 1 | 0.97 | 0.98 |
| LogEvent2vec | 2020 | ✓ | RF etc. | - | - | - |
| ConAnomaly | 2021 | ✓ ✓ | LSTM | 1 | 0.98 | 0.98 |
| LogBert | 2021 | ✓ ✓ | BERT | 0.87 | 0.78 | 0.82 |
| LAnoBERT | 2021 | ✓ ✓ | BERT | - | - | 0.96 |
| LogAD | 2021 | ✓ ✓ ✓ ✓ ✓ | LSTM etc. | - | - | - |
| KNN-Based | 2021 | ✓ ✓ | KNN | 0.97 | 0.99 | 0.98 |
| LogDP | 2021 | ✓ ✓ | MLP | 0.98 | 0.99 | 0.99 |
| Sprelog | 2021 | ✓ ✓ | RoBERTa | - | - | - |
| PLELog | 2021 | ✓ ✓ | GRU | 0.95 | 0.96 | 0.96 |
| AllInfoLog | 2022 | ✓ ✓ ✓ ✓ | RoBERTa/Bi-LSTM | 0.99 | 0.99 | 0.99 |
| DeepTraLog | 2022 | ✓ ✓ | SVDD/GGNNs | - | - | - |
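The last three numeric columns of the comparison table above report precision, recall, and a third score on HDFS. Assuming that third score is the usual F1 (the harmonic mean of precision and recall), the following sketch recomputes it for a few rows whose values are copied from the table. The helper name `f1_score` is hypothetical.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall) pairs taken from the HDFS columns of the table.
rows = {
    "LogCluster": (0.99, 0.77),
    "DeepLog": (0.93, 0.94),
    "LogGAN": (1.0, 0.36),
}

for name, (p, r) in rows.items():
    print(name, round(f1_score(p, r), 2))
# LogCluster 0.87
# DeepLog 0.93
# LogGAN 0.53
```

The LogGAN row shows why a single combined score is useful when comparing methods: perfect precision does not compensate for a recall of 0.36.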

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ma, J.; Liu, Y.; Wan, H.; Sun, G. Automatic Parsing and Utilization of System Log Features in Log Analysis: A Survey. Appl. Sci. 2023, 13, 4930. https://doi.org/10.3390/app13084930
