Short-Term Bus Passenger Flow Prediction Based on Graph Diffusion Convolutional Recurrent Neural Network

Abstract

Featured Application

1. Introduction

2. Methods

2.1. Modeling the Bus Passenger Flow Prediction Problem

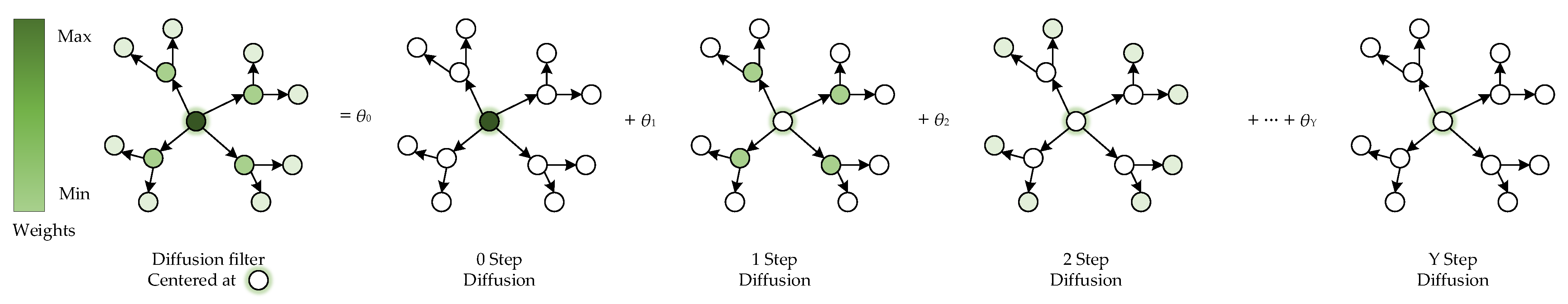

2.2. Graph Diffusion Convolution for Spatial Dependency Modeling

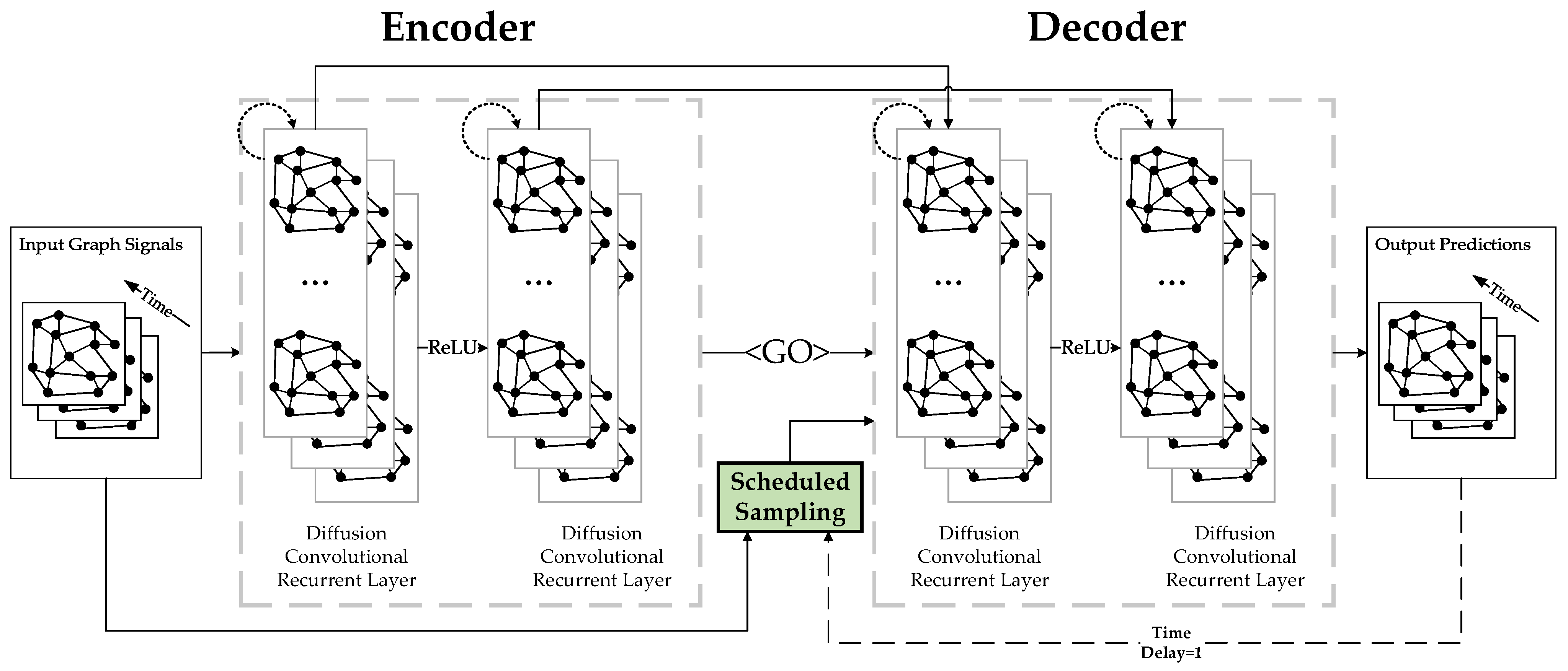

2.3. Sequence-to-Sequence Learning for Temporal Dynamics Modeling

| Algorithm 1: DCRNN |
|---|
| Input: historical graph signals and the graph of the bus network. Output: DCRNN model and future graph signals. |
| 1. Define the topology of the bus transit network using a weighted graph. |
| 2. Represent the volume of transit card transactions on the graph as a graph signal whose dimensions are the total number of bus lines and the number of features of each vertex. |
| 3. Define a function that maps historical graph signals to future graph signals, given the graph. |
| 4. Integrate diffusion convolution into a recurrent neural network to capture the spatiotemporal relationships. |
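As a rough illustration of step 4 in Algorithm 1, the sketch below applies a bidirectional diffusion convolution to a graph signal in NumPy, following the general form used in the DCRNN literature. The function names `random_walk_matrix` and `diffusion_conv`, the toy three-vertex graph, and the scalar filter weights `theta` are assumptions made for illustration; in a trained model these weights are learned inside the recurrent cell, which is not shown here.

```python
import numpy as np

def random_walk_matrix(W):
    """Row-normalise a weighted adjacency matrix (D^-1 W)."""
    W = np.asarray(W, dtype=float)
    deg = W.sum(axis=1)
    # Guard against isolated vertices (degree 0) to avoid division by zero.
    inv_deg = np.divide(1.0, deg, out=np.zeros_like(deg), where=deg > 0)
    return inv_deg[:, None] * W

def diffusion_conv(X, W, theta):
    """Sum of diffusion terms applied to a graph signal.

    X:     (N, P) graph signal, N vertices with P features each.
    W:     (N, N) weighted adjacency matrix.
    theta: (K, 2) filter weights (hypothetical scalars here);
           column 0 weights forward diffusion, column 1 reverse diffusion.
    """
    X = np.asarray(X, dtype=float)
    W = np.asarray(W, dtype=float)
    theta = np.asarray(theta, dtype=float)
    P_fwd = random_walk_matrix(W)      # diffusion along edge direction
    P_bwd = random_walk_matrix(W.T)    # diffusion against edge direction
    out = np.zeros_like(X)
    X_fwd, X_bwd = X.copy(), X.copy()
    for k in range(theta.shape[0]):
        # Term k uses the signal after k random-walk steps (k = 0 is X itself).
        out += theta[k, 0] * X_fwd + theta[k, 1] * X_bwd
        X_fwd = P_fwd @ X_fwd
        X_bwd = P_bwd @ X_bwd
    return out

# Toy directed cycle 0 -> 1 -> 2 -> 0 with a unit signal on vertex 0.
W_toy = np.array([[0, 1, 0], [0, 0, 1], [1, 0, 0]], dtype=float)
X_toy = np.array([[1.0], [0.0], [0.0]])
one_step_forward = diffusion_conv(X_toy, W_toy, [[0.0, 0.0], [1.0, 0.0]])
```

The number of rows in `theta` sets how many diffusion terms are summed, so adding rows lets each vertex draw on more distant neighbours at the cost of extra computation.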

3. Study Area and Data Processing

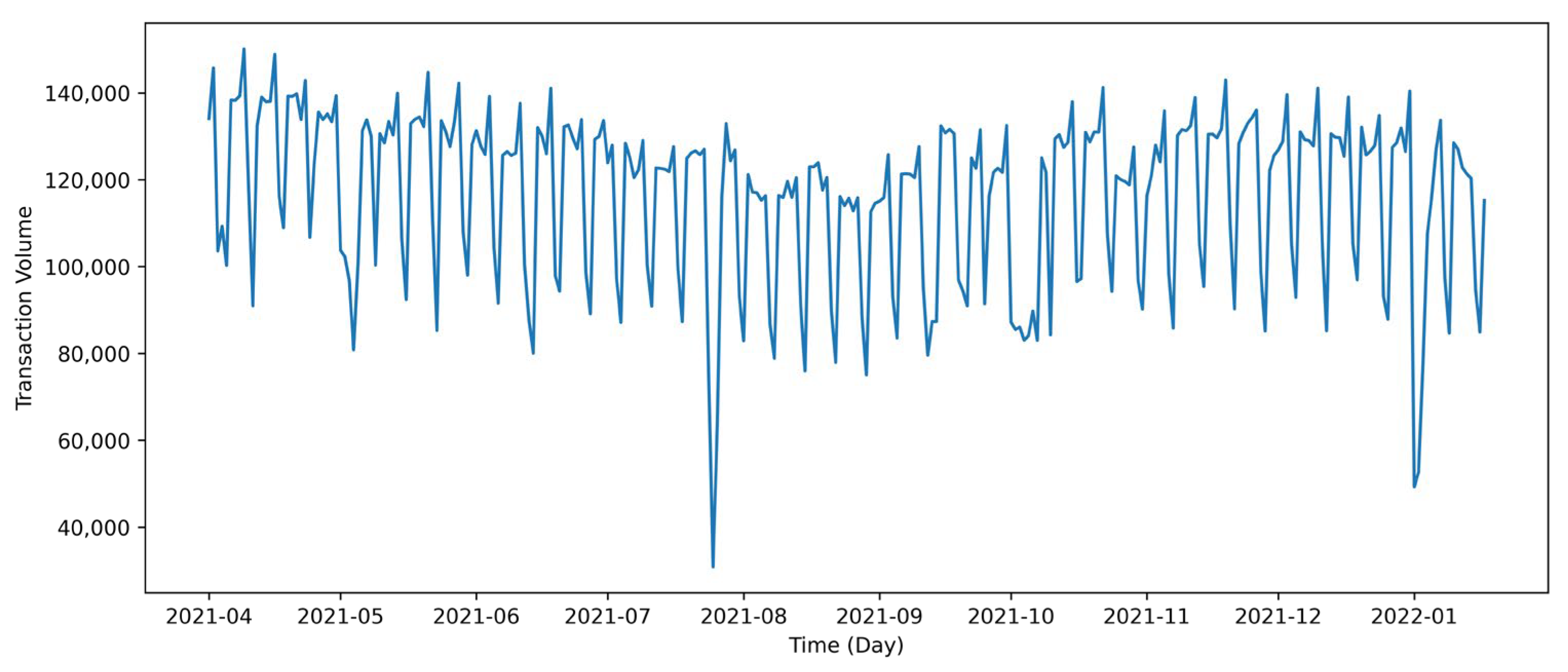

3.1. Temporal Patterns of Daily Ridership

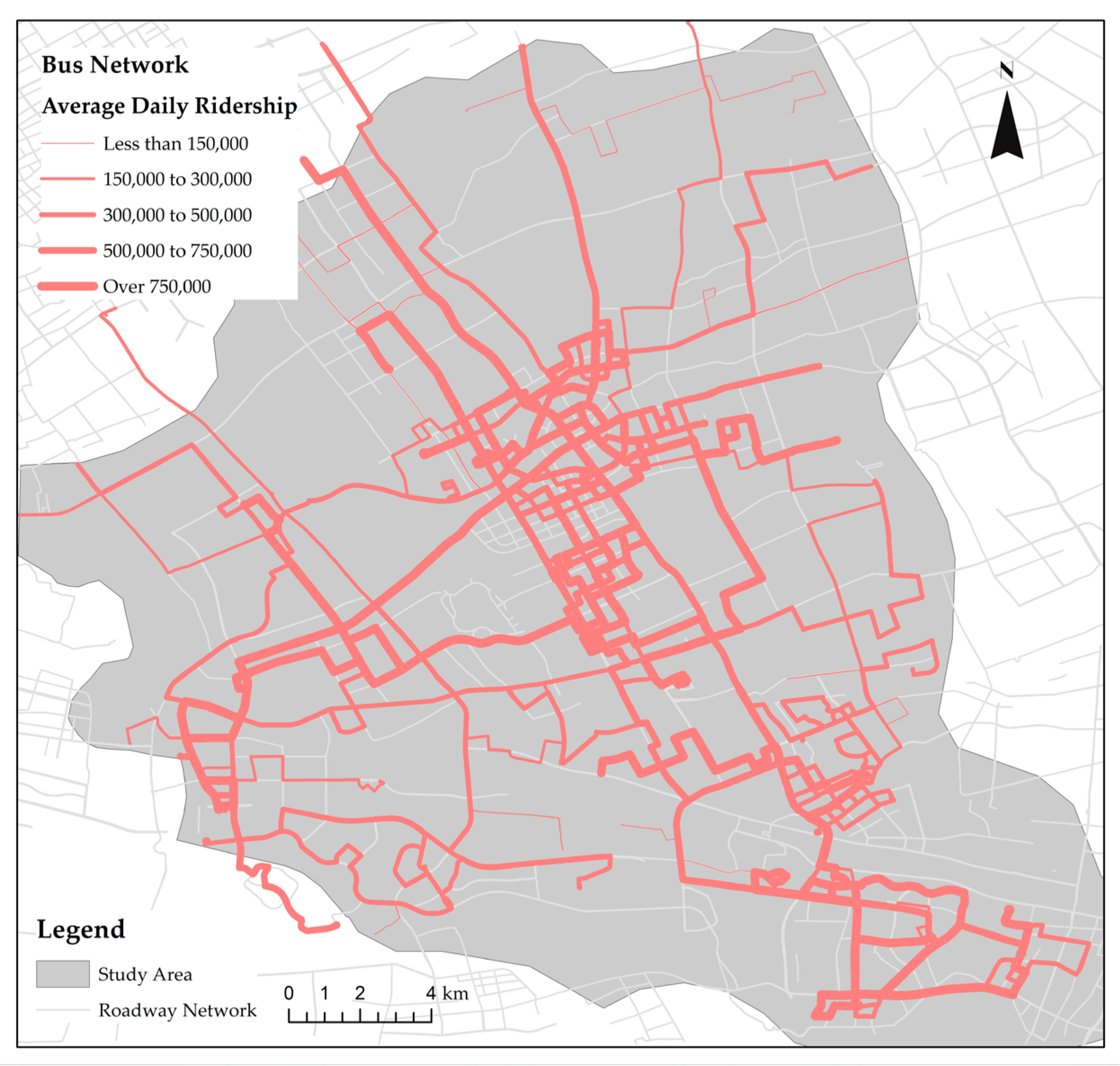

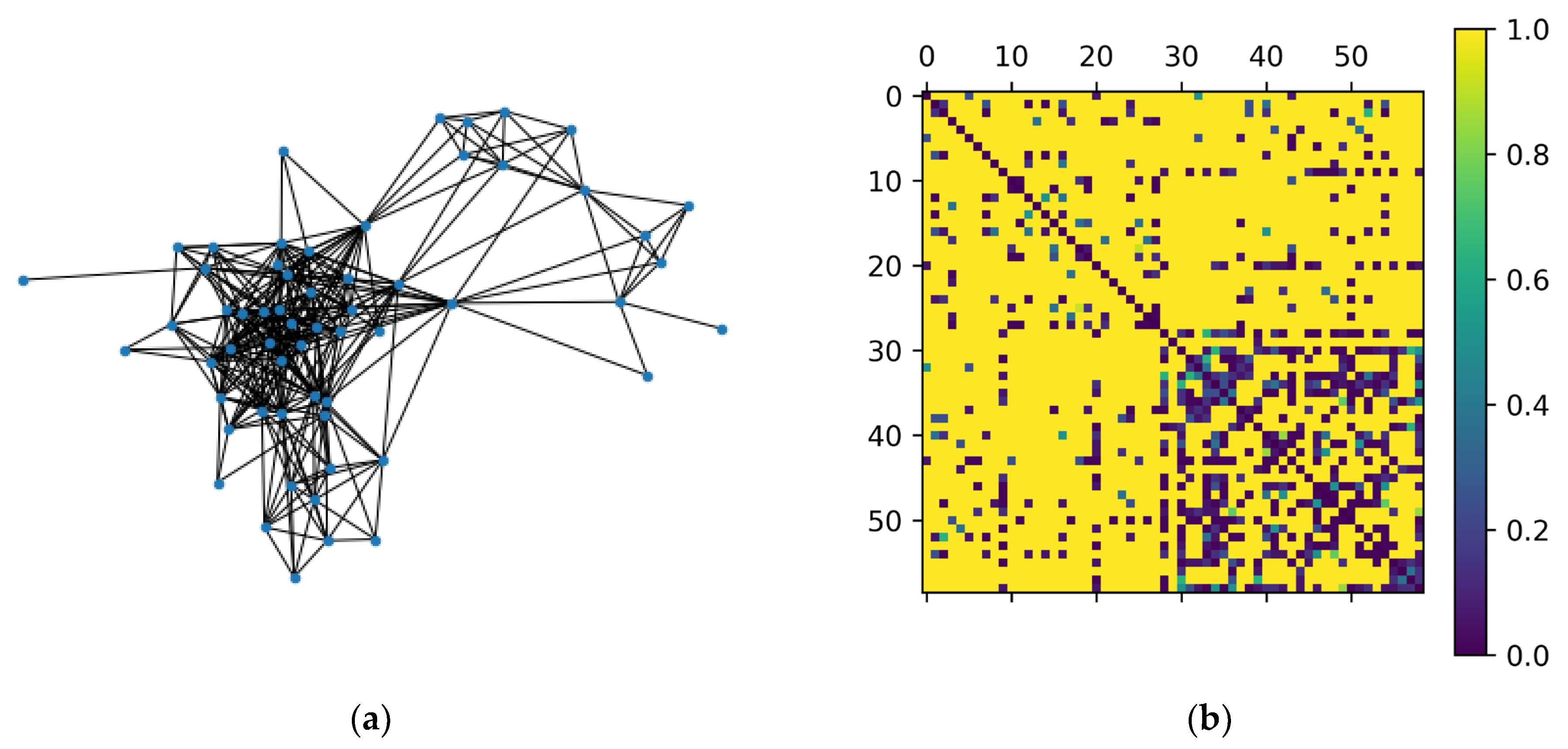

3.2. Spatial Distribution and Construction of Graph for Bus Network

4. Results and Discussion

4.1. Metrics for Modeling Evaluation

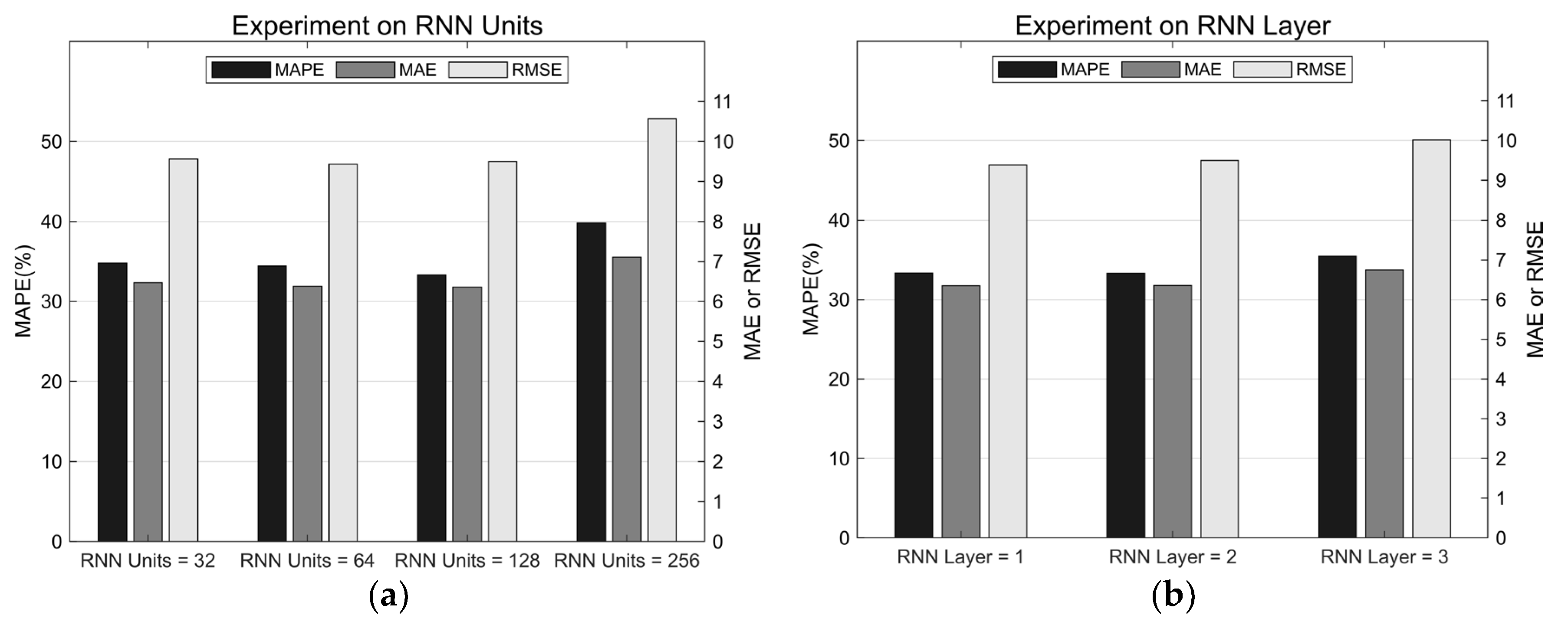
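The three metrics reported in the tables of this section (MAPE, MAE, and RMSE) can be sketched with their standard textbook definitions, as below. The function names and the toy arrays are assumptions for illustration, and skipping zero-valued observations in MAPE is one common convention that may differ from the handling used in this study.

```python
import numpy as np

def mae(y_true, y_pred):
    """Mean absolute error."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.mean(np.abs(y_true - y_pred)))

def rmse(y_true, y_pred):
    """Root mean squared error."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent.

    Observations equal to zero are skipped (an assumption), because the
    percentage error is undefined when the true value is zero.
    """
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    mask = y_true != 0
    return float(np.mean(np.abs((y_true[mask] - y_pred[mask]) / y_true[mask])) * 100.0)

# Hypothetical observed and predicted passenger counts.
observed = [10, 20, 0, 40]
predicted = [12, 18, 3, 40]
scores = (mape(observed, predicted), mae(observed, predicted), rmse(observed, predicted))
```

Because MAPE divides by the observed value, low-ridership intervals can dominate it, which is one reason to read it alongside MAE and RMSE.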

4.2. Modeling Results with Different Hyperparameters

- (1) The number of hidden units in each layer: The RNN units determine the model’s capacity and representational power. A greater number of units results in a more complex model but also increases the risk of overfitting.
- (2) The number of layers in the model: The RNN layers also affect the complexity and learning capability of the model. A deeper stack makes the model structure more sophisticated but can lead to vanishing or exploding gradients.
- (3) The diffusion steps of the graph convolutional filter: The diffusion steps affect the model’s ability to capture spatial information. A larger number of steps enables the model to better capture relationships between vertices that are distant from each other in the network. However, it may also lead to overfitting and makes the model more computationally demanding.
- (4) The dropout parameter of the model: The dropout parameter mitigates overfitting by randomly dropping a certain proportion of neurons during training.
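To make item (4) concrete, the following is a minimal sketch of inverted dropout in NumPy. It is not the implementation used in this study; the function name `dropout`, its signature, and the toy activations are assumptions for illustration.

```python
import numpy as np

def dropout(x, rate, training=True, rng=None):
    """Inverted dropout: zero a random fraction `rate` of activations.

    Surviving activations are scaled by 1 / (1 - rate) so that the expected
    value of the output matches the input. At inference time
    (training=False) the input is returned unchanged.
    """
    if not training or rate == 0.0:
        return x
    rng = np.random.default_rng() if rng is None else rng
    keep_prob = 1.0 - rate
    mask = rng.random(x.shape) < keep_prob   # True where the unit is kept
    return x * mask / keep_prob

# Hypothetical activations: a batch of 1000 samples with 128 hidden units.
activations = np.ones((1000, 128))
dropped = dropout(activations, rate=0.2, rng=np.random.default_rng(0))
```

With `rate=0.2`, roughly one fifth of the entries in `dropped` are zero and the rest are scaled up to 1.25, so the mean activation stays close to 1.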

| Case | Baseline Parameters | Hyperparameter | MAPE | MAE | RMSE | Number of Parameters |
|---|---|---|---|---|---|---|
| 1 | RNN Layers = 2, Diffusion Steps = 2, Dropout = 0 | RNN Units = 32 | 34.80% | 6.47 | 9.56 | 94,017 |
| | | RNN Units = 64 | 34.47% | 6.38 | 9.43 | 372,353 |
| | | RNN Units = 128 | 33.32% | 6.36 | 9.50 | 1,481,985 |
| | | RNN Units = 256 | 39.81% | 7.10 | 10.56 | 5,913,089 |
| 2 | RNN Units = 128, Diffusion Steps = 2, Dropout = 0 | RNN Layers = 1 | 33.36% | 6.36 | 9.38 | 498,177 |
| | | RNN Layers = 2 | 33.32% | 6.36 | 9.50 | 1,481,985 |
| | | RNN Layers = 3 | 35.46% | 6.74 | 10.01 | 2,465,793 |
| 3 | RNN Units = 128, RNN Layers = 2, Dropout = 0 | Diffusion Steps = 1 | 34.24% | 6.49 | 9.74 | 889,857 |
| | | Diffusion Steps = 2 | 33.32% | 6.36 | 9.50 | 1,481,985 |
| | | Diffusion Steps = 3 | 46.58% | 7.27 | 10.79 | 2,074,113 |
| 4 | RNN Units = 128, RNN Layers = 2, Diffusion Steps = 2 | Dropout = 0 | 33.32% | 6.36 | 9.50 | 1,481,985 |
| | | Dropout = 0.1 | 34.67% | 6.66 | 10.02 | 1,481,985 |
| | | Dropout = 0.2 | 39.08% | 6.77 | 9.88 | 1,481,985 |
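The four cases in the table vary one hyperparameter at a time around a shared baseline. The sketch below enumerates that design in Python. The `Config` class, the `SWEEPS` dictionary, and the helper `one_factor_configs` are hypothetical names introduced for illustration; only the hyperparameter values are taken from the table.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Config:
    # Baseline values shared by the four cases in the table.
    rnn_units: int = 128
    rnn_layers: int = 2
    diffusion_steps: int = 2
    dropout: float = 0.0

# Values tested for each hyperparameter, as listed in the table.
SWEEPS = {
    "rnn_units": [32, 64, 128, 256],
    "rnn_layers": [1, 2, 3],
    "diffusion_steps": [1, 2, 3],
    "dropout": [0.0, 0.1, 0.2],
}

def one_factor_configs(baseline, sweeps):
    """Vary one hyperparameter at a time, keeping the others at baseline."""
    configs = []
    for name, values in sweeps.items():
        for value in values:
            cfg = replace(baseline, **{name: value})
            if cfg not in configs:       # the baseline recurs in every case
                configs.append(cfg)
    return configs

configs = one_factor_configs(Config(), SWEEPS)
```

The table has 13 rows, but the baseline configuration appears once in each case, so the sweep amounts to 10 distinct configurations to train.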

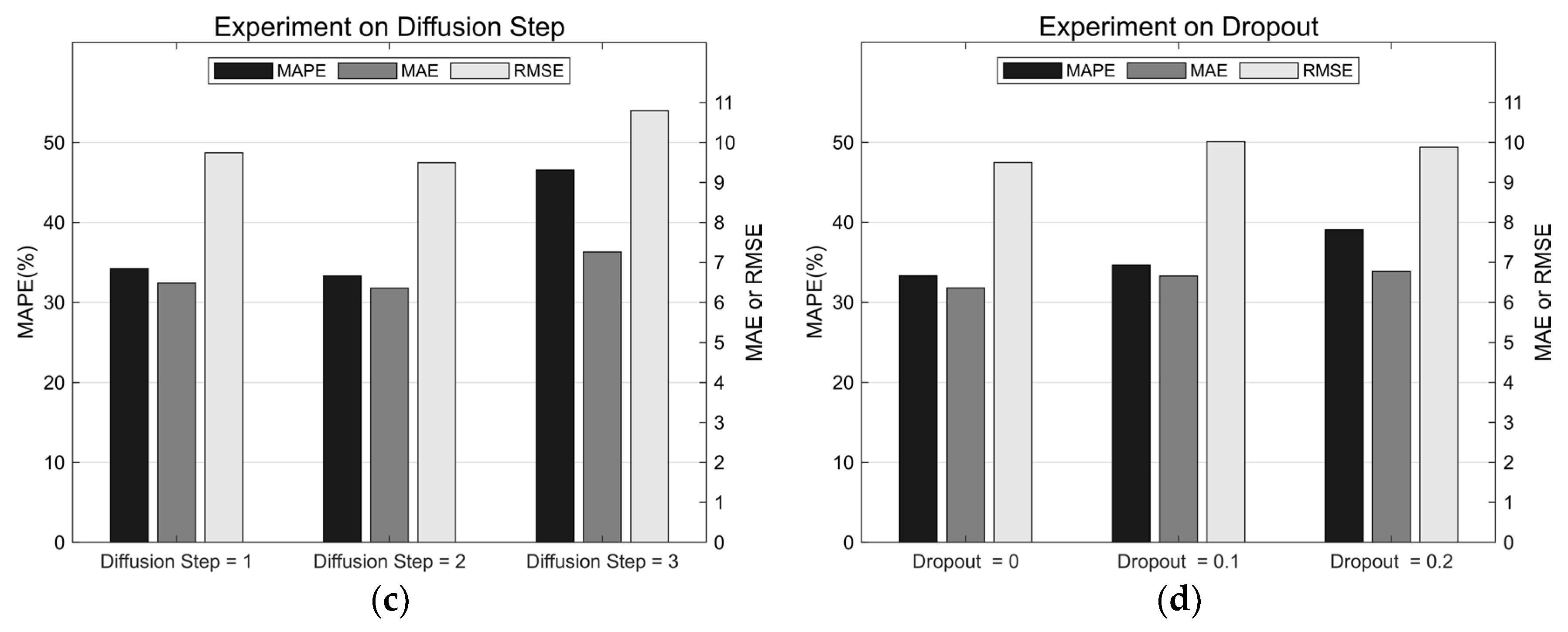

4.3. Comparison with Alternative Models

| Model | MAPE | MAE | RMSE |
|---|---|---|---|
| DCRNN | 33.32% | 6.36 | 9.50 |
| LSTM | 38.06% | 6.43 | 9.76 |
| GRU | 38.65% | 6.56 | 10.03 |
| Static | 137.45% | 22.64 | 34.81 |
| Historical Average | 112.32% | 15.07 | 22.66 |
| VAR | 89.48% | 13.25 | 20.3 |
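As a reading aid for the comparison table, the sketch below computes the relative error reduction of DCRNN against each alternative model. The figures are copied from the table; the dictionary `RESULTS` and the helper `relative_reduction` are hypothetical names introduced for illustration.

```python
# (MAPE in %, MAE, RMSE) for each model, copied from the comparison table.
RESULTS = {
    "DCRNN": (33.32, 6.36, 9.50),
    "LSTM": (38.06, 6.43, 9.76),
    "GRU": (38.65, 6.56, 10.03),
    "Static": (137.45, 22.64, 34.81),
    "Historical Average": (112.32, 15.07, 22.66),
    "VAR": (89.48, 13.25, 20.3),
}

def relative_reduction(reference, model):
    """Percentage by which `model` lowers each metric relative to `reference`."""
    return tuple((r - m) / r * 100.0 for r, m in zip(reference, model))

# Relative reduction achieved by DCRNN against every other model.
reductions = {
    name: relative_reduction(scores, RESULTS["DCRNN"])
    for name, scores in RESULTS.items()
    if name != "DCRNN"
}

for name, (d_mape, d_mae, d_rmse) in reductions.items():
    print(f"{name}: MAPE -{d_mape:.2f}%, MAE -{d_mae:.2f}%, RMSE -{d_rmse:.2f}%")
```

Against LSTM, for instance, this gives a reduction of about 12.5% in MAPE but only about 1.1% in MAE and 2.7% in RMSE, so the gap between the two models depends strongly on the metric used.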

4.4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Model | Baseline Parameters | Hyperparameter | MAPE | MAE | RMSE |
|---|---|---|---|---|---|
| LSTM | RNN Layers = 2, Dropout = 0.2 | RNN Units = 32 | 38.93% | 6.34 | 9.49 |
| | | RNN Units = 64 | 40.93% | 6.48 | 9.65 |
| | | RNN Units = 128 | 39.23% | 6.29 | 9.43 |
| | | RNN Units = 256 | 38.06% | 6.43 | 9.76 |
| GRU | RNN Layers = 2, Dropout = 0.2 | RNN Units = 32 | 38.90% | 6.33 | 9.45 |
| | | RNN Units = 64 | 44.23% | 6.64 | 9.73 |
| | | RNN Units = 128 | 39.90% | 6.37 | 9.54 |
| | | RNN Units = 256 | 38.65% | 6.56 | 10.03 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhai, X.; Shen, Y. Short-Term Bus Passenger Flow Prediction Based on Graph Diffusion Convolutional Recurrent Neural Network. Appl. Sci. 2023, 13, 4910. https://doi.org/10.3390/app13084910
