A Novel Moving Object Detection Algorithm Based on Robust Image Feature Threshold Segmentation with Improved Optical Flow Estimation

Abstract

1. Introduction

- We adopted a dense optical flow estimation algorithm that combines the pyramidal Horn-Schunck (HS) large-displacement optical flow method with the Lucas-Kanade (LK) local optical flow method to improve robustness, and we introduced DF-β, which is based on an edge-preserving strategy, as the smoothing term of the optical flow energy equation to preserve edge integrity.

- We adopted a non-quadratic penalty function and Delaunay-triangulation-based occlusion determination to reduce optical flow estimation errors caused by the ‘ghost’ problem and to improve the accuracy of moving object detection.

- We proposed a moving object detection algorithm based on optical flow thresholding at robust Harris feature points. The background model is estimated with the MSAC algorithm, which is also used to screen the robust background feature points. The optical flow values at these robust Harris feature points serve as the segmentation threshold, and the method can be applied to both static and moving cameras.
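The MSAC step in the last contribution can be illustrated with a small sketch. MSAC samples minimal point sets like RANSAC, but scores each hypothesis with a truncated squared residual, sum(min(r², T²)), rather than an inlier count. The sketch assumes an affine background model fitted from three Harris point correspondences, a 1-pixel threshold and 200 iterations; the function names `apply_affine`, `solve_affine` and `msac_affine` and all parameter values are hypothetical, and this is not the authors' implementation.

```python
import random

def apply_affine(model, pt):
    """Map a point (x, y) through x' = a*x + b*y + c, y' = d*x + e*y + f."""
    a, b, c, d, e, f = model
    x, y = pt
    return a * x + b * y + c, d * x + e * y + f

def solve_affine(src, dst):
    """Fit the six affine parameters exactly from three point pairs.
    Returns None if the three source points are (nearly) collinear."""
    (x1, y1), (x2, y2), (x3, y3) = src
    det = x1 * (y2 - y3) - y1 * (x2 - x3) + (x2 * y3 - x3 * y2)
    if abs(det) < 1e-12:
        return None

    def cramer(r1, r2, r3):
        # Cramer's rule for [[x1, y1, 1], [x2, y2, 1], [x3, y3, 1]] @ [p, q, r] = [r1, r2, r3]
        p = (r1 * (y2 - y3) - y1 * (r2 - r3) + (r2 * y3 - r3 * y2)) / det
        q = (x1 * (r2 - r3) - r1 * (x2 - x3) + (x2 * r3 - x3 * r2)) / det
        r = (x1 * (y2 * r3 - y3 * r2) - y1 * (x2 * r3 - x3 * r2) + r1 * (x2 * y3 - x3 * y2)) / det
        return p, q, r

    a, b, c = cramer(*(pt[0] for pt in dst))  # x' row of the affine matrix
    d, e, f = cramer(*(pt[1] for pt in dst))  # y' row of the affine matrix
    return a, b, c, d, e, f

def msac_affine(src, dst, thresh=1.0, iters=200, seed=0):
    """MSAC: sample minimal sets like RANSAC, but score each hypothesis with the
    truncated cost sum(min(r^2, thresh^2)) instead of counting inliers."""
    rng = random.Random(seed)
    indices = list(range(len(src)))

    def sq_residual(model, i):
        px, py = apply_affine(model, src[i])
        return (px - dst[i][0]) ** 2 + (py - dst[i][1]) ** 2

    best_model, best_cost = None, float("inf")
    for _ in range(iters):
        sample = rng.sample(indices, 3)
        model = solve_affine([src[i] for i in sample], [dst[i] for i in sample])
        if model is None:
            continue  # degenerate (collinear) sample, draw again
        cost = sum(min(sq_residual(model, i), thresh ** 2) for i in indices)
        if cost < best_cost:
            best_model, best_cost = model, cost
    if best_model is None:
        return None, []
    # Background (inlier) feature points: squared residual below the threshold
    inliers = [i for i in indices if sq_residual(best_model, i) < thresh ** 2]
    return best_model, inliers
```

Given point pairs that mostly follow the camera-induced (background) motion plus a few pairs on moving objects, `msac_affine` returns the background affine model and the indices of the background points; the truncated cost keeps gross outliers from dominating the score.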

2. Materials and Methods

2.1. Algorithm Framework

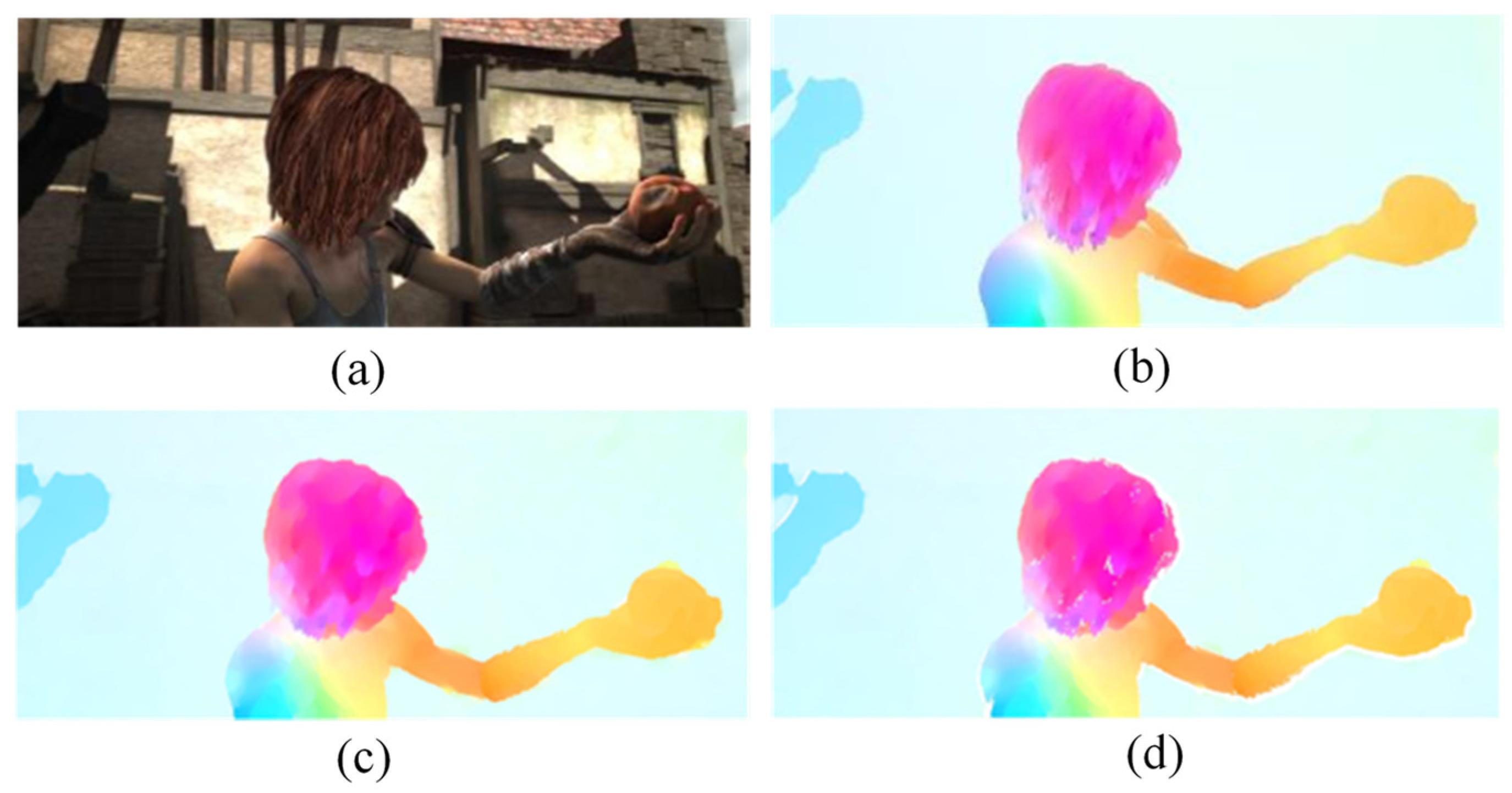

2.2. Improved Optical Flow Estimation Method

2.2.1. Improved Optical Flow Estimation Energy Equation

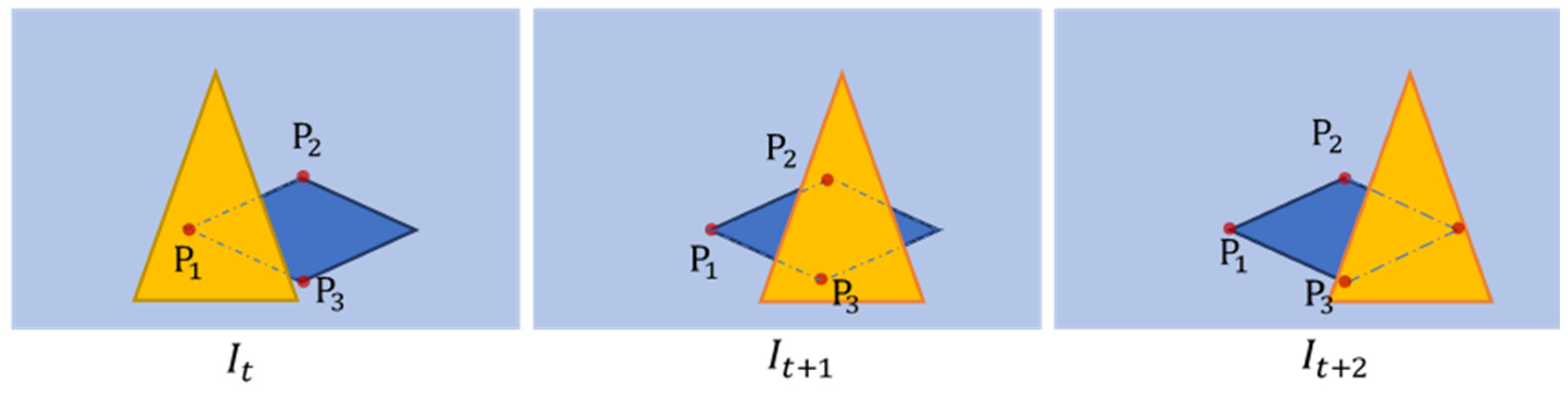
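For orientation, the classical Horn-Schunck (HS) energy that variational optical flow methods of this kind start from is given below, where I_x, I_y and I_t are the spatial and temporal image derivatives, (u, v) is the flow and α weights the smoothness term. The non-quadratic penalty mentioned in the introduction replaces the squared terms with a robust function ψ; the Charbonnier function is shown as one common choice, since it grows roughly like |s| for large residuals and so penalises motion boundaries and occlusions less than a quadratic. This is a generic textbook form under those assumptions, not the paper's exact equation, which uses the edge-preserving DF-β term as its smoothness term.

$$
E_{\mathrm{HS}}(u,v)=\iint_{\Omega}\Big[(I_x u + I_y v + I_t)^2 + \alpha^2\big(\lVert\nabla u\rVert^2 + \lVert\nabla v\rVert^2\big)\Big]\,dx\,dy
$$

$$
E_{\psi}(u,v)=\iint_{\Omega}\Big[\psi\big((I_x u + I_y v + I_t)^2\big) + \alpha\,\psi\big(\lVert\nabla u\rVert^2 + \lVert\nabla v\rVert^2\big)\Big]\,dx\,dy,\qquad \psi(s^2)=\sqrt{s^2+\varepsilon^2}
$$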

2.2.2. Occlusion Determination Based on the Delaunay Triangulation

| Algorithm 1 Improved optical flow estimation |
|---|
| Input: two images from the image sequence |
| Output: optical flow estimation result at time t |
| 1. Build the image pyramids of the two images from the bottom up. |
| 2. Calculate the initial value of the top-level optical flow. |
| 3. for k = 2 to n do: calculate the optical flow of the current layer; calculate the initial optical flow of the next layer; end |
| 4. Update the optical flow of the current image. |
| 5. Detect the edges of the optical flow field. |
| 6. Establish a Delaunay triangulation in the edge area. |
| 7. Calculate the grey difference: if … else … |
| 8. for i = 1 to K: calculate the change in brightness of the triangle point: if … else … end; end |
| 9. Estimate the optical flow after occlusion judgment. |
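Within each pyramid layer of Algorithm 1, the LK component reduces to a small least-squares problem per window: minimising the sum of (I_x u + I_y v + I_t)² over the window gives a 2×2 linear system in (u, v). A minimal pure-Python sketch of that local solve follows; the function name `lucas_kanade_window`, the `eps` singularity threshold and the flat-list input format are assumptions, and the pyramid, the HS coupling and the DF-β smoothing term are not modelled.

```python
def lucas_kanade_window(Ix, Iy, It, eps=1e-9):
    """Least-squares flow (u, v) for one window, from the brightness-constancy
    constraint Ix*u + Iy*v + It = 0 at every pixel of the window.

    Ix, Iy, It: equal-length sequences of spatial/temporal derivatives.
    Returns None when the 2x2 structure tensor is (near) singular, i.e. the
    aperture problem: the window has no texture in one direction."""
    sxx = sum(gx * gx for gx in Ix)
    sxy = sum(gx * gy for gx, gy in zip(Ix, Iy))
    syy = sum(gy * gy for gy in Iy)
    sxt = sum(gx * gt for gx, gt in zip(Ix, It))
    syt = sum(gy * gt for gy, gt in zip(Iy, It))
    det = sxx * syy - sxy * sxy
    if abs(det) < eps:
        return None
    # Solve [[sxx, sxy], [sxy, syy]] @ [u, v] = [-sxt, -syt] with the 2x2 inverse
    u = (-sxt * syy + syt * sxy) / det
    v = (-syt * sxx + sxt * sxy) / det
    return u, v
```

Returning None for a singular window mirrors why LK alone is unreliable in textureless regions, which is one motivation for pairing it with a global, HS-style smoothness term.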

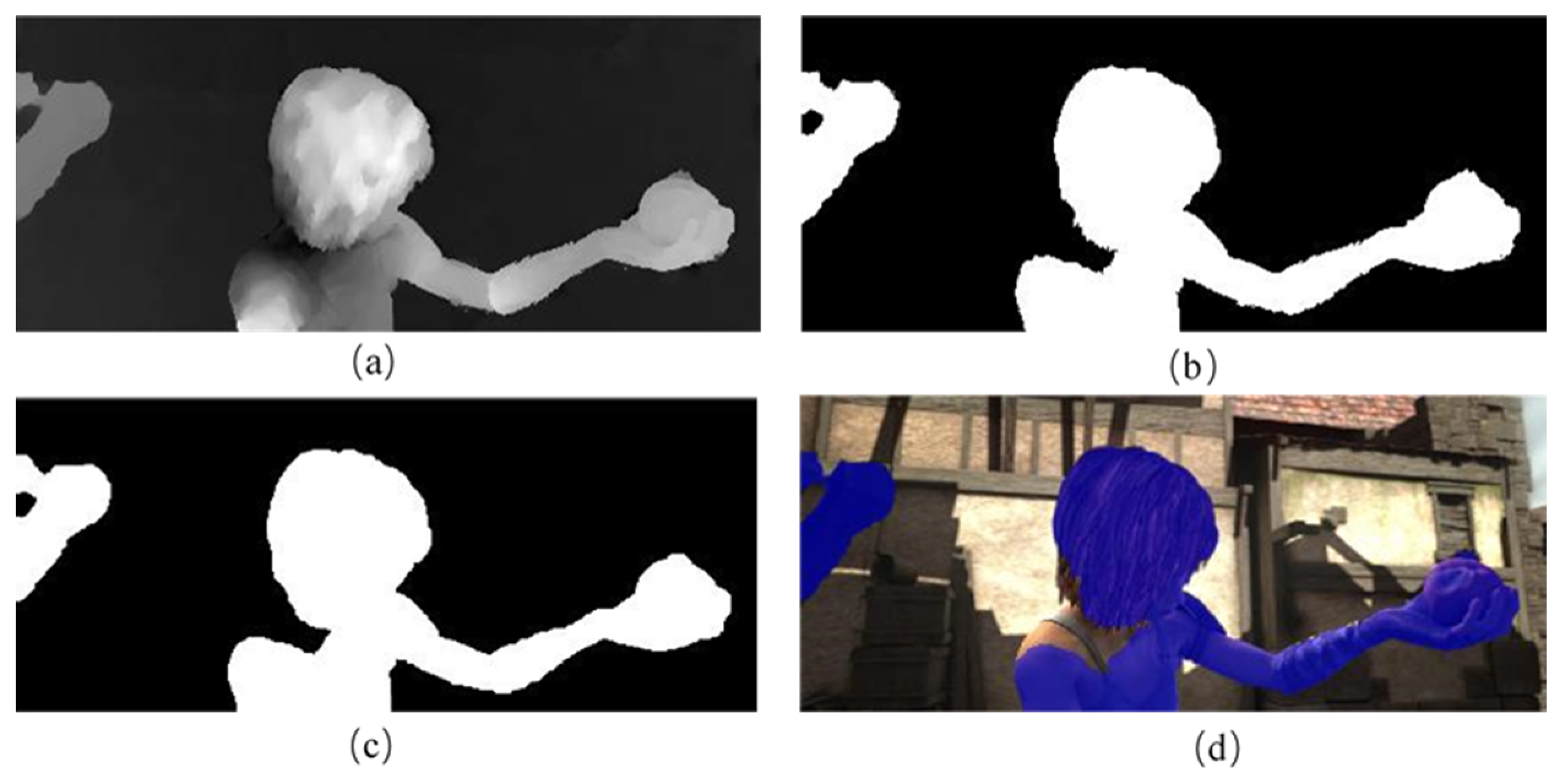

2.3. Moving Object Detection Based on Robust Feature Threshold Segmentation

| Algorithm 2 Moving object detection |
|---|
| Input: the input images and the optical flow estimation at time t |
| Output: moving object region R |
| 1. Convert the optical flow field into optical flow grey values. |
| 2. Normalize the grey image. |
| 3. Extract the Harris feature points of the image. |
| 4. Calculate the corresponding points in the other image. |
| 5. Calculate the affine transformation matrix of the background model. |
| 6. Calculate the optical flow threshold from the background robust feature points. |
| 7. Extract the binary image of the moving object region. |
| 8. Apply morphological processing. |
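The Harris feature extraction in Algorithm 2 scores each pixel by R = det(M) - k·trace(M)², where M is the structure tensor of image gradients summed over a local window; corners give a large positive R, edges a negative R and flat regions a value near zero. The pure-Python sketch below assumes central-difference gradients, a 3×3 box window instead of the usual Gaussian weighting, and k = 0.04; the name `harris_response` is hypothetical, and selecting the actual feature points (thresholding R, non-maximum suppression) is not shown.

```python
def harris_response(img, k=0.04):
    """Harris corner response R = det(M) - k * trace(M)^2 for interior pixels.

    img: 2-D list of grey values. Gradients use central differences and M is
    summed over a 3x3 box window (a simplification of the Gaussian window).
    Border pixels where the window does not fit are left at 0."""
    h, w = len(img), len(img[0])
    Ix = [[0.0] * w for _ in range(h)]
    Iy = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            Ix[y][x] = (img[y][x + 1] - img[y][x - 1]) / 2.0
            Iy[y][x] = (img[y + 1][x] - img[y - 1][x]) / 2.0
    R = [[0.0] * w for _ in range(h)]
    for y in range(2, h - 2):
        for x in range(2, w - 2):
            sxx = sxy = syy = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    gx, gy = Ix[y + dy][x + dx], Iy[y + dy][x + dx]
                    sxx += gx * gx
                    sxy += gx * gy
                    syy += gy * gy
            # det(M) - k * trace(M)^2 for M = [[sxx, sxy], [sxy, syy]]
            R[y][x] = (sxx * syy - sxy * sxy) - k * (sxx + syy) ** 2
    return R
```

On a synthetic 12×12 image whose lower-right quadrant (x ≥ 6, y ≥ 6) is bright, the maximum of R falls on the quadrant's corner pixel (6, 6), while pixels along its straight edges receive negative responses.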

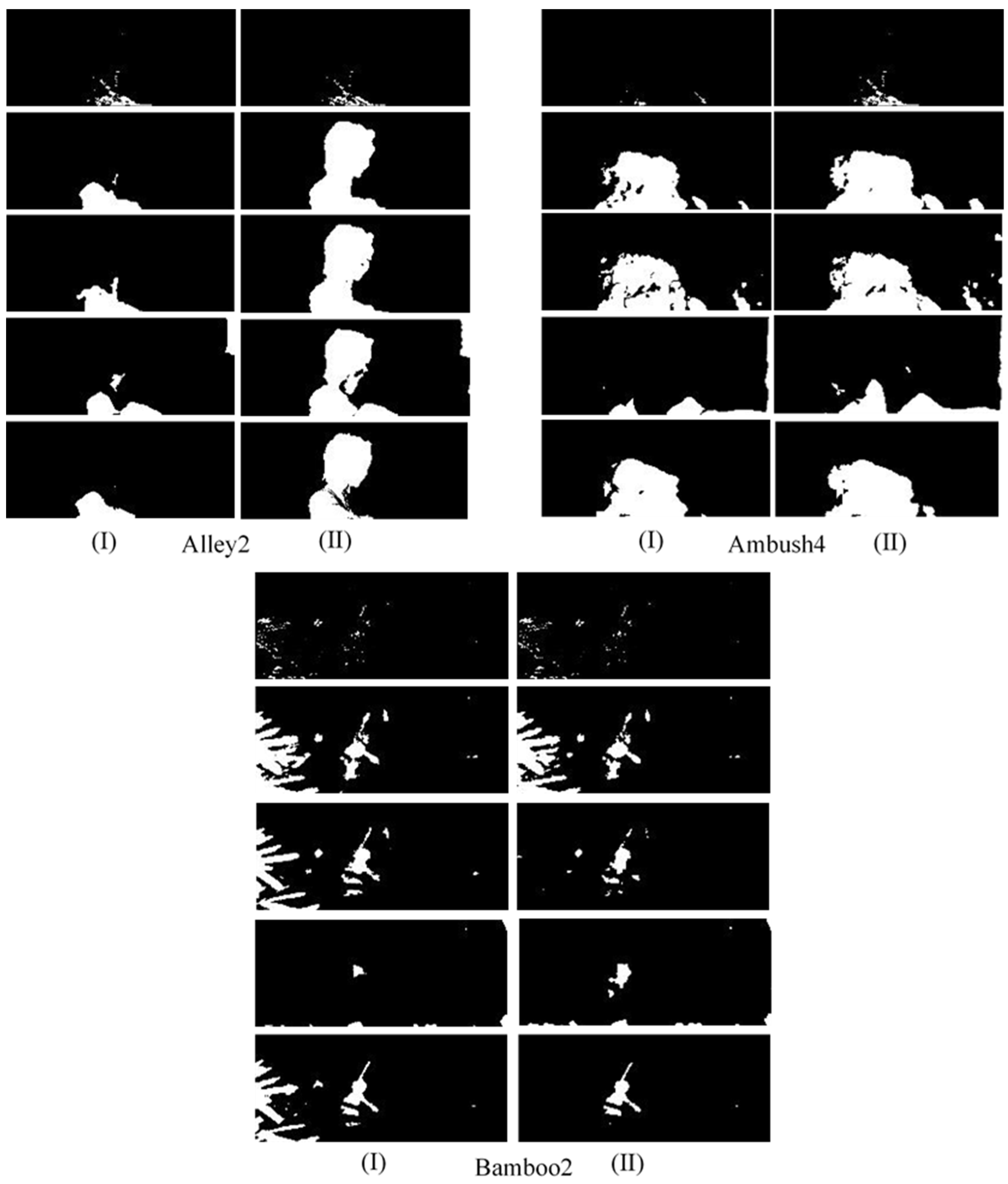

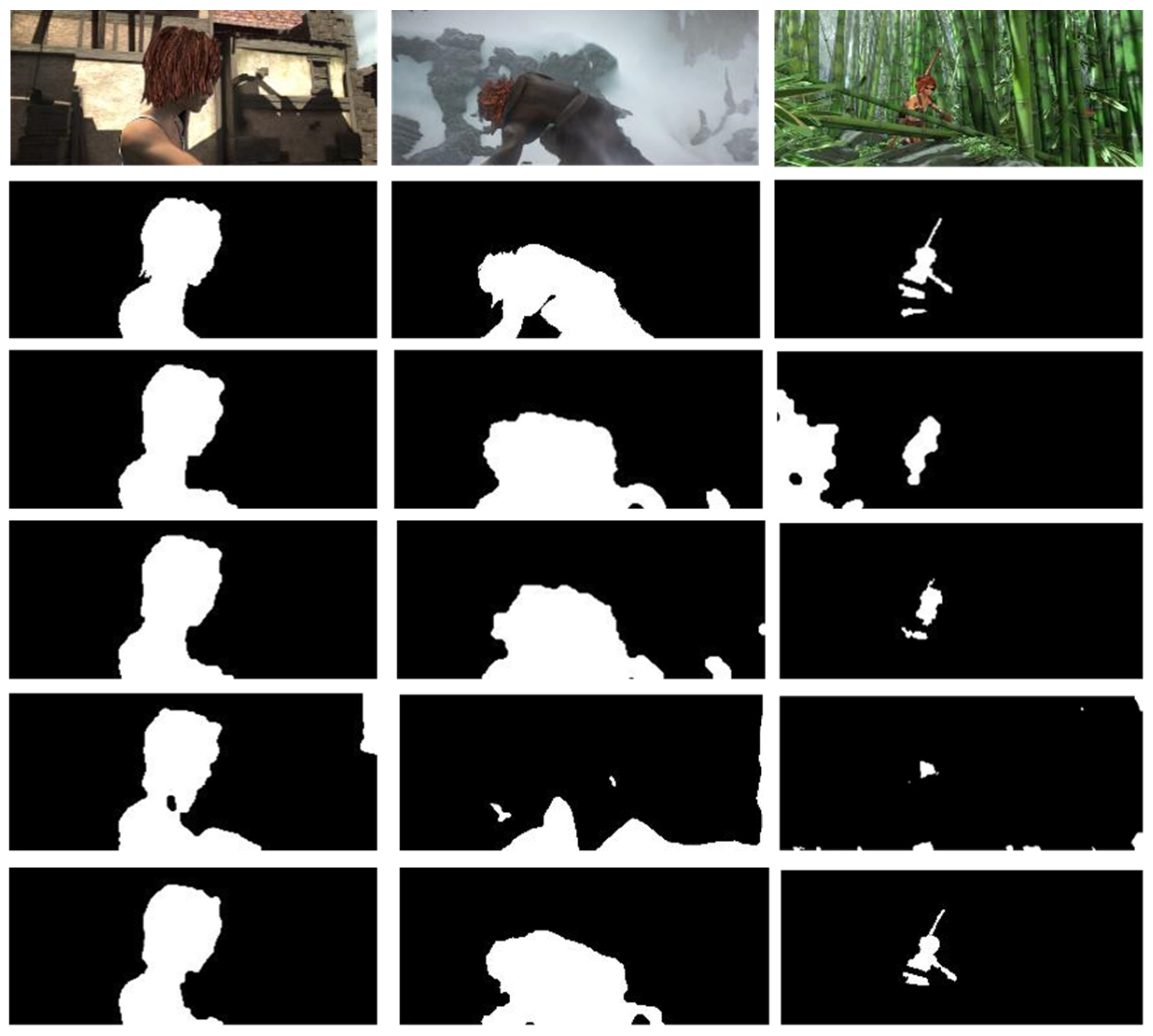

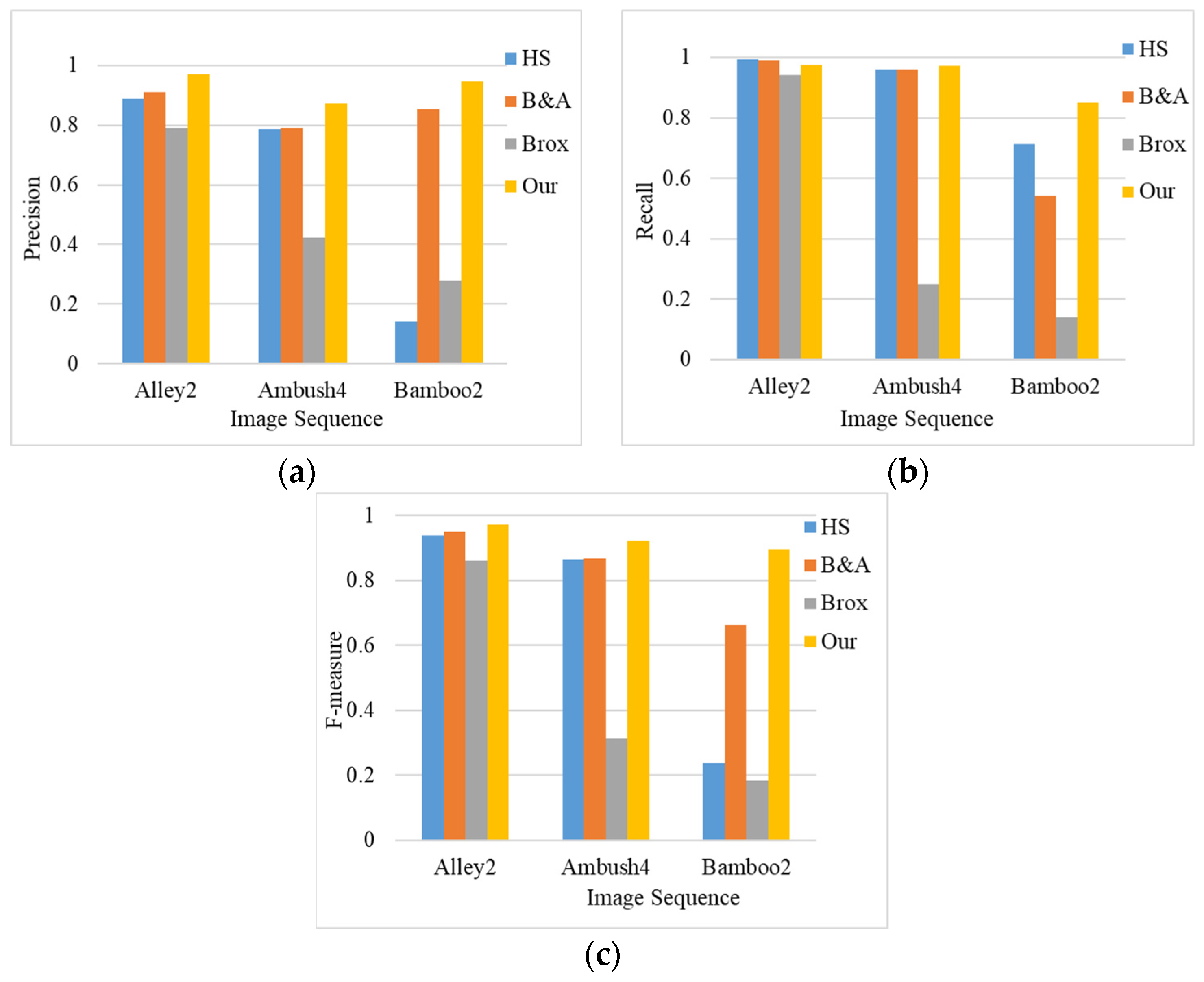
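The threshold and morphological steps of Algorithm 2 can be sketched as follows. The paper uses the optical flow value of the background robust feature points as the threshold; the sketch assumes, hypothetically, that the threshold is the largest flow magnitude found at those points (times an optional margin), thresholds the raw flow magnitude rather than the normalized grey image, and uses a 3×3 opening as the morphological processing. The names `flow_threshold`, `segment` and `opening` are hypothetical.

```python
import math

def flow_threshold(flow, bg_points, margin=1.0):
    """Hypothetical rule: largest flow magnitude at the background robust
    feature points (given as (x, y)), scaled by an assumed margin."""
    return margin * max(math.hypot(*flow[y][x]) for x, y in bg_points)

def segment(flow, thresh):
    """Binary mask: 1 where the flow magnitude exceeds the threshold."""
    return [[1 if math.hypot(u, v) > thresh else 0 for (u, v) in row] for row in flow]

def _morph(mask, op):
    # 3x3 erosion (op=all) or dilation (op=any); pixels outside the image count as 0
    h, w = len(mask), len(mask[0])

    def val(y, x):
        return mask[y][x] if 0 <= y < h and 0 <= x < w else 0

    return [[1 if op(val(y + dy, x + dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)) else 0
             for x in range(w)] for y in range(h)]

def opening(mask):
    """Morphological opening (erosion then dilation) removes isolated false positives."""
    return _morph(_morph(mask, all), any)
```

With a uniform background flow of (0.5, 0) standing in for camera motion, a 3×3 object block moving at (3, 1) and a single noisy pixel, `opening(segment(flow, flow_threshold(flow, bg_points)))` keeps the block and removes the isolated pixel.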

3. Experiment and Results

3.1. Experimental Environment and Evaluation Indicators

3.2. Experiment and Analysis

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ding, J.; Zhang, Z.; Yu, X.; Zhao, X.; Yan, Z. A Novel Moving Object Detection Algorithm Based on Robust Image Feature Threshold Segmentation with Improved Optical Flow Estimation. Appl. Sci. 2023, 13, 4854. https://doi.org/10.3390/app13084854
