1. Introduction

In recent years, artificial neural networks (ANNs) have become the best-known approach in artificial intelligence (AI) and have achieved superb performance in various application fields, such as video processing [

1], computer vision [

2], autonomous driving drones [

3], natural language processing (NLP) [

4], medical diagnosis [

5], game playing [

6], and text processing [

7]. The emergence of deep learning (DL) has again brought enormous attention to ANNs. They have become state-of-the-art models and won different machine learning (ML) challenges. Despite being inspired by the brain, these networks lack biological plausibility and have structural differences. The human brain can learn from a few samples and generalizes well. It stores a large amount of information and has amazing energy efficiency. For many years, a belief in neuroscience was that neurons encode essential information via frequencies of spikes. Recent neurophysiological findings show that the precise timing of action potentials is also necessary for effective information processing in brain systems [

8,

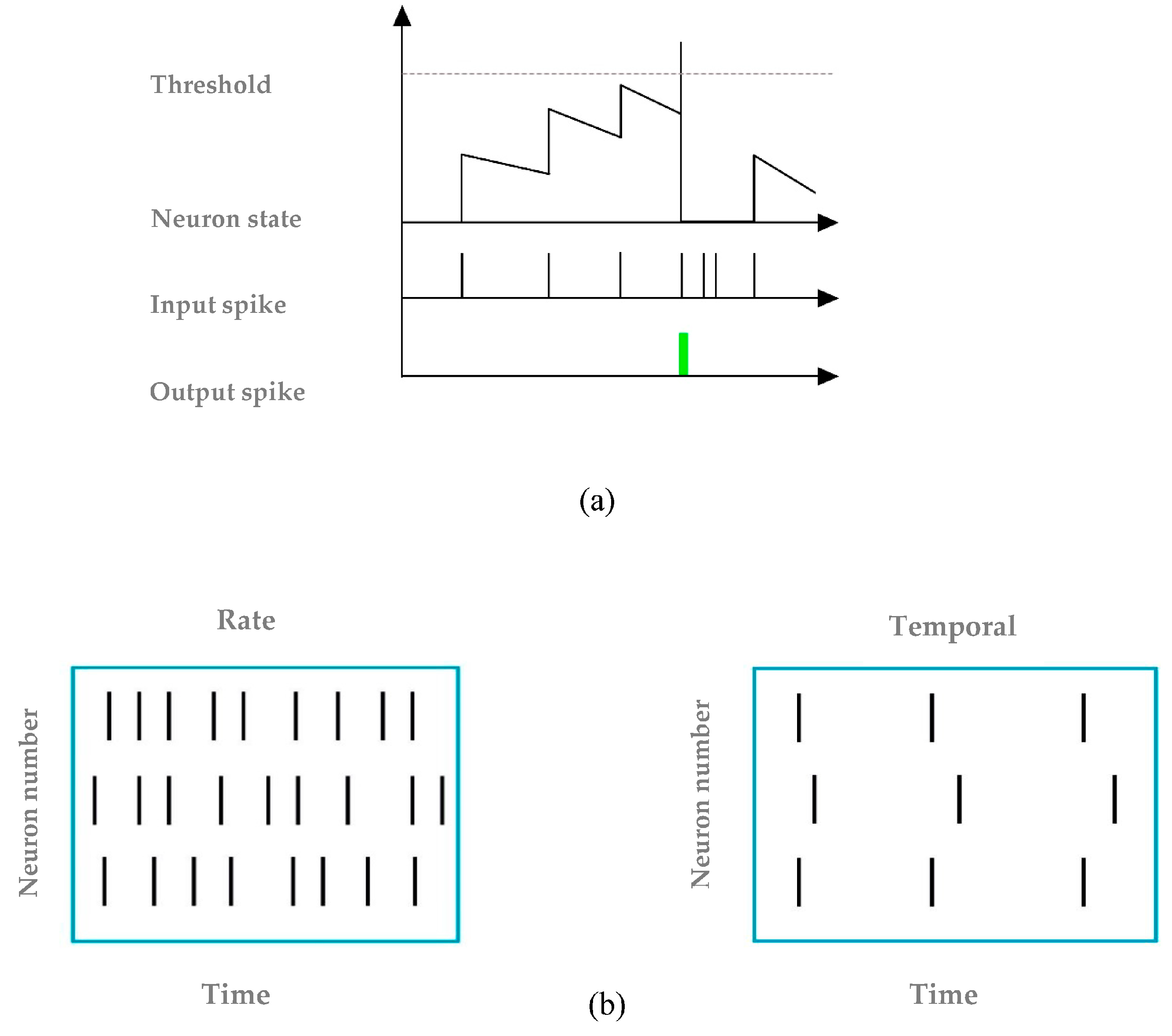

9]. ANN models can be divided into three generations based on their computational units, as shown in

Figure 1. First-generation ANNs utilize the traditional McCulloch and Pitts neuron model as computational units, in which the output value is a binary variable (‘0’ or ‘1’). The second generation is characterized by the use of continuous activation functions in neural networks, such as perceptron and Hopfield. The third generation of neural algorithms is represented by spiking neural networks (SNNs) [

10]. In this model, information is encoded into spikes inspired by neuroscience. Moreover, this neuron model mimics biological neurons and synaptic communication mechanisms based on action potentials [

11]. In spiking neurons, inputs are integrated into a membrane potential only when spikes are received and generate spikes when the membrane potential reaches a certain threshold voltage. These operations enable event-driven computation and are exceptionally energy efficient [

12,

13], which makes them appealing for real-time embedded AI systems and edge computing solutions [

14].
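The event-driven behaviour described above can be illustrated with a minimal integrate-and-fire neuron. This is a sketch under simplifying assumptions (no leak, reset to zero after each spike, illustrative threshold and weights), not the neuron model used later in this paper; the point is that the neuron does work only when an input spike, given here as a hypothetical `(time, weight)` event, actually arrives.

```python
from dataclasses import dataclass


@dataclass
class IFNeuron:
    """Non-leaky integrate-and-fire neuron driven only by input spike events."""
    threshold: float
    potential: float = 0.0

    def receive(self, weight: float) -> bool:
        """Integrate one weighted input spike; return True if the neuron fires."""
        self.potential += weight          # computation happens only on a spike event
        if self.potential >= self.threshold:
            self.potential = 0.0          # reset after emitting a spike
            return True
        return False


def run(neuron: IFNeuron, events: list[tuple[int, float]]) -> list[int]:
    """Feed (time, weight) input spikes in time order; return the output spike times."""
    out = []
    for t, w in sorted(events):
        if neuron.receive(w):
            out.append(t)
    return out


events = [(1, 0.4), (3, 0.3), (4, 0.5), (9, 0.6), (12, 0.7)]
print(run(IFNeuron(threshold=1.0), events))  # → [4, 12]
```

Between events the neuron is idle, which is the property that event-driven neuromorphic hardware exploits.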

Table 1 shows the differences between SNNs and ANNs in terms of neurons and information processing.

Existing works show that SNNs executed on neuromorphic chips rely on spike-based accumulate (AC) operations. SNNs can therefore save energy by orders of magnitude compared with ANNs, which are dominated by energy-hungry multiply-and-accumulate (MAC) operations on conventional dense computing hardware such as GPUs. Many neuromorphic processors have recently been developed to implement SNNs, such as IBM’s TrueNorth [15], Intel’s Loihi [16], and SpiNNaker [17]. SNNs have also received extensive attention in numerous applications, including brain–machine interfaces [18], machine control and navigation systems [19], event detection [20], and pattern recognition [21].
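The AC-versus-MAC argument can be made concrete with a toy layer. With binary input spikes, the weighted sum reduces to adding the weights of the inputs that spiked, so no multiplications are needed. The sketch below only counts operations for one illustrative layer (8 inputs, 4 neurons, 3 active inputs, all assumed values); it does not model hardware energy.

```python
import numpy as np

rng = np.random.default_rng(0)
n_in, n_out = 8, 4                                   # illustrative layer size
weights = rng.uniform(0.0, 1.0, size=(n_out, n_in))

# ANN layer: real-valued inputs need a multiply and an add per synapse (MAC).
activations = rng.uniform(0.0, 1.0, size=n_in)
ann_out = weights @ activations
mac_ops = n_out * n_in

# SNN layer at one time step: inputs are binary spikes, so the weighted sum is
# just the sum of the weights of the inputs that spiked (one accumulate each, AC).
spikes = np.array([1, 0, 0, 1, 0, 0, 0, 1], dtype=bool)
snn_out = weights[:, spikes].sum(axis=1)
ac_ops = n_out * int(spikes.sum())

assert np.allclose(snn_out, weights @ spikes.astype(float))  # same result, no multiplies
print(f"MAC ops: {mac_ops}, AC ops: {ac_ops}")  # prints: MAC ops: 32, AC ops: 12
```

The savings grow with spike sparsity: the fewer inputs that spike in a time step, the fewer accumulations are performed.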

Although SNNs offer many advantages, some barriers remain. Learning strategies in SNNs are intertwined with other elements of the network, including the information encoding scheme and the neuron model. Moreover, training an SNN is difficult because spiking activation functions are non-differentiable [22] (Figure 1). In recent years, tremendous effort has been devoted to SNN training algorithms, which conventionally follow two main approaches: converting ANNs to SNNs and training SNNs directly. In the first approach, a conventional ANN is fully trained with backpropagation (BP) and then converted to an equivalent model consisting of spiking neurons [23,24]. This method is often referred to as rate-based learning, because the analog outputs of the trained ANN are commonly converted to spike trains through rate encoding. Its advantage is that training happens in the ANN domain, leveraging widely used machine learning frameworks such as PyTorch and TensorFlow, which enables short training times and application to complex datasets. Although progress has been made in SNN conversion, for example threshold balancing [25,26], weight normalization [27], and a soft-reset mechanism [28], all of these methods still require a large number of time steps, which significantly increases the latency and energy consumption of the SNN. Another disadvantage of this approach is that it is not biologically plausible.
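To illustrate why rate-coded conversion needs many time steps, the sketch below encodes one analog value as a Bernoulli spike train (spike with probability x at every step) and decodes it as the mean firing rate. The Bernoulli encoder and the helper `rate_encode` are assumptions standing in for whatever rate encoder a given conversion method uses. The decoding error shrinks only roughly as 1/sqrt(T), so precise values require long spike trains, that is, high latency.

```python
import numpy as np


def rate_encode(x: float, steps: int, rng: np.random.Generator) -> np.ndarray:
    """Bernoulli rate code: at each time step, spike with probability x (x in [0, 1])."""
    return rng.random(steps) < x


rng = np.random.default_rng(42)
x = 0.37  # an analog activation to be represented by a spike train
for steps in (8, 64, 512, 4096):
    train = rate_encode(x, steps, rng)
    estimate = train.mean()          # firing rate decoded from the spike train
    print(f"T={steps:5d}  rate={estimate:.3f}  |error|={abs(estimate - x):.3f}")
```

The standard deviation of the decoded rate is sqrt(x(1 - x)/T), so halving the error requires roughly four times as many time steps.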

The second category comprises direct training methods, which include both unsupervised and supervised learning rules; in either case, they take full advantage of spiking neurons. Spike-Timing-Dependent Plasticity (STDP) is a biologically plausible, unsupervised mechanism for synaptic learning in SNNs [29,30]. STDP-based learning rules modify the weight of a synapse connecting a pair of pre- and postsynaptic neurons according to the degree of correlation between their spike times [31]. Diehl and Cook [32] used STDP to train a two-layer SNN with lateral inhibition in an unsupervised manner. Masquelier and Thorpe [33] applied STDP to multilayer networks inspired by the ventral visual pathway to enable unsupervised feature learning. Although unsupervised STDP training is attractive for real-time hardware implementation on several emerging, non-von Neumann architectures, it is not yet suitable for challenging cognitive tasks because of its limited accuracy and scalability.
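The classic pair-based form of STDP with exponential time windows can be sketched as follows. The amplitudes `a_plus`/`a_minus`, the time constants, and the clipping of weights to [0, 1] are illustrative assumptions, not values from the cited works. A presynaptic spike shortly before a postsynaptic spike strengthens the synapse, the reverse order weakens it, and the effect decays with the time difference.

```python
import math


def stdp_dw(t_pre: float, t_post: float,
            a_plus: float = 0.01, a_minus: float = 0.012,
            tau_plus: float = 20.0, tau_minus: float = 20.0) -> float:
    """Pair-based exponential STDP weight change for one pre/post spike pair.

    Pre before post (causal pair) -> potentiation; post before pre -> depression.
    """
    dt = t_post - t_pre
    if dt > 0:
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0  # simultaneous spikes: no change in this simplified rule


w = 0.5
for t_pre, t_post in [(10.0, 15.0), (30.0, 25.0), (50.0, 51.0)]:
    w = min(1.0, max(0.0, w + stdp_dw(t_pre, t_post)))  # keep the weight in [0, 1]
    print(f"pre={t_pre:5.1f} post={t_post:5.1f} -> w={w:.4f}")
```

Because the update depends only on locally available spike times, the rule needs no error signal, which is why it is unsupervised and hardware friendly.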

Supervised learning is the most widely used learning paradigm in traditional ANNs. However, directly training SNNs with backpropagation-based supervised learning methods is challenging because of the discontinuous, non-differentiable nature of the spiking neuron [34]. To circumvent this problem, several approaches have been proposed, such as SpikeProp [35], the Tempotron learning rule [36], and ReSuMe [37]. SpikeProp [35] is the pioneering spike-based backpropagation method for multilayer SNNs. Tempotron [36] performs binary classification tasks in analogy to a perceptron. The remote supervised method (ReSuMe) [37] and the spike pattern association neuron (SPAN) [38] are classical spike sequence learning rules. However, these methods suffer from two major drawbacks, namely overfitting and falling into local minima, which limit the practical use of SNNs.
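To make the non-differentiability concrete, consider a simplified spiking nonlinearity (the symbols are introduced here for illustration and are not taken from the paper's equations): the output spike S is a Heaviside step H of the membrane potential V relative to a threshold ϑ. Its derivative is a Dirac delta, so the chain rule gives a gradient that is zero for almost every weight setting and undefined exactly at the threshold:

```latex
S = H(V - \vartheta) =
\begin{cases} 1, & V \ge \vartheta \\ 0, & V < \vartheta \end{cases}
\qquad
\frac{\partial S}{\partial V} = \delta(V - \vartheta) = 0 \quad \text{for all } V \neq \vartheta

\frac{\partial \mathcal{L}}{\partial w}
  = \frac{\partial \mathcal{L}}{\partial S}\,
    \frac{\partial S}{\partial V}\,
    \frac{\partial V}{\partial w}
  = 0 \quad \text{almost everywhere}
```

Gradient-free optimizers, such as the metaheuristics used in this work, only need to evaluate the loss, so they sidestep this problem.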

In recent years, metaheuristic algorithms have emerged as promising methods for training ANNs and deep learning models [39]. In contrast to gradient-based algorithms, they can more easily escape local minima, since their design balances two conflicting criteria: exploring the search space and exploiting the most promising regions found so far [40]. They can be used to optimize ANN components such as hyperparameters, weights, the number of layers, the number of neurons, and the learning rate. To overcome the drawbacks of gradient descent in training SNNs, the use of metaheuristic algorithms for SNN learning has received increasing attention. Pavlidis et al. (2005) applied a parallel differential evolution strategy to train supervised SNNs [41]; they tested their model on the XOR problem, which did not reveal its advantages. Vazquez and Garro (2011) used particle swarm optimization (PSO) to train SNNs for linear and non-linear classification problems [42] and observed that input patterns of the same class produced the same firing rate. In another work, Vazquez and Garro (2015) trained SNNs with an artificial bee colony algorithm [43]. However, the accuracy was still lower than state-of-the-art performance on benchmark datasets.
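Because differential evolution was among the first metaheuristics applied to SNNs, it serves here as an example of how a population-based method balances exploration and exploitation. The sketch is a minimal textbook DE/rand/1/bin minimizing Rastrigin, a standard test function with many local minima; it is not the parallel variant of [41] nor the configuration used in this paper, and the values F = 0.5, CR = 0.9, a population of 30, and 200 generations are illustrative assumptions.

```python
import numpy as np


def rastrigin(x: np.ndarray) -> float:
    """Multimodal test function: many local minima, global minimum 0 at x = 0."""
    return float(10 * x.size + np.sum(x**2 - 10 * np.cos(2 * np.pi * x)))


def differential_evolution(f, dim, bounds=(-5.12, 5.12), pop_size=30,
                           f_weight=0.5, cr=0.9, iters=200, seed=0):
    """Minimal DE/rand/1/bin that minimizes f; returns (best_x, best_f)."""
    rng = np.random.default_rng(seed)
    lo, hi = bounds
    pop = rng.uniform(lo, hi, size=(pop_size, dim))
    fit = np.array([f(p) for p in pop])
    for _ in range(iters):
        for i in range(pop_size):
            # Exploration: mutate with the scaled difference of two random individuals.
            others = [j for j in range(pop_size) if j != i]
            a, b, c = pop[rng.choice(others, size=3, replace=False)]
            mutant = np.clip(a + f_weight * (b - c), lo, hi)
            # Binomial crossover; at least one gene always comes from the mutant.
            mask = rng.random(dim) < cr
            mask[rng.integers(dim)] = True
            trial = np.where(mask, mutant, pop[i])
            # Exploitation: greedy selection keeps the trial only if it is no worse.
            f_trial = f(trial)
            if f_trial <= fit[i]:
                pop[i], fit[i] = trial, f_trial
    best = int(np.argmin(fit))
    return pop[best], float(fit[best])


x_best, f_best = differential_evolution(rastrigin, dim=2)
print(f"best x = {x_best}, f = {f_best:.6f}")
```

A gradient method started in one of Rastrigin's basins stays there; the difference-vector mutations let the population jump between basins, while greedy selection ensures no individual ever gets worse.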

The main research question of this work is: ‘How can a supervised learning method be developed that addresses the challenges of gradient descent with spiking neurons?’ In this paper, a novel metaheuristic-based supervised learning method for SNNs, based on adapting a temporal error function, is introduced for solving classification problems. The procedure is as follows: each input pattern is converted into spikes, the Integrate-and-Fire neurons are stimulated and emit a spike when they reach their threshold, and, finally, the temporal error function is computed. We used seven well-known algorithms, ranging from simple to modern ones: evolutionary algorithms (EAs), namely the Genetic Algorithm (GA), Differential Evolution (DE), and Grammatical Evolution (GE); swarm intelligence algorithms (SIAs), namely Particle Swarm Optimization (PSO) and Artificial Bee Colony (ABC); a nature-based method, the Cuckoo Search algorithm (CS); and Harmony Search (HS), from the family of physical algorithms. Relative target firing times were used instead of fixed, predetermined ones. The key benefit is that our proposed model makes predictions with only a single output spike, which simplifies the computation of the error function. The main finding is that the metaheuristic algorithms effectively avoided a significant drawback of gradient descent training, namely falling into local minima. As a result, our proposed approach demonstrated competitive accuracy on UCI datasets. All seven metaheuristic algorithms converged; the CS algorithm achieved the highest accuracy and converged fastest on most of the evaluated datasets. To the best of our knowledge, this is the first study to investigate seven metaheuristic algorithms for training SNNs. The contributions of this paper are as follows:

This work proposes a novel metaheuristic-based supervised learning method for multilayer SNNs to classify UCI datasets.

This work uses seven well-known metaheuristic algorithms for the training of SNNs in order to overcome the issue of falling into local minima associated with gradient descent training.

To simplify the computation of the error function, our proposed model makes predictions with only a single output spike. Moreover, this work uses relative target firing times instead of fixed and predetermined ones.

To evaluate the efficiency of our proposed model, the trained network was tested on five benchmark classification datasets. The model performance was comparable to state-of-the-art methods, with test accuracies of 0.9858, 0.9768, 0.9591, 0.7752, and 0.6871 on the Iris, Cancer, Wine, Diabetes, and Liver datasets, respectively. Among the seven metaheuristic algorithms, CS showed the most satisfactory performance.

The rest of the paper is organized as follows.

Section 2 describes a theoretical framework and concepts of metaheuristic algorithms.

Section 3 explains the SNN model and the proposed methodology for training multilayer spiking neural networks with temporal error.

Section 4 describes the experimental configuration of this work and compares the efficiency of the proposed approaches against several existing metaheuristic and non-metaheuristic SNN learning models.

Section 5 presents a discussion of the results. In the last section, conclusions are drawn and future research directions are indicated.

4. Experimental Results and Evaluation

To evaluate the accuracy of the proposed approach, five experiments were conducted. The datasets were obtained from the UCI Machine Learning Repository [64], namely Pima Indians Diabetes (Diabetes), Iris Plant, Breast Cancer, Wine, and Liver. Each dataset was split into two groups of nearly equal size: a training set and a test set.

Table 2 shows the characteristics of the UCI benchmark datasets that were used in this work.

4.1. Iris Plant

The Iris dataset contains 150 samples in three categories (Iris setosa, Iris virginica, and Iris versicolor), each with 50 samples described by four attributes. The dataset was divided into 75 samples for training and 75 for testing. The samples were encoded into spikes within the simulation time window.

4.2. Breast Cancer

The Breast Cancer Wisconsin (BCW) dataset contains 683 instances (the 699 original instances minus 16 with missing values), divided into two categories (benign and malignant cell tissues); the original release has 458 benign (65.5%) and 241 malignant (34.5%) samples. Each instance has nine features, which were encoded into spikes within the simulation time window. The dataset was split into training and test sets with 342 and 341 samples, respectively.

4.3. Diabetes

The Diabetes dataset contains 768 samples belonging to two classes (with or without signs of diabetes), described by eight quantitative variables. The dataset was divided into training and test sets of 384 samples each.

4.4. Wine

The Wine dataset contains 178 instances in three categories, described by 13 features. The dataset was divided into training and test sets with 105 and 73 samples, respectively. The samples were encoded into spikes within the simulation time window.

4.5. Liver

The Liver dataset contains 345 samples belonging to two classes, described by six quantitative variables. The dataset was divided into training and test sets with 200 and 145 samples, respectively. The samples were encoded into spikes within the simulation time window.

All experiments and performance analyses were implemented in Python and run on an Intel Core i7 CPU (1.60 GHz) with 16 GB RAM. The threshold of all neurons in all layers was set to 100. We set the maximum simulation time to 256 and initialized the synaptic weights with random values drawn from a uniform distribution over [0, 1]. The parameters specific to each metaheuristic algorithm are presented in Table 3. We tested various values for each parameter and selected those that led to the highest accuracy. Each algorithm was run for 20 iterations.

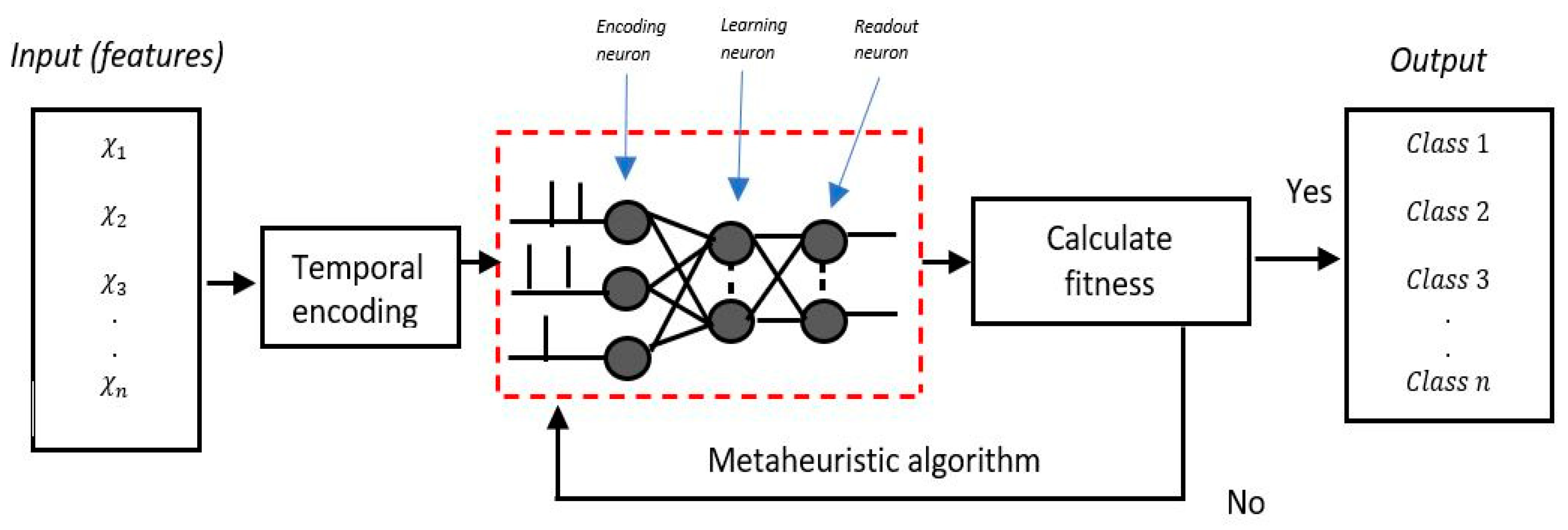

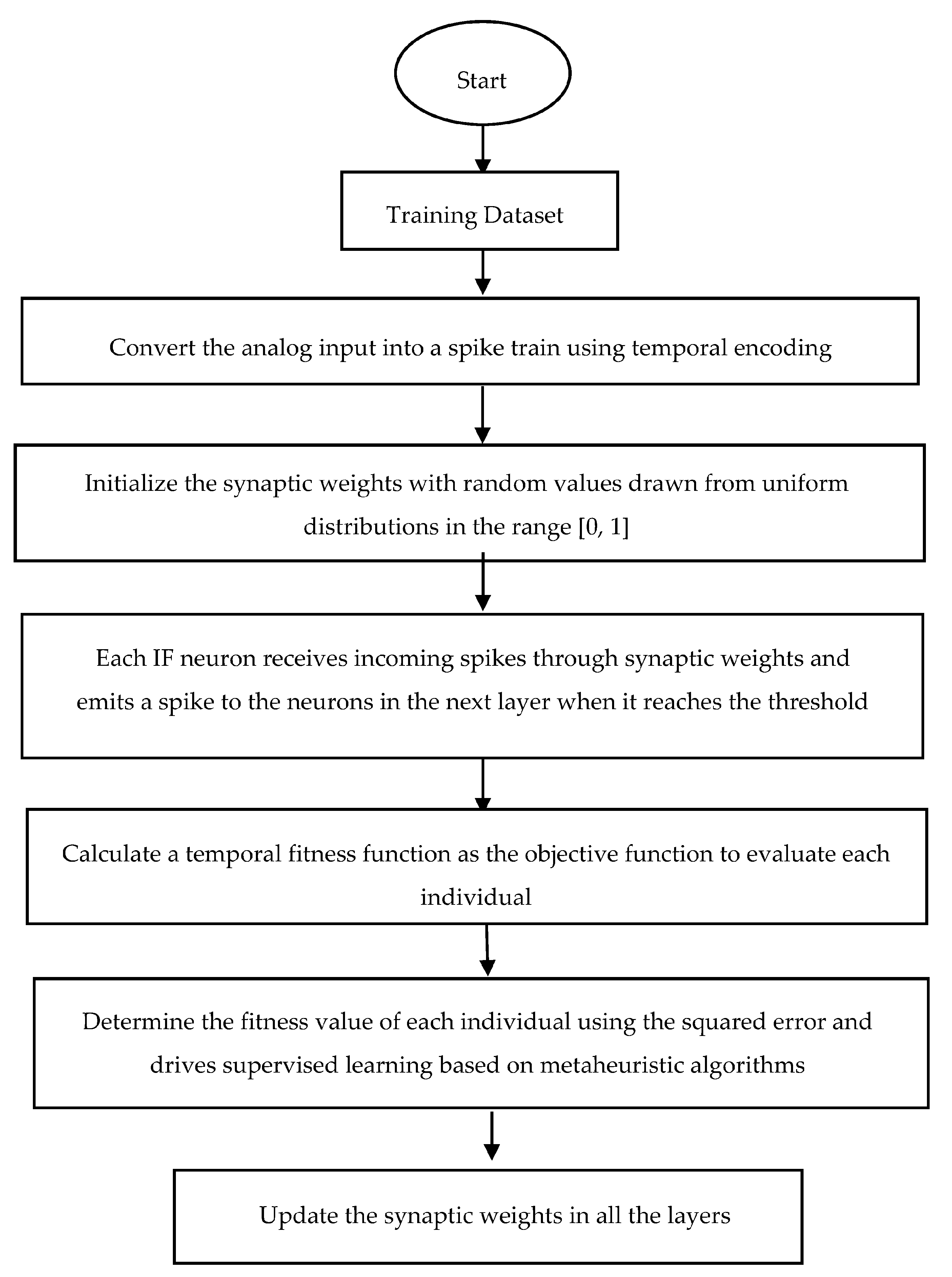

In this work, we present a metaheuristic-based supervised learning method for SNNs, as shown in Figure 5. The following sequential steps describe its learning procedure:

- (1)

The first step is to transform the analog input into a spike train. Here, we used temporal coding for the input layer. These spikes are then fed to the first layer of the network, where each IF neuron receives incoming spikes through synaptic weights and, when its membrane potential reaches the threshold, emits a spike to the neurons in the next layer (Equation (1)). We initialized the input-hidden synaptic weights with random values in the range [0, 1].

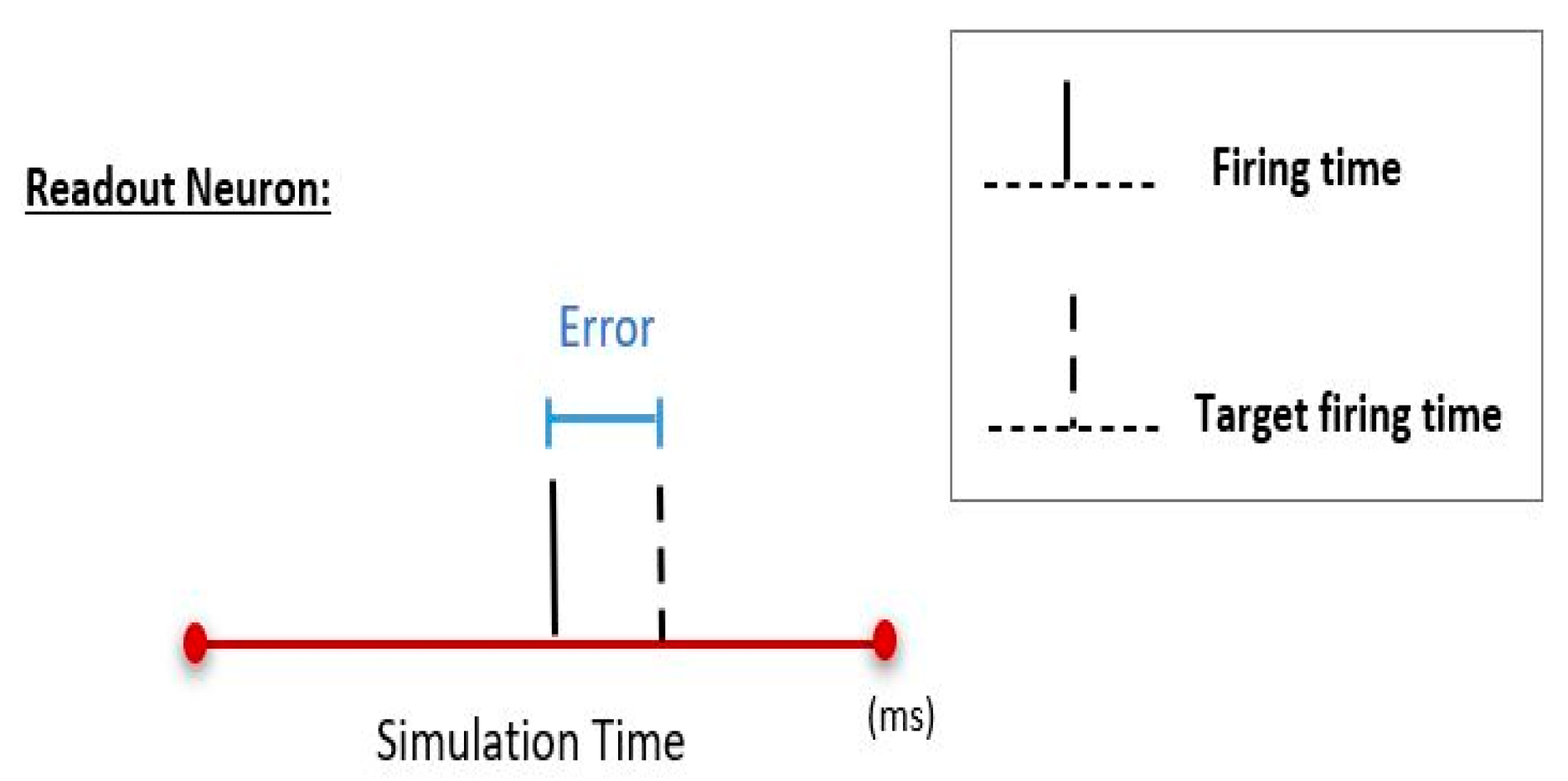

- (2)

For an N-class classification task, each output neuron was assigned to a different class. After the forward pass, the output neuron in the readout layer that fired first determined the class of the stimulus. Therefore, to train the network in the backward pass, we defined a temporal fitness function for the output layer by comparing its actual firing times with the target firing times (Equation (3)).

- (3)

During the training phase, we used metaheuristic algorithms to update the synaptic weights, with the temporal fitness function serving as the objective function for evaluating each individual. The next step is to calculate the squared error over the N classes using Equation (4). This error determines the fitness value of each individual and drives supervised learning based on the metaheuristic algorithms. After the forward and backward steps on the current input are completed, the membrane potentials of all IF neurons are reset to zero, and the network is ready to process the next input. To evaluate the performance of the system after the training phase, the learned weights were fixed and the test datasets were fed into the network to calculate the classification accuracy. As in training, the first output neuron to fire determines the network's decision.
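The three steps above can be sketched in Python, the language used for the experiments. This is a hedged sketch, not the authors' code: Equations (1), (3), and (4) are not reproduced in this section, so the following are assumptions. Features scaled to [0, 1] are latency-coded (larger values spike earlier); neurons are non-leaky IF units that fire at most once per input; and the relative targets follow one plausible scheme in which the earliest output spike time tau is the target for the correct neuron, while wrong neurons firing before tau + gamma are pushed back to tau + gamma (gamma = 3 is hypothetical). The maximum simulation time of 256 matches the setting reported in the experiments; all helper names are hypothetical. The toy usage passes a threshold of 1.0 rather than 100, because with four inputs and weights in [0, 1] the membrane potential can never exceed 4.

```python
import numpy as np

T_MAX = 256  # maximum simulation time reported in the experiments


def encode(x: np.ndarray) -> np.ndarray:
    """Hypothetical latency code for features scaled to [0, 1]: larger values spike earlier."""
    return np.floor((1.0 - x) * (T_MAX - 1)).astype(int)


def if_layer(in_times: np.ndarray, w: np.ndarray, threshold: float) -> np.ndarray:
    """Non-leaky IF layer in which each neuron fires at most once.

    in_times: spike time of each presynaptic neuron (T_MAX means it never fired).
    w: weight matrix of shape (n_out, n_in).
    Returns each neuron's first threshold-crossing time, or T_MAX if it never fires.
    """
    out = np.full(w.shape[0], T_MAX)
    potential = np.zeros(w.shape[0])                 # fresh potentials = reset per input
    for t in range(T_MAX):
        potential += w[:, in_times == t].sum(axis=1)   # integrate spikes arriving at t
        newly_fired = (potential >= threshold) & (out == T_MAX)
        out[newly_fired] = t
    return out


def unpack(flat_w: np.ndarray, shapes):
    """Split one flat candidate vector (one metaheuristic individual) into layer weights."""
    w1_size = shapes[0][0] * shapes[0][1]
    return flat_w[:w1_size].reshape(shapes[0]), flat_w[w1_size:].reshape(shapes[1])


def forward(flat_w, x, shapes, threshold):
    """Input -> hidden -> output spike times for one sample."""
    w1, w2 = unpack(flat_w, shapes)
    return if_layer(if_layer(encode(x), w1, threshold), w2, threshold)


def temporal_error(out_times: np.ndarray, label: int, gamma: int = 3) -> float:
    """Squared temporal error against *assumed* relative targets (normalized by T_MAX)."""
    tau = out_times.min()                            # earliest output spike
    target = np.maximum(out_times, tau + gamma).astype(float)
    target[label] = tau                              # correct neuron should fire first
    return float(np.sum(((target - out_times) / T_MAX) ** 2))


def fitness(flat_w, X, y, shapes, threshold) -> float:
    """Mean temporal error over a dataset: the objective a metaheuristic would minimize."""
    return float(np.mean([temporal_error(forward(flat_w, x, shapes, threshold), label)
                          for x, label in zip(X, y)]))


def predict(flat_w, x, shapes, threshold) -> int:
    """The output neuron that fires first gives the class (ties -> lowest index)."""
    return int(np.argmin(forward(flat_w, x, shapes, threshold)))


# Toy usage: 4 features, 10 hidden and 3 output neurons, random weights in [0, 1].
rng = np.random.default_rng(0)
shapes = [(10, 4), (3, 10)]
X = rng.uniform(0.0, 1.0, size=(6, 4))
y = rng.integers(0, 3, size=6)
flat_w = rng.uniform(0.0, 1.0, size=10 * 4 + 3 * 10)
print(fitness(flat_w, X, y, shapes, threshold=1.0), predict(flat_w, X[0], shapes, 1.0))
```

A metaheuristic treats each flat weight vector as one individual and minimizes `fitness`; flattening all layers into one vector is what lets any of the seven algorithms be applied without modification.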

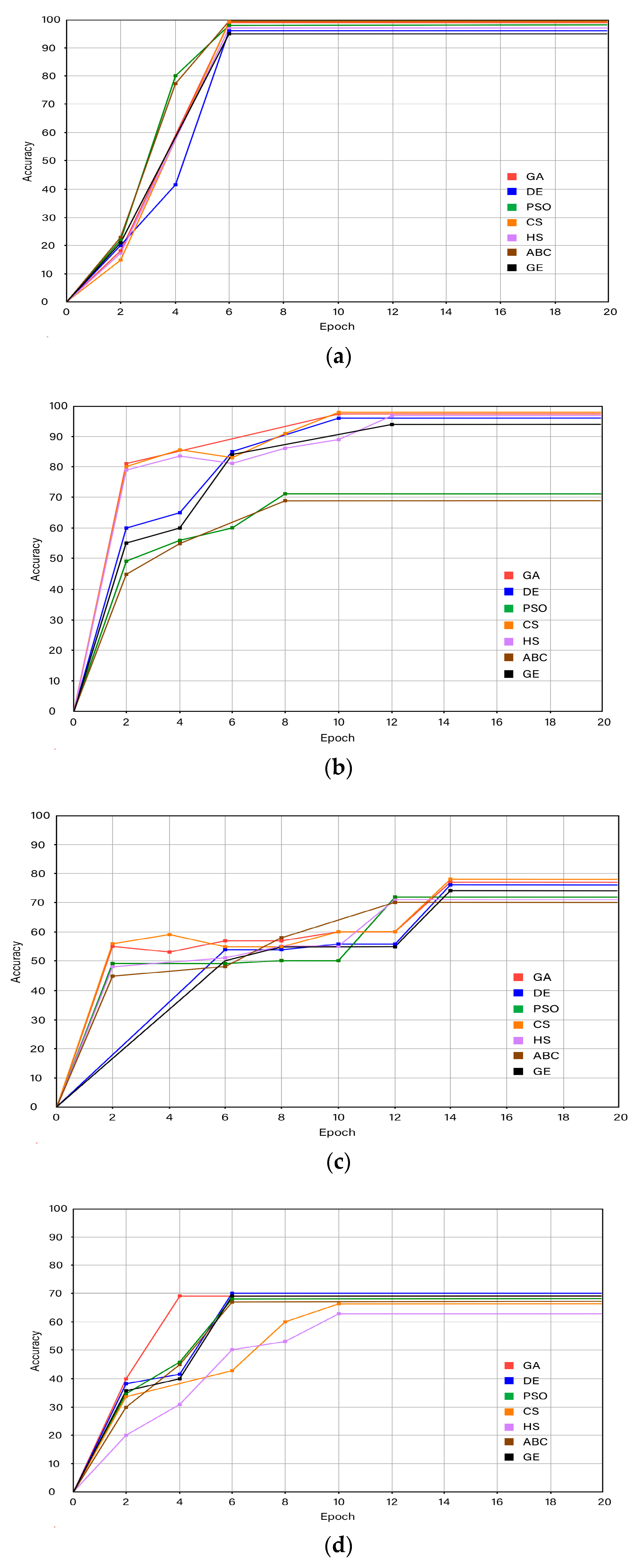

The learning curves of the proposed method are illustrated in Figure 6, which shows how the learning accuracy changes over 20 iterations. As the number of iterations increases, all algorithms eventually converge. The learning curves of the algorithms are shown in different colors: red for GA, green for PSO, purple for HS, blue for DE, orange for CS, black for GE, and brown for ABC. The learning curves show that CS outperformed the other algorithms on four of the five datasets, namely Iris, Cancer, Wine, and Diabetes. For example, for the Iris dataset, the accuracy increased from 0 to 15 at iteration 2, then rose to 99.76 at iteration 6 and remained unchanged for the remaining iterations.

The classification accuracy (CA) obtained with the different metaheuristic algorithms was calculated as:

$$\mathrm{CA} = \frac{n}{N}$$

where N and n are the total number of samples and the number of correctly classified samples, respectively.

5. Discussion

The performance of the metaheuristic-based supervised learning was evaluated on five datasets from the UCI Machine Learning Repository: Pima Indians Diabetes (Diabetes), Iris Plant, Breast Cancer, Wine, and Liver. The population size and the number of iterations were set to 100 and 20, respectively, for all datasets. We investigated the performance of seven metaheuristic algorithms, namely the Cuckoo Search Algorithm, Genetic Algorithm, Differential Evolution, Harmony Search, Particle Swarm Optimization, Grammatical Evolution, and Artificial Bee Colony, as training algorithms for SNNs. We employed a relative approach to estimate target firing times instead of using predefined target firing times.
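Since CS performed best on most datasets, a minimal cuckoo search is sketched below for readers unfamiliar with it. It is a common textbook form (Lévy-flight steps drawn with Mantegna's algorithm, followed by abandonment of part of the nests), not necessarily the exact variant used here; the population of 100 nests and 20 iterations mirror the settings stated above, while `pa = 0.25` and `alpha = 0.01` are typical defaults assumed for illustration (the actual values are in Table 3). In the SNN setting, `f` would be the temporal error of the network for a flattened weight vector in [0, 1]; the toy objective below is a hypothetical stand-in.

```python
import math

import numpy as np


def levy_steps(rng: np.random.Generator, shape, beta: float = 1.5) -> np.ndarray:
    """Draw heavy-tailed Levy-flight step lengths with Mantegna's algorithm."""
    sigma_u = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
               / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))) ** (1 / beta)
    u = rng.normal(0.0, sigma_u, shape)
    v = rng.normal(0.0, 1.0, shape)
    return u / np.abs(v) ** (1 / beta)


def cuckoo_search(f, dim, bounds=(0.0, 1.0), n_nests=100, pa=0.25, alpha=0.01,
                  iters=20, seed=0):
    """Minimal cuckoo search that minimizes f; returns (best_x, best_f, history)."""
    rng = np.random.default_rng(seed)
    lo, hi = bounds
    nests = rng.uniform(lo, hi, size=(n_nests, dim))
    fit = np.array([f(x) for x in nests])
    history = []
    for _ in range(iters):
        # 1) Levy flights: step size scaled by each nest's distance to the current best.
        best = nests[np.argmin(fit)].copy()
        steps = alpha * levy_steps(rng, nests.shape) * (nests - best)
        cand = np.clip(nests + steps * rng.normal(size=nests.shape), lo, hi)
        cand_fit = np.array([f(x) for x in cand])
        better = cand_fit < fit
        nests[better], fit[better] = cand[better], cand_fit[better]

        # 2) Abandonment: each component is perturbed with probability pa, using the
        #    difference of two randomly permuted nests (a common simplification).
        mask = rng.random(nests.shape) < pa
        diff = nests[rng.permutation(n_nests)] - nests[rng.permutation(n_nests)]
        cand = np.clip(nests + rng.random() * diff * mask, lo, hi)
        cand_fit = np.array([f(x) for x in cand])
        better = cand_fit < fit
        nests[better], fit[better] = cand[better], cand_fit[better]

        history.append(float(fit.min()))
    best_i = int(np.argmin(fit))
    return nests[best_i], float(fit[best_i]), history


# Hypothetical stand-in objective: squared distance to a hidden weight vector.
target = np.linspace(0.1, 0.9, 8)
x_best, f_best, history = cuckoo_search(lambda w: float(np.sum((w - target) ** 2)), dim=8)
print(f"best error after {len(history)} iterations: {f_best:.4f}")
```

The heavy-tailed Lévy steps occasionally produce long jumps (exploration), while most steps are small moves around good nests (exploitation); greedy replacement keeps the best fitness from ever getting worse across iterations.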

According to the learning curves in Figure 6, all algorithms converged to the optimal solution; however, the CS algorithm showed a faster convergence rate, particularly on four datasets (Iris, Cancer, Wine, and Diabetes), where it converged after only six iterations. For Liver classification, the DE algorithm converged fastest, also after only six iterations.

The trained network was then tested on the test dataset.

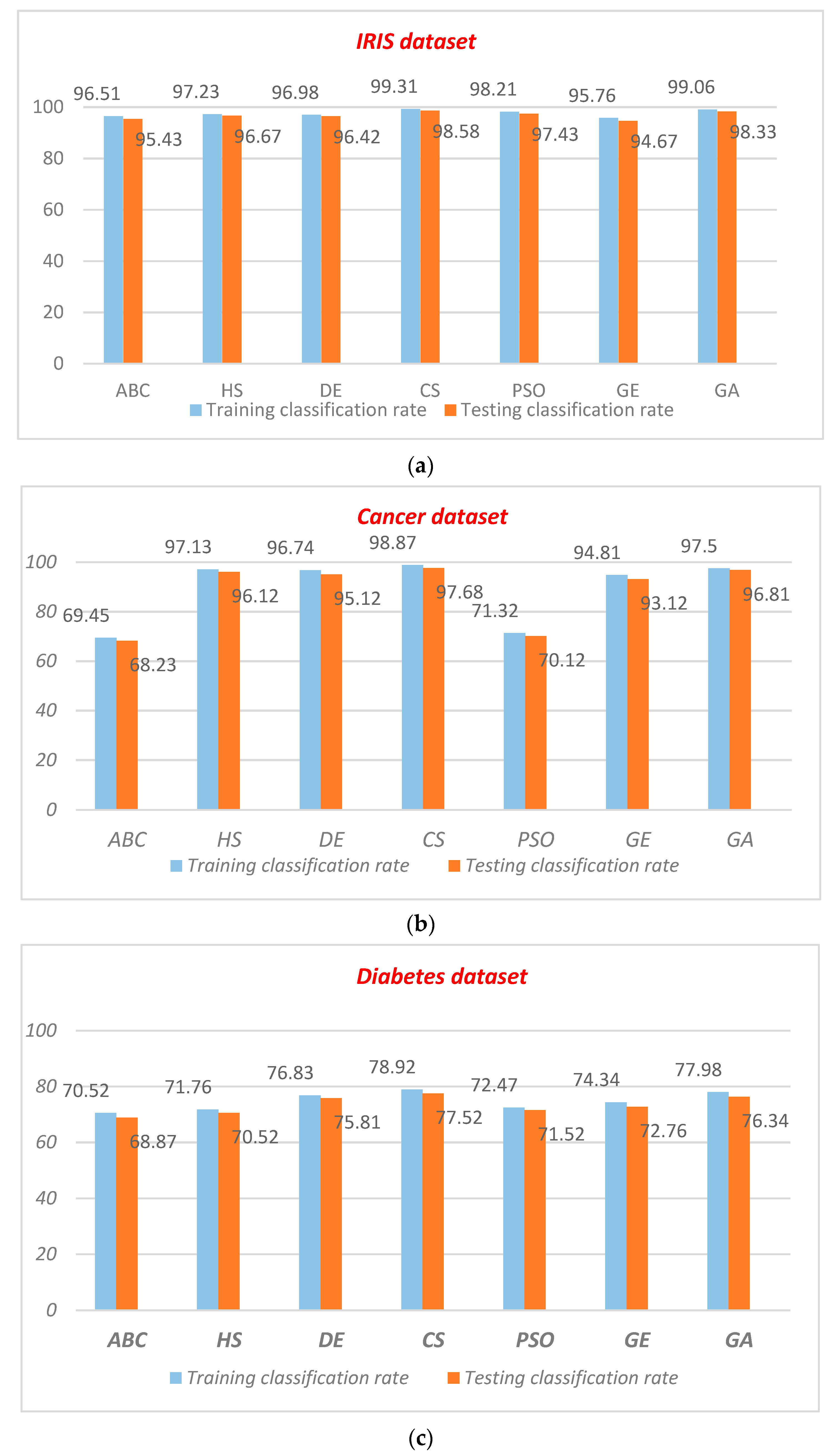

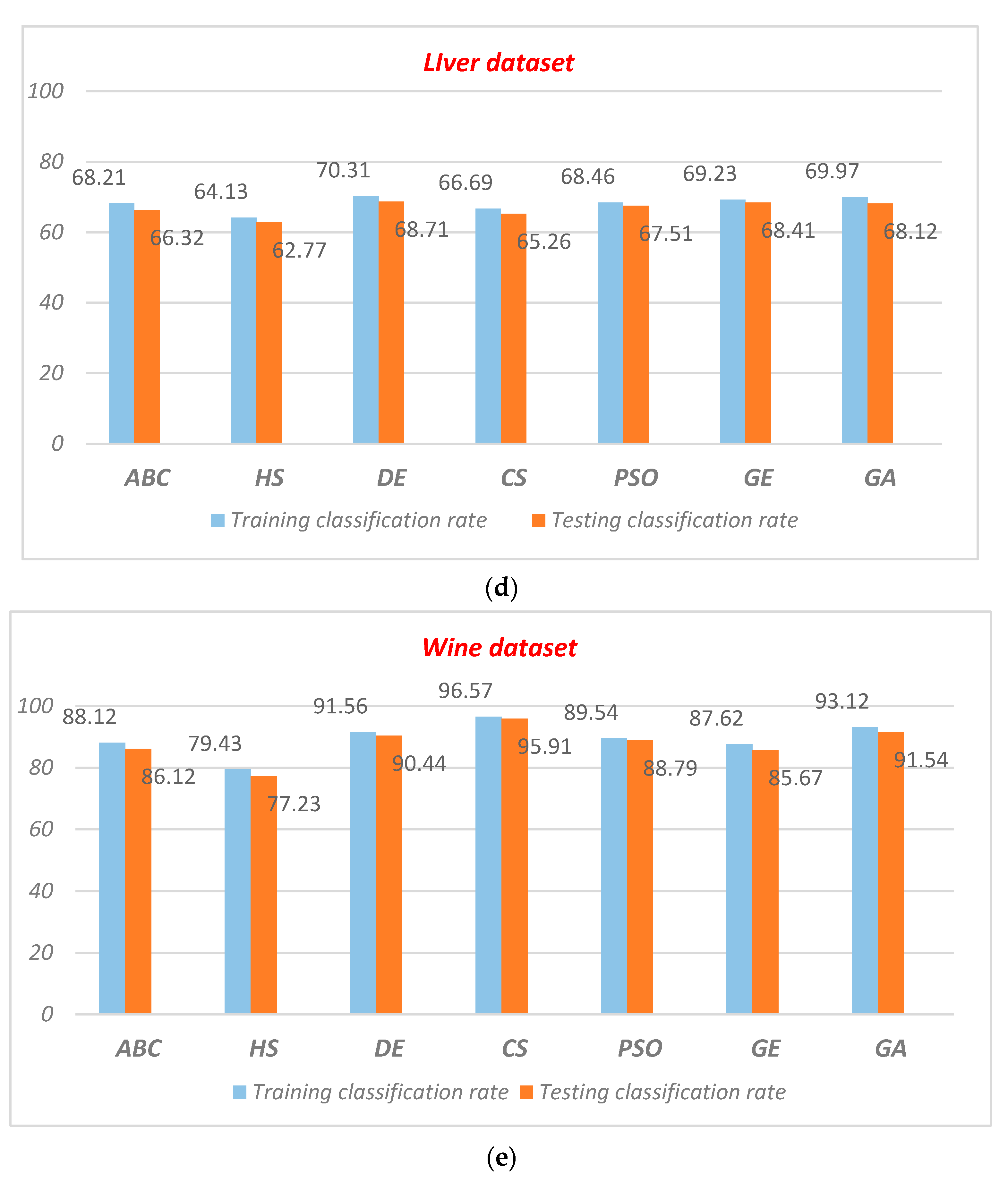

Figure 7 shows the training and testing results on the UCI datasets for all metaheuristic designs. Based on the classification accuracy achieved by the seven metaheuristic algorithms, all of the algorithms gave comparable performance. For the Iris dataset, CS achieved the highest classification accuracy, with training and testing accuracies of 99.31% and 98.58%, respectively. In contrast, GE achieved the lowest, with training and testing accuracies of 95.76% and 94.67%, respectively. For the Cancer dataset, the training and testing accuracies of CS were 98.87% and 97.68%, superior to those of the other algorithms. For the Liver dataset, the training and testing accuracies of GA were 69.97% and 68.12%, higher than those of CS and PSO but lower than those of DE. For the Wine and Diabetes datasets, CS achieved much better accuracy than the other algorithms.

Furthermore, to validate the efficiency of the proposed algorithm, five experiments were performed, and the quantitative results were compared with other supervised learning algorithms for multilayer feedforward SNNs. Table 4 compares the various models for developing supervised SNNs in terms of learning type and approach, spiking neuron model, encoding method, and accuracy on the five datasets: Pima Indians Diabetes (Diabetes), Iris Plant, Breast Cancer Wisconsin, Wine, and Liver. The gradient descent algorithm SpikeProp [65], Enhanced-Mussels Wandering Optimization (E-MWO) [66], SpikeTemp [67], Growing-Pruning (GP) [68], and multi-SNN with Long-Term Memory SRM [69] were chosen for comparison.

Table 4 shows that the proposed model generally outperformed the other algorithms in terms of classification accuracy, achieving the best results on four of the five test datasets. For the Iris dataset, the classification accuracy of our proposed model was 98.58%, higher than that of all the other models; the closest result, 97.2%, was obtained by the multi-SNN with Long-Term Memory SRM. For the Breast Cancer dataset, the proposed algorithm achieved 97.68%, around 0.68 percentage points higher than SpikeProp (97%), whereas SpikeTemp, a rank-order-based learning method for SNNs, obtained the lowest accuracy, 92.1%. For the Diabetes dataset, our best model scored 77.52%, higher than all the other models. For the Wine dataset, the classification accuracy of our proposed model was 95.91%, 0.9 percentage points lower than that of SpikeProp. For the Liver dataset, our best model achieved 68.71%, compared with 65.1% for SpikeProp and 64.7% for multi-SNN, while SpikeTemp and Growing-Pruning (GP) obtained the lowest accuracies, 55.2% and 59.79%, respectively.

We also compared the efficiency of the proposed algorithm with some existing non-spiking models for classification problems on medical data. Darabi et al. (2021) presented a thermogram-based Computer-Aided Detection (CAD) system for breast cancer detection [70]. In this CAD system, the Random Subset Feature Selection (RSFS) algorithm and a hybrid of the minimum Redundancy Maximum Relevance (mRMR) algorithm and GA with the RSFS algorithm were used for feature selection. Their experimental results showed that using RSFS for feature selection with kNN and SVM classifiers yielded 85.36% and 75% accuracy, respectively, whereas using the hybrid GA and RSFS algorithms for feature selection with kNN and SVM classifiers yielded 83.87% and 69.56% accuracy, respectively.

In another study, Zarei et al. (2021) proposed a novel segmentation method for breast cancer detection using infrared thermal images [71]. The evaluation results showed that the average Dice similarity coefficient, Jaccard index, and Hausdorff distance of the FCM segmentation algorithm were 89.44%, 80.90%, and 5.00, respectively. These values were 89.30%, 80.66%, and 5.15 for the MS segmentation algorithm, and 91.81%, 84.86%, and 4.87 for the MGMS segmentation algorithm. Salman et al. (2018) proposed a hybrid classification optimization method for improving ANN classification accuracy [72], using three optimization approaches: GA, PSO, and the Fireworks Algorithm (FWA). They achieved an accuracy of 98.42% on the breast cancer dataset. In general, our proposed approach demonstrated competitive accuracy on the UCI datasets.

6. Conclusions

In this paper, we have demonstrated how different metaheuristic algorithms, namely Cuckoo Search, Genetic Algorithm, Harmony Search, Differential Evolution, Particle Swarm Optimization, Artificial Bee Colony, and Grammatical Evolution, can be applied to train a spiking neural network. This approach effectively avoids problems such as getting stuck in local minima and overfitting. In the input layer, a temporal coding scheme transforms input data into spikes. Leaky Integrate-and-Fire neurons are employed to simulate the hidden and output layer neurons, and the neurons are trained using metaheuristic algorithms. We employed a dynamic approach to determine the target firing times for each input. The performance of our approach was evaluated on five datasets from the UCI Machine Learning Repository. CS obtained accuracies of 0.9858, 0.9768, 0.9591, and 0.7752 on the Iris, Cancer, Wine, and Diabetes datasets, respectively, and DE showed the best performance on the Liver dataset (0.6871). The classification accuracy of the proposed learning model was compared with that of some existing non-metaheuristic training algorithms. Several issues, including the design of learning algorithms, must still be tackled before SNNs can be used for a wide range of tasks. Future work will include experiments on more complex pattern-recognition problems, such as face and voice recognition, with more complex datasets, and an investigation of the impact of different encoding schemes on classification accuracy. Furthermore, a multi-objective fitness function, designed using algorithms such as Bayesian optimization, is needed to produce the most accurate and smallest SNNs.