Abstract

Artificial intelligence and distributed algorithms have been widely used in mechanical fault diagnosis with the explosive growth of diagnostic data. This paper presents a novel intelligent fault diagnosis system framework that allows intelligent terminals to offload computational tasks to mobile edge computing (MEC) servers, which can effectively address the problems of task processing delay and increasing computational complexity. As the resources at the MEC servers and intelligent terminals are limited, reasonable resource allocation can improve performance, especially in a multi-terminal offloading system. In this study, to minimize the task computation delay, we jointly optimize the local content splitting ratio, the transmission/computation power allocation, and the MEC server selection in a dynamic environment with stochastic task arrivals. The challenging dynamic joint optimization problem is formulated as a reinforcement learning (RL) problem, in which computational offloading policies are designed to minimize the long-term average delay cost. Two deep RL strategies, the deep Q-learning network (DQN) and the deep deterministic policy gradient (DDPG), are adopted to learn the computational offloading policies adaptively and efficiently. The proposed DQN strategy takes the MEC server selection as its only action, while using convex optimization to obtain the local content splitting ratio and the transmission/computation power allocation. In contrast, the actions of the DDPG strategy comprise all dynamic variables, including the local content splitting ratio, the transmission/computation power allocation, and the MEC server selection. Numerical results demonstrate that both proposed strategies perform better than traditional non-learning schemes. In all simulated cases, the DDPG strategy outperforms the DQN strategy and achieves the lowest task computation delay, owing to its ability to learn all variables online.

1. Introduction

Large-scale and integrated equipment places higher demands on condition monitoring as productivity improves [1,2,3,4]. Intelligent mechanical fault diagnosis algorithms have developed alongside artificial intelligence (AI) and Internet of Things (IoT) technologies, for example through the application of deep learning (DL) and reinforcement learning (RL) to fault diagnosis [5,6,7,8,9,10]. A collaborative deep learning-based fault diagnosis framework has been proposed to solve the data transmission problem in distributed complex systems; as a security strategy, it does not require the transmission of raw data [11]. An improved classification and regression tree algorithm has been proposed that ensures fault classification accuracy while reducing the iteration time of the computation [12]. A fault diagnosis method based on adaptive privacy-preserving federated learning has been applied to the Internet of Ships; by sharing only model parameters, it avoids exposing the raw data [13]. A deep learning-based approach to automated fault detection and isolation has been used for automotive dashboard systems and tested on data generated by a local computer-based manufacturing system [14]. An intelligent fault detection method based on the multi-scale inner product has been adopted for shipboard antenna fault detection; it uses the inner product to capture fault information in vibration signals and combines it with locally connected feature extraction [15].

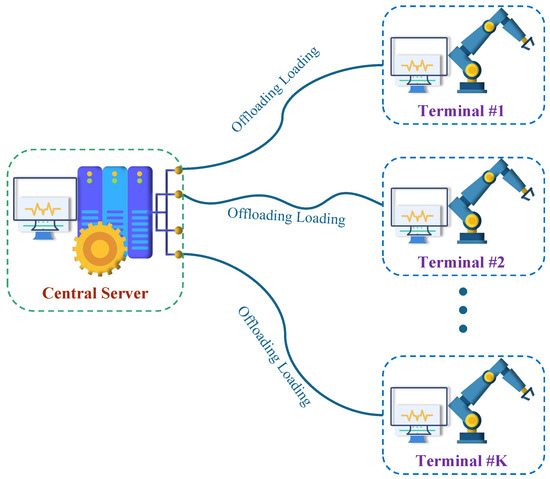

Current intelligent fault diagnosis algorithms pay more attention to the reliability of diagnosis than to its timeliness [16]. With the exponential growth of diagnostic data throughput, server computing resources and the timeliness of data processing have become urgent problems. Traditional fault diagnosis systems send the diagnostic data collected by terminals to a server with powerful computing capability for processing, as shown in Figure 1. The server is usually far from the acquisition terminals, which wastes resources during transmission and increases the data transmission delay [17]. Mobile edge computing (MEC), considered a promising architecture for data access, provides a solution to these problems [18,19,20,21]. Compared with traditional condition monitoring systems, MEC deploys several lightweight servers, called mobile edge servers, closer to the collection terminals. By allowing terminals to offload computation tasks to a nearby MEC server, MEC can significantly reduce both the computational burden of large tasks and the task processing delay [22,23].

Figure 1.

The framework of the conventional mechanical fault diagnosis system in which the terminal uploads the monitoring data to a central server through the network cable for processing.

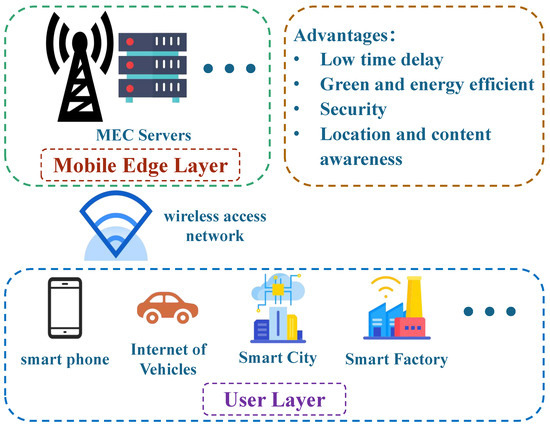

The architecture of MEC usually consists of a user layer and a mobile edge layer [24,25,26,27], as shown in Figure 2. In the MEC paradigm, the user layer consists of mobile device terminals, which host various applications and functions and also have some computing capability. For each computing task, a device terminal can either process it locally or offload it to the mobile edge layer or the cloud layer through data transfer. The mobile edge layer consists of edge servers near the device terminals, whose computing resources are more abundant than those of the terminals. Through computation offloading, information can be exchanged in real time to meet the computing needs of different application scenarios. The MEC architecture has a wide range of IoT applications, such as 5G communication, virtual reality, the Internet of Vehicles, smart cities, and smart factories. Its advantages include low latency, energy efficiency, security, and location and content awareness, which make it easier to integrate AI and blockchain methods.

Figure 2.

Architecture, applications, and advantages of MEC.

Computation offloading, one of the core techniques of MEC, has recently received considerable attention. For simple, indivisible, or highly integrated tasks, binary offloading strategies are generally adopted, in which a task is either computed entirely locally or offloaded entirely to the servers [28]. The authors of [29] formulated the binary computation offloading decision problem as a convex problem that minimizes the transmission energy consumption under a time delay constraint. The computation offloading model studied in [30] assumed that the application must complete the computing task with a given probability within a specified time interval, with the sum of local and offloading energy consumption as the optimization goal. That work concluded that offloading computing tasks to MEC servers can be more efficient in some cases. In practice, offloading decisions can be more flexible: a computation task can be divided into two parts performed in parallel, one processed locally and the other offloaded to the MEC servers [31]. In [32], a task-call graph model was proposed to describe the dependency between the terminal and the MEC servers, and the offloading decisions and latencies were investigated through joint offloading scheduling formulated as a linear programming problem.

RL, a model-free machine learning approach that trains iteratively on the data it generates, has been employed as a new solution to the MEC offloading problem [33,34,35,36]. Task processing delay is a vital optimization parameter for time-sensitive systems. The authors of [37] studied computation offloading in an IoT network and proposed a Q-learning-based RL approach with which an IoT device selects a proper device and determines the proportion of the computation task to offload. The authors of [38] investigated joint communication, caching, and computing for vehicular mobility networks and developed a deep Q-learning-based RL method with a multi-timescale framework to solve the joint online optimization problem. In [39], the authors studied offloading in an energy harvesting (EH) MEC network; an after-state RL algorithm was proposed to reduce the large time complexity, and polynomial value function approximation was introduced to accelerate learning. In [40], the authors also studied an MEC network with an EH device and proposed a hybrid actor-critic learning approach to optimize the offloading ratio, local computation capacity, and server selection. These studies show that efficient RL-based computational offloading decisions can help reduce the computational complexity and computational time cost of the system.

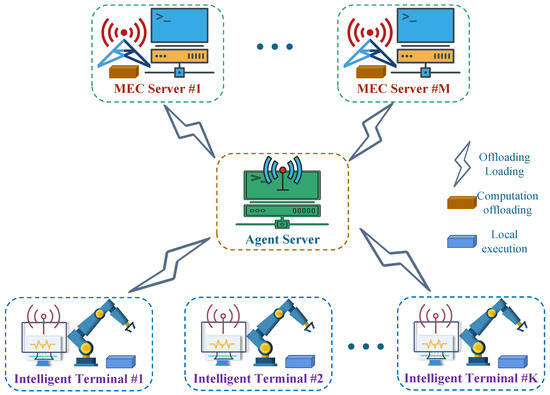

In the framework of the intelligent fault diagnosis system proposed in this paper, the user layer consists of intelligent terminals with some computing power, and the mobile edge layer consists of MEC servers with strong computing power, as shown in Figure 3. Following the agent server’s policy, each intelligent terminal offloads a proportion of its fault diagnosis data to one of the MEC servers. The optimization problem thus becomes an offloading decision problem in a dynamic MEC environment, in which the current channel state information (CSI) cannot be observed when the offloading decision is made. The offloading policy should therefore rely on the predicted CSI and task arrival rates, subject to the energy constraints of the intelligent terminals and MEC servers, with the aim of minimizing the long-term average delay cost. We first establish a low-complexity deep Q-learning network (DQN)-based offloading framework in which the action includes only the discrete MEC server selection, while the local content splitting ratio and the transmission/computation power allocation are optimized by convex optimization. Then, we develop a deep deterministic policy gradient (DDPG)-based framework whose actions include both the discrete MEC server selection and the continuous local content splitting ratio and transmission/computation power allocation. The numerical results demonstrate that both proposed strategies perform better than the traditional non-learning scheme, and that the DDPG strategy is superior to the DQN strategy because it learns all variables online. Compared with the traditional fault diagnosis system, the intelligent fault diagnosis system migrates computing tasks from the central server to the edge computing system, which reduces the computing load of the central server, relieves network bandwidth pressure, and improves real-time data interaction.
In addition, the new intelligent fault diagnosis system overcomes the limited functionality of traditional instrumentation systems, which makes the instrumentation more intelligent and easier to integrate with other intelligent methods.

Figure 3.

The framework of the intelligent mechanical fault diagnosis system in this paper, which contains three parts: intelligent terminal, agent server, and MEC servers.

The contributions of this paper can be summarized as follows.

- (1)

- A new framework for the intelligent fault diagnosis system based on MEC is proposed, in which the monitoring data are processed by both the MEC servers and the intelligent terminals in a ratio determined by the offloading policy of the agent server. Compared with the traditional fault diagnosis system, the intelligent fault diagnosis system alleviates the problems of limited computing resources and network delay and makes the equipment more intelligent.

- (2)

- Two offloading scenarios of the intelligent fault diagnosis system are modeled: one-to-one and one-to-multiple. One-to-one means that each MEC server can be connected to only one intelligent terminal at a time, and one-to-multiple means that multiple intelligent terminals can be connected to the same MEC server simultaneously. The optimization goal is to minimize the maximum time delay for the system to complete the computation tasks in each time slot. Each intelligent terminal and MEC server has its own energy constraint, and the agent determines the power allocation during the offloading process.

- (3)

- An offloading decision optimization algorithm combining convex optimization and deep reinforcement learning is designed. In the DQN-based design, the MEC server selection is learned by DQN, while the local content splitting ratio and the transmission/computation power allocation are obtained by convex optimization. In the DDPG-based design, all of these offloading variables are learned directly by DDPG.

The remainder of this paper is structured as follows. The intelligent fault diagnosis system models are provided in Section 2. The DQN-based offloading design and the DDPG-based offloading design are described in Section 3 and Section 4, respectively. The numerical results and relevant analysis are presented in Section 5. The conclusion is given in Section 6.

2. The Intelligent Fault Diagnosis System Model

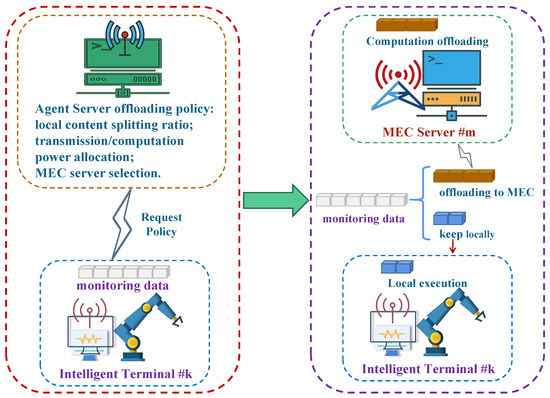

A new framework for the intelligent fault diagnosis system is proposed in this paper, which consists of MEC servers and intelligent terminals, as shown in Figure 4. Both the MEC servers and the intelligent terminals can process monitoring data, and each intelligent terminal can offload data to any MEC server through the agent. The intelligent terminals and MEC servers communicate within an orthogonal frequency division multiple access (OFDMA) framework. The offloading policy includes the local content splitting ratio, the transmission/computation power allocation, and the MEC server selection. According to the offloading policy, the monitoring data are split into two parts: one part is offloaded to the MEC server for processing, and the remaining part is processed locally by the intelligent terminal. The intelligent fault diagnosis system based on the MEC framework can be described by three models: the network model, the communication model, and the computing model, which are introduced separately below.

Figure 4.

The working principle of the intelligent fault diagnosis system in this paper. The intelligent terminal collects the fault diagnosis data and then requests a policy from the agent server.

2.1. Network Model of Intelligent Fault Diagnosis System

The network of the intelligent fault diagnosis system supporting offloading contains M MEC servers and K intelligent terminals. Let and be the index sets of the MEC servers and the intelligent terminals, respectively. Since the MEC servers are assumed to have more computing power than the intelligent terminals, part of the diagnostic data is offloaded to them. The system time is divided into consecutive time frames of equal time period, and the time is indexed by . The channel state information between the m-th MEC server and the k-th intelligent terminal is denoted as , and the task size at intelligent terminal k is denoted as . The channel state information of the MEC network and the task arrivals at each intelligent terminal change in each time interval . To save energy at the intelligent terminals and MEC servers and to reduce the task processing latency, the central agent node needs to determine the ratio between the locally executed content and the offloaded content, as well as how each terminal's power is divided between local task processing and data transmission. If one MEC server is selected to handle tasks from multiple intelligent terminals, the split of that server's power among these terminals must also be determined. The communication model and the computational model are described in detail below.

2.2. Communication Model of MEC Servers and Intelligent Terminals

In the considered network of intelligent fault diagnosis systems, communication operates in an orthogonal frequency division multiple access framework, and a dedicated subchannel with bandwidth B is allocated to each intelligent terminal for partial task offloading. Supposing that intelligent terminal k communicates with MEC server m, the received signal at the receiver of MEC server m can be represented as

where denotes the symbols transmitted from intelligent terminal k, is the transmission power used at intelligent terminal k, and denotes the received additive Gaussian noise with power . Here the channel gains follow a finite-state Markov chain (FSMC), and thus the communication rate between MEC server m and intelligent terminal k is given by
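The rate expression can be illustrated numerically. As a minimal sketch, assuming the usual Shannon form for a dedicated OFDMA subchannel, r = B log2(1 + p|h|²/σ²), and a small hypothetical three-state FSMC for the channel gain |h|², the communication model can be simulated as follows. The gain levels and transition probabilities are illustrative placeholders, not values from this paper.

```python
import math
import random

def offload_rate(bandwidth_hz, tx_power_w, channel_gain, noise_power_w):
    """Achievable rate (bit/s) on a dedicated subchannel.

    Assumes the Shannon form B * log2(1 + p * |h|^2 / sigma^2);
    channel_gain is |h|^2 on a linear scale.
    """
    snr = tx_power_w * channel_gain / noise_power_w
    return bandwidth_hz * math.log2(1.0 + snr)

# Hypothetical three-state FSMC for |h|^2 (illustrative values only).
GAIN_LEVELS = [0.2, 1.0, 2.0]
TRANSITIONS = [
    [0.7, 0.3, 0.0],    # from the "bad" state
    [0.15, 0.7, 0.15],  # from the "medium" state
    [0.0, 0.3, 0.7],    # from the "good" state
]

def next_channel_state(state, rng):
    """Sample the FSMC state of the next time slot."""
    return rng.choices(range(len(GAIN_LEVELS)), weights=TRANSITIONS[state])[0]

def simulate_rates(num_slots, bandwidth_hz, tx_power_w, noise_power_w, seed=0):
    """Per-slot offloading rates along one sampled channel trajectory."""
    rng = random.Random(seed)
    state = 1  # start in the "medium" state
    rates = []
    for _ in range(num_slots):
        gain = GAIN_LEVELS[state]
        rates.append(offload_rate(bandwidth_hz, tx_power_w, gain, noise_power_w))
        state = next_channel_state(state, rng)
    return rates
```

Because the state only depends on the previous state, the CSI the agent sees one slot late is correlated with, but not equal to, the current CSI, which is the situation the DQN and DDPG designs below have to cope with.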

2.3. Computing Model of Intelligent Fault Diagnosis System

The task received at intelligent terminal k at time t needs to be processed within time interval t. Denote the task splitting ratio as , which indicates that, in time interval t, bits are executed at the intelligent terminal device and the remaining bits are offloaded to and processed by the MEC server.

(1) Local computing: In local computation, the CPU of the intelligent terminal device is the primary engine. It adopts the dynamic voltage and frequency scaling (DVFS) technique, so the performance of the CPU is controlled by the CPU-cycle frequency . Let denote the local processing power at intelligent terminal k; then the intelligent terminal’s computing speed (cycles per second) at the t-th slot is given by

Let denote the number of CPU cycles required for intelligent terminal k to accomplish one task bit. Then the local computation rate for intelligent terminal k at time slot t is given by

(2) Mobile Edge Computation Offloading: The task model for mobile edge computation offloading is the data-partition model, in which the task-input bits are bit-wise independent and can be arbitrarily divided into different groups. At the beginning of each time slot, the intelligent terminal chooses which MEC server to connect to according to the channel state. Assuming that the processing power allocated to intelligent terminal k by MEC server m is , the computation rate at MEC server m for intelligent terminal k is

where is the number of CPU cycles required for the MEC server to accomplish one task bit, and denotes the CPU-cycle frequency at the MEC server. Note that the MEC server can process tasks from multiple intelligent terminals simultaneously; we assume that multiple applications can be executed in parallel with negligible additional latency. The feedback time from the MEC server to the intelligent terminal is ignored because the computation output is small.
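Both computation-rate models can be sketched with one helper. This is a minimal sketch that assumes the widely used DVFS power model p = κf³, where κ is an effective switched-capacitance coefficient, so that f = (p/κ)^(1/3) and the computation rate is f/C for C cycles per bit; the exact forms of Equations (3) to (5) may differ. The cycles-per-bit defaults are taken from the simulation settings in Section 5 (300 at terminals, 120 at MEC servers), while the κ values are hypothetical.

```python
def cpu_frequency(power_w, kappa):
    """CPU-cycle frequency (cycles/s) under the assumed DVFS model p = kappa * f**3."""
    return (power_w / kappa) ** (1.0 / 3.0)

def computation_rate(power_w, kappa, cycles_per_bit):
    """Bits processed per second when `power_w` is spent on computation."""
    return cpu_frequency(power_w, kappa) / cycles_per_bit

def local_rate(local_power_w, kappa_terminal=1e-27, cycles_per_bit=300):
    """Local rate at an intelligent terminal (kappa_terminal is hypothetical)."""
    return computation_rate(local_power_w, kappa_terminal, cycles_per_bit)

def mec_rate(allocated_power_w, kappa_server=1e-28, cycles_per_bit=120):
    """Rate at MEC server m for the power it allocates to terminal k
    (kappa_server is hypothetical)."""
    return computation_rate(allocated_power_w, kappa_server, cycles_per_bit)
```

Under this model the rate grows only with the cube root of power, so doubling the processing power raises the computation rate by about 26%, which is why the power split between local processing and transmission matters.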

3. DQN-Based Offloading Design

In this section, we develop a DQN-based offloading framework to minimize the long-term processing delay cost. As an extension of traditional Q-learning, DQN is well suited to high-dimensional state spaces and typically converges quickly. In the considered DQN offloading framework, the MEC system constitutes the DQN environment. A central agent node is set up to observe the state, perform actions, and receive rewards. This central agent can be the cloud server or an MEC server.

The DQN-based offloading framework is introduced below, including the corresponding state space, action space, and reward. In the overall DQN paradigm, the instantaneous CSI is assumed to be estimated at the MEC servers using training sequences and then delivered to the agent. Owing to channel estimation and feedback delay, the CSI observed at the agent is a delayed version. Each MEC server acquires only the local CSI of the intelligent terminals connected to it.

3.1. System State and Action Spaces

System State Space: In the considered DQN paradigm, the state space observed by the agent includes the CSI of the overall network and the received task sizes at time t. As the agent incurs extra communication overhead to collect the CSI from all MEC servers, at time t it observes a delayed version of the CSI at time , i.e., . Denote

The state space observed at time t can be represented as

System Action Space: Given the observed state space , the agent takes actions to interact with the environment. As DQN can handle only discrete actions, the action in the proposed DQN paradigm consists only of the MEC server selection. The MEC server selection action is denoted as , which can be represented as

where means that intelligent terminal k does not select MEC server m in the t-th time slot, while indicates that intelligent terminal k selects MEC server m in the t-th time slot.
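Since the MEC server selections of all K terminals must form a single discrete DQN action, the joint selections have to be enumerated. One possible encoding, which is our assumption rather than the paper's stated indexing, treats the joint selection as a base-M integer, giving M^K actions in which each terminal selects exactly one server. The sketch below also flattens a delayed-CSI matrix and the task sizes into one state vector; `build_state` and `decode_action` are hypothetical names.

```python
from typing import List, Sequence

def build_state(delayed_csi: Sequence[Sequence[float]],
                task_sizes: Sequence[float]) -> List[float]:
    """Concatenate the delayed CSI (M x K) and the K task sizes into one vector."""
    flat_csi = [g for row in delayed_csi for g in row]
    return flat_csi + list(task_sizes)

def decode_action(index: int, num_terminals: int, num_servers: int) -> List[List[int]]:
    """Map a joint action index in [0, M**K) to a K x M binary selection matrix.

    Terminal k reads the k-th base-M digit of `index` (least significant first),
    so row k is one-hot: each terminal selects exactly one MEC server.
    """
    if not 0 <= index < num_servers ** num_terminals:
        raise ValueError("action index out of range")
    selection = []
    for _ in range(num_terminals):
        row = [0] * num_servers
        row[index % num_servers] = 1
        selection.append(row)
        index //= num_servers
    return selection
```

For example, with K = 2 terminals and M = 3 servers there are 9 joint actions, and index 5 decodes to terminal 0 selecting server 2 and terminal 1 selecting server 1. The exponential growth of M^K is one reason a continuous-action method such as DDPG becomes attractive as K grows.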

3.2. Reward Function

In the DQN paradigm, the reward is defined as the negative of the maximum time delay required to complete all the tasks received at all intelligent terminals, so that maximizing the reward minimizes this delay. After the actions are taken, each MEC server can calculate the time delays of the intelligent terminals that choose it for offloading, since every MEC server observes its local CSI. Without loss of generality, we assume that intelligent terminal k with offloads its tasks to MEC server m, where set contains the indexes of the intelligent terminals selecting MEC server m to offload tasks. To minimize the required time delays, the MEC server formulates an optimization problem to find the optimal , , , and . It is worth noting that, as the MEC server knows the instantaneous CSI at time t, this solution can be obtained based on , which differs from the MEC server selection taken based on . For the intelligent terminals that do not offload tasks to the MEC servers, the required time delays for local task processing are known to these terminals. The agent collects all the time delays from the intelligent terminals and the MEC servers to obtain the final reward.

We now detail how to compute the time delay for intelligent terminal k, assuming that it selects MEC server m for offloading. The total time for completing the task processing at intelligent terminal k is denoted as , which equals , where , , and denote the times required for local task processing at the intelligent terminal, offloading transmission from intelligent terminal k to MEC server m, and task processing at MEC server m, respectively.

With the computation rate defined in Equation (4), time can be represented as

As the size of the offloaded task is , with the communication rate defined in Equation (2), time can be calculated as

With the computation rate allocated by MEC server m to intelligent terminal k in Equation (5), time can be computed as
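The three time components combine into the per-terminal delay and the slot reward. As a minimal sketch, assume local processing runs in parallel with offloading, so that T_k = max(T_l, T_o + T_e) with T_l = αL/r_l, T_o = (1 − α)L/r_o, and T_e = (1 − α)L/r_e, where α is the local splitting ratio and L is the task size in bits. The reward is taken as the negative of the largest terminal delay, which is our reading of the reward definition above. All function names are hypothetical.

```python
from typing import Sequence

def terminal_delay(task_bits, alpha, local_rate, tx_rate, mec_rate):
    """Delay (s) of one terminal that keeps a fraction `alpha` of its task locally.

    Assumes local processing runs in parallel with offloading; the offloaded
    part must first be transmitted and then processed at the MEC server.
    """
    t_local = alpha * task_bits / local_rate
    t_tx = (1.0 - alpha) * task_bits / tx_rate
    t_mec = (1.0 - alpha) * task_bits / mec_rate
    return max(t_local, t_tx + t_mec)

def slot_reward(delays: Sequence[float]) -> float:
    """Reward of one time slot: the negative of the largest terminal delay."""
    return -max(delays)
```

For instance, with L = 10⁶ bits, α = 0.5, and all three rates equal to 10⁶ bit/s, the local branch takes 0.5 s and the offloading branch takes 0.5 + 0.5 = 1 s, so the terminal delay is 1 s. A larger α would balance the two branches better.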

To maximize the reward, we need to minimize the time delay of each intelligent terminal under the total energy constraints at the intelligent terminals and MEC servers. To illustrate how to find the optimal , , , and for different types of MEC server selection, we next present two typical offloading scenarios: an MEC server serving one intelligent terminal and an MEC server serving two intelligent terminals. The proposed way of solving for , , , and can be extended to the case where an MEC server serves an arbitrary number of intelligent terminals.

- (1)

- Scenario 1: one MEC server serves one intelligent terminal

The energy consumption at intelligent terminal k, denoted by , includes two parts, i.e., one part for local partial task processing and another for partial task transmission. Therefore, can be written as

The energy consumption at the MEC server m for processing the partial task offloaded from intelligent terminal k is denoted by , and can be represented as
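The two energy expressions can be sketched directly. This minimal sketch assumes that energy equals power multiplied by active time, so that E_k = p_l T_l + p_t T_o at the terminal and E_m = p_m T_e at the server. The exact forms of Equations (12) and (13) may differ. It also checks the two energy budgets that appear as constraints in the problem below. All argument names are hypothetical.

```python
def terminal_energy(local_power_w, t_local_s, tx_power_w, t_tx_s):
    """Energy (J) at terminal k: local processing plus transmission (assumed form)."""
    return local_power_w * t_local_s + tx_power_w * t_tx_s

def server_energy(alloc_power_w, t_mec_s):
    """Energy (J) spent by MEC server m on the task offloaded by terminal k."""
    return alloc_power_w * t_mec_s

def is_feasible(e_terminal_j, e_server_j, e_terminal_max_j, e_server_max_j):
    """True if both the terminal and the server energy budgets are respected."""
    return e_terminal_j <= e_terminal_max_j and e_server_j <= e_server_max_j
```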

The optimization problem formulated to find optimal is given by

where and denote the maximum available energy at intelligent terminal k and MEC server m, respectively. Problem (14) can be rewritten as

To solve problem (15), we first note that constraint (15c) must be active at the optimal solution; otherwise, the objective value (15a) could be reduced further. We thus have

which produces

It is noted that problem (18) is a non-convex optimization problem. We propose an alternating algorithm to solve , , and in different subproblems separately to find an efficient solution. In the first subproblem, we solve for given and . To minimize the objective function, the optimal solution of should activate constraint (18b), that is, , which implies

In the second subproblem, we solve with given and . The corresponding optimization problem is given by

Problem (20) is a convex optimization problem and can be solved efficiently, e.g., by an interior-point method.

In the third subproblem, is solved with given and . The corresponding optimization problem is given by

For the min–max problem (21), by denoting , and , it is known that the optimal , denoted by , occurs in the following three cases, that is, , , or . Note that the solution of the third case can be obtained by solving a cubic equation. The final solution is given as

By alternating three subproblems with the solutions given in (19), (20), and (22) until convergence, we obtain the final solution.
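The min-max structure can be seen in its simplest form by fixing all powers, and hence the rates r_l, r_o, and r_e, and optimizing only the splitting ratio α. This is a simplified illustration under the parallel-delay model assumed above, not the paper's full alternating algorithm. The local delay αL/r_l increases in α, while the offloading delay (1 − α)L(1/r_o + 1/r_e) decreases in α, so the optimum equalizes them. This gives α* = (1/r_o + 1/r_e) / (1/r_l + 1/r_o + 1/r_e).

```python
def optimal_split(local_rate, tx_rate, mec_rate):
    """Splitting ratio that equalizes the local and offloading delays.

    Minimizes max(alpha / r_l, (1 - alpha) * (1/r_o + 1/r_e)) over alpha in [0, 1]
    for fixed rates; the task size L scales both terms and cancels.
    """
    offload_cost = 1.0 / tx_rate + 1.0 / mec_rate  # seconds per offloaded bit
    local_cost = 1.0 / local_rate                  # seconds per local bit
    return offload_cost / (local_cost + offload_cost)

def min_max_delay(task_bits, local_rate, tx_rate, mec_rate):
    """Resulting delay: at the optimum both branches finish at the same time."""
    alpha = optimal_split(local_rate, tx_rate, mec_rate)
    return alpha * task_bits / local_rate
```

With equal rates of 10⁶ bit/s, α* = 2/3, because the offloaded bits must be both transmitted and processed. For a 3 × 10⁶-bit task, this gives a delay of 2 s on both branches. In the full problem the rates themselves depend on the power variables, which is why the alternating scheme is needed.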

- (2)

- Scenario 2: one MEC server serves two intelligent terminals

Assume that MEC server m serves two intelligent terminals, e.g., intelligent terminal k and intelligent terminal ; the optimization problem can then be formulated as follows

The previously proposed iterative algorithm can still be applied here to solve , , and with . Here the only difference lies in solving and . The corresponding optimization problem can be formulated as

It is worth noting that the optimal solution must activate the constraints and make the two terms within the objective function equal to each other. Therefore, the optimal and can be obtained by solving the following equations
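The equal-delay condition can also be solved numerically. As a sketch, assume MEC server m splits its power P as βP and (1 − β)P between the two terminals. Assume further that each terminal's offloading delay is its transmission time plus D_i/r_e(p), with the DVFS-based rate r_e(p) = (p/κ)^(1/3)/C assumed earlier. The first terminal's delay decreases in β and the second's increases, so their difference is monotone and bisection finds the equalizing β. The parameter values and function names are hypothetical.

```python
def server_rate(power_w, kappa=1e-28, cycles_per_bit=120):
    """Assumed DVFS-based MEC computation rate (bit/s): (p / kappa)**(1/3) / C."""
    return (power_w / kappa) ** (1.0 / 3.0) / cycles_per_bit

def offload_delay(tx_time_s, offloaded_bits, power_w):
    """Transmission time plus MEC processing time for one terminal."""
    return tx_time_s + offloaded_bits / server_rate(power_w)

def equalizing_split(total_power_w, tx_times, offloaded_bits, tol=1e-9):
    """Fraction beta of the MEC power given to the first terminal such that
    both terminals' offloading delays are equal (bisection on beta in (0, 1))."""
    def gap(beta):
        d1 = offload_delay(tx_times[0], offloaded_bits[0], beta * total_power_w)
        d2 = offload_delay(tx_times[1], offloaded_bits[1], (1.0 - beta) * total_power_w)
        return d1 - d2  # decreasing in beta

    lo, hi = 1e-12, 1.0 - 1e-12
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if gap(mid) > 0:
            lo = mid  # first terminal is still slower: give it more power
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

As a check, with zero transmission times and the first terminal offloading twice as many bits, equal delays require (β/(1 − β))^(1/3) = 2. This gives β = 8/9, independent of P, κ, and C.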

Hence, under an action , the system reward can be obtained as

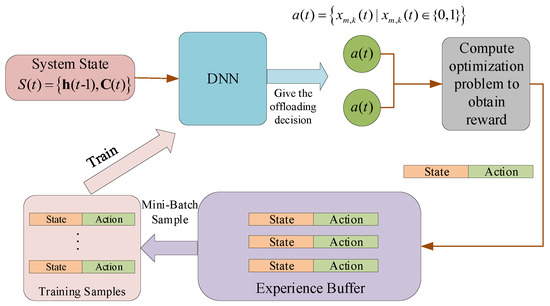

The structure of the DQN-based offloading algorithm is illustrated in Figure 5, and the pseudocode is presented in Algorithm 1.

Algorithm 1. The DQN-based Offloading Algorithm.

Figure 5.

The structure of the DQN-based offloading algorithm.
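Two generic DQN ingredients underlie a training loop of this kind: ε-greedy exploration over the discrete MEC-selection actions, and the regression target y = r + γ max over a′ of Q_target(s′, a′), computed with a separate target network. They are sketched below in plain Python. The Q-values are passed in as lists, so no particular neural-network library is assumed. The reward r would be the negative maximum delay of the slot, and the function names are hypothetical.

```python
import random
from typing import Sequence

def epsilon_greedy(q_values: Sequence[float], epsilon: float, rng: random.Random) -> int:
    """Pick a random action with probability epsilon, otherwise the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])

def td_target(reward: float, next_q_target: Sequence[float],
              gamma: float, done: bool) -> float:
    """DQN regression target using the target network's Q-values at s'."""
    if done:
        return reward
    return reward + gamma * max(next_q_target)

def squared_td_error(q_sa: float, target: float) -> float:
    """Per-sample loss that the online network minimizes."""
    return (q_sa - target) ** 2
```

In a full implementation, these targets would be computed for mini-batches drawn from an experience replay buffer, and the target network would be refreshed periodically from the online network.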

4. DDPG-Based Offloading Design

Note that the DQN-based offloading design can handle only discrete actions, and its reward acquisition mainly depends on solving the formulated optimization problems at the MEC servers, which may add computing burden at the MEC servers. In this section, we rely on DDPG to design the offloading policy, since DDPG can handle continuous-valued actions and the discrete MEC server selection can also be represented by continuous values. Unlike DQN, DDPG uses an actor-critic architecture, in which the actor outputs deterministic continuous actions and the critic evaluates them. We directly regard , , , , and as the output action instead of decomposing the problem into two parts.

System State Space: In the DDPG offloading paradigm, the system state space is the same as in the DQN-based offloading paradigm and is given by

where and are defined in (6). As in the DQN offloading paradigm, the agent can only observe the delayed version of CSI due to channel estimation operations and feedback delay.

System Action Space: In the DDPG offloading paradigm, the value of is used to indicate the MEC server selection, where means that no partial task of intelligent terminal k is offloaded to MEC server m; in other words, MEC server m is not chosen by intelligent terminal k. If is nonzero, intelligent terminal k offloads part of its task to MEC server m. Since an intelligent terminal can connect to only one MEC server in each time slot, only one is nonzero in any time slot, and the remaining ones are 0. The action space of the DDPG offloading paradigm can be expressed as

It is noted that here the continuous actions can be obtained based on state with delayed CSI .
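The raw actor output must be mapped to a valid action. One way to do this, which is our assumption and not stated in the paper, is to clip the outputs to [0, 1], as a sigmoid output layer would produce, and then keep only the largest entry for each terminal. This enforces at most one nonzero selection value per terminal, and exactly one whenever the largest output is positive. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple

def clip_unit(values: Sequence[float]) -> List[float]:
    """Clip continuous actions (e.g., splitting ratios) to the range [0, 1]."""
    return [min(max(v, 0.0), 1.0) for v in values]

def project_selection(raw: Sequence[Sequence[float]]) -> Tuple[List[List[float]], List[int]]:
    """Keep only the largest clipped entry in each terminal's row.

    Returns the projected K x M matrix (at most one nonzero per row) and
    the index of the MEC server selected by each terminal.
    """
    projected, chosen = [], []
    for row in raw:
        clipped = clip_unit(row)
        m_star = max(range(len(clipped)), key=lambda m: clipped[m])
        projected.append([v if m == m_star else 0.0 for m, v in enumerate(clipped)])
        chosen.append(m_star)
    return projected, chosen
```

The projection is applied only when executing the action in the environment. The critic can still be trained on the raw continuous output, which keeps the policy gradient well defined.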

System Reward Function: In the DDPG offloading algorithm, , , , and are obtained from a continuous action space. Based on these decisions, the agent informs each intelligent terminal k of the selected MEC server and delivers and to it to perform the offloading. Moreover, the agent sends to each server to allocate computing resources. The reward is then obtained as in (26) by collecting observed at the MEC servers or intelligent terminals.

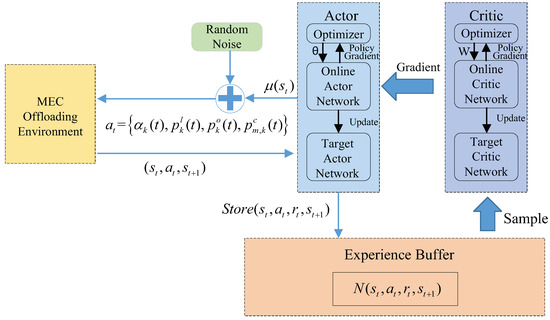

Compared with the DQN-based offloading paradigm, the DDPG-based paradigm does not require the MEC servers to solve optimization problems, which relieves the computation burden at the MEC servers. However, as DDPG is generally more complex than DQN, the computational complexity at the agent inevitably increases. The structure of the DDPG-based offloading algorithm is illustrated in Figure 6, and the pseudocode is given in Algorithm 2.

Algorithm 2. The DDPG-based Offloading Algorithm.

Figure 6.

The structure of the DDPG-based offloading algorithm.
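Two update steps are characteristic of standard DDPG. The critic is trained toward y = r + γ Q′(s′, μ′(s′)), where Q′ and μ′ are the target critic and target actor, and the target networks are updated softly as θ′ ← τθ + (1 − τ)θ′. The sketch below shows both steps on flat parameter lists with callables standing in for the networks. The τ and γ values are placeholders, not the settings used in this paper, and the function names are hypothetical.

```python
from typing import Callable, List, Sequence

def soft_update(online: Sequence[float], target: Sequence[float], tau: float) -> List[float]:
    """Polyak averaging of target-network parameters: tau*theta + (1 - tau)*theta'."""
    return [tau * w + (1.0 - tau) * w_t for w, w_t in zip(online, target)]

def critic_target(reward: float,
                  next_state: Sequence[float],
                  target_actor: Callable[[Sequence[float]], Sequence[float]],
                  target_critic: Callable[[Sequence[float], Sequence[float]], float],
                  gamma: float = 0.99) -> float:
    """y = r + gamma * Q'(s', mu'(s')) using the target actor and target critic."""
    next_action = target_actor(next_state)
    return reward + gamma * target_critic(next_state, next_action)
```

A small τ (for example, on the order of 10⁻³) makes the target networks change slowly, which stabilizes the critic's regression target. This is part of the extra computation that DDPG places on the agent compared with DQN.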

5. Numerical Results

In this section, we present numerical simulation results to illustrate the performance of the two proposed offloading paradigms. The time slot duration of the system is 1 ms, and the bandwidth of the intelligent fault diagnosis system is 1 MHz. The required CPU cycles per bit are 300 cycles/bit at the intelligent terminals and 120 cycles/bit at the MEC servers. In the training process, the learning rate of the DQN-based offloading algorithm is . In the DDPG-based offloading algorithm, the learning rate of the actor network is , and the learning rate of the critic network is .
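The cycles-per-bit settings imply that, at the same CPU frequency, an MEC server processes 300/120 = 2.5 times as many bits per slot as an intelligent terminal. The short calculation below makes this concrete for a 1 ms slot. The 1 GHz and 1.2 GHz CPU frequencies are hypothetical, since the frequencies are not given here.

```python
SLOT_S = 1e-3       # time slot duration (1 ms)
TERMINAL_CPB = 300  # CPU cycles per bit at intelligent terminals
SERVER_CPB = 120    # CPU cycles per bit at MEC servers

def bits_per_slot(cpu_freq_hz, cycles_per_bit, slot_s=SLOT_S):
    """Number of task bits a CPU running at `cpu_freq_hz` can process in one slot."""
    return cpu_freq_hz * slot_s / cycles_per_bit

# Hypothetical CPU frequency, for illustration only.
terminal_bits = bits_per_slot(1.0e9, TERMINAL_CPB)  # about 3.3e3 bits
server_bits = bits_per_slot(1.0e9, SERVER_CPB)      # about 8.3e3 bits
speedup = server_bits / terminal_bits               # 2.5 at equal frequency
```

In practice, MEC servers usually also run at higher frequencies than terminals, so the actual per-slot advantage of offloading would be larger than this factor of 2.5.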

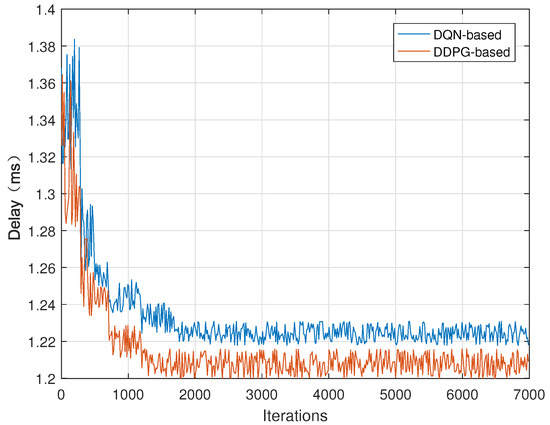

In Figure 7, we plot the training process of the DQN-based and DDPG-based algorithms, where the blue curve represents the delay dynamics of the DQN-based algorithm and the red curve represents those of the DDPG-based algorithm. At the beginning, the system delay fluctuates strongly, indicating that the agent is exploring the environment randomly. As learning proceeds, the delay decreases gradually and the fluctuation range narrows. The DDPG-based algorithm converges to a stable value of 1.2 after about 1200 iterations, and the DQN-based algorithm converges to 1.22 after about 1500 iterations. At this point, the average reward of each episode no longer changes, and the training process is complete. The DDPG-based algorithm converges faster and achieves a lower latency than the DQN-based algorithm, indicating that it performs better on our offloading problem.

Figure 7.

The delay dynamics of each iteration of the DQN-based and DDPG-based algorithms during the training process, where the blue curve represents the delay dynamics of the DQN-based algorithm and the red curve represents the delay dynamics of the DDPG-based algorithm.

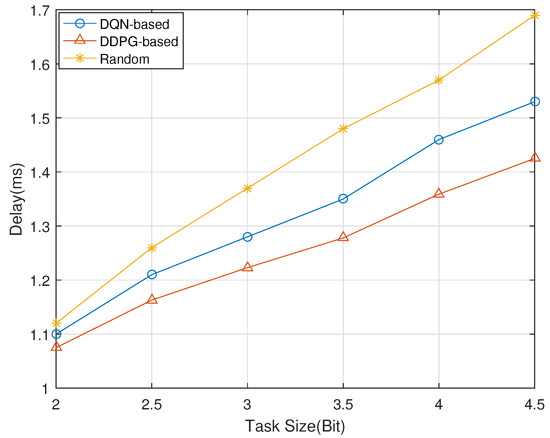

Figure 8 compares the delays of the DDPG-based computational offloading paradigm, the DQN-based computational offloading paradigm, and the “Random” policy for different task sizes, where “Random” means that the computing resources are allocated randomly. For task sizes below 2.5 bit, the delay differences among the three policies are slight, with the DDPG-based paradigm having the smallest delay and the “Random” policy the largest. As the task size increases, the delay of the “Random” policy grows the most, while the delays of the DDPG-based and DQN-based paradigms grow more slowly. The delays of both the DDPG-based and DQN-based paradigms remain consistently lower than that of the “Random” policy.

Figure 8.

The delay comparison under different task sizes, where the red curve represents the delay of the DDPG-based computational offloading paradigm, the blue curve represents the delay of the DQN-based computational offloading paradigm, and the yellow curve represents the delay of the “Random” policy.

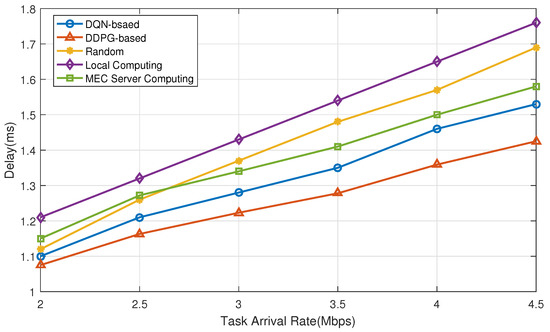

In Figure 9, we illustrate the offloading delay as a function of the task arrival rate at the intelligent terminals. Three benchmarks, namely “Random”, “Local computing”, and “MEC server computing”, are compared with the two proposed offloading paradigms. Here, “Random” means that the computing resources are allocated randomly. “Local computing” and “MEC server computing” mean that the tasks are processed only at the intelligent terminals and only at the MEC servers, respectively. The curves in Figure 9 show that the delay increases as the task arrival rate grows. “Local computing” has the largest delay because the intelligent terminals have limited local computing capacity. “MEC server computing” outperforms the “Random” policy when the task arrival rate is greater than Mbps, which indicates that offloading tasks to the MEC servers yields a lower delay as the task arrival rate increases. When the task arrival rate exceeds 4 Mbps, the delay of “MEC server computing” approaches that of the DQN-based paradigm. This indicates that most tasks are offloaded to the MEC servers when the task load is large. Both the proposed DQN-based and DDPG-based offloading paradigms outperform all three benchmarks, demonstrating the effectiveness of the proposed methods. Moreover, the DDPG-based paradigm achieves a lower delay than the DQN-based paradigm, which further supports the advantage of DDPG in problems with high-dimensional continuous state and action spaces.
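The abstract describes the DQN strategy as taking MEC server selection as its discrete action while convex optimization supplies the continuous variables. The sketch below mimics that hybrid structure under strong simplifications. It replaces the learned Q-network with brute-force enumeration over a small set of servers and fixes each server's uplink rate, so power allocation is omitted. The split ratio is then obtained in closed form from the same simplified parallel-processing delay model as above. It also computes the “Local computing” and “MEC server computing” benchmarks. The `MecServer` class, the slot length, and every parameter value are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MecServer:
    name: str
    uplink_rate: float  # bits/s from the terminal to this server (assumed fixed and known)
    cpu_freq: float     # cycles/s the server allocates to this terminal


def per_bit_offload_time(server, cycles_per_bit):
    """Seconds per offloaded bit: upload time plus remote computation time."""
    return 1.0 / server.uplink_rate + cycles_per_bit / server.cpu_freq


def best_decision(bits, cycles_per_bit, f_local, servers):
    """Enumerate the discrete server choice and solve the split ratio in closed form for each.

    Returns (server name, local split ratio, delay in s) for the lowest-delay server.
    """
    a_local = cycles_per_bit / f_local
    best = None
    for server in servers:
        a_off = per_bit_offload_time(server, cycles_per_bit)
        split = a_off / (a_local + a_off)  # equalizes local and offloading delay
        delay = bits * a_local * a_off / (a_local + a_off)
        if best is None or delay < best[2]:
            best = (server.name, split, delay)
    return best


def local_only_delay(bits, cycles_per_bit, f_local):
    return bits * cycles_per_bit / f_local


def mec_only_delay(bits, cycles_per_bit, servers):
    return bits * min(per_bit_offload_time(s, cycles_per_bit) for s in servers)


servers = [MecServer("mec-1", 5e6, 20e9), MecServer("mec-2", 20e6, 10e9)]
slot = 1.0  # hypothetical slot length in seconds
for mbps in (1, 2, 4, 8):
    bits = mbps * 1e6 * slot  # workload arriving in one slot
    name, split, joint = best_decision(bits, 1000, 1e9, servers)
    print(f"{mbps} Mbit/s -> {name}, local share {split:.2f}: joint {joint:.3f} s, "
          f"MEC-only {mec_only_delay(bits, 1000, servers):.3f} s, "
          f"local-only {local_only_delay(bits, 1000, 1e9):.3f} s")
```

Enumeration is only practical with a known, static channel and few servers. A learned policy becomes useful when channel states and task arrivals are stochastic, as in the paper's setting.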

Figure 9.

The delay comparison under different task arrival rates, where the red curve represents the delay of the DDPG-based computational offloading paradigm, the blue curve represents the delay of the DQN-based computational offloading paradigm, the yellow curve represents the delay of the “Random” policy, the purple curve represents the delay of the “Local computing” policy, and the green curve represents the delay of the “MEC server computing” policy.

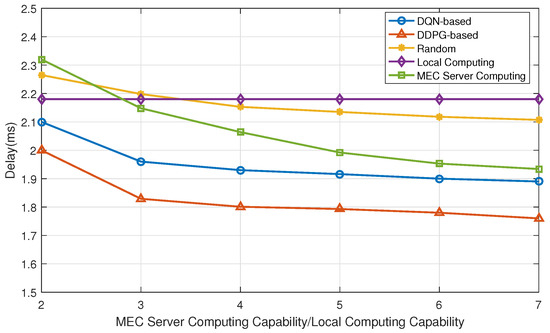

Figure 10 shows the impact of the computing capabilities of the intelligent terminals and MEC servers on the processing delay. We fix the local computing capability and continuously increase the computing capacity of the MEC server. The delay of “Local computing” is therefore unaffected by the ratio of MEC server to intelligent terminal computing capacity. Across different computing capabilities, the proposed DQN and DDPG offloading paradigms outperform the other three benchmarks, and the DDPG-based paradigm performs slightly better than the DQN-based paradigm. When the ratio of MEC server computing capacity to intelligent terminal computing capacity lies between two and three, increasing the ratio makes the MEC server process tasks faster than the intelligent terminal. The terminal therefore offloads more tasks to the MEC server, and the delay of “MEC server computing” is smaller than that of the “Random” policy. When the ratio exceeds three, the MEC server is significantly faster than the intelligent terminals, so the terminals prioritize offloading. The processing delay keeps decreasing as the ratio grows, but more slowly. In this range, the delay of “MEC server computing” is lower than that of the “Random” policy and close to that of the DQN-based paradigm, indicating that most or all tasks are offloaded to the MEC servers. The remaining decrease in delay is therefore mainly due to the increased computing capacity of the MEC servers.
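The diminishing returns in Figure 10 can be reasoned through with the same simplified parallel partial-offloading model used in the sketches above. This is an assumption, not the paper's system model. Let $L$ be the task size in bits and $C$ the CPU cycles per bit. Let $f_l$ and $f_m$ be the terminal and MEC server CPU frequencies, $R$ the uplink rate, $\lambda$ the locally computed fraction, and $\rho = f_m/f_l$ the capacity ratio. Minimizing the larger of the two branch delays gives:

```latex
\begin{aligned}
T(\lambda) &= \max\Big\{\lambda L a_l,\ (1-\lambda) L a_o\Big\},\qquad
a_l = \frac{C}{f_l},\quad a_o = \frac{1}{R} + \frac{C}{f_m} = \frac{1}{R} + \frac{a_l}{\rho},\\
\lambda^\star &= \frac{a_o}{a_l + a_o},\qquad
T^\star = \frac{L\,a_l a_o}{a_l + a_o},\\
\frac{\partial T^\star}{\partial \rho} &= \frac{\partial T^\star}{\partial a_o}\,\frac{\partial a_o}{\partial \rho}
 = \frac{L\,a_l^2}{(a_l + a_o)^2}\left(-\frac{a_l}{\rho^2}\right) < 0,\\
\lim_{\rho\to\infty} T^\star &= \frac{L\,a_l}{1 + R\,a_l}.
\end{aligned}
```

In this model, a larger $\rho$ lowers both $\lambda^\star$ (more data is offloaded) and $T^\star$. However, the gain shrinks roughly as $1/\rho^2$, and the delay approaches a floor set by the uplink rate $R$ rather than by MEC computing capacity.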

Figure 10.

The delay comparison under different computing capabilities, where the red curve represents the delay of the DDPG-based computational offloading paradigm, the blue curve represents the delay of the DQN-based computational offloading paradigm, the yellow curve represents the delay of the “Random” policy, the purple curve represents the delay of the “Local computing” policy, and the green curve represents the delay of the “MEC server computing” policy.

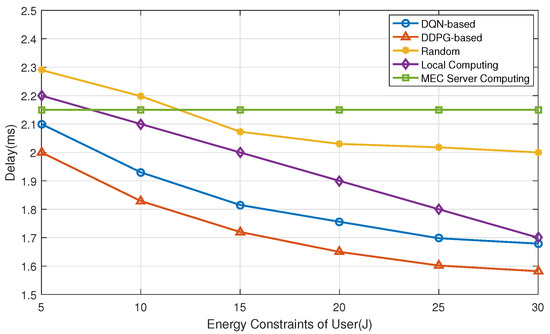

Figure 11 illustrates the computation delay under different energy constraints at the intelligent terminals. The curves show that “MEC server computing” is not affected by changes in the energy of the intelligent terminals. In contrast, “Local computing” depends strongly on the terminal energy constraint, and its delay decreases significantly as the available energy increases. A larger energy budget allows faster local processing and higher transmission power at the intelligent terminals, which reduces the computation delay to a certain extent. For the DQN-based and DDPG-based offloading paradigms, the delay first decreases significantly as the terminal energy increases, and then decreases only gradually once the energy reaches a certain level. Thus, the terminal energy constraint strongly affects the computation delay within a specific range and has a weaker impact beyond it. Under all energy constraints considered, the DQN-based and DDPG-based paradigms outperform the other offloading methods, which indicates the effectiveness of the proposed computational offloading algorithms. Moreover, the DDPG-based paradigm performs slightly better than the DQN-based paradigm.
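One common way to explain why delay first drops sharply with energy and then flattens is the CMOS dynamic-voltage-frequency-scaling model. This model is assumed here and is not stated in this section. Executing a given number of cycles at frequency f costs `kappa * cycles * f**2` joules, where `kappa` is the effective switched capacitance. An energy budget therefore caps the CPU frequency, so local delay scales as the inverse square root of the energy until the hardware's maximum frequency is reached. Beyond that point, extra energy brings no benefit. The sketch covers local computing only and ignores transmission energy. All parameter values are illustrative.

```python
import math


def local_only_delay_under_energy(bits, cycles_per_bit, energy_budget, kappa, f_max):
    """Local-computing delay (s) for a per-task energy budget (J).

    Assumed CMOS/DVFS model: executing `cycles` CPU cycles at frequency f costs
    kappa * cycles * f**2 joules, so the budget caps f at sqrt(E / (kappa * cycles)).
    The hardware limit `f_max` (cycles/s) also bounds f from above.
    """
    cycles = bits * cycles_per_bit
    f_energy = math.sqrt(energy_budget / (kappa * cycles))  # fastest frequency the budget allows
    f = min(f_energy, f_max)
    return cycles / f


# Illustrative values: 1 Mbit task, 1000 cycles/bit, kappa = 1e-27, 2 GHz maximum CPU frequency.
for budget in (0.25, 1.0, 4.0, 16.0):
    delay = local_only_delay_under_energy(1e6, 1000, budget, 1e-27, 2e9)
    print(f"E = {budget:5.2f} J -> local delay {delay:.3f} s")
```

With these numbers, quadrupling the budget from 1 J to 4 J halves the delay, while going from 4 J to 16 J changes nothing because the 2 GHz cap is already reached.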

Figure 11.

The delay comparison under different energy constraints at the intelligent terminals, where the red curve represents the delay of the DDPG-based computational offloading paradigm, the blue curve represents the delay of the DQN-based computational offloading paradigm, the yellow curve represents the delay of the “Random” policy, the purple curve represents the delay of the “Local computing” policy, and the green curve represents the delay of the “MEC server computing” policy.

6. Conclusions

In this paper, we propose a novel framework for intelligent mechanical fault diagnosis systems: a deep reinforcement learning-based resource allocation scheme for offloading the diagnostic data of multiple intelligent terminals. By modeling the data offloading scenario of the intelligent fault diagnosis system, the optimization variables and the optimization objective can be determined. Two deep reinforcement learning algorithms, a DQN-based offloading strategy and a DDPG-based offloading strategy, are investigated to solve the formulated offloading optimization problem and minimize latency. Comparisons with other offloading schemes show that the proposed deep reinforcement learning approaches reduce task processing latency under different system parameters. The proposed intelligent fault diagnosis framework also makes it easier to integrate other intelligent technologies, such as deep learning techniques for data calibration, federated learning techniques, and blockchain technologies for protecting user data privacy.

Author Contributions

Conceptualization, R.W. (Rui Wang), L.Y. and Q.G.; methodology, R.W. (Rui Wang); software, M.S. and F.Y.; validation, R.W. (Ran Wang); formal analysis, L.Y., Q.G. and R.W. (Rui Wang); resources, R.W. (Rui Wang); data curation, L.Y.; writing—original draft preparation, L.Y. and Q.G.; writing—review and editing, L.Y., Q.G. and R.W. (Rui Wang); project administration, L.Y. and R.W. (Rui Wang); funding acquisition, R.W. (Rui Wang), L.Y. and R.W. (Ran Wang). All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Science Foundation of China under Grant 62271352, the National Natural Science Foundation of China under Grants 12074254 and 51505277, the Natural Science Foundation of Shanghai under Grant 21ZR1434100, and the Shanghai Science and Technology Innovation Action Plan under Project No. 21220713100.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).