1. Introduction

With the increasing popularity of electric vehicles (EVs), EV charging has also attracted much attention. At present, the common charging method in public places requires a person to take the charger off the charging pile and insert it into the charging port of the EV. However, a charger with a heavy power cable is inconvenient for users. In addition, unlike traditional gas stations, where each employee has undergone long-term training and the possibility of mis-operation during refueling is very small, EV charging poses a potential safety hazard for users who operate the charger themselves without strict training. Therefore, to reduce the burden on users and eliminate potential safety hazards, the use of robots to automatically charge EVs has been proposed as an alternative solution [1,2,3].

Recently, research on automatic charging for EVs has mainly focused on robot control, trajectory planning, and visual positioning of charging ports [4,5,6], while less attention has been paid to safety issues, even though safety is crucial during the automatic charging process. In general, in robot application scenarios, accidental contact or collision with the robot is the main safety concern. To deal with such problems, some research has explored using a vision system to avoid accidental collisions [7,8,9]. However, such methods fail in the blind spots of the vision system, which are usually unavoidable in practice. Therefore, to improve the safety of robots, research on contact perception is necessary.

The contact or collision problem of robots remains an open problem across application scenarios. These scenarios can be roughly divided into two categories based on whether humans and robots share the workspace. In the scenario where humans and robots share the workspace, human safety should be the focus. In this scenario, the threat of the robot to humans often comes from the links of the robot arm rather than the end effector. In this situation, model-based methods can be used to analyze and detect the contact [10], determine whether the contact is intentional or accidental [11], and identify the location of the collision [12]. In addition, these works can provide a basis for planning a reasonable response of the robot after contact [13]. However, because thresholds for the signals must be set manually in practice, the noise in different sensors and the complexity of the contact limit the flexibility and robustness of these methods. In the same scenario, data-driven methods are an alternative. For instance, in [14], an RNN-based model is used to realize collision classification. Similarly, in [15], a combination of the Generalized Momentum Observer and an NN is used to realize collision classification while also judging whether the collision occurred on the upper or lower part of the robot. However, because human motion has a high degree of randomness, and different motion states of the robot significantly affect the contact state, it is very difficult to obtain comprehensive contact data. This difficulty can be referred to as mode difficulty in data acquisition. To alleviate the mode difficulty in this scenario, it is often necessary to assume that the human is quasi-static when in contact with the robot and that the contact posture is fixed, and then to analyze the contact between the robot and the human under these assumptions. However, these assumptions tend to weaken the practical effect of the method.

In the other scenario, where the workspace is not shared, the robot also needs contact perception of the object in order to perform manipulation tasks reasonably. In contrast to the previous scenario, contact localization of the end effector on the object is more important here, so that objects can be perceived and grasped in a reasonable pose. Recent work has attempted to use tactile sensors for high-accuracy contact localization [16,17]. These tactile sensors are often mounted on specific end effectors, such as dexterous hands and U-shaped graspers. By arranging a dense array on an extremely small surface area, the contact positioning accuracy of such a method can even reach the sub-millimeter level [16]. Nevertheless, in scenarios where the required contact frequency is high, the contact load is large, or there is an impact load, the sensor is likely to suffer from the memory effect upon making contact, resulting in decreased robustness [18].

In the scenario of the automatic charging of EVs, with the development of automatic driving and automated valet parking (AVP), a large part of the automatic charging of electric vehicles in the future will be carried out in unmanned scenarios. In such an unmanned scenario, there is often no need to pay much attention to whether the robotic arm will threaten the safety of the surrounding people during its operation, and thus, ensuring the safety of the vehicle–robot interaction is more important. In general, in this scenario, for different types of robotic devices, the difficulty in end-effector contact analysis is different. The current charging robots for EVs can be divided into two types: the non-integrated charger type and the integrated charger type. In the non-integrated charger type, the charger and the robot are independent of each other. Before each charging, the robot needs to grab the charger from the charging pile. In the integrated charger type, the charger and the robot are connected, and the grabbing process of the charger can be omitted. Compared to the integrated charger type, using a robot to automatically grab the charger can result in a pose error of the charger. This error not only complicates the charging process but also makes contact analysis more difficult. Thus, the robots currently researched for automatic charging of electric vehicles are mainly charger-integrated [

1,

2,

3]. Therefore, this work focuses on the contact problem of the charger-integrated robot. In our previous work, we explored the feasibility of using a supervised learning method to realize collision classification and collision localization for a charger-integrated, cable-driven manipulator when the charger and the charging port are in contact [

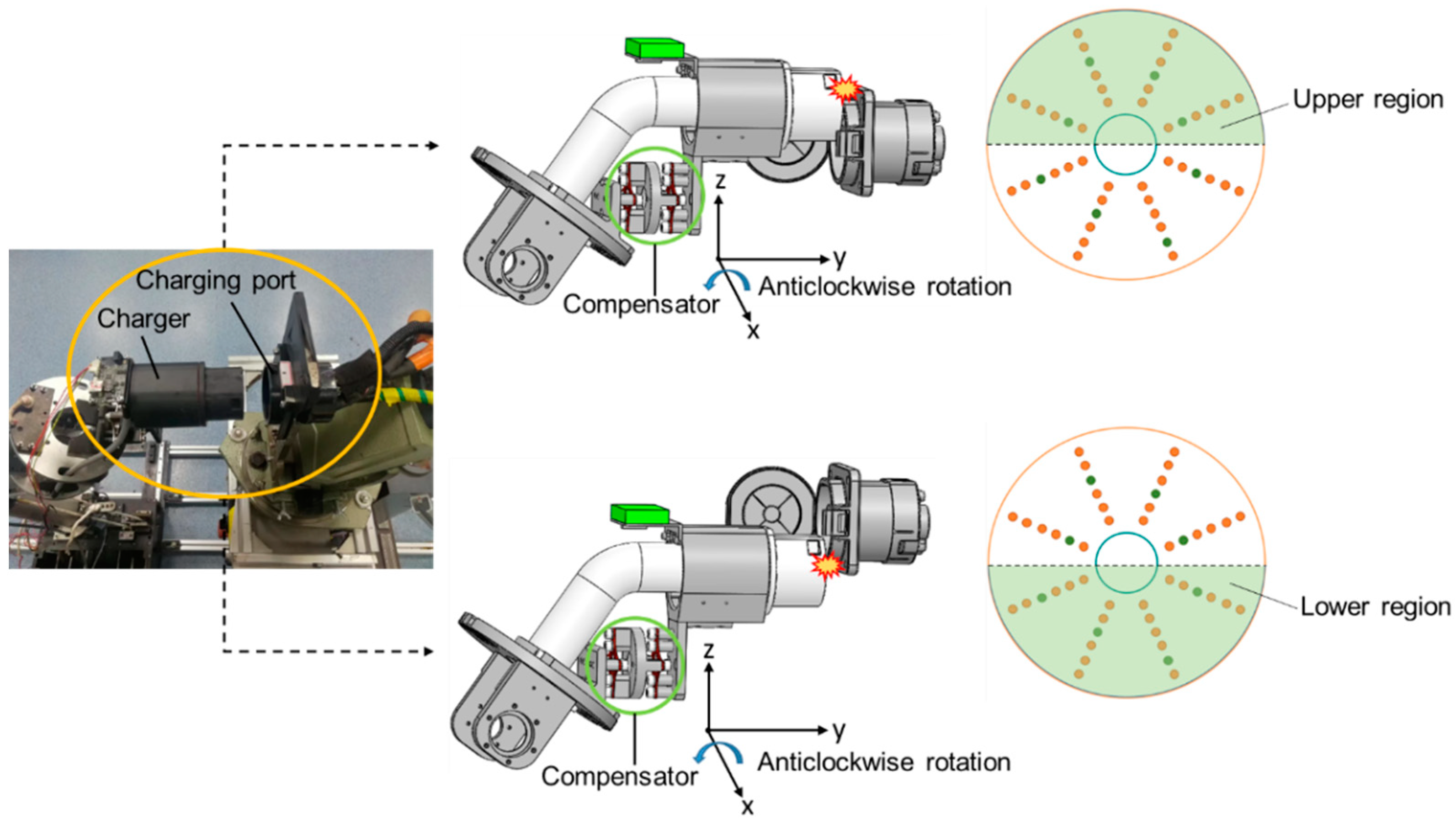

19]. To alleviate the mode difficulty during data collection, we designed an mm scale area on the charging port and set pre-specified collision points in this region. Both the training set and the test set are these pre-specified collision points. The difference between the two sets is that the joint configurations, corresponding to the collision points at the same position in the specified region, are different. Using the above method, in order to localize a random collision point in such a region, the pre-specified collision points need to contain the random collision point. This will greatly increase the time needed for data collection in practical applications. In this work, to alleviate this situation, we explore an approach in which the entire region is divided into several sub-regions, and the pre-specified collision points in the sub-regions are used to predict the positions of collision points that have never been seen before. Here, we refer to these collision points that have never been seen before as zero-shot collision points. In the process of data collection, we found that when the central axis of the elastic compensator and the central axis of the end link of the manipulator do not coincide, the vibration caused by the collision between the charger and the upper part of the charging port will have a certain degree of similarity to the vibration caused by the collision between the charger and the lower part of the charging port. The main target of this article is to reduce the impact of that similarity on the localization results, while realizing zero-shot collision point localization, by proposing a two-stage collision localization scheme.

The rest of the paper is organized as follows. Section 2 reviews related works on collision localization. Section 3 describes the details of the datasets. Section 4 presents the architecture of our proposed method. Section 5 presents and discusses the experimental results, and Section 6 concludes the paper.

2. Related Work

As demonstrated in [20], the collision localization problem is essentially a classification problem. Unlike the collision classification task, which concerns whether the collision is accidental or intentional, collision localization can be considered a multi-class classification problem constrained by the boundary conditions of data acquisition. Collision localization often provides information for the subsequent collision response or assists collision classification to improve its reliability. Since a collision process has temporal characteristics, this kind of classification problem can often be converted into a classification problem on time-series signals.

Recently, related work has mainly used two types of signal-processing methods. One type consists of machine learning methods relying on manual feature extraction. In [20], the joint torque signal was collected in a specific motion mode, a variety of machine learning classifiers were used to filter out artificial features, and, finally, online collision classification was realized using an NN and Bayesian decision theory. In [21], an artificial neural network was used to analyze the time-domain and frequency-domain characteristics of the vibration signal caused by the collision and then determine the collision location from preset positions on different arms. Although methods using artificial features are cheap in terms of classifier training and engineering deployment, manual feature extraction often relies on expert knowledge, and such features usually cannot be updated according to the classification results. When encountering complex problems, the effectiveness of such methods decreases. In our previous work, we confirmed that this kind of feature engineering is not ideal for small-scale collision problems. The other type is automatic feature extraction, which can use raw data directly without prior feature engineering. The representative types are RNN-based and CNN-based methods. For example, in [14], an RNN-based method was used to distinguish between intentional and accidental collisions between humans and robots, and it achieved good results. However, very few studies have used RNN-based methods to explore robot collision localization. Theoretically, RNN-based methods have natural advantages for time-series signal processing; LSTM-based and GRU-based methods, in particular, are widely used in EEG analysis [22], music emotion classification [23,24], and body pose estimation [25,26,27]. In our previous work, it was confirmed that a two-layer CNN performs slightly better than LSTM when locating the pre-specified collision points. Therefore, a CNN is used as the basis of this work.

Despite recent progress in collision localization using both artificial feature extraction and automatic feature extraction methods, there are still limitations in the existing studies. Most existing methods focus on large-scale collision localization problems, such as determining which link of the robot arm a collision occurred on. However, it is unclear whether these methods can be applied to small-scale collision problems, and the effectiveness of the signal used and the structure of the device must be considered. In some cases, it may be necessary to add external structures or sensors to the robot, and the suitability of the scenario used must also be considered. In our previous work, we proposed a data-driven method using external compensator vibration signals for studying small-scale collision localization problems in the context of electric vehicle automatic charging scenarios and achieved some success. However, our previous work mainly focused on studying the effect of the robot arm’s joint configuration and region partition schemes on collision localization and did not pay much attention to two additional crucial aspects of collision localization: (1) reducing the data collection cost required for data-driven collision localization methods and (2) suppressing the effects of signal similarity on collision localization caused by environmental factors. These are critical issues that need to be addressed to improve the accuracy and applicability of collision localization methods. Therefore, our current research focuses on addressing these two aspects of collision localization.

As demonstrated in Section 1, the asymmetric installation of the charger at the end of the manipulator causes a similarity in the vibration signals of the compensator during collisions between the charger and the charging port. In general, this similarity may interfere with data-driven collision localization. However, there are very few studies on how to reduce the impact of such similarity on collision localization. To fill this gap, inspired by the divide-and-conquer method proposed in [28], before finely localizing the collision point, we first perform a rough localization to distinguish whether the collision point is in the upper region or the lower region of the charging port. In addition, this allows the overall approach to focus better on the fine localization process. The main contributions of this paper are as follows:

(1) For the first time, we propose using the vibration information from the elastic compensator corresponding to the pre-specified collision points to predict the location of zero-shot collision points in a small-scale region, which helps to reduce the cost of data collection to some extent.

(2) Considering the similarity in the collision signals caused by the asymmetric installation of the end effector relative to the end link, a two-stage collision localization method is proposed. The rough localization stage reduces the effect of the vibration similarity and improves the ability of the classifier to produce promising results in the fine localization stage.

4. Proposed Two-Stage Collision Localization Method

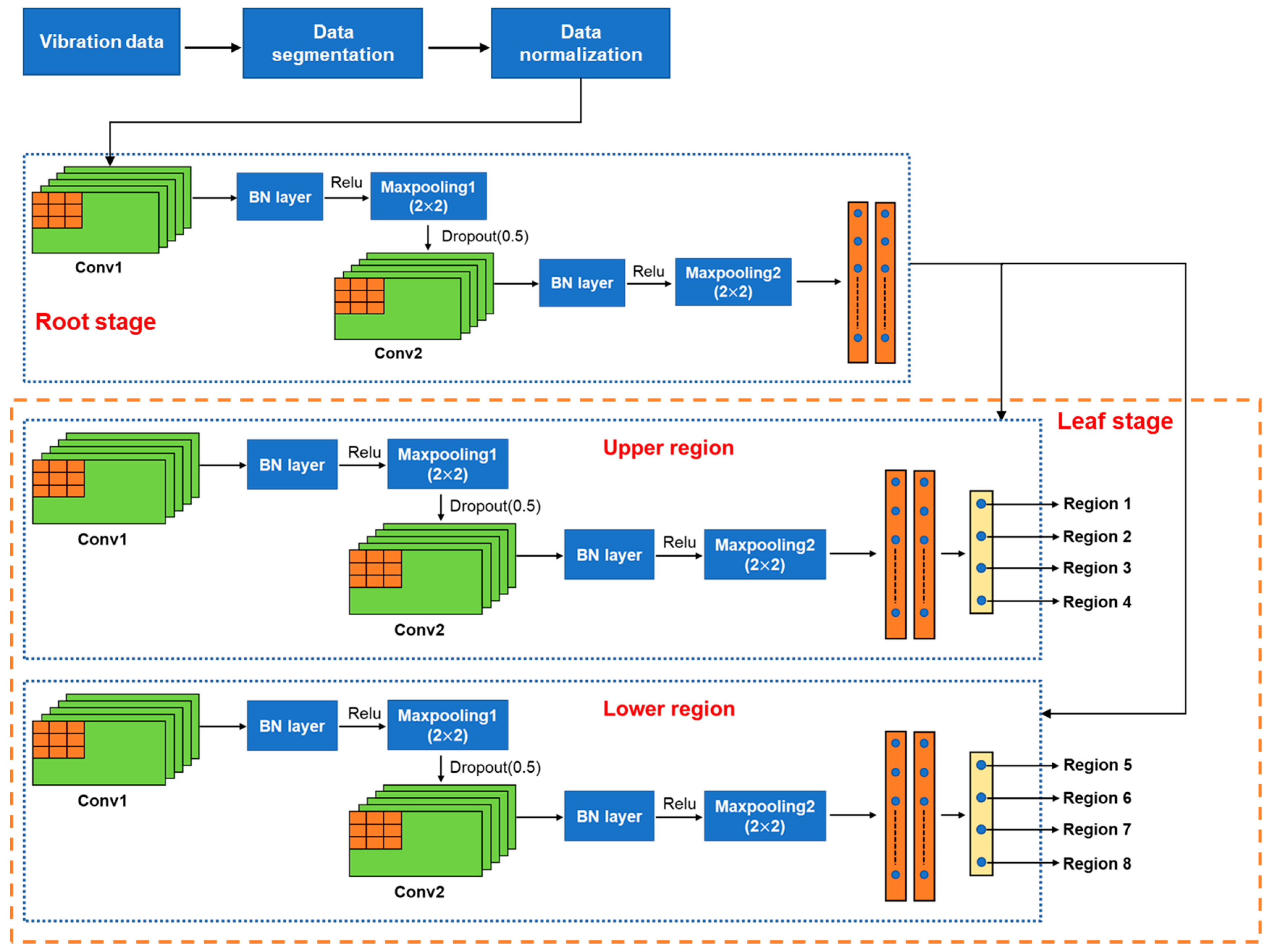

In this section, our proposed method, a two-stage convolutional neural network, is introduced. In our scheme, the two-stage model has a binary tree structure, and the result of the root stage decides which leaf stage is activated. Therefore, our method is called RL-CNN. This structure is designed to isolate regions where similar collision behavior occurs in order to improve localization accuracy. In the root stage, the task is to identify whether the collision happens in the upper region or the lower region. In the leaf stage, the model predicts in which finely divided region the zero-shot collision point is located. As the whole area is partitioned into eight finely divided regions, the task in each leaf stage is essentially a four-class classification problem with zero-shot samples.
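The root-then-leaf routing described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the classifiers are passed in as plain callables, the encoding 0 = upper and 1 = lower is assumed, and the global region numbering (`coarse * 4 + local`) and the stand-in `demo_root` and `demo_leaf` functions are hypothetical and not taken from the paper.

```python
from typing import Callable, Sequence, Tuple

Signal = Sequence[float]
Classifier = Callable[[Signal], int]  # maps a signal to a class index

REGIONS_PER_LEAF = 4  # eight fine regions split across two leaf stages


def rl_localize(signal: Signal, root: Classifier,
                upper_leaf: Classifier, lower_leaf: Classifier) -> Tuple[int, int]:
    """Return (coarse, region): coarse in {0, 1}, region in 0..7."""
    coarse = root(signal)  # assumed encoding: 0 = upper, 1 = lower
    if coarse not in (0, 1):
        raise ValueError("root stage must return 0 (upper) or 1 (lower)")
    # Only the leaf selected by the root stage is evaluated.
    leaf = upper_leaf if coarse == 0 else lower_leaf
    local = leaf(signal)
    if not 0 <= local < REGIONS_PER_LEAF:
        raise ValueError("leaf stage must return an index in 0..3")
    # Hypothetical global numbering: upper regions 0..3, lower regions 4..7.
    return coarse, coarse * REGIONS_PER_LEAF + local


# Stand-in classifiers for demonstration only (not trained models).
def demo_root(signal: Signal) -> int:
    return 0 if sum(signal) >= 0 else 1


def demo_leaf(signal: Signal) -> int:
    return len(signal) % REGIONS_PER_LEAF
```

Because only one leaf runs per signal, a root-stage error cannot be corrected by the leaf stage, which is why the root stage is trained specifically to separate the upper and lower regions.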

4.1. Baseline

CNNs have been proven effective in numerous applications, such as brain tumor classification [29], hyperspectral image classification [30], and remote sensing data classification [31]. Owing to the different application scenarios, many CNN variants exist. Among them, the classic models are AlexNet [32], VGG [33], and ResNet [34]. AlexNet is mainly composed of five convolutional layers and three fully connected layers, and it introduced the ReLU activation function into the structure. For most image classification problems, AlexNet has been proven to be effective. However, because it uses large convolutional kernels, the computational burden increases considerably as the network gets deeper. Compared to AlexNet, VGG uses 3 × 3 convolutional kernels to alleviate this problem and shows that deeper networks generally have a stronger fitting ability. Although deep networks can achieve better results when dealing with complex image classification problems, merely increasing the depth of the network may be counterproductive. Essentially, a deep network built by simply stacking layers faces the problem of degradation. To solve this problem, ResNet uses skip connections to realize identity mapping with shallow networks, which makes training very deep networks possible. Although these methods perform excellently in image classification, they may not be suitable for real-time application scenarios because too many network layers and parameters increase the computational cost. In addition, when the input length is considerably longer than the width, as the number of network layers increases, the two-dimensional features degrade into one-dimensional features, which degrades the classification ability of the model. Therefore, we chose the structure proposed in our previous work as the baseline for both the root and leaf stages [19]. The details of the baseline structure are shown in Table 2.

4.2. Proposed RL-CNN Method

Figure 4 shows the proposed RL-CNN. After data are collected from the IMU, they are segmented as described in Section 3, and the segmented data are then normalized to [0, 1]. After this preprocessing, we use the RL-CNN to perform zero-shot collision point localization. The structures of the root and leaf stages are similar. Their common parts consist of two convolutional layers, two max pooling layers, and three fully connected layers. The output of each convolutional layer is batch normalized and then activated using the ReLU function. The purpose of using batch normalization in such a shallow network is to suppress overfitting to the seen data and improve the network's ability to classify zero-shot data. For the same reason, a dropout layer with a rate of 0.5 is placed after the first max pooling layer. In the root stage, the network is mainly used to distinguish whether the contact occurs in the upper region or the lower region of the charging port. The output of the root stage determines which leaf stage is subsequently activated. The only difference between the networks used in the leaf and root stages is that the last layer of the leaf-stage network is a four-node fully connected layer with a softmax activation function. The leaf stages are mainly designed to estimate in which finely divided region the zero-shot collision point occurs. The parameters of each layer are shown in Table 3.
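As a small illustration of the normalization step in the preprocessing, the sketch below rescales one segmented signal to [0, 1]. The text does not state which normalization is used, so min-max scaling is an assumption here, as are the function name `min_max_normalize` and the choice to map a constant segment to zeros.

```python
from typing import List, Sequence


def min_max_normalize(segment: Sequence[float]) -> List[float]:
    """Rescale one segment to [0, 1] with min-max scaling (assumed scheme)."""
    if not segment:
        raise ValueError("segment must not be empty")
    lo, hi = min(segment), max(segment)
    if hi == lo:
        # A constant segment carries no amplitude information; map it to zeros.
        return [0.0 for _ in segment]
    span = hi - lo
    return [(x - lo) / span for x in segment]
```

For multi-axis IMU data, the same function could be applied per channel or over the whole segment; which of the two matches the original pipeline is not specified in the text.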

In order to provide a more general understanding of RL-CNN, we present a mathematical analysis of RL-CNN. Since the two stages of RL-CNN are highly similar, for the sake of clarity, we focus on one of the stages here. For an input vibration signal $X$ of size ($L_{in}$, $W_{in}$, $C_{in}$), where $L_{in}$, $W_{in}$, and $C_{in}$ represent the length of the input, the width of the input, and the channel of the input, respectively, assuming that the kernel size of the convolutional layer is ($k_1$, $k_2$), the channel in the convolutional layer is $C_{out}$, and the stride is $s$, the feature extracted by the convolutional layer can be described as:

$$Y_{i,j,c'} = f\left( \sum_{m=0}^{k_1-1} \sum_{n=0}^{k_2-1} \sum_{c=1}^{C_{in}} \mathbf{W}_{m,n,c,c'} \, X_{i \cdot s + m,\; j \cdot s + n,\; c} + \mathbf{b}_{c'} \right)$$

where $\mathbf{W}$ and $\mathbf{b}$ are the weight matrix and bias vector of the convolutional layer, respectively. In the convolution operation, the indices $i$ and $j$ correspond to the spatial location of the output feature map. The indices $c$ and $c'$ represent the number of the input channel and the output channel, respectively. $f(\cdot)$ represents the activation function. Assuming the padding is $p$, the output of the convolutional layer, denoted by $Y$, is a tensor of size ($L_{out}$, $W_{out}$, $C_{out}$), with $L_{out} = \lfloor (L_{in} - k_1 + 2p)/s \rfloor + 1$ and $W_{out} = \lfloor (W_{in} - k_2 + 2p)/s \rfloor + 1$, representing the activation maps of the convolutional layer. In this process, batch normalization is applied to the feature maps before they are activated, which improves accuracy and speeds up convergence during training.
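The size of the convolutional output follows the standard output-shape rule for a strided, zero-padded convolution. The helper below is a minimal sketch of that rule. The function name and argument names are illustrative, and the input and kernel sizes in the test values are hypothetical rather than the settings of Table 3.

```python
from typing import Tuple


def conv_output_size(length: int, width: int, kernel: Tuple[int, int],
                     stride: int, padding: int, out_channels: int) -> Tuple[int, int, int]:
    """Output (length, width, channels) of a convolution with symmetric zero padding."""
    k1, k2 = kernel
    if stride < 1 or padding < 0:
        raise ValueError("stride must be >= 1 and padding must be >= 0")
    if length + 2 * padding < k1 or width + 2 * padding < k2:
        raise ValueError("kernel does not fit inside the padded input")
    # floor((size - kernel + 2 * padding) / stride) + 1 along each spatial axis
    out_l = (length + 2 * padding - k1) // stride + 1
    out_w = (width + 2 * padding - k2) // stride + 1
    return out_l, out_w, out_channels
```

With a long and narrow input, the width shrinks toward 1 after only a few layers, which is the degradation of two-dimensional features into one-dimensional features mentioned in Section 4.1.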

In addition to the convolutional layer, we also incorporate a max pooling layer into our model. The max pooling operation is applied to each activation map independently. Assuming the stride of the max pooling is also set to $s$ and the pooling window size is ($q_1$, $q_2$), for activation maps of size ($L_{out}$, $W_{out}$, $C_{out}$) the output of the max pooling layer is a tensor of size ($\lfloor (L_{out} - q_1)/s \rfloor + 1$, $\lfloor (W_{out} - q_2)/s \rfloor + 1$, $C_{out}$). After the last max pooling layer, the output feature maps are flattened and passed through two or three fully connected layers. Finally, the output of the last fully connected layer is activated by the softmax activation function in order to serve as a criterion for collision localization.
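The pooling output size and the final softmax decision can be sketched as follows. This is an illustrative sketch only: the function names are hypothetical, pooling is assumed to use no padding, and taking the class with the largest softmax probability as the predicted region is an assumed decision rule.

```python
import math
from typing import List, Sequence, Tuple


def pool_output_size(length: int, width: int,
                     window: Tuple[int, int], stride: int) -> Tuple[int, int]:
    """Spatial size after max pooling without padding (assumed)."""
    q1, q2 = window
    return (length - q1) // stride + 1, (width - q2) // stride + 1


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax: subtract the maximum before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_region(logits: Sequence[float]) -> int:
    """Index of the most probable class (assumed decision rule)."""
    probs = softmax(logits)
    return max(range(len(probs)), key=probs.__getitem__)
```

For a leaf stage, `logits` would hold the four outputs of the last fully connected layer, so `predict_region` returns an index in 0..3.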

When considering the time complexity of the proposed RL-CNN, it is necessary to analyze the time complexity of each component. Here, we need to consider the time complexity of the convolutional layers, batch normalization layers, max pooling layers, and fully connected layers. Compared to these four components, the impact of the activation functions on the overall time complexity can be neglected. When using the relevant parameters provided above and ignoring the influence of bias, the time complexity of the first convolutional layer can be expressed as:
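Under the usual multiply-accumulate count, a sketch of this expression is given below. The symbols are assumptions introduced for illustration: $L_{out} \times W_{out}$ is the spatial size of the layer's output, $(k_1, k_2)$ is the kernel size, $C_{in}$ is the number of input channels, and $C_{out}$ is the number of filters.

```latex
T_{\mathrm{conv},1} = O\left( L_{out} \, W_{out} \, k_1 \, k_2 \, C_{in} \, C_{out} \right)
```

Each of the $L_{out} W_{out} C_{out}$ output entries requires $k_1 k_2 C_{in}$ multiply-accumulate operations, which gives the product above.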

When the network is relatively shallow and the number of filters in each convolutional layer is the same, the time complexity of the first convolutional layer can represent that of a generic convolutional layer in RL-CNN. The complexity of this component can be described as:
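One way to write this, assuming $N_c$ convolutional layers and using the superscript $(l)$ to mark the quantities of layer $l$ (notation introduced here for illustration), is as a sum of per-layer costs:

```latex
T_{\mathrm{conv}} = O\left( \sum_{l=1}^{N_c} L_{out}^{(l)} \, W_{out}^{(l)} \, k_1^{(l)} \, k_2^{(l)} \, C_{in}^{(l)} \, C_{out}^{(l)} \right)
```

For a shallow network with the same number of filters in every layer, each term has the same form as the first-layer cost, so the sum is on the order of $N_c$ times a single-layer cost.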

Based on similar reasons, the computational complexity of the batch normalization and max pooling layers can be expressed as follows, respectively:
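A sketch of these two expressions, under assumed notation, is given below. Here $L_{out} \times W_{out} \times C_{out}$ is the size of the feature maps being normalized, $(q_1, q_2)$ is the pooling window, and $L_{pool} \times W_{pool}$ is the spatial size of the pooled output; these symbol names are illustrative.

```latex
T_{\mathrm{bn}} = O\left( L_{out} \, W_{out} \, C_{out} \right), \qquad
T_{\mathrm{pool}} = O\left( L_{pool} \, W_{pool} \, q_1 \, q_2 \, C_{out} \right)
```

Batch normalization touches every feature-map entry a constant number of times, while max pooling compares $q_1 q_2$ values for each pooled output entry.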

Although the number of fully connected layers in the root and leaf stages is different, for the purpose of the time complexity analysis, we only need to consider the upper bound, which is obtained by assuming that there are three fully connected layers. Let the numbers of nodes in these layers be $n_1$, $n_2$, and $n_3$, and let $D$ be the dimension of the flattened output from the last max pooling layer. Then, the time complexity of the fully connected layers can be expressed as:
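Writing $D$ for the dimension of the flattened input and $n_1$, $n_2$, $n_3$ for the node counts of the three fully connected layers (symbol names assumed here), a sketch of this expression based on the cost of three successive matrix-vector products is:

```latex
T_{\mathrm{fc}} = O\left( D \, n_1 + n_1 \, n_2 + n_2 \, n_3 \right)
```

Each term is the number of weights in one layer, since a fully connected layer performs one multiply-accumulate per weight.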

Then, the overall time complexity of RL-CNN can be expressed as:
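To make the component-wise analysis concrete, the sketch below adds up operation counts for one stage. It assumes the standard counts for each layer type (multiply-accumulates for convolutional and fully connected layers, one pass per element for batch normalization, one comparison per window element for pooling). All function names and the layer sizes used in the test are hypothetical and are not the settings of Table 3.

```python
from typing import Sequence, Tuple

ConvSpec = Tuple[int, int, int, int, int, int]  # out_l, out_w, k1, k2, c_in, c_out
PoolSpec = Tuple[int, int, int, int, int]       # out_l, out_w, q1, q2, channels


def conv_macs(out_l: int, out_w: int, k1: int, k2: int, c_in: int, c_out: int) -> int:
    """Multiply-accumulates of one convolutional layer (bias ignored)."""
    return out_l * out_w * k1 * k2 * c_in * c_out


def bn_ops(out_l: int, out_w: int, channels: int) -> int:
    """One normalization pass per feature-map entry."""
    return out_l * out_w * channels


def pool_ops(out_l: int, out_w: int, q1: int, q2: int, channels: int) -> int:
    """One comparison per window element for every pooled output entry."""
    return out_l * out_w * q1 * q2 * channels


def fc_macs(dims: Sequence[int]) -> int:
    """dims = [flattened_dim, n1, n2, ...]; one multiply-accumulate per weight."""
    return sum(a * b for a, b in zip(dims, dims[1:]))


def stage_cost(conv_layers: Sequence[ConvSpec], pool_layers: Sequence[PoolSpec],
               fc_dims: Sequence[int]) -> int:
    """Total operation count of one stage (root or leaf)."""
    total = 0
    for out_l, out_w, k1, k2, c_in, c_out in conv_layers:
        # Each convolution is followed by batch normalization of its output.
        total += conv_macs(out_l, out_w, k1, k2, c_in, c_out)
        total += bn_ops(out_l, out_w, c_out)
    for out_l, out_w, q1, q2, channels in pool_layers:
        total += pool_ops(out_l, out_w, q1, q2, channels)
    return total + fc_macs(fc_dims)
```

Since the root stage activates only one leaf stage per signal, the cost of a single localization would be the root-stage cost plus the cost of one leaf stage, not of both.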