RSII: A Recommendation Algorithm That Simulates the Generation of Target Review Semantics and Fuses ID Information

Abstract

1. Introduction

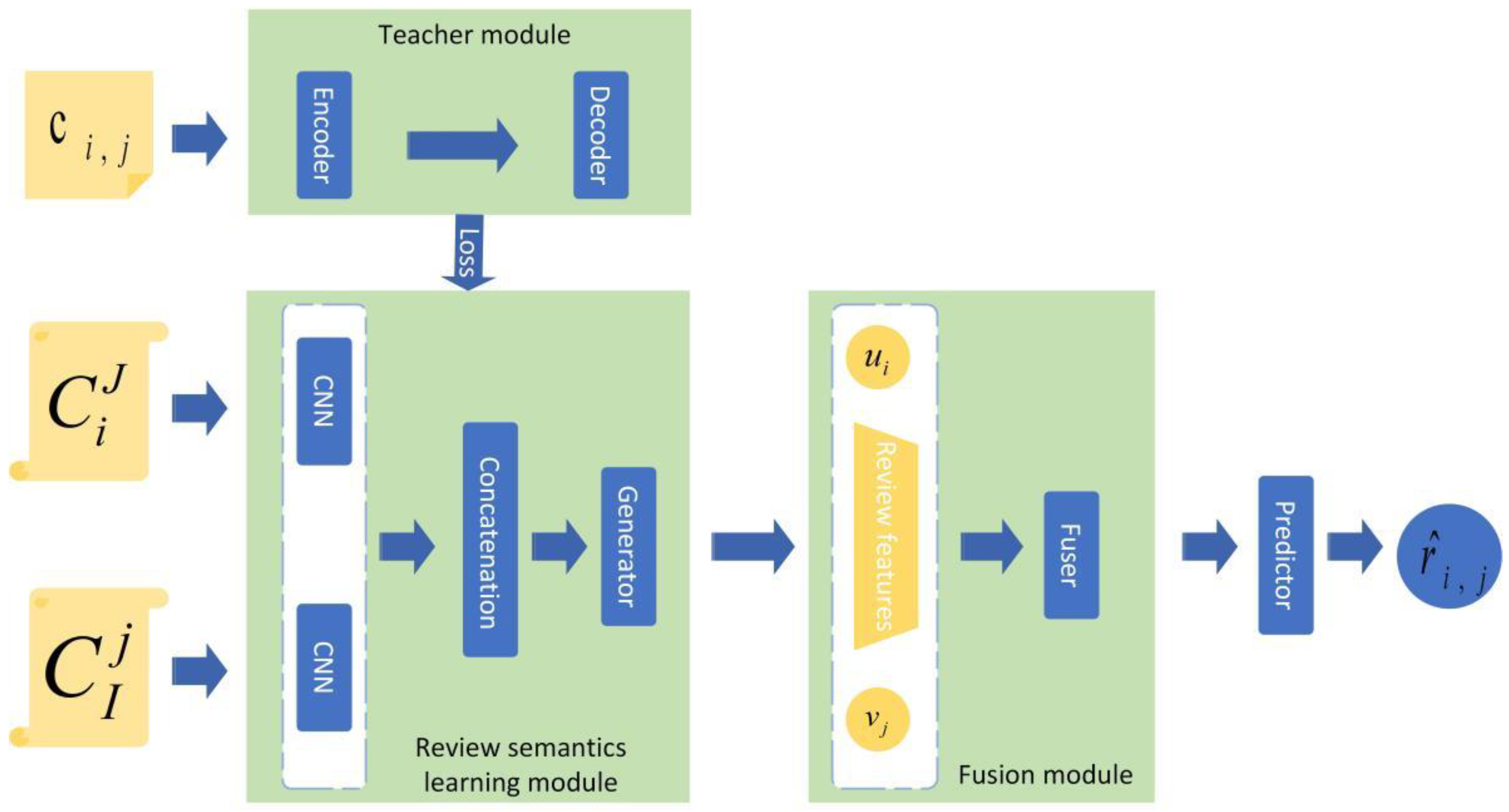

- This paper presents a review semantics learning module and a teacher module. The teacher module guides the review semantics learning module toward the target review semantics, which alleviates the difficulty of obtaining target reviews promptly.

- This paper proposes a fusion module that dynamically combines ID information with the target review semantics to enrich the predictive features, thereby alleviating the inconsistency between target reviews and ratings.

- The RSII model was evaluated extensively on three public datasets. Compared with seven state-of-the-art models, RSII reduced MSE by 8.81% and MAE by 10.29% on average across the three datasets, measured against the best baseline on each.
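
The fusion idea in the second contribution can be illustrated with a small sketch. The code below assumes one common form of dynamic fusion, a scalar sigmoid gate computed from the concatenated ID and review-semantic features; the function `gated_fusion`, the weight vector, and the toy values are hypothetical and are not taken from the paper's equations.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic function mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def gated_fusion(id_feat, sem_feat, weights, bias=0.0):
    """Blend two equal-length feature vectors with one scalar gate.

    The gate is computed from the concatenation [id_feat; sem_feat], so the
    mixing ratio depends on the inputs. This is an assumed, illustrative
    form and not the fusion module defined in the paper.
    """
    if len(id_feat) != len(sem_feat):
        raise ValueError("feature vectors must have the same length")
    concat = list(id_feat) + list(sem_feat)
    if len(weights) != len(concat):
        raise ValueError("weights must match the concatenated length")
    gate = sigmoid(sum(w * x for w, x in zip(weights, concat)) + bias)
    # Convex combination: gate near 1 favors ID features, near 0 favors semantics.
    fused = [gate * a + (1.0 - gate) * b for a, b in zip(id_feat, sem_feat)]
    return gate, fused


# Toy values chosen only for illustration.
id_feat = [0.2, -0.1, 0.5]
sem_feat = [0.4, 0.3, -0.2]
weights = [0.1, 0.0, -0.3, 0.2, 0.5, 0.1]
gate, fused = gated_fusion(id_feat, sem_feat, weights)
print(f"gate = {gate:.3f}")
print("fused =", [round(v, 3) for v in fused])
```

Because the gate lies strictly between 0 and 1, every fused component stays between the corresponding ID and semantic components. In a trained model the weights would be learned jointly with the rating predictor rather than fixed by hand.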

2. Related Work

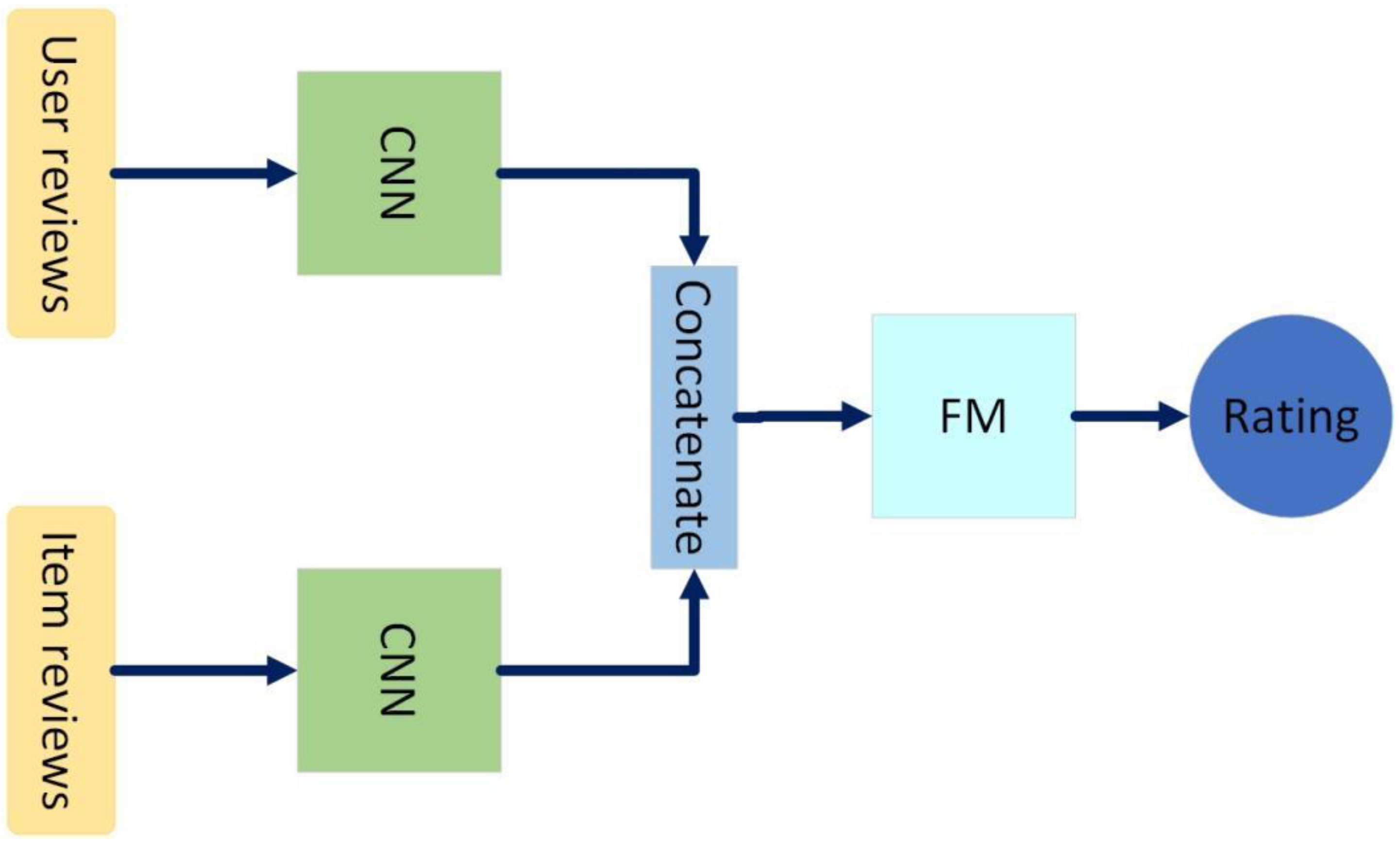

2.1. Review-Based Recommendations

2.2. Self-Teacher Learning

3. Preliminaries

3.1. Notation

3.2. Word Embedding

4. Proposed RSII Model

4.1. The Teacher Module

4.2. The Review Semantics Learning Module

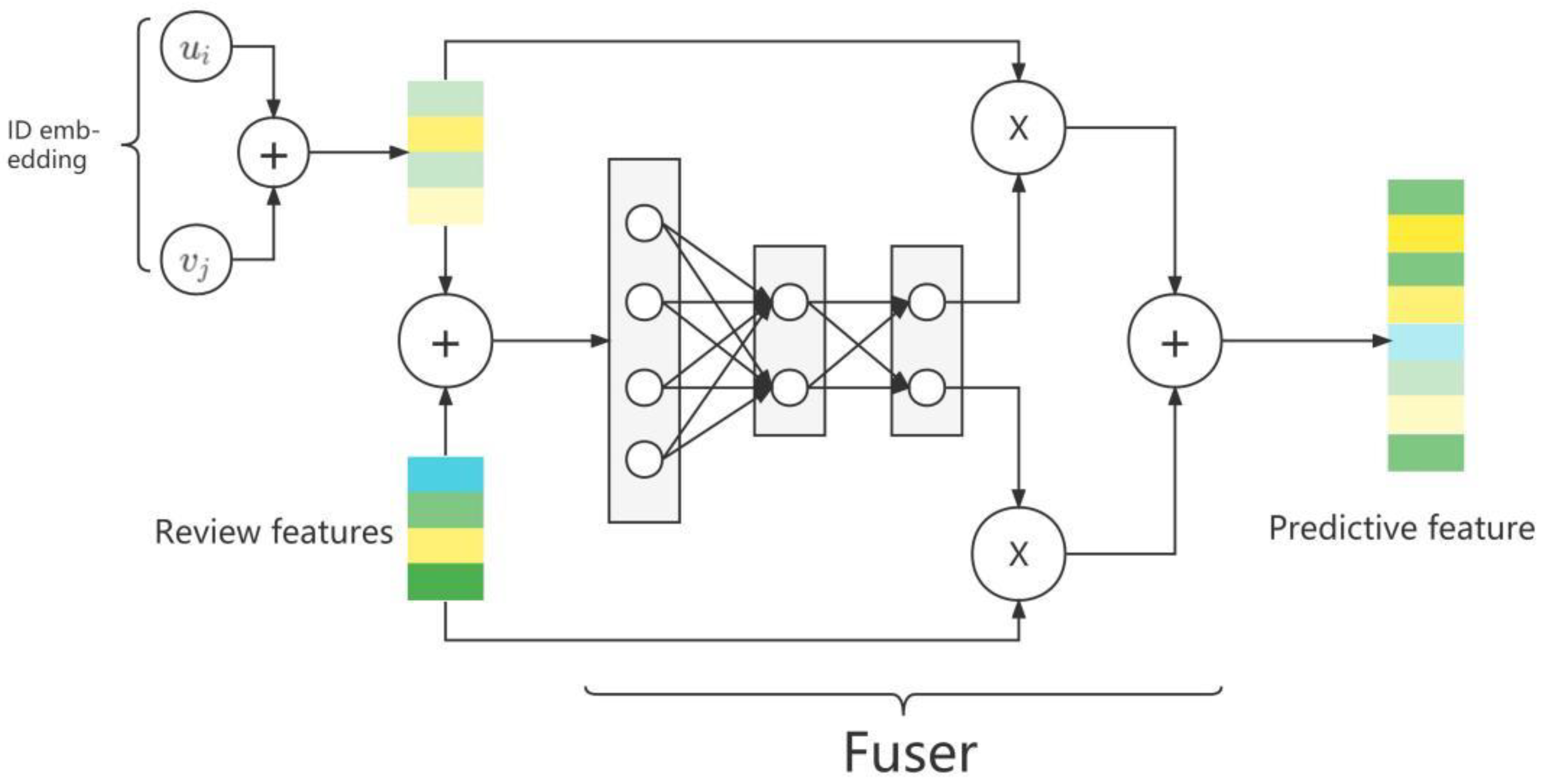

4.3. The Fusion Module

4.4. Predictor

5. Experimental Section

5.1. Datasets and Evaluation Metric

5.2. Baseline Method

5.3. Comparison of Experimental Results

5.4. Ablation Experiment

- RSII_si: Remove the teacher module and the fusion module.

- RSII_i: Remove the fusion module and retain the teacher module.

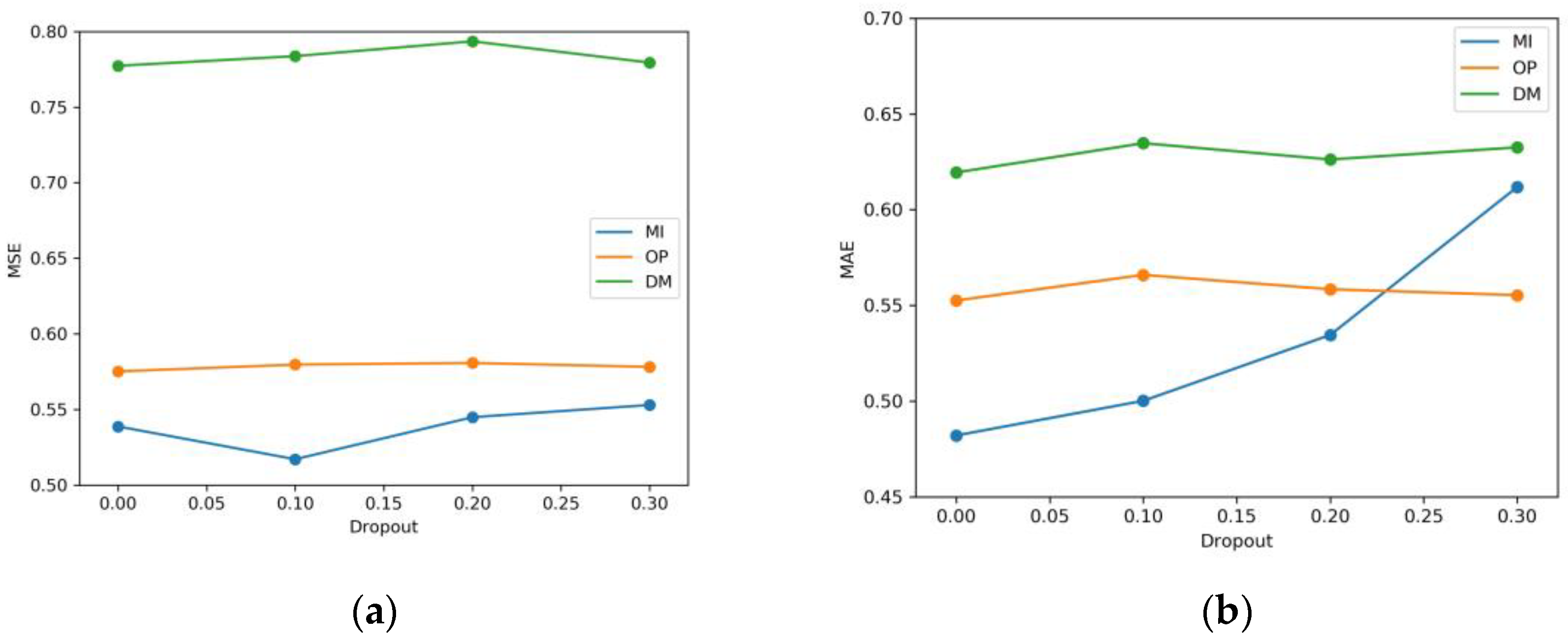

5.5. The Impact of Different Parameters on the Model

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Notations | Definitions |
|---|---|
| | The i-th user’s rating of the j-th item |
| | The i-th user’s review of the j-th item |
| | The predicted rating of the i-th user for the j-th item |
| | The embedding size |
| | The quantized value of |
| | The semantics of |
| | The reconstruction vector of |
| | All reviews of the i-th user excluding |
| | All reviews on the j-th item excluding |
| | The generated semantic features of reviews |
| | The ID features of the i-th user and j-th item |
| | The relative coefficient between and |

| Statistics | MI | OP | DM |
|---|---|---|---|
| Number of users | 1429 | 4905 | 5541 |
| Number of items | 900 | 2420 | 3568 |
| Number of ratings | 10,254 | 53,237 | 64,705 |
| Sparsity | 99.20% | 99.51% | 99.67% |
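
The sparsity row can be related to the other rows with a short calculation. The sketch below assumes the usual definition, one minus the number of ratings divided by the number of user-item pairs; the paper's exact counting convention is not stated here, so the function `sparsity` is an illustrative assumption.

```python
def sparsity(num_users: int, num_items: int, num_ratings: int) -> float:
    """Fraction of user-item pairs that have no observed rating."""
    return 1.0 - num_ratings / (num_users * num_items)


# (users, items, ratings) copied from the dataset statistics table.
datasets = {
    "MI": (1429, 900, 10_254),
    "OP": (4905, 2420, 53_237),
    "DM": (5541, 3568, 64_705),
}

for name, (users, items, ratings) in datasets.items():
    print(f"{name}: {sparsity(users, items, ratings):.2%}")
```

Under this definition the MI and DM values agree with the table to two decimals (99.20% and 99.67%). OP comes out near 99.55% rather than the listed 99.51%, which may reflect a different counting convention in the source.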

| Model | MI MAE | MI MSE | OP MAE | OP MSE | DM MAE | DM MSE |
|---|---|---|---|---|---|---|
| DeepCoNN | 0.622 | 0.557 | 0.682 | 0.718 | 0.783 | 1.055 |
| D-Attn | 0.644 | 0.862 | 0.618 | 0.746 | 0.653 | 0.840 |
| NARRE | 0.640 | 0.839 | 0.658 | 0.797 | 0.683 | 0.876 |
| CARL | 0.709 | 0.880 | 0.624 | 0.742 | 0.681 | 0.884 |
| TransNets | 0.784 | 1.162 | 0.661 | 0.718 | 0.825 | 1.197 |
| MPCN | 0.571 | 0.739 | 0.728 | 0.749 | 0.764 | 1.017 |
| MPCAR | 0.670 | 0.858 | 0.615 | 0.682 | 0.660 | 0.857 |
| OURS | 0.482 | 0.539 | 0.553 | 0.575 | 0.619 | 0.777 |
| Improvement | 15.59% | 3.23% | 10.08% | 15.69% | 5.21% | 7.50% |
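
The percentage row can be reproduced from the error values. The sketch below assumes that each improvement is the relative error reduction against the best (lowest) baseline in the same column, and that the headline MAE and MSE figures are plain averages over the three datasets. The proposed model's errors are entered to three decimals (0.482, 0.539, 0.553, 0.575, 0.619, 0.777); all variable and function names are illustrative.

```python
# Column order: MI MAE, MI MSE, OP MAE, OP MSE, DM MAE, DM MSE.
COLUMNS = [("MI", "MAE"), ("MI", "MSE"), ("OP", "MAE"),
           ("OP", "MSE"), ("DM", "MAE"), ("DM", "MSE")]

BASELINES = {
    "DeepCoNN":  [0.622, 0.557, 0.682, 0.718, 0.783, 1.055],
    "D-Attn":    [0.644, 0.862, 0.618, 0.746, 0.653, 0.840],
    "NARRE":     [0.640, 0.839, 0.658, 0.797, 0.683, 0.876],
    "CARL":      [0.709, 0.880, 0.624, 0.742, 0.681, 0.884],
    "TransNets": [0.784, 1.162, 0.661, 0.718, 0.825, 1.197],
    "MPCN":      [0.571, 0.739, 0.728, 0.749, 0.764, 1.017],
    "MPCAR":     [0.670, 0.858, 0.615, 0.682, 0.660, 0.857],
}
RSII = [0.482, 0.539, 0.553, 0.575, 0.619, 0.777]


def relative_improvement(baseline: float, ours: float) -> float:
    """Relative error reduction of `ours` against `baseline` (lower error is better)."""
    return (baseline - ours) / baseline


improvements = {}
for idx, column in enumerate(COLUMNS):
    best = min(scores[idx] for scores in BASELINES.values())
    improvements[column] = relative_improvement(best, RSII[idx])


def mean_improvement(metric: str) -> float:
    """Average improvement over the datasets for one metric ("MAE" or "MSE")."""
    values = [v for (_, m), v in improvements.items() if m == metric]
    return sum(values) / len(values)


for (dataset, metric), value in improvements.items():
    print(f"{dataset} {metric}: {value:.2%}")
print(f"mean MAE improvement: {mean_improvement('MAE'):.2%}")
print(f"mean MSE improvement: {mean_improvement('MSE'):.2%}")
```

Under these assumptions the per-column values match the improvement row, and the averages come to about 10.29% for MAE and 8.81% for MSE, the figures quoted in the contributions.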

| Model | MI MAE | MI MSE | OP MAE | OP MSE | DM MAE | DM MSE |
|---|---|---|---|---|---|---|
| RSII_si | 0.6085 | 0.6528 | 0.7116 | 0.7074 | 0.8079 | 1.0939 |
| RSII_i | 0.5627 | 0.5390 | 0.6898 | 0.7009 | 0.7750 | 1.0962 |
| RSII | 0.4820 | 0.5387 | 0.5525 | 0.5751 | 0.6194 | 0.7773 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ren, Q.; Qin, J.; Shao, J.; Song, X. RSII: A Recommendation Algorithm That Simulates the Generation of Target Review Semantics and Fuses ID Information. Appl. Sci. 2023, 13, 3942. https://doi.org/10.3390/app13063942
