To help a child with special needs understand his/her environment and improve his/her social skills, we developed text and audio classifiers that distinguish between the different ongoing situations the child may encounter. In this section, we discuss several studies focusing on emotion recognition via text and voice.

2.2.1. Text Emotion Recognition

Emotion recognition or sentiment analysis of text and speech is often used to determine the sentiments and emotions of writers or speakers [

20]. Acheampong et al. [

7] surveyed models, concepts, and approaches for text-based emotion detection (ED), and listed the important datasets available for text-based ED. In addition, they discussed recent ED studies and their results and limitations. A wide range of algorithms, including both supervised and unsupervised methods, have been employed for text-sentiment analysis. Early studies used several types of supervised ML methods (such as support vector machines (SVM), maximum entropy, and naïve Bayes (NB)) and a variety of feature combinations. Unsupervised methods include methods that exploit sentiment lexicons, grammatical analysis, and syntactic patterns. DL has emerged as a powerful ML technique and has achieved impressive achievements in many application domains, including sentiment analysis [

8]. Another approach was used by Shaheen et al. [

9], who proposed a framework for emotion classification in sentences, in which emotions were treated as generalized concepts extracted from sentences. They built an emotion seed called an emotion recognition rule and used k-nearest neighbors (KNN) and point mutual information classifiers to compare the generated emotion seed with a set of reference emotion recognition rules. In our previous work [

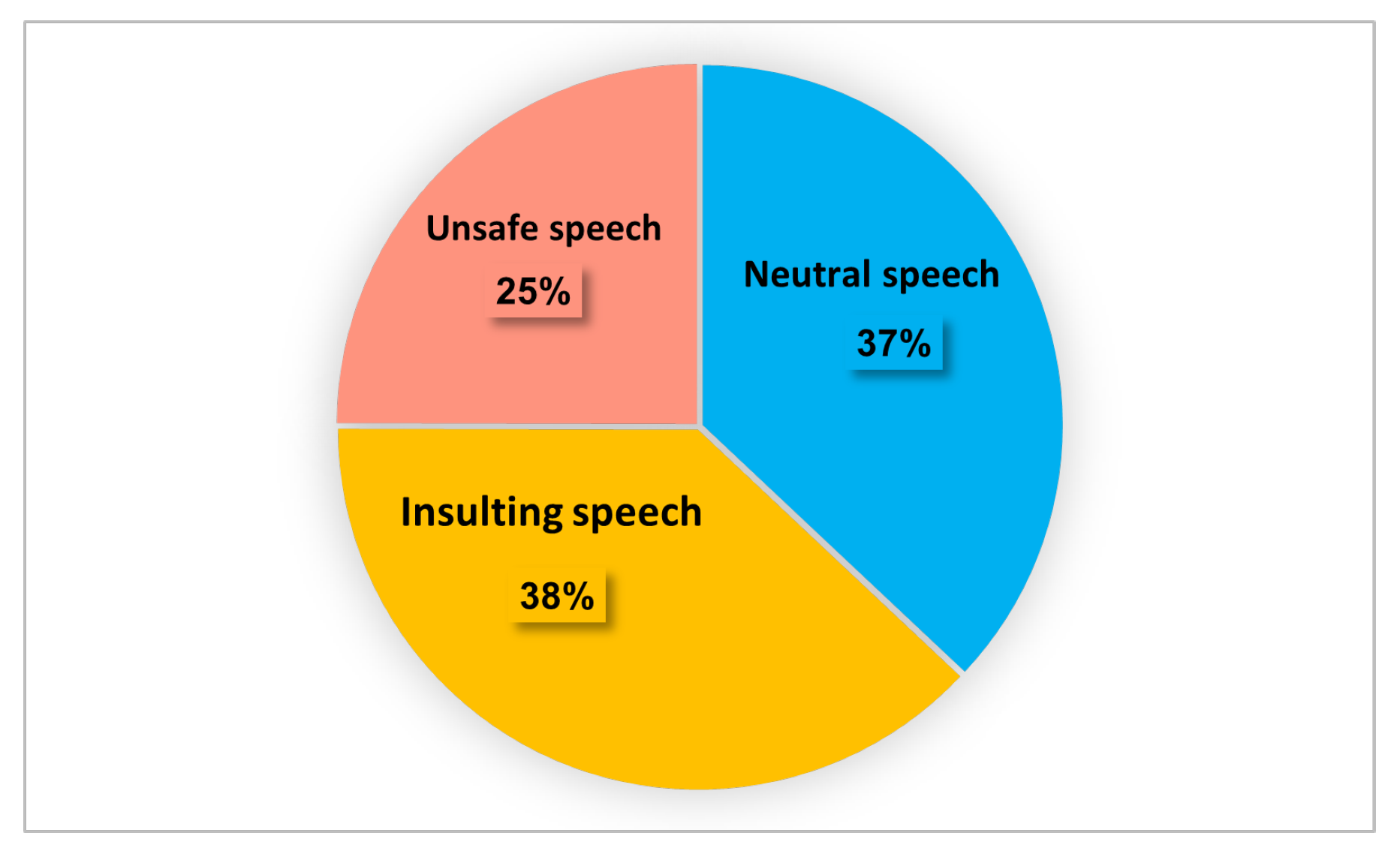

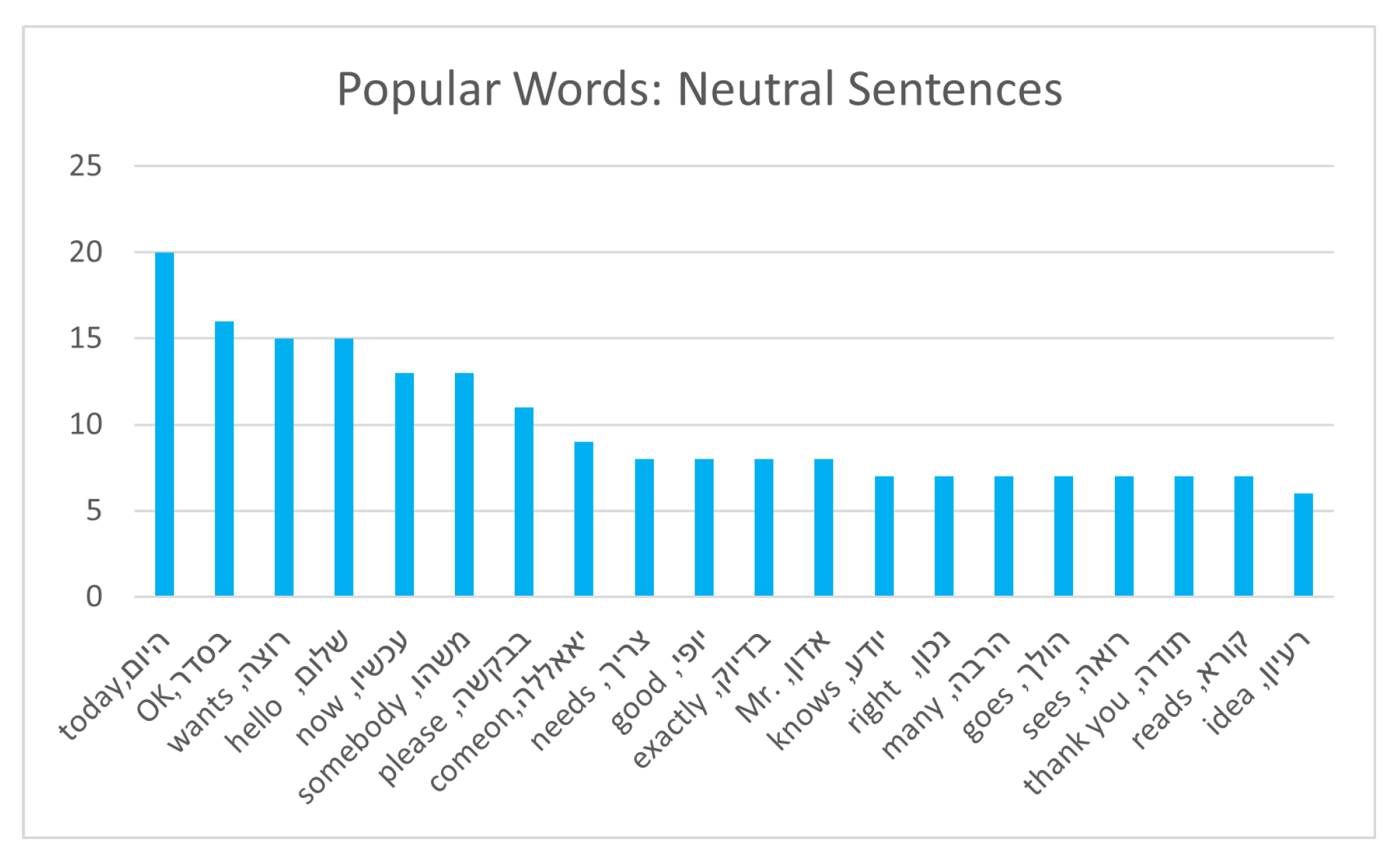

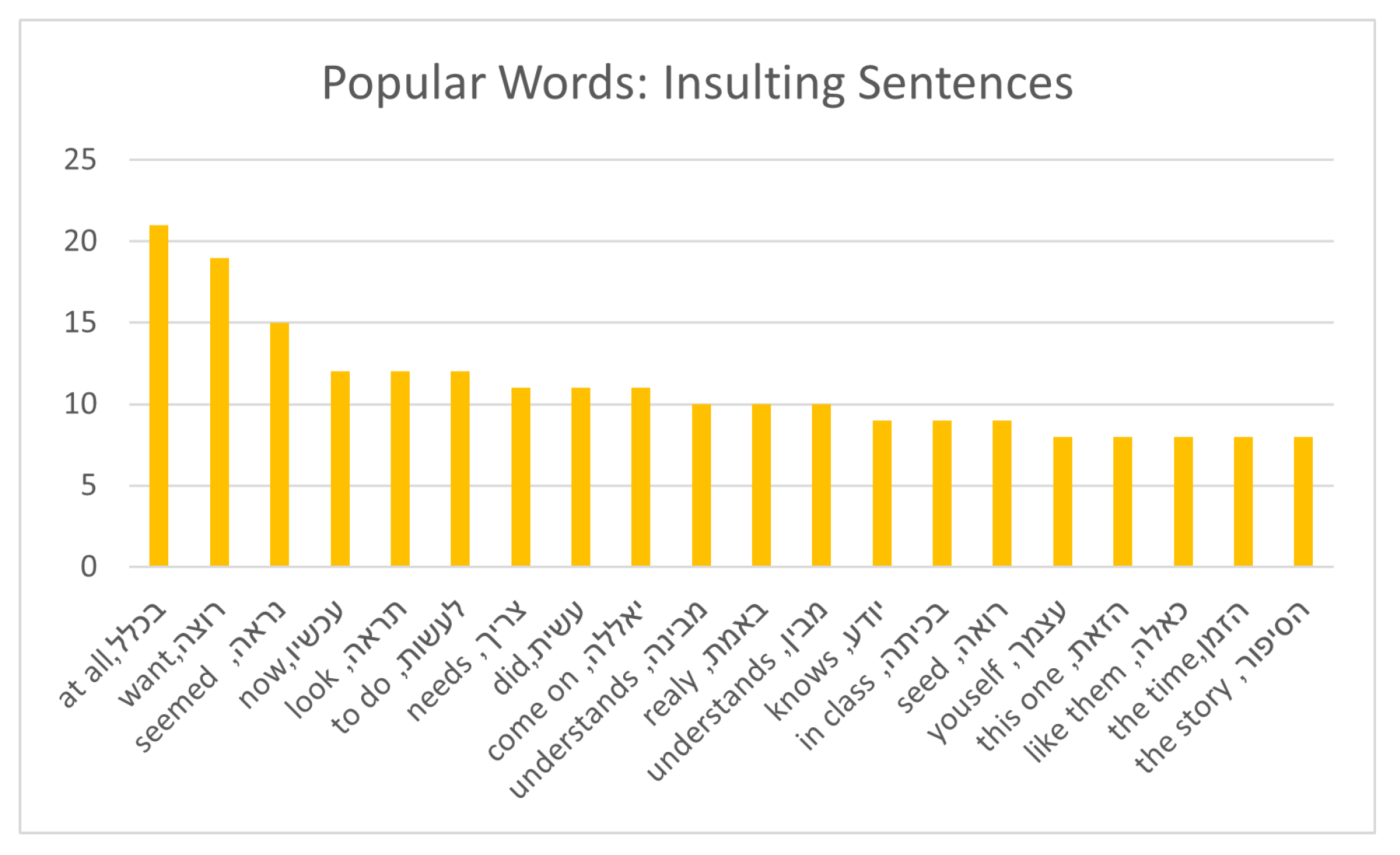

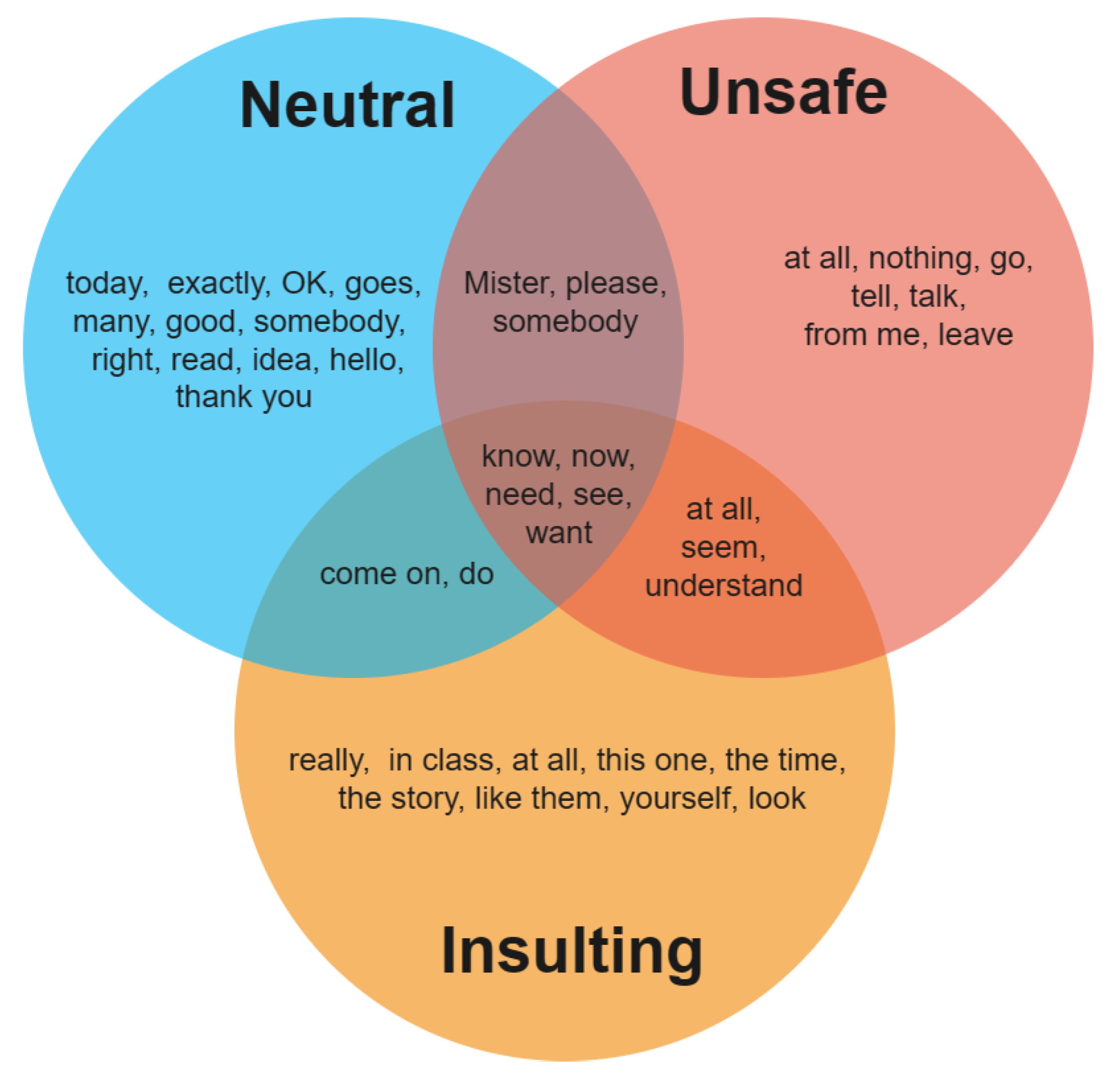

21], we concentrated on insulting sentence recognition using only text content. We generated a dataset consisting of insulting and non-insulting sentences and compared the ability of different classical ML methods of detecting insulting content.
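To make the pointwise mutual information measure mentioned above concrete, the following minimal sketch computes PMI between words and emotion labels on a toy corpus. It is not the implementation of Shaheen et al. [9]; the function name `pmi_scores`, the English toy sentences, and the document-count probability estimates are assumptions made only for illustration.

```python
import math
from collections import Counter

def pmi_scores(documents):
    """Return PMI for every (word, label) pair observed in `documents`.

    `documents` is a list of (tokens, label) pairs. Probabilities are
    estimated from document counts:
    PMI(w, c) = log2(P(w, c) / (P(w) * P(c))).
    """
    n_docs = len(documents)
    word_counts = Counter()
    label_counts = Counter()
    joint_counts = Counter()
    for tokens, label in documents:
        label_counts[label] += 1
        for word in set(tokens):  # count each word once per document
            word_counts[word] += 1
            joint_counts[(word, label)] += 1
    scores = {}
    for (word, label), joint in joint_counts.items():
        p_joint = joint / n_docs
        p_word = word_counts[word] / n_docs
        p_label = label_counts[label] / n_docs
        scores[(word, label)] = math.log2(p_joint / (p_word * p_label))
    return scores

# Hypothetical toy corpus (not taken from any dataset cited here).
toy_corpus = [
    (["what", "a", "wonderful", "gift"], "joy"),
    (["a", "wonderful", "day"], "joy"),
    (["a", "terrible", "day"], "sadness"),
    (["what", "a", "terrible", "loss"], "sadness"),
]
scores = pmi_scores(toy_corpus)
```

In this toy corpus, a word that appears only with one label (such as "wonderful" with "joy") receives a positive score, whereas a word spread evenly over both labels (such as "a") receives a score of zero.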

We next describe studies that apply DL methods to sentiment analysis. Socher et al. [22] proposed a semi-supervised recursive autoencoder network for sentence-level sentiment classification, which obtains a reduced-dimensional vector representation of a sentence. In addition, Socher et al. [23] proposed a matrix-vector recursive neural network, which builds representations of multiword units from single-word vector representations by forming a linear combination of the single-word representations.

Kalchbrenner et al. [24] proposed a dynamic convolutional neural network (CNN) using dynamic k-max pooling for the semantic modeling of sentences. Dos-Santos and Gatti [25] used two convolutional layers to extract relevant features from words and sentences of any size to perform sentiment analysis of short texts. Guan et al. [26] aimed to identify the semantic orientation of each sentence (e.g., positive or negative) in a review. They proposed a weakly supervised CNN for both sentence- and aspect-level sentiment classification. In the first stages of our research, we combined several DL and context-sensitive lexicon-based methods.
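As an illustration of the k-max pooling operation mentioned above, the sketch below keeps the k largest activations of a feature row while preserving their original order. The helper `dynamic_k`, which chooses k as a function of layer depth and sentence length, follows our reading of the rule in [24] and should be treated as an assumption; all function names and the toy values are hypothetical.

```python
import math

def k_max_pooling(values, k):
    """Keep the k largest values of a sequence, preserving their original order."""
    if k >= len(values):
        return list(values)
    # Indices of the k largest values (ties resolved in favor of earlier positions).
    top = sorted(range(len(values)), key=lambda i: (-values[i], i))[:k]
    return [values[i] for i in sorted(top)]

def dynamic_k(layer, total_layers, sentence_length, k_top):
    """Pooling size for a given layer: shrinks with depth, never below k_top.

    Assumed rule: k = max(k_top, ceil((L - l) / L * s)), with L convolutional
    layers, current layer l, and sentence length s.
    """
    scaled = math.ceil((total_layers - layer) * sentence_length / total_layers)
    return max(k_top, scaled)

# Hypothetical activations of one feature row over a six-word sentence.
feature_row = [0.1, 0.9, 0.3, 0.7, 0.2, 0.8]
pooled = k_max_pooling(feature_row, 3)
```

Unlike ordinary max pooling, the selected values keep their relative positions, so some word-order information survives the pooling step.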

Recent studies [27,28,29,30,31,32] applied long short-term memory (LSTM) networks to sentiment analysis. Akhtar et al. [33] employed several ensemble models by combining DL with classical feature-based models for the fine-grained sentiment classification of financial microblogs and news. This approach achieved slightly better results than Guggilla [30], who used LSTM for sentiment analysis on a dataset with similar properties. Wang et al. [29] proposed a regional CNN-LSTM model that consists of two parts: a regional CNN and an LSTM network for predicting the valence-arousal ratings of text. However, the results obtained using this method were not as strong as those obtained using previous methods.

Our work is different from typical sentiment analysis, in which the emotions of the writer are detected; instead, we focused on detecting sentences that cause the listener to feel insulted or bullied. This will allow us to guide the child toward more appropriate behavior in the future; for example, choosing not to tell grandma that she is fat. A similar goal was addressed by Kai et al. [34]. In their study, Kai et al. used a rule-based system with underlying conditions that trigger emotions, based on an emotional model. The dataset comprised text from Chinese microblogs. They used an emotion model to extract the cause components of fine-grained emotions. Gui et al. [35] addressed emotion cause extraction, that is, extracting the stimulus or cause of an emotion. They proposed an event-driven emotion cause extraction method, in which a seven-tuple representation of events was used. Based on this structured representation of events and the inclusion of lexical features, they designed a convolutional kernel-based learning method to identify emotion-causing events using syntactic structures.

Chkroun and Azaria [36,37] developed Safebot, a chatbot system that converses with humans. Safebot uses human feedback to identify offensive behaviors. When Safebot was told that it had said something offensive, it apologized and added the offensive sentence to its database. It then avoided using those sentences. While our proposed artificial assisting agent generally relies on its ability to perform sentiment analysis, it also relies on its ability to detect hate speech, bullying, and insulting speech from two perspectives. From the perspective of children, we would like to detect bullying directed at children with special needs to protect them. From the viewpoint of those who interact with these children, we would like to verify that the child behaves appropriately and refrains from speaking in an insulting manner. The insulting sentences in our domain can be a result of innocent intentions, and in most cases, they do not contain language that would be considered bullying.

Some prior work has addressed the aforementioned issue. Nobata et al. [38] used Vowpal Wabbit’s regression model and natural language processing (NLP) features to detect hate speech in online user comments from two domains. This approach outperformed a state-of-the-art DL approach. Libeskind et al. [39] detected abusive Hebrew texts in comments on Facebook by using a highly sparse n-gram representation of letters. Since comments on social media are usually short, they suggested four dimension reduction methods that classify similar words into groups and showed that the character n-gram representations outperformed all the other representations. Dadvar et al. [40] proposed integrating expert knowledge into a system for cyberbullying detection. Using a multicriteria evaluation system, they obtained a better understanding of the bullying behavior of YouTube users and their characteristics through expert knowledge. Based on this knowledge, the system assigned each user a score that represented their level of bullying based on the history of their activities.

In a related study, Schlesinger et al. [41] focused on race talk and hate speech. They described technologies, theories, and experiences that enable a conversational agent (CA) to handle race talk, and examined the generative connections between race, technology, conversation, and CAs. By drawing together the technological-social interactions involved in race talk and hate speech, they pointed out the need for developing generative solutions focusing on this issue.

In our study, we utilized Heb-BERT [5], a Hebrew version of BERT, to analyze and classify texts. The BERT model’s ability to capture context and meaning is useful for understanding and analyzing natural language data. Additionally, its fine-tuning capabilities allow for high accuracy in analyzing specific datasets. By utilizing BERT for research, we gain a more nuanced and accurate understanding of natural language data, making it possible to identify patterns and trends that are challenging to detect using other methods.

To evaluate the performance of Heb-BERT in our study, we compared the accuracy of various machine learning and deep learning methods on the TF-IDF representation of the sentences, as well as on the Heb-BERT representation of the training set, and we also fine-tuned the model using our training data. We achieved notably higher accuracy in detecting abusive and harmful sentences on the Heb-BERT representation of the test set than the learning methods achieved on the TF-IDF representation of the test set. This highlights the significance of using advanced natural language processing techniques such as Heb-BERT in research, especially in situations where accurate detection is critical.
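For readers unfamiliar with the TF-IDF baseline referred to above, the following minimal sketch builds TF-IDF vectors and classifies a sentence by its most similar training sentence. It is an illustration under stated assumptions rather than our experimental pipeline: the smoothed IDF formula, the 1-nearest-neighbor classifier, all function names, and the English toy sentences (our data are in Hebrew) are chosen only for this example.

```python
import math
from collections import Counter

def fit_idf(tokenized_docs):
    """Smoothed inverse document frequency for every training term."""
    n_docs = len(tokenized_docs)
    doc_freq = Counter(term for doc in tokenized_docs for term in set(doc))
    return {term: math.log((1 + n_docs) / (1 + df)) + 1
            for term, df in doc_freq.items()}

def tfidf_vector(tokens, idf):
    """L2-normalized sparse TF-IDF vector; terms unseen in training are ignored."""
    counts = Counter(t for t in tokens if t in idf)
    weights = {t: c * idf[t] for t, c in counts.items()}
    norm = math.sqrt(sum(w * w for w in weights.values()))
    return {t: w / norm for t, w in weights.items()} if norm else {}

def cosine(u, v):
    """Dot product of two unit-length sparse vectors."""
    return sum(w * v.get(t, 0.0) for t, w in u.items())

def predict(tokens, train_vectors, train_labels, idf):
    """Label of the most similar training sentence (1-nearest neighbor)."""
    query = tfidf_vector(tokens, idf)
    best = max(range(len(train_vectors)),
               key=lambda i: cosine(query, train_vectors[i]))
    return train_labels[best]

# Hypothetical English toy data, for illustration only.
train = [
    ("you are so stupid".split(), "insulting"),
    ("nobody likes you".split(), "insulting"),
    ("you are so kind".split(), "neutral"),
    ("thank you for the gift".split(), "neutral"),
]
idf = fit_idf([tokens for tokens, _ in train])
vectors = [tfidf_vector(tokens, idf) for tokens, _ in train]
labels = [label for _, label in train]
prediction = predict("he is so stupid".split(), vectors, labels, idf)
```

Because TF-IDF treats each sentence as a bag of weighted terms, it cannot use word order or context, which is one plausible reason why contextual representations such as Heb-BERT can perform better on this task.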

2.2.2. Voice Emotion Recognition

Speech is the fastest and most natural mode of communication among humans. This has motivated researchers to consider speech as an efficient method for human–machine interaction.

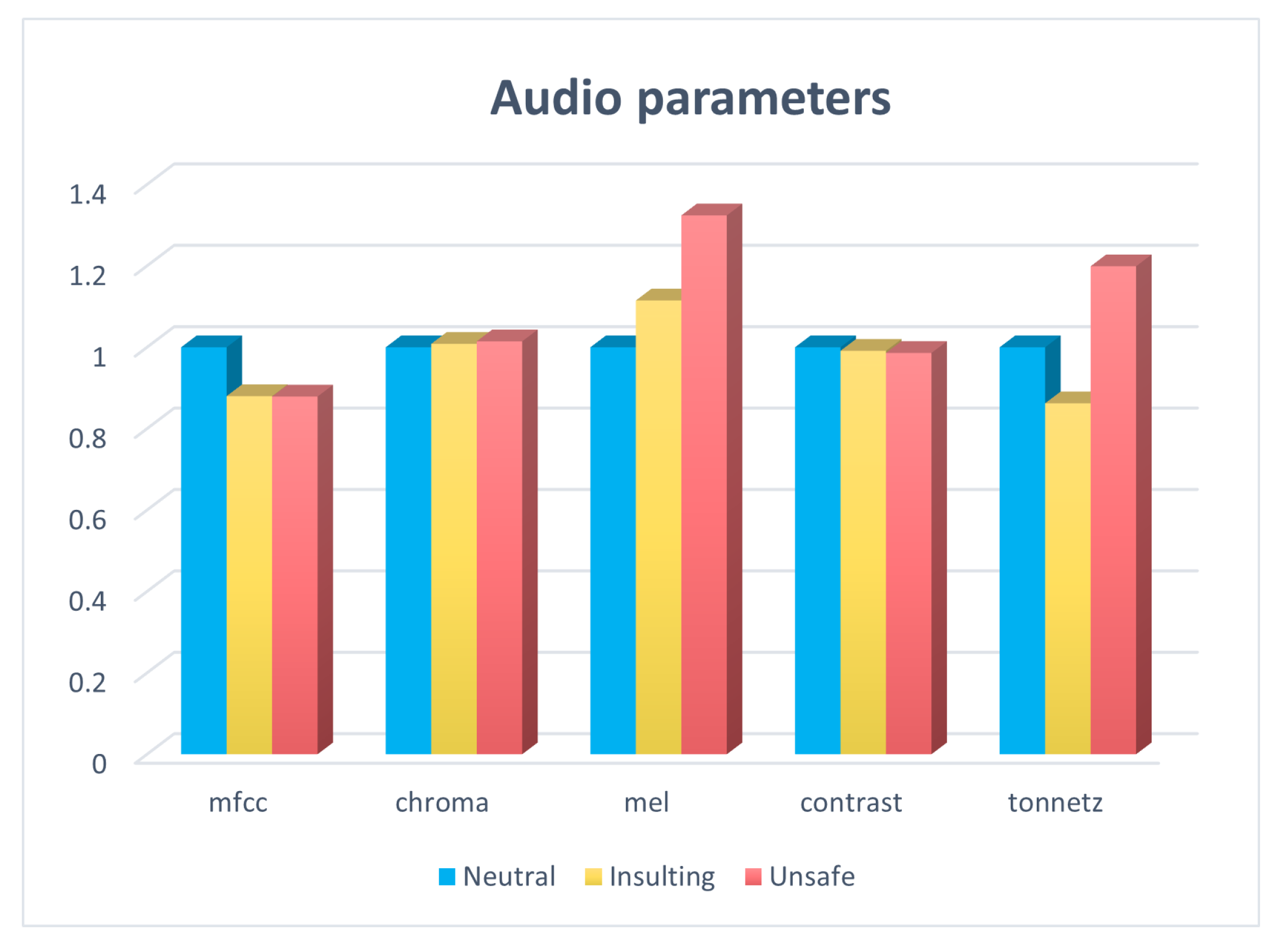

El Ayadi et al. [42] surveyed three important aspects of the design of a speech emotion recognition system: the choice of suitable features for speech representation, the design of an appropriate classification scheme, and the proper preparation of an emotional speech database for evaluating system performance. Feature selection is a crucial step in the process of audio-based sentiment analysis. It involves identifying the most relevant features or characteristics of an audio signal that can be used to effectively predict the sentiment expressed in it. This can greatly improve the accuracy and efficiency of sentiment analysis algorithms, as well as reduce the amount of data that needs to be processed.

There are several methods for feature selection in audio-based sentiment analysis, each with its own strengths and weaknesses.

Soleymani et al. [45] reviewed sentiment analysis studies based on multimodal signals, including visual, audio, and textual information. The source data came from different domains, including spoken reviews, images, video blogs, and human–machine and human–human interactions. In our study, the data source used for risk or insulting context detection was based on human–human interactions obtained from YouTube videos of Hebrew children’s movies, and the speech text was extracted from the recordings manually by research assistants.

Vocal speech can be classified using classical ML methods. Noroozi et al. [46] proposed the use of random forest for vocal emotion recognition. This technique adopts random forests to represent speech signals along with the decision-tree approach to classify them into different categories. The emotions were broadly categorized into six groups. The Surrey audio-visual expressed emotion database [47] was used in this study. The proposed method achieved an average recognition rate of 66.28%.

Han et al. [48] proposed using a deep neural network (DNN) to produce an emotion state probability distribution for each speech segment. Nwe et al. [49] proposed a method that represents speech signals using short-time log frequency power coefficients (LFPC) and uses a discrete hidden Markov model (HMM) as the classifier. The performance of the LFPC feature parameters was compared with that of the linear prediction cepstral coefficients (LPCC) and mel-frequency cepstral coefficients (MFCC) feature parameters commonly used in speech recognition systems. The results showed that the proposed system yielded an average accuracy of 78%. Li et al. [50] combined DNN and HMM acoustic models, which had achieved better speech recognition results than Gaussian mixture model-based HMMs, together with a restricted Boltzmann machine (RBM), and achieved an accuracy of up to 77.92%.

A different type of neural network was used by Wu et al. [51]. They explored spectrogram-based representations for speech emotion classification using the USC-IEMOCAP dataset. They experimented with features from both the speech and glottal volume velocity spectrograms. Their experiments investigated whether classification performance could be improved by filtering out unwanted factors of variation, such as speaker identity and verbal content (phonemes), from speech.

Zadeh et al. [52] introduced the Tensor Fusion Network (TFN), which learns both intramodal and intermodal dynamics end to end. The intermodal dynamics were modeled using a fusion approach called Tensor Fusion, which explicitly aggregates unimodal, bimodal, and trimodal interactions. The intramodal dynamics were modeled through three sub-networks that embed the language, visual, and acoustic modalities, respectively. The model achieved 69.4% accuracy for binary classification.
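The fusion step described above can be sketched as a three-fold outer product of the modality embeddings, each extended with a constant 1 so that the resulting tensor contains unimodal, bimodal, and trimodal terms side by side. This is a minimal sketch of the idea, not the implementation in [52]; the function name, the toy embeddings, and the choice to place the constant first are assumptions made for illustration.

```python
def tensor_fusion(z_language, z_visual, z_acoustic):
    """Three-fold outer product of modality embeddings, each extended with a 1."""
    lang = [1.0] + list(z_language)
    vis = [1.0] + list(z_visual)
    aco = [1.0] + list(z_acoustic)
    # fused[i][j][k] = lang[i] * vis[j] * aco[k]
    return [[[li * vj * ak for ak in aco] for vj in vis] for li in lang]

# Hypothetical low-dimensional embeddings produced by the three sub-networks.
fused = tensor_fusion([0.5, -1.0], [2.0], [3.0, 4.0, 0.25])

language_only = [fused[i][0][0] for i in (1, 2)]  # unimodal terms
language_visual = fused[1][1][0]                  # a bimodal term
all_three = fused[1][1][1]                        # a trimodal term
```

Entries in which two of the three indices point at the constant reproduce a single modality, entries with one constant index are pairwise products, and the remaining entries combine all three modalities. The fused tensor is then flattened and passed to a classifier.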

Jain et al. [53] used an SVM to classify speech into one of four emotions (sadness, anger, fear, and happiness). They applied the SVM classifier under two strategies: one against all (OAA) and gender-dependent classification. They achieved 85.085% accuracy when using MFCC features.

Our final goal was online emotion recognition to mediate the environment for children with special needs. Research has been conducted on developing online speech emotion recognition (SER) systems. Bertero et al. [54] built a conventional dialogue system based on modules that enable it to show “empathy” and answer the user while being aware of the user’s emotions and intent. They used a CNN model to extract emotions from raw speech inputs, without feature engineering. This approach achieved an accuracy of 65.7%.

Gandhi et al. [10] surveyed recent developments in multimodal sentiment analysis, including several fusion technologies, popular multimodal datasets, deep learning models for multimodal sentiment analysis, interdisciplinary applications, and future research directions. Another application of multimodal input of text and audio is sarcasm detection [55]. Castro et al. created a dataset that includes richly annotated conversational and multimodal context features. The dataset also provides prior turns in the dialogue, which serve as contextual information.

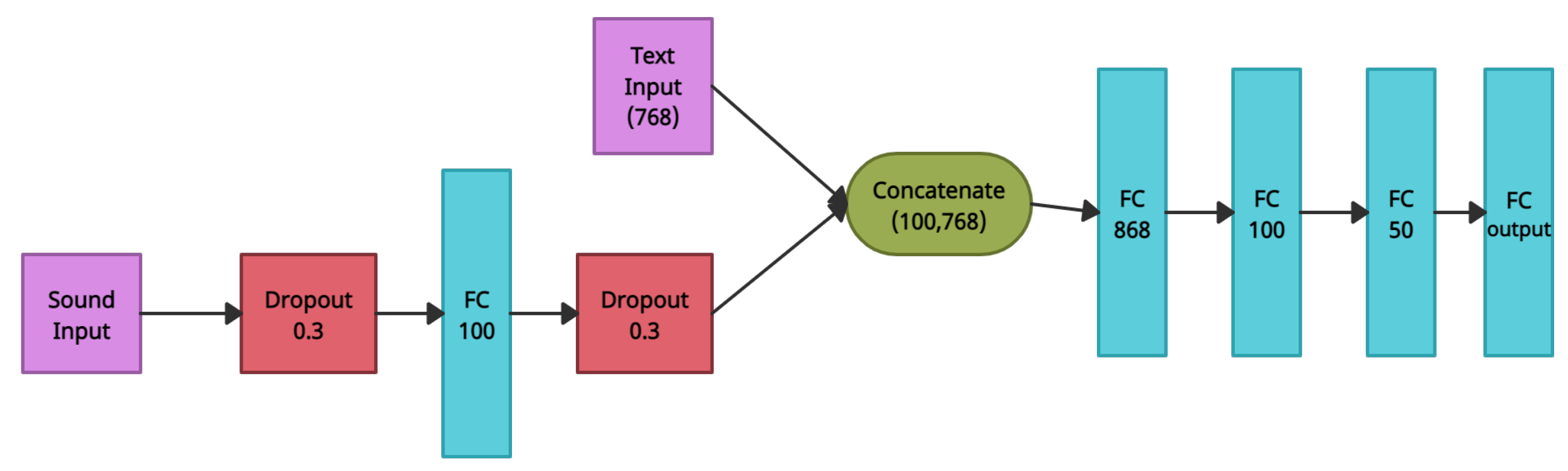

Another interesting work on text- and voice-based categorization was performed by Zhu et al. [

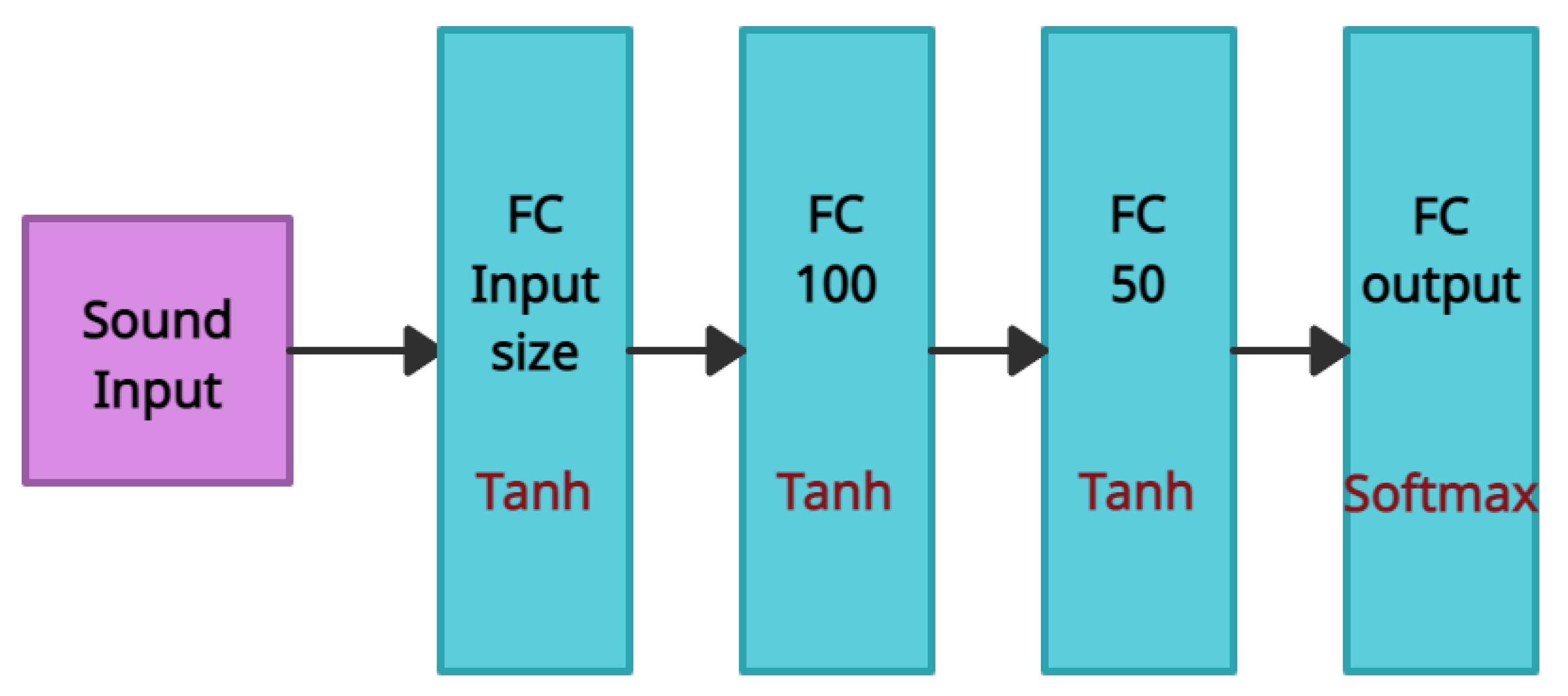

56]; they suggested combining BERT text-embedded vectors with Wav2Vec audio-embedded vectors for dementia detection. They used the Wav2Vec model to generate automatic speech recognition (ASR) transcripts and vectors to fine-tune BERT, followed by inference layers consisting of a convolutional, global average pooling, and fully connected layers for the dementia detection task. Dementia detection was performed based on the BERT embedding vector, where pauses in the audio were recorded as punctuation marks. While speech slowing down and pauses in speech can be a sign for dementia, in our problem, there are almost no clear audio features. Although some audio features do exist, such as a specific type of intonation expressing anger, they do not have clear textual features that can be converted to BERT as performed by Zhu et al. Thus, for the classification task we utilized both Wav2Vec and Hebrew BERT embedded vectors to detect risky and insulting sentences. In particular, we used the Wav2Vec model for fine-tuning and extracting audio features from our data, combined the given embedded vector with the text Heb-BERT embedded vector [

5], and used a concatenate vector to train our DNN model. We achieved accuracy of up to 80%.
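The concatenation step can be illustrated with the following minimal sketch, in which an audio embedding and a text embedding are joined into a single vector and passed through a small feed-forward network. It is not our trained model: the three- and two-dimensional embeddings, the layer sizes, and the fixed weights are hypothetical placeholders, whereas in practice the embeddings would come from Wav2Vec and Heb-BERT and the weights would be learned.

```python
import math

def fuse(audio_embedding, text_embedding):
    """Early fusion: concatenate the two utterance-level vectors."""
    return list(audio_embedding) + list(text_embedding)

def dense(inputs, weights, biases, activation):
    """One fully connected layer; `weights` holds one row per output unit."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def relu(x):
    return max(0.0, x)

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

# Hypothetical embeddings standing in for the Wav2Vec and Heb-BERT outputs.
audio_embedding = [0.2, -0.4, 0.1]
text_embedding = [0.7, 0.3]
fused = fuse(audio_embedding, text_embedding)

# Untrained placeholder weights: one hidden layer with two units, one output unit.
hidden_weights = [[0.5, -0.5, 0.0, 1.0, 0.0],
                  [0.0, 0.0, 1.0, 0.0, -1.0]]
hidden = dense(fused, hidden_weights, [0.0, 0.0], relu)
probability = dense(hidden, [[1.0, 1.0]], [0.0], sigmoid)[0]
```

Concatenation keeps the two modalities in separate coordinates of the input, so the network is free to learn which audio cues and which textual cues matter for a given sentence.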