Classification of Micromobility Vehicles in Thermal-Infrared Images Based on Combined Image and Contour Features Using Neuromorphic Processing

Abstract

1. Introduction

2. State of the Art

Automated Counting

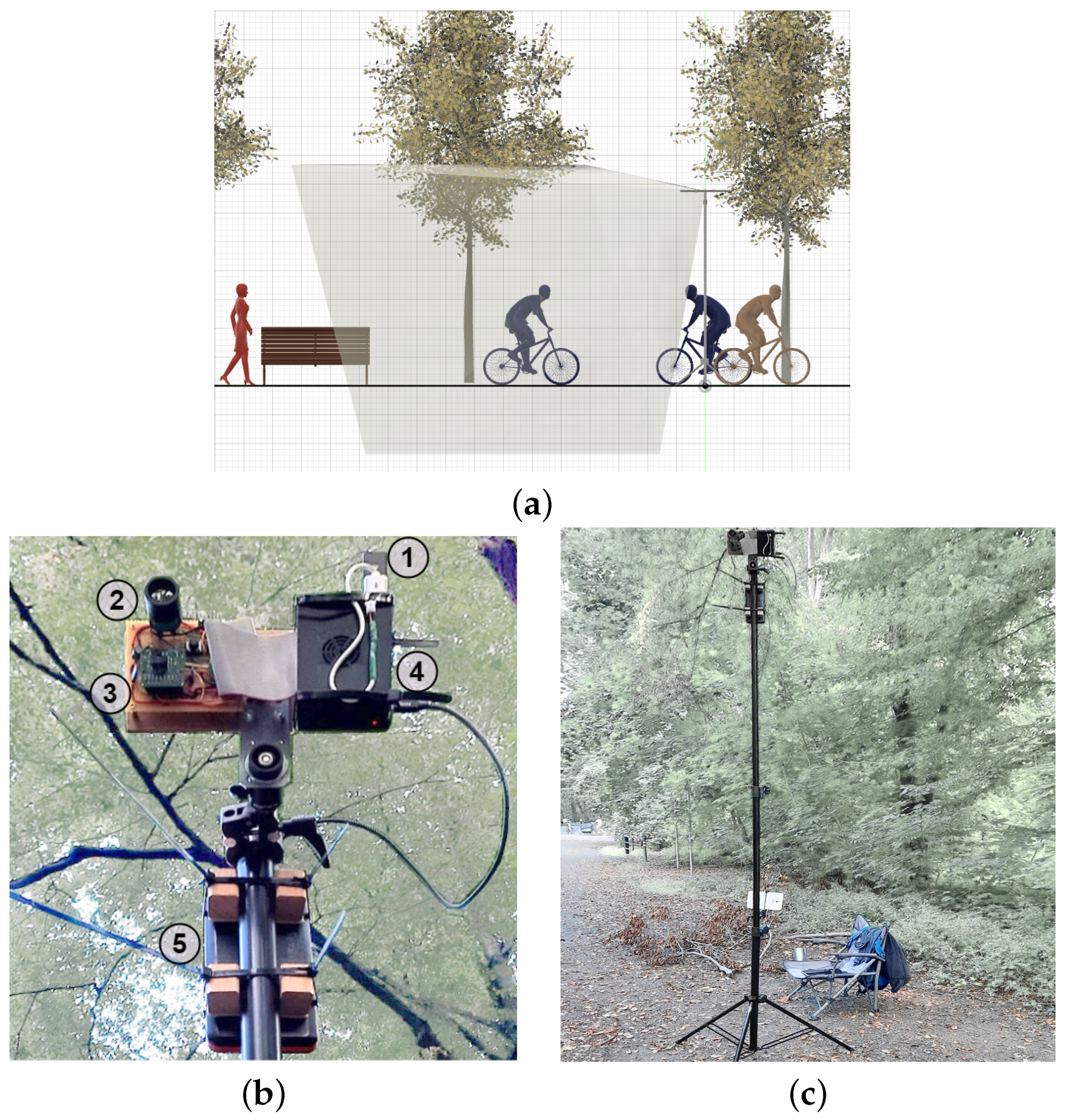

3. System Concept and Hardware

3.1. Concept

- Noninvasive;

- Location-independent;

- Easy set-up;

- Low power consumption;

- Enables classification.

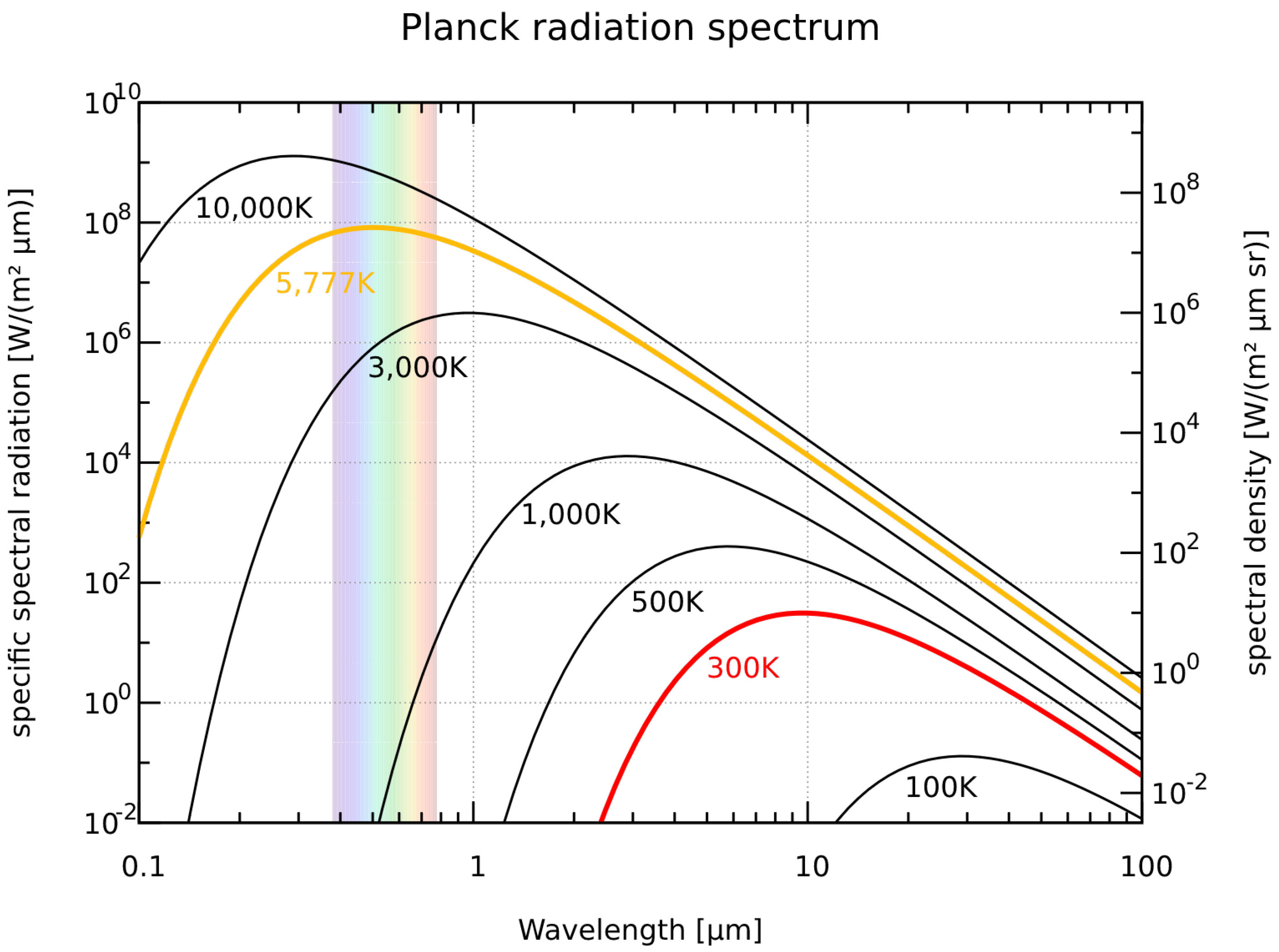

3.2. Sensors

3.3. The Neuromorphic Processor

3.4. Software Concept

4. Evaluation

4.1. Set-Up

4.2. Evaluation Method

4.3. Metrics

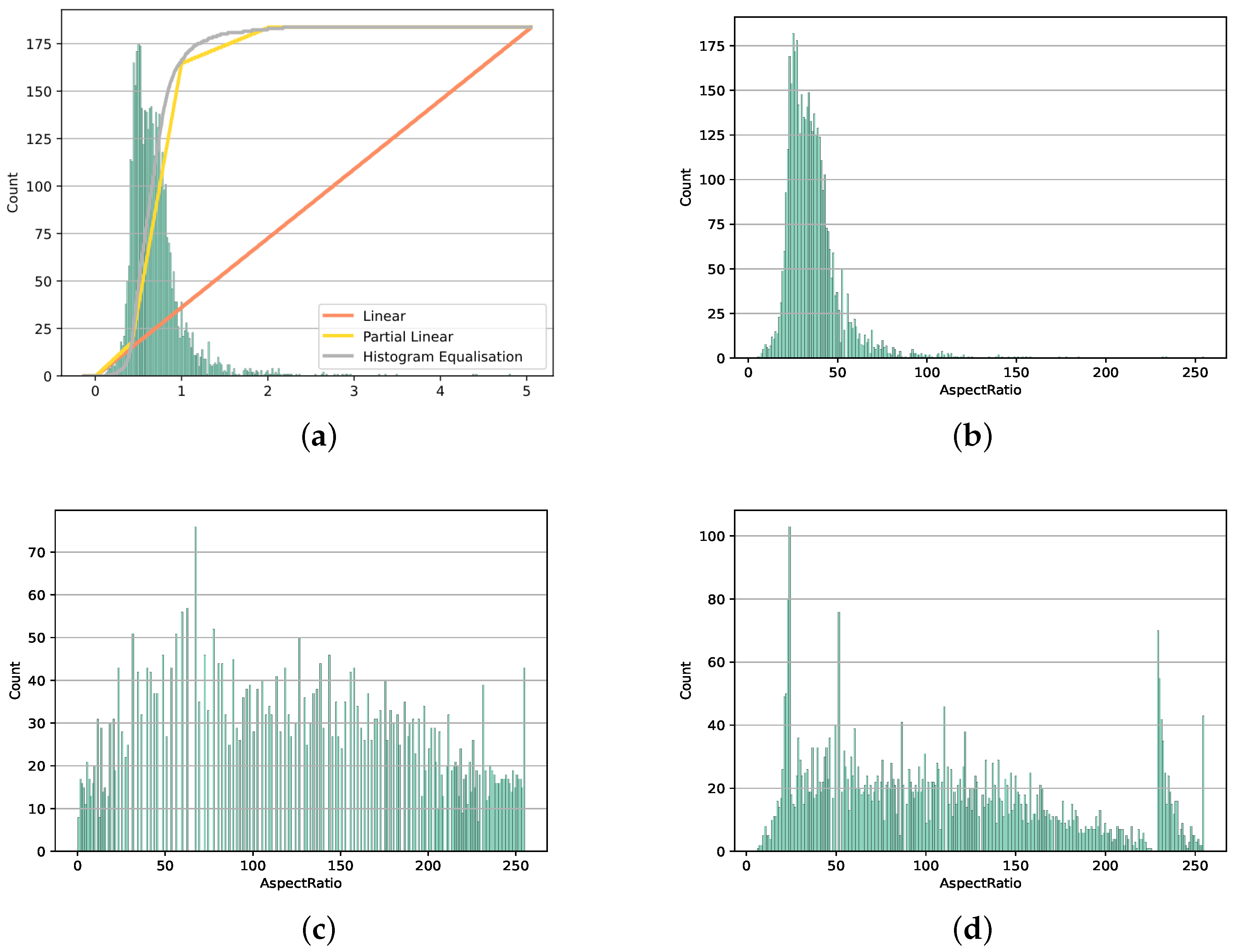

4.4. Feature Extraction

4.5. Training Behavior

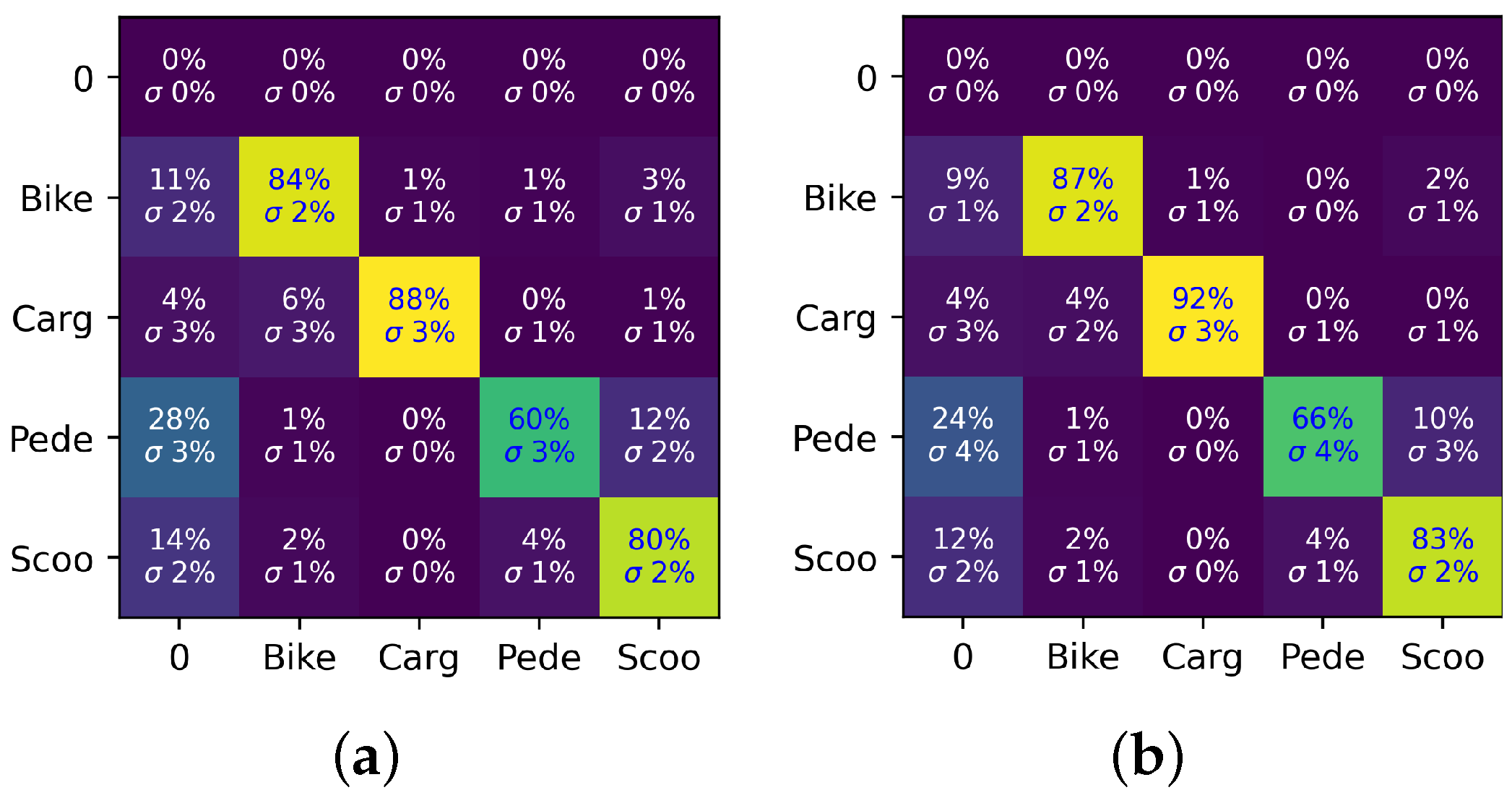

5. Results

6. Conclusions

- Training data: The most important next step in development is further testing in an outdoor environment to acquire more data.

- Self-learning: The current approach does not yet fully utilize the NM500’s capability for self-learning. This capability could allow the system to learn during operation and thus adapt independently to new environments.

- Multi-step classification: In this work, we optimized the performance by combining features from different sources into one feature vector. It could also be feasible to perform multiple classifications using different feature sets and to interpret the results in combination.

- Utilization of the NM500: The NM500 currently performs only the final classification; preprocessing, segmentation, and detection are still performed on the host system. It should be evaluated which of these tasks could also be performed by the NM500.

- Sensor fusion: The new generation of our prototype uses a low-power radar as a trigger for the infrared sensors. It should be investigated how the information from both sensors could be combined in a sensor fusion approach to further enhance the performance.
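The difference between the combined feature vector used in this work and the multi-step classification proposed above can be illustrated with a minimal sketch. It is not the NM500 implementation: the function names, the toy feature values, the class labels, the choice of k, and the L1 distance are all assumptions made for illustration, and the classifier is a plain software k-nearest-neighbour vote.

```python
from collections import Counter

import numpy as np


def combine_features(*feature_sets):
    """Concatenate per-sample feature arrays into one vector per sample."""
    return np.hstack([np.asarray(f, dtype=float) for f in feature_sets])


def knn_predict(train_x, train_y, query_x, k=3):
    """Plain k-nearest-neighbour vote using the L1 distance (assumed here)."""
    train_x = np.asarray(train_x, dtype=float)
    query_x = np.atleast_2d(np.asarray(query_x, dtype=float))
    predictions = []
    for q in query_x:
        dist = np.abs(train_x - q).sum(axis=1)
        nearest = np.argsort(dist, kind="stable")[:k]
        votes = Counter(train_y[i] for i in nearest)
        predictions.append(votes.most_common(1)[0][0])
    return predictions


def multi_step_predict(train_sets, train_y, query_sets, k=3):
    """Classify each feature set on its own, then majority-vote the labels."""
    per_set = [
        knn_predict(tx, train_y, qx, k)
        for tx, qx in zip(train_sets, query_sets)
    ]
    return [Counter(labels).most_common(1)[0][0] for labels in zip(*per_set)]


# Hypothetical toy data: two image features and one contour feature per sample.
image_feats = np.array([[0.10, 0.20], [0.90, 0.80], [0.15, 0.25], [0.85, 0.90]])
contour_feats = np.array([[1.0], [5.0], [1.2], [4.8]])
labels = ["bicycle", "e-scooter", "bicycle", "e-scooter"]  # hypothetical classes

query_image = np.array([[0.12, 0.22]])
query_contour = np.array([[1.1]])

# Variant 1: one classification on the combined feature vector.
combined_result = knn_predict(
    combine_features(image_feats, contour_feats),
    labels,
    combine_features(query_image, query_contour),
)

# Variant 2: one classification per feature set, results combined by vote.
multi_step_result = multi_step_predict(
    [image_feats, contour_feats], labels, [query_image, query_contour]
)
```

In the combined variant, features with a larger numeric range dominate the distance, so a real system would likely need to scale all features to a common range first. The multi-step variant avoids this at the cost of one classification per feature set.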

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- CPSC. Micromobility Products-Related Deaths, Injuries, and Hazard Patterns: 2017–2020. Available online: https://www.cpsc.gov/s3fs-public/Micromobility-Products-Related-Deaths-Injuries-and-Hazard-Patterns-2017-2020.pdf?VersionId=s8MfDNAVvHasSbqotb7UC.OCWYDcqena (accessed on 17 February 2023).

- Stahl, B.; Lange, R.; Apfelbeck, J. Evaluation of a concept for classification of micromobility vehicles based on thermal-infrared imaging and neuromorphic processing. In Proceedings of the SPIE Future Sensing Technologies 2021, Online, 15–19 November 2021; Kimata, M., Shaw, J.A., Valenta, C.R., Eds.; SPIE: Bellingham, WA, USA, 2021; p. 6.

- Ozan, E.; Searcy, S.; Geiger, B.C.; Vaughan, C.; Carnes, C.; Baird, C.; Hipp, A. State-of-the-Art Approaches to Bicycle and Pedestrian Counters: RP2020-39 Final Report; National Academy of Sciences: Washington, DC, USA, 2021.

- Nordback, K.; Marshall, W.E.; Janson, B.N.; Stolz, E. Estimating Annual Average Daily Bicyclists: Error and Accuracy. Proc. Transp. Res. Rec. 2013, 2339, 90–97.

- Klein, L.A. Traffic Flow Sensors; Society of Photo-Optical Instrumentation Engineers: Bellingham, WA, USA, 2020.

- Larson, T.; Wyman, A.; Hurwitz, D.S.; Dorado, M.; Quayle, S.; Shetler, S. Evaluation of dynamic passive pedestrian detection. Transp. Res. Interdiscip. Perspect. 2020, 8, 100268.

- Gajda, J.; Sroka, R.; Stencel, M.; Wajda, A.; Zeglen, T. A vehicle classification based on inductive loop detectors. In Proceedings of the 18th IEEE Instrumentation and Measurement Technology Conference. Rediscovering Measurement in the Age of Informatics (Cat. No.01CH 37188), Budapest, Hungary, 21–23 May 2001; pp. 460–464.

- Yang, H.; Ozbay, K.; Bartin, B. Investigating the performance of automatic counting sensors for pedestrian traffic data collection. In Proceedings of the 12th World Conference on Transport Research, Lisbon, Portugal, 11–15 July 2010; Volume 1115, pp. 1–11.

- Planck, M. Faksimile aus den Verhandlungen der Deutschen Physikalischen Gesellschaft 2 (1900) S. 237: Zur Theorie des Gesetzes der Energieverteilung im Normalspectrum. Phys. J. 1948, 4, 146–151.

- Prog. File:BlackbodySpectrum Loglog de.svg. Available online: https://commons.wikimedia.org/wiki/File:BlackbodySpectrum_loglog_de.svg (accessed on 22 January 2022).

- Einstein, A. Über einen die Erzeugung und Verwandlung des Lichtes betreffenden heuristischen Gesichtspunkt. Ann. Phys. 1905, 322, 132–148.

- General Vision Inc. NeuroMem Technology Reference Guide, 5.4 ed.; General Vision Inc.: Petaluma, CA, USA, 2019; Available online: https://www.general-vision.com/documentation/TM_NeuroMem_Technology_Reference_Guide.pdf (accessed on 15 March 2023).

- General Vision Inc. NeuroMem RBF Decision Space Mapping, 4.3 ed.; General Vision Inc.: Petaluma, CA, USA, 2019; Available online: http://www.general-vision.com/documentation/TM_NeuroMem_Decision_Space_Mapping.pdf (accessed on 15 March 2023).

- OpenCV: Contour Properties. Available online: https://docs.opencv.org/3.4/d1/d32/tutorial_py_contour_properties.html (accessed on 7 March 2023).

- Hu, M.K. Visual pattern recognition by moment invariants. IEEE Trans. Inf. Theory 1962, 8, 179–187.

- Lange, R.; Seitz, P. Solid-state time-of-flight range camera. IEEE J. Quantum Electron. 2001, 37, 390–397.

- Lange, R.; Böhmer, S.; Buxbaum, B. 11—CMOS-based optical time-of-flight 3D imaging and ranging. In High Performance Silicon Imaging, 2nd ed.; Durini, D., Ed.; Woodhead Publishing Series in Electronic and Optical Materials; Woodhead Publishing: Cambridge, UK, 2020; pp. 319–375.

| Duration | 1 h | 2 h | 3 h | 9 h | 12 h | 24 h | 1 w | 2 w | 4 w |
|---|---|---|---|---|---|---|---|---|---|
| Average error | 48% | 46% | 40% | 34% | 35% | 38% | 22% | 19% | 15% |

| Technology | Pro | Con | Accuracy |
|---|---|---|---|
| Pneumatic tube | easy set-up; long battery life | surface-mounted; only vehicles; tripping hazard | 85% [3] |
| Piezo cable | easy set-up; long battery life | surface-mounted; only vehicles | 76% [3] |
| Induction loop | high accuracy; long battery life | only vehicles; requires ground work | 87% [3] |
| IR spot | high accuracy; easy set-up; long battery life | shading; quantity only | 62% [3] |
| IR array | high accuracy; classification | mostly indoors; power consumption? | 88% [6] |
| Radar | easy set-up; side-mounted | shading; cost | |
| Camera | easy set-up; classification | power consumption; varying accuracy; privacy | 83–49% [3]; 83–26% [6] |

| | Global RBF | Global KNN | Local RBF | Local KNN | 10 × 20 RBF | 10 × 20 KNN | 12 × 19 RBF | 12 × 19 KNN | 13 × 19 RBF | 13 × 19 KNN |
|---|---|---|---|---|---|---|---|---|---|---|
| Median | 83 | 83 | 84 | 84 | 84 | 83 | 84 | 84 | 84 | 84 |
| Sigma | 1.2 | 1.5 | 1.0 | 1.4 | 0.8 | 1.2 | 1.0 | 1.6 | 0.9 | 1.0 |

| | Norm. RBF | Norm. KNN | Limited RBF | Limited KNN |
|---|---|---|---|---|
| Median | 52 | 59 | 59 | 66 |
| Sigma | 1.6 | 1.6 | 1.5 | 1.7 |

| | Linear RBF | Linear KNN | Part. Linear RBF | Part. Linear KNN | Hist. Eq. RBF | Hist. Eq. KNN |
|---|---|---|---|---|---|---|
| Median | 68 | 70 | 68 | 69 | 28 | 30 |
| Sigma | 1.3 | 1.2 | 1.0 | 1.6 | 3.7 | 3.9 |

| | Linear RBF | Linear KNN | Part. Linear RBF | Part. Linear KNN | Log Linear RBF | Log Linear KNN | Log P.L. RBF | Log P.L. KNN | Log H.E. RBF | Log H.E. KNN |
|---|---|---|---|---|---|---|---|---|---|---|
| Median | 32 | 34 | 36 | 38 | 61 | 61 | 58 | 60 | 66 | 66 |
| Sigma | 2.0 | 2.3 | 1.6 | 1.5 | 1.1 | 1.3 | 1.9 | 1.6 | 1.3 | 1.2 |

| | Linear RBF | Linear KNN | Part. Linear RBF | Part. Linear KNN | Hist. Eq. RBF | Hist. Eq. KNN | Reduced P.L. RBF | Reduced P.L. KNN | Reduced H.E. RBF | Reduced H.E. KNN |
|---|---|---|---|---|---|---|---|---|---|---|
| Median | 71 | 70 | 70 | 69 | 76 | 77 | 70 | 70 | 74 | 74 |
| Sigma | 1.1 | 1.7 | 1.4 | 1.3 | 1.4 | 1.7 | 1.4 | 1.6 | 1.6 | 1.4 |

| | (a) RBF | (a) KNN | (b) RBF | (b) KNN | (c) RBF | (c) KNN | (d) RBF | (d) KNN |
|---|---|---|---|---|---|---|---|---|
| Median | 77 | 77 | 57 | 59 | 86 | 87 | 87 | 87 |
| Sigma | 1.0 | 1.2 | 1.9 | 2.0 | 1.0 | 1.2 | 0.9 | 1.1 |

| | Scaled ROI RBF | Scaled ROI KNN | Histogram RBF | Histogram KNN | Properties RBF | Properties KNN | Moments RBF | Moments KNN | Combination RBF | Combination KNN |
|---|---|---|---|---|---|---|---|---|---|---|
| Median | 84 | 84 | 59 | 66 | 68 | 70 | 76 | 77 | 87 | 87 |
| Sigma | 1.0 | 1.4 | 1.5 | 1.7 | 1.3 | 1.2 | 1.4 | 1.7 | 0.9 | 1.1 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Stahl, B.; Apfelbeck, J.; Lange, R. Classification of Micromobility Vehicles in Thermal-Infrared Images Based on Combined Image and Contour Features Using Neuromorphic Processing. Appl. Sci. 2023, 13, 3795. https://doi.org/10.3390/app13063795
