An Efficient SMOTE-Based Deep Learning Model for Voice Pathology Detection

Abstract

1. Introduction

- This paper introduces efficient deep learning models for voice pathology detection (VPD) that apply oversampling methods, namely the synthetic minority oversampling technique (SMOTE), Borderline-SMOTE, and adaptive synthetic sampling (ADASYN), directly to the extracted feature parameters.

- The proposed combinations of oversampled Mel-frequency cepstral coefficients (MFCCs) and linear prediction coefficients (LPCs) with deep learning classifiers can efficiently distinguish pathological from normal voices.

- Several experiments on the Saarbrücken Voice Database (SVD) are conducted to verify the usefulness of the developed VPD system.

- The results show that the proposed classification system, which combines a convolutional neural network (CNN) with SMOTE-oversampled LPCs, is effective and reliable for monitoring voice disorders, reaching 98.89% accuracy on the SVD.
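The interpolation at the heart of SMOTE [27] fits in a few lines: each synthetic minority sample lies on the segment between a minority feature vector and one of its k nearest minority-class neighbors. The pure-Python sketch below is illustrative only. The function name `smote`, the defaults `k=5` and `seed=0`, Euclidean distance, and random choice of the base sample (the original SMOTE paper instead generates a fixed number of samples per minority point) are assumptions, not the authors' implementation. Borderline-SMOTE [29] and ADASYN [30] keep this interpolation step but change which minority samples serve as bases and how many synthetic samples each receives.

```python
import math
import random


def smote(minority, n_synthetic, k=5, seed=0):
    """Return n_synthetic new minority samples created by SMOTE interpolation.

    minority: list of equal-length feature vectors (e.g. per-recording MFCC or
    LPC vectors) belonging to the minority class.
    """
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)
    if k < 1:
        raise ValueError("SMOTE needs at least two minority samples")

    # k nearest minority-class neighbors of every minority sample (Euclidean).
    neighbors = []
    for i, x in enumerate(minority):
        dists = sorted((math.dist(x, y), j) for j, y in enumerate(minority) if j != i)
        neighbors.append([j for _, j in dists[:k]])

    synthetic = []
    for _ in range(n_synthetic):
        i = rng.randrange(len(minority))   # base sample, chosen at random
        j = rng.choice(neighbors[i])       # one of its k nearest neighbors
        gap = rng.random()                 # interpolation factor in [0, 1)
        synthetic.append([xi + gap * (yi - xi)
                          for xi, yi in zip(minority[i], minority[j])])
    return synthetic
```

For example, `smote(normal_vectors, n_synthetic=100)` returns 100 new vectors with the same length as the inputs; `normal_vectors` is a hypothetical list of feature vectors for the minority class.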

2. Materials and Methods

2.1. Database

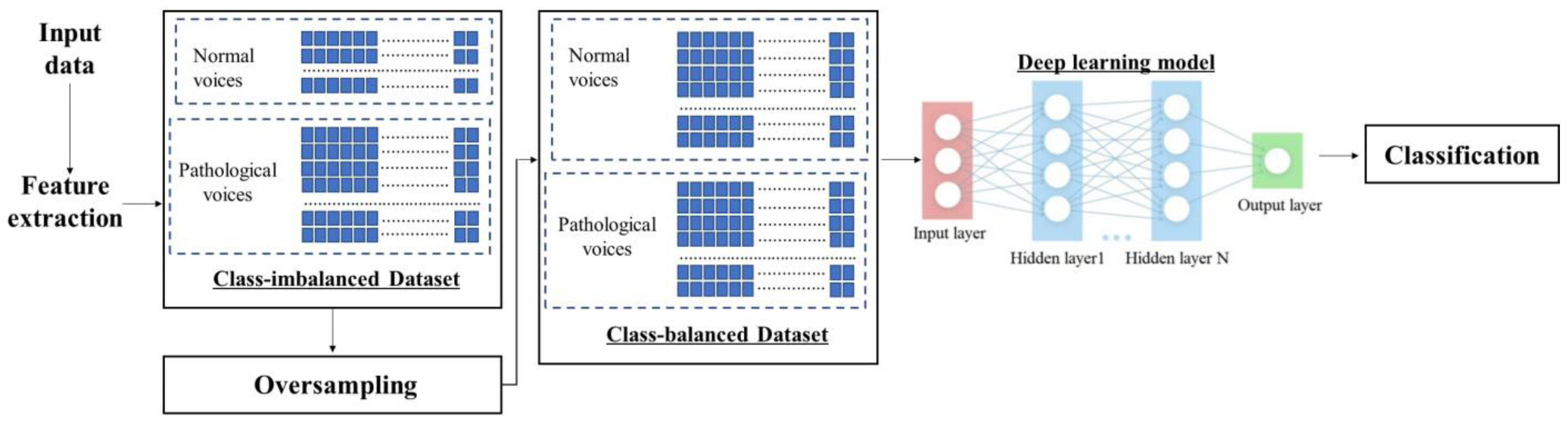

2.2. Overview of the Framework

2.3. Feature Extraction

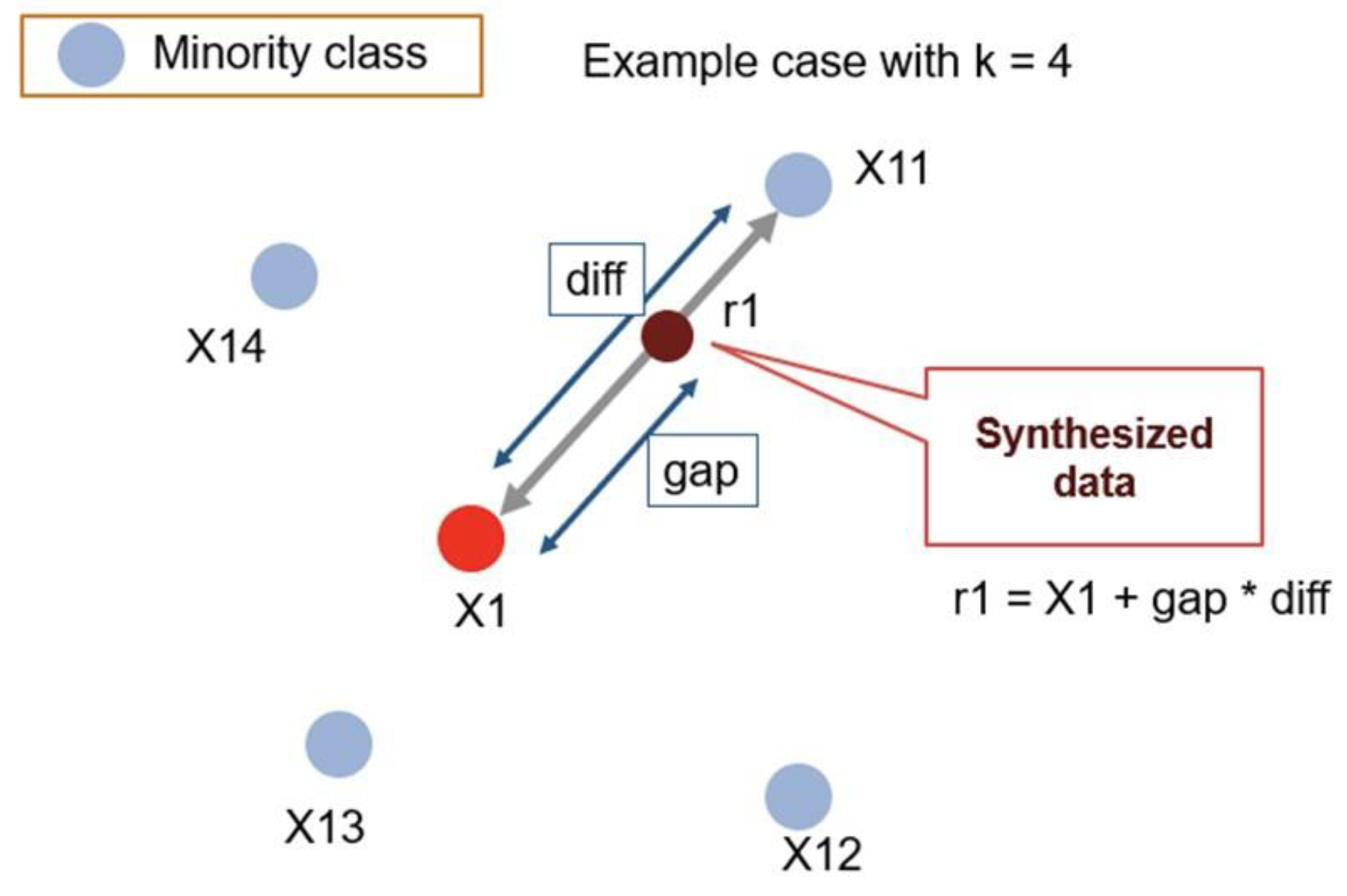

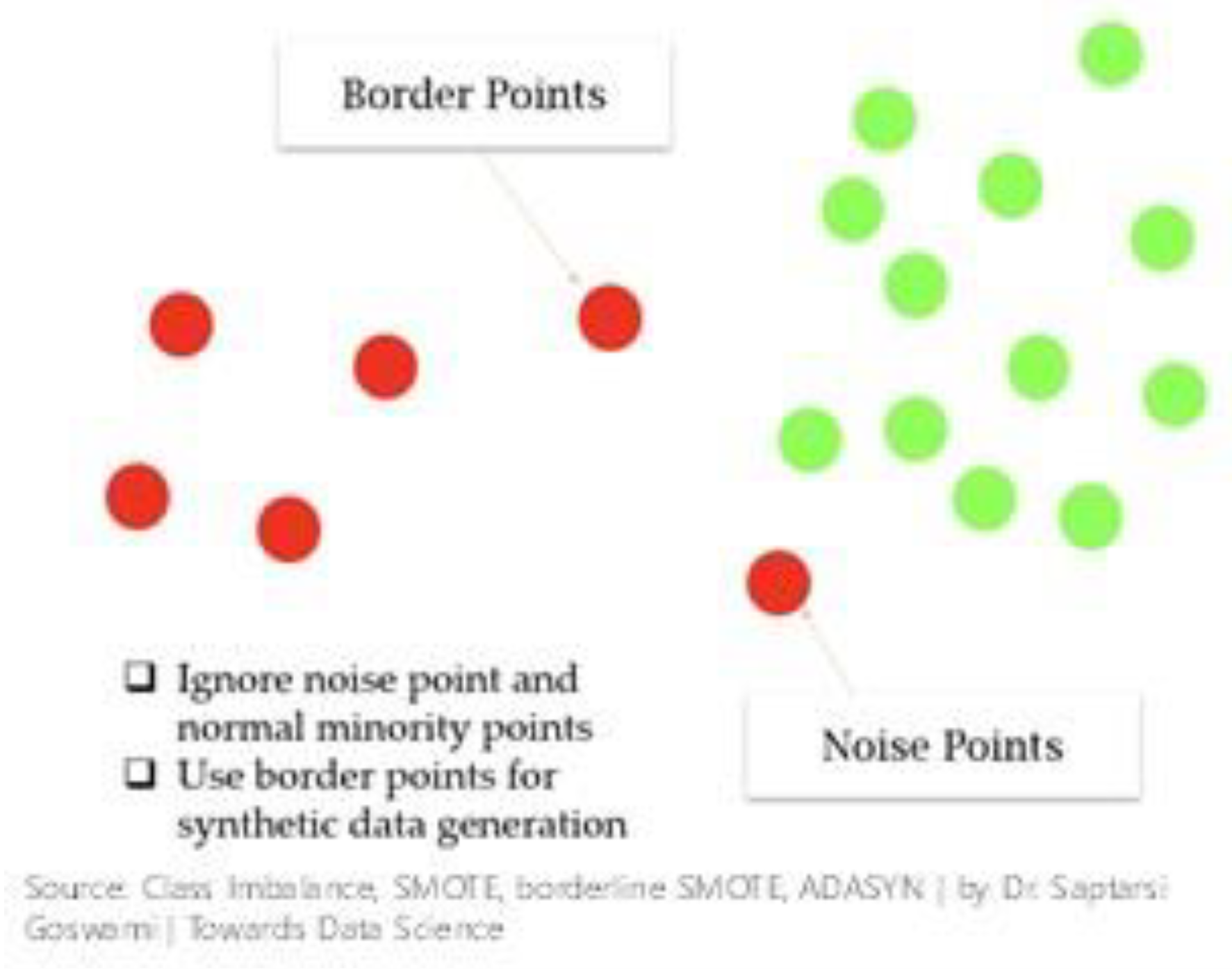
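LPC features are commonly computed per analysis frame with the autocorrelation method and the Levinson-Durbin recursion described by Makhoul [36]. The sketch below is a minimal pure-Python version for a single frame, assuming the predictor convention x_hat[n] = sum over k of a_k * x[n - k] and omitting windowing and pre-emphasis. The frame length and prediction order used in this paper are not given here, so any values passed in are placeholders, and the function names are hypothetical.

```python
def autocorrelation(frame, max_lag):
    """Biased autocorrelation r[0..max_lag] of one analysis frame."""
    n = len(frame)
    return [sum(frame[t] * frame[t - lag] for t in range(lag, n))
            for lag in range(max_lag + 1)]


def lpc(frame, order):
    """Linear prediction coefficients via the Levinson-Durbin recursion.

    Returns (a, err): a[k-1] multiplies x[n-k] in the predictor
    x_hat[n] = sum_k a[k-1] * x[n-k]; err is the final prediction-error energy.
    """
    r = autocorrelation(frame, order)
    if r[0] == 0:
        raise ValueError("silent frame: r[0] is zero")
    a = [0.0] * (order + 1)        # a[0] unused so indices match the math
    err = r[0]
    for i in range(1, order + 1):
        # Reflection coefficient of stage i.
        k = (r[i] - sum(a[j] * r[i - j] for j in range(1, i))) / err
        prev = a[:]
        a[i] = k
        # Update the lower-order coefficients from the previous stage.
        for j in range(1, i):
            a[j] = prev[j] - k * prev[i - j]
        err *= 1.0 - k * k         # error energy shrinks at every stage
    return a[1:], err
```

The recursion solves the Toeplitz normal equations in O(p^2) operations for order p instead of inverting the autocorrelation matrix directly.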

2.4. Oversampling Methods
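ADASYN [30], one of the three oversampling methods compared in this paper, differs from SMOTE mainly in how many synthetic samples each minority point receives. For each minority sample x_i it computes the fraction r_i of majority-class points among its k nearest neighbors and assigns g_i = (r_i / sum_j r_j) * G samples, where G = (number of majority samples - number of minority samples) * beta. The sketch below computes only this allocation; the function name, the defaults k = 5 and beta = 1, Euclidean distance, and rounding of g_i to integers are assumptions, and the interpolation step itself is the same as in SMOTE.

```python
import math


def adasyn_allocation(minority, majority, k=5, beta=1.0):
    """Per-sample synthetic counts g_i following the ADASYN weighting.

    minority, majority: lists of equal-length feature vectors.
    beta: desired balance level (1.0 = fully balanced).
    """
    g_total = (len(majority) - len(minority)) * beta   # G in He et al.
    samples = [(x, 0) for x in minority] + [(x, 1) for x in majority]
    ratios = []
    for i, x in enumerate(minority):
        # k nearest neighbors among all other samples, both classes included.
        dists = sorted(
            ((math.dist(x, y), label) for j, (y, label) in enumerate(samples) if j != i),
            key=lambda t: t[0],
        )
        nearest = dists[:k]
        ratios.append(sum(label for _, label in nearest) / len(nearest))  # r_i
    total = sum(ratios)
    if total == 0:
        raise ValueError("no minority sample has majority-class neighbors")
    # g_i = (r_i / sum r) * G, rounded; rounded counts need not sum to G exactly.
    return [round(r / total * g_total) for r in ratios]
```

Minority points surrounded by majority samples (hard to learn) therefore receive more synthetic neighbors than points deep inside their own class.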

3. Results

3.1. Experimental Setup

3.2. Model Evaluation Measures

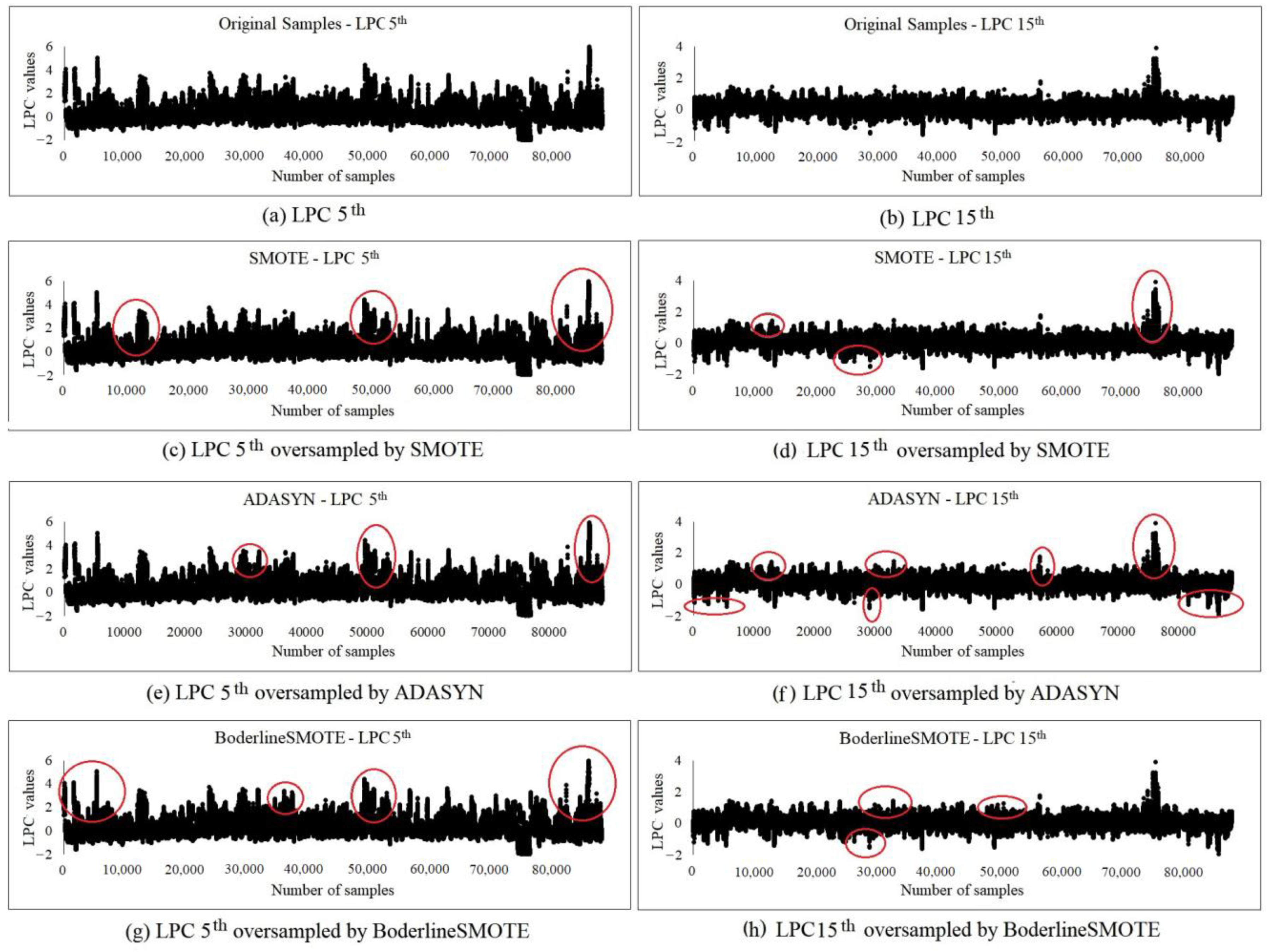

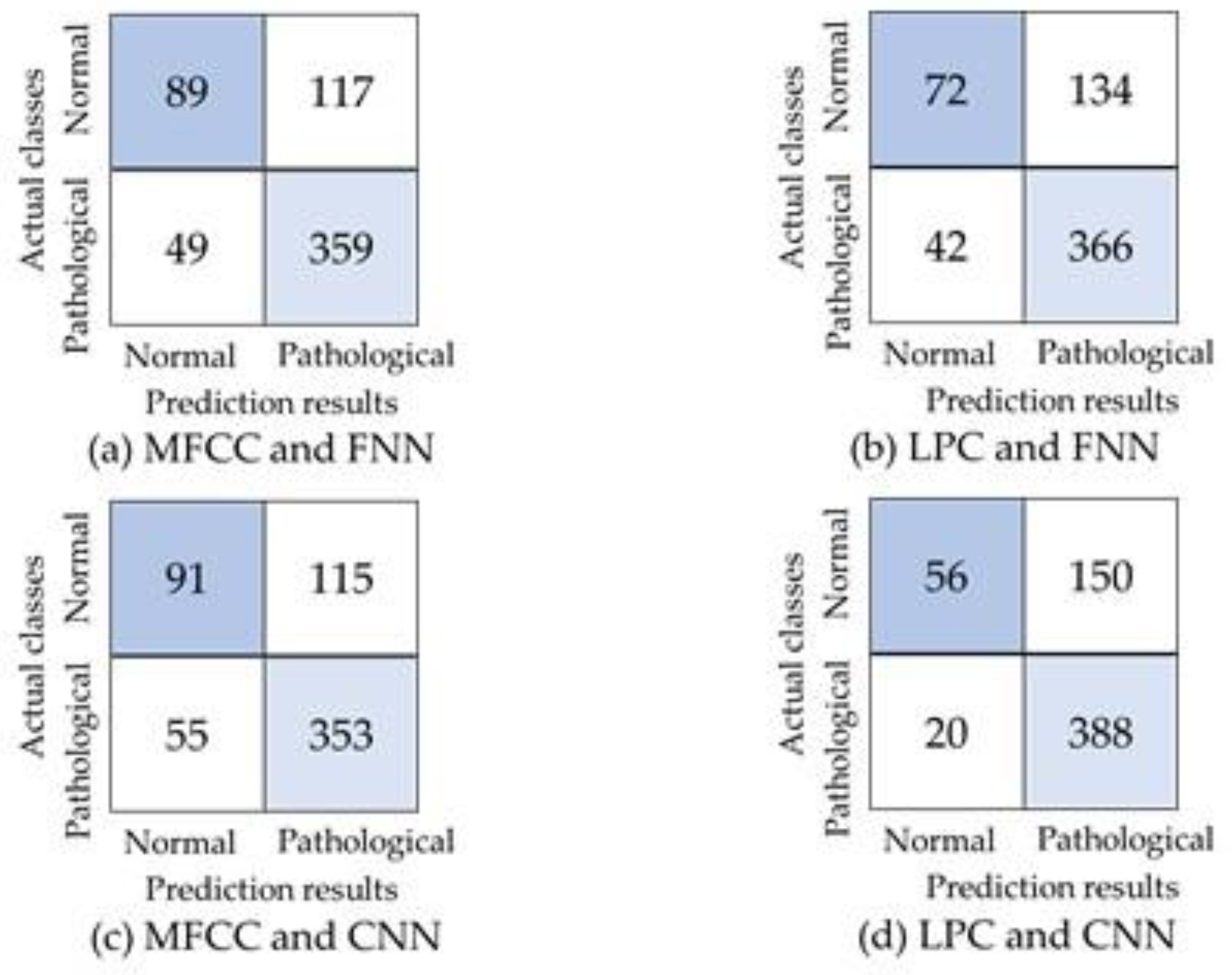

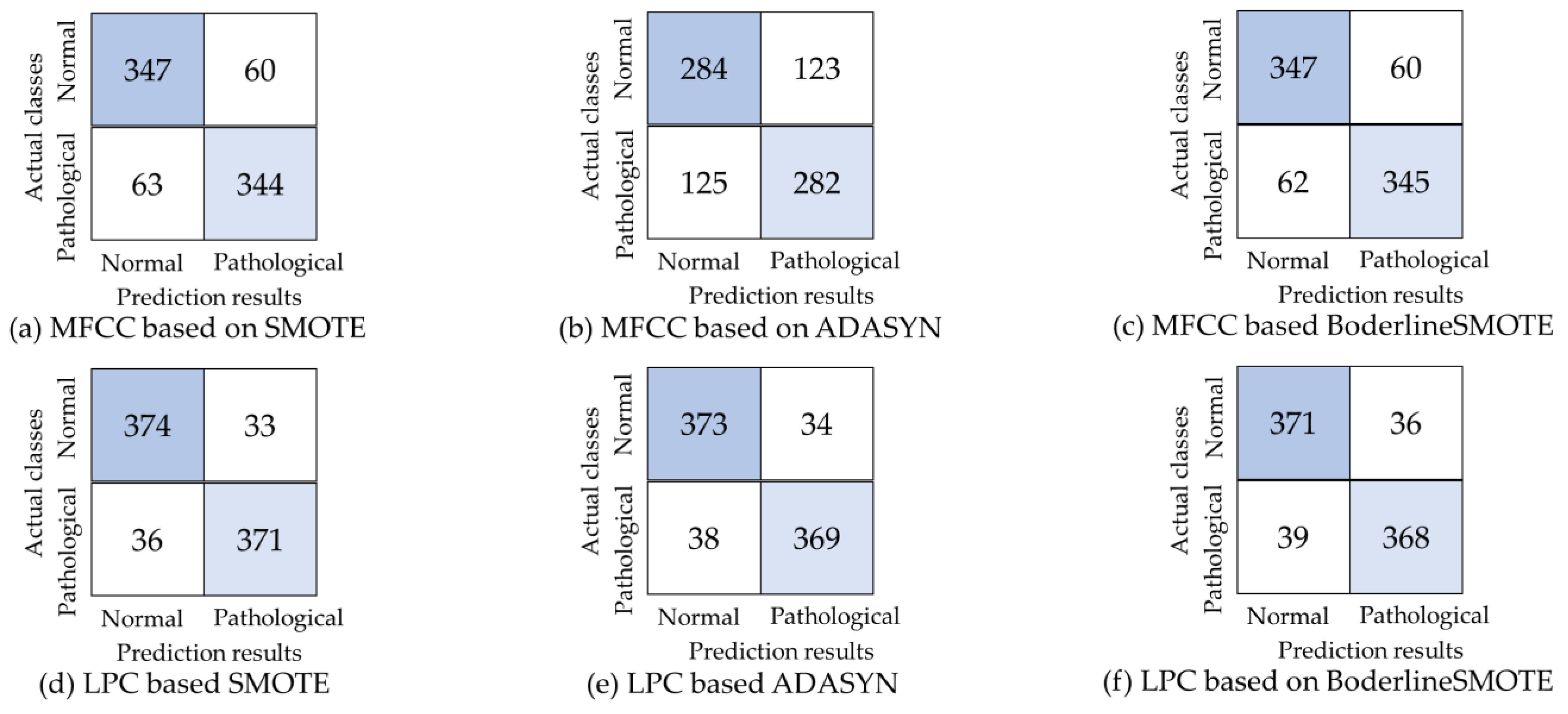

3.3. Oversampling Method Comparison

3.4. Experimental Results and Analysis

3.5. Comparison with Existing Techniques

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Miliaresi, I.; Poutos, K.; Pikrakis, A. Combining acoustic features and medical data in deep learning networks for voice pathology classification. In Proceedings of the 2020 28th European Signal Processing Conference (EUSIPCO), Amsterdam, The Netherlands, 18–21 January 2021; pp. 1190–1194.

2. Jinyang, Q.; Denghuang, Z.; Ziqi, F.; Di, W.; Yishen, X.; Zhi, T. Pathological Voice Feature Generation Using Generative Adversarial Network. In Proceedings of the 2021 International Conference on Sensing, Measurement & Data Analytics in the era of Artificial Intelligence (ICSMD), Nanjing, China, 21–23 October 2021.

3. Khan, M.A.; Kim, J. Toward Developing Efficient Conv-AE-Based Intrusion Detection System Using Heterogeneous Dataset. Electronics 2020, 9, 1771.

4. Al-Dhief, F.T.; Latiff, N.M.A.; Malik, N.N.N.A.; Salim, N.S.; Baki, M.M.; Albadr, M.A.A.; Mohammed, M.A. A Survey of Voice Pathology Surveillance Systems Based on Internet of Things and Machine Learning Algorithms. IEEE Access 2020, 8, 64514–64533.

5. Mohammed, M.A.; Abdulkareem, K.H.; Mostafa, S.A.; Khanapi Abd Ghani, M.; Maashi, M.S.; Garcia-Zapirain, B.; Oleagordia, I.; Alhakami, H.; Al-Dhief, F.T. Voice Pathology Detection and Classification Using Convolutional Neural Network Model. Appl. Sci. 2020, 10, 3723.

6. Verde, L.; de Pietro, G.; Ghoneim, A.; Alrashoud, M.; Al-Mutib, K.N.; Sannino, G. Exploring the Use of Artificial Intelligence Techniques to Detect the Presence of Coronavirus Covid-19 Through Speech and Voice Analysis. IEEE Access 2021, 9, 65750–65757.

7. Zhang, T.; Wu, Y.; Shao, Y.; Shi, M.; Geng, Y.; Liu, G. A Pathological Multi-Vowels Recognition Algorithm Based on LSP Feature. IEEE Access 2019, 7, 58866–58875.

8. Alhussein, M.; Muhammad, G. Automatic Voice Pathology Monitoring Using Parallel Deep Models for Smart Healthcare. IEEE Access 2019, 7, 46474–46479.

9. Verde, L.; de Pietro, G.; Alrashoud, M.; Ghoneim, A.; Al-Mutib, K.N.; Sannino, G. Leveraging Artificial Intelligence to Improve Voice Disorder Identification Through the Use of a Reliable Mobile App. IEEE Access 2019, 7, 124048–124054.

10. Verde, L.; de Pietro, G.; Sannino, G. Voice Disorder Identification by Using Machine Learning Techniques. IEEE Access 2018, 6, 16246–16255.

11. Massachusetts Eye and Ear Infirmary. Voice Disorders Database, Version 1.03 (CD-ROM); Kay Elemetrics Corporation: Lincoln Park, NJ, USA, 1994.

12. Barry, W.J.; Pützer, M. Saarbrücken Voice Database; Institute of Phonetics, Univ. of Saarland: Saarbrücken, Germany, 2007; Available online: http://www.stimmdatenbank.coli.uni-saarland.de/2007 (accessed on 29 December 2022).

13. Islam, R.; Tarique, M.; Abdel-Raheem, E. A Survey on Signal Processing Based Pathological Voice Detection Techniques. IEEE Access 2020, 8, 66749–66776.

14. Reddy, M.K.; Alku, P. A Comparison of Cepstral Features in the Detection of Pathological Voices by Varying the Input and Filterbank of the Cepstrum Computation. IEEE Access 2021, 9, 135953–135963.

15. Hemmerling, D.; Skalski, A.; Gajda, J. Voice data mining for laryngeal pathology assessment. Comput. Biol. Med. 2016, 9, 270–276.

16. Naranjo, L.; Perez, C.J.; Martin, J.; Campos-Roca, Y. A two-stage variable selection and classification approach for Parkinson’s disease detection by using voice recording replications. Comput. Methods Prog. Biomed. 2021, 142, 147–156.

17. Wu, Y.; Zhou, C.; Fan, Z.; Wu, D.; Zhang, X.; Tao, Z. Investigation and Evaluation of Glottal Flow Waveform for Voice Pathology Detection. IEEE Access 2021, 9, 30–44.

18. Tuncer, T.; Dogan, S.; Özyurt, F.; Belhaouari, S.B.; Bensmail, H. Novel Multi Center and Threshold Ternary Pattern Based Method for Disease Detection Method Using Voice. IEEE Access 2020, 8, 84532–84540.

19. Chicco, D.; Jurman, G. The advantages of the Matthews correlation coefficient (MCC) over F1 score and accuracy in binary classification evaluation. BMC Genom. 2020, 21, 6.

20. Vandewiele, G.; Dehaene, I.; Kovács, G.; Sterckx, L.; Janssens, O.; Ongenae, F.; de Backere, F.; de Turck, F.; Roelens, K.; Decruyenaere, J.; et al. Overly optimistic prediction results on imbalanced data: A case study of flaws and benefits when applying over-sampling. Artif. Intell. Med. 2020, 111, 101987–102003.

21. Jing, X.Y.; Zhang, X.; Zhu, X.; Wu, F.; You, X.; Gao, Y.; Shan, S.; Yang, J.Y. Multiset feature learning for highly imbalanced data classification. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 139–156.

22. Chui, K.T.; Lytras, M.; Vasant, P. Combined Generative Adversarial Network and Fuzzy C-Means Clustering for MultiClass Voice Disorder Detection with an Imbalanced Dataset. Appl. Sci. 2020, 10, 4571.

23. Fan, Z.; Qian, J.; Sun, B.; Wu, D.; Xu, Y.; Tao, Z. Modeling Voice Pathology Detection Using Imbalanced Learning. In Proceedings of the 2020 International Conference on Sensing, Measurement & Data Analytics in the era of Artificial Intelligence (ICSMD), Xi’an, China, 15–17 October 2020; pp. 330–334.

24. Fan, Z.; Wu, Y.; Zhou, C.; Zhang, X.; Tao, Z. Class-Imbalanced Voice Pathology Detection and Classification Using Fuzzy Cluster Oversampling Method. Appl. Sci. 2021, 10, 3450.

25. Esmaeilpour, M.; Cardinal, P.; Koerich, A.L. Unsupervised Feature Learning for Environmental Sound Classification Using Weighted Cycle-Consistent Generative Adversarial Network. Appl. Soft Comput. 2020, 86, 105912–105943.

26. Trinh, N.H.; O’Brien, D. Semi-Supervised Learning with Generative Adversarial Networks for Pathological Speech Classification. In Proceedings of the 2020 31st Irish Signals and Systems Conference (ISSC), Letterkenny, Ireland, 11–12 June 2020; pp. 1–5.

27. Chawla, N.V.; Bowyer, K.W.; Kegelmeyer, W.P. SMOTE: Synthetic minority over-sampling technique. J. Artif. Intell. Res. 2002, 16, 321–357.

28. Blagus, R.; Lusa, L. SMOTE for high-dimensional class-imbalanced data. BMC Bioinform. 2013, 14, 106–121.

29. Han, H.; Wang, W.Y.; Mao, B.H. Borderline-SMOTE: A New Over-Sampling Method in Imbalanced Data Sets Learning. In Proceedings of the International Conference on Intelligent Computing, Hefei, China, 23–26 August 2005; pp. 330–334.

30. He, H.; Bai, Y.; Garcia, E.A.; Li, S. ADASYN: Adaptive synthetic sampling approach for imbalanced learning. In Proceedings of the 2008 IEEE International Joint Conference on Neural Networks, Hong Kong, China, 1–8 June 2008; pp. 1322–1328.

31. Dong, Y.; Wang, X. A New Over-Sampling Approach: Random-SMOTE for Learning from Imbalanced Data Sets. In Proceedings of the International Conference on Knowledge Science, Engineering and Management, Dalian, China, 10–12 August 2013; pp. 10–12.

32. Douzas, G.; Bacao, F.; Last, F. Improving imbalanced learning through a heuristic oversampling method based on k-means and SMOTE. Inf. Sci. 2018, 465, 1–20.

33. Lin, W.C.; Tsai, C.F.; Hu, Y.H.; Jhang, J.S. Clustering-based undersampling in class-imbalanced data. Inf. Sci. 2017, 409–410, 17–26.

34. Gautheron, L.; Habrard, A.; Morvant, E.; Sebban, M. Metric Learning from Imbalanced Data with Generalization Guarantees. Pattern Recognit. Lett. 2020, 133, 298–304.

35. Alim, S.A.; Alang Rashid, N.K. Some Commonly Used Speech Feature Extraction Algorithms. Available online: https://www.intechopen.com/chapters/63970 (accessed on 29 December 2022).

36. Makhoul, J. Linear Prediction: A Tutorial Review. Proc. IEEE 1975, 63, 561–580.

37. Kumar, S. Real-time implementation and performance evaluation of speech classifiers in speech analysis-synthesis. ETRI J. 2020, 43, 82–94.

38. Lee, J.Y. Experimental Evaluation of Deep Learning Methods for an Intelligent Pathological Voice Detection System Using the Saarbruecken Voice Database. Appl. Sci. 2021, 11, 7149.

39. Kadiri, S.R.; Alku, P. Analysis and Detection of Pathological Voice Using Glottal Source Features. IEEE J. Sel. Top. Signal Process. 2020, 14, 367–379.

40. Amami, R.; Smiti, A. An incremental method combining density clustering and support vector machines for voice pathology detection. Comput. Electr. Eng. 2017, 57, 257–265.

| | Imbalanced Class | Balanced Class |
|---|---|---|
| Number of normal voices | 687 | 1354 |
| Number of pathological voices | 1354 | 1354 |
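Balancing the classes in this table means the oversampler must add 1354 - 687 = 667 synthetic normal-voice feature vectors while leaving the pathological class unchanged, starting from an imbalance ratio of about 1.97:1. The small helper below (a hypothetical name, not from the paper) makes that arithmetic explicit for any number of classes.

```python
def synthetic_needed(class_counts):
    """Synthetic samples per class needed so every class matches the largest one."""
    target = max(class_counts.values())
    return {label: target - n for label, n in class_counts.items()}


counts = {"normal": 687, "pathological": 1354}   # imbalanced column of the table
print(synthetic_needed(counts))                  # {'normal': 667, 'pathological': 0}
print(round(counts["pathological"] / counts["normal"], 2))   # 1.97
```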

| Main Hyperparameters | CNN | FNN |
|---|---|---|
| Activation function | ReLU | ReLU |
| Kernel size | (3, 3) | – |
| Optimizer | SGD + momentum | SGD + momentum |
| Number of epochs | 100 | 100 |
| Loss function | Cross-entropy | Cross-entropy |
| Dropout | 0.3 | – |
| Pooling window | Max pooling (2, 2) | – |
| Neurons in the dense layer | 512 | – |
| Learning rate | 0.001 | 0.00001 |
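Both networks are trained with SGD plus momentum on a cross-entropy loss for 100 epochs, with learning rates of 0.001 (CNN) and 0.00001 (FNN). The momentum coefficient is not listed, so the pure-Python sketch below assumes 0.9 and shows the classical momentum update v <- mu * v - lr * grad, w <- w + v on a toy logistic model with binary cross-entropy. It uses full-batch gradients for brevity (stochastic training would use mini-batches) and is not the authors' network or training code; all names are hypothetical.

```python
import math


def bce_loss_and_grad(w, b, data):
    """Mean binary cross-entropy of a logistic model and its gradient."""
    loss, gw, gb = 0.0, [0.0] * len(w), 0.0
    for x, y in data:
        z = sum(wi * xi for wi, xi in zip(w, x)) + b
        p = 1.0 / (1.0 + math.exp(-z))        # sigmoid (fine for small toy inputs)
        err = p - y                           # dLoss/dz for sigmoid + cross-entropy
        p = min(max(p, 1e-12), 1.0 - 1e-12)   # guard against log(0)
        loss -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
        gw = [g + err * xi for g, xi in zip(gw, x)]
        gb += err
    n = len(data)
    return loss / n, [g / n for g in gw], gb / n


def train_momentum(data, lr=0.001, momentum=0.9, epochs=100):
    """Gradient descent with classical momentum; returns weights and loss history."""
    w, b = [0.0] * len(data[0][0]), 0.0
    vw, vb = [0.0] * len(w), 0.0              # velocity terms
    history = []
    for _ in range(epochs):
        loss, gw, gb = bce_loss_and_grad(w, b, data)
        history.append(loss)
        vw = [momentum * v - lr * g for v, g in zip(vw, gw)]
        vb = momentum * vb - lr * gb
        w = [wi + v for wi, v in zip(w, vw)]
        b += vb
    return w, b, history
```

The default `lr=0.001` mirrors the CNN entry in the table; on toy data a larger rate such as `train_momentum(data, lr=0.1)` makes the loss decrease visible within 100 epochs.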

| Actual Class | Predicted Positive | Predicted Negative |
|---|---|---|
| Positive class | TP | FN |
| Negative class | FP | TN |
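The evaluation measures reported in the following tables can be computed directly from these four counts. The sketch assumes the usual definitions: recall = TP/(TP + FN), specificity = TN/(TN + FP), precision = TP/(TP + FP), F1 as the harmonic mean of precision and recall, and the G value as the geometric mean sqrt(recall * specificity), which is consistent with the G values tabulated in this paper. Which voice class is treated as positive is not stated here, the function name is hypothetical, and the example counts are made up.

```python
import math


def vpd_metrics(tp, fn, fp, tn):
    """Evaluation measures from a binary confusion matrix."""
    recall = tp / (tp + fn)                    # true-positive rate
    specificity = tn / (tn + fp)               # true-negative rate
    precision = tp / (tp + fp)
    f1 = 2 * precision * recall / (precision + recall)
    g_value = math.sqrt(recall * specificity)  # geometric mean of the two rates
    accuracy = (tp + tn) / (tp + fn + fp + tn)
    return {"recall": recall, "specificity": specificity, "precision": precision,
            "f1": f1, "g_value": g_value, "accuracy": accuracy}


print(vpd_metrics(tp=80, fn=20, fp=10, tn=90))  # made-up counts for illustration
```

Unlike accuracy, the G value collapses toward zero whenever either class is poorly recognized, which is why it is informative on imbalanced data.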

| Model | Feature | Recall | Specificity | G value | F1 value |
|---|---|---|---|---|---|
| FNN | MFCC | 0.43 | 0.88 | 0.62 | 0.51 |
| FNN | LPC | 0.35 | 0.90 | 0.56 | 0.45 |
| CNN | MFCC | 0.44 | 0.87 | 0.62 | 0.51 |
| CNN | LPC | 0.27 | 0.95 | 0.51 | 0.40 |
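As a consistency check on the table above, the geometric mean of each recall/specificity pair reproduces the tabulated G value to within two-decimal rounding. The values below are copied from the table; reading the G value as sqrt(recall * specificity) is an inference from this agreement rather than a definition stated in this section, and the helper name is hypothetical. The check also makes the imbalance visible: recall sits far below specificity in every row.

```python
import math

# (recall, specificity, reported G value) copied from the table above.
TABLE = {
    ("FNN", "MFCC"): (0.43, 0.88, 0.62),
    ("FNN", "LPC"): (0.35, 0.90, 0.56),
    ("CNN", "MFCC"): (0.44, 0.87, 0.62),
    ("CNN", "LPC"): (0.27, 0.95, 0.51),
}


def g_mismatches(table):
    """Rows whose reported G value differs from sqrt(recall * specificity)."""
    bad = []
    for key, (recall, spec, g_reported) in table.items():
        g = math.sqrt(recall * spec)
        if abs(g - g_reported) > 0.005:    # tolerance of two-decimal rounding
            bad.append((key, round(g, 4), g_reported))
    return bad


print(g_mismatches(TABLE))  # prints []
```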

| Model | Feature | Metric | SMOTE | ADASYN | Borderline-SMOTE |
|---|---|---|---|---|---|
| FNN | MFCC | Recall | 0.85 | 0.70 | 0.85 |
| | | Specificity | 0.85 | 0.69 | 0.84 |
| | | G value | 0.85 | 0.69 | 0.85 |
| | | F1 value | 0.85 | 0.69 | 0.85 |
| | LPC | Recall | 0.92 | 0.92 | 0.91 |
| | | Specificity | 0.91 | 0.91 | 0.90 |
| | | G value | 0.91 | 0.91 | 0.90 |
| | | F1 value | 0.91 | 0.91 | 0.90 |
| CNN | MFCC | Recall | 0.88 | 0.80 | 0.82 |
| | | Specificity | 0.88 | 0.78 | 0.81 |
| | | G value | 0.88 | 0.79 | 0.81 |
| | | F1 value | 0.88 | 0.80 | 0.82 |
| | LPC | Recall | 1.00 | 0.95 | 0.99 |
| | | Specificity | 0.97 | 0.92 | 0.98 |
| | | G value | 0.98 | 0.94 | 0.98 |
| | | F1 value | 0.99 | 0.93 | 0.98 |

| Work | Feature | Database | Methodology | Accuracy |
|---|---|---|---|---|
| [2] | MFCC | SVD | BPGAN and GAN | 87.60% |
| [24] | MFCC | MEEI | FC-SMOTE and RF | 100% |
| [24] | MFCC | SVD | FC-SMOTE and CNN | 90% |
| [22] | – | SVD | IFCM and CGAN | 95.15% |
| [26] | Spectrogram | Spanish Parkinson’s Disease Dataset (SPDD) | Semi-supervised GAN | 96.63% |
| Proposed method | MFCC and LPC | SVD | SMOTE-oversampled LPCs with CNN | 98.89% |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lee, J.-N.; Lee, J.-Y. An Efficient SMOTE-Based Deep Learning Model for Voice Pathology Detection. Appl. Sci. 2023, 13, 3571. https://doi.org/10.3390/app13063571
