A Study on the Super Resolution Combining Spatial Attention and Channel Attention

Abstract

1. Introduction

2. Related Work

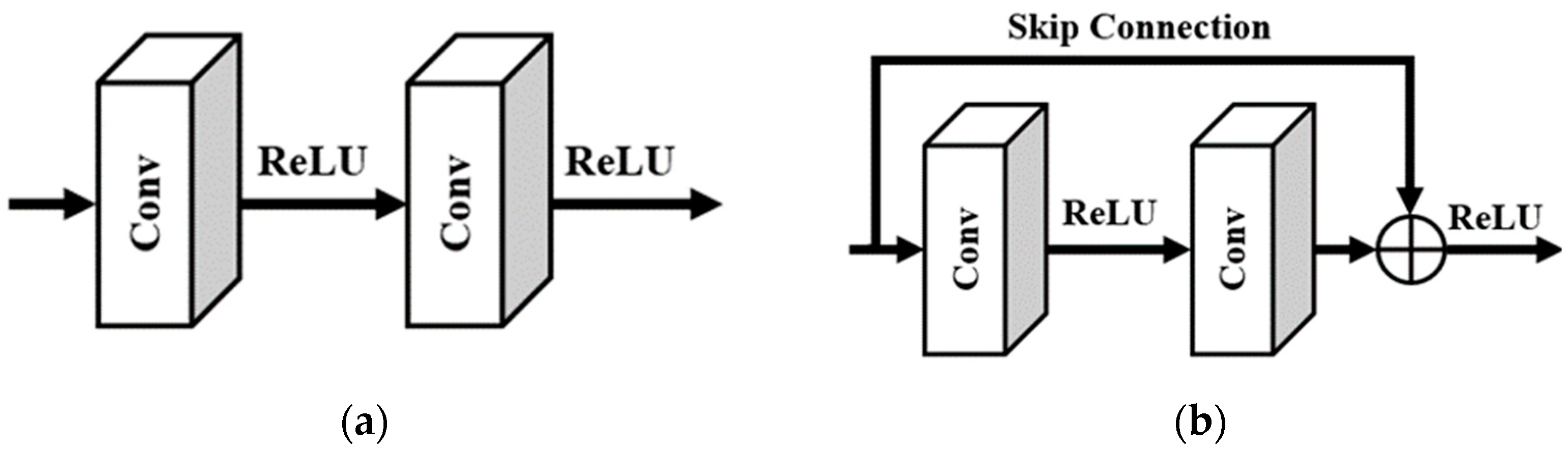

2.1. ResNet

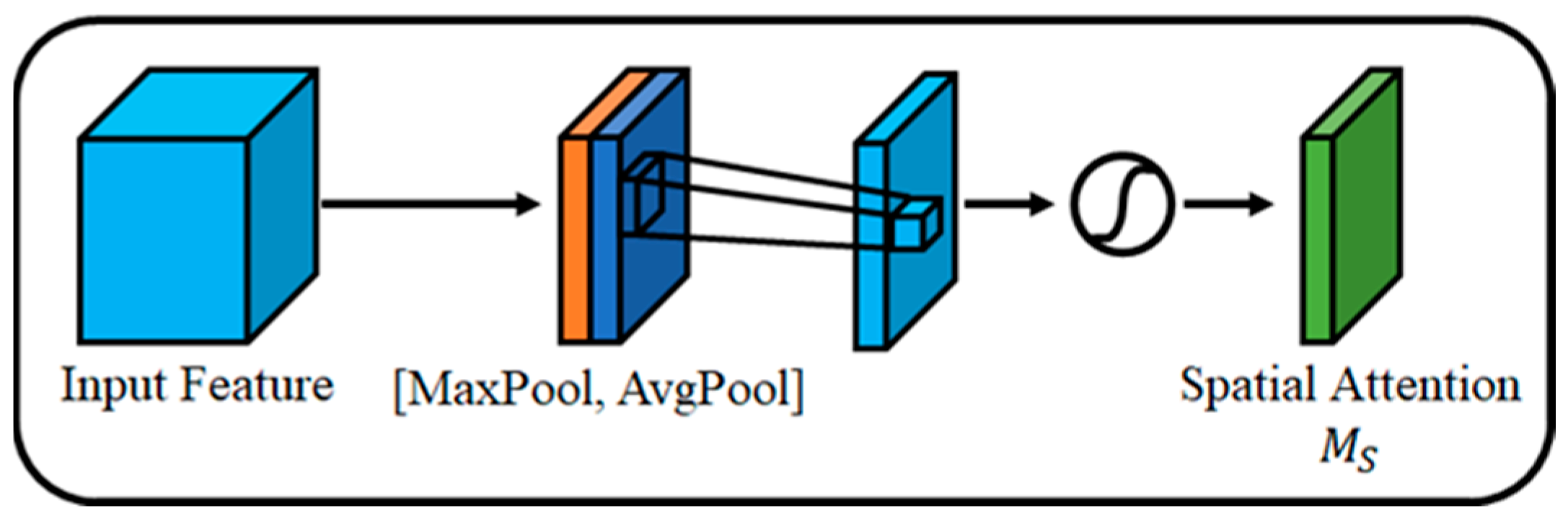

2.2. Attention Mechanism

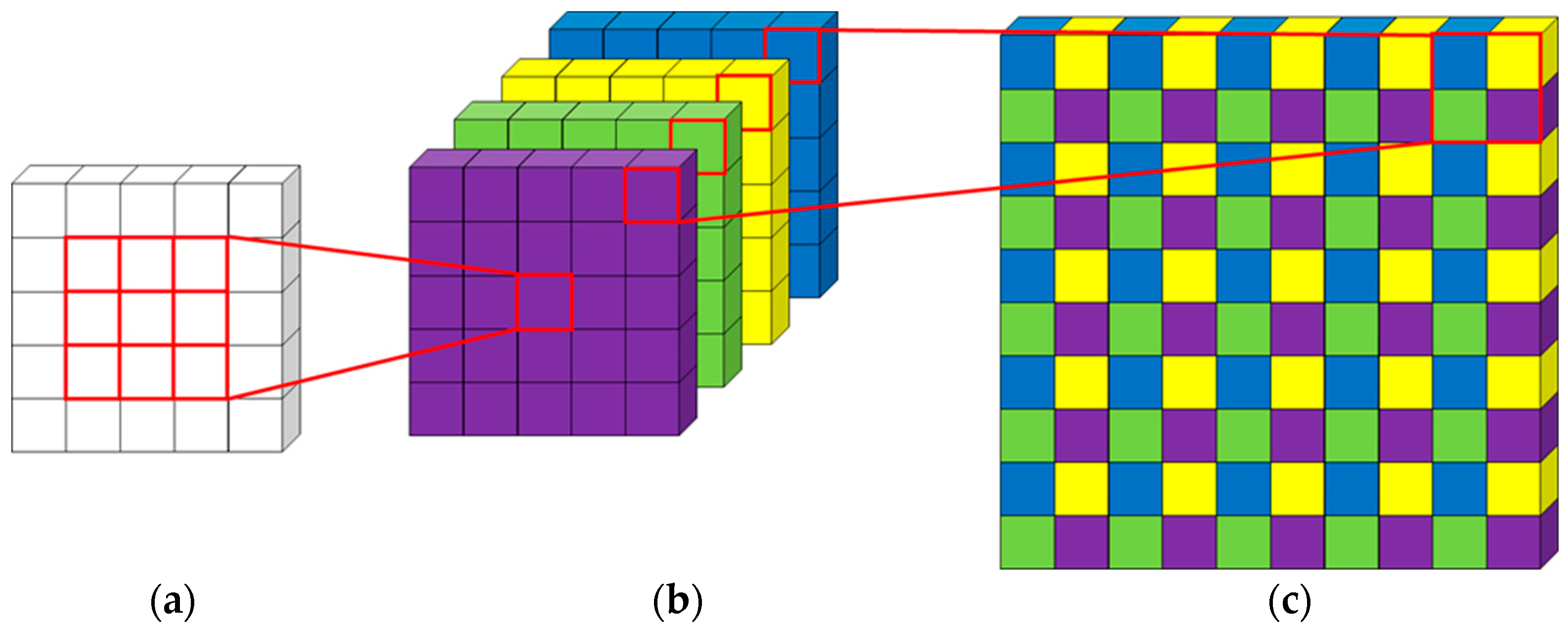

2.3. Sub-Pixel Convolution

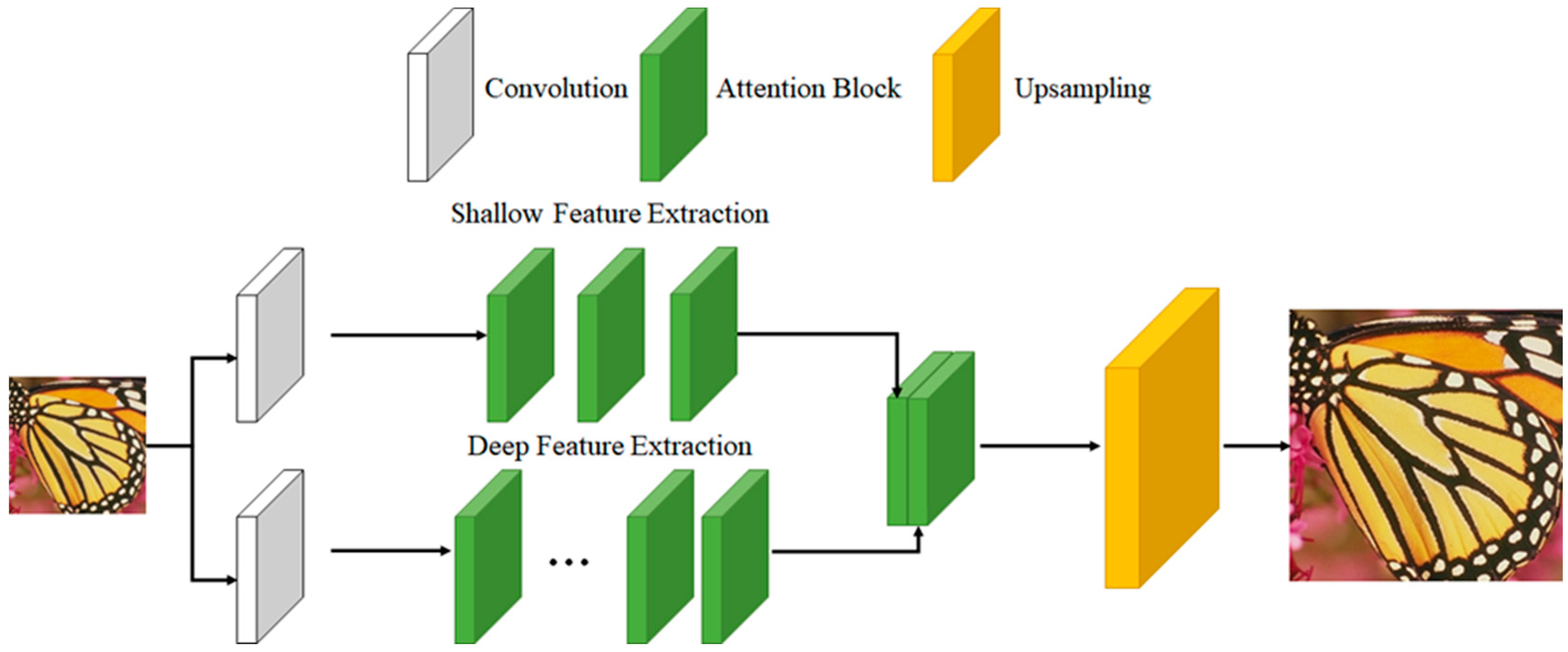
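As a rough illustration of the rearrangement step that sub-pixel convolution relies on, the sketch below moves values from the channel dimension into space, turning a `(C*r*r, H, W)` feature stack into a `(C, H*r, W*r)` map. It is a minimal pure-Python sketch, not the authors' implementation: the function name `pixel_shuffle`, the nested-list layout, and the channel ordering (the one commonly used by deep learning frameworks) are assumptions, and the convolution that would produce the input channels is omitted.

```python
def pixel_shuffle(x, r):
    """Rearrange a (C*r*r, H, W) nested list into (C, H*r, W*r).

    Assumed channel ordering: input channel c*r*r + i*r + j supplies the
    output pixel at row offset i and column offset j of output channel c.
    """
    channels_in = len(x)
    if channels_in % (r * r) != 0:
        raise ValueError("channel count must be divisible by r*r")
    c_out = channels_in // (r * r)
    h, w = len(x[0]), len(x[0][0])
    out = [[[0] * (w * r) for _ in range(h * r)] for _ in range(c_out)]
    for c in range(c_out):
        for i in range(r):
            for j in range(r):
                src = x[c * r * r + i * r + j]
                for row in range(h):
                    for col in range(w):
                        out[c][row * r + i][col * r + j] = src[row][col]
    return out


# Four 1x1 feature maps (hypothetical values) become one 2x2 map for r = 2.
lr_features = [[[1]], [[2]], [[3]], [[4]]]
print(pixel_shuffle(lr_features, 2))
```

Because the rearrangement has no learnable parameters, all of the learning in such an upsampling layer would sit in the convolution that precedes it.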

3. Proposed Method

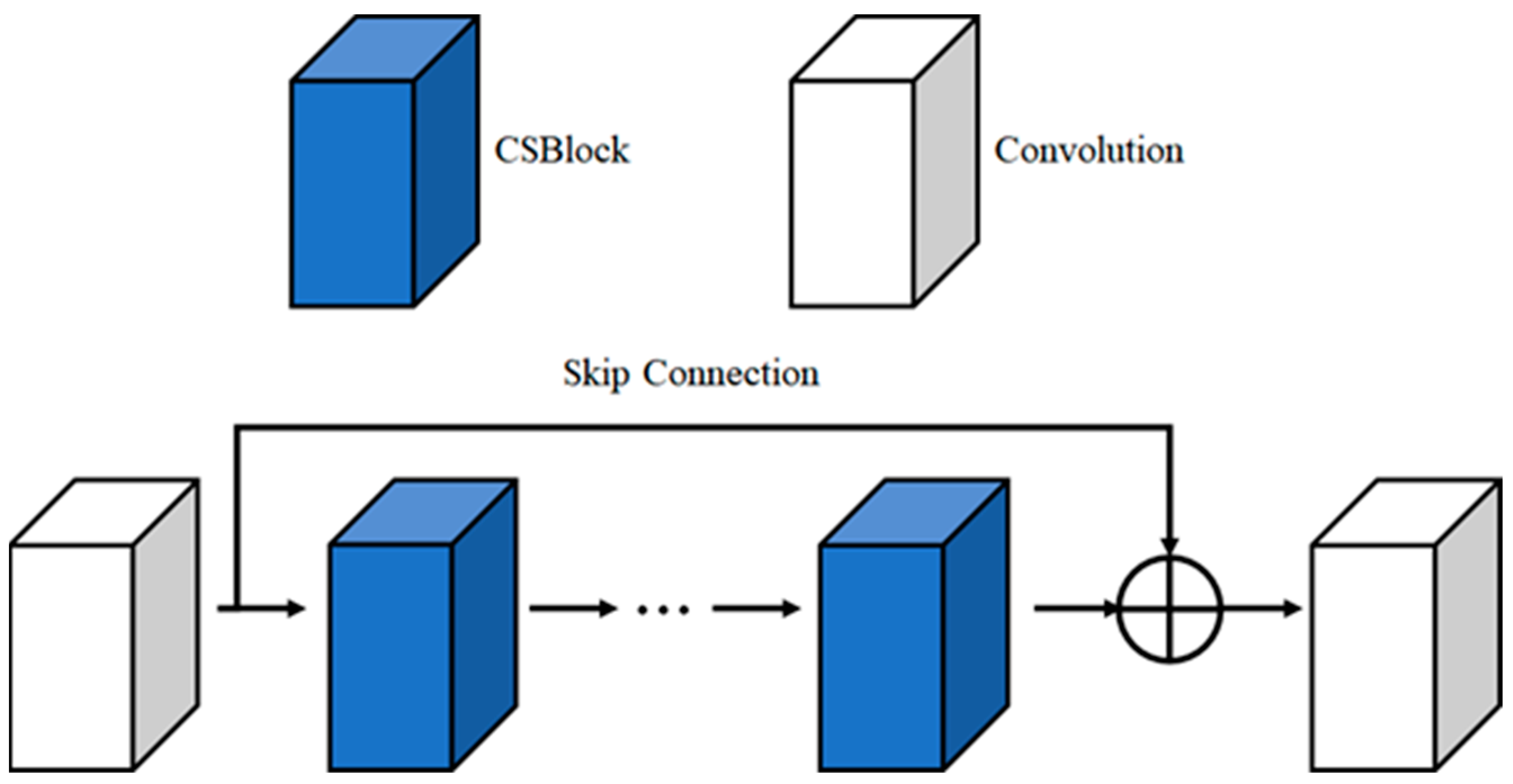

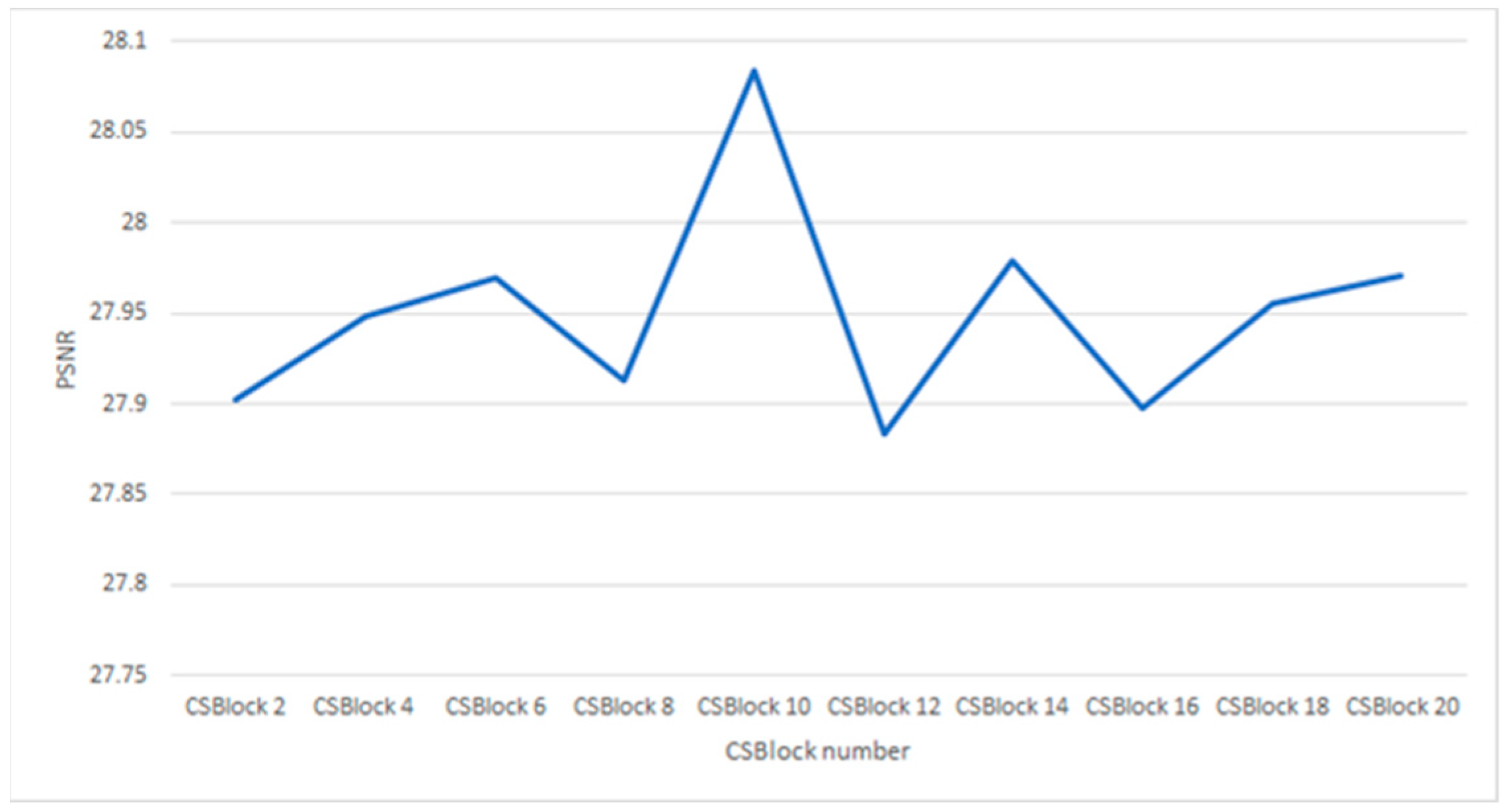

3.1. CSBlock

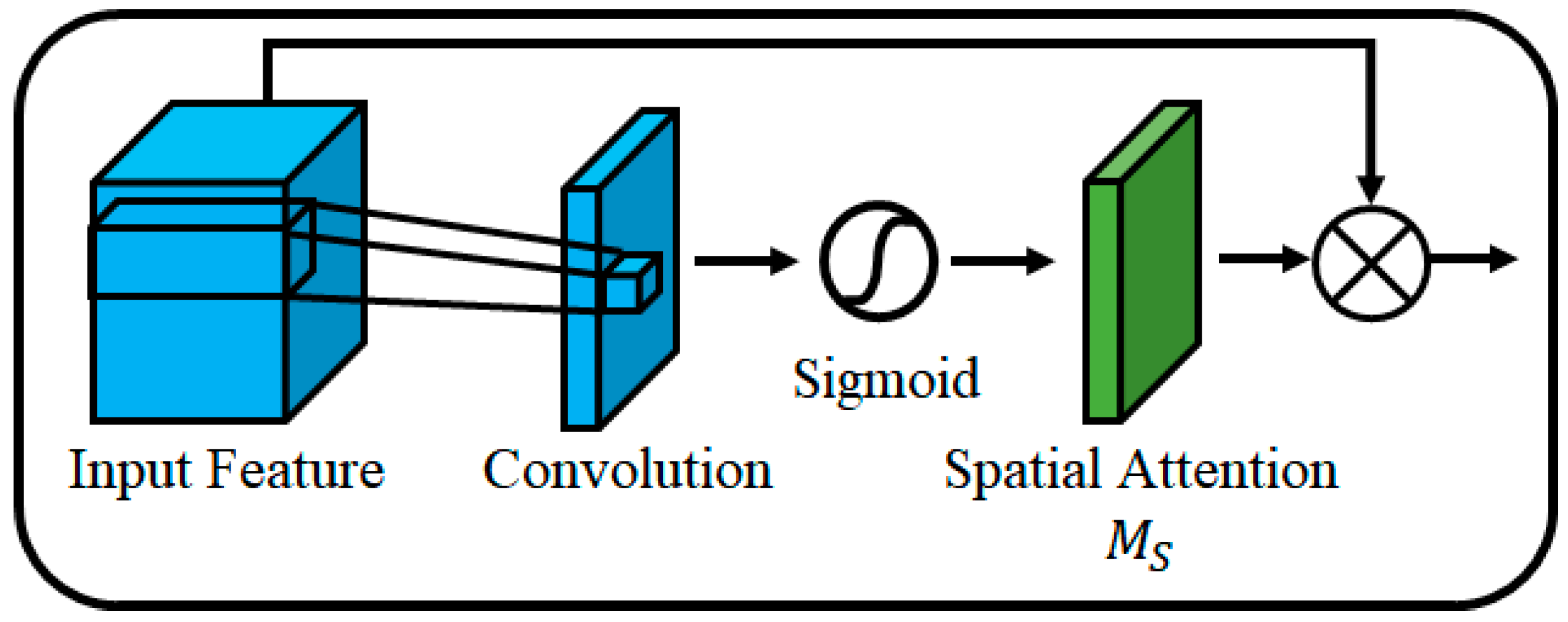

3.2. Attention Block

3.3. Feature Extraction

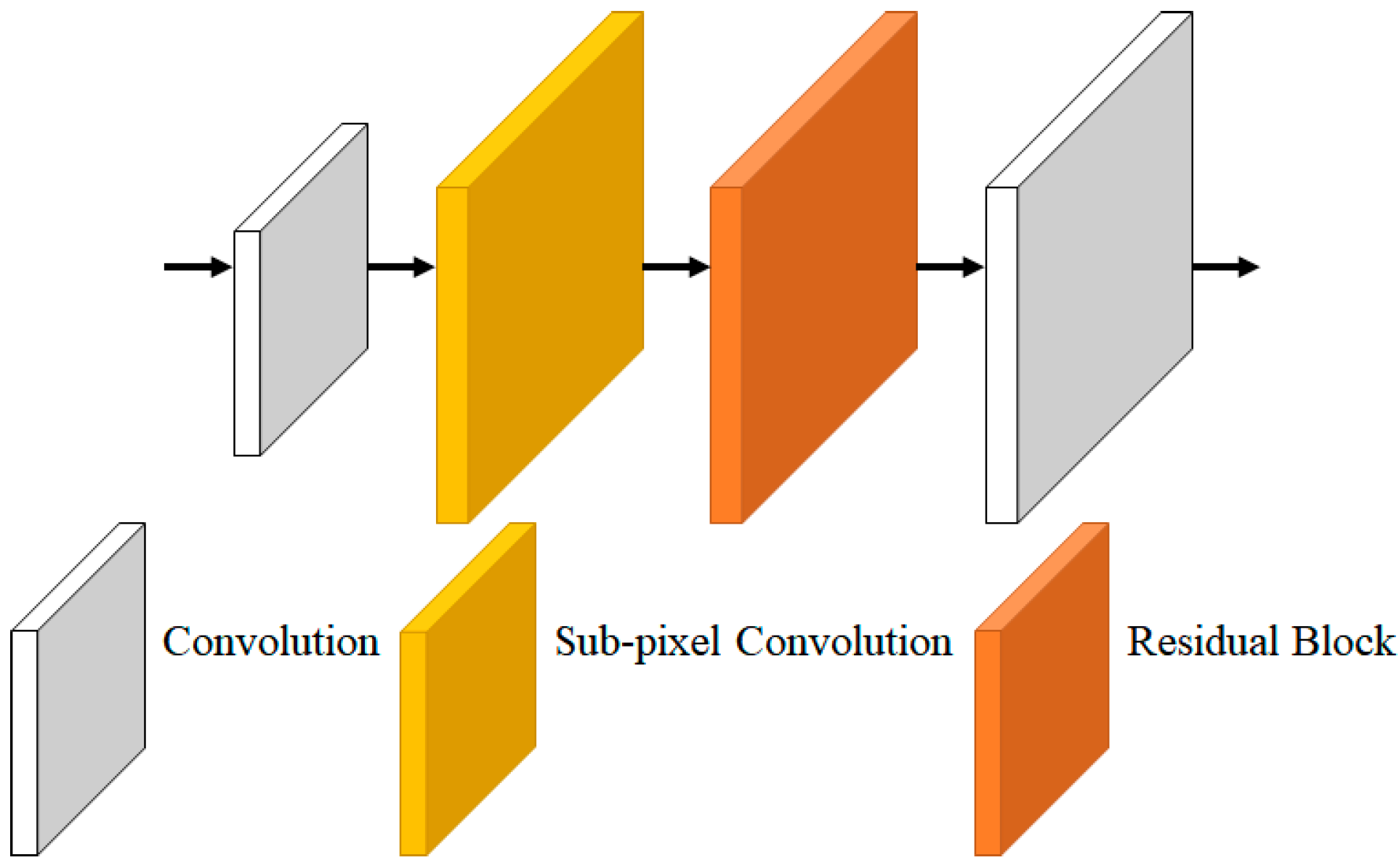

3.4. Upsampling

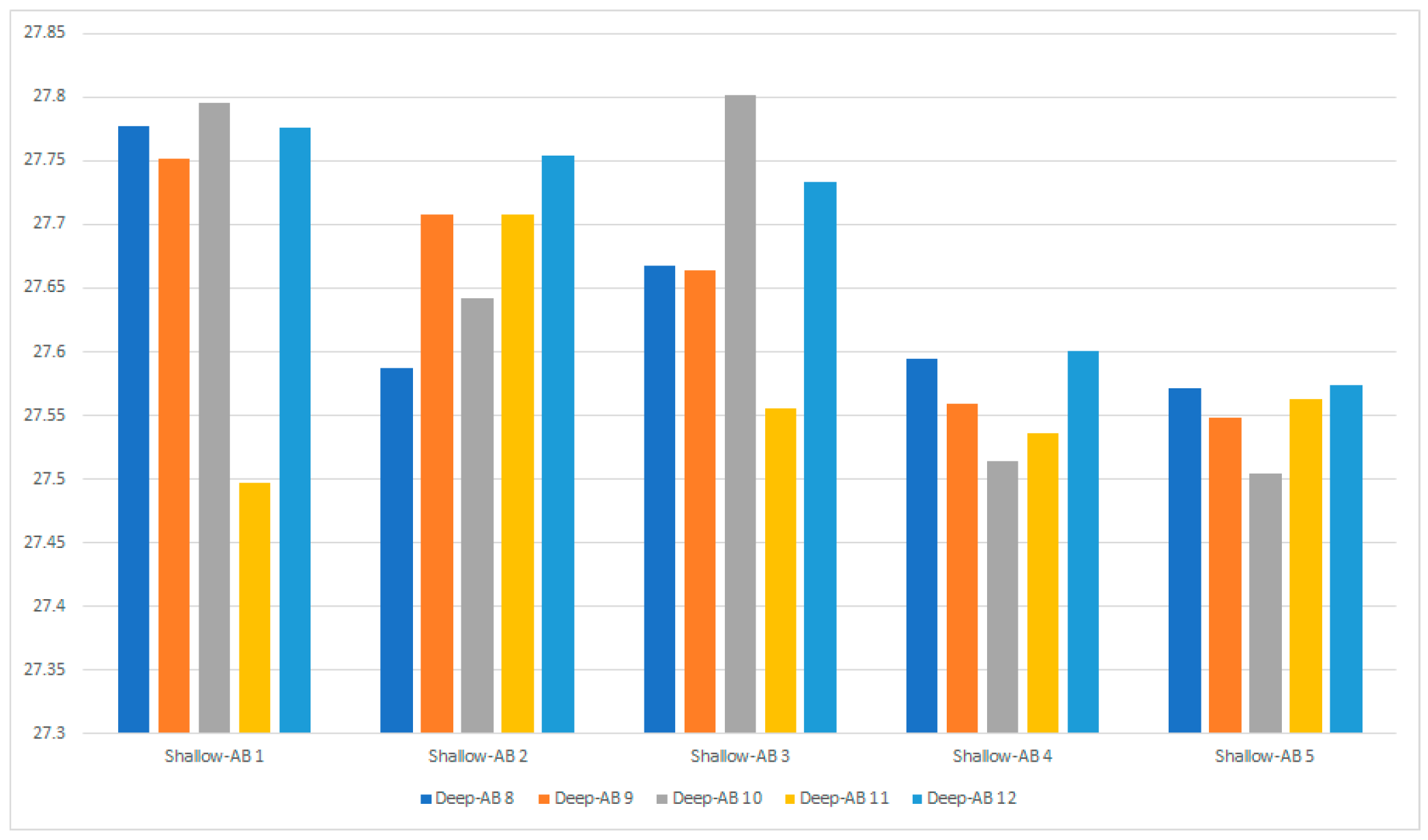

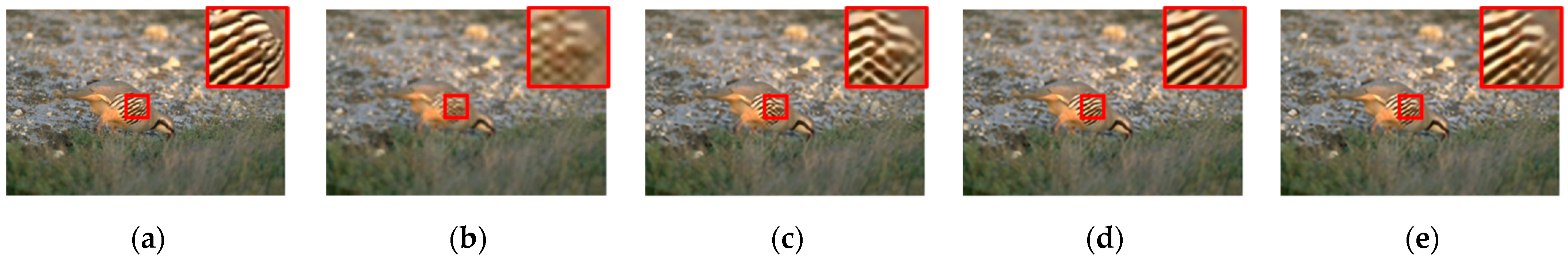

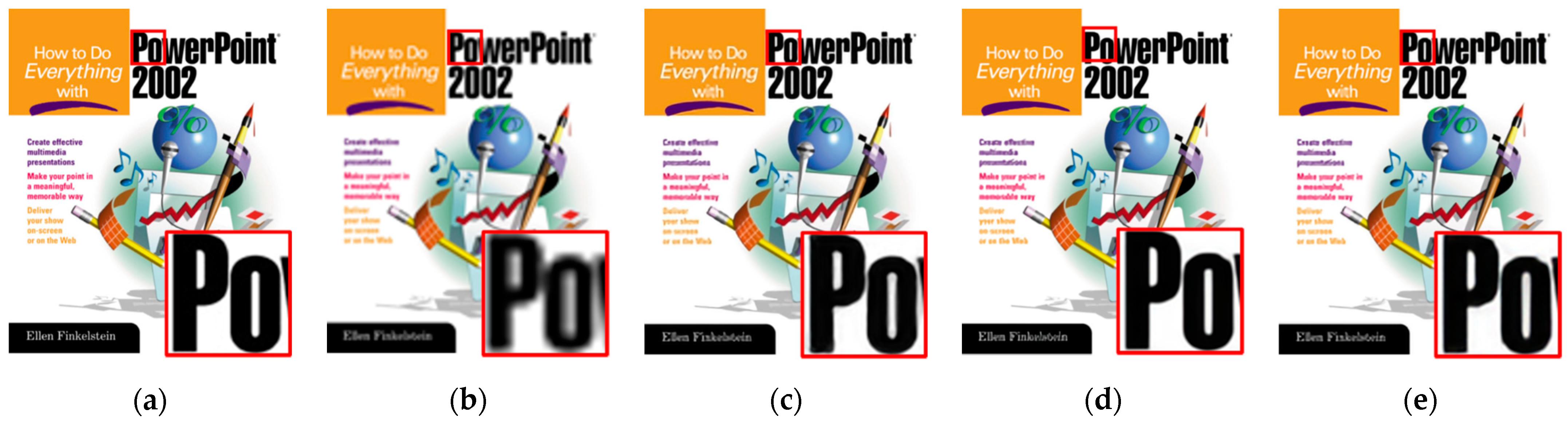

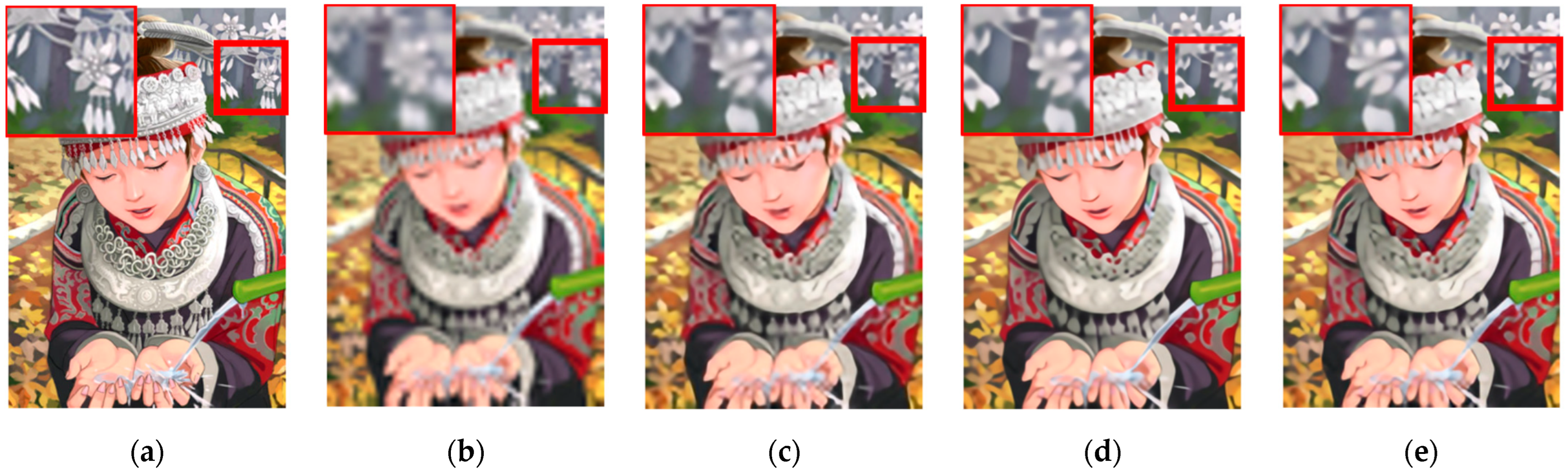

4. Experimental Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Dataset | Scale | Bicubic PSNR/SSIM | VDSR PSNR/SSIM | EDSR PSNR/SSIM | Proposed Method PSNR/SSIM |
|---|---|---|---|---|---|
| Set 5 | ×2 | 33.66/0.9299 | 37.53/0.9587 | 38.11/0.9601 | 38.15/0.9589 |
| | ×3 | 30.39/0.8682 | 33.66/0.9213 | 34.65/0.9282 | 34.48/0.9291 |
| | ×4 | 28.42/0.8104 | 31.35/0.8838 | 32.46/0.8968 | 32.48/0.8988 |
| Set 14 | ×2 | 30.24/0.8688 | 33.03/0.9124 | 33.92/0.9195 | 34.02/0.9211 |
| | ×3 | 27.55/0.7742 | 29.77/0.8314 | 30.52/0.8462 | 30.43/0.8431 |
| | ×4 | 26.00/0.7027 | 28.01/0.7674 | 28.80/0.7876 | 28.77/0.7866 |
| Urban 100 | ×2 | 26.88/0.8403 | 30.76/0.9140 | 32.93/0.9351 | 32.97/0.9352 |
| | ×3 | 24.46/0.7349 | 27.14/0.8279 | 28.80/0.8653 | 28.71/0.8640 |
| | ×4 | 23.14/0.6577 | 25.18/0.7524 | 26.64/0.8033 | 26.68/0.8035 |
| B 100 | ×2 | 29.56/0.8431 | 31.90/0.8960 | 35.03/0.9695 | 35.07/0.9701 |
| | ×3 | 27.21/0.7385 | 28.82/0.7976 | 31.26/0.9340 | 31.22/0.9337 |
| | ×4 | 23.96/0.6577 | 27.29/0.7251 | 29.25/0.9017 | 29.27/0.9020 |
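For readers unfamiliar with the first metric in the table, the sketch below computes peak signal-to-noise ratio (PSNR) in decibels from the mean squared error between a reference image and a reconstruction. It is a generic definition under stated assumptions, not the evaluation protocol used for the table: the function name `psnr`, the flattened-list input, and the 8-bit peak value of 255 are all assumptions, and benchmark protocols often add steps (such as evaluating only the luminance channel or cropping borders) that are not reproduced here.

```python
import math


def psnr(reference, estimate, max_value=255.0):
    """PSNR in dB between two equal-length sequences of pixel values."""
    if len(reference) != len(estimate) or not reference:
        raise ValueError("inputs must be non-empty and the same length")
    # Mean squared error over all pixels.
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical inputs: PSNR is unbounded
    return 10.0 * math.log10(max_value ** 2 / mse)


# Toy 2x2 "images" flattened to lists (hypothetical values).
hr = [52, 55, 61, 59]
sr = [50, 56, 60, 62]
print(round(psnr(hr, sr), 2))
```

Higher values indicate a smaller pixel-wise error, which is why small PSNR gaps between methods in the table correspond to small differences in mean squared error.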

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lee, D.; Jang, K.; Cho, S.Y.; Lee, S.; Son, K. A Study on the Super Resolution Combining Spatial Attention and Channel Attention. Appl. Sci. 2023, 13, 3408. https://doi.org/10.3390/app13063408
