Accurate Extraction of Cableways Based on the LS-PCA Combination Analysis Method

Abstract

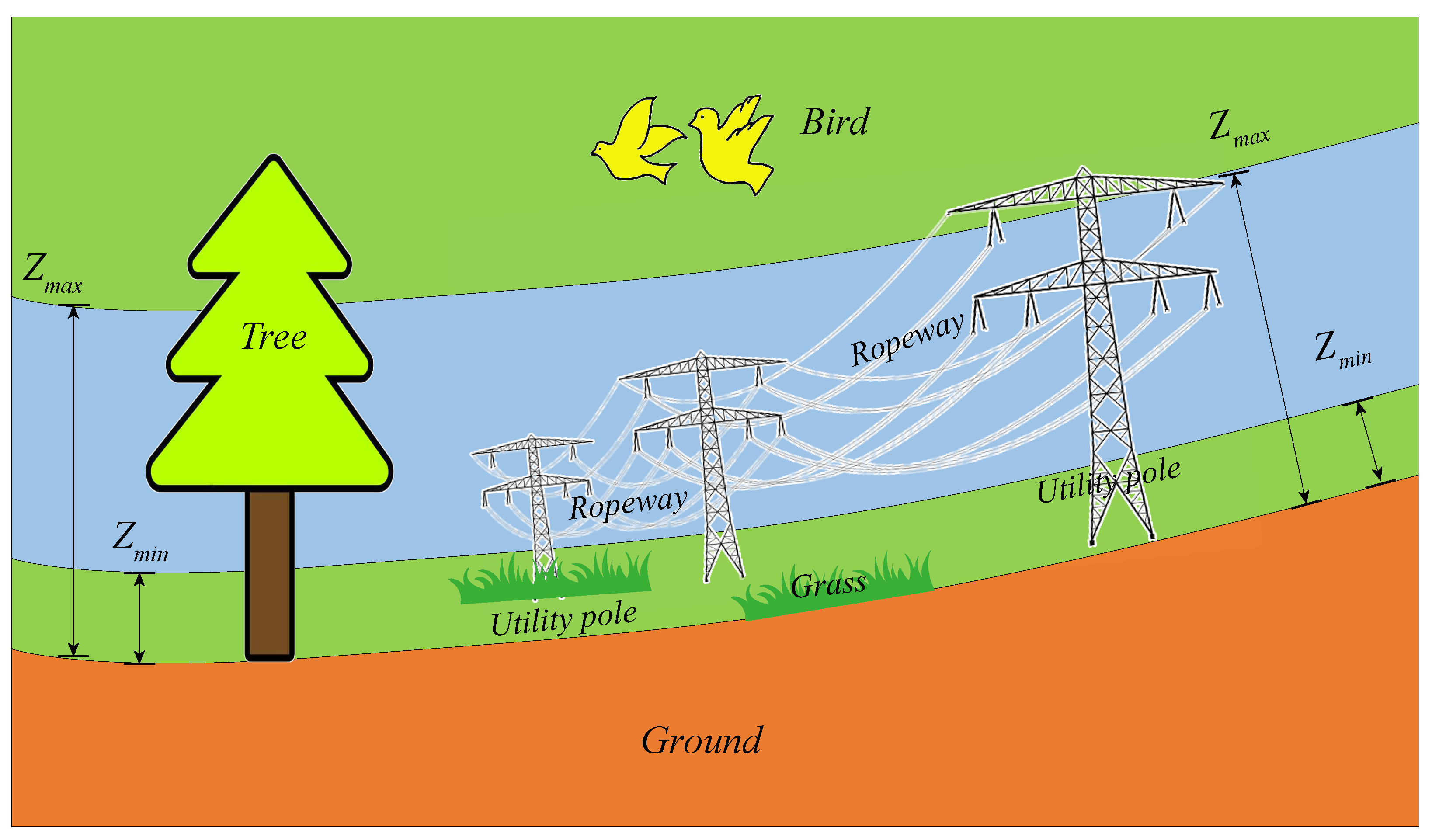

1. Introduction

- We introduce spatial geometric distribution and an entropy function. The segmented upper part is further segmented based on the principle of spatial geometric distribution and the minimization of the entropy function.

- We propose a comprehensive analysis. The final cable extraction is achieved by combining the least squares method and the principal component analysis method.

- We carried out experimental verification. Three groups of samples were selected as the experimental objects, and the results confirmed the efficacy and reliability of the proposed method.

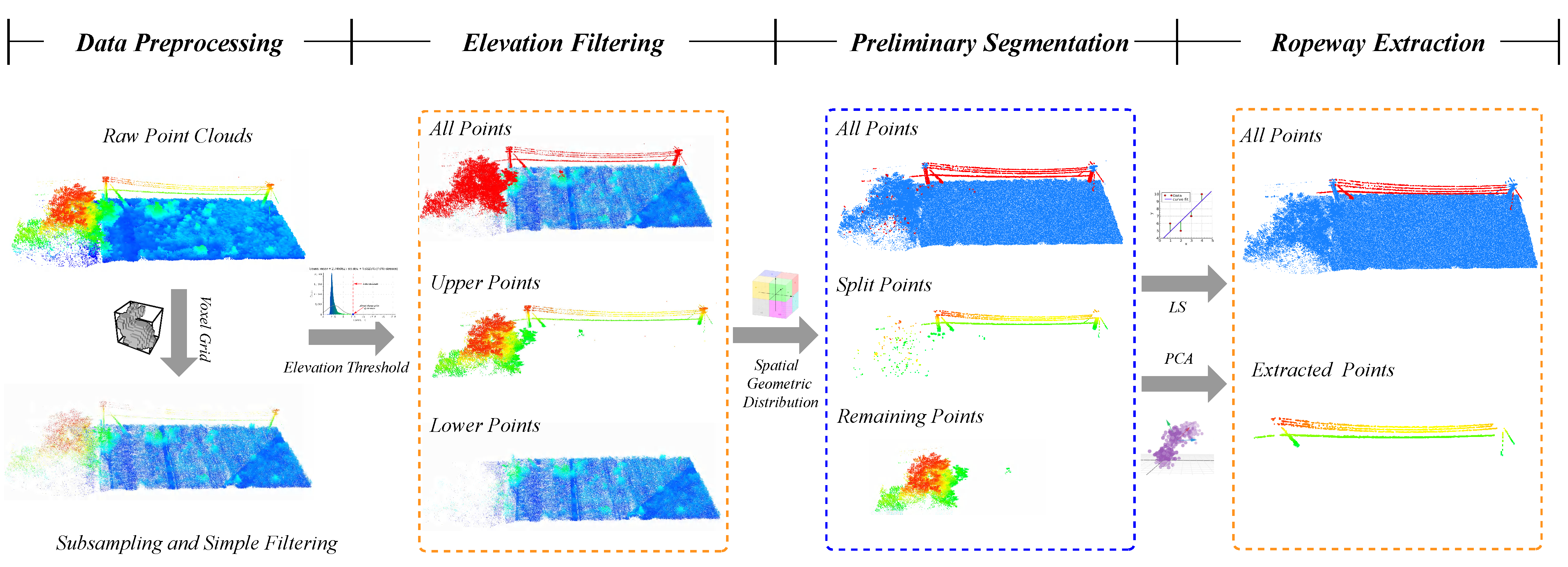

2. Methodology

2.1. Overview

2.2. Preprocessing of Ropeway Data

- In general, the ropeway extends linearly in a long, narrow shape, with its length much greater than its width;

- There are no obvious objects around the cableway from a local perspective, and the individual cables are parallel to each other and maintain a certain distance from one another;

- While cableway points have a discontinuous distribution in the vertical direction, they are closely connected in the horizontal direction.

2.2.1. Filtering by Elevation

- Meshing of the point cloud. The point cloud is divided into grids, and all points within each grid are normalized to the horizontal plane of that grid's lowest point, as shown in Figure 3a;

- As shown in Figure 3b, the elevation threshold can be determined by drawing the “elevation-point-figure map” of the normalized point cloud;

- Finally, the points within the target area and the points outside the target area are separated according to the elevation threshold, as shown in Figure 3c.
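The three filtering steps above can be sketched in a few lines of NumPy. This is only an illustrative sketch, not the authors' implementation: the function names, the `cell_size` parameter, and the idea of passing the threshold in as a plain number are all assumptions made for the example.

```python
import numpy as np

def normalize_heights(points, cell_size=5.0):
    """Return each point's height above the lowest point of its grid cell.

    points: (N, 3) array of x, y, z. cell_size is a hypothetical grid spacing.
    """
    xy_min = points[:, :2].min(axis=0)
    # Integer grid index of every point in the horizontal plane.
    cells = np.floor((points[:, :2] - xy_min) / cell_size).astype(np.int64)
    # Map each distinct (ix, iy) cell to one integer id.
    _, cell_id = np.unique(cells, axis=0, return_inverse=True)
    cell_id = cell_id.ravel()
    # Lowest z per cell, computed with an unbuffered in-place minimum.
    lowest = np.full(cell_id.max() + 1, np.inf)
    np.minimum.at(lowest, cell_id, points[:, 2])
    return points[:, 2] - lowest[cell_id]

def split_by_elevation(points, threshold, cell_size=5.0):
    """Split points into (above threshold, at or below threshold)."""
    height = normalize_heights(points, cell_size)
    upper = height > threshold
    return points[upper], points[~upper]
```

In practice the threshold would be read off the normalized elevation distribution (Figure 3b) rather than hard-coded.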

2.2.2. Preliminary Segmentation of the Ropeway

2.3. Extraction of Ropeways

2.3.1. Least Squares Method

2.3.2. Analyses Based on Principal Components

2.3.3. Comprehensive Analysis

- (1) The least squares method is used to fit a plane, which yields the plane formula and the distance d between each point of the point cloud and the fitted plane.

- (2) The error of the distances d obtained in (1) is calculated for the plane-fitted point cloud. Points whose distance exceeds the threshold derived from this error are deleted; all other points are kept.

- (3) A principal component analysis is performed on the noise-removed point cloud data to obtain the parameters of the fitting plane, and the eigenvalues are sorted by size.

- (4) The ropeway point cloud is segmented and extracted according to the linear features of the points. The extracted ropeway points are saved as ropeway points and the remaining points as out-ropeway points.
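Steps (1) to (4) can be illustrated with the NumPy sketch below. It is not the authors' code, and several choices are assumptions made for the example: the plane is parameterized as z = a·x + b·y + c, the outlier rule is "mean distance plus `k` standard deviations", the linear feature is (λ1 − λ2)/λ1 computed from the sorted covariance eigenvalues, and the `min_linearity` cutoff and all function names are hypothetical.

```python
import numpy as np

def fit_plane_least_squares(points):
    """Step (1): fit z = a*x + b*y + c by ordinary least squares."""
    A = np.column_stack([points[:, 0], points[:, 1], np.ones(len(points))])
    (a, b, c), *_ = np.linalg.lstsq(A, points[:, 2], rcond=None)
    return a, b, c

def point_plane_distance(points, a, b, c):
    """Perpendicular distance to the plane a*x + b*y - z + c = 0."""
    num = np.abs(a * points[:, 0] + b * points[:, 1] - points[:, 2] + c)
    return num / np.sqrt(a * a + b * b + 1.0)

def remove_outliers(points, k=2.0):
    """Step (2): drop points farther than mean + k*std from the plane.

    The k-sigma rule is an assumed stand-in for the paper's threshold.
    """
    a, b, c = fit_plane_least_squares(points)
    d = point_plane_distance(points, a, b, c)
    return points[d <= d.mean() + k * d.std()]

def pca_features(points):
    """Step (3): eigen-decompose the covariance, eigenvalues sorted descending.

    Returns (eigenvalues, plane normal, linearity). The normal is the
    eigenvector of the smallest eigenvalue.
    """
    centered = points - points.mean(axis=0)
    cov = centered.T @ centered / len(points)
    eigvals, eigvecs = np.linalg.eigh(cov)      # ascending order
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    linearity = (eigvals[0] - eigvals[1]) / eigvals[0]
    return eigvals, eigvecs[:, 2], linearity

def is_ropeway(cluster, min_linearity=0.9):
    """Step (4), simplified: label a whole cluster by its linearity."""
    return bool(pca_features(cluster)[2] >= min_linearity)
```

A cluster of points spread along a single line gives a linearity close to 1, whereas a planar patch gives a value close to 0, which is what makes the eigenvalue ordering useful for separating cables from surrounding surfaces. Labelling whole clusters rather than individual points is a simplification for brevity.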

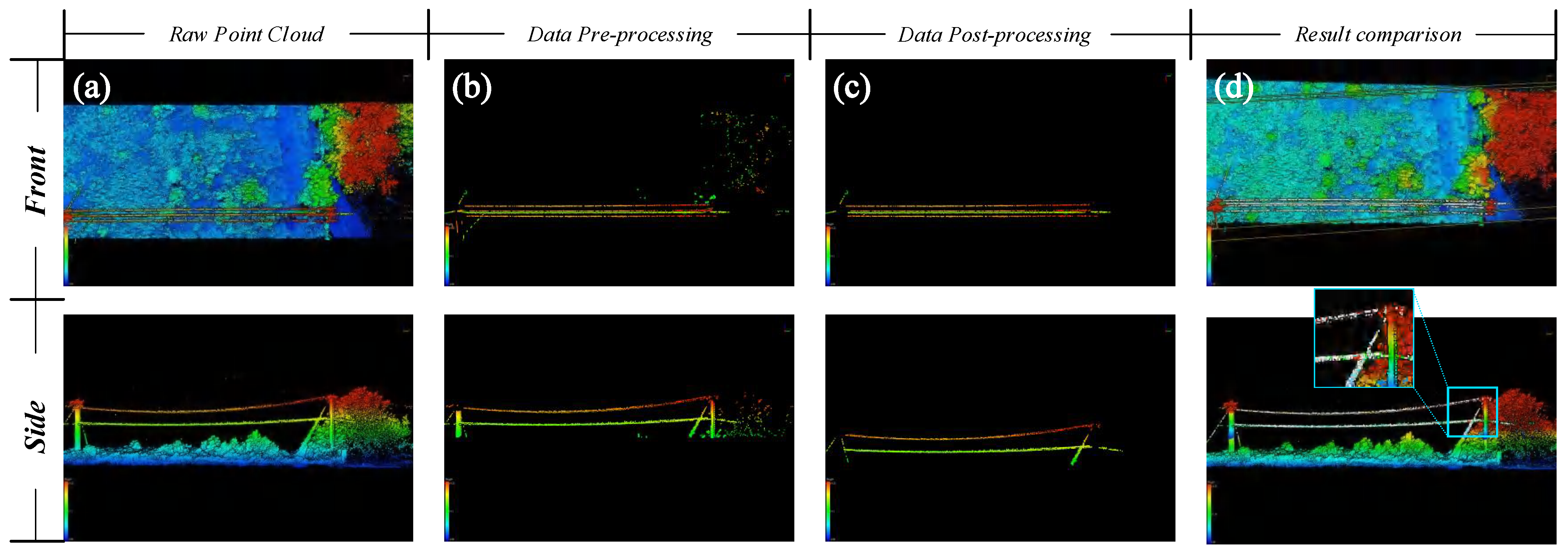

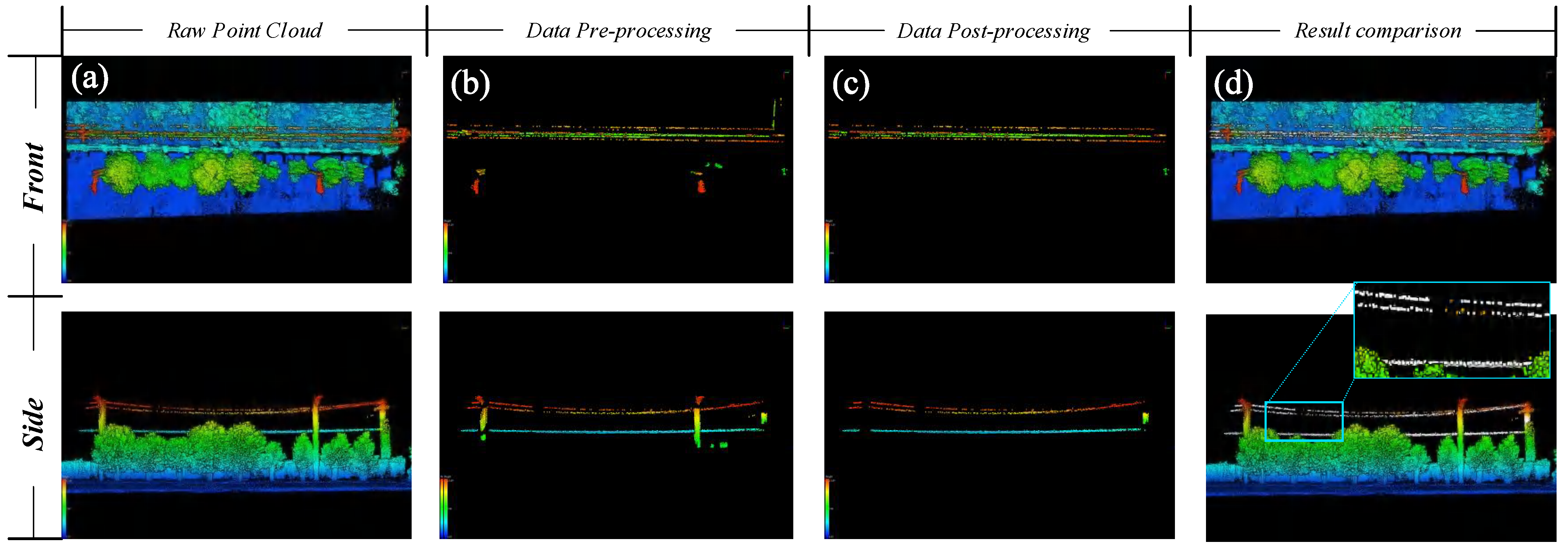

3. Experiment and Results

3.1. Preparation of Experiments

3.2. Experimental Results and Analysis

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 2D and 3D | two-dimensional and three-dimensional |
| UAV | unmanned aerial vehicle |
| LiDAR | light detection and ranging |
| PCL | Point Cloud Library |
| SVM | support vector machine |
| PCA | principal component analysis |
| LS | least squares |


) in the point cloud data set that represent different altitudes.


| Category | Item | Configuration |
|---|---|---|
| Software | Operating System | Win 11 |
| Software | Point-cloud Processing Library | PCL 1.11.1 |
| Software | Support Platform | Visual Studio 2019 |
| Hardware | CPU | Intel(R) Core(TM) i5-9400F |
| Hardware | Memory | DDR4 32 GB |
| Hardware | Graphics Card | Nvidia GTX 1080 Ti 11 GB |

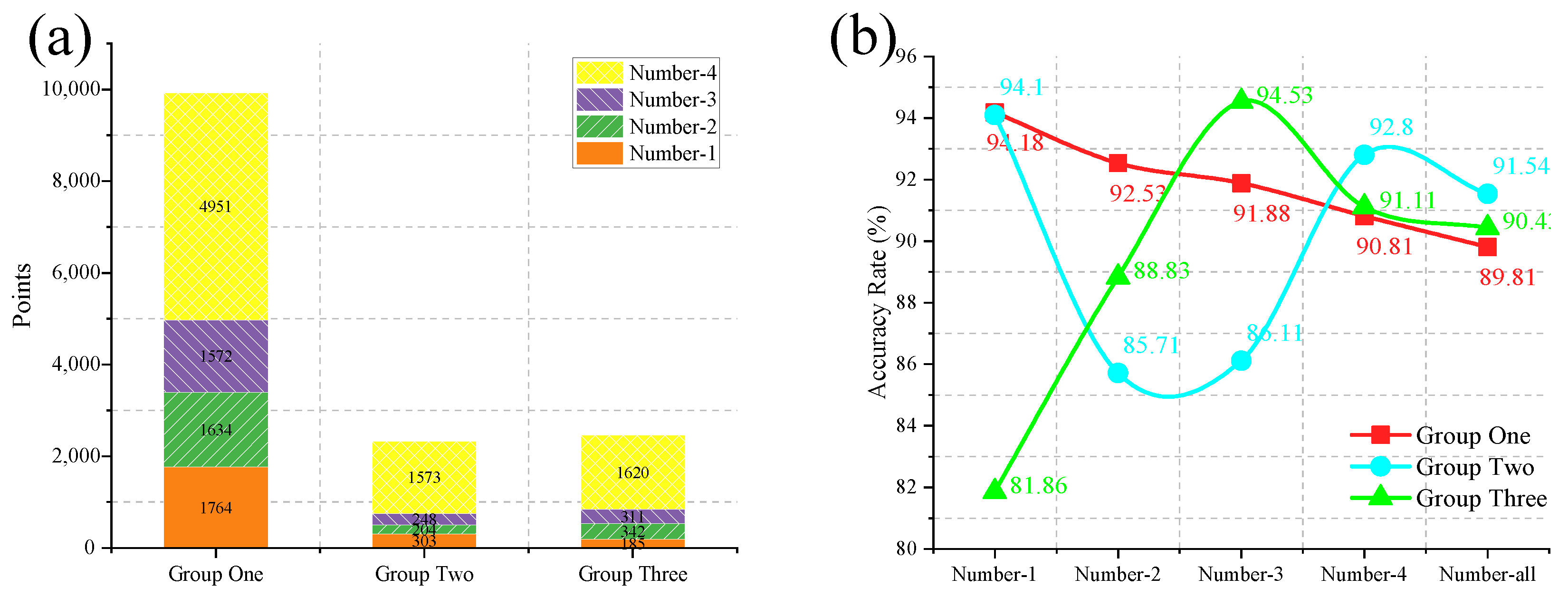

| Data Name | Sample Number | Number of Ropeways | Number of Points |
|---|---|---|---|
| Group One | 1 | 4 | 1,018,921 |
| Group Two | 2 | 4 | 485,735 |
| Group Three | 3 | 4 | 557,785 |

| Data Name | Sample Number | Number of Extracted Points | Number of Real Points | Accuracy Rate/% |
|---|---|---|---|---|
| Group One | 1-1 | 1764 | 1873 | 94.18 |
| Group One | 1-2 | 1634 | 1766 | 92.53 |
| Group One | 1-3 | 1572 | 1711 | 91.88 |
| Group One | 1-4 | 4951 | 5452 | 90.81 |
| Group One | 1-all | 12,027 | 10,802 | 89.81 |
| Group Two | 2-1 | 303 | 322 | 94.10 |
| Group Two | 2-2 | 204 | 238 | 85.71 |
| Group Two | 2-3 | 248 | 288 | 86.11 |
| Group Two | 2-4 | 1573 | 1695 | 92.80 |
| Group Two | 2-all | 2328 | 2543 | 91.54 |
| Group Three | 3-1 | 185 | 226 | 81.86 |
| Group Three | 3-2 | 342 | 385 | 88.83 |
| Group Three | 3-3 | 311 | 329 | 94.53 |
| Group Three | 3-4 | 1620 | 1778 | 91.11 |
| Group Three | 3-all | 2458 | 2718 | 90.43 |
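The per-cable accuracy values in the table appear to equal the number of extracted points divided by the number of real points, expressed as a percentage. That reading is inferred from the numbers rather than stated in this section, so the short check below should be taken as an assumption; the function name `accuracy_rate` is hypothetical. It reproduces rows 1-1 to 1-4.

```python
def accuracy_rate(extracted, real):
    """Extracted points as a percentage of real points, to two decimals."""
    return round(100.0 * extracted / real, 2)

# (extracted, real, reported accuracy) for samples 1-1 to 1-4 of the table.
group_one = {
    "1-1": (1764, 1873, 94.18),
    "1-2": (1634, 1766, 92.53),
    "1-3": (1572, 1711, 91.88),
    "1-4": (4951, 5452, 90.81),
}

for name, (extracted, real, reported) in group_one.items():
    computed = accuracy_rate(extracted, real)
    print(f"{name}: computed {computed:.2f}, reported {reported:.2f}")
```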

Accuracy Rate/% ↑

| Name | RF | GBDT | SVM | VBF-Net | OURS |
|---|---|---|---|---|---|
| Number-1 | 68.52 | 92.88 | 67.74 | 78.30 | 92.52 |
| Number-2 | 75.44 | 80.39 | 72.13 | 73.62 | 95.08 |
| Number-3 | 76.09 | 67.08 | 43.66 | 65.05 | 83.43 |
| Number-4 | 86.38 | 84.26 | 78.55 | 86.82 | 92.18 |
| Number-5 | 75.20 | 67.24 | 73.03 | 64.93 | 85.27 |

Time/ms ↓

| Name | RF | GBDT | SVM | VBF-Net | OURS |
|---|---|---|---|---|---|
| Number-1 | 54.81 | 55.92 | 73.49 | 45.01 | 28.48 |
| Number-2 | 73.25 | 65.63 | 65.33 | 38.69 | 33.25 |
| Number-3 | 37.61 | 34.84 | 29.34 | 19.92 | 25.11 |
| Number-4 | 87.62 | 70.22 | 67.20 | 83.53 | 42.74 |
| Number-5 | 78.23 | 47.50 | 39.41 | 40.63 | 37.29 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, W.; Zhao, C.; Zhang, H. Accurate Extraction of Cableways Based on the LS-PCA Combination Analysis Method. Appl. Sci. 2023, 13, 2875. https://doi.org/10.3390/app13052875
