Abstract

Advice-giving systems such as decision support systems and recommender systems (RS) utilize algorithms to provide users with decision support by generating ‘advice’ ranging from tailored alerts for situational exception events to product recommendations based on preferences. Related extant research of user perceptions and behaviors has predominantly taken a system-level view, whereas limited attention has been given to the impact of message design on recommendation acceptance and system use intentions. Here, a comprehensive model was developed and tested to explore the presentation choices (i.e., recommendation message characteristics) that influenced users’ confidence in—and likely acceptance of—recommendations generated by the RS. Our findings indicate that the problem and solution-related information specificity of the recommendation increase both user intention and the actual acceptance of recommendations while decreasing the decision-making time; a shorter decision-making time was also observed when the recommendation was structured in a problem-to-solution sequence. Finally, information specificity was correlated with information sufficiency and transparency, confirming prior research with support for the links between user beliefs, user attitudes, and behavioral intentions. Implications for theory and practice are also discussed.

1. Introduction

With the massive increase in available data in recent years, many techniques and technologies have emerged to help businesses, workers, and customers process such data more efficiently. Various recommender systems (RSs) have been developed since the mid-1990s, and many of these systems have been applied to a variety of tasks [1,2,3]. RSs are interactive systems that must be designed according to human-centered principles. Human–computer interaction (HCI) is the scientific domain that informs and validates design with respect to user interaction with such a system. In the particular case of an RS, a key design objective is to ensure that users understand and accept its recommendations. A large corpus of design- and development-focused RS papers exists (see, e.g., Recommender Systems Handbook by Ricci, Rokach, and Shapira, 2015 [4]).

Among RSs, we can distinguish two popular types of systems: content-based recommendation and collaborative recommendation. There is also an increasing number of hybrid recommender systems that combine different types of RSs. Other systems have appeared in previous years, such as demographic recommendation, utility-based recommendation, and knowledge-based recommendation, but they are less frequent. Differences between these RSs include the elicitation techniques (i.e., how to collect data and user preferences), the recommendation generation algorithm, and the presentation of the recommendation (i.e., text message, image, video, sound, or a combination of these four items). In this study, we focused on an RS that produced recommendations in the form of a text message.

While RSs have received significant attention in recent years, it is important to observe that they are just one of several ‘advice-giving systems’. “These include expert systems, knowledge-based systems, decision support systems, and recommender systems.” [5] (p. 2). However, given the relative obscurity of the term ‘advice-giving systems’ and the much more frequently used term of ‘recommender systems’—even for systems that support user decisions where user preferences are not the core element in the enabling machine-learning algorithm—we use the latter term of recommender systems hereafter to represent systems that generate and present recommendation messages to users.

Due to the considerable opportunities and challenges in many domains (e.g., business, government, education, and healthcare), numerous studies have been conducted on RSs [1,2,3], especially on the comprehension of their performance [6], their design implications [7,8,9], and recommendation techniques [3,10]. Thus, prior research has significantly addressed design implications at the system level [8,9]; however, researchers and scientists have mostly disregarded the design of the interface [11]. In the rare instances where the extant literature has focused on RS interface design, recommendations are produced and put forth following the collection of a user’s interaction data and subsequently juxtaposed against the attributes of artifacts stored in large repositories. Yet, to date, the impact of recommendation message presentation on the user’s perception, attitude, and behavior towards the recommendation has been significantly understudied.

A system’s design alone will not shape its users’ perceptions of trust and the likelihood of them accepting a recommendation. The nature of the message content is also likely to play a significant role in affecting users’ beliefs, attitudes, and behaviors, particularly in the context of managerial decision making [9]. Therefore, as content elements and interaction elements (e.g., buttons) jointly shape how the user processes recommendations, a simultaneous and granular analysis of the effects of both design elements is required. Hence, this study aims to advance the contemporary understanding of RS designs by exploring different ways to optimize the information presentation and/or interaction layers of the user–RS interactions. Related factors influence the adoption of AI in real-life contexts, which is an important aspect of successfully deploying AI. In this study, we investigated the understanding and acceptance of system-generated recommendations, which is a very prominent usage pattern of AI. Specifically, we explored presentation approaches for recommendation messages to increase the likelihood that RS users would trust and accept system-generated recommendations with minimal effort required. Our work aimed to answer the following research questions:

- What is the effect of message design (characteristics) on a user’s beliefs about system-generated recommendations?

- What is the effect of message design (characteristics) on a user’s beliefs regarding the ease of use and usefulness of the RS?

- What is the effect of message design (characteristics) on a user’s attitudes and behavioral intentions toward the RS and its recommendations?

- What is the effect of message design (characteristics) on a user’s behaviors with respect to decision-making time and the likelihood of accepting system-generated recommendations?

2. Theoretical Background

2.1. Recommender Systems

Extensive research has been performed evaluating RSs in their entirety [8,9,12,13]. RSs have progressed technologically to include machine learning and multi-modal interaction elements (e.g., Apple’s Siri, Amazon’s Alexa, etc.). Despite this technological progress and extensive research on RS user experience as a whole, a fundamental investigation into the optimal construction of recommendation messages has not yet been comprehensively conducted, as summarized in the following conceptual piece on the state of RS-related research:

“Explanations can vary, for example, with respect to (i) their length; (ii) the adopted vocabulary if natural language is used; (iii) the presentation format, and so on. When explanation forms are compared in user studies that are entirely different in these respects, it is impossible to understand how these details impact the results. Therefore, more studies are required to investigate the impact of these variables” [5] (p. 425). Hence, to create a more stable foundation for RS researchers and designers, additional studies on the fine-grained presentation details of recommendation messages are required [5].

2.2. Message Design in Recommender Systems

A typical recommendation message contains two core components, i.e., a described problem and a suggested solution; this problem-solution structure is a frequent rhetorical pattern in technical academic writing [14]. For example, in the message “I noticed that you are running out of soft drinks. Shall I order more?”, the first sentence is the problem while the second is the solution. Within the problem and solution construct of recommendation messages, several elements can vary in form, including information specificity (which can relate to the problem, the solution, or both), information sequence, message styling, and situational complexity; these elements are defined below.
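The problem-and-solution construct and three of its variable elements can be sketched in code. The following is a minimal illustration, not the stimuli used in the study: the class `MessageDesign`, the function `compose`, and the "specific" wordings (quantities, delivery date) are hypothetical; only the vague problem and solution sentences come from the example above. Bold styling is rendered here with `**…**` purely as a stand-in for boldface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MessageDesign:
    """Hypothetical container for the message-level design choices."""
    problem_first: bool = True       # information sequence
    specific_problem: bool = False   # problem specificity
    specific_solution: bool = False  # solution specificity
    bold_key_object: bool = False    # text styling


# The vague wordings come from the example in the text;
# the specific wordings are invented for illustration only.
PROBLEM = {
    False: "I noticed that you are running out of {item}.",
    True: "I noticed that only 12 units of {item} remain in stock.",
}
SOLUTION = {
    False: "Shall I order more?",
    True: "Shall I order 48 units for delivery tomorrow?",
}


def compose(design: MessageDesign, item: str = "soft drinks") -> str:
    """Build one recommendation message from the chosen design."""
    shown = f"**{item}**" if design.bold_key_object else item
    problem = PROBLEM[design.specific_problem].format(item=shown)
    solution = SOLUTION[design.specific_solution]
    parts = [problem, solution] if design.problem_first else [solution, problem]
    return " ".join(parts)


print(compose(MessageDesign()))
print(compose(MessageDesign(problem_first=False, specific_solution=True,
                            bold_key_object=True)))
```

Situational complexity is left out of the sketch because it concerns the situation described rather than the wording or layout of the message.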

Problem specificity and solution specificity are motivated by the functional principle of conveying information in a clear manner [15] and the notion that people have a preference for descriptions with a higher level of detail [16]. Moreover, the accuracy of the recommendation positively affects the decision-making process preceding the uptake of the recommendation [17], while the diversity of recommendations influences user trust, leading to an increase in the adoption rate of recommendations [18]. Information sequence—i.e., presenting the problem then the solution or the solution then the problem—is motivated by extant healthcare literature that indicates merit for both sequences in health communication messages [19].

Situational complexity consists of “simple, technically complicated, socially complicated, and complex situations” [20] and is inversely related to the amount of information available [21]; that is, situational complexity arises when there is uncertainty about the available options in the specified context and how the available options intermingle with cognitive demand due to tensions between contradictory elements [22].

Lastly, the styling of text in recommendation messages (e.g., font-weight properties, such as boldface) may also affect users’ perceptions, according to an empirical study on perceived professionalism in scientific question-and-answer forums [23]. Although text styling was not observed to have an impact in [23], the authors urged for the continued examination of typographical cues (i.e., boldface, italics, and underline) in other applications. For RSs, where decision-making time is critical, styling cues, such as bolding text, could help users focus on the most pertinent information at hand. This study extends prior research [9] by taking a mixed-methods approach to explore the effects of message design (characteristics) on user experience with both the presented information and the RS.

2.3. Hypotheses Development

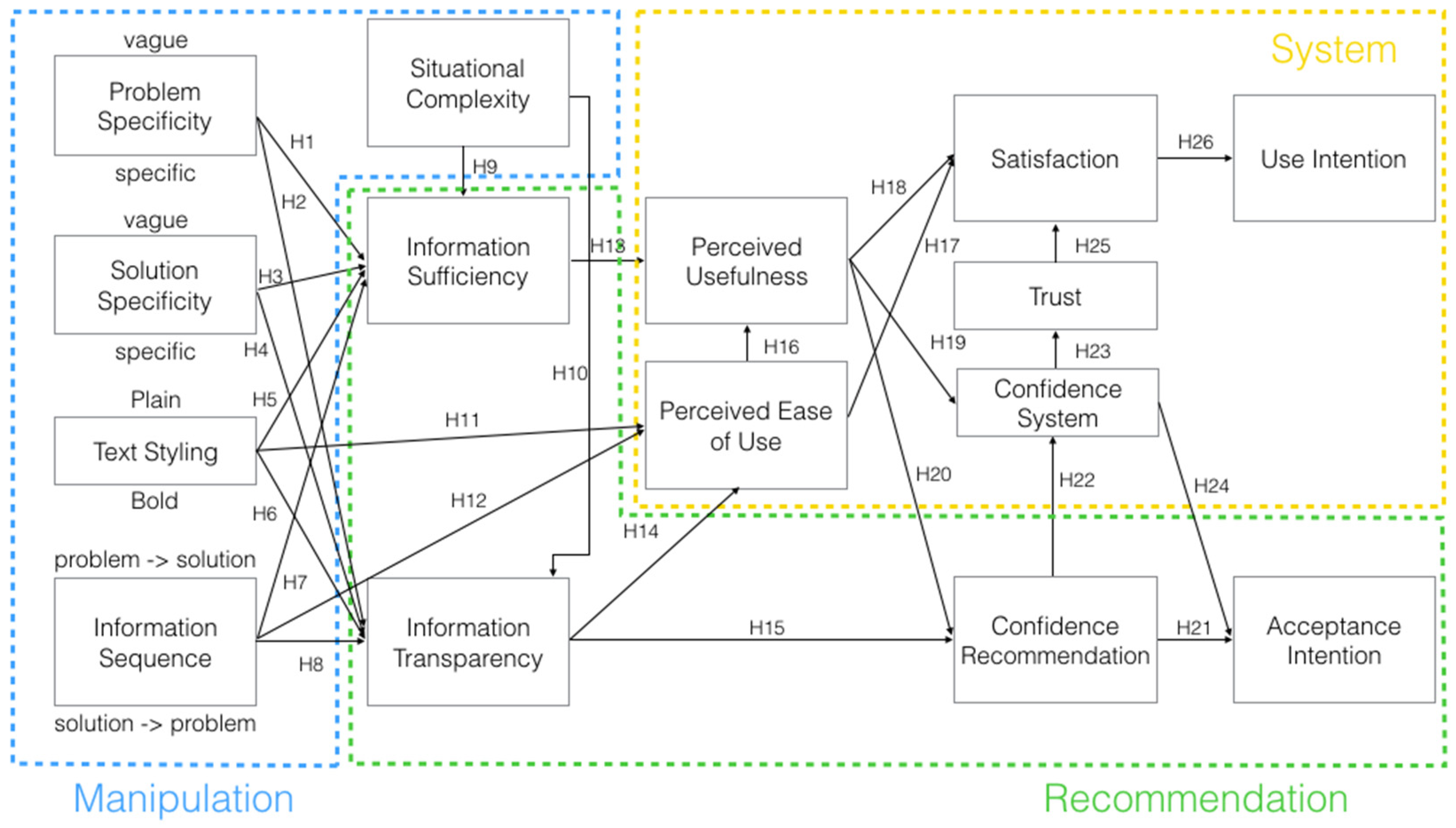

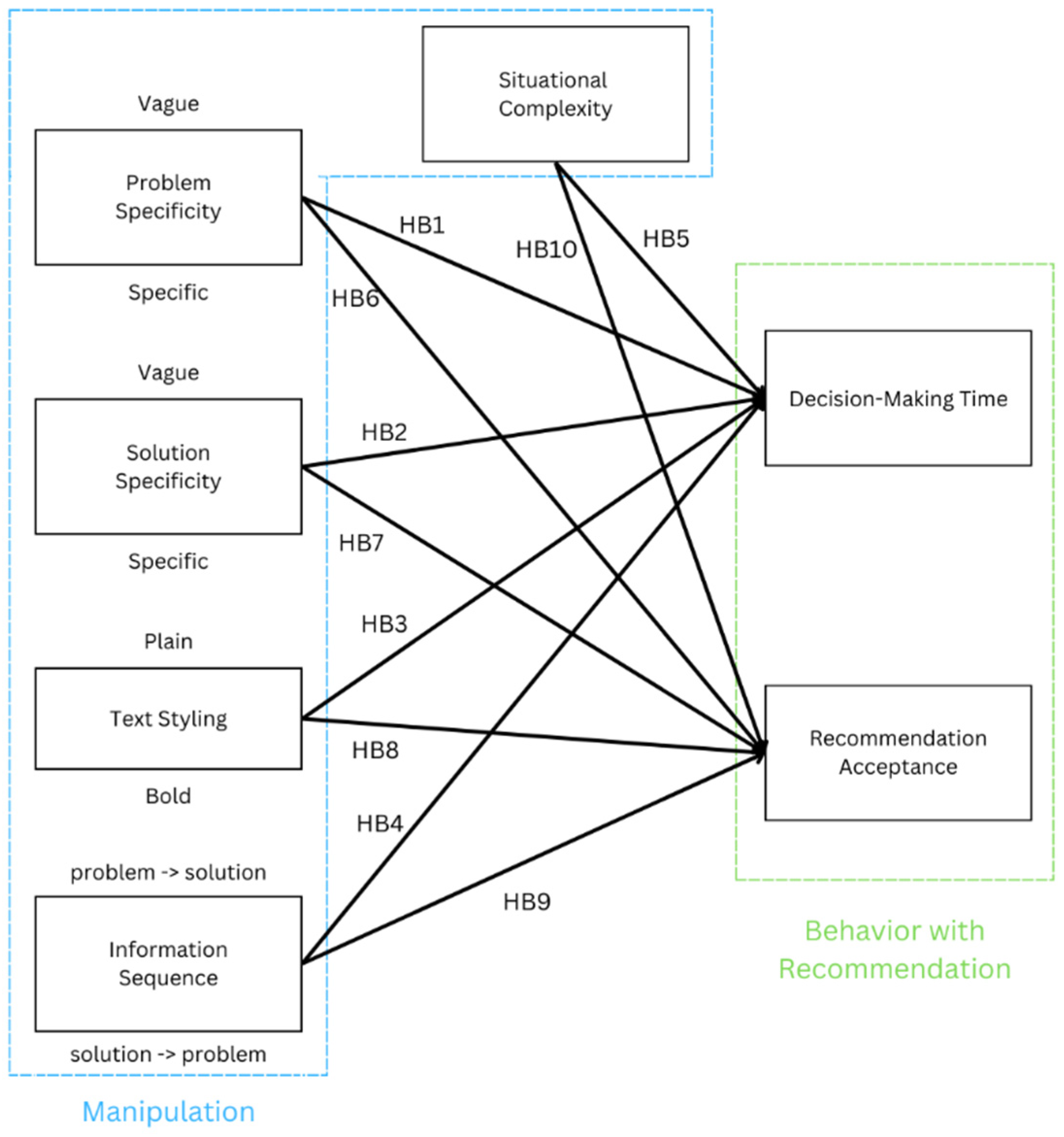

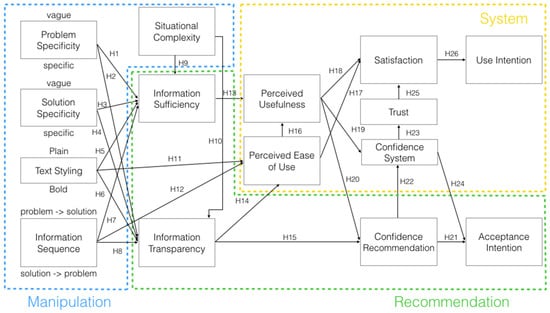

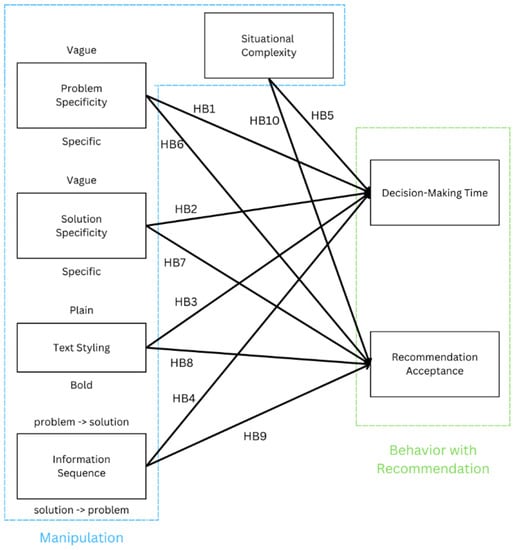

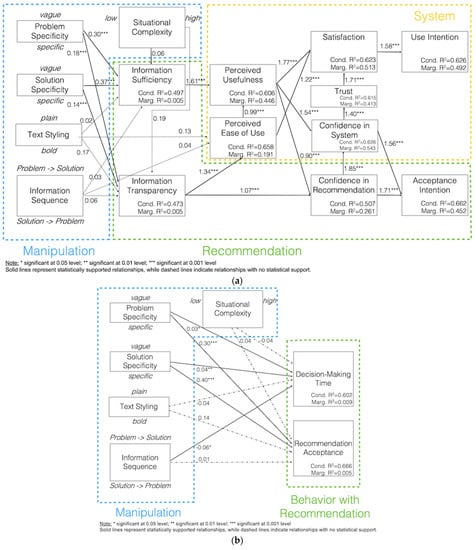

Our hypotheses built on the ResQue (recommender systems’ quality of user experience) model [9] and were partitioned into the following sets of endogenous variables: user beliefs regarding the recommendation message (information sufficiency and transparency) and the system (perceived usefulness and ease of use) as well as user attitudes toward both the recommendation message and system (see Figure 1) and behavioral outcomes (see Figure 2). The reason for the distinction between the self-reported model and the behavioral model is that the former explored the user’s experience with each RS message design explicitly (via the self-reported measures) while the latter did so implicitly (via the behavioral measures). Therefore, the two models provided a complementary and more holistic view of user experience, offering a stronger foundation for emergent implications and recommendations on how to design RS messages. In the following sections, we will present our hypotheses for each set of dependent variables.

Figure 1.

Proposed self-reported research model.

Figure 2.

Proposed behavioral research model.

2.3.1. Information Transparency and Information Sufficiency

Information transparency is an aggregate user assessment of three dimensions: clarity, disclosure, and accuracy [24]. For a recommendation message consisting of two parts of information, a problem and solution, changes in problem and solution specificity are likely to yield changes in user perceptions of the clarity, disclosure, and accuracy of the information in the recommendation message, and by extension perceived information transparency as a whole. Moreover, researchers recommend that RS designers should simplify the reading of the message by the user by using navigational efficacy, design familiarity, and attractiveness [25], which will lead to clarity and transparency [9,25,26]. Indeed, the content and format of the recommendations have significant and varied impacts on users’ evaluations of RS [27]. Linking the latter to message characteristics, it is plausible that user perceptions of information transparency will be positively affected by (i) changing the message (text) styling by bolding key parts of the message (i.e., bolding the object being discussed); (ii) sequencing the information presentation as problem-then-solution (rather than the reverse); and (iii) communicating simple rather than complex situations.

Thus, the following effects of a message’s characteristics were hypothesized:

Hypothesis 2 (H2).

Problem specificity positively impacts information transparency.

Hypothesis 4 (H4).

Solution specificity positively impacts information transparency.

Hypothesis 6 (H6).

Text styling positively impacts information transparency, such that styled (bold) text is associated with greater perceived information transparency than plain text.

Hypothesis 8 (H8).

Information sequence affects information transparency, such that a problem-to-solution sequence positively impacts information transparency.

Hypothesis 10 (H10).

Situational complexity negatively impacts information transparency.

Information sufficiency refers to whether the amount of content presented to the user is enough for the user to understand the information, and in some cases to act on it [28]. By changing the degree of information specificity (i.e., problem and/or solution) and situational complexity, the amount of information available, and the way the information is conveyed to the user, user perceptions of information are likely to be augmented [20,21,22]. To ensure that information is clear and easy to access, which will improve information sufficiency, the information should be structured and adapted to the needs of the receivers [26,29]. Explanations in a recommendation have an important impact on the user’s behavior. Indeed, the type of explanation has a direct effect on RS use and should be different according to the desired effect [30]. For example, persuasive explanation supports the competence facet of the RS [30], while negative arguments increase the user’s perceived honesty of the system regarding the recommendation [31]. Moreover, fact-based explanations (i.e., only facts with keywords) and argumentative explanations are preferred by users over full-sentence explanations [32]. The literature recommends proposing recommendations with only pertinent and cogent information [33] and excluding all information and knowledge that are not relevant for answering the request [33]. Long and strongly confident explanations can also be used to increase user acceptance [34]. Thus, choices regarding message (text) styling and information sequence may make it easier for the user to understand the presented information, and a change in information sufficiency may also be observed [25].

Thus, the following effects of a message’s characteristics were hypothesized:

Hypothesis 1 (H1).

Problem specificity positively impacts information sufficiency.

Hypothesis 3 (H3).

Solution specificity positively impacts information sufficiency.

Hypothesis 5 (H5).

Text styling positively impacts information sufficiency, such that styled (bold) text is associated with greater perceived information sufficiency than plain text.

Hypothesis 7 (H7).

Information sequence affects information sufficiency, such that a problem-to-solution sequence positively impacts information sufficiency.

Hypothesis 9 (H9).

Situational complexity negatively impacts information sufficiency.

2.3.2. Perceived Usefulness and Ease of Use

Users’ attitudes are affected by their beliefs regarding a message’s information properties [9]. For example, information sufficiency has been shown to impact the perceived usefulness of RSs [9,35]. In addition, the sufficiency of the information may depend on its quality [36,37,38,39], where the greater the quality of the information presented, the more useful it is found to be [40]. Furthermore, knowledgeable explanations significantly increase the perceived usefulness of an RS [41]. Thus, the following effects of a recommendation message’s characteristics were hypothesized:

Hypothesis 11 (H11).

Text styling positively affects perceived usefulness, such that recommendation messages with styled (bold) text are associated with a greater perceived usefulness of the RS than plain text.

Hypothesis 12 (H12).

Information sequence affects the perceived ease of use, such that a problem-to-solution sequence positively impacts the perceived ease of use of the RS.

Hypothesis 13 (H13).

Information sufficiency positively impacts the perceived usefulness of the RS.

Hypothesis 14 (H14).

Information transparency positively impacts the perceived ease of use of the RS.

2.3.3. System and Recommendation Outcomes

For an RS to be successful with respect to its adoption, users should have confidence in the system-generated recommendations and trust the system [5]. Transparency plays an important role in users’ confidence in recommendations as it may encourage or deter users’ trust in a system [42,43], where recommendations perceived as transparent by users increase their confidence [44]. User perceptions of ease of use and usefulness are positively related to each other [12,45,46,47] and to system attitudes, such as those toward the system’s use and system satisfaction [12,40,46,47,48]. Moreover, explanations contribute to user trust in RSs [30,49,50]. Confidence in the system positively influences trust in the system [51] and the user’s behavioral intentions with the system, including their intention to accept a system-generated recommendation [40]; in addition, the degree of trust users put in the system plays an important role in the acceptance of a recommendation [52]. In the context of information systems (IS) use, trust has been shown to positively affect satisfaction [53], which in turn has been shown to encourage use of the system [54,55]. Hence, the following hypotheses were proposed:

Hypothesis 15 (H15).

Information transparency positively impacts recommendation confidence.

Hypothesis 16 (H16).

RS ease of use positively impacts its usefulness.

Hypothesis 17 (H17).

RS ease of use positively impacts user satisfaction.

Hypothesis 18 (H18).

RS usefulness positively impacts user satisfaction.

Hypothesis 19 (H19).

RS usefulness positively impacts RS trust.

Hypothesis 20 (H20).

RS usefulness positively impacts RS confidence.

Hypothesis 21 (H21).

Recommendation confidence positively impacts recommendation acceptance intentions.

Hypothesis 22 (H22).

Recommendation confidence positively impacts RS confidence.

Hypothesis 23 (H23).

RS confidence positively impacts RS trust.

Hypothesis 24 (H24).

RS confidence positively impacts recommendation acceptance intentions.

Hypothesis 25 (H25).

RS trust positively impacts RS satisfaction.

Hypothesis 26 (H26).

RS satisfaction positively impacts RS use intentions.

2.3.4. Behavioral Outcomes

Both the content and format of a recommendation may influence users’ beliefs and attitudes [8,9], and in turn affect behavioral intentions [5,8,9,12] and actual behaviors. Providing explanations for recommendations may lead to faster decision making by users and drive them to make better choices [56,57]. In addition, the way messages convey the problems faced and related information may impact users’ perceptions and decisions [58,59,60]. Thus, the following hypotheses were proposed:

Hypothesis B1 (HB1).

Problem specificity negatively impacts (i.e., decreases) users’ decision-making time.

Hypothesis B2 (HB2).

Solution specificity negatively impacts (i.e., decreases) users’ decision-making time.

Hypothesis B3 (HB3).

Text styling affects users’ decision-making time, such that styled (bolded) text is associated with a shorter decision-making time than plain text.

Hypothesis B4 (HB4).

Information sequence affects users’ decision-making time, such that problem-to-solution sequencing is associated with a greater decrease in time than solution-to-problem sequencing.

Hypothesis B5 (HB5).

Situational complexity positively impacts (i.e., increases) users’ decision-making time.

Hypothesis B6 (HB6).

Problem specificity positively impacts users’ recommendation acceptance rate.

Hypothesis B7 (HB7).

Solution specificity positively impacts users’ recommendation acceptance rate.

Hypothesis B8 (HB8).

Text styling affects users’ recommendation acceptance, such that styled (bolded) text is associated with a greater recommendation acceptance rate than plain text.

Hypothesis B9 (HB9).

Information sequence affects users’ recommendation acceptance rate, such that problem-to-solution recommendations are associated with a greater acceptance rate.

Hypothesis B10 (HB10).

Situational complexity negatively impacts users’ recommendation acceptance rate.

3. Methodology

3.1. Pilot Study

A pilot study [61] was conducted with three aims: (i) to gauge the appropriateness of the stimuli, (ii) to collect attentional and psychophysiological data to inform the main experiment, and (iii) to offer preliminary support for the hypothesized relationships. An experiment utilizing a within-subjects research design involving four factors, each with two levels (i.e., 2 × 2 × 2 × 2), was conducted. The pilot study involved fewer factors (4 instead of 5) and by extension fewer conditions (16 vs. 32) and stimuli (48 vs. 96), as well as fewer participants (n = 6 vs. n = 614) than the study presented below, which also used a different data collection approach (lab-based pilot vs. Amazon MTurk).

3.2. Experimental Design

A multi-method experiment was conducted employing a counterbalanced mixed (between-within) subjects design involving five (5) factors (i.e., counterbalanced 2 × 2 × 2 × 2 × 2 for a total of 32 conditions), tested using three (3) stimuli per condition (i.e., 96 stimuli). The factors involved (i) information sequence (problem-to-solution vs. solution-to-problem); information specificity, comprising (ii) problem specificity (vague vs. specific) and (iii) solution specificity (vague vs. specific); (iv) text styling (plain vs. bold); and (v) situation complexity (low vs. high). For the latter, complexity was varied by manipulating the product type involved in the situation—soft drinks vs. meat patties—given the following ‘complicating’ considerations: cost (low-cost soft drinks vs. high-cost meat patties), durability (non-perishable soft drinks vs. perishable meat patties), handling (soft drink bottles require no special handling vs. meat patties require special—refrigerated—handling). Hence, the situations with soft drinks were overall of lower complexity than those pertaining to meat patties. Additionally, we manipulated the product’s availability (available vs. unavailable with available substitute product). The factor manipulations are illustrated in the sample recommendation messages in Table 1. Moreover, in order to reduce the time needed to complete a session, the study was divided into 8 groups, each comprising 4 of the 32 conditions (corresponding to 12 stimuli per participant), which required approximately 15 min to complete.
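The condition and stimulus counts above follow directly from the factorial structure, as the short sketch below shows. The factor and level names are taken from the text; the consecutive chunking into groups is only an assumption for illustration, since the actual counterbalanced assignment of conditions to groups is not described here.

```python
from itertools import product

# Five two-level factors, as listed in the experimental design.
FACTORS = {
    "information_sequence": ("problem-to-solution", "solution-to-problem"),
    "problem_specificity": ("vague", "specific"),
    "solution_specificity": ("vague", "specific"),
    "text_styling": ("plain", "bold"),
    "situational_complexity": ("low", "high"),
}
STIMULI_PER_CONDITION = 3
CONDITIONS_PER_GROUP = 4

# Full 2 x 2 x 2 x 2 x 2 crossing of the factor levels.
conditions = [dict(zip(FACTORS, levels)) for levels in product(*FACTORS.values())]

# Assumption: simple consecutive chunking, NOT the study's actual assignment.
groups = [
    conditions[start:start + CONDITIONS_PER_GROUP]
    for start in range(0, len(conditions), CONDITIONS_PER_GROUP)
]

n_stimuli = len(conditions) * STIMULI_PER_CONDITION
stimuli_per_participant = CONDITIONS_PER_GROUP * STIMULI_PER_CONDITION

print(len(conditions), len(groups), n_stimuli, stimuli_per_participant)
# prints: 32 8 96 12
```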

Table 1.

Experimental manipulation of independent variables.

3.3. Participants

Participants were recruited on the Amazon Mechanical Turk (MTurk) online platform. To participate in the study, these “Turkers” were screened for a minimum HIT approval rate of 90% (a human intelligence task, or HIT, is a question that needs an answer); in addition, they had to be located in the U.S. The participants were only allowed to complete a single session. Recruiting a minimum of 100 participants for each of the eight groups (i.e., per 4 conditions) resulted in a total of 843 people being recruited to our study, of which 614 yielded valid responses that were used for subsequent analysis (with a minimum of 70 responses per group). A total of 229 responses were not used, as these participants either failed the attention check (n = 207) or were unable to confirm their participation (n = 22). Participants were compensated USD 1.40 for their time.
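As a quick consistency check on the recruitment figures, the reported counts can be reconciled as follows. All numbers are taken from the paragraph above; the variable names are illustrative, and the retention rate is a derived quantity that the text does not itself report.

```python
recruited = 843
valid = 614
failed_attention_check = 207
unconfirmed_participation = 22
n_groups = 8

# Exclusions reported in the text should account for every unused response.
excluded = failed_attention_check + unconfirmed_participation
assert recruited - valid == excluded  # 229 responses not used

retention_rate = valid / recruited          # derived, not reported in the text
mean_valid_per_group = valid / n_groups     # compare with the stated minimum of 70

print(f"excluded={excluded}, retained={retention_rate:.1%}, "
      f"mean valid per group={mean_valid_per_group:.2f}")
```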

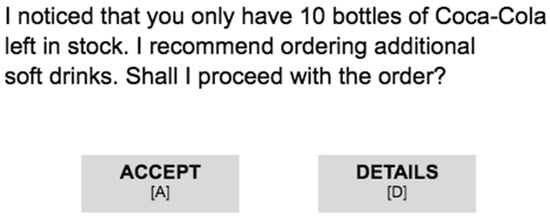

3.4. Experimental Procedure, Stimuli, and Measurement

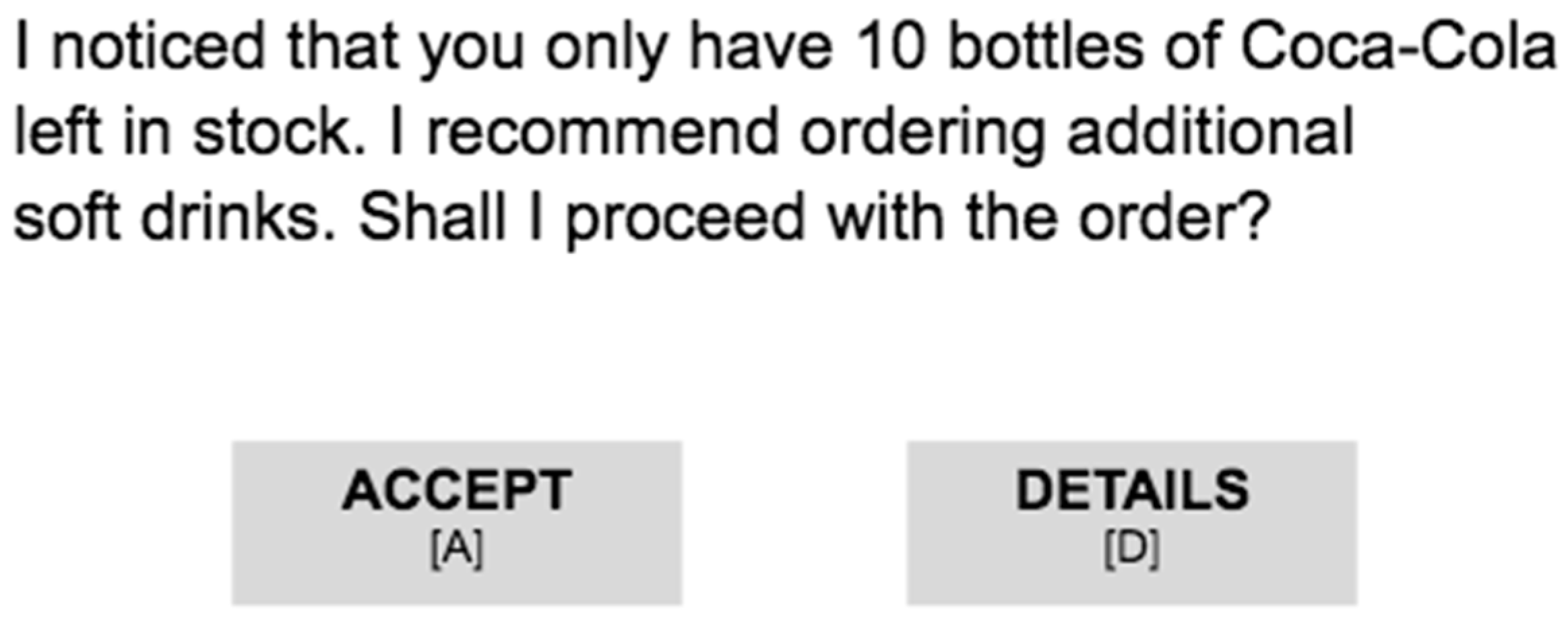

The experiment involved a scenario where participants assumed the role of a restaurant manager in charge of inventory and were required to make logistics decisions regarding inventory replenishment and/or order delivery rerouting based on the recommendations proposed by the RS. The RS messages themselves varied in their presentation according to the abovementioned five factors that were manipulated. Successive text-only messages showing situations (i.e., a problem and an RS-recommended solution) were used as stimuli. Two buttons (“ACCEPT” and “DETAILS”) corresponding to the two decision options available to users were shown below each message (see Figure 3). Participants had to either confirm the recommendation as-is if they felt that the recommendation was appropriate for the shown situation or request additional details if they felt otherwise. The details themselves were not shown to the participant (which was indicated to them in the instructions), as doing so would introduce additional factors to the study beyond the scope of our research questions. Participants entered their choice using a keyboard and were not able to navigate backwards.

Figure 3.

Example of study stimulus.

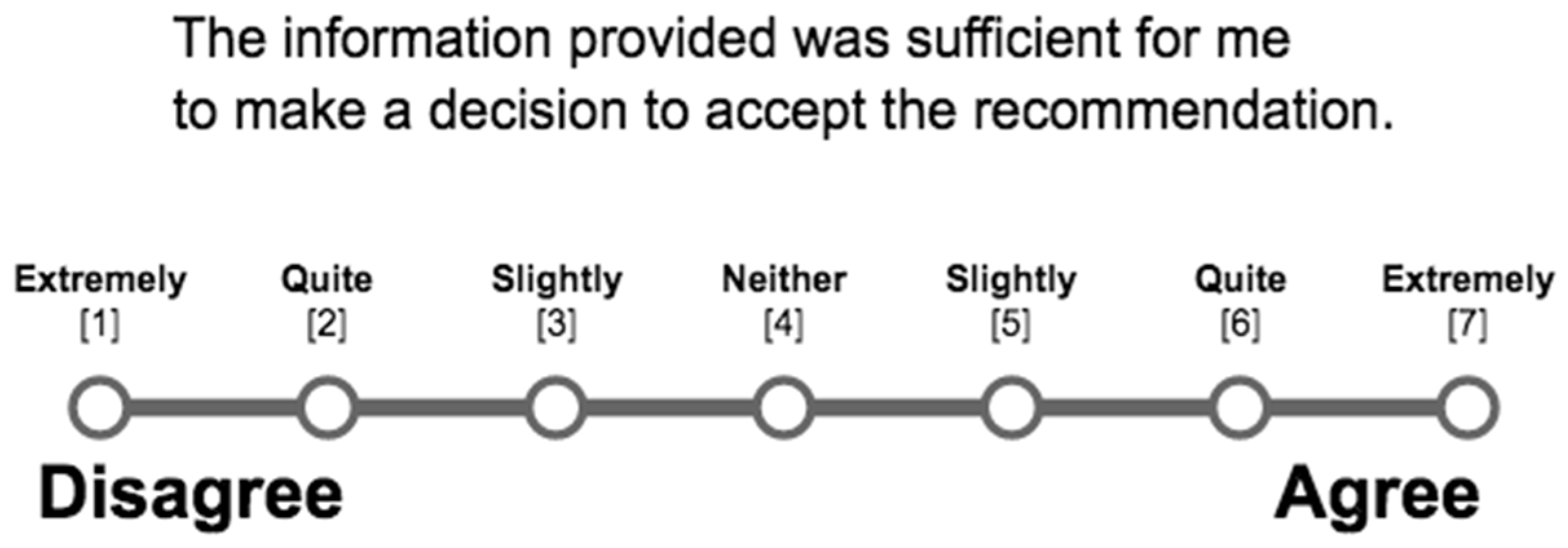

This multi-method experiment used the simulated recommendation messages as stimuli to evoke a reaction from the study participants, which was quantitatively measured via self-reports (survey questions) and behavioral data. Two behavioral measures were collected automatically by the experimental platform (described further below) at this point: (i) the time taken to decide (i.e., the time from stimulus exposure to choice entry) and (ii) the choice entered. After each choice, the participants were asked three (3) questions regarding the recommendation message to measure the perceived sufficiency and transparency of the information and the users’ confidence in the recommendation. Information sufficiency and information transparency are both related to the quality of the information presented by the RS. After evaluating three (3) consecutive recommendation messages, seven (7) questions were asked regarding RS-related perceptions, including ease of use, usefulness, confidence, and trust, as well as participants’ satisfaction with the RS and their intentions to use the RS and/or accept RS-generated recommendations (see Figure 4 for an example). The questionnaire consisted of single-item scales adapted from previously validated scales [9,12] that were used to quantitatively measure the constructs reflected in the proposed research model. Answers were provided along a 7-point Likert scale from extremely disagree (1) to extremely agree (7). To respond, participants could either click on the scale or enter the corresponding number using the keyboard. Constructs were measured through the use of adapted (reduced) single-item constructs [9], a choice that was made given the significant duration and thus cognitive burden of the experiment, as shown in Table 2.

Figure 4.

Example of Likert-scale question.

Table 2.

Measurement items (self-reported).

3.5. Apparatus

Three web-based systems were used to conduct this study. The first was CognitionLib (BeriSoft, Inc., Redwood City, CA, USA), which is a free open-source community for ERTS Scripts that provides an online editor and is currently used by hundreds of academic institutions to create cognitive task paradigms and set up cognitive experiments. Using the ERTS language, we were able to code all the requisite elements for the experiment (as black-and-white to control for the effect of color), including the stimuli in the form of text messages, the survey questions, and the response scales. Once the scripts were coded, they were imported into Cognition Lab (BeriSoft, Inc., Redwood City, CA, USA), a web-based runtime environment that hosts experiments. The third platform used was Amazon’s MTurk (Amazon Inc., Bellevue, WA, USA), a crowdsourcing marketplace that connects businesses to individuals who can perform their tasks virtually, from which the participants were recruited.

4. Analysis and Results

The data were analyzed using methods appropriate for the variable types involved, as follows:

- (1) For the self-reported model, we used cumulative logistic regression with random intercept for modeling the probability of having lower values. We used cumulative logistic regression because we treated the dependent variables as ordinal variables.

- (2) For the behavioral model, we used an approach appropriate for the type of dependent variable as follows:

- (a) For decision-making time, we used linear regression with a random intercept because the distribution of time was roughly normal;

- (b) For recommendation acceptance, we used logistic regression with a random intercept because the behavioral decision (to accept or request details) was binary.

In all three cases, we used a random intercept model to account for the repeated-measures design; more precisely, the model allowed the intercept to vary by participant to account for unmeasured participant-specific characteristics that were not correlated with the independent variables but had an effect on the dependent variable (DV). In the following sections, the results from the analyses corresponding to each of the study’s four research questions are presented.
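For reference, the three random-intercept models can be written in a generic textbook form. This is a sketch under stated assumptions rather than the exact specification fitted in the study: the symbols are introduced here for illustration, the random intercepts are assumed to be normally distributed, and the sign convention of the cumulative logit (which determines how the sign of a coefficient b should be read) varies between software packages and is not stated in the text.

```latex
% i indexes participants, j indexes a participant's repeated responses,
% x_{ij} holds the manipulated message characteristics,
% u_i is the participant-specific random intercept.
\begin{align}
\text{(1) ordinal self-reports (7-point scale):}\quad
  & \operatorname{logit} P(Y_{ij} \le k)
    = \alpha_k + \mathbf{x}_{ij}^{\top}\boldsymbol{\beta} + u_i,
    \qquad k = 1, \dots, 6 \\
\text{(2a) decision-making time:}\quad
  & T_{ij} = \beta_0 + \mathbf{x}_{ij}^{\top}\boldsymbol{\beta} + u_i + \varepsilon_{ij},
    \qquad \varepsilon_{ij} \sim \mathcal{N}(0, \sigma^{2}) \\
\text{(2b) recommendation acceptance:}\quad
  & \operatorname{logit} P(A_{ij} = 1)
    = \beta_0 + \mathbf{x}_{ij}^{\top}\boldsymbol{\beta} + u_i \\
\text{in all three models:}\quad
  & u_i \sim \mathcal{N}(0, \sigma_u^{2})
\end{align}
```

In each model, the term u_i shifts the baseline for all responses from the same participant, which is how the repeated-measures structure is absorbed.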

4.1. RQ1. What Is the Effect of Message Design (Characteristics) on a User’s Beliefs about System-Generated Recommendations?

Information specificity impacted information sufficiency (problem specificity effect H1: b = 0.3039, p < 0.0001; solution specificity effect H3: b = 0.3714, p < 0.0001) and information transparency (problem specificity effect H2: b = 0.1814, p < 0.0001; solution specificity effect H4: b = 0.1499, p < 0.0011). On the other hand, the remaining hypotheses corresponding to RQ1 were not supported, i.e., those regarding the effect of text styling on information sufficiency (H5: b = 0.0254, p = 0.8754) and information transparency (H6: b = 0.1729, p = 0.2697) and the effect of information sequence on information sufficiency (H7: b = −0.0357, p = 0.6756) and information transparency (H8: b = 0.0608, p = 0.4865); in addition, situational complexity was not observed to have a significant effect on information sufficiency (H9: b = 0.0672, p = 0.6804) or information transparency (H10: b = 0.191, p = 0.2258).

4.2. RQ2. What Is the Effect of Message Design (Characteristics) on a User’s Beliefs Regarding the Ease of Use and Usefulness of the RS?

The relationship between text styling (bolding) and perceived ease of use was not supported (H11: b = 0.1327, p = 0.5836), and information sequence was not found to significantly impact perceived ease of use (H12: b = 0.0486, p = 0.7519). On the other hand, the effects of information sufficiency on usefulness (H13: b = 1.6193, p < 0.0001) and information transparency on ease of use (H14: b = 1.3461, p < 0.0001) were shown to be significant.

4.3. RQ3. What Is the Effect of Message Design (Characteristics) on a User’s Attitudes and Behavioral Intentions toward the RS and Its Recommendations?

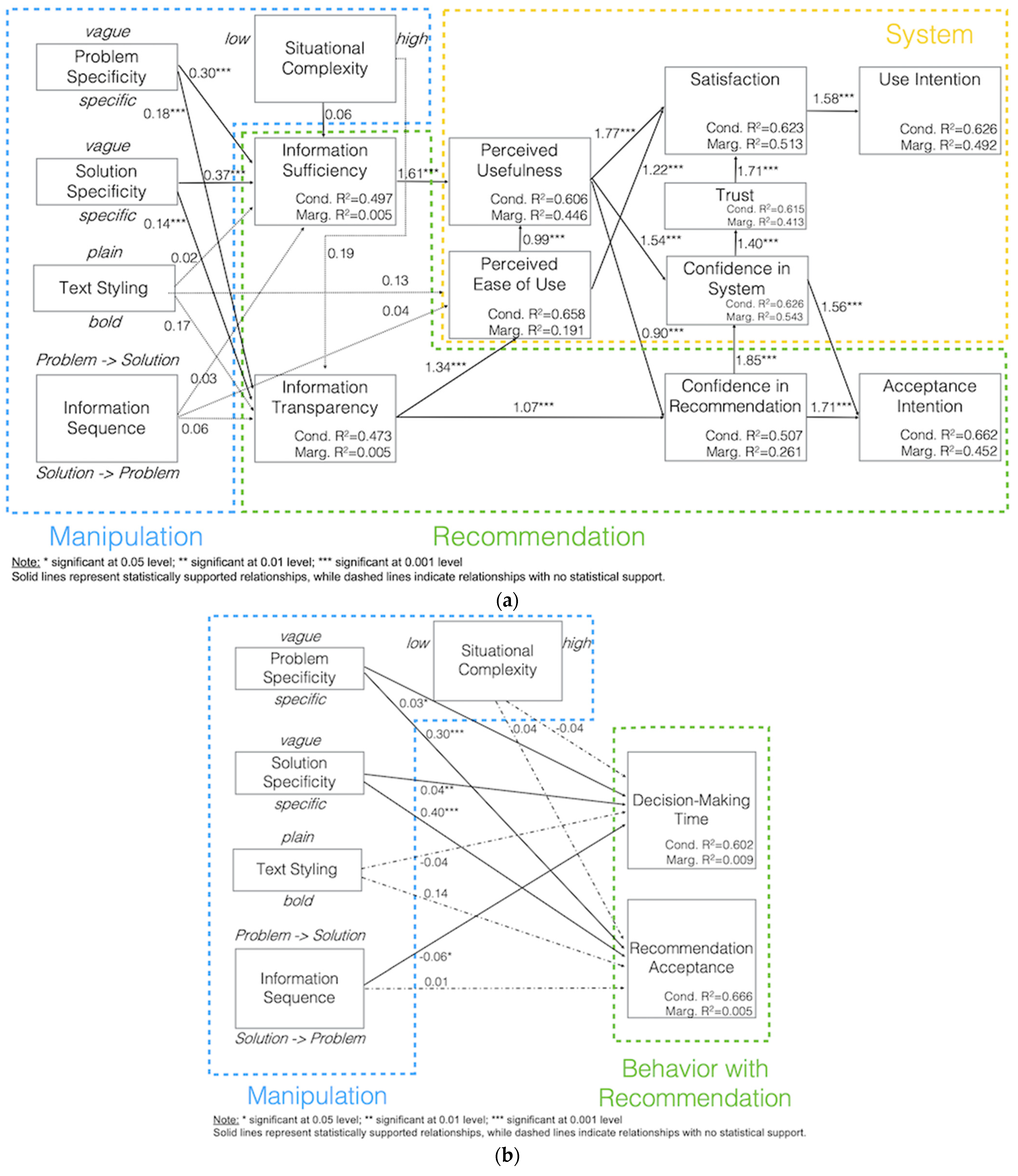

Information transparency positively impacted recommendation confidence (H15: b = 1.0743, p < 0.0001), which was also affected by RS usefulness (H20: b = 0.9111, p < 0.0001) and in turn positively influenced intention to accept the recommendation (H21: b = 1.715, p < 0.0001); in addition, this intention was also affected by RS confidence (H24: b = 1.5625, p < 0.0001). The ease of use of the system positively impacted both system usefulness (H16: b = 0.993, p < 0.0001) and system satisfaction (H17: b = 1.2254, p < 0.0001). Usefulness positively impacted system satisfaction (H18: b = 1.7252, p < 0.0001) and system confidence (H19: b = 1.5487, p < 0.0001). Recommendation confidence had a positive effect on system confidence (H22: b = 1.8567, p < 0.0001), which positively impacted system trust (H23: b = 1.402, p < 0.0001) and finally system satisfaction (H25: b = 1.7122, p < 0.0001). System satisfaction positively impacted the intention to use the recommender system (H26: b = 1.5809, p < 0.0001). Lastly, all system-level mediating constructs demonstrated a good explanation of the variance in their respective DVs, including 45.2% for recommendation acceptance intention and 49.2% for system use intention, as shown in the validated model (see Figure 5a and Table 3).

Figure 5. (a) Validated research model (self-reported data); (b) validated research model (behavioral data).

Table 3. Summary of all hypotheses and their results.

4.4. RQ4. What Is the Effect of Message Design (Characteristics) on a User’s Behaviors with Respect to Decision-Making Time and the Likelihood of Accepting System-Generated Recommendations?

Problem specificity had a significant positive effect on decision-making time (HB1: b = 0.0337, p < 0.05), as did solution specificity (HB2: b = 0.03746, p < 0.01). In contrast, the use of a problem-to-solution information sequence did not have a significant effect on decision-making time across all messages (b = −0.03805, p = 0.1667); however, when time-to-decision was examined by type of decision, information sequence significantly reduced decision-making time for accepted recommendations (HB4: b = 0.06418, p < 0.05) but not for those recommendations for which users requested more details (b = 0.01624, p = 0.7185). On the other hand, text styling and situational complexity did not impact decision-making time (HB3: b = 0.04046, p = 0.4545 and HB5: b = 0.02226, p = 0.6831).

In addition to the observed effect of information specificity on decision-making time, users were significantly more likely to accept the recommendation if the messages stated specific (rather than vague) problems (HB6: b = 0.3006, p < 0.0001) and specific (rather than vague) solutions (HB7: b = 0.4073, p < 0.0001). However, no effect on recommendation acceptance was observed for information sequence (HB8: b = 0.1392, p = 0.2674), text styling (HB9: b = 0.1392, p = 0.2674), or situational complexity (HB10: b = 0.03508, p = 0.7815).

All behavioral results are shown in Figure 5b and reported in Table 3. Lastly, a post hoc analysis further reinforced the favorable effect of information specificity: specific recommendation messages were significantly longer in character count (p < 0.05 in all cases), for both problem and solution specificity and whether counted with or without spaces, yet the decision-making time on a per-character basis was significantly lower (p < 0.001 in all cases).
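The per-character normalization used in the post hoc analysis can be illustrated with a short sketch. This is not the authors' analysis script: the function name, the two example messages, and the decision times are hypothetical and chosen only to show how a longer message can take more time in total yet less time per character. The significance tests reported above are not reproduced here.

```python
def time_per_character(message: str, decision_time_s: float,
                       count_spaces: bool = True) -> float:
    """Return decision-making time divided by message length in characters.

    When count_spaces is False, all whitespace is removed before counting.
    """
    text = message if count_spaces else "".join(message.split())
    if not text:
        raise ValueError("message must contain at least one character")
    return decision_time_s / len(text)


# Hypothetical stimuli and timings (assumptions, not study data).
vague = "Stock issue detected. Adjust order."
specific = "Tomato stock will run out on Friday. Order 20 more cases by Wednesday."

vague_rate = time_per_character(vague, decision_time_s=7.0)
specific_rate = time_per_character(specific, decision_time_s=10.5)

# The specific message is longer and slower in total,
# but faster once time is normalized by character count.
print(f"vague:    {vague_rate:.3f} s/char")
print(f"specific: {specific_rate:.3f} s/char")
```

Passing `count_spaces=False` gives the second variant of the comparison, in which characters are counted without spaces.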

5. Discussion and Conclusions

This empirical study investigated the effects of message design on user behaviors with respect to the likelihood of accepting system-generated recommendations and the time taken to decide, as well as user attitudes toward the recommendation and the RS. Our findings provide clear, evidence-based answers to the four research questions that initially motivated the study. The answers should be of interest to academic researchers, designers of RSs, and current or potential providers as well as users of RSs; they may also generalize to other use contexts and domains.

5.1. Contributions to Research

Prior research on RS interface design has focused mostly on the system level. Thus, the impact of recommendation message design on the user’s interaction with and behavior toward the recommendation and the RS more broadly has not been studied. This study attempted to extend the literature by identifying new factors of message design that may impact the likelihood of users accepting system-generated recommendations as well as their intention to use the recommender system. Five factors were manipulated: (i) information sequence (problem-to-solution vs. solution-to-problem); information specificity, comprising (ii) problem specificity (vague vs. specific) and (iii) solution specificity (vague vs. specific); (iv) situation complexity (simple vs. complex); and (v) text styling (plain vs. bold). Our findings revealed that the specificity of the information embedded in the recommendation message had a positive influence on users’ perceptions of information sufficiency and information transparency (i.e., both were considered to be higher when the information regarding the problem and/or the solution was specific rather than vague). Information specificity also impacted the users’ behaviors. Higher information specificity increased the likelihood that users would accept the recommendation (as reflected in the measured acceptance intention), and it offered the additional benefit of the user needing less time to make the decision. Our findings also revealed that information sequence did not have an impact on user perceptions of either information transparency or information sufficiency; rather, it was found to reduce decision-making time when the information was presented in a problem-to-solution sequence. Neither text styling nor situation complexity was found to affect information transparency or information sufficiency.

Focusing on the impact of user beliefs (i.e., perceived usefulness and ease of use) and attitudes (i.e., confidence in the recommendation and the RS, as well as trust and satisfaction with the RS), the study confirmed that users’ beliefs influence their attitudes, which motivate their intentions to accept the recommendation and use the RS.

5.2. Contributions to Practice

The results of this study have improved our understanding of the impact of message design on users’ intention to accept an algorithmically generated recommendation proposed to them and their intention to use the RS that provided the recommendation. This advances our contemporary understanding of RS designs more broadly by looking at ways to optimize the information presentation and/or interaction layers of the user–RS interactions. For example, e-tailers such as Amazon rely on algorithms to observe patterns and identify the optimal candidates to recommend for subsequent purchases to each consumer/user; however, the way that product recommendation is presented will also impact the likelihood of the consumer/user clicking through the recommended product link and potentially proceeding with the purchase of an algorithmically generated recommendation. Similarly, in a decision-support context, a supply chain management (SCM) AI-enabled system presents exception events to its operators/users; how the emergent problem and the recommended solution are presented to a user will impact their ability to process the situational information and to make a decision, thus impacting the efficacy and use of the system.

The findings of this study can inform RS designers on how to construct (design) system-generated recommendation messages in order to minimize the time needed by the user to make a decision and/or to accept the recommendation or the system at large, for example, if the RS is used during a demo or trial period. Our results showed that information sequence helped to reduce decision-making time. Indeed, when the problem was presented before the solution, i.e., in a situational frame, users experienced less cognitive load and spent less time making a decision than when they were shown recommendations consisting of the solution followed by the problem. Hence, a best practice emerges for RS designers: structure the content of recommendation messages so that it progresses from framing the situation (problem) to presenting the supporting information and concludes with the recommended action (solution).

However, extending from the scenario and focus of this study on new RS users, it is plausible that as user trust in the system-generated recommendations increases over time (i.e., during the continued use of the RS), users may eventually prefer to quickly review the recommendation and approve it without processing the underlying problem and supporting information. In this special use case, the reverse sequence (i.e., solution-to-problem) may be preferred by the user; if so, a second recommendation might be to allow the user to specify the recommendation message’s construction. This would be feasible if, at the system level, such recommendations are not generated as event-to-outcome rules, where recommendations are designed in full a priori for each specific exception event alert. Instead, they would be generated by combining message elements that are marked up or tagged according to a library identifying each content element by its property (e.g., product name, product quantity, exception event, delivery mode, etc.) and synthesized according to the exception event. However, before formally putting forth such a recommendation, additional research is required to obtain support for this anticipated utility.
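The element-based construction described above can be sketched as follows. This is a minimal illustration under stated assumptions, not a description of the system used in the study: the `TEMPLATES` library, the `late_delivery` event, the `delay_days` property, the `compose_message` function, and the example values are all hypothetical. Only the idea of tagged content elements (product name, product quantity, delivery mode) and a user-selectable information sequence comes from the text.

```python
# Hypothetical template library keyed by exception event. Each template
# has a problem part and a solution part with slots for tagged elements.
TEMPLATES = {
    "late_delivery": {
        "problem": "The delivery of {product_name} is delayed by {delay_days} days.",
        "solution": "Order {product_quantity} units of {product_name} via {delivery_mode}.",
    },
}


def compose_message(event: str, elements: dict,
                    sequence: str = "problem-to-solution") -> str:
    """Fill the event's templates with tagged elements and order the parts."""
    template = TEMPLATES[event]
    problem = template["problem"].format(**elements)
    solution = template["solution"].format(**elements)
    if sequence == "problem-to-solution":
        return f"{problem} {solution}"
    if sequence == "solution-to-problem":
        return f"{solution} {problem}"
    raise ValueError(f"unknown sequence: {sequence!r}")


# Hypothetical tagged content elements for one exception event.
elements = {
    "product_name": "tomatoes",
    "product_quantity": 20,
    "delivery_mode": "express courier",
    "delay_days": 2,
}

print(compose_message("late_delivery", elements))
print(compose_message("late_delivery", elements, sequence="solution-to-problem"))
```

Because the sequence is a parameter rather than part of a prewritten message, a user preference for solution-first messages could be honored without authoring a second set of recommendations.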

Another recommendation emerging from this study’s findings is for RS designers to focus on explainability by embedding sufficient detail regarding both the problem and the solution, thereby boosting perceptions of information transparency and information sufficiency. Such perceptions contribute to a significantly more frequent acceptance of system-generated recommendations, thereby saving users time as they would not need to delve deeper (e.g., by clicking on ‘details’) before making a decision.

The above recommendations when implemented can serve as catalysts for both the adoption and continued use of systems that generate recommendations algorithmically for users to consider in their context. By focusing on the design of interfaces that address user needs, designers can then derive implied user requirements for interactive systems (here, RSs) and ensure that the system design is satisfactory. Consequently, users are more likely to perceive them as useful and will therefore be more likely to adopt and use them. This is especially relevant to systems that are used in corporate settings or mandated by managers, which is likely to be the case with RSs and decision-support systems.

5.3. Limitations and Opportunities for Future Research

Despite the scenario used in this study and the validation of the two levels of situational complexity through a manipulation check prior to their use, situational complexity was not found to have any effects on either user beliefs or behaviors. This was an unexpected finding, as the factor and its two levels were tested through two rounds of manipulation checks involving (i) nine participants prior to the start of this study, who reported unanimously that the high-complexity situation was indeed more complex than the low-complexity situation based on the information presented in the recommendation messages, and (ii) thirty participants responding to the question “How challenging was the situation you were faced with?” (simple = 1 … 5 = complex). It is plausible that while the high-complexity stimuli were indeed significantly higher in complexity than the low-complexity stimuli, given the brevity of the messages, the complexity was not sufficiently high to induce significantly higher levels of cognitive load (and by extension, time needed), more negative emotions (either valence or arousal), and/or worse beliefs and attitudes. Another possible explanation for the non-significance of situational complexity is the likely lack of domain knowledge among participants. Nuances in the complexity of messages pertaining to product perishability and ease of product storage might require knowledge that is likely to be unique to those with significant experience in the restaurant or catering industry. Hence, we have two recommendations for future research. First, future research could focus on assessing situational complexity in settings where the role of domain-specific knowledge is less significant. Second, future research that aims to inform RS design according to situational complexity should first identify situational complexity ‘thresholds’, above which effects are observed on users’ cognition, emotion, or behavior, and design stimuli accordingly.

While this study investigated an extended research model and observed the variance extracted from the mediating and dependent variables, further exploration of the effects of other factors on message- and system-level outcomes is needed. Factors that can be identified in the literature and could be tested in future studies include message detail, message length, the use of subjective versus objective language, personification, affective language use, personality, and vague language [16,62,63,64,65,66,67,68].

Building upon results from this experiment, future work should also involve triangulated attentional and physiological measurements to gain a richer understanding of the mechanisms at play. Using eye fixation-related potentials [69], future research could explore the cognitive mechanism involved in user decision making at the moment of the recommendation’s consideration. Future research should also explore potential interactions between message characteristics. For example, revisiting situational complexity, user perceptions of complexity may in fact be the outcomes of interactions with specificity and information sequence, as these are inherently dimensions that add to or reduce message complexity; this would suggest that situational complexity may be a second-order construct comprising various message characteristics that add to complexity. Finally, an extended study could also be performed with the three significant factors used here (i.e., problem information specificity, solution information specificity, and information sequence) to find the best combination for the optimal presentation of recommendation messages.

Author Contributions

Conceptualization and methodology, all authors; writing—original draft preparation, A.F. and C.K.C.; writing—review and editing, J.B., W.V.O., S.S. and P.-M.L.; supervision, C.K.C. and P.-M.L.; funding acquisition, C.K.C. and P.-M.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Blue Yonder (IRCPJ/514835-16) and UX Chair (IRC 505259-16).

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Ethics Review Board of (Redacted) (2020-3866, 28 February 2020).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to privacy considerations and adherence to the ethics review board approved protocol.

Conflicts of Interest

J.B. is an employee of the funding organization; however, the funding board of the organization had no role in the design of the study; in the collection, analyses, or interpretation of data; or in the writing of the manuscript. The decision to publish the results was governed by the general framework of the research grant. All other authors declare no conflict of interest.

References

1. Candillier, L.; Jack, K.; Fessant, F.; Meyer, F. State-of-the-art recommender systems. In Collaborative and Social Information Retrieval and Access: Techniques for Improved User Modeling; IGI Global: Pennsylvania, PA, USA, 2009; pp. 1–22.

2. Lops, P.; de Gemmis, M.; Semeraro, G. Content-based recommender systems: State of the art and trends. In Recommender Systems Handbook; Springer: Berlin/Heidelberg, Germany, 2011; pp. 73–105.

3. Lu, J.; Wu, D.; Mao, M.; Wang, W.; Zhang, G. Recommender system application developments: A survey. Decis. Support Syst. 2015, 74, 12–32.

4. Ricci, F.; Rokach, L.; Shapira, B. (Eds.) Recommender Systems Handbook; Springer: New York, NY, USA, 2015.

5. Nunes, I.; Jannach, D. A systematic review and taxonomy of explanations in decision support and recommender systems. User Model. User-Adapt. Interact. 2017, 27, 393–444.

6. Sarwar, B.; Karypis, G.; Konstan, J.; Riedl, J. Application of Dimensionality Reduction in Recommender System—A Case Study. Minnesota Univ Minneapolis Dept of Computer Science, 2000. Available online: https://apps.dtic.mil/sti/citations/ADA439541 (accessed on 1 June 2022).

7. Shani, G.; Heckerman, D.; Brafman, R.I. An MDP-based recommender system. J. Mach. Learn. Res. 2005, 6, 1265–1295.

8. Xiao, B.; Benbasat, I. E-commerce product recommendation agents: Use, characteristics, and impact. MIS Q. 2007, 31, 137–209.

9. Pu, P.; Chen, L.; Hu, R. A user-centric evaluation framework for recommender systems. In Proceedings of the Fifth ACM Conference on Recommender Systems, Chicago, IL, USA, 23–27 October 2011; pp. 157–164.

10. Sharma, L.; Gera, A. A survey of recommendation system: Research challenges. Int. J. Eng. Trends Technol. 2013, 4, 1989–1992.

11. Bigras, É.; Léger, P.M.; Sénécal, S. Recommendation Agent Adoption: How Recommendation Presentation Influences Employees’ Perceptions, Behaviors, and Decision Quality. Appl. Sci. 2019, 9, 4244.

12. Davis, F.D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 1989, 13, 319–340.

13. Kirakowski, J.; Corbett, M. SUMI: The Software Usability Measurement Inventory. Br. J. Educ. Technol. 1993, 24, 210–212.

14. Flowerdew, L. A Combined Corpus and Systemic-Functional Analysis of the Problem-Solution Pattern in a Student and Professional Corpus of Technical Writing. TESOL Q. 2003, 37, 489.

15. Pettersson, R. Introduction to Message Design. J. Vis. Lit. 2012, 31, 93–104.

16. Schnabel, T.; Bennett, P.N.; Joachims, T. Improving recommender systems beyond the algorithm. arXiv 2018, arXiv:1802.07578.

17. Gunawardana, A.; Shani, G. Evaluating recommender systems. In Recommender Systems Handbook; Springer: Boston, MA, USA, 2015; pp. 265–308.

18. Panniello, U.; Gorgoglione, M.; Tuzhilin, A. Research Note—In CARSs We Trust: How Context-Aware Recommendations Affect Customers’ Trust and Other Business Performance Measures of Recommender Systems. Inf. Syst. Res. 2016, 27, 182–196.

19. Keller, P.A. Regulatory Focus and Efficacy of Health Messages. J. Consum. Res. 2006, 33, 109–114.

20. Chazdon, S.; Grant, S. Situational Complexity and the Perception of Credible Evidence. J. Hum. Sci. Ext. 2019, 7, 4.

21. Kosnes, L.; Pothos, E.M.; Tapper, K. Increased affective influence: Situational complexity or deliberation time? Am. J. Psychol. 2010, 123, 29–38.

22. Johns, G. The Essential Impact of Context on Organizational Behavior. Acad. Manag. Rev. 2006, 31, 386–408.

23. Zhang, Y.; Lu, T.; Phang, C.W.; Zhang, C. Scientific Knowledge Communication in Online Q&A Communities: Linguistic Devices as a Tool to Increase the Popularity and Perceived Professionalism of Knowledge Contributions. J. Assoc. Inf. Syst. 2019, 20, 1129–1173.

24. Schnackenberg, A.K.; Tomlinson, E.C. Organizational transparency: A new perspective on managing trust in organization-stakeholder relationships. J. Manag. 2016, 42, 1784–1810.

25. Yoo, K.H.; Gretzel, U.; Zanker, M. Persuasive Recommender Systems: Conceptual Background and Implications; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2012.

26. Pettersson, R. Information Design Theories. J. Vis. Lit. 2014, 33, 1–96.

27. Mandl, M.; Felfernig, A.; Teppan, E.; Schubert, M. Consumer decision making in knowledge-based recommendation. J. Intell. Inf. Syst. 2011, 37, 1–22.

28. Ozok, A.A.; Fan, Q.; Norcio, A.F. Design guidelines for effective recommender system interfaces based on a usability criteria conceptual model: Results from a college student population. Behav. Inf. Technol. 2010, 29, 57–83.

29. Pettersson, R. Information design–principles and guidelines. J. Vis. Lit. 2010, 29, 167–182.

30. Holliday, D.; Wilson, S.; Stumpf, S. User trust in intelligent systems: A journey over time. In Proceedings of the 21st International Conference on Intelligent User Interfaces, Sonoma, CA, USA, 7–10 March 2016; pp. 164–168.

31. Lamche, B.; Adıgüzel, U.; Wörndl, W. Interactive explanations in mobile shopping recommender systems. In Proceedings of the 8th ACM Conference on Recommender Systems, Foster City, CA, USA, 6–10 October 2014; Volume 14.

32. Zanker, M.; Schoberegger, M. An empirical study on the persuasiveness of fact-based explanations for recommender systems. In Proceedings of the 8th ACM Conference on Recommender Systems, Foster City, CA, USA, 6–10 October 2014; Volume 1253, pp. 33–36.

33. Kunkel, J.; Donkers, T.; Michael, L.; Barbu, C.M.; Ziegler, J. Let Me Explain: Impact of Personal and Impersonal Explanations on Trust in Recommender Systems. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 1–12.

34. Al-Taie, M.Z.; Kadry, S. Visualization of Explanations in Recommender Systems. J. Adv. Manag. Sci. 2014, 2, 140–144.

35. Al-Jabri, I.M.; Roztocki, N. Adoption of ERP systems: Does information transparency matter? Telemat. Inform. 2015, 32, 300–310.

36. Coursaris, C.K.; Van Osch, W.; Albini, A. Antecedents and consequents of information usefulness in user-generated online reviews: A multi-group moderation analysis of review valence. AIS Trans. Hum.-Comput. Interact. 2018, 10, 1–25.

37. Cheung, C.M.K.; Lee, M.K.O.; Rabjohn, N. The impact of electronic word-of-mouth: The adoption of online opinions in online customer communities. Internet Res. 2008, 18, 229–247.

38. Petty, R.E.; Cacioppo, J.T. Communication and Persuasion: Central and Peripheral Routes to Attitude Change; Springer: New York, NY, USA, 1986.

39. Shu, M.; Scott, N. Influence of Social Media on Chinese Students’ Choice of an Overseas Study Destination: An Information Adoption Model Perspective. J. Travel Tour. Mark. 2014, 31, 286–302.

40. Coursaris, C.K.; Van Osch, W.; Nah, F.F.-H.; Tan, C.-H. Exploring the effects of source credibility on information adoption on YouTube. In International Conference on HCI in Business, Government, and Organizations; Springer International Publishing: Berlin/Heidelberg, Germany, 2016; pp. 16–25.

41. Tintarev, N.; Masthoff, J. Designing and Evaluating Explanations for Recommender Systems. In Recommender Systems Handbook; Springer US: Boston, MA, USA, 2010; pp. 479–510.

42. Pu, P.; Chen, L. Trust building with explanation interfaces. In Proceedings of the 11th International Conference on Intelligent User Interfaces, Sydney, Australia, 29 January–1 February 2006; pp. 93–100.

43. Kizilcec, R.F. How much information? Effects of transparency on trust in an algorithmic interface. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, 7–12 May 2016; pp. 2390–2395.

44. Sinha, R.; Swearingen, K. The role of transparency in recommender systems. In CHI’02 Extended Abstracts on Human Factors in Computing Systems; Association for Computing Machinery: New York, NY, USA, 2002; pp. 830–831.

45. Davis, F.D. A Technology Acceptance Model for Empirically Testing New End-User Information Systems: Theory and Results. Doctoral Dissertation, Massachusetts Institute of Technology, Cambridge, MA, USA, 1985.

46. Calisir, F.; Calisir, F. The relation of interface usability characteristics, perceived usefulness, and perceived ease of use to end-user satisfaction with enterprise resource planning (ERP) systems. Comput. Hum. Behav. 2004, 20, 505–515.

47. Amin, M.; Rezaei, S.; Abolghasemi, M. User satisfaction with mobile websites: The impact of perceived usefulness (PU), perceived ease of use (PEOU) and trust. Nankai Bus. Rev. Int. 2014, 5, 258–274.

48. Joo, Y.J.; Lim, K.Y.; Kim, E.K. Online university students’ satisfaction and persistence: Examining perceived level of presence, usefulness and ease of use as predictors in a structural model. Comput. Educ. 2011, 57, 1654–1664.

49. Berkovsky, S.; Taib, R.; Conway, D. How to recommend? User trust factors in movie recommender systems. In Proceedings of the 22nd International Conference on Intelligent User Interfaces, Limassol, Cyprus, 13–16 March 2017; pp. 287–300.

50. Sharma, A.; Cosley, D. Do social explanations work? Studying and modeling the effects of social explanations in recommender systems. In Proceedings of the 22nd International Conference on World Wide Web, Rio de Janeiro, Brazil, 13–17 May 2013; pp. 1133–1144.

51. McGuirl, J.M.; Sarter, N.B. Supporting trust calibration and the effective use of decision aids by presenting dynamic system confidence information. Hum. Factors J. Hum. Factors Ergon. Soc. 2006, 48, 656–665.

52. Jameson, A.; Willemsen, M.C.; Felfernig, A.; de Gemmis, M.; Lops, P.; Semeraro, G.; Chen, L. Human Decision Making and Recommender Systems. In Recommender Systems Handbook; Springer: Boston, MA, USA, 2015; pp. 611–648.

53. Jarvenpaa, S.L.; Shaw, T.R.; Staples, D.S. Toward Contextualized Theories of Trust: The Role of Trust in Global Virtual Teams. Inf. Syst. Res. 2004, 15, 250–267.

54. Coursaris, C.K.; Hassanein, K.; Head, M.; Bontis, N. The impact of distractions on the usability and the adoption of mobile devices for wireless data services. In Proceedings of the European Conference on Information Systems; 2007. Available online: https://aisel.aisnet.org/ecis2007/28 (accessed on 1 June 2022).

55. Coursaris, C.K.; Hassanein, K.; Head, M.M.; Bontis, N. The impact of distractions on the usability and intention to use mobile devices for wireless data services. Comput. Hum. Behav. 2012, 28, 1439–1449.

56. Tintarev, N.; Masthoff, J. A Survey of Explanations in Recommender Systems. In Proceedings of the 2007 IEEE 23rd International Conference on Data Engineering Workshop, Istanbul, Turkey, 17–20 April 2007; pp. 801–810.

57. Gedikli, F.; Jannach, D.; Ge, M. How should I explain? A comparison of different explanation types for recommender systems. Int. J. Hum.-Comput. Stud. 2014, 72, 367–382.

58. Chan, S.H.; Song, Q. Motivational framework: Insights into decision support system use and decision performance. In Decision Support Systems; InTech: Rijeka, Croatia, 2010; pp. 1–24.

59. Kahneman, D.; Tversky, A. Prospect Theory: An Analysis of Decision under Risk. Econometrica 1979, 47, 263.

60. Roy, M.C.; Lerch, F.J. Overcoming Ineffective Mental Representations in Base-Rate Problems. Inf. Syst. Res. 1996, 7, 233–247.

61. Falconnet, A.; Van Osch, W.; Chen, S.L.; Beringer, J.; Fredette, M.; Sénécal, S.; Léger, P.M.; Coursaris, C.K. Beyond System Design: The Impact of Message Design on Recommendation Acceptance. In Information Systems and Neuroscience: NeurosIS Retreat 2020; Springer International Publishing: Berlin/Heidelberg, Germany, 2020.

62. Reiter, E. Natural Language Generation Challenges for Explainable AI. In Proceedings of the 1st Workshop on Interactive Natural Language Technology for Explainable Artificial Intelligence (NL4XAI 2019), Tokyo, Japan, 29 October 2019.

63. Adomavicius, G.; Bockstedt, J.; Curley, S.; Zhang, J. Reducing recommender systems biases: An investigation of rating display designs. MIS Q. 2019, 43, 1321–1341.

64. Schreiner, M.; Fischer, T.; Riedl, R. Impact of content characteristics and emotion on behavioral engagement in social media: Literature review and research agenda. Electron. Commer. Res. 2019, 21, 329–345.

65. Rzepka, C.; Berger, B. User Interaction with AI-Enabled Systems: A Systematic Review of IS Research. 2018. Available online: https://www.researchgate.net/profile/Benedikt-Berger-2/publication/329269262_User_Interaction_with_AI-enabled_Systems_A_Systematic_Review_of_IS_Research/links/5bffb55392851c63cab02730/User-Interaction-with-AI-enabled-Systems-A-Systematic-Review-of-IS-Research.pdf (accessed on 1 June 2022).

66. Li, H.; Chatterjee, S.; Turetken, O. Information Technology Enabled Persuasion: An Experimental Investigation of the Role of Communication Channel, Strategy and Affect. AIS Trans. Hum.-Comput. Interact. 2017, 9, 281–300.

67. Matsui, T.; Yamada, S. The effect of subjective speech on product recommendation virtual agent. In Proceedings of the 24th International Conference on Intelligent User Interfaces: Companion, Marina del Ray, CA, USA, 16–20 March 2019; pp. 109–110.

68. Chattaraman, V.; Kwon, W.-S.; Gilbert, J.E.; Ross, K. Should AI-Based, conversational digital assistants employ social- or task-oriented interaction style? A task-competency and reciprocity perspective for older adults. Comput. Hum. Behav. 2018, 90, 315–330.

69. Léger, P.M.; Sénécal, S.; Courtemanche, F.; de Guinea, A.O.; Titah, R.; Fredette, M.; Labonte-LeMoyne, É. Precision is in the eye of the beholder: Application of eye fixation-related potentials to information systems research. Assoc. Inf. Syst. 2014.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).