User Experience of a Digital Fashion Show: Exploring the Effectiveness of Interactivity in Virtual Reality

Abstract

1. Introduction

2. Related Works

2.1. Convergence Contents

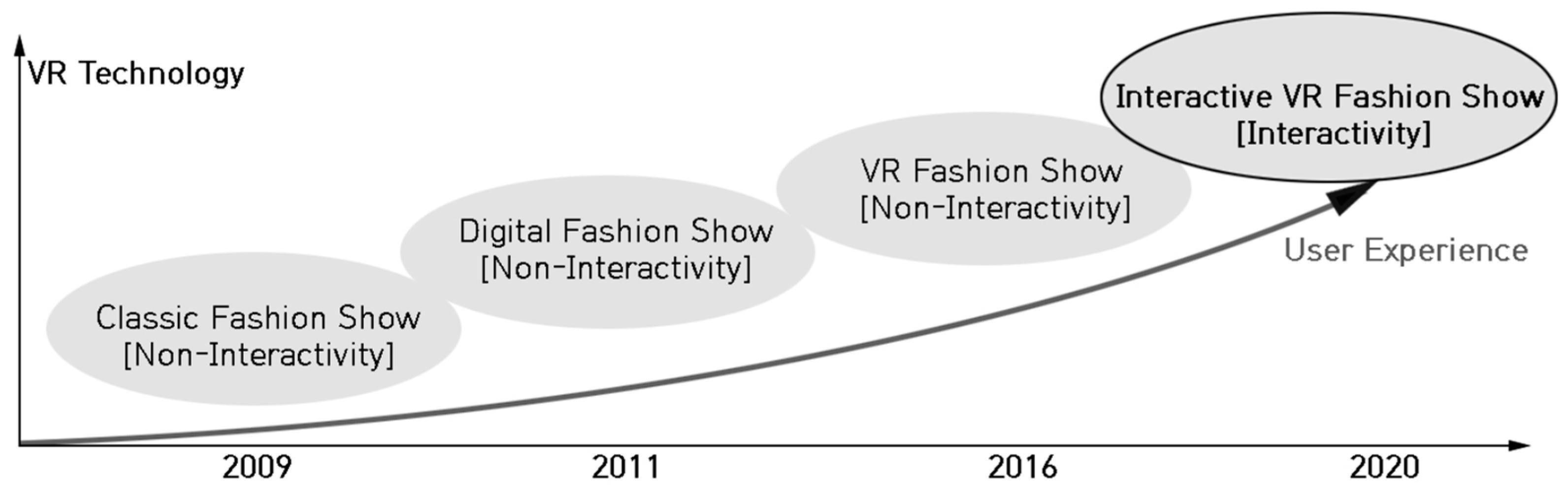

2.2. Digital Fashion Show

3. Design and Implementation of IVR Fashion Show

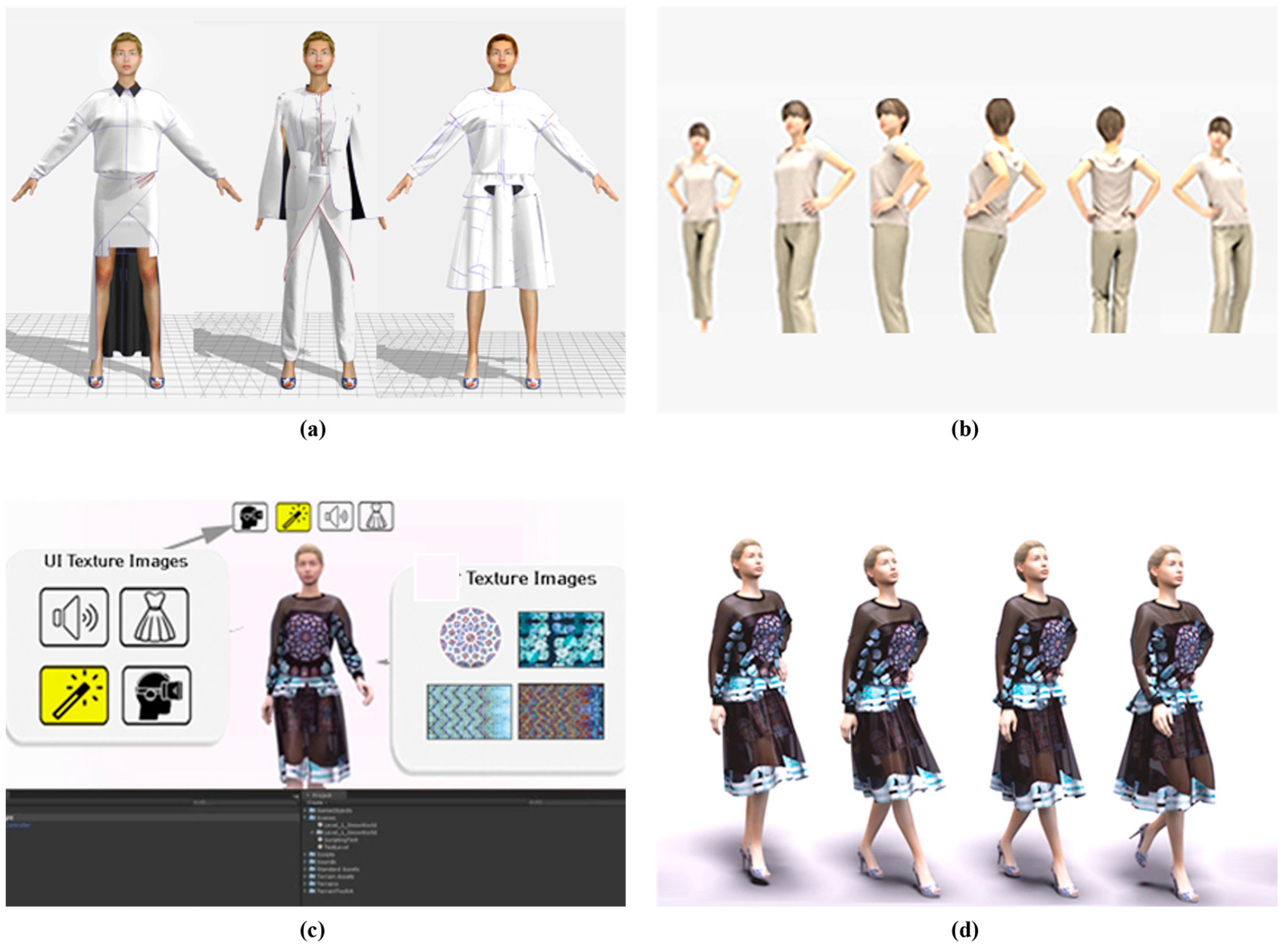

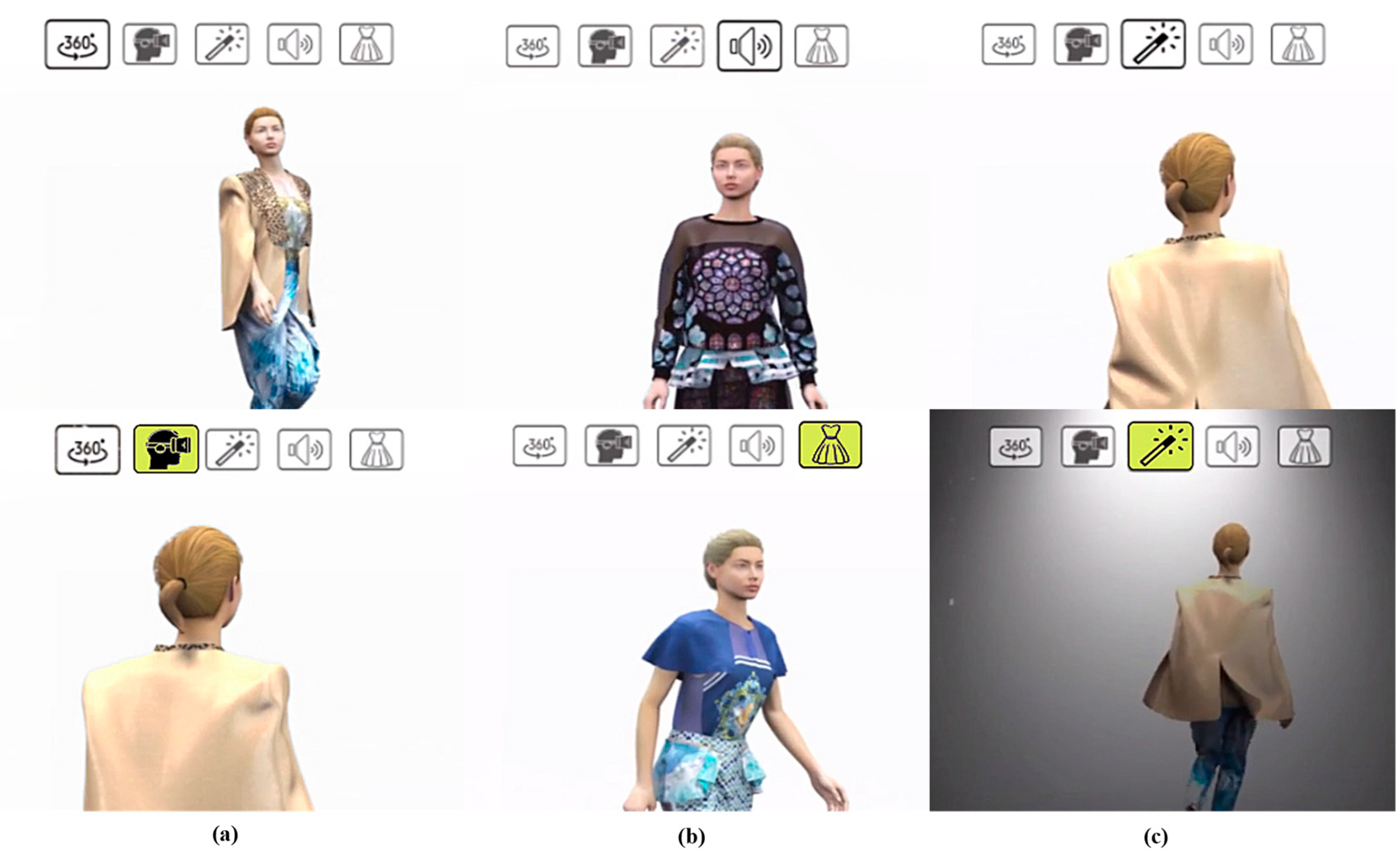

3.1. Interaction Components

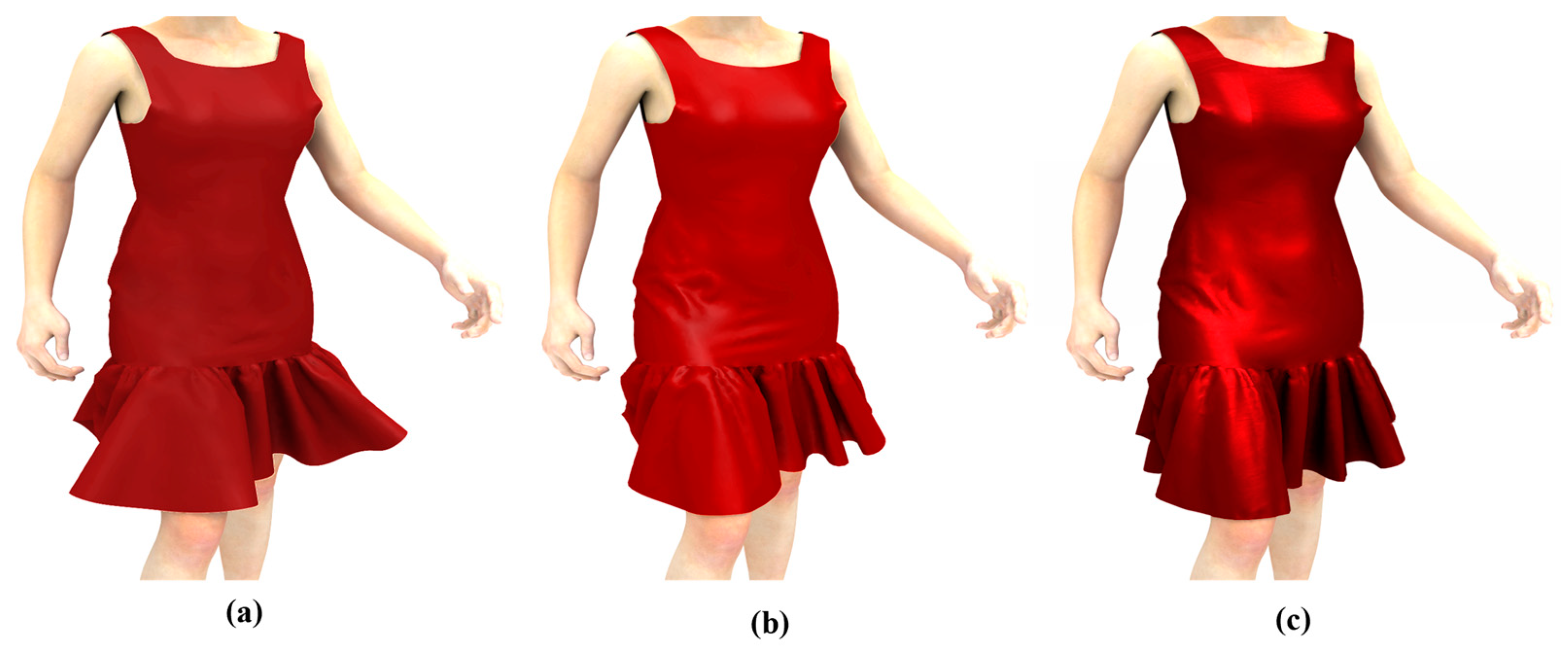

3.2. Digital Clothes

3.3. User Experience

3.4. VR Space

4. Experimental Results

4.1. IVR Fashion Show

4.2. User Test

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Interaction Components | Importance (Scale: 1 to 10) |
|---|---|
| Point of View | 9 |
| Audience | 5 |
| Clothes | 10 |
| Walking Model | 7 |
| Stage | 6 |
| Walking Animation | 4 |
| Illumination Effect | 9 |
| Background Sound | 9 |
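As a reading aid for the importance ratings above, the following sketch ranks the components and picks out those at or above a cutoff. The cutoff of 9 is an assumption chosen for illustration, not a threshold stated in the source, and the names `IMPORTANCE` and `top_components` are hypothetical.

```python
# Importance ratings copied from the table above (scale: 1 to 10).
IMPORTANCE = {
    "Point of View": 9,
    "Audience": 5,
    "Clothes": 10,
    "Walking Model": 7,
    "Stage": 6,
    "Walking Animation": 4,
    "Illumination Effect": 9,
    "Background Sound": 9,
}


def top_components(scores, cutoff=9):
    """Return component names rated at or above `cutoff`, highest first.

    The default cutoff is an illustrative assumption, not a value from the source.
    Ties keep their original table order because Python's sort is stable.
    """
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    return [name for name, score in ranked if score >= cutoff]


if __name__ == "__main__":
    for name in top_components(IMPORTANCE):
        print(name, IMPORTANCE[name])
```

With this cutoff the selected components are Clothes, Point of View, Illumination Effect, and Background Sound, which appear to correspond to the four independent variables (Cloth, POV, Effect, Sound) in the user-test table.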

| Property | Element | Description |
|---|---|---|
| Thickness | Stretch | Relaxation of the slack |
| Thickness | Bend | Bending strength for the turnaround |
| Thickness | Friction | Deformation upon impact |
| Weight | Density | Density of garment |
| Weight | Air Drag | Resistant strength weight |
| Weight | Gravity | Strain control on ground |
| Color | Primary | Adjust primary color |
| Color | Reflection | Reflectance value for light |
| Color | Opacity | Control the transparency |

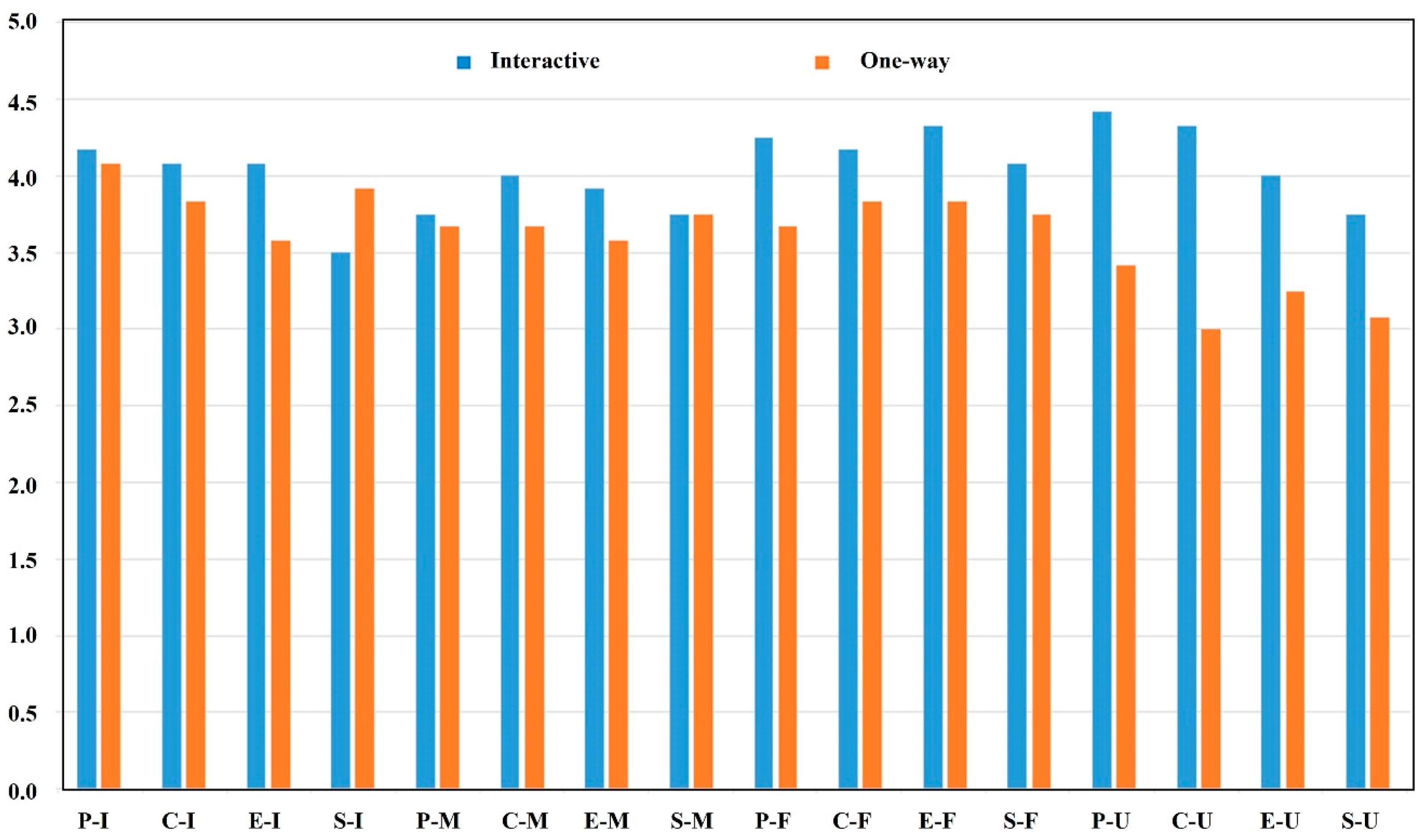

| UX Component | Independent Variable | One-Way Average (S.D.) | Interactive Average (S.D.) | t-Value | p-Value |
|---|---|---|---|---|---|
| Interest | POV | 3.63 (1.04) | 4.34 (0.74) | 6.09 | <0.001 |
| Interest | Cloth | 3.59 (0.91) | 4.42 (0.66) | 8.09 | <0.001 |
| Interest | Effect | 3.57 (0.91) | 4.18 (0.74) | 5.70 | <0.001 |
| Interest | Sound | 3.67 (0.92) | 3.82 (0.86) | 2.17 | <0.05 |
| Fun | POV | 3.43 (1.06) | 4.43 (0.71) | 8.59 | <0.001 |
| Fun | Cloth | 3.40 (1.03) | 4.53 (0.59) | 10.43 | <0.001 |
| Fun | Effect | 3.44 (0.99) | 4.37 (0.67) | 8.52 | <0.001 |
| Fun | Sound | 3.41 (1.03) | 4.12 (0.74) | 6.13 | <0.001 |
| Immersion | POV | 3.70 (0.98) | 4.32 (0.84) | 5.26 | <0.001 |
| Immersion | Cloth | 3.68 (0.89) | 4.12 (0.87) | 3.87 | <0.001 |
| Immersion | Effect | 3.58 (0.99) | 4.00 (0.86) | 3.51 | <0.001 |
| Immersion | Sound | 3.78 (0.90) | 3.84 (0.89) | 0.52 | >0.05 |
| Usability | POV | 3.50 (1.12) | 4.40 (0.84) | 7.04 | <0.001 |
| Usability | Cloth | 3.43 (1.03) | 4.31 (0.81) | 7.36 | <0.001 |
| Usability | Effect | 3.21 (1.08) | 3.86 (0.99) | 4.86 | <0.001 |
| Usability | Sound | 3.21 (1.04) | 3.73 (1.01) | 3.93 | <0.001 |
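The user-test table reports means, standard deviations, and t-values but no effect sizes. As a rough supplement, the sketch below computes the raw mean gain (interactive minus one-way) and a Cohen's d-style standardized difference for each row, using the root mean square of the two standard deviations as the standardizer. This assumes equal sample sizes in both conditions and ignores any within-subject correlation; the test design and sample size are not stated here, so the values are indicative only. All function and variable names are hypothetical.

```python
import math

# (UX component, variable, one-way mean, one-way SD, interactive mean, interactive SD)
# Values copied from the user-test table above.
ROWS = [
    ("Interest", "POV", 3.63, 1.04, 4.34, 0.74),
    ("Interest", "Cloth", 3.59, 0.91, 4.42, 0.66),
    ("Interest", "Effect", 3.57, 0.91, 4.18, 0.74),
    ("Interest", "Sound", 3.67, 0.92, 3.82, 0.86),
    ("Fun", "POV", 3.43, 1.06, 4.43, 0.71),
    ("Fun", "Cloth", 3.40, 1.03, 4.53, 0.59),
    ("Fun", "Effect", 3.44, 0.99, 4.37, 0.67),
    ("Fun", "Sound", 3.41, 1.03, 4.12, 0.74),
    ("Immersion", "POV", 3.70, 0.98, 4.32, 0.84),
    ("Immersion", "Cloth", 3.68, 0.89, 4.12, 0.87),
    ("Immersion", "Effect", 3.58, 0.99, 4.00, 0.86),
    ("Immersion", "Sound", 3.78, 0.90, 3.84, 0.89),
    ("Usability", "POV", 3.50, 1.12, 4.40, 0.84),
    ("Usability", "Cloth", 3.43, 1.03, 4.31, 0.81),
    ("Usability", "Effect", 3.21, 1.08, 3.86, 0.99),
    ("Usability", "Sound", 3.21, 1.04, 3.73, 1.01),
]


def standardized_difference(m1, sd1, m2, sd2):
    """Cohen's d-style effect size from summary statistics.

    Assumes equal group sizes, so the standardizer is the root mean
    square of the two standard deviations (an assumption, see lead-in).
    """
    pooled_sd = math.sqrt((sd1 ** 2 + sd2 ** 2) / 2)
    return (m2 - m1) / pooled_sd


def summarize(rows):
    """Return (component, variable, mean gain, standardized difference) per row."""
    return [
        (ux, var, round(m2 - m1, 2),
         round(standardized_difference(m1, sd1, m2, sd2), 2))
        for ux, var, m1, sd1, m2, sd2 in rows
    ]


if __name__ == "__main__":
    for ux, var, gain, d in summarize(ROWS):
        print(f"{ux:<10} {var:<7} gain={gain:+.2f}  d={d:+.2f}")
```

Read this way, the smallest gain is the Sound variable for Immersion, which is also the only row in the table with p > 0.05, while the largest gain is Cloth for Fun.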


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ahn, D.-K.; Bae, B.-C.; Kim, Y. User Experience of a Digital Fashion Show: Exploring the Effectiveness of Interactivity in Virtual Reality. Appl. Sci. 2023, 13, 2558. https://doi.org/10.3390/app13042558
