Point Cloud Repair Method via Convex Set Theory

Abstract

1. Introduction

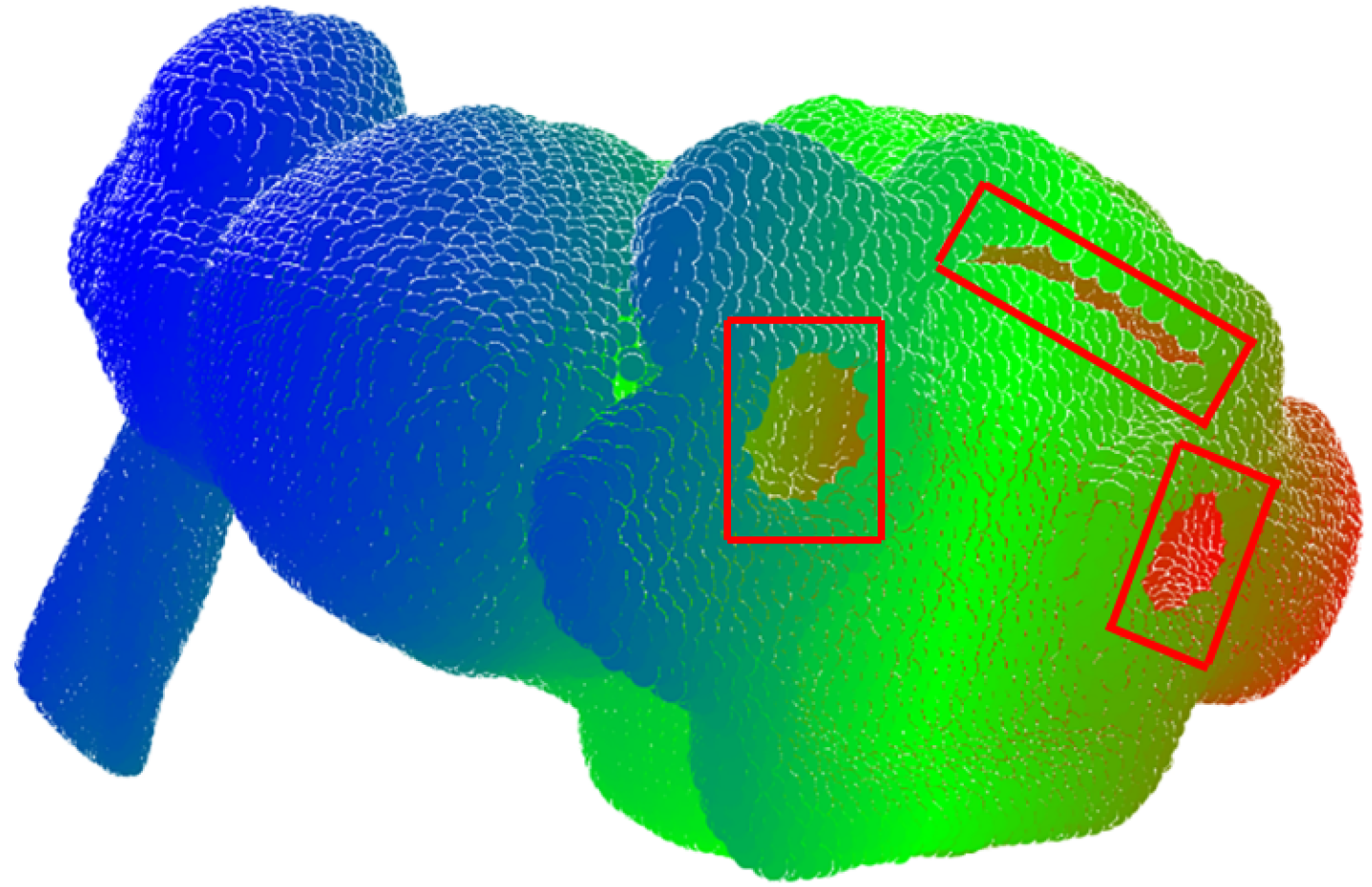

2. Methods

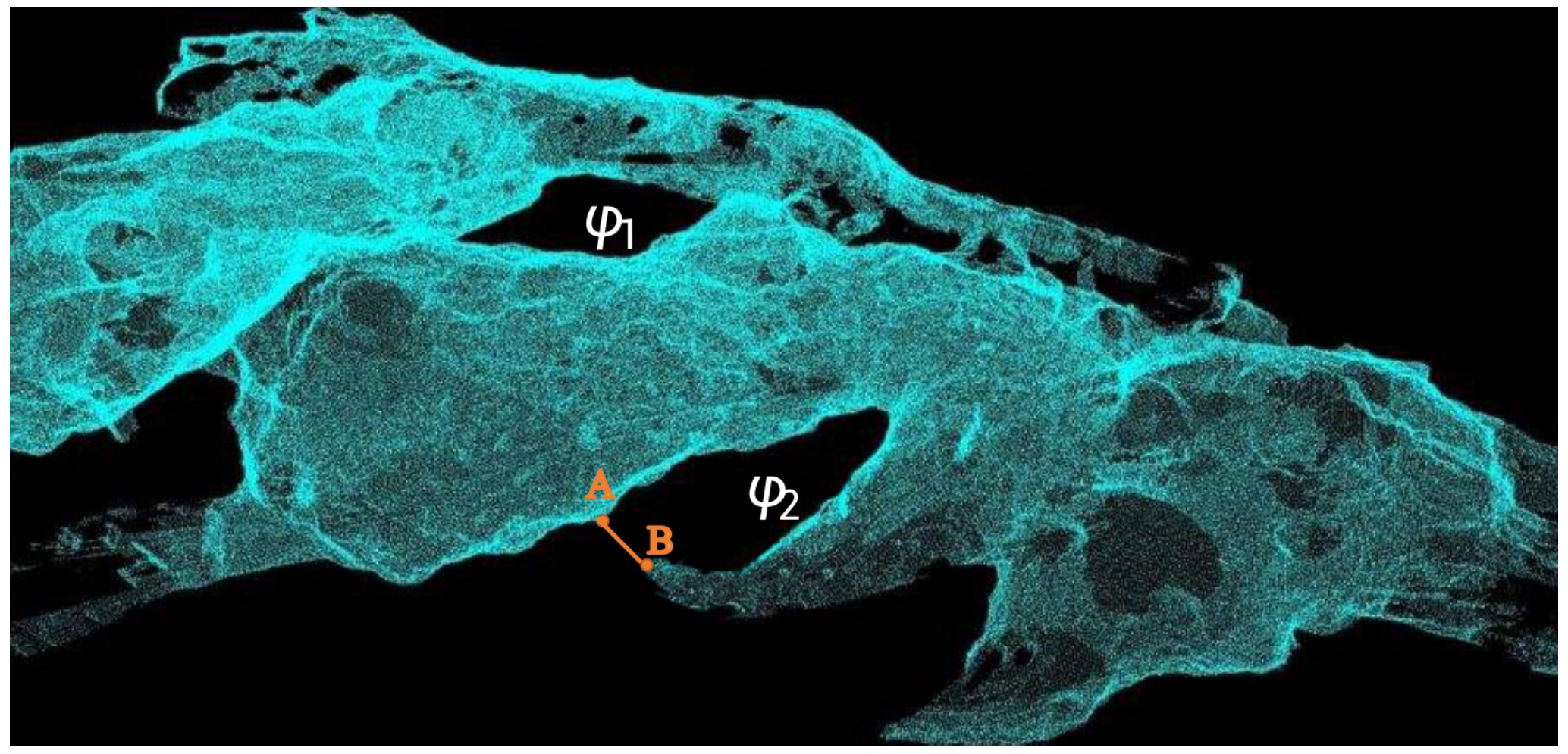

2.1. Hole Definition

2.2. Weakening of Convex Set

2.3. Discretization of Regional Space

2.4. Filtering of Spatial Subunits

2.4.1. Coarse Screening

2.4.2. Fine Screening

2.5. Generate Fill Points

3. Results and Discussion

3.1. Qualitative Analysis

3.2. Quantitative Analysis

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Pernot, J.P.; Moraru, G.; Véron, P. Filling holes in meshes using a mechanical model to simulate the curvature variation minimization. Comput. Graph. 2006, 30, 892–902.

2. Bac, A.; Tran, N.V.; Daniel, M. A multistep approach to restoration of locally undersampled meshes. In Proceedings of the International Conference on Geometric Modeling and Processing, Hangzhou, China, 23–25 April 2008; Springer: Berlin/Heidelberg, Germany, 2008; pp. 272–289.

3. Chen, C.Y.; Cheng, K.Y. A sharpness-dependent filter for recovering sharp features in repaired 3D mesh models. IEEE Trans. Vis. Comput. Graph. 2007, 14, 200–212.

4. Harary, G.; Tal, A.; Grinspun, E. Feature-Preserving Surface Completion Using Four Points. Comput. Graph. Forum 2014, 33, 45–54.

5. Hu, W.; Fu, Z.; Guo, Z. Local frequency interpretation and non-local self-similarity on graph for point cloud inpainting. IEEE Trans. Image Process. 2019, 28, 4087–4100.

6. Sung, M.; Kim, V.G.; Angst, R.; Guibas, L. Data-driven structural priors for shape completion. ACM Trans. Graph. (TOG) 2015, 34, 1–11.

7. Sipiran, I.; Gregor, R.; Schreck, T. Approximate symmetry detection in partial 3d meshes. Comput. Graph. Forum 2014, 33, 131–140.

8. Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. Pointnet: Deep learning on point sets for 3d classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

9. Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. Pointnet++: Deep hierarchical feature learning on point sets in a metric space. Adv. Neural Inf. Process. Syst. 2017, 30, 5099–5108.

10. Alliegro, A.; Valsesia, D.; Fracastoro, G.; Magli, E.; Tommasi, T. Denoise and contrast for category agnostic shape completion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 4629–4638.

11. Huang, Z.; Yu, Y.; Xu, J.; Ni, F.; Le, X. Pf-net: Point fractal network for 3d point cloud completion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 7662–7670.

12. Sarmad, M.; Lee, H.J.; Kim, Y.M. Rl-gan-net: A reinforcement learning agent controlled gan network for real-time point cloud shape completion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–17 June 2019; pp. 5898–5907.

13. Xie, H.; Yao, H.; Zhou, S.; Mao, J.; Zhang, S.; Sun, W. Grnet: Gridding residual network for dense point cloud completion. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Cham, Switzerland, 2020; pp. 365–381.

14. Dai, A.; Ruizhongtai Qi, C.; Nießner, M. Shape completion using 3d-encoder-predictor cnns and shape synthesis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5868–5877.

15. Liu, M.; Sheng, L.; Yang, S.; Shao, J.; Hu, S.M. Morphing and sampling network for dense point cloud completion. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11596–11603.

16. Yu, X.; Rao, Y.; Wang, Z.; Liu, Z.; Lu, J.; Zhou, J. Pointr: Diverse point cloud completion with geometry-aware transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 12498–12507.

17. Wen, X.; Li, T.; Han, Z.; Liu, Y.S. Point cloud completion by skip-attention network with hierarchical folding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1939–1948.

18. Tchapmi, L.P.; Kosaraju, V.; Rezatofighi, H.; Reid, I.; Savarese, S. Topnet: Structural point cloud decoder. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–17 June 2019; pp. 383–392.

19. Yang, Y.; Feng, C.; Shen, Y.; Tian, D. Foldingnet: Point cloud auto-encoder via deep grid deformation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 206–215.

20. Wen, X.; Xiang, P.; Han, Z.; Cao, Y.P.; Wan, P.; Zheng, W.; Liu, Y.S. Pmp-net: Point cloud completion by learning multi-step point moving paths. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 7443–7452.

21. Wen, X.; Xiang, P.; Han, Z.; Cao, Y.P.; Wan, P.; Zheng, W.; Liu, Y.S. PMP-Net++: Point cloud completion by transformer-enhanced multi-step point moving paths. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 852–867.

22. Zhao, X.; Zhang, B.; Wu, J.; Hu, R.; Komura, T. Relationship-based Point Cloud Completion. IEEE Trans. Vis. Comput. Graph. 2021, 28, 4940–4950.

23. Xu, M.; Wang, Y.; Liu, Y.; Qiao, Y. CP3: Unifying Point Cloud Completion by Pretrain-Prompt-Predict Paradigm. arXiv 2022, arXiv:2207.05359.

24. Wang, C.; Ning, X.; Sun, L.; Zhang, L.; Li, W.; Bai, X. Learning Discriminative Features by Covering Local Geometric Space for Point Cloud Analysis. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15.

25. Wang, C.; Wang, X.; Zhang, J.; Zhang, L.; Bai, X.; Ning, X.; Zhou, J.; Hancock, E. Uncertainty estimation for stereo matching based on evidential deep learning. Pattern Recognit. 2022, 124, 108498.

26. Gerhard, H.B.; Ruwen, S.; Reinhard, K. Detecting Holes in Point Set Surfaces. J. WSCG 2006, 14, 89–96.

27. Trinh, T.H.; Tran, M.H. Hole boundary detection of a surface of 3D point clouds. In Proceedings of the 2015 IEEE International Conference on Advanced Computing and Applications (ACOMP), Ho Chi Minh City, Vietnam, 23–25 November 2015; pp. 124–129.

28. Lu, Y.; Rasmussen, C. Simplified Markov random fields for efficient semantic labeling of 3D point clouds. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 7–12 October 2012; pp. 2690–2697.

29. Zhang, J.; Zhou, M.Q.; Zhang, Y.H.; Geng, G.H. Global Feature Extraction from Scattered Point Clouds Based on Markov Random Field. Acta Autom. Sin. 2016, 42, 7–10.

30. Xiang, P.; Wen, X.; Liu, Y.S.; Cao, Y.P.; Wan, P.; Zheng, W.; Han, Z. Snowflakenet: Point cloud completion by snowflake point deconvolution with skip-transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 5499–5509.

31. Xie, C.; Wang, C.; Zhang, B.; Yang, H.; Chen, D.; Wen, F. Style-based point generator with adversarial rendering for point cloud completion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 4619–4628.

32. Yang, L.; Yan, Q.; Xiao, C. Shape-controllable geometry completion for point cloud models. Vis. Comput. 2017, 33, 385–398.

33. Yuan, W.; Khot, T.; Held, D.; Mertz, C.; Hebert, M. PCN: Point Completion Network. In Proceedings of the 2018 IEEE International Conference on 3D Vision (3DV), Verona, Italy, 5–8 September 2018; pp. 728–737.

34. Pauly, M.; Mitra, N.J.; Giesen, J.; Gross, M.H.; Guibas, L.J. Example-based 3d scan completion. In Proceedings of the Symposium on Geometry Processing, Vienna, Austria, 4–6 July 2005; pp. 23–32.

35. Tian, D.; Ochimizu, H.; Feng, C.; Cohen, R.; Vetro, A. Geometric distortion metrics for point cloud compression. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 3460–3464.

36. Dinesh, C.; Bajić, I.V.; Cheung, G. Exemplar-based framework for 3D point cloud hole filling. In Proceedings of the 2017 IEEE Visual Communications and Image Processing (VCIP), St. Petersburg, FL, USA, 10–13 December 2017; pp. 1–4.

37. Rusu, R.B.; Cousins, S. 3D is here: Point Cloud Library (PCL). In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China, 9–13 May 2011.

38. Stanford Data Set. Available online: http://graphics.stanford.edu/data/3Dscanrep/ (accessed on 8 November 2021).

| Model | SpareNet [31] | SnowflakeNet [30] | PF-Net [11] | SCCR [32] | Ours |
|---|---|---|---|---|---|
| Airplane | 3.28 | 2.52 | 2.79 | 1.85 | 0.23 |
| Chair | 4.06 | 3.38 | 4.10 | 1.58 | 1.04 |
| Table | 18.56 | 13.39 | 19.31 | 4.25 | 0.51 |
| Car | 6.33 | 10.30 | 12.39 | 2.96 | 1.13 |
| Monster | 811.98 | 5567.67 | 6092.97 | 240.32 | 107.44 |
| Monkey | 290.11 | 529.26 | 603.14 | 2.77 | 1.21 |
| Sphere | 148.25 | 182.33 | 223.45 | 26.01 | 2.47 |
| Mean | 183.22 | 901.26 | 994.02 | 39.96 | 16.29 |
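
The Mean row of the table above appears to be the unweighted arithmetic mean of the seven per-model values in each column. The following is a minimal sketch of that check, not the authors' evaluation code; the names `methods`, `rows`, and `column_means` are hypothetical, and the numbers are copied directly from the table.

```python
# Per-model values copied from the table above, in column order.
# Assumption: the Mean row is a plain (unweighted) average over the 7 models.
methods = ["SpareNet", "SnowflakeNet", "PF-Net", "SCCR", "Ours"]
rows = {
    "Airplane": [3.28, 2.52, 2.79, 1.85, 0.23],
    "Chair": [4.06, 3.38, 4.10, 1.58, 1.04],
    "Table": [18.56, 13.39, 19.31, 4.25, 0.51],
    "Car": [6.33, 10.30, 12.39, 2.96, 1.13],
    "Monster": [811.98, 5567.67, 6092.97, 240.32, 107.44],
    "Monkey": [290.11, 529.26, 603.14, 2.77, 1.21],
    "Sphere": [148.25, 182.33, 223.45, 26.01, 2.47],
}


def column_means(table):
    """Return the per-column mean over all rows, rounded to 2 decimals."""
    n_rows = len(table)
    # zip(*values) transposes the rows into one tuple per method column.
    return [round(sum(col) / n_rows, 2) for col in zip(*table.values())]


means = dict(zip(methods, column_means(rows)))
for name, value in means.items():
    print(f"{name}: {value:.2f}")
```

Because the Monster, Monkey, and Sphere rows are one to two orders of magnitude larger than the others for most methods, the unweighted mean is dominated by those three models.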

| Model | SpareNet [31] | SnowflakeNet [30] | PF-Net [11] | SCCR [32] | Ours |
|---|---|---|---|---|---|
| Airplane | 18.54 | 19.31 | 18.01 | 40.38 | 62.41 |
| Chair | 15.15 | 14.48 | 15.11 | 32.05 | 37.71 |
| Table | 19.22 | 19.91 | 13.38 | 41.52 | 58.95 |
| Car | 15.48 | 14.53 | 13.51 | 40.20 | 44.23 |
| Monster | 4.81 | 5.01 | 2.33 | 32.41 | 51.72 |
| Monkey | −2.52 | 3.01 | −3.74 | 37.36 | 50.83 |
| Sphere | −7.17 | −9.31 | −8.63 | 10.17 | 33.45 |
| Mean | 11.78 | 9.56 | 7.14 | 33.44 | 48.47 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Dong, T.; Zhang, Y.; Li, M.; Bai, Y. Point Cloud Repair Method via Convex Set Theory. Appl. Sci. 2023, 13, 1830. https://doi.org/10.3390/app13031830
