Abstract

In this paper, we propose an efficient authentication method with dual embedding strategies for absolute moment block truncation coding (AMBTC) compressed images. Prior authentication works do not take the smoothness of blocks into account and use only a single embedding strategy, thereby limiting image quality. In the proposed method, blocks are classified as either smooth or complex, and a different embedding strategy is applied to each class. Both bitmaps and quantized values can carry authentication codes, but embedding in the bitmaps of complex blocks causes higher distortion than embedding in those of smooth blocks. Therefore, authentication codes are embedded into the bitmaps of smooth blocks and the quantized values of complex blocks. In addition, our method uses the to-be-protected contents to generate the authentication codes, thereby providing satisfactory detection results. Experimental results show that some special types of tampering that go undetected by prior works are detected by the proposed method, and that the average image quality is improved by at least 1.39 dB.

1. Introduction

With the development of the Internet, digital images are increasingly easy to transmit over networks. However, these images may be tampered with, maliciously or otherwise, so that unauthentic images are received. Therefore, image authentication has become an important issue. Fragile watermarking [1,2,3,4,5] is a common image authentication technique that embeds watermark data into images. If pixels are tampered with, the embedded data can be retrieved to authenticate the image.

The fragile watermarking technique can be applied to images in the spatial [6,7,8,9,10] or compressed [11,12,13] domain. In the spatial domain, data are embedded directly by modifying pixel values. Since spatial-domain images contain much redundancy for embedding, higher payloads can be expected. However, in recent years, most images have been stored and transmitted in compressed formats because compressed images require less storage space and transmission bandwidth. At present, vector quantization (VQ) [14,15,16], Joint Photographic Experts Group (JPEG) [17,18,19], and absolute moment block truncation coding (AMBTC) [20,21] are some commonly used compression techniques. Amongst these techniques, AMBTC requires the least computational cost. Therefore, several authentication techniques based on AMBTC images [22,23,24,25,26,27] have been proposed.

AMBTC was proposed by Lema and Mitchell [28]; it compresses each block into two quantized values and a bitmap. In 2013, ref. [22] proposed an AMBTC image authentication method with joint image coding. This method used a pseudo-random sequence to generate authentication codes, which were embedded into the bitmaps. The embedded bitmaps, together with the quantized values, were then losslessly compressed to obtain the final bitstream. The advantages of this method were that it required less storage and achieved good detection accuracy. To improve image quality, ref. [23] in 2016 proposed an image authentication method based on a reference matrix. The matrix was employed to embed authentication codes into the quantized values, and the length of the authentication codes was determined by the size of the matrix. Ref. [23] achieved a higher image quality than [22]. However, because the authentication codes were generated independently of the bitmaps, this method could not detect tampering in the bitmaps. To improve security, ref. [24] proposed an authentication method that used the bitmaps to generate the authentication codes. The resulting image had quality equivalent to that of [23] and higher detection accuracy.

In 2018, ref. [25] proposed a tamper detection method for AMBTC images. The most significant bits (MSBs) of quantized values and bitmaps were hashed to generate the authentication codes. The codes were embedded into the least significant bits (LSBs) of the quantized values using the LSB embedding method. To obtain higher image quality, the MSBs of quantized values were perturbed within a small range and used to generate a set of authentication codes. The code with the smallest embedding error was selected for embedment to provide a higher image quality than [22,23,24].

In 2018, ref. [26] also proposed an AMBTC authentication method using adaptive pixel pair matching (APPM). Bitmaps and the position information were employed to generate the authentication codes, which were embedded in the quantized values using APPM. A threshold was used to classify blocks into edge and nonedge ones. If the difference of the quantized values was larger than the threshold, the block was considered an edge block; otherwise, it was considered a nonedge block. Edges in an image were considered more informative than nonedges; therefore, more bits were embedded in edge blocks than in nonedge ones to provide more protection to edges.

In 2019, ref. [27] proposed a high-precision authentication method for AMBTC images using matrix encoding (ME). Bitmaps and position information were used to generate 6-bit authentication codes. The generated codes were divided into two equal parts of 3 bits, and embedded into the bitmaps using matrix encoding. To avoid damages to the bitmaps caused by embedding, the positions of to-be-flipped bits in the bitmap were recorded and embedded into the quantized values. This method resulted in higher image quality as well as higher detection accuracy.

Methods [25,26,27] can detect most tampering and provide satisfactory image quality. However, some special tampering may escape detection by these methods. For example, the generation of authentication codes in [25] is independent of block position information; therefore, tampering cannot be detected when two blocks are interchanged. In addition, the embedding techniques of these methods do not take the smoothness of blocks into account, which results in low image quality. To improve image quality and security, this paper proposes an authentication method for AMBTC images using dual embedding strategies. Blocks are classified as smooth or complex according to a predefined threshold: a block is smooth if the difference between its two quantized values is less than the threshold, and complex otherwise. Both quantized values and bitmaps can carry authentication codes. However, the difference between the quantized values of a complex block can be large, so flipping bits of its bitmap can cause significant distortion. Therefore, the authentication codes of complex blocks are embedded into the quantized values. In contrast, flipping bits of the bitmap of a smooth block causes little distortion, and thus the authentication codes of smooth blocks are embedded into the bitmaps. Because the dual embedding strategies are matched to block smoothness, a higher image quality is obtained. Moreover, the authentication codes in the proposed method are generated from the to-be-protected contents, which increases the probability of detecting some special tampering.

2. Related Works

This section briefly introduces the concepts of AMBTC compression technique. The APPM and matrix encoding techniques used in the proposed method are also presented. The specific procedures are described in the following three subsections.

2.1. AMBTC Compression Technique

AMBTC is a lossy compression technique [28] that represents a block by a low quantized value, a high quantized value, and a bitmap. The proposed method embeds the authentication codes into these quantized values and bitmaps to protect AMBTC images. A limitation of AMBTC is that both its compression ratio and its image quality are relatively low. To compress an image, first divide it into blocks of equal size and scan the blocks one by one. For each block, calculate the mean of its pixels. The low quantized value is the average of the pixels smaller than the mean, and the high quantized value is the average of the pixels greater than or equal to the mean. The bitmap is obtained by comparing each pixel with the mean: the corresponding bit is 0 if the pixel is smaller than the mean and 1 otherwise. The compressed code of a block thus consists of the low quantized value, the high quantized value, and the bitmap. All blocks are compressed in the same way, and the result is the set of AMBTC compressed codes of the image. To decompress a code, prepare an empty block of the same size as the original one; each pixel is set to the low quantized value if the corresponding bit is 0 and to the high quantized value otherwise. All codes are decompressed in the same way to obtain the decompressed image.

A simple example illustrates the AMBTC compression procedure. Let [36, 34, 41, 42; 37, 35, 43, 46; 35, 39, 44, 41; 36, 41, 42, 48] be the original block of size 4 × 4. The mean value of the block is 40. The pixels less than 40 are 36, 34, 37, 35, 35, 39 and 36, and their average gives the low quantized value 36. Similarly, the average of the nine pixels greater than or equal to 40 is 43.11, which gives the high quantized value 43. Each bit of the bitmap is 0 if the corresponding pixel is less than 40 and 1 otherwise; therefore, the bitmap is [0011; 0011; 0011; 0111]. Finally, the AMBTC compressed code (36, 43, [0011; 0011; 0011; 0111]) is obtained. To decompress, bits 0 and 1 in the bitmap are decoded as the quantized values 36 and 43, respectively, which gives the block [36, 36, 43, 43; 36, 36, 43, 43; 36, 36, 43, 43; 36, 43, 43, 43].
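The compression and decompression of a single block can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the function names are hypothetical, rounding to the nearest integer is an assumption (the text does not state the rounding rule), and the handling of a perfectly flat block is also an assumption.

```python
def ambtc_compress(block):
    """Compress one block (a list of pixel rows) into (low, high, bitmap)."""
    pixels = [p for row in block for p in row]
    mean = sum(pixels) / len(pixels)
    low_group = [p for p in pixels if p < mean]
    high_group = [p for p in pixels if p >= mean]
    # Assumption: quantized values are rounded to the nearest integer.
    high = int(sum(high_group) / len(high_group) + 0.5)
    # Assumption: a flat block has no pixel below the mean, so reuse `high`.
    low = int(sum(low_group) / len(low_group) + 0.5) if low_group else high
    bitmap = [[1 if p >= mean else 0 for p in row] for row in block]
    return low, high, bitmap


def ambtc_decompress(low, high, bitmap):
    """Rebuild a block: bit 0 decodes to `low`, bit 1 decodes to `high`."""
    return [[high if bit else low for bit in row] for row in bitmap]


block = [
    [36, 34, 41, 42],
    [37, 35, 43, 46],
    [35, 39, 44, 41],
    [36, 41, 42, 48],
]
low, high, bitmap = ambtc_compress(block)
restored = ambtc_decompress(low, high, bitmap)
```

Run on the 4 × 4 block of the example, the sketch yields the low value 36, the high value 43 and the bitmap [0011; 0011; 0011; 0111].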

2.2. The Adaptive Pixel Pair Matching (APPM) Technique

In the proposed method, we use the APPM embedding technique to embed the authentication codes into the quantized values of complex blocks. A limitation of APPM is that it can embed at most 8 bits into a pair of quantized values; however, only 6 bits are required in our method. The principle of APPM [29] is to embed a digit of base into a pixel pair by referring to the reference table , where is a table of size filled with elements of integers in the range . Let be the element located in -th row and -th column of . can be calculated by the following equation:

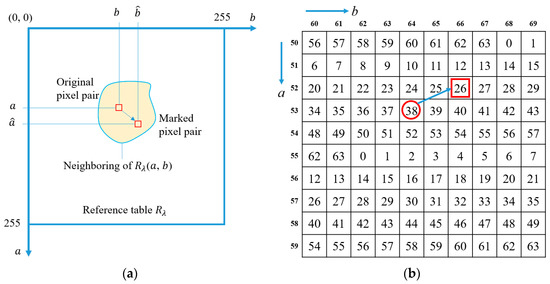

where is a constant, and in this paper. To embed a digit into , first locate a pixel pair in the vicinity of that satisfies and has the minimum distance to . The located is then employed to replace and a marked pixel pair is obtained. When extracting the embedded digit , since and are known, can be calculated by . The schematic diagram of APPM is as shown in Figure 1a.

Figure 1.

The examples of APPM embedding method. (a) The schematic diagram of the APPM; (b) An example of .

Following is a simple example to illustrate the APPM embedding procedures. Figure 1b shows a partial reference table . Let be the original pixel pair used to carry the digit of base 64. Since satisfies and has the minimum distance to , the marked pixel pair is located. To extract the embedded digit, we only need to locate the coordinate in , and can be obtained (see Figure 1b).
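The embedding and extraction steps can be sketched as follows. The reference-table equation is not reproduced in the text above, so this sketch assumes the form (x + c·y) mod B used in the APPM literature [29]; the constant `c=14` for base 64, the search radius, and all function names are assumptions made for illustration only.

```python
def appm_table_value(x, y, base=64, c=14):
    """Reference-table entry for the pair (x, y); the form and c are assumed."""
    return (x + c * y) % base


def appm_embed(x, y, digit, base=64, c=14, radius=8):
    """Return the pair nearest to (x, y) whose table entry equals `digit`."""
    best = None
    for nx in range(max(0, x - radius), min(255, x + radius) + 1):
        for ny in range(max(0, y - radius), min(255, y + radius) + 1):
            if appm_table_value(nx, ny, base, c) != digit:
                continue
            dist = (nx - x) ** 2 + (ny - y) ** 2
            if best is None or dist < best[0]:
                best = (dist, nx, ny)
    if best is None:
        raise ValueError("no matching pair inside the search window")
    return best[1], best[2]


def appm_extract(x, y, base=64, c=14):
    """Extraction is a single table lookup on the marked pair."""
    return appm_table_value(x, y, base, c)


marked = appm_embed(36, 43, digit=21)
recovered = appm_extract(*marked)
```

The exhaustive window search is chosen for clarity; a practical implementation would more likely precompute the nearest offset for each digit once and reuse it for every pair.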

2.3. The Matrix Encoding

Matrix encoding is used to embed the authentication codes into the bitmaps of smooth blocks. A limitation of matrix encoding is that only 3 bits can be embedded at a time; because our method requires 6 bits, each block is embedded twice. Matrix encoding is an efficient embedding method [30] based on the Hamming code, a linear error-correcting code proposed by Richard Wesley Hamming [31]. represents the secret bits embedded into the vector of length . Matrix encoding embeds data by means of a parity matrix . To embed into , is calculated, where T, ⊕ and mod represent the transpose, exclusive-or and modulo-2 operations, respectively. Note that is a column vector of length . Let be the decimal value of and initialize as the marked vector of . If , then no bit needs to be flipped in . Otherwise, flip the -th bit of . The secret bits can be extracted directly by calculating .

Next, an example of is taken to introduce the embedding and extraction procedures of matrix encoding. Following equation shows the of (7, 3):

Let [1, 1, 1, 1, 1, 0, 0] be the original vector used to embed the secret bits . Calculate to get . Convert to its decimal value, and . Then, flip the -th bit of the initialized marked vector , and [1, 1, 1, 1, 0, 0, 0]. The embedded secret bits can be extracted by .
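A minimal Python sketch of matrix encoding is given below. The parity matrix and the secret bits of the example are not reproduced in the text, so the sketch assumes the standard Hamming parity-check matrix whose j-th column is the 3-bit binary representation of j, and it assumes the secret bits (1, 0, 0); both assumptions reproduce the flip of the 5th bit described in the example. The function names are hypothetical.

```python
# Assumed parity-check matrix: column j (1-based) is the binary form of j.
H = [
    [0, 0, 0, 1, 1, 1, 1],
    [0, 1, 1, 0, 0, 1, 1],
    [1, 0, 1, 0, 1, 0, 1],
]


def syndrome(vector):
    """Compute (H * vector^T) mod 2 as a list of 3 bits."""
    return [sum(h * v for h, v in zip(row, vector)) % 2 for row in H]


def me_embed(vector, secret):
    """Embed 3 secret bits into 7 cover bits by flipping at most one bit."""
    z = [s ^ m for s, m in zip(syndrome(vector), secret)]
    position = z[0] * 4 + z[1] * 2 + z[2]  # decimal value of z
    marked = list(vector)
    if position:  # position 0 means the vector already carries the secret
        marked[position - 1] ^= 1
    return marked


def me_extract(marked):
    """The embedded bits are the syndrome of the marked vector."""
    return syndrome(marked)


marked = me_embed([1, 1, 1, 1, 1, 0, 0], [1, 0, 0])
```

Flipping the bit at the position given by z changes the syndrome by exactly the corresponding column of the matrix, which is why a single flip suffices for any 3-bit secret.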

3. The Proposed Method

In methods [25,26,27], authentication codes are generated independently of position information and MSBs of quantized values, which can result in some tampering that cannot be detected. Moreover, these embedding techniques are not designed based on block smoothness, leading to a relatively high image distortion. In this paper, the to-be-protected contents, such as quantized values, are used to generate authentication codes to enhance the security of the image. In addition, different embedding strategies based on block smoothness are used to improve image quality. The smoothness is determined by a predefined threshold . Given an AMBTC compressed code of block . If , the block is smooth; otherwise, it is complex. In our method, the authentication codes of smooth blocks are embedded in bitmaps using the matrix encoding, while complex blocks are embedded in quantized values using the APPM. The detailed embedding and authentication procedures are presented in the following subsections. Notice that the detection result is related to the length of authentication codes. In the proposed method, smooth and complex blocks are embedded with the same length of authentication codes, thus they have identical detection performance.

3.1. The Embedment Algorithm of Smooth Blocks

Let be the AMBTC compressed code of a smooth block . The embedment algorithm for a smooth block is as follows.

- Step 1: Divide into and , which are employed to generate and carry , respectively.

- Step 2: Use the bitmap , low quantized value , high quantized value , and position information to generate using Equation (3), where is the function that hashes using MD5 [32] and reduces the hashed result to 6 bits using the xor operation.

- Step 3: The 6-bit is divided into 2 groups of 3 bits denoted as and . The matrix encoding described in Section 2.3 is then employed to embed and into and , and we obtain and , respectively.

- Step 4: Concatenate , , and , and we have the marked bitmap . Finally, the marked compressed code is outputted, where .

A simple example is given to illustrate the procedure of generating an authentication code using the hash function . Suppose the hashed result of is a 32-bit string ‘00110110101101001100010000101100’. Then the first 16 bits are xor-ed with the last 16 bits to create a 16 bits ‘1111001010011000’. Repeat this xor-ed procedure one more time, and we obtain an 8 bits ‘01101010’. Since our method only requires 6 bits, the last 2 bits are discarded. Therefore, the authentication code ‘011010’ is obtained.
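The folding procedure of this example can be sketched in Python as follows. The helper names are hypothetical, and the way the bitmap, quantized values and position are serialized into bytes before hashing is not specified in the text, so `authentication_code` simply accepts an already serialized payload. Repeating the fold until 8 bits remain generalizes the 32-bit example to the 128-bit MD5 digest and is likewise an assumption.

```python
import hashlib


def fold_xor(bits):
    """XOR the first half of a bit string with its second half."""
    half = len(bits) // 2
    return "".join("1" if a != b else "0" for a, b in zip(bits[:half], bits[half:]))


def fold_to_code(bits, code_len=6):
    """Fold repeatedly until 8 bits remain, then keep the first `code_len` bits."""
    while len(bits) > 8:
        bits = fold_xor(bits)
    return bits[:code_len]


def md5_bits(payload: bytes) -> str:
    """Return the 128-bit MD5 digest of `payload` as a bit string."""
    digest = hashlib.md5(payload).digest()
    return "".join(f"{byte:08b}" for byte in digest)


def authentication_code(payload: bytes) -> str:
    """Hash a serialized payload and reduce the digest to a 6-bit code."""
    return fold_to_code(md5_bits(payload))


example = "00110110101101001100010000101100"
code = fold_to_code(example)
```

Applied to the 32-bit string of the example, the first fold gives '1111001010011000', the second gives '01101010', and the returned code is '011010'.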

3.2. The Embedment Algorithm of Complex Blocks

For a compressed code , if , the block is a complex one, and the authentication code is embedded into the quantized values using APPM with the following algorithm.

- Step 1: Use Equation (4) to construct the reference table , where is a random integer generated by a key , and .

- Step 2: Use the bitmap and position information to generate the 6-bit by Equation (5).

- Step 3: Once is obtained, of base 64 is embedded into the quantized values using APPM to obtain the marked quantized values and , where is the decimal value of .

In comparison to Equation (1), Equation (4) adds an additional integer to generate the reference table. Actually, the image quality obtained by referring to is equal to that of . However, if is embedded based on , it can be extracted publicly by Equation (1). Therefore, one can tamper with by finding an alternative pixel pair that satisfies to escape detection. In contrast, in Equation (4) can be obtained only if is known. Thus, the embedment using is not only more secure than just using , but also maintains the same image quality.

3.3. Embedding of Smoothness-Changed Blocks

Since the of complex block is embedded into the quantized values , the smoothness of may change to the smooth one if . In this case, it can be processed using the following procedures. Firstly, alter the value of and by one, and the altered results and are obtained. If , use the embedding technique described in Section 3.1 to perform the embedment on and obtain , where . Otherwise, the embedment of is performed using the embedding technique of complex block described in Section 3.2 to obtain , where . From all possible codes of , the code with the smallest embedding error is selected as the final output . Figure 2 shows the embedding framework of the proposed method.

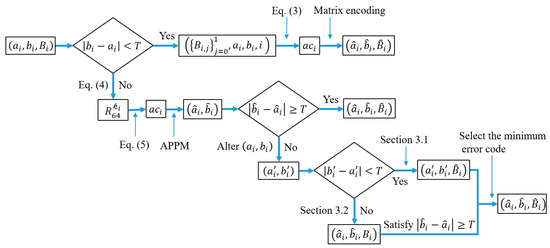

Figure 2.

The embedding framework of the proposed method.

The following example illustrates the case where a complex block changes to a smooth one after embedment. Let (67, 73, [0011; 0011; 0011; 0101]) be the original AMBTC code with , and . Since , the is classified as a complex block. Then, use and to generate the authentication code using Equation (5), and employ APPM to embed into to obtain . Suppose the marked quantized values . Since , the smoothness of is different from that of . Thus, the embedment of requires additional processing. Alter each of the values of by at most one unit; the altered results are (66, 72), (66, 73), (66, 74), (67, 72), (67, 73), (67, 74), (68, 72), (68, 73) and (68, 74). The pairs whose differences are less than the threshold are (67, 72), (68, 72) and (68, 73), and the technique described in Section 3.1 is used to embed them. The pairs whose differences are larger than or equal to 6 are (66, 72), (66, 73), (66, 74), (67, 73), (67, 74) and (68, 74), and the technique described in Section 3.2 is used to embed them. Let (67, 72, [0011; 0111; 0011; 0001]), (68, 72, [0011; 0010; 0011; 0111]), and (68, 73, [0010; 0011; 0011; 1101]) be the embedded codes with differences of quantized values less than 6, where the bits that differ from the original bitmap are the flipped bits. Suppose that (65, 73, ), (67, 76, ), (66, 73, ), (67, 75, ), (66, 74, ) and (68, 75, ) are the codes with differences of quantized values larger than or equal to 6. Decompress these codes and the original code using AMBTC to obtain the decompressed blocks and . Then, calculate the squared differences of and , which are 68, 64, 68, 32, 72, 8, 32, 16 and 40. Since the code (66, 73, ) has the least embedding distortion, 8, it is selected and output as the final code .

3.4. The Embedding Procedures

This section describes the embedding procedures of the proposed method. Let be the AMBTC codes used to embed the authentication codes. The embedding procedures are listed below.

- Input: AMBTC compressed codes , key , and parameters and .

- Output: Marked AMBTC codes .

- Step 1: Scan each code in and calculate the difference of and .

- Step 2: If , use Equation (3) to hash , , and to generate the 6-bit . Embed into using the matrix encoding described in Section 2.3 to obtain . Concatenate and , and the marked bitmap is obtained. Then, we have the marked code , where .

- Step 3: If , use the key to generate , and construct the reference table using Equation (4). Then, use the APPM to embed into , and we have . If , the marked code is outputted and . Otherwise, is embedded using the technique described in Section 3.3 to obtain .

- Step 4: Repeat Steps 1–3 until all codes are embedded and output the marked codes , key , and parameters and .

3.5. The Authentication Procedures

To authenticate whether the codes have been tampered with, we first regenerate the authentication code using either Equations (3) or (5) according to the difference of and . Then, extract the code embedded in , and compare with . If , the code is untampered with; otherwise, it is tampered with. The detailed authentication procedures are listed as follows.

- Input: To-be-authenticated codes , key , and parameters and .

- Output: The detection result.

- Step 1: Scan each code in and calculate the difference of and .

- Step 2: If , use Equation (3) to hash , , and to regenerate 6-bit . The matrix encoding is employed to extract from .

- Step 3: If , employ to generate , and construct the by Equation (4). Besides, and are employed to regenerate by Equation (5). Then, use APPM to extract embedded in .

- Step 4: Compare and to judge whether the code has been tampered with. If , the code is untampered with. Otherwise, it is tampered with.

- Step 5: Repeat Steps 1–4 until all blocks have been detected, which refers to the coarse detection in our method.

- Step 6: The refined detection, described here, is used to improve detection accuracy. If the top and bottom, left and right, top left and bottom right, or top right and bottom left blocks of an untampered block have been determined as tampered with, the untampered block is redetermined to be a tampered one. Repeat this procedure until no other blocks are redetermined and we have finished the authentication procedures.
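The refined detection of Step 6 can be sketched as an iteration over the block-level tamper map produced by the coarse detection. This is an illustrative sketch under stated assumptions: the map is a list of rows of booleans (True means tampered), neighbors outside the image are treated as untampered, and the function name is hypothetical.

```python
def refine(coarse_map):
    """Re-mark untampered blocks that lie between two opposite tampered neighbors."""
    rows, cols = len(coarse_map), len(coarse_map[0])
    grid = [row[:] for row in coarse_map]
    # Opposite neighbor pairs: top/bottom, left/right, and the two diagonals.
    opposite_pairs = [
        ((-1, 0), (1, 0)),
        ((0, -1), (0, 1)),
        ((-1, -1), (1, 1)),
        ((-1, 1), (1, -1)),
    ]

    def is_tampered(r, c):
        # Assumption: blocks outside the image count as untampered.
        return 0 <= r < rows and 0 <= c < cols and grid[r][c]

    changed = True
    while changed:  # repeat until no block is re-marked
        changed = False
        for r in range(rows):
            for c in range(cols):
                if grid[r][c]:
                    continue
                if any(
                    is_tampered(r + d1[0], c + d1[1]) and is_tampered(r + d2[0], c + d2[1])
                    for d1, d2 in opposite_pairs
                ):
                    grid[r][c] = True
                    changed = True
    return grid


coarse = [
    [False, True, False],
    [False, False, False],
    [False, True, False],
]
refined = refine(coarse)
```

In this small map the center block has tampered blocks directly above and below it, so it is re-marked as tampered, while the remaining blocks are unchanged.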

4. Experimental Results

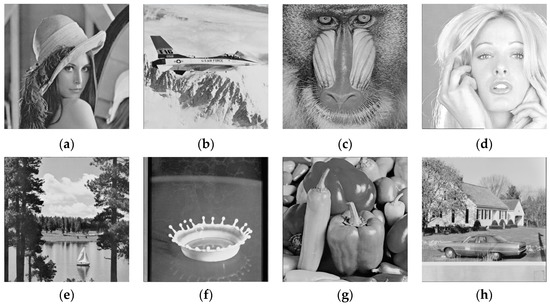

To evaluate the effectiveness of our method, we perform several experiments on a set of grayscale images. Eight images of size 512 × 512, namely, Lena, Jet, Baboon, Tiffany, Sailboat, Splash, Peppers, and House are used as test images for the experiments, as shown in Figure 3. These test images can be obtained from the USC-SIPI image database [33]. Comparisons of image quality and detectability between prior works [25,26,27] and the proposed method are also shown in this section. In the experiments, the peak signal-to-noise ratio (PSNR) is employed to measure the marked image quality:

where MSE is the mean square error of the marked image and the original image. Equation (6) shows that the smaller the MSE is, the larger is the PSNR. The structural similarity (SSIM) metric [34] is also employed to measure the similarity between the marked and original images. The SSIM is calculated by:

where and represent the original and marked images. , and , are the mean value and standard deviation of , , respectively. is the covariance of and , and and are constants. The value of SSIM is within the range [0, 1], and the larger the SSIM is, the higher the visual quality of the marked image is.
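For reference, the PSNR computation can be sketched in Python. Equation (6) is not reproduced in the text above, so the sketch assumes the standard definition for 8-bit grayscale images, 10·log10(255²/MSE); the function names are hypothetical and images are represented as lists of pixel rows.

```python
import math


def mse(original, marked):
    """Mean square error between two images given as lists of pixel rows."""
    diffs = [
        (p - q) ** 2
        for row_o, row_m in zip(original, marked)
        for p, q in zip(row_o, row_m)
    ]
    return sum(diffs) / len(diffs)


def psnr(original, marked, peak=255):
    """PSNR in dB, assuming the standard definition with an 8-bit peak value."""
    error = mse(original, marked)
    if error == 0:
        return math.inf  # identical images
    return 10 * math.log10(peak ** 2 / error)


value = psnr([[10, 20], [30, 40]], [[11, 19], [31, 39]])
```

Every pixel in this toy input differs by one gray level, so the MSE is 1 and the PSNR is about 48.13 dB.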

Figure 3.

Eight test images. (a) Lena; (b) Jet; (c) Baboon; (d) Tiffany; (e) Sailboat; (f) Splash; (g) Peppers; (h) House.

4.1. The Performance of the Proposed Method

In this paper, we use a threshold to classify blocks into smooth and complex ones and embed them using different techniques. The PSNR and SSIM are related to the value of . Table 1 shows the comparisons between PSNR and SSIM for the test images when the threshold is set from 0 to 10. As shown in the table, the highest PSNR is achieved when or . As becomes smaller or larger, the PSNR decreases gradually. For example, the Jet image has a PSNR of 43.32 dB for , which is higher than when and . Besides, the peak values of SSIM are achieved when . Thus is adopted in the proposed method. This experiment was performed using the Python programming language, and the average time required to embed an image is less than one second when .

Table 1.

PSNR and SSIM comparisons with various value.

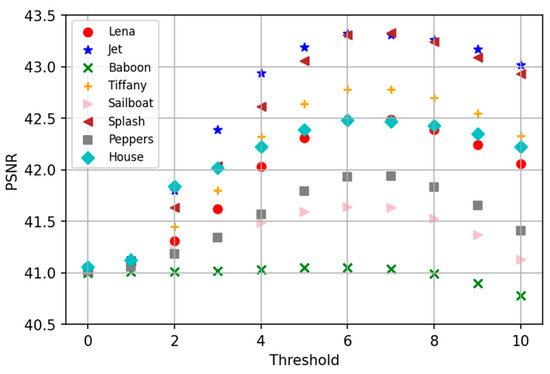

Figure 4 shows the plot of threshold versus PSNR for various test images. When , the PSNRs of the eight test images are around 41 dB. Among these images at , Jet has the highest PSNR, while Baboon achieves the lowest PSNR, which is about 2 dB lower than that of Jet. This is because image quality is dependent on the ratio of smooth blocks for a given threshold, which is demonstrated in the following experiments.

Figure 4.

The plot of threshold versus PSNR for various test images.

Table 2 shows the ratio of smooth blocks and the PSNR of images when . We observe that under the same threshold, the larger the ratio of smooth blocks, the higher the PSNR. The reason is that the two quantized values of a smooth block are close to each other, so the error caused by flipping bits of the bitmap is small. For example, Lena, Jet, Tiffany, Splash and House contain more than 40% smooth blocks, and the PSNR of these images exceeds 42 dB. In contrast, Baboon has the lowest PSNR (41.04 dB) because it contains only 6.99% smooth blocks. A higher PSNR means that the embedding causes less distortion. The PSNRs of all eight test images are higher than 41 dB, which indicates the effectiveness of our method.

Table 2.

The relation between the ratio of smooth blocks and the PSNR of images.

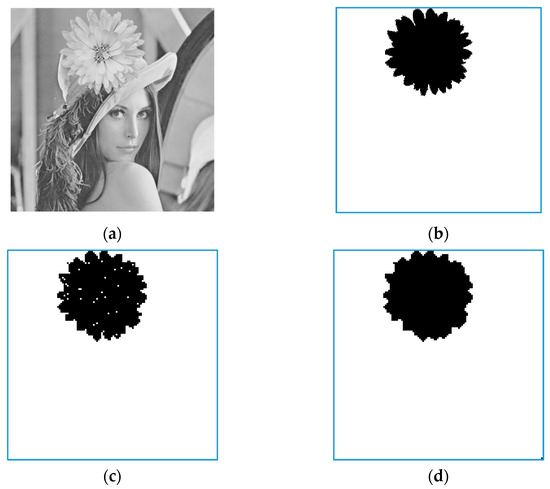

The following experiment demonstrates the detectability of the proposed method. The marked codes of Lena are tampered with such that the tampered decompressed image shows a daisy on Lena’s hat, as shown in Figure 5a. Figure 5b shows the tampered region indicated by black blocks, while Figure 5c,d present the coarse and refined detection results of Figure 5a. An image contains a total of 128 × 128 = 16,384 blocks, and this experiment tampers with 2176 of them, a tampering rate of 13.28%. Figure 5c shows that a few scattered blocks are not detected in the coarse detection. Nevertheless, those undetected blocks are detected in the refined detection, as shown in Figure 5d.

Figure 5.

The tampered and detection results. (a) The tampered image; (b) The tampered region; (c) The coarse detection result; (d) The refined detection result.

4.2. PSNR Comparisons with Prior Works

In this section, we compare the PSNR between [25,26,27] and the proposed method, as shown in Table 3. In this experiment, 6-bit authentication code is embedded in all methods, and is used in the proposed method. As shown in the table, the PSNR of the proposed method is the highest compared to [25,26,27]. The averaged PSNR of our method is 42.36 dB, which is , and dB higher than [25,26,27], respectively. Moreover, if an image contains a larger ratio of smooth blocks, the improvement of PSNR obtained by our method is higher. For example, compared to [27], the improvement of Baboon is dB, while Splash is dB, reflecting the effectiveness of the proposed method.

Table 3.

PSNR comparisons with [25,26,27] (in dB).

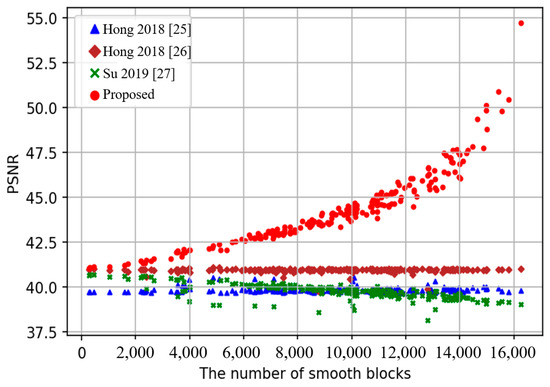

An additional 200 images of size obtained from the BOWS-2 image database [35] are used to explore how image smoothness influences the compared methods. To better evaluate the performance, we sort the 200 images in ascending order of their number of smooth blocks, as shown in Figure 6. The figure shows that when the number of smooth blocks is close to 0, the PSNR improvement of our method over [25,26,27] is not significant. However, as the number of smooth blocks increases, the improvement becomes more obvious. This analysis indicates that the proposed method is better suited to smooth images than to complex ones.

Figure 6.

Comparisons between PSNR and the number of smooth blocks for 200 images.

4.3. Detectability Comparisons with Prior Works

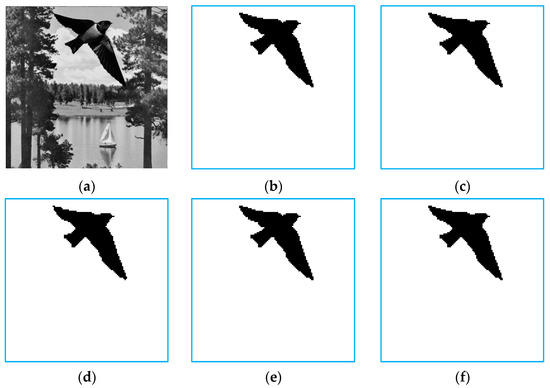

This section shows the detectability comparisons of [25,26,27] and the proposed method. In this experiment, the marked compressed codes of Sailboat are tampered with by adding a bird. Figure 7a,b show the tampered image and the region of tamper, while Figure 7c–f present the detection results of [25,26,27] and the proposed method. The results show all methods can achieve a satisfactory detection result.

Figure 7.

The tampered and detection results of [25,26,27] and the proposed method. (a) The tampered image; (b) The tampered region; (c) Detection result of [25]; (d) Detection result of [26]; (e) Detection result of [27]; (f) Detection result of the proposed method.

Different metrics are used to measure the detection results of Figure 7, as shown in Table 4. The number of tampered pixels (NTB) in Figure 7a is 19,712, and the tampering rate is 19,712/(512 × 512) = 7.52%. True positive (TP) represents the number of tampered pixels detected as tampered ones, while false negative (FN) is the number of tampered pixels incorrectly detected as untampered ones. The refined detection rate is calculated by TP/(TP + FN). In all methods, the length of the authentication code embedded in each block is 6 bits. Therefore, the collision probability is 1/2^6 ≈ 1.56%, i.e., the coarse detection rate is as high as 98.44%. The table shows that the refined detection rates of [25,26,27] and the proposed method are up to 99%, which are better than the coarse detection rates and meet the theoretical values.
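The arithmetic behind these metrics can be checked with a short Python sketch. The function names are hypothetical, and the detection-rate formula TP/(TP + FN) is an assumption based on the definitions of TP and FN given above.

```python
def collision_probability(code_bits):
    """Chance that a tampered block still matches its authentication code."""
    return 2 ** -code_bits


def coarse_detection_rate(code_bits):
    """Theoretical coarse detection rate for a code of `code_bits` bits."""
    return 1 - collision_probability(code_bits)


def tampering_rate(tampered_pixels, width=512, height=512):
    """Fraction of the image that was tampered with."""
    return tampered_pixels / (width * height)


def detection_rate(tp, fn):
    """Assumed definition: detected tampered pixels over all tampered pixels."""
    return tp / (tp + fn)


coarse = coarse_detection_rate(6)
rate = tampering_rate(19712)
```

With 6-bit codes the theoretical coarse detection rate is 0.984375, and 19,712 tampered pixels in a 512 × 512 image correspond to a tampering rate of about 7.52%.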

Table 4.

Detectability comparisons using different metrics.

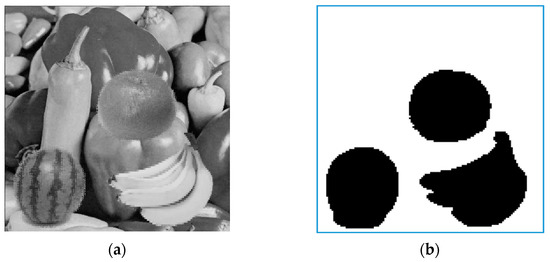

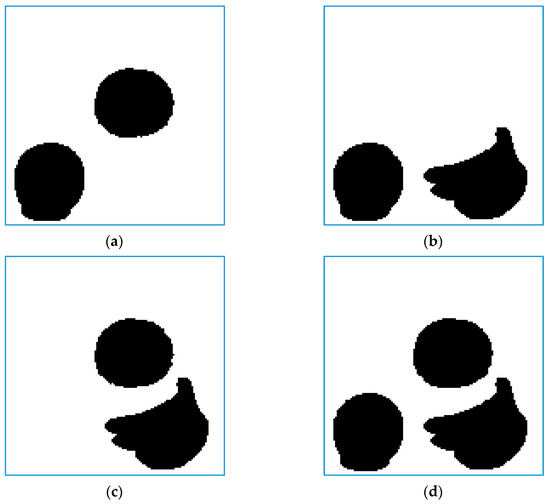

A good detection method should be able to detect any kind of tampering. The following experiments are performed with some special tampering to further compare the detectability of [25,26,27] and the proposed method. The special tampering modifies the marked codes of Peppers by adding bananas, an orange and a watermelon; Figure 8a,b present the tampered image and regions. Let be the marked codes of Peppers. Suppose , and are the codes of the bananas, orange and watermelon, and , and represent the number of blocks of these images, respectively. For the banana tampering, codes are used to replace . Then, from , find the codes which are the closest to , and the found codes are used to replace . For the orange tampering, the codes are used to replace . Then, the pixel pair that satisfies and has the minimum distance to is found. Finally, we use to replace for . To achieve the watermelon tampering, the 5 MSBs of are used to replace those of .

Figure 8.

The tampering of Peppers image. (a) The tampered image; (b) The tampered regions.

Figure 9 shows the refined detection results of [25,26,27] and the proposed method. Figure 9a–c show that [25,26,27] fail to detect the tampering with bananas, orange and watermelon, respectively. This is because the authentication codes of [25,26,27] are generated independently of some of the protected contents, e.g., position information and MSBs of quantized values. In contrast, the proposed method detects all three types of tampering, as shown in Figure 9d, demonstrating its advantage over the other works.

Figure 9.

Detectability comparisons between [25,26,27] and the proposed method. (a) Detection result of [25]; (b) Detection result of [26]; (c) Detection result of [27]; (d) Detection result of the proposed method.

The components for generating authentication codes, embedding techniques and detectability of methods [25,26,27] and the proposed method are summarized in Table 5. Furthermore, the performance in recovery domain for methods [20,21] are also compared. Comparisons are made between the various detection methods for “Detection of bananas”, “Detection of orange” and “Detection of watermelon” with tampering collages of banana, orange and watermelon, respectively. The table shows that methods [20,25,26,27] cannot detect all these types of tampering. The reason is that the components used for generating authentication codes are not included in all to-be-protected contents. In contrast, method [20] and the proposed method use contents that need to be protected to generate authentication codes, and thus they are able to detect these tampering.

Table 5.

Comparisons between prior works and the proposed method.

5. Conclusions

In this paper, we propose an efficient authentication method with high image quality for AMBTC images. Blocks are classified as smooth or complex according to their smoothness. To enhance security, the to-be-protected contents, including position information, bitmaps and quantized values, are employed to generate the authentication codes. Moreover, a key is used to construct the reference table of APPM to further protect the authentication codes. According to the smoothness of blocks, the authentication codes are embedded into the bitmaps of smooth blocks using matrix encoding and into the quantized values of complex blocks using APPM. Experimental results show that, in comparison with prior works, the proposed method achieves better detection results and higher image quality.

Author Contributions

X.Z., J.C. and W.H. contributed to the conceptualization, methodology, and writing of this paper. Z.-F.L. and G.Y. conceived the simulation setup and conducted the formal analysis and investigation. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Jasra, B.; Moon, A.H. Color image encryption and authentication using dynamic DNA encoding and hyper chaotic system. Expert Syst. Appl. 2022, 206, 117861.

- Hossain, M.S.; Islam, M.T.; Akhtar, Z. Incorporating deep learning into capacitive images for smartphone user authentication. J. Inf. Secur. Appl. 2022, 69, 103290.

- Qin, C.; Ji, P.; Zhang, X.; Dong, J.; Wang, J. Fragile image watermarking with pixel-wise recovery based on overlapping embedding strategy. Signal Process. 2017, 138, 280–293.

- You, C.; Zheng, H.; Guo, Z.; Wang, T.; Wu, X. Tampering detection and localization base on sample guidance and individual camera device convolutional neural network features. Expert Syst. 2022, 40, e13102.

- Hussan, M.; Parah, S.A.; Jan, A.; Qureshi, G.J. Hash-based image watermarking technique for tamper detection and localization. Health Technol. 2022, 12, 385–400.

- Zhao, D.; Tian, X. A Multiscale Fusion Lightweight Image-Splicing Tamper-Detection Model. Electronics 2022, 11, 2621.

- Hussan, M.; Parah, S.A.; Jan, A.; Qureshi, G.J. Self-embedding framework for tamper detection and restoration of color images. Multimed. Tools Appl. 2022, 81, 18563–18594.

- Zhou, X.; Hong, W.; Weng, S.; Chen, T.S.; Chen, J. Reversible and recoverable authentication method for demosaiced images using adaptive coding technique. J. Inf. Secur. Appl. 2020, 55, 102629.

- Wang, Q.; Xiong, D.; Alfalou, A.; Brosseau, C. Optical image authentication scheme using dual polarization decoding configuration. Opt. Lasers Eng. 2019, 112, 151–161.

- Molina, J.; Ponomaryov, V.; Reyes, R.; Sadovnychiy, S.; Cruz, C. Watermarking framework for authentication and self-recovery of tampered colour images. IEEE Lat. Am. Trans. 2020, 18, 631–638.

- Wu, X.; Yang, C. Invertible secret image sharing with steganography and authentication for AMBTC compressed images. Signal Process. Image 2019, 78, 437–447.

- Zhang, X.; Wang, S.; Qian, Z.; Feng, G. Reversible fragile watermarking for locating tampered blocks in JPEG images. Signal Process. 2010, 90, 3026–3036.

- Hong, W.; Wu, J.; Lou, D.C.; Zhou, X.; Chen, J. An AMBTC authentication scheme with recoverability using matrix encoding and side match. IEEE Access 2021, 9, 133746–133761.

- Zhang, T.; Weng, S.; Wu, Z.; Lin, J.; Hong, W. Adaptive encoding based lossless data hiding method for VQ compressed images using tabu search. Inform. Sci. 2022, 602, 128–142.

- Pan, Z.; Wang, L. Novel reversible data hiding scheme for two-stage VQ compressed images based on search-order coding. J. Vis. Commun. Image R. 2018, 50, 186–198.

- Li, Y.; Chang, C.C.; Mingxing, H. High capacity reversible data hiding for VQ-compressed images based on difference transformation and mapping technique. IEEE Access 2020, 8, 32226–32245.

- Battiato, S.; Giudice, O.; Guarnera, F.; Puglisi, G. CNN-based first quantization estimation of double compressed JPEG images. J. Vis. Commun. Image R. 2022, 89, 103635.

- Yao, H.; Mao, F.; Qin, C.; Tang, Z. Dual-JPEG-image reversible data hiding. Inform. Sci. 2021, 563, 130–149.

- Cogranne, R.; Giboulot, Q.; Bas, P. Efficient steganography in JPEG images by minimizing performance of optimal detector. IEEE Trans. Inf. Foren. Sec. 2022, 17, 1328–1343.

- Chen, T.S.; Zhou, X.; Chen, R.; Hong, W.; Chen, K. A high fidelity authentication scheme for AMBTC compressed image using reference table encoding. Mathematics 2021, 9, 2610.

- Lin, C.C.; Liu, X.; Zhou, J.; Tang, C.Y. An image authentication and recovery scheme based on turtle Shell algorithm and AMBTC-compression. Multimed. Tools Appl. 2022, 81, 39431–39452.

- Hu, Y.C.; Lo, C.C.; Chen, W.L.; Wen, C.H. Joint image coding and image authentication based on absolute moment block truncation coding. J. Electron. Imaging 2013, 22, 013012.

- Li, W.; Lin, C.C.; Pan, J.S. Novel image authentication scheme with fine image quality for BTC-based compressed images. Multimed. Tools Appl. 2016, 75, 4771–4793.

- Chen, T.H.; Chang, T.C. On the security of a BTC-based-compression image authentication scheme. Multimed. Tools Appl. 2018, 77, 12979–12989.

- Hong, W.; Zhou, X.Y.; Lou, D.C.; Huang, X.Q.; Peng, C. Detectability improved tamper detection scheme for absolute moment block truncation coding compressed images. Symmetry 2018, 10, 318.

- Hong, W.; Chen, M.J.; Chen, T.S.; Huang, C.C. An efficient authentication method for AMBTC compressed images using adaptive pixel pair matching. Multimed. Tools Appl. 2018, 77, 4677–4695.

- Su, G.D.; Chang, C.C.; Lin, C.C. High-precision authentication scheme based on matrix encoding for AMBTC-compressed images. Symmetry 2019, 11, 996.

- Lema, M.; Mitchell, O. Absolute moment block truncation coding and its application to color image. IEEE Trans. Commun. 1984, 32, 1148–1157.

- Hong, W.; Chen, T.S. A novel data embedding method using adaptive pixel pair matching. IEEE Trans. Inf. Foren. Sec. 2012, 7, 176–184.

- Liu, S.; Fu, Z.; Yu, B. Rich QR codes with three-layer information using Hamming code. IEEE Access 2019, 7, 78640–78651.

- Hamming, R.W. Error detecting and error correcting codes. Bell Labs Tech. J. 1950, 29, 147–160.

- Menezes, A.J.; Van Oorschot, P.C.; Vanstone, S.A. Handbook of Applied Cryptography; CRC Press: Boca Raton, FL, USA, 1996.

- The USC-SIPI Image Database. Available online: http://sipi.usc.edu/database/ (accessed on 10 January 2023).

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- BOWS-2 Image Database. Available online: http://bows2.ec-lille.fr/ (accessed on 10 January 2023).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).