Gesture Recognition and Hand Tracking for Anti-Counterfeit Palmvein Recognition

Abstract

1. Introduction

- Abundant discriminative information: The hand area is large and contains rich discriminative information, which enables high recognition accuracy;

- Few restrictions: The hand image can still be recognized clearly at low resolution, and the accuracy and performance remain satisfactory even if the palm or fingers are slightly abraded;

- Low cost: A low-resolution camera can meet the resolution requirement;

- Strong privacy: It is not easy to collect a user’s complete hand information without his/her authorization;

- High user acceptance: Hand features can be collected in a non-contact mode, which avoids many problems associated with contact collection.

- Small illumination effect: Under infrared imaging, interference caused by illumination differences is smaller than under visible light;

- Strong liveness detection: Infrared light can penetrate the skin tissue, so an infrared camera can capture images of the veins beneath the skin, which can be used for liveness detection;

- Resistance to abrasion and forgery: Veins are internal physiological characteristics hidden beneath the epidermis, so they are essentially free from abrasion and very difficult to forge;

- Few restrictions: Recognition accuracy is less affected by scars and dirt on the hand surface.

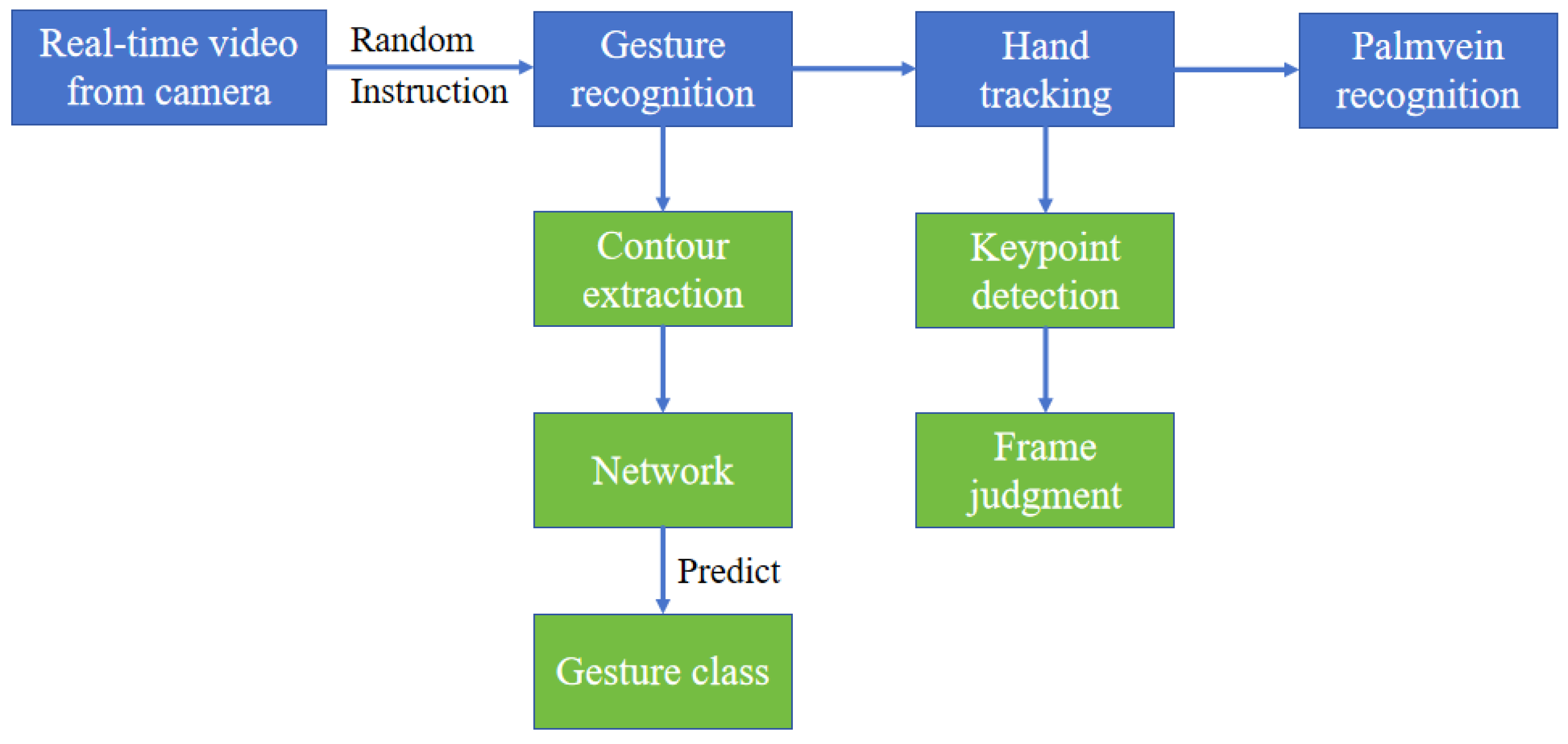

- A hand gesture recognition algorithm is developed for the infrared environment. First, the hand gesture contours are extracted from infrared gesture images. Then, a deep learning model recognizes the hand gestures from these contours;

- A hand tracking algorithm based on key-point detection is developed for the infrared environment. Hand tracking runs after gesture recognition and detects whether the hand leaves the camera’s field of view, which ensures that the hand used for palmvein recognition is the same hand that performed the gesture.
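The two contributions above combine into one verification flow: the system asks for a gesture, checks it, and then keeps tracking the same hand until palmvein recognition finishes. The Python sketch below models that flow as a small state machine. The names `Session`, `Stage`, `on_gesture`, `on_frame`, and `on_palmvein_done` are hypothetical, and drawing the requested gesture at random from gestures a–j is an assumption; the gesture classifier and the key-point tracker are treated as black boxes that supply a label and a per-frame in-view flag.

```python
import random
from enum import Enum, auto


class Stage(Enum):
    """Stages of a hypothetical anti-counterfeit session."""
    CHALLENGE = auto()   # waiting for the requested gesture
    TRACKING = auto()    # gesture verified; the hand must stay in view
    ACCEPTED = auto()    # palmvein recognition finished on the tracked hand
    REJECTED = auto()    # wrong gesture, or the hand left the view


class Session:
    """Sketch: gesture challenge followed by hand tracking.

    Labels 'a'..'j' follow the gesture table; the random challenge and the
    frame-by-frame interface are illustrative assumptions.
    """

    GESTURES = "abcdefghij"

    def __init__(self, rng=None):
        self.rng = rng if rng is not None else random.Random()
        self.requested = self.rng.choice(self.GESTURES)
        self.stage = Stage.CHALLENGE

    def on_gesture(self, recognized):
        """Feed the label produced by the gesture classifier."""
        if self.stage is Stage.CHALLENGE:
            ok = recognized == self.requested
            self.stage = Stage.TRACKING if ok else Stage.REJECTED
        return self.stage

    def on_frame(self, hand_in_view):
        """Feed the tracker's per-frame verdict while awaiting palmvein capture."""
        if self.stage is Stage.TRACKING and not hand_in_view:
            self.stage = Stage.REJECTED  # the hand escaped: it may have been swapped
        return self.stage

    def on_palmvein_done(self):
        """Palmvein recognition has finished; accept only if still tracking."""
        if self.stage is Stage.TRACKING:
            self.stage = Stage.ACCEPTED
        return self.stage
```

For example, after `s = Session(random.Random(0))`, calling `s.on_gesture(s.requested)`, `s.on_frame(True)`, and `s.on_palmvein_done()` ends in `Stage.ACCEPTED`, whereas a single `s.on_frame(False)` during tracking moves the session to `Stage.REJECTED`, and it stays there.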

2. Related Works

3. Methodology

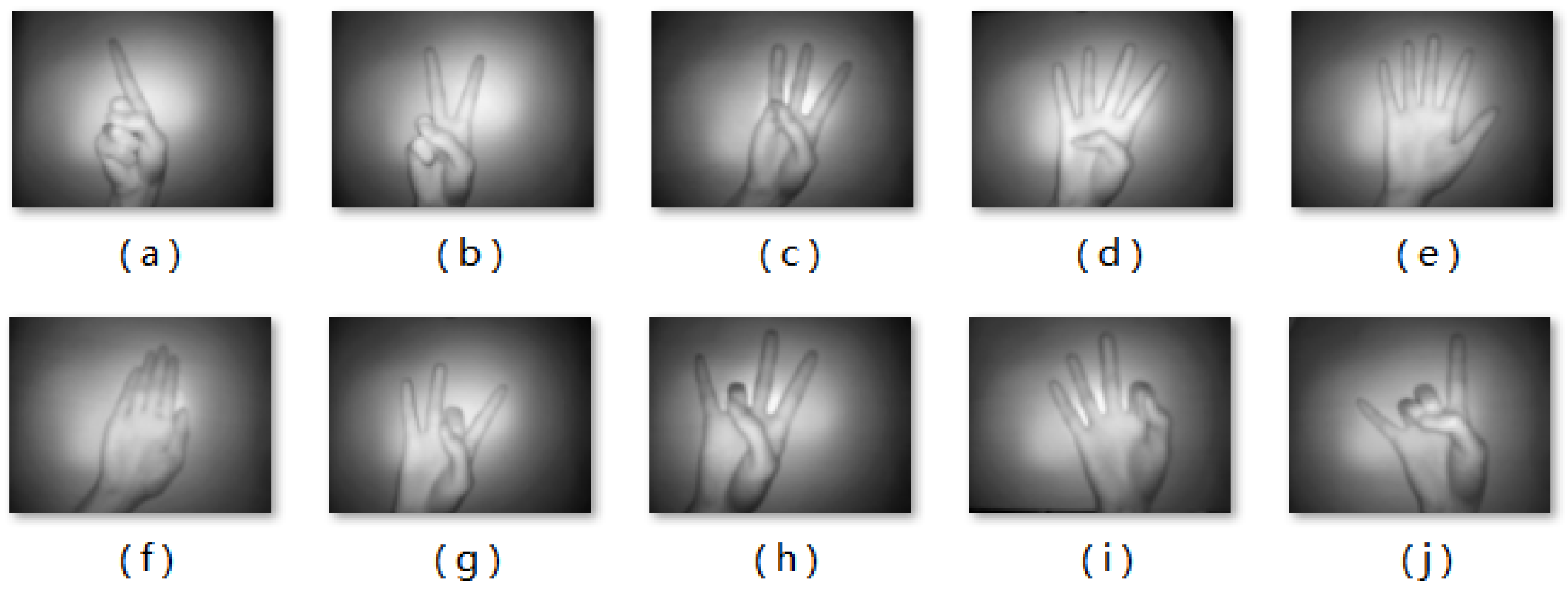

3.1. Data Acquisition

3.2. Pre-Processing

3.3. Gesture Contour Extraction

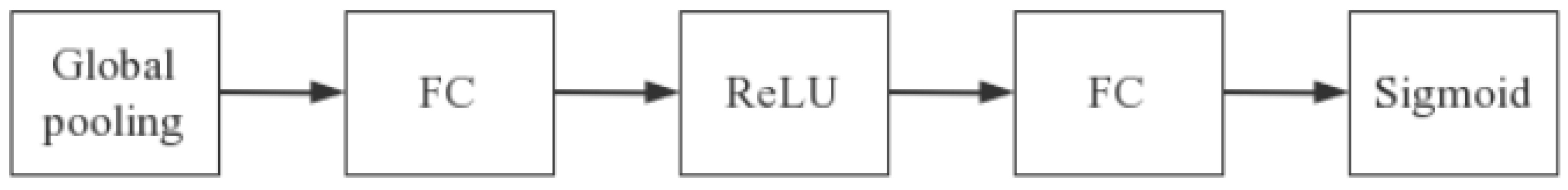

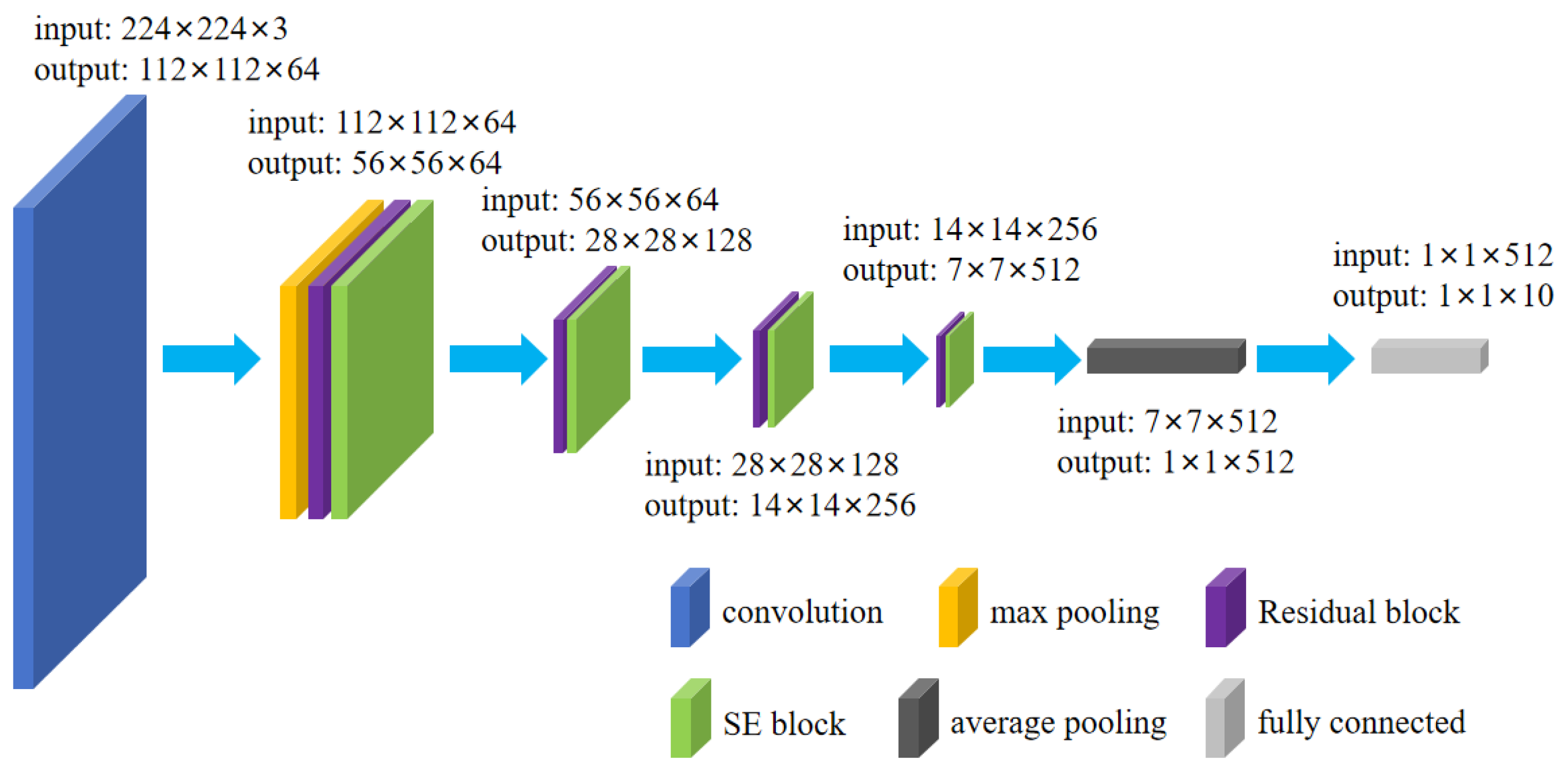
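Contour extraction, the first stage of the gesture recognition pipeline described in the introduction, can be illustrated with a minimal pure-Python sketch. It assumes that under active infrared illumination the hand appears brighter than the background, binarizes the image with Otsu's global threshold, and keeps foreground pixels that have at least one background 4-neighbour. The paper's actual extraction procedure may differ; in practice one would more likely call OpenCV (`cv2.threshold` with `cv2.THRESH_OTSU`, then `cv2.findContours`). The names `otsu_threshold` and `extract_contour` are hypothetical.

```python
from typing import List, Set, Tuple

Image = List[List[int]]  # 8-bit grayscale values, row-major


def otsu_threshold(pixels: List[int]) -> int:
    """Return the threshold maximizing the (unnormalized) between-class variance."""
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))
    sum_bg, w_bg = 0, 0
    best_t, best_var = 0, -1.0
    for t in range(256):
        w_bg += hist[t]          # pixels with value <= t form the background class
        sum_bg += t * hist[t]
        w_fg = total - w_bg
        if w_bg == 0:
            continue
        if w_fg == 0:
            break
        mean_bg = sum_bg / w_bg
        mean_fg = (sum_all - sum_bg) / w_fg
        var_between = w_bg * w_fg * (mean_bg - mean_fg) ** 2
        if var_between > best_var:
            best_t, best_var = t, var_between
    return best_t


def extract_contour(image: Image) -> Set[Tuple[int, int]]:
    """Binarize with Otsu, then keep foreground pixels that touch background."""
    threshold = otsu_threshold([p for row in image for p in row])
    h, w = len(image), len(image[0])
    # Assumption: the IR-illuminated hand is brighter than the background.
    mask = [[image[y][x] > threshold for x in range(w)] for y in range(h)]
    contour = set()
    for y in range(h):
        for x in range(w):
            if not mask[y][x]:
                continue
            for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ny, nx = y + dy, x + dx
                # Pixels outside the image count as background.
                if not (0 <= ny < h and 0 <= nx < w) or not mask[ny][nx]:
                    contour.add((y, x))
                    break
    return contour
```

The result is a set of (row, column) boundary pixels; a classifier working on contours would typically receive them rendered back into a binary contour image.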

3.4. Network Architecture

3.5. Hand Tracking

3.6. Interactive System

4. Results

4.1. Gesture Contour Extraction

4.2. Accuracy

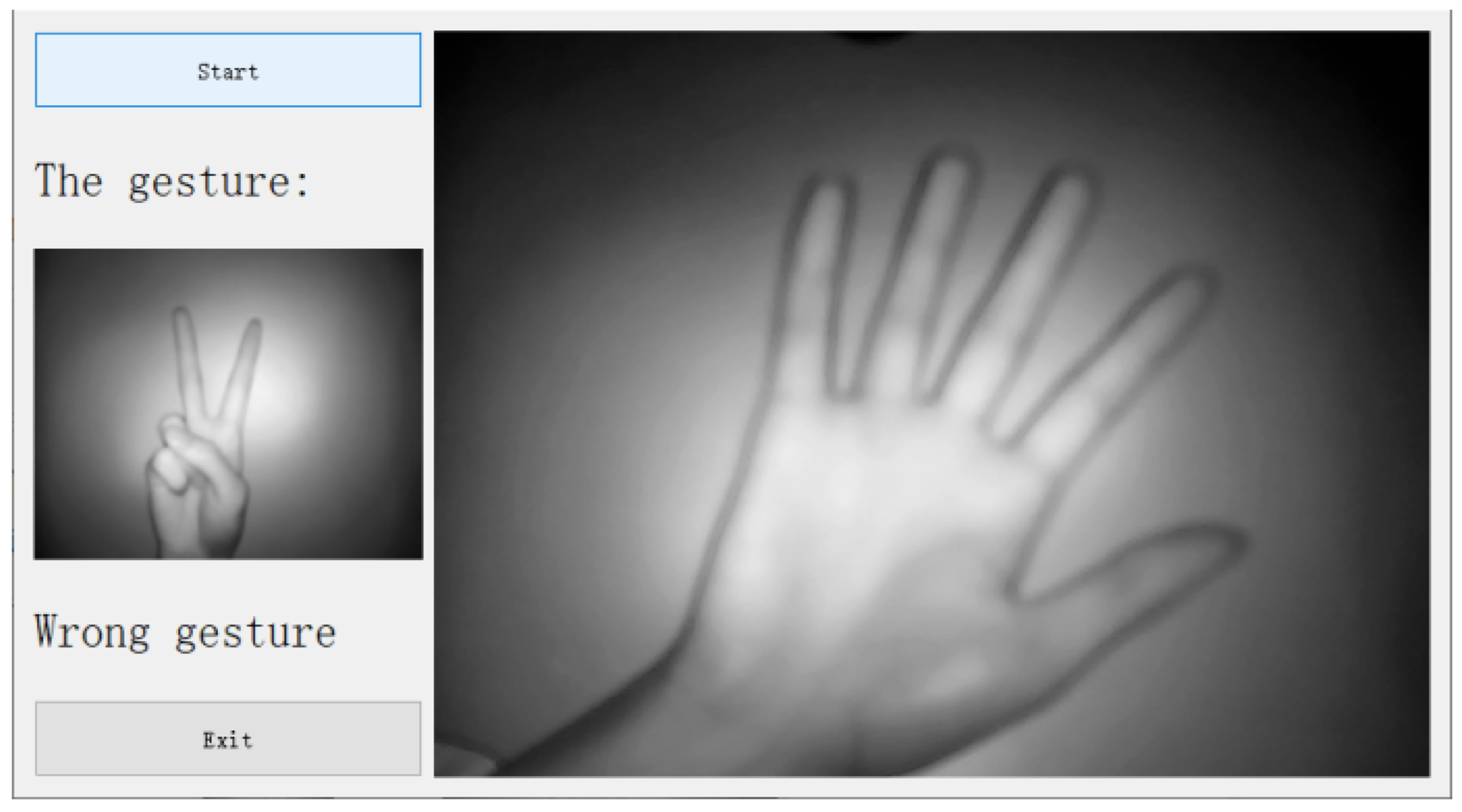

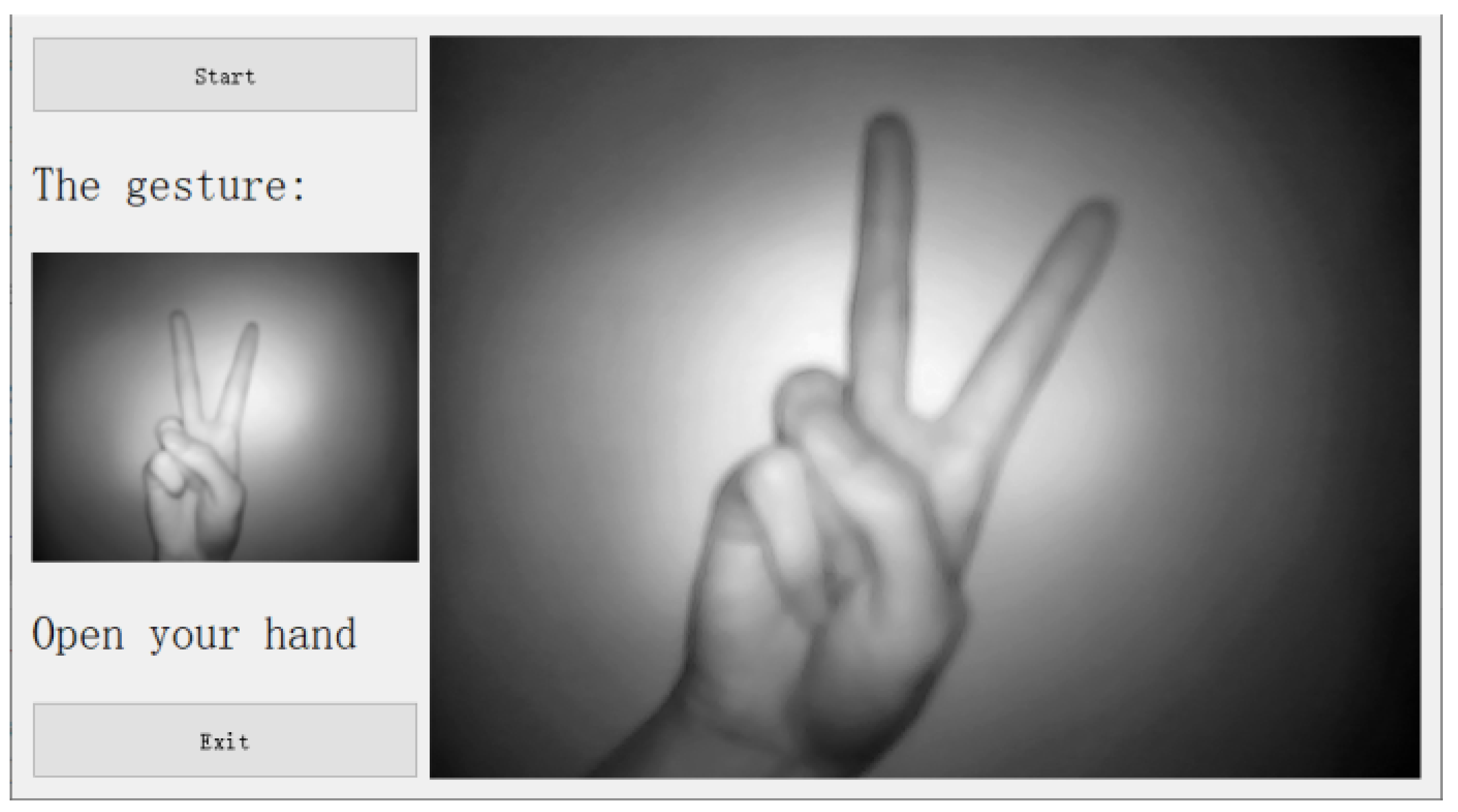

4.3. System Implementation

1. This paper conducts 30 experiments to validate the gesture recognition function. In 20 experiments, the users follow the system’s instructions and make the correct gestures; in the other 10, the users intentionally violate the instructions and make incorrect gestures. Across all 30 experiments, the system correctly judges whether the requested gesture was made (100% accuracy).

2. This paper also conducts 30 experiments to validate the hand tracking function. In 20 experiments, the users’ hands intentionally leave the camera’s field of view before palmvein recognition; in the other 10, the hands remain within the field of view until palmvein recognition is complete. In the first 20 experiments, the system detects that the hand has left the field of view in less than 1 s. In the last 10 experiments, the system correctly determines that the users’ hands remain within the field of view.
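The hand tracking experiments hinge on deciding, frame by frame, whether the tracked hand is still in view. A minimal sketch, assuming a key-point detector such as MediaPipe Hands [43] that reports landmarks as normalized (x, y) coordinates: a frame counts as in view only if a hand is detected and all of its key points lie inside the image, and the hand is judged to have left after `patience` consecutive out-of-view frames. `LeaveDetector`, `patience`, and the 30 fps default are hypothetical choices, not parameters taken from the paper.

```python
from typing import Optional, Sequence, Tuple

Landmark = Tuple[float, float]  # normalized (x, y); inside the frame means 0..1


def hand_in_view(landmarks: Optional[Sequence[Landmark]]) -> bool:
    """True if a hand was detected and every key point lies inside the frame."""
    if not landmarks:  # None or empty: no hand detected in this frame
        return False
    return all(0.0 <= x <= 1.0 and 0.0 <= y <= 1.0 for x, y in landmarks)


class LeaveDetector:
    """Flags the hand as gone after `patience` consecutive out-of-view frames.

    `fps` and `patience` are illustrative assumptions.
    """

    def __init__(self, fps: float = 30.0, patience: int = 5):
        self.fps = fps
        self.patience = patience
        self.missing = 0  # consecutive frames without an in-view hand

    def update(self, landmarks: Optional[Sequence[Landmark]]) -> bool:
        """Process one frame; return True once the hand is judged to have left."""
        self.missing = 0 if hand_in_view(landmarks) else self.missing + 1
        return self.missing >= self.patience

    def latency_s(self) -> float:
        """Time spanned by the `patience` frames the detector waits before firing."""
        return self.patience / self.fps
```

With 30 fps and `patience=5`, the detector waits about 5/30 ≈ 0.17 s of missing frames before firing; a larger `patience` tolerates brief detection dropouts at the cost of a longer delay.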

5. Conclusions and Future Work

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Guo, L.; Lu, Z.; Yao, L. Human-machine interaction sensing technology based on hand gesture recognition: A review. IEEE Trans. Hum.-Mach. Syst. 2021, 51, 300–309.

2. Gomez-Barrero, M.; Drozdowski, P.; Rathgeb, C.; Patino, J.; Todisco, M.; Nautsch, A.; Damer, N.; Priesnitz, J.; Evans, N.; Busch, C. Biometrics in the Era of COVID-19: Challenges and Opportunities. IEEE Trans. Technol. Soc. 2022, 3, 307–322.

3. Masi, I.; Wu, Y.; Hassner, T.; Natarajan, P. Deep face recognition: A survey. In Proceedings of the 2018 31st SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI), Parana, Brazil, 29 October–1 November 2018; pp. 471–478.

4. Jia, W.; Zhang, B.; Lu, J.; Zhu, Y.; Zhao, Y.; Zuo, W.; Ling, H. Palmprint recognition based on complete direction representation. IEEE Trans. Image Process. 2017, 26, 4483–4498.

5. Fei, L.; Lu, G.; Jia, W.; Teng, S.; Zhang, D. Feature extraction methods for palmprint recognition: A survey and evaluation. IEEE Trans. Syst. Man Cybern. Syst. 2018, 49, 346–363.

6. Zhong, D.; Du, X.; Zhong, K. Decade progress of palmprint recognition: A brief survey. Neurocomputing 2019, 328, 16–28.

7. Leng, L.; Teoh, A.B.J. Alignment-free row-co-occurrence cancelable palmprint fuzzy vault. Pattern Recognit. 2015, 48, 2290–2303.

8. Qin, H.; El-Yacoubi, M.A.; Li, Y.; Liu, C. Multi-scale and multi-direction GAN for CNN-based single palm-vein identification. IEEE Trans. Inf. Forensics Secur. 2021, 16, 2652–2666.

9. Magadia, A.P.I.D.; Zamora, R.F.G.L.; Linsangan, N.B.; Angelia, H.L.P. Bimodal hand vein recognition system using support vector machine. In Proceedings of the 2020 IEEE 12th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment, and Management (HNICEM), Manila, Philippines, 3–7 December 2020; pp. 1–5.

10. Wu, T.; Leng, L.; Khan, M.K.; Khan, F.A. Palmprint-palmvein fusion recognition based on deep hashing network. IEEE Access 2021, 9, 135816–135827.

11. Qin, H.; Gong, C.; Li, Y.; Gao, X.; El-Yacoubi, M.A. Label enhancement-based multiscale transformer for palm-vein recognition. IEEE Trans. Instrum. Meas. 2023, 72, 1–17.

12. Sandhya, T.; Reddy, G.S. An optimized elman neural network for contactless palm-vein recognition framework. Wirel. Pers. Commun. 2023, 131, 2773–2795.

13. Sun, Y.; Wang, C. Presentation attacks in palmprint recognition systems. J. Multimed. Inf. Syst. 2022, 9, 103–112.

14. Wang, F.; Leng, L.; Teoh, A.B.J.; Chu, J. Palmprint false acceptance attack with a generative adversarial network (GAN). Appl. Sci. 2020, 10, 8547.

15. Erden, F.; Cetin, A.E. Hand gesture based remote control system using infrared sensors and a camera. IEEE Trans. Consum. Electron. 2014, 60, 675–680.

16. Yu, L.; Abuella, H.; Islam, M.Z.; O’Hara, J.F.; Crick, C.; Ekin, S. Gesture recognition using reflected visible and infrared lightwave signals. IEEE Trans. Hum. Mach. Syst. 2021, 51, 44–55.

17. García-Bautista, G.; Trujillo-Romero, F.; Caballero-Morales, S.O. Mexican sign language recognition using kinect and data time warping algorithm. In Proceedings of the 2017 International Conference on Electronics, Communications and Computers (CONIELECOMP), Cholula, Mexico, 22–24 February 2017; pp. 1–5.

18. Mantecón, T.; Del-Blanco, C.R.; Jaureguizar, F.; García, N. A real-time gesture recognition system using near-infrared imagery. PLoS ONE 2019, 14, e0223320.

19. Kumar, P.; Gauba, H.; Roy, P.P.; Dogra, D.P. A multimodal framework for sensor based sign language recognition. Neurocomputing 2017, 259, 21–38.

20. Xu, H.; Leng, L.; Yang, Z.; Teoh, A.B.J.; Jin, Z. Multi-task pre-training with soft biometrics for transfer-learning palmprint recognition. Neural Process. Lett. 2023, 55, 2341–2358.

21. Park, H.J.; Kang, J.W.; Kim, B.G. ssFPN: Scale sequence (S²) feature-based feature pyramid network for object detection. Sensors 2023, 23, 4432.

22. Mujahid, A.; Awan, M.J.; Yasin, A.; Mohammed, M.A.; Damaševičius, R.; Maskeliūnas, R.; Abdulkareem, K.H. Real-time hand gesture recognition based on deep learning YOLOv3 model. Appl. Sci. 2021, 11, 4164.

23. Qi, W.; Ovur, S.; Li, Z.; Marzullo, A.; Song, R. Multi-sensor guided hand gesture recognition for a teleoperated robot using a recurrent neural network. IEEE Robot. Autom. Lett. 2021, 6, 6039–6045.

24. Jangpangi, M.; Kumar, S.; Bhardwaj, D.; Kim, B.G.; Roy, P.P. Handwriting recognition using wasserstein metric in adversarial learning. SN Comput. Sci. 2022, 4, 43.

25. Yang, Z.; Leng, L.; Wu, T.; Li, M.; Chu, J. Multi-order texture features for palmprint recognition. Artif. Intell. Rev. 2023, 56, 995–1011.

26. Leng, L.; Li, M.; Kim, C.; Bi, X. Dual-source discrimination power analysis for multi-instance contactless palmprint recognition. Multimed. Tools Appl. 2017, 76, 333–354.

27. Leng, L.; Zhang, J. Palmhash code vs. palmphasor code. Neurocomputing 2013, 108, 1–12.

28. Sahoo, J.P.; Prakash, A.J.; Pławiak, P.; Samantray, S. Real-time hand gesture recognition using fine-tuned convolutional neural network. Sensors 2022, 22, 706.

29. Saboo, S.; Singha, J. Vision based two-level hand tracking system for dynamic hand gestures in indoor environment. Multimed. Tools Appl. 2021, 80, 20579–20598.

30. Kulshreshth, A.; Zorn, C.; LaViola, J.J. Poster: Real-time markerless kinect based finger tracking and hand gesture recognition for HCI. In Proceedings of the 2013 IEEE Symposium on 3D User Interfaces (3DUI), Orlando, FL, USA, 16–17 March 2013; pp. 187–188.

31. Houston, A.; Walters, V.; Corbett, T.; Coppack, R. Evaluation of a multi-sensor Leap Motion setup for biomechanical motion capture of the hand. J. Biomech. 2021, 127, 110713.

32. Ovur, S.E.; Su, H.; Qi, W.; De Momi, E.; Ferrigno, G. Novel adaptive sensor fusion methodology for hand pose estimation with multileap motion. IEEE Trans. Instrum. Meas. 2021, 70, 1–8.

33. Nonnarit, O.; Ratchatanantakit, N.; Tangnimitchok, S.; Ortega, F.; Barreto, A. Hand tracking interface for virtual reality interaction based on marg sensors. In Proceedings of the 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Osaka, Japan, 23–27 March 2019; pp. 1717–1722.

34. Santoni, F.; De Angelis, A.; Moschitta, A.; Carbone, P. MagIK: A hand-tracking magnetic positioning system based on a kinematic model of the hand. IEEE Trans. Instrum. Meas. 2021, 70, 1–13.

35. Mueller, F.; Bernard, F.; Sotnychenko, O.; Mehta, D.; Sridhar, S.; Casas, D.; Theobalt, C. Ganerated hands for real-time 3d hand tracking from monocular rgb. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 49–59.

36. Han, S.; Liu, B.; Cabezas, R.; Twigg, C.D.; Zhang, P.; Petkau, J.; Yu, T.H.; Tai, C.J.; Akbay, M.; Wang, Z.; et al. MEgATrack: Monochrome egocentric articulated hand-tracking for virtual reality. ACM Trans. Graph. (ToG) 2020, 39, 87:1–87:13.

37. Menghani, G. Efficient deep learning: A survey on making deep learning models smaller, faster, and better. ACM Comput. Surv. 2023, 55, 1–37.

38. Sharifani, K.; Amini, M. Machine learning and deep learning: A review of methods and applications. World Inf. Technol. Eng. J. 2023, 10, 3897–3904.

39. Zhuang, Q.; Gan, S.; Zhang, L. Human-computer interaction based health diagnostics using ResNet34 for tongue image classification. Comput. Methods Programs Biomed. 2022, 226, 107096.

40. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

41. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

42. Huang, J.; Ren, L.; Zhou, X.; Yan, K. An improved neural network based on SENet for sleep stage classification. IEEE J. Biomed. Health Inform. 2022, 26, 4948–4956.

43. Zhang, F.; Bazarevsky, V.; Vakunov, A.; Tkachenka, A.; Sung, G.; Chang, C.L.; Grundmann, M. Mediapipe hands: On-device real-time hand tracking. arXiv 2020, arXiv:2006.10214.

44. Ghanbari, S.; Ashtyani, Z.P.; Masouleh, M.T. User identification based on hand geometrical biometrics using media-pipe. In Proceedings of the 2022 30th International Conference on Electrical Engineering (ICEE), Seoul, Korea, 17–19 May 2022; pp. 373–378.

45. Güney, G.; Jansen, T.S.; Dill, S.; Schulz, J.B.; Dafotakis, M.; Hoog Antink, C.; Braczynski, A.K. Video-based hand movement analysis of parkinson patients before and after medication using high-frame-rate videos and MediaPipe. Sensors 2022, 22, 7992.

46. Peiming, G.; Shiwei, L.; Liyin, S.; Xiyu, H.; Zhiyuan, Z.; Mingzhe, C.; Zhenzhen, L. A PyQt5-based GUI for operational verification of wave forcasting system. In Proceedings of the 2020 International Conference on Information Science, Parallel and Distributed Systems (ISPDS), Xi’an, China, 14–16 August 2020; pp. 204–211.

47. Renyi, L.; Yingzi, T. Semi-automatic marking system for robot competition based on PyQT5. In Proceedings of the 2021 International Conference on Intelligent Computing, Automation and Systems (ICICAS), Chongqing, China, 29–30 December 2021; pp. 251–254.

48. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

49. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

50. Liu, Z.; Mao, H.; Wu, C.Y.; Feichtenhofer, C.; Darrell, T.; Xie, S. A convnet for the 2020s. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 11976–11986.

51. Chen, J.; Kao, S.h.; He, H.; Zhuo, W.; Wen, S.; Lee, C.H.; Chan, S.H.G. Run, don’t walk: Chasing higher FLOPS for faster neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 12021–12031.

| Ref. and Year | Data | Method |
|---|---|---|
| [15] 2014 | Infrared + Visible light | Winner-take-all Hash |
| [16] 2021 | Infrared + Visible light | K-nearest-neighbors |
| [17] 2017 | MK | Dynamic Time Warping |
| [18] 2019 | LMC | Support Vector Machine |
| [19] 2017 | MK + LMC | HMM + BLSTM-NN |
| [22] 2021 | Visible light | YOLO v3 + DarkNet-53 |
| [23] 2021 | LMC | LSTM-RNN |
| [28] 2022 | MK | CNN with score-level fusion |

MK: Microsoft Kinect; LMC: Leap Motion Controller.

| Gesture | Thumb | Index | Middle | Ring | Little | Distance of Fingers |
|---|---|---|---|---|---|---|
| a | B | S | B | B | B | |
| b | B | S | S | B | B | |
| c | B | S | S | S | B | |
| d | B | S | S | S | S | |
| e | S | S | S | S | S | Separated |
| f | S | S | S | S | S | Together |
| g | B | S | B | S | S | |
| h | B | S | S | B | S | |
| i | B | B | S | S | S | |
| j | B | S | B | B | S | |
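The gesture table encodes each gesture by the states of the five fingers, and the distance between fingers is needed only to separate e from f. The paper recognizes gestures with a deep network applied to contours, so the lookup below is not its method; it is a hedged sketch that makes the table's encoding explicit, for example to label data or to check a finger-state estimator. Reading B as bent and S as straight is an assumption, and `GESTURE_TABLE` and `lookup_gesture` are hypothetical names.

```python
from typing import Optional, Sequence

# Finger states copied from the gesture table, ordered
# (thumb, index, middle, ring, little). "B"/"S" read as bent/straight (assumption).
GESTURE_TABLE = {
    "a": ("B", "S", "B", "B", "B"),
    "b": ("B", "S", "S", "B", "B"),
    "c": ("B", "S", "S", "S", "B"),
    "d": ("B", "S", "S", "S", "S"),
    "e": ("S", "S", "S", "S", "S"),  # fingers separated
    "f": ("S", "S", "S", "S", "S"),  # fingers together
    "g": ("B", "S", "B", "S", "S"),
    "h": ("B", "S", "S", "B", "S"),
    "i": ("B", "B", "S", "S", "S"),
    "j": ("B", "S", "B", "B", "S"),
}


def lookup_gesture(states: Sequence[str],
                   fingers_together: Optional[bool] = None) -> Optional[str]:
    """Map five finger states to a gesture label, or None if no unique match."""
    matches = [g for g, s in GESTURE_TABLE.items() if s == tuple(states)]
    if len(matches) > 1:
        # Only e and f share finger states; the finger distance decides.
        if fingers_together is None:
            return None
        return "f" if fingers_together else "e"
    return matches[0] if matches else None
```

Nine distinct finger-state patterns cover the ten gestures, which is why an all-straight hand remains ambiguous until the finger distance is known.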

| Network | Accuracy |
|---|---|
| CNN | 82.175% |
| VGG-16 [48] | 92.675% |
| GoogLeNet [49] | 89.800% |
| ResNet-34 [40] | 91.725% |
| SE-CNN | 90.950% |
| ConvNeXt-T [50] | 96.100% |
| ConvNeXt-S [50] | 98.625% |
| ConvNeXt-B [50] | 99.300% |
| FasterNet [51] | 97.775% |
| SE-ResNet-18 | 98.175% |
| SE-ResNet-34 (Ours) | 99.425% |
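SE-ResNet-34 attains the highest accuracy in this comparison (99.425%, ahead of ConvNeXt-B at 99.300%). The "SE" prefix refers to squeeze-and-excitation blocks [41] combined with ResNet-34 [40]. The plain-Python sketch below shows what one SE block computes on a C × H × W feature map: global average pooling (squeeze), a bottleneck of two fully connected layers with ReLU and sigmoid (excitation), and channel-wise rescaling. Where the blocks sit inside the residual units, the reduction ratio r, and the bias terms are not given here, so this illustrates the technique rather than reproducing the authors' network; a trainable model would use a deep learning framework. The names `matvec` and `se_block` are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def matvec(w: Matrix, v: List[float]) -> List[float]:
    """Multiply a weight matrix (rows = outputs) by a vector."""
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]


def se_block(x: List[Matrix], w1: Matrix, w2: Matrix) -> List[Matrix]:
    """Squeeze-and-excitation on a C x H x W feature map (biases omitted).

    w1 has shape (C/r, C) and w2 has shape (C, C/r) for reduction ratio r.
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    z = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in x]
    # Excitation: FC -> ReLU -> FC -> sigmoid yields a weight in (0, 1) per channel.
    hidden = [max(0.0, a) for a in matvec(w1, z)]
    s = [1.0 / (1.0 + math.exp(-a)) for a in matvec(w2, hidden)]
    # Scale: multiply every value of each channel by that channel's weight.
    return [[[s_c * v for v in row] for row in ch] for s_c, ch in zip(s, x)]
```

In the standard SE-ResNet design from [41], this reweighting is applied to the output of each residual branch before it is added to the identity shortcut, so the network can emphasize informative channels at little extra cost.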

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xu, J.; Leng, L.; Kim, B.-G. Gesture Recognition and Hand Tracking for Anti-Counterfeit Palmvein Recognition. Appl. Sci. 2023, 13, 11795. https://doi.org/10.3390/app132111795
