Abstract

With the increasing complexity of production systems and manufactured products, operators face high demands for professional expertise and long-term concentration. Augmented reality (AR) can support users in their work by displaying relevant virtual data in their field of view. In contrast to the extensive research on AR assistance in assembly, maintenance, and training, AR support in quality inspection has received less attention in the industrial context. Quality inspection is an essential part of industrial processes; thus, it is important to verify whether AR assistance can support users in these tasks. This work proposes an AR-based approach for quality inspection. For this, pilot AR software was designed and developed. The proposed AR approach was tested with end users. The task efficiency, the error rate, the perceived mental workload, and the usability of the AR approach were analysed and compared to the conventional paper-based support. The field research confirmed the positive effect on user efficiency during quality inspection while decreasing the perceived mental workload. This work extends the research on the potential of AR assistance in industrial applications and provides experimental validation to confirm the benefits of AR support on user performance during quality inspection tasks.

Keywords:

augmented reality; inspection; quality; welding; performance; analysis; efficiency; mental workload

1. Introduction

This research explores the potential of combining Augmented Reality (AR) technology with the visual inspection of weldments in manufacturing. AR is an advanced interactive technology that augments the real world with virtual objects that appear to exist in the same environment and that responds interactively in real time. With AR technologies, people can access digital data through a layer of information placed over the real environment. In recent years, hardware (HW) and software (SW) tools have advanced significantly, making this technology more widely available. The recent rise in the volume of scientific papers is consistent with this trend. Owing to its capacity to offer an interactive interface for visually represented digital content, augmented reality has begun to find applications in a number of industrial fields, which indicates the significance of this technology. Augmented reality can provide functional tools that support users in performing tasks and facilitate data visualisation and interaction by linking physical real space and user perception.

Quality inspection is an essential part of quality management in manufacturing. It places high demands on operators, particularly on their continuous attention to detail. Manufacturing faces numerous obstacles as a result of global market developments. To maintain their market position, companies are constantly under pressure to increase productivity and to ensure flawless quality and high flexibility at minimum cost. One of the tools to achieve this is the in-depth monitoring of inspection processes together with the digitisation and analysis of available standardised data.

The increase in complexity and variability in industry demands a corresponding process adaptation, resulting in frequent changes in manufacturing inspection [1].

For companies to adapt to changes in the market, the necessary changes must be introduced into production processes in a flexible and effective manner [2]. In modern manufacturing processes, the importance of quality inspection should not be underestimated. Inspection procedures are crucial in production systems since no system is flawless [3]. In the context of quality management, companies tend to use the quality control process to find any deviations before delivering the product to the customer [4]. Ineffective or under-qualified welding inspection can lead to the risk of serious failures due to low welding quality. The consequences of such failures can be very severe, as described in several recent studies [5,6].

In their study of reconfigurable production systems, Koren et al. [7] concluded that quality gates for inspection can be inserted directly at the various production stages to guarantee product quality. Operators or inspectors undertake quality control when they verify their work while it is being performed or when they check previously completed work to ensure its quality. To maintain or increase productivity and save costs, quality gates are used to stop the spread of defective items to other production stages [8]. In general, the inspection procedure calls for a high level of expertise and concentration. It must be carried out by an experienced worker with expert knowledge and the ability to interpret even complex documentation. Generally, operators are able to handle unpredictable situations well, adapt, and be flexible under different conditions using, among other things, tacit knowledge [9]. However, despite the high qualifications of these specialists, the inspection always relies on the inspector’s subjective assessment and therefore imposes a relatively high mental workload on the inspector. The level of concentration can thus fluctuate. Also, as in other industries, the generation of experienced workers is aging, and new workers are hard to find and slow to train to the level required for independent decision making. The inspection process in general does not add value to the product. Moreover, inspections conducted by human operators are costly in terms of human, financial, and time resources [10]. Traditional quality control techniques have certain limitations in the context of modern, highly variable small-batch production. There is thus a need for faster, more accurate, reliable, and flexible quality solutions [11].

The level of automation in today’s manufacturing companies is increasing [12]. Due to the demands for high quality, speed, and repeatability, automation is also starting to develop in processes that are highly dependent on manual work, such as quality control processes. However, efforts to automate quality inspection are running into problems in some types of manufacturing [13]. To improve quality and efficiency in inspection processes, ICT (information and communication technology), artificial intelligence models, and dynamic data need to be optimally integrated [14]. At the same time, there are many types of production or tasks for which full automation is not suitable or possible for technological or other reasons [15]. Emerging technologies, such as AR and intelligent systems, are providing manufacturing companies with new opportunities to manage quality [16].

The principal aim of this work is to investigate the effects of AR support on the weldment quality inspection process. To achieve this, the following research questions (RQs) were formulated:

RQ1: Can AR be used by weldment quality inspectors to effectively support inspection in an industrial environment?

RQ2: Can HHD (hand-held display) AR facilitate inspectors’ interaction with the inspected product and the process of data interpretation and decision making?

RQ3: How can the AR approach affect user performance during weldment quality inspection?

The main objective of the research is to propose an AR approach for weldment inspection in an industrial environment based on the theoretical research of general inspection models and their experimental verification with end users.

To meet these main objectives, the following sub-objectives have been defined:

- Conceptual design of a general AR approach for quality inspection;

- Design and development of a pilot AR software to support quality inspection;

- Conducting field experimental research with end users in a real working environment with the developed AR approach to validate it;

- Evaluation of the impact of the AR approach on inspector performance in terms of objective metrics compared to traditional paper-based inspection practices;

- Monitoring the impact of the AR approach on the perceived mental workload of users compared to traditional paper-based inspection practices;

- Evaluation of the usability of the proposed AR approach;

- Comparative analysis of the impact of the proposed AR approach on two groups of end users according to their experience in weldment quality inspection.

From the theoretical research, the following hypotheses were defined:

Hypothesis 1 (H1):

Using the proposed AR approach will reduce the time to complete the weldment inspection compared to conventional approaches based on printed documentation.

Hypothesis 2 (H2):

Using the proposed AR approach will reduce the inspection error rate compared to conventional paper-based approaches.

Hypothesis 3 (H3):

Using the proposed AR approach will reduce the mental workload perceived when inspecting a weldment compared to using conventional approaches based on printed documentation.

The first hypothesis, H1, concerns the difference in task completion time between users supported by the conventional approach and users supported by the proposed AR approach. It investigates whether, with the innovative AR approach, users can complete the inspection task in less time.

The second hypothesis, H2, also compares the conventional and the proposed AR approach, this time in terms of the quality of task execution, which is monitored in terms of the error rate observed during the inspection task.

The third hypothesis, H3, addresses the mental workload perceived by users during the inspection task and compares this workload depending on the approach used. It investigates whether a difference in perceived workload can be traced between the approaches used for inspection.
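Each of the three hypotheses compares one metric (completion time, error rate, or perceived workload) between the two approaches. The following minimal sketch shows one common way such a comparison could be computed, assuming that every participant is measured under both conditions so that the observations are paired. This section does not state which statistical test the authors used, so the paired t statistic and the function names `paired_differences` and `paired_t_statistic` are illustrative assumptions, not the authors' procedure.

```python
from math import sqrt
from statistics import mean, stdev


def paired_differences(paper, ar):
    """Per-participant differences (paper minus AR) for one metric."""
    if len(paper) != len(ar):
        raise ValueError("each participant needs one value per condition")
    return [p - a for p, a in zip(paper, ar)]


def paired_t_statistic(paper, ar):
    """Return the paired-samples t statistic and its degrees of freedom.

    A positive t means the metric was, on average, lower with AR
    than with paper (e.g. shorter time, fewer errors, lower workload).
    """
    diffs = paired_differences(paper, ar)
    n = len(diffs)
    if n < 2:
        raise ValueError("at least two participants are required")
    sd = stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("all differences are identical; t is undefined")
    return mean(diffs) / (sd / sqrt(n)), n - 1
```

The resulting statistic would then be compared against a t distribution with the returned degrees of freedom. If the differences are clearly non-normal, a non-parametric paired test may be more appropriate.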

This research brings a new approach to operator support in industrial quality inspection with an AR HHD. It examines the potential impact of AR assistance on the efficiency of inspection task completion by means of purpose-built pilot AR software and an end-user experiment. It follows the recommendations of previous authors to explore AR-based decision support for inspectors [17,18,19] and to address the importance of human factors in defect detection and prevention [20].

2. Related Work

This section presents the results of the theoretical research related to the topic. With the new industrial revolution, production systems are evolving, and so should inspection systems. The development of new technologies and the transformation of the industrial environment result in increasing efficiency, higher quality, and reduced costs [21,22,23]. Technological research focuses on adapting quality control to the new industrial revolution by providing faster, more accurate and reliable decision support systems [24]. To support the flexibility of inspectors, it is necessary to increase the availability of information and to link relevant quality characteristics as a basis for product quality assessment. The rising availability of holistic data, such as product and process specifications, historical measurement data, and production efficiency, should be used not only to assess geometric characteristics but also to strategically manage the manufacturing and inspection process in a closed loop [25,26]. This technological transformation will have a significant effect on the nature of work in the industrial environment, as the new working environment has a direct impact on the operator by creating new interactions between people and machines, as well as between the digital and physical worlds [23,27,28,29]. In this perspective, the worker becomes a skilled smart operator whose physical, sensory, and cognitive skills can be enhanced and complemented by new systems and tools equipped with advanced technologies [23,30,31].

According to Refs. [32,33], AR is one of the most promising techniques for enhancing the transfer of information between the digital and physical worlds. With its ability to integrate virtual information into the user’s perception of the real world, AR can support operators directly in their workplace. It can support operators in real time during manual operations and enable them to reduce the risk of human error caused by dependence on the operator’s memory, printed workflows, or printed technical drawings that must be interpreted in advance by the supervisor or technologist [32,33].

AR can improve operators’ skills by providing relevant data in the users’ field of view. Thus, it can play an important role in various industrial applications to support operators, including the following:

- Assembly [34,35,36,37];

- Maintenance [38,39,40,41,42];

- Training [43,44,45,46,47];

- Monitoring and quality assessment [48,49].

The link between inspection models and the Industry 4.0 concept was first identified by Imkamp et al. [50]. The authors have identified five main development areas of quality inspection that must respond to the trends and challenges of the fourth industrial revolution. The first three are mainly related to technological advances. The last two also involve a change in the methodological approach. These challenges include the following:

- Speed;

- Accuracy;

- Reliability;

- Flexibility;

- Holistic approach.

The authors evaluate the use of information systems capabilities, specifically AR, as part of the Industry 4.0 concept as a technological opportunity that has the potential to increase process efficiency and reduce errors by supporting the operator’s attention to the activity being performed by providing visual support [16,51]. By using AR, the operator can focus on the task at hand while receiving visual feedback. By displaying relevant information, AR tools can support workers in less routine tasks or relatively complex operations [31,52]. Augmented reality allows for the display of digital information in a real situation. This presents the potential for quality control processes by, for example, providing instructions or other related data. It can also lead to reduced costs, errors, and inspection time [53,54].

AR is a suitable method to support inspectors in performing internal company tasks due to the following capabilities, as presented by [16,55,56,57]:

- Sharing and disseminating expertise;

- Providing training and incentives to make their work more effective;

- Reducing errors caused by lack of experience, distractions, or other constraints.

From the available literature, it was found that there are prototypes in the early stages of development for augmented reality quality-inspection research. These applications have mostly been tested only under laboratory conditions. Despite the many potential benefits, AR tools that are ready for application are relatively unavailable on the market, and their real benefits in industrial production have not yet been demonstrated [58].

In the field of quality inspection, several systems and tools have been developed in recent years with AR support for many applications. For example, the available works address the following areas:

- Remote monitoring and control of industrial robots [59];

- Support of quality inspectors in the approval of product samples [60];

- Support of assembly operations and subsequent inspection of assembled mechanical components [61];

- Assistance in machine maintenance and inspection by displaying historical and current data of the inspected machine or workplace [62,63].

In the welding process, AR has been investigated as a tool to support the control of spot welding. The inspection was performed using a tablet mounted on a welding gun to display useful instructions for performing operations using AR [64]. Using AR projectors, virtual information was projected onto the metal parts of cars to highlight the location of the spot welding [65]. Other applications of AR technology for inspection activities include the inspection of reinforcement structures and the inspection of the correct positioning of tunnel segments [66,67].

Inspection operations include the detection of manufacturing and assembly errors and design discrepancies between the final physical products and the conditions prescribed by the engineering and design department. As the complexity of the product being inspected increases, the difficulty of the inspection operation rises, which may lead to a reduction in the efficiency of the inspector or risk of error. The correct execution of complex welding operations has a significant impact on the correct functionality and performance of the product and any error must be detected before the product is released to the end customer. In such a case, the aspect of annotating and formalising the detected errors and then sharing the information with other teams or the engineering department is also crucial.

The results of the research show that to adapt quality control to the needs of modern manufacturing, ICT plays a crucial role in the interaction between value-added manufacturing processes, monitoring systems, and control systems. Decision making, interdependence, and the seamless collaboration of quality control resources must be enabled to support compliance. Various studies have focused on investigating the development of ICT to ensure the integration of connectivity into quality control systems [68,69]. Cloud computing is mainly used to achieve this. Making the quality control system accessible to all actors in the product life cycle can allow for greater transparency and enable adjustments based on new control system needs.

In today’s industrial environment, the pressure to produce parts ever faster and with better quality is evident. In general, inspection processes are becoming increasingly important. It must be ensured that these processes are carried out in the shortest possible time and the most efficient manner. Failure to detect defects in a product can lead to significant time and financial losses. Currently, the human factor plays a major role in inspection processes. Responsibility is mainly placed on specialists in inspection activities. In this context, distraction, fatigue, or the lack of practice can lead to errors that jeopardise the effectiveness of the activity. The mental workload perceived by the user during the checking task impacts the productivity level and the consistency of performance. It is therefore an important part of this research.

To the best of our knowledge, no research or experiments have yet been conducted that test an AR-based inspection process for industrial weldments in terms of user efficiency, error rate, and the impact on the user’s mental workload. This research gap motivates our work, which aims to contribute to the development of AR technology in industrial applications.

Based on the conducted theoretical research, an AR approach to the control process was proposed by linking general models concerning the objectives of the work. This approach was tested in an experimental user study. For this study, AR software was designed and developed to guide the end user interactively through the inspection process and display all relevant data in a virtual scene in the user’s field of view. This reduces the need to divide attention between the scene being inspected and the drawings, and simplifies the interpretation of the required specifications.

3. Materials and Methods

This section describes the methodological approach that was chosen to answer the research questions and meet the defined objectives. The purpose of this research is to develop valid and value-based knowledge, both from a practical and a general theoretical perspective. The choice of methodological approach was based on the pragmatic view that more research on real industrial problems is needed in a collaborative environment between the academic and industrial world. The research presented in this article was initiated by an industrial need associated with a research gap and was conducted in close collaboration with industrial practice. To reduce the gaps between quality research, augmented reality, and industrial practice, the aim of this manuscript is to increase the understanding of how even partial forms of automation, specifically AR support and AR visualisations, can be used for the industrial quality control of weldments to achieve flexible advanced manufacturing with minimal or zero defects. After identifying the research gap, the main effort was to look for a link between the incorporation of AR in the inspection methodology and the effective work performance of the users.

Theoretical research was conducted to investigate general models and methodologies of inspection processes in industrial environments concerning the Industry 4.0 concept. It showed that many authors agree on the necessity of methodological changes to the quality inspection process, which could contribute to removing limitations in industrial practice. These constraints include the flexibility, speed, and reliability of decision-making processes. Modern emerging technologies, including the use of ICT, AI models, and effective data handling, can be used to facilitate methodological changes.

3.1. AR Pilot Software

For the experimental study, pilot AR software was designed and developed to support users in the inspection of welded products using AR. In this section, the design and development of this tool is briefly presented.

One of the first steps in the design of this tool was to collect experiences in the field of quality control of welded products from industrial practice. At the same time, the characteristics and approaches to inspection processes and their support were investigated in the context of practical experience. After examining the practical characteristics of inspection operations, a search for suitable tools for the actual design and development of an AR-supported technological solution was conducted. Attention was paid to game engines, with the help of which it is possible to design AR applications with an interactive user environment. Furthermore, the research focused on methods of tracked object recognition. For data storage, the possibilities of linking the proposed AR solution with a suitable database were investigated. The research also included the possibilities of using CAD software and graphics programs (see below) to design the necessary models and create visualisations and user interfaces. Last but not least, research on the available hardware devices and their possibilities when used in the experiment was carried out.

The proposed approach assumes efficient data management and communication between users. Google’s Firebase Database was chosen for this function. It is a powerful cloud-based database system that provides interfacing with Unity 3D and offers several features for data storage, synchronisation, and management. Firebase enables real-time data synchronisation and instant updates across devices and users, supporting the interactivity of connected applications. Firebase provides a cloud-based database that allows for data to be stored and managed on servers in a remote data centre. With the help of this database, user identity authentication is also performed.

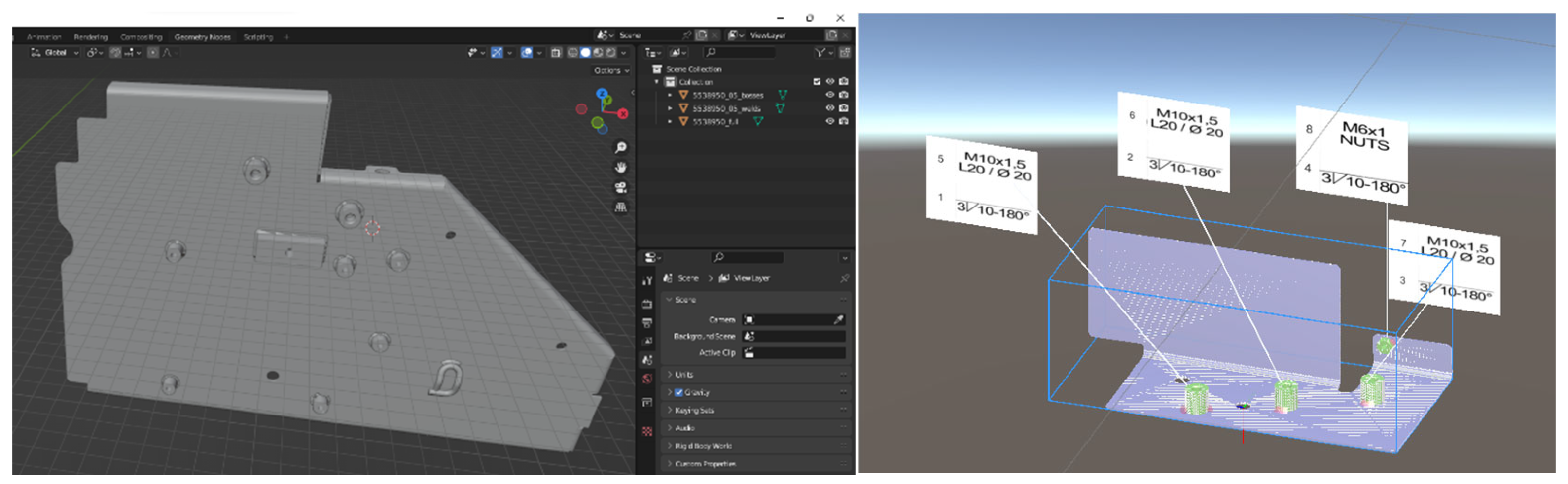

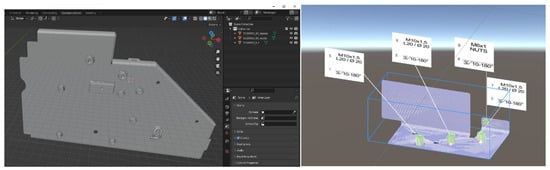

SolidEdge (ST10) and Blender (3.6.4 LTS) software were used to work with 3D CAD models. SolidEdge, a professional CAD tool, was used to create the necessary models for the experiment. Blender was used to create visualisations of the 3D models. GIMP (2.10.32) and Adobe Illustrator (CS2) were used to create and edit the 2D graphics, the visualisation elements, and the user interface. An example of data creation is shown in Figure 1.

Figure 1.

An example of data creation in Blender and Unity (Czech dimension style).

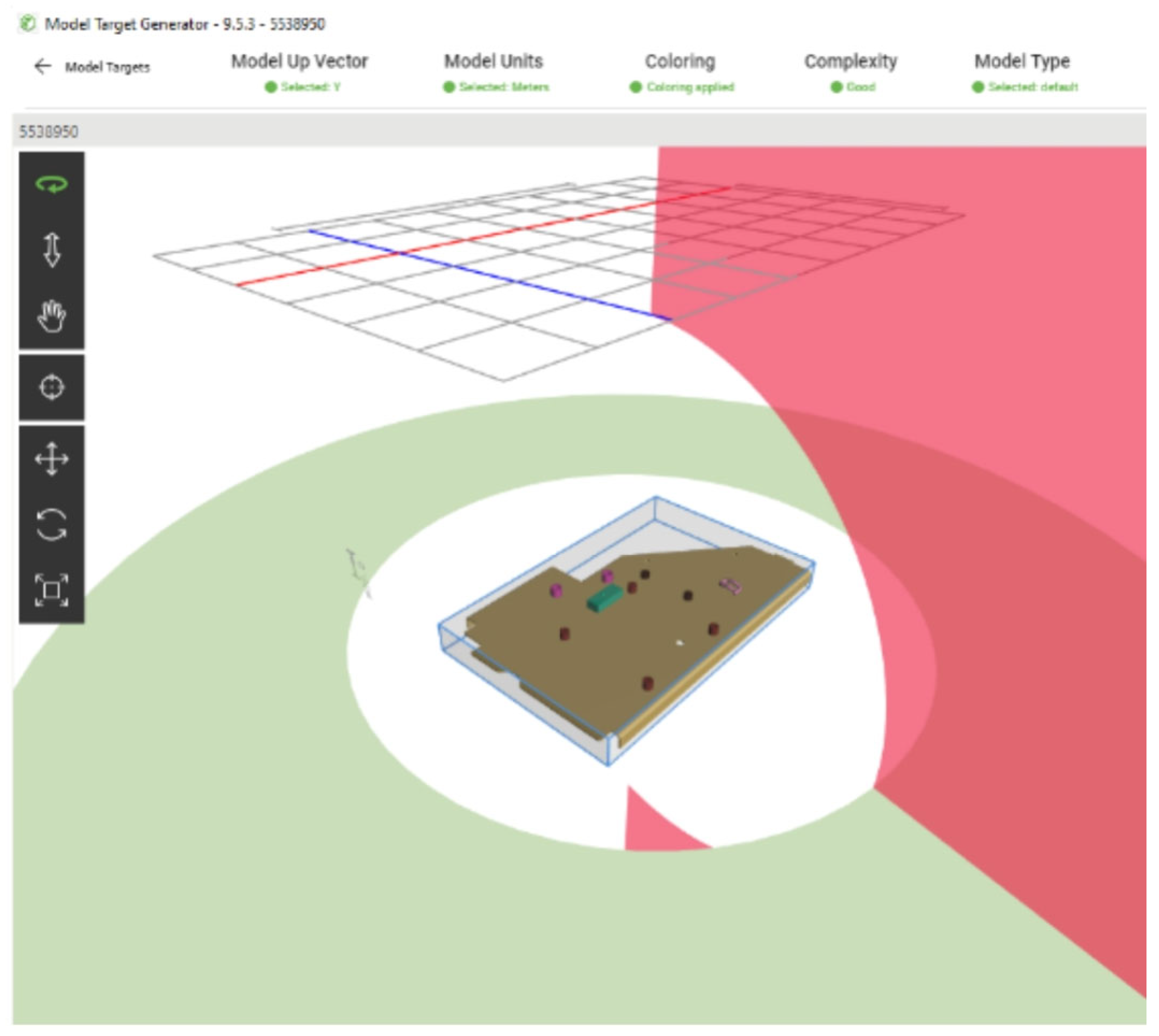

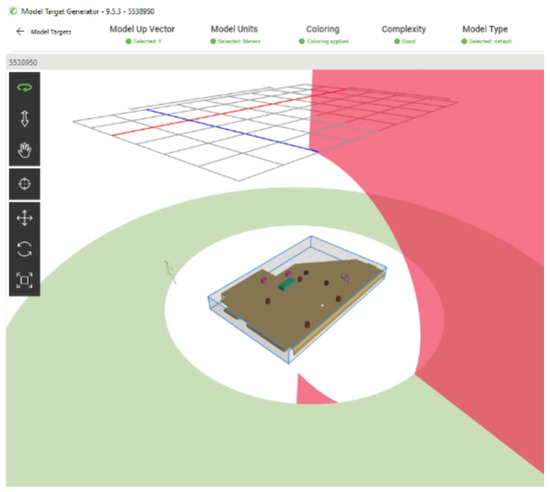

A combination of Unity 3D and Vuforia Engine was chosen. Vuforia is an AR-dedicated extension for Unity 3D that performs real-time visual recognition and tracking of markers and objects. It allows objects to be identified and linked with virtual elements to create interactive and realistic AR scenes. In this research, an object recognition method is used that makes it possible to recognise an object without artificially added markers. The tracked object itself then acts as a marker: Vuforia detects and recognises it, tracks it in space, and anchors the programmed visualisation to the real scene. For this method to work properly, a database of parts was created in the Vuforia Model Target Generator extension, in which the prepared models were configured for use in AR visualisations. Examples of this configuration are shown in Figure 2.

Figure 2.

Model configuration in Vuforia.

Unity 3D is an integrated development environment that offers a wide range of features and tools for creating simulations and applications. It was used to create a tool that interfaces with both the Vuforia and Firebase databases and guides the inspector interactively through the inspection process, supporting them in identification, in the interpretation of prescribed specifications, and in deciding whether the inspected product conforms to the quality objectives. Vuforia is integrated within Unity to create the AR experience: its functionality is accessed and controlled through Unity’s scripting interface, allowing interactive AR experiences to be built within the Unity environment. Firebase is also used from within the Unity development environment but is not linked as directly as Vuforia, because it is a separate cloud-based service provided by Google. Unity and Firebase are linked through Firebase’s SDKs (software development kits) and APIs (application programming interfaces) for Unity. This integration enables data storage, synchronisation, and user authentication within a Unity application using Firebase services.

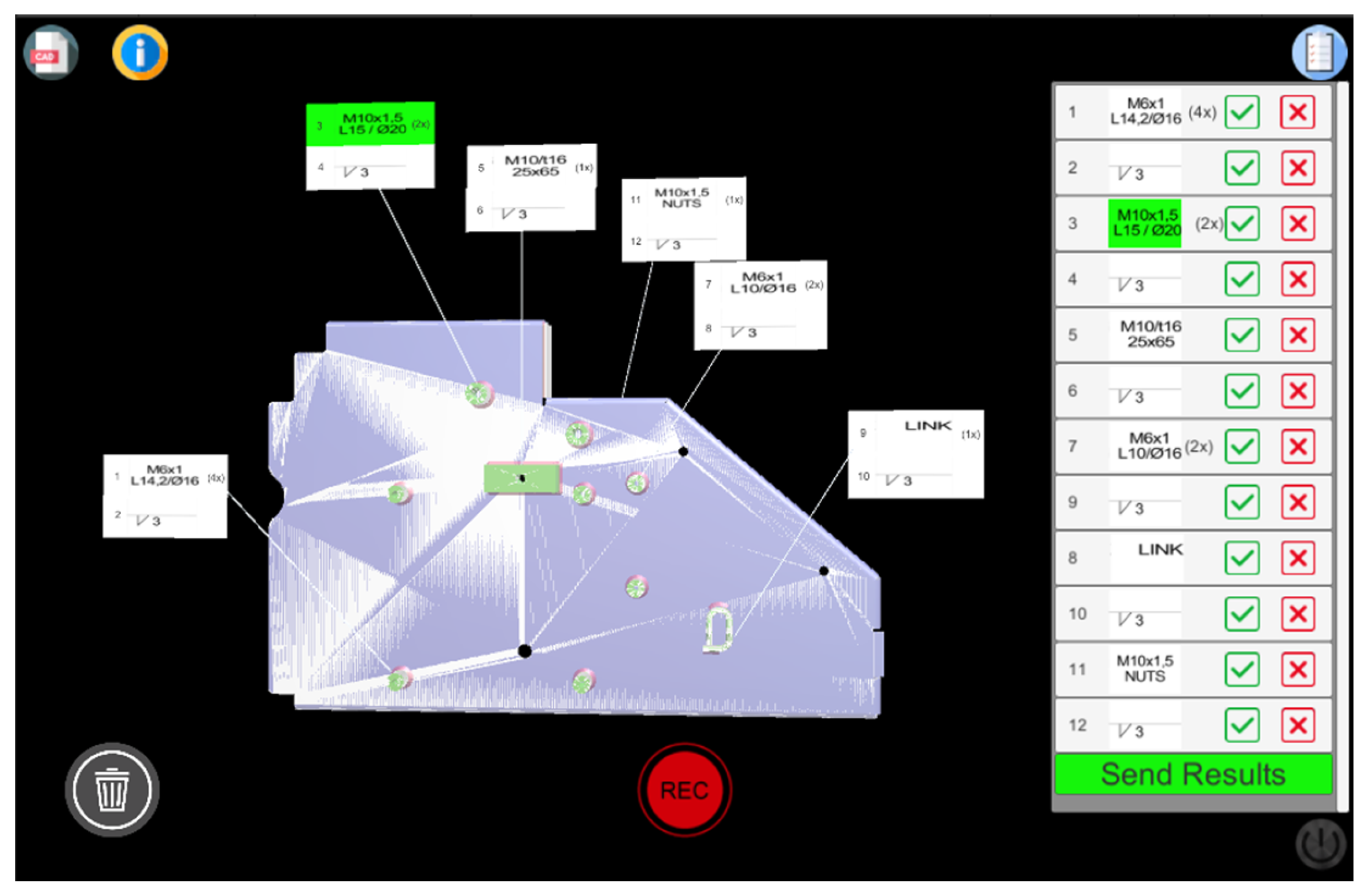

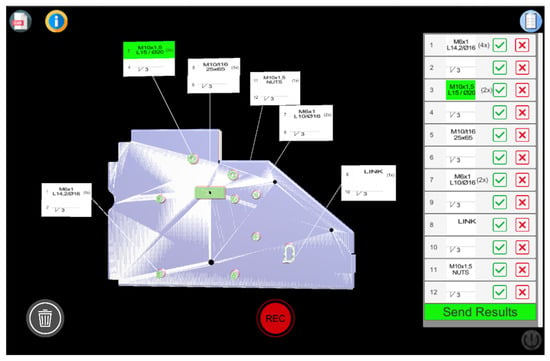

The developed tool is compiled as an application for Android portable devices; it can also be built for iOS devices. The hand-held device (HHD) variant was chosen because of users’ high familiarity with these devices. The nature of the task and the design of the experiment do not require both hands to be free; therefore, the use of the HHD is not as limiting as, e.g., in assembly operations. The tablet used for the experiment was a Samsung Galaxy Tab8 (11” LTPS LCD display, 5G, 2.99 GHz, 2.4 GHz, 1.7 GHz processor). The choice of a tablet resulted from an initial pilot study in which users agreed on a preference for a larger display.

Once the application is launched, the identity of the registered user is verified in the linked database, or a new user identity is created and uploaded to the database. After the user logs in, the AR module of the developed software automatically starts the live camera feed of the real scene, which is the input to the tracking and detection module. Using this video stream, the functions of the Vuforia SDK perform object detection and recognition. After the object is detected, the tracking module provides orientation in the scene: the position of the device relative to the tracked object is determined, and the programmed visualisation and infographics, spatially anchored to the object, are displayed in the real scene.

This AR scene also includes an interactive checklist that represents the inspection plan and guides the inspector through the inspection with a colour visualisation. A preview of the scene with the checklist is shown in Figure 3. The results entered in the checklist are sent to and stored in the linked database. If an error is detected, the inspector can add an annotation describing the deviation from the planned quality and the disposition of the part to the form sent to the database. At the same time, the AR scene shows a visualisation of the 3D CAD model overlaid on the real part being inspected. This allows for quick initial orientation on the inspected object and a fast comparison and evaluation of the quality of the inspected part.
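The checklist workflow described above (inspection points, a pass/fail result per point, an optional annotation, and a record sent to the linked database) can be illustrated with a small data model. This is only a sketch: the actual tool was built in Unity, and the class names `CheckItem` and `InspectionRecord`, the field names, and the JSON payload layout are hypothetical and are not taken from the developed software or from the Firebase schema.

```python
import json
from dataclasses import dataclass, field, asdict
from typing import List, Optional


@dataclass
class CheckItem:
    """One inspection point of the checklist (hypothetical structure)."""
    description: str
    passed: Optional[bool] = None  # None means "not inspected yet"
    annotation: str = ""           # inspector's note on a detected deviation


@dataclass
class InspectionRecord:
    """Checklist result for one inspected part (hypothetical structure)."""
    user_id: str
    part_id: str
    items: List[CheckItem] = field(default_factory=list)

    def is_complete(self) -> bool:
        """True when the checklist is non-empty and every point was inspected."""
        return bool(self.items) and all(i.passed is not None for i in self.items)

    def conforms(self) -> bool:
        """True only when every point was inspected and passed."""
        return self.is_complete() and all(i.passed for i in self.items)

    def to_json(self) -> str:
        """Serialise the record, e.g. as a payload for a linked database."""
        payload = asdict(self)
        payload["conforms"] = self.conforms()
        return json.dumps(payload)


# Usage: one part with a detected deviation and an annotation.
record = InspectionRecord(
    user_id="inspector-01",
    part_id="part-A",
    items=[CheckItem("All components present"),
           CheckItem("Welds in correct positions")],
)
record.items[0].passed = True
record.items[1].passed = False
record.items[1].annotation = "Missing weld"
payload = record.to_json()
```

Keeping the "not inspected yet" state separate from a failed point means an incomplete checklist is never reported as conforming.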

Figure 3.

A preview of the control scene with the checklist (Czech dimension style).

3.2. Participants

A total of 40 participants from industrial practice took part in the main user experiment. They were divided into two groups of 20 according to their level of experience with weldment inspection.

Group 1 (G1) consisted of 20 workers who had experience in weldment inspection. It was composed of 15 men and 5 women. Their mean age was 40.4 years with a standard deviation of 10.3. The minimum age was 22, and the maximum age was 54. All the workers stated that they had at least 1 year of weld inspection experience.

Group 2 (G2) was composed of 20 workers who had no experience in weldment inspection; however, all of them had more than 1 year of experience in industrial production. This group consisted of 13 men and 7 women. Their mean age was 41.8 years with a standard deviation of 8.46. The minimum age was 29, and the maximum age was 61.

All participants reported having experience with smartphones or tablets. A total of five of them stated that they had used AR at least once, and two of them stated that they had used VR at least once.

3.3. Experiment Design

This research works with two factors: one is the medium to support quality inspection (AR-enabled or paper-based), and the second factor is the level of user experience. In the AR variant, the instructions were displayed by the HHD variant, i.e., through visualisations on portable devices.

The products to be inspected were divided into two sets. Each set contained a total of five types of products in five pieces each; thus, each set consisted of a total of twenty-five items for inspection. These products were chosen so that the dimensions would allow for relatively easy handling during the inspection tasks. Due to the nature of the inspection operations, there is not as much emphasis on the freedom of both hands as, e.g., in assembly operations, during which both hands need to be free to move, and HHD is therefore more limiting. The products were chosen so that they are relatively easy to handle on the inspection board and do not require the use of other auxiliary equipment, such as a crane, to move them. These parts ranged in size from 300 to 800 mm and weighed between 2 and 7 kg. The weldments consisted of 5–17 welded components.

As part of the experiment, a check on parts welded using the MIG method (metal inert gas) was performed to verify the following points:

- All components are selected correctly and none are missing;

- All components are placed in the correct positions;

- All welds are placed in the correct positions in the correct number, size, length, spacing, and quality.

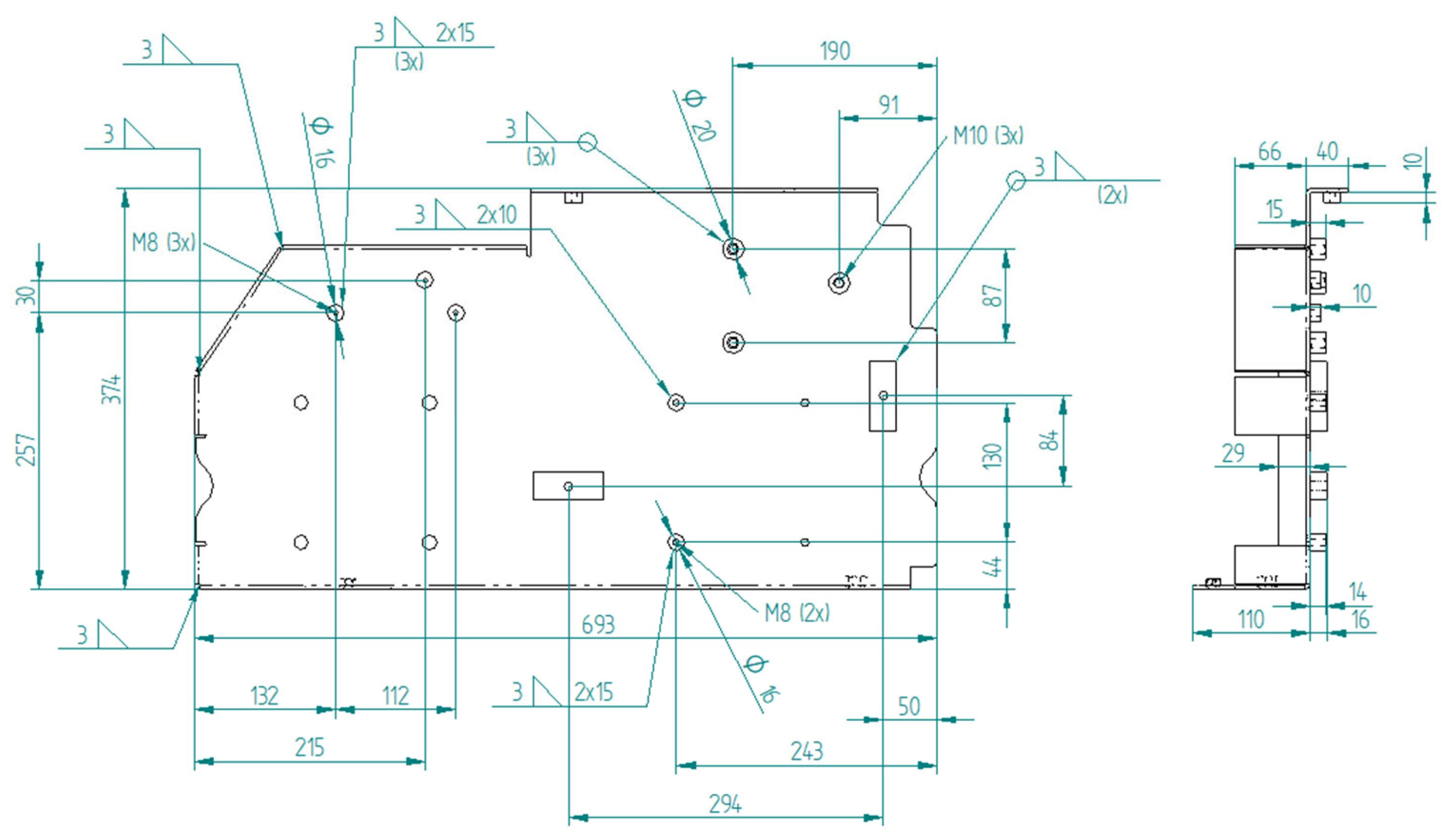

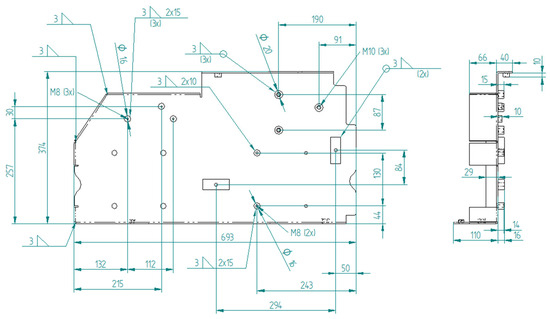

The basic shape of each part is formed by a sheet metal plate with bends from a bending press. Components such as welding nuts, pins, or bosses of various lengths and diameters with different holes or threads are then welded onto this base sheet. In addition to these components, other sheet metal components can be welded to the base sheet. The parts for both sets were chosen to present the same challenge to the inspectors. In addition to being very similar in design, the parts in both sets were chosen so that each set required an inspection of the same total number of points. The detailed drawings of the parts tested are subject to confidentiality and cannot be published in full. An illustrative example is therefore provided to show the complexity of the parts in the experiment. For simplicity, only basic drawing views and dimensions (all in mm) are shown in Figure 4; they are not complete production drawings.

Figure 4.

A preview of the inspected part in the experiment (all dimensions in the drawing are in mm).

Based on interviews with quality managers, these parts involve relatively complex inspection tasks. Their inspection often requires going through several levels of drawings and BOMs, navigating multiple sheets of very similar drawings, and correctly interpreting auxiliary drawing details and sections. Some parts are very similar to each other, and it is common in the manufacturing process for small batches of several types of such very similar products to be rotated during a shift. This is why mistakes are made when a welder, for example, selects a pin with the wrong length, omits a welding nut, or welds it in the wrong position. The welder may also choose an incorrect weld layout that does not correspond to the drawing documentation or omit a weld. Often, this type of error does not affect the entire production batch but, due to inattention, only some pieces in it. It is therefore challenging for inspectors to reliably detect this type of error, to maintain maximum attention during the inspection of the entire batch, and to perform the inspection in the shortest possible time so that the value stream of the production process is not interrupted.

Based on observation and analysis of the internally captured defect records, the following defects were randomly placed in the control set:

- Missing welding component;

- Component welded in the incorrect position;

- Nut welded from the opposite side;

- Missing weld;

- Incorrectly spaced welds.

In the whole set of 25 products, 20 pieces were completely correct (80%) and 5 pieces (20%) had one of the above errors. The same proportion of random distribution of errors also occurred in the second set of control products.
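The composition of one control set can be illustrated with a minimal sketch. It assumes that the five defective pieces are drawn uniformly at random from the 25 items and that each receives one randomly chosen defect type from the list above. The function name `build_control_set` and its parameters are hypothetical and are not part of the tooling used in the experiment.

```python
import random

# Defect types listed in the text.
DEFECT_TYPES = [
    "missing welding component",
    "component welded in the incorrect position",
    "nut welded from the opposite side",
    "missing weld",
    "incorrectly spaced welds",
]


def build_control_set(n_types=5, pieces_per_type=5, n_defective=5, seed=None):
    """Return a list of (product_type, piece_no, defect_or_None) tuples.

    Assumption: defective pieces and their defect types are chosen uniformly
    at random; the source only states that placement was random.
    """
    rng = random.Random(seed)
    items = [
        (product_type, piece_no)
        for product_type in range(1, n_types + 1)
        for piece_no in range(1, pieces_per_type + 1)
    ]
    defective = set(rng.sample(range(len(items)), n_defective))
    return [
        (t, p, rng.choice(DEFECT_TYPES) if i in defective else None)
        for i, (t, p) in enumerate(items)
    ]
```

With the default arguments, the sketch yields 25 items of which 5 (20%) carry a defect, matching the proportion described above.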

All probands performed the control experiment in two scenarios. In one of them, they inspected the control set using the proposed AR approach and the developed AR software, and in the other they inspected the second control set using the traditional paper-based approach.

3.4. Procedure

The first variant tested in the experiment was a conventional approach to weldment inspection. In this approach, the inspector relied only on printed inspection documents and performed the inspection without any automation. After the product was handed over, the inspector started the identification process. Once the product had been identified, a quality check of the product was performed using technical documentation and control plans. First, the inspector needed to interpret the specifications and quality characteristics prescribed by the designers or technologists. After interpreting these requirements, the quality of the part was compared with the prescribed standards from the technical documentation, and conformity or deviation was assessed. In case of conformity, the part was released for further operations. If a deviation was found, it was returned for repair. The results of this process were recorded in a printed checklist.

In this variant, instructions and information were conveyed to users in the traditional manner of a printed drawing and control plan. The control plans and general inspection steps commonly used for the parts in the experiment are not suitable for inexperienced inspectors. This research investigates AR-supported inspection approaches in terms of, among other things, the workers’ experience with the process. Therefore, participants with and without weldment inspection experience took part in the experiment. Instead of conventional inspection documents, modified inspection plans suitable for less experienced participants were created for the inspected parts. These plans were tested on a pilot group of probands who did not have extensive experience in reading welding drawings. All confirmed that the visual layout of the control plan was clear and that they did not experience any difficulty in navigating the plan.

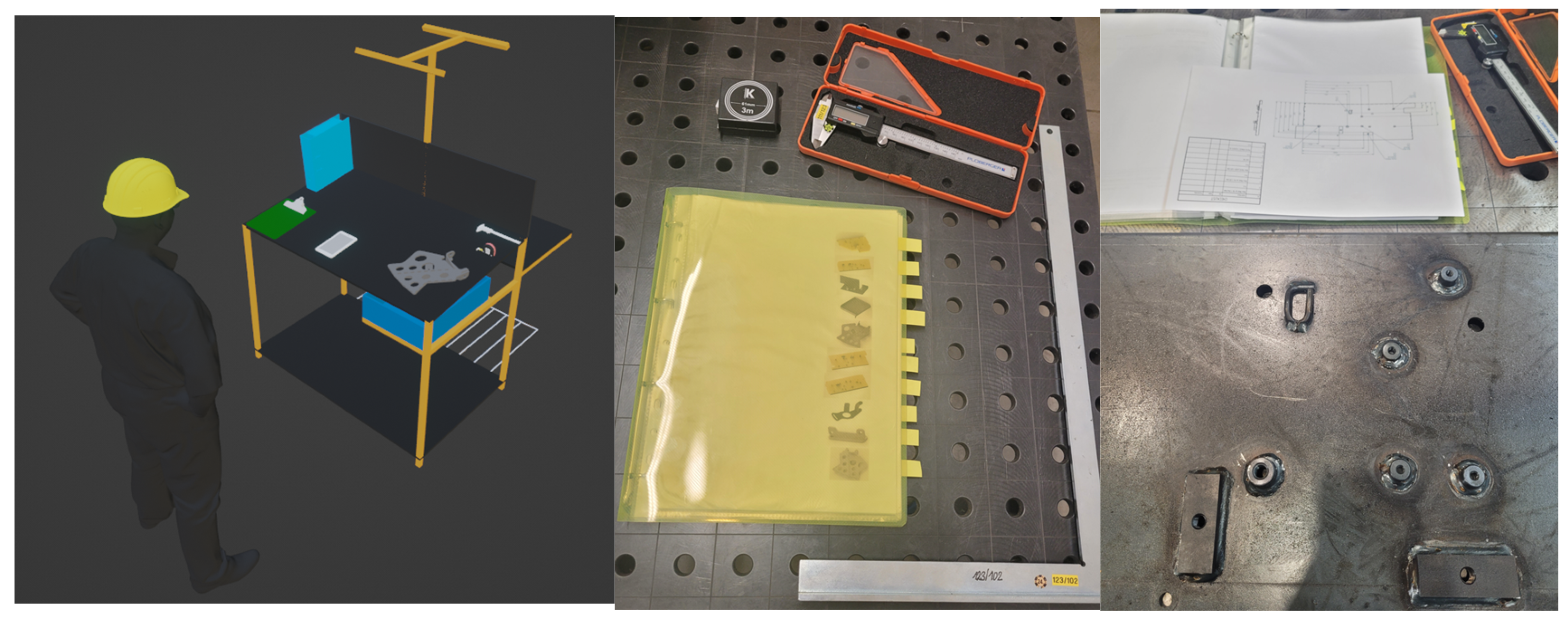

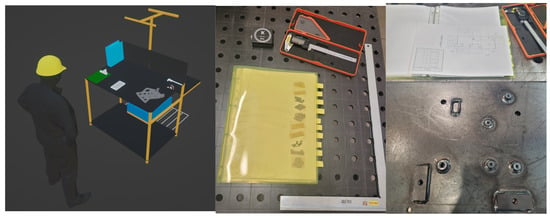

From the control plans produced, a set of documentation was created for product inspection in the experiment, which was the main basis for the implementation of the conventional quality inspection methodology. The preparation of the workstation is shown in Figure 5.

Figure 5.

Workstation for conventional paper-based inspection.

The materials prepared in this way meant that the inspection process was carried out during the experiment without significant downtime or time loss. With the help of the prepared plans, the users were able to identify the part in a relatively short time, find the relevant printed documentation, and start interpreting it. This interpretation was then compared with the quality level found on the real piece.

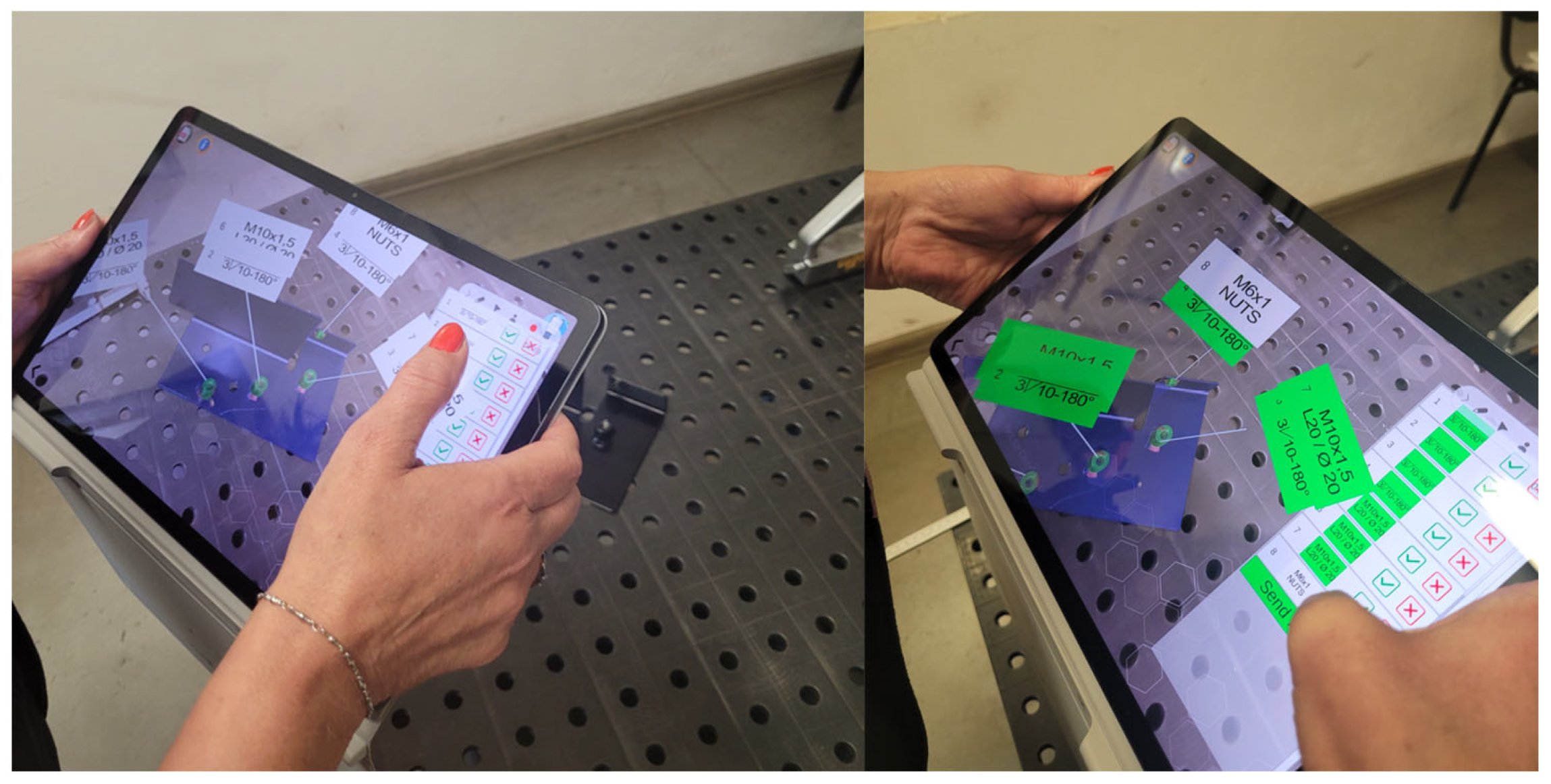

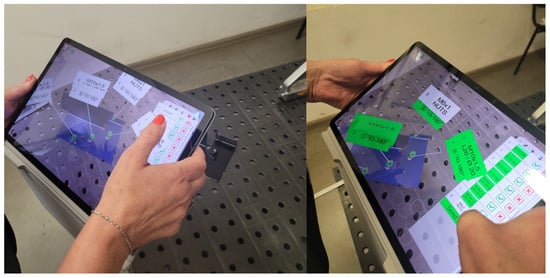

The second scenario was the proposed AR inspection approach. In this case, the participant was guided interactively through the process from the beginning with the support of the developed AR software.

Once the AR scene was started, a video recording of the real scene began as the input for the AR module of the software used. It processed the AR scene with the help of the Vuforia Engine SDK functionalities. With the Vuforia extension, the tracked object acts as a target without the need to add an artificial marker. After the detection and recognition module recognised the tracked object, it deployed the programmed 3D visualisation and user interface created in Unity 3D into the real scene with the help of the tracking module. This environment interactively guides the user through the inspection plan, projects an ideal CAD model onto the real part for an easy comparison of the basic features, and displays the interpretation of the parameters in space on the inspected piece. Conformance decisions are dependent on the judgment of the inspector, who records conformance or deviation from the quality objectives on an AR checklist. This interactively marks the already checked characteristics in an AR scene anchored above the real product being inspected. Once the inspection is complete, the results of the checklist are uploaded to the Firebase database, and if a deviation from the quality is captured, an annotation describing the deviation is added to the checklist before uploading. An example of the AR approach is shown in Figure 6.

Figure 6.

A workstation while performing inspection with an AR approach.
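The checklist handling described for the AR scenario can be sketched as a small data structure. This is an illustrative assumption only: the class and field names (`InspectionRecord`, `CheckItem`, `part_id`, `inspector_id`) are hypothetical, the actual software was built in Unity 3D, and the upload to the Firebase database is deliberately not shown. The sketch only captures the rule that a deviation carries an annotation before the record is serialised.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class CheckItem:
    characteristic: str
    conforms: bool
    annotation: str = ""  # description of the deviation, if any


@dataclass
class InspectionRecord:
    part_id: str        # hypothetical identifier of the recognised part
    inspector_id: str   # hypothetical identifier of the proband
    items: list = field(default_factory=list)

    def add(self, characteristic, conforms, annotation=""):
        """Record the inspector's decision for one characteristic."""
        if not conforms and not annotation:
            raise ValueError("a deviation needs an annotation before upload")
        self.items.append(CheckItem(characteristic, conforms, annotation))

    @property
    def released(self):
        """True only if every checked characteristic conforms."""
        return all(item.conforms for item in self.items)

    def to_json(self):
        """Serialise the record; the transport to a database is out of scope."""
        payload = asdict(self)
        payload["released"] = self.released
        return json.dumps(payload, sort_keys=True)
```

The conformance decision stays with the inspector; the structure only stores it together with the optional deviation note.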

Both the G1 and G2 groups performed the control experiment using the described conventional approach and the proposed AR approach. In total, 50 pieces were inspected in the experiment, which included 10 types of products. One inspection set of 25 products was used for each tested approach. Both control sets included products comparable in complexity, design, and dimensions. They contained the same number of parts with the same error ratio. Group G1 first inspected one set with the AR approach, then the other set in the context of the conventional approach. Group G2 inspected the first set with the support of the conventional approach and then the second set using the proposed AR approach. Thus, all probands inspected all parts and used both inspection approaches. The individual parts were presented to the probands for inspection in random order.
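The counterbalanced order of approaches can be sketched as follows. The sketch assumes that both groups receive the two physical sets in the same sequence and that only the order of approaches differs; the source does not state this explicitly. The name `session_plan` and its parameters are hypothetical.

```python
import random

# Order of approaches per group, as described in the text.
APPROACH_ORDER = {"G1": ("AR", "paper"), "G2": ("paper", "AR")}


def session_plan(group, first_set, second_set, seed=None):
    """Return [(approach, shuffled_parts), ...] for one participant.

    Parts within each set are presented in random order.
    """
    rng = random.Random(seed)
    plan = []
    for approach, parts in zip(APPROACH_ORDER[group], (first_set, second_set)):
        shuffled = list(parts)
        rng.shuffle(shuffled)
        plan.append((approach, shuffled))
    return plan
```

Calling `session_plan("G2", range(25), range(25, 50))` would, under these assumptions, return the paper-based phase first and the AR phase second, each with its 25 parts in random order.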

A brief training session was conducted at the beginning of each phase of the experiment. The participant was first briefly introduced to the process and the operation of the AR application or the structure of the paper-based inspection documentation. Subsequently, the user was given time to test the functions of the AR software. It was explained to the probands how the experiment would proceed, and they were instructed to perform the checks in the shortest possible time while being as accurate as possible with the least number of errors. This phase lasted 5–10 min.

The main phase of the experiment, i.e., the inspection of the two control sets, was then carried out. The results from the AR application were continuously uploaded directly to the Firebase database, while paper results were collected at the end of the process. Time was tracked, and feedback and comments from probands were collected during the control. After completing the review of the first 25 pieces, probands were asked to complete the NASA TLX (Task Load Index) questionnaire to rate the mental workload they perceived during the process. They then proceeded to check the second set of 25 parts. After completing this part, they again completed the NASA TLX. In addition, probands completed the SUS (System Usability Scale) questionnaire, in which they rated the usability of the proposed approach, and an anonymised questionnaire, in which they provided information about their age, gender, work experience, their relationship with IT and technology, and their experience with AR and VR. Subsequently, an interview was conducted with each proband to find out their opinion about the experiment conducted, which method they would prefer in their daily work, how they rate the clarity and potential of each approach, and what they see as the advantages, disadvantages, and limitations of each approach.

3.5. Data Collection

Users were monitored for the total time taken to complete the inspection of the full set with the AR approach and the second set using the conventional approach described above. In the measured times, only the times from the initiation of the piece check to the completion of the check were considered. The time required for manipulation between checks was not included in the total time.
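The timing rule above can be expressed as a short sketch: only the intervals between the start and the end of each piece check are summed, so handling time between checks is excluded. The timestamp format (seconds as floats) and the function name are assumptions made for illustration.

```python
def total_inspection_time(checks):
    """Sum per-piece check durations in seconds.

    `checks` is a list of (start, end) timestamps; gaps between consecutive
    checks (part handling) do not contribute to the total.
    """
    total = 0.0
    for start, end in checks:
        if end < start:
            raise ValueError("a check cannot end before it starts")
        total += end - start
    return total
```

For example, three checks recorded as (0, 40), (55, 90), and (100, 130) give 105 s, although 130 s elapsed on the clock.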

In addition to task completion time, the error rate was also monitored for users. Errors were randomly placed on the inspected parts, and probands were asked to detect them. The same number and types of errors were distributed in both sets. For each participant in both inspection scenarios, the number of errors they made while inspecting the parts was recorded. In most cases, this was an error where the proband missed a defect on the part. In one case, there was an error where the proband marked the correct part as defective.

In addition to the overall task completion time and error rate, the level of mental workload perceived by users during the experiment was evaluated. The NASA TLX standardised method was used to assess this workload. Specifically, the RTLX (Raw Task Load Index) variant was used. Probands completed this mental workload assessment questionnaire after each of the two phases.
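A minimal sketch of raw TLX scoring is given below. The raw variant drops the pairwise weighting of the original NASA TLX and aggregates the six subscale ratings directly. The rating scale and whether the ratings were summed or averaged are not stated in this section, so both aggregations are offered as assumptions; the function name is hypothetical.

```python
TLX_SUBSCALES = (
    "mental demand",
    "physical demand",
    "temporal demand",
    "performance",
    "effort",
    "frustration",
)


def raw_tlx(ratings, aggregate="sum"):
    """Aggregate six unweighted subscale ratings.

    `ratings` maps each subscale name to a numeric rating.
    `aggregate` is "sum" or "mean" (assumption: the source does not say which).
    """
    missing = [s for s in TLX_SUBSCALES if s not in ratings]
    if missing:
        raise ValueError(f"missing subscales: {missing}")
    if aggregate not in ("sum", "mean"):
        raise ValueError("aggregate must be 'sum' or 'mean'")
    total = sum(ratings[s] for s in TLX_SUBSCALES)
    return total if aggregate == "sum" else total / len(TLX_SUBSCALES)
```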

Last but not least, users also evaluated the usability of the proposed solution. The SUS questionnaire was used for this evaluation. This is a standardised method of evaluating the usability of a solution. In this experiment, this method was used to obtain feedback from probands regarding the usability of the proposed AR approach.
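The standard SUS scoring rule can be sketched as follows: each of the ten items is answered on a 1 to 5 scale, odd-numbered items contribute (response − 1), even-numbered items contribute (5 − response), and the sum is multiplied by 2.5 to give a score from 0 to 100. The function name is hypothetical, and the individual responses from the experiment are not available here.

```python
def sus_score(responses):
    """Compute the SUS score (0-100) from ten responses on a 1-5 scale."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly ten responses")
    total = 0
    for item_no, response in enumerate(responses, start=1):
        if not 1 <= response <= 5:
            raise ValueError("responses must be between 1 and 5")
        # Odd items are positively worded, even items negatively worded.
        total += (response - 1) if item_no % 2 == 1 else (5 - response)
    return total * 2.5
```

A participant who fully agrees with all positively worded items and fully disagrees with all negatively worded ones reaches the maximum of 100.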

4. Results

During the experiment, the task completion time of the AR-based control set and the second set using the conventional approach described above was monitored. In addition to the task completion time, the probands’ error rate was also monitored. Furthermore, the level of mental burden perceived by the participants and the usability rating of the proposed AR solution were evaluated.

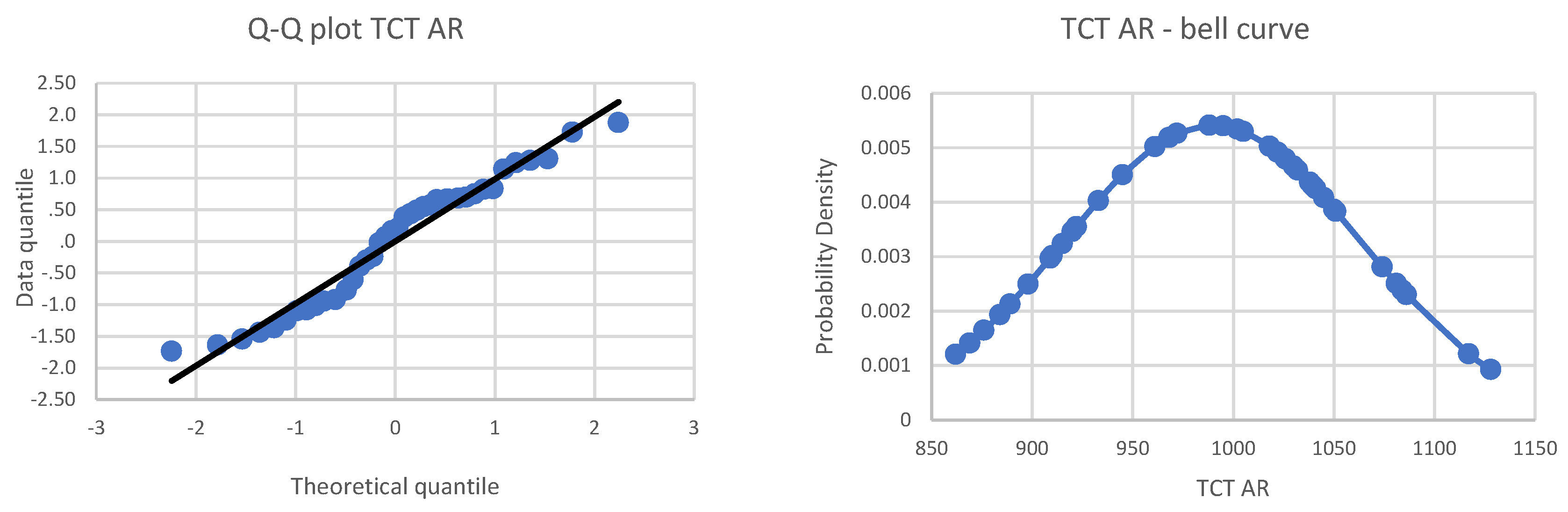

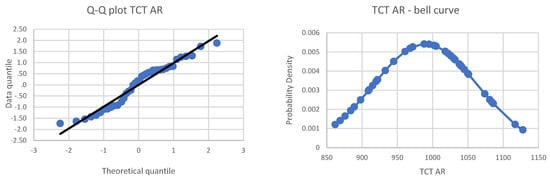

The data obtained from the experiment were then statistically analysed to identify significant associations between the factors studied. Statistical tests used a significance level of α = 0.05. For all data sets, the normal distribution of the data was first verified. A combination of the computational Shapiro–Wilk test and a graphical interpretation of the data using the normal distribution bell curve and Q-Q plot provided a comprehensive approach to assessing the normality of the distribution of the observed data. Figure 7 shows an example of the graphical interpretation of the data for the total inspection completion time with AR support across all probands.

Figure 7.

Tests of data normality from inspection using AR approach.
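The graphical part of the normality check can be sketched with the Python standard library. The sketch computes the points of a normal Q-Q plot: sorted observations against the quantiles of a normal distribution fitted to the sample. The plotting positions (i − 0.5)/n are one common convention and an assumption here. The Shapiro–Wilk statistic itself is not computed; in practice a library routine such as `scipy.stats.shapiro` could be used for that step.

```python
from statistics import NormalDist, mean, stdev


def qq_points(sample):
    """Return (theoretical_quantile, observed_value) pairs for a normal Q-Q plot."""
    observed = sorted(sample)
    n = len(observed)
    fitted = NormalDist(mean(observed), stdev(observed))
    return [
        (fitted.inv_cdf((i - 0.5) / n), x)
        for i, x in enumerate(observed, start=1)
    ]
```

If the data are approximately normal, the returned points lie close to the identity line when plotted.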

4.1. Efficiency

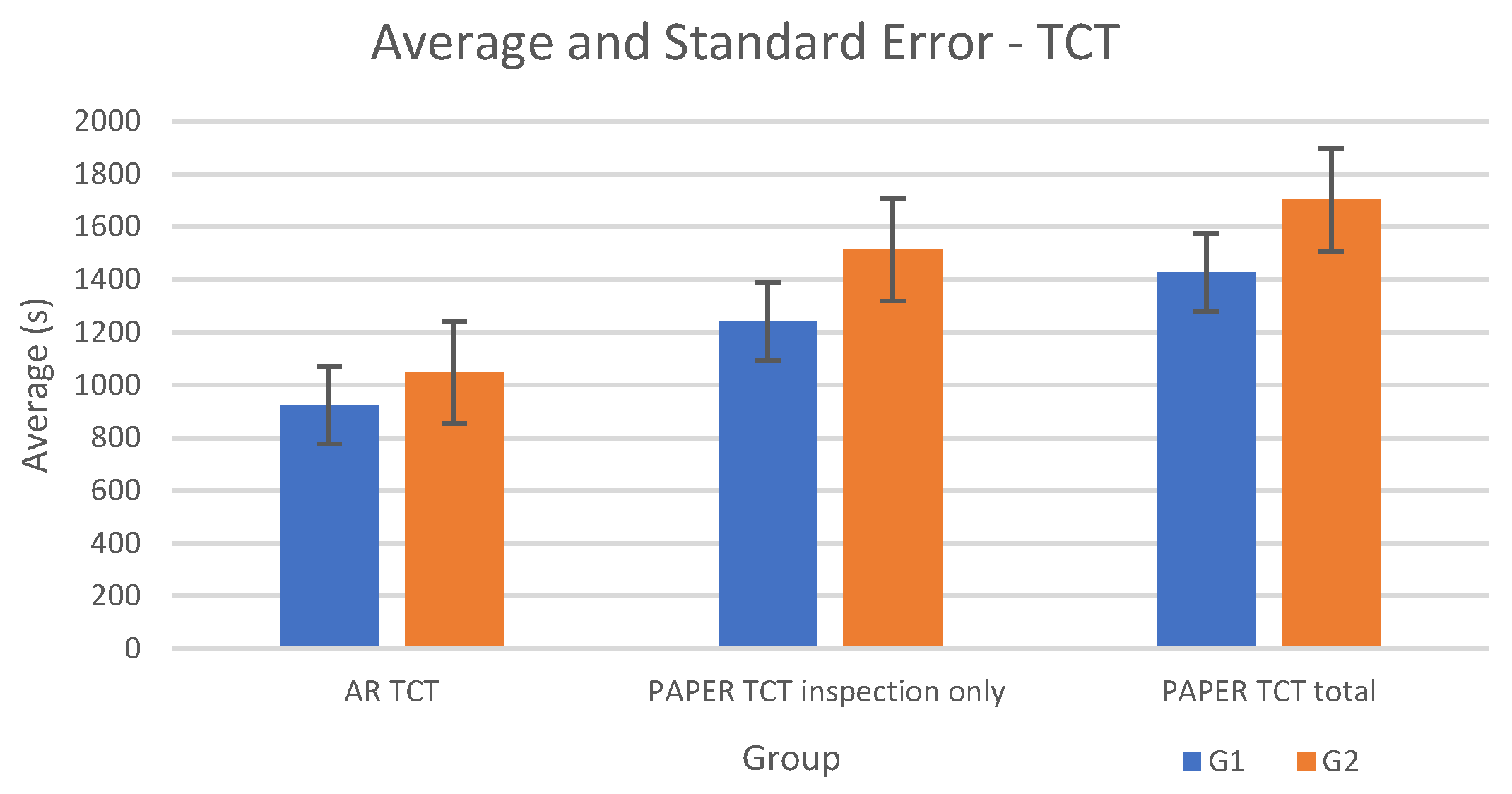

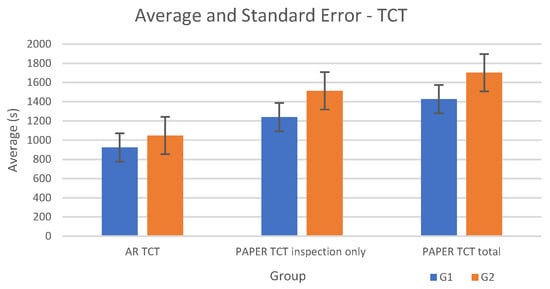

To evaluate the efficiency of the task performance in the experiment, a two-way analysis of variance (ANOVA), followed by an analysis of residuals [70,71] and a post hoc Tukey test [72], was performed. ANOVA is a form of statistical analysis that divides the total variance in a set of data into components attributable to specific factors in order to test hypotheses related to the model’s parameters [73,74,75]. Figure 8 shows a graph with the means and standard errors for the ANOVA analysis. The graph shows three pairs of bars, with each pair representing one group of data. The first bar in each pair represents the G1 group, while the second bar represents G2. The pairs are divided by the approach used, namely the AR approach (first pair) and the traditional paper-based approach (second pair). The third pair shows the inspection time with the conventional approach, which, unlike the second pair, also includes the documentation handling time. The standard error is reported in the graph as a measure of the variability of the group means, which allows for a visual comparison of the stability and precision of the averages.

Figure 8.

Average task completion time and standard error.

The graph of averages shows that G1 using AR achieves the lowest average task completion times. G2 with AR support shows the second-lowest average. The results from G2 show a higher value of standard error, indicating some variability in the results. With the support of traditional paper-based methods, both groups show longer average completion times. The difference between the average completion time of the control task for G1 and G2 is higher for the traditional paper-based methods than for AR.

In paper-based inspection, the user is forced to divide their attention between the documentation, the part to be inspected, and the inspection and measurement tools. This division of attention leads to increased time for the completion of the inspection, distraction, and the relatively poorer or slower orientation of the inspected piece. In this scenario, higher demands are also placed on the user’s measurement and metrology knowledge. It is therefore a task demanding the time, concentration, and expertise of the worker. In the case of the proposed AR approach, the augmented reality interface supports the user in a quick basic orientation of the product. By comparing it with the ideal state of the model, the user gets a quick idea of the desired layout of subassemblies and welded joints, and they are thus able to complete the inspection of the part in a relatively short time, possibly finding an error. At the same time, thanks to the visualisations used, lower demands are placed on the user’s professional qualifications or experience in reading and interpreting welding drawings.

Over the course of the inspection task, most participants showed a gradual decrease in check times. The reason given was that they were able to navigate the drawings and the part itself more quickly when repeatedly inspecting the same type of product. At the same time, however, even the participants with the most extensive experience and professional qualifications stated that, given the complexity of the parts inspected, their similarity, and the total number of product types inspected, they did not reach a state during the experiment where they were able to inspect the part by memory without any supporting documentation. Thus, none of the participants reached a level of part familiarity where the AR approach to inspection would in turn begin to slow them down and negatively affect their performance. However, it is likely that with a different experimental setup, i.e., a different duration for completing the control task or different variability, this learning factor may influence the course and results of the experiment in a different way than in this research.

The results of the two-way ANOVA analysis demonstrated a significant main effect between blocks of research data and suggested significant differences between G1 and G2, as well as between the use of the AR approach and traditional paper-based approaches. The F-test values indicate statistically significant differences between G1 and G2 (F(1, 36) = 468.90, p < 0.001). Statistically significant differences were also observed between the user support approaches (F(1, 36) = 1696, p < 0.001). A significant interaction between the experience factor and the support method factor was also observed (F(1, 36) = 76, p < 0.001).
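How a two-way ANOVA arrives at its three F statistics can be sketched for a balanced design. This is a generic textbook decomposition, not the authors’ analysis script: it treats all observations as independent, requires the same number of observations in every cell, and the names `two_way_anova`, `F_a`, `F_b`, and `F_ab` are hypothetical. The raw measurements of the experiment are not reproduced here.

```python
def two_way_anova(cells):
    """Balanced two-way ANOVA with interaction.

    `cells` maps (level_of_A, level_of_B) to a list of observations.
    Returns F statistics and degrees of freedom.
    """
    a_levels = sorted({a for a, _ in cells})
    b_levels = sorted({b for _, b in cells})
    n = len(next(iter(cells.values())))
    if len(cells) != len(a_levels) * len(b_levels):
        raise ValueError("every combination of levels needs a cell")
    if n < 2 or any(len(v) != n for v in cells.values()):
        raise ValueError("design must be balanced with n >= 2 per cell")

    def mean(xs):
        return sum(xs) / len(xs)

    grand = mean([x for v in cells.values() for x in v])
    cell_mean = {k: mean(v) for k, v in cells.items()}
    a_mean = {a: mean([cell_mean[(a, b)] for b in b_levels]) for a in a_levels}
    b_mean = {b: mean([cell_mean[(a, b)] for a in a_levels]) for b in b_levels}

    # Sums of squares for both main effects, the interaction, and the error.
    ss_a = n * len(b_levels) * sum((a_mean[a] - grand) ** 2 for a in a_levels)
    ss_b = n * len(a_levels) * sum((b_mean[b] - grand) ** 2 for b in b_levels)
    ss_ab = n * sum(
        (cell_mean[(a, b)] - a_mean[a] - b_mean[b] + grand) ** 2
        for a in a_levels
        for b in b_levels
    )
    ss_err = sum((x - cell_mean[k]) ** 2 for k, v in cells.items() for x in v)

    df_a, df_b = len(a_levels) - 1, len(b_levels) - 1
    df_ab = df_a * df_b
    df_err = len(a_levels) * len(b_levels) * (n - 1)
    ms_err = ss_err / df_err
    return {
        "F_a": (ss_a / df_a) / ms_err,
        "F_b": (ss_b / df_b) / ms_err,
        "F_ab": (ss_ab / df_ab) / ms_err,
        "df": (df_a, df_b, df_ab, df_err),
    }
```

In a 2 × 2 layout, each effect has one degree of freedom, and the error degrees of freedom equal 4 · (n − 1), where n is the number of observations per cell.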

Based on the high F-test values compared to the critical F-value and the low p-values, it is possible to reject the null hypothesis and accept the alternative hypothesis, which states that the differences in performance across experience levels and support approaches are statistically significant. G1 achieved a mean task completion time of 927.8 s using the AR approach and 1238.3 s when using the paper-based support. G2 achieved a mean time of 1051.5 s with the AR approach and 1528 s with paper-based support. These findings suggest that using AR support significantly reduces task completion time compared to the traditional paper-based approach. The interaction between experience level and the method of inspector support suggests that the difference in the effectiveness of the AR approach compared to the traditional approach is more pronounced for the G2 group, i.e., inexperienced participants, than for G1, i.e., experienced inspectors. This suggests that the effect of AR support on user performance depends on the level of experience and may have different effects on different users.
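The size of the AR benefit per group follows directly from the reported means. The short sketch below works through the arithmetic; only the four mean times come from the text, while the function names are hypothetical.

```python
# Mean task completion times in seconds, as reported in the text.
MEAN_TIME_S = {
    ("G1", "AR"): 927.8,
    ("G1", "paper"): 1238.3,
    ("G2", "AR"): 1051.5,
    ("G2", "paper"): 1528.0,
}


def ar_saving(group):
    """Return (absolute saving in s, relative saving in %) of AR vs. paper."""
    paper = MEAN_TIME_S[(group, "paper")]
    ar = MEAN_TIME_S[(group, "AR")]
    saving = paper - ar
    return saving, 100.0 * saving / paper


def interaction_contrast():
    """Difference between the G2 and G1 savings (difference of differences)."""
    return ar_saving("G2")[0] - ar_saving("G1")[0]
```

The mean saving is about 310.5 s (roughly 25%) for G1 and 476.5 s (roughly 31%) for G2, so the AR benefit is about 166 s larger for the inexperienced group, which is the pattern the interaction term reflects.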

Figure 8, with means and standard errors, provides a clear visual comparison between the different groups and the different approaches within the experiment. The results suggest that the use of AR has the potential to reduce task completion time compared to the use of traditional paper-based materials.

Next, Tukey’s post hoc test was performed after ANOVA analysis. The results of the post hoc Tukey test showed that the absolute difference between the G1 and G2 mean completion times under the AR approach was 123.7 s, which exceeds the critical value of 27 s. This result confirmed a statistically significant difference between G1 and G2 under the AR approach.
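The decision rule behind the Tukey comparison can be sketched as follows. For equally sized groups, the critical difference is q · sqrt(MS_error / n), where q is the critical value of the studentized range distribution. The source reports only the resulting critical value of 27, so q, MS_error, and n are not reconstructed here; the values in the usage note are purely illustrative, and the function names are hypothetical.

```python
import math


def tukey_hsd(q_critical, ms_error, n_per_group):
    """Critical difference for equal group sizes: q * sqrt(MS_error / n)."""
    return q_critical * math.sqrt(ms_error / n_per_group)


def is_significant(mean_a, mean_b, critical_difference):
    """A pair differs significantly if |mean_a - mean_b| exceeds the critical difference."""
    return abs(mean_a - mean_b) > critical_difference
```

With the reported AR means of 927.8 s and 1051.5 s and the reported critical value of 27, `is_significant(927.8, 1051.5, 27.0)` evaluates to `True`.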

This experiment confirmed that there was a statistically significant difference between the G1 and G2 groups. These results may provide important insights for the further investigation of the effectiveness and efficiency of the AR-assisted user support methodology in weldment control.

4.2. Error Rate

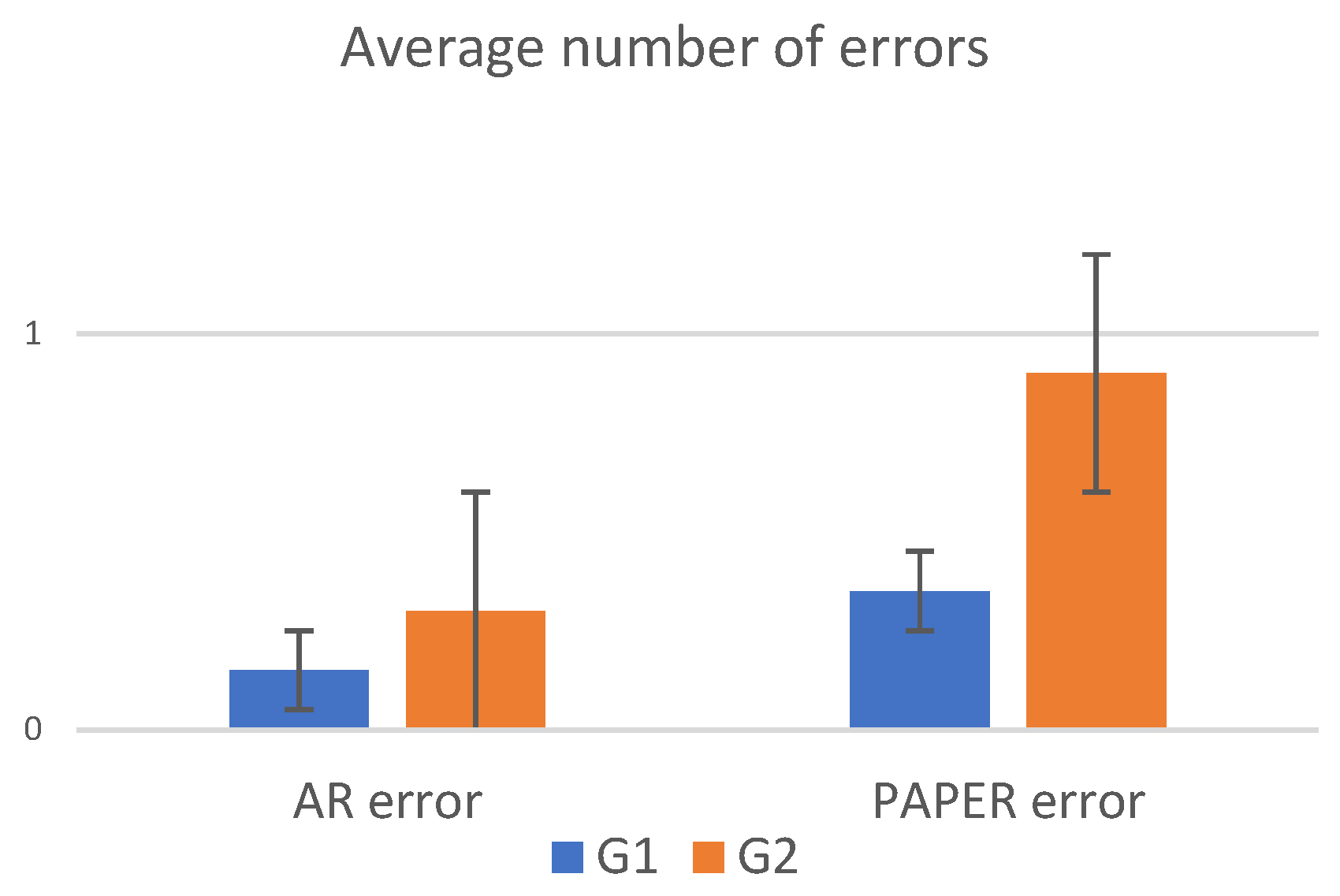

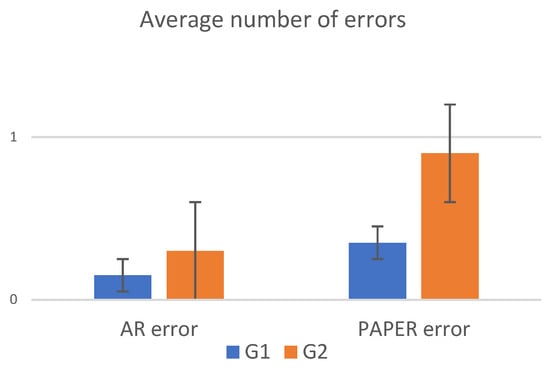

The experiment not only investigated the execution time of the task but also focused on the evaluation of the error rate during this control process.

Figure 9 provides a graphical overview of the mean error rate and standard errors, showing the error rate results for each group and how they compare between the AR and traditional methodologies. This graph shows the average number of errors, where a unit represents a single error, providing a visual representation of the frequency of errors recorded by participants during the research. The lowest average error rate was recorded in group G1 when using the AR method, indicating that this combination achieved the best results. The second lowest average is observed in group G2 when using the AR methodology. Group G1 using the traditional methodology shows a higher average error rate than the previous two groups, while group G2 using the traditional methodology has a markedly higher average.

Figure 9.

Average number of errors.

For the error rate data, the Shapiro–Wilk test did not confirm the normal distribution of the data. Therefore, a non-parametric Friedman test was performed instead of a parametric ANOVA analysis. By calculating the Friedman test for the measured error rate data, a significance value of 3.09665 × 10⁻¹¹ was obtained. This value reflects the significance of differences between groups. It indicates statistically significant differences between at least one pair of datasets.
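The Friedman statistic can be sketched in plain Python. Each block (for example, one participant) is ranked across the k conditions, ties receive average ranks, and the statistic is corrected for ties. How the experimental data were arranged into blocks is not described in this section, so the layout used here is an assumption, as are the function names. The p-value, which comes from a chi-square distribution with k − 1 degrees of freedom, is not computed.

```python
from collections import Counter


def average_ranks(row):
    """Rank the values of one block, giving tied values their average rank."""
    order = sorted(range(len(row)), key=lambda i: row[i])
    ranks = [0.0] * len(row)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and row[order[j + 1]] == row[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for pos in range(i, j + 1):
            ranks[order[pos]] = avg
        i = j + 1
    return ranks


def friedman_statistic(blocks):
    """Tie-corrected Friedman chi-square statistic for n blocks x k conditions."""
    n, k = len(blocks), len(blocks[0])
    ranked = [average_ranks(b) for b in blocks]
    col_sums = [sum(r[j] for r in ranked) for j in range(k)]
    q = 12.0 / (n * k * (k + 1)) * sum(s * s for s in col_sums) - 3.0 * n * (k + 1)
    ties = sum(t ** 3 - t for b in blocks for t in Counter(b).values())
    correction = 1.0 - ties / (n * k * (k * k - 1))
    if correction == 0:
        raise ValueError("all values are tied within every block")
    return q / correction
```

Error counts typically contain many ties, which is why the tie correction is included in the sketch.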

Given these results, post hoc tests were subsequently conducted to identify specific groups between which the differences were statistically significant. For this purpose, the Mann–Whitney U test was used, which is appropriate for comparing two groups. Using the Mann–Whitney U test, there were no statistically significant differences for any controlled pair of data groups (p > 0.05). A possible explanation for this situation may be that the Friedman test is sensitive to any form of differences between groups, whereas the Mann–Whitney U test is primarily aimed at comparing two groups and may not be sensitive enough to small differences between groups. Statistically significant differences between pairs of groups may not be detected using the Mann–Whitney U test, but differences may still exist.
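The U statistic used in the pairwise comparisons can be sketched by direct counting. For every pair of observations, one from each sample, the first sample scores 1 if its value is larger and 0.5 if the values are tied. The statistic treats the two samples as independent. The function name is hypothetical, and the p-value computation is omitted.

```python
def mann_whitney_u(x, y):
    """Return (U_x, U_y) for two samples by pairwise comparison."""
    u_x = sum(
        1.0 if a > b else 0.5 if a == b else 0.0
        for a in x
        for b in y
    )
    u_y = len(x) * len(y) - u_x
    return u_x, u_y
```

The smaller of the two values is usually compared against the critical value for the given sample sizes.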

4.3. Mental Workload

Another area monitored in the experiment was mental workload. The NASA standardised Task Load Index (TLX) questionnaire was used to assess the mental load of the participants during the experiment. This questionnaire is used to measure perceived mental workload and is often used in research involving human factors and ergonomics.

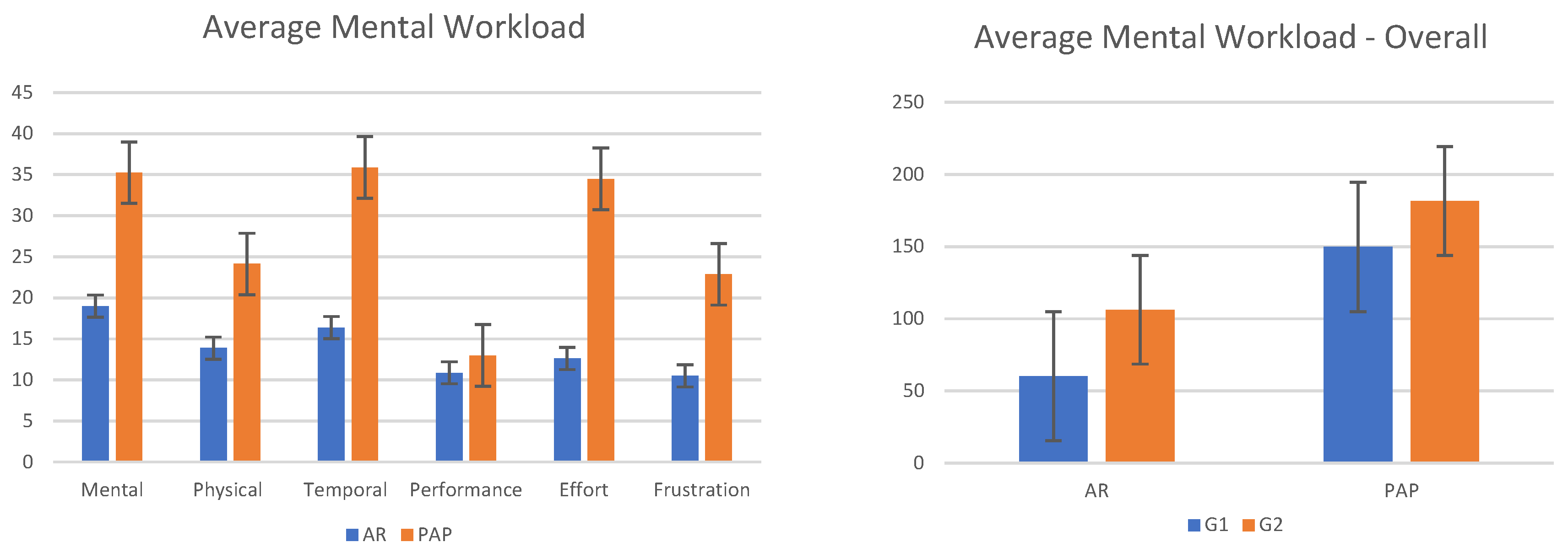

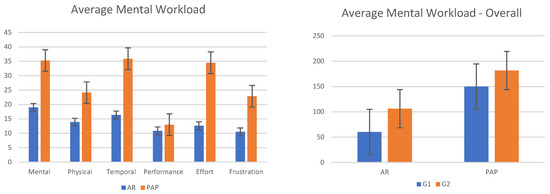

To understand the nature of the data collected, a graphical analysis of the average level of perceived mental workload and standard errors was performed (Figure 10). This analysis allows for a visual comparison of the average mental workload values between the groups, while also providing information on the variance of the data within each group. The figure illustrates the perceived mental workload components assessed using the NASA Task Load Index method. The y-axis represents the subjective ratings for different workload components. NASA TLX scores are not expressed in specific physical units, but rather reflect participants’ subjective assessments of workload, effort, and frustration. The graph is used to visually compare and analyse the relative differences in perceived workload between different tasks or conditions, providing insight into the cognitive and perceptual aspects of task demands.

Figure 10.

Mental workload of the participants.

The graph shows that the lowest mean value of perceived mental workload was achieved in G1 using the AR approach. The second lowest value was perceived in G2 when using the AR approach. This analysis provides valuable information on the differences in perceived mental workload between users depending on the inspection approach chosen and the level of experience. The results suggest that the AR approach may yield a reduction in perceived mental workload among users compared to the conventional approach. The conventional approach shows significantly higher levels of mental workload in most of the areas examined compared to the AR approach. The highest levels were observed using the conventional approach in the areas of mental demand and effort. These areas also showed the highest differences compared to the AR approach. The performance indicator showed the smallest difference between the two approaches.

The results of the two-way analysis of variance (ANOVA) indicated statistically significant differences in perceived mental workload between the different combinations of experience factors and the approach used. Within G1, it was found that the use of the AR approach had a mean mental workload of 60.25, whereas the use of the conventional method had a mean value of 149.75. The overall mean for the G1 group was 105. On the other hand, in G2, it was found that the AR methodology had an average mental workload value of 106.25, while the conventional method had an average value of 181.5. The overall mean for group G2 was 143.88.
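The reported group means can be checked against the cell means with a few lines of arithmetic. The sketch assumes equally sized cells, so that marginal means are simple averages of cell means; the approach-level means it produces are derived values, not figures stated in the text. The function names are hypothetical.

```python
# Mean perceived workload per cell, as reported in the text.
MEAN_TLX = {
    ("G1", "AR"): 60.25,
    ("G1", "conventional"): 149.75,
    ("G2", "AR"): 106.25,
    ("G2", "conventional"): 181.5,
}


def group_mean(group):
    """Mean workload of one group across both approaches."""
    return (MEAN_TLX[(group, "AR")] + MEAN_TLX[(group, "conventional")]) / 2


def approach_mean(approach):
    """Mean workload of one approach across both groups."""
    return (MEAN_TLX[("G1", approach)] + MEAN_TLX[("G2", approach)]) / 2


def ar_reduction(group):
    """Reduction in mean workload when moving from conventional to AR."""
    return MEAN_TLX[(group, "conventional")] - MEAN_TLX[(group, "AR")]
```

The group means reproduce the reported 105 for G1 and 143.88 (143.875) for G2, and the reduction with AR is 89.5 points for G1 and 75.25 points for G2.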

The F-value for the approach comparison was 25.20, which is considerably higher than the critical value of Fcrit = 3.97. The corresponding p-value is very low, so the result is statistically significant and indicates a difference in perceived mental workload between the AR approach and the conventional approach.

Overall, the results can be interpreted to indicate that the use of the conventional methodology shows a significantly higher mental workload compared to the AR approach. The highest values were observed for time and effort when using the conventional method. The smallest differences were observed in performance. These data support the conclusion that the AR methodology may provide benefits in reducing perceived mental workload compared to the conventional methodology. These findings have implications for the further development and investigation of interactive systems, and can serve as a basis for the design of user interfaces concerning user mental workload.

4.4. Usability

To conclude the statistical analysis, the level of usability of the proposed solution was also examined. Testing was conducted using a standardised questionnaire called the System Usability Scale (SUS). The SUS is a widely used tool for measuring subjective perceptions of the usability of a system, methodology or tool.

The overall mean SUS score in the experiment was 94.69, indicating a high level of applicability of the proposed solution and high satisfaction among the probands (Table 1). When focusing attention on each group, the mean SUS score for G1 was 95.5, and for G2, it was 93.88. The standard deviation was 4.94 overall, 4.97 for G1, and 4.67 for G2.

Table 1.

SUS questionnaire results.

These results indicate that both groups of users rated the proposed solution as highly usable. G1 achieved a slightly higher SUS score than group G2, which may indicate that experienced workers reached a higher level of satisfaction and rated the usability of the system positively.

Although most of the participants involved in the experimental study had no or minimal practical experience with augmented reality, after familiarising themselves with the proposed AR approach, there was a positive attitude towards its potential use in the context of weldment quality inspection in industrial processes. In particular, participants highlighted the good availability of AR solutions to assist inspectors in the field. According to the participants involved in the study, AR, as a flexible tool, can help workers focus more directly on the product under investigation. They rate it as a useful way to obtain relevant information directly on the object under investigation. AR available in this manner can support users in different ways. Users positively evaluated the possibility of the immediate availability of relevant data, including its visual interpretation, which eliminates the need to first manipulate printed documentation to initiate inspection operations. Another participant mentioned support for part identification, where automated recognition of the part being inspected makes it impossible to confuse the part with other very similar products.

At the same time, probands noted the high potential of using AR in complex inspection processes in industrial applications. According to their comments, AR could become a highly effective tool to support task solving. Specifically, one participant commented on the benefits in the case of repeatedly alternating inspection of very similar parts in rapid succession and mentioned that AR could be used as a powerful tool for interactive visualisation and support for even less skilled or inexperienced users.

In several cases, participants also mentioned the fact that for inspectors with less experience or expertise, the proposed approach could help to push the boundary of tasks that these inspectors can assess and decide independently, as opposed to conventional methods where the decision-making process in these cases is beyond their capabilities and they have to seek support from a more experienced supervisor.

Most probands agreed that AR could be very beneficial for solving inspection tasks due to its interactive visualisation capability. Several probands would welcome the use of the AR approach not only as a final inspection but also as a tool for continuous self-control of the welder during operation preparation, weld interpretation, weld layout on a real piece, and continuous checking of the fulfilment of the prescribed characteristics.

One of the limitations mentioned concerned ease of use. Some participants raised a concern about inspecting larger and more complex products. The set of parts for the experimental study was relatively easy to handle and easily accessible in terms of dimensions. This likely contributed to the fact that there were no major problems with part recognition or alignment of the digital visualisation to the real object during the study. Participants agreed that, due to the nature of the task at hand, i.e., quality control on the finished weldment, the need to hold the HHD did not limit their required hand movements.

One participant mentioned a concern about the lack of flexibility of an AR solution that requires specialised knowledge of AR technology. This can make it tedious to make even small adjustments to the AR visualisation.

Overall, the proposed solution achieved a high degree of usability, which is a positive indicator of the efficiency and usability of the system from the users’ perspective. These findings indicate that the proposed solution has the potential to satisfy users and contribute to a positive user experience.

5. Discussion

This section discusses the results of the experiment, the benefits to industry and science, and recommendations for future research.

The research aims to evaluate the influence of the AR-based methodology of visual quality control of weldments on the performance of this control and the mental load of users in a real industrial workplace. The results of the experiment with users show that the use of AR in the quality inspection of weldments leads to an increase in the efficiency of the execution of this task, i.e., a reduction in the execution time of this inspection compared to using printed paper documentation. These results are in line with similar studies that observed an increase in the efficiency of task execution with AR support compared to paper instructions [76,77,78] and video instructions [48]. However, some studies have reported the opposite trend, i.e., a decrease in task performance when AR support is involved [79].

The lower efficiency of the assembly operation reported in that study can be explained by the spatial distribution of instructions and the longer time needed to search for them and to orient oneself during the task. Conversely, the increased efficiency of the control process in this research may be explained by the design of the experiment. In this experiment, users inspected weldments measuring 300 to 800 mm and weighing 2 to 7 kg. It was therefore relatively easy for them to handle these weldments. In contrast to traditional instructions, the inspectors did not have to divide their attention, and by comparing the real weldment with the ideal 3D model displayed over it, even a less experienced inspector was able to orient themselves on the part relatively quickly and check the basic completeness and basic dimensions without having to measure all the dimensions.

In this research, a relatively low average error rate was observed, where an error means either failing to detect a randomly placed defect on a part or incorrectly marking a defect-free part as defective. A lower error rate was observed using the AR approach compared to conventional printed documents. However, for the chosen significance level of α = 0.05, there was no statistically significant decrease in the error rate for the proposed AR solution. In similar studies in the field of assembly research, a decrease in assembly errors was found [80,81,82].

In addition to the effect on efficiency and error rate, the AR approach was also investigated in terms of the mental workload perceived by the users. This workload was assessed using the standardised NASA TLX questionnaire. During the experiment, users perceived a lower mental workload with the AR approach than with the conventional paper-based approach. This positive result indicates that the proposed AR approach can reduce the mental workload in weldment quality control by reducing the division of attention between the prescribed design requirements and the real part. Together with the efficiency results, it shows that it is possible to reduce mental workload, increase user concentration, and promote the efficiency of inspection task execution. Other available studies report similar results [81,82,83,84,85].

In addition to the effectiveness results, the usability and acceptance of the proposed solution were rated positively in the final SUS questionnaires and the post-experiment interviews. The probands preferred the AR solution to the conventional approach with printed documents. The usability of the tool used was rated positively by the probands with an average score of 94.7 on a scale of 0 to 100. This rating corresponds to an A+ level and reflects high user satisfaction with the solution used. The available studies generally agree on an acceptability threshold above 70 points [86,87]. The probands appreciated the possibility of a quick comparison of the inspected part and the ideal model, and fast orientation in interpreting the requirements. According to some available studies dedicated to AR, experience with modern and innovative technologies may also influence the effectiveness of AR-enabled task execution [81]. Close collaboration with industry experts in the design of the AR approach and a pilot study to determine the limits of the proposed solution may have contributed to the high degree of applicability. The use of the HHD made it possible for the user to move freely at the workstation while inspecting the part without any restrictions related to the hardware.
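For readers unfamiliar with how a SUS score on the 0 to 100 scale is obtained, the standard scoring rule can be sketched as follows. Odd-numbered items contribute their rating minus 1, even-numbered items contribute 5 minus their rating, and the sum is multiplied by 2.5. The function name and the example answers are hypothetical; the threshold of 70 is the acceptability value cited in the text above.

```python
def sus_score(responses):
    """Return the 0-100 SUS score for one respondent.

    `responses` holds the ten item ratings in questionnaire order,
    each on a scale from 1 (strongly disagree) to 5 (strongly agree).
    """
    if len(responses) != 10:
        raise ValueError("SUS needs exactly ten item ratings")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each rating must be an integer from 1 to 5")
    total = 0
    for index, rating in enumerate(responses):
        if index % 2 == 0:   # items 1, 3, 5, 7, 9 are positively worded
            total += rating - 1
        else:                # items 2, 4, 6, 8, 10 are negatively worded
            total += 5 - rating
    return total * 2.5


ACCEPTABILITY_THRESHOLD = 70  # acceptability threshold cited in the text

# Hypothetical answers of a single respondent, for illustration only.
hypothetical_answers = [5, 1, 5, 2, 4, 1, 5, 1, 5, 1]
score = sus_score(hypothetical_answers)
print(score, score > ACCEPTABILITY_THRESHOLD)  # 95.0 True
```

The reported average would then be the mean of such per-respondent scores.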

Three main hypotheses were defined and then tested during the experiment. The first addresses the efficiency of the inspection task and the influence of the approach used on this efficiency. The level of efficiency in the experiment was defined by the total time to complete the task, i.e., the speed with which the proband inspected the entire control set. This indicator was tested in two variants, namely with the support of the conventional inspection approach and with the support of the proposed AR approach. At the same time, the influence of the probands' level of experience was monitored. Statistical analysis provided evidence of a statistically significant difference in user performance depending on the weldment inspection approach. These results support the original hypothesis, which predicted that using the AR approach would lead to faster completion of the inspection task compared to the traditional method supported by printed documentation. The findings suggest that the AR approach has relatively greater benefits for inexperienced probands than for experienced inspectors.

The second hypothesis investigates the effect of the methodology used on the error rate. Overall, statistically significant differences in error rates were found between the data groups; however, the tests performed as part of the post hoc analysis failed to identify specific pairs of groups with significant differences. This finding suggests that more extensive statistical methods or a larger sample size would be appropriate in further research to obtain a more accurate and comprehensive analysis of the error rate data.
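One common reason why an overall test can be significant while no individual pair is flagged is the multiplicity correction applied in post hoc comparisons. The sketch below illustrates this with a Bonferroni correction; the text does not state which correction was used, so the choice of Bonferroni, the four group labels, and all p-values are assumptions made purely for illustration.

```python
from itertools import combinations


def pair_count(n_groups):
    """Number of distinct pairwise comparisons among n_groups groups."""
    return len(list(combinations(range(n_groups), 2)))


def bonferroni_significant(p_values, alpha=0.05):
    """Return the per-comparison threshold and the pairs that pass it.

    `p_values` maps a pair of group labels to its uncorrected p-value.
    """
    if not p_values:
        raise ValueError("at least one comparison is required")
    threshold = alpha / len(p_values)
    passing = [pair for pair, p in p_values.items() if p <= threshold]
    return threshold, passing


# Assumed grouping (approach crossed with experience) and hypothetical
# uncorrected p-values; neither is taken from the study data.
groups = ["AR-exp", "AR-inexp", "paper-exp", "paper-inexp"]
raw_p = dict(zip(combinations(groups, 2),
                 [0.012, 0.030, 0.015, 0.200, 0.040, 0.025]))

threshold, passing = bonferroni_significant(raw_p)
print(pair_count(len(groups)), round(threshold, 4), passing)  # 6 0.0083 []
```

With six comparisons, each pair must reach roughly 0.0083 rather than 0.05, so differences that look convincing individually can all fall short. This is consistent with the suggestion that a larger sample would help.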

The third hypothesis addressed the impact of the approach used on the level of perceived mental workload. During the experiment, it was statistically confirmed that the use of the conventional methodology resulted in a significantly higher mental workload compared to the AR approach. The highest levels of mental workload were observed for time and effort when using the conventional method. The smallest differences were observed in the area of performance. These data support the conclusion that the AR approach may provide benefits in reducing perceived mental workload compared to the conventional methods.
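A comparison of workload by dimension, as described above, can be sketched with the unweighted (raw) variant of NASA TLX, in which the overall score is the mean of the six subscale ratings. Whether the study used the raw or the weighted variant is not stated here, so the raw form is an assumption, and all ratings below are hypothetical values chosen only to show the calculation.

```python
TLX_DIMENSIONS = (
    "mental demand", "physical demand", "temporal demand",
    "performance", "effort", "frustration",
)


def raw_tlx(ratings):
    """Unweighted (raw) NASA TLX: mean of the six 0-100 subscale ratings."""
    missing = [d for d in TLX_DIMENSIONS if d not in ratings]
    if missing:
        raise ValueError(f"missing subscales: {missing}")
    return sum(ratings[d] for d in TLX_DIMENSIONS) / len(TLX_DIMENSIONS)


def subscale_differences(conventional, ar):
    """Per-dimension drop in rating when moving from paper to AR support."""
    return {d: conventional[d] - ar[d] for d in TLX_DIMENSIONS}


# Hypothetical ratings of one proband, not data from the experiment.
paper_ratings = {"mental demand": 60, "physical demand": 30,
                 "temporal demand": 70, "performance": 35,
                 "effort": 65, "frustration": 40}
ar_ratings = {"mental demand": 40, "physical demand": 20,
              "temporal demand": 35, "performance": 30,
              "effort": 30, "frustration": 25}

diffs = subscale_differences(paper_ratings, ar_ratings)
print(raw_tlx(paper_ratings), raw_tlx(ar_ratings))  # 50.0 30.0
print(min(diffs, key=diffs.get))                    # performance
```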

Users also evaluated the usability of the proposed solution in the experiment. The usability scores were high, corresponding to the usability category rated as “excellent”.

The research presented here also has some limitations. The main one is related to the object detection method used. Unlike the image target method, it does not require additional markers in the scene because the inspected object itself serves as the target; this method therefore has very high potential for deployment in industrial applications. However, unlike the image target method, it is not yet technologically possible to detect more than one object in a scene. Therefore, it is still necessary to combine these methods when recognising multiple objects.

Further limitations are related to lighting conditions and tracking of the object. During the experiment, object target detection worked smoothly under standard lighting conditions at the workplace without the need to supply an additional light source. During a prolonged inspection or greater movement around the inspected part, at some points the visualisation disconnected from the target, and the scene needed to be re-anchored.

The theoretical contribution of this research is the validation of the proposed methodology for AR-enabled inspection and its potential to increase the efficiency of inspection by end users, to reduce the error rate of their work, and, at the same time, not to increase but rather to reduce the mental workload perceived by users. The practical benefit is to bring AR technology closer to specialised areas of inspection in industrial processes where full automation is not appropriate or not possible.

In general, it can be concluded that the proposed AR solution could be used by inspectors and operators in industrial practice in weldment quality control to detect non-conformities with the desired condition and to evaluate the quality of weldments.

6. Conclusions

The conducted research was devoted to the problem of weldment quality control in industrial processes. Inspection tasks are generally among the activities that do not add value to the product but are nevertheless an essential part of all industrial processes. There is a trend in industry towards ever-increasing demands for an error-free quality level, so industrial inspection needs to be carried out with the highest possible efficiency.

With the development of the fourth industrial revolution, the possibilities of automation are also gradually developing. While for some industries a higher degree of automation is possible, for other types of production, a high degree of automation is not appropriate. These include small batch production, products with short life cycles, or a high frequency of technical changes or design modifications.

This research therefore focuses on investigating the possibility of introducing a lower level of automation for these types of production. In the chosen level of automation, the assessment and decision-making process is inspector-dependent. However, the processes of requirements interpretation, part assessment, and decision making are supported by the technological solution. In the case of this research, this technological solution is augmented reality support.

The experiment, the statistical evaluation of the experiment data, and the results of hypothesis testing confirmed that by using the proposed AR control approach, it is possible to increase the efficiency of performing weldment inspection in an industrial process while reducing the mental workload perceived by users. The results suggest that the use of an AR solution may also lead to a reduction in error rates. However, more extensive research would be required to allow for statistical confirmation.

A possible direction for future research is to look more closely at the potential for the detailed visual inspection of individual welded joints. This research would focus on the possibility of comparing quality requirements with the inspected weld shape, which might allow for the continuous detection of areas that do not fall within a given quality class. The use of such a solution would be particularly applicable to single-unit or large-scale structures, as opposed to vision systems that can be installed on production lines.

Another potential direction for future research would be more detailed automated weld inspection, requiring a combination of AR and advanced deep learning methods such as localisation, detection, object classification, or image segmentation. The weld quality could be assessed using a neural network trained on a large amount of image material to detect, evaluate, and identify the type of defects in the material structure through image segmentation and abnormal object detection. The classification of a sufficiently large number of images of welds with texture anomalies would be essential for this research.

Author Contributions

Conceptualisation, K.H., P.H. and P.K.; methodology, K.H. and P.H.; validation, K.H.; formal analysis, P.H. and K.H.; investigation, K.H. and P.H.; resources, K.H., P.H. and P.K.; writing—original draft preparation, K.H.; writing—review and editing, P.H., K.H. and P.K.; visualisation, K.H.; supervision, P.H. and P.K.; project administration, P.H. and K.H.; and funding acquisition, P.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Internal Science Foundation of the University of West Bohemia under grant SGS-2023-025 “Environmentally sustainable production”.

Institutional Review Board Statement

The study was approved by the internal university ethics committee (ZCU 012668/2023).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

https://drive.google.com/drive/folders/1T8IbXILidIFrrjdnk8XXJEo9VlfTKf16?usp=sharing (accessed on 6 September 2023).

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

References

- Felsberger, A.; Qaiser, F.H.; Choudhary, A.; Reiner, G. The impact of Industry 4.0 on the reconciliation of dynamic capabilities: Evidence from the European manufacturing industries. Prod. Plan. Control. 2022, 33, 277–300.

- Lu, Y.; Xu, X.; Wang, L. Smart manufacturing process and system automation—A critical review of the standards and envisioned scenarios. J. Manuf. Syst. 2020, 56, 312–325.

- Kim, H.; Lin, Y.; Tseng, T.-L.B. A review on quality control in additive manufacturing. Rapid Prototyp. J. 2018, 24, 645–669.

- Toyota Production System: An Integrated Approach to Just-in-Time—Monden Yasuhiro (9781439820971) | ENbook.cz. Available online: https://www.enbook.cz/catalog/product/view/id/152463?gclid=CjwKCAiA6seQBhAfEiwAvPqu1z8bGhBTjQgAl9Yn6lj9K_Ua9ktOLKG4dkDRZRWzkFYTb8TmPBtO0BoCCXkQAvD_BwE (accessed on 7 August 2023).

- Abd-Elaziem, W.; Khedr, M.; Newishy, M.; Abdel-Aleem, H. Metallurgical characterization of a failed A106 Gr-B carbon steel welded condensate pipeline in a petroleum refinery. Int. J. Press. Vessel. Pip. 2022, 200, 104843.

- Feng, Q.; Yan, B.; Chen, P.; Shirazi, S.A. Failure analysis and simulation model of pinhole corrosion of the refined oil pipeline. Eng. Fail. Anal. 2019, 106, 104177.

- Koren, Y.; Gu, X.; Guo, W. Reconfigurable manufacturing systems: Principles, design, and future trends. Front. Mech. Eng. 2018, 13, 121–136.

- Gewohn, M.; Usländer, T.; Beyerer, J.; Sutschet, G. Digital Real-time Feedback of Quality-related Information to Inspection and Installation Areas of Vehicle Assembly. Procedia CIRP 2018, 67, 458–463.

- Romero, D.; Stahre, J. Towards the Resilient Operator 5.0: The Future of Work in Smart Resilient Manufacturing Systems. Procedia CIRP 2021, 104, 1089–1094.

- Dey, B.K.; Pareek, S.; Tayyab, M.; Sarkar, B. Autonomation policy to control work-in-process inventory in a smart production system. Int. J. Prod. Res. 2021, 59, 1258–1280.

- Aust, J.; Pons, D. Comparative Analysis of Human Operators and Advanced Technologies in the Visual Inspection of Aero Engine Blades. Appl. Sci. 2022, 12, 2250.

- Johansen, K.; Rao, S.; Ashourpour, M. The Role of Automation in Complexities of High-Mix in Low-Volume Production—A Literature Review. Procedia CIRP 2021, 104, 1452–1457.

- Javaid, M.; Haleem, A.; Pratap Singh, R.; Suman, R. Significance of Quality 4.0 towards comprehensive enhancement in manufacturing sector. Sens. Int. 2021, 2, 100109.

- Tsuzuki, R. Development of automation and artificial intelligence technology for welding and inspection process in aircraft industry. Weld. World 2022, 66, 105–116.

- Parker, S.K.; Grote, G. Automation, Algorithms, and Beyond: Why Work Design Matters More Than Ever in a Digital World. Appl. Psychol. 2022, 71, 1171–1204.

- Egger, J.; Masood, T. Augmented reality in support of intelligent manufacturing—A systematic literature review. Comput. Ind. Eng. 2020, 140, 106195.

- Urbas, U.; Ariansyah, D.; Erkoyuncu, J.A.; Vukašinović, N. Augmented reality aided inspection of gears. Teh. Vjesn. 2021, 28, 1032–1037.

- Urbas, U.; Vrabič, R.; Vukašinović, N. Displaying product manufacturing information in augmented reality for inspection. Procedia CIRP 2019, 81, 832–837.

- Marino, E.; Barbieri, L.; Colacino, B.; Fleri, A.K.; Bruno, F. An Augmented Reality inspection tool to support workers in Industry 4.0 environments. Comput. Ind. 2021, 127, 103412.

- Powell, D.; Eleftheriadis, R.; Myklebust, O. Digitally Enhanced Quality Management for Zero Defect Manufacturing. Procedia CIRP 2021, 104, 1351–1354.

- Xian, W.; Yu, K.; Han, F.; Fang, L.; He, D.; Han, Q.L. Advanced Manufacturing in Industry 5.0: A Survey of Key Enabling Technologies and Future Trends. IEEE Trans. Ind. Inform. 2023, 1–15.

- Büchi, G.; Cugno, M.; Castagnoli, R. Smart factory performance and Industry 4.0. Technol. Forecast. Soc. Change 2020, 150, 119790.