A Real-Time Simulator for Navigation in GNSS-Denied Environments of UAV Swarms

Abstract

Featured Application

1. Introduction

- (1) A real-time simulator for navigation in GNSS-denied environments is developed in order to improve the iteration efficiency of navigation algorithms;

- (2) A novel scene matching navigation algorithm called ISMN is proposed and validated on the simulator;

- (3) A relative navigation method that does not rely on inter-communication is proposed.

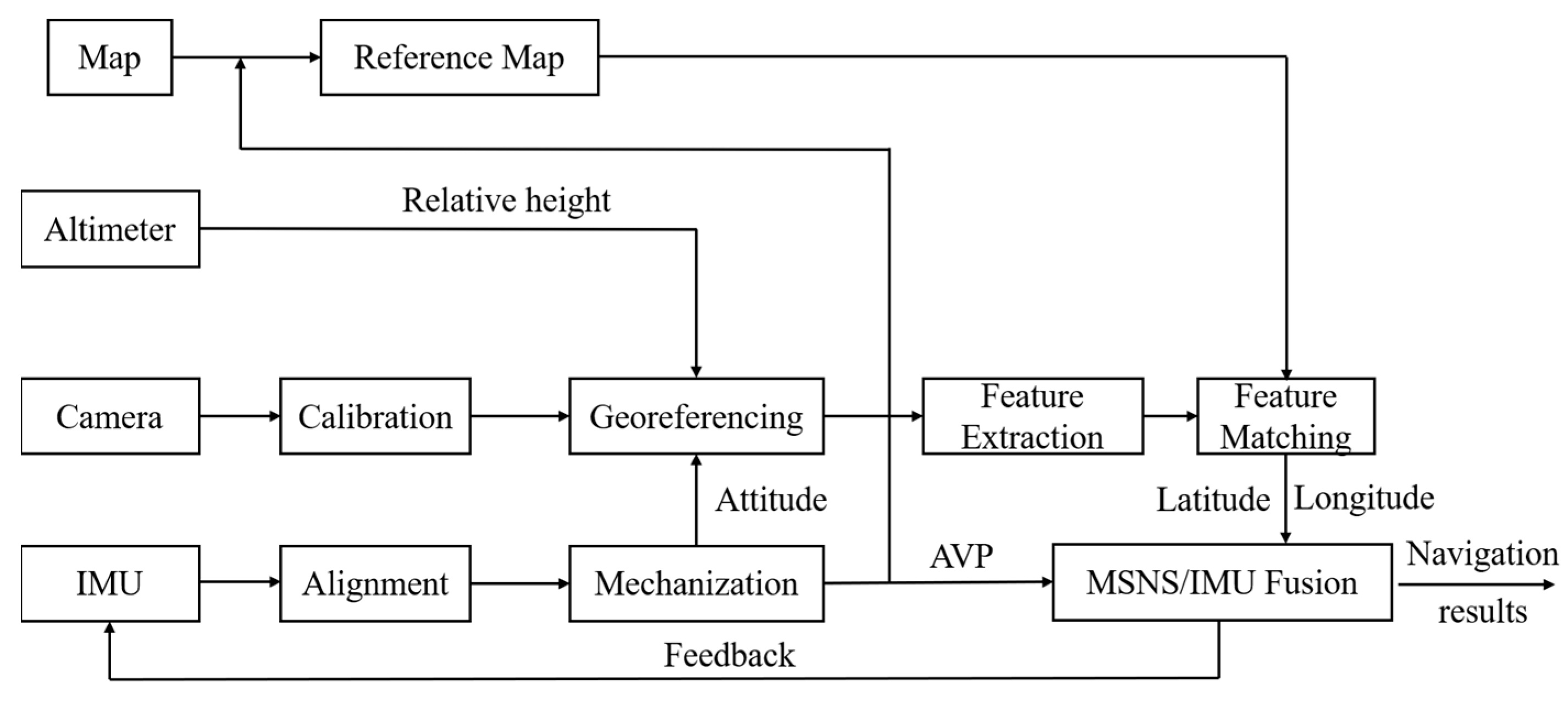

2. Architecture

2.1. Inertial-Aided Scene Matching Navigation (ISMN)

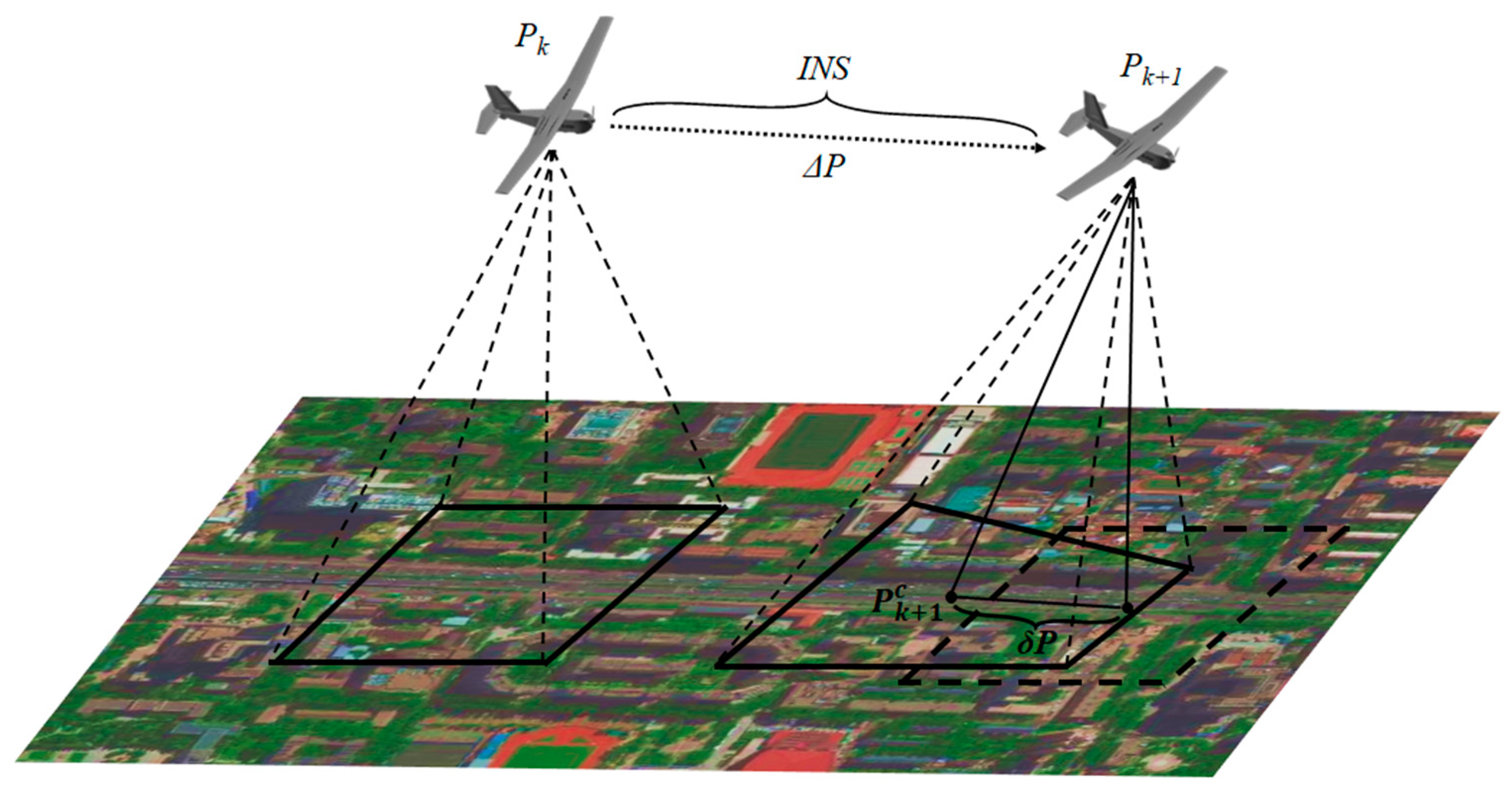

2.1.1. Reference Map from INS Propagation

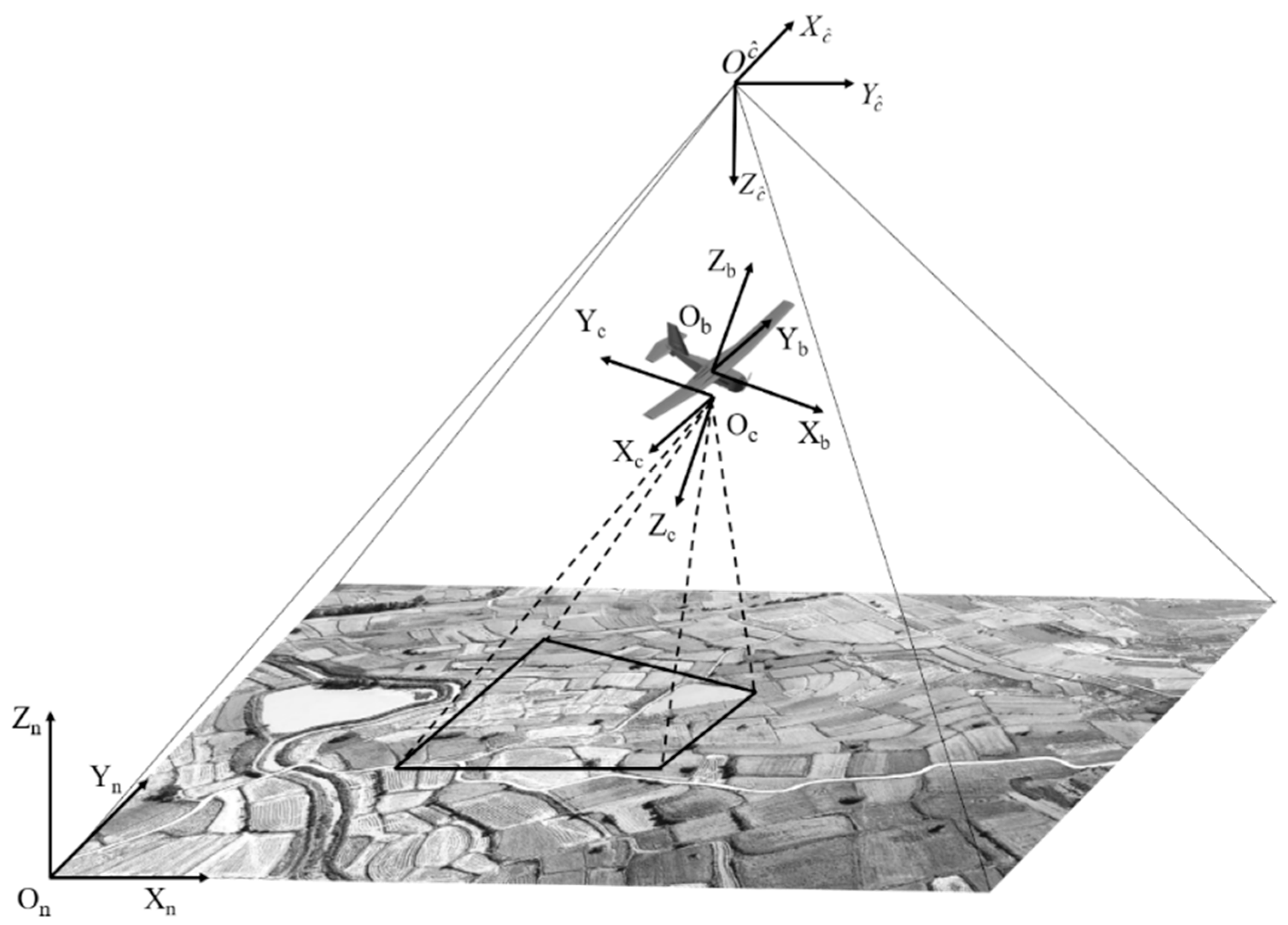

2.1.2. Inertial-Aided Georeference

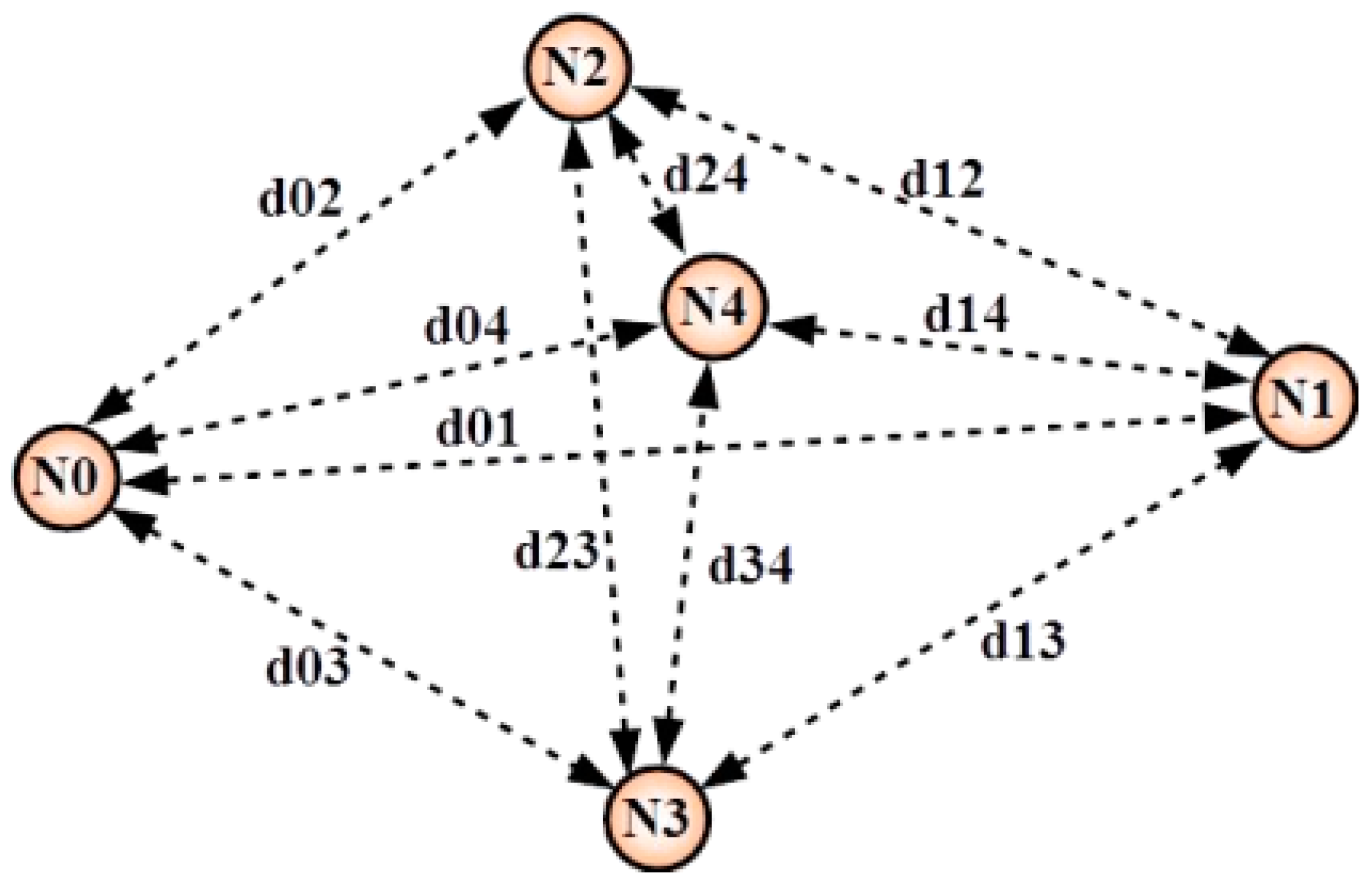

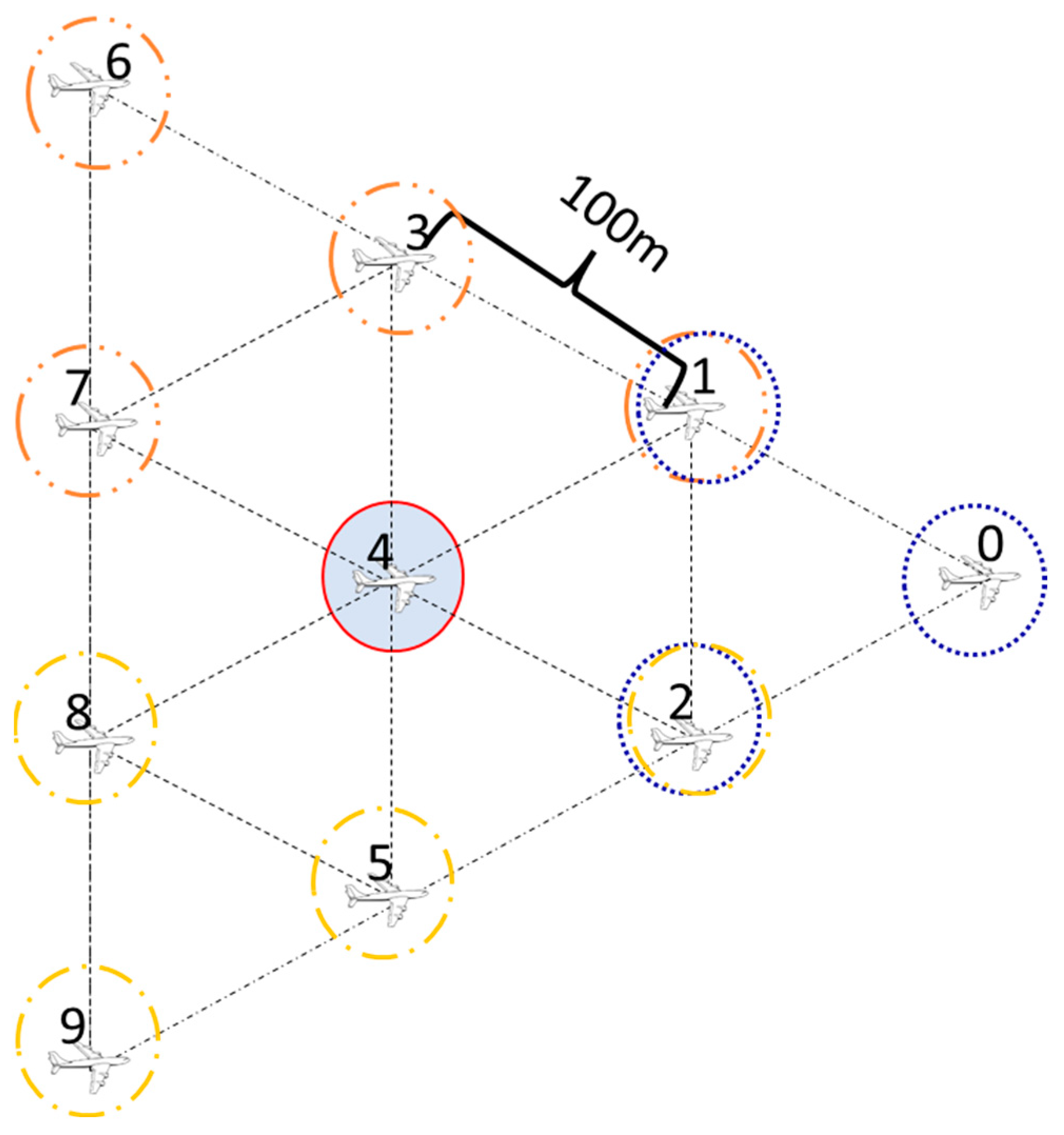

2.2. Relative Navigation Based on Vision and UWB

2.2.1. Detection and Tracking of Adjacent UAVs

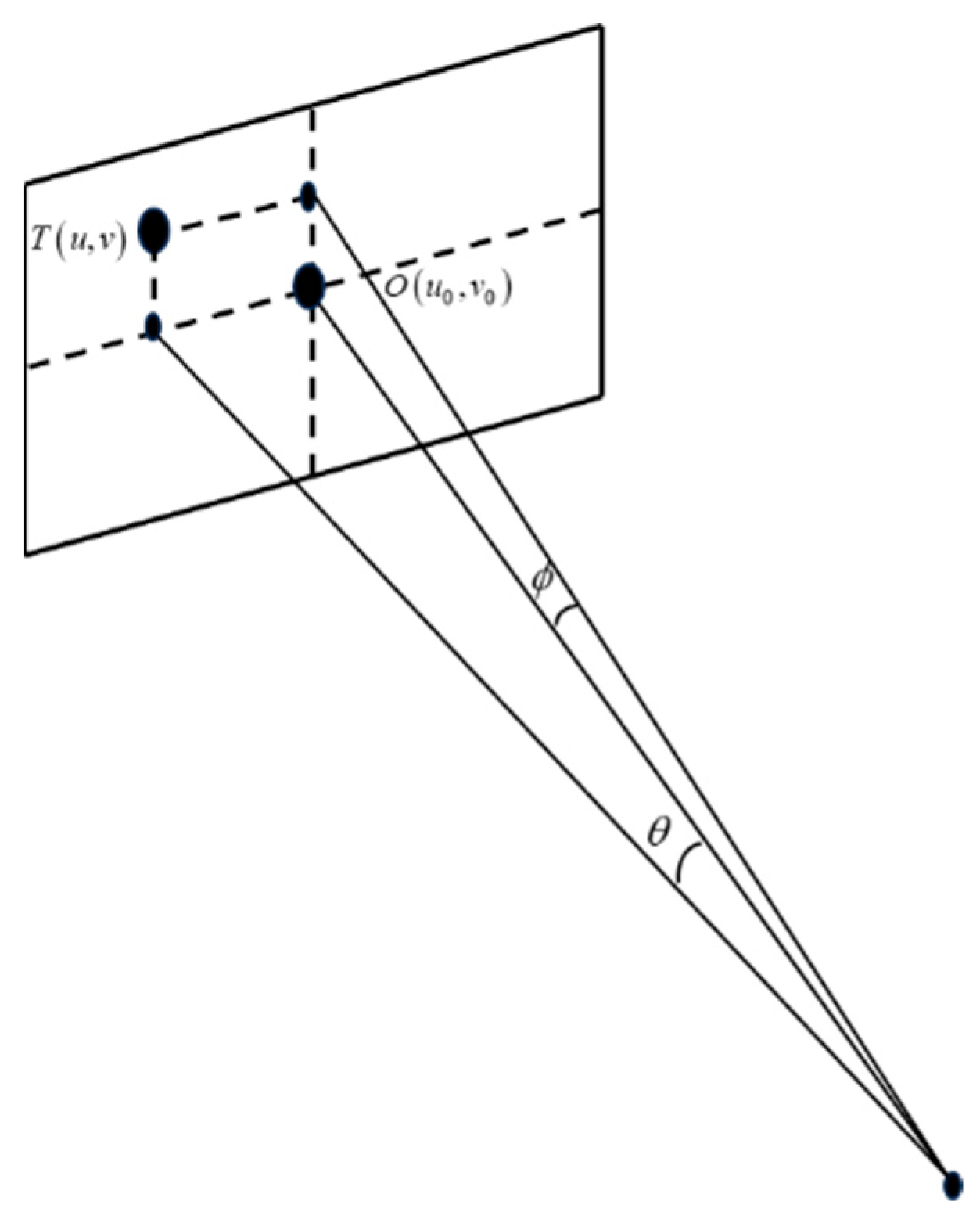

2.2.2. Calculating the Relative Position

3. Configuration of Simulator

3.1. Ultrawideband Model

3.2. Monocular Camera

3.3. Google Earth for Gazebo

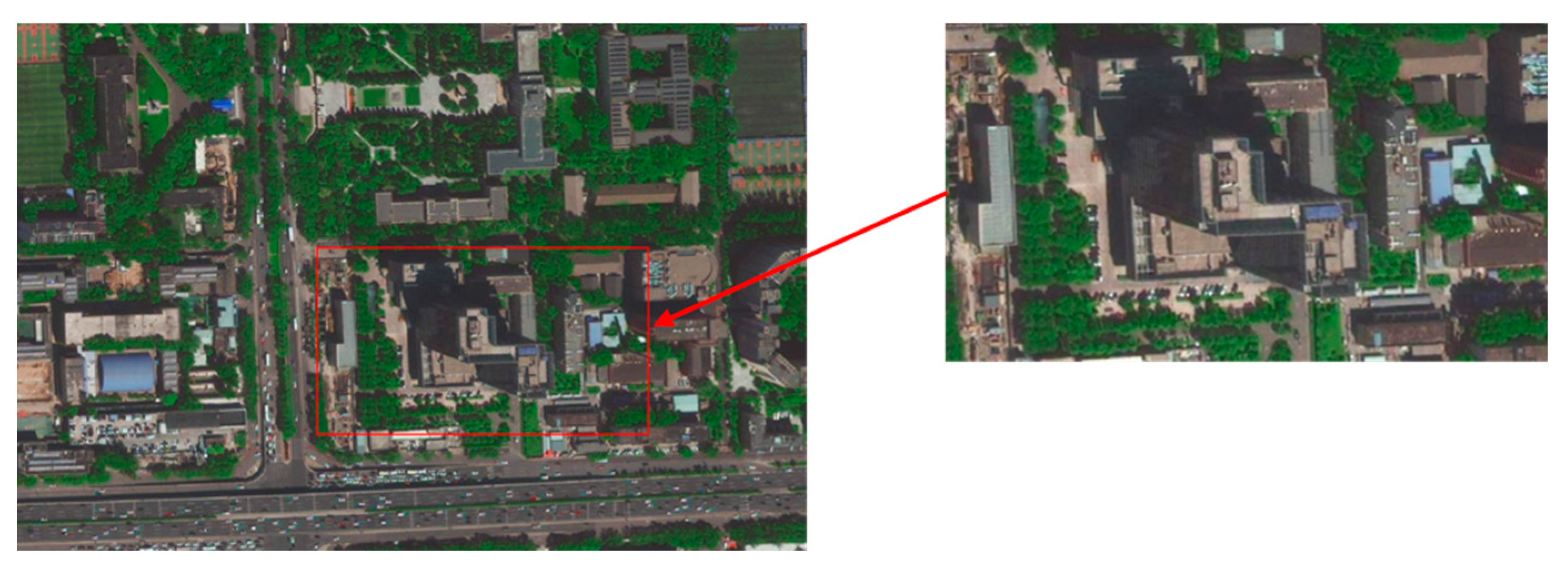

3.4. Hardware Configuration of Simulation Platform

4. Simulation Result

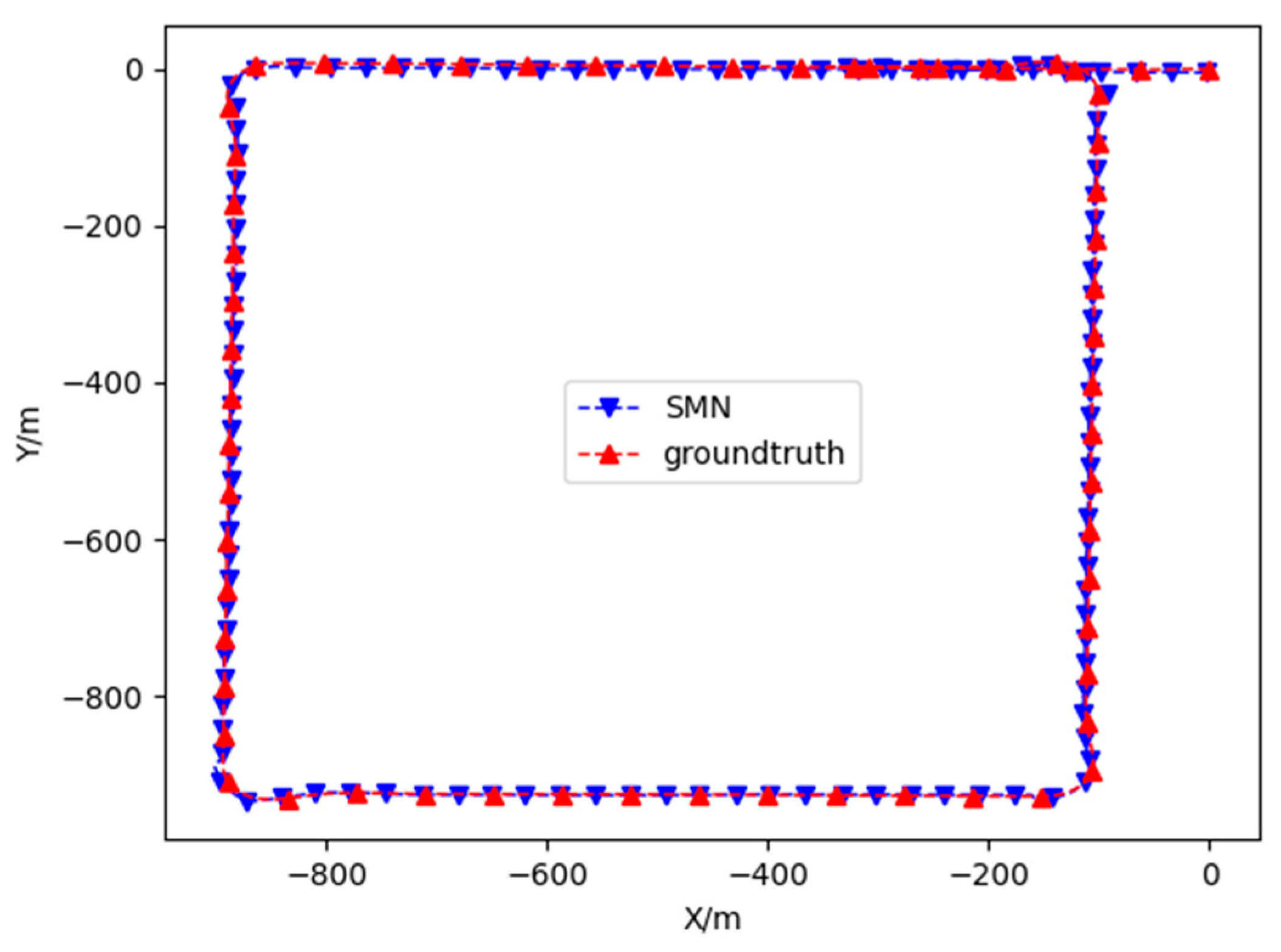

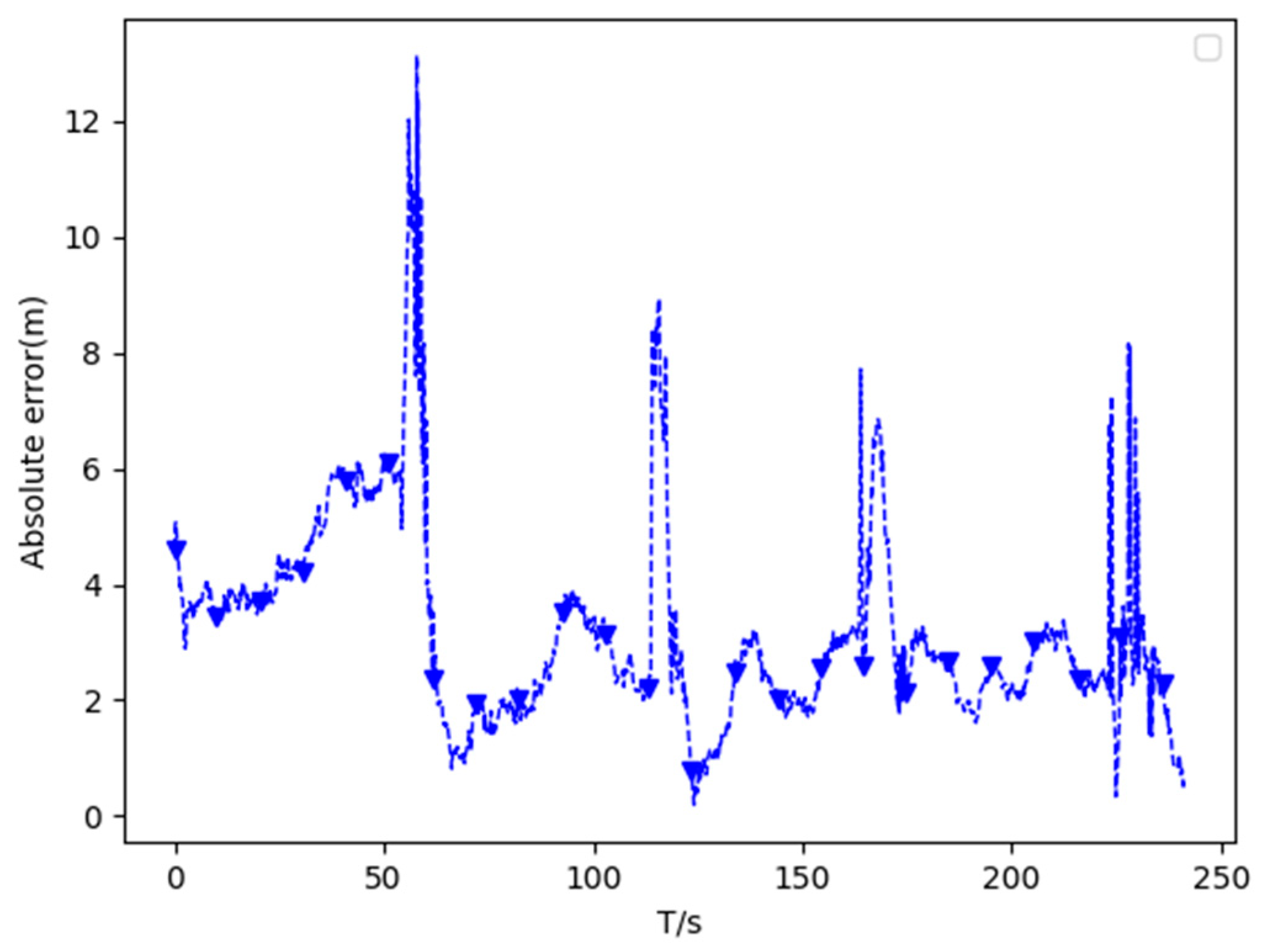

4.1. Simulation for ISMN

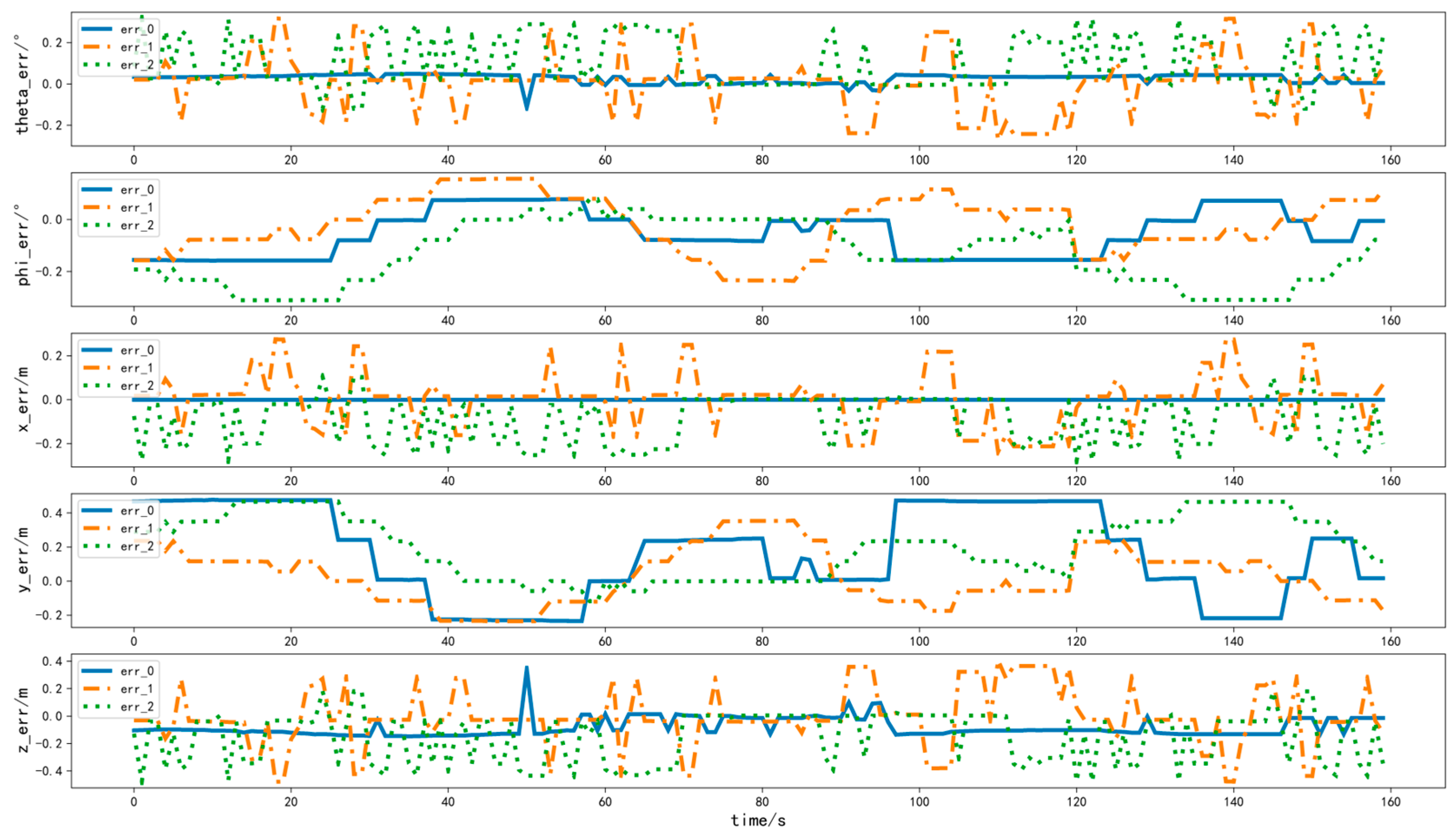

4.2. Simulation for Relative Navigation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Parameter group | Parameter | Description |
|---|---|---|
| image | width, height | Image width and height in pixels |
| image | format | RGB format |
| noise | mean | Mean of the noise |
| noise | stddev | Standard deviation of the noise |
| intrinsic parameters | fx, fy | Focal length |
| intrinsic parameters | cx, cy | Principal point offset (pixel shifting) |
| distortion | k1, k2, k3 | Radial distortion |
| distortion | p1, p2 | Tangential distortion |
| distortion | center | Distortion center |
| clipping | near, far | Near and far clip planes |
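
To illustrate how the camera parameters in the table interact, the following minimal sketch projects a 3D point in the camera frame to pixel coordinates using fx, fy, cx, cy and the distortion coefficients k1, k2, k3, p1, p2. It assumes the common OpenCV-style Brown-Conrady convention with the distortion center at the principal point; the simulator's renderer may apply the coefficients differently. The names `CameraModel` and `project_point` are hypothetical and do not come from the paper.

```python
from dataclasses import dataclass


@dataclass
class CameraModel:
    """Hypothetical container for the intrinsic and distortion parameters."""
    fx: float
    fy: float
    cx: float
    cy: float
    k1: float = 0.0
    k2: float = 0.0
    k3: float = 0.0
    p1: float = 0.0
    p2: float = 0.0


def project_point(cam, point):
    """Project a camera-frame point (X, Y, Z) to pixel coordinates (u, v).

    Assumption: OpenCV-style Brown-Conrady distortion, Z pointing forward.
    """
    X, Y, Z = point
    if Z <= 0:
        raise ValueError("point must lie in front of the camera (Z > 0)")

    # Normalized image coordinates
    x = X / Z
    y = Y / Z
    r2 = x * x + y * y

    # Radial term: 1 + k1*r^2 + k2*r^4 + k3*r^6
    radial = 1.0 + cam.k1 * r2 + cam.k2 * r2 ** 2 + cam.k3 * r2 ** 3

    # Tangential terms from p1, p2
    x_d = x * radial + 2.0 * cam.p1 * x * y + cam.p2 * (r2 + 2.0 * x * x)
    y_d = y * radial + cam.p1 * (r2 + 2.0 * y * y) + 2.0 * cam.p2 * x * y

    # Scale by focal length and shift by the principal point
    u = cam.fx * x_d + cam.cx
    v = cam.fy * y_d + cam.cy
    return u, v
```

With all distortion coefficients at zero this reduces to the ideal pinhole model, so a point on the optical axis maps to (cx, cy).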

| Parameter | Description |
|---|---|
| center | The map center: latitude and longitude |
| World_size | The desired size of the world target to be covered |
| Model_name | The name of the map model |
| pose | The pose of the map model in the simulation |
| zoom | The zoom level |
| Map_type | The map type to be used, which can be a roadmap, satellite, terrain or hybrid. By default, it is set to satellite |
| Tile_size | The size of map tiles in pixels. The maximum limit for standard Google Static Maps is 640 pixels |
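
As a rough illustration of how the zoom level, tile size and world size in the table relate, the sketch below estimates the ground resolution of a map tile and the number of tiles needed per side to cover a square world. It assumes the standard Web Mercator scale with a 256-pixel base tile at zoom 0, and world size given in meters; the function names are hypothetical, and the simulator's map plugin may compute the tiling differently.

```python
import math

EARTH_RADIUS_M = 6378137.0  # WGS84 equatorial radius used by Web Mercator
BASE_TILE_PX = 256          # assumed tile width at zoom level 0


def meters_per_pixel(lat_deg, zoom):
    """Approximate ground resolution (m/pixel) at a latitude and zoom level."""
    equator_res = 2.0 * math.pi * EARTH_RADIUS_M / BASE_TILE_PX
    return equator_res * math.cos(math.radians(lat_deg)) / (2 ** zoom)


def tiles_per_side(world_size_m, lat_deg, zoom, tile_size_px=640):
    """Number of square tiles per side needed to cover world_size_m meters."""
    tile_size_m = meters_per_pixel(lat_deg, zoom) * tile_size_px
    return math.ceil(world_size_m / tile_size_m)
```

For example, at the equator and zoom level 18 a 640-pixel tile spans roughly 382 m, so a 1000 m world would need 3 tiles per side under these assumptions.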

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, H.; Miao, C.; Zhang, L.; Zhang, Y.; Li, Y.; Fang, K. A Real-Time Simulator for Navigation in GNSS-Denied Environments of UAV Swarms. Appl. Sci. 2023, 13, 11278. https://doi.org/10.3390/app132011278
