1. Introduction

The dynamic development of sea shipping has resulted in an increase in both the number and the size of ships. The continuous increase in traffic intensity observed on the main sea routes has a negative impact on the level of safety at sea. Analysis of the available data on accidents at sea leads to the conclusion that the human factor is the most common cause of reported accidents [1,2,3]. An analysis of marine accidents from 1994 to 2003 completed by the Marine Accident Investigation Branch (MAIB) concluded that collisions accounted for about 55% of all accidents; of the vessels involved in collisions, 19% were completely unaware of the other vessel before the collision, 24% became aware of it too late, and 57% were aware of it [4].

It is widely noted that human error accounts for 80–85% of all marine accidents. An analysis of 135 marine accidents recorded in the MAIB database from 2010 to 2019 identified navigator condition as the most common human factor [2].

Simultaneously, ongoing technological progress in navigational equipment has had a positive effect on the safety level. New devices and systems such as Automatic Radar Plotting Aids (ARPA), the Electronic Chart Display and Information System (ECDIS), and the Automatic Identification System (AIS) have appeared. The cooperation of these devices within an integrated bridge system has created better conditions for obtaining and interpreting data [5]. At the same time, new methods of processing and presenting the available information have been proposed to improve the effectiveness of anticollision maneuvers (e.g., [6,7,8]). All this raises the level of navigation safety, provided that these devices are operated by properly trained and competent navigators. It is therefore also necessary to adapt navigator training processes to the new level of technology. In addition, ecological awareness has increased, and economic and financial factors have gained importance.

Together, all of these factors have fundamentally changed the approach to training sea crews. The requirement for the ship handling crew to have appropriate qualifications has proven to be of the utmost importance (training and further qualification apply to captains and pilots as well). Relevant regulations were established in international conventions, and the acquired knowledge had to be confirmed by certificates and appropriate sea practice [9]. A new form of training, simulator training, became increasingly important [10,11]. The value of simulation and simulators as tools supporting maritime education was recognized, and rapid growth in simulator development has been observed since then. The first simulators enabled presentation and training within a specific scope (e.g., radar simulators); their capabilities gradually increased (radar navigation simulators, ARPA simulators, ECDIS simulators) up to the most advanced multitask navigation bridge simulators and engine room simulators. The advantages of simulators used in maritime education have been recognized and supported by the most important international institutions, such as the International Maritime Organization (IMO), through detailed requirements for certification, training, seafarers’ competencies at all levels, equipment, and the teaching process itself (requirements for instructors) [11]. One of the main aims of the revised International Convention on Standards of Training, Certification, and Watchkeeping for Seafarers (STCW) is to extend the use of marine simulators by developing criterion-based rules for competence evaluation. The transfer of simulation-based training facilities to ships for use by crewmembers can be anticipated in the future [12]. Seafarers can thus acquire practical competencies not only in real conditions but also in a simulated and modeled world. As a result, the cost of gaining experience is lowered, and the risks and consequences of mistakes are eliminated.

As mentioned earlier, such a significant development in simulators, their diversity, and increasing availability result from the fact that international institutions, in particular the IMO, have noticed the huge role of training in simulators in improving safety. This is expressed in the regulations of the STCW Convention with the 2010 amendments, which discuss simulators in three thematic groups:

Training and assessment (Regulation I/6, A-I/6, B-I/6). The STCW Convention emphasizes that simulator trainers must also have appropriate qualifications and experience;

Use of the simulator (Regulation I/12, A-I/12, B-I/12), including the standards and guidelines contained, e.g., in the IMO resolutions on ARPA or ECDIS;

Minimum standards of competence, i.e., model courses developed for each qualification level or courses for simulator instructors.

Unfortunately, however, the STCW Convention does not specify which competency assessment methods should be used during examinations. This leaves considerable room for interpretation and allows different evaluation methods to be used [13].

A simulation should be understood as the approximate reproduction of the phenomena or behavior of an object using its model. Simulation can be used to show the possible real effects of given conditions or actions. It can also help when the real system is unavailable, unsafe, or simply does not exist [14].

The first step is to develop a model. A special type of model is a mathematical model, often written in the form of a computer program; sometimes, however, a smaller-scale physical model must be used. The model is a well-defined description of the simulated subject and presents its most important characteristics, such as its behavior, functions, and abstract or physical properties. The model is a system in itself, and simulation is its operation over time.
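The relationship between a model and a simulation can be illustrated with a minimal sketch. It assumes a hypothetical own ship whose heading responds to the rudder according to a first-order Nomoto-type model (T·dr/dt + r = K·δ); the gain K, time constant T, speed, and 1 s time step are illustrative assumptions, and this is a textbook simplification, not the mathematical model used in any commercial simulator. The `step` function plays the role of the model, and `simulate` is its operation over time.

```python
import math
from dataclasses import dataclass


@dataclass
class ShipState:
    x_nm: float          # east coordinate [NM]
    y_nm: float          # north coordinate [NM]
    heading_deg: float   # 0 = north, increasing clockwise
    yaw_rate_dps: float  # rate of turn [deg/s]


def step(s: ShipState, rudder_deg: float, speed_kn: float,
         dt_s: float = 1.0, k: float = 0.05, t_const: float = 30.0) -> ShipState:
    """One explicit-Euler step of a first-order (Nomoto-type) yaw model.

    T * dr/dt + r = K * rudder; K [1/s] and T [s] are assumed values,
    not data for any simulator ship model.
    """
    yaw_rate = s.yaw_rate_dps + (k * rudder_deg - s.yaw_rate_dps) / t_const * dt_s
    heading = (s.heading_deg + yaw_rate * dt_s) % 360.0
    run_nm = speed_kn * dt_s / 3600.0  # distance covered in this step
    h = math.radians(heading)
    return ShipState(s.x_nm + run_nm * math.sin(h),
                     s.y_nm + run_nm * math.cos(h),
                     heading, yaw_rate)


def simulate(s: ShipState, rudder_deg: float, speed_kn: float,
             duration_s: int) -> ShipState:
    """The simulation is the model applied repeatedly over time."""
    for _ in range(duration_s):
        s = step(s, rudder_deg, speed_kn)
    return s


start = ShipState(0.0, 0.0, 0.0, 0.0)
straight = simulate(start, rudder_deg=0.0, speed_kn=12.0, duration_s=600)
turning = simulate(start, rudder_deg=10.0, speed_kn=12.0, duration_s=600)
print(round(straight.y_nm, 3), round(turning.yaw_rate_dps, 3))  # 2.0 0.5
```

With the rudder amidships, the ship runs 2 NM in 10 min at 12 kn; with 10° of rudder, the rate of turn settles at K·δ = 0.5°/s. Real simulator models include many more effects (hull hydrodynamics, wind, current, shallow water).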

In the case of marine simulators, a more adequate definition is the one adopted by the Intersessional Simulator Working Group (ISWG), according to which simulation is a realistic, real-time imitation of any ship operation, radar, navigation, propulsion, loading, unloading, ballasting, or any other on-board system, with an interface suitable for interactive use by a trainee or candidate in or outside an operational environment, in accordance with the performance standards set out in the relevant parts of the STCW Convention [15].

Attention should also be paid to the definitions of assessment (evaluation) and grade. What do these terms mean?

Assessment is the process of gathering information, making judgments about that information, and making decisions [16]. In the context of this study, assessment is the process of gathering information about how an exercise was executed and under what conditions, and comparing it with a model, pattern, regulations, and guidelines. Its purpose is to evaluate the training level and the validity of the decisions made during the assessed activities, to motivate the trainee, and to identify areas for improvement. A grade is the result of this process: a conventional way of rating the work and progress of a student or trainee, or an opinion about something or someone formed as a result of the analysis.

In navigation simulator exercises, the assessment process is very complex. The assessment is made by the instructor after careful observation of the simulation. The instructor relies on knowledge gained during onboard practice, on pedagogical and didactic training, and on a very good knowledge of the simulator. Even when the instructor is highly competent, such an assessment can be classified as intuitive and subjective and may be perceived as incomplete and unfair. To avoid such claims of unreliability, the instructor may use analytical assessment, which is based on criteria defined in advance. This makes the analysis process and the final result of the simulation more structured and therefore more accurate. The limits (criteria) applied delineate the area of acceptable norms and standards.

The word criterion is also worth defining. A criterion is a standard, mark, variable, property, or point of view that makes it possible to distinguish something from something else. It serves as a basis for evaluation and as a statement containing practical information on how to achieve a standard and what needs to be done for the standard to be considered met [17].

The characteristics of analytical and criterion-based assessments are [18]:

Results comparability;

Testing the specific skills of the student or trainee;

Ease of constructing the measurement tool;

Speed of checking;

Possibility to show the results in different layouts and forms;

Lack of psychological aspects influencing the conduct of the exercise; the assessment does not take into account the individual predisposition of the trainee;

All those being assessed are treated equally, under strictly defined, prescribed conditions and limitations.

When discussing analytical assessment, attention should be paid to the problem of criterion selection. This is a very difficult task, but state-of-the-art technology helps to meet this challenge: various applications and programs that support the assessment process are already available.

The aim of the Simulator Exercise Assessment (SEA) application is to provide an objective assessment of the navigator’s competence during the examination scenarios. This is not an easy task due to the huge number of parameters required for the assessment.

It should also be noted that many of the factors influencing criterion values can change dynamically during the exercise. Environmental criteria can be treated in many ways, depending on the level of simulator training.

The whole automatic assessment process can be divided into four parts:

Creation of the assessment criteria;

Analysis and optimization of the used criteria;

Verification of the criteria in order to objectivize the assessment;

Use of test scenarios to assess the trainee.

The most important issue in automatic evaluation is the proper definition of criteria. International institutions have not introduced any uniform standards, requirements, or patterns for this, so the task has been left to highly qualified simulator instructors.

According to a Safety of Shipping in Coastal Waters (SAFECO) report on the Marine Accident Risk Calculation System (MARCS) risk model for improving coastal shipping safety, automatic assessment criteria can be identified based on [19]:

The experience of instructors;

The averaged results of simulations run with experts (pilots, captains, officers with extensive experience, and other instructors);

The analysis of results obtained during previous students’ or trainees’ simulations;

Current regulations and requirements developed by international institutions;

Existing examination and training criteria.

The objective of this paper is to test whether simulator software can be used for the automatic assessment of navigators’ competence in solving collision situations. A series of simulations was carried out for this purpose, allowing assessment criteria to be created and subsequently optimized.

Section 2 and Section 3 characterize the tools used in this study, i.e., the simulator’s and SEA application’s capabilities. Section 4 describes the examination scenario and the assessment criteria used. Section 5 presents the achieved simulation results and the use of expert assessment to modify the automatic assessment criteria. A discussion of the results and conclusions are presented in Section 6.

2. K-Sim Polaris Simulator Characteristics

The Maritime University of Szczecin (MUS) has a state-of-the-art navigation bridge simulator, the K-Sim Polaris 7.5, manufactured by the Norwegian company Kongsberg Maritime AS. This system makes it possible to provide comprehensive training for MUS students and to enhance the skills of navigation officers, captains, and pilots operating on various types of vessels. An advantage of the K-Sim Polaris simulator is that its configuration, both hardware and software, can be customized to meet the needs of a wide range of courses. The high standard of the system is confirmed by the Det Norske Veritas–Germanischer Lloyd (DNV-GL) certificate, which attests full compliance with the STCW Convention requirements for simulators used in training on specialized courses, including sections A-I/12 and B-I/12 and tables A-II/1, A-II/2, and A-II/3. Exercises can be created and executed in 16 real coastal areas and on the open sea, using 22 available own ship models with a variety of characteristics (Table 1).

The available software allows the database to be extended with customized models of ships, targets, or coastlines according to individual needs and requirements. The system can also simulate (visually and acoustically) any weather conditions (fog, rain, snow, and storms) and hydrological conditions (sea state, currents, etc.) at different times of the day.

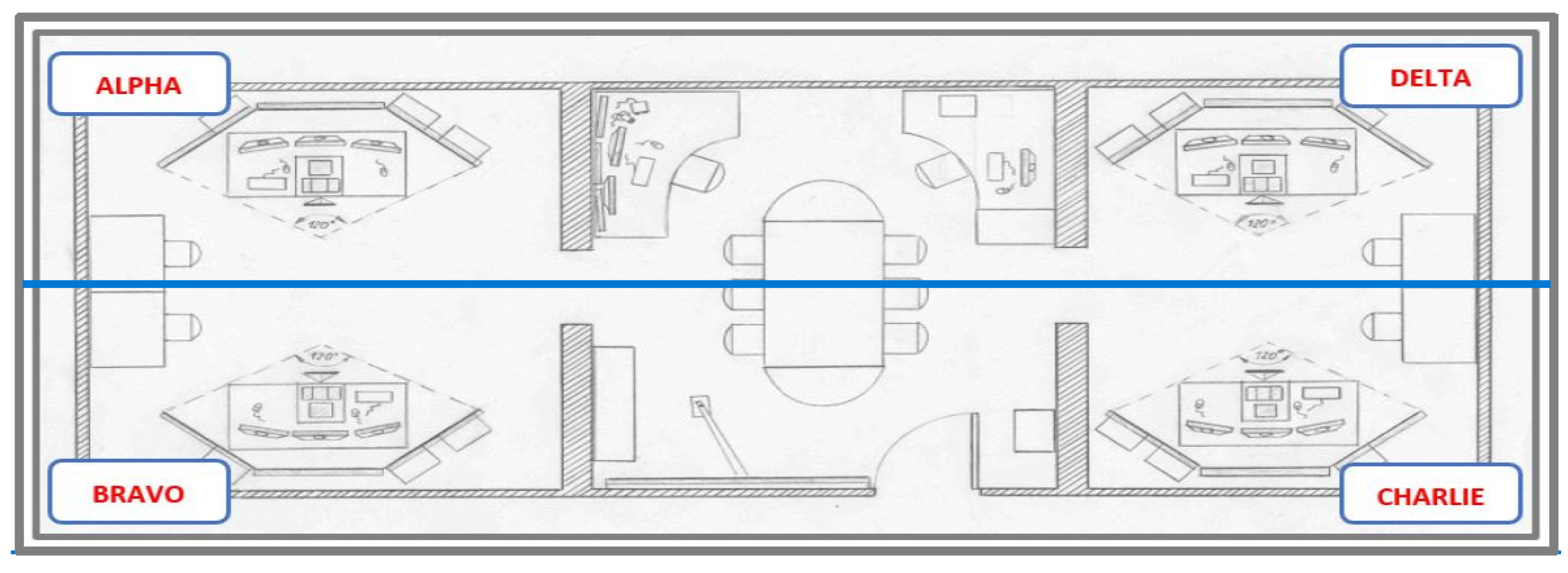

The simulator includes an instructor console, a server, and four equally equipped navigation bridges (Figure 1).

The instructor station (Figure 2) consists of the following components:

One PC;

Two operational monitors;

Three monitors with a video preview of the selected navigation bridge;

A panel for switching the display of the image of the selected navigation bridge;

A microphone for voice communication;

Loudspeakers.

Figure 2.

K-Sim Polaris simulator instructor station.


Each of the four navigation bridges has the following elements (Figure 3):

Figure 3.

View of simulator navigation bridge.


The vision system provides a 120° field of view, with free rotation of the projected image around the own vessel. All modules of the K-Sim Polaris system work together in real time, but the start time of an exercise can be changed, and the exercise can be stopped or repeated. With the right configuration, the system presents a correct visualization, simulates the operation of the bridge systems, and ensures effective communication (including communication between bridges).

3. Simulator Exercise Assessment (SEA) Application

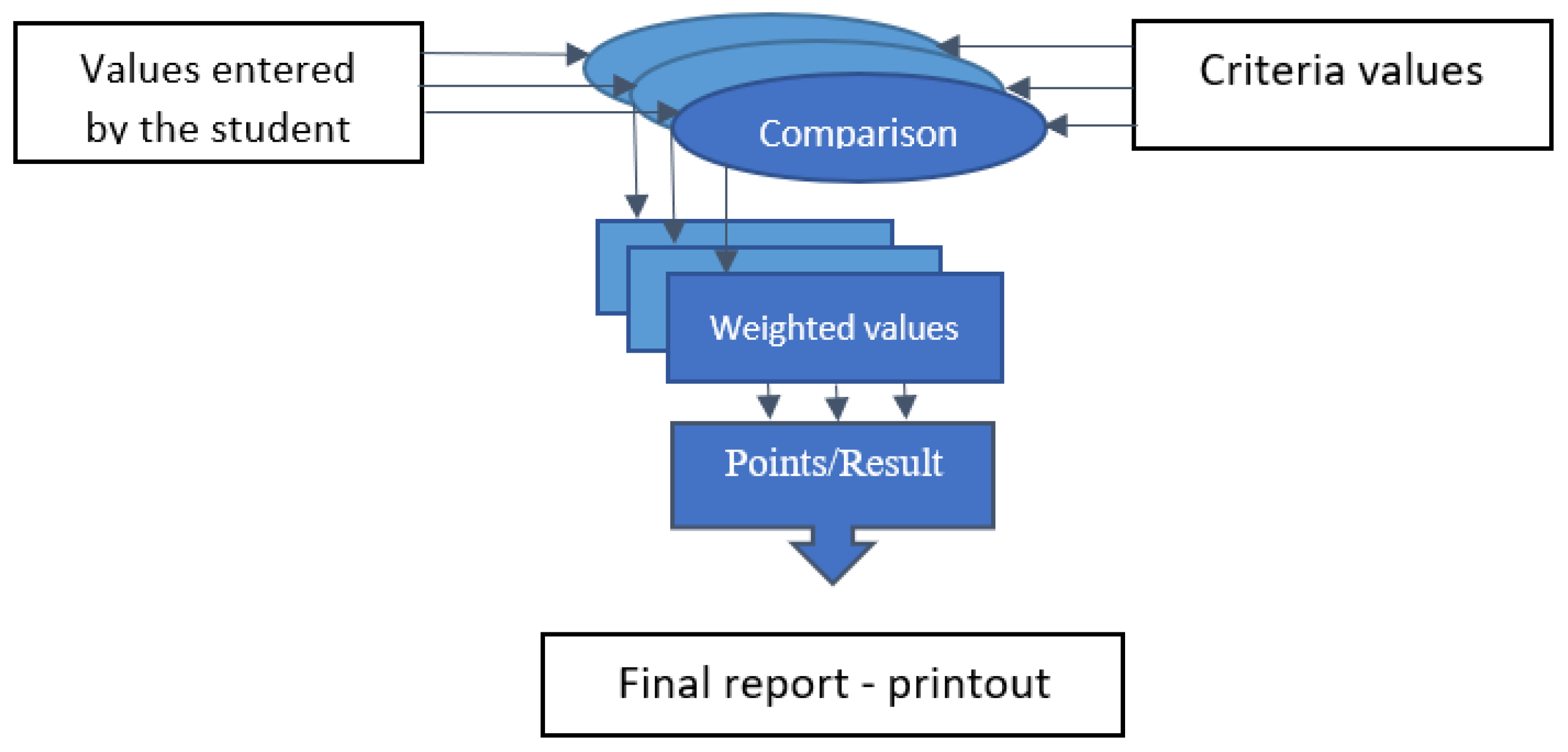

Kongsberg Maritime has developed an application that, once the appropriate criteria have been entered, performs a detailed, automatic assessment of the trainee’s activities: the Simulator Exercise Assessment (SEA) program. SEA is a stand-alone add-on application supplied by Kongsberg Maritime with the complete K-Sim Polaris 7.5 package and is designed to support the assessment process. However, the instructor still plays the main role: it is the instructor who prepares the actual exercise scenario and introduces the evaluation criteria for the simulation. For the instructor, the application is a structured, objective system for tracking multiple parameters and for measuring and comparing results. A simplified diagram of how SEA works is shown in Figure 4.

The SEA system offers assessment at two levels: basic and higher. In both cases, the trainer can choose which values will be monitored during the exercise and then enter the relevant criteria, weighted averages, etc., which serve as a model of a correctly performed activity. The program automatically compares the data collected during the simulation, considers the factors and criteria, and produces an evaluation. The higher level allows the use of an additional variable, the so-called Environment Links. The classification principle is the same as for the basic assessment, but a linked parameter can be treated differently depending on, for example, visibility or the approach to a fixed point. In this case, the limits of exercise correctness change dynamically.
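The difference between the basic and the higher assessment level can be sketched as follows, assuming a criterion is a lower limit with a penalty weight. At the basic level the limit is fixed; at the higher level a hypothetical environment link (here `stricter_in_fog`, with an assumed 50% increase in the required distance below 2 NM visibility) adjusts the limit dynamically. The class and function names are illustrative only and do not reflect SEA’s internal implementation or its criterion definitions.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Environment:
    visibility_nm: float


# Hypothetical link: maps (base limit, environment) -> limit actually applied.
EnvLink = Callable[[float, Environment], float]


@dataclass
class Criterion:
    name: str
    min_value: float              # values below this limit violate the criterion
    penalty_pp: float             # weight: penalty points per violation
    env_link: Optional[EnvLink] = None

    def limit(self, env: Environment) -> float:
        # Basic level: fixed limit. Higher level: limit adjusted by the link.
        if self.env_link is None:
            return self.min_value
        return self.env_link(self.min_value, env)

    def penalty(self, value: float, env: Environment) -> float:
        return self.penalty_pp if value < self.limit(env) else 0.0


def stricter_in_fog(base: float, env: Environment) -> float:
    # Assumed rule, for illustration only: demand 50% more passing
    # distance when visibility falls below 2 NM.
    return base * 1.5 if env.visibility_nm < 2.0 else base


basic = Criterion("passing distance", 1.0, 1.0)
linked = Criterion("passing distance (linked)", 1.0, 1.0, stricter_in_fog)
fog, clear = Environment(0.5), Environment(10.0)
print(basic.penalty(1.2, fog), linked.penalty(1.2, fog), linked.penalty(1.2, clear))
# 0.0 1.0 0.0
```

The same 1.2 NM passing distance is accepted by the fixed criterion but penalized by the linked one in fog, which is the kind of dynamic change in limits that Environment Links are intended to provide.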

After preparing the exercise scenario and analyzing the correctness of the simulation, the instructor enters specific criteria and assessment conditions. Up to 50 conditions can be entered (the number of rows is limited), chosen from 57 different criterion types [21].

To present the 57 criteria in a more structured way, they have been grouped as follows (data from SEA):

Anticollision criteria (Minimum distance from all ships; distance from ship; distance from CPA; minimum distance to banks; minimum TCPA; relative CPA; relative bearing; and true bearing);

Criteria related to the parameters of maintaining the established route or zone (inside all track sectors; inside all track sector-port side; inside all track sector-stbd side; speed in all track sectors; speed in track sector; relative sector zone; true sector zone; distance to PI; minimum distance to fenders; minimum distance from buoys; and depth under the keel);

Criteria related to the radar/ARPA use (radar visible range; radar tracking specific ship; radar tuning; and radar parallel index lines used);

Criteria related to the ship’s movement and equipment settings (heading; course over ground; course through water; speed rate; speed over ground; speed through water; rate of turn; pitch; roll; propeller revolution; RPM order (propeller 1); RPM order (propeller 2); thruster order; rudder order; rudder angle; and anchor chain length);

Criteria related to the main engine and power supply (engine power; start air pressure; and shaft power);

Criteria used in the simulation of antipiracy or SAR operations (radio hail ship; dispatch helo; dispatch rhib; helo source; rhib source; main source; illuminate with main search light; illuminate with helo search light; illuminate with rhib search light; illuminate with para flare; illuminate with flare gun; warning shots 76 MM gun; warning shots 50 caliber gun; engage with 76 MM gun; and engage with 50 caliber gun).
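Several of the anticollision criteria listed above (distance from CPA, minimum TCPA, relative CPA) rest on the standard relative-motion quantities CPA and TCPA. The sketch below computes them under the usual ARPA-style assumption that both vessels hold their course and speed, using a flat local plane with positions in NM (east, north) and velocities in knots. It is illustrative and not taken from SEA or K-Sim Polaris.

```python
import math
from typing import Tuple

Vec = Tuple[float, float]  # (east, north)


def velocity(course_deg: float, speed_kn: float) -> Vec:
    """Convert course [deg] and speed [kn] to (east, north) components [kn]."""
    c = math.radians(course_deg)
    return speed_kn * math.sin(c), speed_kn * math.cos(c)


def cpa_tcpa(own_pos: Vec, own_vel: Vec, tgt_pos: Vec, tgt_vel: Vec) -> Tuple[float, float]:
    """Return (CPA [NM], TCPA [min]) assuming both ships hold course and speed.

    A negative TCPA means the closest point of approach is already past.
    """
    rx, ry = tgt_pos[0] - own_pos[0], tgt_pos[1] - own_pos[1]  # relative position
    vx, vy = tgt_vel[0] - own_vel[0], tgt_vel[1] - own_vel[1]  # relative velocity
    v2 = vx * vx + vy * vy
    if v2 < 1e-12:  # no relative motion: the range never changes
        return math.hypot(rx, ry), 0.0
    t_h = -(rx * vx + ry * vy) / v2  # hours until closest approach
    return math.hypot(rx + vx * t_h, ry + vy * t_h), t_h * 60.0


# Own ship on 000° at 12 kn; target 6 NM ahead, 0.5 NM to starboard, on 180° at 12 kn.
cpa, tcpa = cpa_tcpa((0.0, 0.0), velocity(0.0, 12.0), (0.5, 6.0), velocity(180.0, 12.0))
print(f"CPA {cpa:.2f} NM, TCPA {tcpa:.1f} min")  # CPA 0.50 NM, TCPA 15.0 min
```

The example target closes at a relative speed of 24 kn and would pass 0.5 NM abeam in 15 min, i.e., inside the 1 NM minimum passing distance used in the test exercise in Section 4.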

For each criterion selected for evaluation, the ranges in which it is activated must be specified. Criteria can also be linked to each other; in this case, a given criterion is considered only if one or more of the conditions selected for other criteria are met. This approach allows a more flexible evaluation of the course of the exercise that is better adapted to real conditions. An example of an evaluation parameter selection sheet is shown in Figure 5.
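The linking mechanism described above can be sketched as a gate: a linked condition contributes penalty points only when at least one of the conditions it is linked to also holds. The sketch assumes hypothetical names (`Condition`, `score_snapshot`, state keys `range_nm` and `cpa_nm`) and evaluates a single snapshot; only the 50-condition limit and the CPA/range pairing used in the test exercise come from this paper, and SEA’s actual sheet format is not reproduced.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

MAX_CONDITIONS = 50  # SEA sheet row limit mentioned in the text

State = Dict[str, float]  # hypothetical snapshot of monitored values


@dataclass
class Condition:
    cid: str
    holds: Callable[[State], bool]  # True when the condition is met
    penalty_pp: float = 0.0         # 0 for conditions used only as gates
    requires_any: List[str] = field(default_factory=list)  # linked conditions


def score_snapshot(sheet: List[Condition], state: State) -> float:
    """Penalty points for one snapshot; a linked condition counts only
    if at least one of the conditions it is linked to also holds."""
    if len(sheet) > MAX_CONDITIONS:
        raise ValueError(f"{len(sheet)} conditions exceed the limit of {MAX_CONDITIONS}")
    met = {c.cid: c.holds(state) for c in sheet}
    total = 0.0
    for c in sheet:
        gated_off = bool(c.requires_any) and not any(met.get(r, False) for r in c.requires_any)
        if met[c.cid] and not gated_off:
            total += c.penalty_pp
    return total


sheet = [
    Condition("range_below_4nm", lambda s: s["range_nm"] < 4.0),
    Condition("cpa_below_1nm", lambda s: s["cpa_nm"] < 1.0, 1.0, ["range_below_4nm"]),
]
print(score_snapshot(sheet, {"range_nm": 3.5, "cpa_nm": 0.6}))  # 1.0
print(score_snapshot(sheet, {"range_nm": 5.0, "cpa_nm": 0.6}))  # 0.0
```

A CPA below 1 NM is thus ignored while the target is still more than 4 NM away and penalized once it comes closer, without a separate row for every combination of values.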

4. Testing Exercise

4.1. Test Scenario and Exercise Execution

An exercise to test the ability to correctly assess a situation around a ship using radar equipment was carried out in open water with restricted visibility.

The following hydrometeorological conditions were simulated:

Restricted visibility to 0.5 NM;

Air temperature of 20 °C;

Atmospheric pressure 1080 hPa;

Wind direction 270°, speed 15 kn;

Water temperature 18.7 °C;

Wave height 1 m, direction 090°.

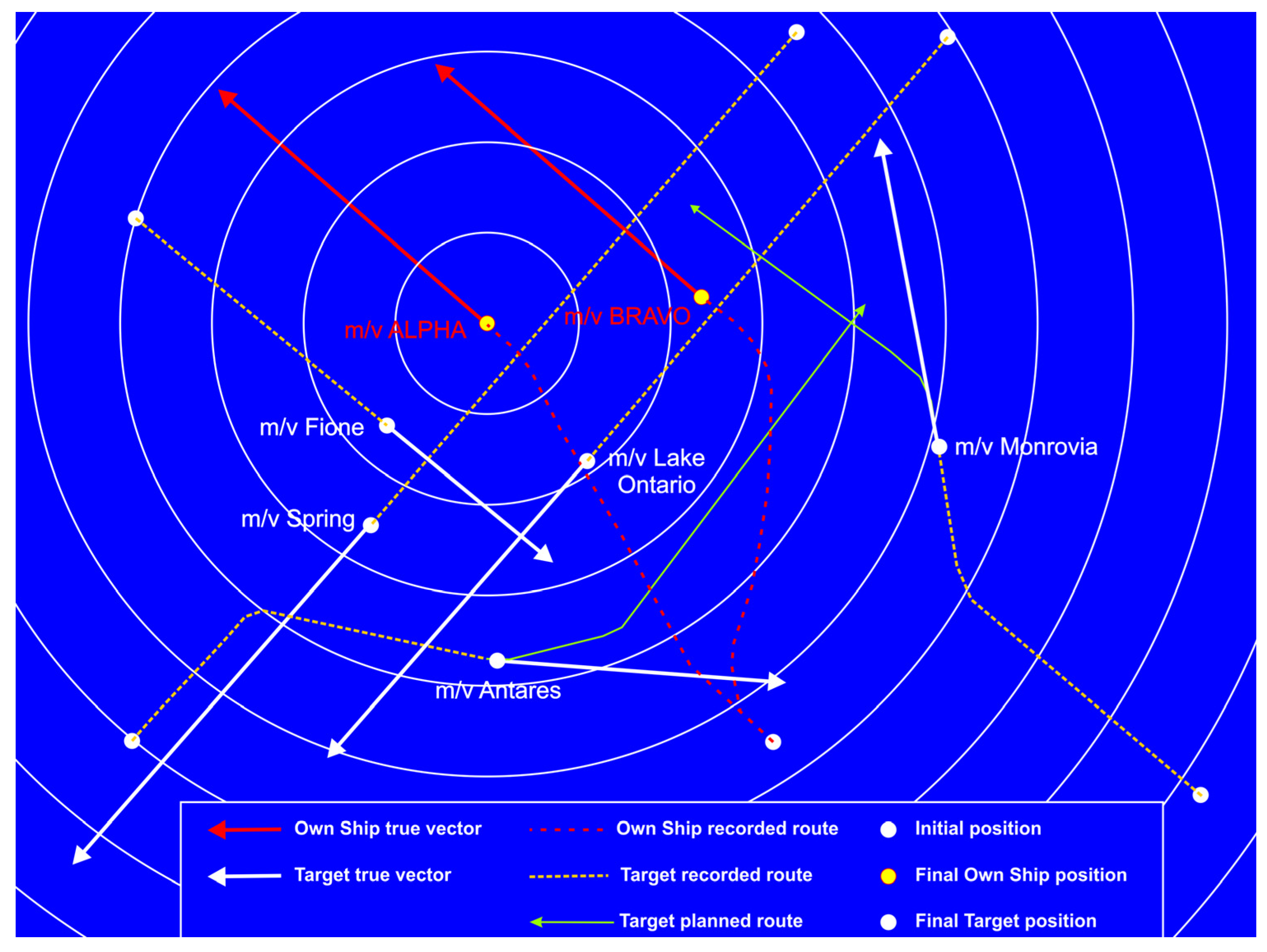

A bulk carrier model (BULKC06L—see

Table 1, Item 1) was used as a mathematical model of the own ship. The movement of five other vessels (targets) was simulated in the area (

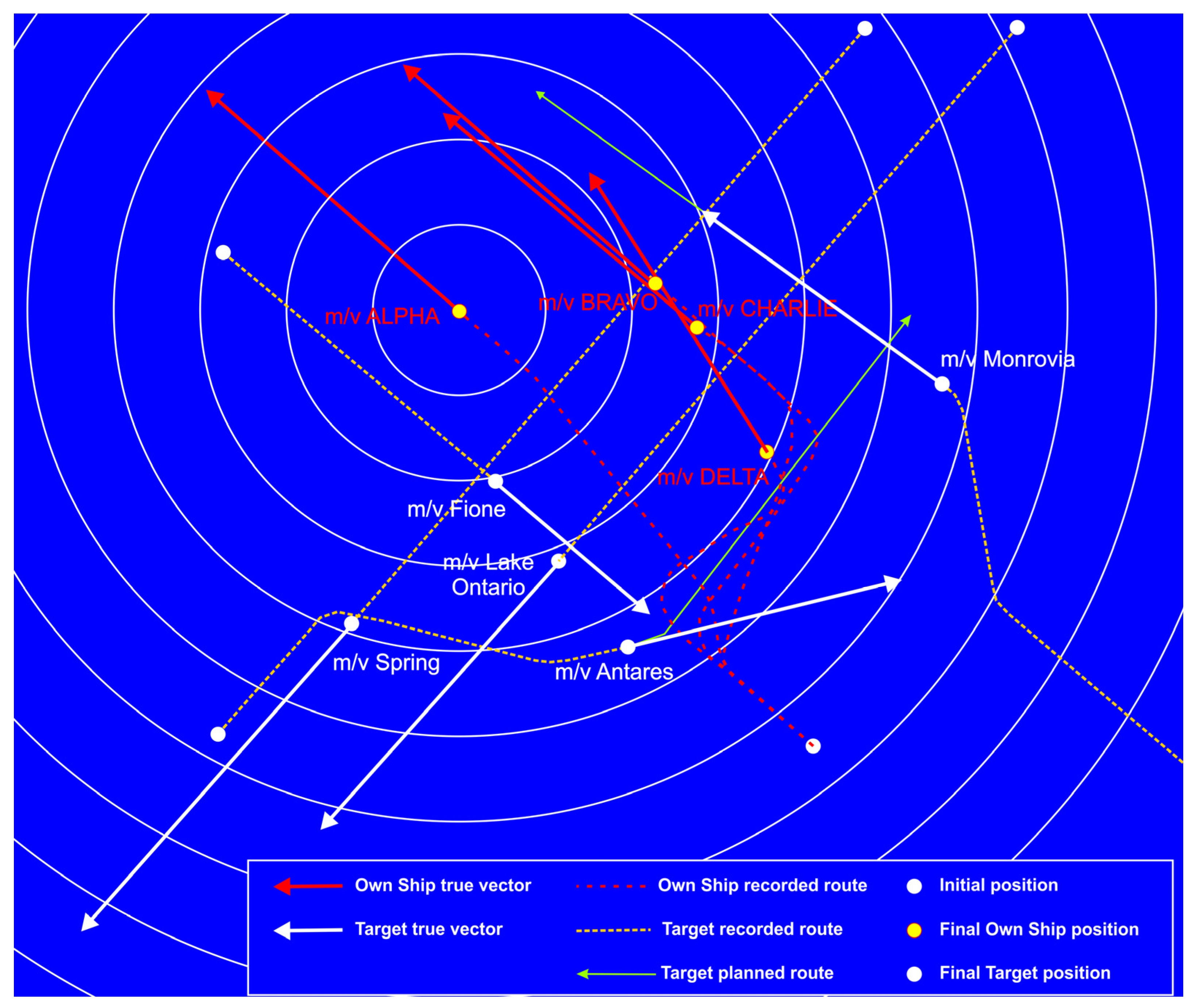

Figure 6). There was no interaction between the own ships, i.e., if more own ships participated in the simulation, then they did not maneuver relative to each other, starting the simulation from the same initial position.

It was assumed that the tested persons were familiar with the basics of the navigational and ship control equipment available on the bridge, as well as with the principles of analyzing the surrounding situation and proceeding in collision situations according to the International Regulations for Preventing Collisions at Sea (COLREG).

Before the exercise, each participant was briefed on the general conditions of the simulation and the required basic safety and evaluation criteria (in this case, a minimum passing distance of 1 NM had to be respected, and the anticollision maneuvers had to comply with the COLREG recommendations and good maritime practice).

During the exercise, the tested person was required to:

Turn on and adjust the ARPA device properly for the described conditions;

Properly acquire targets;

Assess the situation;

Plan and execute anticollision maneuvers (if necessary);

Plan and execute a returning maneuver (if necessary).

The simulation ran for about 25 min.

4.2. Evaluation Criteria Used

Six types of criteria were used for evaluation:

In the described exercise, applying these criteria to the five simulated targets and their interactions required the creation of 43 separate conditions. This number was reached by optimization, after careful analysis of the selection of criteria and the way they are used. Note that SEA allows only 50 separate conditions, which is a limitation in more complex situations.

Linking criteria makes it possible to calculate penalty points (PP) according to how the situation develops. Before the exercise started, the correct adjustment of the radar was assessed, and wrong radar tuning was penalized with 3 PP. The use of the correct operating radar range was also checked (owing to the open water and restricted visibility, the initial range of observation should be 12 or 6 NM), with 1 PP counted otherwise. Proper echo acquisition was also assessed at the beginning, and 3 PP were counted for each untracked object located less than 5 NM from the own ship. Untracked targets continued to be monitored throughout the exercise, and penalty points were counted whenever the distance to them decreased below 5 NM.
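The initial radar and acquisition checks can be expressed as a short sketch. The penalty values (3 PP for wrong tuning, 1 PP for a wrong range scale, 3 PP per untracked target inside 5 NM) and the correct range scales of 6 or 12 NM are taken from the text; the snapshot format and the assumption that each target is penalized only once are hypothetical.

```python
from typing import Dict, Iterable, Tuple

CORRECT_RANGES_NM = {6.0, 12.0}  # open water, restricted visibility
ACQUIRE_WITHIN_NM = 5.0

# Hypothetical snapshot format: target name -> (distance [NM], tracked by ARPA?)
Snapshot = Dict[str, Tuple[float, bool]]


def setup_penalty(tuning_ok: bool, range_scale_nm: float) -> int:
    """Initial radar checks: 3 PP for wrong tuning, 1 PP for a wrong range scale."""
    return (0 if tuning_ok else 3) + (0 if range_scale_nm in CORRECT_RANGES_NM else 1)


def acquisition_penalty(snapshots: Iterable[Snapshot]) -> int:
    """3 PP per target observed untracked inside 5 NM at any time.

    Assumption: a given target is penalized at most once per exercise.
    """
    missed = set()
    for snap in snapshots:
        for name, (dist_nm, tracked) in snap.items():
            if not tracked and dist_nm < ACQUIRE_WITHIN_NM:
                missed.add(name)
    return 3 * len(missed)


log = [
    {"SPRING": (7.0, False), "MONROVIA": (4.5, True)},
    {"SPRING": (4.8, False), "MONROVIA": (3.0, True)},
    {"SPRING": (4.0, False)},
]
print(setup_penalty(False, 3.0), acquisition_penalty(log))  # 4 3
```

In this made-up log, SPRING is never acquired and comes within 5 NM, so it is penalized once; MONROVIA is tracked and incurs no penalty.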

The CPA value was monitored for all tracked targets (the exercise assumed that vessels should pass each other at a distance of no less than 1 NM). If the calculated CPA for a target was less than 1 NM and the vessel was at a distance under 4 NM (this was treated as failing to perform an anticollision maneuver at a safe distance), 1 PP was counted. Another 1 PP was counted for such a target when the distance decreased below 3 NM. A further decrease in the distance below 2 NM resulted in another 2 PP, and below 0.5 NM in another 3 PP. Thus, the approach of a dangerous vessel to a distance under 0.5 NM resulted in a total of 7 PP. If the distance was reduced below 0.2 NM, however, the situation was treated as a collision, which resulted in a negative mark at the end of the exercise (20 PP).
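The CPA penalty ladder described above can be checked with a sketch that processes time-ordered (range, CPA) readings for one target. The thresholds and point values come from the text; the input format and the rule that each rung counts only once are assumptions about how the conditions behave, not a description of SEA’s internals.

```python
from typing import Iterable, Tuple

# (range threshold [NM], penalty points), counted while predicted CPA < 1 NM
CPA_LADDER = [(4.0, 1), (3.0, 1), (2.0, 2), (0.5, 3)]
SAFE_CPA_NM = 1.0
COLLISION_RANGE_NM = 0.2
COLLISION_PP = 20


def cpa_penalty(samples: Iterable[Tuple[float, float]]) -> Tuple[int, bool]:
    """Return (penalty points, collision flag) for one tracked target.

    `samples` are hypothetical (range_nm, cpa_nm) readings in time order.
    Each rung of the ladder is counted at most once.
    """
    crossed = set()
    for range_nm, cpa_nm in samples:
        if range_nm < COLLISION_RANGE_NM:
            return COLLISION_PP, True          # treated as a collision
        if cpa_nm < SAFE_CPA_NM:
            crossed.update(t for t, _ in CPA_LADDER if range_nm < t)
    return sum(pp for t, pp in CPA_LADDER if t in crossed), False


no_action = [(5.0, 0.6), (3.5, 0.6), (2.5, 0.6), (1.5, 0.6), (0.4, 0.6)]
late_turn = [(5.0, 0.6), (3.5, 0.6), (2.8, 0.6), (2.5, 1.3), (1.8, 1.3)]
print(cpa_penalty(no_action), cpa_penalty(late_turn))  # (7, False) (2, False)
```

With no action, the target reaches 0.5 NM and accrues 1 + 1 + 2 + 3 = 7 PP; a turn that raises the CPA above 1 NM only after the range has fallen below 3 NM leaves 2 PP, which is consistent with the two penalty points recorded for BRAVO against MONROVIA in Section 5.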

The type of anticollision maneuver was also monitored. In restricted visibility, COLREG Rule 19 requires that an alteration of course to port for a vessel forward of the beam (other than a vessel being overtaken) be avoided so far as possible. However, penalty points were only counted when the distance to the FIONE vessel (on a port relative bearing) was less than 4 NM.

Exceeding the threshold of 20 penalty points during the exercise resulted in a negative assessment.

MUS navigation students acted as the assessed navigators during validation testing of the SEA application.

5. Results

In the initial phase of testing the evaluation system, several simulations were carried out to confirm the appropriateness of the adopted evaluation criteria. After the first tests, however, it became clear that the criteria needed correcting because the grades obtained were too high in relation to the errors made. During subsequent tests, the criteria were gradually adjusted so that the grades obtained matched those given by the expert, an experienced instructor. This stage showed how difficult and time-consuming it is to adjust the criteria and link them together in such a way that more complex conditions are met.

When working on an automatic evaluation worksheet, it is very important to check the criteria used and their weights repeatedly and from several perspectives. The instructor must set the goal and level of the exercise, show imagination, determine how many correct solutions exist, and allow for deviations from the assumptions. This is because navigation is an open process that changes dynamically over time: the goal is clearly defined, but there are often several ways of reaching it. All these aspects should be considered when preparing an automated assessment. Linking conditions in the SEA program can take place on several levels [22].

The behavior of the SEA program depends closely on how deeply and precisely the navigational situation is analyzed, how it is described using criteria, and how sophisticated a structure is created to capture the many layers of the problem. As noted above, this is an extremely difficult task for instructors. At the same time, once instructors have completed the time-consuming and complicated work of preparing the evaluation sheet, they receive considerable support in the evaluation process in the form of objective information presented in a printed report. This document clearly indicates the errors made during the simulation. The resulting evaluation is concrete and free of subjectivity and other elements that reduce its validity.

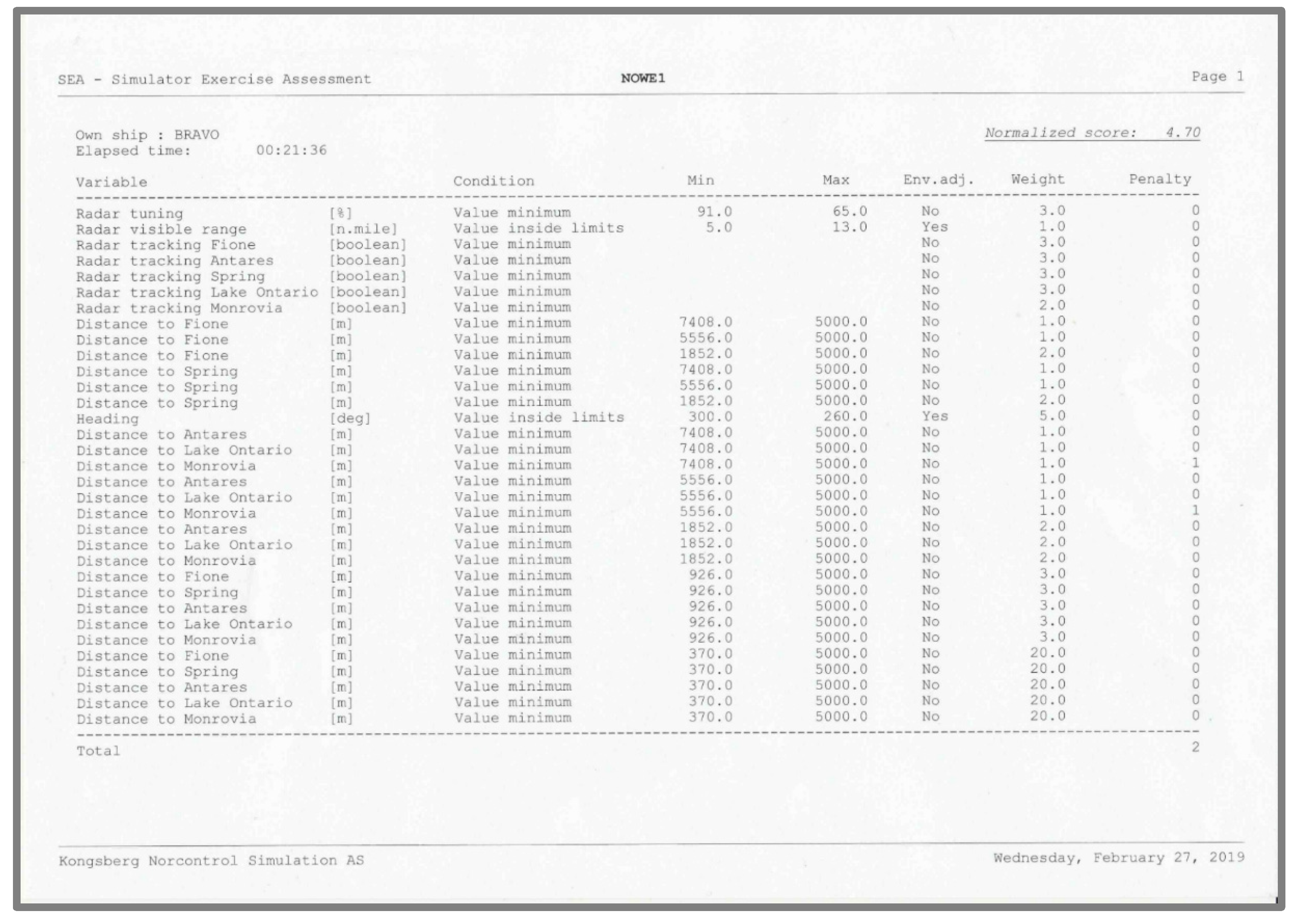

Based on the final version of the criteria sheet, further simulations were carried out with the SEA evaluation program activated. An example of the implementation of the exercise with own ships ALPHA and BRAVO is shown in Figure 7 (own ships CHARLIE and DELTA did not participate in the exercise). The location and adopted names of the navigation bridges in the simulator are shown in Figure 1. An example of the final report obtained for the BRAVO vessel is shown in Figure 8.

The SEA program rated the performance of the ALPHA ship as very good (4.7). ALPHA incurred only two penalty points, for getting too close to m/v SPRING during the final phase of the exercise. The ships passed each other safely, but the program recorded a passing distance of less than 1 NM astern of m/v SPRING. It is worth considering later whether the weights should be differentiated for passing ahead of and passing astern of another ship.

Ship BRAVO altered course very efficiently to starboard to pass astern of m/v SPRING and m/v LAKE ONTARIO. However, the SEA program recorded a criterion violation with respect to MONROVIA (two penalty points): no anticollision maneuver was executed at either 4 NM or 3 NM from MONROVIA, and the recorded CPA in this situation was less than 1 NM. BRAVO was also rated 4.7 (very good).

During one of the subsequent simulations, the student operating the CHARLIE ship received a negative grade (2.00). The program counted the maximum number of penalty points (14) for not acquiring all targets. The required distances and CPAs were also not respected, and exceeding these criteria resulted in another penalty point. The student failed the exercise, and the grade indicates a lack of competence at the given level of training.

To further verify the usefulness of the SEA application, the results of several recorded situations were presented to watch officers with varying sea experience, who evaluated the correctness of the actions taken. The assessors were also given information on the radar adjustments made and the targets acquired. The rating was given according to the following scale:

The results of the expert-assessed simulation are shown in Figure 9, while the results of the evaluations of the experts taking part in the research are presented in Table 2.

All participants in the research analyzed the traffic situation, determined speeds and distances, and then analyzed the solution to the situation based on the radar image of the discussed simulation. A comparison of the automatic evaluation by the SEA system with the evaluation carried out by the captains and officers of the watch shows a high similarity of the grades given (especially for the solution chosen by BRAVO). It should be noted that the maneuvers executed by BRAVO were clear, efficient, and made early enough. Such action is fully in accordance with COLREG recommendations.

By contrast, the grades given for the ALPHA route were sharply divided. Some of the experts (four out of thirteen) considered the route too risky and rated it negatively, whereas most participants (eight out of thirteen) gave it the highest grade. Such variation in evaluations is a clear indication of their subjective nature. It should also be noted that the ALPHA maneuvers were not as efficient as the BRAVO maneuvers, and some small course corrections were visible during the exercise. This way of solving a collision situation is not recommended when ships are in restricted visibility.

The evaluators carried out a logical analysis, considered all navigational and weather conditions, and even took the economic aspect into account (altering course as little as possible to avoid consuming additional fuel and returning to the planned course as quickly as possible). The human factor, in the form of the evaluators’ personalities, also came into play. The SEA program, by contrast, calculated penalty points based on the criteria and weights entered and gave a mark using a specific rating scale.

Some evaluators chose the ALPHA route as the most appropriate and reasonable (eight very good ratings), while others chose the BRAVO route as the best and safest (six very good ratings). Based on the average rating, the experts rated BRAVO highest, whereas the SEA program gave the highest rating to ALPHA. In both assessments, the lowest ratings were given to the navigators on the CHARLIE and DELTA ships.

It was also observed that, in addition to knowledge and experience, character traits such as conservatism and lack of self-confidence affect the evaluation. Automatic evaluation, on the other hand, is free of subjectivity; it is based purely on calculation according to established algorithms. The study conducted on a group of people with relevant competencies (experts) is only a preliminary comparison of automatic assessment and traditional human assessment. Even with such a small group, common elements of the two assessments have already been observed.

6. Discussion and Conclusions

This article presents the results of using the module for automatic assessment of navigator competence. The case described here concerns the resolution of collision situations in the open sea. The paper highlights the increasing role of marine simulators as a major component of ship crew training (supported by the amendments to the STCW Convention). Training with simulators is very effective. A separate aspect is the proper evaluation of trainees’ competencies. Together with changes in bridge equipment and the introduction of simulators into crew training, the methodology for assessing navigator qualifications must also change. The increasingly complex and dynamically changing navigation process requires extensive knowledge on the part of instructors and examiners. It therefore seems necessary to support the evaluation process on the simulator with automatic evaluation. The subject is multithreaded, complex, and difficult, yet little literature and research on it are available. Previous work on automated procedures for assessing operator efficiency (e.g., the initial GruNT project in 2017) showed a high degree of agreement between the assessment algorithm for a specific maritime operation and the assessment performed by subject matter experts. The GruNT project was based directly on the scenario evaluation system in the K-Sim Polaris simulator [22].

This study also compared the automatic evaluation generated by the SEA program with the evaluation by competent persons (subject matter experts). The analysis indicated the limited reliability and possible subjectivity of the expert assessment. Nevertheless, the observed similarities between the assessments, especially with those given by the most experienced captains and officers, suggest that automatic assessment can reasonably be used.

Kongsberg has provided an automatic evaluation program in its K-Sim Polaris simulator, which, in its current form, is useful and effective but should still be improved. Its assumptions, the way penalty points are calculated, and the comparison of preset parameters meet the requirements, although the number of available criterion types could be greater. There is also no way to define user parameters, which requires the instructor to analyze the available parameters carefully in order to use them optimally.

The simulations conducted and evaluated in the open sea showed what to pay attention to when using a simulator-based application for automated competence assessment.

A major problem of using applications to automatically assess the competence of navigators is the lack of internationally recognized standards and norms to use in the criteria-selecting process.

Indeed, it is not easy to define such standardized conditions. Shipping is a dynamically changing process, and different paths, more or less correct, can lead to the right solution. Depending on environmental conditions, the same route can be assessed differently, and navigators can choose the sequence and timing of their tasks. Finding an algorithm that takes every aspect into account is a very difficult problem, which is why researchers rarely explore this field. Despite this, the development of automated assessment seems inevitable. It is becoming increasingly important to establish an evaluation system that will contribute to a consistent, objective, and transparent assessment of navigators’ skills and competence in the future.

The general conclusions are as follows:

The instructor’s qualifications and training have to be in accordance with STCW Convention requirements. Instructors must have many years of experience at sea, as well as pedagogical, didactic, and psychological knowledge, and they must be familiar with both the simulator and all functions of the automatic assessment application;

It is very important to choose the sea area type correctly (open, restricted, approach to the port, approach to the quay, etc.). Each of these areas requires attention to different parameters/criteria;

Proper preparation of an automatic evaluation sheet requires very precise parameter selection, proper adjustment of limits and weights, and the creation of links and relations between the tested quantities;

Correct creation of the evaluation sheet requires repeated checking and testing of the exercise;

The limits used for evaluation should meet the requirements of international regulations, follow good maritime practice, and be tailored to the training level;

Norms and standards by which the assessment should be performed have not yet been developed, so the instructor is expected to construct an automatic assessment sheet properly adapted to a specific scenario or scenarios.

The analysis of the SEA application’s capabilities carried out during testing allows us to conclude that:

SEA can support the instructor in the competency assessment process, ensuring its objectivity;

It makes it possible to monitor selected parameters used during competence assessment;

By using an automatic assessment program, the instructor can focus more on the assessment of nonmeasurable elements (e.g., navigator’s behavior, relations with other members of the watch, communication skills, logical thinking, etc.);

It provides information on the outcome of the simulation in the form of a printed final report;

Further development of the SEA application should ensure its integration with the core simulation program, which would significantly improve its operational stability;

The number of available criteria should be extended, or it should be possible for the user to define their own evaluation criteria, which would ensure that the SEA application can be used more widely.