Mechanical Assembly Monitoring Method Based on Semi-Supervised Semantic Segmentation

Abstract

1. Introduction

2. Related Work

2.1. Fully Supervised Semantic Segmentation

2.2. Unsupervised Semantic Segmentation

2.3. Semi-Supervised Semantic Segmentation

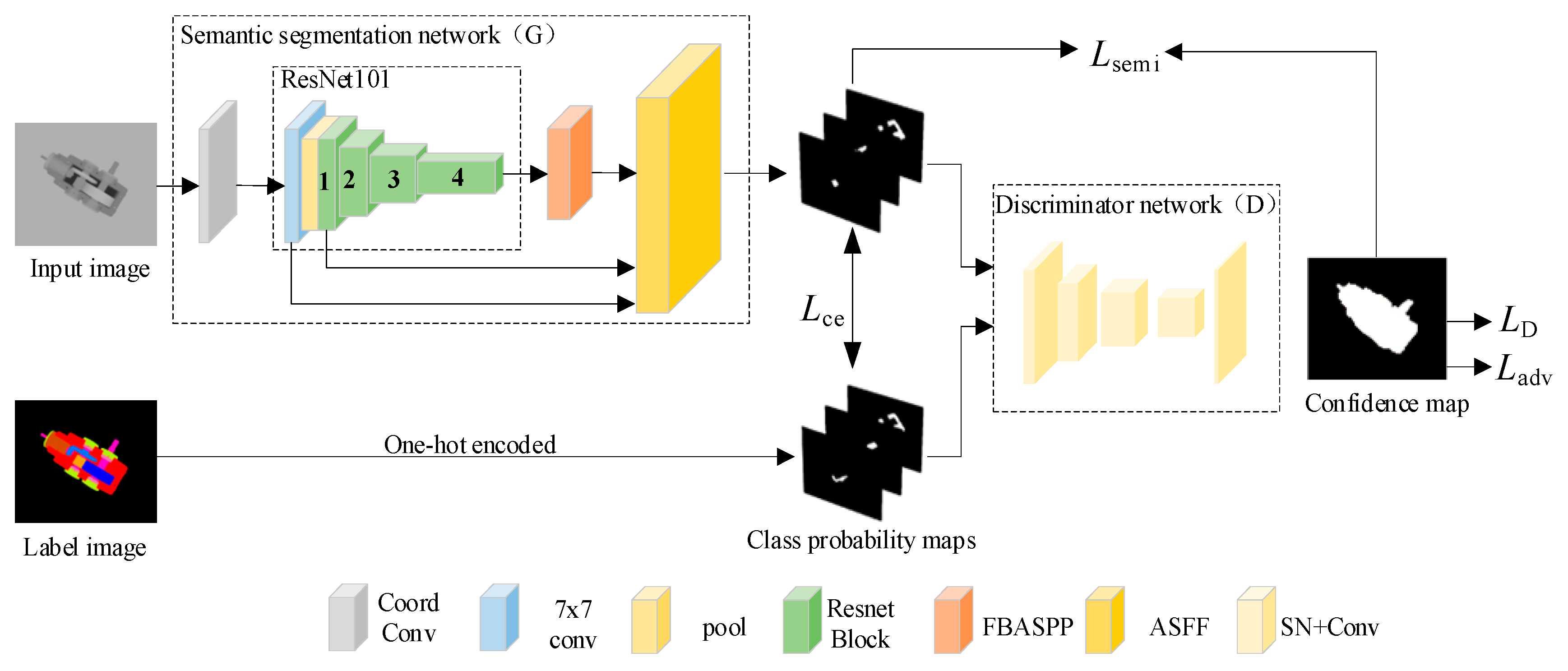

3. Overall Framework

3.1. Structure of the Model

3.2. Adaptive Spatial Feature Fusion (ASFF) Module

3.3. RFASPP Module

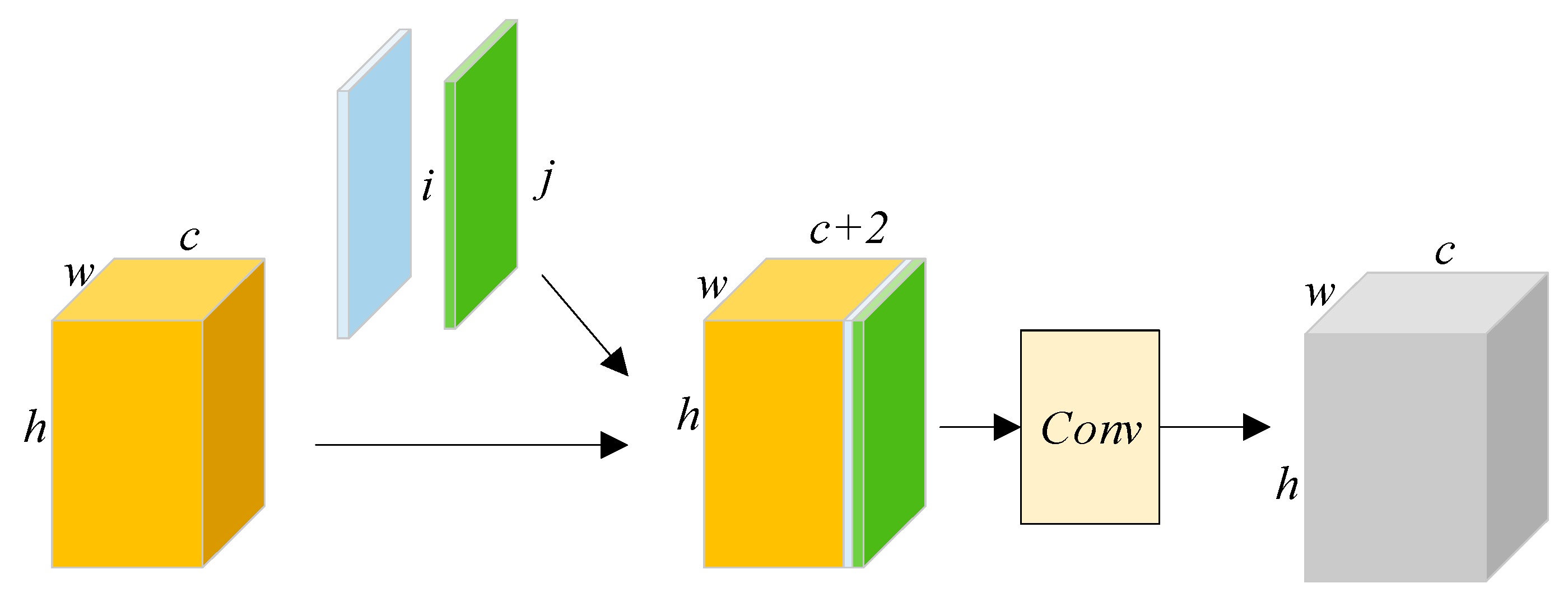

3.4. CoordConv Module
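
As a rough illustration of the general CoordConv idea (Liu et al.), the sketch below appends two coordinate channels, scaled to [-1, 1], to a C × H × W input before it would be passed to an ordinary convolution. It is a minimal plain-Python sketch, not the implementation used in this work: the names `coord_channels` and `add_coords` are hypothetical, and where the module sits in the segmentation network is not specified here.

```python
from typing import List

Channel = List[List[float]]  # one H x W feature map


def coord_channels(height: int, width: int) -> List[Channel]:
    """Build the row (i) and column (j) coordinate maps, scaled to [-1, 1]."""

    def scale(idx: int, size: int) -> float:
        # A single row or column has no extent, so map it to 0.0 (assumption).
        return 0.0 if size == 1 else 2.0 * idx / (size - 1) - 1.0

    rows = [[scale(i, height) for _ in range(width)] for i in range(height)]
    cols = [[scale(j, width) for j in range(width)] for _ in range(height)]
    return [rows, cols]


def add_coords(feature_maps: List[Channel]) -> List[Channel]:
    """Concatenate the two coordinate channels to a C x H x W input."""
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    return feature_maps + coord_channels(height, width)


# Usage: a single 2 x 3 channel becomes three channels (input, i, j).
augmented = add_coords([[[0.0] * 3 for _ in range(2)]])
print(len(augmented))
```

The convolution that follows then sees position as an explicit input feature, which is the property CoordConv is meant to provide.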

3.5. Network Training

4. Experiments

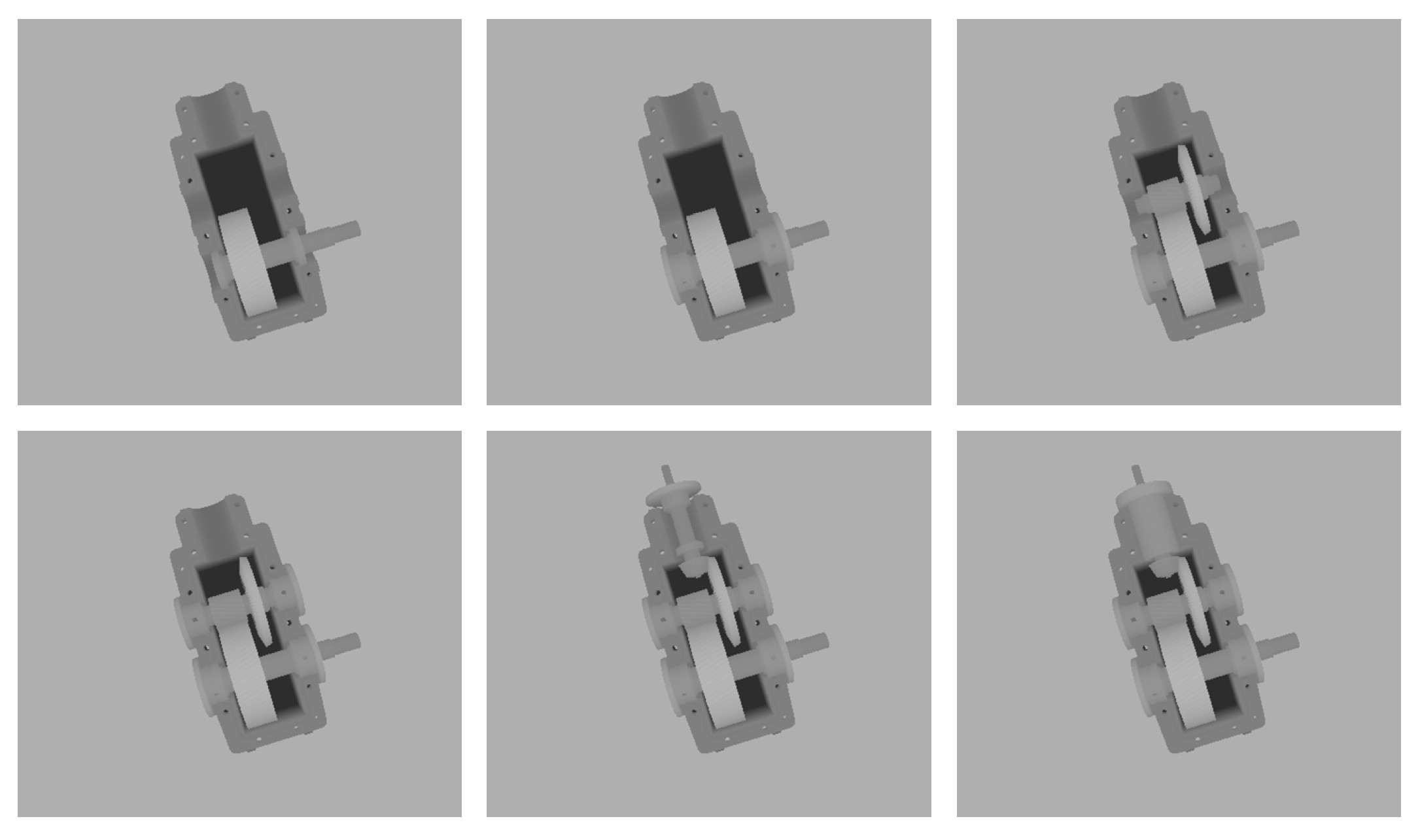

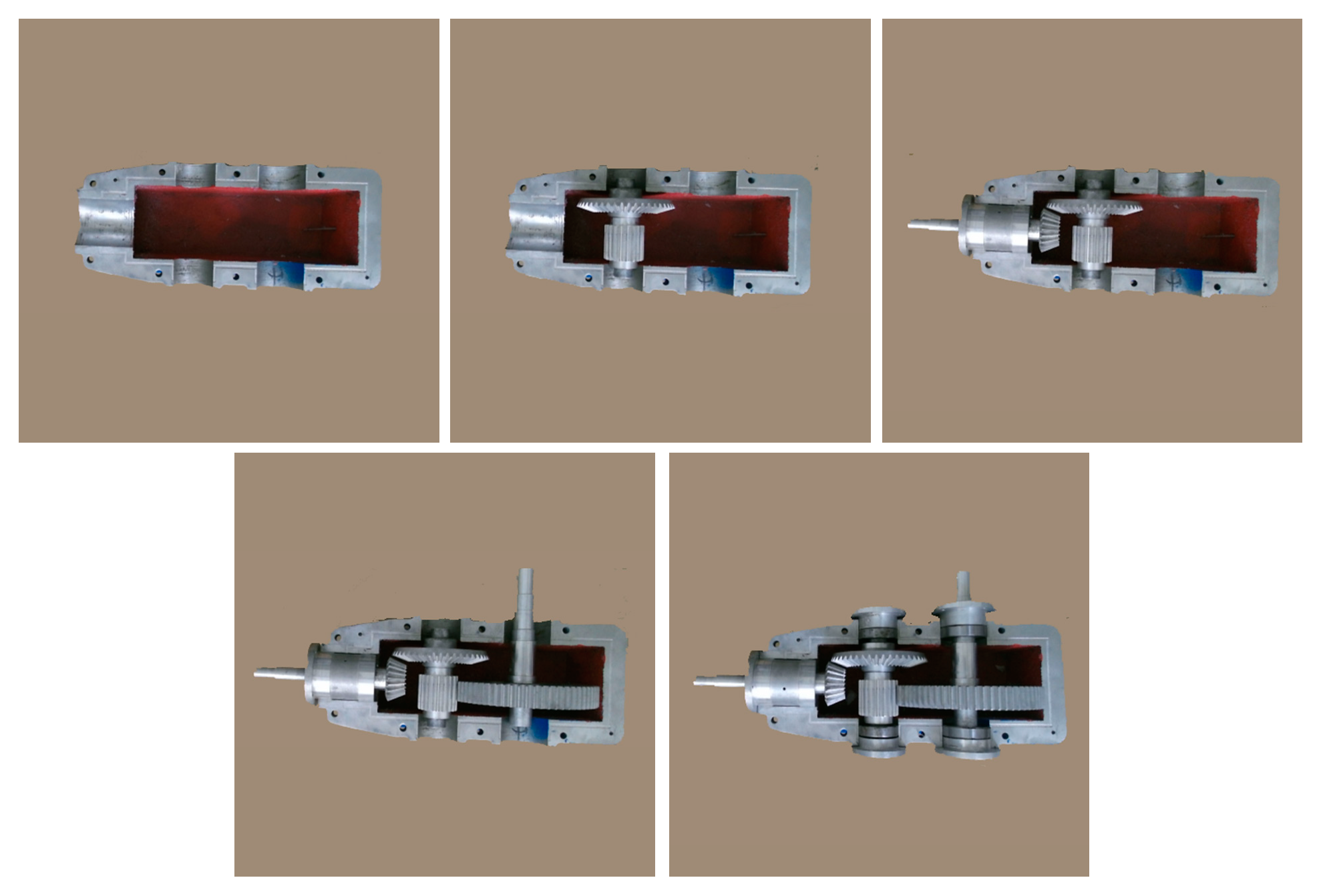

4.1. Assembly Image Semantic Segmentation Dataset

4.2. Evaluation Indicator
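
The result tables report PA, MPA, F1, and MIoU. The sketch below computes these four indicators from a pixel-level confusion matrix using their commonly used definitions. It is a hedged illustration only: the function names are hypothetical, F1 is assumed to be macro-averaged over classes, and details such as whether the background class is excluded may differ from the procedure actually used for the tables.

```python
from typing import Dict, List, Sequence


def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int],
                     num_classes: int) -> List[List[int]]:
    """Rows index the ground-truth class, columns the predicted class."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def segmentation_metrics(cm: List[List[int]]) -> Dict[str, float]:
    """Return PA, MPA, macro F1, and MIoU as fractions in [0, 1]."""
    k = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(k))
    recalls, f1s, ious = [], [], []
    for i in range(k):
        tp = cm[i][i]
        fn = sum(cm[i]) - tp                       # class-i pixels labelled otherwise
        fp = sum(cm[j][i] for j in range(k)) - tp  # other pixels labelled as class i
        if tp + fn == 0:
            continue  # class absent from the ground truth: skipped (assumption)
        recalls.append(tp / (tp + fn))             # per-class pixel accuracy
        f1s.append(2 * tp / (2 * tp + fp + fn))
        ious.append(tp / (tp + fp + fn))
    return {
        "PA": correct / total,
        "MPA": sum(recalls) / len(recalls),
        "F1": sum(f1s) / len(f1s),
        "MIoU": sum(ious) / len(ious),
    }


# Usage on six flattened pixel labels from three classes.
cm = confusion_matrix([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], num_classes=3)
print(segmentation_metrics(cm))
```

To report MIoU without the background class, one option would be to drop that class's entry before averaging; that variant is not shown here.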

4.3. Experimental Environment and Parameter Settings

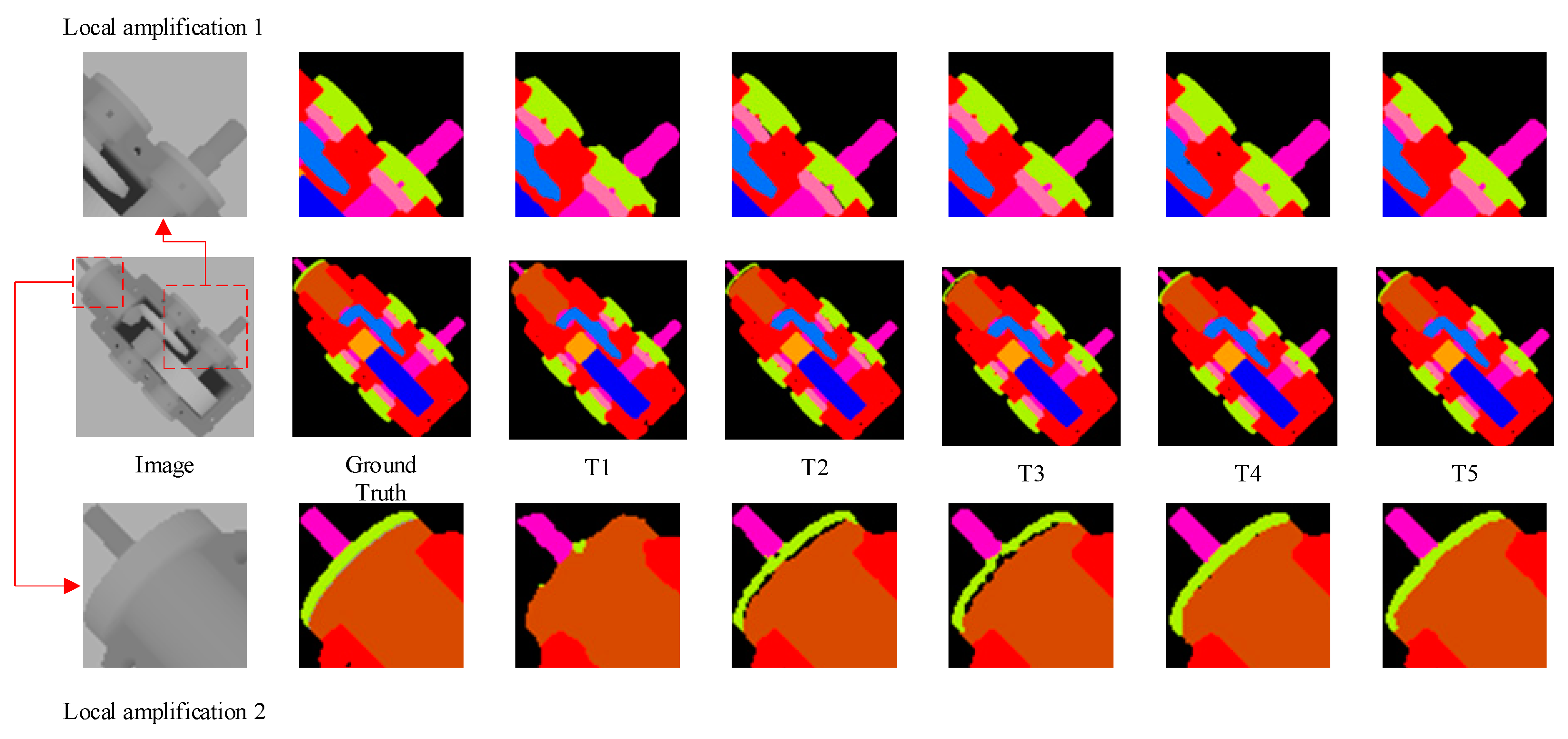

4.4. Ablation Experiments

4.5. Comparison Experiments on the Assembly Image Dataset

4.6. Comparison Experiments on Public Datasets

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Methods | PA/% | MPA/% | F1/% | MIoU/% | Time/s |
|---|---|---|---|---|---|
| T1 | 99.465 | 96.936 | 98.108 | 93.245 | 0.087 |
| T2 | 99.837 | 98.473 | 99.307 | 97.000 | 0.109 |
| T3 | 99.841 | 98.841 | 99.318 | 97.348 | 0.113 |
| T4 | 99.870 | 98.937 | 99.341 | 97.525 | 0.180 |
| T5 (Ours) | 99.886 | 99.043 | 99.401 | 97.728 | 0.181 |

| Methods | PA/% | MPA/% | F1/% | MIoU/% | Time/s |
|---|---|---|---|---|---|
| T1 | 99.438 | 94.231 | 97.619 | 89.676 | 0.150 |
| T2 | 99.672 | 97.883 | 98.580 | 95.081 | 0.188 |
| T3 | 99.674 | 97.956 | 98.674 | 95.199 | 0.199 |
| T4 | 99.668 | 97.986 | 98.703 | 95.370 | 0.238 |
| T5 (Ours) | 99.684 | 97.808 | 98.883 | 95.412 | 0.237 |

| Methods | PA/% | MPA/% | F1/% | MIoU/% |
|---|---|---|---|---|
| AdvSemiSeg | 99.465 | 96.936 | 98.108 | 93.245 |
| AdvSemiSeg + ASFF | 99.837 | 98.473 | 99.307 | 97.000 |
| AdvSemiSeg + RFASPP | 99.264 | 95.178 | 97.450 | 91.388 |
| AdvSemiSeg + CoordConv | 99.160 | 94.500 | 96.957 | 90.560 |
| AdvSemiSeg + spectral normalization | 99.259 | 93.913 | 97.684 | 92.503 |

| Network | Batch Size | Iterations | Pretrained Weights | PA/% | F1/% | MIoU/% (background excluded) |
|---|---|---|---|---|---|---|
| AdvSemiSeg | 2 | 20,000 | COCO | 99.465 | 98.108 | 93.245 |
| S4GAN | 2 | 40,000 | COCO | 99.373 | 98.181 | 92.918 |
| ClassMix | 2 | 40,000 | COCO | 99.192 | 96.945 | 90.968 |
| PS-MT | 2 | 61,776 | COCO | 99.504 | 98.076 | 93.523 |
| U2PL | 2 | 78,560 | COCO | 99.659 | 98.440 | 94.123 |
| AdvSemiSeg-MA (Ours) | 2 | 20,000 | COCO | 99.886 | 99.401 | 97.728 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wu, S.; Chen, C.; Wang, J. Mechanical Assembly Monitoring Method Based on Semi-Supervised Semantic Segmentation. Appl. Sci. 2023, 13, 1182. https://doi.org/10.3390/app13021182
