Abstract

Lip reading has attracted increasing attention recently owing to advances in deep learning. However, most research targets English datasets, and the study of Chinese lip-reading technology is still in its initial stage. Firstly, in this paper, we expand the naturally distributed word-level Chinese dataset called ‘Databox’ previously built by our laboratory. Secondly, the current state-of-the-art model consists of a residual network and a temporal convolutional network. The residual network incurs excessive computational cost and is not suitable for on-device applications, so in the new model it is replaced with ShuffleNet, an extremely computation-efficient Convolutional Neural Network (CNN) architecture. Thirdly, to help the network focus on the most useful information, we insert a simple but effective attention module called the Convolutional Block Attention Module (CBAM) into ShuffleNet. In our experiments, we compare several model architectures and find that our model achieves accuracy comparable to that of the residual network (3.5 GFLOPs) with a computational budget of 1.01 GFLOPs.

1. Introduction

Lip reading is the task of recognizing speech content in a video based only on visual information. It lies at the intersection of computer vision and natural language processing and can be applied in a wide range of scenarios, such as human-computer interaction, public security, and speech recognition. Depending on the mode of recognition, lip reading can be divided into audio-visual speech recognition (AVSR) and visual speech recognition (VSR). AVSR uses the image-processing capabilities of lip reading to aid speech recognition systems, whereas VSR transcribes speech using only visual information, interpreting the movements of the lips, tongue, and teeth. Depending on the object of recognition [1], lip reading can be divided into isolated and continuous lip recognition methods. Isolated methods target numbers, letters, words, or phrases, which can be visually classified into a limited number of categories. Continuous methods target phonemes, visemes (the basic units of visual speech information) [2], and visually indistinguishable characters.

In 2016, Google and the University of Oxford designed and implemented the first sentence-level lip recognition model, LipNet [3]. Burton et al. [4] combined a CNN with an LSTM for lip recognition to address complex speech recognition problems that HMM-based approaches could not solve. In 2019, as the attention mechanism was introduced into lip recognition, Lu et al. [5] proposed a lip-reading recognition system based on a CNN-Bi-GRU-Attention fusion neural network, which reached a final recognition accuracy of 86.8%. In 2021, Hussein et al. [6] improved this fusion model and proposed HLR-Net, which is mainly composed of an Inception-Bi-GRU-Attention fusion model and uses the CTC loss function to align inputs with outputs; its recognition accuracy reached 92%.

However, the research mentioned above uses English datasets, and the development of Chinese lip-reading technology is still in its initial stage. Chinese is more complex than English, whose writing consists only of letters: Chinese Pinyin has more than 1000 pronunciation combinations, and there are more than 9000 Chinese characters. Moreover, the scarcity of Chinese datasets makes lip reading even more challenging.

Additionally, in recent years, building deeper and larger neural networks has been the primary trend in major visual tasks [7,8,9], and such networks require billions of FLOPs. This high computational cost limits the practical deployment of lip-reading models.

In this paper, we propose a deep-learning model designed for on-device applications and evaluate it on our self-built dataset, ‘Databox’. Our model improves on the current state-of-the-art methodology, which consists of a ResNet network and a Temporal Convolutional Network (TCN): we replace ResNet with a lightweight convolutional network, ShuffleNet, into which we plug an attention module called the Convolutional Block Attention Module (CBAM). In Section 2, we describe each part of the model in detail. In Section 3, we present and analyze the experimental results. In Section 4, we conclude that our architecture reduces computation while maintaining comparable accuracy, which makes it suitable for mobile platforms with limited computing power, such as drones, robots, and phones.

2. Lip-Reading Model Architecture

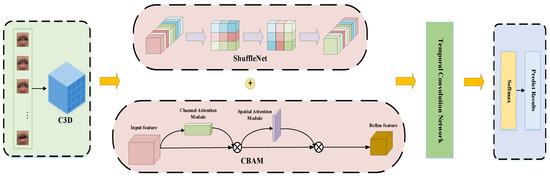

Lip reading, which recognizes speech content from the motion of the speaker’s lips, is one of the most challenging problems in artificial intelligence. With the development of artificial intelligence [10,11,12], traditional lip-reading methods are gradually being replaced by deep-learning methods. Functionally, a lip-reading model mainly consists of a frontend network and a backend network. Frontend networks include Xception [13], MobileNet [14,15], ShuffleNet [16,17], VGGNet, GoogLeNet, ResNet, and DenseNet [18], while backend networks include the Temporal Convolutional Network [19,20], Long Short-Term Memory (LSTM) [21], and the Gated Recurrent Unit (GRU) [22]. The attention mechanism aims to allocate resources better by focusing processing on the more important information in a feature map. Our network is composed of ShuffleNet, CBAM, and TCN, with the structure shown in Figure 1.

Figure 1.

The architecture of the lip-reading model. Given a video sequence, we extract 29 consecutive single-channel (grayscale) frames. The frames first pass through a 3D convolution with a 5 × 7 × 7 kernel, called C3D. A ShuffleNet network with the inserted attention module CBAM then performs spatial downsampling. Finally, the sequence of feature vectors is fed into the Temporal Convolutional Network for temporal downsampling, followed by a SoftMax layer.

The model consists of five parts:

Input: we use the dlib library to detect 68 facial landmarks, crop the lip area, and extract 29 consecutive frames from the video sequence. The frames then pass through a simple C3D network for generic feature extraction.

CNN: ShuffleNet performs spatial downsampling of each frame.

CBAM: the Convolutional Block Attention Module includes two independent modules that focus on important information in the channel dimension and the spatial dimension, respectively.

TCN: models the output of the frontend network and learns long-term dependencies across consecutive frames.

Output: lastly, the output of the backend is passed to a SoftMax layer to classify the word.
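The lip-cropping step of the Input stage can be sketched in a few lines. This is a minimal sketch, assuming the landmarks come from dlib's standard 68-point predictor, whose mouth points are the 0-based indices 48 to 67; the function name `lip_bounding_box` and the `margin` parameter are hypothetical, since the paper does not say how much context around the lips is kept. With dlib, the landmark list could be built as `[(shape.part(i).x, shape.part(i).y) for i in range(68)]`, where `shape` is the predictor's output.

```python
# Mouth landmarks in the 68-point scheme used by dlib's standard
# 68-landmark shape predictor: 0-based indices 48..67.
MOUTH_IDX = range(48, 68)


def lip_bounding_box(landmarks, margin=0.15, frame_w=None, frame_h=None):
    """Return (x0, y0, x1, y1) around the mouth landmarks.

    landmarks: sequence of 68 (x, y) integer pairs.
    margin: hypothetical padding, as a fraction of the mouth width/height.
    """
    xs = [landmarks[i][0] for i in MOUTH_IDX]
    ys = [landmarks[i][1] for i in MOUTH_IDX]
    x0, x1, y0, y1 = min(xs), max(xs), min(ys), max(ys)
    pad_x = int(round((x1 - x0) * margin))
    pad_y = int(round((y1 - y0) * margin))
    x0, y0, x1, y1 = x0 - pad_x, y0 - pad_y, x1 + pad_x, y1 + pad_y
    # Clamp the box to the frame so the crop never leaves the image.
    x0, y0 = max(x0, 0), max(y0, 0)
    if frame_w is not None:
        x1 = min(x1, frame_w - 1)
    if frame_h is not None:
        y1 = min(y1, frame_h - 1)
    return x0, y0, x1, y1
```

The resulting box would then be cut out of every frame and resized (for example with OpenCV) to the 112 × 112 input size; using one box per frame rather than one per clip is a design choice the sketch leaves to the caller.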

2.1. ShuffleNet

With the development of deep-learning technology, convolutional neural networks (CNNs) have advanced rapidly in recent years. A CNN is a multilayer network, loosely inspired by the structure of the brain’s visual system, that can perform supervised learning and recognition directly from images. CNNs are mainly composed of the following types of layers: input, convolutional, ReLU, pooling, and fully connected layers. Stacking these layers together yields a complete convolutional neural network. CNNs also handle video well, since a video is a sequence of images, and they are widely deployed in autonomous driving, security, medicine, and other fields.

However, as neural networks become deeper, their computational complexity increases significantly, which has motivated lightweight architecture designs such as MobileNet V2, ShuffleNet V1, ShuffleNet V2, and Xception. The accuracy and speed of these models under the same FLOPs are shown in Table 1.

Table 1.

Measurement of accuracy and GPU speed of four different lightweight models with the same level of FLOPs on COCO object detection. (FLOPs: floating-point operations).

Table 1 shows that ShuffleNet V2 has the highest accuracy and the fastest speed of the four architectures. Therefore, we use ShuffleNet V2 as the frontend of our model. It builds on two operations introduced by ShuffleNet, namely pointwise group convolution and channel shuffle, which greatly reduce computational cost with little loss of recognition accuracy.

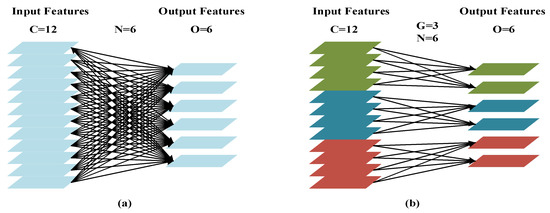

2.1.1. Group Convolution

Group convolution was first used in AlexNet to distribute the network over two GPUs, and its effectiveness was later demonstrated in ResNeXt [23]. Conventional convolution uses channel-dense connections: each kernel convolves over all channels of the input features.

For conventional convolution, let the kernel width and height be K and the number of input channels be C; with N kernels, the output has N channels. The number of parameters (ignoring biases) is calculated as follows:

P(CC) = K × K × C × N (parameters)

(CC: Conventional Convolution)

For group convolution, the input channels are divided into G groups, so each kernel spans only C/G input channels; the outputs of the G groups (N/G kernels each) are concatenated into an output feature map with N channels. The number of parameters is calculated as follows:

P(GC) = K × K × C/G × N (parameters)

(GC: Group Convolution)

From the above formulas, the number of parameters in group convolution is 1/G of that in conventional convolution.
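The two formulas can be checked with a short calculation. The helper below is a hypothetical sketch that counts weights only (biases ignored), and the layer size (3 × 3 kernels, 240 input and output channels, G = 3) is an arbitrary illustration rather than a layer from this model.

```python
def conv_params(k, c_in, n_out, groups=1):
    """Weights of a K x K convolution, biases ignored.

    groups=1 gives P(CC) = K*K*C*N; groups=G gives P(GC) = K*K*(C/G)*N.
    """
    if c_in % groups or n_out % groups:
        raise ValueError("C and N must both be divisible by G")
    return k * k * (c_in // groups) * n_out


# Hypothetical layer: 3x3 kernels, 240 input channels, 240 output channels.
p_cc = conv_params(3, 240, 240)            # conventional convolution
p_gc = conv_params(3, 240, 240, groups=3)  # group convolution with G = 3
print(p_cc, p_gc, p_cc // p_gc)  # 518400 172800 3
```

The ratio printed last is exactly G, as the formulas predict.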

The conventional convolution and group convolution are illustrated in Figure 2.

Figure 2.

(a) Conventional Convolution (b) Group Convolution. C, N, G, and O correspond to the numbers of the channel, kernel, group, and output, respectively.

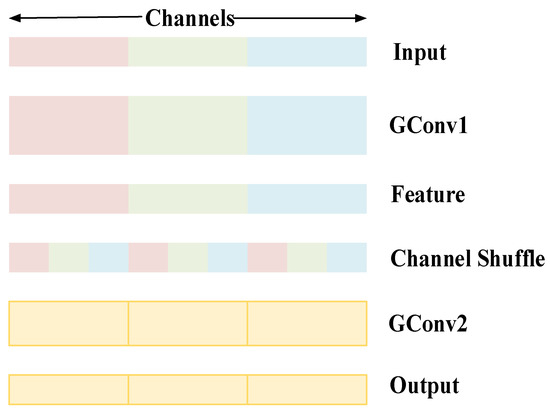

2.1.2. Channel Shuffle

However, group convolution also means that each output channel depends only on the input channels in its own group, so information is no longer exchanged between groups. ShuffleNet therefore performs a channel shuffle on the output features, which lets information flow across groups without adding parameters or floating-point operations. The process of the channel shuffle is shown in Figure 3.

Figure 3.

The process of the Channel Shuffle. The GConv2 layer is allowed to obtain features of different groups from the GConv1 layer due to the Channel Shuffle layer. That means the input and output channels will be fully related. (GConv: Group Convolution).
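The shuffle itself is only a reindexing of channels: reshape the C channels into a G × (C/G) matrix, transpose it, and flatten it again. The sketch below applies this to a list of channel labels so the interleaving is visible; the function name is hypothetical, and in a tensor framework the same three steps would be applied to the channel axis of the feature map.

```python
def channel_shuffle(channels, groups):
    """Reshape (G, C/G) -> transpose -> flatten, as in ShuffleNet."""
    c = len(channels)
    if c % groups:
        raise ValueError("number of channels must be divisible by groups")
    per_group = c // groups
    # Row g of the (G, C/G) matrix holds the channels produced by group g.
    rows = [channels[g * per_group:(g + 1) * per_group] for g in range(groups)]
    # Reading the matrix column by column interleaves the groups.
    return [rows[g][i] for i in range(per_group) for g in range(groups)]


labels = ["a1", "a2", "b1", "b2", "c1", "c2"]  # 3 groups x 2 channels
print(channel_shuffle(labels, 3))
# ['a1', 'b1', 'c1', 'a2', 'b2', 'c2']
```

After the shuffle, every consecutive block of G channels contains one channel from each group, so the next group convolution sees features from all groups.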

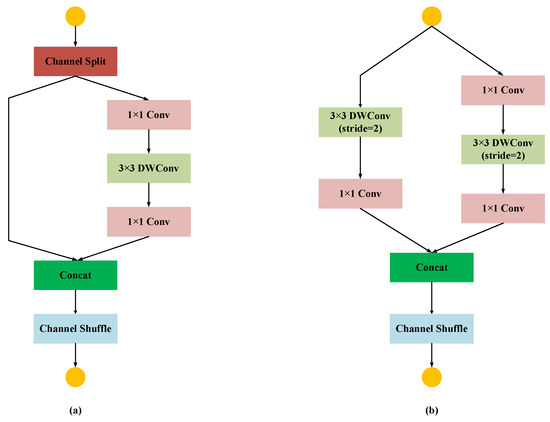

2.1.3. ShuffleNet V2 Unit

As shown in Figure 4a, in ShuffleNet V2 unit 1, a channel split is first performed on the input feature map, dividing it equally into two branches. The left branch remains unchanged, whereas the right branch undergoes three convolutions (a 1 × 1 convolution, a 3 × 3 depthwise convolution, and another 1 × 1 convolution). The two branches are then concatenated to fuse their features. Finally, a channel shuffle exchanges information between the two branches.

Figure 4.

(a) ShuffleNet V2 Unit 1 (b) ShuffleNet V2 Unit 2. ShuffleNet V2 is an effective lightweight deep-learning network with only 2M parameters. It combines the idea of group convolution from AlexNet with channel shuffle, which not only greatly reduces the number of model parameters but also improves the robustness of the model. (DWConv [24]: Depthwise Convolution).

As shown in Figure 4b, in ShuffleNet V2 unit 2, the channels are not split at the beginning; the feature map is fed directly into both branches. Both branches use a 3 × 3 depthwise convolution with stride 2 to downsample the feature map. The outputs of the two branches are then concatenated, followed by a channel shuffle.
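A rough shape trace of the two units may make the data flow concrete. The helper names are hypothetical, the code only tracks tensor shapes (no weights), and it assumes 3 × 3 kernels with padding 1 and, for unit 2, that each branch outputs half of the target channel count, as in the ShuffleNet V2 design. The 28 × 28 × 24 input and the 48-channel stage are illustrative values, not the configuration used in this paper.

```python
def conv_out(size, kernel=3, stride=1, pad=1):
    """Output length along one spatial axis for a zero-padded convolution."""
    return (size + 2 * pad - kernel) // stride + 1


def unit1_shape(h, w, c):
    """Basic unit (Figure 4a): split channels, transform only the right half."""
    left_c = c // 2            # identity branch keeps its half unchanged
    right_c = c - left_c       # 1x1 conv -> 3x3 DWConv -> 1x1 conv, stride 1
    oh, ow = conv_out(h), conv_out(w)  # stride 1, padding 1: size preserved
    return (oh, ow, left_c + right_c)  # concat, then channel shuffle


def unit2_shape(h, w, c_out):
    """Downsampling unit (Figure 4b): no split, stride-2 DWConv in both branches."""
    oh, ow = conv_out(h, stride=2), conv_out(w, stride=2)
    branch_c = c_out // 2      # assumed: each branch emits half the output channels
    return (oh, ow, 2 * branch_c)  # concat, then channel shuffle


# Hypothetical stage: 28x28x24 input, 48 output channels, then 3 basic units.
shape = unit2_shape(28, 28, 48)
for _ in range(3):
    shape = unit1_shape(*shape)
print(shape)  # (14, 14, 48)
```

Only unit 2 changes the spatial size, which is why a stage starts with one downsampling unit and then repeats the cheaper basic unit.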

2.2. Convolutional Block Attention Module

In image processing, a feature map contains a variety of information. A traditional convolutional neural network treats all channels in the same way, but the importance of the information varies greatly across channels; treating every channel equally can therefore reduce the precision of the network.

To improve the feature extraction of convolutional neural networks, Woo et al. [25] proposed the Convolutional Block Attention Module (CBAM) in 2018. CBAM is a simple and effective attention module for feedforward convolutional neural networks that contains two independent sub-modules, the Channel Attention Module (CAM) and the Spatial Attention Module (SAM), which perform channel and spatial attention, respectively. Because the module can be seamlessly integrated into any CNN architecture and trained end-to-end with the base CNN, they added it to classical networks such as ResNet and MobileNet. Their analysis showed that attention in the spatial and channel dimensions improved network performance to a certain extent.

Given an intermediate feature map, CBAM sequentially computes attention maps along two independent dimensions (channel and space) and multiplies each attention map with its input feature map for adaptive feature refinement.

By evaluating the importance of both the channels and the spatial positions of the feature map, CBAM determines the attention region, suppressing irrelevant background information and emphasizing the information of the target to be detected.

2.2.1. Channel Attention Module

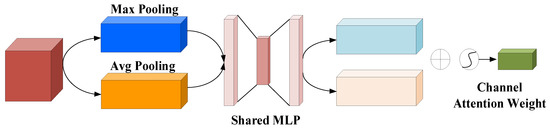

In the channel attention module, the input feature map (H × W × C) is processed separately by global max pooling and global average pooling over its height and width, which compresses the spatial information and produces two feature maps of size 1 × 1 × C. Each is fed into a shared two-layer neural network with one hidden layer: the first layer has C/r neurons (r is the channel reduction ratio, set to r = 16) followed by a ReLU activation, and the second layer has C neurons. The two outputs are then summed element-wise, and a Hard-Sigmoid activation produces the channel attention map (1 × 1 × C); after this map is multiplied with the input feature map, the result is fed into the spatial attention module. For a single image, channel attention focuses on what in the image is important. During gradient backpropagation, average pooling gives feedback to every pixel of the feature map, whereas max pooling gives feedback only where the response in the feature map is strongest. The channel attention module is shown in Figure 5.

Figure 5.

The channel attention module. The module uses both Max Pooling outputs and Avg Pooling outputs with a shared network.
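The channel attention steps above can be sketched in plain Python on a tiny feature map stored as `fmap[c][h][w]`. This is an illustrative sketch, not the authors' implementation: the helper names are hypothetical, the MLP weights are passed in explicitly instead of being learned, and Hard-Sigmoid is assumed to be the piecewise-linear form clip(0.2x + 0.5, 0, 1).

```python
def hard_sigmoid(x):
    # Assumed piecewise-linear form clip(0.2 * x + 0.5, 0, 1); other
    # libraries use slightly different slopes.
    return min(1.0, max(0.0, 0.2 * x + 0.5))


def dense(vec, weights, bias):
    """Fully connected layer: weights is a list of rows (one per output unit)."""
    return [sum(w * v for w, v in zip(row, vec)) + b for row, b in zip(weights, bias)]


def channel_attention(fmap, w1, b1, w2, b2):
    """Channel attention on fmap[c][h][w] with a shared C -> C/r -> C MLP.

    Returns (weights, refined): one weight per channel, and fmap with every
    channel scaled by its weight.
    """
    # Global average and max pooling over height and width: two C-vectors.
    avg = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in fmap]
    mx = [max(max(row) for row in ch) for ch in fmap]

    def shared_mlp(v):
        hidden = [max(0.0, z) for z in dense(v, w1, b1)]  # C -> C/r, ReLU
        return dense(hidden, w2, b2)                       # C/r -> C

    # The same MLP processes both descriptors; outputs are summed element-wise.
    logits = [a + m for a, m in zip(shared_mlp(avg), shared_mlp(mx))]
    weights = [hard_sigmoid(z) for z in logits]
    refined = [[[x * wt for x in row] for row in ch] for ch, wt in zip(fmap, weights)]
    return weights, refined


fmap = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 0.0], [0.0, 0.0]],
        [[-1.0, 1.0], [1.0, -1.0]], [[5.0, 5.0], [5.0, 5.0]]]  # C=4, H=W=2
w1 = [[1.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 1.0]]      # C -> C/r with r = 2
w2 = [[1.0, 0.0], [0.0, 0.0], [0.0, 0.0], [0.0, 1.0]]  # C/r -> C
weights, refined = channel_attention(fmap, w1, [0.0, 0.0], w2, [0.0] * 4)
print(weights)  # [1.0, 0.5, 0.5, 1.0]
```

The toy example uses r = 2 only because C = 4; with r = 16 as in this model, w1 would have C/16 rows. With all-zero MLP weights every logit is 0, so each channel weight is 0.5 and the refined map is the input scaled by one half.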

2.2.2. Spatial Attention Module

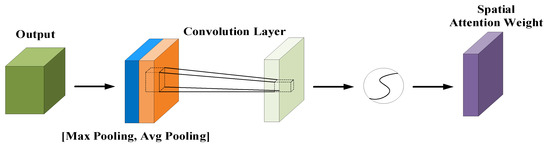

In the spatial attention module, the feature map output by the CAM is compressed along the channel axis by max pooling and average pooling separately, yielding two feature maps of size H × W × 1. These are concatenated, and a 7 × 7 convolution reduces them to a single-channel spatial attention map (H × W × 1). Whereas channel attention focuses on what is important, spatial attention focuses on where the informative parts are. The spatial attention module is shown in Figure 6.

Figure 6.

The spatial attention module. The module uses two outputs from Max Pooling and Avg Pooling which are pooled along the channel axis and passes them to a convolution layer.
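Likewise, the spatial attention module can be sketched on the same `fmap[c][h][w]` layout. The sketch assumes "same" zero padding so the attention map stays H × W, stacks the pooled maps in the [average; max] order of the original CBAM paper, and uses the standard sigmoid from that paper; because the channel module in this work uses Hard-Sigmoid, the activation here is an assumption. The function name is hypothetical and the kernel is supplied by the caller rather than learned.

```python
import math


def spatial_attention(fmap, kernel, bias=0.0):
    """Spatial attention on fmap[c][h][w].

    kernel[2][k][k] holds the weights for the (average, max) pooled maps.
    Returns (att, refined): the H x W attention map and the rescaled fmap.
    """
    C, H, W = len(fmap), len(fmap[0]), len(fmap[0][0])
    # Pool along the channel axis: two H x W maps.
    avg = [[sum(fmap[c][i][j] for c in range(C)) / C for j in range(W)] for i in range(H)]
    mx = [[max(fmap[c][i][j] for c in range(C)) for j in range(W)] for i in range(H)]
    pooled = [avg, mx]  # concatenation -> 2 x H x W
    k = len(kernel[0])
    pad = k // 2  # "same" zero padding keeps the H x W size
    att = [[0.0] * W for _ in range(H)]
    for i in range(H):
        for j in range(W):
            s = bias
            for p in range(2):
                for di in range(k):
                    for dj in range(k):
                        y, x = i + di - pad, j + dj - pad
                        if 0 <= y < H and 0 <= x < W:
                            s += kernel[p][di][dj] * pooled[p][y][x]
            att[i][j] = 1.0 / (1.0 + math.exp(-s))  # sigmoid
    # Every channel is multiplied by the same spatial map.
    refined = [[[fmap[c][i][j] * att[i][j] for j in range(W)] for i in range(H)]
               for c in range(C)]
    return att, refined


fmap = [[[0.0, 1.0], [2.0, -1.0]], [[3.0, 0.0], [0.0, 0.0]]]  # C=2, H=W=2
kernel = [[[0.0] * 7 for _ in range(7)] for _ in range(2)]   # 7x7, as in CBAM
kernel[1][3][3] = 1.0  # centre tap on the max-pooled map only
att, refined = spatial_attention(fmap, kernel)
print(att[1][1])  # 0.5, since the channel-wise maximum at (1, 1) is 0
```

The nested loops implement cross-correlation, which is what deep-learning frameworks call convolution; a real layer would learn all 2 × 7 × 7 weights instead of the single centre tap used here.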

2.3. Temporal Convolutional Network

Sequence modeling has commonly been associated with Recurrent Neural Networks (RNNs), such as LSTM and GRU. However, these models have bottlenecks; for example, they process a sequence step by step, which limits parallel computation. Only recently have researchers begun to reconsider CNNs for sequence modeling, finding that certain convolutional architectures, whose capability had been underestimated, can achieve better performance on many tasks. In 2018, Shaojie Bai et al. [8] proposed the Temporal Convolutional Network (TCN), a convolutional architecture for sequence modeling.

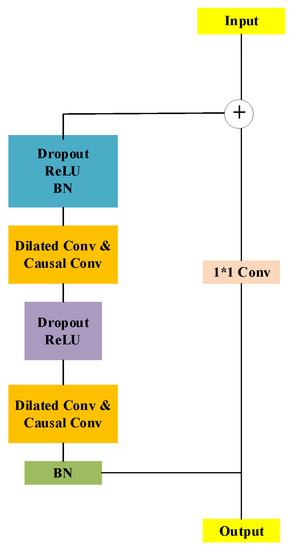

Compared with conventional one-dimensional convolution, TCN employs two different operations: causal convolution and dilated convolution. These make it well suited to sequential data, because it respects temporal order and has a large receptive field. In addition, TCN uses residual connections, a simple yet very effective technique that makes deep neural networks easier to train. The structure of the TCN is shown in Figure 7.

Figure 7.

The structure of the Temporal Convolutional Network. Each residual block is built from dilated causal convolution, weight normalization, dropout, and an optional 1 × 1 convolution.

2.3.1. Causal Convolution

Causal convolution is designed for temporal data and ensures that the model cannot violate the order in which the data are modeled: the prediction for a given element of the signal depends only on the elements that precede it. That means only the “past” can influence the “future”. This differs from a conventional filter, which looks into the future as well as the past as it slides over the data.

It can be used for synthesis as follows, where 0s represent unknown values:

x1 ← sample(f1(0, …, 0))

x2 ← sample(f2(x1, 0, …, 0))

x3 ← sample(f3(x1, x2, 0, …, 0))

…

xT ← sample(fT(x1, x2, …, xT−1, 0))

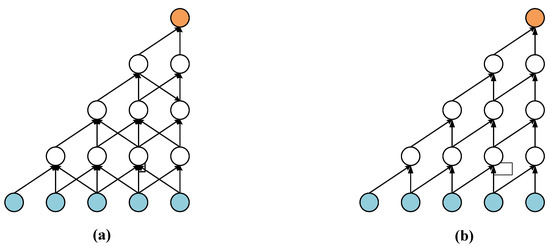

The processes of standard convolution and causal convolution are shown in Figure 8a,b.

Figure 8.

(a) Standard Convolution and (b) Causal Convolution. Standard convolution does not take the direction of time into account, whereas causal convolution moves the kernel in one direction only, so each output depends only on current and past inputs.
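A causal convolution can be obtained from an ordinary one by padding only on the left, so that output step t reads inputs at t and earlier. The sketch below, using the hypothetical helper `causal_conv1d`, makes this explicit for a single channel; `kernel[0]` weights the current step, and the `dilation` argument anticipates the next subsection.

```python
def causal_conv1d(x, kernel, dilation=1):
    """1-D causal convolution with implicit left zero padding.

    y[t] = sum_i kernel[i] * x[t - i * dilation], with x[n] = 0 for n < 0,
    so y[t] never reads x[t + 1], x[t + 2], ...
    """
    y = []
    for t in range(len(x)):
        s = 0.0
        for i, w in enumerate(kernel):
            idx = t - i * dilation
            if idx >= 0:  # positions before the sequence start count as 0
                s += w * x[idx]
        y.append(s)
    return y


x = [1.0, 2.0, 3.0, 4.0]
print(causal_conv1d(x, [1.0, 1.0]))  # [1.0, 3.0, 5.0, 7.0]
```

The output has the same length as the input, and changing the last input leaves all earlier outputs untouched, which is the "only the past influences the future" property described above.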

2.3.2. Dilated Convolution

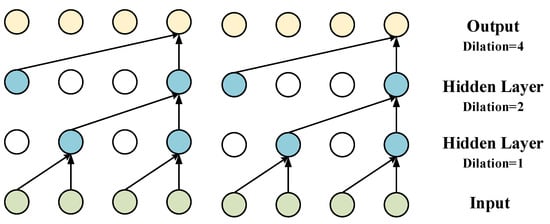

Dilated convolution is also known as atrous convolution. The idea behind dilated convolution is to “inflate” the kernel so that it skips some input points. In a network made up of multiple layers of dilated convolutions, the dilation rate is typically increased exponentially from layer to layer. While the number of parameters grows only linearly with the number of layers, the effective receptive field grows exponentially. As a result, dilated convolution enlarges the receptive field without the computational cost of a correspondingly larger kernel or deeper network. The process of dilated convolution is illustrated in Figure 9.

Figure 9.

The process of dilated convolution. Dilated convolution applies the filter over a region larger than itself by skipping a certain number of inputs, which allows the network to have a large receptive field.
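The linear-versus-exponential growth described above can be checked numerically. For stride-1 convolutions with kernel size k, each layer with dilation d extends the receptive field by (k − 1)·d steps. The sketch compares plain and exponentially dilated stacks with k = 3; the helper names are hypothetical, and the numbers count one convolution per dilation level (the TCN residual block of Bai et al. stacks two, which doubles the (k − 1)·Σd term).

```python
def receptive_field(kernel_size, dilations):
    """Receptive field (in time steps) of stacked stride-1 1-D convolutions."""
    return 1 + (kernel_size - 1) * sum(dilations)


def weight_count(kernel_size, num_layers):
    """Weights per input/output channel pair: grows linearly with depth."""
    return kernel_size * num_layers


k = 3
for layers in range(1, 5):
    dilated = [2 ** i for i in range(layers)]  # 1, 2, 4, 8
    plain = [1] * layers
    print(layers, weight_count(k, layers),
          receptive_field(k, plain), receptive_field(k, dilated))
# 1 3 3 3
# 2 6 5 7
# 3 9 7 15
# 4 12 9 31
```

With four dilated layers the receptive field (31 steps) already exceeds the 29 frames used in this paper, whereas four plain layers see only 9; this configuration is illustrative, since the exact TCN depth and kernel size are not given here.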

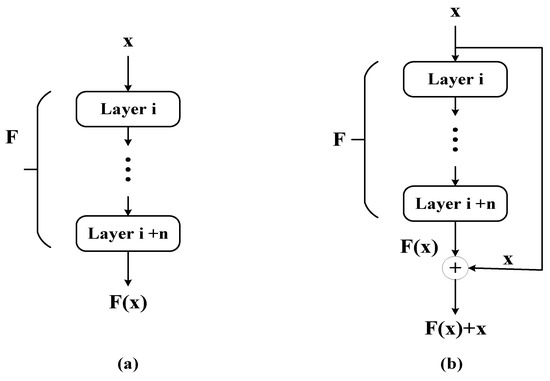

2.3.3. Residual Connection

In traditional feedforward neural networks, data flow through each layer sequentially. As such networks become deeper to achieve better accuracy and performance, they tend to suffer from exploding and vanishing gradients. To make training converge more easily, a residual network adds a shortcut path that carries a block’s input directly to its output, bypassing the intermediate layers, so that the block computes y = F(x) + x. Residual connections have been widely adopted by many models, such as ResNet for image processing, the Transformer for natural language processing, and AlphaFold for protein structure prediction. Feedforward computation without and with a residual connection is shown in Figure 10a,b.

Figure 10.

(a) Feedforward without the residual connection. (b) Feedforward with the residual connection. A residual connection is a kind of skip connection that learns residual functions with respect to the layer inputs rather than learning unreferenced functions.
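Combining these pieces, a TCN residual block computes ReLU(x + F(x)), where F is a small stack of dilated causal convolutions. The single-channel sketch below follows that pattern; it omits weight normalization, dropout, and the optional 1 × 1 convolution (which is only needed when the input and output channel counts differ), and all names are hypothetical.

```python
def causal_conv1d(x, kernel, dilation=1):
    # y[t] depends only on x[t], x[t - d], x[t - 2d], ... (zeros before t = 0)
    return [sum(w * x[t - i * dilation] for i, w in enumerate(kernel)
                if t - i * dilation >= 0) for t in range(len(x))]


def relu(v):
    return [max(0.0, a) for a in v]


def tcn_residual_block(x, k1, k2, dilation):
    """Single-channel sketch: output = ReLU(x + F(x)).

    F is two dilated causal convolutions, each followed by ReLU. Weight
    normalization, dropout and the optional 1x1 convolution are omitted.
    """
    h = relu(causal_conv1d(x, k1, dilation))
    f = relu(causal_conv1d(h, k2, dilation))
    return relu([a + b for a, b in zip(x, f)])  # identity shortcut


print(tcn_residual_block([1.0, 2.0, 3.0], [1.0], [1.0], 1))  # [2.0, 4.0, 6.0]
```

If both kernels are zero, F(x) = 0 and the block reduces to ReLU(x); this is why residual blocks are easy to optimize, since an identity-like mapping is trivial to represent.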

3. Experiment

3.1. Dataset

Because Chinese lip recognition technology is still in its initial stage, the available lip-reading datasets remain limited in both quality and quantity. Most current lip-reading datasets are in English; influential ones include the following:

(1). The AVLetters dataset is the first audio-visual speech dataset. It contains 10 speakers, each of whom utters the 26 English letters three times, for a total of 780 utterances.

(2). The XM2VTS dataset includes 295 volunteers, each of whom reads two digit sequences and a phonetically balanced sentence (10 digits and 7 words, respectively) at normal speaking speed. There are 7080 utterance instances in total.

(3). The BANCA dataset is recorded in four different languages (English, French, Italian, Spanish) and filmed under three different conditions (controlled, degraded and adverse) with a total of 208 participants and nearly 30,000 utterances.

(4). The OuluVS dataset aims to provide a unified standard for performance evaluation of audio-visual speech recognition systems. It contains 20 participants, each of whom states 10 daily greeting phrases 5 times for a total of 1000 utterance instances.

(5). The LRW dataset is derived from BBC television programs rather than being recorded by volunteers. It covers the 500 most frequent words and contains short video clips of speakers saying these words, with more than 1000 speakers and more than 500,000 utterances. To some extent, the LRW dataset meets the data-volume requirements of deep learning. Existing advanced methods trained on the LRW dataset are shown in Table 2.

Table 2.

Existing methods with different architectures trained on the LRW dataset. (LRW: Lip Reading in the Wild).

However, none of the datasets above targets Chinese lip reading, and Chinese datasets are still needed. Therefore, we use a self-made dataset for our experiments.

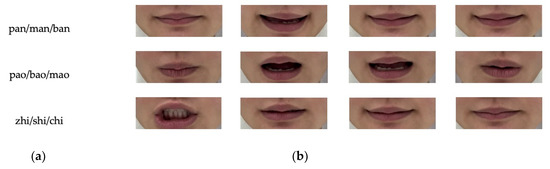

Hanyu Pinyin, often shortened to just pinyin, is the official romanization system for Standard Mandarin Chinese, consisting of basic phonemes (56), consonants (23), and simple vowels (36). These yield 413 potential combinations plus special cases. Taking the four tones of Mandarin into account, there are around 1600 unique syllables.

Moreover, in Mandarin, initials such as ‘b’/‘p’/‘m’, ‘d’/‘t’/‘n’, ‘g’/‘k’/‘h’, and ‘zh’/‘ch’/‘sh’ show no obvious visual difference in lip movement when followed by the same final, as shown in Figure 11.

Figure 11.

(a) Pinyin with similar lip movement (b) corresponding lip movement.

To make the experiment less challenging, we avoid Mandarin words with the same or similar Pinyin and, for the time being, collect common and simple words of mostly two characters from daily life. Once we make progress on the current dataset, we will study more complex Mandarin vocabulary.

We built a dataset called ‘Databox’ in which 80 volunteers each say twenty specific words ten times, including ‘Tai Yang (sun)’, ‘Gong Zuo (work)’, ‘Shui Jiao (sleep)’, ‘Chi Fan (eat)’, ‘Bai Yun (cloud)’, ‘Shun Li (well)’, ‘Zhong Guo (China)’, ‘Dui Bu Qi (sorry)’, ‘Xie Xie (thanks)’, ‘Zai Jian (goodbye)’, ‘Xue Xiao (school)’, ‘Wan Shua (play)’, and so on. In total, there are 16,000 lip-reading videos (80 × 20 × 10).

3.2. Experiment Settings

(1) Data Preprocessing:

Firstly, we collect all the videos and put them into different folders according to their class labels. We use the Python libraries os and glob to list and fetch all files, respectively, and return the videos and labels as NumPy arrays. Then we use the OpenCV library to read 29 frames from each video and crop the lip area by detecting 68 facial landmarks with the dlib library. Afterward, all the frames are resized to 112 × 112, normalized, and converted to grayscale. Video data augmentation is also necessary to compensate for the limited diversity of the data; it includes random crop, random rotation, horizontal flip, vertical flip, and Gaussian blur.
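The file-listing step can be sketched with the same standard-library modules. The sketch assumes one sub-folder per word under a root directory and `.mp4` files; the folder layout, file extension, and function name are assumptions, and converting the results to NumPy arrays and reading frames with OpenCV are left out.

```python
import glob
import os


def index_videos(root, pattern="*.mp4"):
    """List videos stored as root/<class_name>/<clip>, one folder per word.

    Returns (paths, labels, class_names); labels are integer indices into
    class_names, which are sorted so the mapping is reproducible.
    """
    class_names = sorted(
        d for d in os.listdir(root) if os.path.isdir(os.path.join(root, d))
    )
    paths, labels = [], []
    for label, name in enumerate(class_names):
        # glob returns files in arbitrary order; sort for determinism.
        for path in sorted(glob.glob(os.path.join(root, name, pattern))):
            paths.append(path)
            labels.append(label)
    return paths, labels, class_names
```

Sorting both the class folders and the files keeps the label mapping identical across machines and runs, which matters when a model trained on one machine is evaluated on another.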

(2) Parameters Settings:

We use the open-source libraries TensorFlow and Keras, which provide high-level APIs for building and training models. The model is trained on servers with four NVIDIA Titan X GPUs. We split the dataset into a training set and a test set with a ratio of 8:2, set the number of epochs and the batch size to 60 and 32, respectively, and use the Adam optimizer with an initial learning rate of 3 × 10−4. The frontend and backend of the network are pretrained on LRW. Dropout with a probability of 0.5 is applied during training, and the standard cross-entropy loss is used as the training objective.
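The split sizes follow directly from the dataset size. The sketch below performs a seeded random 8:2 split of the 16,000 clips and counts the training steps per epoch, assuming a batch size of 32; the helper name and the seed are hypothetical.

```python
import random


def split_indices(n, train_ratio=0.8, seed=0):
    """Shuffle range(n) reproducibly and cut it into train/test index lists."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    cut = int(n * train_ratio)
    return idx[:cut], idx[cut:]


n_videos = 80 * 20 * 10  # volunteers x words x repetitions = 16,000
train_idx, test_idx = split_indices(n_videos)
batch_size = 32          # assumed batch size
steps_per_epoch = -(-len(train_idx) // batch_size)  # ceiling division
print(len(train_idx), len(test_idx), steps_per_epoch)  # 12800 3200 400
```

Splitting by clip, as here, lets the same volunteer appear in both sets; a speaker-independent evaluation would instead assign all clips of a volunteer to one side of the split.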

3.3. Recognition Results

(1) Comparison with the current State-of-the-Art:

In this section, we compare two other frontend types on our dataset: ResNet-18 and MobileNet v2. ResNet is an extremely deep network built on a residual learning framework, which obtained a 28% relative improvement on the COCO object detection dataset. MobileNet is a small, low-latency, low-power model designed to maximize accuracy under the resource constraints of on-device applications. For a fair evaluation, all the models use the same backend, a Temporal Convolutional Network. The performances of the different models are shown in Table 3.

Table 3.

Performance of different models. The number of channels is scaled for different capacities, marked as 0.5×, 1×, and 2×. Channel widths are the standard ones for ShuffleNet V2, while the base channel width for TCN is 256 channels.

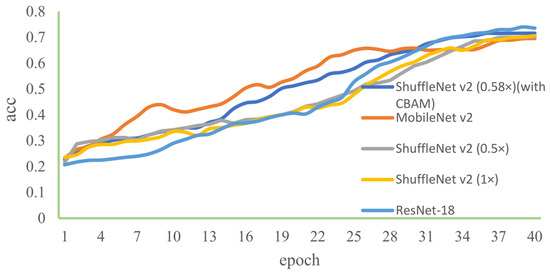

ResNet-18 has the best accuracy but consumes the most computing power. ShuffleNet v2 (1×) [19] and MobileNet v2 achieve similar accuracy and computational complexity on our dataset. When the channel width of ShuffleNet v2 is reduced, its accuracy decreases. However, after the attention module CBAM is inserted, ShuffleNet v2 (0.5×) performs almost as well as ShuffleNet v2 (1×) while requiring fewer FLOPs. In summary, ShuffleNet v2 (0.5×) (CBAM), the model we propose, surpasses MobileNet v2 by 0.7% in recognition accuracy and reduces computation by almost 60% compared with ResNet-18, which has the highest accuracy.

In Figure 12, the x-axis shows the number of training epochs and the y-axis shows the accuracy of the model. The accuracy of ResNet-18 rises most slowly at the beginning because it has the most parameters, although it eventually reaches the best accuracy. ShuffleNet v2 (0.5×) (CBAM) converges after about 30 epochs and achieves the second-highest accuracy among the models.

Figure 12.

Comparison of the accuracy on different models.

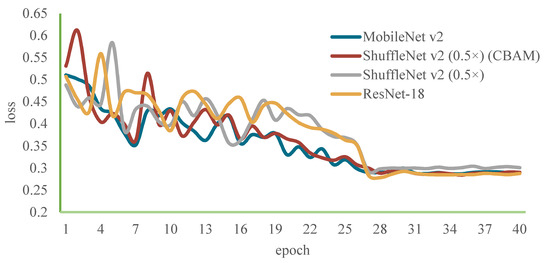

In Figure 13, the x-axis shows the number of training epochs and the y-axis shows the loss, which measures the discrepancy between the predictions and the desired targets. The loss of ResNet-18 decreases the fastest of the four architectures because it has the largest number of parameters. After about 30 epochs, the loss of ShuffleNet v2 (0.5×) (CBAM) changes more slowly and becomes stable, which implies that the model fits the data well and its parameters have converged. Compared with ShuffleNet v2 (0.5×), ShuffleNet v2 (0.5×) (CBAM) has a lower loss. ShuffleNet v2 (0.5×) (CBAM) has a loss similar to that of MobileNet v2 but a faster GPU speed, as discussed with Table 1.

Figure 13.

Comparison of loss on different models.

Table 4 shows part of the results of different models on selected words.

Table 4.

Partial results of different models on selected words.

(2) Comparison of different lengths of the input frame:

In the experiments above, we always extracted 29 consecutive frames from a video, with the target word at the center of the sequence. However, in a practical scenario it is unrealistic to assume that the word is always centered in this way. To address this problem, we design two additional experiments: in the first, we remove N frames (N = 0 to 4) from the test sequences to evaluate a model trained on fixed-length sequences; in the second, we train the model on variable-length sequences to test how robust it is.

As shown in Table 5, both experiments are conducted on ShuffleNet v2 with CBAM. When frames are removed during testing, the model trained on fixed-length sequences is significantly affected, and its performance degrades as more frames are removed. As expected, the model trained with variable-length augmentation is more robust to missing frames.

Table 5.

Performance of the ShuffleNet v2 (0.5×) model with CBAM, trained on fixed-length and variable-length sequences, when N frames are removed at test time.
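The frame-removal test and a variable-length training augmentation can be sketched as follows. The paper does not specify how frames are chosen for removal, so this sketch simply drops N randomly chosen frames while keeping the rest in order; the function names and the `max_drop = 4` default (matching the N = 0 to 4 range above) are assumptions.

```python
import random


def drop_frames(frames, n, rng=None):
    """Return a copy of frames with n randomly chosen frames removed.

    The remaining frames keep their temporal order.
    """
    if not 0 <= n < len(frames):
        raise ValueError("n must be in [0, len(frames))")
    rng = rng or random.Random()
    removed = set(rng.sample(range(len(frames)), n))
    return [f for i, f in enumerate(frames) if i not in removed]


def variable_length_clip(frames, max_drop=4, rng=None):
    """Training-time augmentation: drop between 0 and max_drop frames."""
    rng = rng or random.Random()
    return drop_frames(frames, rng.randint(0, max_drop), rng)


clip = list(range(29))  # stand-in for 29 frame arrays
print(len(drop_frames(clip, 3, random.Random(1))))  # 26
```

Batches of variable-length clips would additionally need padding or masking before they reach the TCN, since a batch tensor has a single time dimension.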

4. Conclusions

This paper proposes a lip-reading recognition model and evaluates it on our self-built dataset. The current state-of-the-art methodology consists of a ResNet network and a temporal convolutional network. We evaluate and analyze several advanced methodologies and find that, compared with the ResNet network, which has the best accuracy, our model reduces computation by 60% while achieving comparable recognition accuracy. Firstly, we replace the ResNet network with the lightweight network ShuffleNet to suit practical mobile applications. Secondly, we improve performance by inserting an attention module, which helps the network focus on the most important information in the feature maps. Thirdly, we train the model with variable-length and fixed-length frame sequences; the results show that the model trained with variable-length sequences is more robust when random frames are removed, at the cost of some recognition accuracy. Finally, we expand the dataset built by our laboratory, and in the future we will collect more data for Chinese lip-reading research. The Abbreviations section lists the full names of the abbreviations used in this paper.

Author Contributions

Data curation, Y.F.; Software, Y.L. and Y.F.; Supervision, Y.L.; Visualization, R.N.; Writing—review & editing, Y.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Natural Science Foundation of China (61971007 and 61571013).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

| Abbreviation | Full name |
| --- | --- |
| TCN | Temporal Convolutional Network |
| CBAM | Convolutional Block Attention Module |
| CNN | Convolutional Neural Network |
| LSTM | Long Short-Term Memory |
| CTC | Connectionist Temporal Classification |
| LRW | Lip Reading in the Wild |
| GRU | Gated Recurrent Unit |
| DWConv | Depthwise Convolution |
| CAM | Channel Attention Module |
| SAM | Spatial Attention Module |

References

1. Palecek, K. Utilizing lipreading in large vocabulary continuous speech recognition. In Proceedings of the International Conference on Speech and Computer, Hatfield, UK, 12–16 September 2017; Springer: Berlin, Germany; Cham, Switzerland, 2017; pp. 767–776.

2. McGurk, H.; MacDonald, J. Hearing lips and seeing voices. Nature 1976, 264, 746–748.

3. Assael, Y.M.; Shillingford, B.; Whiteson, S.; de Freitas, N. LipNet: End-to-end sentence-level lipreading. arXiv 2016, arXiv:1611.01599.

4. Burton, J.; Frank, D.; Saleh, M.; Navab, N.; Bear, H.L. The speaker-independent lipreading play-off; a survey of lipreading machines. In Proceedings of the 2018 IEEE International Conference on Image Processing, Applications and Systems (IPAS), Sophia Antipolis, France, 12–14 December 2018.

5. Lu, H.; Liu, X.; Yin, Y.; Chen, Z. A Patent Text Classification Model Based on Multivariate Neural Network Fusion. In Proceedings of the 2019 6th International Conference on Soft Computing & Machine Intelligence (ISCMI), Johannesburg, South Africa, 19–20 November 2019.

6. Hussein, D.; Ibrahim, D.M.; Sarhan, A.M. HLR-Net: A Hybrid Lip-Reading Model Based on Deep Convolutional Neural Networks. Comput. Mater. Contin. 2021, 68, 1531–1549.

7. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

8. Bai, S.; Kolter, J.Z.; Koltun, V. An empirical evaluation of generic convolutional and recurrent networks for sequence modeling. arXiv 2018, arXiv:1803.01271.

9. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

10. Saberi-Movahed, F.; Rostami, M.; Berahmand, K.; Karami, S.; Tiwari, P.; Oussalah, M.; Band, S.S. Dual Regularized Unsupervised Feature Selection Based on Matrix Factorization and Minimum Redundancy with application in gene selection. Knowl.-Based Syst. 2022, 256, 109884.

11. Nazari, K.; Ebadi, M.J.; Berahmand, K. Diagnosis of alternaria disease and leafminer pest on tomato leaves using image processing techniques. J. Sci. Food Agric. 2022, 102, 6907–6920.

12. Rostami, M.; Berahmand, K.; Nasiri, E.; Forouzandeh, S. Review of swarm intelligence-based feature selection methods. Eng. Appl. Artif. Intell. 2021, 100, 104210.

13. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

14. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

15. Howard, A.; Sandler, M.; Chu, G.; Chen, L.-C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for MobileNetV3. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

16. Ma, N.; Zhang, X.; Zheng, H.-T.; Sun, J. ShuffleNet V2: Practical guidelines for efficient CNN architecture design. In Proceedings of the European Conference on Computer Vision (ECCV) 2018, Munich, Germany, 8–14 September 2018; pp. 116–131.

17. Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An extremely efficient convolutional neural network for mobile devices. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856.

18. Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the 2017 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

19. Zhang, R.; Sun, F.; Song, Z.; Wang, X.; Du, Y.; Dong, S. Short-term traffic flow forecasting model based on GA-TCN. J. Adv. Transp. 2021, 2021, 1338607.

20. Hewage, P.; Behera, A.; Trovati, M.; Pereira, E.; Ghahremani, M.; Palmieri, F. Temporal convolutional neural (TCN) network for an effective weather forecasting using time-series data from the local weather station. Soft Comput. 2020, 24, 16453–16482.

21. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

22. Cho, K.; van Merriënboer, B.; Gulcehre, C.; Bahdanau, D.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning Phrase Representations Using RNN Encoder-Decoder for Statistical Machine Translation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1724–1734.

23. Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated Residual Transformations for Deep Neural Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5987–5995.

24. Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

25. Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

26. Chung, J.S.; Zisserman, A. Lip reading in profile. In Proceedings of the British Machine Vision Conference (BMVC), London, UK, 4–7 September 2017.

27. Stafylakis, T.; Tzimiropoulos, G. Combining residual networks with LSTMs for lipreading. In Proceedings of INTERSPEECH 2017: Conference of the International Speech Communication Association, Stockholm, Sweden, 20–24 August 2017.

28. Wang, C.H. Multi-grained spatio-temporal modeling for lip-reading. In Proceedings of the 30th British Machine Vision Conference, Cardiff, UK, 9–12 September 2019.

29. Weng, X.; Kitani, K. Learning spatio-temporal features with two-stream deep 3D CNNs for lipreading. In Proceedings of the 30th British Machine Vision Conference, Cardiff, UK, 9–12 September 2019.

30. Luo, M.S.; Yang, S.; Shan, S.G.; Chen, X.L. Pseudo-convolutional policy gradient for sequence-to-sequence lip-reading. In Proceedings of the 15th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2020), Buenos Aires, Argentina, 16–20 November 2020.

31. Martinez, B.; Ma, P.; Petridis, S.; Pantic, M. Lipreading using temporal convolutional networks. In Proceedings of the ICASSP 2020—2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 6319–6323.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).